Abstract

In four experiments we examined whether sensorimotor encoding influences readers’ reasoning about spatial scenes acquired through narratives. Participants read a narrative that described the geometry of a store and then pointed to the memorized locations of described objects from imagined perspectives. In Experiment 1, participants walked during learning towards the direction of every described object and then visualized these objects as being in the immediate environment. In Experiment 2 they rotated their body to the direction of the described objects instead of walking to them, while in Experiment 3 they only turned their heads towards the objects. In Experiment 4, we eliminated the instructions to visualize the objects altogether. Results from the first three experiments revealed a performance benefit for responding from the perspective that participants physically occupied at testing. However, results from Experiment 4 showed that only participants who, in a post-task questionnaire, indicated that they had linked the described environment to their immediate environment exhibited such a benefit. Findings indicate that (1) the physical change in orientation influences reasoning about described environments if the remote environments are linked to participants’ sensorimotor framework and, (2) visualization instructions are sufficient to produce such a link.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

When reading texts, people create vivid mental representations about familiar, unfamiliar, and sometimes fictitious places and events. A long tradition of research in language comprehension documents that readers represent the state of affairs described in the text by serially integrating information to construct dynamic representations known as situation models (Kintsch, 1998).

When constructing situation models from texts, readers typically monitor information continually across multiple dimensions – temporal, spatial, about the protagonist, causality, and intentionality of actions – and index the described events and actions based on these dimensions (Gernsbacher, 1990; Zwaan, Langston, & Graesser, 1995). However, research shows that these dimensions may not all be equally important when constructing and updating situation models from narratives. Generally, under normal reading conditions, readers do not seem to pay close attention to spatial information. Readers can monitor spatial information within narratives, but only under certain conditions – for example, when they are explicitly instructed to focus on spatial information (Zwaan & van Oostendorp, 1993), when they are very familiar with the space (Rinck & Bower, 1995), when spatial information is memorized prior to reading (Morrow, Greenspan, & Bower, 1987; Morrow, Bower, & Greenspan, 1989; Zwaan, Radvansky, Hilliard, & Curiel, 1998), when it is relevant to protagonist’s actions and goals (Morrow et al., 1989), when it is essential for interpreting causal events and achieving coherence (van den Broek, 1990), when instructed to detect event boundaries (i.e., changes to the story situation; Magliano, Kopp, McNerney, Radvansky, & Zacks, 2012), or during a second reading of the narrative text (Zwaan, Magliano, & Graesser, 1995). Compared to normal reading conditions, reading after instructions to keep track of objects’ locations increases the accuracy about spatial aspects of the situation model, but appears to be effortful as reflected by slower reading times (Zwaan & van Oostendorp, 1993).

The relative importance of spatial, temporal, and contextual dimensions has been addressed more directly in a study by Therriault, Rinck, and Zwaan (2006). In this study, before reading narratives, participants were instructed to focus on spatial, temporal, or protagonist-related information. Then, their reading times were recorded for crucial sentences that introduced discontinuities or shifts in these dimensions. When narratives contained protagonist or temporal discontinuities, reading times increased even when the focus was on another dimension. But, when narratives contained spatial discontinuities, reading times increased only when participants were explicitly instructed to attend to the spatial dimension (Experiment 2). These findings underscore that spatial information is more likely to be overlooked than other dimensions of the text (temporal and contextual) and that is not automatically monitored and updated during reading.

Compatible with this conclusion are the findings of a recent study of ours (Hatzipanayioti, Galati, & Avraamides 2015), in which participants encoded spatial locations from narratives and then pointed to them from imagined perspectives. The narratives described fictitious environments, such as court house and a hotel lobby, with a protagonist whose orientation in space was described to change at some point in the narrative. Findings revealed that, after reading the narratives, participants pointed to objects faster from the initial orientation of protagonist than any other orientation. This result suggests that readers defaulted to using a reference frame aligned with the protagonist’s initial orientation to encode the described locations and refrained from updating it when the protagonist was later described to rotate to a new orientation (see also Avraamides, 2003).

In short, research on text comprehension suggests that, in general, spatial information is not closely monitored or updated when constructing situation models (van Oostendorp, 1991). This disregard of the spatial dimension is in stark contrast with findings about the representation of events and scenes that are visually perceived, where viewers have been shown to monitor spatial information more closely than temporal information (see Kelly, Avraamides, & Loomis, 2007, for a discussion). For example, a study by Radvansky and Copeland (2006) showed that participants moving within a multi-room virtual environment were slower to recognize what object they were holding when they had walked into a different room after picking it up than when they moved for the same extent within the same room. This finding suggests that when experiencing environments through vision, people are sensitive to spatial shifts even without being explicitly instructed to focus on them.

Another line of research – on spatial updating – also indicates that for immediate, perceptually experienced environments, people are sensitive to spatial shifts. Spatial updating refers to the mechanism that allows people to monitor, during movement, the spatial relations between themselves and objects in the environment (Pick & Rieser, 1982; Rieser, 1989). This work suggests that people effortlessly and automatically keep track of the changing egocentric relations in their immediate environment, most likely by relying on proprioceptive and vestibular input available during movement. For example, people point to memorized locations fast and accurately from novel positions and orientations they adopt through physical movement (compared to baseline pointing from the learning viewpoint), even when this movement takes place without visual input (Presson & Montello, 1994; Rieser, 1989).

Collectively, the findings described above – suggesting that people automatically monitor changes in spatial relations when they interact with an environment experienced perceptually but not with a described environment – give rise to the following largely unexplored question. What would happen if readers linked spatial descriptions (e.g., about an environment and the protagonist’s actions) to their perceptual/sensorimotor experience? By linking spatial descriptions to their environment and to their own actions, would readers be more likely to monitor subsequent described changes in spatial relations? In other words, would linking described spatial relations to a sensorimotor framework (see De Vega, 2008) make environments learned from narratives more like those experienced visually?

Readers may disregard changes in previously encoded spatial relations and may not leverage their own movement that accompanies reading to monitor these changes, if monitoring the spatial dimension is not a priority for building and maintaining a situation model or if their own movement is not made functionally relevant to the goals of the protagonist or the described situation. But if readers perceptually simulate what is being described in the text (including the protagonist’s movement) by activating their own motor and perceptual experiences, it is possible that their physical movement, if compatible with and tethered to the protagonist’s described movement, can facilitate spatial updating in situation models. This possibility is in line with findings from studies demonstrating interactions between physical movement and the processing of described actions (e.g., Borreggine & Kaschak, 2006; Glenberg & Kaschak, 2002; Kaschak & Borreggine, 2008; Zwaan & Taylor, 2006) and overall with the idea that representations of spatial information in situation models are embodied (Zwaan, 2004).

Whether linking described spatial relations to sensorimotor/perceptual experience can encourage readers to monitor them more closely, was examined in a series of experiments by Avraamides, Galati, Pazzaglia, Meneghetti, and Denis (2013). In these experiments, the monitoring of changes in spatial perspective in situation models was examined by manipulating the alignment of the reader’s and the protagonist’s orientations. Participants read narratives that described a protagonist in fictitious environments with objects placed in various orientations. Participants had to think of themselves as being the protagonist of the text and then perform perspective taking trials by responding to statements of the form “imagine facing X, point to Z.” The study manipulated participants’ physical orientation at testing by instructing them to rotate to match or mismatch the protagonist’s described rotation. The authors hypothesized that if situation models from narratives are updated the same way as perceptual environments, participants’ rotation would influence performance. In that case, a sensorimotor alignment effect (e.g., Kelly, Avraamides, & Loomis 2007) was expected – that is, better performance from the imagined perspective that was aligned versus counter-aligned with participants’ actual orientation during testing. However, results revealed no sensorimotor alignment effect. Instead, participants performed better from the imagined perspective that was aligned with their learning orientation – the orientation they occupied when reading the narrative (evidencing a so-called encoding alignment effect), while disregarding any subsequent change of their orientation.

The results of Avraamides et al. (2013) deviate markedly from those obtained in spatial updating studies with perceptual environments in which pointing performance is strongly influenced by physical movement (e.g., Rieser, Guth, & Hill, 1986). What is more, the findings are at odds with the results from studies on situation models showing that spatial information can be automatically monitored, provided that is foregrounded by instructions or made functionally relevant (Morrow et al., 1989; Zwaan & van Oostendorp, 1993). In the study of Avraamides et al. (2013), the protagonist perspective was foregrounded by the readers’ physical movement, yet participants did not seem to update their situation model. However, a number of methodological factors can account for this. First, in Avraamides et al. (2013) participants sat on a swiveling chair throughout the experiment and adopted the protagonist’s described rotation by turning on their chair. Merely swiveling in a chair in order to change perspective may have not matched the described rotation well enough to provide strong motivation for participants to link the described situation to a sensorimotor framework. Walking to a new orientation instead of swiveling could have been more effective in linking participants’ movement to that of the protagonist. Second, participants were surrounded from three sides with desks and computer monitors, which were used to present visually the text of the narratives and the testing trials, thus limiting visual access to the rest of the room. This may have interfered with participants’ linking of the remote environment to their immediate surroundings, also preventing them from encoding the described objects in a sensorimotor framework.

In light of these issues, in the present study we re-examined whether the reader’s physical movement, when compatible with the protagonist’s described movement in a narrativeFootnote 1, can promote the encoding of locations into a sensorimotor framework that allows automatic updating with further movement by the reader, post-encoding. Our aim was to examine, under conditions of decreasing physical movement during encoding and decreasingly explicit linkages of the described environment to the immediate surroundings (e.g., through visualization instructions), how effectively participants would update their spatial representation when rotating after encoding.

Overview of experiments

In Experiment 1 we employed extensive physical movement during the processing of the linguistic information to foreground spatial information and encourage the sensorimotor encoding of described locations; then, we examined whether physical rotation towards a new orientation would cause participants to automatically update spatial relations within the described environment. To the extent that physical movement (perhaps beyond a certain threshold of engagement) helps link the described environment to the sensorimotor framework, we expect participants to update their spatial representation when rotating after learning. This should be reflected in superior performance (i.e., high accuracy and faster responses) for the imagined perspective aligned with the updated orientation compared to the baseline (i.e., a sensorimotor alignment effect). We also generally expected participants’ performance to show an independent advantage for imagined perspectives aligned with the initial orientation (i.e., encoding alignment effect) as reported by previous studies (e.g., Avraamides et al., 2013; Kelly et al., 2007). In this experiment we also manipulated visual access to the laboratory during testing, to investigate whether sensorimotor facilitation and interference would be reduced if participants carried out testing with their eyes-closed. Our conjecture was that, if participants encoded locations within a sensorimotor framework, visual access to one’s immediate surroundings (compared to occluded vision) would make more salient the memorized self-to-location relations from the participant’s actual perspective during testing, facilitating pointing from that perspective and interfering with pointing from any other perspective. Thus, we expected that preventing visual access to one’s surroundings during testing, with eyes closed, would reduce both facilitation and interference, yielding a smaller sensorimotor effect.

In Experiment 2, we eliminated the extensive physical walking during encoding and instead asked participants to rotate their body to the direction of the described objects. Similar to hypotheses of Experiment 1, if less extended physical movement can still lead to updating the situation model, participants should have a benefit in performance for the updated orientation compared to the remaining ones.

In Experiment 3, we asked participants simply to turn their heads to face each object introduced in the narrative. As in Experiments 1 and 2, any benefit of physical movement would show an advantage for the updated orientation.

Finally, in Experiment 4, we eliminated any physical movement during encoding as well as the instructions to visualize the objects, only allowing participants to walk towards the center of the laboratory room prior to encoding. In contrast to previous experiments, we expected that no sensorimotor effect would be present, unless participants could readily use the geometry of the environment to link the described locations to the immediate environment and in turn to an updatable sensorimotor framework. This was further investigated by examining participants’ answers in a short post-experiment questionnaire in which they described their strategies used to approach the task.

Insofar as both encoding and sensorimotor alignment effects could be present in any of the experiments, we conducted further analyses in each experiment by categorizing participants based on their preference, as reflected by the effect that was dominant in their response accuracy or latency. The presence of a dominant encoding effect only would imply that participants maintained the initial orientation they formed during learning, whereas the presence of an additional sensorimotor alignment effect would imply that participants updated the situation model along with their rotation towards the testing orientation.

Experiment 1

In Experiment 1, we examined whether extensive movement and visualization would encourage the use of a sensorimotor framework, by linking the spatial information presented in narratives to the immediate environment. Participants read a narrative that provided descriptions about the locations of eight objects inside a fictitious clothing store. During the learning phase participants were instructed to imagine themselves in the position of the protagonist and walk in the laboratory towards the direction of each described object as if these were actually in the store. Prior to testing, participants rotated 90o from their initial orientation and carried out perspective-taking trials which involved responding to statements of the form “imagine facing X, point to Z” with their eyes either open or closed. If participants had linked the remote environment to their sensorimotor framework, then they would update their situation model of that environment during post-encoding rotation and exhibit faster and/or more accurate performance for trials in which the imagined perspective was aligned with their orientation at test.

An additional aim of Experiment 1 was to test whether visual access to the environment when carrying out perspective taking trials about memorized remote environments (manipulated by having the eyes open or closed) influences the sensorimotor alignment effect. If participants have linked the remote environment to a sensorimotor framework during the encoding of the text, then visual information from the actual room continually specifying the participant’s physical orientation adopted by the rotation right before testing, would facilitate responding from imagined perspectives aligned with that orientation and/or interfere with responding from perspectives misaligned to it. It is possible, therefore, that restricting visual access to the immediate environment during testing (by having the eyes closed) would allow participants to inhibit more easily information specifying their actual orientation. If this is the case, we would expect a smaller sensorimotor alignment effect in the eyes-closed than the eyes-open condition. As in previous studies (e.g., Kelly et al., 2007), planned contrasts were used to determine the presence of sensorimotor and encoding alignment effects in each visual access condition.

Method

Participants

Forty student volunteers from the University of Cyprus participated in the experiment. Twenty participants were randomly assigned to each of the eyes-open and the eyes-closed conditions.

Materials

Materials included a narrative that described the interior of a clothing store. The narrative was divided in five parts with detailed descriptions about the locations of eight objects in the store (see Appendix for story material). Four of the objects were located on the canonical axes of the rectangular room (0o, 90o, 180o, and 270o orientations), while the remaining four objects were located at its corners (45o, 135o, 225o and 315o orientationsFootnote 2). All descriptions were provided in the second person to encourage readers to adopt the described perspective.

In the testing phase, locations of all objects were used either as referents specifying the imagined perspectives that participants had to imagine adopting or as targets to be pointed at. Participants carried out pointing responses with a joystick that was placed in front of them on a stool. Trials were presented as audio clips of 2-s duration delivered via headphones. A blindfold occluded participants’ vision during testing in the eyes-closed condition.

Design

The experiment followed a mixed 2 (visual access: open vs. closed) × 8 (imagined perspectives: 0o, 45o, 90o, 135o, 180o, 225o, 270o and 315o) design with imagined perspective manipulated within participants and visual access between.

Procedure

Participants signed an informed consent before the start of the experiment and were thoroughly debriefed afterwards. The experiment began with practice trials that participants completed in order to become familiarized with the pointing task. These trials involved three everyday objects (i.e., a candle, a glass, and a ball) placed around the participant in the laboratory room. Participants memorized the locations of the objects and then carried out a series of perspective-taking trials with the use of a joystick by responding to statements of the form “you face the ball, point to the candle.” They completed six trials that involved all possible pairs of objects.

Upon completing the practice trials participants were placed with their backs towards one of the long walls of the laboratory and were told that they had the entrance of a clothing store at their back. They were then given the narrative description of the store, printed on sheets of paper, to read and were told to memorize the locations of objects described in the text. Participants were also instructed to move in the laboratory as if they were in the clothing store they were reading about. In the first two sentences the narrative introduced the protagonist (i.e., themselves) moving through a room to accomplish a certain goal. Then, the narrative instructed participants to walk towards the center and adopt an initial facing orientation, hereafter referred to as 0o. Next, participants were instructed to move along the 0o direction and towards the opposite wall of the laboratory to inspect the object located directly in front of them (“From the center of the store, you see in the distance in front of you the shelves. You think you should take a look. You walk towards the direction of the shelves and you start browsing the clothes”). When participants finished with the encoding of objects by walking towards all objects located at the canonical axes of the store, they continued with the descriptions of objects located in the corners of the room. After participants encoded the locations of all the objects, they were instructed to return to the center of the room, face towards 0o, and visualize the environment of the narrative along with the objects. Participants were allowed to read the story once more if when probed by the experimenter, they indicated that did not manage to form an accurate representation of the environment.

After completing the learning phase, participants proceeded to the testing phase. The testing phase required participants to adopt a new physical orientation (referred to as the updated orientation) by rotating 90o to the right of their initial orientation. While in this position participants carried out a series of perspective-taking trials by responding with a joystick to statements of the form “you face X, point to Z,” for all combinations of objects. Response latency was measured from the end of the audio clip until the joystick shaft was deflected 30o from its vertical position. Participants were instructed to point as fast as possible but without sacrificing accuracy for speed. Each participant completed 56 trials in a random order.

Results

Data were analyzed with repeated measures analyses of variance (ANOVA) followed by planned contrasts to evaluate the presence of encoding and sensorimotor alignment effects.

Results from the ANOVA revealed that participants’ accuracy varied as a function of the imagined perspective adopted at testing, F(7, 266) = 19.828, p< .001, η 2= .34. As illustrated in Fig. 1 (panel a), a commonly-found saw tooth pattern (e.g., McNamara, 2003; Mou & McNamara, 2002) was observed in both visual access conditions, indicating better performance for imagined perspectives at canonical orientations (0o, 90o, 180o, and 270o) than diagonal orientations (45o, 135o, 225o, and 315o), t(45) = 8.13, p<.001. There was neither a significant main effect for the visual access condition nor a significant interaction with imagined perspective, F(1, 38) = .587, p= .44, η 2= .01, and F(7, 266) = .735, p= .64, η 2= .02, respectively.

Accuracy (panel a) and response latency (panel b) as a function of imagined perspective and visual access condition, Experiment 1. Orientations are measured counter-clockwise from the initial orientation (00). The updated orientation is shown as 2700 and the baseline opposite to updated orientation as 900. Error bars represent standard errors from the ANOVA

Planned contrasts comparing accuracy for the opposite to updated orientation (which was used as a baselineFootnote 3) with (1) the accuracy for the initial orientation and (2) the accuracy for the updated orientation were carried out to test the presence of an encoding alignment effect and a sensorimotor alignment effect, respectively. The contrasts showed neither an encoding alignment effect nor a sensorimotor alignment effect in the eyes-closed condition, t(19) = .13, p= .90 and t(19) = 1.28, p= .21, respectively (Fig. 2, panel a). On the other hand, a sensorimotor alignment effect was found in the eyes-open condition, t(19) = 2.10, p= .04. Participants were more accurate in imagined perspectives aligned with the updated (M= .90) than the opposite to updated (M= .80) orientation when they had their eyes open. No encoding alignment effect was present, t(19) = 1.06, p= .30.

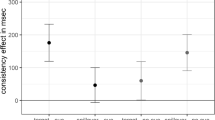

Encoding and Sensorimotor alignment effects for accuracy (panel a) and response latency (panel b), Experiment 1. For accuracy, encoding alignment effect = accuracy for initial orientation - accuracy for opposite to updated orientation, and sensorimotor alignment = accuracy for updated orientation – accuracy for opposite to updated orientation. For response latency, the terms in the subtractions were reversed. Error bars represent standard errors from the t-test

Participants’ response latency also varied as a function of the imagined perspective. This was corroborated by the presence of a significant main effect for imagined perspective, F(7,259) = 13.07, p< .001, η 2= .26. Overall, participants were faster to respond from imagined perspectives aligned with the canonical than the diagonal orientations in both visual access conditions (Fig. 1, panel b), t(47) = 5.38, p<.001. As with accuracy, the analysis revealed neither a significant main effect for visual access nor a significant interaction with imagined perspective, F(1, 37) = 2.380, p= .13, η 2= .06, and F(7, 259) = .817, p= .57, η 2= .02, respectively.

Unlike accuracy, planned contrasts for response latency revealed significant sensorimotor alignment and encoding alignment effects in both visual access conditions (Fig. 2, panel b). For the eyes-closed condition, both the sensorimotor alignment and encoding alignment effects were significant, t(19) = 2.92, p= .009 and t(19) = 2.87, p= .010, respectively. Similarly, for the eyes-open condition a significant sensorimotor alignment effect was present, t(19) = 3.4, p= .003, as well as a significant encoding alignment effect, t(19) = 2.20, p=.40. Moreover, an independent sample t-test showed that the sensorimotor alignment effect was significantly greater in the eyes-open than in the eyes-closed condition, t(38) = 2.07, p= .49. In contrast, the encoding alignment effect did not differ significantly in the two visual access conditions, t(38) = 1.41, p=.171.

Discussion

Results from Experiment 1 revealed both encoding and sensorimotor alignment effects in participants’ response latency for both visual access conditions, although the sensorimotor alignment effect in latency was greater in the eyes-open condition. In accuracy, a sensorimotor alignment effect was found in the eyes-open condition but not in the eyes-closed condition. Furthermore, no encoding alignment effect was present for the accuracy of either visual access condition. These differences in accuracy and response latency measures could be because the perspectives of interest (i.e., the learning, updated, and opposite to updated) were aligned with the geometry of the room and the axes of the reference frame that participants most likely used to construct a spatial memory. Avraamides, Theodorou, Agathokleous, and Nicolaou (2013) showed that these canonical perspectives are easy to maintain in memory in order to compute a pointing response from an imagined perspective.

This is likely to give rise to relatively comparable performance across perspectives in terms of accuracy, even though the need to carry out mental transformation (e.g., to mentally rotate one’s viewpoint to adopt an imagined perspective) may still result in differences in response latency across perspectives. Thus, accuracy compared to response latency, may be less sensitive to the orientation dependence of spatial memories.

The presence of both encoding and sensorimotor alignment effects in latency is compatible with the idea that people maintain multiple spatial representations when encoding locations in memory (Avraamides & Kelly, 2008; Mou & McNamara, 2002; Waller & Hodgson, 2006). The encoding alignment effect suggests that participants constructed an enduring spatial representation based on their initial orientation during learning, while the additional sensorimotor alignment effect indicates that they also maintained a more transient representation, which they updated during their physical rotation towards a new orientation before testing.

To further examine this possibility we categorized participants based on whether they exhibited both an encoding and a sensorimotor alignment effect versus only one of the two effects versus none of the effects. As summarized in Table 1, this categorization revealed that 65 % of participants in the eyes-closed condition exhibited both effects in latency, 10 % of participants a sensorimotor effect only, and 5 % an encoding alignment effect in latency but a sensorimotor alignment effect in accuracy. Notably, the remaining 20 % of participants (N=4) who exhibited neither effect in latency had both effects in accuracy. In the eyes-open condition, 85 % of participants exhibited both encoding and sensorimotor alignment effects in latency, whereas the remaining 15 % exhibited a sensorimotor effect only. Overall, the majority of participants in Experiment 1 exhibited both effects in latency, which supports the idea that multiple spatial representations are maintained. Moreover, the larger sensorimotor effect in the eyes-open condition supports our hypothesis that access to visual cues leads to greater sensorimotor influence. By extension, eliminating visual access to the surroundings enables people to inhibit more easily information specifying their orientation (reducing the sensorimotor alignment effect), and thus to respond more easily from imagined perspectives misaligned to their orientation. As seen in Fig. 1, this result was more likely caused by reduction of interference for responding from perspectives other than the updated and the learning perspectives. This finding supports our expectation that restricting visual access to immediate surrounding allows participants to more easily inhibit conflicting spatial information when identifying locations from imagined perspectives.

The extensive physical movement during encoding in Experiment 1 might have encouraged participants to link the remote imagined environment to an updatable sensorimotor framework, which is believed to govern the encoding of spatial relations in one’s immediate surroundings (Avraamides & Kelly, 2008; May, 2004). To explore the extent of movement that is needed for this linking to occur, in Experiment 2 we eliminated physical walking towards objects and asked participants to rotate physically to the direction of each object but only imagine walking to it. If extensive movement, such as the one entailed by physically walking to objects, and the idiothetic information available through it, is necessary to represent distal locations to a sensorimotor framework, then no sensorimotor effect should be present in Experiment 2. If, however, physical rotation is sufficient to induce such a link, then a sensorimotor effect could arise despite the absence of walking.

Experiment 2

In Experiment 2, we eliminated physical walking and allowed only physical rotations to the directions of objects, in order to examine whether the sensorimotor alignment effect would still arise in the absence of extensive physical movement. Unlike Experiment 1, we tested participants only in the eyes-closed condition, which produced a smaller sensorimotor effect, possibly by reducing cues that made the participants’ physical orientation more salient. This way, we tested the condition in which the sensorimotor effect was more likely to be eliminated.

Method

Participants

Eighteen student volunteers from the University of Cyprus participated in the experiment. All participants were tested in the eyes-closed condition.

Materials, design, and procedure

Materials and procedure were identical to those of Experiment 1 with one notable difference. While in Experiment 1 the narrative instructed participants to walk to the center of the laboratory and towards the directions of the described objects, in Experiment 2 participants walked towards the center but, each time a remote object was described, they rotated their body to face it and simply imagined walking towards it from their previous imagined position in the remote environment (i.e., as in Experiment 1 except that movement was only imagined).

Results

Participants’ accuracy varied as a function of the imagined perspective adopted at testing, F(7, 119) = 20.107, p<.001, η 2= .54. As in Experiment 1, a sawtooth pattern was present, indicating better performance for imagined perspectives aligned with the canonical than the diagonal orientations (Fig. 3, panel a), t(17) = 8.05, p<.001.

Planned contrasts for accuracy documented the presence of a significant sensorimotor alignment effect, with participants being more accurate to respond from an imagined perspective aligned with the updated than the opposite to updated orientation, t(17) = 3.33, p=.004. However, no encoding alignment effect was found, t(17) = .55, p= .58 (Fig. 4, panel a).

Response latency also varied as a function of the imagined perspective. There was a significant main effect for imagined perspective F(7, 119) = 19.144, p< .001, η 2= .53, with a sawtooth pattern indicating again faster responses from imagined perspectives aligned with the canonical than with the diagonal orientations (Fig. 3, panel b), t(17) = 5.86, p<.001.

As in Experiment 1, planned contrasts for response latency revealed statistically significant sensorimotor and encoding alignment effects (Fig. 4, panel b), t(17) = 5.36, p< .001 and t(17) = 5.81, p< .001, respectively. Participants were significantly faster in their responses from the imagined perspective aligned with the updated than with the opposite to updated orientation. They were also faster to respond from the imagined perspective that was aligned with the initial than with the opposite to updated orientation.

The categorization of participants based on the presence of an encoding and/or a sensorimotor alignment effect revealed that 17 out of 18 participants (94.5 %) showed both an encoding and a sensorimotor alignment effect in response latency, with the remaining participant exhibiting only the sensorimotor effect (Table 1).

Discussion

Results from Experiment 2 revealed a sensorimotor alignment effect in accuracy and response latency as well as an encoding alignment effect in response latency. Despite the elimination of extensive walking during encoding and the elimination of visual access during testing, the persistent sensorimotor alignment effect suggested that participants had updated their orientation when turning before testing. It seems that simply rotating to face each object during encoding sufficed for linking the remote environment to a sensorimotor framework. Also, as in Experiment 1, the documented encoding alignment effect suggests that the initial representation formed during encoding co-existed in memory with the representation participants formed when they updated their orientation along with their rotation prior testing. More evidence for this comes from the fact that all participants except one, showed both effects in latency suggesting that they maintained multiple spatial representations.

Having established here that body rotation during encoding suffices for linking a remote environment to one’s sensorimotor reference frame, in Experiment 3 we examined whether even more minimal physical movement – simply turning one’s head towards object locations – would be sufficient for such updating to occur.

Experiment 3

In Experiment 3, we completely eliminated physical rotation and allowed physical walking only towards the center of the room before testing, while also providing visualization instructions about the described store, in order to examine whether the sensorimotor alignment effect would be present without much movement during encoding. Participants were instructed to simply turn their heads to the directions described in the narrative.

Participants

Twenty-two students from the University of Cyprus participated in the experiment in exchange for monetary compensation (10€). All participants were tested in the eyes-closed condition.

Materials, design, and procedure

Materials, design and procedure were identical to those of Experiment 2, with one notable difference. In Experiment 2, participants were instructed to walk towards the center of the room and physically rotate each time an imaginary object was described to face its orientation. In Experiment 3, participants remained stationary with their back towards one wall of the room throughout the description of all objects and were instructed only to turn their head, but not their body, towards each described location. Participants were also instructed to imagine themselves moving in the room towards the locations of objects when the narrative instructed them to do. Following encoding, they walked to the center of the room where they were given visualization instructions. After that, they physically rotated to adopt the updated orientation for the testing phase to begin.

Results

As in previous experiments, results revealed that participants’ accuracy varied as a function of imagined perspective adopted at testing, F(7, 147) = 14.418, p< .001, η 2= .40. As seen in Fig. 5 (panel a), a sawtooth pattern, indicating better performance for imagined perspectives aligned with the canonical than the diagonal orientations of the room, was present, t(21) = 5.37, p<.001. Further analysis using planned contrasts revealed the presence of a significant sensorimotor alignment effect (Fig. 6, panel a), suggesting that participants updated their representation when they rotated, t(21)= 2.56, p<.05. However, no significant encoding alignment effect was found for accuracy, t(21) = 1.16, p=.25.

In terms of response latency, performance was influenced by the imagined perspective as documented by the main effect of this variable, F(7, 119) = 11.486, p< .001, η 2 = .40. The typical sawtooth pattern was present in response latency as well (Fig. 5, panel b), t(20) = 6.17, p<.001. Subsequent planned contrasts revealed significant sensorimotor and encoding alignment effects t(21)= 4.98, p< .001 and t(21) = 4.22, p<.001 (Fig. 6, panel b). That is, compared to the opposite to updated orientation, participants were faster to respond from imagined perspectives aligned with the updated orientation and the initial orientation.

As Table 1 illustrates, 64 % of participants exhibited both encoding and sensorimotor alignment effects in both accuracy and response latency. Of the remaining 36 % (N=8), half of them showed only the sensorimotor effect, 9 % (N=2) only the encoding effect, and another 9 % neither of the effects in response latency. However, those participants who showed either an encoding alignment or neither effect in response latency, all exhibited both encoding and sensorimotor effects in accuracy.

Discussion

Results in Experiment 3 revealed the presence of a sensorimotor alignment effect in both accuracy and response latency and the presence of an encoding alignment effect in response latency. Thus, despite eliminating movement during encoding, participants were able to update a spatial representation when rotating to a new orientation immediately prior to testing. This is also attested by the fact that, for both response latency and for accuracy, 82 % of participants exhibited a sensorimotor alignment effect, whether on its own or in conjunction with an encoding alignment effect.

These findings raise the possibility that visualization instructions, which foreground spatial information, might be the critical factor supporting the creation of an updatable spatial representation from narratives, with physical movement in fact not being necessary for maintaining remote objects in such a sensorimotor framework. Experiment 4 examines this possibility.

Experiment 4

In Experiment 4, we provided no explicit visualization instructions to participants to examine whether a sensorimotor alignment effect would still be present. However, as the geometric structure of the laboratory matched that of the described store, we considered it possible that at least some participants would still link the remote environment to their immediate surroundings. For this reason, after completing the pointing task, we asked participants to complete a brief questionnaire about the strategies they had used during learning and testing. We expected that a sensorimotor alignment effect would be present only for participants who, despite the absence of visualization instructions, spontaneously linked the remote objects to their immediate environment.

Participants

Thirty-four graduate and undergraduate students from the University of Cyprus, as well as members of the wider community participated in the experiment voluntarily. All participants were tested in the eyes-closed condition.

Materials, design, and procedure

Materials, design and procedure up to the practice trials were identical to all the previous experiments. The learning phase of Experiment 4 differed from that of Experiment 3 in that participants were provided with no explicit instructions about either visualizing the objects or turning their heads towards locations in the laboratory. Specifically, participants stood at the center of the laboratory and were given the story to read. They were simply told that the narrative contains a description of a store and their goal is to remember the locations of objects, without any additional specifications of visualizing the environment or following the protagonist’s movement. As in previous experiments, participants were allowed to re-read the narrative if they did not feel confident enough to proceed to testing phase. After learning, but before testing, they were instructed to rotate 90o to their right. After completing the testing trials participants were asked to fill out a short questionnaire about the strategies they used to remember the fictitious environment. They responded to questions that included the following: “Did you imagine being at the described environment?”, “Did you transfer the contents of the imagined store to the laboratory?”, “Did you link in any way the laboratory to the imagined store?”, “Did you link any objects within the laboratory to those described in the narrative?” At the end of the experiment, and in order to assess whether they had formed an accurate representation of the described environment, participants were asked to draw the described layout on a sheet of paper; this was done because pilot testing revealed that some people had problems forming a good spatial representation under the learning constraints of this experiment.

Results

Five subjects were excluded from all analyses due to very low accuracy (< 25 %). As evidenced from the drawings of the layouts, two of these participants had formed representations that were highly distorted (i.e., some objects were placed more than 900 away from their correct location).

As in the previous experiments, results showed that participants’ accuracy depended on the imagined perspective, F(7, 196) = 5.595, p< .001, η 2= .16. Performance was overall better for imagined perspectives aligned with the canonical than the diagonal orientations (Fig. 7, panel a), t(28) = 4.89, p<.001. In contrast to the previous experiments, planned contrasts showed neither an encoding nor a sensorimotor alignment effect, p= .31 for both comparisons (Fig. 8, panel a).

Response latency also depended on the imagined perspective adopted in the trial, F(7,154) = 6.093, p<.001, η 2= .21. As with accuracy findings, a sawtooth pattern indicating better performance for imagined perspectives aligned with the canonical than the diagonal orientations was found (Fig. 7, panel b), t(28) = 5.36, p< .001. Planned contrasts revealed a significant encoding alignment effect, t(28) = 3.60, p=.001, but no sensorimotor alignment effect, t(28) = .97, p=.34 (Fig. 8, panel b).

We then conducted further analyses by dividing our sample to participants who indicated that they linked the imagined environment to the laboratory (14 participants) and those who didn’t (15 participants), based on their responses on the questionnaire (e.g., ‘did you link the laboratory to the described store?’). In the analyses on accuracy performed separately for each group, no significant alignment effects (sensorimotor or encoding) were observed. However, the response latency analyses showed a significant sensorimotor alignment effect for those participants who reported linking the remote environment with their immediate surrounds, t(13) = 2.74, p< .05. No such effect was present for those who did not report making such a link, t(14)=.74, p=.47. The encoding alignment effect was significant for participants who didn’t link the distal environment with the laboratory, t(14) = 3.00, p=.01 and marginally so for those who did, t(13) = 2.01, p=.06.

Moreover, Experiment 4 revealed that out of the 14 participants who reported linking the two environments, 79 % (N=11) exhibited both encoding and sensorimotor effects in response latency. Of the remaining three participants, two showed only an encoding effect in response latency, and another one showed none of the effects in response latency (but exhibited both effects in accuracy). Of the 15 participants who reported that they did not link the described environment with the laboratory, only 33 % (N=5) showed a sensorimotor effect along with the encoding alignment effect in response latency. Of the remaining ten participants, only three exhibited a sensorimotor effect in response latency.

Discussion

Results from Experiment 4 showed that a sensorimotor alignment effect (in terms of response latency) was present only for participants who indicated that they linked the remote objects described in the narrative to their immediate surroundings. Interestingly, about half of the participants carried out such visualization in the absence of any explicit instruction. A plausible explanation is that the similarity in shape between the described environment and the lab, whose actual objects were limited to the perimeter of the room (desks and chairs against the walls), encouraged these participants to spontaneously connect the two spaces. For these participants who linked the narrative to the immediate environment, the majority of them (79 %) exhibited a sensorimotor alignment effect, in addition to an encoding alignment effect, in response latency. That is, for these individuals, the ease of making spatial judgments from the updated perspective at testing was facilitated relative to baseline. Overall, the findings of Experiment 4 indicate that visualizing remote objects as immediate leads to updating a spatial representation with physical movement.

General discussion

The findings of the present study provide important insights about updating spatial representations from described environments. In four experiments we examined whether linking spatial descriptions to perceptual experience would encourage sensorimotor encoding and effortless updating of spatial relations with post-encoding movement. Results showed that changes in described spatial relations can indeed be monitored and updated, provided that locations had been encoded in a sensorimotor framework (Avraamides & Kelly, 2008; De Vega, 2008). Such encoding seems to take place when the remote locations are linked to readers’ position and orientation, by means of visualization instructions and physical movement that is compatible with movement described in the narrative (Experiments 1–3). Furthermore, as Experiment 4 showed, even in the absence of visualization instructions and extensive physical movement, some readers can take advantage of the spatial correspondence of the perceptual and described environments to establish an updatable spatial representation.

Numerous studies in language comprehension suggest that under normal reading conditions the spatial dimension in situation models is not monitored closely (Magliano, Trabasso, & Graesser, 1999; van Oostendorp, 1991; Zwaan et al., 1995). This is in stark contrast with reasoning about visually perceived environments for which observers are shown to monitor closely spatial information, even in the absence of explicit instructions to do so (Radvansky & Copeland, 2006). For example, whereas readers are typically not sensitive to spatial discontinuities in the text, as indexed by reading times (Therriault et al., 2006), observers are sensitive to spatial discontinuities and shifts: they exhibit difficulties in recalling memorized objects that are distant to the protagonist (Curiel & Radvansky, 2002), are slower in identifying shifts in spatial regions presented in narrative films (Magliano, Miller, & Zwaan, 2001), and, in virtual reality/game conditions, respond more slowly to enemy fire after a change of location (Magliano, Radvansky, and Copeland, 2007). Taken together, findings from language comprehension and perception indicate differential treatment of the spatial aspects of events that are described vs. directly perceived. While by default spatial information is prioritized with perceptual but not with linguistic encoding, it can be monitored closely even in narratives, as long as it is foregrounded in one of several ways, e.g., by instructing readers to pay attention to spatial information (Morrow et al., 1987; Zwaan & van Oostendorp, 1993).

Our findings indicate that linking described locations with the readers’ own position and orientation in space is another way of foregrounding described spatial information. In our experiments, physical movement compatible with the protagonist’s movement, along with instructions to visualize the described objects, likely encouraged participants to link the spatial descriptions to their immediate surroundings and encode spatial locations relative to their own position in space. In turn, this sensorimotor encoding of spatial locations allowed them to update protagonist-to-object relations in their situation model when they rotated post-encoding. This result is in line with findings from spatial updating studies showing that people rely on vestibular and proprioceptive information that is available during physical movement, to keep track of changing egocentric spatial relations (e.g., Rieser, 1989). Our findings here suggest that when described spatial locations are encoded in a sensorimotor framework, they can be updated with physical movement, just like locations that are perceived directly.

Although spatial information is not easily monitored during the construction of situation models, it is can be monitored under particular circumstances. Our work here corroborates previous findings showing that when providing readers with a specific goal, such as explicit instructions to memorize the locations of objects (Therriault et al., 2006; Zwaan & van Oostendorp, 1993), or when establishing a functional relation between spatial information and the protagonist’s actions (Radvansky & Copeland, 2000), readers are able to take into account the protagonist’s rotation (which matched their own motor experience) and update the situation model they have constructed.

Our finding that, especially after their own accompanying physical movement, readers were able to keep track of changes in described protagonist-to-object spatial relations is compatible with the literature on embodied cognition. According to the embodied cognition view, language is grounded in perception and action, with linguistic processing involving the simulation of prior perceptuomotor experiences (Barsalou, 1999). An implication of this view is that the spatial congruity between visually perceived relationships and linguistic descriptions matters. For example, readers are faster to respond to pictures that match the spatial configuration implied by a description compared to when there is a mismatch (e.g., responding to the picture of a horizontal nail after reading “He pounded the nail into the wall” vs. responding to that of a vertical nail) (Stanfield & Zwaan, 2001). Such findings are also consistent with evidence that visual representations are activated during language comprehension (Wassenburg & Zwaan, 2010), even when that is not necessary for comprehension (Zwaan, Stanfield, & Yaxley, 2002).

Similarly, in our study, visualization was shown to be the key to performance. Explicit visualization instructions (Experiments 1–3) promoted the linkage between the perceptual and the described environments, and made subsequent perceptuomotor information from the reader’s rotation more accessible for the updating of their situation model. But even in the absence of visualization instructions or any accompanying movement, some readers did still monitor the spatial dimension: about half of the participants in Experiment 4 spontaneously mapped the locations of the described objects onto the laboratory. Importantly, participants who reported linking the remote environment to their laboratory surroundings updated their situation model during a physical rotation, as indicated by their pointing performance. For these readers, the spontaneous monitoring of spatial information may reflect individual preferences in strategies for encoding text. More evidence about monitoring spatial dimensions in a spontaneous fashion comes from a study by Rommers, Meyer, and Huettig (2013), who showed that even without explicit instructions readers still used visual representations spontaneously and systematically to perform a verification task (requiring to determine whether an object with an implied shape and orientation has been previously mentioned in a sentence). The authors argued that the use of visual representations mostly occurs with explicit instructions rather than automatically under normal reading conditions, in line with our findings here.

xBeyond the lack of explicit visualization instructions, it is possible that the reduced use of the sensorimotor framework in Experiment 4 also reflects methodological differences across the reported experiments, resulting in differences in reading times. In Experiments 1–3, readers may have used the time of moving within the environment to better encode the spatial layout compared to Experiment 4, where due to the absence of body movement the time for additional encoding was limited. Nevertheless, we find this possibility unlikely because, in all of the experiments, participants were allowed to read the story a second time. With the second reading of the narrative, readers likely had the opportunity to fill in possible gaps from the first pass and create a precise spatial representation (see also Zwaan et al., 1995), which is confirmed by participants’ accurate drawings of the spatial layout at the end of Experiment 4. Indeed, recording reading times could have provided insights about participants’ level of processing under the varying extents of movement, manipulated across our experiments. For example, it would have been interesting to see if participants who linked the described environments to a sensorimotor framework in Experiment 4 processed the text deeper, as indexed by longer reading times, than those participants who did not. But because in the present experiments our focus was on post-encoding performance (i.e., whether participants’ rotation following encoding would induce spatial updating), we did not record reading times during encoding. Future research could benefit from also examining differences in the encoding narratives (and not only post-encoding performance) under different conditions of movement, as reflected by reading times.

Our findings here, documenting an influence of visualization instructions, are at odds with those of Avraamides et al. (2013) who also provided visualization instructions and attempted to link described spatial relations to the movement of the readers, but found no evidence of post-encoding updating. Avraamides et al. (2013) speculated that because integrating the linearly presented information from narratives into a situation model requires considerable effort, readers may have disregarded information that was not deemed critical to the causal chain of the described events. As a result, readers might have considered the protagonist’s rotation to be inconsequential as those stories provided no motivational context for the protagonist to change orientation. If this was the case, readers could have simply ignored the described rotation, even when it was accompanied by their own physical rotation. In addition to the potential role of the contextual relevance of the movement in the story, it is possible that the apparatus used in the study of Avraamides et al. (i.e., a configuration of the three computer desks placed around participants) partly occluded participants’ visual access to the laboratory room and therefore prevented them from linking the remote object locations to their actual surroundings. In contrast, in the current experiments, the link between the described environment and the laboratory could be more easily established, since the similarity between the shape of the described environment and the laboratory (which was deliberately reshaped to match the described environment) was likely more salient from the participants’ unobstructed vantage point at encoding. This point underscores that the effectiveness with which visual imagery is generated or recruited to solve a task (e.g., learning a described environment) may depend on task-specific or context-specific constraints. In this view, readers will employ certain strategies to solve the task, including the use of imagery, if needed (see also Rommers et al., 2013; van Dam, van Dijk, Bekkering, & Rueschemeyer, 2012).

Overall, the findings from the current experiments are consistent with those from studies in which environments are apprehended directly through perception (Magliano et al., 2007), showing that spatial relations conveyed through language can be monitored with physical movement, provided that they are linked to a sensorimotor framework (Avraamides & Kelly, 2008; Kelly et al., 2007). Although physical movement during encoding may encourage such encoding, it is not necessary. Visualization instructions without movement during encoding can also be sufficient for maintaining spatial relations in a sensorimotor framework. In cases where no link is made between the described environment and the immediate surroundings, information about spatial relations tends to be overlooked. As shown in Experiment 4, in these circumstances, people maintain a spatial representation from a preferred orientation that is aligned with the body’s orientation during encoding and refrain from updating it. However, even in the absence of visualization instructions, some readers may also be compelled to use their sensorimotor framework to encode described locations, in particular when the situation affords salient correspondences between the described environment and the immediate/perceptual surroundings. These findings are in line with those from studies examining updating with perceptual scenes, supporting the idea that various input modalities give rise to spatial images that can be used to support behavior the same way regardless of the input (see Loomis, Klatzky, & Giudice 2013, for a review).

Notes

Various theories of narrative identify a common set of structural features: there is typically a setup or introduction, an establishment of a goal, an attempt at the goal, an outcome or climax, and a resolution or reaction to that outcome (Cohn, 2013). The text used in the present study exhibits some of these features of narrative structure (e.g., there is a set-up and an establishment of a goal, there are attempts at the goal), while arguably missing other features (e.g., there isn’t a clear resolution in terms of the protagonist achieving their goal). With this point acknowledged, we continue to use the term “narratives” to highlight that a protagonist, while attempting their goal, experiences shifts in spatial location, which have to be monitored by the reader to construct an accurate situation model.

In this and previous studies (e.g., Kelly et al., 2007) the opposite to updated perspective is used as the baseline because both the opposite to updated perspective and the updated perspective deviate 900 from the learning perspective, which is considered to be privileged for spatial memory. This assumption about the learning perspective is supported by many studies documenting that, in the absence of conflicting cues, people construct orientation-dependent spatial memories whose orientation is determined by the learning viewpoint (see McNamara, 2003 for a discussion).

References

Avraamides, M. N. (2003). Spatial updating of environments described in texts. Cognitive Psychology, 47, 402–431. doi:10.1016/S0010-0285(03)00098-7

Avraamides, M. N., Galati, A., Pazzaglia, F., Meneghetti, C., & Denis, M. (2013). Encoding and updating spatial information presented in narratives. The Quarterly Journal of Experimental Psychology, 66, 642–670. doi:10.1080/17470218.2012.712147

Avraamides, M. N., & Kelly, J. W. (2008). Multiple systems of spatial memory and action. Cognitive Processing, 9, 93–106. doi:10.1007/s10339-007-0188-5

Avraamides, M. N., Theodorou, M., Agathokleous, A., & Nicolaou, A. (2013). Revisiting perspective-taking: can people maintain imagined perspectives? Spatial Cognition and Computation, 13, 50–78. doi:10.1080/13875868.2011.639915

Barsalou, L. W. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22, 577–660. doi:10.1017/s0140525x99002149

Borreggine, K. L., & Kaschak, M. P. (2006). The action sentence compatibility effect: It’s all in the timing. Cognitive Science, 30, 1097–1112. doi:10.1207/s15516709cog0000_91

Cohn, N. (2013). Visual narrative structure. Cognitive Science, 37, 413–452.

Curiel, J. M., & Radvansky, G. A. (2002). Mental maps in memory retrieval and comprehension. Memory, 10, 113–126. doi:10.1080/09658210143000245

De Vega, M. (2008). Levels of embodied meaning: From pointing to counterfactuals. In M. De Vega (Ed.), Symbols and embodiment (pp. 285–308). Oxford, UK: Oxford University Press. doi:10.1093/acprof:oso/9780199217274.003.0014

Gernsbacher, M. A. (1990). Language comprehension as structure building. Hillsdale, NJ: Erlbaum. doi:10.4324/9780203772157

Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin and Review, 9, 558–565. doi:10.3758/BF03196313

Hatzipanayioti, A., Galati, A., & Avraamides, M. N. (2015). The protagonist’s first perspective influences the encoding of spatial information in narratives. Quarterly Journal of Experimental Psychology, 69(3), 506–520. doi:10.1080/17470218.2015.1056194

Kaschak, M. P., & Borreggine, K. L. (2008). Temporal dynamics of the action-sentence compatibility effect. The Quarterly Journal of Experimental Psychology, 61, 883–895. doi:10.1080/17470210701623852

Kelly, J. W., Avraamides, M. N., & Loomis, J. M. (2007). Sensorimotor alignment effects in the learning Environment and in novel Environments. Journal of Experimental Psychology Learning Memory and Cognition, 33, 1092–1107. doi:10.1037/0278-7393.33.6.1092

Kintsch, W. (1998). Comprehension: A paradigm for cognition. Cambridge, UK: University Press.

Loomis, J. M., Klatzky, R. L., & Giudice, N. A. (2013). Representing 3D space in working memory: Spatial images from vision, hearing, touch, and language. In S. Lacey & R. Lawson (Eds.), Multisensory imagery: Theory and applications. New York: Springer. doi:10.1007/978-1-4614-5879-1_8

Magliano, J. P., Kopp, K., McNerney, M. W., Radvansky, G. A., & Zacks, J. M. (2012). Aging and perceived event structure as a function of modality. Aging, Neuropsychology, and Cognition, 19, 264–282. doi:10.1080/13825585.2011.633159

Magliano, J. P., Miller, J., & Zwaan, R. A. (2001). Indexing space and time in film understanding. Applied Cognitive Psychology, 15, 533–545. doi:10.1002/acp.724

Magliano, J. P., Radvansky, G. A., & Copeland, D. E. (2007). Beyond language comprehension: Situation models as a form of autobiographical memory. In F. Schmalhofer & C. A. Perfetti (Eds.), Higher level language processes in the brain: Inference and comprehension processes. Mahwah, NJ: Erlbaum.

Magliano, J. P., Trabasso, T., & Graesser, A. C. (1999). Strategic processing during comprehension. Journal of Educational Psychology, 91, 615–629. doi:10.1037/0022-0663.91.4.615

May, M. (2004). Imaginal perspective switches in remembered environments: transformation versus interference accounts. Cognitive Psychology, 48, 163–206. doi:10.1016/S0010-0285(03)00127-0

McNamara, T. P. (2003). How are the locations of objects in the environment represented in memory? In C. Freksa, W. Brauer, C. Habel, & K. F. Wender (Eds.), Spatial Cognition III: Routes and navigation, human memory and learning, spatial representation and spatial reasoning, LNAI 2685 (pp. 174–191). Berlin: Springer-Verlag.

Morrow, D. G., Bower, G. H., & Greenspan, S. L. (1989). Updating situation models during narrative com- prehension. Journal of Memory and Language, 28, 292–312. doi:10.1016/0749-596x(89)90035-1

Morrow, D. G., Greenspan, S. L., & Bower, G. H. (1987). Accessibility and situation models in narrative comprehension. Journal of Memory and Language, 26, 165–187. doi:10.1016/0749-596x(87)90122-7

Mou, W., & McNamara, T. P. (2002). Intrinsic frames of reference in spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 28, 162–170. doi:10.1037//0278-7393.28.1.162

Pick, H. L., Jr., & Rieser, J. J. (1982). Children’s cognitive mapping. In M. Potegal (Ed.), Spatial abilities: Development and physiological foundations (pp. 107–128). New York, NY: Academic Press.

Presson, C. C., & Montello, D. R. (1994). Updating after rotational and translational body movements: Coordinate structure of perspective space. Perception, 23, 1447–1455. doi:10.1068/p231447

Radvansky, G. A., & Copeland, D. E. (2000). Functionality and spatial relations in memory and language. Memory & Cognition, 28, 987–992. doi:10.3758/BF03209346

Radvansky, G. A., & Copeland, D. E. (2006). Walking through doorways causes forgetting: Situation models and experienced space. Memory & Cognition, 34, 1150–1156. doi:10.3758/bf03193261

Rieser, J. J. (1989). Access to knowledge of spatial structure at novel points of observation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 15, 1157–1165. doi:10.1037//0278-7393.15.6.1157

Rieser, J. J., Guth, D. A., & Hill, E. W. (1986). Sensitivity to perspective structure while walking without vision. Perception, 15, 173–188. doi:10.1068/p150173

Rinck, M., & Bower, G. H. (1995). Anaphora resolution and the focus of attention in situation models. Journal of Memory and Language, 34, 110–131. doi:10.1006/jmla.1995.1006

Rommers, J., Meyer, A. S., & Huettig, F. (2013). Object shape and orientation do not routinely influence performance during language processing. Psychological Science, 24(11), 2218–2225. doi:10.1177/0956797613490746

Stanfield, T. A., & Zwaan, R. A. (2001). The effect of implied orientation derived from verbal context on picture recognition. Psychological Science, 12, 153–156. doi:10.1111/1467-9280.00326

Therriault, D. J., Rinck, M., & Zwaan, R. A. (2006). Assessing the influence of dimensional focus during situation model construction. Memory & Cognition, 34, 78–89. doi:10.3758/bf03193388

van Dam, W. O., van Dijk, M., Bekkering, H., & Rueschemeyer, S.-A. (2012). Flexibility in embodied lexical-semantic representations. Human Brain Mapping, 33, 2322–2333. doi:10.1002/hbm.21365

van den Broek, P. W. (1990). The causal inference maker: Towards a process model of inference generation in text comprehension. In D. A. Balota, G. B. Flores d'Arcais, & K. Rayner (Eds.), Comprehension processes in reading (pp. 423–446). Hillsdale, NJ: Lawrence Erlbaum Associates.

van Oostendorp, H. (1991). Inferences and integrations made by readers of script-based text. Journal of Research in Reading, 14, 1–20. doi:10.1111/j.1467-9817.1991.tb00002.x

Waller, D., & Hodgson, E. (2006). Transient and enduring spatial representations under disorientation and self-rotation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 32, 867–882. doi:10.1037/0278-7393.32.4.867

Wassenburg, S. I., & Zwaan, R. A. (2010). Readers routinely represent implied object rotation: The role of visual experience. Quarterly Journal of Experimental Psychology, 63, 1665–1670. doi:10.1080/17470218.2010.502579

Zwaan, R. A. (2004). The immersed experiencer: Toward an embodied theory of language comprehension. In B. H. Ross (Ed.), The psychology of learning and motivation (Vol. 44, pp. 35–62). New York, NY: Academic Press. doi:10.1016/S0079-7421(03)44002-4

Zwaan, R. A., Langston, M. C., & Graesser, A. C. (1995). The construction of situation models in narrative comprehension: An event-indexing model. Psychological Science, 6, 292–297. doi:10.1111/j.1467-9280.1995.tb00513.x

Zwaan, R. A., Magliano, J. P., & Graesser, A. C. (1995). Dimensions of situation model construction in narrative comprehension. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21, 386–397. doi:10.1037/0278-7393.21.2.386

Zwaan, R. A., Radvansky, G. A., Hilliard, A. E., & Curiel, J. M. (1998). Constructing multidimensional situation models during reading. Scientific Studies of Reading, 2, 199–220. doi:10.1207/s1532799xssr0203_2

Zwaan, R. A., Stanfield, R. A., & Yaxley, R. H. (2002). Do language comprehenders routinely represent the shapes of objects? Psychological Science, 13, 168–171. doi:10.1111/1467-9280.00430

Zwaan, R. A., & Taylor, L. J. (2006). Seeing, acting, understanding: Motor resonance in language comprehension. Journal of Experimental Psychology: General, 135, 1–11. doi:10.1037/0096-3445.135.1.1

Zwaan, R. A., & van Oostendorp, H. (1993). Do readers construct spatial representations during naturalistic comprehension? Discourse Processes, 16, 125–143. doi:10.1080/01638539309544832

Author note

The present research was supported by research grants ERC-2007-StG 206912-OSSMA from the European Research Council and KOINΩ/0609(BE)/15 from the Cyprus Research Promotion Foundation.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

English translation of the Greek narrative used in the experiments

-

1.

“Imagine that you have just entered a store in order to buy clothes for your graduation this evening. You have left it for the last minute and you think that you won’t be able to find anything for the occasion. You have been standing for a while inside the store with the entrance at your back. You now start to walk to the center of the store.

-

2.

From the center of the store, you see in the distance in front of you the shelves. You think you should take a look. You walk towards the direction of the shelves and you start browsing the clothes. You cannot find anything that you like.”

-

3.

“You keep looking and you turn your head at the right side of the store. In the center of the right wall you see the cash register. You walk towards it to ask the store employee for help. The employee says you should go to the second floor of the store. Turning your head backwards and you see the stairs right behind you. You turn and walk to the stairs. When you arrive there, you realize that you don’t have much time for this, so you decide to try a different store at another time. You walk back towards the center of the store to thank the employee. As you walk you realize that you are impressed by the decoration that you did not notice earlier. You decide to take another look around.”

-

4.

“In the corner between the entrance and the cash register you see a mannequin doll dressed in nice clothes. You walk towards it to check the price tag on it. Unfortunately, there is no tag so you turn towards the cash register to ask the store employee about it. As you start to walk to the direction of the cash register you see in the corner between the shelves and the register a fish tank. You are impressed by the size of the fish task and you walk towards it to observe the fish in it.”

-

5.

“While at the fish tank, you hear a sudden noise coming from the corner, between the shelves and the stairs. You walk there to see what happened. As you arrive, you see a broken vase, so you think that you should inform the employee. You walk towards the center of the store and you see that the employee is busy. You look around the store and you see in the corner between the stairs and the entrance a broom. You think you should help out by picking up the broken pieces so you walk towards the broom.”

Rights and permissions

About this article

Cite this article

Hatzipanayioti, A., Galati, A. & Avraamides, M.N. Updating spatial relations to remote locations described in narratives. Mem Cogn 44, 1259–1276 (2016). https://doi.org/10.3758/s13421-016-0635-6

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13421-016-0635-6