Abstract

Functional magnetic resonance imaging (fMRI) has emerged as a viable method to study the neural processing underlying cognition in awake dogs. Working dogs were presented with pictures of dog and human faces. The human faces varied in familiarity (familiar trainers and unfamiliar individuals) and emotional valence (negative, neutral, and positive). Dog faces were familiar (kennel mates) or unfamiliar. The findings revealed adjacent but separate brain areas in the left temporal cortex for processing human and dog faces in the dog brain. The human face area (HFA) and dog face area (DFA) were both parametrically modulated by valence indicating emotion was not the basis for the separation. The HFA and DFA were not influenced by familiarity. Using resting state fMRI data, functional connectivity networks (connectivity fingerprints) were compared and matched across dogs and humans. These network analyses found that the HFA mapped onto the human fusiform area and the DFA mapped onto the human superior temporal gyrus, both core areas in the human face processing system. The findings provide insight into the evolution of face processing.

Introduction

Dogs and humans share a unique history that spans at least 18,000 years (Thalmann et al., 2013). It is undeniable that in those societies fostering the domestication of pet and working dogs, dogs have reached a unique social status unknown to any other non-human animal. Humans and dogs have developed a complex social repertoire and the study of canine cognition has begun providing rich evidence of evolutionary adaptations fostering cross-species interaction (e.g., Hare, Brown, Williamson, & Tomasello, 2002; Miklósi & Topál, 2013). For instance, dogs respond well to human communicative gestures, speech, pointing, and body posture. Dogs are clearly sensitive to human social cues and can discriminate faces. Recent evidence also indicates that there is a face-selective area in the dog brain of pet dogs for processing human and dog faces (Cuaya, Hernández-Pérez, & Cocha, 2016; Dilks et al., 2015). Using functional magnetic resonance imaging (fMRI) in awake dogs trained for detection (for reviews see Bunford, Andics, Kis, Miklósi, & Gácsi, 2017; Thompkins, Deshpande, Waggoner, & Katz, 2016), we investigated nuances in face regions of the domestic dog specific to cross-species stimulus processing and evolutionary origins of face processing. By comparing neural activation resultant of viewing human and dog face stimuli, we have identified adjacent but separate brain areas for processing of human faces and dog faces by domestic dogs. We have also mapped face-processing regions in the dog brain that are functionally analogous to those of humans. In this article, we briefly review face processing in humans followed by dogs before describing the experiment.

Face processing in humans

Face processing is a vital component of human evolution and social cognition. By extracting information from faces, humans are able to recall a person’s identity, retrieve information about that identity, and use cues (such as the emotional state of the person) to aid in socialization (Paller et al., 2003). Across studies, the neuroanatomical structures and activation patterns underlying the recognition of a person’s identity and associated mental states (such as the person’s emotion) have been guided by evolving models of face processing, typically defined by two pathways: one that processes identity information and one that processes dynamic information such as movement and intentions. Early face-processing theory focused on identity (Bruce & Young, 1986) and expanded to consider affect (Calder & Young, 2005). A later model by Haxby, Hoffman, and Gobbini (2000) became dominant and consists of a core system activated by invariant traits (identity) and an extended system activated by dynamic traits that facilitate communication, such as emotional expression or eye gaze. The Haxby model assigns processing of invariant facial aspects to the fusiform face area (FFA) and changeable aspects to the posterior region of the superior temporal sulcus (pSTS).

An extension of the Haxby model was proposed by O’Toole et al. (2002) to account for dynamic motion of familiar faces, and this model was again expanded by Bernstein and Yovel (2015). The current understanding of face processing separates the ventral stream through the FFA (face form) from the dorsal stream through the STS (dynamic information, e.g., motion). Functional and anatomical face processing homologs have been consistently identified across primate species in dorsal and ventral streams, and the number of face-selective areas is similar among primates (Freiwald, Duchaine, & Yovel, 2016). Some authors have provided supplemental evidence for an anterior processing area in humans where the dorsal and ventral streams may converge and where more robust familiarity representations and socially relevant aspects may be processed (Duchaine & Yovel, 2015; di Oleggio Castello, Halchenko, Guntupalli, Gors, & Gobbini, 2017). Familiarity effects tied to semantic and emotional information emphasize the social role of face recognition (Freiwald et al., 2016). Additionally, studies of familiar face processing provide evidence for simultaneous activation of emotion processing areas such as the amygdala and insula (di Oleggio Castello et al., 2017).

Collectively, these systems provide the basis for keenly tuned human expertise in extracting information from faces. Regardless of any nuances among theories, if domestic dog processing of faces is qualitatively similar to that of humans, then we should expect to see functionally analogous regions of activation upon presentation of ecologically relevant stimuli. In this case, we should expect to see activation of regions implicated in human and dog processing of familiar and emotional faces, including the caudate (Cuaya et al., 2016), hippocampus (Paller et al., 2003), and amygdala (di Oleggio Castello et al., 2017).

Face processing in dogs

Domestic dogs can discriminate faces, even when stimulus quality and richness are degraded (e.g., Huber, Racca, Scaf, Virányi, & Range, 2013). In addition to displaying highly-tuned recognition of human faces, dogs are also successful at recognizing the emotions carried by those faces (Albuquerque et al., 2016; Barber, Randi, Müller, & Huber, 2016; Müller, Schmitt, Barber, & Huber, 2015; Nagasawa, Murai, Mogi, & Kikusui, 2011). Of note, species that are evolutionarily more closely related to humans than dogs do not perform well on such tasks of human face recognition. This finding is described as the “species-specific effect” (Pascalis & Bachevalier, 1998) of face recognition, a principle analogous to the “other-race effect” in humans. When presented with images of human faces and monkey faces, rhesus monkeys paid more attention to novel monkey faces than familiar ones, and did not show differential attention between novel and familiar faces of humans (Pascalis & Bachevalier, 1998). Further research has supported this effect, demonstrating similar results in brown capuchins and Tonkean macaques (Dufour, Pascalis, & Petit, 2006). In both studies, for human participants, attention was more differentially focused for human faces than for monkey faces, in line with a species-specific effect.

Young human children, for comparative and developmental reasons, are often included as a comparison group in studies of canine cognition. Racca, Guo, Meints, and Mills (2012) compared the ability of dogs to differentiate between emotional facial expressions by humans to that of children, who can accurately categorize emotional faces by age 4 years. Upon presentation of positive, neutral, and negative human and dog faces, the researchers found that dogs and human children showed different looking and lateralization behaviors. Specifically, data obtained from dogs suggested hemispheric processing based on a valence model of emotion processing. That is, the dogs’ responses supported right hemisphere processing to negative emotions and left hemisphere processing to positive emotions. Further, it seems that neutral expressions are processed similarly to negative expressions in dogs, possibly due to the lack of approach signals that dogs are able to extract from positive expressions. In opposition to this valence-based model, the right hemisphere model was supported by the children’s data, which suggests processing of all emotional expressions by the right hemisphere. Though this study might suggest that hemispheric processing in dogs and humans is species-specific, the authors note that juvenile humans’ hemispheric use may change in adulthood (Racca et al., 2012).

Lateralization of face processing has been further supported by Barber et al. (2016) and Siniscalchi, d’Ingeo, Fornelli, and Quaranta (2018). When presenting lab and pet dogs with faces of varying emotional content and familiarity, Barber et al. (2016) found that dogs demonstrated a strong left gaze bias for all emotive stimuli. Additionally, there were subtle differences in gaze biases according to familiarity of the model and the content of the emotional expression. This data suggests right hemisphere lateralization of face stimuli, which was also supported by Siniscalchi et al. (2018). Here, the authors identified orienting asymmetries in response to human emotional faces. Specifically, dogs oriented their heads to the left in response to expressions of anger, fear, and happiness, indicating processing in the right hemisphere. In this study, dogs exhibited no orienting biases in response to human expressions of sadness. Taken together, these studies provide support for lateralization of emotional face processing, but the question remains as to whether this lateralization is restricted to the right hemisphere for all emotional stimuli or if lateralization is split according to positive and negative valence.

Experience as the primary mediator of non-human attention to human cues is of particular interest in any investigation of cross-species social cognition. Even in studies of other species that have been domesticated by humans (e.g., horses: McKinley & Sambrook, 2000; goats: Kaminski, Riedel, Call, & Tomasello, 2005), dogs often outperform them, especially in the use of facial-based cues. Because dogs have been shown to perform well in object-choice tasks often without significant human interaction, their capacity to follow human-given cues has been considered by some to be a by-product of domestication (e.g., Hare et al., 2002). However, enculturation and experience cannot be ruled out for face processing in any species, and some research suggests that ontogenetic effects exist in non-dogs (Lyn, Russell, & Hopkins, 2010). In a study by Barber et al. (2016), the role of ontogenetic experience was emphasized, as substantial differences in face processing by pet dogs and laboratory dogs were identified. In summary, while domestication may be important in shaping human face discrimination in dogs, ontogeny cannot be overlooked.

Given the capacity for human face discrimination in dogs and potential ontogenetic influences, we may expect to see differences in discrimination performance (particularly in regard to emotional expression) that are mediated by role. Though dogs are most commonly thought of as pets, they also fulfill a variety of working roles that may require more or less attention to human cues. For example, scent detection dogs are often involved in tasks requiring independence and focus on olfactory cues, and are usually kenneled, so they do not experience the same enculturation as pet dogs, whereas service dogs must attend closely to their handler’s state of being and often live with humans. As such, processing of face identity and emotional expression may correlate with better or worse performance in specialized working roles.

Heterospecific Face Processing and Attachment

To develop a framework for studying dogs’ functioning in their relationship with humans, we look to similar research that has been conducted with humans. Stoeckel, Palley, Gollub, Niemi, and Evins (2014) used fMRI to compare responsiveness to child and pet dog images as seen by their mothers/owners. Participants completed behavioral measures for assessment of attachment to their children and dogs, after which they viewed images of their own child, their own dog, and unfamiliar dogs and children in the scanner and were asked to score them according to valence and arousal.

Attachment measures indicated that 93% of participants were extremely attached to their pet dog, considering him or her as a family member. Indeed, functional data revealed overlapping regions of brain activation including those associated with reward, emotion, and affiliation, namely the amygdala, hippocampus, and fusiform gyrus. However, two contrasts did reveal significant differences between familiar conditions. Images of one’s child led to activation of the substantia nigra/ventral tegmental area (implicated in reward and affiliation) whereas this pattern of activation was not seen with images of one’s dog. And although the amygdala was activated by both conditions, images of one’s dog led to greater activation of the fusiform gyrus than did one’s child. Stoeckel et al. (2014) note that this may be due to the lack of language-based affiliation with dogs, as human-dog interaction may be more dependent on face perception to pick up on emotion, gaze direction, and identity.

Using dog fMRI to study face processing

Despite growing evidence for complex social cognition in the domestic dog, neurological investigations are new and few. Dilks et al. (2015) first provided evidence for a specialized face processing area within the brain of pet dogs. They found that the right inferior temporal cortex was differentially activated for human videos as opposed to objects. Focusing on this region of interest found for videos, next, they presented pictures of human faces, dog faces, objects, scenes, and scrambled faces. They found that human and dog faces activated the same area of the brain as the human videos. Faces, regardless of species, resulted in greater activation than objects and scenes. Interestingly, there were no activation differences between scrambled and unscrambled faces. To this end, processing of low-level features in face stimuli could be implicated as a reason for activation in this visual area. In comparing pictures of faces versus objects, Cuaya et al. (2016) utilized whole-brain, voxel-wise analyses and identified bilateral temporal cortex (ventral-posterior regions) activation for faces, with slightly more activity in the left temporal cortex in pet dogs.

These prior studies demonstrate evidence for selective processing of human and dog faces in domestic dogs; however, prior studies have not uncovered finer spatial specificity in processing of face stimuli of different species, perhaps partly due to larger voxel sizes amongst other possible reasons such as head motion. Further, these studies have focused solely on the dog brain and have not made comparative efforts to identify functional analogy with humans. The purpose of the present study was twofold. First, we sought to identify precise (and spatially fine-grained) regions of the dog brain implicated in processing of dog and human face stimuli. Second, we sought to make comparisons between dog and human processing of face stimuli. We hypothesized, based on prior research, that the brain regions involved in dogs’ processing of conspecific and human faces would be localized to the temporal cortex (Cuaya et al., 2016; Dilks et al., 2015), but spatially different between the two conditions given our improved spatial resolution and advanced techniques for motion correction. Working detector dogs were trained, using reinforcement learning, to lie motionless and awake during fMRI and to attend to projected face stimuli that varied in emotional valence (human faces) and familiarity (human and dog faces). Through this work, we have identified separate regions within the dog brain for specific processing of human and dog faces. Further, such specificity was not driven by emotion or familiarity.

Methods

Subjects

Twelve dogs (Canis familiaris) were included in this study. All dogs were between 6 months and 3 years of age. Both male and female dogs were used in training and in scanning. All dogs remained awake for imaging, for which they were trained to lie in a prone position on the scanner bed with head inserted into a human knee coil. Positive reinforcement was provided to keep dogs as still as possible and to desensitize them to the scanner environment (Jia et al., 2014). Ethical approval for this study was obtained from the Auburn University Institutional Animal Care and Use Committee and all methods were performed in accordance with their guidelines and regulations.

Image presentation

We used a stimulus-driven visual task in which awake and unrestrained dogs were presented with still images of human and dog faces via an in-scanner projector screen. The human faces were still image captures of familiar (a dog’s handler) and unfamiliar (a stranger) people. Because familiarity with individual handlers varied, the dogs were assigned to groups based on common familiarity with handlers included in a stimulus set. Group-specific human stimulus sets were selected from a pool of 51 stimuli (17 individuals × 3 valence types). For each dog group, the familiar face set was comprised of the positive, neutral, and negative images for each of four familiar handlers. The unfamiliar face set was drawn from the pool of unfamiliar individuals and included the four positive, four neutral, and four negative images most closely matched in valence to the images in the familiar set. The dog faces were still image captures of familiar (kennel mates) and unfamiliar (stranger) dogs. The dog stimulus set included four familiar (kennel mate) dog images and four unfamiliar dog images. These did not vary in valence.

The emotional content of each human image was rated by 88 undergraduate students using a self-paced online survey. Participants were asked to score each image according to emotional valence. They were asked to identify the emotion displayed as well as the degree of that emotion on a scale from “Very Low” to “Very High.” Mean scores were calculated for individual images, resulting in a composite score for each stimulus ranging from -5 (negative) to +5 (positive; see Supplementary Material, Fig. S1 for examples). Familiar and unfamiliar faces were matched for valence. Dog images depicted dog faces in a relaxed state, as judged by the experimenters (see Supplementary Material, Fig. S2 for examples). We did not attempt to manipulate or score the dog images on valence because the accuracy of human ratings of emotions in dog faces has been shown to vary with experience (Bloom & Friedman, 2013).

All images were digitally analyzed using Fourier analysis in order to examine their spectral content (which determines color) and absolute magnitude (which determines image intensity). No significant differences were found between human and dog faces (p = 0.45 for spectral content and p = 0.32 for absolute magnitude, as determined by independent sample t-tests), implying that differences in brain responses to these two types of stimuli will likely not be driven by low-level features such as color and intensity.

Scanning

Functional MRI data were acquired in a Siemens 3T Verio (Erlangen, Germany) scanner (see Jia et al., 2014). Each run totaled 140 seconds and included either human or dog faces (which contained both familiar and unfamiliar faces). Within each human stimulus set, there were four positive familiar images, four neutral familiar images, four negative familiar images, four positive unfamiliar images, four neutral unfamiliar images, and four negative unfamiliar images. During each scanning session, each dog was presented with all stimuli, randomly broken into runs of 140 s (12 stimuli per run). For dogs that viewed dog face stimuli, runs also included eight randomly-distributed dog face stimuli (four familiar and four unfamiliar). Face stimuli were presented via projector screen for 5 s, after which a blank screen was presented for a variable 3- to 11-s inter-stimulus interval (ISI). The optimized timing of the ISI was obtained from OPTSEQ software (https://surfer.nmr.mgh.harvard.edu/optseq/). A 1-s repetition time (TR) was used. Each dog completed four runs in a randomized order, with each run including stimuli from all conditions.

Several precautions were taken to ensure that only trials in which the dogs attended to the stimulus were analyzed. Attention was judged by two raters per video via simultaneous video recording of the dog’s eye inside the scanner. This video recording was synchronized with stimulus presentation such that raters could review the video post hoc to determine whether the dog was attending to a given stimulus or not. For each trial, if the dog’s eye was visibly open, the rater assigned a score of “yes.” If the dog’s eye was closed or not open enough for the pupil to be visible, then the rater assigned a score of “no” (see Supplementary Material, Fig. S3 for examples). Inter-rater reliability was assessed for each trial, and only trials with perfect inter-rater agreement of attentiveness were retained for data analysis.
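The retention rule can be illustrated with a minimal sketch. It assumes that "perfect inter-rater agreement of attentiveness" means both raters scored the trial "yes"; the function name and the example scores are hypothetical and are not taken from the study.

```python
def retained_trials(rater_a, rater_b):
    """Return indices of trials that both raters scored "yes" (attended).

    Assumption: trials on which the raters disagree, or on which both
    scored "no", are excluded from analysis.
    """
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must score the same number of trials")
    return [
        i for i, (a, b) in enumerate(zip(rater_a, rater_b))
        if a == "yes" and b == "yes"
    ]


# Hypothetical scores for six trials (illustrative only).
rater_a = ["yes", "yes", "no", "yes", "no", "yes"]
rater_b = ["yes", "no", "no", "yes", "yes", "yes"]
kept = retained_trials(rater_a, rater_b)
print(kept)
```

In this example, trials 0, 3, and 5 are kept; the two trials with disagreement and the trial that both raters scored "no" are dropped.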

Data acquisition

Functional MRI data were acquired using a T2*-weighted single shot echo planar imaging (EPI) sequence with 16 axial slices, slice thickness = 3 mm, repetition time (TR) = 1,000 ms, echo time (TE) = 29 ms, field of view (FOV) = 150 × 150 mm², flip angle (FA) = 90°, in-plane resolution 2.3 × 2.3 mm, in-plane matrix = 64 × 64 and 200 temporal volumes in each run. In addition to the visual task described above, two resting state runs were also acquired for each dog using identical scanning parameters. During the resting state scan, no visual stimuli were presented, and the dogs were trained to lie still with their eyes open. A human knee coil was used as a head coil for the dog brain, and all dogs were trained to keep their heads in the coil as still as possible (with eyes open) during the scanning. Anatomical images were acquired using a magnetization-prepared rapid gradient echo (MPRAGE) sequence for overlay and localization (TR = 1,990 ms, TE = 2.85 ms, voxel size: 0.6 × 0.6 × 1 mm³, FA = 9°, in-plane matrix 192 × 192, FOV = 152 × 152 mm², number of slices: 104). An external head motion-tracking device based on an infra-red camera and reflector was used to obtain independent assessments of the dog’s head motion at high spatio-temporal resolution (see Jia et al., 2014, for details).

Image processing

Data processing was performed using SPM12 (http://www.fil.ion.ucl.ac.uk/spm/software/spm8/, Functional Imaging Lab, The Wellcome Trust Centre for NeuroImaging, The Institute of Neurology at University College London). All data were subjected to standard preprocessing steps, including realignment to the first functional image, spatial normalization to our own canine template, spatial smoothing and motion censoring (discussed in Jia et al., 2014). The single camera-based external motion-tracking device provided estimates of motion that had both high temporal and spatial resolution. This information was used along with realignment parameters for data quality assessment. The data were completely discarded when the external camera detected more than one voxel of motion because usable data cannot be retrieved in such a scenario even after motion censoring and interpolation. On the other hand, when motion detected from the external camera was less than one voxel, we retrospectively estimated framewise displacement using realignment parameters, and used censoring and interpolation when framewise displacement exceeded 0.2 mm (Power et al., 2015).
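The censoring step can be sketched as follows. This is an illustration under stated assumptions, not the authors' pipeline: it uses a commonly used definition of framewise displacement (the sum of absolute volume-to-volume changes in the six realignment parameters, with rotations converted to millimetres of arc on a sphere). The 50 mm radius is the value conventionally used for human heads and is only a placeholder here; an appropriate radius for the dog head is not given in the text. Function names and example values are hypothetical.

```python
def framewise_displacement(params, radius_mm=50.0):
    """Framewise displacement (mm) per volume from realignment parameters.

    params: one [tx, ty, tz, rx, ry, rz] row per volume, with translations
            in mm and rotations in radians.
    radius_mm: sphere radius used to convert rotations to mm of arc
               (placeholder value; an assumption for illustration).
    The first volume has no predecessor, so its displacement is set to 0.
    """
    fd = [0.0]
    for prev, curr in zip(params, params[1:]):
        translation = sum(abs(c - p) for p, c in zip(prev[:3], curr[:3]))
        rotation = sum(abs(c - p) * radius_mm for p, c in zip(prev[3:], curr[3:]))
        fd.append(translation + rotation)
    return fd


def censored_volumes(fd, threshold_mm=0.2):
    """Indices of volumes whose displacement exceeds the threshold."""
    return [i for i, d in enumerate(fd) if d > threshold_mm]


# Hypothetical realignment parameters for four volumes.
params = [
    [0.00, 0.0, 0.0, 0.000, 0.0, 0.0],
    [0.05, 0.0, 0.0, 0.000, 0.0, 0.0],
    [0.05, 0.3, 0.0, 0.000, 0.0, 0.0],
    [0.05, 0.3, 0.0, 0.001, 0.0, 0.0],
]
fd = framewise_displacement(params)
flagged = censored_volumes(fd)
print(fd, flagged)
```

Only the third volume (index 2) moves by more than 0.2 mm relative to its predecessor, so it alone would be censored and interpolated in this example.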

Following preprocessing, general linear models (GLMs) were built at the individual subject level with regressors for each condition as well as nuisance regressors derived from motion estimates obtained from image-based realignment in addition to those independently obtained from the external motion-tracking device. Using random effects analyses, effects of interest were tested at the group level. Specifically, using t-tests, voxels that had significantly different activity for human versus dog faces were ascertained. Statistical significance was determined using a threshold of p < 0.05, FDR (false discovery rate) corrected.

In order to determine whether the brain regions found above were also parametrically modulated by emotions, we used parametric regressors in the individual subject GLMs. The amplitude of these regressors was scaled between values of +5 and −5, representing the range of the emotional valence ratings of the human face stimuli. Brain regions that were significantly parametrically modulated by emotion in human faces were then determined at the group level. A similar analysis was not performed for the dog face stimuli because the images were neutral and did not vary in emotional valence.
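As a rough illustration of what an amplitude-scaled regressor looks like, the sketch below builds a boxcar at TR resolution whose height during each 5-s stimulus equals that stimulus's valence rating. It is deliberately simplified: the regressor is not convolved with a hemodynamic response function and is not mean-centred, both of which analysis packages typically handle. The function name, onsets, and ratings are hypothetical.

```python
def parametric_regressor(onsets_s, valences, n_volumes, duration_s=5.0, tr_s=1.0):
    """Boxcar regressor whose height is the valence rating (-5 to +5).

    onsets_s: stimulus onset times in seconds.
    valences: one valence rating per onset.
    Simplification: no HRF convolution and no mean-centring.
    """
    if len(onsets_s) != len(valences):
        raise ValueError("need exactly one valence rating per onset")
    regressor = [0.0] * n_volumes
    for onset, valence in zip(onsets_s, valences):
        start = int(round(onset / tr_s))
        stop = min(n_volumes, int(round((onset + duration_s) / tr_s)))
        for volume in range(start, stop):
            regressor[volume] = float(valence)
    return regressor


# Two hypothetical trials: a positive face at 0 s and a negative face at 10 s.
regressor = parametric_regressor([0, 10], [4, -3], n_volumes=20)
print(regressor)
```

Volumes 0 to 4 take the value 4, volumes 10 to 14 take the value −3, and all other volumes are 0.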

In order to determine whether the main effects of dog versus human faces were influenced by the familiarity of the human and dog faces, familiar versus unfamiliar contrasts were tested at the group level in both human and dog samples. We investigated whether (dog face vs. human face) and (familiar face vs. unfamiliar face) contrasts showed common voxels across the dog brain. The basic level contrasts for familiarity and emotion are reported elsewhere (Thompkins, 2016).

Identification of functionally analogous brain regions in humans

As noted in the results section, two small but distinct patches of the temporal cortex were responsive more to dog faces as compared to human faces (referred to as dog face area or DFA) as well as more responsive to human faces as compared to dog faces (referred to as human face area or HFA). The role of such small, distinct, and specific patches of the temporal cortex in canine cognition is unclear due to the lack of corresponding literature. In contrast, the functions of specific human brain regions within the temporal cortex have been very well studied. Therefore, if we were to find the functional analogues of DFA and HFA regions in the human brain, it would aid interpretation of our results. Further, it would also inform us about the evolutionary roles of these regions. Consequently, we mapped dog brain regions with significantly different activity for dog and human faces to functionally analogous regions in the human brain. This was achieved by comparing the similarity of functional connectivity fingerprints of these regions using a permutation testing framework (please refer to the following papers for details regarding this method: Mars, Sallet, Neubert, & Rushworth, 2013; Mars et al., 2014; Mars et al., 2016; Passingham, Stephan, & Kotter, 2002). This framework is based on the assumption that if Region-A in the dog brain (such as DFA or HFA) has a functional connectivity pattern similar to Region-B in the human brain, then their relative functional roles in dog and human brains, respectively, must also be similar. Therefore, our aim was to find those regions in the human brain (via an exhaustive search), which have functional connectivity profiles similar to DFA and HFA in the dog brain.

If connectivity were to be calculated between a seed (DFA or HFA in the dog brain) and all other gray matter voxels in the brain, then it would yield high-dimensional profiles that can be hard to summarize. Given that whole brain functional connectivity fingerprints have high dimensionality, we used the approach proposed by Passingham et al. (2002) where the fingerprint is derived from certain “target” regions that are selected to represent the broadest possible array of functions. In addition to reducing dimensionality, this approach also avoids overfitting.

For the purposes of this study, “functional connectivity” between two voxels/regions was defined as the Pearson’s correlation between time series derived from those two voxels/regions. We normalized the functional connectivity data using the maximum connection strength within the given brain. Thus, normalized functional connectivity metrics then represented relative strength of connectivity and allowed us to compare connectivity profiles obtained from data acquired with different scanning parameters for dogs and humans.
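A minimal sketch of this definition is given below, assuming each region is represented by a single time series. The helper names and the toy time series are hypothetical. In the study the normalizing constant is the maximum connection strength within the given brain; in the example, the largest absolute value in the toy fingerprint stands in for that quantity.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length time series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("time series must have equal length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant time series")
    return cov / math.sqrt(ss_x * ss_y)


def connectivity_fingerprint(seed_ts, target_ts_list):
    """Correlation of the seed time series with each target time series."""
    return [pearson(seed_ts, target) for target in target_ts_list]


def normalize(fingerprint, max_strength):
    """Divide by the maximum connection strength of the given brain."""
    return [value / max_strength for value in fingerprint]


# Toy time series (illustrative only): one seed and three targets.
seed = [1, 2, 3, 4, 5]
targets = [[2, 4, 6, 8, 10], [5, 4, 3, 2, 1], [1, 3, 2, 5, 4]]
fingerprint = connectivity_fingerprint(seed, targets)
# Stand-in for the brain-wide maximum connection strength (assumption).
brain_max = max(abs(value) for value in fingerprint)
normalized = normalize(fingerprint, brain_max)
print(normalized)
```

Because each fingerprint is divided by its own brain's maximum, the resulting values express relative rather than absolute connection strength, which is what makes dog and human fingerprints comparable despite different acquisition parameters.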

The characteristics of resting state fMRI data acquired from dogs have already been described in the Data acquisition section. In humans, resting state functional connectivity was obtained using preprocessed resting state fMRI data from the Human Connectome Project (HCP) (HCP 500 Subjects + MEG2 Data Release). Our human sample included scans from young healthy adults (age range: 22–35 years) obtained at 3T. Based on Lebeau’s model (Patronek, Waters, & Glickman, 1997), 154 human subjects were manually selected to match the gender, number of scans, and age in dog-year equivalents (Table 1) with our dog sample. Data from 30 dogs (including the 12 of focus in this study) with an age range of 12–36 months were used in resting-state analyses. The HCP functional data were acquired on a Siemens Skyra 3T scanner using a multiplexed gradient-echo EPI sequence with slice thickness = 3 mm, TR = 720 ms, TE = 33.1 ms, FA = 52°, FOV = 208 × 180 mm², in-plane matrix = 104 × 90 and 1,200 temporal volumes in each run. For more details about data acquisition and preprocessing of the HCP data, please see the “HCP 500 Subjects + MEG2 Data Release” reference manual (https://www.humanconnectome.org/storage/app/media/documentation/s500/hcps500meg2releasereferencemanual.pdf) as well as Smith et al. (2013).

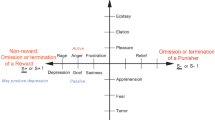

In order to determine connectivity fingerprints for each subject, 19 “targets” were identified. These included regions of interest (ROIs) involved in basic sensory, social, and cognitive functions in the dog brain, which have also been implicated in guiding canine behavior in previous dog fMRI studies (Andics et al., 2014, 2016; Berns et al., 2012, 2015, 2016; Cook et al., 2014; Cuaya et al., 2016; Dilks et al., 2015; Jia et al., 2014, 2015; Kyathanahally et al., 2015; Thompkins et al., 2016; Ramahihgari et al., 2018). Figure 1 illustrates the spatial locations of these regions in the dog and human brains, while Table 2 shows the MNI coordinates of these regions in the human brain. For the dogs, authors who were well versed in canine neuroanatomy manually marked these target regions in our own custom standardized space, as discussed in our previous publications (Jia et al., 2014, 2015; Kyathanahally et al., 2015; Thompkins et al., 2016). The locations of the dog regions were also motivated by findings in previous dog fMRI studies cited above. We understand that this introduces an amount of subjectivity in the exact location of these ROIs, but would like to note that in the absence of a large body of canine neuroimaging findings reported in a standardized space, this is inevitable. This procedure also mirrors the approach adopted by early neuroimaging studies before the advent of standardized spaces (such as Talairach and MNI space) and co-ordinate based meta-analysis using activation likelihood estimation (Fox, Parsons, & Lancaster, 1998). In humans, the MNI co-ordinates of target regions were identified using the BrainMap database (www.brainmap.org).
The “target” ROIs were: ventromedial prefrontal cortex (vmPFC), bilateral dorsolateral prefrontal cortex (dlPFC), bilateral ventrolateral prefrontal cortex (vlPFC), anterior cingulate cortex (ACC), posterior cingulate cortex (PCC), bilateral temporal cortex, bilateral inferior parietal lobule (IPL), visual cortex, bilateral amygdala, bilateral caudate, bilateral hippocampus, and olfactory bulb. Please note that the target ROIs in dogs and humans need not be homologous; they were picked to sample regions involved in the broadest possible array of functions, as in previous studies (e.g., Mars et al., 2013, 2014, 2016). Also, the nomenclature used refers to the location of these regions in different cortices (by employing words such as inferior, bilateral, posterior, dorsal, etc.), and we have explicitly avoided referring to specific sulci and gyri since they may have different nomenclatures in dogs and humans.

Spatial locations of “target” regions in human brain (a) and dog brain (b). Region of interest (ROI) abbreviations: ventromedial prefrontal cortex (vmPFC), bilateral dorsolateral prefrontal cortex (dlPFC), bilateral ventrolateral prefrontal cortex (vlPFC), anterior cingulate cortex (ACC), posterior cingulate cortex (PCC), bilateral temporal cortex, bilateral inferior parietal lobule (IPL), visual cortex, bilateral amygdala (Amy), bilateral caudate (Caud), bilateral hippocampus (Hippo) and the olfactory bulb (OB)

In the dog brain, “seed” regions were defined as contiguous clusters of voxels that differed significantly in the human face versus dog face comparison at the group level (i.e., the DFA and HFA, as noted above). In the human brain, our objective was to find seed regions whose connectivity fingerprints with the target regions were similar to the connectivity fingerprints of the dog seed regions (i.e., DFA and HFA) with the corresponding target regions. Therefore, every voxel in the human brain’s gray matter was considered a seed region. For each subject, the connectivity fingerprint of each “seed” region was assessed by correlating the time series of the seed region (the single-voxel time series in humans and the mean time series of the seed ROI in dogs) with the time series of each “target” region. It should be noted that the number of “targets” should not be so large as to cause overfitting, yet should be sufficient to capture the diversity of connectivity from the seed regions.
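As an illustration only, the fingerprint computation described above can be sketched in a few lines of Python. The function names (`pearson`, `fingerprint`, `mean_time_series`), the toy data, and the choice of Pearson correlation as the correlation measure are assumptions made for this sketch; it does not reproduce the preprocessing or software used in the study.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length time series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mean_time_series(voxel_ts_list):
    """Average the voxel time series within an ROI (as done for dog seeds)."""
    n_vox = len(voxel_ts_list)
    n_time = len(voxel_ts_list[0])
    return [sum(v[t] for v in voxel_ts_list) / n_vox for t in range(n_time)]


def fingerprint(seed_ts, target_ts_list):
    """Connectivity fingerprint: one correlation value per target region."""
    return [pearson(seed_ts, target) for target in target_ts_list]


# Hypothetical toy data: 19 targets, 120 time points, a 5-voxel dog seed ROI.
rng = random.Random(0)
targets = [[rng.gauss(0, 1) for _ in range(120)] for _ in range(19)]
roi_voxels = [[rng.gauss(0, 1) for _ in range(120)] for _ in range(5)]

# Dog seed: correlate the ROI mean time series with each target.
dog_seed_fp = fingerprint(mean_time_series(roi_voxels), targets)

# Human seed: correlate a single voxel time series with each target.
human_voxel_fp = fingerprint(roi_voxels[0], targets)

print(len(dog_seed_fp), len(human_voxel_fp))
```

Each fingerprint is a vector with one entry per target, so fingerprints from different seeds (and, with matched target sets, from different species) can be compared entry by entry.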

To find voxels in the human brain that share the same fingerprint, or pattern of connectivity, with the “seed” regions in dogs, the Manhattan distance between the mean connectivity fingerprint of each human seed and that of each dog seed (averaged over the human and the dog samples, respectively) was estimated (Fig. 2). If a and b are vectors of dimension k representing two connectivity fingerprints, then the Manhattan distance between them is defined as \( \sum_{i=1}^{k} \lvert a_i - b_i \rvert \).
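A minimal sketch of this matching step follows, assuming each fingerprint is stored as a plain list of correlation values. The voxel labels and fingerprint values are hypothetical, and only three targets are used for brevity (the study used 19).

```python
def manhattan(a, b):
    """Manhattan (L1) distance between two fingerprints of equal length."""
    if len(a) != len(b):
        raise ValueError("fingerprints must have the same number of targets")
    return sum(abs(x - y) for x, y in zip(a, b))


def closest_voxel(dog_fp, human_fps):
    """Return the human voxel whose fingerprint is nearest to the dog seed."""
    distances = {vox: manhattan(fp, dog_fp) for vox, fp in human_fps.items()}
    best = min(distances, key=distances.get)
    return best, distances


# Hypothetical mean fingerprints (one correlation per target region).
dog_seed = [0.6, 0.1, -0.2]
human_voxels = {
    "yellow": [0.5, 0.2, -0.1],
    "purple": [0.0, 0.7, 0.4],
    "red": [-0.3, 0.1, 0.5],
}

best, distances = closest_voxel(dog_seed, human_voxels)
print(best)  # yellow
```

A smaller distance means a more similar pattern of connectivity, so the voxel with the minimum distance is the candidate functional analog of the dog seed.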

Connectivity fingerprint matching approach illustrated graphically. Individual “target” regions are indicated by each vertex of the polygon showing the connectivity fingerprint. This approach is taken given the dimensionality of the connectivity fingerprints. In the human brain, we show three arbitrary voxels for illustration in yellow, purple, and red, along with their corresponding connectivity fingerprints. Note that in real data, connectivity fingerprints are obtained for all gray matter voxel seeds in the human brain. Similarly, the connectivity fingerprint of a dog “seed” region is shown in green. Resting state functional connectivity (FC) between the seed regions and the 19 pre-selected target regions was used to derive the connectivity fingerprints shown as polygons. The fingerprints obtained from the dog and human seed regions were then compared pairwise using the Manhattan distance measure. In our illustration, the yellow voxel in the human brain has a connectivity fingerprint with the target regions that is most similar to that of the dog “seed” region. Therefore, the yellow voxel in the human brain could potentially be the region functionally analogous to the green seed region in the dog brain

In order to test the significance of the match between connectivity fingerprints of the dog “seed” regions and each voxel in the human brain, permutation testing was employed (p < 0.05, FDR corrected). This provided a statistical criterion based on which regions in the human brain that were functionally analogous to DFA and HFA in the dog brain could be identified. This method does not utilize any anatomical constraints regarding the location of the analogous region that is identified. However, our results (presented in the Results section, below) as well as those from previous studies (Mars et al., 2016; Passingham et al., 2002) indicate that the analogous regions identified made sense from an anatomical perspective.
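The text above does not specify what was permuted or which FDR procedure was used, so the following is only a sketch under stated assumptions: the null distribution is built by shuffling the target order of the dog fingerprint, and FDR is controlled with the Benjamini-Hochberg step-up procedure. The function names and example values are hypothetical.

```python
import random


def manhattan(a, b):
    """Manhattan (L1) distance between two equal-length fingerprints."""
    return sum(abs(x - y) for x, y in zip(a, b))


def permutation_p(dog_fp, human_fp, n_perm=2000, seed=0):
    """P value for the match: fraction of shuffled dog fingerprints that are
    at least as close to the human fingerprint as the observed one.
    ASSUMPTION: target labels are exchangeable under the null."""
    rng = random.Random(seed)
    observed = manhattan(dog_fp, human_fp)
    shuffled = list(dog_fp)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if manhattan(shuffled, human_fp) <= observed:
            count += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (count + 1) / (n_perm + 1)


def benjamini_hochberg(p_values, q=0.05):
    """Boolean mask of tests that survive FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= q * rank / m:
            cutoff_rank = rank  # largest rank passing the step-up criterion
    significant = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= cutoff_rank:
            significant[i] = True
    return significant


# Hypothetical six-target fingerprints.
dog_fp = [0.9, 0.5, 0.1, -0.2, -0.6, -0.8]
p_match = permutation_p(dog_fp, list(dog_fp))              # identical pattern
p_mismatch = permutation_p(dog_fp, list(reversed(dog_fp)))  # opposite pattern

mask = benjamini_hochberg([0.5, 0.001, 0.04, 0.02], q=0.05)
print(mask)  # [False, True, False, True]
```

In a whole-brain analysis, one p value would be computed per gray matter voxel and the correction applied across all of them; voxels that survive are the candidate analogs of the dog seed.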

Results

Only 5% of the data across all dogs had to be discarded because of motion of more than one voxel, as detected via the external camera. An additional 7% of the data had motion of less than one voxel but framewise displacement greater than 0.2 mm. These data were recovered via censoring and interpolation and were not discarded (Power et al., 2015). Because so little data had to be discarded (i.e., 5%), each dog had enough data for reliable analysis: our inclusion threshold required at least 75% of each dog’s data to be usable, and all dogs exceeded it.
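The data-retention rules in this paragraph can be sketched as follows. This is a hedged illustration, not the study's pipeline: the voxel size of 3.0 mm, the per-volume motion values, and the use of simple linear interpolation as a stand-in for the censoring and interpolation procedure are all assumptions.

```python
def classify_volumes(motion_mm, fd_mm, voxel_mm, fd_limit=0.2):
    """Label each volume as 'discard', 'censor', or 'keep'."""
    labels = []
    for motion, fd in zip(motion_mm, fd_mm):
        if motion > voxel_mm:        # moved more than one voxel
            labels.append("discard")
        elif fd > fd_limit:          # framewise displacement above 0.2 mm
            labels.append("censor")
        else:
            labels.append("keep")
    return labels


def usable_fraction(labels):
    """Fraction of volumes not discarded (censored volumes are recovered)."""
    return sum(label != "discard" for label in labels) / len(labels)


def interpolate_censored(signal, labels):
    """Replace censored samples by linear interpolation between the nearest
    kept neighbours (ASSUMPTION: a simple stand-in for the actual method)."""
    kept = [i for i, label in enumerate(labels) if label == "keep"]
    out = list(signal)
    for i, label in enumerate(labels):
        if label != "censor":
            continue
        left = max((k for k in kept if k < i), default=None)
        right = min((k for k in kept if k > i), default=None)
        if left is None and right is None:
            continue                 # nothing to interpolate from
        if left is None:
            out[i] = signal[right]
        elif right is None:
            out[i] = signal[left]
        else:
            w = (i - left) / (right - left)
            out[i] = (1 - w) * signal[left] + w * signal[right]
    return out


# Hypothetical per-volume motion estimates for one dog (in mm).
motion = [0.1, 0.3, 3.5, 0.2, 0.1, 0.4, 0.1, 0.2]
fd = [0.05, 0.25, 1.0, 0.1, 0.05, 0.3, 0.1, 0.1]
labels = classify_volumes(motion, fd, voxel_mm=3.0)

include_dog = usable_fraction(labels) >= 0.75   # 75% inclusion threshold
cleaned = interpolate_censored([1.0, 9.0, 9.0, 3.0, 4.0, 9.0, 6.0, 7.0], labels)
print(labels, include_dog)
```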

Figure 3 shows the primary contrasts of interest. In a whole-brain voxel-wise analysis, regions of the left temporal cortex showing greater activation for human faces than for dog faces (human face > dog face) are shown in green (cluster size = 410 voxels), and regions showing greater activation for dog faces than for human faces (dog face > human face) are shown in red (cluster size = 198 voxels). As shown in Fig. 3, we have identified adjacent, but clearly distinct, activation areas separated by stimulus origin. For the purposes of this paper, we designate the area in green as the human face area (HFA) and the area in red as the dog face area (DFA). Both species-specific processing regions are lateralized to the left temporal lobe. We did not identify such a distinction in any other areas of the dog brain.

(a) Results of human face and dog face contrasts. Regions colored in green are significantly (p < 0.05, false discovery rate (FDR) corrected) more active during processing of human faces as compared to dog faces (i.e., human face area (HFA)). Red regions represent areas significantly (p < 0.05, FDR corrected) more strongly activated by dog faces as compared to human faces (i.e., dog face area (DFA)). There was no suprathreshold activity in any other part of the dog brain for the dog versus human face contrasts. (b) Mean BOLD % signal change over dog and human face trials for both HFA and DFA, with the error bars representing the standard deviation

In order to test whether the contrast effects were influenced by the emotional content of the human faces, we conducted a parametric modulation analysis. Sub-regions of both the HFA and DFA were found to parametrically modulate with the emotions displayed by human faces (Fig. 4). Because separation according to emotion was not found, the specificity of these regions in the dog brain to processing dog and human faces cannot be attributed to emotion alone.

Results of human face and dog face contrasts juxtaposed with regions parametrically modulated by facial emotions. Regions colored in green (cluster size = 358 voxels) are significantly (p < 0.05, false discovery rate (FDR) corrected) more active during processing of human faces as compared to dog faces. Red regions (cluster size = 102 voxels) represent areas significantly (p < 0.05, FDR corrected) more strongly activated by dog faces as compared to human faces. The yellow region of interest (ROI) (cluster size = 52 voxels) shows voxels that were significantly (p < 0.05, FDR corrected) modulated by emotions and also had higher activity for human faces, while the magenta ROI (cluster size = 96 voxels) shows voxels that were significantly (p < 0.05, FDR corrected) modulated by emotions and also had higher activity for dog faces. The magenta and yellow ROIs were estimated via a “Minimum Statistic compared to the Conjunction Null” conjunction test (Nichols et al., 2005). Note that no color scales are provided as this figure represents binary intersections
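The conjunction test named in the caption above can be illustrated with a short sketch. Under the conjunction null, a voxel counts as jointly significant only if the minimum of its two statistics exceeds the critical value used for a single map, which is equivalent to requiring both maps to pass. The statistic values and the threshold of 2.5 below are hypothetical and stand in for corrected thresholds from a real analysis.

```python
def conjunction_mask(stat_a, stat_b, threshold):
    """Minimum-statistic conjunction: True where BOTH maps exceed threshold."""
    if len(stat_a) != len(stat_b):
        raise ValueError("statistic maps must cover the same voxels")
    return [min(a, b) > threshold for a, b in zip(stat_a, stat_b)]


# Hypothetical voxel-wise t statistics for four voxels.
emotion_modulation_t = [3.1, 0.4, 2.9, 1.0]   # parametric modulation by emotion
human_gt_dog_t = [2.8, 3.5, 1.2, 0.9]         # human face > dog face contrast

yellow_roi = conjunction_mask(emotion_modulation_t, human_gt_dog_t, threshold=2.5)
print(yellow_roi)  # [True, False, False, False]
```

The result is a binary map, which is why such intersections are displayed without a color scale.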

To test whether the contrast effects were influenced by the familiarity of the human and dog faces, (familiar > unfamiliar) contrasts were conducted for human and dog faces. These contrasts did not find differences in the HFA and DFA, indicating that familiarity of faces did not drive these functional differences in the left temporal lobe.

Connectivity fingerprint analyses revealed that the dog HFA maps to the human fusiform face area (FFA) and the dog DFA maps to the human superior temporal gyrus (STG), indicating the potential for functional analogs. Of note, neuroimaging data from humans support involvement of the FFA in the processing of structure and identity of faces and of the superior temporal sulcus (STS) in dynamic features and familiar face recognition (e.g., Bernstein & Yovel, 2015).

Discussion

We found activation differences for human and dog faces in the canine temporal cortex, an area previously shown to be sensitive to faces in the dog brain. Our analyses more precisely identified the spatial location of this face region and expanded our understanding of the functional mechanisms underlying activation in this brain area. We addressed the possibility that low-level features drove this separation and did not find significant differences between human and dog faces in terms of their spectra (which determine color) or magnitude (which determines image intensity). Further, while activation was modulated by emotional valence, valence did not dictate the separation of activation regions, and familiarity did not play a role in the HFA or DFA. Finally, connectivity analyses revealed potentially functionally analogous networks involved in face processing in humans and dogs. The dog HFA maps to the human FFA and the dog DFA maps to the human STG.

Evidence for a region of face processing within the dog brain was provided by Dilks et al. (2015) and Cuaya et al. (2016). We have established further support for a face processing area in the dog brain and consider it functionally analogous to those found in humans, monkeys, and sheep. In all of these studies, regional activation in the temporal lobe was identified subsequent to the presentation of face stimuli. This aligns with past literature evidencing face-specific processing in humans, monkeys, and sheep, albeit with shifts in the specific location in the temporal lobe for face processing across species (Kanwisher, McDermott, & Chun, 1997; Kendrick et al., 2001; Sliwa, Planté, Duhamel, & Wirth, 2014). In addition to demonstrating that there is a region of the dog brain that is reliably activated by faces, an important addition of the current study is that of cross-species regional distinction (i.e., separate regions of processing for human and dog faces). Overall, combining findings of fMRI data from the present study, Dilks et al. (2015), and Cuaya et al. (2016) with the prior behavioral studies with dogs, there appears to be support for domain specificity for face processing in domestic dogs, although it is clearly premature to conclude that a specific region in the dog brain is causally involved in face perception (e.g., Weiner et al., 2017). Additionally, lateralized face processing has repeatedly been demonstrated in dogs (e.g., Barber et al., 2016; Siniscalchi, d’Ingeo, Fornelli, & Quaranta, 2018) and was supported in our results.

As discussed earlier, humans are able to use identity and cues in successful socialization (Paller et al., 2003). Current models of face processing highlight separation of regions between the ventral stream (face form) and the dorsal stream (dynamic information) (e.g., Bernstein & Yovel, 2015; O’Toole et al., 2002), with some accounts evidencing a convergence of these streams to allow for further processing of socially relevant familiarity information (di Oleggio Castello et al., 2017; Duchaine & Yovel, 2015). Stoeckel et al. (2014) found that, for images of familiar dog (pet) faces and familiar human (child) faces, human areas of activation included the amygdala, hippocampus, and fusiform gyrus. We have extended the first steps in exploring the dog’s ability to make use of such capacities in their cohabitation with humans. Dogs also appear to mimic the core and extended systems of face processing initially described by human face-processing models, as they make use of both identity and emotional information. These analogies with human processing of faces warrant further exploration with attention paid to comparative modeling. It should be noted that the functional role of the FFA in humans is debated, and an alternative view (Gauthier, Skudlarski, Gore, & Anderson, 2000) posits that it has evolved to process highly familiar objects. However, if this were true in the present study, there would have been a significant difference in the dog HFA (or DFA) when contrasting familiar and unfamiliar faces.

It is not clear what drives the separation in neural activation for human and dog faces. Dilks et al. (2015) did not find such separation in pet dogs. Their lack of separation could be due to the lower spatial resolution used in their study compared to ours, in addition to other factors such as head motion. That is, improved spatial resolution along with compensation for head motion measured using an external motion-tracking device may allow for parsing out of these separate brain regions. An additional factor may be that we took care to analyze data only from trials in which the dogs actually attended to the face stimuli. If trials in which the dogs did not look at the stimuli were included in the analysis, then it may be difficult to detect conspecific effects. But which neural factors drive the separation? One possibility is that neural plasticity is at play. Perhaps in the working dogs tested in the present experiments we find a separation of human and dog faces because of the importance of humans to their training. Related to this, experience with faces is needed for monkeys to form and/or maintain face-selective areas in the brain (Arcaro, Schade, Vincent, Ponce, & Livingstone, 2017). Through experience, one might expect the dogs to become fine-tuned to human faces and behavior, as the success of their activities depends on it. Certainly, pet dogs also spend their encultured lives with humans, but they clearly interact differently with humans. Pet dogs are usually treated as part of the household family, sharing many of the activities ongoing in the family home life. Perhaps such experiences lead to a unified brain area for processing human and dog faces in pet dogs. As for the DFA, it may be that this area becomes strongly active as the dogs are responding to the social aspect of dogs.
Although dogs are sensitive to human social cues, it is not surprising that a brain area important to social cognition would be more active within species, showing a species-specific effect (especially in dogs not part of a human home life). To test such a hypothesis regarding neural plasticity, we suggest longitudinal studies in which the same dogs are tested at different time points or cross-sectional groups of dogs with different amounts of experience are tested.

A growing literature continues to expand the behavioral evidence demonstrating processes that underlie social cognition in the domestic dog. Here, we have provided an investigation of social cognition that explores the distinction of social relationships related to the neural processing of visual stimuli. Future research should aim to correlate brain and behavior so as to draw conclusive connections between neuroimaging and behavioral evidence. Results of such comparisons may have significant impacts on procurement and training of dogs in working roles. That is, should behavioral assessments be validated by fMRI, practitioners would be better poised to select dogs for individual working roles, be those founded in scent detection or service. Beyond the applied usefulness of such findings, comparing the behavioral, anatomical, and functional neural mechanisms within and across species will continue to provide insight into the evolutionary origin of cognitive traits.

References

Albuquerque, N., Guo, K., Wilkinson, A., Savalli, C., Otta, E., & Mills, D. (2016). Dogs recognize dog and human emotions. Biology Letters, 12(1), 20150883.

Andics, A., Gácsi, M., Faragó, T., Kis, A., & Miklósi, Á. (2014). Voice-sensitive regions in the dog and human brain are revealed by comparative fMRI. Current Biology, 24(5), 574-578.

Andics, A., Gábor, A., Gácsi, M., Faragó, T., Szabó, D., & Miklósi, Á. (2016). Neural mechanisms for lexical processing in dogs. Science, 353(6303), 1030-1032.

Arcaro, M. J., Schade, P. F., Vincent, J. L., Ponce, C. R., & Livingstone, M. S. (2017). Seeing faces is necessary for face-domain formation. Nature Neuroscience, 20, 1404-1412. https://doi.org/10.1038/nn.4635

Barber, A. L., Randi, D., Müller, C. A., & Huber, L. (2016). The processing of human emotional faces by pet and lab dogs: evidence for lateralization and experience effects. PloS one, 11(4).

Berns, G. S., Brooks, A. M., & Spivak, M. (2012). Functional MRI in awake unrestrained dogs. PloS one, 7(5), e38027.

Berns, G. S., Brooks, A. M., & Spivak, M. (2015). Scent of the familiar: an fMRI study of canine brain responses to familiar and unfamiliar human and dog odors. Behavioural Processes, 110, 37-46.

Berns, G. S., & Cook, P. F. (2016). Why did the dog walk into the MRI? Current Directions in Psychological Science, 25(5), 363-369.

Bernstein, M., & Yovel, G. (2015). Two neural pathways of face processing: a critical evaluation of current models. Neuroscience & Biobehavioral Reviews, 55, 536-546.

Bloom, T., & Friedman, H. (2013). Classifying dogs’ (Canis familiaris) facial expressions from photographs. Behavioural Processes, 96, 1-10.

Bruce, V., & Young, A. (1986). Understanding face recognition. British Journal of Psychology, 77(3), 305-327.

Bunford, N., Andics, A., Kis, A., Miklósi, Á., & Gácsi, M. (2017). Canis familiaris as a Model for Non-Invasive Comparative Neuroscience. Trends in Neurosciences, 40(7), 438-452.

Calder, A. J., & Young, A. W. (2005). Understanding the recognition of facial identity and facial expression. Nature Reviews Neuroscience, 6(8), 641.

Cook, P. F., Spivak, M., & Berns, G. S. (2014). One pair of hands is not like another: caudate BOLD response in dogs depends on signal source and canine temperament. PeerJ, 2, e596.

Cuaya, L. V., Hernández-Pérez, R., & Concha, L. (2016). Our faces in the dog's brain: Functional imaging reveals temporal cortex activation during perception of human faces. PloS one, 11(3).

di Oleggio Castello, M. V., Halchenko, Y. O., Guntupalli, J. S., Gors, J. D., & Gobbini, M. I. (2017). The neural representation of personally familiar and unfamiliar faces in the distributed system for face perception. Scientific Reports, 7(1), 12237.

Dilks, D. D., Cook, P., Weiller, S. K., Berns, H. P., Spivak, M., & Berns, G. S. (2015). Awake fMRI reveals a specialized region in dog temporal cortex for face processing. PeerJ, 3.

Duchaine, B., & Yovel, G. (2015). A revised neural framework for face processing. Annual Review of Vision Science, 1, 393-416.

Dufour, V., Pascalis, O., & Petit, O. (2006). Face processing limitation to own species in primates: a comparative study in brown capuchins, Tonkean macaques and humans. Behavioural Processes, 73(1), 107-113.

Fox, P. T., Parsons, L. M., & Lancaster, J. L. (1998). Beyond the single study: function/location metanalysis in cognitive neuroimaging. Current Opinion in Neurobiology, 8(2), 178-187.

Freiwald, W., Duchaine, B., & Yovel, G. (2016). Face processing systems: from neurons to real-world social perception. Annual Review of Neuroscience, 39, 325-346.

Gauthier, I., Skudlarski, P., Gore, J. C., & Anderson, A. W. (2000). Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience, 3(2), 191.

Hare, B., Brown, M., Williamson, C., & Tomasello, M. (2002). The domestication of social cognition in dogs. Science, 298(5598), 1634-1636.

Haxby, J. V., Hoffman, E. A., & Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223-233.

Huber, L., Racca, A., Scaf, B., Virányi, Z., & Range, F. (2013). Discrimination of familiar human faces in dogs (Canis familiaris). Learning and motivation, 44(4), 258-269.

Jia, H., Pustovyy, O. M., Waggoner, P., Beyers, R. J., Schumacher, J., Wildey, C., … Vodyanoy, V. J. (2014). Functional MRI of the olfactory system in conscious dogs. PLoS One, 9(1).

Jia, H., Pustovyy, O. M., Wang, Y., Waggoner, P., Beyers, R. J., Schumacher, J., … Vodyanoy, V. J. (2015). Enhancement of odor-induced activity in the canine brain by zinc nanoparticles: A functional MRI study in fully unrestrained conscious dogs. Chemical Senses, 41(1), 53-67.

Kaminski, J., Riedel, J., Call, J., & Tomasello, M. (2005). Domestic goats, Capra hircus, follow gaze direction and use social cues in an object choice task. Animal Behaviour, 69(1), 11-18.

Kanwisher, N., McDermott, J., & Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. Journal of Neuroscience, 17(11), 4302-4311.

Kendrick, K. M., da Costa, A. P., Leigh, A. E., Hinton, M. R., & Peirce, J. W. (2001). Sheep don't forget a face. Nature, 414, 165-166.

Kyathanahally, S. P., Jia, H., Pustovyy, O. M., Waggoner, P., Beyers, R., Schumacher, J., … Vodyanoy, V. J. (2015). Anterior–posterior dissociation of the default mode network in dogs. Brain Structure and Function, 220(2), 1063-1076.

Lyn, H., Russell, J. L., & Hopkins, W. D. (2010). The impact of environment on the comprehension of declarative communication in apes. Psychological Science, 21(3), 360-365.

Mars, R. B., Neubert, F. X., Verhagen, L., Sallet, J., Miller, K. L., Dunbar, R. I. M., & Barton, R. A. (2014). Primate comparative neuroscience using magnetic resonance imaging: Promises and challenges. Frontiers in Neuroscience, 8, 298.

Mars, R. B., Sallet, J., Neubert, F. X., & Rushworth, M. F. S. (2013). Connectivity profiles reveal the relationship between brain areas for social cognition in human and monkey temporoparietal cortex. Proceedings of the National Academy of Sciences, 110, 10806-10811.

Mars, R. B., Verhagen, L., Gladwin, T. E., Neubert, F. X., Sallet, J., & Rushworth, M. F. S. (2016). Comparing brains by matching connectivity fingerprints. Neuroscience and Biobehavioral Reviews, 60, 90-97.

McKinley, J., & Sambrook, T. D. (2000). Use of human-given cues by domestic dogs (Canis familiaris) and horses (Equus caballus). Animal Cognition, 3(1), 13-22.

Miklósi, Á., & Topál, J. (2013). What does it take to become ‘best friends’? Evolutionary changes in canine social competence. Trends in Cognitive Sciences, 17(6), 287-294.

Müller, C. A., Schmitt, K., Barber, A. L., & Huber, L. (2015). Dogs can discriminate emotional expressions of human faces. Current Biology, 25(5), 601-605.

Nagasawa, M., Murai, K., Mogi, K., & Kikusui, T. (2011). Dogs can discriminate human smiling faces from blank expressions. Animal Cognition, 14(4), 525-533.

Nichols, T., Brett, M., Andersson, J., Wager, T., & Poline, J. B. (2005). Valid conjunction inference with the minimum statistic. Neuroimage, 25(3), 653-660.

O’Toole, A. J., Roark, D. A., & Abdi, H. (2002). Recognizing moving faces: a psychological and neural synthesis. Trends in Cognitive Sciences, 6, 261-266.

Paller, K. A., Ranganath, C., Gonsalves, B., LaBar, K. S., Parrish, T. B., Gitelman, D. R., … Reber, P. J. (2003). Neural correlates of person recognition. Learning & Memory, 10(4), 253-260.

Pascalis, O., & Bachevalier, J. (1998). Face recognition in primates: a cross-species study. Behavioural Processes, 43(1), 87-96.

Passingham, R. E., Stephan, K. E., & Kötter, R. (2002). The anatomical basis of functional localization in the cortex. Nature Reviews Neuroscience, 3(8), 606-616.

Patronek, G. J., Waters, D. J., & Glickman, L. T. (1997). Comparative longevity of pet dogs and humans: Implication for gerontology research. Journal of Gerontology: Biological Sciences, 52A(3), B171-B178.

Power, J. D., Schlaggar, B. L., & Petersen, S. E. (2015). Recent progress and outstanding issues in motion correction in resting state fMRI. Neuroimage, 105, 536-551.

Racca, A., Guo, K., Meints, K., & Mills, D. S. (2012). Reading faces: differential lateral gaze bias in processing canine and human facial expressions in dogs and 4-year-old children. PLoS one, 7(4).

Ramaiahgari, B., Pustovyy, O. M., Waggoner, P., Beyers, R. J., Wildey, C., Morrison, E., Salibi, N., Katz, J. S., Denney, T. S., Vodyanoy, V. J., & Deshpande, G. (2018). Zinc nanoparticles enhance brain connectivity in the canine olfactory network: Evidence from an fMRI study in unrestrained awake dogs. Frontiers in Veterinary Science, 5, 127. https://doi.org/10.3389/fvets.2018.00127

Siniscalchi, M., d’Ingeo, S., Fornelli, S., & Quaranta, A. (2018). Lateralized behavior and cardiac activity of dogs in response to human emotional vocalizations. Scientific Reports, 8(1), 77.

Sliwa, J., Planté, A., Duhamel, J. R., & Wirth, S. (2014). Independent neuronal representation of facial and vocal identity in the monkey hippocampus and inferotemporal cortex. Cerebral Cortex, 26(3), 950-966.

Smith, S. M., Andersson, J., Auerbach, E. J., Beckmann, C. F., Bijsterbosch, J., Douaud, G., Duff, E., Feinberg, D. A., Griffanti, L., Harms, M. P., Kelly, M., Laumann, T., Miller, K. L., Moeller, S., Petersen, S. E., Power, J., Salimi-Khorshidi, G., Snyder, A. Z., Vu, A., Woolrich, M. W., Xu, J., Yacoub, E., Uğurbil, K., Van Essen, D. C., & Glasser, M. F. (2013). Resting-state fMRI in the Human Connectome Project. NeuroImage, 80, 144-168.

Stoeckel, L. E., Palley, L. S., Gollub, R. L., Niemi, S. M., & Evins, A. E. (2014). Patterns of brain activation when mothers view their own child and dog: An fMRI study. PLoS One, 9(10).

Thalmann, O., Shapiro, B., Cui, P., Schuenemann, V. J., Sawyer, S. K., Greenfield, D. L., … Napierala, H. (2013). Complete mitochondrial genomes of ancient canids suggest a European origin of domestic dogs. Science, 342(6160), 871-874.

Thompkins, A.M. (2016). Investigating the Dog-Human Social Bond via Behavioral and fMRI Methodologies (Doctoral dissertation). Auburn University, AL.

Thompkins, A. M., Deshpande, G., Waggoner, P., & Katz, J. S. (2016). Functional Magnetic Resonance Imaging of the Domestic Dog: Research, Methodology, and Conceptual Issues. Comparative Cognition & Behavior Reviews, 11, 63-82.

Weiner, K.S., Barnett, M. A., Lorenz, S., Caspers, J., Stigliani, A., Amunts, K., Zilles, K., Fischl, B., & Grill-Spector, K. (2017). The Cytoarchitecture of Domain-specific Regions in Human High-level Visual Cortex. Cerebral Cortex, 27, 146–161, https://doi.org/10.1093/cercor/bhw361

About this article

Cite this article

Thompkins, A.M., Ramaiahgari, B., Zhao, S. et al. Separate brain areas for processing human and dog faces as revealed by awake fMRI in dogs (Canis familiaris). Learn Behav 46, 561–573 (2018). https://doi.org/10.3758/s13420-018-0352-z

DOI: https://doi.org/10.3758/s13420-018-0352-z