Abstract

The orbitofrontal cortex and amygdala are involved in emotion and in motivation, but the relationship between these functions performed by these brain structures is not clear. To address this, a unified theory of emotion and motivation is described in which motivational states are states in which instrumental goal-directed actions are performed to obtain rewards or avoid punishers, and emotional states are states that are elicited when the reward or punisher is or is not received. This greatly simplifies our understanding of emotion and motivation, for the same set of genes and associated brain systems can define the primary or unlearned rewards and punishers such as sweet taste or pain. Recent evidence on the connectivity of human brain systems involved in emotion and motivation indicates that the orbitofrontal cortex is involved in reward value and experienced emotion with outputs to cortical regions including those involved in language, and is a key brain region involved in depression and the associated changes in motivation. The amygdala has weak effective connectivity back to the cortex in humans, and is implicated in brainstem-mediated responses to stimuli such as freezing and autonomic activity, rather than in declarative emotion. The anterior cingulate cortex is involved in learning actions to obtain rewards, and with the orbitofrontal cortex and ventromedial prefrontal cortex in providing the goals for navigation and in reward-related effects on memory consolidation mediated partly via the cholinergic system.


Introduction and aims

There have been considerable advances recently in understanding the connectivity and connections of the human orbitofrontal cortex and amygdala, and how they relate to emotion (Rolls et al. 2023a, d), but how these systems and processes are related to motivation has been much less explored. This paper shows how the brain systems involved in motivation are similar to those involved in emotion, and provides a framework for understanding how emotion and motivation are related to each other, and how similar brain systems are involved in both. This paper aims to make key advances in our understanding of how the orbitofrontal cortex and amygdala structure (anatomy and connectivity) is related to the two key functions performed by these brain regions, emotion and motivation.

To understand the neuroscience of both emotion and motivation, it is important to have a framework for understanding the relation between them. This paper first sets out a theory of emotion, and a framework for understanding the relation between emotion and motivation, and then considers how the orbitofrontal cortex, anterior cingulate cortex, and amygdala are involved in emotion and motivation. Special reference is made to these brain regions in primates including humans, to ensure that what is described is relevant to understanding brain systems involved in emotion and motivation in humans, and their disorders. Recent evidence about the connectivity of these systems in humans makes this paper very timely (Rolls et al. 2023a, d). A second aim is to show how emotion and its brain systems are highly adaptive from an evolutionary and gene specification perspective. The third aim is to consider where and how decisions are made about reward and emotional value, and separately where and how decisions are made about the actions to obtain the rewards. The fourth aim is to consider some of the implications of this research for understanding brain function in health and disease; evolution to select for brain systems that respond to stimuli that encode rewards and punishers; memory and memory consolidation; and personality.

The approach taken here is new, in that it produces a unified approach to understanding emotion and motivation and their underlying brain mechanisms; in that it updates our understanding of the brain mechanisms of emotion (Rolls 2014b, 2018) by incorporating new evidence on the effective connectivity as well as the functional connectivity and the tractography of the brain systems involved in humans (Rolls et al. 2023a, d); in that it emphasises how evolution operates in part by selecting for brain reward systems that increase reproductive fitness; and in that it considers implications for understanding brain function in neurological and psychiatric states, how reward and emotional systems relate to episodic and semantic memory and memory consolidation, and welfare. The new results and understanding from taking this approach, including the advances related to new investigations of effective connectivity of the human brain, are summarised in "Conclusions and highlights".

A theory of emotion relevant to brain systems involved in reward value and emotion

First a definition and theory of emotion and its functions are provided, and then key brain regions involved in emotion are considered, including the orbitofrontal cortex, anterior cingulate cortex, amygdala, striatum, the dopamine system, and the insula.

A definition of emotion

A clear working definition of emotion is helpful before we consider its brain mechanisms. Emotions can usefully be defined (operationally) as states elicited by the presentation, termination or omission of rewards and punishers which have particular functions (Rolls 1999, 2000a, 2013b, 2014b, 2018). A reward is anything for which an animal (which includes humans) will work. A punisher is anything that an animal will escape from or avoid. As shown in Fig. 1, different reward/punishment contingencies are associated with different types of emotion. An example of an emotion associated with a reward might be the happiness produced by being given a particular reward, such as a pleasant touch, praise, or winning a large sum of money. An example of an emotion produced by a punisher might be fear produced by the sound of a rapidly approaching bus, or the sight of an angry expression on someone’s face. We will work to avoid such punishing stimuli. An example of an emotion produced by the omission or termination or loss of a reward is frustration or anger (if some action can be taken), or sadness (if no action can be taken). An example of an emotion produced by the omission or termination of a punisher (such as the removal of a painful stimulus, or sailing out of danger) would be relief. These examples indicate how emotions can be produced by the delivery, omission, or termination of rewarding or punishing stimuli, and go some way to indicate how different emotions could be produced and classified in terms of the rewards and punishers received, omitted, or terminated. Figure 1 summarizes some of the emotions associated with the delivery of a reward or punisher or a stimulus associated with them, or with the omission of a reward or punisher.

Some of the emotions associated with different reinforcement contingencies are indicated. Intensity increases away from the centre of the diagram, on a continuous scale. The classification scheme created by the different reinforcement contingencies consists, with respect to the action, of (1) the delivery of a reward (S+), (2) the delivery of a punisher (S−), (3) the omission of a reward (S+) (extinction) or the termination of a reward (S+!) (time out), and (4) the omission of a punisher (S−) (avoidance) or the termination of a punisher (S−!) (escape). Note that the vertical axis describes emotions associated with the delivery of a reward (up) or punisher (down). The horizontal axis describes emotions associated with the non-delivery of an expected reward (left) or the non-delivery of an expected punisher (right). For the contingency of non-reward (horizontal axis, left), different emotions can arise depending on whether an active action is possible in response to the non-reward, or whether no action is possible, which is labelled as the passive condition. In the passive condition, non-reward may produce depression. Frustration could include disappointment. The diagram summarizes emotions that might result for one reinforcer as a result of different contingencies. Every separate reinforcer has the potential to operate according to contingencies such as these. This diagram does not imply a dimensional theory of emotion, but shows the types of emotional state that might be produced by a specific reinforcer. Each different reinforcer will produce different emotional states, but the contingencies will operate as shown to produce different specific emotional states for each different reinforcer.
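The contingency-based classification described above can be sketched as a small lookup. This is only an illustrative sketch: the names `Reinforcer`, `Event` and `classify_emotion` are hypothetical, the emotion labels are limited to the examples given in the text, and intensity (which varies continuously in Fig. 1) is ignored.

```python
from enum import Enum


class Reinforcer(Enum):
    REWARD = "S+"
    PUNISHER = "S-"


class Event(Enum):
    DELIVERED = "delivered"
    OMITTED_OR_TERMINATED = "omitted or terminated"


def classify_emotion(reinforcer, event, active_response_possible=True):
    """Return example emotion labels for one reinforcement contingency.

    Hypothetical helper: the labels are the examples used in the text,
    not an exhaustive list, and intensity is not modelled.
    """
    if event is Event.DELIVERED:
        if reinforcer is Reinforcer.REWARD:
            return ["happiness"]
        return ["fear"]
    # Omission or termination of the reinforcer.
    if reinforcer is Reinforcer.PUNISHER:
        return ["relief"]
    # Non-reward: the emotion depends on whether an action can be taken.
    if active_response_possible:
        return ["frustration", "anger"]
    return ["sadness"]


# Example: an expected reward is omitted and no action is possible.
passive_non_reward = classify_emotion(
    Reinforcer.REWARD, Event.OMITTED_OR_TERMINATED, active_response_possible=False
)
```

Because every primary reinforcer can operate under each of these contingencies, a fuller sketch would key the lookup on the specific reinforcer as well; that is omitted here for brevity.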

The subjective feelings of emotions are part of the much larger problem of consciousness (Rolls 2020). The brain bases of subjective experience are a topic of considerable current interest, not only with higher order thought (HOT) theories (Rosenthal 2004; Brown et al. 2019), but also with the higher order syntactic thought (HOST) theory of consciousness (Rolls 2007a, 2012b, 2014b, 2016c, 2018, 2020) which is more computationally specific and addresses the adaptive value of the type of processing related to consciousness; and a point made here is that the orbitofrontal cortex is at least on the route to human subjective experience of emotion and affective value (see below).

I consider elsewhere a slightly more formal definition than rewards or punishers, in which the concept of reinforcers is introduced, and it is shown that emotions can be usefully seen as states produced by instrumental reinforcing stimuli (Rolls 2014b). Instrumental reinforcers are stimuli which, if their occurrence, termination, or omission is made contingent upon the making of a response, alter the probability of the future emission of that response (Cardinal et al. 2002).

Some stimuli are unlearned (innate), “primary”, reinforcers (e.g., the taste of food if the animal is hungry, or pain). Some examples of primary reinforcers are shown in Table 1 (Rolls 2014b). There may be in the order of 100 such primary reinforcers, each specified by different genes (Rolls 2014b). Each primary reinforcer can produce a different type of affective state, for example the taste of a pleasant sweet or sweet/fat texture food such as ice cream is very different from the feel of a pleasant touch vs pain; which are all in turn very different from attraction to or love for someone. Thus different types of affective state are produced by each different primary reinforcer, and the reinforcement contingencies shown in Fig. 1 apply to each of these primary reinforcers. For example, not receiving ice cream is very different emotionally from not receiving pleasant touch.

Other stimuli may become reinforcing by associative learning, because of their association with such primary reinforcers, thereby becoming "secondary reinforcers". An example might be the sight of a painful stimulus. Brain systems that learn and unlearn these associations between stimuli or events in the environment and reinforcers are important in understanding the neuroscience and neurology of emotions, as we will see below.

This foundation has been developed (Rolls 2014b) to show how a very wide range of emotions can be accounted for, as a result of the operation of a number of factors, including the following:

1. The reinforcement contingency (e.g., whether reward or punishment is given, or withheld) (see Fig. 1).

2. The intensity of the reinforcer (see Fig. 1).

3. Any environmental stimulus might have a number of different reinforcement associations. (For example, a stimulus might be associated both with the presentation of a reward and of a punisher, allowing states such as conflict and guilt to arise.)

4. Emotions elicited by stimuli associated with different primary reinforcers will be different, as described above. Some primary reinforcers, each of which will produce a different affective state, are shown in Table 1.

5. Emotions elicited by different secondary reinforcing stimuli will be different from each other (even if the primary reinforcer is similar). For example, the same touch to the arm but by different people might give rise to very different emotions. Cognitive states and semantic knowledge can contribute to emotion in these ways, as well as in other ways that might arise because, for example, of reasoning in the rational brain system.

6. The emotion elicited can depend on whether an active or passive behavioural response is possible. (For example, if an active behavioural response can occur to the omission of a positive reinforcer, then anger might be produced, but if only passive behaviour is possible, then sadness, depression or grief might occur: see Fig. 1.)

By combining these six factors, it is possible to account for a very wide range of emotions, as described by Rolls (2014b). This is important: the range of emotions that can be accounted for in this way is enormous (Rolls 2014b), and is not limited (Adolphs and Anderson 2018). It is also worth noting that emotions can be produced just as much by the recall of reinforcing events as by external reinforcing stimuli; that cognitive processing (whether conscious or not) is important in many emotions, for very complex cognitive processing may be required to determine whether or not environmental events are reinforcing. Indeed, emotions normally consist of cognitive processing that analyses the stimulus, and then determines its reinforcing valence; and then an elicited affective (emotional) state or longer term mood change if the valence is positive or negative. I note that a mood or affective state may occur in the absence of an external stimulus, as in some types of depression, but that normally the mood or affective state is produced by an external stimulus, with the whole process of stimulus representation, evaluation in terms of reward or punishment, and the resulting mood or affect being referred to as emotion (Rolls 2014b).

The functions of emotions

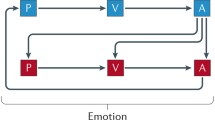

The most important function of emotion is as part of the processes of learning goal-directed actions to obtain rewards or avoid punishers. The first process is stimulus-reinforcer association learning; emotional states are produced as a result (Rolls 2014b). An example might be learning that the sight of a person is associated with rewards, which might produce the emotion of happiness. This process is implemented in structures such as the orbitofrontal cortex and amygdala (Figs. 2, 3, 4) (Rolls and Grabenhorst 2008; Grabenhorst and Rolls 2011; Rolls 2014b).

Multiple routes to the initiation of actions and responses to rewarding and punishing stimuli in primates including humans. The lowest (spinal cord and brainstem) levels in the hierarchy are involved in reflexes, including for example reflex withdrawal of a limb to a nociceptive stimulus, and unlearned autonomic responses. The second level in the hierarchy involves associative learning in the amygdala and orbitofrontal cortex between primary reinforcers such as taste, touch and nociceptive stimuli and neutral stimuli such as visual and auditory stimuli from association cortex (e.g. inferior temporal visual cortex) to produce learned autonomic and some other behavioural responses such as approach. The anteroventral viscero-autonomic insula may be one link from the orbitofrontal cortex to autonomic output. A third level in the hierarchy is the route from the orbitofrontal cortex and amygdala via the basal ganglia especially the ventral striatum to produce implicit stimulus–response habits. A fourth level in the hierarchy important in emotion is from the orbitofrontal cortex to the anterior cingulate cortex for actions that depend on the value of the goal in action–outcome learning. For this route, the orbitofrontal cortex implements stimulus-reinforcer association learning, and the anterior cingulate cortex action–outcome learning (where the outcome refers to receiving or not receiving a reward or punisher). A fifth level in the hierarchy is from the orbitofrontal cortex [and much less the amygdala (Rolls et al. 2023a)] via multiple step reasoning systems involving syntax and language. Processing at this fifth level may be related to explicit conscious states. The fifth level may also allow some top-down control of emotion-related states in the orbitofrontal cortex by the explicit processing system. Pallidum/SN—the globus pallidus and substantia nigra

The systems level organization of the brain for emotion in primates including humans. In Tier 1, representations are built of visual, taste, olfactory and tactile stimuli that are independent of reward value and therefore of emotion. In Tier 2, reward value and emotion are represented. A pathway for top-down attentional and cognitive modulation of emotion is shown in purple. In Tier 3 actions are learned in the supracallosal (or dorsal) anterior cingulate cortex to obtain the reward values signaled by the orbitofrontal cortex and amygdala that are relayed in part via the pregenual anterior cingulate cortex and vmPFC. Decisions between stimuli of different reward value can be taken in the ventromedial prefrontal cortex, vmPFC. In Tier 3, orbitofrontal cortex inputs to the reasoning/language systems enable affective value to be incorporated and reported. In Tier 3, stimulus–response habits can also be produced using reinforcement learning. In Tier 3 autonomic responses can also be produced to emotion-provoking stimuli. Auditory inputs also reach the amygdala. V1—primary visual (striate) cortex; V2 and V4—further cortical visual areas. PFC—prefrontal cortex. The Medial PFC area 10 is part of the ventromedial prefrontal cortex (vmPFC). VPL—ventro-postero-lateral nucleus of the thalamus, which conveys somatosensory information to the primary somatosensory cortex (areas 1, 2 and 3). VPMpc—ventro-postero-medial nucleus pars parvocellularis of the thalamus, which conveys taste information to the primary taste cortex

Maps of architectonic areas in the orbitofrontal cortex (left, ventral view of the brain) and medial prefrontal cortex (right, medial view of the brain) of humans. Left: the medial orbitofrontal cortex includes areas 13 and 11 (green). The lateral orbitofrontal cortex includes area 12 (red). (Area 12 is sometimes termed area 12/47 in humans. The figure shows three architectonic subdivisions of area 12.) Almost all of the human orbitofrontal cortex except area 13a is granular. Agranular cortex is shown in dark grey. The part of area 45 shown is the orbital part of the inferior frontal gyrus pars triangularis. Right: the anterior cingulate cortex includes the parts shown of areas 32, 25 (subgenual cingulate), and 24. The ventromedial prefrontal cortex includes areas 14 (gyrus rectus) 10m and 10r. AON—anterior olfactory nucleus; Iai, Ial, Iam, Iapm—subdivisions of the agranular insular cortex. (After Öngür et al. (2003) Journal of Comparative Neurology with permission of John Wiley & Sons, Inc., modified from a redrawn version by Passingham and Wise (2012).)

The second process is instrumental learning of an action made to approach and obtain the reward (an outcome of the action) or to avoid or escape from the punisher (an outcome). This is action–outcome learning, and involves brain regions such as the anterior cingulate cortex when the actions are being guided by the goals (Rushworth et al. 2011, 2012; Rolls 2014b, 2018, 2019a, 2021b, 2023d). Emotion is an integral part of this, for it is the state elicited in the first stage, by stimuli that are decoded as rewards or punishers (Rolls 2014b). The behaviour is under control of the reward value of the goal, in that if the reward is devalued, for example by feeding a food until satiety is reached, then on the very next occasion that the stimulus (the food) is offered, no action will be performed to try to obtain it (Rolls 2014b).

The striatum, the rest of the basal ganglia, and the dopamine system can become involved when the behaviour becomes automatic and habit-based, that is, when it uses stimulus–response connections (Figs. 2, 3). In this situation, very little emotion may be elicited by the stimulus, as the behaviour has now become automated as a stimulus–response habit. For this type of learning, if the reward is devalued outside the situation, then the very next time that the stimulus is offered, the automated response is likely to be performed, providing evidence that the behaviour is no longer being guided by the reward value of the stimulus. The dopamine system is thought to be involved in this type of rather slow habit-based learning by providing an error signal to the striatum, which implements this type of habit learning (Schultz 2016c, b, 2017). The dopamine system probably receives its inputs from the orbitofrontal cortex (Rolls 2017; Rolls et al. 2023d). These brain systems are considered further below.
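The different effects of reward devaluation on goal-directed and habit-based behaviour can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the function names, the lever-press task, the threshold, and the simple delta-rule update (a standard textbook simplification of learning from a prediction error, not the model used in the cited work).

```python
def goal_directed_choice(action_outcome, outcome_value):
    """Act only if the outcome that the action leads to is currently valued."""
    outcome = action_outcome["press_lever"]
    return "press_lever" if outcome_value[outcome] > 0 else "no_action"


def train_habit(n_trials, reward=1.0, learning_rate=0.1):
    """Slowly strengthen a stimulus-response link from a prediction error."""
    strength = 0.0
    for _ in range(n_trials):
        prediction_error = reward - strength  # error signal on this trial
        strength += learning_rate * prediction_error
    return strength


def habit_choice(strength, threshold=0.5):
    """Respond whenever the learned stimulus-response link is strong enough."""
    return "press_lever" if strength > threshold else "no_action"


# Hypothetical task: pressing a lever leads to food, which starts out valued.
action_outcome = {"press_lever": "food"}
outcome_value = {"food": 1.0}
habit_strength = train_habit(n_trials=50)

before = (goal_directed_choice(action_outcome, outcome_value),
          habit_choice(habit_strength))

# Devaluation outside the task, e.g. feeding to satiety.
outcome_value["food"] = 0.0

after = (goal_directed_choice(action_outcome, outcome_value),
         habit_choice(habit_strength))
```

In this sketch the goal-directed route stops responding immediately after devaluation because it consults the current value of the outcome, whereas the habit route keeps responding because its stored strength can only change through further trials.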

Other functions of emotion include the elicitation of autonomic responses, via pathways for example from the orbitofrontal cortex to the anteroventral visceral/autonomic insula and to the subgenual cingulate cortex (Critchley and Harrison 2013; Rolls 2013b, 2014b, 2019b, a; Quadt et al. 2022).

Stimuli can elicit behaviours in a number of ways via different routes to action in primates including humans, as shown in Fig. 2. An important point made by Fig. 2 is that there are multiple routes to output, including to action, that can be produced by stimuli that produce emotional states. Here emotional states are the states elicited by reward and punishing/non-reward stimuli, as illustrated in Fig. 1. The multiple routes are organized in a set of hierarchies, with each level in the system added later in evolution, but with all levels left in operation over the course of evolution (Rolls 2016c). The result of this is that a response such as an autonomic response to a stimulus that happens also to be rewarding might be produced by only the lower levels of the system operating, without necessarily the highest (e.g. explicit) levels being involved. The lowest levels in the hierarchy illustrated in Fig. 2 are involved in reflexes, including for example reflex withdrawal of a limb to a nociceptive stimulus, and autonomic responses. The second level in the hierarchy can produce learned autonomic and some other behavioural responses to, for example, a previously neutral visual or auditory stimulus after it has been paired with a nociceptive stimulus or with a good taste stimulus. This route involves stimulus-reinforcer learning in the amygdala and orbitofrontal cortex. A third level in the hierarchy shown in Fig. 2 is the route from the orbitofrontal cortex and amygdala via the basal ganglia, especially the ventral striatum, to produce implicit stimulus–response habits. A fourth level in the hierarchy that is important in emotion is from especially the orbitofrontal cortex to the anterior cingulate cortex for goal-directed action. The emotional states implemented at this level may not necessarily be conscious. A fifth level in the hierarchy shown in Fig. 2 is from the orbitofrontal cortex [and much less the amygdala (Rolls et al. 2023a)] via multiple step reasoning systems involving syntax and language, which can be associated with explicit conscious states (especially I argue if a higher order syntactic thought system for correcting lower order thoughts is involved (Rolls 2008, 2014b, 2020, 2023d), see "A reasoning, rational, route to action"). It is emphasized that each of these types of output has adaptive value in preparing individuals to deal physiologically and behaviourally with what may generally be described as emotion-provoking events.

The neuroscience of emotion in humans and other primates

A framework for understanding the neuroscience of emotion in humans and other primates

A framework is shown in Fig. 3, and is built on evidence from neuronal recordings, the effects of brain damage, and fMRI in humans and macaques some of which is summarized below (Rolls 2014b, 2018, 2019a, 2021b, 2023d; Rolls et al. 2020b). Part of the evidence for what is shown in Fig. 3 comes from reward devaluation, in which when the reward value is changed, for example by feeding to satiety, neural responses to stimuli are little affected in Tier 1, but decrease to zero in Tier 2. Part of the evidence comes from the learning of associations between stimuli and reward value, which occurs mainly in Tier 2. Part of the evidence comes from the effects of brain damage on emotion, which occur primarily after damage to the orbitofrontal cortex and amygdala in Tier 2, and the cingulate cortex in Tier 3 (Rolls 2021c). The organization of reward value processing and therefore emotion in the rodent brain is very different (Rolls 2019b, 2021b, 2023d), and a brief summary about this is provided in "Brain systems for emotion and motivation in primates including humans compared to those in rodents".

In the context of what is shown in Fig. 3, the focus next is on key brain areas involved in emotion in humans and other primates, the orbitofrontal cortex, anterior cingulate cortex, and amygdala.

The orbitofrontal cortex

The connections and connectivity of the orbitofrontal cortex

The orbitofrontal cortex cytoarchitectonic areas of the human brain are shown in Fig. 4 (left). The medial orbitofrontal cortex includes areas 13 and 11 (Öngür et al. 2003). The lateral orbitofrontal cortex includes area 12 (sometimes in humans termed 12/47) (Öngür et al. 2003). The anterior cingulate cortex includes the parts shown in Fig. 4 (right) of areas 32, 25 (subgenual cingulate), and 24 (see also Figs. 5 and 6). The ventromedial prefrontal cortex includes areas 14 (gyrus rectus), 10m and 10r.

Summary of the effective connectivity of the human medial orbitofrontal cortex. The medial orbitofrontal cortex has taste, olfactory and inferior temporal visual cortex inputs, and connectivity with the hippocampus, pregenual anterior cingulate cortex, ventromedial prefrontal cortex (vmPFC), posterior cingulate cortex (e.g. 31), parietal cortex, inferior prefrontal cortex, and frontal pole. The main regions with which the medial OFC has connectivity are indicated by names with the words in black font. The width of the arrows and the size of the arrow heads in each direction reflects the strength of the effective connectivity. The abbreviations are listed in Rolls et al. (2023d)

Summary of the effective connectivity of the human lateral orbitofrontal cortex. The lateral orbitofrontal cortex has taste, olfactory and inferior temporal visual cortex inputs, and connectivity with the hippocampus, supracallosal (dorsal) anterior cingulate cortex, inferior and dorsolateral prefrontal cortex, and frontal pole. However, the lateral OFC also has connectivity with language regions (the cortex in the superior temporal sulcus and Broca’s area). The main regions with which the lateral OFC has connectivity are indicated by names with the words in black font. The width of the arrows and the size of the arrow heads in each direction reflects the strength of the effective connectivity. The abbreviations are listed in Rolls et al. (2023d)

Some of the main connections of the orbitofrontal cortex in primates are shown schematically in Fig. 3 (Carmichael and Price 1994, 1995; Barbas 1995, 2007; Petrides and Pandya 1995; Pandya and Yeterian 1996; Öngür and Price 2000; Price 2006, 2007; Saleem et al. 2008; Mackey and Petrides 2010; Petrides et al. 2012; Saleem et al. 2014; Henssen et al. 2016; Rolls 2017, 2019d, b; Rolls et al. 2020b). The orbitofrontal cortex receives inputs from the ends of every ventral cortical stream that processes the identity of visual, taste, olfactory, somatosensory, and auditory stimuli (Rolls 2019b, 2023d). At the ends of each of these cortical processing streams, the identity of the stimulus is represented independently of its reward value (Rolls 2023d). This is shown by neuronal recordings in primates (Rolls 2019b). For example, the inferior temporal cortex represents objects and faces independently of their reward value as shown by visual discrimination reversals, and by devaluation of reward tests by feeding to satiety (Rolls et al. 1977; Rolls 2012c, 2016c, 2019b). Similarly, the insular primary taste cortex represents what the taste is independently of its reward value (Yaxley et al. 1988; Rolls 2015, 2016d, 2019b, 2023d).

Outputs of the orbitofrontal cortex reach the anterior cingulate cortex, the striatum, the insula, and the inferior frontal gyrus (Rolls 2019a, 2023d; Rolls et al. 2023d), and enable the reward value representations in the orbitofrontal cortex to influence behaviour (Fig. 3, green). The orbitofrontal cortex projects reward value outcome information (e.g. the taste of food) to the anterior cingulate cortex, where it is used to provide the reward outcomes for action–outcome learning (Rushworth et al. 2012; Rolls a, 2019b, 2023d). The orbitofrontal cortex also projects expected reward value information (e.g. the sight of food) to the anterior cingulate cortex where previously learned actions for that goal can be selected. The orbitofrontal cortex projects reward-related information to the ventral striatum (Williams et al. 1993), and this provides a route, in part via the habenula, for reward-related information to reach the dopamine neurons (Rolls 2017), which respond inter alia to positive reward prediction error (Bromberg-Martin et al. 2010; Schultz 2016b). The striatal/basal ganglia route is used for stimulus–response (habit) learning (Everitt and Robbins 2013; Rolls 2014b, 2023d), with dopamine used to provide reward prediction error in reinforcement learning (Schultz 2016c; Cox and Witten 2019). As that system uses dopamine in reinforcement learning of stimulus–response habits, it is much slower to learn than the orbitofrontal cortex (outcome) with anterior cingulate cortex (action) system for action-outcome goal-based learning, and for emotion (Rolls 2021b). The orbitofrontal cortex projects to the insula as an output pathway, and this includes a projection to the viscero-autonomic cortex in the antero-ventral insula (Hassanpour et al. 2018; Quadt et al. 2022) that helps to account for why the insula is activated in some tasks in which the orbitofrontal cortex is involved (Rolls 2016d, 2019b, 2023d). This antero-ventral part of the insula (Quadt et al. 2022) is just ventral to the primary taste cortex, and has very strong connections in primates to (and probably from) the orbitofrontal cortex (Baylis et al. 1995). The orbitofrontal cortex also projects to the inferior frontal gyrus, a region that on the right is implicated in stopping behaviour (Aron et al. 2014).

New evidence on the connectivity of the orbitofrontal cortex in humans is shown in Figs. 5, 6, 7, based on measurements of effective connectivity between 360 cortical regions and 24 subcortical regions measured in 171 humans from the Human Connectome Project, and complemented with functional connectivity and diffusion tractography (Rolls et al. 2023d). Effective connectivity measures ‘causal’ effects (in that they take into account time delays) in each direction between every pair of brain regions. (Although time delays are a signature of causality, further evidence is needed to prove causality, such as interventions (Rolls 2021f, e).) The effective connectivities of the orbitofrontal cortex with other brain regions are summarised in Figs. 5, 6, 7 (Rolls et al. 2023a; d). The medial and lateral orbitofrontal cortex between them (and they have effective connectivity with each other) receive taste, somatosensory, olfactory, visual, and auditory inputs that are needed to build the reward and punishment value representations that are found in these regions but much less in the preceding cortical areas that provide these inputs (Rolls 2019d, 2019b, 2021a, 2023d). Taste and somatosensory inputs provide information about primary reinforcers or outcome value, and the orbitofrontal cortex contains visual and olfactory neurons that can learn and reverse in one trial the associations with primary reinforcers and so represent expected value (Thorpe et al. 1983). This is consistent with the schematic diagram in Fig. 3.
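The idea that time delays can indicate the direction of an effect between two regions can be illustrated with a toy simulation. This is not the effective connectivity algorithm of Rolls et al. (2023d); it is a minimal sketch in which a hypothetical signal `x` drives a hypothetical signal `y` with a one-step delay, and a simple lagged correlation is compared in the two directions. All names and parameter values are assumptions.

```python
import random


def simulate(n_steps=5000, coupling=0.6, decay=0.5, seed=0):
    """Simulate two noisy signals in which x drives y with a one-step delay."""
    rng = random.Random(seed)
    x, y = [0.0], [0.0]
    for _ in range(n_steps - 1):
        x_next = decay * x[-1] + rng.gauss(0, 1)
        y_next = decay * y[-1] + coupling * x[-1] + rng.gauss(0, 1)
        x.append(x_next)
        y.append(y_next)
    return x, y


def lagged_correlation(source, target, lag=1):
    """Pearson correlation between source at time t and target at time t + lag.

    The lag must be at least 1.
    """
    a = source[:-lag]
    b = target[lag:]
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / (var_a * var_b) ** 0.5


x, y = simulate()
x_to_y = lagged_correlation(x, y)  # past of x against future of y
y_to_x = lagged_correlation(y, x)  # past of y against future of x
```

With these settings the lagged correlation is larger in the x-to-y direction than in the y-to-x direction, reflecting the direction of the simulated influence. As noted above, such a time-delay asymmetry is only a signature of causality, and interventions would be needed to establish it.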

Effective connectivity of the human orbitofrontal cortex, vmPFC, and anterior cingulate cortex shown in the middle, with inputs on the left and outputs on the right. The effective connectivity was measured in 171 participants imaged at 7 T by the Human Connectome Project, and was measured between the 360 cortical regions in the HCP-multimodal parcellation atlas (Glasser et al. 2016a), with subcortical regions using the HCPex atlas (Huang et al. 2022). The effective connectivity measures the effect in each direction between every pair of cortical regions, uses time delays to assess the directionality using a Hopf computational model which integrates the dynamics of Stuart–Landau oscillators in each cortical region, has a maximal value of 0.2, and is described in detail elsewhere (Rolls et al. 2022a; b, 2023d). The width of the arrows is proportional to the effective connectivity in the highest direction, and the size of the arrowheads reflects the strength of the effective connectivity in each direction. The effective connectivities shown by the numbers are for the strongest link where more than one link between regions applies for a group of brain regions. Effective connectivities with hippocampal memory system regions are shown in green; with premotor/mid-cingulate regions in red; with the inferior prefrontal language system in blue; and in yellow to the basal forebrain nuclei of Meynert which contains cholinergic neurons that project to the neocortex and to the septal nuclei which contain cholinergic neurons that project to the hippocampus. The Somatosensory regions include 5 and parietal PF and PFop, which also connect to the pregenual anterior cingulate but are not shown for clarity; the Parietal regions include visual parietal regions 7, PGi and PFm. (From Rolls et al (2023d))

In more detail (Fig. 5) (Rolls et al. 2023a; d), parts of the medial orbitofrontal cortex (11l, 13l, OFC and pOFC, which are interconnected) have effective connectivity with the taste/olfactory/visceral anterior agranular insular complex (AAIC); the piriform (olfactory) cortex; the entorhinal cortex (EC); the inferior temporal visual cortex (TE1p, TE2a, TE2p); superior medial parietal 7Pm; inferior parietal PF which is somatosensory (Rolls et al. 2023e, f); with parts of the posterior cingulate cortex (31pv, 7m, d23ab) related to memory (Rolls et al. 2023i); with the pregenual anterior cingulate cortex (s32, a24, p24, p32, d32) and much less with the supracallosal anterior cingulate cortex (only 33pr); with ventromedial prefrontal 10r, 10d and 9m; with the frontal pole (10pp, p10p, a10p); with lateral orbitofrontal cortex (47m, 47s, a47r); and dorsolateral prefrontal cortex (46 and a9-46v) (Rolls et al. 2023e). Medial orbitofrontal cortex regions also have effective connectivity directed towards the caudate nucleus and nucleus accumbens (Rolls et al. 2023d).

Also with some detail, the lateral orbitofrontal cortex areas a47r, p47r and 47m share generally similar effective connectivities (Fig. 6) (Rolls et al. 2023a; d) from the visual inferior temporal cortex (TE areas); from parts of the parietal cortex [PFm which receives visual and auditory object-level information and IP2 which is visuomotor (Rolls et al. 2023f)]; from the medial orbitofrontal cortex (11l, 13l, pOFC); from the inferior frontal gyrus regions including IFJ, IFS and BA45; from the dorsolateral prefrontal cortex (8Av, 8BL, a9-46v and p9-46v) implicated in short-term memory (Rolls 2023d; Rolls et al. 2023e); and from the frontal pole (a10p, p10p, 10pp) (Rolls et al. 2023a; d). 47m (which is relatively medial in this group) also has effective connectivity with the hippocampal system (Hipp, EC, perirhinal, and TF), and with ventromedial prefrontal region 10r; and with the frontal pole [10d, and 9m (Rolls et al. 2023c)]. The diffusion tractography provides in addition evidence for connections of these parts of the lateral orbitofrontal cortex with the anterior ventral insular region (AVI) and the frontal opercular areas FOP4 and FOP5 which include the insular primary taste cortex (Rolls 2015, 2016d; Rolls et al. 2023a, d); with the anterior agranular insular complex (AAIC) which may be visceral (Rolls 2016d) and also has taste-olfactory convergence (De Araujo et al. 2003a); with the middle insular region (MI) which is somatosensory (Rolls et al. 2023e); and with the piriform (olfactory) cortex.

The human orbitofrontal cortex has connectivity to the hippocampal memory/navigation system that is both direct, and via the ventromedial area 10 regions (10r, 10d, 10v and 9m), pregenual anterior cingulate cortex, and the memory-related parts of the posterior cingulate cortex (Fig. 7). It is proposed that this connectivity provides a key input about reward/punishment value for the hippocampal episodic memory system, adding to the ‘what’, ‘where’, and ‘when’ information that are also key components of episodic memory (Rolls 2022b; Rolls et al. 2023d). Damage to the vmPFC/anterior cingulate cortex system is likely to contribute to episodic memory impairments by impairing a key component of episodic memory, the reward/punishment/emotional value component (Rolls 2022b; Rolls et al. 2023d). Moreover, the medial orbitofrontal cortex connects to the nucleus basalis of Meynert and the pregenual cingulate to the septum, and damage to these cortical regions may contribute to memory impairments by disrupting cholinergic influences on the neocortex and hippocampus (Rolls 2022b; Rolls et al. 2023d). Navigation is generally towards goals, usually rewards, and it is proposed that this connectivity provides the goals for navigation to the hippocampal system to enable the hippocampus to be involved in navigation towards goals (Rolls 2022b, 2023c; Rolls et al. 2023d).

Two regions of the lateral orbitofrontal cortex, 47l and 47s, are especially connected with language systems in the temporal pole, cortex in the superior temporal sulcus (STS), and inferior frontal gyrus including Broca’s area 45 and 44 (Rolls et al. 2022a). This provides a route for subjective reports to be made about the pleasantness or unpleasantness of stimuli and events (Rolls 2023d).

In the context that the anterior cingulate cortex is implicated in learning associations between actions and the rewards or punishers associated with the actions (Noonan et al. 2011; Rushworth et al. 2012; Rolls 2019a, 2023d), the part of the anterior cingulate cortex that is most likely to be involved in action–outcome learning is the supracallosal (or dorsal) anterior cingulate cortex. That part has effective connectivity with somato-motor areas involved in actions and, as shown in Fig. 7, receives inputs from the medial orbitofrontal cortex and pregenual anterior cingulate cortex that, it is proposed, provide the reward/punishment ‘outcome’ signals necessary for action–outcome learning (Rolls 2023d; Rolls et al. 2023d).

The human medial orbitofrontal cortex represents reward value

The primate including human orbitofrontal cortex is the first stage of cortical processing that represents reward value (red in Fig. 3) (Rolls 2019b, d, 2021b). For example, in devaluation experiments, taste, olfactory, visual, and oral texture neurons in the macaque orbitofrontal cortex respond to food when hunger is present, and not after feeding to satiety when the food is no longer rewarding (Rolls et al. 1989; Critchley and Rolls 1996). An example of a devaluation experiment is shown in Fig. 8, which shows that as the value of the taste of glucose is reduced by feeding glucose to satiety, a typical orbitofrontal cortex neuron responding to the taste of food when it is rewarding at the start of the experiment gradually reduces its response to zero as the reward value reaches zero because glucose had been consumed. In fact, the experiment shows more than this, for the effect is relatively specific to the food eaten to satiety: there was little reduction of the firing rate to the flavour of fruit (blackcurrant) juice after glucose had been fed to satiety. Correspondingly, the blackcurrant juice was still rewarding after feeding to satiety with glucose (Fig. 8). Thus satiety is somewhat specific to the reward that has been received, and this is termed sensory-specific satiety. In fact, sensory-specific satiety was discovered when we were recording from lateral hypothalamic neurons responding to the taste and/or sight of food (Rolls et al. 1986). We traced back the computation to the orbitofrontal cortex, in which neurons show sensory-specific satiety to a primary reinforcer, the taste of food (Rolls et al. 1989), and to a secondary reinforcer, the sight and smell of food (Critchley and Rolls 1996). Devaluation effects are not found in the stages that provide taste information to the orbitofrontal cortex, the insular/opercular primary taste cortex (Rolls et al. 1988; Yaxley et al. 1988), nor in the brain region that provides visual inputs to the orbitofrontal cortex, the inferior temporal visual cortex (Rolls et al. 1977). This is some of the evidence on which Fig. 3 is based. The devaluation procedure has been adopted by others (Rudebeck et al. 2017; Murray and Rudebeck 2018; Murray and Fellows 2022).

(Reproduced from Rolls et al. 1989, Copyright 1989 Society for Neuroscience.)

The effect of feeding to satiety with glucose solution on the responses (firing rate ± s.e.m.) of a neuron in the orbitofrontal (secondary taste) cortex to the taste of glucose (open circles) and of blackcurrant juice (BJ). The spontaneous firing rate is also indicated (SA). Below the neuronal response data, the behavioural measure of the acceptance or rejection of the solution on a scale from + 2 (strong acceptance) to − 2 (strong rejection) is shown. The solution used to feed to satiety was 20% glucose. The monkey was fed 50 ml of the solution at each stage of the experiment as indicated along the abscissa, until he was satiated as shown by whether he accepted or rejected the solution. Pre is the firing rate of the neuron before the satiety experiment started.

This discovery of sensory-specific satiety has enormous implications, for it is proposed to apply to all rewards and to no punishers (Rolls 2014b, 2018, 2022a), and has the evolutionary adaptive value that behaviour switches from one reward to another. This ensures for example that a wide range of nutrients will be ingested [as we showed in experiments we performed with Oxford undergraduates after the neurophysiological discovery (Rolls et al. 1981a, b, c)] (though obesity is a resulting risk if a wide range of nutrients becomes easily available for humans) (Rolls 2016a); and more generally tends to promote reproductive success for the genes, in that a wide range of possible rewards will be explored (Rolls 2014b, 2018) (see "Some implications and extensions of the understanding of emotion, motivation, and their brain mechanisms"). Sensory-specific satiety is thus a key factor in emotion.

Further evidence that reward value is represented in the orbitofrontal cortex is that in visual discrimination reversal experiments, neurons in the macaque orbitofrontal cortex reverse the visual stimulus to which they respond in as little as one trial when the reward vs punishment taste received as an outcome for the choice reverses (Thorpe et al. 1983; Rolls et al. 1996). This is rule-based reversal, in that after a previously rewarded visual stimulus is no longer rewarded, the macaques choose the other stimulus on the very next trial, even though its previous reward association was with punishment, as illustrated in Fig. 10c which also illustrates a non-reward neuron active at the time of the reversal (Thorpe et al. 1983). (Non-reward refers here to not obtaining an expected reward.) This capability requires a rule to be held in memory and reversed by non-reward (Deco and Rolls 2005c; Rolls and Deco 2016) (which is described as model-based), is very appropriate for primates including humans who in social situations may benefit from being very responsive to non-reward vs reward signals, and may not occur in rodents (Rolls 2019b, 2021b; Hervig et al. 2020). The macaque orbitofrontal cortex also contains visual neurons that reflect face expression and face identity (both necessary to decode the reward/punishment value of an individual) (Thorpe et al. 1983; Rolls et al. 2006), and also social categories such as young faces (Barat et al. 2018). Information about face expression and movements important in social communication probably reaches the orbitofrontal cortex from neurons we discovered in the cortex in the macaque superior temporal sulcus that respond to these stimuli (Hasselmo et al. 1989a, b), in what is a region now accepted as important for decoding visual stimuli relevant to social behaviour (Pitcher et al. 2019; Pitcher and Ungerleider 2021). 
Economic value is represented in the orbitofrontal cortex, in that for example single neurons reflect the trade-off between the quality of a reward and the amount that is available (Padoa-Schioppa and Cai 2011; Padoa-Schioppa and Conen 2017). These investigations show that some orbitofrontal cortex neurons respond to outcome value (e.g. the taste of food), and others to expected value (of future rewards). The expected value neurons are not positive reward prediction error neurons, for they keep responding to the expected reward even when there is no prediction error (Rolls 2021b). Consistent with this, lesions of the macaque medial orbitofrontal cortex areas 13 and 11 make the animals less sensitive to reward value, as tested in devaluation experiments in which the animal is fed to satiety (Rudebeck et al. 2017). Neurotoxic lesions of the macaque orbitofrontal cortex produce effects that are difficult to interpret (Murray and Rudebeck 2018; Sallet et al. 2020), perhaps because these lesions have not always been based on knowledge of where neurons and activations related to reversal learning are found, and because of the difficulty of disabling all such orbitofrontal cortex neurons. Further, the tasks used in these studies are sometimes complicated, whereas a prototypical task is deterministic one-trial Go-NoGo rule-based visual discrimination reversal between a visual stimulus and taste (Thorpe et al. 1983; Rolls et al. 1996), or in humans between a visual stimulus and winning or losing points or money (Rolls et al. 2020c). Rodents appear not to be able to perform this one-trial rule-based visual-reward reversal task (Hervig et al. 2020).
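The logic of one-trial rule-based reversal described above can be illustrated with a minimal sketch. It assumes a simplified two-stimulus choice task rather than the Go-NoGo task actually used, and the function name, stimulus labels, and schedule are hypothetical. The agent holds a single rule in memory and flips it whenever an expected reward is not obtained, so the switch occurs on the very next trial without any gradual relearning of stimulus-reward associations.

```python
def simulate_rule_based_reversal(rewarded_by_trial):
    """Simulate a rule-based agent in a two-stimulus reversal task.

    rewarded_by_trial: list of 'A' or 'B', the stimulus that is rewarded
    on each trial (a hypothetical schedule set by the experimenter).
    Returns a list of (choice, got_reward) tuples, one per trial.
    """
    # Assumption: the rule held in memory starts out correct.
    rule = rewarded_by_trial[0]
    history = []
    for rewarded in rewarded_by_trial:
        choice = rule                      # choose what the current rule says
        got_reward = (choice == rewarded)
        history.append((choice, got_reward))
        if not got_reward:
            # Non-reward reverses the rule held in memory.
            rule = 'B' if rule == 'A' else 'A'
    return history


# Two reversals: A rewarded, then B, then A again.
schedule = ['A'] * 3 + ['B'] * 3 + ['A'] * 2
history = simulate_rule_based_reversal(schedule)
errors = sum(1 for _, got_reward in history if not got_reward)
print(history)
print("non-rewarded trials:", errors)
```

In this sketch the only non-rewarded trials are the first trial after each reversal, which is the behavioural signature of rule-based reversal; an associative learner that had to update stimulus values incrementally would typically take more trials to switch.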

Neuroimaging experiments in humans produce consistent evidence (De Araujo et al. 2003a; Kringelbach et al. 2003; Kringelbach and Rolls 2003; Grabenhorst and Rolls 2008; Grabenhorst et al. 2008a), and allow the types of reward to be extended to include monetary reward (O'Doherty et al. 2001; Xie et al. 2021), face expressions (Kringelbach and Rolls 2003), and face beauty (O'Doherty et al. 2003). Further, in humans activations of the medial orbitofrontal cortex are linearly related to the subjective (conscious) pleasantness of stimuli (Grabenhorst and Rolls 2011; Rolls 2019b). These reward-related effects are found for odors (Rolls et al. 2003b), flavor (De Araujo et al. 2003a; Kringelbach et al. 2003), pleasant touch (Rolls et al. 2003c; McCabe et al. 2008), monetary reward (O'Doherty et al. 2001; Xie et al. 2021), and amphetamine (Völlm et al. 2004). A recent study with 1140 participants emphasizes these points, by showing that the medial orbitofrontal cortex is activated by reward (such as winning money or candies), and that the lateral orbitofrontal cortex is activated by not winning [Fig. 9 (Xie et al. 2021)].

(Modified from Xie et al. 2021.)

The lateral orbitofrontal cortex is activated by not winning, and the medial orbitofrontal cortex by winning, in the monetary incentive delay task. The lateral orbitofrontal cortex region in which activations increased towards no reward (No Win) in the monetary incentive delay task is shown in red in 1140 participants at age 19 and in 1877 overlapping participants at age 14. The conditions were Large Win (10 points) to Small Win (2 points) to No Win (0 points) (at 19; sweets were used at 14). The medial orbitofrontal cortex region in which activations increased with increasing reward (from No Win to Small Win to Large Win) is shown in green. The parameter estimates are shown from the activations for the participants (mean ± sem) with the lateral orbitofrontal cortex in red and medial orbitofrontal cortex in green. The interaction term showing the sensitivity of the medial orbitofrontal cortex to reward and the lateral orbitofrontal cortex to non-reward was significant at p = 10⁻⁵⁰ at age 19 and p < 10⁻⁷² at age 14. In a subgroup with depressive symptoms as shown by the Adolescent Depression Rating Scale, it was further found that there was a greater activation to the No Win condition in the lateral orbitofrontal cortex; and the medial orbitofrontal cortex was less sensitive to the differences in reward value.

Further, humans with orbitofrontal cortex lesions may also be less sensitive to reward, as shown by their reduced subjective emotional feelings (Hornak et al. 2003), and their difficulty in identifying face and voice emotion-related expressions, which are important for emotional and social behaviour (Hornak et al. 1996, 2003).

The human lateral orbitofrontal cortex represents punishers and non-reward, and is involved in changing emotional behaviour

The macaque orbitofrontal cortex has neurons that respond when an expected reward is not received (Thorpe et al. 1983), and these have been termed non-reward neurons (Rolls 2014b, 2019b, d, 2021b) (see example in Fig. 10c). They can be described as negative reward prediction error neurons, in that they respond when a reward outcome is less than was expected (Rolls 2019b). These neurons do not respond to expected punishers [e.g. the discriminative stimulus for saline in Fig. 10c (Thorpe et al. 1983)], but other neurons do respond to expected punishers (Rolls et al. 1996), showing that non-reward and punishment are represented by different neurons in the orbitofrontal cortex. The finding of non-reward neurons is robust, in that 18/494 (3.6%) of the neurons in the original study responded to non-reward (Thorpe et al. 1983), consistent results were found in different tasks in a complementary study (10/140 non-reward neurons in the orbitofrontal cortex or 7.1%) (Rosenkilde et al. 1981), and an fMRI study has shown that the macaque lateral orbitofrontal cortex is activated when an expected reward is not obtained during reversal (Chau et al. 2015) (Fig. 10d). The hypothesis is that the non-reward responsiveness of these neurons is computed in the orbitofrontal cortex, because this is the first brain region in primates at which expected value and outcome value are represented, as summarized in Fig. 3 and with the evidence set out fully by Rolls (2019b, 2021b, 2023d), and these two signals are those required to compute non-reward, that is, that reward outcome is less than the expected value.
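The computation hypothesized in this paragraph, in which non-reward is derived from expected value and outcome value, can be written as a minimal sketch. The scalar coding of value (+1 for juice reward, −1 for aversive saline), the gating on a positive expected value, and the function name are illustrative assumptions, not a description of how the neurons implement the computation.

```python
def non_reward_signal(expected_value: float, outcome_value: float) -> float:
    """Illustrative non-reward (negative reward prediction error) signal.

    Assumption: values are scalars, positive for rewards and negative
    for punishers. The signal is non-zero only when a reward was
    expected and the outcome is worse than expected.
    """
    if expected_value <= 0.0:
        # No reward was expected (e.g. the stimulus predicts saline),
        # so by assumption the non-reward signal stays silent.
        return 0.0
    return max(0.0, expected_value - outcome_value)


# Hypothetical trial types from a reversal task.
print(non_reward_signal(1.0, 1.0))    # reward expected, reward obtained
print(non_reward_signal(1.0, -1.0))   # reward expected, saline obtained
print(non_reward_signal(-1.0, -1.0))  # saline expected, saline obtained
```

The gating on a positive expected value reflects the finding that non-reward neurons do not respond to expected punishers, which are signalled by other neurons; a pure subtraction without that gate would not capture this dissociation.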

a Evidence that the human lateral orbitofrontal cortex is activated by non-reward. Activation of the lateral orbitofrontal cortex in a visual discrimination reversal task on reversal trials, when a face was selected but the expected reward was not obtained, indicating that the subject should select the other face in future to obtain the reward. a A ventral view of the human brain with indication of the location of the two coronal slices (b, c) and the transverse slice (d). The activations with the red circle in the lateral orbitofrontal cortex (OFC, peaks at [42 42 − 8] and [− 46 30 − 8]) show the activation on reversal trials compared to the non-reversal trials. For comparison, the activations with the blue circle show the fusiform face area produced just by face expressions, not by reversal, which are also indicated in the coronal slice in c. b A coronal slice showing the activation in the right orbitofrontal cortex on reversal trials. Activation is also shown in the supracallosal anterior cingulate region (Cingulate, green circle) that is also known to be activated by many punishing, unpleasant, stimuli (see Grabenhorst and Rolls (2011)). (From NeuroImage 20 (2), Morten L. Kringelbach and Edmund T. Rolls, Neural correlates of rapid reversal learning in a simple model of human social interaction, pp. 1371–83, Copyright, 2003, with permission from Elsevier.). b Activations in the human lateral orbitofrontal cortex are related to a signal to change behaviour in the stop-signal task. In the task, a left or right arrow on a screen indicates which button to touch. However on some trials, an up-arrow then appears, and the participant must change the behaviour, and stop the response. 
There is a larger response on trials on which the participant successfully changes the behaviour and stops the response, as shown by the contrast stop-success–stop-failure, in the ventrolateral prefrontal cortex in a region including the lateral orbitofrontal cortex, with peak at [− 42 50 − 2] indicated by the cross-hairs, measured in 1709 participants. There were corresponding effects in the right lateral orbitofrontal cortex [42 52 − 4]. Some activation in the dorsolateral prefrontal cortex in an area implicated in attention is also shown. (After Deng, Rolls et al. 2016). c Non-reward error-related neurons maintain their firing after non-reward is obtained. Responses of an orbitofrontal cortex neuron that responded only when the macaque licked to a visual stimulus during reversal, expecting to obtain fruit juice reward, but actually obtained the taste of aversive saline because it was the first trial of reversal (trials 3, 6, and 13). Each vertical line represents an action potential; each L indicates a lick response in the Go-NoGo visual discrimination task. The visual stimulus was shown at time 0 for 1 s. The neuron did not respond on most reward (R) or saline (S) trials, but did respond on the trials marked S x, which were the first or second trials after a reversal of the visual discrimination on which the monkey licked to obtain reward, but actually obtained saline because the task had been reversed. The two times at which the reward contingencies were reversed are indicated. After responding to non-reward, when the expected reward was not obtained, the neuron fired for many seconds, and was sometimes still firing at the start of the next trial. It is notable that after an expected reward was not obtained due to a reversal contingency being applied, on the very next trial the macaque selected the previously non-rewarded stimulus. This shows that rapid reversal can be performed by a non-associative process, and must be rule-based. (After Thorpe et al. 1983.) 
d BOLD signal in the macaque lateral orbitofrontal cortex related to win-stay/lose-shift performance, that is, to reward reversal performance. (After Chau et al. 2015)

Corresponding to this, the human lateral orbitofrontal cortex is activated when a reward is not obtained in a visual discrimination reversal task (Kringelbach and Rolls 2003) (Fig. 10a), and when money is not received in a monetary reward task (O'Doherty et al. 2001; Xie et al. 2021), and in a one-trial reward reversal task (Rolls et al. 2020c). Further, the human lateral orbitofrontal cortex is also activated by punishing, subjectively unpleasant, stimuli (Grabenhorst and Rolls 2011; Rolls 2019b, d, 2021b). Examples include unpleasant odors (Rolls et al. 2003b), pain (Rolls et al. 2003c), losing money (O'Doherty et al. 2001), and receiving an angry face expression indicating that behaviour should change in a reversal (Kringelbach and Rolls 2003). The human right lateral orbitofrontal cortex/inferior frontal gyrus is also activated when behavioural correction is required in the stop-signal task (Fig. 10b) (Aron et al. 2014; Deng et al. 2017). These discoveries show that one way in which the orbitofrontal cortex is involved in decision-making and emotion is by representing rewards, punishers, and errors made during decision-making. This is supported by the problems that orbitofrontal cortex damage produces in decision-making, which include failing to respond correctly to non-reward, as described next.

Consistent with this neurophysiological and neuroimaging evidence, lesions of the orbitofrontal cortex can impair reward reversal learning during decision-making in humans (Rolls et al. 1994; Hornak et al. 2004; Fellows 2011), who continue responding to the previously rewarded, now non-rewarded, stimulus. The change in contingency between the stimulus and reward vs non-reward is not processed correctly. In macaques, damage to the lateral orbitofrontal cortex impairs reversal and extinction (Butter 1969; Iversen and Mishkin 1970), and damage of the lateral orbitofrontal cortex area 12 extending around the inferior convexity impaired the ability to make choices based on whether reward vs non-reward had been received (Rudebeck et al. 2017; Murray and Rudebeck 2018). Further evidence that the lateral orbitofrontal cortex is involved in learning contingencies between stimuli and reward vs non-reward is that in humans, lateral orbitofrontal cortex damage impaired this type of 'credit assignment' (Noonan et al. 2017). This type of flexibility of behaviour is important in primate including human social interactions, and indeed many of the effects of damage to the human orbitofrontal cortex, including the difficulty in responding appropriately to the changed circumstances of the patient, and the changed personality including impulsivity, can be related to these impairments in responding to non-reward and punishers (Rolls et al. 1994; Berlin and Rolls 2004; Berlin et al. 2004; Hornak et al. 2004; Rolls 2018, 2019b, d, 2021c, b; Rolls et al. 2020b).

The ventromedial prefrontal cortex and reward-related decision-making

The ventromedial prefrontal cortex (vmPFC, which can be taken to include the gyrus rectus area 14 and parts of 10m and 10r, Fig. 4) receives inputs from the orbitofrontal cortex, and has distinct connectivity [with strong functional connectivity with the superior medial prefrontal cortex, cingulate cortex, and angular gyrus (Du et al. 2020; Rolls et al. 2023d)]. The vmPFC has long been implicated in reward-related decision-making (Bechara et al. 1997, 2005; Glascher et al. 2012). This region is activated during decision-making contrasted with reward valuation (Grabenhorst et al. 2008b; Rolls and Grabenhorst 2008), and it has the signature of a decision-making region of increasing its activation in proportion to the difference in the decision variables, which correlates with decision confidence (Rolls et al. 2010a, b; Rolls 2019b, 2021b). Consistently, in macaques single neurons in the ventromedial prefrontal cortex rapidly come to signal the value of the chosen offer, suggesting that this vmPFC system serves to produce a choice (Strait et al. 2014), also consistent with the attractor model of decision-making (Rolls and Deco 2010; Rolls et al. 2010a, b; Rolls 2014b, 2016c, 2021b).

The attractor model of decision-making is a neuronal network with associatively modifiable recurrent collateral synapses between the neurons of the type prototypical of the cerebral cortex (Wang 2002; Rolls and Deco 2010; Rolls 2021b) (see Fig. 11). The decision variables (the inputs between which a decision needs to be made) are applied simultaneously, and the network, after previous training with these decision variables, reaches a state where the population of neurons representing one of the decision variables has a high firing rate (Rolls and Deco 2010; Deco et al. 2013; Rolls 2016c, 2021b). There is noise or randomness in this model of decision-making that is related to the approximately Poisson distributed firing times of neurons for a given mean firing rate. This approach to decision-making (see also Rolls et al. 2010a, b), illustrated in Fig. 11, provides a much more biologically well-founded model with integrate-and-fire neurons coupled in an attractor network than accumulator models of decision-making in which noise is added to two variables to see which one wins (Deco et al. 2013; Shadlen and Kiani 2013).
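The competition between two attractor populations can be illustrated with a heavily reduced sketch. It is not the integrate-and-fire network referred to above. It assumes two firing-rate units with self-excitation, direct mutual inhibition (standing in for the inhibitory interneurons), a threshold-linear activation function, and Gaussian input noise in place of the noise that arises from approximately Poisson spiking. All parameter values and function names are hypothetical choices made for illustration.

```python
import random


def run_decision_trial(lam1, lam2, rng, w_self=1.5, w_inh=2.0,
                       noise_sd=0.05, dt_over_tau=0.05,
                       threshold=0.5, max_steps=2000):
    """Return 1 or 2, the identity of the population that wins."""
    def f(x):
        # Threshold-linear activation saturating at a maximal rate of 1.
        return min(1.0, max(0.0, x))

    r1 = r2 = 0.0  # both populations start in a low firing rate state
    for _ in range(max_steps):
        # Recurrent excitation, inhibition from the competitor,
        # the decision variable (lambda), and noise.
        in1 = w_self * r1 - w_inh * r2 + lam1 + rng.gauss(0.0, noise_sd)
        in2 = w_self * r2 - w_inh * r1 + lam2 + rng.gauss(0.0, noise_sd)
        r1 += dt_over_tau * (-r1 + f(in1))
        r2 += dt_over_tau * (-r2 + f(in2))
        if r1 > threshold:
            return 1
        if r2 > threshold:
            return 2
    # Fallback if neither population crossed threshold in time.
    return 1 if r1 >= r2 else 2


def choice_probability(lam1, lam2, n_trials=200, seed=0):
    """Fraction of trials on which population 1 wins."""
    rng = random.Random(seed)
    wins = sum(run_decision_trial(lam1, lam2, rng) == 1
               for _ in range(n_trials))
    return wins / n_trials


print("equal inputs:  ", choice_probability(0.10, 0.10))
print("lambda1 larger:", choice_probability(0.15, 0.05))
```

With equal inputs the noise alone determines which population wins on a given trial, so the choice is probabilistic, whereas a larger λ1 biases the outcome towards decision 1. The value returned is the identity of the winning population rather than a scalar, which corresponds to the point that the output of the decision needs to be the specific reward that won.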

a Attractor or autoassociation single network architecture for decision-making. The evidence for decision 1 is applied via the λ1 inputs, and for decision 2 via the λ2 inputs. The synaptic weights have been associatively modified during training in the presence of λ1 and at a different time of λ2. When λ1 and λ2 are applied, each attractor competes through the inhibitory interneurons (not shown), until one wins the competition, and the network falls into one of the high firing rate attractors that represents the decision. The noise in the network caused by the random spiking of the neurons means that on some trials, for given inputs, the neurons in the decision 1 (D1) attractor are more likely to win, and on other trials the neurons in the decision 2 (D2) attractor are more likely to win. This makes the decision-making probabilistic, for, as shown in c, the noise influences when the system will jump out of the spontaneous firing stable (low energy) state S, and whether it jumps into the high firing state for decision 1 (D1) or decision 2 (D2). b The architecture of the integrate-and-fire network used to model decision-making (see text). c A multistable “effective energy landscape” for decision-making with stable states shown as low “potential” basins. Even when the inputs are being applied to the network, the spontaneous firing rate state is stable, and noise provokes transitions into the high firing rate decision attractor state D1 or D2 (see Rolls and Deco 2010; Rolls et al. 2010a, b; Rolls 2021b)

A key conceptual point can be made here about reward-related decision-making, which will typically be between two or more rewards. The inputs (decision variables, λ1 and λ2 in Fig. 11) that drive each of the reward attractor neuronal populations in Fig. 11, need to produce as output the identity of the reward signal, so that behaviour can be directed towards making these goal neurons fire. Effectively, the two sets of output neurons in Fig. 11, each driven by λ1 and λ2 in Fig. 11, are the reward neurons, competing with each other through the inhibitory interneurons. This results in the output of the decision-making network being the identity of the reward that won, and that can be used as the goal for behaviour. It is not useful to have a common currency for reward, if common currency means some general reward representation (Cabanac 1992). Instead, the output of the decision-making needs to be the specific reward that won in the computation, and the fact that this is an attractor network provides a way to maintain the firing of the winning neurons so that they can continue firing to act as the goal for the motivated behaviour (Rolls 2014b, 2021b). To place this in the context of emotion: each pleasure associated with each type of reward (with examples in Table 1) must be different, and feel different, so that we know that we have been successful in obtaining the correct reward that was being sought. Of course, having different rewards on the same scale of magnitude is useful, so that the decision-making network weights the two inputs on the same reward value scale (Grabenhorst et al. 2010a).

The amygdala

The amygdala in rodents, in which the orbitofrontal cortex is much less developed than in primates (Passingham and Wise 2012; Passingham 2021), has been implicated in emotion-related responses such as conditioned autonomic responses, conditioned freezing behavior, cortical arousal, and learned incentive effects in fear conditioning in which an auditory tone is associated with foot shock (LeDoux 1995, 1996; Quirk et al. 1996). Synaptic modification in the amygdala is implicated in the learning of these types of response (Davis 1992, 1994; Davis et al. 1995; Rogan et al. 1997; LeDoux 2000a, b; Davis 2011). In macaques, bilateral lesions of the amygdala impair the learning of fear-potentiated startle to a visual cue (Antoniadis et al. 2009). In macaques, connections reach the lateral and basal amygdala from the inferior temporal visual cortex, the superior temporal auditory cortex, the cortex of the temporal pole, and the cortex in the superior temporal sulcus (Van Hoesen 1981; Amaral et al. 1992; Ghashghaei and Barbas 2002; Freese and Amaral 2009). The visual and auditory inputs from these cortical regions may be associated in the primate amygdala with primary reinforcers such as taste from the anterior insular primary taste cortex, and with touch and nociceptive input from the insular somatosensory cortex (Leonard et al. 1985; Rolls 2000c, 2014b; Kadohisa et al. 2005a, b; Wilson and Rolls 2005; Rolls et al. 2018). The outputs of the primate amygdala include connections to the hypothalamus, autonomic centres in the medulla oblongata, and ventral striatum (Heimer et al. 1982; Amaral et al. 1992; Freese and Amaral 2009; Rolls 2014b). In addition, the monkey amygdala has direct projections back to many areas of the temporal, orbitofrontal, and insular cortices from which it receives inputs (Amaral et al. 1992), including even V1 (Freese and Amaral 2005), and to the hippocampal system (Stefanacci et al. 1996). 
In addition, different fMRI responses of the macaque inferior temporal cortex to different face expressions were reduced after amygdala lesions (Hadj-Bouziane et al. 2012).

Although the primate amygdala thus has some of the same connections as the orbitofrontal cortex in monkeys (see Fig. 3) (Rolls 2014b, 2023d), in humans it has much less connectivity with the neocortex than the orbitofrontal cortex (Fig. 12) (Rolls et al. 2023a). In humans, the amygdala receives inputs primarily from auditory cortex A5, and semantic regions in the superior temporal gyrus and temporal pole regions; the piriform (olfactory) cortex; the lateral orbitofrontal cortex 47m; somatosensory cortex; the memory-related hippocampus, entorhinal cortex, perirhinal cortex, and parahippocampal cortex; and from the cholinergic nucleus basalis (Rolls et al. 2023a). The amygdala has effective connectivity to the hippocampus, entorhinal and perirhinal cortex; to the temporal pole; and to the lateral orbitofrontal cortex (Fig. 12) (Rolls et al. 2023a). Given the paucity of amygdalo-neocortical effective connectivity in humans, and the richness of its subcortical outputs in rodents (Quirk et al. 1996) and in humans (Klein-Flugge et al. 2022), it is proposed that the human amygdala is involved primarily in autonomic and conditioned responses via brainstem connectivity, rather than in reported (declarative) emotion (Rolls et al. 2023a).

Effective connectivity of the human amygdala: schematic diagram. The width of the arrows reflects the effective connectivity, with the size of the arrowheads reflecting the connectivity in each direction. The connectivity from most cortical areas (anterior temporal lobe STGa and TGd, STSda and A5, and piriform olfactory cortex) is only towards the amygdala. The connectivity with the hippocampal system (Hipp, entorhinal cortex EC, and perirhinal cortex PeEc) is in both directions. The sulci have been opened sufficiently to show the cortical regions in the sulci. The cortical regions are defined in the Human Connectome Project Multi-Modal Parcellation atlas (Glasser et al. 2016a; Huang et al. 2022). The abbreviations are provided elsewhere (Huang et al. 2022; Rolls et al. 2023a).

This new evidence about the connectivity of the human amygdala is consistent with the evidence that the amygdala is an evolutionarily old brain region, and appears to be overshadowed by the orbitofrontal cortex in humans (Rolls 2014b, 2019b, 2021b, c, 2023d; Rolls et al. 2020b). For example, the effects of damage to the human amygdala on emotion and emotional experience are much more subtle (Adolphs et al. 2005; Whalen and Phelps 2009; Delgado et al. 2011; Feinstein et al. 2011; Kennedy and Adolphs 2011; Damasio et al. 2013; LeDoux and Pine 2016; LeDoux et al. 2018; Rolls et al. 2023a) than those of damage to the orbitofrontal cortex (Rolls et al. 1994; Hornak et al. 1996, 2003, 2004; Camille et al. 2011; Fellows 2011; Rolls 2019b). Indeed, LeDoux and colleagues have emphasized the evidence that the human amygdala is rather little involved in subjective emotional experience (LeDoux 2012; LeDoux and Pine 2016; LeDoux and Brown 2017; LeDoux et al. 2018; LeDoux 2020; Taschereau-Dumouchel et al. 2022). That is in strong contrast to the orbitofrontal cortex, which is involved in subjective emotional experience, as shown by the evidence just cited. LeDoux’s conundrum is: if not the amygdala for subjective emotional experience, then what (LeDoux 2020)? My answer is: the human orbitofrontal cortex is the key brain region involved in subjective emotion (Rolls 2014b, 2019b, 2023d; Rolls et al. 2023a). Further, consistent with the poor rapid reversal learning shown by amygdala neurons (Sanghera et al. 1979; Rolls 2014b, 2021b) compared to orbitofrontal cortex neurons, it has been found that neuronal responses to reinforcement-predictive cues in classical conditioning update more rapidly in the macaque orbitofrontal cortex than in the amygdala, and activity in the orbitofrontal cortex but not the amygdala was modulated by recent reward history (Saez et al. 2017).

The problem of over-interpreting the role of the amygdala in emotion arose because rodent studies showed that some responses such as classically conditioned autonomic responses and freezing are elicited by the amygdala with its outputs to brainstem systems, and it was therefore inferred that the amygdala is involved in emotion in the way that it is experienced by humans (LeDoux 1995, 1996, 2000a; Quirk et al. 1996). It turned out later that humans with amygdala damage had similar response-related changes, but little impairment in subjectively experienced and reported emotions (Whalen and Phelps 2009; Delgado et al. 2011; Damasio et al. 2013; LeDoux and Pine 2016; LeDoux et al. 2018; Rolls et al. 2023a). It is therefore argued that it is important not to infer subjective reported emotional states in humans from responses such as conditioned autonomic and freezing responses (Rolls et al. 2023a). This dissociation of autonomic response systems from subjectively felt and reported emotions in humans is further evidence against the James-Lange theory of emotion and the related somatic marker hypothesis (Damasio 1994, 1996) (see Rolls (2014b) and the Appendix).

Although, as described further below, the amygdala may be overshadowed in humans by the orbitofrontal cortex, which has connectivity with the amygdala that could influence amygdala neuronal responses, it is of interest that in macaques some amygdala neurons not only respond to faces (Leonard et al. 1985), but also respond to socially relevant stimuli when macaques interact socially (Grabenhorst et al. 2019; Grabenhorst and Schultz 2021).

The anterior cingulate cortex

Based on cytoarchitecture, connectivity and function, the anterior cingulate cortex can be divided into a pregenual part (regions s32, a24, p24, p32, and d32 in Fig. 5) that is activated by rewards, and a supracallosal or dorsal part (regions a32pr, a24pr, 33pr, p32pr and p23pr in Fig. 6) activated by punishers and non-reward (Grabenhorst and Rolls 2011; Rolls et al. 2023d), with further background provided in Vogt (2009, 2019).

The human pregenual cingulate cortex is activated by many of the same rewards as the medial orbitofrontal cortex; and the supracallosal anterior cingulate cortex is activated by many of the same punishers, and by non-reward during reward reversal, as the lateral orbitofrontal cortex (Grabenhorst and Rolls 2011; Rolls 2019a, 2021b; Rolls et al. 2020c) (see e.g. Fig. 10a). Thus value representations reach the anterior cingulate cortex (ACC). To provide examples, pain activates an area typically 10–30 mm posterior to and above the most anterior (i.e. pregenual) part of the ACC, in what can be described as the supracallosal (or dorsal) anterior cingulate cortex (Vogt et al. 1996; Vogt and Sikes 2000; Rolls et al. 2003c). Pleasant touch activates the pregenual cingulate cortex (Rolls et al. 2003c; McCabe et al. 2008). Pleasant temperature applied to the hand also produces a linear activation proportional to its subjective pleasantness in the pregenual cingulate cortex (Rolls et al. 2008b). Somatosensory oral stimuli including viscosity and the pleasantness of the texture of fat in the mouth also activate the pregenual cingulate cortex (De Araujo and Rolls 2004; Grabenhorst et al. 2010b). Pleasant (sweet) taste also activates the pregenual cingulate cortex (de Araujo et al. 2003b; De Araujo and Rolls 2004), where attention to pleasantness (Grabenhorst and Rolls 2008) and cognition (Grabenhorst et al. 2008a) also enhance activations. Pleasant odours also activate the pregenual cingulate cortex (Rolls et al. 2003b), and these activations are modulated by word-level top-down cognitive inputs that influence the pleasantness of odours (De Araujo et al. 2005), and also by top-down inputs that produce selective attention to odour pleasantness (Rolls et al. 2008a). Unpleasant odours activate the supracallosal ACC (Rolls et al. 2003b). The pregenual cingulate cortex is also activated by the ‘taste’ of water when it is rewarding because of thirst (de Araujo et al. 2003c), by the flavour of food (Kringelbach et al. 2003), and by monetary reward (O'Doherty et al. 2001). Moreover, the outcome value and the expected value of monetary reward activate the pregenual cingulate cortex (Rolls et al. 2008c). Grabenhorst and Rolls (2011) show the brain sites of some of these activations.

In these investigations, the anterior cingulate activations were linearly related to the subjective pleasantness or unpleasantness of the stimuli, providing evidence that the anterior cingulate cortex represents value on a continuous scale, which is characteristic of what is found in the sending region, the orbitofrontal cortex (Rolls 2019a, b, d, 2021b). Moreover, evidence was found that there is a common scale of value in the pregenual cingulate cortex, with the affective pleasantness of taste stimuli and of thermal stimuli delivered to the hand producing identically scaled BOLD activations (Grabenhorst et al. 2010a).

We now consider how these value representations are used in the anterior cingulate cortex (ACC). We start with the evidence that primate orbitofrontal cortex neurons represent value, but not actions or behavioural responses (Thorpe et al. 1983; Padoa-Schioppa and Assad 2006; Grattan and Glimcher 2014; Rolls 2019b, d, 2023d), and therefore project value-related information but not action information to the anterior cingulate cortex. In contrast, there is evidence that the anterior cingulate cortex is involved in associating potential actions with the value of their outcomes, in order to select an action that will lead to the desired goal (Walton et al. 2003; Rushworth et al. 2007, 2011; Grabenhorst and Rolls 2011; Kolling et al. 2016; Morris et al. 2022). Indeed, consistent with its strong connections to motor areas (Morecraft and Tanji 2009), lesions of the ACC impair reward-guided action selection (Kennerley et al. 2006; Rudebeck et al. 2008); in humans the ACC is activated when information about outcomes guides choices (Walton et al. 2004; Morris et al. 2022); and neurons in the ACC encode information about actions, outcomes, and prediction errors for actions (Matsumoto et al. 2007; Luk and Wallis 2009; Kolling et al. 2016). For example, if information about three possible outcomes (different juice rewards) had to be associated with two different actions, information about both specific actions and specific outcomes was encoded by neurons in the ACC (Luk and Wallis 2009).

Given the evidence described above, and the connectivity shown in Fig. 7 (Rolls et al. 2023d), it is now proposed that the part of the anterior cingulate cortex involved in action–outcome learning is the supracallosal (dorsal) part, because this has effective connectivity to premotor cortical areas involved in actions with the body, including the mid-cingulate cortex. The route for value input to reach the supracallosal anterior cingulate cortex appears to be from the pregenual anterior cingulate cortex and medial orbitofrontal cortex (Fig. 7 (Rolls et al. 2023d)). The findings that aversive stimuli including pain activate the supracallosal anterior cingulate cortex may relate to the fact that actions to escape from or avoid aversive, unpleasant, stimuli often involve actions of the body, such as those involved in fight, flight or limb withdrawal (Rolls et al. 2023d). The supracallosal anterior cingulate cortex was also implicated in human action–outcome learning in a learning theory-based analysis (Morris et al. 2022).

Further, given the evidence described above, and the connectivity shown in Fig. 7 (Rolls et al. 2023d), it is now proposed that the pregenual anterior cingulate cortex, which receives from the medial orbitofrontal cortex and ventromedial prefrontal cortex (vmPFC), and connects to the hippocampal system (Rolls et al. 2023d), in part via the memory-related parts of the posterior cingulate cortex (Rolls et al. 2023i), provides a route for affective value to be incorporated into hippocampal system episodic memory (Rolls 2022b, 2023a, c), and also provides the information about goals that is required for navigation (Rolls 2022b, 2023c; Rolls et al. 2023d). Indeed, it has been pointed out that navigation typically involves multistep routes to reach a goal (Rolls 2021d, 2023c; Rolls et al. 2023d).

Further, the pregenual anterior cingulate cortex has connectivity to the septal region which has cholinergic neurons that project to the hippocampus (Fig. 7) (Rolls et al. 2023d), and this may contribute (Rolls 2022b) to the memory problems that can be present in humans with damage to the anterior cingulate cortex and vmPFC region (Bonnici and Maguire 2018; McCormick et al. 2018; Ciaramelli et al. 2019).