Abstract

We compared the ability of angry and neutral faces to drive oculomotor behaviour as a test of the widespread claim that emotional information is automatically prioritized when competing for attention. Participants were required to make a saccade to a colour singleton; photos of angry or neutral faces appeared amongst other objects within the array and were completely irrelevant to the task. Eye-tracking measures indicate that faces drive oculomotor behaviour in a bottom-up fashion; however, angry faces are no more likely to capture the eyes than neutral faces are. Saccade latencies suggest that capture occurs via reflexive saccades and that the outcome of competition between salient items (colour singletons and faces) may be subject to fluctuations in attentional control. Indeed, although angry and neutral faces captured the eyes reflexively on a portion of trials, participants successfully maintained goal-relevant oculomotor behaviour on a majority of trials. We outline potential cognitive and brain mechanisms underlying oculomotor capture by faces.



Human faces are highly relevant to our social lives, and it is crucial to detect them efficiently to produce adaptive responses. At the neurological level, faces benefit from cerebral networks especially tuned to their processing (Gauthier et al., 2000; Harel, Kravitz, & Baker, 2013; Puce, Allison, Asgari, Gore, & McCarthy, 1996), and neurons respond specifically to faces at early stages of visual processing (as early as 40 to 60 ms; Morand, Harvey, & Grosbras, 2014). At the behavioural level, we are able to detect faces within complex scenes extraordinarily fast, in about 100 ms (Crouzet, Kirchner, & Thorpe, 2010; Girard & Koenig-Robert, 2011). However, it is still unclear exactly what information is extracted from the face in this brief window or how that information contributes to detection. Here, we explore whether a critical aspect of faces—their emotional expressions—can facilitate detection and capture attention.

The physical properties of our visual system limit the amount of information in a scene that can be processed at once, and so selection must take place. This is achieved through attentional mechanisms, which select stimuli that will benefit from further processing while filtering out others, which will be ignored. Some very salient stimuli, such as flashes of light or colour singletons, can be selected automatically, even when observers have no intention of looking for them (Theeuwes, 1992; Theeuwes, Kramer, Hahn, & Irwin, 1998). In a recent study, we provided eye-tracking evidence that faces, which are much more complex, can also capture attention in a stimulus-driven manner, even if they are presented peripherally and are completely irrelevant to the task (Devue, Belopolsky, & Theeuwes, 2012; see also Laidlaw, Badiudeen, Zhu, & Kingstone, 2015; Weaver & Lauwereyns, 2011).

Building on the finding that faces do capture attention irrespective of one’s goals, one may then wonder whether the distinguishing features of a face, which determine its identity, age, sex, race, health, or emotional expression, can in themselves influence early selection processes. These characteristics are also highly relevant in guiding one’s actions and need to be detected and decoded fast. If attentional capture by specific facial characteristics is demonstrated, it would suggest that the features and configural information that constitute these characteristics can be processed preattentively, before selection takes place.

The primary goal of the current study was to assess whether a facial attribute that makes faces particularly important—namely, emotional expression—can affect early selection processes and so capture the eyes. Some previous studies suggest that emotional faces are prioritized over neutral ones (e.g., David, Laloyaux, Devue, & Cleeremans, 2006; Fox et al., 2000; Koster, Crombez, Verschuere, & De Houwer, 2004; Lundqvist, Bruce, & Öhman, 2015; Mogg, Holmes, Garner, & Bradley, 2008; Schoth, Godwin, Liversedge, & Liossi, 2015; van Honk, Tuiten, de Haan, van den Hout, & Stam, 2001; Vermeulen, Godefroid, & Mermillod, 2009). However, because these studies used manual response times as an indirect index of attentional capture, the attentional biases they report can be difficult to interpret. Moreover, the attentional stages at which these biases arise remain unclear. For example, faces are often presented centrally—that is, within people’s focus of attention—or in the context of a task that involves their processing (e.g., when the face or some particular aspect of it is the target of a search task), making their detection consistent with top-down goals. Such paradigms do not allow a genuine test of whether emotional expressions drive attentional selection in a bottom-up fashion. There is considerable evidence from several paradigms that, once attended, emotional faces are more difficult to disengage from than neutral faces (see Belopolsky, Devue, & Theeuwes, 2011; Fox, Russo, & Dutton, 2002; Georgiou et al., 2005; Koster et al., 2004; Schutter, de Haan, & van Honk, 2004). However, it is less clear whether emotional faces are more effective than neutral faces at driving early attentional selection processes when they are task-irrelevant and share no features with targets.

Here, we examined eye movements executed in the presence of irrelevant emotional faces in order to uncover the mechanisms supporting a potential selection bias in their favour. Can they capture the eyes more effectively than neutral faces under these circumstances? We modified the eye-tracking paradigm used by Devue et al. (2012) to compare the effect of irrelevant angry and neutral faces on oculomotor behaviour. In this paradigm, participants see a circular array of coloured dots and have to make a saccade towards a colour singleton, a simple task that relies on parallel search. Photographs of irrelevant objects (including faces) appear in a concentric circle inside the dot array; participants are instructed to ignore these (see Fig. 1). This paradigm remedies many of the problems inherent in previous research. Eye movements closely parallel attentional processes and so provide a more direct measure of attention than do manual response times. Moreover, the task allows us to examine the impact of faces when they are peripheral to fixation and entirely irrelevant to the task.

Example of search display. Participants had to make a saccade towards the circle with a unique colour while ignoring the objects. One critical object (an angry face, a neutral face, or a butterfly in the current experiment) was always present among the six objects. This example shows a “mismatch trial” where the critical object and the colour singleton are in different locations (for the greyscale version, please note that the coloured dots were isoluminant; in this example, the colour singleton is orange and sits by the tomato, whereas the five other dots are green). On “match trials,” critical objects appeared in the same segment as the colour singleton. Faces used in the experiment were taken from the Nim Stim Face Stimulus Set (www.macbrain.org) and are not shown here (Colour figure online)

In their original experiment, Devue and colleagues (2012) found that the mere presence of a face changed performance. Faces guided the eyes to their location – people reached the colour target faster and more accurately if it appeared in the same area as a face (called a “match” trial). Faces also captured the eyes – people made more mistakes and were slower to reach the target if the face was at an alternate location (called a “mismatch” trial). These effects were attenuated (but not eliminated) when faces were inverted, suggesting that both salient visual features (apparent in both upright and inverted faces) and configural information (apparent only in upright faces) play a role in oculomotor capture. In the present study, we used the same paradigm but manipulated the expression of the irrelevant faces. If facial expressions do affect early attentional selection processes in a bottom-up fashion, then emotional faces in the present experiment should capture and guide the eyes more effectively than neutral faces. We also assessed the role of low-level visual features in a second experiment presenting inverted faces.

How should emotional faces affect eye movements? One possibility is that oculomotor capture by facial expressions is unlikely because of the hierarchical architecture of the visual system, in which simple visual features are processed first and then integrated (Hubel & Wiesel, 1968; Riesenhuber & Poggio, 1999; Van Essen, Anderson, & Felleman, 1992). Further limitations are imposed on peripheral faces because of decreased acuity at greater eccentricities (e.g., Anstis, 1998) and a loss of information conveyed by high spatial frequencies (HSF) which are useful for extracting facial details (Johnson, 2005). In support of this view, there is no strong evidence that information related to identity, race, or gender (which are partly dependent on mid and high spatial frequencies; Smith, Volna, & Ewing, 2016; Vuilleumier, Armony, Driver, & Dolan, 2003) specifically draws attention to the location of a face. Although it seems possible to identify faces with minimal levels of attention (Reddy, Reddy, & Koch, 2006), neither one’s own face nor other personally familiar faces captures attention in a bottom-up fashion (Devue & Brédart, 2008; Devue, Laloyaux, Feyers, Theeuwes, & Brédart, 2009; Keyes & Dlugokencka, 2014; Laarni et al., 2000; Qian, Gao, & Wang, 2015). While familiar faces can clearly bias attention, they do so by delaying disengagement once the face has been attended (Devue & Brédart, 2008; Devue, Van der Stigchel, Brédart, & Theeuwes, 2009; Keyes & Dlugokencka, 2014). Face identification may require additional processing that engages attention after detection (Or & Wilson, 2010). Similarly, race and gender do not automatically attract attention. For instance, these facial aspects can be ignored when they appear in a flanker interference paradigm (Murray, Machado, & Knight, 2011) and arrays of faces need to be inspected serially in order to find a specific race target (Sun, Song, Bentin, Yang, & Zhao, 2013). 
In sum, facial aspects such as familiarity, identity, or race may be formed by a combination of visual information that is too complex to influence early selection processes.

Some evidence suggests that facial expressions may be similarly unable to capture attention. Hunt and colleagues (Hunt, Cooper, Hungr, & Kingstone, 2007) showed that irrelevant schematic angry faces are not advantaged over happy faces in visual search tasks. However, in this study (and some others described above), faces were schematic stimuli, which lack facial information that may normally be used for detection by the visual system. It is possible that any capture by emotional expression would be driven by visual information available in natural faces that is not present in schematic faces. It would therefore be important to determine whether these null effects extend to photographs of emotional faces. Furthermore, the face of interest was presented among sets of faces, but the ability of the visual system to process several faces simultaneously is known to be limited (Bindemann, Burton, & Jenkins, 2005).

While it may appear unlikely, there remain several reasons why emotional expressions may still drive attention in a bottom-up fashion, perhaps more so than other facial characteristics. First, emotional information, including facial expressions, is largely carried by low spatial frequencies (LSF; Vuilleumier et al., 2003; but see Deruelle & Fagot, 2005), which are accessible in the periphery. Indeed, the processing of arousing emotional stimuli (e.g., spiders and nudes; Carretié, Hinojosa, Lopez-Martin, & Tapia, 2007; and faces; Alorda, Serrano-Pedraza, Campos-Bueno, Sierra-Vazquez, & Montoya, 2007) is preserved in low-pass filtered images, that is, in images from which high spatial frequencies have been removed. Second, the facial characteristics that contribute to a given emotional expression are fairly consistent across individuals and potentially less variable than subtle facial deviations making up identity (and possibly even age or gender). The visual system could thus have encoded statistical regularities pertaining to facial expressions (Dakin & Watt, 2009; Smith, Cottrell, Gosselin, & Schyns, 2005), allowing them to attract attention in a bottom-up fashion. In support of this idea, some authors have shown that detection advantages for emotional expressions are based on low-level visual features that do not necessarily reflect evaluative or affective processes (Calvo & Nummenmaa, 2008; Horstmann, Lipp, & Becker, 2012; Nummenmaa & Calvo, 2015; Purcell & Stewart, 2010). Third, it is thought that emotional information can be processed very fast, and even be prioritized over neutral information, through specific neuronal pathways including the amygdala, which is primarily sensitive to LSF (Alorda et al., 2007; Öhman, 2005; Vuilleumier et al., 2003), and/or via cortical enhancement mechanisms (for reviews, see Carretié, 2014; Pourtois, Schettino, & Vuilleumier, 2013; Yiend, 2010). 
Prioritization of emotional faces may therefore occur at a preattentive level (Smilek, Frischen, Reynolds, Gerritsen, & Eastwood, 2007).

To test whether irrelevant angry faces capture attention, we examined the percentage of trials in which the first saccade was erroneously directed at an angry face instead of at the target (relative to neutral faces and butterflies, an animate control object). We also examined the effect of the spatial location of angry faces, neutral faces, and butterflies by comparing performance on match versus mismatch trials on four measures of oculomotor behaviour: correct saccade latency, saccade accuracy, search time, and number of saccades required to reach the target.

Latency measures also allowed us to address a second question, which arises from previous observations that, although neutral faces capture attention more than other objects, they do not do so consistently (e.g., faces captured attention on 13.12% of trials in Devue et al., 2012). A plausible explanation is that automatic shifts of covert attention towards faces result in a saccade only when insufficient oculomotor control is exerted during the trial (Awh, Belopolsky, & Theeuwes, 2012; Bindemann, Burton, Langton, Schweinberger, & Doherty, 2007); that is, in the absence of good oculomotor control, faces (and perhaps especially angry faces) are better able to compete with goal-relevant targets. Saccade latency is a robust indicator of oculomotor control, with longer latencies reflecting more time devoted to the preparation of a saccade (Morand, Grosbras, Caldara, & Harvey, 2010; Mort et al., 2003; Walker & McSorley, 2006; Walker, Walker, Husain, & Kennard, 2000). We thus expect mismatch trials in which faces capture attention to be characterised by shorter latencies than those in which the target is correctly reached, because faces (and perhaps especially angry faces) should compete with the target most successfully when control is poor. Moreover, on mismatch trials in which participants successfully reach the target, latencies should be longer (indicating greater control) when displays contain faces (and perhaps especially angry faces; see Schmidt, Belopolsky, & Theeuwes, 2012) than when they contain butterflies. Finally, correct saccades on trials where faces compete with the target (mismatch trials) should require more control, indexed by longer latencies, than correct saccades on match trials.

Experiment 1

Method

Participants

We estimated sample size with an a priori power analysis based on the within-subjects effect size (ηp² = .49) for the difference in oculomotor capture rates between upright neutral faces (i.e., 13.12% ± 5.94), inverted neutral faces (10.8% ± 4.33), and butterflies (the control stimulus; 8.5% ± 3.7) in our previous eye-tracking study (Devue et al., 2012). The calculation yielded a sample size of 13 participants to achieve power of .95. Because the effect of facial expression (that is, angry versus neutral faces) may be more subtle than the effect of face inversion (upright versus inverted faces), we aimed to double that number while allowing for data loss. We therefore recruited 29 participants (four men) at Victoria University of Wellington. They were between 18 and 45 years of age (M = 22.03 years, SD = 5.15) and had normal or corrected-to-normal vision, good colour vision, and no reported ocular abnormalities. They gave written informed consent prior to their inclusion in the study and received course credits or movie vouchers as compensation for their time. The study was approved by the Human Ethics Committee of Victoria University. Data collection for four participants could not be completed due to unexpected technical issues (N = 2) or because they elected not to complete the experiment (N = 2).
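Power-analysis software for repeated-measures designs (for example, G*Power) commonly takes the effect size as Cohen's f rather than ηp². The conversion is f = √(ηp² / (1 − ηp²)); the minimal Python sketch below applies it to the ηp² = .49 reported above. The final sample size also depends on inputs that are not reported here (such as the assumed correlation among repeated measures and any nonsphericity correction), so this sketch only illustrates the conversion step and does not attempt to reproduce the figure of 13 participants.

```python
import math


def eta_p2_to_cohens_f(eta_p2: float) -> float:
    """Convert partial eta squared to Cohen's f: f = sqrt(eta_p2 / (1 - eta_p2))."""
    if not 0.0 <= eta_p2 < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(eta_p2 / (1.0 - eta_p2))


if __name__ == "__main__":
    # Effect size from the previous study (Devue et al., 2012), as reported in the text.
    f = eta_p2_to_cohens_f(0.49)
    print(f"Cohen's f = {f:.2f}")  # Cohen's f = 0.98
```

An f of about 0.98 is far above the conventional "large" benchmark of 0.40, which is why so few participants were needed for the inversion contrast.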

Material and procedure

Participants were tested individually in a dimly lit room on an Acer personal computer with a 22-inch flat-screen monitor set to a resolution of 1024 × 768 pixels. A viewing distance of 60 cm was maintained by a chin rest. The left eye was tracked with an EyeLink 1000-plus desktop mount eye-tracking system at a 1000 Hz sampling rate. Calibration was performed before the experimental trials and halfway through the task using a nine-point grid. Stimulus presentation and eye-movement recording were controlled by E-Prime 2.0 software (Psychology Software Tools, Pittsburgh, PA).
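The display described below specifies stimulus sizes and eccentricities in degrees of visual angle, which depend on the 60 cm viewing distance. A minimal sketch of the standard conversion to centimetres on a flat screen follows. It assumes the eye is level with the screen centre, and the size formula treats each stimulus as if it were centred on the line of sight (a small approximation for peripheral items). Converting further to pixels would require the monitor's physical width, which is not reported, so that step is omitted.

```python
import math

VIEWING_DISTANCE_CM = 60.0  # chin-rest distance reported in the Method


def eccentricity_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Distance on the screen from fixation to a point at angle_deg of eccentricity."""
    return distance_cm * math.tan(math.radians(angle_deg))


def extent_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """On-screen extent of a stimulus subtending angle_deg, assumed centred on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


if __name__ == "__main__":
    # Values taken from the display description in the Method.
    print(f"dot ring radius:    {eccentricity_cm(8.42):.2f} cm")
    print(f"object ring radius: {eccentricity_cm(6.1):.2f} cm")
    print(f"dot diameter:       {extent_cm(1.03):.2f} cm")
    print(f"object box side:    {extent_cm(2.25):.2f} cm")
```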

Displays consisted of six coloured circles with a diameter of 1.03° each, presented on a white background at 8.42° of eccentricity on the circumference of a virtual circle. They were all the same colour (green or orange) except for one (orange or green), which varied randomly on each trial. Six greyscale objects, each fitting within a 2.25° × 2.25° space, were arranged in a concentric circle, each along the same radius as a coloured dot, but at 6.1° of eccentricity (see Fig. 1). One of the six objects was always a critical object of interest: an angry face, a neutral face, or a butterfly (the animate control condition). The five remaining objects were inanimate filler objects belonging to clearly distinct categories (toys, musical instruments, vegetables, clothing, drinkware, and domestic devices; eight exemplars per category). Participants were instructed to make an eye movement to the circle that was a unique colour and to ignore the objects. There was no mention of faces. Eight angry and eight neutral male face stimuli, photographed in a frontal position, were taken from the Nim Stim Face Stimulus Set (Models # 20, 21, 23, 24, 25, 34, 36 and 37; www.macbrain.org). Hair beyond the oval shape of the head was removed with image manipulation software (Gimp; www.gimp.org) so that all faces had about the same shape while retaining a natural appearance. Brightness and contrast of the faces were adjusted with Gimp to visually match each other, butterflies, and the remaining set of objects. Analyses confirmed that mean brightness values did not significantly differ between images of angry faces (M = 198.75, SD = 3.04), neutral faces (M = 197.68, SD = 5.1) and butterflies (M = 193.4, SD = 10.89), F(2, 21) = 1.25, p = .307, ηp² = .106. Mean contrast values, approximated by the standard deviation of the brightness values across all pixels of each image, did not differ significantly between the three types of images, F(2, 21) = 3.00, p = .07, ηp² = .222; the marginal effect was due to butterflies (M = 73.31, SD = 8.5) having slightly higher contrast than angry (M = 66.85, SD = 2.79) and neutral faces (M = 67.7, SD = 4.26), p = .05 and p = .064, respectively, which, importantly, did not differ from each other, p = .769.
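Brightness and contrast as defined above (the mean and standard deviation of an image's greyscale values), together with the between-image comparison reported as F(2, 21), can be sketched in plain Python. This is an illustrative reconstruction, not the authors' script: it assumes greyscale values on a 0–255 scale and uses the population standard deviation, since the text does not say which variant was used. With 8 images per category, k = 3 groups and N = 24 images give the reported df of (2, 21), and for a one-way between-groups design ηp² equals SS_between / (SS_between + SS_within).

```python
from statistics import fmean, pstdev


def brightness_and_contrast(pixels):
    """Mean greyscale value (brightness) and SD of greyscale values (contrast proxy)."""
    values = [float(v) for v in pixels]
    return fmean(values), pstdev(values)


def one_way_anova(groups):
    """Between-groups one-way ANOVA: returns (F, df_between, df_within, eta_squared)."""
    all_values = [v for group in groups for v in group]
    grand_mean = fmean(all_values)
    means = [fmean(group) for group in groups]
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, df_between, df_within, eta_squared
```

In use, each image's flattened greyscale values would be passed to `brightness_and_contrast`, and the per-image brightness (or contrast) values for angry faces, neutral faces, and butterflies would form the three groups given to `one_way_anova`.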

The target circle and critical object (angry face, neutral face, butterfly) each appeared equally often at one of their six possible locations, in all possible combinations (6 × 6 = 36), so that the location of a critical object was unrelated to that of the target circle. Each combination was repeated 10 times per critical object, producing 360 trials per critical object type. There were thus 1,080 trials in total, presented in a random order. For each critical object type, there were 60 trials in which its position matched that of the target circle; that is, they were aligned along the same radius of the virtual circle. On the remaining 300 trials, the positions mismatched.
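The fully crossed design above (6 target locations × 6 critical-object locations × 10 repetitions × 3 critical object types) can be generated and checked as follows. This is a sketch: the dictionary field names and the seeded shuffle are illustrative choices, not details taken from the original E-Prime script.

```python
import itertools
import random
from collections import Counter

N_LOCATIONS = 6
CRITICAL_TYPES = ("angry", "neutral", "butterfly")
REPETITIONS = 10


def build_trials(seed=None):
    """Fully crossed trial list, shuffled into a random order."""
    trials = [
        {
            "critical": critical,
            "target_loc": target_loc,
            "critical_loc": critical_loc,
            # Match = critical object on the same radius as the target circle.
            "match": target_loc == critical_loc,
        }
        for critical in CRITICAL_TYPES
        for target_loc, critical_loc in itertools.product(range(N_LOCATIONS), repeat=2)
        for _ in range(REPETITIONS)
    ]
    random.Random(seed).shuffle(trials)
    return trials


if __name__ == "__main__":
    trials = build_trials(seed=1)
    counts = Counter((t["critical"], t["match"]) for t in trials)
    print(len(trials))  # 1080
    print(counts[("angry", True)], counts[("angry", False)])  # 60 300
```

Only 6 of the 36 location pairings are matches, which is why each critical object type contributes 60 match and 300 mismatch trials.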

Each trial started with a drift correction screen triggered by a press of the space bar, followed by a jittered fixation cross with a duration between 1,100 and 1,500 ms, presented in black against a white background. The cross was followed by a 200 ms blank (white) screen before the presentation of the target display, which lasted 1,000 ms. Participants heard a high-toned beep if they moved their eyes away from the central area before the presentation of the display and a low-toned beep if they had not moved their eyes 600 ms after the display appeared.

Participants took breaks and received feedback on their mean correct response time every 54 trials. Before the experimental task, they performed 24 practice trials without critical objects.

Design and data analyses

Saccades were detected by the EyeLink built-in automatic algorithm with minimum velocity and acceleration thresholds of 30°/s and 8,000°/s², respectively. Saccade direction was scored using the 60° segment of arc corresponding to each possible target location; that is, a saccade was classified as correct if it fell anywhere within the segment extending 30° of arc to either side of the target. Trials with anticipatory (first saccade latency ≤ 80 ms after display onset) or late (first saccade latency ≥ 600 ms) eye movements were discarded.
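The segment-based scoring and latency exclusions above can be sketched as follows. The sketch assumes that the six possible target locations are centred at 0°, 60°, ..., 300° (measured counterclockwise with y pointing up; with screen coordinates, where y points down, the sign of dy would need to be flipped). It also assumes that saccade detection itself has already been done by the eye tracker, so only the first saccade's displacement and latency are needed.

```python
import math

N_LOCATIONS = 6
SEGMENT_WIDTH_DEG = 360.0 / N_LOCATIONS  # 60 degrees of arc per location, i.e. +/-30 around it
MIN_LATENCY_MS = 80   # latencies <= 80 ms are anticipatory
MAX_LATENCY_MS = 600  # latencies >= 600 ms are late

# Assumed layout: location i is centred on i * 60 degrees.
LOCATION_ANGLES_DEG = [i * SEGMENT_WIDTH_DEG for i in range(N_LOCATIONS)]


def direction_deg(dx: float, dy: float) -> float:
    """Direction of a saccade vector in degrees, in [0, 360)."""
    return math.degrees(math.atan2(dy, dx)) % 360.0


def angular_distance(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def segment_of(angle_deg: float) -> int:
    """Location whose +/-30 degree segment contains angle_deg (ties go to the lower index)."""
    return min(range(N_LOCATIONS),
               key=lambda i: angular_distance(angle_deg, LOCATION_ANGLES_DEG[i]))


def is_usable(latency_ms: float) -> bool:
    """Keep trials whose first saccade is neither anticipatory nor late."""
    return MIN_LATENCY_MS < latency_ms < MAX_LATENCY_MS


def is_correct(dx: float, dy: float, target_loc: int) -> bool:
    """True if the first saccade lands in the target's segment."""
    return segment_of(direction_deg(dx, dy)) == target_loc
```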

Oculomotor capture and fixation duration. First, we examined the percentage of trials in which participants looked first at the critical object instead of the target during mismatch trials, and fixation duration, that is, the time spent fixating these critical objects after they captured the eyes. We expected faces to capture the eyes more often than butterflies (Devue et al., 2012). Angry faces may or may not capture the eyes more often than neutral ones but may be fixated longer when capture does occur (Belopolsky et al., 2011).

Oculomotor behaviour. Second, we examined the effect of the spatial location of angry and neutral faces (as compared to the butterfly control object) on oculomotor behaviour. We analysed four different eye-movement measures (consistent with Devue et al., 2012): mean correct saccade latency (i.e., the time necessary for the eyes to start moving and proceed directly to the target after onset of the display), mean saccade accuracy (i.e., the percentage of trials in which the first saccade was directed to the target), mean search time (i.e., the time elapsed between the display onset and the moment the eyes reached the target for the first time, regardless of path), and mean number of saccades to reach the target. Differences between critical objects in their ability to attract attention would be indicated by an interaction between critical object type and matching conditions. These were followed up by planned comparisons to test the effect of matching on each of the three critical objects. If angry and neutral faces are prioritized, we expect better performance on match than on mismatch trials when the critical object is a face but not when it is a butterfly. For each of the four measures, we then directly compared the effect of angry and neutral faces on performance. Again, we report the critical interaction between facial expression (angry, neutral) and matching, which tests whether angry and neutral faces differ in their ability to attract attention. If angry faces are more potent than neutral faces, the impact of matching should be stronger for angry faces than for neutral ones.
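The planned comparisons reported later as t(21) are consistent with paired-samples t-tests on participants' condition means, and in a 2 × 2 within-subjects design the F(1, n − 1) for the interaction equals the squared paired t computed on each participant's difference of differences. A minimal sketch of both, assuming one mean per participant per cell; p values, which require the t distribution, are left to a statistics library.

```python
import math
from statistics import fmean, stdev


def paired_t(first, second):
    """Paired-samples t for (second - first); returns (t, df)."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length samples with at least 2 participants")
    diffs = [b - a for a, b in zip(first, second)]
    se = stdev(diffs) / math.sqrt(len(diffs))
    return fmean(diffs) / se, len(diffs) - 1


def matching_interaction_2x2(angry_match, angry_mismatch, neutral_match, neutral_mismatch):
    """Expression x matching interaction as F(1, n - 1) from the difference of matching effects."""
    angry_effect = [mm - m for m, mm in zip(angry_match, angry_mismatch)]
    neutral_effect = [mm - m for m, mm in zip(neutral_match, neutral_mismatch)]
    t, df = paired_t(neutral_effect, angry_effect)
    return t * t, (1, df)
```

Expressing the interaction as a contrast on matching effects makes the hypothesis explicit: a larger mismatch cost for angry than for neutral faces would yield a positive t and a large F.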

Oculomotor control. In a third set of analyses, we examined the impact of faces on oculomotor control, as reflected by saccade latency. We calculated mean latency for each saccade outcome (correct or incorrect) in each matching condition and for each critical object type separately. For any given match trial, there are two possible outcomes: correct saccade to the target (i.e., “correct match”) or incorrect saccade to a nontarget circle/noncritical object (i.e., “error match”). For any given mismatch trial, there are three possible outcomes: correct saccade to the target (i.e., “correct mismatch”), incorrect saccade to a mismatching critical object (i.e., capture trials), or incorrect saccade to a nontarget circle/noncritical object (i.e., “error mismatch”). Combining matching conditions and performance thus gives five possible saccadic outcomes in total for each critical object type. Note that this analysis partly overlaps with the analyses of correct saccade latency reported above, but it targets a different question—specifically, whether saccade latency is a predictor of saccade outcome. Overall, we expected correct saccades to have longer latencies than incorrect saccades. Next, we followed up by testing the simple effect of each critical object for each of the five saccade outcome/matching combinations.
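The five outcome/matching categories above follow from comparing the segment of the first saccade with the target and critical-object locations. The sketch below assumes that each usable trial is stored as a dictionary with hypothetical keys `target_loc`, `critical_loc`, `saccade_segment`, and `latency_ms`. It also computes the capture rate used in the first set of analyses, that is, the percentage of mismatch trials whose first saccade went to the critical object.

```python
from collections import defaultdict
from statistics import fmean


def classify_outcome(target_loc: int, critical_loc: int, saccade_segment: int) -> str:
    """Assign a first saccade to one of the five outcome/matching categories."""
    if target_loc == critical_loc:  # match trial: critical object shares the target's segment
        return "correct_match" if saccade_segment == target_loc else "error_match"
    if saccade_segment == target_loc:
        return "correct_mismatch"
    if saccade_segment == critical_loc:
        return "capture"
    return "error_mismatch"


def summarise(trials):
    """Capture rate (% of mismatch trials) and mean latency per outcome."""
    latencies = defaultdict(list)
    n_mismatch = n_capture = 0
    for t in trials:
        outcome = classify_outcome(t["target_loc"], t["critical_loc"], t["saccade_segment"])
        latencies[outcome].append(t["latency_ms"])
        if t["target_loc"] != t["critical_loc"]:
            n_mismatch += 1
            if outcome == "capture":
                n_capture += 1
    capture_pct = 100.0 * n_capture / n_mismatch if n_mismatch else float("nan")
    return capture_pct, {k: fmean(v) for k, v in latencies.items()}
```

Running `summarise` separately for each participant and critical object type would yield the cell means entered into the 5 × 3 ANOVA.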

We made three main predictions. First, if instances of oculomotor capture by faces are due to lapses in oculomotor control, we expected the associated latencies to be shorter than latencies of correct saccades. Second, if faces trigger automatic shifts of covert attention in their direction, correct saccades in the presence of mismatching faces should be more difficult to program and require more control than in the presence of a mismatching butterfly: This would be reflected by longer latencies in the former case than in the latter. Third, on match trials, faces and the target are in the same segment and do not compete for attention, so these trials should require less control than mismatch trials. We thus expected shorter latencies on match trials than on mismatch trials containing faces. Similar logic holds for the comparison of angry to neutral faces.

In all analyses, degrees of freedom are adjusted (Greenhouse–Geisser) for sphericity violation where necessary.

Results and discussion

We discarded data of two participants who had less than 65% (i.e., 2 SD below average) usable trials (i.e., neither anticipatory nor late saccades), and of one other with low accuracy (i.e., 27.7%, 2 SD below average). The final sample comprised 22 participants (21 female, one male; M age = 22.55 years, SD = 5.79) who had 94.55% usable trials on average.
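The exclusion rule above flags participants whose percentage of usable trials (or accuracy) falls more than 2 SD below the group mean. A sketch, assuming the sample SD (n − 1 denominator) and a dictionary mapping hypothetical participant IDs to percentages. The text does not state whether the cutoff was recomputed after other participants were excluded, so the sketch applies it once to whatever scores it is given.

```python
from statistics import fmean, stdev


def flag_low_outliers(scores, n_sd=2.0):
    """Return (IDs more than n_sd SDs below the mean, cutoff value)."""
    values = list(scores.values())
    cutoff = fmean(values) - n_sd * stdev(values)
    return {pid for pid, value in scores.items() if value < cutoff}, cutoff
```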

Oculomotor capture and fixation duration

We performed a one-way analysis of variance (ANOVA) with critical object type (angry face, neutral face, butterfly) as a within-subjects factor on the mean percentage of oculomotor capture trials and associated fixation durations. Results, visible on the left panels of Fig. 2, show that critical object type significantly affects oculomotor capture, F(1.124, 23.597) = 11.421, p = .002, ηp² = .352. Both angry (M = 13.17%, SD = 5.56) and neutral faces (M = 14.34%, SD = 6.05) captured the eyes more frequently than butterflies (M = 9.45%, SD = 3.34), t(21) = 3.084, p = .006, and t(21) = 3.63, p = .002, respectively; and, surprisingly, neutral faces did so more often than angry ones, t(21) = 2.961, p = .007. The same ANOVA on mean fixation duration following oculomotor capture was not significant, F(2, 42) = .269, p = .765, ηp² = .013, indicating that erroneously fixated faces and butterflies were fixated for similar amounts of time.
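Because ηp² = SS_effect / (SS_effect + SS_error), it can be recovered from any reported F and its degrees of freedom as ηp² = (F × df_effect) / (F × df_effect + df_error). The Greenhouse–Geisser correction multiplies both df by the same ε, so the corrected df give the same value. The short check below reproduces two values reported in this section.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # Oculomotor capture ANOVA (Greenhouse-Geisser corrected df); reported value .352
    print(round(partial_eta_squared(11.421, 1.124, 23.597), 3))  # 0.352
    # Correct-saccade-latency interaction; reported value .167
    print(round(partial_eta_squared(4.203, 2, 42), 3))  # 0.167
```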

Oculomotor behaviour

We conducted four 3 × 2 repeated measures ANOVAs with critical object type (angry face, neutral face, butterfly) and matching (match, mismatch trials) as within-subjects factors. Results are presented on the left panels of Fig. 3. For correct saccade latencies, the predicted interaction between matching and critical object type was significant, F(2, 42) = 4.203, p = .022, ηp² = .167. Matching had a significant effect on latency when the critical object was an angry face, t(21) = 5.649, p < .001, or a neutral face, t(21) = 2.546, p = .019, but not when it was a butterfly, t(21) = 1.45, p = .162. In the follow-up 2 × 2 repeated measures ANOVA with facial expression (angry, neutral) and matching (match, mismatch trials) as within-subjects factors, the interaction between facial expression and matching was not significant, F(1, 21) = 2.27, p = .147, ηp² = .098, indicating that the two facial expressions affected saccade latency in a similar fashion.

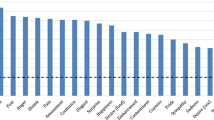

Effects of spatial location (match/mismatch) of critical objects in Experiment 1 (upright faces, left panels) and Experiment 2 (inverted faces, right panels). Mean correct saccade latency (a), mean accuracy (b), mean search time (c) and mean number of saccades to reach the target (d), for each critical object type included in the display (angry face, neutral face, or butterfly). Error bars represent 95% confidence intervals for within-subjects comparisons (Morey, 2008)
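Within-subjects error bars of the kind cited in this caption (Morey, 2008) are usually computed by removing each participant's overall mean, adding back the grand mean, and inflating the resulting standard error by √(J / (J − 1)) for J conditions. Below is a pure-Python sketch, assuming a complete participants × conditions table of means. The critical t value is passed in rather than computed, to avoid depending on a statistics library; for 22 participants (df = 21), t.975 is approximately 2.080.

```python
import math
from statistics import fmean, stdev


def within_subject_ci(data, t_crit):
    """Condition means and CI half-widths (Cousineau normalisation with Morey's correction).

    data: list of rows, one per participant, each holding one mean per condition.
    """
    n_conditions = len(data[0])
    grand_mean = fmean([v for row in data for v in row])
    # Remove between-participant variability: y_ij - mean_i + grand mean.
    normalised = [[v - fmean(row) + grand_mean for v in row] for row in data]
    correction = math.sqrt(n_conditions / (n_conditions - 1))
    means, half_widths = [], []
    for j in range(n_conditions):
        column = [row[j] for row in normalised]
        se = stdev(column) / math.sqrt(len(column))
        means.append(fmean(column))
        half_widths.append(t_crit * correction * se)
    return means, half_widths
```

Normalisation leaves the condition means unchanged; it only removes the between-participant variance that is irrelevant to within-subjects comparisons.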

For saccade accuracy, the predicted interaction between matching and critical object type was marginally significant, F(1.576, 33.089) = 2.76, p = .089, ηp² = .116. Participants were more accurate on match than on mismatch trials when the critical objects were angry and neutral faces, t(21) = 2.932, p = .008, and t(21) = 4.166, p < .001, respectively, but not when they were butterflies, t(21) = .903, p = .377. The follow-up 2 × 2 ANOVA testing the interaction between facial expression (angry, neutral) and matching was not significant, F(1, 21) = .541, p = .47, ηp² = .025, indicating that the presence of angry and neutral faces impacted accuracy similarly.

For search time, the interaction between matching and critical object type was significant, F(1.413, 29.682) = 3.983, p = .042, ηp² = .159. Matching affected speed when critical objects were angry, t(21) = 4.021, p = .001, and neutral faces, t(21) = 3.942, p = .001, but not when they were butterflies, t(21) = 1.2, p = .243. A follow-up assessment of the interaction between facial expression and matching showed that both facial expressions affected search time in a similar way, F(1, 21) = .468, p = .502, ηp² = .022.

Finally, for the mean number of saccades to reach the target, the predicted interaction between matching and critical object type was marginally significant, F(2, 42) = 2.957, p = .063, ηp² = .123. Participants made fewer saccades to reach the target on match than on mismatch trials when critical objects were angry and neutral faces, t(21) = 2.647, p = .015, and t(21) = 4.395, p < .001, respectively, but not when they were butterflies, t(21) = .77, p = .45. The interaction between facial expression and matching, as assessed in a 2 × 2 follow-up analysis, was not significant, F(1, 21) = 1.347, p = .259, ηp² = .06, suggesting that angry and neutral faces both affected search in a similar way.

In sum, this experiment replicates previous findings that irrelevant faces drive the eyes to their location (Devue et al., 2012), and these effects were observed across multiple eye-movement measures. Importantly, both angry and neutral faces captured the eyes more often than butterflies, but angry faces did not have a greater impact than neutral faces on any measure. Moreover, once faces had captured the eyes, they did not hold them any longer than butterflies did.

Oculomotor control

We performed a 5 × 3 repeated measures ANOVA with saccade outcome (correct match, correct mismatch, capture, error mismatch, error match) and critical object type (angry face, neutral face, butterfly) as within-subjects factors. Results are shown in Fig. 4. The analysis showed that saccade outcome was significantly associated with saccade latency, F(2.481, 52.098) = 170.32, p < .001, ηp² = .89. Pairwise comparisons (collapsed across critical object type) with Bonferroni corrections (adjusted p values are reported; i.e., original p × 10 paired comparisons of the five possible outcomes) showed significantly longer latencies for correct saccades (correct match: M = 218 ms, SD = 36; correct mismatch: M = 223 ms, SD = 37) than for the three types of incorrect saccades (capture: M = 199 ms, SD = 34; error mismatch: M = 199 ms, SD = 36; error match: M = 198 ms, SD = 36), all ps < .001. Incorrect saccade latencies did not differ from each other, all ps > .999. For correct saccades, latencies were shorter on match trials than on mismatch trials, p = .001. Although there was a main effect of critical object type, F(1.553, 32.615) = 4.22, p = .032, ηp² = .167, the interaction between saccade outcome and critical object type was not significant, F(8, 168) = 1.11, p = .361, ηp² = .05. This does not fit with the oculomotor behaviour measures above, which showed that faces affect oculomotor behaviour whereas butterflies do not. The discrepancy is likely due to the large differences in latency between correct and incorrect saccades, combined with a highly consistent pattern of latencies for error saccades across critical object conditions, which may wash out any subtle differences across critical object types.
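The Bonferroni adjustment described above multiplies each uncorrected p value by the number of comparisons; with five outcomes there are C(5, 2) = 10 pairwise comparisons. Statistical packages typically cap adjusted values at 1, which would be consistent with the report that all incorrect-saccade comparisons had p > .999. That capping is an assumption about the software used, not something the text states.

```python
from itertools import combinations

OUTCOMES = ("correct_match", "correct_mismatch", "capture", "error_mismatch", "error_match")
PAIRS = list(combinations(OUTCOMES, 2))  # 10 pairwise comparisons


def bonferroni(p_values, n_comparisons=None):
    """Bonferroni-adjusted p values: p * m, capped at 1."""
    m = len(p_values) if n_comparisons is None else n_comparisons
    return [min(1.0, p * m) for p in p_values]
```

The same function with `n_comparisons=3` covers the follow-up comparisons among the three critical object types reported in the next paragraph.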

Fig. 4 Saccade latency as a function of critical object type for the five possible matching/outcome combinations in Experiment 1 (top panel) and Experiment 2 (bottom panel). Error bars represent 95% confidence intervals for within-subjects comparisons (Morey, 2008). ** p ≤ .001; n.s. = nonsignificant difference between pairs of outcomes, p > .05. Note that correct saccades took significantly longer to be initiated than incorrect ones, even in the presence of matching faces.
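Within-subjects error bars of the kind cited in the caption are commonly computed by removing each participant's overall level (Cousineau normalisation) and then applying Morey's (2008) correction factor √(M / (M − 1)), where M is the number of within-subject conditions. The sketch below assumes a complete participants × conditions table with at least two conditions and a critical t value supplied by the caller; the function name is hypothetical and this is not necessarily the exact procedure used to draw the figure.

```python
import math
from statistics import mean, stdev


def within_subject_ci_halfwidths(scores, t_crit):
    """Cousineau-Morey within-subject confidence-interval half-widths.

    scores: one row per participant, one column per condition (no missing cells).
    t_crit: critical t value for the desired level with n - 1 df, supplied by the caller.
    """
    n_participants = len(scores)
    n_conditions = len(scores[0])
    grand_mean = mean(value for row in scores for value in row)
    # Cousineau normalisation: remove each participant's overall level.
    normalised = [[value - mean(row) + grand_mean for value in row] for row in scores]
    # Morey (2008) correction for the bias introduced by the normalisation.
    correction = math.sqrt(n_conditions / (n_conditions - 1))
    halfwidths = []
    for j in range(n_conditions):
        column = [row[j] for row in normalised]
        standard_error = stdev(column) / math.sqrt(n_participants)
        halfwidths.append(t_crit * correction * standard_error)
    return halfwidths


# Participants differ only in overall level here, so the within-subject bars collapse to 0.
print(within_subject_ci_halfwidths([[1, 3], [2, 4], [3, 5]], t_crit=2.0))
```

The example illustrates the design choice: stable between-participant differences, which are irrelevant to within-subjects comparisons, do not widen the intervals.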

To assess the effect of critical object type in each situation more precisely, we conducted five follow-up one-way repeated measures ANOVAs testing the simple effect of critical object type (angry face, neutral face, butterfly) in each of the five possible saccade outcome/matching combinations. For all three types of incorrect trials (i.e., capture, error mismatch, error match), there was no significant effect of critical object type on saccade latency, F(2, 42) = 1.99, p = .148, ηp² = .087; F(1.484, 31.167) = 1.82, p = .185, ηp² = .08; and F(2, 42) = .038, p = .963, ηp² = .002, respectively. For correct saccades on mismatch trials, latency was also not significantly influenced by critical object type, F(2, 42) = .136, p = .873, ηp² = .006. By contrast, on match trials there was a significant effect of critical object type, F(2, 42) = 5.46, p = .008, ηp² = .206, because angry faces elicited shorter latencies than butterflies, t(21) = 3.59, p = .005 (Bonferroni-adjusted p values are reported; i.e., original p × 3). Latencies of saccades towards neutral faces had intermediate values and did not differ from latencies of saccades towards either angry faces or butterflies, both t(21) = 1.59, p = .379.
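The Bonferroni adjustments described here multiply each raw p value by the number of comparisons: 10 for all pairs of the five saccade outcomes, 3 for all pairs of the three critical object types. A minimal sketch (the helper name is hypothetical) also shows why some adjusted values are reported as p > .999: the product is capped at 1.

```python
from itertools import combinations


def bonferroni_adjust(p_values, n_comparisons):
    """Multiply each raw p value by the number of comparisons, capping the result at 1."""
    return [min(1.0, p * n_comparisons) for p in p_values]


outcomes = ["correct match", "correct mismatch", "capture", "error mismatch", "error match"]
pairs = list(combinations(outcomes, 2))
print(len(pairs))  # 10 pairwise comparisons among the five outcomes
print(len(list(combinations(["angry face", "neutral face", "butterfly"], 2))))  # 3
print(bonferroni_adjust([0.2], len(pairs)))  # [1.0], reported as p > .999
```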

This series of analyses suggests that, unlike correct saccades, incorrect saccades occur when insufficient control is exerted to maintain the task goal. All incorrect saccades, including saccades captured by critical objects, had comparably short latencies. Follow-up analyses suggest that the shorter latencies on correct match trials than on correct mismatch trials are driven by the presence of faces (significantly so for angry faces) near the target on match trials.

Experiment 2

The aim of the second experiment was to evaluate the role of low-level visual features of angry and neutral faces in driving oculomotor behaviour. Inversion makes it difficult to discriminate various facial aspects, including facial expression, whereas it has little effect on the processing of individual features. Inversion is thought to disrupt the holistic or configural processing that conveys a face's meaning (e.g., Freire, Lee, & Symons, 2000). Hence, if the effects of faces on oculomotor behaviour are driven by configural information, inversion should reduce attentional capture (see Devue et al., 2012). Further, if some low-level visual features of neutral faces are more potent than those of angry faces (as suggested by the slightly more frequent capture by neutral than angry faces in Experiment 1), we should observe the same pattern here, that is, stronger oculomotor capture by neutral than by angry faces on mismatch trials. In contrast, if the small difference in capture between angry and neutral faces is due to their different affective meaning, inversion should reduce or even abolish that difference.

Method

We recruited 26 new participants from the Victoria University of Wellington community. They were aged between 18 and 30 years and reported normal or corrected-to-normal vision. They received course credit or movie or shopping vouchers for their participation. Procedure and stimuli were exactly the same as in Experiment 1, except that angry and neutral faces were now inverted by flipping the images about the horizontal axis.
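Flipping an image about its horizontal axis is not the same operation as rotating it by 180°: the flip reverses top and bottom but keeps left and right in place. A minimal sketch on a toy pixel grid (plain Python lists stand in for an image; both helper names are hypothetical) makes the difference concrete.

```python
def invert_vertically(image):
    """Flip an image about its horizontal axis by reversing the order of pixel rows.

    image: a list of rows (top row first); each row is a list of pixel values.
    Left-right order within each row is unchanged.
    """
    return [list(row) for row in reversed(image)]


def rotate_180(image):
    """For comparison: a 180-degree rotation also mirrors each row left-right."""
    return [list(reversed(row)) for row in reversed(image)]


face = [[1, 2, 3],
        [4, 5, 6]]
print(invert_vertically(face))  # [[4, 5, 6], [1, 2, 3]]
print(rotate_180(face))         # [[6, 5, 4], [3, 2, 1]]
```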

Data analyses

We performed the same series of analyses as in Experiment 1, focusing first on instances of oculomotor capture and associated fixation durations; second, on the effect of the spatial location of the critical objects (match/mismatch) on oculomotor behaviour; and third, on the association between performance and saccade latency, as a proxy for the oculomotor control exerted in the presence of different critical object types. In addition, we formally compared capture rates for the different types of faces across experiments.

Results and discussion

We discarded the data of three participants: one who had only 77.6% usable trials (i.e., 3 SD below the mean number of usable trials), one because of technical difficulties during the experiment, and one who elected not to complete the experiment. The final sample comprised 23 participants (18 female, 5 male; M age = 21.13 years, SD = 4.19), who had 94.9% usable trials on average.
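The exclusion rule above can be expressed as a simple cutoff at the group mean minus 3 SD. A minimal sketch, assuming that the mean and sample SD are computed over the whole sample including the participant being evaluated and that a participant is flagged only when strictly below the cutoff (neither detail is stated in the text); the function name and example proportions are hypothetical.

```python
from statistics import mean, stdev


def flag_low_usable(proportions, n_sd=3.0):
    """Return indices of participants whose usable-trial proportion lies more than
    n_sd sample standard deviations below the group mean.

    Assumption: mean and SD are computed over the whole sample, including the
    participant being evaluated.
    """
    cutoff = mean(proportions) - n_sd * stdev(proportions)
    return [i for i, p in enumerate(proportions) if p < cutoff]


# Hypothetical sample: 25 participants at 95% usable trials and one at 77.6%.
print(flag_low_usable([0.95] * 25 + [0.776]))  # [25]
```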

Oculomotor capture and fixation duration

Results are presented in the right panels of Fig. 3. The one-way ANOVA with critical object type as a within-subjects factor conducted on the percentage of capture trials was significant, F(2, 44) = 4.52, p = .016, ηp² = .171. Neutral faces captured the eyes more often (M = 9.44%, SD = 3.13) than angry faces (M = 8.19%, SD = 3.92), t(22) = 2.478, p = .021, and more often than butterflies (M = 8.16%, SD = 2.43), t(22) = 2.74, p = .012, whereas angry faces and butterflies did not differ, t(22) = .958, p = .96. In line with Experiment 1, the same ANOVA on the mean fixation duration of the critical object after capture was not significant, F(2, 44) = 1.047, p = .36, ηp² = .045.

Comparison of oculomotor capture in the two experiments

The analyses above indicate that neutral faces capture attention more than angry faces, even when inverted. To compare the magnitude of the capture effect with that for upright faces, we conducted a 2 × 2 mixed-design ANOVA on the mean percentage of oculomotor capture trials, with expression (angry, neutral) as a within-subjects factor and experiment (upright, inverted) as a between-subjects factor. A main effect of experiment, F(1, 43) = 12.636, p = .001, ηp² = .227, confirms that capture was strongly attenuated when faces were inverted (M = 8.8%, SD = 3.57) relative to upright faces (M = 13.76%, SD = 5.77), replicating previous findings in the same paradigm (Devue et al., 2012). There was also a main effect of expression, F(1, 43) = 14.064, p = .001, ηp² = .246, because neutral faces (M = 11.83%, SD = 5.34) captured the eyes more often than angry ones (M = 10.63%, SD = 5.37). Importantly, the interaction between experiment and expression was not significant, F(1, 43) = .02, p = .889, ηp² = 0, indicating that this unexpected pattern of greater capture by neutral than angry faces was consistent across experiments and survived a significant decrement in capture due to inversion.

Oculomotor behaviour

Results are shown in the right panels of Fig. 2. The series of 3 × 2 repeated measures ANOVAs with critical object type (angry face, neutral face, butterfly) and matching as within-subjects factors, conducted on correct saccade latency, saccade accuracy, total search time, and mean number of saccades needed to reach the target, showed that none of the critical interactions between critical object type and matching were significant, all Fs < 1.3. This indicates that inverted faces did not significantly affect oculomotor behaviour.

Overall, it seems that inversion dramatically reduces but does not completely abolish attentional capture by faces.

Oculomotor control

Results are presented in the bottom panel of Fig. 4. We performed a 5 × 3 repeated measures ANOVA on saccade latencies with saccade outcome (correct match, correct mismatch, critical object capture, error mismatch, error match) and critical object type (angry face, neutral face, butterfly) as within-subjects factors. As in Experiment 1, saccade latencies were significantly linked to saccade outcome, F(1.453, 31.962) = 52.088, p < .001, ηp² = .703. Correct saccades (M correctMatch = 228 ms, SD = 32; M correctMismatch = 229 ms, SD = 32) had longer latencies than all incorrect ones (M capture = 204 ms, SD = 28.5; M errorMismatch = 203 ms, SD = 25; M errorMatch = 200.5 ms, SD = 24), all ps < .001. Again, incorrect saccades, including instances of capture, all had similar latencies, ps > .85. However, unlike in Experiment 1, correct saccades had similar latencies on match and mismatch trials, p > .999. There was no significant effect of critical object type, F(1.335, 29.366) = .078, p = .925, ηp² = .004, and no significant interaction, F(4.091, 90.003) = .893, p = .524, ηp² = .039.
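The non-integer degrees of freedom reported here are consistent with a sphericity correction (for example Greenhouse-Geisser or Huynh-Feldt; the text does not say which), which multiplies both the effect and error df by the same ε. A minimal sketch, with hypothetical helper names, backs ε out of the reported numerator df and reproduces the error df for the five-level saccade outcome factor (22 participants in Experiment 1, 23 in Experiment 2).

```python
def corrected_df(epsilon, n_levels, n_participants):
    """Sphericity-corrected df for a repeated-measures main effect.

    Uncorrected df are (k - 1) and (k - 1)(n - 1); the correction multiplies
    both by the same epsilon.
    """
    df_effect = n_levels - 1
    df_error = df_effect * (n_participants - 1)
    return epsilon * df_effect, epsilon * df_error


def implied_epsilon(reported_df_effect, n_levels):
    """Back out epsilon from a reported, corrected numerator df."""
    return reported_df_effect / (n_levels - 1)


# Saccade outcome (five levels): reported F(2.481, 52.098) and F(1.453, 31.962)
print(corrected_df(implied_epsilon(2.481, 5), 5, 22))  # approx. (2.481, 52.101)
print(corrected_df(implied_epsilon(1.453, 5), 5, 23))  # approx. (1.453, 31.966)
```

The small differences in the error df reflect rounding of the reported numerator df.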

This experiment again suggests that successful saccades require more control than incorrect ones. Like instances of capture by upright faces and incorrect saccades to other objects, instances of capture by inverted faces appear to be the product of reflexive saccades. The presence of an inverted face within the display (matching or mismatching) does not affect the amount of control exerted to correctly program a saccade towards the target, showing that, unlike upright faces, inverted faces have no facilitatory effect when near the target.

General discussion

Using five different eye-movement measures, we replicated our previous finding, obtained with the same paradigm, that irrelevant faces capture the eyes in a bottom-up fashion (Devue et al., 2012). However, angry and neutral faces did not differ on any measure except one, and on that measure the difference went in the opposite direction to that predicted. Both types of faces captured the first saccade more often than butterflies, but, surprisingly, neutral faces captured the eyes slightly more often than angry faces.

The second experiment, with inverted faces, shows a drastic attenuation of the effect of faces on all measures, confirming the important contribution of the configural aspects that make upright faces meaningful (Devue et al., 2012). Oculomotor capture must nonetheless be partly driven by low-level visual features rather than by affective content (or lack thereof), because inverted neutral faces still captured the eyes significantly more often than either butterflies or angry faces (see also Bindemann & Burton, 2008; Laidlaw et al., 2015). Neutral faces may be more potent than angry ones because they contain more canonical or diagnostic facial features (Guo & Shaw, 2015; Nestor, Vettel, & Tarr, 2013). Sets of facial features that are seen more frequently are encoded more robustly and could therefore be more diagnostic for face detection (Nestor et al., 2013). Stronger capture by neutral than by angry faces might also suggest avoidance of angry faces; however, this interpretation is inconsistent with all the other oculomotor measures. Alternatively, despite our efforts to balance low-level features, some artefact might remain in the specific stimuli we used, making neutral faces slightly more salient than angry ones irrespective of their orientation. Importantly, regardless of the underlying mechanism, the fact that we detected a small difference in capture between neutral and angry faces makes it unlikely that the absence of a difference on the other measures reflects low power to detect effects of facial expression.

The equivalence of angry and neutral faces as distractors may seem at odds with the common claim that emotional stimuli capture attention. However, the current findings add to a growing body of evidence with faces (Fox et al., 2000; Fox, Russo, Bowles, & Dutton, 2001; Horstmann et al., 2012; Horstmann & Becker, 2008; Nummenmaa & Calvo, 2015), words (Calvo & Eysenck, 2008; Georgiou et al., 2005), fear-related stimuli (Devue, Belopolsky, & Theeuwes, 2011; Soares, Esteves, & Flykt, 2009; Vromen, Lipp, & Remington, 2015), or complex scenes (Grimshaw, Kranz, Carmel, Moody, & Devue, 2017; Lichtenstein-Vidne, Henik, & Safadi, 2012; Maddock, Harper, Carmel, & Grimshaw, 2017; Okon-Singer, Tzelgov, & Henik, 2007), showing that the emotional value of a stimulus does not affect early selection processes in a purely bottom-up fashion. These studies all suggest that the processing of emotional information is not automatic but depends on the availability of attentional resources, and is partly guided by top-down components such as expectation, motivation, or goal-relevance.

For example, some neuroimaging studies have found decreased amygdala activation in response to emotional faces presented as central (Pessoa, Padmala, & Morland, 2005) or peripheral (Silvert et al., 2007) distractors during demanding compared with less demanding tasks, indicating that the processing of emotional stimuli depends on attentional resources, even at the neural level. In a study of spider-fearful participants, Devue et al. (2011) showed that presentation contingencies can create expectations that lead to strong attentional biases towards emotional stimuli. They used a visual search task in which task-irrelevant black spiders were presented as distractors in arrays consisting of green diamonds and one green target circle. Spiders captured fearful participants’ attention more than other irrelevant distractors (i.e., black butterflies), but only when each type of distractor appeared in separate blocks of trials. When spiders and butterflies were presented in random order within the same block, both captured spider-fearful participants’ attention. Thus, spiders captured attention not because they were identified preattentively, but because the blocked presentation created the expectation that any black singleton in the array would be a spider. Finally, Hunt et al. (2007) showed that attentional biases towards emotional faces may be contingent on goal relevance. They compared the ability of schematic angry and happy distractor faces to attract the eyes when emotion was task-irrelevant and when it was task-relevant. Angry and happy distractor faces interfered with search when targets were of the opposite valence, but neither emotional face captured the eyes more than other distractors when emotion was an irrelevant search feature.

The paradigm used in the present experiments strives to eliminate top-down and other confounds that could explain apparent bottom-up capture by emotional stimuli in many previous studies: angry and neutral faces are presented in random order; they are completely irrelevant to the task, in that their position does not predict the position of the target, they never appear in possible target locations, and the target-defining feature (i.e., colour) is unrelated to any facial feature; and they appear in the periphery, so that they are not forced into the observer’s focus of attention. At the same time, however, the presentation and task conditions maximise the potential for angry faces to capture the eyes more than neutral ones if emotion were indeed processed preattentively: displays present one face at a time, avoiding competition between several faces (Bindemann et al., 2005); the task involves minimal cognitive load; it encourages covert attention to be distributed over the whole display, since the colour singleton appears in a random location and changes colour from one trial to another; and finally, faces and other objects lie on the path towards the colour singleton, ensuring that they fall within the attentional window deployed to complete the task (Belopolsky & Theeuwes, 2010).

We are therefore confident that we established optimal conditions to test whether emotion modulates attentional selection of faces, and our results indicate that it does not. We posit that plausible adaptive cognitive and neural mechanisms can account for oculomotor capture by faces as a class. Preattentive mechanisms that scan the environment to detect faces automatically (Elder, Prince, Hou, Sizintsev, & Olevskiy, 2007; Lewis & Edmonds, 2003; ’t Hart, Abresch, & Einhäuser, 2011) may exist to compensate for the difficulty of distinguishing subtle facial characteristics in the periphery or in unattended central locations (Devue, Laloyaux, et al., 2009). This could be achieved through magnocellular channels that extract the low spatial frequencies used for holistic processing (Awasthi, Friedman, & Williams, 2011a, 2011b; Calvo, Beltrán, & Fernández-Martín, 2014; Girard & Koenig-Robert, 2011; Goffaux, Hault, Michel, Vuong, & Rossion, 2005; Johnson, 2005; Taubert, Apthorp, Aagten-Murphy, & Alais, 2011). Holistic processing can be demonstrated as early as 50 ms after exposure to a face (Richler, Mack, Gauthier, & Palmeri, 2009; Taubert et al., 2011) and is indexed by an early face-specific P100 ERP component (Nakashima et al., 2008). Face detection, which presumably results from processing of low spatial frequencies in the superior colliculus, pulvinar, and amygdala (Johnson, 2005), could then trigger very fast reflexive orienting responses through rapid integration between regions responsible for oculomotor behaviour (i.e., the superior colliculus, the frontal eye fields, and the posterior parietal cortex) and regions responsible for face processing (e.g., the fusiform face area; Morand et al., 2014).

By bringing the face into foveal vision, this orienting reflex could facilitate the extraction of the medium and high spatial frequency information it conveys (Awasthi et al., 2011a, 2011b; Deruelle & Fagot, 2005; Gao & Maurer, 2011; see also Underwood, Templeman, Lamming, & Foulsham, 2008, for a similar argument about objects within complex scenes), complementing the partial information gathered in the periphery via low spatial frequencies, for example, about familiarity (Smith et al., 2016) or facial expression (Vuilleumier et al., 2003). Fixating a face enables finer facial discrimination (e.g., of wrinkles associated with facial expressions; see Johnson, 2005) and may help, or even be necessary, to reach a definite decision about the meaning of the face in terms of facial expression, identity, gender, race, or intentions. The small cost of such bottom-up capture by faces is that they may unnecessarily divert our attention from our current activity. However, participants in our study seemed able to resume their ongoing task quickly, as they did not dwell on a face after it captured their eyes any longer than they did on a butterfly. In a similar situation, people did dwell longer on a task-irrelevant face if it was familiar to them (see Devue, Van der Stigchel, et al., 2009), suggesting that social goals might sometimes override task goals after a face has been attended.

The bottom-up face detection mechanism we describe is not purely automatic in the strict sense of the term. On most trials, participants managed to maintain the goal set and were not captured by faces. If goal-directed saccades towards the colour singleton and stimulus-driven saccades towards salient faces are programmed competitively in a common saccade map (e.g., Godijn & Theeuwes, 2002), the low oculomotor capture rates indicate that people mostly succeed in meeting the task requirements.

Our data show clear associations between oculomotor control, indexed by first saccade latencies, and success on the colour singleton search task. Overall, correct saccades had longer latencies than incorrect ones, which indicates that the former require efficient oculomotor control, even in such a simple parallel search task (Mort et al., 2003; Ptak, Camen, Morand, & Schnider, 2011; Walker & McSorley, 2006; Walker et al., 2000). This pattern is largely independent of the type of critical object in the display (i.e., angry face, neutral face, or butterfly). Indeed, instances of oculomotor capture by faces, like other incorrect saccades, were associated with shorter latencies than correct saccades. This is in line with previous findings in an antisaccade paradigm suggesting that saccades erroneously directed at faces are reflexive, involuntary saccades (Morand et al., 2010). A possible reason for the homogeneity of latencies across all types of incorrect saccades is that these latencies were at floor, that is, incorrect saccades were initiated as fast as is practically possible in the present paradigm. This makes lapses in oculomotor control that lead to capture by faces indistinguishable from other errors.
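The competitive account can be illustrated with a deliberately simple toy model: a stimulus-driven (face) signal and a goal-driven (target) signal each rise linearly in a common map, and whichever reaches threshold first determines the saccade. This is our hypothetical illustration, not the competitive integration model of Godijn and Theeuwes (2002) or a fit to the present data; the onsets, rates, threshold, and the way "control" damps the stimulus-driven signal are all assumptions. It reproduces qualitatively the pattern that lapses of control produce fast capture saccades, whereas successful control yields slower, correct saccades.

```python
import math


def threshold_time(onset_ms, rate_per_ms, threshold=1.0):
    """Time at which a linearly rising activation reaches threshold (inf if it never rises)."""
    if rate_per_ms <= 0:
        return math.inf
    return onset_ms + threshold / rate_per_ms


def first_saccade(control, exo_onset=100.0, exo_rate=0.01, endo_onset=120.0, endo_rate=0.01):
    """Toy race between a stimulus-driven (face) and a goal-driven (target) signal.

    All parameter values are hypothetical. 'control' (0 to 1) scales down the
    stimulus-driven rate; whichever signal reaches threshold first wins.
    """
    t_face = threshold_time(exo_onset, exo_rate * (1.0 - control))
    t_target = threshold_time(endo_onset, endo_rate)
    return ("face", t_face) if t_face < t_target else ("target", t_target)


print(first_saccade(control=0.0))  # ('face', 200.0): a lapse yields a fast capture saccade
print(first_saccade(control=0.5))  # ('target', 220.0): control yields a slower correct saccade
```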

Interestingly, and unexpectedly, programming a correct saccade in the presence of a competing face on mismatch trials does not seem to require greater control than in the presence of a mismatching butterfly. This may suggest that the control strategy successfully employed on those trials allows faces to be effectively ignored, and may even prevent covert shifts of attention in their direction. Notably, latencies on correct mismatch trials were overall longer than on correct match trials, in which a critical object is in the same segment as the target. This effect was driven by faces: Correct saccades towards the critical object/target location on match trials were initiated faster with angry faces than with butterflies (with neutral faces associated with intermediate latencies). This reduction in latency suggests that faces have a facilitatory effect on match trials. Importantly, however, these latencies were still much longer than those of incorrect saccades, showing that correct saccades towards a face/target location are still programmed under top-down control. In other words, the reduction in latency on match trials is not due to bottom-up capture by the neighbouring face leading to a reflexive saccade in its direction (see Note 1). Instead, the facilitation may originate in neighbouring activation from salient goal-related features (colour) and salient facial features, which combine during the elaboration of the saccade map in the superior colliculus so that the activation threshold for executing the saccade is reached faster (e.g., Belopolsky, 2015). In Experiment 2, this small effect was completely abolished, suggesting that it was driven by the canonical representation of upright faces in Experiment 1.
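The proposed facilitation can be sketched as summation at a single map location: on match trials, a small contribution from the neighbouring face adds to the goal-driven input for the target, so the combined activation reaches threshold sooner. This is a hypothetical illustration of the idea attributed to Belopolsky (2015), not a model fitted to the data; the onset, rates, and threshold are assumed values chosen only to show the direction of the effect.

```python
def latency_to_threshold(onset_ms, rates_per_ms, threshold=1.0):
    """Onset plus the time for summed linear inputs at one map location to reach threshold."""
    total_rate = sum(rates_per_ms)
    if total_rate <= 0:
        return float("inf")
    return onset_ms + threshold / total_rate


# Hypothetical values: goal-driven input for the colour target alone (mismatch trial),
# versus the same input plus a small spill-over from a salient face in the same segment (match trial).
mismatch = latency_to_threshold(120.0, [0.01])
match_with_face = latency_to_threshold(120.0, [0.01, 0.001])
print(mismatch, match_with_face)  # 220.0 and about 210.9: the match-trial latency is shorter
```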

The factors that determine whether control will be successfully applied on any given trial remain to be elucidated. One possibility is that the outcome depends on spontaneous fluctuations in attentional preparation linked to tonic activity in the locus coeruleus, as suggested by recent pupillometry studies in macaques (Ebitz, Pearson, & Platt, 2014; Ebitz & Platt, 2015) and humans (Braem, Coenen, Bombeke, van Bochove, & Notebaert, 2015; Gilzenrat, Nieuwenhuis, Jepma, & Cohen, 2010).

Conclusion

Altogether, our study suggests that the visual system has evolved so that the occurrence of a face, a potentially socio-biologically relevant event, can be detected in a bottom-up fashion based on low-level canonical features. We show strikingly similar patterns of oculomotor behaviour in the presence of neutral and angry faces, which suggests that the goal of this reflexive detection may be to bring the face into foveal vision in order to then extract the features that define its meaning. This bottom-up detection can, however, be prevented: oculomotor control was successfully used on most trials to produce goal-directed eye movements.

Notes

1. An alternative explanation could be that, on a proportion of trials, faces elicit reflexive saccades in their direction instead of a correct fixation on the matching colour singleton, leading to shorter average latencies than on mismatch trials (we thank an anonymous reviewer for this suggestion). To investigate this possibility, we examined the landing position of correct saccades on match trials more closely. Saccades landed closer to the matching critical object than to the coloured target circle in about 25% of cases across all critical object conditions (angry faces: 25.5% ± 17; neutral faces: 25.5% ± 16; butterflies: 25.1% ± 16).
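The landing-position classification described in this note amounts to labelling each saccade endpoint by the object centre it lies closer to. A minimal sketch, assuming Euclidean distance to object centres and ties assigned to the target (the text does not specify either); the function name, coordinates, and endpoints are hypothetical and are not data from the study.

```python
import math


def landing_category(endpoint, target_xy, critical_xy):
    """Label a saccade endpoint by the object centre it lands closer to (ties go to the target)."""
    to_target = math.dist(endpoint, target_xy)
    to_critical = math.dist(endpoint, critical_xy)
    return "critical object" if to_critical < to_target else "target"


# Hypothetical geometry in degrees of visual angle: the critical object lies on the
# same axis as the target, between fixation (0, 0) and the target.
target, critical = (10.0, 0.0), (6.0, 0.0)
endpoints = [(9.0, 0.5), (6.5, 0.2), (9.4, -0.3), (10.1, 0.1)]
labels = [landing_category(e, target, critical) for e in endpoints]
share_on_critical = labels.count("critical object") / len(labels)
print(labels, share_on_critical)  # one of the four endpoints is labelled 'critical object', share 0.25
```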

A 2 × 3 ANOVA on the proportion of trials of each type, with endpoint (target, critical object) and critical object type (angry face, neutral face, butterfly) as within-subjects factors, showed that a majority of saccades ended on the colour singleton target rather than on the neighbouring critical object: there was a significant main effect of endpoint, F(1, 21) = 57, p < .001, ηp² = .731. However, there was no main effect of critical object type, F < 1, and no interaction, F < 1. This suggests that saccades landing on critical objects were likely landing errors (undershoots) due to the presence of the object picture on the same axis as the target, an effect that has been reported elsewhere in similar proportions (e.g., Findlay & Blythe, 2009; McSorley & Findlay, 2003). Importantly, the likelihood of these errors was not modulated by the nature of the object itself (see also Foulsham & Underwood, 2009).

In addition, we examined the latencies of these different saccades with a 2 × 3 ANOVA with endpoint (target, critical object) and critical object type (angry face, neutral face, butterfly) as within-subjects factors. There was a significant main effect of critical object type, F(2, 36) = 3.65, p = .036, ηp² = .168, but no significant effect of endpoint, F < 1, and no interaction between critical object type and endpoint, F(2, 36) = 1.18, p = .319, ηp² = .062. This argues against the possibility that landing errors on faces result from bottom-up capture leading to reflexive saccades in their direction.
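When the numerator df equals 2, the upper-tail probability of an F statistic has the closed form (df_error / (df_error + 2F))^(df_error / 2), so the p value for F(2, 36) = 3.65 can be checked with the standard library alone. A minimal sketch (the function name is hypothetical; other numerator df would need a general F distribution, for example from SciPy) gives p ≈ .036 for this test and reproduces other F(2, ·) p values reported earlier.

```python
def f_pvalue_df1_2(f_value, df_error):
    """Upper-tail p value for an F(2, df_error) statistic.

    With a numerator df of 2 the F survival function reduces to
    (df_error / (df_error + 2F)) ** (df_error / 2).
    """
    return (df_error / (df_error + 2.0 * f_value)) ** (df_error / 2.0)


print(round(f_pvalue_df1_2(3.65, 36), 3))  # 0.036
print(round(f_pvalue_df1_2(5.46, 42), 3))  # 0.008, as reported for correct match trials
print(round(f_pvalue_df1_2(4.52, 44), 3))  # 0.016, as reported for capture in Experiment 2
```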

References

Alorda, C., Serrano-Pedraza, I., Campos-Bueno, J. J., Sierra-Vazquez, V., & Montoya, P. (2007). Low spatial frequency filtering modulates early brain processing of affective complex pictures. Neuropsychologia, 45(14), 3223–3233. doi:10.1016/j.neuropsychologia.2007.06.017

Anstis, S. (1998). Picturing peripheral acuity. Perception, 27(7), 817–825. doi:10.1068/p270817

Awasthi, B., Friedman, J., & Williams, M. A. (2011a). Faster, stronger, lateralized: Low spatial frequency information supports face processing. Neuropsychologia, 49(13), 3583–3590. doi:10.1016/j.neuropsychologia.2011.08.027

Awasthi, B., Friedman, J., & Williams, M. A. (2011b). Processing of low spatial frequency faces at periphery in choice reaching tasks. Neuropsychologia, 49(7), 2136–2141. doi:10.1016/j.neuropsychologia.2011.03.003

Awh, E., Belopolsky, A. V., & Theeuwes, J. (2012). Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends in Cognitive Sciences, 16(8), 437–443. doi:10.1016/j.tics.2012.06.010

Belopolsky, A. V. (2015). Common priority map for selection history, reward and emotion in the oculomotor system. Perception, 44(8/9), 920–933. doi:10.1177/0301006615596866

Belopolsky, A. V., Devue, C., & Theeuwes, J. (2011). Angry faces hold the eyes. Visual Cognition, 19(1), 27–36. doi:10.1080/13506285.2010.536186

Belopolsky, A. V., & Theeuwes, J. (2010). No capture outside the attentional window. Vision Research, 50(23), 2543–2550. doi:10.1016/j.visres.2010.08.023

Bindemann, M., & Burton, A. M. (2008). Attention to upside-down faces: An exception to the inversion effect. Vision Research, 48(25), 2555–2561. doi:10.1016/j.visres.2008.09.001

Bindemann, M., Burton, A. M., Langton, S. R., Schweinberger, S. R., & Doherty, M. J. (2007). The control of attention to faces. Journal of Vision, 7(10), 15. doi:10.1167/7.10.15

Bindemann, M., Burton, A. M., & Jenkins, R. (2005). Capacity limits for face processing. Cognition, 98, 177–197. doi:10.1016/j.cognition.2004.11.004

Braem, S., Coenen, E., Bombeke, K., van Bochove, M. E., & Notebaert, W. (2015). Open your eyes for prediction errors. Cognitive, Affective, & Behavioral Neuroscience, 15(2), 374–380. doi:10.3758/s13415-014-0333-4

Calvo, M. G., Beltrán, D., & Fernández-Martín, A. (2014). Processing of facial expressions in peripheral vision: Neurophysiological evidence. Biological Psychology, 100, 60–70. doi:10.1016/j.biopsycho.2014.05.007

Calvo, M. G., & Eysenck, M. W. (2008). Affective significance enhances covert attention: Roles of anxiety and word familiarity. Quarterly Journal of Experimental Psychology, 61(11), 1669–1686. doi:10.1080/17470210701743700

Calvo, M. G., & Nummenmaa, L. (2008). Detection of emotional faces: Salient physical features guide effective visual search. Journal of Experimental Psychology: General, 137(3), 471–494. doi:10.1037/a0012771

Carretié, L. (2014). Exogenous (automatic) attention to emotional stimuli: A review. Cognitive, Affective, & Behavioral Neuroscience, 14(4), 1228–1258. doi:10.3758/s13415-014-0270-2

Carretié, L., Hinojosa, J. A., Lopez-Martin, S., & Tapia, M. (2007). An electrophysiological study on the interaction between emotional content and spatial frequency of visual stimuli. Neuropsychologia, 45(6), 1187–1195. doi:10.1016/j.neuropsychologia.2006.10.013

Crouzet, S. M., Kirchner, H., & Thorpe, S. J. (2010). Fast saccades toward faces: Face detection in just 100 ms. Journal of Vision, 10(4), 16. doi:10.1167/10.4.16

Dakin, S. C., & Watt, R. J. (2009). Biological “bar codes” in human faces. Journal of Vision, 9(4), 2. doi:10.1167/9.4.2

David, E., Laloyaux, C., Devue, C., & Cleeremans, A. (2006). Change blindness to gradual changes in facial expressions. Psychologica Belgica, 46(4), 253–268. doi:10.5334/pb-46-4-253

Deruelle, C., & Fagot, J. (2005). Categorizing facial identities, emotions, and genders: Attention to high- and low-spatial frequencies by children and adults. Journal of Experimental Child Psychology, 90, 172–184. doi:10.1016/j.jecp.2004.09.001

Devue, C., Belopolsky, A. V., & Theeuwes, J. (2011). The role of fear and expectancies in capture of covert attention by spiders. Emotion, 11(4), 768–775. doi:10.1037/a0023418

Devue, C., Belopolsky, A. V., & Theeuwes, J. (2012). Oculomotor guidance and capture by irrelevant faces. PLoS ONE, 7(4), e34598. doi:10.1371/journal.pone.0034598

Devue, C., & Brédart, S. (2008). Attention to self-referential stimuli: Can I ignore my own face? Acta Psychologica, 128(2), 290–297. doi:10.1016/j.actpsy.2008.02.004

Devue, C., Laloyaux, C., Feyers, D., Theeuwes, J., & Brédart, S. (2009). Do pictures of faces, and which ones, capture attention in the inattentional-blindness paradigm? Perception, 38(4), 552–568. doi:10.1068/p6049

Devue, C., Van der Stigchel, S., Brédart, S., & Theeuwes, J. (2009). You do not find your own face faster; you just look at it longer. Cognition, 111(1), 114–122. doi:10.1016/j.cognition.2009.01.003

Ebitz, R. B., Pearson, J. M., & Platt, M. L. (2014). Pupil size and social vigilance in rhesus macaques. Frontiers in Neuroscience, 8, 100. doi:10.3389/fnins.2014.00100

Ebitz, R. B., & Platt, M. L. (2015). Neuronal activity in primate dorsal anterior cingulate cortex signals task conflict and predicts adjustments in pupil-linked arousal. Neuron, 85(3), 628–640. doi:10.1016/j.neuron.2014.12.053

Elder, J. H., Prince, S. J. D., Hou, Y., Sizintsev, M., & Olevskiy, E. (2007). Pre-attentive and attentive detection of humans in wide-field scenes. International Journal of Computer Vision, 72(1), 47–66. doi:10.1007/s11263-006-8892-7

Findlay, J. M., & Blythe, H. I. (2009). Saccade target selection: Do distractors affect saccade accuracy? Vision Research, 49(10), 1267–1274. doi:10.1016/j.visres.2008.07.005

Foulsham, T., & Underwood, G. (2009). Does conspicuity enhance distraction? Saliency and eye landing position when searching for objects. The Quarterly Journal of Experimental Psychology, 62(6), 1088–1098. doi:10.1080/17470210802602433

Fox, E., Lester, V., Russo, R., Bowles, R. J., Pichler, A., & Dutton, K. (2000). Facial expressions of emotion: Are angry faces detected more efficiently? Cognition & Emotion, 14(1), 61–92. doi:10.1080/026999300378996

Fox, E., Russo, R., Bowles, R., & Dutton, K. (2001). Do threatening stimuli draw or hold visual attention in subclinical anxiety? Journal of Experimental Psychology: General, 130(4), 681–700. doi:10.1037//0096-3445.130.4.681

Fox, E., Russo, R., & Dutton, K. (2002). Attentional bias for threat: Evidence for delayed disengagement from emotional faces. Cognition & Emotion, 16(3), 355–379. doi:10.1080/02699930143000527

Freire, A., Lee, K., & Symons, L. A. (2000). The face-inversion effect as a deficit in the encoding of configural information: Direct evidence. Perception, 29(2), 159–170. doi:10.1068/p3012

Gao, X., & Maurer, D. (2011). A comparison of spatial frequency tuning for the recognition of facial identity and facial expressions in adults and children. Vision Research, 51(5), 508–519. doi:10.1016/j.visres.2011.01.011

Gauthier, I., Tarr, M. J., Moylan, J., Skudlarski, P., Gore, J. C., & Anderson, A. W. (2000). The fusiform “face area” is part of a network that processes faces at the individual level. Journal of Cognitive Neuroscience, 12(3), 495–504. doi:10.1162/089892900562165

Georgiou, G. A., Bleakley, C., Hayward, J., Russo, R., Dutton, K., Eltiti, S., & Fox, E. (2005). Focusing on fear: Attentional disengagement from emotional faces. Visual Cognition, 12(1), 145–158. doi:10.1080/13506280444000076

Gilzenrat, M. S., Nieuwenhuis, S., Jepma, M., & Cohen, J. D. (2010). Pupil diameter tracks changes in control state predicted by the adaptive gain theory of locus coeruleus function. Cognitive, Affective, and Behavioral Neuroscience, 10(2), 252–269. doi:10.3758/CABN.10.2.252

Girard, P., & Koenig-Robert, R. (2011). Ultra-rapid categorization of Fourier-spectrum equalized natural images: Macaques and humans perform similarly. PLoS ONE, 6(2), e16453. doi:10.1371/journal.pone.0016453

Godijn, R., & Theeuwes, J. (2002). Programming of endogenous and exogenous saccades: Evidence for a competitive integration model. Journal of Experimental Psychology: Human Perception and Performance, 28(5), 1039–1054.

Goffaux, V., Hault, B., Michel, C., Vuong, Q. C., & Rossion, B. (2005). The respective role of low and high spatial frequencies in supporting configural and featural processing of faces. Perception, 34(1), 77–86. doi:10.1068/p5370

Grimshaw, G. M., Kranz, L., Carmel, D., Moody, R. E., & Devue, C. (2017). Contrasting reactive and proactive control of emotional distraction. Manuscript submitted for publication. Available at https://osf.io/preprints/psyarxiv/esdgy/

Guo, K., & Shaw, H. (2015). Face in profile view reduces perceived facial expression intensity: An eye-tracking study. Acta Psychologica, 155, 19–28. doi:10.1016/j.actpsy.2014.12.001

Harel, A., Kravitz, D., & Baker, C. I. (2013). Beyond perceptual expertise: Revisiting the neural substrates of expert object recognition. Frontiers in Human Neuroscience, 7, 885. doi:10.3389/fnhum.2013.00885

Horstmann, G., & Becker, S. I. (2008). Attentional effects of negative faces: Top-down contingent or involuntary? Perception & Psychophysics, 70(8), 1416–1434. doi:10.3758/PP.70.8.1416

Horstmann, G., Lipp, O. V., & Becker, S. I. (2012). Of toothy grins and angry snarls—Open mouth displays contribute to efficiency gains in search for emotional faces. Journal of Vision, 12(5), 7. doi:10.1167/12.5.7

Hubel, D. H., & Wiesel, T. N. (1968). Receptive fields and functional architecture of monkey striate cortex. Journal of Physiology, 195, 215–243. doi:10.1113/jphysiol.1968.sp008455

Hunt, A. R., Cooper, R. M., Hungr, C., & Kingstone, A. (2007). The effect of emotional faces on eye movements and attention. Visual Cognition, 15, 513–531. doi:10.1080/13506280600843346

Johnson, M. H. (2005). Subcortical face processing. Nature Reviews Neuroscience, 6(10), 766–774. doi:10.1038/nrn1766

Keyes, H., & Dlugokencka, A. (2014). Do I have my attention? Speed of processing advantages for the self-face are not driven by automatic attention capture. PLoS ONE, 9(10), e110792. doi:10.1371/journal.pone.0110792

Koster, E. H. W., Crombez, G., Verschuere, B., & De Houwer, J. (2004). Selective attention to threat in the dot probe paradigm: Differentiating vigilance and difficulty to disengage. Behaviour Research and Therapy, 42(10), 1183–1192. doi:10.1016/j.brat.2003.08.001

Laarni, J., Koljonen, M., Kuistio, A. M., Kyrolainen, S., Lempiainen, J., & Lepisto, T. (2000). Images of a familiar face do not capture attention under conditions of inattention. Perceptual and Motor Skills, 90(3, Pt. 2), 1216–1218. doi:10.2466/pms.2000.90.3c.1216

Laidlaw, K. E. W., Badiudeen, T. A., Zhu, M. J. H., & Kingstone, A. (2015). A fresh look at saccadic trajectories and task irrelevant stimuli: Social relevance matters. Vision Research, 111(Pt. A), 82–90. doi:10.1016/j.visres.2015.03.024

Lewis, M. B., & Edmonds, A. J. (2003). Face detection: Mapping human performance. Perception, 32(8), 903–920. doi:10.1068/p5007

Lichtenstein-Vidne, L., Henik, A., & Safadi, Z. (2012). Task relevance modulates processing of distracting emotional stimuli. Cognition & Emotion, 26(1), 42–52. doi:10.1080/02699931.2011.567055

Lundqvist, D., Bruce, N., & Öhman, A. (2015). Finding an emotional face in a crowd: Emotional and perceptual stimulus factors influence visual search efficiency. Cognition & Emotion, 29(4), 621–633. doi:10.1080/02699931.2014.927352

Maddock, A., Harper, D., Carmel, D., & Grimshaw, G. M. (2017). Motivation enhances control of positive and negative emotional distractions. Manuscript submitted for publication.

McSorley, E., & Findlay, J. M. (2003). Saccade target selection in visual search: Accuracy improves when more distractors are present. Journal of Vision, 3(11), 877–892. doi:10.1167/3.11.20

Mogg, K., Holmes, A., Garner, M., & Bradley, B. P. (2008). Effects of threat cues on attentional shifting, disengagement and response slowing in anxious individuals. Behaviour Research and Therapy, 46(5), 656–667. doi:10.1016/j.brat.2008.02.011

Morand, S. M., Grosbras, M.-H., Caldara, R., & Harvey, M. (2010). Looking away from faces: Influence of high-level visual processes on saccade programming. Journal of Vision, 10(3), 16. doi:10.1167/10.3.16

Morand, S. M., Harvey, M., & Grosbras, M.-H. (2014). Parieto-occipital cortex shows early target selection to faces in a reflexive orienting task. Cerebral Cortex, 24(4), 898–907. doi:10.1093/cercor/bhs368

Morey, R. D. (2008). Confidence intervals from normalized data: A correction to Cousineau (2005). Tutorials in Quantitative Methods for Psychology, 4(2), 61–64. doi:10.20982/tqmp.04.2.p061

Mort, D. J., Perry, R. J., Mannan, S. K., Hodgson, T. L., Anderson, E., Quest, R., & Kennard, C. (2003). Differential cortical activation during voluntary and reflexive saccades in man. NeuroImage, 18(2), 231–246. doi:10.1016/S1053-8119(02)00028-9

Murray, J. E., Machado, L., & Knight, B. (2011). Race and gender of faces can be ignored. Psychological Research, 75(4), 324–333. doi:10.1007/s00426-010-0310-7

Nakashima, T., Kaneko, K., Goto, Y., Abe, T., Mitsudo, T., Ogata, K., & Tobimatsu, S. (2008). Early ERP components differentially extract facial features: Evidence for spatial frequency-and-contrast detectors. Neuroscience Research, 62(4), 225–235. doi:10.1016/j.neures.2008.08.009

Nestor, A., Vettel, J. M., & Tarr, M. J. (2013). Internal representations for face detection—An application of noise-based image classification to BOLD responses. Human Brain Mapping, 34(11), 3101–3115. doi:10.1002/hbm.22128

Nummenmaa, L., & Calvo, M. G. (2015). Dissociation between recognition and detection advantage for facial expressions: A meta-analysis. Emotion, 15(2), 243–256. doi:10.1037/emo0000042

Öhman, A. (2005). The role of the amygdala in human fear: Automatic detection of threat. Psychoneuroendocrinology, 30(10), 953–958. doi:10.1016/j.psyneuen.2005.03.019

Okon-Singer, H., Tzelgov, J., & Henik, A. (2007). Distinguishing between automaticity and attention in the processing of emotionally significant stimuli. Emotion, 7(1), 147–157. doi:10.1037/1528-3542.7.1.147

Or, C. C., & Wilson, H. R. (2010). Face recognition: Are viewpoint and identity processed after face detection? Vision Research, 50(16), 1581–1589. doi:10.1016/j.visres.2010.05.016

Pessoa, L., Padmala, S., & Morland, T. (2005). Fate of unattended fearful faces in the amygdala is determined by both attentional resources and cognitive modulation. NeuroImage, 28(1), 249–255.

Pourtois, G., Schettino, A., & Vuilleumier, P. (2013). Brain mechanisms for emotional influences on perception and attention: What is magic and what is not. Biological Psychology, 92(3), 492–512. doi:10.1016/j.biopsycho.2012.02.007

Ptak, R., Camen, C., Morand, S., & Schnider, A. (2011). Early event-related cortical activity originating in the frontal eye fields and inferior parietal lobe predicts the occurrence of correct and error saccades. Human Brain Mapping, 32(3), 358–369. doi:10.1002/hbm.21025

Puce, A., Allison, T., Asgari, M., Gore, J. C., & McCarthy, G. (1996). Differential sensitivity of human visual cortex to faces, letterstrings, and textures: A functional magnetic resonance imaging study. The Journal of Neuroscience, 16(16), 5205–5215.

Purcell, D. G., & Stewart, A. L. (2010). Still another confounded face in the crowd. Attention, Perception, & Psychophysics, 72(8), 2115–2127. doi:10.3758/APP.72.8.2115

Qian, H., Gao, X., & Wang, Z. (2015). Faces distort eye movement trajectories, but the distortion is not stronger for your own face. Experimental Brain Research, 233(7), 2155–2166. doi:10.1007/s00221-015-4286-9

Reddy, L., Reddy, L., & Koch, C. (2006). Face identification in the near-absence of focal attention. Vision Research, 46(15), 2336–2343. doi:10.1016/j.visres.2006.01.020

Richler, J. J., Mack, M. L., Gauthier, I., & Palmeri, T. J. (2009). Holistic processing of faces happens at a glance. Vision Research, 49(23), 2856–2861. doi:10.1016/j.visres.2009.08.025

Riesenhuber, M., & Poggio, T. (1999). Hierarchical models of object recognition in cortex. Nature Neuroscience, 2(11), 1019–1025. doi:10.1038/14819

Schmidt, L. J., Belopolsky, A. V., & Theeuwes, J. (2012). The presence of threat affects saccade trajectories. Visual Cognition, 20(3), 284–299. doi:10.1080/13506285.2012.658885

Schoth, D. E., Godwin, H. J., Liversedge, S. P., & Liossi, C. (2015). Eye movements during visual search for emotional faces in individuals with chronic headache. European Journal of Pain, 19(5), 722–732. doi:10.1002/ejp.595

Schutter, D. J. L. G., de Haan, E. H. F., & van Honk, J. (2004). Functionally dissociated aspects in anterior and posterior electrocortical processing of facial threat. International Journal of Psychophysiology, 53(1), 29–36. doi:10.1016/j.ijpsycho.2004.01.003

Silvert, L., Lepsien, J., Fragopanagos, N., Goolsby, B., Kiss, M., Taylor, J. G., Raymond, J. E., Shapiro, K. L., Eimer, M., & Nobre, A. C. (2007). Influence of attentional demands on the processing of emotional facial expressions in the amygdala. NeuroImage, 38(2), 357–366.

Smilek, D., Frischen, A., Reynolds, M. G., Gerritsen, C., & Eastwood, J. D. (2007). What influences visual search efficiency? Disentangling contributions of preattentive and postattentive processes. Perception & Psychophysics, 69(7), 1105–1116. doi:10.3758/BF03193948

Smith, M. L., Cottrell, G. W., Gosselin, F., & Schyns, P. G. (2005). Transmitting and decoding facial expressions. Psychological Science, 16(3), 184–189. doi:10.1111/j.0956-7976.2005.00801.x

Smith, M. L., Volna, B., & Ewing, L. (2016). Distinct information critically distinguishes judgments of face familiarity and identity. Journal of Experimental Psychology: Human Perception and Performance. doi:10.1037/xhp0000243

Soares, S. C., Esteves, F., & Flykt, A. (2009). Fear, but not fear-relevance, modulates reaction times in visual search with animal distractors. Journal of Anxiety Disorders, 23(1), 136–144. doi:10.1016/j.janxdis.2008.05.002

Sun, G., Song, L., Bentin, S., Yang, Y., & Zhao, L. (2013). Visual search for faces by race: A cross-race study. Vision Research, 89, 39–46. doi:10.1016/j.visres.2013.07.001

’t Hart, B. M., Abresch, T. G. J., & Einhäuser, W. (2011). Faces in places: Humans and machines make similar face detection errors. PLoS ONE, 6(10), e25373. doi:10.1371/journal.pone.0025373

Taubert, J., Apthorp, D., Aagten-Murphy, D., & Alais, D. (2011). The role of holistic processing in face perception: Evidence from the face inversion effect. Vision Research, 51(11), 1273–1278. doi:10.1016/j.visres.2011.04.002

Theeuwes, J. (1992). Perceptual selectivity for color and form. Perception & Psychophysics, 51, 599–606.

Theeuwes, J., Kramer, A. F., Hahn, S., & Irwin, D. E. (1998). Our eyes do not always go where we want them to go: Capture of the eyes by new objects. Psychological Science, 9(5), 379–385.

Underwood, G., Templeman, E., Lamming, L., & Foulsham, T. (2008). Is attention necessary for object identification? Evidence from eye movements during the inspection of real-world scenes. Consciousness and Cognition, 17(1), 159–170. doi:10.1016/j.concog.2006.11.008

Van Essen, D. C., Anderson, C. H., & Felleman, D. J. (1992). Information processing in the primate visual system: An integrated systems perspective. Science, 255(5043), 419–423. doi:10.1126/science.1734518

van Honk, J., Tuiten, A., de Haan, E., van den Hout, M., & Stam, H. (2001). Attentional biases for angry faces: Relationships to trait anger and anxiety. Cognition & Emotion, 15(3), 279–297. doi:10.1080/02699930126112

Vermeulen, N., Godefroid, J., & Mermillod, M. (2009). Emotional modulation of attention: Fear increases but disgust reduces the attentional blink. PLoS ONE, 4(11), e7924. doi:10.1371/journal.pone.0007924