Abstract

Detecting and analyzing various defect types in semiconductor materials is an important prerequisite for understanding the underlying mechanisms and tailoring the production processes. Analysis of microscopy images that reveal defects typically requires image analysis tasks such as segmentation and object detection. With the permanently increasing amount of data from experiments, handling these tasks manually becomes more and more impossible. In this work, we combine various image analysis and data mining techniques to create a robust and accurate, automated image analysis pipeline for extracting the type and position of all defects in a microscopy image of a KOH-etched 4H-SiC wafer.

Graphical abstract

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Microscopy and image analysis has been an important tool for investigating defects in materials and their microstructures. Manually detecting, e.g., cracks, grains or dislocations in photographs and digital images requires expertise, experience, and—depending on the size of the image or the number of features of interest—also a significant amount of time. Recently, high-throughput data analysis, which is concerned with handling huge numbers of specimens (e.g., even exceeding thousands of specimens) or with very large regions of interest (e.g., consisting of several thousands of images) is becoming more prominent in the era of data. Example of such approaches can be found in the work of Xiang et al.[1] where combinatorial high-throughput methods were introduced to discover new materials. Another example is the work by Castelli et al.[2] who analyzed a large space of 5400 different materials to obtain 15 promising candidates for developing new photoelectrochemical cells with improved light absorption.

In the area of wide-bandgap semiconductor material, silicon carbide (SiC) is the leading candidate with a high mechanical, chemical, and thermal stability. It has been shown to be highly suitable for power device applications. Therefore, efforts to produce high-quality SiC by reducing defects (especially dislocations) during the physical vapor transport (PVT) crystal growth process are important and require further optimization. Visual inspection and analyzing dislocation in semiconductors helps to understand the formation of defects during the PVT growth process and ultimately ensures the wafer’s quality during the production process. Such analysis is based on images produced by optical and scanning electron microscopy of the wafer surface or transmission electron microscopy techniques and X-ray topography which allow to follow single dislocation lines within the wafer. Those tasks are commonly done manually or based on classical image analysis methods that perform pixel-based operations, such as thresholding, line thinning, watersheding, to name but a few. These methods typically work only for a particular image and require significant experimentation for determining suitable parameters. In recent years, machine learning approaches have become popular in many research areas, e.g., to automate and accelerate the process of material discovery and design,[3,4,5,6] or to automate computer vision tasks such as object detection or segmentation.[7,8,9] However, obtaining large enough datasets for supervised training is still a challenge since it is time-consuming to annotate large datasets with potentially vast numbers of objects. Thus, “synthetically generated data” becomes helpful and enables the algorithm to train more effectively, e.g., Tremblay et al.[10 generated synthetic objects on top of a random image background and shows that training with synthetic data performs better than with real images. Trampert et al.[11] created artificial grain structures and used only a few hand-labeled real microscopy images to train a convolutional neural network (CNN). Similarly, Govind et al.[12] created artificial transmission electron microscopy images and used those to train a CNN for image segmentation tasks.

In this paper, we propose a combination of different data analysis techniques and deep learning methods in the material science domain for solving two main tasks: The first task is to generalize and automatize the process of creating a dictionary pool of etch-pit images that can be used for creating artificial training data. The second task is to use a deep learning framework to segment and count the occurrence of three different dislocation types (basal plane dislocation (BPD), threading edge dislocation (TED), and threading screw dislocation (TSD)) that commonly appear as etch-pits in KOH etching microscopy images of a 4H-SiC wafer. This allows to analyze a huge number of microscopy images with high fidelity to estimate dislocation distributions of different types and thereby helps to understand mechanisms that lead to defect formation and organization.

The paper is organized as follows: after the introduction section, in “Materials and methods” section, we describe all methods that were used in our work. In “Results and discussion” section, we present and discuss the results from the automated clustering process and the instance segmentation of various dislocation types. “Conclusion” section is the conclusion.

Materials and methods

The goal of this work is to analyze the dislocation content of a large SiC wafer of 10\({\hbox {cm}}\) in diameter. Subsequently, we start by describing the growth and preparation of the SiC crystal. This is then followed by introducing the imaging of the wafer as well as the automated machine learning pipeline for image analysis.

Materials, specimen preparation and microscopy

The SiC sample was cut from a crystal boule grown via the PVT method utilizing a RTD-6800 diamond wire saw. The crystal was sliced parallel to the seed direction, resulting in samples with a 4° off-axis angle with respect to the (0001)-plane, the same as the employed seed.

As a next step, the wafer was polished to remove the deformation zone induced by the sawing process. KOH platelets are heated up to 520°C. The sample is preheated and subsequently lowered into the melt inside a nickel sample holder for 7 min. After the etching step is completed, the sample is taken out of the melt, cooled down and cleaned with HCl and de-ionized water to remove any KOH residue. The employed setup is an in-house development of the group of one of the co-authors. The process is automated, spanning the preheating phase to the cool-down phase. One of the two resulting pieces came into close contact with the sample holder, inducing the sample holder’s geometry as a pattern, as seen in the microscopic images. This pattern is, therefore, not indicative of the sample’s crystal lattice property but, instead, a result of the reduced exposure to the KOH melt.

To reveal the location of dislocations the sample was etched with molten KOH. For this, the Si-face of a SiC wafer was cut parallel to the (0001)-plane and was etched selectively by KOH etching, revealing etch pits where dislocation lines pierce the surface. Both Si-face and C-face are etched simultaneously, but only the Si-face will be considered due to its anisotropic etching nature of etch pits. BPDs are located in the basal plane, i.e., parallel to the (0001)-plane. Thus, they can be detected by KOH etching due to the present small off-axis angle which is 4\(^\circ\) with respect to the basal plane.

Microscopy images of the KOH-etched sample’s Si-faces were taken utilizing a Zeiss Axio Imager.M1m microscope. The magnification was set to 20 times, and the mapping and stitching were carried out by the accompanying Zeiss Zen microscopy software. The whole wafer is shown in Fig. 1(a) and is divided into 20 sections 3 for the scanning process. Each of them again consists of around 2000 images with \(1292\times 968\) pixels [(see Fig. 1(b) and (c)], resulting in a total of 40000 images covering the whole wafer.

Automated creation of an etch pit dictionary

In the following we introduce a data analysis pipeline for obtaining image regions that contain a single etch pit for a BPD, a TSD, and a TED with various Burgers vectors. In this part, the objective is not to identify all etch pits in the wafer; the objective is to automatically find a number of good examples (i.e., image sections with a single, clearly visible etch pit) that are suitable for creating semi-synthetic training data by superimposing such etch pit examples in an artificial image.

Identification of image regions that contain an etch pit

Each gray scale microscopy image [Fig. 2(b)] consists of \(1292\times 968\) pixels. As a preprocessing step, distortions and inhomogeneous contrast were removed in each image through the rolling-ball[13] and CLAHE (Contrast limited adaptive histogram equalization technique) method[14] (see Appendix A for a brief explanation). A binarization threshold was then applied to the image to reveal the darker etch pits (note, that during these steps it is not important that no all etch pits are identified). Additional image processing techniques such as erosion, opening, and dilation using the OpenCV library were used for separating contiguous pixel groups [Fig. 2(c)]. To estimate the shape, a possibly rotated ellipse is fitted to each of the obtained pixel groups [Fig. 2(d)]. By characterizing the shape and size of the ellipse it is possible to exclude pixel groups that do not correspond to etch pits. As shown in Ref. 15 this requires the calculation of three parameters, the “lengthiness” of the ellipse, the “copactness” of the pixel cluster and the “circularity.” If these characteristic values exceed certain values (here: 3, 0.6, and 0.6, respectively), then the pixel groups have a too extreme shape to be etch pits (see Ref. 15 for further details). These pixel groups act as a mask through which individual regions of interest containing a single etch pit are obtained (the masks were additionally expanded by a boarder of 10 pixels). This process is done automatically for all 40000 images covering the entire wafer and resulting in a total number of approximately 1.7million etch pit images. However, not all of these etch pit images can be used for further analysis, because, e.g., two etch pits might overlap (class 0 in Fig. 2(f) shows such examples), and therefore it would not be possible to uniquely determine the dislocation line character. To exclude these “bad examples” from the further analysis steps, a deep learning-based classification method is used, as introduced subsequently.

Binary classification for selecting good candidates of etch pit images

Generating synthetic training data by superimposing a number of each etch-pit images requires images of high-quality. Therefore, it is necessary to differentiate between a suitable and unsuitable candidate from the pool of extracted etch pits datasets obtained above. Suitable images contain only a single etch pit and no artifacts, see the examples shown in Fig. 2(f). To decide which of the images is a suitable image for further analysis, a CNN is used as a classifier in a supervised learning setup.

As network architecture a multiple-channel CNN with a ResNet34 as a backbone was used. The input channels used for this type of CNN consists of the original gray scale image, a magnitude spectrum of the Fast-Fourier transform of the image as well as of the wavelet transform [see Fig. 2(e)]. These three channels together contain significantly more information than just the gray scale values used in regular CNNs and are very beneficial for the training and testing accuracy.

As training dataset we select (by quick visual inspection which takes less than an hour) 1000 arbitrary etch pit images that are clearly good candidates and 1000 that are clearly unsuitable. The size of each image is \(64\times 64\) pixels. We also perform data augmentation such as rotating the image by various degrees as well as adding noise to the image to ensure that the model generalizes well. The resulting dataset is split into 5 parts that are used for a k-fold cross-validation, where we ensured that there is no class imbalance. The test dataset is chosen as one of the five parts. The performance of the trained network is evaluated in terms of classification accuracy, defined as the ratio between the sum of the correctly predicted records and the total number of predicted records.

In the data analysis pipeline, the input to the trained network is one of the image sections Fig. 2(c) and the output, i.e., the prediction of the network is the class label 0 or 1. There, 0 indicates that the image section contains multiple overlapping etch pits or even other (image or crystallographic) defects while 1 indicates an image section that contains exactly one etch pit and is suitable for further processing.

Automated clustering of different dislocation types

Subsequently, we continue with only those image section that were identified as good candidates for further analysis. The next goal is to identify the exact dislocation type (i.e., BPD, TED or TSD), which is so far not known. In principle this is again a classification task. However, labeling the training data (i.e., sorting all training images into several different dislocation categories) is very time consuming and additionally prone to errors.

A different approach consists in unsupervised learning where the training is not based on pre-assigned class labels. To sort etch pits images into various categories, clustering methods can help by automatically grouping similar images. For determining what “similar” means, clustering methods often operate in a feature space which represents the images by a reduced set of essential features. Effectively, this implies an automated feature extraction followed by a dimensionality reduction, cf. Figure 2(g).

For constructing this reduced feature space we firstly use VGG-16 neural network[16] to convert etch pit images into feature vectors. No training is done; instead, a pretrained network with weights taken from ImageNet-1k[17] has been use for this purpose. VGG-16 is a simple convolutional neural network that consists of 13 convolutional layers followed by three fully connected layers. The first fully connected layer is taken as the 4096-dimensional feature vector and contains a multi-scale lower-level representation of the image which is suitable for clustering. However, the number of dimensions is still high and can cause problems for clustering methods due to the sparsity of the feature space. Thus, a further dimensionality reduction of the feature space is performed.

A sophisticated method for unsupervised dimensionality reduction is the so-called uniform manifold approximation and projection UMAP.[18] It preserves the global data structure and is still computationally manageable. The mathematical background of this technique is related to Laplacian eigenmaps[19] which distribute data uniformly on (sub)manifolds of the data space. For more detail on the algorithm and description refer to[18]. As hyper-parameter of UMAP we used 10 neighbors, 32 random states, and a minimum distance of 0.3. The other parameters are taken as the default values of the library package.[18] The 4096-dimensional dataset from the VGG-16 feature vectors are then reduced to only three components (two of which are shown in Fig. 2(g).

Finally, we use HDBSCAN (Hierarchical Density-Based Spatial Clustering of Applications with Noise)[20] to automatically cluster the data of the three-dimensional feature space into three separate groups, which can then easily be identified as BPD, TED, and TSD as discussed below.

Predicting dislocations in all sections of the wafer

At the end of the previous steps we have obtained a number of small images each of which contains a single etch pit. We additionally know to which kind of dislocation this etch pit corresponds. The images together with the types are contained in the “etch-pit dictionary.” This allows now to generate synthetic image data based on these small images.

Synthetic image generation

Artificial images with etch pits are created by (i) creating a background and (ii) randomly or systematically placing images from the dictionary on the background and simultaneously creating a mask for the semantic segmentation.

Background images (\(1024\times 1024\) pixels) are grown by a non-parametric sampling method for texture synthesis.[21] From an initial seed (\(200\times 200\) pixels), which is taken from a real microscopy image, the texture of the background image is grown one pixel at a time by a Markov random field model. Each newly synthesized pixel is generated from the center of the chosen neighborhood, which is similar to the pixel of the neighborhood. These neighborhoods are already found by the algorithm.

As a parameter, the minimum and maximum number of BPDs, TEDs, and TSDs that can appear in each of the images is defined. The algorithm randomly chooses the number of each dislocation type within these ranges. Each image is randomly selected from the image pool and pasted on the randomly chosen background image. An additional method is to place dislocations along a straight line segment, mimicking low angle grain boundaries. Altogether, around 580000 etch pit images were used to create randomized training datasets. The resulting synthetic images are obtained together with the mask for semantic segmentation and with the position of each dislocation etch pit. We generate about 10000 images for the training datasets and 1000 images for validation. The size of each synthetic image is \(512\times 512\) pixels.

Instance segmentation and classification of dislocation types

The instance segmentation and classification of dislocation types are done by training a Mask R-CNN[22] deep learning model, which has been implemented in Detectron2[23] (a Facebook AI Research’s next-generation library that provides detection and segmentation algorithms), which is built based on the Pytorch platform. Mask R-CNN[22] extends Faster R-CNN by adding a branch for predicting segmentation masks on each Region of Interest (RoI) in parallel with the existing branch for classification and bounding box regression. The mask branch is a small fully connected network applied to each RoI, predicting a segmentation mask in a pixel-to-pixel manner. Mask R-CNN is simple to implement and to train given the Faster R-CNN framework, which facilitates a wide range of flexible architecture designs. Additionally, the mask branch only adds a small computational overhead, enabling a fast experimentation.

In our implementation we train the model with a ResNet101 as backbone using pre-trained COCO weights. The dataset is converted into COCO format, in which the annotations contain a list of dictionaries with the required information, suitable for Detectron2. The performance of the segmentation is evaluated in terms of the root mean squared error (RMSE), which is defined as

where N is the number of values, \(y_{i}\) is the i-th truth value and \(\hat{y}_{i}\) is the i-th predicted value. For the evaluation of the performance, 5-fold cross-validation was conducted giving for each fold the accuracy of 0.89, 0.92, 0.96, 0.94, and 0.92, respectively, which results in an average accuracy of \(\approx 0.92\).

This was the last step in this lengthy data analysis pipeline. We are now able to detect the position and dislocation type in each of the images of the full wafer, as shown in Fig. 2(k). The Burgers vector of the respective defect can be obtained in a conventional manner by computing the radius of the etch pit (compare).[15]

Results and discussion

In the following we start by investigating the physical soundness of the data analysis results. Afterward, the dislocation predictions are discussed within the materials scientific context.

Robustness of the clustering

The first part of above introduced data analysis pipeline (etch pit dictionary creation) was designed to be as robust as possible. In particular, it was designed such that missing even a larger fraction of etch pits should not have any impact on the quality of the dictionary. As an important part we will now investigate the clustering results, Fig. 2(h) in more detail.

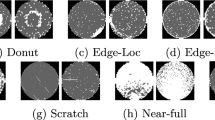

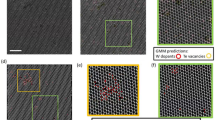

There are three different dislocation types, which commonly appear in microscopy images of SiC after etching with molten KOH: BPDs have a sea-shell-like shape, while TED and TSD have a round or hexagonal shape with a core in the center region. Larger cross sections indicate larger Burgers vectors. In Fig. 2(g) and the magnification in Fig. 7 Appendix C we observe that similar etch pits are located close to each other in the latent space generated by UMAP, which is beneficial for the clustering process. Figure 2(h) shows three clusters which are obtained by applying the HDBSCAN clustering method to the three-dimensional UMAP latent space. For visualization purposes, they are further reduced to two dimensions, shown in gray, orange, and blue color, respectively. Note, that (again for visualization purposes) in Fig. 2(g) we only show 1000 data points from part 9 of the wafer with embedded images of those etch pits.

How accurate was the clustering? Do the different colors in fact correspond to the three types of dislocation (BPDs, TEDs, and TSDs)? To answer this question, we take a look at the correlation between the three feature components shown in Fig. 3. First of all, we observe that three components are sufficient to distinctly separate the point clusters, e.g., in the plot of component 1 vs. 3 TSDs are even linearly separable from the other dislocation types. Similarly, plotting components 1 vs 2 and ignoring the TSDs makes BPDs and TEDs linearly separable as well. The three components can not directly be interpreted in terms of physical features. However, this is not a shortcoming of the method as it allows to detect patterns and structure in the data that would otherwise be difficult to obtain.

Left: Visualization of all three components after clustering. Right: Magnification of the data projected on the first two components (i.e., the middle plot in the leftmost column of the left figure) along with examples of the etch pit images. The value ranges of the three latent space components are the same, and therefore, no scales are given.

Exploring the structure of the latent space can also be done by taking a look at how the corresponding images change when moving from one cluster to the next. Figure 3 (right) shows several example images in the latent space. Position A and G both indicate very extreme shapes: both are rather large and have a strong contrast. However, A belongs to the group of lengthy shapes (the basal plane dislocations) while G belongs to the threading screw dislocations and is rather round. In between is the group of threading edge dislocations. They are smaller than the BPDs but still roundish. The clustering method successfully separates even those images that are close to each other, e.g., E and F. Visual inspection shows that E and F are indeed different. The same holds for B and C which implies that the three groups of dislocations can be unambiguously separated, making the method very robust for this application. However, it should be mentioned that clustering as an unsupervised learning approach is not able to give information about the degree of reliability of the results. This still requires to check the plausibility of the results, e.g., by an expert taking a look at a few images for their correct assignment to clusters.

Reliability of predicting various dislocation types

For the purpose of validating the trained segmentation model three different datasets were created that were not used in the training process:

-

The first dataset contains 1000 synthetic images with a low etch pit density where the number of etch pits were randomly varied within the ranges of 0..5 TSDs, 0..10 TEDs, and 0..20 BPDs.

-

The second dataset again consists of 1000 synthetic images. However, each of them contain, on average, a larger number of etch pits. Here, we used the ranges of 0..20, 0..50, and 0..200 for the quantities of the three dislocation types.

-

The third dataset consists of 100 real microscopy images that were manually annotated by a domain expert.

Figure 4(a) and (d) of panel A show examples of synthetic microscopy images for the case of low dislocation density and high dislocation density, respectively. The images contain all three types of dislocations, for which a number of etch-pit images are randomly chosen from the dictionary. The dislocation images of high density are unrealistic since such densities are typically not found in commercial SiC wafers. However, including this extreme case turned out to be beneficial for the training process of the instance segmentation since the difficult cases increase the variance of the dataset and thereby helps the model to generalize better.

Panel A: Results comparison between ground truth and predicted segmentation. (a) and (d) grayscale images of synthetic low and high dislocation density; (g) real microscopy image; b and e: ground truth segmentation from the synthetic image, (h) ground truth segmentation from hand labeling, (c), (f) and (i) predicted results from the deep learning. Panel B: Visualization of the three types of dislocation density in the whole wafer.

The ground truths and predictions are shown in the middle and right column of Fig. 4 panel A. To quantify the error the RMSE is calculated for all dislocation types resulting in \(e_\text {BPD}=13\), \(e_\text {TED}=23\), and \(e_\text {TSD}=3\) for the case of high dislocation density. These values are much smaller for the case of lower dislocation density which are \(e_\text {BPD}=1\), \(e_\text {TED}=4\), and \(e_\text {TSD}=0.5\), respectively. For the real images, the RMSE values are obtained as in \(e_\text {BPD}=3\), \(e_\text {TED}=5\), and \(e_\text {TSD}=2\). Analyzing the prediction performance of the model for all images of the validation datasets results in the distributions of absolute classification errors as shown in Fig. 6. The dataset with the high dislocation results by design in the worst performance: the dataset was created to be the most challenging one. By contrast, the validation dataset with the lower density the model shows a very good prediction accuracy. The ultimate test, however, is the real dataset since it is a subset of the whole wafer. For this, the variance of the errors is larger than that for the low density synthetic dataset. However, on average, only 1.9 BPDs, 0.9 TEDs, and 1.3 TSDs were misclassified. In many cases, there even was no misclassification at all. Assuming that this dataset is statistically representative of the full waver it can be assumed that this high level of accuracy also will hold for the analysis of the full wafer.

Analysis of the full wafer

Analyzing the full waver is a formidable task since it consists of altogether about 40000 individual images. The above introduced data analysis pipeline is suitable for such a task because even though training of the Mask R-CNN network is computationally expensive, making predictions about location and type of etch pits in new images is significantly faster. The total time required for the prediction across entire 100\({\hbox {mm}}\) wafer is roughly 75\({\hbox {min}}\) with the batch size of 4 when running on an 8-core workstation with Intel Core i9-10850K CPU, 32 GB RAM and a Nvidia GeForce RTX 3060 GPU with 12 GB RAM.

Altogether 1.7 millions etch pits were identified and located. Figure 8 in the appendix summarizes the dislocation content for each of the 20 wafer subdivision, each of which again consists of a large number of individual images. BPDs are the predominant type of dislocation in most of the regions while on average TEDs occur 50% less. The number of TSDs is the lowest in all wafer parts.

To illustrate the result of this analysis the resulting dislocation density distribution of the three dislocation types obtained for the whole wafer is shown in Fig. 4 panel B. The density distribution of BPD is the highest with a maximum value of around 1.6. 10\(^{5}\) \({\hbox {cm}}^{-2}\), while the maxima of TED and TSD are approximately 1.2 \(10^5\)\({\hbox {cm}}^{-2}\) and 0.8 \({10^5}\)\({\hbox {cm}}^{-2}\), respectively. We observe that TEDs and TSDs are mainly concentrated in the central region and on the boundary of the wafer. The center of the wafer is mainly BPDs-free and then takes a high value. Due to the overlapping of the image sections, artifacts from imperfect image registration in form of two vertical lines occur (cf. middle and right figure of Fig. 4(b). The slightly tilted vertical lines in the left plot are arrays of BPDs, forming several low angle grain boundaries that are distributed approximately in an equidistant manner. They occurred due to the high curvature of the growth interface and the shear stress acting on the grown crystal[24] and became visible only through this analysis. Due to the inhomogeneous etching process, the cross-hatched patterns in the TED and TSD defect maps do not correspond to a real variation in defect density. Furthermore, a varying TED density can be seen on the upper left side approximately every \(30^0\) originating from the center of the wafer.

Are there any dislocations missing?

In our study, we assumed that most of the etch pits are either BPDs, TEDs or TSDs. However, in reality, there can also be dislocations of mixed line characters. This type of etch-pit occurs because of the switching direction of dislocations, e.g., a TED can be deflected into a BPD, or vice versa, due to macro-step formation.[25] Similarly, TSDs can bent into BPDs and vice versa (“macro step flow”)[26] Furthermore, “shallow dislocations” were observed to form a round pit (with a curved bottom) without a core. Since dislocations cannot start or end inside the crystal, this structure can result from a dislocation half-loop[27] For those pits, all dislocations are somewhat tilted.[28]

Clearly, all of these cases are not explicitly considered in our analysis and are rather assigned to one of the three classes. Here, the clustering results might help to identify particular etch pit shapes by, e.g., taking a look at the extreme ends of a cluster, similar to what was done in Fig. 3. Another option to extend this investigation is to further cluster the content of a single cluster of images and thereby to obtain further subdivisions of that cluster. This might be a good starting point to detect new sub-groups of etch pit images that are similar in some aspects, which could be, e.g., the case for a mixed dislocation.

Conclusion

We have proposed a complex image analysis pipeline that consists of a number of classical image analysis techniques, supervised deep learning methods as well as unsupervised approaches. A particular emphasize was the robustness and accuracy of the framework. Generation of semi-synthetic training data was key for training an instance segmentation neural network without manually creating data annotation. This helped to rapidly analyze thousands of images and obtain accurate and spatially resolved information about the distribution of different dislocation types.

Our method can be applied to quickly identify areas of defect density inhomogeneities, for example, areas of high BPD density due to localized stress during crystal growth. The increasing diameter standard of SiC wafers of up to 200\({\hbox {mm}}\) directly implies that KOH etching images have to be analyzed automatically since such a high amount of data cannot be handled otherwise.

Ultimately, high-throughput analysis methods as the one in this work will contribute to understanding of defects in SiC which is the basis for optimizing the process parameters during growth.

Data availability

Postprocessed data is available at zenodo (https://doi.org/10.5281/zenodo.11229837), raw microscopy data is available upon request.

Code availability

Code is available upon request.

References

X.-D. Xiang, X. Sun, G. Briceno, Y. Lou, K.-A. Wang, H. Chang, W.G. Wallace-Freedman, S.-W. Chen, P.G. Schultz, A combinatorial approach to materials discovery. Science 268(5218), 1738–1740 (1995). https://doi.org/10.1126/science.268.5218.1738

I.E. Castelli, T. Olsen, S. Datta, D.D. Landis, S. Dahl, K.S. Thygesen, K.W. Jacobsen, Computational screening of perovskite metal oxides for optimal solar light capture. Energy Environ. Sci. 5(2), 5814–5819 (2012). https://doi.org/10.1039/C1EE02717D

F. Ren, L. Ward, T. Williams, K.J. Laws, C. Wolverton, J. Hattrick-Simpers, A. Mehta, Accelerated discovery of metallic glasses through iteration of machine learning and high-throughput experiments. Sci. Adv. 4(4), 1566 (2018). https://doi.org/10.1126/sciadv.aaq1566

P. Lyngby, K.S. Thygesen, Data-driven discovery of 2d materials by deep generative models. NPJ Comput. Mater. 8, 1–8 (2022). https://doi.org/10.1038/s41524-022-00923-3

S. Srinivasan, R. Batra, D. Luo, T. Loeffler, S. Manna, H. Chan, L. Yang, W. Yang, J. Wen, P. Darancet et al., Machine learning the metastable phase diagram of covalently bonded carbon. Nat. Commun. 13(1), 1–12 (2022). https://doi.org/10.1038/s41467-022-30820-8

Z. Rao, P.-Y. Tung, R. Xie, Y. Wei, H. Zhang, A. Ferrari, T.P.C. Klaver, F. Körmann, P.T. Sukumar, A.K. Silva, Y. Chen, Z. Li, D. Ponge, J. Neugebauer, O. Gutfleisch, S. Bauer, D. Raabe, Machine learning-enabled high-entropy alloy discovery. Science 378(6615), 78–85 (2022). https://doi.org/10.1126/science.abo4940

B.L. DeCost, H. Jain, A.D. Rollett, E.A. Holm, Computer vision and machine learning for autonomous characterization of am powder feedstocks. JOM 69(3), 456–465 (2017). https://doi.org/10.1007/s11837-016-2226-1

J.P. Horwath, D.N. Zakharov, R. Mégret, E.A. Stach, Understanding important features of deep learning models for segmentation of high-resolution transmission electron microscopy images. NPJ Comput. Mater. 6(6), 1–9 (2020). https://doi.org/10.1038/s41524-020-00363-x

A.R. Durmaz, M. Müller, B. Lei, A. Thomas, D. Britz, E.A. Holm, C. Eberl, F. Mücklich, P. Gumbsch, A deep learning approach for complex microstructure inference. Nat. Commun. 12(1), 1–15 (2021). https://doi.org/10.1038/s41467-021-26565-5

J. Tremblay, A. Prakash, D. Acuna, M. Brophy, V. Jampani, C. Anil, T. To, E. Cameracci, S. Boochoon, S. Birchfield, Training deep networks with synthetic data: Bridging the reality gap by domain randomization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 969–977 (2018). https://doi.org/10.48550/arXiv.1804.06516

P. Trampert, D. Rubinstein, F. Boughorbel, C. Schlinkmann, M. Luschkova, P. Slusallek, T. Dahmen, S. Sandfeld, Deep neural networks for analysis of microscopy images-synthetic data generation and adaptive sampling. Crystals 11(3), 258 (2021). https://doi.org/10.3390/cryst11030258

K. Govind, D. Oliveros, A. Dlouhy, M. Legros, S. Sandfeld, Deep learning of crystalline defects from tem images: a solution for the problem of “never enough training data. Mach. Learn. 5(1), 015006 (2024). https://doi.org/10.1088/2632-2153/ad1a4e

S.R. Sternberg, Biomedical image processing. Computer 16(01), 22–34 (1983). https://doi.org/10.1109/MC.1983.1654163

G.R. Vidhya, H. Ramesh, Effectiveness of contrast limited adaptive histogram equalization technique on multispectral satellite imagery. In: Proceedings of the International Conference on Video and Image Processing, pp. 234–239 (2017). https://doi.org/10.1145/3177404.3177409

B.D. Nguyen, M. Roder, A. Danilewsky, J. Steiner, P. Wellmann, S. Sandfeld, Automated analysis of x-ray topography of 4h-sic wafers: Image analysis, numerical computations, and artificial intelligence approaches for locating and characterizing screw dislocations. J. Mater. Res. 6, 1–12 (2023). https://doi.org/10.1557/s43578-022-00880-z

K. Simonyan, A. Zisserman, Very deep convolutional networks for large-scale image recognition. http://arxiv.org/abs/1409.1556 (2014)

O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein et al., Imagenet large scale visual recognition challenge. Int. J. Comput. Vis.D 115, 211–252 (2015)

L. McInnes, J. Healy, J. Melville, Umap: Uniform manifold approximation and projection for dimension reduction. http://arxiv.org/abs/1802.03426 (2018)

M. Belkin, P. Niyogi, Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 15(6), 1373–1396 (2003). https://doi.org/10.1162/089976603321780317

L. McInnes, J. Healy, Accelerated hierarchical density based clustering. In: Data Mining Workshops (ICDMW), 2017 IEEE International Conference On, pp. 33–42 (2017). https://arxiv.org/pdf/1705.07321.pdf . IEEE

A.A. Efros, T.K. Leung, Texture synthesis by non-parametric sampling. In: Proceedings of the Seventh IEEE International Conference on Computer Vision, vol. 2, pp. 1033–1038 (1999). https://doi.org/10.1109/ICCV.1999.790383 . IEEE

K. He, G. Gkioxari, P.Dollár, R. Girshick, Mask r-cnn. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2961–2969 (2017). https://arxiv.org/pdf/1703.06870.pdf

Y. Wu, A. Kirillov, F. Massa, W.-Y. Lo, R. Girshick, Detectron2. https://github.com/facebookresearch/detectron2 (2019)

P.J. Wellmann, J. Steiner, S. Strüber, M. Arzig, N. Uhlmann, The processing chain of the wide bandgap semiconductor sic–how small steps enabled a mature technology. Diam. Relat. Mater. 136, 109895 (2023). https://doi.org/10.1016/j.diamond.2023.109895

H. Li, Y. Peng, X. Yang, X. Xie, X. Chen, X. Hu, X. Xu, Investigation on dislocation and deflection morphology of pvt-grown on-axis 4h-sic crystals. J. Phys. D 55(45), 454002 (2022). https://doi.org/10.1088/1361-6463/ac8f57

T. Mitani, K. Eto, K. Momose, T. Kato, Massive reduction of threading screw dislocations in 4h-sic crystals grown by a hybrid. Appl. Phys. Express 14(8), 085506 (2021). https://doi.org/10.35848/1882-0786/ac15c1

Y. Ishikawa, Y. Yao, K. Sato, Y. Sugawara, K. Danno, H. Suzuki, T. Bessho, Y. Kawai, N. Shibata, Detection of shallow dislocations on 4h-sic substrate by etching method. Acta Phys. Polon. A 25, 120 (2011). https://doi.org/10.12693/APhysPolA.120.A-25

N. Tsubouchi, Y. Mokuno, S. Shikata, Characterizations of etch pits formed on single crystal diamond surface using oxygen/hydrogen plasma surface treatment. Diam. Relat. Mater. 63, 43–46 (2016). https://doi.org/10.1016/j.diamond.2015.08.012

K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016). http://openaccess.thecvf.com/content_cvpr_2016/html/He_Deep_Residual_Learning_CVPR_2016_paper.html

M. Tan, Q. Le, Efficientnet: Rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning, pp. 6105–6114 (2019). PMLR. http://proceedings.mlr.press/v97/tan19a.html

Funding

Open Access funding enabled and organized by Projekt DEAL. Financial support of the Deutsche Forschungsgemeinschaft (DFG) under the contract numbers DA357/7-1, WE2107/15 and SA2292-6 is greatly acknowledged.

Author information

Authors and Affiliations

Contributions

Binh Duong Nguyen: Conceptualization, Methodology, Software, Writing, Formal analysis. Johannes Steiner: Conceptualization, Writing, Resources, Formal analysis. Peter Wellmann: Conceptualization, Writing, Resources, Formal analysis, Funding Acquisition. Stefan Sandfeld: Conceptualization, Methodology, Software, Writing, Formal analysis, Supervision, Funding Acquisition.

Corresponding authors

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Classical image analysis: Modifying the distortion and the unequal contrast

In a first step we enhance the quality of the original images. By applying the rolling-ball[13] and CLAHE (Contrast limited adaptive histogram equalization technique),[14] the features (etch-pit) are becoming more clear and the distortion which causes an unbalance to the contrast of the image are removed. The results are shown in Fig. 5.

We can see that the unbalance of the contrast in the original image [see Fig. 5(a)] causes a non-optimal result when we take threshold this image. A large number of features of the etch pits in the lower right corner are lost [see Fig. 5(b)]. On the other hand, when we perform the modification, the shading of the image looks more equal across the image [see Fig. 5(c)], with the result of detailed features of dislocation etch pits in the whole image when applying the threshold [see Fig. 5(d)].

Appendix B: Differences between ground truth and prediction on various test datasets

See Fig 6.

Appendix C: Detailed images from the dimensional reduction and automated clustering

See Fig. 7 shows the magnification of Fig. 2g and Fig. 2h. Figure 8 summarizes the dislocation content for each of the 20 wafer subdivision, each of which again consists of a large number of individual images. Obviously, BPD’s are the predominant type of dislocation in almost all regions (exception: part 8, 12 and 13, see Fig. 1 for the location of these parts).

Visualization of the etch pit distribution after reducing the dimensionality to three latent variables (the top figure shows the first two variables) and after clustering (bottom). It can be seen that already the dimensionality reduction by UMAP significantly sorts the images, e.g., the most circular etch pits are located at the right. After automated clustering, the three different groups of images are clearly separated.

Appendix D: Unsupervised clustering results from various combination of deep learning techniques and clustering approaches

In the main text, we have demonstrated that the combination of VGG-16 and UMAP resulted in a direct neighbor-ship of similar etch-pits which was important for obtaining overall very satisfactory results. To evaluate the performance of this combination, we have compared it with 13 other combinations of various neural networks with UMAP. The others neural network consists of ResNet[29] (with 18, 34, 50, 101 and 152 layers) and EfficientNet[30] from B0 to B7. The evaluation is done on 3000 etch-pits that are selected belonging to BPD, TED and TSD. From the scatter plots and the cluster map plots shown in Figs. 9 and 10 we can see that UMAP groups etch-pits from different dislocation types very effectively if the features vector is obtained from the VGG-16 or the ResNet50, whereas parts or the whole cluster overlap for other method combinations. The component values are scaled to the range 0..1 for comparison purpose.

Additionally, a similar evaluation was also done using principal component analysis (PCA) (see Figs. 11 and 12) and T-distributed stochastic neighbor embedding (t-SNE) (see Figs. 13 and 14). In PCA, although most of the similar etch-pits stay close together, there is no clear separation between pits of different classes. T-SNE shows a clear separating boundary between clusters similar to UMAP in the some method combinations, such as with VGG-16 or ResNet. Therefore, UMAP or T-SNE are both suitable methods. However, with larger datasets, the analysis with UMAP is recommended because the clustering process requires significantly less computational time.[18]

Visualization values of the component 1 and component 2 from the combination of various neural networks with UMAP. The values along the vertical direction indicate component value. These values are grouped into three dislocation types: BPD, TED and TSD with corresponding colors: blue, orange and green in the left most column.

Visualization values of the component 1 and component 2 from the combination of various neural networks with PCA. The values along the vertical direction indicate component value. These values are grouped into three dislocation types: BPD, TED and TSD with corresponding colors: blue, orange and green in the left most column.

Visualization values of the component 1 and component 2 from the combination of various neural networks with T-SNE. The values along the vertical direction indicate component value. These values are grouped into three dislocation types: BPD, TED and TSD with corresponding colors: blue, orange and green in the left most column.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nguyen, B.D., Steiner, J., Wellmann, P. et al. Combining unsupervised and supervised learning in microscopy enables defect analysis of a full 4H-SiC wafer. MRS Communications 14, 612–627 (2024). https://doi.org/10.1557/s43579-024-00563-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1557/s43579-024-00563-2