Abstract

Machine learning (ML) enables the development of interatomic potentials with the accuracy of first principles methods while retaining the speed and parallel efficiency of empirical potentials. While ML potentials traditionally use atom-centered descriptors as inputs, different models such as linear regression and neural networks map descriptors to atomic energies and forces. This begs the question: what is the improvement in accuracy due to model complexity irrespective of descriptors? We curate three datasets to investigate this question in terms of ab initio energy and force errors: (1) solid and liquid silicon, (2) gallium nitride, and (3) the superionic conductor Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS). We further investigate how these errors affect simulated properties and verify if the improvement in fitting errors corresponds to measurable improvement in property prediction. By assessing different models, we observe correlations between fitting quantity (e.g. atomic force) error and simulated property error with respect to ab initio values.

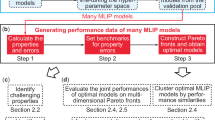

Graphical abstract


Introduction

Numerous macroscopic physical and chemical properties are determined solely by the underlying interaction and motion of atoms. Molecular dynamics (MD) simulations are essential for studying this motion, and have enabled computational prediction and discovery in materials science [1], energy storage [2, 3], catalysis [4], and molecular biology [5]. Ultimately, the accuracy of an MD simulation comes down to how the atomic forces are generated. High fidelity atomic forces for integrating Newton’s equations of motion can be obtained from quantum mechanical calculations such as density functional theory (DFT), but the unfavorable computational scaling of ab initio methods prevents simulations at the length and time scales relevant to many applications. To overcome this limitation, much progress has been made in the past decade by machine learning the quantum mechanical potential energy surface (PES) [6]. These ML surrogates of the PES scale linearly with the number of atoms, like traditional empirical potentials, and have been shown to be more accurate in a number of scenarios [7]. To achieve physically realistic and energy conserving simulations, ML potentials traditionally use an invariant atom-centered basis expansion that transforms atomic environments into inputs suitable for ML models [8]. The mapping of these inputs to atomic energies and forces can take a variety of mathematical forms. Common ML models span a range of functional complexity, including linear regression, neural networks (NNs), and Gaussian processes. Today a wide variety of descriptors (features, inputs, etc.) exist for atomistic machine learning, such as bispectrum components in the spectral neighbor analysis potential (SNAP) [9], complete bases such as atomic cluster expansion (ACE) descriptors [10], and traditional radial and angular bases such as Behler-Parrinello atom-centered symmetry functions [11]. Despite this wide development of descriptors for quantifying atomic environments, the effect of different ML models utilizing these descriptors as inputs remains relatively unexplored.

In this manuscript we quantify the gain in accuracy that can be expected when increasing model complexity, irrespective of the choice of descriptors. Here model complexity is defined by both nonlinearity and the number of fitting coefficients. For example, a linear regression model with many fitting coefficients is more complex than a linear regression model with fewer fitting coefficients. Likewise, a neural network model may be seen as more complex than a linear regression model with a similar number of fitting coefficients. Treating the models and descriptors of ML potentials separately gives insight into where computational expense can be optimized in favor of accuracy. We also consider three different descriptor sets that are available within LAMMPS: bispectrum components [9], atomic cluster expansion [10], and proper orthogonal descriptors [12].

To compare the performance of different models irrespective of descriptor choice, we fit linear (which includes quadratic kernel tricks) and nonlinear (e.g. neural network) ML models with identical descriptors as input. The gain in accuracy from using nonlinear models is quantified not only through validation errors in fitting quantities (energies and forces), but also through the effect of those errors on simulated properties. Here we focus on the distinction between fitting quantities, which include validation/test errors in atomistic quantities such as energies and forces, and simulated properties, which are calculated using atomistic quantities like forces as inputs. This distinction between categories of prediction accuracy is greatly needed in the field, as the volume of published models has been rapidly increasing and their translation to a larger MD user base warrants this level of reporting. To this end, we curate three datasets for comparing ML potential accuracy and its effect on selected property predictions. Additionally, exposing the accuracy limits that are determined by model form and/or descriptor basis uncovers physically interpretable insight into the nature of the chemical bonds contained in the ground state PES to which these models are fit.

First we benchmark the accuracy of linear, quadratic, and NN SNAP potentials on a silicon (Si) dataset containing solid and liquid phase ab initio molecular dynamics (AIMD) configurations, and quantify the benefit a non-linear mapping from a single set of descriptors offers. Next, we perform the same model comparisons with a more chemically complex gallium nitride (GaN) dataset containing AIMD trajectories from 300 to 2300 K; here we observe if the accuracy advantage offered by NNs translates to improvements in phonon properties when using multiple descriptor sets (SNAP, ACE). Finally, we curate a dataset for superionic diffusion in the solid electrolyte Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS), to determine if complex model forms and descriptor sets offer an advantage over simple models for the macroscopically observable property of Li ion diffusion. We also benchmark our linear and NN models on another superionic conductor lithium phosphorus sulfide (LiPS) studied by graph neural networks (GNN) in literature [13].

While many studies have shown model comparisons in ab initio energy/force errors, it remains relatively unexplored how these errors correlate with the desired quantity of interest that the potential will be used for. Decoupling the effects of model form complexity from descriptor set is key in this understanding. Fundamentally, we are attempting to resolve the accuracy gap between ab initio and classical MD predictions, but for material properties obtained from a thermodynamic sampling of states. To this end, we seek to elucidate the required accuracy in ab initio energies/forces for accurately simulating material properties. Since the definition of accurate property prediction depends on individual research needs, it is important to show a range of model fitting errors and their associated simulated property errors. Such knowledge will enable researchers to choose models that are accurate enough for their purposes, since more complicated but accurate models may introduce additional unnecessary cost to simulate and/or train.

It is worth noting that these trade-offs are only exposed in ML interatomic potentials, whereas traditional empirical potentials (Tersoff, EAM, COMB, ReaxFF) have a fixed accuracy with respect to computational cost. The decision to accept the increased cost of complex NN models in exchange for accuracy, rather than use simpler and more performant linear models, is left to the user. Linear models also come with a range of other advantages, such as fast training times and convenient measures of Bayesian uncertainties [14], so understanding the sacrifice in accuracy will help researchers weigh these pros and cons. To help researchers use our results to choose and develop their own models, we utilize our FitSNAP software [15] as a ML interface to LAMMPS, with capabilities to fit models of varying complexity while keeping descriptor settings constant. FitSNAP allows training of various model/descriptor combinations, e.g. SNAP or ACE descriptors with either linear or neural network models. Our distinction between the ML potential descriptors and the model is illustrated in Fig. 1.

Illustration of our distinction between ML potential descriptors and models. Interatomic distances \({\varvec{R}}_{ij}\) between a central atom i and neighbors j are transformed into a set of K descriptors denoted by a vector \({\varvec{B}}_{i}\). In our work these features are calculated in LAMMPS and used as inputs for model optimization in FitSNAP, allowing seamless deployment to LAMMPS for MD simulations. Note that the treatment of atom types is not illustrated here; different model coefficients are prescribed for each element. Descriptors currently supported in LAMMPS include SNAP and ACE, which we can use in different models with FitSNAP. FitSNAP also provides a custom descriptor calculator that simply takes in a LAMMPS neighbor list, which is useful for prototyping different descriptors or using graph neural networks.

Note that graph neural networks of atomic interactions will replace the mapping of \(\{{\varvec{R}}_{i1}, \ldots , {\varvec{R}}_{iN} \}\rightarrow {\varvec{B}}_{i}\) with additional interaction layers to construct an analogous descriptor.

The modular separation between descriptors and models in Fig. 1 allows us to take advantage of performant descriptor and descriptor gradient calculations in LAMMPS, which are then input to automatic differentiation frameworks such as PyTorch [16] and JAX [17] for optimization. Combining the LAMMPS implementation of descriptors with ML frameworks such as PyTorch allows performant NN force training via a modified iterated backpropagation algorithm, explained in more detail in the Methods section. This modular separation also allows researchers to explore which descriptor/model combination best suits their needs. While this separation of descriptor and model offers distinct advantages, it is important to note that it is entirely abstract and conceptual. Indeed, NNs may be viewed as linear models when considering the final layer of the network, which can be understood as a transformed set of descriptors that combine linearly at the output. In this view, the question becomes how much a NN improves the original set of descriptors. Nonetheless we seek to answer how much fitting accuracy is improved by feeding descriptors into different models, and to quantify the resulting improvement in simulated property prediction. We begin by considering analytical differences between some ML potential models.

ML potential model forms

In general, atom-centered ML potentials seek a relation between the energy of an atom and its environment. The total energy is then a sum over all atomic energies, written as

\[ E = \sum _{i} E_i({\varvec{B}}_i) \tag{1} \]

where \({\varvec{B}}_i\) is the feature vector for atom i, otherwise known as the descriptors which quantify the atomic environment. Linear ML potentials are the simplest models, assuming a linear relationship between descriptors and the atomic energy, so that

\[ E_i({\varvec{B}}_i) = {\varvec{\beta }} \cdot {\varvec{B}}_i = \sum _{k=1}^{K} \beta _{k} B_{ik} \tag{2} \]

where \({\varvec{\beta }}\) is a vector of \(\beta _{k}\) model coefficients corresponding to descriptor k out of K descriptors. For multi-element systems, we may use a different set of coefficients for each element type as in weighted density descriptors [9], or a unique set of coefficients for combinations of elements as in ACE [10] or chemSNAP [18]. Nonlinear terms, and hence more complexity, may be introduced in linear models via kernel tricks; one popular example involves quadratic ML potentials such as quadratic SNAP [19]. Here, one may think of the energy in Eq. 2 as a first order Taylor expansion in descriptor space. A second order expansion may be written as

\[ E_i({\varvec{B}}_i) = {\varvec{\beta }} \cdot {\varvec{B}}_i + \frac{1}{2} {\varvec{B}}_i^{T} {\varvec{\alpha }} {\varvec{B}}_i \tag{3} \]

where \({\varvec{\alpha }}\) is a symmetric \(K \times K\) matrix of model coefficients. The quadratic potential of Eq. 3 is readily optimized in the same linear regression manner as Eq. 2, at the cost of significantly more fitting coefficients. This increased number of coefficients, however, offers more flexibility and improved fitting errors [19, 20]. Even with their improvements in accuracy, quadratic models can retain computational costs close to linear models since we may use the same descriptors for the first and second order terms as seen in Eq. 3. Of course quadratic models are more expensive to train due to their greatly increased number of coefficients, but this training cost can still be lower than that of more complicated nonlinear models such as neural networks. Atom-centered neural network potentials approximate atomic energies with multilayer perceptrons that are functions of the descriptors, written for a network of \(L\) layers in terms of matrix products of layer weights as

\[ E_i({\varvec{B}}_i) = {\varvec{W}}_L \, \sigma \left( {\varvec{W}}_{L-1} \cdots \sigma \left( {\varvec{W}}_1 {\varvec{B}}_i \right) \right) \tag{4} \]

where \({\varvec{W}}_l\) denotes the weights for layer l, and \(\sigma\) is the activation function used at each layer. The last node of the multilayer perceptron predicts a scalar energy \(E_i\), and is therefore not transformed with the activation function \(\sigma\). One may expect that NN models such as Eq. 4 offer more complexity/flexibility than the linear and quadratic models in Eqs. 2 and 3 since there is no limit on the degree of nonlinearity in NN models, and because of the universal approximation theorems [21, 22]. Whether or not the improved accuracy offered by this extra flexibility matters in representing the true PES or for modelling material properties, however, is an unexplored question that we investigate in this study.
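
To make the three model forms of Eqs. 2 to 4 concrete, the following NumPy sketch evaluates a linear, a quadratic, and a small NN atomic energy from the same descriptor vector and counts the fitting coefficients of each. It is an illustration only: the function names, the random coefficients, the factor of 1/2 on the quadratic term, the tanh activation, and the absence of bias terms are assumptions, not the FitSNAP implementation.

```python
import numpy as np

def linear_energy(B, beta):
    """Linear model: E_i = beta . B_i."""
    return float(beta @ B)

def quadratic_energy(B, beta, alpha):
    """Quadratic model: linear term plus 0.5 * B_i^T alpha B_i (alpha symmetric)."""
    return float(beta @ B + 0.5 * B @ alpha @ B)

def nn_energy(B, weights, sigma=np.tanh):
    """Multilayer perceptron: activation on hidden layers, none on the output."""
    h = B
    for W in weights[:-1]:
        h = sigma(W @ h)
    return float((weights[-1] @ h)[0])

def n_coefficients(K, hidden=(32, 32)):
    """Count fitting coefficients for K descriptors (NN count ignores biases)."""
    sizes = (K, *hidden, 1)
    return {
        "linear": K,
        # beta plus the unique entries of the symmetric alpha matrix
        "quadratic": K + K * (K + 1) // 2,
        "nn": sum(a * b for a, b in zip(sizes[:-1], sizes[1:])),
    }

rng = np.random.default_rng(0)
K = 31  # number of descriptors quoted in the text for j_max = 3
B = rng.normal(size=K)          # placeholder descriptor vector
beta = rng.normal(size=K)       # placeholder linear coefficients
A = rng.normal(size=(K, K))
alpha = 0.5 * (A + A.T)         # symmetrized placeholder quadratic coefficients
weights = [
    rng.normal(size=(32, K)),
    rng.normal(size=(32, 32)),
    rng.normal(size=(1, 32)),
]

print(linear_energy(B, beta), quadratic_energy(B, beta, alpha), nn_energy(B, weights))
print(n_coefficients(K))
```

Under these assumptions, a quadratic model on K = 31 descriptors carries 527 coefficients against 31 for the linear model, while all three models consume the same descriptor vector and therefore share the descriptor cost.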

We may better appreciate how these model types are related and how they offer different degrees of flexibility for modelling the PES by considering a general view of interatomic forces for atom-centered ML potentials. The force on atom i arises from the negative gradient of the total potential energy in Eq. 1 with respect to the position \({\varvec{R}}_i\) of atom i. For atom-centered ML potentials, these forces are generally written as

\[ {\varvec{F}}_i = -\nabla _{i} E = -\sum _{j} \sum _{k=1}^{K} \beta _{jk} \frac{\partial B_{jk}}{\partial {\varvec{R}}_i} \tag{5} \]

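where \(\beta _{jk}\) represents the derivative \(\partial E_j / \partial B_{jk}\) for each neighboring atom j. All the aforementioned models possess different \(\beta _{jk}\) that offer varying degrees of flexibility when modelling interatomic forces, shown mathematically below.

\[ \beta _{jk} = \frac{\partial E_j}{\partial B_{jk}} = {\left\{ \begin{array}{ll} \beta _{k} &{} \text {linear} \\ \beta _{k} + \sum _{l=1}^{K} \alpha _{kl} B_{jl} &{} \text {quadratic} \\ \partial E_j^{\text {NN}} / \partial B_{jk} &{} \text {NN} \end{array}\right. } \tag{6} \]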

Eq. 6 shows that linear ML potentials assume neighboring force contributions \(F_{ij}\) are proportional to the descriptor derivatives through the constant coefficient \(\beta _k\). Meanwhile, \(\beta _{jk}\) for quadratic models is a linear combination of all other descriptors. Finally, NNs provide the highest possible order of nonlinearity, since the derivative of a multilayer perceptron with respect to its inputs is yet another multilayer perceptron. In principle Eq. 6 shows that NNs offer the most flexibility in modelling interatomic forces, by not assuming any functional form of the descriptors. It remains to be seen, however, how much this extra complexity affects force accuracy, and whether this improvement in force accuracy improves property prediction. To begin investigating this question we first apply these models to silicon, one of the simplest systems benchmarked by ML potentials.
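
The model-dependent derivative \(\beta _{jk}\) and the force assembly described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: the NN is reduced to a single tanh hidden layer without biases, the quadratic term carries a factor of 1/2 with a symmetric coefficient matrix, and the array `dB_dRi` of descriptor derivatives is filled with random placeholder values rather than LAMMPS output. A finite-difference helper is included to check the analytic NN gradient.

```python
import numpy as np

def beta_linear(B, beta):
    """Linear model: dE_j/dB_jk is the constant coefficient beta_k."""
    return beta.copy()

def beta_quadratic(B, beta, alpha):
    """Quadratic model: beta_k plus a linear combination of the descriptors."""
    return beta + alpha @ B

def nn_energy(B, W1, w2):
    """One-hidden-layer network E = w2 . tanh(W1 B) (assumed architecture)."""
    return float(w2 @ np.tanh(W1 @ B))

def beta_nn(B, W1, w2):
    """Analytic input gradient of nn_energy with respect to B."""
    h = np.tanh(W1 @ B)
    return W1.T @ (w2 * (1.0 - h**2))

def finite_difference_gradient(f, B, eps=1e-6):
    """Central-difference gradient, used only to check the analytic result."""
    grad = np.zeros(B.size)
    for k in range(B.size):
        step = np.zeros(B.size)
        step[k] = eps
        grad[k] = (f(B + step) - f(B - step)) / (2 * eps)
    return grad

def force_on_atom(betas, dB_dRi):
    """F_i = -sum_j sum_k beta_jk * dB_jk/dR_i.

    betas:  (n_atoms, K) derivatives dE_j/dB_jk for atom i and its neighbors j
    dB_dRi: (n_atoms, K, 3) descriptor derivatives with respect to R_i
    """
    return -np.einsum("jk,jka->a", betas, dB_dRi)

rng = np.random.default_rng(1)
n_atoms, K, H = 4, 5, 8
B_all = rng.normal(size=(n_atoms, K))       # placeholder descriptors
dB_dRi = rng.normal(size=(n_atoms, K, 3))   # placeholder descriptor derivatives
beta = rng.normal(size=K)
A = rng.normal(size=(K, K))
alpha = 0.5 * (A + A.T)
W1, w2 = rng.normal(size=(H, K)), rng.normal(size=H)

F_lin = force_on_atom(np.array([beta_linear(B, beta) for B in B_all]), dB_dRi)
F_quad = force_on_atom(np.array([beta_quadratic(B, beta, alpha) for B in B_all]), dB_dRi)
F_nn = force_on_atom(np.array([beta_nn(B, W1, w2) for B in B_all]), dB_dRi)
print(F_lin, F_quad, F_nn)
```

The descriptor derivatives are shared by all three models; only the per-atom factor \(\beta _{jk}\) changes, which is why the extra cost of a more complex model during MD is small relative to the descriptor calculation.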

Solid and liquid silicon

In the literature, a Si dataset was used to demonstrate state-of-the-art accuracy with the recently developed ACE descriptors [23], and another dataset was developed to demonstrate the ability of GAP to model a wide variety of phase spaces and properties [24]. As a result, silicon has become a common benchmark system for simple comparisons. Here, we curate a simple dataset for the purpose of comparing different models on phonon properties and liquid structure. Our dataset includes AIMD configurations from 300 K to the melting point, along with 2000 K liquid AIMD simulations, with more details in the SI. Before simulating properties with our potentials, however, we benchmark their accuracy on the energies and forces within the dataset. This will show to what extent more accurate fits give better agreement with ab initio properties. In this regard, we seek a fair method of comparing different model accuracies on fitting quantities irrespective of training hyperparameters, which requires a closer look at the loss function.

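Hyperparameters common to all ML potential models and optimization methods reside in the loss function. When training to energies and forces we use the same L2 norm loss function for all models, of the form

\[ \mathcal {L} = \frac{1}{M} \sum _{m=1}^{M} \frac{1}{N_m} \left\{ w_m^E \left[ \hat{E}_m - E_m \right] ^2 + \frac{w_m^F}{3} \sum _{i=1}^{N_m} \sum _{a=1}^{3} \left[ \hat{F}_{a,i,m} - F_{a,i,m} \right] ^2 \right\} \tag{7} \]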

where M is the number of configurations, \(N_m\) is the number of atoms in configuration m, \(\hat{E}_m\) is the model energy of a configuration, and \(E_m\) is the target ab initio energy. The right-most term is an L2 norm in force components, where \(\hat{F}_{a,i,m}\) is the model force component on atom i in configuration m along Cartesian direction a, and \(F_{a,i,m}\) is the corresponding target ab initio force component. For linear and NN models we minimize this loss via singular value decomposition (SVD) and gradient descent, respectively, with more details in the SI. Regularization penalty functions were not used, but are available within the FitSNAP framework. Quadratic models are also readily solved via SVD since they remain linear in their coefficients, as seen in Eq. 3. Linear models can also be trained with gradient descent, but SVD offers fast training times that have been utilized to create successful potentials in the past [9, 18, 20, 25, 26, 27], so we wish to retain this advantage in a model comparison. The common hyperparameters between linear and nonlinear models are therefore the loss function energy weights \(w_m^E\) and force weights \(w_m^F\).
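
For a model that is linear in its coefficients, minimizing a weighted sum of squared energy and force residuals reduces to a weighted least-squares problem, which can be sketched with NumPy's SVD-based `np.linalg.lstsq`. The sketch below is not the FitSNAP solver: the design matrices are random placeholders (in practice energy rows would be built from descriptors and force rows from descriptor derivatives), a single weight is used for all configurations, and any per-configuration or per-atom normalization is omitted.

```python
import numpy as np

def fit_linear(A_E, y_E, A_F, y_F, w_E=1.0, w_F=1.0):
    """Minimize w_E*|A_E c - y_E|^2 + w_F*|A_F c - y_F|^2 over coefficients c.

    Scaling each block of rows by the square root of its weight turns the
    weighted problem into an ordinary least-squares problem.
    """
    A = np.vstack([np.sqrt(w_E) * A_E, np.sqrt(w_F) * A_F])
    y = np.concatenate([np.sqrt(w_E) * y_E, np.sqrt(w_F) * y_F])
    coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)  # SVD-based solver
    return coeffs

def weighted_loss(coeffs, A_E, y_E, A_F, y_F, w_E=1.0, w_F=1.0):
    """Weighted sum of squared energy and force residuals (unnormalized)."""
    e_res = A_E @ coeffs - y_E
    f_res = A_F @ coeffs - y_F
    return float(w_E * e_res @ e_res + w_F * f_res @ f_res)

# Synthetic, noiseless data generated from known coefficients (placeholders).
rng = np.random.default_rng(2)
M, K, n_force_rows = 20, 6, 600
coeffs_true = rng.normal(size=K)
A_E = rng.normal(size=(M, K))             # one energy row per configuration
A_F = rng.normal(size=(n_force_rows, K))  # one row per force component
y_E = A_E @ coeffs_true
y_F = A_F @ coeffs_true

coeffs = fit_linear(A_E, y_E, A_F, y_F, w_E=1.0, w_F=10.0)
print(np.max(np.abs(coeffs - coeffs_true)))
print(weighted_loss(coeffs, A_E, y_E, A_F, y_F, w_E=1.0, w_F=10.0))
```

Because the solve is a single linear algebra call, repeating it for many weight choices is cheap, which is the training-time advantage the text attributes to SVD fits.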

For linear models, effort is often dedicated to optimizing these energy/force weight hyperparameters by looping over SVD fits combined with multi-objective optimization on the energy/force weights [9, 18, 20, 25, 26, 28]. Gradient descent training for NNs is more costly, however, so we do not individually optimize the weight hyperparameters here. A rigorous study on the effect of other hyperparameters such as descriptor cutoffs is beyond the scope of this study, but such a study would be valuable using tools recently developed by the community [29]. Instead, we perform fits with a variety of force/energy weight ratios \(w^F/w^E\), where the same ratio is applied to all configurations in the training set. These \(w^F/w^E\) ratios are chosen in a range of \(10^{-8}\) to \(10^{4}\), with more details on the exact values in the SI. By comparing the best energy and force errors on a validation set using a variety of \(w^F/w^E\) ratios, we achieve a fair comparison of model accuracy irrespective of loss function hyperparameters. For an initial comparison we fit linear, quadratic, and NN models on our silicon data set using only SNAP descriptors, to observe the effect of model complexity alone. Descriptor complexity is also observed by using SNAP descriptor settings \(j_{max} = 3\) (31 descriptors) and \(j_{max} = 4\) (56 descriptors). We refer the reader to literature for understanding how the \(j_{max}\) parameter influences the number of SNAP descriptors [9]. For all \(w^F/w^E\) ratios, we report the average validation error on five random 10% validation sets, along with a standard deviation thereof. Scanning a variety of \(w^F/w^E\) ratios we find that all models saturate at different energy and force accuracy, as seen in Fig. 2(a), with notable trade offs between these two fitted properties.
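
The scan over \(w^F/w^E\) ratios with repeated random validation splits can be sketched as below for a linear model. Everything here is hypothetical scaffolding: the data are synthetic and noisy, the function name `scan_weight_ratios` is ours, the energy weight is fixed to 1 so that the force weight equals the ratio, and RMSE is chosen as the error metric by assumption. Synthetic data of this kind will not reproduce the trade-off of Fig. 2(a); the sketch only shows the bookkeeping of averaging validation errors over five random 10% splits.

```python
import numpy as np

def rmse(pred, ref):
    """Root-mean-square error between predictions and references."""
    return float(np.sqrt(np.mean((pred - ref) ** 2)))

def scan_weight_ratios(A_E, y_E, A_F, y_F, ratios, n_splits=5, val_frac=0.1, seed=0):
    """Mean and standard deviation of validation errors for each w_F/w_E ratio.

    A_E: (M, K) energy rows, y_E: (M,)
    A_F: (M, R, K) force rows grouped by configuration, y_F: (M, R)
    Returns {ratio: (E mean, E std, F mean, F std)}.
    """
    rng = np.random.default_rng(seed)
    M, R, K = A_F.shape
    n_val = max(1, int(round(val_frac * M)))
    results = {}
    for ratio in ratios:
        e_err, f_err = [], []
        for _ in range(n_splits):
            perm = rng.permutation(M)
            val, train = perm[:n_val], perm[n_val:]
            s = np.sqrt(ratio)  # w_E is fixed to 1, so w_F equals the ratio
            A = np.vstack([A_E[train], s * A_F[train].reshape(-1, K)])
            y = np.concatenate([y_E[train], s * y_F[train].reshape(-1)])
            coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)
            e_err.append(rmse(A_E[val] @ coeffs, y_E[val]))
            f_err.append(rmse(A_F[val].reshape(-1, K) @ coeffs, y_F[val].reshape(-1)))
        results[ratio] = (np.mean(e_err), np.std(e_err), np.mean(f_err), np.std(f_err))
    return results

# Synthetic placeholder data: a linear target with added noise.
rng = np.random.default_rng(3)
M, R, K = 50, 12, 4
coeffs_true = rng.normal(size=K)
A_E = rng.normal(size=(M, K))
A_F = rng.normal(size=(M, R, K))
y_E = A_E @ coeffs_true + 0.05 * rng.normal(size=M)
y_F = A_F @ coeffs_true + 0.05 * rng.normal(size=(M, R))

ratios = [1e-8, 1e-4, 1.0, 1e4]
results = scan_weight_ratios(A_E, y_E, A_F, y_F, ratios)
for ratio, (e_mean, e_std, f_mean, f_std) in results.items():
    print(f"{ratio:8.0e}  E: {e_mean:.4f} +/- {e_std:.4f}  F: {f_mean:.4f} +/- {f_std:.4f}")
```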

(a) Force/energy tradeoff for linear, quadratic, and NN models with SNAP descriptors trained on our silicon data set. Different SNAP descriptor settings are \(j_{max} = 3\) (31 descriptors) and \(j_{max} = 4\) (56 descriptors). Each point represents a different force/energy weight ratio. The reported errors are an average of 5 random validation set errors with horizontal and vertical lines showing standard deviation in energy and force, respectively. NN1 refers to a 32 \(\times\) 32 hidden node architecture, NN2 is 250 \(\times\) 250, and NN3 is 500 \(\times\) 2000. (b) Radial distribution function mean absolute error over the radii shown in the inset, between potentials and AIMD. (c) Phonon frequency mean percent error across the Brillouin zone shown in the inset. (d) Thermal conductivity mean percent error across the range of temperatures from 100 to 1000 K, as shown in the inset. Note that more complex models (quadratic and NN) possess the lowest force errors and accurately capture all simulated properties.

It is important to note the force error saturation experienced by all models as shown in Fig. 2(a). We propose that this saturation level be used to fairly compare how well different models can match the shape of the PES or its derivatives. Furthermore, properties that rely on sampling an MD trajectory at equilibrium are hypothesized to be highly sensitive to the force accuracy, which determines atomic motions in a given equilibrium state. Another immediately observable and important trend is the improvement in force accuracy due to increasing model complexity, irrespective of descriptor choice. For example, linear models with SNAP descriptors at \(j_{max} = 3\) saturate near 150 meV/Å, as seen with the blue triangles in Fig. 2(a). Simply feeding these same descriptors into quadratic or small NN models [\(32 \times 32\) hidden node architecture denoted by NN1 in Fig. 2(a)] decreases the force saturation level to 100 meV/Å, as seen with the red and grey triangles. A similar improvement is seen with more detailed SNAP descriptors using \(j_{max} = 4\), where the linear models (blue circles) saturate at 125 meV/Å while quadratic and small NN models (red and grey circles, respectively) saturate at 80 meV/Å. Further complicating the model with larger NNs, however, does not offer much improvement in forces. This is shown with the black diamond and square in Fig. 2(a), representing NNs with \(250 \times 250\) and \(500 \times 2000\) hidden node architectures, respectively. Using these much larger models only yielded a 5 meV/Å improvement over the smaller NNs. This agrees with previous experiments in literature, which found that deeper/wider NNs have little effect on energy accuracy [30]. Nonetheless we observe a clear improvement in force accuracy due to quadratic and NN models over linear models, using the same descriptors. This begs the question: does this improvement in forces translate into accurate dynamics and material properties that require sampling a trajectory?

Although it has been noted in literature that force errors in general are not enough to say a potential is reliable for stable MD or property prediction [31], we find for our models here that force error correlates well with liquid structure and phonon property error. The easiest dynamical quantity to reproduce was the 2000 K liquid RDF in Fig. 2(b), where we achieved low RDF MAE (< 0.01 RDF units) with models possessing up to \(\sim\) 120 meV/Å force error. More liquid structure data such as angular distribution functions for specific \(w^F/w^E\) ratios are shown in the SI. Solid state phonon frequencies, on the other hand, exhibited a pronounced correlation with force error as shown in Fig. 2(c). This is not surprising because vibrational frequencies depend on second order derivatives of the PES, so models that best match forces (first derivatives) should better capture how forces change with atomic displacement. Indeed, the only potentials capable of reaching 1% frequency error are those with the lowest force errors: quadratic and NN SNAP with \(j_{max} = 4\). Likewise for thermal conductivity error in Fig. 2(d), the same quadratic and NN SNAP \(j_{max} = 4\) potentials reproduced thermal conductivity to within 10%. These potentials achieved the lowest force errors of 80 meV/Å and were the only potentials capable of simultaneously reproducing all AIMD quantities (liquid structure, phonon frequencies, and thermal conductivity) to within reasonable agreement (0.01 RDF MAE, 1% frequency error, and less than 10% thermal conductivity error). This shows the practical advantage of using atom-centered descriptors such as SNAP with more complex models, and that the roughly 50 meV/Å improvement in forces can yield a noticeable improvement in solid/liquid quantities of interest. It is important to note that this does not come with a significant increase in computational cost when simulating MD, as most of the cost is associated with the descriptor calculation. More details on computational cost are reported in the SI, although software factors such as the PyTorch interfaces that allow NN potentials in LAMMPS are constantly changing. Aside from the slightly increased computational cost of MD, researchers should also acknowledge the increased cost of training NN models.
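
The property errors quoted above (RDF mean absolute error and mean percent error in frequency or thermal conductivity) can be computed with two short helpers. The definitions below are our reading of those metric names and are assumptions, as are the function names and the numerical values, which are invented purely to exercise the code and are not data from this study.

```python
import numpy as np

def rdf_mae(g_model, g_ref):
    """Mean absolute error between two RDFs sampled on the same radial grid."""
    g_model, g_ref = np.asarray(g_model, float), np.asarray(g_ref, float)
    return float(np.mean(np.abs(g_model - g_ref)))

def mean_percent_error(pred, ref):
    """Mean of |pred - ref| / |ref| in percent; ref must be nonzero."""
    pred, ref = np.asarray(pred, float), np.asarray(ref, float)
    return float(100.0 * np.mean(np.abs(pred - ref) / np.abs(ref)))

# Invented placeholder values, not results from the paper.
g_ref = np.array([0.0, 1.0, 2.0, 1.0])
g_model = np.array([0.01, 0.99, 2.02, 1.0])
freq_ref = np.array([10.0, 12.0, 15.0])      # e.g. phonon frequencies
freq_model = np.array([10.1, 11.88, 15.15])

print(rdf_mae(g_model, g_ref))
print(mean_percent_error(freq_model, freq_ref))
```

Note that a percent error of this form is undefined where the reference vanishes, for example acoustic phonon frequencies at the zone center, so such points would need to be excluded or handled separately.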

The SVD procedure for training linear SNAP on a data set of 2000 configurations like we use here completes on the order of minutes. Meanwhile, the 1000 epochs of gradient descent training used for our NNs can take 12 h on a single CPU core with our silicon data set. Linear solvers therefore possess a notable advantage of fast training times, allowing one to optimize other hyperparameters like those associated with SNAP descriptors to match more important quantities, such as stability metrics, defect/surface energies, and others [28]. Noting these advantages in training cost, one may be attracted to the prospect of using quadratic models, especially since they saturate near the same force errors as NNs. Both quadratic and small NN models with SNAP descriptor settings \(j_{max} = 3\) and \(j_{max} = 4\) converge at similar force errors near 100 meV/Å and 80 meV/Å, respectively. This might suggest that quadratic and NN models arrive at the same approximation of the ab initio PES, which is important to know because researchers should not waste time fitting both models if they are indeed the same solution.

To test whether quadratic and NN models arrive at the same solution, we compare the derivatives of the PES with respect to model descriptors. These first derivatives are the \(\beta _{jk}\) given in Eq. 6, and plotted as a function of their respective bispectrum components in Fig. 3.

Comparisons of model derivatives with respect to descriptors for our silicon data set, showing that quadratic and small NNs arrive at similar solutions of the PES up to first order in descriptors. (a) First derivatives of energy with respect to the first bispectrum component, denoted by \(\beta _{j1}\), as a function of the first bispectrum component \(B_{j1}\) for each atom j. These first derivatives are constants for a linear model, but unique for each atom in the case of quadratic and NN. For this first bispectrum component, there is significant overlap between quadratic and NN. (b) First derivative of energy with respect to the second bispectrum component, showing less overlap. (c) Parity plot showing a correlation between quadratic and NN \(<\beta _{jk}>\) averaged over all atoms j in the data set. (d) Parity plot for second derivatives \(<\alpha _{j, kl}>\) averaged over all atoms, which is constant for quadratic models.

By comparing model first derivatives with respect to descriptors, we are comparing the shape of the PES up to first order in the descriptors. Some bispectrum components share similar first derivatives between quadratic and NN SNAP models; e.g. Fig. 3(a) shows that the clouds of first derivatives with respect to the first bispectrum component significantly overlap for all data points when evaluated by quadratic and NN models trained on the silicon set. The derivative with respect to the second bispectrum component in Fig. 3(b), however, shows less overlap. Overall, we find a correlation in the average value of first derivatives with respect to descriptors, as seen in the parity plot for quadratic and NN \(\beta _{jk}\) in Fig. 3(c). Quadratic and NN models therefore arrive at similar approximations of the PES up to first order in the descriptors. Up to second order, however, the quadratic and NN models are different, as shown in Fig. 3(d) by the parity plot of average second derivatives \(\alpha _{j,kl} = \partial ^2 E_j / \partial B_{jk} \partial B_{jl}\).
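
A comparison of this kind can be sketched by evaluating first and second derivatives of each model with respect to the descriptors, averaging over atoms, and correlating the averages between models. The sketch below uses central finite differences for both derivatives so that it applies to any callable model; the quadratic and NN models are random and untrained, so the printed correlations carry no physical meaning. Function names, the one-hidden-layer tanh network, and the factor of 1/2 in the quadratic model are assumptions for illustration.

```python
import numpy as np

def fd_gradient(f, B, eps=1e-5):
    """Central-difference first derivatives dE/dB_k."""
    grad = np.zeros(B.size)
    for k in range(B.size):
        ek = np.zeros(B.size)
        ek[k] = eps
        grad[k] = (f(B + ek) - f(B - ek)) / (2 * eps)
    return grad

def fd_hessian(f, B, eps=1e-3):
    """Central-difference second derivatives d2E/dB_k dB_l."""
    K = B.size
    hess = np.zeros((K, K))
    for k in range(K):
        ek = np.zeros(K)
        ek[k] = eps
        for l in range(K):
            el = np.zeros(K)
            el[l] = eps
            hess[k, l] = (
                f(B + ek + el) - f(B + ek - el) - f(B - ek + el) + f(B - ek - el)
            ) / (4 * eps**2)
    return hess

def averaged_derivatives(f, B_all):
    """Average first and second derivatives over all atoms in B_all."""
    beta_avg = np.mean([fd_gradient(f, B) for B in B_all], axis=0)
    alpha_avg = np.mean([fd_hessian(f, B) for B in B_all], axis=0)
    return beta_avg, alpha_avg

rng = np.random.default_rng(4)
K, H, n_atoms = 4, 6, 20
B_all = rng.normal(size=(n_atoms, K))   # placeholder descriptors
beta = rng.normal(size=K)
A = rng.normal(size=(K, K))
alpha = 0.5 * (A + A.T)
W1, w2 = rng.normal(size=(H, K)), rng.normal(size=H)

def quadratic_model(B):
    return float(beta @ B + 0.5 * B @ alpha @ B)

def nn_model(B):
    return float(w2 @ np.tanh(W1 @ B))

beta_q, alpha_q = averaged_derivatives(quadratic_model, B_all)
beta_n, alpha_n = averaged_derivatives(nn_model, B_all)

# Pearson correlation as a simple summary of a parity plot.
first_order_parity = np.corrcoef(beta_q, beta_n)[0, 1]
second_order_parity = np.corrcoef(alpha_q.ravel(), alpha_n.ravel())[0, 1]
print(first_order_parity, second_order_parity)
```

For the quadratic model the averaged second derivative recovers the constant coefficient matrix, whereas for the NN it varies from atom to atom before averaging.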

Although quadratic and NN models arrive at different approximations of the PES up to second order, these differences do not significantly affect force, phonon property, or liquid structure accuracy. This may be surprising since quantities like vibrational frequencies directly depend on spatial force derivatives. At some level, however, properties become insensitive to differences in PES approximations, and we seem to have reached this point with quadratic and NN SNAP. Though we acknowledge that a deeper study is needed to establish which material properties would be sensitive to these differences, we highlight the unique solutions provided by these two model forms. It might therefore be advantageous for researchers to fit both models and retain all fits even though they have similar errors, because differences at second or higher order may be responsible for other phenomena not studied here, such as improvements in stability or extrapolation to much different phase spaces. Exploring the differences in accuracy for other systems will also show whether the agreement between quadratic and NN models is consistent. Since silicon is a relatively simple single element system, we can introduce training complexity by considering a system with two element types. To that end, we examine whether similar model trends arise for our gallium nitride AIMD data set.

Gallium nitride thermal transport

GaN is an important material in power electronics where large heat fluxes limit performance in devices [32]. Phonon transport in GaN is therefore well studied and there are reports in literature of accurate potentials for GaN [33]. Here we quantify the improvement of quadratic and NN models over linear models like we did for Si. We perform this comparison using descriptors that treat multi-element systems to see if NNs offer noticeable gain in accuracy with different types of descriptors. Specifically, we now add ACE descriptors to the set of comparisons due to their explicit treatment of multi-element pairs [10]. In the ACE formalism each pair of atoms has its own set of descriptors and model coefficients based on element type. For example, GaN has 4 sets of coefficients encompassing Ga-Ga, Ga-N, N-Ga, and N-N interactions. Meanwhile, traditional "weighted density" SNAP reduces all element types in the environment onto a single descriptor value, with separate model coefficients reserved only for distinct elements as the central atom instead of element-element pairs. There is also an explicit multi-element version of SNAP, but we have not introduced this formalism with NNs within FitSNAP [18].

Due to its explicit multi-element treatment, ACE is expected to achieve lower errors than weighted density SNAP; this has been noted recently when comparing ACE with SNAP and many other potentials [23]. When feeding ACE and SNAP descriptors into NNs, however, we use the same multi-element treatment of atom-centered NN models. Here we assign a unique NN to each central atom based on its type, as is common in literature for ANI [34] and Behler-Parrinello NNs [11]. The force/energy tradeoff for GaN using SNAP descriptors with various models, and ACE descriptors with linear and NN models, is shown in Fig. 4(a).
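
The per-element treatment of NN models described above, in which each central atom is routed to a network chosen by its element type, can be sketched as follows. The network sizes, random weights, tanh activation, and function names are hypothetical; the final lines simply enumerate the ordered element pairs for which the ACE formalism carries separate coefficient sets in a two-element system.

```python
import itertools
import numpy as np

def mlp_energy(B, weights):
    """Multilayer perceptron with tanh hidden layers and a linear output."""
    h = B
    for W in weights[:-1]:
        h = np.tanh(W @ h)
    return float((weights[-1] @ h)[0])

def total_energy(descriptors, elements, networks):
    """Sum atomic energies, using the network assigned to each central atom's type."""
    return sum(mlp_energy(B, networks[el]) for B, el in zip(descriptors, elements))

rng = np.random.default_rng(5)
K, H = 5, 8
species = ("Ga", "N")
elements = ["Ga", "N", "Ga", "N"]                      # type of each central atom
descriptors = rng.normal(size=(len(elements), K))      # placeholder descriptors
networks = {
    el: [rng.normal(size=(H, K)), rng.normal(size=(1, H))] for el in species
}

E_total = total_energy(descriptors, elements, networks)
print(E_total)

# One network per element type versus one coefficient set per ordered element pair.
pair_sets = list(itertools.product(species, repeat=2))
print(len(networks), pair_sets)
```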

GaN force and energy saturation for different models using SNAP and ACE descriptors. Each point represents a model fit with a different force/energy ratio. Blue symbols are linear models with different descriptors, red symbols are quadratic models, and grey symbols are NN models. Note the same force saturation behavior as seen for Si, with more flexible models saturating at lower force errors. It is important to note that our choice of ACE descriptors was limited by the amount we could store in RAM for NN models, since we store all the descriptor derivatives in Eq. 5 in RAM with the current FitSNAP implementation. This could be circumvented by using autograd all the way back to atomic positions for calculating the force, instead of storing descriptor gradients in memory. It is important to note that ACE is an extremely flexible potential and one could construct a more accurate ACE potential than presented here by either adding more basis functions and/or increasing body order; for our purposes we simply explore the benefit of using NNs with a constant set of descriptors.

Linear ACE saturates at a lower force error than linear SNAP. This is not surprising, especially since the ACE settings we used result in 120 descriptors for each element type pair, while SNAP \(j_{max} = 3\) and \(j_{max} = 4\) involve only 31 and 56 descriptors for each element, respectively. Our ACE basis could therefore be thought of as more comprehensive than our SNAP basis used here. One may therefore expect less of an improvement in errors when considering a nonlinear ACE model, since the descriptors already offer a comprehensive description of the environment. Indeed, NN ACE only exhibits an improvement in forces from 80 meV/Å to 60 meV/Å when keeping descriptors constant. This improvement is also limited by our implementation of multi-element NNs; linear ACE possesses unique coefficients for each element type pair but our NNs are unique for each element type. It would be more commensurate to use different NNs for each element type pair, which should offer more flexibility. Nonetheless we see less of an improvement compared to SNAP descriptors, which experience almost a two-fold reduction in force errors as seen in Fig. 4(a).
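The descriptor counts of 31 and 56 quoted above can be reproduced with a simple count. The sketch assumes that \(j_{max}\) here is half of the LAMMPS `twojmax` setting, that the number of bispectrum components is the sum of squares \(1^2 + \dots + (j_{max}+1)^2\), and that the extra descriptor is a constant offset term; these are assumptions, not statements from the text.

```python
def n_bispectrum(jmax):
    """Number of bispectrum components, assuming twojmax = 2 * jmax."""
    m = jmax + 1
    return m * (m + 1) * (2 * m + 1) // 6  # closed form of 1^2 + ... + m^2

def n_snap_descriptors(jmax):
    """Bispectrum components plus one assumed constant term."""
    return n_bispectrum(jmax) + 1

for jmax in (3, 4):
    print(jmax, n_bispectrum(jmax), n_snap_descriptors(jmax))
# 3 30 31
# 4 55 56
```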

With SNAP descriptors, we see the same saturation of quadratic and NN models at similar force errors, as we saw in Si. This improvement in forces has a measurable effect on vibrational frequencies and thermal transport, as shown in Fig. 5.

(a) Correlation between force error and phonon frequency error for various potentials. Each point represents a fit with a different force/energy weight ratio. (b) Correlation between force error and thermal conductivity error. Note that thermal conductivity is a higher order property and therefore some potentials may obtain small errors for the wrong reasons, so there is less of a correlation compared to frequencies. It is important to note that with a sufficient basis, such as ACE, one can achieve low property errors with linear models on par with nonlinear models using other bases. Here we used the same linear ACE model from Fig. 4(a), although more comprehensive ACE bases could be used as well.

In Fig. 5(a) we see a clear positive correlation between force error and phonon frequency error averaged over symmetry directions in the Brillouin zone, with more details in the SI. In Fig. 5(b) we observe a weaker correlation between force error and thermal conductivity error. This is expected since thermal conductivity is a higher order property and therefore some potentials may obtain small errors for physically improper reasons, such as cancellation of errors due to improper phonon contributions. There is therefore less of a correlation compared to frequencies, as we also saw for Si. Nonetheless, quadratic and NN models with \(j_{max} = 4\) offer enough flexibility to simultaneously achieve low frequency errors of 1% and thermal conductivity errors < 10%. The two-fold improvement in forces when using SNAP descriptors with quadratic and NN models is therefore important for describing phonon transport in GaN. We note that past efforts in modelling GaN involved the use of Taylor expansion potentials whose fitting coefficients are the PES spatial derivatives, which allowed nearly exact reproduction of ab initio phonon dispersion [35]. The potentials made here may not be as computationally efficient as low order Taylor expansions but retain the ability to be used in scenarios beyond the solid phase that Taylor expansions are limited to, such as melting or diffusion.
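One way to quantify a correlation of this kind is a Pearson coefficient over the set of fitted potentials. The sketch below uses placeholder numbers chosen only to exercise the function; they are not results from this study.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Placeholder values (hypothetical): force MAE in meV/A, frequency error in %.
force_mae = [40.0, 60.0, 80.0, 100.0, 120.0]
freq_err = [1.0, 1.6, 2.1, 2.4, 3.2]
r = pearson(force_mae, freq_err)
print(round(r, 3))
```

A value of r near 1 would indicate that lower force errors go together with lower property errors; a property affected by cancellation of errors would be expected to give a smaller r.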

Lithium ion diffusivity

Si and GaN are still relatively simple systems from a molecular modelling standpoint and therefore served as good candidates for observing improvements in accuracy, without confounding variables such as complicated chemical forces or other difficult training data. These systems alone, however, may not be as commensurate with other efforts of the community to study systems with more element types and more complicated dynamical properties. We therefore seek more difficult multi-element systems and dynamical properties on which to benchmark our linear and nonlinear models. For this purpose we turn to Li ion conductors, which were recently used in the literature to show that state-of-the-art graph neural network potentials can closely match AIMD properties [36].

Li ion superionic conductors are solids that exhibit Li diffusivity on par with liquid electrolytes [37]. These materials are of recent interest in the energy storage community because they may contribute to the commercialization of all-solid-state batteries [38]. It is therefore of interest for researchers to study the atomistic mechanisms allowing for superionic diffusion, which may allow engineering of materials with favorable diffusion or synthesis properties [39]. To achieve this level of atomistic insight, interatomic potentials for Li superionic conductors are required. ML potentials for Li ion systems to date use deep neural networks that resulted in excellent agreement with AIMD diffusion [36, 40]. These systems remain somewhat challenging for the ML potential community, with only a few NN potentials available. It may therefore be believed that NN models are required to model such systems with 3-4 element types and unique diffusion mechanisms. This model complexity of existing Li ion potentials therefore suggests that energies and forces cannot be represented by low order functional forms of atom-centered descriptors.

To test whether NNs offer advantages for modelling Li superionic conductors as we saw with Si and GaN, we curate an AIMD data set for Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS). This dataset contains AIMD trajectories from 300 K to the melting point of around 2000 K, with more details in the SI. We trained a variety of potentials to 5000 AIMD configurations sampled across these temperatures. From our experience with Si and GaN in Figs. 2 and 4, we compare force saturation errors since this is a fair comparison for how well the models can fit the PES. Force errors associated with our best fits are tabulated in Table 1. Validation will be performed by simulating ion diffusion at 600 K.

Here we use linear SNAP with \(j_{max} = 4\) and NN SNAP with \(j_{max} = 3\) and \(j_{max} = 4\).

To benchmark more linear models on Li conductors, we include another recently developed potential based on proper orthogonal decomposition [12]. POD descriptors use a form of proper orthogonal decomposition traditionally applied in continuum mechanics, formulated for discrete atomistic applications. Our POD potential here has a total of 3314 coefficients composed from 11 2-body descriptors and 80 3-body descriptors. These POD descriptors treat multi-element interactions such that each atom pair or triplet has its own coefficients, but use the same basis functions, so that the computational cost is independent of the number of elements. The number of fitting coefficients for our linear SNAP model is number of descriptors times number of elements resulting in \(56 \times 4 = 224\) fitting coefficients. It is therefore not surprising that linear POD alone obtains much better force errors than linear SNAP. As has been seen before with Si and GaN, simply adding more descriptors to a model results in a notable increase in accuracy. Adding SNAP descriptors to POD for Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS) also reduced force error from 63.3 meV/Å to 48.1 meV/Å. Note there are diminishing returns on accuracy with increased basis size, as was mentioned in Wood et al. [19].
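The coefficient counts quoted above can be checked with a few lines of arithmetic. The SNAP count follows directly from the text. For POD, the sketch assumes one one-body coefficient per element, one set of 2-body coefficients per unordered element pair, and one set of 3-body coefficients per central element and unordered neighbor pair; this counting is an assumption that happens to reproduce the stated total of 3314.

```python
def linear_snap_coefficients(n_descriptors, n_elements):
    """Descriptors per element times number of elements."""
    return n_descriptors * n_elements

def pod_coefficients(n_elements, n_two_body, n_three_body):
    """Assumed counting: one-body + pair-resolved 2-body + triplet-resolved 3-body."""
    n_pairs = n_elements * (n_elements + 1) // 2      # unordered element pairs
    one_body = n_elements
    two_body = n_pairs * n_two_body
    three_body = n_elements * n_pairs * n_three_body  # central element x neighbor pair
    return one_body + two_body + three_body

print(linear_snap_coefficients(56, 4))   # 224
print(pod_coefficients(4, 11, 80))       # 3314
```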

To observe the effect of model complexity on simulating ion diffusion, we first focus on SNAP descriptors input to linear and NN models. MD simulation results for our LGPS data set and a literature LiPS data set are shown in Fig. 6.

LGPS and LiPS structure and diffusivity. (a) LGPS RDFs for Li ions using linear and NN SNAP potentials compared to AIMD at 600 K. (b) LGPS mean square displacement at 600 K for the SNAP potentials. (c) LiPS RDFs for Li ions using linear and NN SNAP potentials, showing the linear SNAP could not properly stabilize LiPS with the given literature trajectory. (d) LiPS mean square displacement at 520 K simulated with NN SNAP potentials and data taken from the NequIP GNN potential results from literature [36].

From Fig. 6 we see a clear improvement in MD simulations of ion diffusion using NN SNAP compared to linear SNAP. It is important to note that these comparisons involved the same SNAP descriptors for \(j_{max} = 4\); we did not optimize SNAP hyperparameters for linear models as was successfully done for other complicated multi-element systems [28]. If we chose this route for linear SNAP, it may be possible to include metrics like RDF or MSD as objective functions, and use multi-objective optimization of SNAP hyperparameters to find linear fits that perform as well as NNs. Such a procedure, however, was not required for NN SNAP. Overall for Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS), NN SNAP exhibits better agreement with AIMD Li ion diffusivity compared to linear SNAP, as well as a nearly two-fold improvement in force errors.
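For readers less familiar with how a diffusivity is extracted from a mean square displacement (MSD) curve, a minimal sketch follows. It assumes unwrapped coordinates, a single time origin, and the three-dimensional Einstein relation D = slope/6; the synthetic trajectory is constructed so that the MSD is exactly linear in time and is not simulation data.

```python
import math

def mean_square_displacement(frames):
    """MSD relative to the first frame, averaged over atoms.

    frames[k][i] is the unwrapped (x, y, z) position of atom i at frame k.
    """
    ref = frames[0]
    msd = []
    for frame in frames:
        total = 0.0
        for r, r0 in zip(frame, ref):
            total += sum((a - b) ** 2 for a, b in zip(r, r0))
        msd.append(total / len(ref))
    return msd

def diffusivity(msd, dt):
    """Einstein relation in 3D: least-squares slope of MSD versus time, over 6."""
    times = [k * dt for k in range(len(msd))]
    n = len(msd)
    t_mean = sum(times) / n
    m_mean = sum(msd) / n
    slope = (sum((t - t_mean) * (m - m_mean) for t, m in zip(times, msd))
             / sum((t - t_mean) ** 2 for t in times))
    return slope / 6.0

# Synthetic trajectory: each coordinate grows as a * sqrt(k), so MSD = 3 a^2 k.
a, dt = 2.0, 1.0
frames = [[(a * math.sqrt(k),) * 3 for _ in range(4)] for k in range(50)]
msd = mean_square_displacement(frames)
D = diffusivity(msd, dt)
print(D)  # approximately a**2 / (2 * dt) = 2.0
```

In practice one would typically average over multiple time origins and fit only the linear (diffusive) part of the curve; both refinements are omitted here for brevity.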

For LiPS, we use the data set available in literature which consists of 520 K AIMD trajectories [36]. We were unable to properly stabilize LiPS using linear SNAP with this data set; for Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS) we required high temperature AIMD data up to 1600 K for stabilizing linear SNAP. This is seen by the disagreement of RDFs in Fig. 6(c). NN SNAP, on the other hand, does not require high temperature configurations to stabilize this system; the existing LiPS data set in literature was therefore sufficient to accurately model the structure of Li ions. In Fig. 6(d) we see the improvement offered by using NN SNAP with 56 descriptors (\(j_{max} = 4\)) compared to 31 descriptors (\(j_{max} = 3\)). The best NN SNAP potential exhibits much closer agreement to the AIMD diffusion curve compared to linear SNAP, although not as close as the state-of-the-art graph based NequIP model. This is not surprising considering the extremely impressive force MAE of 4.7 meV/Å achieved by NequIP for LiPS [36]. Our best NN SNAP model obtained a force MAE of 80 meV/Å with computational cost of \(\sim\) \(1 \times 10^{-4}\) s per timestep per atom per CPU core.
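To put the quoted cost in perspective, the following back-of-the-envelope estimate converts it into a wall time. It interprets the cost as core-seconds per atom per timestep and assumes ideal parallel scaling; the system size, step count, and core count are hypothetical.

```python
def wall_time_hours(cost_per_atom_step_core, n_atoms, n_steps, n_cores):
    """Wall time in hours, assuming perfect parallel scaling across CPU cores."""
    seconds = cost_per_atom_step_core * n_atoms * n_steps / n_cores
    return seconds / 3600.0

# Hypothetical run: 10,000 atoms, 1,000,000 timesteps, 100 cores.
hours = wall_time_hours(1e-4, n_atoms=10_000, n_steps=1_000_000, n_cores=100)
print(round(hours, 2))  # 2.78
```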

Figure 6 might suggest that nonlinear models are simply better or necessary for modelling mass transfer in complex multi-element systems, but this may not be the case. A linear basis with sufficiently detailed descriptors can also achieve the same level of AIMD agreement. We demonstrate this for Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS) using the recently developed POD descriptors with a linear model. Results of LGPS Li ion diffusion simulations using POD [12] are shown in Fig. 7.

Linear POD simulation results for LGPS. (a) Radial distribution function of Li ions with all other atoms, comparing AIMD and POD. (b) Diffusivity simulations for LGPS at 600 K. POD was trained on the same LGPS dataset as NN-SNAP. This shows that a complex linear model with a sufficient number of fitting coefficients can achieve similar AIMD stability and diffusion agreement compared to more flexible NN models.

As seen in Fig. 7(a), linear POD exhibits excellent agreement with AIMD Li ion RDF structure while also simulating the correct diffusion rate in Fig. 7(b). Our results here show, for the first time, the ability of linear models to accurately model diffusion in Li superionic conductors. Linear models possess pros and cons compared to deep learning methods, so this is a worthy addition to the molecular modelling toolkit used by researchers. If more accuracy is ever desired, our results with SNAP descriptors show that simply feeding these features into a NN can result in a two-fold improvement in force accuracy. The resulting impact on simulated properties is positive, evidenced by our investigation of Si, GaN, Li\(_{10}\)Ge(PS\(_{6}\))\(_{2}\) (LGPS), and LiPS.

Discussion

We observed improvement in simulated properties calculated with nonlinear models that use the same features as linear models. This improvement is attributed to the enhanced force accuracy offered by more flexible nonlinear models compared to linear models. A fair comparison to quantify this force improvement was achieved by varying loss function force/energy weight hyperparameters, where it was found that all models saturate at a different agreement in forces. This observation of a force saturation level may be used by researchers to fairly determine which models best match the shape of the PES, irrespective of loss function energy/force weight hyperparameters. Our observation on the correlation between force accuracy and simulated property accuracy can also aid researchers in determining a force threshold required for their particular application; this is important if researchers want to choose the simplest or cheapest model for the task at hand.
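The role of the force/energy weights can be illustrated with a generic weighted least-squares loss. The exact form of the loss used in this work is given by Eq. 7 and is not reproduced here; the function below is an assumed, simplified stand-in with hypothetical argument names.

```python
def weighted_loss(e_pred, e_ref, f_pred, f_ref, w_energy, w_force):
    """Weighted sum of mean squared energy and force errors (assumed form)."""
    e_term = sum((p - r) ** 2 for p, r in zip(e_pred, e_ref)) / len(e_ref)
    f_term = sum((p - r) ** 2 for p, r in zip(f_pred, f_ref)) / len(f_ref)
    return w_energy * e_term + w_force * f_term

# Toy predictions and references (hypothetical numbers).
e_pred, e_ref = [1.0], [0.0]
f_pred, f_ref = [0.0, 2.0], [0.0, 0.0]

# Sweep the force/energy weight ratio at fixed energy weight.
for ratio in (0.1, 1.0, 10.0):
    print(ratio, weighted_loss(e_pred, e_ref, f_pred, f_ref, 1.0, ratio))
```

Refitting a model at each ratio and recording the lowest force error reached gives a saturation level of the kind discussed above, which can then be compared across model forms.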

The correlation between force accuracy and simulated property accuracy is best observed with properties that are less prone to cancellation of errors, such as vibrational frequencies. Higher order properties that are measured by a thermodynamic sampling of states such as thermal conductivity, mass diffusivity, and liquid structure, on the other hand, are known to exhibit cancellation of errors where potentials can achieve poor force/energy errors but still produce accurate property values [35]. It is also important to note that force/energy accuracy is not always enough for obtaining usable potentials that perform stable MD simulations and property prediction [31]. For our data sets and models, however, we showed that the improvement in force errors when using NNs does not sacrifice MD stability. We observe here that our potentials with most accurate forces exhibit the best property agreement. This was achieved with Si, GaN, and LGPS by simply feeding descriptors traditionally used with linear models, such as SNAP and ACE, into quadratic or NN models.

Despite the improved accuracy from using NNs with a given set of atom-centered descriptors, it is important to note that linear models with a sufficient basis and larger number of fitting coefficients can outperform NNs with a less descriptive basis or fewer features. We showed this using the more complicated Li ion systems. For LGPS and LiPS, an original set of 56 SNAP descriptors input to a linear model was not sufficient to accurately model diffusion. With the smaller diversity in training data for the LiPS set [36], we even had difficulty stabilizing the linear model for MD simulations. Using this original set of 56 SNAP descriptors with a NN model greatly improved stability along with the simulated mass diffusivity. This does not mean NNs are required to obtain such accuracies, however, as we illustrated with the recently developed POD descriptors [12]. Our POD potential involves a linear model that uses a total of 91 descriptors and 3314 fitting coefficients; this resulted in better force errors than NN-SNAP with 56 descriptors input to a 32 \(\times\) 32 NN architecture, and similar agreements in mass diffusivity. With the systems we studied here, we therefore cannot claim that particular materials or properties always require nonlinear model forms for reliable simulations. For simpler/smaller sets of descriptors, however, nonlinear model forms are required to achieve the desired level of ab initio property agreement shown in this study (1% error in phonon frequencies, 0.01 MAE in RDF, and < 10% error in thermal conductivity and mass diffusivity). Nonetheless, the results herein show that a linear model with a sufficient basis and number of fitting coefficients can achieve similar results compared to NNs. It remains to be seen in future work if this will always be the case for systems that are more complicated than the four element LGPS system studied here, such as rare earth metals [41], metal organic frameworks [42], or many-element alloys [43].

Aside from more complicated chemical systems, NNs might also possess an advantage in more complicated simulated properties. Indeed, our study is limited to properties at equilibrium such as liquid structure, phonon frequencies, and transport properties. All of these properties require small extrapolations from an equilibrium structure. It is therefore paramount that researchers heavily weight the equilibrium structure when making potentials for equilibrium properties. For example, calculating phonon frequencies on a structure with non-zero forces will result in negative frequencies. This can be alleviated by assigning larger weights in the loss function of Eq. 7 for the equilibrium configurations, or oversampling the equilibrium configurations when training. For non-equilibrium phenomena, however, one may need to sufficiently model multiple equilibrium states as well as transitions between these states. In such non-equilibrium scenarios, active learning approaches may also be necessary to gather appropriate data, instead of fitting purely to AIMD simulations at equilibrium like we did here. This is currently a topic of work applied to NN and linear models [14, 44].

Conclusion

Overall we sought to answer the original question of how much gain in accuracy can be expected by feeding descriptors into different ML models, where we saw up to a 50% improvement in force accuracy when using nonlinear models such as NNs compared to linear models. For the Si, GaN, and Li ion systems studied here, this resulted in significant improvement in simulated property errors with respect to AIMD simulations. We can therefore scale up ab initio accuracy to significantly larger length and time scales by simply taking existing and widely used descriptors and feeding them into nonlinear models. Despite this improvement when using nonlinear models with SNAP and ACE descriptors, we also showed that linear models with a sufficient basis can achieve property simulations on par with NNs. We showed this using linear POD with more fitting coefficients than our NN models. This is an important result because linear and NN regression both have unique advantages and disadvantages, and molecular modelling researchers may use both of these capabilities with the publicly available tools developed herein.

A fruitful topic for future work is to investigate whether simpler descriptors (e.g. 56 SNAP descriptors) input to complicated models (e.g. NN) possess advantages/disadvantages compared to complex descriptors (e.g. thousands of POD or ACE descriptors) input to simple models (e.g. linear). There may be other advantages and disadvantages of linear and NN models not studied in detail here, such as MD stability/usability or extrapolation ability in non-equilibrium events such as chemical reactions. The ability to use both linear and nonlinear models expands the community toolbox for such future studies, and for creating potentials that describe a variety of systems/scenarios. To this end, we created FitSNAP as an open-source software possessing all the abilities shown in this manuscript; fitting linear and NN models with different descriptors such as SNAP or ACE, then immediately deploying the model for high performance MD in LAMMPS [45]. Our linear POD potential is also available as a LAMMPS package where users can perform linear regression with training data and immediately use the potential after. To conclude, we presented a variety of potentials available for researchers in the open-source LAMMPS/FitSNAP ecosystem and benchmarked expected improvements in fitting and simulation accuracy from using such models for simulating transport properties.

Methods

All models with SNAP and ACE descriptors were trained using the FitSNAP software, which we provide open-sourced with full documentation on linear and NN training procedures. The POD potential was trained using least squares on a linear system of equations, as implemented in the LAMMPS ML-POD package. NN models were trained using a modified form of iterated backpropagation [46]. The iterated backpropagation algorithm we implemented in FitSNAP is as follows.

1. Calculate all descriptors and their spatial derivatives in LAMMPS.
2. Perform a forward pass that builds a computational graph in an automatic differentiation framework; we use PyTorch [16].
3. Perform a backward pass to obtain the derivatives of the NN output with respect to inputs, i.e. the array of values \(\beta _{jk}\) defined in Eq. 6.
4. Using the array \(\beta _{jk}\), calculate atomic forces in LAMMPS with Eq. 5.
5. Perform a second forward pass in PyTorch to calculate the loss function defined in Eq. 7.
6. Perform a second backward pass to obtain loss function derivatives with respect to model coefficients, which are used in gradient descent minimization.
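Steps 3 and 4 above amount to a chain rule: the derivative of each atomic energy with respect to its descriptors is contracted with the stored descriptor derivatives to give forces. The toy below sketches only that contraction and checks it against finite differences. It is not the FitSNAP implementation: atoms sit on a line, the descriptors are hypothetical Gaussian sums rather than SNAP or ACE, the model is a single tanh unit, and an analytic derivative stands in for the PyTorch backward pass. The second forward and backward passes (steps 5 and 6) are not shown.

```python
import math

WIDTHS = (1.0, 2.0)    # hypothetical Gaussian widths, one per descriptor component
WEIGHTS = (0.7, -0.3)  # hypothetical model coefficients

def descriptors_and_gradients(x):
    """Return B[i][k] and dB[i][k][m] = dB_ik/dx_m for atoms on a line."""
    n = len(x)
    B = [[0.0] * len(WIDTHS) for _ in range(n)]
    dB = [[[0.0] * n for _ in WIDTHS] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            d = x[i] - x[j]
            for k, a in enumerate(WIDTHS):
                g = math.exp(-a * d * d)
                B[i][k] += g
                dB[i][k][i] += -2.0 * a * d * g  # derivative w.r.t. central atom
                dB[i][k][j] += 2.0 * a * d * g   # derivative w.r.t. neighbor
    return B, dB

def atomic_energy(b):
    return math.tanh(sum(w * v for w, v in zip(WEIGHTS, b)))

def beta(b):
    """dE_i/dB_ik, computed analytically in place of an autograd backward pass."""
    s = 1.0 - atomic_energy(b) ** 2
    return [s * w for w in WEIGHTS]

def total_energy(x):
    B, _ = descriptors_and_gradients(x)
    return sum(atomic_energy(b) for b in B)

def forces(x):
    """F_m = -sum_i sum_k beta_ik * dB_ik/dx_m."""
    B, dB = descriptors_and_gradients(x)
    betas = [beta(b) for b in B]
    n = len(x)
    return [-sum(betas[i][k] * dB[i][k][m]
                 for i in range(n) for k in range(len(WIDTHS)))
            for m in range(n)]

def finite_difference_forces(x, h=1e-6):
    """Central-difference forces from the total energy, used as a check."""
    out = []
    for m in range(len(x)):
        xp, xm = list(x), list(x)
        xp[m] += h
        xm[m] -= h
        out.append(-(total_energy(xp) - total_energy(xm)) / (2 * h))
    return out

x = [0.0, 0.9, 2.1, 2.8]
f_chain = forces(x)
f_fd = finite_difference_forces(x)
max_diff = max(abs(a - b) for a, b in zip(f_chain, f_fd))
print(max_diff)
```

Note that the array `dB` holds a derivative for every atom, descriptor component, and neighbor, which is why storing descriptor derivatives dominates memory for large descriptor sets.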

A key aspect of this iterated backpropagation method is that it eliminates the need to store gradients of model outputs with respect to model coefficients for the entire batch. Instead, we only need to store loss function derivatives with respect to model coefficients. Previous implementations of force training were strongly limited by available physical memory [47]. Relaxing this constraint makes it possible to explore more diverse combinations of model complexity and force training protocols, such as larger models and/or batch sizes.

Data availability

The FitSNAP software and associated examples for training interatomic potentials are available on our FitSNAP GitHub page at https://github.com/FitSNAP/FitSNAP. More training data is located at https://github.com/FitSNAP/fitsnap-datasets/. The software for fitting POD potentials is obtained from the LAMMPS ML-POD package.

References

K. Choudhary, B. DeCost, C. Chen, A. Jain, F. Tavazza, R. Cohn, C.W. Park, A. Choudhary, A. Agrawal, S.J. Billinge et al., Recent advances and applications of deep learning methods in materials science. npj Comput. Mater. 8(1), 59 (2022)

H. Tafrishi, S. Sadeghzadeh, R. Ahmadi, Molecular dynamics simulations of phase change materials for thermal energy storage: a review. RSC Adv. 12(23), 14776–14807 (2022)

N. Yao, X. Chen, Z.-H. Fu, Q. Zhang, Applying classical, ab initio, and machine-learning molecular dynamics simulations to the liquid electrolyte for rechargeable batteries. Chem. Rev. 122(12), 10970–11021 (2022)

J. Vandermause, Y. Xie, J.S. Lim, C.J. Owen, B. Kozinsky, Active learning of reactive Bayesian force fields applied to heterogeneous catalysis dynamics of H/Pt. Nature Commun. 13(1), 5183 (2022)

Q. Bai, S. Liu, Y. Tian, T. Xu, A.J. Banegas-Luna, H. Pérez-Sánchez, J. Huang, H. Liu, X. Yao, Application advances of deep learning methods for de novo drug design and molecular dynamics simulation. Wiley Interdiscip. Rev.: Comput. Mol. Sci. 12(3), 1581 (2022)

V.L. Deringer, M.A. Caro, G. Csányi, Machine learning interatomic potentials as emerging tools for materials science. Adv. Mater. 31(46), 1902765 (2019)

Y. Mishin, Machine-learning interatomic potentials for materials science. Acta Mater. 214, 116980 (2021)

A.P. Bartók, R. Kondor, G. Csányi, On representing chemical environments. Phys. Rev. B 87(18), 184115 (2013)

A.P. Thompson, L.P. Swiler, C.R. Trott, S.M. Foiles, G.J. Tucker, Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. J. Comput. Phys. 285, 316–330 (2015)

R. Drautz, Atomic cluster expansion for accurate and transferable interatomic potentials. Phys. Rev. B 99(1), 014104 (2019)

J. Behler, M. Parrinello, Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 98(14), 146401 (2007)

N.-C. Nguyen, A. Rohskopf, Proper orthogonal descriptors for efficient and accurate interatomic potentials. J. Comput. Phys. 480, 112030 (2023)

C.W. Park, M. Kornbluth, J. Vandermause, C. Wolverton, B. Kozinsky, J.P. Mailoa, Accurate and scalable graph neural network force field and molecular dynamics with direct force architecture. npj Comput. Mater. 7(1), 73 (2021)

A. Zhu, S. Batzner, A. Musaelian, B. Kozinsky, Fast uncertainty estimates in deep learning interatomic potentials. Preprint at http://arxiv.org/abs/2211.09866 (2022)

A. Rohskopf, C. Sievers, N. Lubbers, M. Cusentino, J. Goff, J. Janssen, M. McCarthy, D.M.O. Zapiain, S. Nikolov, K. Sargsyan, D. Sema, E. Sikorski, L. Williams, A. Thompson, M. Wood, FitSNAP: atomistic machine learning with LAMMPS. J. Open Source Softw. 8(84), 5118 (2023)

A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, et al., PyTorch: an imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32 (2019)

J. Bradbury, R. Frostig, P. Hawkins, M.J. Johnson, C. Leary, D. Maclaurin, G. Necula, A. Paszke, J. VanderPlas, S. Wanderman-Milne, et al., JAX: composable transformations of Python+NumPy programs (2018)

M.A. Cusentino, M.A. Wood, A.P. Thompson, Explicit multielement extension of the spectral neighbor analysis potential for chemically complex systems. J. Phys. Chem. A 124(26), 5456–5464 (2020)

M.A. Wood, A.P. Thompson, Extending the accuracy of the SNAP interatomic potential form. J. Chem. Phys. 148(24), 241721 (2018)

Y. Zuo, C. Chen, X. Li, Z. Deng, Y. Chen, J. Behler, G. Csányi, A.V. Shapeev, A.P. Thompson, M.A. Wood et al., Performance and cost assessment of machine learning interatomic potentials. J. Phys. Chem. A 124(4), 731–745 (2020)

K. Hornik, M. Stinchcombe, H. White, Multilayer feedforward networks are universal approximators. Neural Netw. 2(5), 359–366 (1989)

B.C. Csáji et al., Approximation with artificial neural networks. Faculty of Sciences, Eötvös Loránd University 24(48), 7 (2001)

Y. Lysogorskiy, C.V.D. Oord, A. Bochkarev, S. Menon, M. Rinaldi, T. Hammerschmidt, M. Mrovec, A. Thompson, G. Csányi, C. Ortner et al., Performant implementation of the atomic cluster expansion (PACE) and application to copper and silicon. npj Comput. Mater. 7(1), 97 (2021)

A.P. Bartók, J. Kermode, N. Bernstein, G. Csányi, Machine learning a general-purpose interatomic potential for silicon. Phys. Rev. X 8(4), 041048 (2018)

M.A. Wood, M.A. Cusentino, B.D. Wirth, A.P. Thompson, Data-driven material models for atomistic simulation. Phys. Rev. B 99(18), 184305 (2019)

S. Nikolov, M.A. Wood, A. Cangi, J.-B. Maillet, M.-C. Marinica, A.P. Thompson, M.P. Desjarlais, J. Tranchida, Data-driven magneto-elastic predictions with scalable classical spin-lattice dynamics. npj Comput. Mater. 7(1), 153 (2021)

K. Nguyen-Cong, J.T. Willman, S.G. Moore, A.B. Belonoshko, R. Gayatri, E. Weinberg, M.A. Wood, A.P. Thompson, I.I. Oleynik, Billion atom molecular dynamics simulations of carbon at extreme conditions and experimental time and length scales. In: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, pp. 1–12 (2021)

E. Sikorski, M. Cusentino, M. McCarthy, J. Tranchida, M. Wood, A. Thompson, Machine learned interatomic potential for dispersion strengthened plasma facing components. Preprint at http://arxiv.org/abs/2212.01432 (2022)

D.F. Toit, V.L. Deringer, Cross-platform hyperparameter optimization for machine learning interatomic potentials. J. Chem. Phys. (2023). https://doi.org/10.1063/5.0155618

D. Oca Zapiain, M.A. Wood, N. Lubbers, C.Z. Pereyra, A.P. Thompson, D. Perez, Training data selection for accuracy and transferability of interatomic potentials. npj Comput. Mater. 8(1), 189 (2022)

X. Fu, Z. Wu, W. Wang, T. Xie, S. Keten, R. Gomez-Bombarelli, T. Jaakkola, Forces are not enough: Benchmark and critical evaluation for machine learning force fields with molecular simulations. Preprint at http://arxiv.org/abs/2210.07237 (2022)

J. Tsao, S. Chowdhury, M. Hollis, D. Jena, N. Johnson, K. Jones, R. Kaplar, S. Rajan, C. Walle, E. Bellotti et al., Ultrawide-bandgap semiconductors: research opportunities and challenges. Adv. Electron. Mater. 4(1), 1600501 (2018)

E. Minamitani, M. Ogura, S. Watanabe, Simulating lattice thermal conductivity in semiconducting materials using high-dimensional neural network potential. Appl. Phys. Express 12(9), 095001 (2019)

X. Gao, F. Ramezanghorbani, O. Isayev, J.S. Smith, A.E. Roitberg, TorchANI: a free and open source PyTorch-based deep learning implementation of the ANI neural network potentials. J. Chem. Inf. Model. 60(7), 3408–3415 (2020)

A. Rohskopf, S. Wyant, K. Gordiz, H.R. Seyf, M.G. Muraleedharan, A. Henry, Fast & accurate interatomic potentials for describing thermal vibrations. Comput. Mater. Sci. 184, 109884 (2020)

S. Batzner, A. Musaelian, L. Sun, M. Geiger, J.P. Mailoa, M. Kornbluth, N. Molinari, T.E. Smidt, B. Kozinsky, E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nature Commun. 13(1), 2453 (2022)

J.B. Boyce, B.A. Huberman, Superionic conductors: transitions, structures, dynamics. Phys. Rep. 51(4), 189–265 (1979)

Y. Kato, S. Hori, R. Kanno, Li10GeP2S12-type superionic conductors: synthesis, structure, and ionic transportation. Adv. Energy Mater. 10(42), 2002153 (2020)

X. He, Q. Bai, Y. Liu, A.M. Nolan, C. Ling, Y. Mo, Crystal structural framework of lithium super-ionic conductors. Adv. Energy Mater. 9(43), 1902078 (2019)

G. Winter, R. Gómez-Bombarelli, Simulations with machine learning potentials identify the ion conduction mechanism mediating non-Arrhenius behavior in LGPS. Preprint at http://arxiv.org/abs/2211.05713 (2022)

K. Hachiya, Y. Ito, Interatomic potentials for rare-earth metals. J. Phys.: Condens. Matter. 11(34), 6543 (1999)

B.J. Bucior, N.S. Bobbitt, T. Islamoglu, S. Goswami, A. Gopalan, T. Yildirim, O.K. Farha, N. Bagheri, R.Q. Snurr, Energy-based descriptors to rapidly predict hydrogen storage in metal-organic frameworks. Mol. Syst. Des. Eng. 4(1), 162–174 (2019)

E.P. George, D. Raabe, R.O. Ritchie, High-entropy alloys. Nature Rev. Mater. 4(8), 515–534 (2019)

Y. Lysogorskiy, A. Bochkarev, M. Mrovec, R. Drautz, Active learning strategies for atomic cluster expansion models. Phys. Rev. Mater. 7(4), 043801 (2023)

A.P. Thompson, H.M. Aktulga, R. Berger, D.S. Bolintineanu, W.M. Brown, P.S. Crozier, P.J. Veld, A. Kohlmeyer, S.G. Moore, T.D. Nguyen et al., LAMMPS - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comput. Phys. Commun. 271, 108171 (2022)

J.S. Smith, N. Lubbers, A.P. Thompson, K. Barros, Simple and efficient algorithms for training machine learning potentials to force data. Preprint at http://arxiv.org/abs/2006.05475 (2020)

A. Singraber, T. Morawietz, J. Behler, C. Dellago, Parallel multistream training of high-dimensional neural network potentials. J. Chem. Theory Comput. 15(5), 3075–3092 (2019). https://doi.org/10.1021/acs.jctc.8b01092. (PMID: 30995035)

Acknowledgments

This paper describes objective technical results and analysis. Any subjective views or opinions that might be expressed in the paper do not necessarily represent the views of the U.S. Department of Energy or the United States Government. A.R., J.G., A.T., and M.W. acknowledge funding support from the Exascale Computing Project (17-SC-20-SC), a collaborative effort of the U.S. Department of Energy Office of Science and the National Nuclear Security Administration, and U.S. Department of Energy, Office of Fusion Energy Sciences (OFES) under Field Work Proposal Number 20-023149. Dr. Nguyen and Dionysios Sema acknowledge the United States Department of Energy under contract DE-NA0003965. Dr. Nguyen also acknowledges the Air Force Office of Scientific Research under Grant No. FA9550-22-1-0356 for supporting his work. K.G. and A.H. acknowledge support from the National Science Foundation (NSF) career award to A.H. (award no. 1554050) and the Office of Naval Research (ONR) under a MURI program (Grant No. N00014-18-1-2429). This article has been authored by an employee of National Technology and Engineering Solutions of Sandia, LLC under Contract No. DE-NA0003525 with the U.S. Department of Energy (DOE). The employee owns all right, title and interest in and to the article and is solely responsible for its contents. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, world-wide license to publish or reproduce the published form of this article or allow others to do so, for United States Government purposes. The DOE will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan https://www.energy.gov/downloads/doe-public-access-plan.

Author information

Contributions

AR developed software to support ML models in FitSNAP and LAMMPS, curated datasets, and performed property simulations. JG implemented ACE descriptors in FitSNAP. DS further aided in training and development. NCN trained POD potentials and aided with the LAMMPS implementation in the ML-POD package. KG and AH helped curate LGPS training data and helped with mass diffusion calculations. APT and MAW led the development of FitSNAP and LAMMPS to support new features seen in this study.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rohskopf, A., Goff, J., Sema, D. et al. Exploring model complexity in machine learned potentials for simulated properties. Journal of Materials Research 38, 5136–5150 (2023). https://doi.org/10.1557/s43578-023-01152-0

DOI: https://doi.org/10.1557/s43578-023-01152-0