Abstract

Implementing Artificial Intelligence for chemical applications provides a wealth of opportunity for materials discovery, healthcare and smart manufacturing. For such applications to be successful, it is necessary to translate the properties of molecules into a digital format so they can be passed to the algorithms used for smart modelling. The literature reports many different strategies for this task, yet significant limitations remain. To overcome these challenges, we present two-dimensional images of chemical structures as molecular representations. This methodology was evaluated against other techniques in both classification and regression tasks. Images unlocked (1) superior augmentation strategies, (2) application of specialist network architectures and (3) transfer learning, all contributing to superior performance without requiring prior specialised knowledge of cheminformatics. This work takes advantage of image feature maps which do not rely on chemical properties and so can represent multi-component systems without further property calculations.

Introduction

Accurately representing the properties of molecules is a critical challenge in the adoption of Artificial Intelligence (AI) for chemical applications. Molecular representation is the process of capturing complex molecular detail and converting it to a machine-readable form that can be used as input for AI algorithms. Approaches to molecular representation include molecular descriptors, fingerprints, Simplified Molecular Input Line Entry System (SMILES), and molecular graphs, with information stored in a variety of encoded file formats as reviewed by [1]. These approaches vary in success but are all difficult for a non-specialist user to implement and often require significant computational resources. For an algorithm to make use of the input, the representation of a molecule must either effectively identify key properties that can be correlated to a target, perhaps on a multidimensional scale, or determine a degree of structural similarity between input molecules. In this work, the networks extract feature maps from images, and these maps do not rely on chemical structure or property details.

The use of AI is especially relevant to industrial manufacturing of chemical products, in particular pharmaceuticals [2]. As focus shifts towards Industry 4.0, companies are striving for the widespread adoption of AI to overcome high attrition rates and achieve overall more sustainable manufacturing approaches [3]. Therefore, as a case study, to showcase the potential of image inputs, two open-source datasets were selected each representing key challenges in chemical manufacturing. As pharmaceuticals account for a large portion of the research in this space, these sets were chosen to focus on key areas of interest in solid-state chemistry, a critical step in the drug discovery process.

Solid form impacts both the manufacturing process and efficacy of pharmaceutical products [4, 5]. Crystallisation is used as a primary purification technique to isolate Active Pharmaceutical Ingredients (API) during synthesis. Altering crystallisation conditions has the potential to impact both the physical and chemical properties of the solid-state API [6]. Furthermore, manufacturing processes must consider the solid form, as downstream processing relies heavily on bulk properties such as particle size and flowability [7]. A further level of complexity can be introduced through the synthesis of Co-Crystals (CC)s [8]. These multi-component materials can be carefully designed to offer increased solubility, bioavailability, and stability [9]. Due to the vast degree of variation which can be induced by changing the crystallisation components and methods, the process must be carefully engineered to produce the most desirable critical quality attributes.

Molecular descriptors are often the first choice for molecular representation in chemical applications of machine learning. Reasons for this include ready availability from open-source software packages and their growing historical record of publication. Examples that focus on the solid form engineering applications relevant to the case study presented in this work include crystallisation propensity, CC propensity, solubility, and even amorphous properties such as glass forming ability [10,11,12,13,14,15,16]. Descriptors represent tangible molecular properties that a user can readily translate into practical terms which can be controlled experimentally to affect the application’s target [17]. This is not a perfect scenario, however, as searching vast multidimensional inputs makes it difficult both to gather enough data to cover the search space and to identify the specific properties that matter. If feature importance rankings are desirable, one is also unable to use dimensionality reduction to combat such limitations, as the original features are not preserved in such methods. As work into molecular representation has advanced, other methods of generating meaningful representations have been reported, which generate varying numbers of molecular descriptors [18], create unique molecular fingerprints [19, 20], or generate features through unsupervised learning [21]. In addition, the use of molecular graphs has attracted recent attention as discussed by [1]. Although they are promising candidates for molecular representation, molecular graphs have been excluded from this study due to the lack of evidence for their use in representing multi-component chemical systems. Furthermore, graphs have been identified as computationally demanding and do not offer the wider accessibility to AI methods that the authors hope using images can provide. The literature suggests that results vary depending on the choice of molecular representation used and so comparison against all suitable methods was essential for any new method [22]. To date, chemical applications of AI typically focus on testing different machine-learning or deep-learning algorithms and, as such, little consideration is given to whether the information within the chemical representation is optimal.

When testing different molecular representations, capturing Three-Dimensional (3D) information, a feature heavily dependent on molecular conformation, is particularly challenging. Most software implementations use SMILES as inputs, which are Two-Dimensional (2D), thus forcing 3D information to be approximated from a predicted molecular conformation rather than the one observed experimentally [23]. SMILES is also challenging to work with as multiple SMILES can represent the same molecule; to overcome this, canonical SMILES was proposed [24]. It should be noted that the different representations are not necessarily detrimental to performance. This has even been leveraged as an augmentation strategy when training models, and so should not alone be a reason to avoid such notation [25]. Finally, and most notably, molecular representation software often fails for certain molecules, which is unhelpful when generating training data and even more so when testing molecules of interest in a deployment scenario.

When considering the context of this work, there are yet further limitations to consider. For tasks involving multiple components, such as CC prediction, many representations often fall short in their ability to represent the system as a whole, as the employed methods typically see a simple concatenation of the individual component descriptor sets. This fails to account for any emergent properties present as a result of the mixed components. Multicomponent solvents are a prominent example of this issue, where using descriptors of each component does not accurately describe the properties of the mixture itself [26]. Images are able to overcome this limitation, as the feature map extracted by the convolutional layers is independent of the chemical properties, and as such, there is no information loss or misrepresentation during the concatenation process. This means that there is no need to calculate emergent chemical properties to use as descriptor style features for predicting a desired target.

This work takes its inspiration from the use of deep learning for non-traditional image recognition tasks. Such applications have been showcased by [27] in their book, including sound classification, fraud detection, and malware identification. These examples highlight the potential for representing data as images, even when doing so is not an immediately obvious step. By using images to represent data, transfer learning can be implemented to take advantage of pre-trained network architectures that have been rigorously researched and assessed for their capability to accurately classify images. Assessment for such networks can be seen in the annual ImageNet Large-Scale Visual Recognition Challenge [28]. Combining the simplicity of generating chemical structures with these specialist network architectures offers vast potential in improving the performance of intelligent models both within solid form engineering and in wider chemical applications. At the time of writing, literature has recorded two examples of images used as inputs to models for chemical applications of deep learning [29, 30].

In this work, transfer learning with ResNet architectures was applied for improved performance. We provide, for the first time, comprehensive comparisons to other methods of molecular representation using publicly available datasets. Previously untested datasets were used to prove that the methods can be extended to new applications as well as showing that images are suitable inputs to deep-learning models in both classification and regression tasks. Previous work assesses convolutional neural networks against other machine-learning methods, but there is no comparison between molecular inputs. Finally, we demonstrate the modelling of multi-component systems where two inputs must be mapped to one output, something that, to the best of our knowledge, is as yet unseen in the chemical literature. To overcome the limitations associated with current molecular representations, this work demonstrates that the use of images representing a molecule’s skeletal structure captures sufficient, relevant features. This representation is not only in a machine-readable format, but the images can easily be generated by a user through the use of readily available molecular drawing packages. The advantages of image inputs were evaluated by comparing the performance against published models with a number of different molecular representations. All the datasets and methods used to assess performance in this work have been made open-source and are accessible alongside their respective publications.

Results and discussion

Model performance

Metrics for all of the models are shown in Table 1. In all cases, the ResNet models with augmentations outperformed all other models.

Attributing the performance to the use of images alone would paint a misleading picture. As previously mentioned, ResNet architectures are highly specialised for their intended deep-learning applications which will undoubtedly contribute to a portion of the observed performance gain. Further consideration must also be assigned to the additional advantages that are available through the use of augmentations that can be leveraged when working with images as model inputs. Data augmentation is known to improve a model’s ability to generalise. When augmenting the inputs, a user is artificially enlarging both the diversity and the size of the training data which in turn improves its performance and reduces the risk of overfitting [31]. The value in these augmentations is made especially clear when looking at the solubility data set, where the model performs worse than those with other inputs when augmentations are not used. In both data sets there is a significant performance gain suggesting that augmentation strategies should be common practice when using image models.

During training, the models used learning rate decay and as such it is essential to consider the risk of overfitting. Figure 1 shows the loss for the validation and training sets during the training cycle. As expected, the training loss gradually reduces over time; however, it is observed that towards the end of the training process, there is an increase in validation loss, which is indicative of overfitting. For this reason, it is essential to stop training at a suitable point so as to avoid modelling noise in the data.
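The stopping criterion described above can be illustrated with a patience-based early-stopping rule. This is a minimal sketch under stated assumptions, not the procedure used in this work: the function name `best_stopping_epoch`, the `patience` parameter and the loss values are all hypothetical.

```python
def best_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch with the lowest validation loss,
    ending the scan once the loss has failed to improve for
    `patience` consecutive epochs."""
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch


# Hypothetical validation losses: falling, then rising as the model overfits.
example_val_losses = [0.90, 0.62, 0.48, 0.41, 0.43, 0.47, 0.55]
stop_at = best_stopping_epoch(example_val_losses, patience=2)
```

A larger `patience` tolerates noisier validation curves at the cost of a few extra training epochs.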

Model evaluation

Despite the advantages of image inputs, as with all models, mistakes are present during inference. By visualising the validation examples in which the model was incorrect, the user can begin to identify problematic functional groups or molecular structures. Figure 2 shows validation examples in which the model was incorrect or uncertain about its prediction, displaying the input image with the predicted label, actual label, loss, and the probability which describes how certain the model is of its prediction. In the bottom right case, the model gets the prediction correct; however, it is uncertain and hence the result still appears among the top candidates to assess. The molecules displayed are taken from the final validation pass of the first iteration of the cross-validation process.
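The ranking behind this kind of inspection can be sketched as follows. The example assumes a binary classifier that outputs the probability of class 1 and uses binary cross-entropy as the per-example loss; the function name `top_losses`, the molecule identifiers and the probabilities are hypothetical and are not taken from the models in this work.

```python
import math


def top_losses(probabilities, labels, names, k=3):
    """Rank validation examples by per-example binary cross-entropy.

    probabilities: predicted probability of class 1 for each example
    labels:        true class (0 or 1) for each example
    names:         identifier for each example (e.g. a SMILES string)
    Returns the k highest-loss examples as tuples of
    (name, predicted label, actual label, loss, confidence).
    """
    rows = []
    for p, y, name in zip(probabilities, labels, names):
        p = min(max(p, 1e-7), 1 - 1e-7)  # clamp to avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
        predicted = 1 if p >= 0.5 else 0
        confidence = p if predicted == 1 else 1 - p
        rows.append((name, predicted, y, loss, confidence))
    rows.sort(key=lambda row: row[3], reverse=True)
    return rows[:k]


# Hypothetical predictions for four validation examples.
worst = top_losses(
    probabilities=[0.95, 0.10, 0.55, 0.30],
    labels=[1, 1, 1, 0],
    names=["mol_a", "mol_b", "mol_c", "mol_d"],
    k=2,
)
```

In this toy case the second-ranked example is predicted correctly but with low confidence, which is how a correct prediction can still appear among the highest losses.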

In the CC dataset, single-ring aromatic compounds with two functional groups attached to the ring (e.g. 3-Methylbenzamide) appear to cause problems for the model. Comparing the image models with and without augmentations applied suggests that the augmentation strategy was not entirely effective in resolving this issue, as these structures appear in both cases. A higher-level analysis suggests that the lower molecular weight structures, being those with simpler structures, are harder to predict accurately. This follows logically, as simpler structures have fewer unique details and so prove more difficult for the model to differentiate. Analysis methods like this are a clear advantage when compared to assessing vast descriptor tables, and the conclusions can be used to inform further experimental study or to design new augmentation strategies that minimise the errors in predictions.

As the domain of the different inputs was not the same, it was important that testing was not limited exclusively to deep-learning methods. Experience and literature show that when working with tabular data, random forests often match or outperform neural networks [32]. This is especially apparent when working with datasets of limited size, or where the domain of the data prevents the use of domain-specific network architectures, as is the case for tabular data [33]. Neural networks have seen vast success in applications including image recognition, speech recognition, and natural language processing; however, when working with tabular data, they present limitations. As such, measuring the advantages of using images and residual networks against random forest models was key to demonstrating that there truly is an advantage to the proposed method.

Input generation

Using meaningful and unique chemical identifiers remains a challenge when passing molecular information to a computer. In this work, SMILES was used to uniquely identify each molecule. Despite its popularity, SMILES still presents issues, especially when it is the starting point for representation calculations. SMILES is a 2D representation which lacks any kind of 3D conformational detail. As a result, when calculating 3D descriptors, assumptions must be made, thus introducing uncertainty. Although this uncertainty is reproducible and consistent, it impacts how meaningful the features can be, something especially important to consider if a representation is used to correlate molecular properties to a given target. 3D factors affect the properties of a molecule and so it is important that a user gives these details to the model with the greatest possible degree of accuracy.

In contrast, using images removes the challenge of extrapolating the dimensionality as they are, like the SMILES codes, 2D. Images are significantly less reliant on exact conformations for accurate representations compared to descriptor calculations, as different conformations of the molecule can be accounted for through careful selection of appropriate image augmentation steps. Furthermore, images rely on the network to extract meaningful features rather than providing specific chemical property information that is challenging to get completely correct. It is reasonable to argue that 2D images also lack the 3D chemical information that descriptors do, but the contrast in approach must be considered here. In a descriptor-style model, the algorithm aims to correlate chemical properties to the output, whereas in the image case, it is the feature map resulting from the convolutional operations that is correlated to the output. As the convolutional features are not reliant on any chemical information and give no consideration to the context of the image they act upon, missing the 3D chemical detail is unlikely to limit the predictive power. Passing 3D molecular structures as inputs could well offer advantages but the increase in complexity and computational demand would be significant.

Data pre-processing and augmentations

The importance of careful image generation cannot be overstated in this work. Software packages with drawing tools or the ability to produce images are an important part of generating the training data. For this work, RDKit was specifically chosen to ensure all images were reproducible and consistent, whilst also minimising the diversity that can be introduced through hand drawing molecules. Deep-learning methods have been applied to hand-written digits and text with high levels of success despite the associated challenges. However, training an effective image-processing network that is able to translate hand-drawn structures to suitable inputs for property prediction is far beyond the scope of this work. The focus here is on extracting useful features that the network can map to the desired output and, as such, it was necessary to remove as many of the common issues associated with manual drawing as possible to maximise the potential for success.

Even with the use of software tools, there is still a reasonable level of diversity that a user can introduce when drawing a molecule. Therefore, it is essential that the model is trained on more than a single example of each structure so that the network is exposed to as many conformational changes as it is likely to see. This is done through the use of augmentations. When using images as inputs, the variety of augmentation options far exceeds that available for representations captured as tabular data. Despite the wealth of opportunity, image augmentations must be chosen with caution when working with molecules. Having generated uniform inputs where bond lengths, bond angles and functional group structures are reproducible, it is unnecessary to expose the network to overly distorted structures. This is because there is no reason for a distorted structure to be presented during inference, assuming the input generation remains controlled. As a result, any functional group that appears unusual to the network is a result of its genuine structure rather than a poorly constructed input. For this reason, augmentations involving distortions or warping were not included.

There is a strong possibility that translations, rotations or reflections will be seen between molecules containing similar chemical structures. This is especially likely if a user were to generate structures manually using alternative software packages, as there is no universal system for reproducible structure drawing with a predefined orientation. In addition, if structures were to be generated manually, careful consideration must be given to ensure that the final image is the same pixel size as the images used to train the model. Convolutional neural networks are by design equipped to handle translational effects and so augmentations in this work were only applied to cope with the effects of rotations and reflections rather than the position of the molecule in the image [34].

Deployment

Using images of molecular structures as inputs allows end users to easily generate inputs without requiring the programming expertise often needed for descriptor calculations. This approach is not only advantageous due to the accuracy of the predictions, but the simplicity associated with drawing chemical structures (even when software packages such as ChemDraw/RDKit are required) far outweighs the lengthy and complex process of calculating molecular descriptors. Furthermore, manually drawing structures for evaluation removes the challenges associated with failed descriptor calculations that often occur when working with molecules such as ions or salts. It is important that structures are generated using software packages as they maintain a level of consistency in aspects such as bond lengths, bond angles, and functional group notation. This image uniformity is likely important to maintaining performance. To account for a more diverse set of input images, augmentation strategies are recommended for future studies. This is particularly relevant when the images are generated from a wide range of software packages and passed to a single model. Although this was beyond the scope of this work, the authors suggest RanDepict as an effective solution to handling inputs from different drawing packages [35].

For high-throughput evaluation, images are faster to generate than the other inputs used in this work, and having the flexibility to either manually or autonomously generate a user readable input can only be advantageous. When trying to draw conclusions from the predictions, having a structure that users can see and interpret offers far more potential for success than scanning vast tables of seemingly arbitrary descriptor values. With that said, images are not able to correlate specific properties to the target label in the manner in which descriptors can. Such correlations often act across multiple dimensions making interpretation difficult as dimensionality reduction techniques must be implemented, destroying the original features.

Conclusions

This work presents the use of 2D chemical images as molecular representations allowing for the utilisation of transfer learning, taking advantage of specialist deep-learning network architectures and, thus, achieving a superior performance when compared to chemical descriptor models. Images also allow the user to leverage data augmentation which further increases the predictive capabilities of the model by expanding the size and diversity of the datasets. The evaluation process shows the potential of the models in both classification and regression tasks as well as providing benchmarks using other common forms of molecular representation to demonstrate the transfer learning approach has a clear advantage. Images as molecular representations do not require specialist chemometrics understanding. We foresee that this methodology will address limitations in descriptor and fingerprint methods widening the scope for the application of AI in the materials discovery community.

Methods

Datasets

The datasets selected represent key areas of interest in the field of chemical manufacturing and can be used as examples of both classification and regression tasks. The datasets in this work include aqueous solubility and co-crystal propensity via mechanochemistry, each regarding small organic molecules.

Aqueous solubility

This dataset contains unique SMILES codes and their corresponding logS solubility values in water at 25 °C. The full dataset used in this work was a combination of the open-source AqSolDB [36] and the data published by [37]. Cleaning steps were applied to remove any repeated SMILES codes.

Co-crystal propensity via mechanochemistry

This dataset published by [14] offers 1000 co-crystallisation events and records their outcome as determined by powder X-ray diffraction. Crystalline products were recorded as 1 and amorphous products recorded as 0.

Data preparation

SMILES codes were used as unique chemical identifiers in all cases. These were employed as inputs when generating all of the representations used. The images were generated systematically using the RDKit cheminformatics Python package (https://github.com/rdkit/rdkit). Both the solubility images and CC images were generated at 250 × 250 pixels. In the CC dataset, where two input molecules make up each data point, images were generated for each molecule individually and then stacked one above the other, resulting in a 250 × 500 pixel image (see Fig. 3). The image sizes were chosen to balance the computation time required for training and the clarity of the molecular structure in the image. Using larger images gave rise to excessive white space, which in some cases impacted performance. Smaller images could not be easily interpreted by the end user, a limitation that significantly detracts from the interpretability of the predictions. It is important to note that preliminary work found using greyscale images provided no performance improvement; therefore, only colour images were used in this study.
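The stacking step for two-component inputs can be illustrated without any imaging library. The sketch below is an assumption-laden illustration rather than the code used in this work: it replaces the RDKit-rendered colour images with single-channel placeholder grids, and the helper names `blank_image`, `stack_vertically` and `image_size` are hypothetical.

```python
def blank_image(width, height, fill=255):
    """Create a placeholder single-channel image as a list of pixel rows."""
    return [[fill] * width for _ in range(height)]


def stack_vertically(top, bottom):
    """Place `top` above `bottom`; both must share the same width."""
    if len(top[0]) != len(bottom[0]):
        raise ValueError("images must have the same width to be stacked")
    return [row[:] for row in top] + [row[:] for row in bottom]


def image_size(image):
    """Return (width, height) in pixels."""
    return len(image[0]), len(image)


# Placeholders standing in for the two rendered 250 x 250 component images.
component_1 = blank_image(250, 250, fill=255)
component_2 = blank_image(250, 250, fill=0)
pair_image = stack_vertically(component_1, component_2)
```

Stacking keeps each component at its original resolution, so the combined input is 250 pixels wide and 500 pixels tall.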

For assessing the model against literature methods, the following input styles were used:

- Spectrophore [19]
- PubChem Fingerprint [38]
- Extended Connectivity Fingerprint (ECFP) [20]
- MACCS Key Fingerprint [39]
- Mol2Vec [21]
- RDKit Descriptors (https://www.rdkit.org/)
- Mordred Chemical Descriptors [18]

Mordred descriptors were generated using the Mordred Python package (https://github.com/mordred-descriptor/mordred). All other non-image representations were generated with DeepChem (https://github.com/deepchem/deepchem) and its inbuilt functionality [40]. In this work, any single descriptor that failed for all compounds was removed. In the case of the CC dataset, descriptors were individually calculated for each component and then combined through concatenation. Cleaning was applied independently for every representation calculated in an effort to minimise the number of descriptors lost to failed calculations. Where whole molecules failed to calculate they were removed. This changed the number of training examples; however, given the change in size was minimal, the benchmark was included. This was not true for the PubChem fingerprint on the solubility set where the failed calculation rate was too high to remain a useful benchmark.
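The cleaning and concatenation steps described above can be sketched on a small descriptor table. This is a minimal illustration assuming failed calculations are stored as `None`; the function names, compound identifiers and descriptor names (`d1`, `d2`, `d3`) are hypothetical and do not correspond to the Mordred or DeepChem APIs.

```python
def drop_failed_descriptors(table):
    """Remove any descriptor (column) whose calculation failed (None)
    for every compound in the table.

    table: dict mapping compound identifier -> dict of descriptor values
    """
    names = set()
    for values in table.values():
        names.update(values)
    kept = sorted(
        name for name in names
        if any(values.get(name) is not None for values in table.values())
    )
    return {cid: {n: values.get(n) for n in kept} for cid, values in table.items()}


def drop_failed_molecules(table):
    """Remove compounds for which every descriptor failed."""
    return {
        cid: values for cid, values in table.items()
        if any(v is not None for v in values.values())
    }


def concatenate_pair(table, first, second):
    """Build one feature vector for a two-component system by joining
    the descriptor vectors of its components in a fixed order."""
    names = sorted(table[first])
    return [table[first][n] for n in names] + [table[second][n] for n in names]


# Hypothetical raw table: d2 fails everywhere, compound C fails entirely.
raw = {
    "A": {"d1": 1.0, "d2": None, "d3": 0.5},
    "B": {"d1": 2.0, "d2": None, "d3": 0.1},
    "C": {"d1": None, "d2": None, "d3": None},
}
clean = drop_failed_molecules(drop_failed_descriptors(raw))
pair_vector = concatenate_pair(clean, "A", "B")
```

Because the pair vector is a plain concatenation, it carries no information about how the two components interact, which is the limitation of descriptor inputs for multi-component systems discussed earlier.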

Data augmentation

All of the data augmentation methods outlined below were applied only to the training dataset. Due to the nature of the different datasets, augmentation was applied independently to each dataset as outlined.

Solubility data augmentation

Rotations were applied (6 × 30°) to each training example first, and then the resulting images were subjected to three reflections. These reflections occurred along the horizontal axis, along the vertical axis and along both axes together. A full example of the augmentation process is shown in Fig. 4.
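The combination of rotations and reflections can be enumerated as below. Two assumptions are made that the text does not confirm: the six rotations are taken as 0°, 30°, ..., 150°, and each rotated image is kept alongside its three reflected copies. The names `augmentation_plan` and `reflect` are hypothetical, and the rotation itself is left to an image library.

```python
from itertools import product

ROTATION_ANGLES = [step * 30 for step in range(6)]  # 0, 30, ..., 150 degrees (assumed)
REFLECTIONS = ["none", "horizontal", "vertical", "both"]


def augmentation_plan(angles=ROTATION_ANGLES, reflections=REFLECTIONS):
    """List every (rotation angle, reflection) pair for one training image.
    The rotation is applied first, then the reflection."""
    return list(product(angles, reflections))


def reflect(image, mode):
    """Reflect an image stored as a list of pixel rows.

    "horizontal" mirrors about the horizontal axis (top <-> bottom),
    "vertical" mirrors about the vertical axis (left <-> right),
    "both" applies the two reflections together.
    """
    if mode == "none":
        return [row[:] for row in image]
    if mode == "horizontal":
        return [row[:] for row in reversed(image)]
    if mode == "vertical":
        return [row[::-1] for row in image]
    if mode == "both":
        return [row[::-1] for row in reversed(image)]
    raise ValueError(f"unknown reflection mode: {mode}")


plan = augmentation_plan()
tiny = [[1, 2], [3, 4]]
```

Under these assumptions each training image yields 6 × 4 = 24 variants; if the unreflected rotations were not retained, the count would be 6 × 3 = 18.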

Co-crystal data augmentation

Components were additionally stacked in both possible positions, namely component 1 above component 2 and vice versa. Following this, all of the augmentations outlined for the solubility dataset were carried out (Fig. 4).
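The additional stacking-order step can be sketched as an enumeration of every variant generated for one co-crystal pair. The rotation angles and reflection modes listed are assumptions, since the text does not state whether unrotated or unreflected copies are retained, and the names `stacking_orders`, `cocrystal_plan`, `"api"` and `"coformer"` are hypothetical.

```python
def stacking_orders(component_1, component_2):
    """Return both vertical arrangements of a two-component pair,
    each as a (top, bottom) tuple."""
    return [(component_1, component_2), (component_2, component_1)]


def cocrystal_plan(component_1, component_2, angles, reflections):
    """Enumerate (top, bottom, angle, reflection) for one co-crystal pair:
    both stacking orders, each followed by every rotation/reflection pair."""
    return [
        (top, bottom, angle, reflection)
        for top, bottom in stacking_orders(component_1, component_2)
        for angle in angles
        for reflection in reflections
    ]


# Assumed rotation angles (degrees) and reflection modes.
angles = [0, 30, 60, 90, 120, 150]
reflections = ["none", "horizontal", "vertical", "both"]
plan = cocrystal_plan("api", "coformer", angles, reflections)
```

Presenting both orders means the network is not tied to which component happens to sit on top, so a pair gives the same training signal regardless of the order in which its components are supplied.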

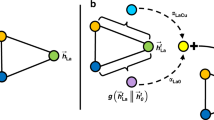

Model implementation

A ResNet architecture was used to evaluate the performance of images as inputs. Full details on ResNet architectures, along with a comprehensive list of the model’s performance in competitions, can be seen in the original publication showcasing their use [41]. The network was loaded with pre-trained weights from the ImageNet dataset, and two untrained fully connected layers were added at the end of the network (see Fig. 5).

The random forest algorithm was implemented using the scikit-learn Python package.

Evaluation and metrics

For performance evaluation, each dataset was split into training and validation subsets using tenfold cross-validation. In every case, three independent trials were carried out to ensure any differences in performance were both statistically significant and not arising due to the seeding in the random aspects of the models. All the metrics recorded were calculated by taking the mean of the three trials. The metrics used to assess the performance were accuracy (ACC) and Area Under the Receiver Operating Characteristic Curve (ROC) for the classification task. In the regression task, R², mean-squared error (MSE), root-mean-squared error (RMSE), and mean absolute error (MAE) were recorded. These metrics were chosen as they are all commonly used in the literature for evaluating AI models.
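For reference, the metrics listed above can be written out in a few lines. This is a plain-Python sketch of the standard definitions, not the evaluation code used in this work; the function names and the toy values are hypothetical, and the ROC area is computed with the rank (Mann-Whitney) formulation.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, scores):
    """Area under the ROC curve: the probability that a random positive
    is scored above a random negative, counting ties as one half."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def regression_metrics(y_true, y_pred):
    """Return R2, MSE, RMSE and MAE for paired true/predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    residual_ss = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    total_ss = sum((t - mean_true) ** 2 for t in y_true)
    mse = residual_ss / n
    return {
        "R2": 1 - residual_ss / total_ss,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAE": sum(abs(e) for e in errors) / n,
    }


def mean_over_trials(values):
    """Average one metric across independent trials."""
    return sum(values) / len(values)


# Toy values for illustration only.
reg = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0])
auc = roc_auc([1, 0, 1, 0], [0.9, 0.2, 0.4, 0.6])
acc = accuracy([1, 0, 1, 0], [1, 0, 0, 0])
```

In the protocol described above, each metric would be computed on every validation fold and then averaged across the three trials with a helper such as `mean_over_trials`.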

Code availability

The source code for this project is available at https://github.com/MRW-Code/images_as_molecular_representations.

References

L. David, A. Thakkar, R. Mercado, O. Engkvist, Molecular representations in AI-driven drug discovery: a review and practical guide. J. Cheminform. 12(1), 56 (2020). https://doi.org/10.1186/s13321-020-00460-5

S. Nagaprasad, D.L. Padmaja, Y. Qureshi, S.L. Bangare, M. Mishra, B.D. Mazumdar, Investigating the impact of machine learning in pharmaceutical industry. J. Pharm. Res. Int. 33, 6–14 (2021). https://doi.org/10.9734/JPRI/2021/v33i46A32834

K.-K. Mak, M.R. Pichika, Artificial intelligence in drug development: present status and future prospects. Drug Discov. Today 24, 773–780 (2019). https://doi.org/10.1016/j.drudis.2018.11.014

L.S. Taylor, D.E. Braun, J.W. Steed, Crystals and crystallization in drug delivery design. Cryst. Growth Des. 21(3), 1375–1377 (2021). https://doi.org/10.1021/acs.cgd.0c01592

C.R. Gardner, C.T. Walsh, Ö. Almarsson, Drugs as materials: valuing physical form in drug discovery. Nat. Rev. Drug Discov. 3(11), 926–934 (2004). https://doi.org/10.1038/nrd1550

J.K. Haleblian, Characterization of habits and crystalline modification of solids and their pharmaceutical applications. J. Pharm. Sci. 64(8), 1269–1288 (1975). https://doi.org/10.1002/jps.2600640805

N. Pudasaini, P.P. Upadhyay, C.R. Parker, S.U. Hagen, A.D. Bond, J. Rantanen, Downstream processability of crystal habit-modified active pharmaceutical ingredient. Org. Process Res. Dev. 21(4), 571–577 (2017). https://doi.org/10.1021/acs.oprd.6b00434

N. Qiao, M. Li, W. Schlindwein, N. Malek, A. Davies, G. Trappitt, Pharmaceutical cocrystals: an overview. Int. J. Pharm. 419(1), 1–11 (2011). https://doi.org/10.1016/j.ijpharm.2011.07.037

D.J. Good, N. Rodríguez-Hornedo, Solubility advantage of pharmaceutical cocrystals. Cryst. Growth Des. 9(5), 2252–2264 (2009). https://doi.org/10.1021/cg801039j

A. Ghosh, L. Louis, K.K. Arora, B.C. Hancock, J.F. Krzyzaniak, P. Meenan, S. Nakhmanson, G.P.F. Wood, Assessment of machine learning approaches for predicting the crystallization propensity of active pharmaceutical ingredients. CrystEngComm 21(8), 1215–1223 (2019). https://doi.org/10.1039/C8CE01589A

J.G.P. Wicker, R.I. Cooper, Will it crystallise? Predicting crystallinity of molecular materials. CrystEngComm 17(9), 1927–1934 (2015). https://doi.org/10.1039/C4CE01912A

A. Alhalaweh, A. Alzghoul, W. Kaialy, D. Mahlin, C.A.S. Bergström, Computational predictions of glass-forming ability and crystallization tendency of drug molecules. Mol. Pharm. 11(9), 3123–3132 (2014). https://doi.org/10.1021/mp500303a

J.G.P. Wicker, L.M. Crowley, O. Robshaw, E.J. Little, S.P. Stokes, R.I. Cooper, S.E. Lawrence, Will they co-crystallize? CrystEngComm 19(36), 5336–5340 (2017). https://doi.org/10.1039/C7CE00587C

J.R. Gröls, B. Castro-Dominguez, Mechanochemical co-crystallization: insights and predictions. Comput. Chem. Eng. (2021). https://doi.org/10.1016/j.compchemeng.2021.107416

D.S. Palmer, N.M. O’Boyle, R.C. Glen, J.B.O. Mitchell, Random forest models to predict aqueous solubility. J. Chem. Inf. Model. 47(1), 150–158 (2007). https://doi.org/10.1021/ci060164k

R.M. Bhardwaj, A. Johnston, B.F. Johnston, A.J. Florence, A random forest model for predicting the crystallisability of organic molecules. CrystEngComm 17(23), 4272–4275 (2015). https://doi.org/10.1039/C4CE02403F

T. Barnard, H. Hagan, S. Tseng, G.C. Sosso, Less may be more: an informed reflection on molecular descriptors for drug design and discovery. Mol. Syst. Des. Eng. 5(1), 317–329 (2020). https://doi.org/10.1039/C9ME00109C

H. Moriwaki, Y.-S. Tian, N. Kawashita, T. Takagi, Mordred: a molecular descriptor calculator. J. Cheminform. 10(1), 4 (2018). https://doi.org/10.1186/s13321-018-0258-y

R. Gladysz, F.M.D. Santos, W. Langenaeker, G. Thijs, K. Augustyns, H.D. Winter, Spectrophores as one-dimensional descriptors calculated from three-dimensional atomic properties: applications ranging from scaffold hopping to multi-target virtual screening. J. Cheminform. 10, 9 (2018). https://doi.org/10.1186/s13321-018-0268-9

D. Rogers, M. Hahn, Extended-connectivity fingerprints. J. Chem. Inf. Model. 50, 742–754 (2010). https://doi.org/10.1021/ci100050t

S. Jaeger, S. Fulle, S. Turk, Mol2vec: unsupervised machine learning approach with chemical intuition. J. Chem. Inf. Model. 58, 27–35 (2018). https://doi.org/10.1021/acs.jcim.7b00616

A. Bender, How similar are those molecules after all? Use two descriptors and you will have three different answers. Expert Opin. Drug Discov. 5(12), 1141–1151 (2010). https://doi.org/10.1517/17460441.2010.517832

D. Weininger, SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28(1), 31–36 (1988)

N.M. O’Boyle, Towards a Universal SMILES representation—a standard method to generate canonical SMILES based on the InChI. J. Cheminform. 4(1), 22 (2012). https://doi.org/10.1186/1758-2946-4-22

E.J. Bjerrum, SMILES enumeration as data augmentation for neural network modeling of molecules. CoRR (2017). arXiv:1703.07076

E. Torabian, M.A. Sobati, New structure-based models for the prediction of flash point of multi-component organic mixtures. Thermochim. Acta 672, 162–172 (2019). https://doi.org/10.1016/j.tca.2018.11.012

J. Howard, S. Gugger, Deep Learning for Coders with Fastai and PyTorch (O’Reilly Media, Sebastopol, 2020), pp. 36–39

O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115(3), 211–252 (2015)

T. Shi, Y. Yang, S. Huang, L. Chen, Z. Kuang, Y. Heng, H. Mei, Molecular image-based convolutional neural network for the prediction of ADMET properties. Chemometr. Intell. Lab. Syst. 194, 103853 (2019). https://doi.org/10.1016/J.CHEMOLAB.2019.103853

Y. Matsuzaka, Y. Uesawa, Optimization of a deep-learning method based on the classification of images generated by parameterized deep snap a novel molecular-image-input technique for quantitative structure-activity relationship (QSAR) analysis. Front. Bioeng. Biotechnol. 7, 65 (2019)

C. Shorten, T.M. Khoshgoftaar, A survey on image data augmentation for deep learning. J. Big Data 6(1), 60 (2019). https://doi.org/10.1186/s40537-019-0197-0

M. Fernández-Delgado, E. Cernadas, S. Barro, D. Amorim, Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 15, 3133–3181 (2014)

S. Wang, C. Aggarwal, H. Liu, Random-forest-inspired neural networks. ACM Trans. Intell. Syst. Technol. (2018). https://doi.org/10.1145/3232230

S. Albawi, T.A. Mohammed, S. Al-Zawi, Understanding of a convolutional neural network, in 2017 International Conference on Engineering and Technology (ICET) (2017), pp. 1–6. https://doi.org/10.1109/ICEngTechnol.2017.8308186

H.O. Brinkhaus, K. Rajan, A. Zielesny, C. Steinbeck, Randepict—random chemical structure depiction generator. ChemRxiv (2022). https://doi.org/10.26434/chemrxiv-2022-t1kbb

M.C. Sorkun, A. Khetan, S. Er, AqSolDB, a curated reference set of aqueous solubility and 2D descriptors for a diverse set of compounds. Sci. Data 6(1), 143 (2019). https://doi.org/10.1038/s41597-019-0151-1

Q. Cui, S. Lu, B. Ni, X. Zeng, Y. Tan, Y.D. Chen, H. Zhao, Improved prediction of aqueous solubility of novel compounds by going deeper with deep learning. Front. Oncol. (2020). https://doi.org/10.3389/fonc.2020.00121

S. Kim, Exploring chemical information in PubChem. Curr. Protoc. (2021). https://doi.org/10.1002/cpz1.217

J.L. Durant, B.A. Leland, D.R. Henry, J.G. Nourse, Reoptimization of MDL keys for use in drug discovery. J. Chem. Inf. Comput. Sci. 42, 1273–1280 (2002). https://doi.org/10.1021/ci010132r

B. Ramsundar, P. Eastman, P. Walters, V. Pande, K. Leswing, Z. Wu, Deep Learning for the Life Sciences (O’Reilly Media, Sebastopol, 2019). https://www.amazon.com/Deep-Learning-Life-Sciences-Microscopy/dp/1492039837

K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016), pp. 770–778

Acknowledgments

The authors would like to thank Dr Tom Fincham Haines and the Department of Computer Science at the University of Bath for their support in accessing the hardware resources needed for this work.

Funding

The authors would like to acknowledge the PhD studentship funded by CMAC Future Manufacturing Research Hub and the Centre for Sustainable and Circular Technologies at the University of Bath.

Author information

Contributions

MRW conceived the idea, developed the code, ran the computations, and compiled the results. UMH assisted in developing the method of representing two-component systems. MRW took the lead in preparing the manuscript with support from BCD; all other authors provided critical feedback leading to the final manuscript. BCD, UMH, and CCW supervised the project. BCD and CCW were responsible for the PhD funding to support MRW.

Ethics declarations

Conflict of interest

The authors declare that there are no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wilkinson, M.R., Martinez-Hernandez, U., Wilson, C.C. et al. Images of chemical structures as molecular representations for deep learning. Journal of Materials Research 37, 2293–2303 (2022). https://doi.org/10.1557/s43578-022-00628-9

DOI: https://doi.org/10.1557/s43578-022-00628-9