Abstract

In this themed collection, spread across two issues, we review some of the critical recent progress in applying artificial intelligence and machine learning (AI/ML), which we collectively refer to as ML, to computational materials science and to materials science more broadly. We have assembled articles from leaders in this broad domain. Topics include ML approaches for understanding and driving electron microscopy; the design of energy materials and the discovery of principles and materials relevant to designing the materials of the future; crystal nucleation and growth; the use of ML to describe force fields governing material and molecular behavior; and others.

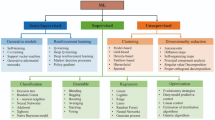

Graphical abstract


The materials science community across academia, national laboratories, and industry has long benefited from, and contributed to, the development of increasingly sophisticated quantitative methods. More recently, data-driven approaches in materials science have made heavy use of machine learning (ML) and artificial intelligence (AI). These approaches have made it possible to reveal predictive patterns in the triad of structure–property–function relationships across all branches of materials science and engineering. The synergy between materials science and data science continues to flourish, driven by tremendous advances in computing power, software, and algorithms, as well as by enormous increases in the data available from experiment and simulation. It is hard to say which of the two has expanded more in the last decade: the amount of data available from increasingly sophisticated simulations and experiments, or the sophistication of the ML/AI algorithms available to make sense of those data. We have also witnessed robust developments in which data science and materials experimentation are no longer separate entities but are instead deeply integrated. In these so-called active learning paradigms, data-driven approaches guide further experiments, often in a closed-loop, iterative manner. Such autonomous materials discovery paradigms are enabling researchers to navigate materials space in a high-throughput yet efficient and controlled manner.
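As a rough illustration of the closed-loop idea, the Python sketch below alternates between a surrogate model, a selection rule, and a new "experiment." It is not taken from any article in this collection, and every name in it is hypothetical: `run_experiment` is an assumed one-dimensional stand-in for a measurement or simulation, the surrogate is a deliberately crude nearest-neighbor model whose uncertainty is simply the distance to the closest measured point, and candidates are chosen with an upper-confidence-bound (UCB) rule weighted by an assumed parameter `kappa`.

```python
import math


def run_experiment(x: float) -> float:
    """Hypothetical stand-in for measuring or simulating a property at composition x."""
    return math.exp(-((x - 0.7) ** 2) / 0.02)


def predict(x: float, observed: list[tuple[float, float]]) -> tuple[float, float]:
    """Toy surrogate (an assumption): the mean is the value at the nearest
    measured point, and the uncertainty is the distance to that point."""
    nearest_x, nearest_y = min(observed, key=lambda p: abs(p[0] - x))
    return nearest_y, abs(nearest_x - x)


def active_learning(
    candidates: list[float], n_iterations: int, kappa: float = 2.0
) -> list[tuple[float, float]]:
    """Closed loop: query the surrogate, choose the next candidate, run it, update the data."""
    observed = [(candidates[0], run_experiment(candidates[0]))]
    for _ in range(n_iterations):
        tested = {x for x, _ in observed}
        untested = [x for x in candidates if x not in tested]
        if not untested:
            break  # every candidate has already been measured

        def ucb(x: float) -> float:
            # Upper confidence bound: favor high predicted values and high uncertainty.
            mean, sigma = predict(x, observed)
            return mean + kappa * sigma

        x_next = max(untested, key=ucb)
        observed.append((x_next, run_experiment(x_next)))  # "run" the chosen experiment
    return observed


candidates = [i / 20 for i in range(21)]
history = active_learning(candidates, n_iterations=8)
best_x, best_y = max(history, key=lambda p: p[1])
print(f"tested {len(history)} of {len(candidates)} candidates; best x = {best_x:.2f}")
```

In practice the toy surrogate would be replaced by a probabilistic model (Gaussian processes are a common choice), and `run_experiment` by an instrument or simulation call; `kappa` trades exploration of uncertain regions against exploitation of promising ones.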

The impact of AI/ML is not limited to smarter combinatorial design of materials. It is now also possible to train AI and ML models to gain fundamental understanding and to extract patterns at spatiotemporal scales that were previously inaccessible to conventional computational materials modeling or to the best available theories. Powerful open-source toolkits for AI/ML model training and architecture selection have also made ML more accessible to researchers with diverse training backgrounds. As a result, scientists and engineers in laboratories across the world are identifying ways to incorporate AI and ML into their research.

Nevertheless, some key challenges remain in applying AI and ML to materials discovery. While text-based extraction of prior experimental efforts for data-driven models is maturing,1 progress in the systematic analysis of experimental images is only beginning.2,3,4 Furthermore, because of a bias toward publishing positive results, AI models lack good sources of failed experiments. Prior work has shown that such failed experiments benefit AI models and that human bias can influence the extracted predictions.5,6 Creative strategies have been devised to simulate negative data for classification tasks.7 Within ML for accelerating physics-based modeling, a key outstanding challenge is the quality of the data source. For example, many materials prediction models are trained on approximate density functional theory (DFT) data and therefore inherit the bias of the underlying functional. Although ML-derived functionals represent one emerging strategy to overcome limitations of DFT,8 no one-size-fits-all physics-based model has been established as predictive across materials space. Thus, strategies are needed that incorporate the uncertainty of the physics-based method9 or make recommendations10 about the most suitable method to employ. There is indeed early evidence that AI tools can also support experts in choosing a physics-based methodology. In this issue of MRS Bulletin, we summarize key ways in which researchers are advancing AI despite these challenges.

AI methods are increasingly useful for interpreting, analyzing, and complementing the static and dynamic data sets generated by spectroscopic and microscopic techniques. In this connection, Kalinin et al.11 as well as Chan and co-workers12 review the state of the art in using ML to better understand the huge amounts of data generated by electron and scanning probe microscopy. Their articles show how such understanding can be complemented with theory and leveraged in a closed-loop manner to perform automated microscopy experiments, eventually opening the path toward direct atomic fabrication with active-learning-augmented microscopy.

The contribution by Saar et al.13 examines yet another aspect of how ML, and specifically active learning, is revolutionizing autonomous materials design. They show how ML can be used for autonomous model exploration relevant to materials discovery. They also address the question of ML education for physical sciences students and report their efforts to teach ML to a large number of physical sciences and engineering students. These efforts have involved the creative development and use of the so-called LEGOLAS education kit, a low-cost LEGO-based Autonomous Scientist. Their article appears in the Material Matters column of this issue of MRS Bulletin.

Articles from the groups of Viswanathan14 and Mannodi-Kanakkithodi15 address the broad question of high-throughput materials design using ML. The contribution from Viswanathan and co-workers describes an automated workflow, AutoMat, for discovering electrochemical materials critical to large-scale electrification. AutoMat accelerates the computational steps fundamental to such materials design through a variety of advances in theory, software engineering, and machine learning. The contribution from Mannodi-Kanakkithodi and co-workers provides an overview of high-throughput computations and ML methods for the important problem of halide perovskite discovery. They examine specific approaches that make it possible to rapidly predict various materials properties and to screen enormous chemical spaces. The key approaches they describe tightly integrate DFT simulations with ML.

The article by Ceriotti16 carefully examines the future of simulation itself. It discusses how ML-based theoretical methods will enable molecular simulations with quantum-level accuracy at a cost comparable to that of classical simulations. The article highlights the developments that have made ML-based interatomic potentials a viable option for materials simulations. At the same time, it makes the case that more sophisticated electronic-structure calculations will continue to be needed as we push the boundaries of computational materials science and of what we aim to achieve with it.

ML methods are starting to automate and revolutionize crystal structure prediction, as well as the prediction of stable polymorphs, their free energies, and their kinetics. In this context, the collection includes contributions from the Day group17 and from the groups of Rogal and Sarupria.18 Day and collaborators take a deep dive into crystal structure prediction with ML methods. They cover the progress made and the challenges remaining in multiple aspects of crystal structure prediction, including the evaluation of accurate energies, mapping of the structural landscape, and inverse design of molecules with a target property in mind.

Fundamental to crystallization is nucleation, a prototypical rare event that cannot be simulated with brute-force classical molecular dynamics (MD), even on the fastest available supercomputers. Rogal and Sarupria present the state of the art in specialized yet robust molecular simulations that can reach the experimentally relevant time scales of nucleation and growth. Such simulations now make it possible to directly observe nucleation at all-atom resolution in generic systems and to gain insight into the reaction coordinates, or driving forces, behind nucleation.

Arguably, self-assembly is deeply connected with crystallization, albeit often at different length scales and with different driving forces. The term loosely describes the process by which the constituents of a system organize themselves into highly ordered structures. Huang and co-workers19 describe the use of ML algorithms to develop kinetic network models (KNMs) that aid the interpretation of MD simulations, and they show how KNMs can capture self-assembly processes such as the crystallization of colloidal particles. They also offer an outlook in which deep learning algorithms such as graph neural networks are increasingly exploited to understand and interpret self-assembly events from complex trajectories.

To conclude, the articles in these two issues present an overview of some of the challenges that the computational and broader materials science community can now address with recent AI/ML methods. The articles also discuss numerous open problems and avenues for future research, pointing to a discipline with a vibrant, active future ahead. Naturally, we cannot span the entirety of the field in a limited number of articles, and these issues are in no way meant to be complete. To name a few omissions, one important topic we did not cover is fair, open, and equitable access to data for training ML models; we instead refer readers to a recent excellent overview.20 A second important topic not considered here is the interpretation of AI-based models in materials science.21 Widely used theories such as classical nucleation theory or the Allen–Cahn equation rest on approximations. However, they provide not just predictive power but also intuition into the underlying physics and chemistry. AI and ML tools arguably provide more predictive power than these revered theories, but often at the cost of understanding. Developing interpretable AI models may help bridge this gap. We hope the materials science community finds this collection of articles useful.

Data availability

No data were used in writing this review article.

References

O. Kononova, T. He, H. Huo, A. Trewartha, E.A. Olivetti, G. Ceder, iScience 24, 102155 (2021). https://doi.org/10.1016/j.isci.2021.102155

W. Jiang, E. Schwenker, T. Spreadbury, N. Ferrier, M.K.Y. Chan, O. Cossairt, A two-stage framework for compound figure separation. Preprint arXiv:2101.09903 (2021)

E. Schwenker, W. Jiang, T. Spreadbury, N. Ferrier, O. Cossairt, M.K.Y. Chan, EXSCLAIM!—An automated pipeline for the construction of labeled materials imaging datasets from literature. Preprint arXiv:2103.10631 (2021)

K.T. Mukaddem, E.J. Beard, B. Yildirim, J.M. Cole, J. Chem. Inf. Model. 60, 2492 (2019). https://doi.org/10.1021/acs.jcim.9b00734

P. Raccuglia, K.C. Elbert, P.D.F. Adler, C. Falk, M.B. Wenny, A. Mollo, M. Zeller, S.A. Friedler, J. Schrier, A.J. Norquist, Nature 533, 73 (2016). https://doi.org/10.1038/nature17439

X. Jia, A. Lynch, Y. Huang, M. Danielson, I. Lang’at, A. Milder, A.E. Ruby, H. Wang, S.A. Friedler, A.J. Norquist, J. Schrier, Nature 573, 251 (2019). https://doi.org/10.1038/s41586-019-1540-5

E.L. Cáceres, N.C. Mew, M.J. Keiser, J. Chem. Inf. Model. 60(12), 5957 (2020). https://doi.org/10.1021/acs.jcim.0c00565

J. Kirkpatrick, B. McMorrow, D.H. Turban, A.L. Gaunt, J.S. Spencer, A.G. Matthews, A.J. Cohen, Science 374(6573), 1385 (2021)

P. Pernot, A. Savin, J. Chem. Phys. 148(24), 241707 (2018)

S. McAnanama-Brereton, M.P. Waller, J. Chem. Inf. Model. 58(1), 61 (2018)

S.V. Kalinin, M. Ziatdinov, S.R. Spurgeon, C. Ophus, E.A. Stach, T. Susi, J. Agar, J. Randall, MRS Bull. 47(9) (2022)

D. Unruh, V.S.C. Kolluru, A. Baskaran, Y. Chen, M.K.Y. Chan, MRS Bull. (in press)

L. Saar, H. Liang, A. Wang, A. McDannald, E. Rodriguez, I. Takeuchi, A.G. Kusne, MRS Bull. 47(9) (2022)

E. Annevelink, R. Kurchin, E. Muckley, L. Kavalsky, V. Hegde, V. Sulzer, S. Zhu, J. Pu, D. Farina, M. Johnson, D. Gandhi, A. Dave, H. Lin, A. Edelman, B. Ramsundar, J. Saal, C. Rackauckas, V. Shah, B. Meredig, V. Viswanathan, MRS Bull. (in press)

J. Yang, A. Mannodi-Kanakkithodi, MRS Bull. 47(9) (2022)

M. Ceriotti, MRS Bull. (in press)

R. Clements, J. Dickman, J. Johal, J. Martin, J. Glover, G.M. Day, MRS Bull. (in press)

S. Sarupria, S.W. Hall, J. Rogal, MRS Bull. 47(9) (2022)

B. Liu, Y. Qiu, E.C. Goonetilleke, X. Huang, MRS Bull. 47(9) (2022)

M. Scheffler, M. Aeschlimann, M. Albrecht, T. Bereau, H.J. Bungartz, C. Felser, M. Greiner, A. Groß, C.T. Koch, K. Kremer, W.E. Nagel, Nature 604(7907), 635 (2022)

J. Schmidt, M.R.G. Marques, S. Botti, M.A.L. Marques, NPJ Comput. Mater. 5(1), 83 (2019)

Acknowledgments

This work was supported by the US Department of Energy, Office of Science, Basic Energy Sciences, CPIMS Program, under Award No. DE-SC0021009 (P.T.) and by the Office of Naval Research under Grant No. N00014-20-1-2150 (H.J.K.).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kulik, H.J., Tiwary, P. Artificial intelligence in computational materials science. MRS Bulletin 47, 927–929 (2022). https://doi.org/10.1557/s43577-022-00431-1
