Abstract

Background

Work-related stress is detrimental to individual health and incurs substantial social costs. Interventions to tackle this problem are urgently needed, with mHealth solutions being a promising way of delivering accessible and standardized interventions on a wide scale. This study pilot tests a low-intensive mHealth intervention designed to mitigate the negative consequences of stress through promoting recovery strategies.

Methods

Nursing school students (N = 16) used the intervention for a month. Data were collected immediately before, immediately after, and one month after the end of the intervention. Additionally, intensive longitudinal data were collected daily during the intervention. Primary outcome measures include recruitment and retention rates, engagement with and acceptability of the intervention, and the quality of measurement instruments.

Results

Recruitment and retention rates provide a benchmark: roughly 10–12 times the intended target sample size needs to be invited. Engagement and acceptability metrics are promising overall and point to key areas that need to be adapted to improve the intervention. Measurement quality is acceptable, with instruments mostly functioning as intended.

Conclusion

Results show that the intervention and study protocol are feasible for conducting a randomized controlled trial given a few adjustments. The randomization algorithm needs to match the sample size in order to allocate evenly distributed experimental groups. Acceptability of the intervention may be improved through adapting the recommended recovery strategies. Some additional outcome measures are suggested to provide a more comprehensive picture of intervention effects.

Trial registration

NCT06228495. Registered retrospectively 01/10/2024.

Introduction

Theoretical background

mHealth intervention for work-related stress

Work-related stress is a well-known risk factor for mental ill-health, including burnout syndrome and depression [1, 2]. These health issues are detrimental both to individuals and organizations as they are linked with increased sick leave, turnover rates, and productivity loss [3]. Interventions to prevent this problem are urgently needed to support the health and wellbeing of employees as well as decrease the social costs associated with stress-related health issues.

mHealth solutions are among the most promising options for providing effective, accessible, and scalable interventions in an organizational context [4, 5]. These kinds of interventions have been found to be effective for stress management and related health outcomes [6]. In addition, mHealth interventions are significantly easier to scale and standardize compared with conventional on-site workplace interventions [7, 8]. Given the large number of mobile phone users in today’s world, mHealth solutions provide unique opportunities for making interventions widely available.

One way to mitigate the negative consequences of work-related stress is through interventions promoting recovery strategies—behavioral and psychological strategies that alleviate short-term stress reactions [9]. Different forms of recovery behaviors (e.g., detaching from work and mindfulness) are well-known to have positive effects on stress-related health problems [10]. A behavior change intervention that successfully increases the quantity and quality of recovery strategies may thus be effective in combating the long-term negative effects of stress.

To effectively increase the uptake of recovery strategies, the intervention needs to support behavior change [11]. Behavior change techniques (BCTs), which are replicable components of an intervention designed to enable behavior change, provide a systematic method for designing behavior change interventions. The BCT framework presents a taxonomy of 93 behavior change techniques based on consensus from an expert panel, providing many benefits for the development and evaluation of an intervention [12].

Including BCTs in an intervention (for example self-monitoring, feedback, goal-setting, knowledge-shaping, and rewards) increases the chances that the intervention will successfully induce behavioral change and have a positive effect on intended health outcomes [11]. Additionally, the structure, components, and content of the intervention should be rooted in theory-driven models of habit formation to further support long-term behavior change [13]. For instance, daily reminders which cue self-monitoring at a specific time support developing a habit of daily self-reflection.

Employing the BCT framework also has additional benefits, including improved replicability and faithful implementation [14]. By using replicable and well-defined intervention components it is significantly easier to accurately replicate the intervention in future studies. This aspect of replicability is also important for implementation, ensuring that real-world interventions implemented in workplaces are sufficiently similar to the intervention tested in a study.

The present study

This study presents a preventive, low-intensive mHealth intervention designed to mitigate the negative consequences of work-related stress. Low-intensive interventions and active monitoring are recommended as a first response for subthreshold diagnoses, which are prevalent in the overall population [15, 16]. This aligns with the “stepped care” approach, in which widely accessible and low-intensive initiatives are offered prior to more intensive and costly treatments [17].

The intervention is a mobile application used once daily over a period of four weeks. Each daily interaction takes a few minutes, prompts users to reflect on their current mood, and provides suggestions for a variety of recovery strategies. Thus, users get into a daily habit of self-monitoring their stress and energy levels and receive knowledge about effective tools for managing stress. See the Methods section for more details regarding the intervention.

The motivation of the present study is to pilot test the intervention and a study protocol in preparation for a future randomized controlled trial. Pilot testing the intervention and study procedures at an early stage is critical to identify potential pitfalls that need to be addressed before conducting a full-scale trial [18, 19]. Through investigating the study and intervention in preparatory phases we can refine the study protocol and intervention design in order to maximize the chances of a successful RCT.

A novel aspect of the intervention that needs to be tested is the daily format in which users respond to questionnaires and engage with intervention content every day (see the Methods section for more details on the intervention design). This structure minimizes the effort associated with the intervention and supports a daily habit of employing recovery strategies. Furthermore, the intensive longitudinal design provides a fine-grained view of how each participant's experience changes dynamically over time, providing unique insights into daily fluctuations and the impact of the intervention on an individual level [20].

With mHealth interventions it is also critical to pay attention to constructs such as engagement and acceptability. Engagement with mHealth interventions is notoriously problematic with many studies reporting low engagement rates, negatively impacting the potential effectiveness of the intervention [21, 22]. It is thus important to ensure an engaging and acceptable intervention design to optimize intervention effects.

Notably, engagement and acceptability are complex and overlapping constructs that are used in different ways depending on the scientific field and purpose of the study. For the purposes of this study, engagement is defined in terms of how often participants use the intervention and how engaging they find the mobile application. Acceptability refers to wider aspects such as how appropriate, relevant, and satisfactory the intervention and digital tool are overall [23].

A last motivation of the study involves assessing whether the methods used to measure study outcomes function as intended. In part, it is necessary to ascertain that participants complete questionnaires and that these measures comprehensively reflect the intended intervention effects. Regarding the daily repeated measures, it is important that these are sensitive enough to detect within-person changes to be able to maximize the information gained from the intensive longitudinal data [20].

Aims and objective

The objective of the study is to pilot test the study protocol and intervention design of a preventive, low-intensive mHealth intervention for work-related stress in preparation for a full-scale randomized controlled trial. Primary research questions include:

1) Data collection procedure: What is the recruitment and retention rate of invited participants? Does the randomization algorithm function properly?

2) Engagement: How often do participants use the intervention? Do they find the application easy and engaging to use?

3) Acceptability: Do participants find the intervention overall satisfactory and perceive it as beneficial? Is the digital tool technically stable?

4) Measurement quality: What is the completion rate for questionnaire items? What is the within-person variability in the daily measures?

Methods

Study design

Three groups of participants received different versions of the intervention, each version containing a distinct set of recovery strategies (see Sect. “Intervention versions” for more details on the intervention versions). Participants were sequentially allocated to groups in a 1:1:1 ratio using blocked randomization with randomly selected block sizes (3, 6, 9). The first author of the paper generated the allocation sequence, enrolled participants, and assigned participants to their intervention group. The allocation sequence was generated using “sealed envelope”, an online software for creating blocked randomisation lists (Sealed Envelope Ltd. 2022). All study participants were blinded, that is, they were not informed of which group they belonged to. The study took place from May 2022 to December 2022. Ethical approval was granted by the Swedish Ethical Review Authority. All methods employed in the study were conducted in accordance with appropriate guidelines and regulations, including the CONSORT checklist [24].
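The blocked allocation described above can be illustrated with a minimal Python sketch. It is not the Sealed Envelope implementation; the function names, arm labels, and seed handling are assumptions made for illustration. The sketch draws block sizes at random from (3, 6, 9), shuffles equal numbers of each arm within a block, and truncates the list at the number of enrolled participants, which is the point at which group sizes can become uneven.

```python
import random
from collections import Counter

# Hypothetical labels for the three intervention versions.
ARMS = ("social_support", "psychological", "physical_activity")


def blocked_sequence(n_participants, block_sizes=(3, 6, 9), arms=ARMS, seed=None):
    """Return a 1:1:1 allocation list built from randomly sized blocks."""
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        size = rng.choice(block_sizes)            # pick a block size at random
        block = list(arms) * (size // len(arms))  # equal arms within the block
        rng.shuffle(block)
        sequence.extend(block)
    # Enrollment may stop mid-block, leaving the last block incomplete.
    return sequence[:n_participants]


def group_sizes(sequence, arms=ARMS):
    """Count how many participants were allocated to each arm."""
    counts = Counter(sequence)
    return [counts.get(arm, 0) for arm in arms]


def max_imbalance(sequence, arms=ARMS):
    """Largest difference in size between any two arms."""
    sizes = group_sizes(sequence, arms)
    return max(sizes) - min(sizes)
```

Under these assumptions, a list truncated at 16 participants can differ by up to 3 between arms when a block of 9 is cut short, whereas restricting the choice to `block_sizes=(3,)` bounds the difference at 1.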

The intervention and data collection were implemented through the mobile application m-Path, a software designed for real-time monitoring as well as creating and providing interventions [25]. The study used a PPF (pre-, post-, follow-up) structure in which outcomes were measured immediately before the intervention, immediately after the intervention, and one month after the end of the intervention as depicted in Fig. 1. Data were also collected daily as part of the intervention (see the following section). Enrollment for the study was continuous, such that invited participants could choose when to start the study and intervention.

The whole study protocol (including informed consent, data collection, and intervention material) was automated. An invitation email contained all necessary information for starting and completing the study. A link in the invitation email along with a specific code allowed participants to download the m-Path application and provide informed consent through the application. Upon consenting, the pre-intervention measure was available through the app, and the intervention started a day after completing the pre-intervention measure (if participants did not complete the pre-intervention measure within a week, the intervention would start at that point).

All content was entered into the application by the first author through the m-Path back-end system. m-Path is available both on Android and iOS and was distributed to participants through Google Play and App Store respectively.

Intervention details

DIARY

DIARY (Daily Intervention for Active Recovery) is a 28-day intervention during which participants are prompted once daily to engage with intervention content. Each daily intervention interaction includes a short questionnaire with questions regarding sleep quality, current mood (e.g., tense, relaxed), and energy levels. Participants were prompted to open the application through a notification at 18:00 each evening. If they did not fill out the questionnaire, an additional reminder notification was sent out at 20:00. The questionnaire closed each night at 03:00, at which point it was no longer possible to access the questionnaire for that day. The questionnaire took at most 5 minutes to complete.
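The daily timing rules above can be sketched in Python as follows. This is not m-Path configuration or code; the helper names are hypothetical, and the sketch assumes that the questionnaire becomes available at the 18:00 notification, which the description does not state explicitly.

```python
from datetime import date, datetime, time, timedelta

PROMPT_AT = time(18, 0)    # first notification
REMINDER_AT = time(20, 0)  # reminder if not yet completed
CLOSES_AT = time(3, 0)     # on the following calendar day


def window_for(day: date):
    """Return (opens, closes) datetimes for the questionnaire of `day`."""
    opens = datetime.combine(day, PROMPT_AT)  # assumption: opens at the prompt
    closes = datetime.combine(day + timedelta(days=1), CLOSES_AT)
    return opens, closes


def open_diary_day(now: datetime):
    """Return the diary day whose questionnaire is open at `now`, else None."""
    for day in (now.date(), now.date() - timedelta(days=1)):
        opens, closes = window_for(day)
        if opens <= now < closes:
            return day
    return None


def reminder_due(now: datetime, completed: bool) -> bool:
    """True if the 20:00 reminder time has passed and the diary is still open."""
    day = open_diary_day(now)
    if day is None or completed:
        return False
    return now >= datetime.combine(day, REMINDER_AT)
```

Treating responses entered after midnight as belonging to the previous diary day keeps each of the 28 intervention days mapped to exactly one questionnaire.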

Upon completing the daily questionnaire participants received a prompt: a “bite-sized” amount of information regarding stress and recovery as well as suggestions for a specific recovery strategy. A sample prompt about micro-breaks reads: “Another way to recover from work stress is to take breaks during the day – moments of relaxation when you completely let go of work demands. However, when there is lots going on and we feel stressed it can be difficult to find the time for longer breaks. Perhaps there are no clear opportunities for resting in between tasks. In these cases, it is especially important to do short interruptions – micro-breaks – to sit down, close your eyes, and breathe deeply for a minute.”

Three different sets of prompts were developed promoting different kinds of recovery strategies: (1) social support, (2) psychological strategies, and (3) physical activity. This created three versions of the intervention, each given to a separate group of participants. Dividing participants into three groups functioned as a way to test different versions of the intervention, as well as to investigate how to randomize participants to different experimental groups in a future randomized trial. All versions of the intervention were identically structured (apart from the recommended recovery strategies) to ensure that differences in outcomes are due to recovery strategies and not related to the intervention format, engagement levels, adherence, technology, or other confounding factors.

Figure 2 depicts how the intervention appears on the phone. First, self-monitoring is completed through multiple-choice questions answered sequentially. Afterwards, a text describing a particular recovery strategy is presented. Another important feature is that users can track their monitored values in graphs.

Intervention versions

Social support

One version of the intervention prompted users to engage in social support which is thought to buffer against the negative effects of stress [26, 27]. This effect is present in occupational settings, with several studies indicating that social support plays an important role in preventing burnout among nurses [28, 29]. Furthermore, interventions targeting social support in the workplace suggest that these have positive effects on mental health [30, 31]. Sample strategies included asking for help from co-workers, listening with compassion, and sharing authentic emotions.

Physical activity

Another version of the intervention promoted an increase in physical activity in daily life. Physical activity is well-known to improve various health outcomes similar to our outcomes of interest, for instance reducing stress and burnout symptoms [32, 33]. Additionally, physical activity interventions in the workplace are widely used and have been found effective in many studies [34, 35]. Sample strategies included taking walks, going to the gym, and using the stairs instead of the elevator.

Psychological strategies

A final version of the intervention promoted a variety of psychological strategies for stress reduction. Sample strategies included sleep quality improvement tips, mindfulness, and work detachment – evidence-based strategies that have a positive effect on outcomes of interest [36, 37]. Workplace interventions targeting these kinds of strategies have been found to be effective [38, 39].

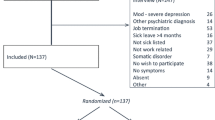

Participants

Radiology nursing students from Karolinska Institutet were invited to take part in the study. An e-mail from the research group was sent out to all students (N = 90) in three classes of the radiology nursing program. The e-mail contained an invitation to take part in the study, participant information, as well as instructions for how to download m-Path and join the study. Additionally, a member of the research group gave a short presentation about the study to all invited participants. Informed consent was obtained through the application prior to collecting any data. Table 1 shows demographic data from all participants.

Though the study population (students) is seemingly different from our target population (workers), there are several reasons why this study population is relevant for this trial. Firstly, the education is a specialist nursing education with a strong focus on preparing students for their working lives, similar to a vocational program. Significant portions of the education, including during the time of data collection, involve on-site training in real-world working conditions. Additionally, the mean age (around 30 years) is of an adult working age rather than that typical of students. Importantly, this early-career period when new at work is a risk factor for stress-related health problems and thus a key target demographic for our intervention [40, 41].

Outcomes

Figure 3 presents all outcomes and their operationalizations.

The data collection procedure was evaluated through recruitment rate, retention rate, and the performance of the randomization algorithm. Recruitment rate was calculated as the percentage of participants who joined the study (filled in informed consent) relative to all who were invited to the study. Retention rate was calculated as the percentage of participants who completed each measure (pre-, post-, follow-up) relative to all participants. The randomization algorithm was evaluated by calculating the distribution of participants in the three intervention groups.

Engagement was measured using adherence and the App Engagement Scale. Adherence was operationalized as a count variable coded 0–28 representing the number of days that a given participant used the intervention. The App Engagement Scale is a 7-item questionnaire designed to measure engagement with mobile applications [42], translated into Swedish by the research team. This translation has been used previously by the research team and has preliminary evidence of good reliability [43]. Items (e.g. “I enjoyed using the app”) are scored on a 1–5 ordered categories scale (1 = Not at all, 5 = Fully agree). This measure was only included in the post-intervention measure.

Acceptability was measured using a set of single-item measures evaluating whether the intervention was relevant to the user, if they would like to use it again, quality of the prompts, and technical stability. These items were only included in the post-intervention measure. All questions and response options regarding acceptability metrics are available in Appendix A.

Measurement quality was evaluated based on completion rate and within-person variance of daily measures. Within-person variance was evaluated using the intraclass correlation coefficient (ICC) from an intercept-only multilevel model with daily stress as outcome, where 1 − ICC gives the share of variance that occurs within persons. Completion rate was calculated as the percentage of questionnaire items that were answered by participants. The following are the outcomes and corresponding questionnaires planned for the randomized controlled trial:

- Exhaustion and disengagement from work were measured using the Oldenburg Burnout Inventory, an instrument designed to measure burnout in an occupational context including the dimensions exhaustion and disengagement [44]. This study used a Swedish translation with a subset of 7 items [45]. Items (e.g. “after work I often feel tired and exhausted”) are scored on a 4-point ordered categories scale (1 = Not at all, 4 = Exactly).

- Emotional exhaustion was measured using the Shirom-Melamed Burnout Questionnaire (SMBQ), an instrument designed to assess burnout symptoms [46]. The study used a Swedish translation and subset of 6 items focused on the emotional exhaustion dimension of burnout [47, 48]. Items (e.g. “My batteries are empty”) are rated on a 1–7 ordered categories scale (1 = Almost never, 7 = Almost always).

- Anxiety was measured using the GAD-7 questionnaire, a 7-item instrument designed to assess generalized anxiety disorder [49]. This study used a Swedish translation [50]. Items (e.g. “Feeling nervous, anxious, or on edge”) are scored on a 1–4 ordered categories scale (1 = Not at all, 4 = Nearly every day).

- Recovery was measured using the Recovery Experience Questionnaire, a 16-item questionnaire designed to measure four dimensions of recovery (detachment, relaxation, autonomy, and mastery) using four items for each dimension [51]. This study used a Swedish translation [52]. Items (e.g. “In my free time I don’t think about work”) are scored on a 1–7 ordered categories scale (1 = Almost never, 7 = Almost always).

- Mindfulness was measured using the Mindful Attention Awareness Scale, a 15-item measure designed to assess attention and awareness of “what is occurring in the present moment” [53]. This study used a Swedish translation with six items centered around emotional self-awareness [54]. Items (e.g. “I could be experiencing some emotion and not be conscious of it until some time later”) are rated on a 1–7 ordered categories scale (1 = Almost never, 7 = Almost always).

- Stress was measured daily as the mean value of three items inspired by the Stress-Energy Questionnaire [55]. This study used a Swedish translation which has been validated in a previous study by the research team (Lukas J, Kowalski L, Bujacz A: Psychometric properties of the daily measurement of stress in a daily diary study of Swedish Healthcare Workers, in preparation). Items (“During the last day, to what extent have you felt tense / pressed / frustrated?”) are rated on a 6-point ordered categories scale (1 = Not at all, 6 = Very much). This variable was measured daily during the intervention and was not included in the pre-, post-, and follow-up measures.

Results

Data collection procedure

Recruitment & retention

Sixteen participants were recruited out of 90 invited participants, representing a recruitment rate of 17.8%. Fifteen participants (93.8%) completed the pre-intervention measure, 11 participants (68.8%) completed the post-intervention measure, and 7 participants (43.8%) completed the follow-up measure.
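The rates above follow directly from the reported counts. As a minimal sketch, with variable and function names chosen here for illustration only, the arithmetic can be reproduced in Python:

```python
# Counts as reported in this section.
INVITED = 90
CONSENTED = 16
COMPLETED = {"pre": 15, "post": 11, "follow_up": 7}


def percentage(part, whole):
    """Express `part` as a percentage of `whole`."""
    return 100.0 * part / whole


# Recruitment: consenting participants relative to everyone invited.
recruitment_rate = percentage(CONSENTED, INVITED)

# Retention: completers of each measure relative to all recruited participants.
retention_rates = {
    measure: percentage(n, CONSENTED) for measure, n in COMPLETED.items()
}
```

Retention is expressed relative to the 16 recruited participants, following the definition given in the Outcomes section.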

Randomization algorithm

The randomization process generated slightly uneven intervention group sizes (N = 7, N = 5, N = 4). The imperfect distribution was likely due to selecting block sizes which were too large for the number of participants who joined the study. Block sizes need to be adjusted to fit the number of participants in order to ensure an equal group distribution.

Engagement

Protocol adherence

Participants completed on average 14.3 (SD = 8.01) out of 28 days of the intervention, representing an adherence rate of 51%. There was a large variance in adherence, ranging from 4 to 28 days.

App Engagement Scale

Complete results from the App Engagement Scale (M = 4.36, SD = 0.66) are presented in Table 2.

Acceptability

Perceived benefit

Table 2 shows results from all single-item measures of perceived benefit. The mean ratings varied between 2.82 and 3.36 on a scale from 1–4 (one item scaled 1–6 had a mean rating of 4.55).

Technical stability

Five out of 11 participants experienced no technical issues at all. Three participants experienced some technical issues, for example being unable to open questionnaires or enter responses. However, written comments from these participants indicate that these issues were minor and did not cause substantial problems. Unfortunately, data from three participants are missing due to issues with data retrieval.

Measurement quality

Figure 4 shows all outcome measures for all groups across all time-points. Though the purpose of this study was not to evaluate effectiveness of the intervention, the outcomes in this sample moved in a positive direction. Mindfulness and recovery experiences increased over time, while symptoms of emotional exhaustion, anxiety, and exhaustion and disengagement from work decreased across the time-points.

Completion rate

Participants responded to all items in the measures they took part in, providing a 100% completion rate of items in the questionnaires. See Sect. “Measurement quality” for a discussion on this topic.

Within-person variance

An intercept-only multilevel model with daily stress as outcome had an intraclass correlation coefficient (ICC) of 0.42. The ICC corresponds to the proportion of total variability that is attributable to stable between-person differences:

$$\mathrm{ICC} = \frac{\sigma^2_{\text{between}}}{\sigma^2_{\text{between}} + \sigma^2_{\text{within}}}$$

Lower values indicate that a larger proportion of variability in the outcome measure is due to within-person differences. An ICC of 0.42 shows that 58% (the complement, 1 − 0.42) of variability is explained by within-person changes over time. This is a substantial part of variability, suggesting that the measure is sensitive enough to capture within-person differences over time and that this dimension is relevant for studying intervention effects.
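To make the variance decomposition concrete, the following Python sketch estimates an ICC from repeated daily scores grouped by person. Note the substitution: the study fitted an intercept-only multilevel model, whereas this sketch uses a simpler moment-based estimator from a one-way ANOVA decomposition, which generally gives similar but not identical values. The function name and the example data are hypothetical.

```python
from statistics import mean


def icc_oneway(scores_by_person):
    """Moment-based ICC from a one-way random-effects ANOVA decomposition.

    `scores_by_person` maps a person identifier to that person's daily scores.
    Requires at least two persons and more observations than persons.
    """
    groups = [list(v) for v in scores_by_person.values() if len(v) > 0]
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand = mean(x for g in groups for x in g)

    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)

    # Effective number of observations per person (handles unequal counts).
    n0 = (n_total - sum(len(g) ** 2 for g in groups) / n_total) / (k - 1)

    var_between = max((ms_between - ms_within) / n0, 0.0)  # clamp at zero
    var_within = ms_within
    total = var_between + var_within
    if total == 0:
        raise ValueError("no variability in the data")
    return var_between / total


# Hypothetical daily stress scores for three persons.
example = {"p1": [1, 2, 3], "p2": [4, 5, 6], "p3": [7, 8, 9]}
within_person_share = 1 - icc_oneway(example)
```

The quantity `1 - icc_oneway(...)` corresponds to the within-person share of variance discussed above.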

Discussion

Key results

The overall results point to this being a feasible intervention and study design to conduct a full-scale randomized controlled trial. The study indicates a promising recruitment rate though somewhat low retention rates, providing an important guideline for how many participants should be invited to reach a target sample size. Engagement is satisfactory with decent adherence and high app engagement ratings. Acceptability metrics are overall very promising, though the quality of prompts needs improvement. Measurement quality is good overall with a high completion rate and substantial within-person variability. A few adjustments are recommended to further refine the intervention and study protocol before conducting an RCT.

Data collection procedure

The data collection procedure indicated a low retention rate but provided an important benchmark for how many participants need to be invited. The randomization algorithm produced an uneven distribution and needs to be improved.

Recruitment and retention

Of the invited participants, 17.8% chose to take part in the study, indicating that somewhat fewer than 1 in 5 invited people will join the study. A comparable recruitment rate was observed in another study population: Kowalski et al. [43] conducted a similar study in which 24% of invited participants were recruited, so recruitment rates around this level seem to be consistent in these kinds of studies. While these may seem like low numbers, it is important to keep in mind that this is a real-world context and not the typical recruitment for clinical trials. Given that we have a wide invitation of participants on a volunteer basis, we should expect lower recruitment rates than when targeting eligible candidates who will receive compensation.

Importantly, however, retention rates drop off quite markedly, especially for the follow-up measure which was completed by 44% of participants. Given the relatively low retention rate, an improvement in the study protocol would involve mitigating this drop-out effect. This could be done for instance through reminder e-mails and general encouragement to keep participating in the study. Notably, the follow-up retention rate observed in this study may be especially low because the follow-up measure coincided with vacation when participants may have been less inclined to answer.

Finally, rather than viewing recruitment and retention rates only as problems to be solved (though efforts should certainly be made to maximize recruitment and retention), these numbers provide an important benchmark for how many participants need to be invited to reach a target sample size. Based on the results of the current study, we need to invite 10–12 times as many people as are needed in a final statistical analysis. Given that the planned RCT has wide inclusion criteria as well as a flexible, continuous, and automated recruitment process, it is feasible to invite sufficiently large numbers of participants.
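How such a benchmark translates into a number of invitations can be sketched as below. The exact derivation of the 10–12 figure is not stated, so this is an assumption about the calculation and not a reproduction of it; the function name is hypothetical. With the counts from this study (90 invited, 11 post-intervention completers, 7 follow-up completers), the implied multiplier is roughly 8 when the post-intervention measure is the endpoint and roughly 13 when the follow-up measure is.

```python
def invitations_needed(target_completers, invited, completers):
    """Invitations required to expect `target_completers` at the observed yield.

    `invited` and `completers` are the observed counts from a pilot study.
    """
    if invited <= 0 or completers <= 0:
        raise ValueError("observed counts must be positive")
    # Ceiling division on integers avoids floating-point rounding issues.
    return -((-target_completers * invited) // completers)


# Observed pilot counts: 90 invited, 7 completed the follow-up measure.
needed_for_100_at_follow_up = invitations_needed(100, invited=90, completers=7)
needed_for_100_at_post = invitations_needed(100, invited=90, completers=11)
```

The result is sensitive to which completion point is used, so a planned trial would need to fix the primary endpoint before sizing the invitation list.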

Randomization algorithm

One problem with the data collection procedure was an uneven distribution of participants in the different intervention groups (N = 7, N = 5, N = 4). The randomized block sizes (3, 6, 9) were too large for the number of participants resulting in an imperfect distribution. It is important for future data analysis that groups are evenly distributed, for which reason we will adjust block sizes accordingly.

Engagement

The intervention had satisfactory adherence and received very high app engagement ratings.

Adherence varied greatly among participants with some using it daily and others using it 4–5 times, averaging a 51% adherence rate (14.3 out of 28 days). Importantly, engagement with digital interventions is a widespread challenge with many studies reporting low adherence rates [21, 56]. Adherence also varies widely depending on the type of intervention, making it difficult to interpret a given rate without sufficient reference to the specific context.

Given the context of this study and project, a 51% adherence rate is deemed satisfactory. Firstly, it is an improvement over a prior version of the intervention which had an average adherence of 39% [43]. In addition, DIARY is an unguided intervention that users engage with wholly on their own terms; instructions even state that one may use the intervention exactly as often as one likes. Compared with guided interventions (for example, those that include support from a therapist and an explicit treatment plan), unguided interventions typically show lower adherence [57, 58].

Lastly, adherence rates could likely be further improved based on findings from a previous study investigating user engagement with DIARY [43]. For instance, involving employers to encourage intervention use and increasing use intention among participants are additional measures that could be included in the study protocol to increase engagement. Other studies suggest that tailoring and social influence are key factors for promoting engagement, something that may be included in future versions of DIARY [22, 59].

Results from the App Engagement Scale indicate that the intervention and app design are sufficiently user-friendly and engaging to participants. The App Engagement Scale had a mean score of 4.36 out of 5, which is a very positive rating [42]. This questionnaire regards the user experience of the mobile application, asking if users find it easy, enjoyable, and motivating to use. Notably, this score is a substantial improvement over the rating 3.44 observed for a prior version of the intervention using a different digital tool [43].

Acceptability

Participants found the intervention overall acceptable and technically stable, though the prompts need improvement.

Perceived effectiveness

Single-item acceptability metrics indicate that participants were overall satisfied with the intervention and found it suitable. On average, participants found the intervention content “mostly relevant” and would “most likely” want to use such an app again in the future (see Table 2). These are both promising metrics, indicating that the content of the intervention is relevant to this population and that it was sufficiently well-designed and helpful that they would want to access it again.

Some ratings regarding the intervention's perceived effectiveness were slightly lower: participants did not feel the prompts were very useful to them (2.82 out of 4) and did not wholly experience that the intervention helped them deal with challenges in life more effectively (4.55 out of 6). These results indicate that the prompts may need to be refined to be more helpful to participants. Comments from this study and qualitative data from a previous study on DIARY [43] indicate that the prompts may be too simple and/or repetitive to be optimally beneficial.

One way of improving the prompts would be to base them on a well-established framework outlining a variety of effective strategies for optimizing recovery processes. A problem with the current prompts which became evident during development was that the underlying recovery “type” for each intervention version (social support, psychological strategies, physical activity) was too narrowly defined, resulting in prompts being quite repetitive and one-dimensional. In effect, the same recovery strategy was suggested repeatedly with minor modifications.

Rather than trying to isolate the “best” type of recovery strategy and center a whole intervention around it, it may be more fruitful to recommend a wide range of different recovery strategies to users. Most models of well-being include multiple components and needs, suggesting that multiple types of strategies may contribute to improving mental health [60, 61]. Including a wide repertoire of recovery strategies may thus be conducive to optimal recovery.

Providing a variety of different types of recovery strategies may be beneficial for other reasons as well. Firstly, it increases the likelihood of users finding a strategy that is possible to implement on a given day and that matches diverse lifestyles. Secondly, recovery may be most effective when it corresponds to current needs because different stressors require different types of recovery to optimally mitigate their negative effects [62]. Relaxation exercises might be helpful to unwind from a cognitively demanding day, while talking to a close friend is more appropriate if one experiences high emotional demands at work. A larger toolbox of recovery strategies makes it more likely that users will find strategies most beneficial to them at any given moment.

One way to include a varied and well-balanced set of recovery strategies grounded in theory would be to craft prompts based on the DRAMMA framework [63]. This framework integrates various models of recovery and well-being, outlining six different types of experiences during leisure time that support mental health: detachment, relaxation, autonomy, mastery, meaning, and affiliation. Interventions using this model have been found to be effective in improving relevant outcomes in a working population [64]. By developing prompts according to a well-rounded framework that includes a large variety of recovery strategies, it is more likely that prompts will be helpful to users and address a wider range of recovery needs.

Technical stability

Results also indicate that the intervention is overall technically stable with several participants not having any technical issues whatsoever. The few reported technical difficulties were very minor and did not cause users substantial issues. This is a clear improvement over a previous iteration of DIARY which had considerable technical problems [43]. Another study using m-Path also found the software to be technically stable with acceptable usability ratings [65]. These results are very promising, but even so, efforts will be made to mitigate any technical issues before future studies.

Measurement quality

Very high completion rates, with a potential caveat. Daily measures show substantial within-person variability. Some outcomes may need to be changed to better answer the research questions.

Completion rate

Participants provided complete data on the measures they took part in, answering all items in every questionnaire. Although a 100% completion rate is considered excellent, it may also point to potential problems with the data collection procedure. Participants did not have the option of skipping any questions, and responses were not saved on the server until participants completed the entire questionnaire. Because of this, only fully completed measures were registered, resulting in a 100% completion rate. It is possible that some participants stopped midway through a measure and so did not have their partial responses registered. The low retention rate may thus partly reflect participants who answered part of a measure but were not registered as having completed it.

Because of the strict criteria for registering data – inability to skip questions and only registering fully completed measures – we may miss out on valuable data. One way to mitigate this issue is by loosening the criteria for collecting data, for instance by giving participants the option to skip questions. Additionally, one can adapt the data collection system so that partial data is registered in the database. This will likely lead to collecting more data, even if it is sometimes incomplete, and may have the added benefit of improving retention rates.
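The effect of the registration rule on the reported completion rate can be illustrated with a minimal sketch. It assumes a hypothetical record of questionnaire attempts (the `Attempt` structure, the function name, and the example numbers are illustrative only and do not come from the study data or from m-Path). Under the strict rule, abandoned attempts never reach the database, so the completion rate among registered measures is 100% by construction.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    """One attempt at a questionnaire (hypothetical structure)."""
    items_answered: int
    items_total: int


def completion_rate(attempts, register_partial):
    """Share of registered attempts in which every item was answered.

    register_partial=False mimics a system that stores an attempt only once
    all items are answered; True also stores attempts abandoned midway.
    Returns None when no attempt is registered.
    """
    registered = [
        a for a in attempts
        if a.items_answered > 0
        and (register_partial or a.items_answered == a.items_total)
    ]
    if not registered:
        return None
    complete = sum(a.items_answered == a.items_total for a in registered)
    return complete / len(registered)


# Illustrative data: three full attempts and one abandoned after 4 of 10 items.
attempts = [Attempt(10, 10), Attempt(10, 10), Attempt(4, 10), Attempt(10, 10)]
strict_rate = completion_rate(attempts, register_partial=False)
lenient_rate = completion_rate(attempts, register_partial=True)
```

In this toy example the strict rule hides the abandoned attempt entirely, whereas the lenient rule registers it and lowers the completion rate accordingly.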

Another important question is whether the outcome measures are appropriate for fully understanding the intervention effects. Most outcome measures proved relevant; however, given the suggested changes to the intervention, new outcome measures may be more appropriate. The Recovery Experience Questionnaire (REQ) does not capture all dimensions of the DRAMMA framework and may thus not provide information about all types of recovery strategies (for instance, the dimensions Affiliation and Meaning are missing from this instrument). Instead, the DRAMMA-Q may be a better-suited instrument to ensure we get a comprehensive picture of the various recovery strategies [66].

Within-person variability

The daily stress measure proved to be useful for measuring individual change over time, with within-person variability accounting for 58% of the observed variance. This indicates that measuring stress on a daily level is important to capture the experience of participants and may yield important insights into how stress fluctuates on a daily level. These insights can in turn be used to further improve interventions and other efforts to mitigate the negative consequences of stress.
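As an illustration of what a within-person share of variance means, the sketch below splits the total sum of squares of daily ratings into a within-person and a between-person part. This is a simple descriptive decomposition under stated assumptions, not necessarily the method used in this study (such figures are often estimated with a multilevel model instead), and the participant labels and ratings are invented for the example.

```python
from statistics import mean


def within_person_share(ratings_by_person):
    """Proportion of total variability that lies within persons.

    ratings_by_person maps a participant label to that person's daily ratings.
    Uses a plain sum-of-squares split around person means and the grand mean.
    """
    all_ratings = [r for ratings in ratings_by_person.values() for r in ratings]
    grand_mean = mean(all_ratings)
    ss_within = 0.0
    ss_between = 0.0
    for ratings in ratings_by_person.values():
        person_mean = mean(ratings)
        # Day-to-day deviations from the person's own average.
        ss_within += sum((r - person_mean) ** 2 for r in ratings)
        # Deviation of the person's average from the overall average.
        ss_between += len(ratings) * (person_mean - grand_mean) ** 2
    total = ss_within + ss_between
    if total == 0:
        raise ValueError("no variability in the ratings")
    return ss_within / total


# Invented daily stress ratings for three hypothetical participants.
daily_stress = {
    "p1": [2, 4, 3, 5],
    "p2": [3, 3, 4, 2],
    "p3": [5, 2, 4, 5],
}
share = within_person_share(daily_stress)
```

A share above one half, as reported for the daily stress measure, means that ratings differ more from day to day within the same person than average levels differ between persons.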

Limitations

A primary limitation of this study is the relatively small and homogeneous participant pool. Though a small sample size is common in pilot studies, such a restricted sample may not be large enough to uncover the full range of potential limitations of the study design. A larger sample is more likely to fully “test” all aspects of the study protocol, ensuring that there are no outstanding issues that will become apparent during a full-scale trial.

Additionally, because all participants were university students recruited from the same location, the sample differs from the target sample, which negatively affects the generalizability of the findings. The full-scale trial intends to include a heterogeneous sample with participants from a working population, including different occupations, locations, age groups etc. Because the participant demographics of this study do not reflect the target population of the future trial, it is possible that the conclusions drawn from these results lack sufficient external validity. Thus, this study may not accurately predict potential issues that could arise with a different and more heterogeneous participant pool.

However, because the educational program is similar to a vocational program with students spending considerable time in real-life working conditions, the sample may meaningfully reflect our target population. In addition, the mean age of 30 represents a key demographic factor given that early-career professionals may be in special need of this kind of intervention [40, 41].

A further limitation is that there is insufficient data to thoroughly analyze prompt quality and understand how to further improve prompts. A nuanced approach to understanding prompt quality would involve comparing ratings of individual prompts. However, due to the small sample size in each intervention group and imperfect adherence, there is limited data for each prompt rating and, thus, a more in-depth analysis of prompt quality is not statistically possible.

Technical limitations of m-Path include user data being stored on the device rather than in a user account, negatively affecting security and flexibility. Since users do not have an account, they cannot access the intervention or their data from any device other than their own phone. Users also cannot log out from their m-Path profile, so their data cannot be protected from someone with access to their phone.

Conclusion

The overall results indicate that the study protocol and intervention design, with some modifications, are feasible for conducting a large-scale randomized controlled trial. By changing the way that data is registered in the database, we may collect more data and likely improve retention rates. Block sizes of the randomization algorithm will be adapted to better match the sample size in order to ensure equally sized experimental groups. New prompts will be crafted based on the DRAMMA model to improve the acceptability of the intervention. Some outcome measures will be changed to provide a more comprehensive picture of intervention effects.
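The planned adjustment of block sizes can be illustrated with a minimal sketch of permuted-block randomization. The study's actual algorithm is not described here, so the function, the arm labels (borrowed loosely from the three intervention versions), and the seed are assumptions made for illustration only. The point of the sketch is that a block size equal to the number of arms keeps group sizes within one participant of each other even when the sample is small, whereas a block that is large relative to the sample can be cut off partway and leave the groups uneven.

```python
import random
from collections import Counter


def block_randomize(n_participants, arms, block_size, seed=None):
    """Allocate participants to arms using shuffled, balanced blocks.

    Each block contains every arm equally often; the final block is
    truncated if n_participants is not a multiple of block_size.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    allocation = []
    while len(allocation) < n_participants:
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)
        allocation.extend(block)
    return allocation[:n_participants]


# Hypothetical arm labels; block size equals the number of arms.
arms = ["social support", "psychological strategies", "physical activity"]
allocation = block_randomize(16, arms, block_size=3, seed=1)
counts = Counter(allocation)
```

With 16 participants and blocks of three, at most one block is incomplete, so no group can be more than one participant larger than another.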

Availability of data and materials

Data is not made publicly available due to the small number of participants and informed consent stating that only aggregated data will be shared. Aggregated data is available upon request to the corresponding author.

References

Aronsson G, Theorell T, Grape T, Hammarström A, Hogstedt C, Marteinsdottir I, Skoog I, Träskman-Bendz L, Hall C. A systematic review including meta-analysis of work environment and burnout symptoms. BMC Public Health. 2017;17(1):264. https://doi.org/10.1186/s12889-017-4153-7.

Theorell T, Hammarström A, Aronsson G, Träskman Bendz L, Grape T, Hogstedt C, Marteinsdottir I, Skoog I, Hall C. A systematic review including meta-analysis of work environment and depressive symptoms. BMC Public Health. 2015;15(1):738. https://doi.org/10.1186/s12889-015-1954-4.

Hassard J, Teoh KRH, Visockaite G, Dewe P, Cox T. The cost of work-related stress to society: a systematic review. J Occup Health Psychol. 2018;23(1):1–17. https://doi.org/10.1037/ocp0000069.

Howarth A, Quesada J, Silva J, Judycki S, Mills PR. The impact of digital health interventions on health-related outcomes in the workplace: a systematic review. Digit Health. 2018;4:205520761877086. https://doi.org/10.1177/2055207618770861.

Phillips EA, Gordeev VS, Schreyögg J. Effectiveness of occupational e-mental health interventions: a systematic review and meta-analysis of randomized controlled trials. Scand J Work Environ Health. 2019;45(6):560–76. https://doi.org/10.5271/sjweh.3839.

Egger SM, Frey S, Sauerzopf L, Meidert U. A literature review to identify effective web- and app-based mHealth interventions for stress management at work. Workplace Health Saf. 2023:216507992311708. https://doi.org/10.1177/21650799231170872.

Torous J, Bucci S, Bell IH, Kessing LV, Faurholt-Jepsen M, Whelan P, Carvalho AF, Keshavan M, Linardon J, Firth J. The growing field of digital psychiatry: current evidence and the future of apps, social media, chatbots, and virtual reality. World Psychiatry. 2021;20(3):318–35. https://doi.org/10.1002/wps.20883.

von Thiele Schwarz U, Nielsen K, Edwards K, Hasson H, Ipsen C, Savage C, Simonsen Abildgaard J, Richter A, Lornudd C, Mazzocato P, Reed JE. How to design, implement and evaluate organizational interventions for maximum impact: the Sigtuna Principles. Eur J Work Organ Psy. 2021;30(3):415–27. https://doi.org/10.1080/1359432X.2020.1803960.

Sonnentag S. The recovery paradox: portraying the complex interplay between job stressors, lack of recovery, and poor well-being. Res Organ Behav. 2018;38:169–85. https://doi.org/10.1016/j.riob.2018.11.002.

Sonnentag S, Cheng BH, Parker SL. Recovery from work: advancing the field toward the future. Annu Rev Organ Psych Organ Behav. 2022;9(1):33–60. https://doi.org/10.1146/annurev-orgpsych-012420-091355.

Webb TL, Joseph J, Yardley L, Michie S. Using the internet to promote health behavior change: a systematic review and meta-analysis of the impact of theoretical basis, use of behavior change techniques, and mode of delivery on efficacy. J Med Internet Res. 2010;12(1):e4. https://doi.org/10.2196/jmir.1376.

Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, Eccles MP, Cane J, Wood CE. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46(1):81–95. https://doi.org/10.1007/s12160-013-9486-6.

Gardner B, Rebar AL, Lally P. How does habit form? Guidelines for tracking real-world habit formation. Cogent Psychol. 2022;9(1):2041277. https://doi.org/10.1080/23311908.2022.2041277.

Michie S, Johnston M, Carey R. Behavior change techniques. In: Gellman MD, editor. Encyclopedia of behavioral medicine. Springer International Publishing; 2020. p. 206–13. https://doi.org/10.1007/978-3-030-39903-0_1661.

Domhardt M, Baumeister H. Psychotherapy of adjustment disorders: current state and future directions. World J Biol Psychiatry. 2018;19(sup1):S21–35. https://doi.org/10.1080/15622975.2018.1467041.

Haller H, Cramer H, Lauche R, Gass F, Dobos GJ. The prevalence and burden of subthreshold generalized anxiety disorder: a systematic review. BMC Psychiatry. 2014;14(1):128. https://doi.org/10.1186/1471-244X-14-128.

Bower P, Gilbody S. Stepped care in psychological therapies: access, effectiveness and efficiency: narrative literature review. Br J Psychiatry. 2005;186(1):11–7. https://doi.org/10.1192/bjp.186.1.11.

Eldridge SM, Lancaster GA, Campbell MJ, Thabane L, Hopewell S, Coleman CL, Bond CM. Defining feasibility and pilot studies in preparation for randomised controlled trials: development of a conceptual framework. PLoS ONE. 2016;11(3):e0150205. https://doi.org/10.1371/journal.pone.0150205.

Teresi JA, Yu X, Stewart AL, Hays RD. Guidelines for designing and evaluating feasibility pilot studies. Med Care. 2022;60(1):95–103. https://doi.org/10.1097/MLR.0000000000001664.

Bolger N, Laurenceau JP. Intensive longitudinal methods: an introduction to diary and experience sampling research. New York: Guilford Press; 2013.

Meyerowitz-Katz G, Ravi S, Arnolda L, Feng X, Maberly G, Astell-Burt T. Rates of attrition and dropout in app-based interventions for chronic disease: systematic review and meta-analysis. J Med Internet Res. 2020;22(9):e20283. https://doi.org/10.2196/20283.

Yardley L, Spring BJ, Riper H, Morrison LG, Crane DH, Curtis K, Merchant GC, Naughton F, Blandford A. Understanding and promoting effective engagement with digital behavior change interventions. Am J Prev Med. 2016;51(5):833–42. https://doi.org/10.1016/j.amepre.2016.06.015.

Sekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res. 2017;17(1):88. https://doi.org/10.1186/s12913-017-2031-8.

Eldridge SM, Chan CL, Campbell MJ, Bond CM, Hopewell S, Thabane L, Lancaster GA. CONSORT 2010 statement: extension to randomised pilot and feasibility trials. BMJ. 2016:i5239. https://doi.org/10.1136/bmj.i5239.

Mestdagh M, Verdonck S, Piot M, Niemeijer K, Kilani G, Tuerlinckx F, Kuppens P, Dejonckheere E. m-Path: an easy-to-use and highly tailorable platform for ecological momentary assessment and intervention in behavioral research and clinical practice. Front Digit Health. 2023;5:1182175. https://doi.org/10.3389/fdgth.2023.1182175.

Ozbay F, Johnson DC, Dimoulas E, Morgan CA, Charney D, Southwick S. Social support and resilience to stress: from neurobiology to clinical practice. Psychiatry (Edgmont). 2007;4(5):35–40.

Taylor SE. Social support: a review. In: The Oxford handbook of health psychology. Oxford: Oxford University Press; 2011. p. 189–214.

Velando‐Soriano A, Ortega‐Campos E, Gómez‐Urquiza JL, Ramírez‐Baena L, De La Fuente EI, Cañadas‐De La Fuente GA. Impact of social support in preventing burnout syndrome in nurses: a systematic review. Jpn J Nurs Sci. 2020;17(1). https://doi.org/10.1111/jjns.12269.

Woodhead EL, Northrop L, Edelstein B. Stress, social support, and burnout among long-term care nursing staff. J Appl Gerontol. 2016;35(1):84–105. https://doi.org/10.1177/0733464814542465.

Hogan BE, Linden W, Najarian B. Social support interventions. Clin Psychol Rev. 2002;22(3):381–440. https://doi.org/10.1016/S0272-7358(01)00102-7.

Peterson U, Bergström G, Samuelsson M, Åsberg M, Nygren Å. Reflecting peer-support groups in the prevention of stress and burnout: randomized controlled trial. J Adv Nurs. 2008;63(5):506–16. https://doi.org/10.1111/j.1365-2648.2008.04743.x.

Czosnek L, Lederman O, Cormie P, Zopf E, Stubbs B, Rosenbaum S. Health benefits, safety and cost of physical activity interventions for mental health conditions: a meta-review to inform translation efforts. Ment Health Phys Act. 2019;16:140–51. https://doi.org/10.1016/j.mhpa.2018.11.001.

Naczenski LM, de Vries JD, van Hooff MLM, Kompier MAJ. Systematic review of the association between physical activity and burnout. J Occup Health. 2017;59(6):477–94. https://doi.org/10.1539/joh.17-0050-RA.

Conn VS, Hafdahl AR, Cooper PS, Brown LM, Lusk SL. Meta-analysis of workplace physical activity interventions. Am J Prev Med. 2009;37(4):330–9. https://doi.org/10.1016/j.amepre.2009.06.008.

Lock M, Post D, Dollman J, Parfitt G. Efficacy of theory-informed workplace physical activity interventions: a systematic literature review with meta-analyses. Health Psychol Rev. 2021;15(4):483–507. https://doi.org/10.1080/17437199.2020.1718528.

Karabinski T, Haun VC, Nübold A, Wendsche J, Wegge J. Interventions for improving psychological detachment from work: a meta-analysis. J Occup Health Psychol. 2021;26(3):224–42. https://doi.org/10.1037/ocp0000280.

Luken M, Sammons A. Systematic review of mindfulness practice for reducing job burnout. Am J Occup Ther. 2016;70(2):7002250020pl–10. https://doi.org/10.5014/ajot.2016.016956.

Vega-Escaño J, Porcel-Gálvez AM, de Diego-Cordero R, Romero-Sánchez JM, Romero-Saldaña M, Barrientos-Trigo S. Insomnia interventions in the workplace: a systematic review and meta-analysis. Int J Environ Res Public Health. 2020;17(17):6401. https://doi.org/10.3390/ijerph17176401.

Vonderlin R, Biermann M, Bohus M, Lyssenko L. Mindfulness-based programs in the workplace: a meta-analysis of randomized controlled trials. Mindfulness. 2020;11(7):1579–98. https://doi.org/10.1007/s12671-020-01328-3.

Hariharan TS, Griffin B. A review of the factors related to burnout at the early-career stage of medicine. Med Teach. 2019;41(12):1380–91. https://doi.org/10.1080/0142159X.2019.1641189.

Rudman A, Gustavsson JP. Early-career burnout among new graduate nurses: a prospective observational study of intra-individual change trajectories. Int J Nurs Stud. 2011;48(3):292–306. https://doi.org/10.1016/j.ijnurstu.2010.07.012.

Bakker D, Rickard N. Engagement in mobile phone app for self-monitoring of emotional wellbeing predicts changes in mental health: MoodPrism. J Affect Disord. 2018;227:432–42. https://doi.org/10.1016/j.jad.2017.11.016.

Kowalski L, Finnes A, Koch S, Bujacz A. User engagement with organizational mhealth stress management intervention – a mixed methods study. Preprint available at SSRN: 2023. https://doi.org/10.2139/ssrn.4517832.

Halbesleben JRB, Demerouti E. The construct validity of an alternative measure of burnout: investigating the English translation of the Oldenburg Burnout Inventory. Work Stress. 2005;19(3):208–20. https://doi.org/10.1080/02678370500340728.

Peterson U, Bergström G, Demerouti E, Gustavsson P, Åsberg M, Nygren Å. Burnout levels and self-rated health prospectively predict future long-term sickness absence: a study among female health professionals. J Occup Environ Med. 2011;53(7):788–93. https://doi.org/10.1097/JOM.0b013e318222b1dc.

Shirom A, Melamed S. A comparison of the construct validity of two burnout measures in two groups of professionals. Int J Stress Manag. 2006;13(2):176–200. https://doi.org/10.1037/1072-5245.13.2.176.

Lundgren-Nilsson Å, Jonsdottir IH, Pallant J, Ahlborg G. Internal construct validity of the Shirom-Melamed Burnout Questionnaire (SMBQ). BMC Public Health. 2012;12(1):1. https://doi.org/10.1186/1471-2458-12-1.

Sundström A, Söderholm A, Nordin M, Nordin S. Construct validation and normative data for different versions of the Shirom‐Melamed burnout questionnaire/measure in a Swedish population sample. Stress Health. 2022:smi.3200. https://doi.org/10.1002/smi.3200.

Spitzer RL, Kroenke K, Williams JBW, Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. 2006;166(10):1092. https://doi.org/10.1001/archinte.166.10.1092.

Johansson R, Carlbring P, Heedman Å, Paxling B, Andersson G. Depression, anxiety and their comorbidity in the Swedish general population: point prevalence and the effect on health-related quality of life. PeerJ. 2013;1:e98. https://doi.org/10.7717/peerj.98.

Sonnentag S, Fritz C. The Recovery Experience Questionnaire: development and validation of a measure for assessing recuperation and unwinding from work. J Occup Health Psychol. 2007;12(3):204–21. https://doi.org/10.1037/1076-8998.12.3.204.

Almén N, Lundberg H, Sundin Ö, Jansson B. The reliability and factorial validity of the Swedish version of the Recovery Experience Questionnaire. Nordic Psychol. 2018;70(4):324–33. https://doi.org/10.1080/19012276.2018.1443280.

Brown KW, Ryan RM. Mindful attention awareness scale. Am Psychol Assoc. 2011. https://doi.org/10.1037/t04259-000.

Hansen E, Lundh L, Homman A, Wångby-Lundh M. Measuring mindfulness: pilot studies with the Swedish versions of the mindful attention awareness scale and the Kentucky inventory of mindfulness skills. Cogn Behav Ther. 2009;38(1):2–15. https://doi.org/10.1080/16506070802383230.

Hadzibajramovic E, Ahlborg G, Grimby-Ekman A, Lundgren-Nilsson Å. Internal construct validity of the stress-energy questionnaire in a working population, a cohort study. BMC Public Health. 2015;15(1):180. https://doi.org/10.1186/s12889-015-1524-9.

Perski O, Blandford A, West R, Michie S. Conceptualising engagement with digital behaviour change interventions: a systematic review using principles from critical interpretive synthesis. Transl Behav Med. 2017;7(2):254–67. https://doi.org/10.1007/s13142-016-0453-1.

Moskowitz JT, Addington EL, Shiu E, Bassett SM, Schuette S, Kwok I, Freedman ME, Leykin Y, Saslow LR, Cohn MA, Cheung EO. Facilitator contact, discussion boards, and virtual badges as adherence enhancements to a web-based, self-guided, positive psychological intervention for depression: randomized controlled trial. J Med Internet Res. 2021;23(9):e25922. https://doi.org/10.2196/25922.

Musiat P, Johnson C, Atkinson M, Wilksch S, Wade T. Impact of guidance on intervention adherence in computerised interventions for mental health problems: a meta-analysis. Psychol Med. 2022;52(2):229–40. https://doi.org/10.1017/S0033291721004621.

Borghouts J, Eikey E, Mark G, De Leon C, Schueller SM, Schneider M, Stadnick N, Zheng K, Mukamel D, Sorkin DH. Barriers to and facilitators of user engagement with digital mental health interventions: systematic review. J Med Internet Res. 2021;23(3):e24387. https://doi.org/10.2196/24387.

Diener E, Heintzelman SJ, Kushlev K, Tay L, Wirtz D, Lutes LD, Oishi S. Findings all psychologists should know from the new science on subjective well-being. Can Psychol. 2017;58(2):87–104. https://doi.org/10.1037/cap0000063.

Ryan RM, Deci EL. Self-determination theory. In: Maggino F, editor. Encyclopedia of quality of life and well-being research. Springer International Publishing; 2022. p. 1–7. https://doi.org/10.1007/978-3-319-69909-7_2630-2.

de Jonge J, Dormann C. Stressors, resources, and strain at work: a longitudinal test of the triple-match principle. J Appl Psychol. 2006;91(6):1359–74. https://doi.org/10.1037/0021-9010.91.5.1359.

Newman DB, Tay L, Diener E. Leisure and subjective well-being: a model of psychological mechanisms as mediating factors. J Happiness Stud. 2014;15(3):555–78. https://doi.org/10.1007/s10902-013-9435-x.

Virtanen A, Van Laethem M, de Bloom J, Kinnunen U. Drammatic breaks: break recovery experiences as mediators between job demands and affect in the afternoon and evening. Stress Health. 2021;37(4):801–18. https://doi.org/10.1002/smi.3041.

Weermeijer JDM, Wampers M, de Thurah L, Bonnier R, Piot M, Kuppens P, Myin-Germeys I, Kiekens G. Usability of the experience sampling method in specialized mental health care: pilot evaluation study. JMIR Form Res. 2023;7:e48821. https://doi.org/10.2196/48821.

Kujanpää M, Syrek C, Lehr D, Kinnunen U, Reins JA, de Bloom J. Need satisfaction and optimal functioning at leisure and work: a longitudinal validation study of the DRAMMA Model. J Happiness Stud. 2021;22(2):681–707. https://doi.org/10.1007/s10902-020-00247-3.

Acknowledgements

We would like to thank the radiology nursing program at Karolinska Institutet for a fruitful collaboration and support with inviting participants. Also, we want to extend gratitude to all participants who contributed their time and effort during the study.

Funding

Open access funding provided by Karolinska Institute. The research was funded by a grant from Strategic Research Area Health Care Science (SFO-V): “A strategic area in health care science research at Karolinska Institutet and Umeå University for increased collaboration with healthcare providers and the business sector, and the renewal and expansion of advanced education”.

Author information

Contributions

L.K. has contributed to conceptualization, data curation, data collection, formal analysis, methodology, project administration, and writing. A.F. has contributed to conceptualization, supervision, reviewing, and editing. S.K. has contributed to supervision, methodology, reviewing, and editing. A.S. has contributed to conceptualization, methodology, and reviewing. A.B. has contributed to conceptualization, data analysis, project administration, funding acquisition, methodology, supervision, reviewing, and editing.

Ethics declarations

Ethics approval and consent to participate

Ethical approval was granted by the Swedish Ethical Review Authority (reference numbers 2020–01795 and 2022–01546-02). All participants consented to take part in the study by accepting the informed consent statement: “I consent to participate and that my information is handled in the way described in the participant information document”.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kowalski, L., Finnes, A., Koch, S. et al. Recovery at your fingertips: pilot study of an mHealth intervention for work-related stress among nursing students. BMC Digit Health 2, 64 (2024). https://doi.org/10.1186/s44247-024-00120-w
