Abstract

Background

While many digital mental health interventions (DMHIs) have been shown to be effective, such interventions also have been criticized for poor engagement and retention rates. However, several questions remain about how users engage with DMHIs, how to define engagement, and what factors might help improve DMHI engagement.

Main abstract

In this narrative review, we show that although DMHIs are criticized for poor engagement, research suggests engagement rates are quite variable across studies and DMHIs. In some instances, engagement rates are high, even in real-world settings where there is evidence of a subset of users who could be considered ‘superusers’. We then review research on the barriers and facilitators to DMHI engagement, highlighting that qualitative research of users’ perceptions does not always align with quantitative research assessing relationships between these barriers/facilitators and actual engagement with DMHIs. We also introduce several potential issues in conceptualizations of DMHI engagement that may explain the mixed findings, including inconsistent definitions of engagement and assumptions about linear relationships between engagement and outcomes. Finally, we outline evidence suggesting that engagement with DMHIs is comparable to mobile application use broadly as well as engagement with more traditional forms of mental health care (i.e., pharmacological, psychotherapy).

Conclusions

In order to increase the number of people who can benefit from DMHIs, additional research on engagement and retention is necessary. Importantly, we believe it is critical that this research move away from several existing misconceptions about DMHI engagement. We make three recommendations for research on DMHI engagement that we believe, if addressed, are likely to substantially improve the impact of DMHIs: (1) the need to adopt a clearly defined, common definition of engagement, (2) the importance of exploring patterns of optimal engagement rather than taking a ‘one size fits all’ approach, and (3) the importance of defining success within DMHIs based on outcomes rather than the frequency or duration of a user’s engagement with that DMHI.

Background

In 2005, when digital health was in its infancy, Eysenbach wrote a seminal article entitled “The Law of Attrition” highlighting the low retention and engagement rates in eHealth studies of digital interventions [1]. Almost two decades later, digital mental health interventions (DMHIs) are still criticized for poor engagement and retention rates [2,3,4,5], though little research has directly focused on engagement with DMHIs. Moreover, while it is generally accepted that engagement refers to the frequency or duration of DMHI usage (e.g., total number of activities or modules completed) and adherence refers to the extent to which a user completes an intervention as intended (e.g., proportion of DMHI content accessed, number of weeks of the intervention when a user was active), operational definitions of these terms vary considerably across studies. In this article, we revisit the issue of engagement with DMHIs, review the current evidence for barriers and facilitators of engagement with DMHIs, and highlight several methodological and conceptual issues that make evaluating and defining engagement particularly challenging.

Engagement and retention rates in DMHIs

DMHI engagement in research trials

Although many DMHIs are criticized for low engagement and retention rates, engagement rates tend to be underreported in published research [6] and are rarely reported publicly for available DMHIs (though there are services that provide estimates of app engagement for a fee). Among studies that do report on engagement, however, there is a great deal of variability in engagement rates. Some studies report low levels of engagement, consistent with Eysenbach’s [1] original analysis. For example, one study of university students in Macau found that 36.84% of their sample failed to engage with the DMHI whatsoever, and only 23.68% completed the five-session intervention [7]. A systematic review of DMHIs for young people also reported that of the reviewed studies that reported on engagement, participants typically completed less than half of the intervention content [8].

Other studies report less troublesome engagement rates. A pilot RCT of the DMHI Moodivate reported that 66.7% of participants were still active after one month (i.e., utilized the app during the 4th week), 50% were active after two months (i.e., utilized the app during the 8th week), and 42.9% of participants used the app an average of once a day throughout the trial [9]. Some studies even report remarkably high engagement rates. In a study of Intellicare, a suite of mental care apps, Mohr et al. found that 99 of the 105 participants (94%) initiated treatment via Intellicare, and of those, 96% continued to use the apps at five weeks and 90% continued to use the apps at eight weeks [10]. A meta-analysis of 140 trials testing online treatments for anxiety also found that 98% of participants enrolled in clinical trials initiated treatment, and on average, 81% of treatments were completed [11]. Similarly, in a small study of PRIME-D, Schlosser et al. found that although participants were only encouraged to sign on at least once a week, participants logged on an average of 4.5 times per week [12].

Considered together, these studies suggest that engagement with DMHIs may be neither inherently nor consistently low, and that engagement and adherence rates vary widely across studies and DMHIs. Indeed, reviews of DMHI studies report wide ranges of engagement and adherence. For example, one review of randomized controlled trials (RCTs) on smartphone-delivered DMHIs reported that, across studies, rates of failure to download or use the DMHI ranged from 0 to 58%, whereas rates of complete adherence (i.e., completing all intervention requirements) ranged from 2 to 92% [13]. Similarly, a systematic review of DMHIs delivered in workplace settings found that although the average level of adherence (i.e., completing all intervention components) across studies was 45%, adherence rates for individual studies ranged from 3 to 95% [14].

Real-world engagement

Arguably, engagement rates from RCTs investigating DMHIs are inflated compared to real-world deployments [15,16,17]. Indeed, one study found that levels of engagement with self-guided DMHIs were four times higher in RCTs compared to real-world usage of the same DMHI [16]. Conceivably, multiple factors including monetary incentives, differences between research participants and real-world users such as higher levels of motivation as a result of screening or consenting procedures, greater perceived accountability via calls and/or visits with study staff, and rigid inclusion/exclusion criteria, may contribute to higher levels of engagement in RCTs relative to real-world settings.

However, studies of real-world engagement with DMHIs similarly report substantial variability in engagement rates. One review of 10 real-world studies of seven publicly available DMHIs found that rates of minimal usage ranged from 21% to 88%, moderate usage ranged from 7% to 42%, and sustained usage (completing all intervention modules or engaging longer than six weeks) or completing the intervention ranged from 0.5% to 28.6% [18]. Other research suggests some DMHIs may have high numbers of monthly active users, whereas others have nearly none [19].

Furthermore, there may be subsets of real-world users that engage at particularly high levels. For example, a cross-sectional survey of 12,151 users of the Calm meditation app found that the average length of engagement with their DMHI was 11.49 months and 60% were using the app five or more times per week [20]. Although this was a convenience sample that is unlikely to be representative of the average user, particularly because users had to open at least two Calm emails and complete at least two sessions during the previous 30 days in order to qualify for the study, it highlights that there are subsets of real-world DMHI users who are very engaged, both in terms of frequency and duration of usage.

Patterns of engagement

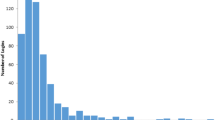

DMHI studies, with some exceptions [21], suggest that engagement rates exhibit a non-linear pattern where they are the highest after signing up for the DMHI, decrease over time, and ultimately level off [22, 23]. For example, one study found the largest decreases in engagement within the first two to four weeks [23], whereas another study of a DMHI for Body Dysmorphic Disorder found that approximately three quarters of users’ engagement occurred during the first six weeks [24]. Although these data could suggest that users are disengaging from the DMHI over time, the relationship between time and engagement may be more nuanced.

One issue is that many studies report average levels of engagement at a sample level, which may be problematic given the high variability in engagement rates discussed above. More recently, research has started applying statistical approaches that permit exploring patterns of engagement among different clusters of users. In one such study, Chen et al. examined patterns of usage across the Intellicare platform, which houses 13 different DMHIs [25]. They identified four clusters of users. Two clusters reflected overall use, regardless of the DMHI utilized: a low usage group that had low levels of engagement across all DMHIs, and a high usage group that had comparatively higher levels of engagement overall. The other two clusters reflected a preference for a specific DMHI within the platform (i.e., Daily Feats, and Day to Day). Although the low usage cluster was the largest of the four groups, making up just over 45% of their sample, the two clusters exhibiting higher levels of engagement with a specific DMHI made up just over 47% of the sample when combined.

In another study, Agachi et al. used hidden Markov modeling to examine patterns of activity versus inactivity among DMHI users over a 78-week observational period [26]. They found that, on average, 70% of participants were considered to be inactive, whereas 18% were considered to be engaging at the average level, and 12% had high levels of engagement. Importantly, they found that users classified as inactive or high engagers were relatively stable; users who were inactive in Week 1 had a 91% likelihood of remaining inactive in Week 2, whereas users who were high engagers in Week 1 had an 82% likelihood of remaining highly engaged in Week 2. Users classified as average engagers, however, had the highest risk of transitioning to a lower rate of activity—with a 64% likelihood of remaining moderately engaged from Week 1 to Week 2, but a 31% likelihood of dropping to inactive. This suggests that efforts to improve engagement among those who are inactive may be less effective, and that strategies should be adopted to help maintain (or increase) levels of engagement amongst those who are moderately engaged after starting a DMHI.
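
To make the stability argument concrete, the short Python sketch below converts the week-to-week "stay" probabilities quoted above into an expected number of consecutive weeks in each state. It is an illustration rather than a reproduction of Agachi et al.'s model: it assumes, hypothetically, that the Week 1 to Week 2 probabilities apply unchanged to every later week, and the function names are our own.

```python
# Week 1 -> Week 2 probabilities of staying in the same state, as quoted in the text.
# Assumption (ours, for illustration only): these values hold for every later week too.
STAY_PROB = {"inactive": 0.91, "average": 0.64, "high": 0.82}


def prob_still_in_state(stay_prob: float, weeks: int) -> float:
    """Probability of staying in the same state for `weeks` consecutive weekly transitions."""
    if not 0.0 <= stay_prob <= 1.0 or weeks < 0:
        raise ValueError("stay_prob must be in [0, 1] and weeks must be non-negative")
    return stay_prob ** weeks


def expected_weeks_in_state(stay_prob: float) -> float:
    """Mean of the geometric dwell time, 1 / (1 - p), counting the current week."""
    if not 0.0 <= stay_prob < 1.0:
        raise ValueError("stay_prob must be in [0, 1)")
    return 1.0 / (1.0 - stay_prob)


if __name__ == "__main__":
    for state, p in STAY_PROB.items():
        print(
            f"{state}: about {expected_weeks_in_state(p):.1f} expected weeks in state, "
            f"{prob_still_in_state(p, 4):.2f} probability of four consecutive stays"
        )
```

Under this simplifying assumption, the inactive state has the longest expected dwell time and the average state the shortest, which mirrors the point that moderately engaged users are the least stable group.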

These strategies may be particularly important when users begin using a DMHI as there is some indirect evidence that DMHI users who overcome the initial risk of dropout may be likely to remain active over time. For example, one study of 93 mental health apps found that the median 15-day retention across apps was 3.9% and the median 30-day retention was 3.3%, suggesting minimal drop-off after this initial two-week period [3]. Similarly, Dahne et al. found that although there was a drop of nearly 20% in active users between the second and third week of their trial, the rate of active users remained flat between the third and sixth weeks (with smaller drops in active users occurring at the seventh and eighth weeks) [9]. Looking at more long-term engagement, a retrospective analysis of Vida Health’s DMHI also found that among users who completed an assessment after a 12-week intensive intervention phase, 77% were still active users after six months, and 58% were still active after nine months [27].
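
The "minimal drop-off" reading can be checked with simple arithmetic: dividing a later retention rate by an earlier one gives the share of early survivors who are still active. The Python sketch below is a rough illustration using the figures quoted above; the helper name is hypothetical, and because the 15-day and 30-day figures are medians across apps, their ratio is only an approximation of per-app conditional retention.

```python
def conditional_retention(earlier_rate: float, later_rate: float) -> float:
    """Share of users retained at the earlier time point who are still retained later."""
    if not 0.0 < earlier_rate <= 1.0:
        raise ValueError("earlier_rate must be in (0, 1]")
    if not 0.0 <= later_rate <= earlier_rate:
        raise ValueError("later_rate must be between 0 and earlier_rate")
    return later_rate / earlier_rate


if __name__ == "__main__":
    # Median retention across 93 apps: 3.9% at day 15 and 3.3% at day 30 [3].
    day15_to_day30 = conditional_retention(0.039, 0.033)
    # Vida Health analysis: 77% active at six months, 58% at nine months [27].
    month6_to_month9 = conditional_retention(0.77, 0.58)
    print(f"Day 15 to day 30: {day15_to_day30:.0%} of retained users remain")
    print(f"Month 6 to month 9: {month6_to_month9:.0%} of retained users remain")
```

On these figures, roughly 85% of users retained at day 15 are still retained at day 30, and roughly 75% of users active at six months are still active at nine months.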

Other studies have employed similar statistical approaches to identify types of users using other characteristics as well as engagement patterns. Aziz et al. found that approximately 93% of their DMHI users fell into one of two clusters which corresponded with life satisfaction and varying patterns of regular use [28]. A larger cluster (68% of users) could be described as having a greater need, due to lower levels of life satisfaction, and engaged with the DMHI almost daily, whereas the second, smaller, cluster (25% of users) exhibited less consistent use but had comparatively greater life satisfaction. In other words, users’ engagement patterns may have been primarily driven by whether users perceived a need for a DMHI—and those with less need (e.g., those with higher life satisfaction) may not engage as frequently.

Taken together, these studies suggest that a more individualized approach to conceptualizing or studying engagement with DMHIs is important, as users do not engage with these interventions in the same way. More specifically, Agachi et al.’s research suggests that a large proportion of users may be inactive almost right away, and that these users may be particularly difficult to re-engage [26]. More research should focus on what might predict these clusters of activity; however, Aziz et al.’s study suggests that one potential predictor may be perceived need [28]. Interestingly, if perceived need is an important driver of initial engagement with a DMHI, this may also help to explain why engagement becomes less frequent over the first six weeks: if the DMHI is effective, the user likely experiences improvements in these first six weeks, thereby reducing the perceived need and the motivation to continue engaging at the same level. Chen et al.’s study further suggests that some users may prefer a variety of content, as evidenced by the cluster of users who engaged at a high level across the suite of Intellicare apps, whereas others may show a preference for a specific type of content or intervention [25]. Research exploring the different predictors of these types of engagement patterns could also prove beneficial to DMHI developers, who may be able to use this information to personalize their interventions by adjusting their content to suit the user’s preference. And, as we discuss in more detail later, understanding the extent to which different patterns of engagement can be effective—and whether different people benefit from specific patterns—is an important next step in this line of research.

Summary

Despite broad criticism that engagement and retention rates are particularly bad for DMHIs, the research suggests there is substantial variability in these rates. This research also suggests that one cohort of users—perhaps the largest cohort—sign up for a DMHI but show little to no engagement. This engagement pattern tends to be stable, making this cohort particularly difficult to engage. Given how easily most DMHIs can be accessed and downloaded, this pattern may suggest that many people download a DMHI without any commitment or intention to begin using it. However, there are other cohorts of users who exhibit high levels of engagement, and this pattern of engagement is also relatively stable over time. Consequently, identifying the factors that predict these high levels of engagement may be particularly important to increasing the size of this particular cohort, thereby increasing the number of users who can benefit from DMHIs.

Barriers and facilitators to engagement with DMHIs

Some authors have posited that low engagement with DMHIs may be reflective of poor design, a failure to address problems users are most concerned about, or being viewed as not respecting privacy, being untrustworthy, or not useful in emergencies [2]. While these have been cited as causes of low engagement, there is currently very little evidence supporting these as causal mechanisms of low engagement.

Other, more recent systematic reviews have taken a less problem-focused and more solutions-focused approach to engagement with DMHIs, by not only isolating potential barriers to engagement but also highlighting potential facilitators of engagement. For example, in their review of 208 papers published between 2010 and 2019, Borghouts et al. identified three categories of barriers and facilitators: user characteristics, program features, and technology and environmental characteristics [29]. Given the spike in interest in DMHIs since 2019, in the next section, we provide an updated review of this literature; a summary is also provided in Table 1.

User characteristics

Conceivably, the variability in engagement rates, and the different patterns of engagement, among DMHI users may be a result of characteristics of the users themselves. Unfortunately, few studies have examined the relationship between participant characteristics and engagement with the DMHI—and even fewer studies have been conducted with the aim of understanding how these characteristics predict engagement. Among studies that have considered these characteristics, the most commonly assessed variables are, not surprisingly, gender and age—consequently, our current understanding of which individual difference variables influence engagement with DMHIs remains quite limited and warrants further exploration.

Gender

In terms of uptake of DMHIs, studies have found higher rates of adoption among women than among men [26, 30], which is consistent with the lower rates of mental health care adoption among men more generally [31, 32]. When examining engagement among those who have already adopted a DMHI, however, the impact of gender is less clear. Some studies have found that rates of engagement are lower among men [8, 30, 33, 34] but, in others, this effect has been limited to specific aspects of engagement. For instance, one study found that women spent more time overall in a suite of mental health apps, but there was no significant effect of gender on the number of completed sessions or on session length [10]. Another study found a significant relationship between gender and logins, but not with other engagement metrics [12]. Several other studies have found no significant effect of gender [15, 35, 36] or that women are more likely to be inactive than men [26].

One potential explanation for the inconsistencies across studies is that gender does not directly predict engagement but is related to other factors that impact engagement (e.g., DMHI adoption, perceived stigma). In this case, the extent to which gender predicts engagement may depend on specific features of the DMHI, resulting in substantial variability across studies. Indeed, research suggests that male participants were more likely to report using a DMHI out of curiosity relative to female participants, and identify a low perceived need as a reason for not adopting a DMHI [30]. Other research suggests that men are less interested in self-help interactive programs or activities intended to help reduce stress, and more interested in information delivered in video game formats, compared to women [37]. Thus, although more research is needed to understand the impact of gender on adoption of, and engagement with, DMHIs, research aimed at unpacking whether this differs based on types of DMHIs, or features of the DMHIs, would be particularly useful. In addition, to our knowledge, all research exploring the relationship between gender and adoption/engagement has treated gender as a binary variable and, consequently, little is known about how those with other gender identities might engage with DMHIs. Given the high prevalence of mental health concerns among gender diverse populations [38], further work in this area is critical.

Age

Much like gender, research on the influence of age on engagement has yielded largely mixed results. In their review, Borghouts et al. reported that while some studies find greater levels of engagement among users between the ages of 16 and 50, other studies find greater levels of engagement among users over the age of 30 [29]. A more recent study similarly found that participants aged 35 or older were more likely to watch videos within the DMHI compared to those aged 18 to 34, but no significant effects of age were found for other measures of engagement [35]. Taken together, these findings suggest that engagement may be highest among those users aged 30 to 50.

However, this conclusion is partially driven by the fact that adoption and engagement are sometimes conflated, and the effect of age on adoption of DMHIs and engagement with DMHIs may differ. For instance, in our own research of real-world users of a digital well-being intervention, we found that while older adults (aged 65 or older) are less likely to sign up for DMHIs, rates of optimal engagement among older adults who start using the DMHI are comparable to those of younger age groups [39]. Similarly, a recent pilot feasibility study of one DMHI, Wysa, for workers’ compensation claimants found that more than 70% of their onboarded population was between 25 and 56 years of age, but the age group with the highest rate of engagement and retention was between 57 and 75 years of age [40]. Understanding the impact of age on engagement is further complicated by the fact that youth and older adults are often treated as separate samples, making a comparison of engagement rates from these cohorts to other age groups difficult.

Race and ethnicity

Perhaps the most under-studied user characteristic when examining predictors of adoption and engagement with DMHIs is race and ethnicity. In fact, a recent systematic review of DMHIs found that only 48% of published studies reported on their sample’s race and ethnicity characteristics, most of which did not examine the impact of race or ethnicity as a predictor of engagement or outcomes [41]. However, what research exists to date suggests, optimistically, that race or ethnicity may have little impact on engagement. In our own research, we have found no statistically significant differences in rates of optimal engagement based on self-reported race or ethnicity [42]. Similarly, research with adolescents found no significant effect of race on completion rates for a digital, single-session intervention [15], and a study exploring usage of the Intellicare suite of DMHIs found no significant effect of race or ethnicity on number of sessions, length of sessions, or total time spent in the programs [10].

Although these effects are promising, other research suggests there may be unique barriers to uptake and engagement based on race and ethnicity that are important to consider in order to make DMHIs as accessible as possible. Specifically, Kodish, Schueller, and Lau explored perceived barriers to uptake of DMHIs among college students, and particularly students of color. Ratings from academic and industry experts identified numerous barriers to uptake for college students, including 10 barriers that were considered relevant to specific race/ethnic groups. The two most important barriers were a mistrust of mental health services, systems, and providers, followed by a lack of culturally-responsive services [43].

Other characteristics

Although less commonly examined, studies have also shown that lower levels of education are associated with a higher risk of dropout [44]. Relatedly, lower levels of health literacy, digital literacy, and mental health literacy have all been linked to poorer adherence to DMHIs [29, 36]. Thus one potential avenue to improve engagement—at least in some populations—is to provide more education around DMHIs, and more support for people with lower levels of digital or health literacy within DMHIs themselves. The Digital Clinic at Beth Israel Deaconess Medical Center has advocated for the integration of digital navigators in the implementation of DMHIs. These are trained individuals who work alongside clinicians to help new users identify an appropriate DMHI, troubleshoot technical aspects of the DMHI, and derive clinically meaningful insights from DMHI data [45]. Although digital navigators are trained in engagement techniques [46], to our knowledge, there is no empirical data on whether the integration of digital navigators improves engagement or adherence. Nevertheless, this type of approach is important to address potential barriers to DMHI adoption and engagement among users who are at a greater risk of dropout due to lower levels of education, literacy, or comfort with technology.

Studies have also explored whether the severity of mental health symptoms influences engagement with DMHIs; however, the evidence here is mixed. While some research suggests that more severe mental health symptoms may be a barrier to engagement [29, 44, 47], other studies have found better levels of adherence among people with more severe mental health symptoms including depression [34], anxiety, and perceived stress [48]. Conceivably, these inconsistent results may be due to the fact that mental health symptoms do not directly predict engagement with DMHIs, but may impact a user’s level of motivation, which has been shown to predict engagement more consistently [47, 49].

Notably, although personality traits appear to influence interest in using DMHIs [50] and the type of DMHI content users prefer [51], research on the impact of personality on engagement remains scant. One study found that openness to experience and resistance to change predicted adherence to a mindfulness-based DMHI among cancer patients, but these effects were no longer significant when controlling for gender [34]. Another study found that moderate extraversion and high neuroticism were associated with more consistent use of mental health applications relative to high extraversion and low neuroticism [28]. Given the lack of consistency in effects of other user-level predictors of DMHI engagement, additional research exploring whether personality traits predict engagement—either directly, or indirectly via other mechanisms like motivation—is an important avenue for future DMHI research.

Program features and engagement with DMHIs

The category of facilitators and barriers of engagement with DMHIs that has received the most empirical attention is program features, likely because these are variables that can be manipulated more easily, expeditiously, and in a more cost-effective manner. Qualitative research with DMHI users suggests that users perceive usability, efficiency, and effectiveness as important determinants of DMHI engagement. More specifically, participants have reported that poor usability or programs that require too much time are barriers to engagement [52], whereas a DMHI’s effectiveness, interesting content, reminders, and being able to track progress are perceived as facilitators to engagement [47, 49, 52]. However, these studies provide little insight about what specific features may improve factors like usability or perceived effectiveness—and research suggests that ratings of user experience alone do not predict user retention [5]. Some research has focused on the potential impact of specific features, most of which has focused on human support or chatbots, and prompts.

Human support

Although several studies have shown that guided, or supported, DMHIs have stronger effects than self-guided, or unsupported, interventions [53,54,55,56], and that higher levels of engagement with coaching predict better outcomes [12], the evidence for how the addition of human support impacts engagement with DMHIs is less clear. Qualitative research suggests that users report a preference for human support, such as e-coaching [8, 47], and indicate that not having someone to talk to makes it easier to discontinue usage [47]. Evidence for whether such support increases actual engagement and retention is mixed, however.

Some early reviews and meta-analyses did find higher levels of engagement in supported interventions [56]. Similarly, early studies showed that adding therapist support delivered via coaching by telephone [57], or non-clinical support via peer-to-peer feedback [58], increases adherence and engagement. However, more recent reviews testing the impact of coaching have either found no significant effect of support on engagement or adherence to DMHIs [36, 55, 59], or inconsistent results across studies [60, 61] or types of engagement [62]. In their review, Bernstein et al. argued that the lack of information about how engagement was defined, as well as lack of information about the coaching itself, may contribute to the inconsistent results across studies [60].

In addition, research has shown that therapeutic alliance within both supported and unsupported DMHIs predicts engagement with the DMHI [63]; therefore, the extent to which therapist- or coach-delivered support predicts engagement may depend on the extent to which these providers can successfully build a therapeutic alliance with users of DMHIs (which is rarely measured in these studies). In fact, engagement with therapist- or coach-delivered support is often characterized by low engagement rates in studies, which may negatively impact therapeutic alliance. In a recent pilot feasibility study of a DMHI implemented in outpatient orthopedic settings, while the weekly engagement rate with the DMHI was 57%, coaching engagement was 33%, with the median number of messages sent to a coach, or text-based sessions with a coach, being zero [64]. Another study found that only 8.5% of their participants sent more than five messages to a therapist within a guided DMHI over 14 weeks, and the majority of their participants (56.4%) sent no messages whatsoever [65]. Interestingly, one study comparing optional versus standard therapist support as part of a digital intervention for depression and anxiety found that the number of messages exchanged between user and therapist was lower when support was optional, as were completion rates, but changes in depression and anxiety were comparable across the two groups [66]. This suggests that to be effective for boosting engagement and adherence, coaching may need to be integrated as a standard, rather than an optional, feature of the DMHI.

Other research suggests there may be heterogeneity in users’ preferences for the type, or amount, of support they receive within DMHIs. For instance, in a study of employees with access to a workplace DMHI, several participants indicated they never engaged with e-coaching or felt unclear about the role of the e-coach. Among the employees who did engage with e-coaching, some participants indicated wanting the coach to be more proactive and have more contact overall, whereas others wanted to be able to contact the coach on their own terms [47]. The extent to which coaching helps improve engagement and retention may, therefore, depend on the extent to which that support matches the user’s support preferences. Overall, although the notion that coaching should increase engagement and retention is intuitive, given the relative lack of systematic research testing the effects of human support on engagement, and the mixed findings across studies, whether such support truly facilitates engagement remains unclear.

Although coaching or therapist-support tends to be the most common form of human support in DMHI research, other forms of human support may also influence engagement in DMHIs. Qualitative studies suggest that the ability to connect with peers via the DMHI is a desirable feature [7, 8], particularly among youth [49, 67]. And although research exploring the potential benefits of social support integrated into DMHIs on engagement and adherence is quite limited, the current evidence is promising. In one study, people who had access to an anonymous, online discussion group in combination with a minimally-guided DMHI were more likely to log on to the DMHI compared to participants who did not have access to the discussion group [67, 68]. Another study found that engagement rates were higher for an online stress management program for workplace settings when it was accompanied by a weekly support group compared to a fully self-guided program, although support groups in this case met face-to-face rather than virtually [69]. Adding social networking components to a DMHI has also been shown to increase engagement [70].

Emerging work suggests that the format of this type of support may also be relevant. Research evaluating an Internet-based stress management program with the addition of an online message board found that 85% of participants who accessed the message board at least once said it was not helpful because they did not have the time or interest to participate, they experienced difficulties accessing the message board, or the message board was not active enough [71]. Similarly, research suggests that videoconferencing may not be a desirable format for support groups. In one study, researchers found that 32% of participants assigned to the DMHI condition including weekly videoconferencing dropped out of the study after being assigned to that condition, significantly higher than what they observed in the other conditions [72]. In another study, the same authors found low participation rates in videoconferencing sessions, and no difference in engagement between participants assigned to the DMHI with weekly videoconferencing and those assigned to the DMHI with only email prompts [73].

Taken together, although early research suggested that human support may be the pathway to improved engagement and adherence, additional research is needed to understand how to improve engagement with the support itself, and what type of support is optimal for improving engagement. More importantly, human support alone may not address some of the primary barriers to engagement with DMHIs. For example, Renfrew et al. argued that time constraints tend to be the biggest barrier to engagement with DMHIs, and that human support may not only fail to remove this barrier but may actually exacerbate it [74]. Thus, while beneficial to some DMHI users, human support is unlikely to help increase engagement universally.

Chatbots

Because human support inevitably limits the scalability of DMHIs, there has been growing interest in whether this level of support can be provided by other sources, like artificially intelligent chatbots. Several existing DMHIs use chatbots, or relational agents, to deliver their intervention content, and research suggests that users can develop a therapeutic alliance with these chatbots [75, 76]. Although few studies have examined whether chatbots can also increase engagement, there is some promising evidence that the addition of a chatbot helps to increase engagement compared to self-guided versions of the same DMHIs [77, 78]. Moreover, with the recent interest in and increased adoption of products leveraging generative artificial intelligence (e.g., large language models such as ChatGPT or Google’s Bard), future research should also consider the extent to which these facilitate engagement.

Prompts

Research on the impact of prompts on engagement and adherence includes phone reminders, emails, and push notifications. A recent review suggests that the evidence for the benefits of phone reminders, particularly above and beyond the benefits of automated reminders, is mixed [61]. Of the four reviewed studies, two found incremental benefits of phone reminders over automated reminders [79, 80], but two found no significant effects [81, 82]. More importantly, phone reminders are unlikely to be practical outside controlled research settings due to scalability and privacy concerns.

Although automated reminders are more practical, the research to date suggests these may be minimally effective at improving engagement and retention. A review and meta-analysis of studies exploring the impact of prompts on engagement with digital interventions, including DMHIs, found that although there was some evidence that participants who received technology-based prompts engaged more frequently compared to those who received no engagement strategy, this effect was limited to a dichotomous definition of engagement and was considered small to moderate in size [83].

Importantly, some types of automated prompts (e.g., push notifications) may be more effective than others (e.g., emails). For example, in their 78-week observational study of patterns of DMHI activity, Agachi et al. found that receiving and opening an email increased a user’s probability of moving from an inactive status to an active status by only 3% [26]. Notably, the type of email influenced the extent to which the email was successful in boosting engagement. Specifically, receiving and opening a welcome email was associated with a 12% increased probability of moving from the inactive category to an engaged category, compared to 3% when receiving and opening a health campaign email. Some emails may even have negative effects on engagement; receiving and opening a newsletter, special offer email, or reactivation email all were associated with an increased probability of inactivity [26].

As with human support, one aspect that appears to limit the impact of email reminders is low engagement. Industry benchmarks have shown that the average email open rate across industries is 21.33%, with an average click rate of 2.62% [84]. Average open rates and click rates for the Health and Fitness industry, the Medical, Dental and Healthcare industry, and the Software and Web App industry were all in line with these industry averages as well. And although few studies report on open rates, those that do also suggest relatively low levels of engagement with email prompts. Agachi et al. reported that 60.5% of participants opened a reminder email, on average, and open rates varied substantially over the course of the study (from 26.65% to 76.1%) [26]. Another study of a digital health tool for diabetes reported that their most successful email prompt had a 43.2% open rate, and among those who opened the email, 28.6% subsequently visited the website. Their poorest performing email had a 22% open rate and generated no subsequent website visits [85].
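To make the size of this funnel concrete, the figures reported in [85] can be combined into the share of emails that ultimately lead to a website visit. The sketch below is illustrative only: the function name is hypothetical, and it assumes the reported open rate is a share of emails delivered and the visit rate is conditional on opening.

```python
def visit_rate_per_email(open_rate: float, visit_rate_given_open: float) -> float:
    """Share of delivered emails that end in a website visit.

    Assumes open_rate is a proportion of delivered emails and
    visit_rate_given_open is a proportion of opened emails.
    """
    return open_rate * visit_rate_given_open


# Best- and worst-performing prompts as reported in [85].
best = visit_rate_per_email(0.432, 0.286)
worst = visit_rate_per_email(0.22, 0.0)

print(f"best prompt:  {best:.1%} of emails led to a visit")
print(f"worst prompt: {worst:.1%} of emails led to a visit")
```

Under these assumptions, even the most successful prompt converts only about one in eight emails into a website visit.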

Although there is evidence from other domains that SMS messaging may be more effective in terms of open and click-through rates [86], surprisingly little DMHI research has focused specifically on the impact of SMS messaging as an engagement feature. To our knowledge, only one study has examined the direct effect of adding SMS messaging to automated emails, and it found no significant differences in engagement between participants who received only automated emails and those who received automated emails and personalized SMS messages from a coach [73]. More research exploring the potential benefits of SMS messaging, particularly outside of a mode of delivery for coaching or therapeutic interventions, is needed.

Another way of delivering prompts to users that may circumvent the requirement that a user opens an email (or text message) to receive the information is to use push notifications delivered by smartphones. Although qualitative research has shown that the lack of push notifications within DMHIs is perceived negatively by users [87] and some research suggests DMHI engagement is more likely on days where users receive a prompt compared to days without prompts [88], other research suggests push notifications may be more effective at engaging users who are already engaged, rather than re-engaging inactive users. For example, one study found that the more time that had passed since a user’s last interaction with the intervention, the less likely a push notification was to successfully lead them to log in. By contrast, the higher the frequency of usage at the time the push notification was sent, the more likely it was to successfully lead them to log in [89]. In addition, frequent push notifications are also reported as a barrier to engagement [90], suggesting push notifications ultimately need to be used strategically to improve engagement.

One factor that appears to influence the effectiveness of push notifications is timing. One study found that delivering tailored health messages via push notifications led to small, but significant, effects on a user’s likelihood to use the DMHI within 24 h. And although not statistically significant, the authors found some evidence that delivering these messages on a weekend, especially mid-day, was most effective [91]. Similarly, the nature of the notification also appears to matter. Research shows that push notifications that are personalized based on real-time assessments of a user’s engagement may have the broadest impact on different forms of DMHI engagement [92], and that notifications with personalized suggestions may be more effective than those with personalized insights [89]. Taken together, push notifications appear to be one of the more promising types of prompts for engaging DMHI users, though more work is needed to better understand how to optimize these notifications.

Other program features

Other research has started to shed some light on whether specific program features may benefit engagement with DMHIs. Broadly, studies suggest that users prefer DMHIs that are customizable [29]. For example, one study explored whether the ability to customize an avatar would increase a participant’s engagement with digital attention bias modification training. The authors found evidence that participants who were able to customize their avatar experienced greater benefits from the training task compared to those who were assigned an avatar, which they suggested was due to deeper levels of engagement with the task [93]. Similarly, studies have shown that children and youth report a preference for DMHIs where they can customize their profile [49].

Studies also suggest that programs that offer incentives may have higher levels of engagement. Some researchers have suggested that one potential explanation for the higher levels of DMHI engagement in RCTs compared to real-world settings is that RCT participants receive monetary incentives [15]. Monetary incentives have been shown to increase engagement with other types of digital interventions [94, 95], and there is some evidence that adding monetary incentives to a DMHI increases the number of active days and activities completed among users [96]. Monetary incentives are unlikely to be a feasible approach for most DMHIs; however, research should explore whether other types of incentives can have similar benefits.

One way to incentivize engagement is to show users how engaging with the DMHI leads to improvements in mental health outcomes. In qualitative research, users report that perceived symptom improvement facilitates engagement [29, 52] and that they prefer being able to track their progress over time [47], whereas quantitative evidence for the effects of mental health improvement is less clear. One study found that the addition of a mood tracking questionnaire had minimal effects on engagement with a mindfulness app, particularly beyond one week [97]. Other research has found that changes in depression during an intensive phase of a DMHI program do not predict sustained engagement [27]. As discussed earlier, it is conceivable that, paradoxically, while users believe that effectiveness facilitates engagement, improvements in their mental health reduce the need for the DMHI, thereby decreasing (rather than increasing) engagement, a notion we revisit in a later section.

Environmental characteristics

Environmental characteristics refer to contextual factors outside the individual, or intervention, that may promote or hinder engagement with a DMHI, such as factors related to implementation. Currently, this is the set of predictors of engagement that has received the least amount of empirical attention, and much of the research that exists is qualitative in nature and focused on users’ perceptions of facilitators and barriers to engagement rather than measuring their impact on actual engagement.

Referral source and support

Qualitative research suggests that users perceive endorsements of a DMHI by others as a facilitator to uptake and engagement. In workplace settings, those with access to a web-based stress management program noted that encouragement and promotion from their employer and managers facilitated engagement with the program [47]. Similarly, another study found that consultants who were leading e-mental health sessions within workplace environments identified enthusiastic managers and tech-savvy champions as likely facilitators of DMHI uptake [98]. Cross-sectional surveys assessing interest in DMHIs also suggest that people indicate a greater interest in DMHIs that come recommended by clinicians, particularly mental health practitioners [99].

Although these studies suggest that people perceive these endorsements as facilitators to uptake and engagement, little quantitative research has tested whether endorsements from managers, clinicians, peers, or family actually impact engagement rates. One study did find that participants who learned about an Internet-based anxiety program via media outlets like television or newspapers were 1.76 times more likely to be retained, and those who learned about the program from friends or family were 1.42 times more likely to be retained, relative to participants who learned about the program some other way (e.g., brochures, support group, Facebook); however, this study defined retention as completing post-intervention assessments rather than engagement with the Internet-based program itself, irrespective of assessment completion [100].

Moreover, patterns of engagement across DMHI studies do not demonstrate a clear advantage when DMHIs are endorsed by these groups. Some studies have reported above industry standard engagement rates when participants learned about the DMHI through their employer [101] or during a visit to an orthopedic clinic [64]. However, other studies recruiting participants from clinic or workplace settings have failed to find higher than industry standard engagement rates. One such study recruited participants who had had a recent myocardial infarction from cardiac clinics in Sweden and found that while almost all participants (96.6%) initiated the first program module, only 54% of their sample completed the introductory module and 15% initiated a subsequent module [65]. In another study, only 8.1% of eligible employees across two companies adopted a DMHI, where employees were invited to information sessions via emails from HR managers [102].

Other environmental characteristics

One of the most common barriers to engagement identified by DMHI users in qualitative research is time constraints [29, 47, 49, 52]. Research further suggests that interventions that users feel they can integrate into their daily lives [29, 49] and that are accessible via technology they already use regularly [49] promote engagement. In workplace settings, it may be important to ensure DMHIs are accessible outside the workplace, as users found it difficult to engage when their workload was high [47]. In addition, several studies have identified cost as a potential barrier to DMHI engagement [29, 52]. These costs may include costs directly associated with access to the DMHI, but also phone- or Internet-related costs, which are required to engage with a DMHI.

Summary

Although numerous studies have explored the potential facilitators and barriers to engagement with DMHIs, most of these studies have relied on users’ accounts of the factors that promoted or interfered with their engagement. However, these perceptions do not necessarily correspond with predictors of objective measures of engagement, and the current research on predictors of objective measurements of engagement yields little clarity on what factors may promote engagement. While understanding users’ perceptions is important, additional research testing how different user, program, and environmental characteristics influence DMHI adoption and engagement, and user retention, is critical to understanding what levers may impact user perceptions as well as actual engagement with DMHIs.

Challenges and opportunities for research on engagement with DMHIs

What does ‘Engagement’ mean?

As we noted earlier, one of the biggest challenges within DMHI research on engagement is the lack of a clear, consistent definition of engagement. In the studies reviewed above, operational definitions of engagement vary widely, which may account for the mixed findings. The varied definitions also highlight an even bigger philosophical problem: what does engagement with DMHIs actually mean?

Adoption vs. Engagement

Although most studies of engagement define engagement as user interactions with the DMHI, such as the number of features or amount of content accessed, number of logins, or the length of time spent on the DMHI [61, 83], others include definitions of engagement that measure interest in adopting a DMHI as measured by signups [29]. However, factors that prompt someone to download a DMHI may be very different from those that promote engagement with the intervention.

Conceivably, people may download a mobile app out of curiosity or because it was recommended by someone they trust (e.g., friend, provider), but they may not be prepared to commit to engaging with the DMHI as intended. Notably, across all mobile apps, retention drops precipitously after just one day, with one-day retention rates at 22.6% and 25.6%, for Android and Apple users respectively [103]. Other research also has shown that the most downloaded mental health apps are not necessarily the ‘stickiest’ (a ratio of monthly active users to app downloads) [104], leading some researchers to argue that downloads may reflect a DMHI’s level of popularity or marketing success, rather than serving as a solid indicator of users’ willingness to engage [5]. This is consistent with the fact that, as discussed above, approximately 70% of DMHI users are considered inactive after signup, and this inactivity is persistent [26]. Collectively, this evidence suggests that, in the same way a patient may consult with a physician or complete an intake appointment with a mental health provider, but not follow through with treatment recommendations (e.g., filling a prescription, returning for a psychotherapy visit), people may download or sign up for a DMHI and then decide, for a variety of reasons, that the intervention is not something they want to engage with further at the present time.

As a result, computing engagement metrics from the point of downloading or signing up rather than initiating actual use of the intervention may be artificially reducing engagement metrics. Perhaps to truly understand engagement, some benchmark should be used to differentiate a user who is genuinely interested in starting the intervention versus someone who is in an exploratory phase of DMHI adoption.
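The effect of the choice of denominator can be illustrated with a small calculation. In the sketch below, the cohort size and the number of users still active at Week 4 are hypothetical values chosen for illustration; only the assumption that roughly 70% of signups never become active is taken from the figure cited above [26]. The function name is likewise hypothetical.

```python
def engagement_rate(active_users: int, denominator: int) -> float:
    """Proportion of a reference group that is still active."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return active_users / denominator


signups = 1000        # hypothetical cohort size
initiators = 300      # ~70% of signups never become active, per [26]
active_week_4 = 150   # hypothetical number of users still active at Week 4

rate_from_signup = engagement_rate(active_week_4, signups)
rate_from_initiation = engagement_rate(active_week_4, initiators)

print(f"Week 4 engagement, counted from signup:     {rate_from_signup:.0%}")
print(f"Week 4 engagement, counted from initiation: {rate_from_initiation:.0%}")
```

With these illustrative numbers, the same 150 active users correspond to a 15% engagement rate when counted from signup but a 50% rate when counted from initiation, which is why the choice of starting point should be reported explicitly.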

Objective vs. Subjective engagement

Although adopting a definition of engagement that excludes uptake or adoption helps to reduce some of the heterogeneity described above, studies of DMHI engagement are also typically limited to objective metrics of engagement that are passively collected within the intervention itself. Consequently, the most commonly used metrics include outcomes like the number of logins or activities completed, which may only address technological engagement, rather than other important aspects of engagement for success with DMHIs.

In a recent review, Nahum-Shani et al. defined engagement with digital interventions as “a state of energy investment involving physical, affective, and cognitive energies directed toward a focal stimulus or task” [105]. Usage metrics, like activity completion, can potentially measure the physical energy users direct towards the intervention, but are unlikely to capture affective or cognitive energies [106]. Arguably, one user could complete an activity rather passively, merely going through the motions while being distracted by things going on in their environment, whereas another user could engage more deeply with that same activity by completing it in a quiet room and with a greater level of investment—but both would be recorded as having completed the activity and, in turn, as having identical levels of ‘engagement’. This is further complicated by the fact that sometimes completing an activity within a DMHI and deriving the intended therapeutic benefit are one and the same, but in other instances the DMHI activity is meant to prompt a subsequent therapeutic activity or skill to be completed at some point in the future, which is not always measurable (e.g., completing an activity after interaction with the DMHI has concluded) [107]. Taken together, simple activity completion may be a less than optimal definition of engagement, at least where clinical outcomes are concerned, and more sophisticated frameworks may be necessary to better characterize engagement with DMHIs. For example, Nahum-Shani et al. have proposed the affect-integration motivation and attention-context-translation (AIM-ACT) model to facilitate improved understanding of engagement with DMHIs [105].

Some researchers have called for measuring other, less objective, forms of engagement. Graham and her colleagues have argued that subjective engagement, which they define as user ratings of usefulness, satisfaction, and ease of use, may be more important to clinical outcomes than objective engagement, like technology use [108, 109]. Preliminary findings from at least one RCT suggest that these subjective engagement metrics predict changes in depression and anxiety over the course of an eight-week intervention [108]; however, this study did not measure or control for objective engagement, making it impossible to determine whether users who found the DMHI more useful, found it easier to use, or were more satisfied with it also demonstrated correspondingly higher levels of objective engagement, as described above. This definition of subjective engagement also seems to focus more on a user’s perception of the DMHI itself, whereas other researchers have described subjective engagement more as the extent to which a user immerses themselves in the program [110].

Measuring this kind of engagement—what we call psychological engagement—is particularly difficult. Studies have attempted to do so using questionnaires, interviews or think aloud paradigms [110]; however, these do not translate well to real-world settings, where assessing engagement is particularly important. Conceivably, other usage metrics may be useful in estimating this kind of engagement. One way to do this is to measure the quality of a user’s engagement using objective approaches, like the degree of success achieved with DMHI activities or examining the content of text-based responses to questions asked as part of those activities [107]. This type of approach is becoming more feasible with sophisticated data-analytic techniques, such as machine learning, which have been applied to differentiate between high- and low-quality interactions with one DMHI [111]. In our own research, we also applied text analysis to determine that when an artificially intelligent chatbot designed for adherence fidelity delivered activities within a DMHI, users wrote more text and used words more directly relevant to the nature of the activity, compared to self-guided versions of those activities [78].

Multidimensional definitions of engagement

Another approach is to leverage the multiple objective usage metrics captured by DMHIs to move beyond a simple unidimensional definition of engagement, to a multidimensional definition that incorporates and synthesizes a variety of metrics. One recent study took this approach by conducting exploratory factor analysis on a variety of passively-measured engagement metrics that captured the amount of DMHI usage overall, the amount of usage of specific DMHI features, frequency of usage, periods of inactivity, consistency of use, and length of DMHI sessions [24]. Their factor analysis identified two engagement factors: frequency and duration of use. A cluster analysis further identified participants who exhibited different patterns of engagement with respect to these two factors: (1) users who engaged with the DMHI less frequently, but whose DMHI sessions were longer in duration; (2) users who engaged with the DMHI more frequently, but for shorter durations; and (3) users who had relatively low levels of both frequency and duration. Importantly, the authors found no significant differences in improvement in outcomes based on engagement pattern, though (not surprisingly) participants who had low levels of both types of engagement demonstrated a higher rate of attrition relative to other participants [24].

Another recent study found evidence of a latent factor structure for engagement with a DMHI designed for trauma recovery that integrated both objective and subjective measures, namely users’ attention or interest in the DMHI, positive affect, subjective measurements of DMHI usage, and objective measurements of DMHI pages viewed [112]. Although these frameworks require replication, multidimensional approaches to the definition of engagement such as these represent an important avenue for future DMHI research, and for achieving a deeper understanding of DMHI engagement.

What is an optimal level of engagement?

Another related challenge is determining what optimal engagement is; that is, how much engagement with a DMHI is necessary to produce a desired outcome? Even in the field of analog treatments, there are difficulties associated with knowing exactly how many sessions it will take for an individual patient to benefit from intervention and, consequently, operationalizing what qualifies as optimal “engagement” remains a challenge [113]. Criticisms of DMHI engagement largely rest on the tacit assumption that greater utilization of the DMHI corresponds to better outcomes, and research on engagement often shares this same assumption. However, the relationship between engagement and outcomes is not so clear.

Some studies have found that higher levels of usage do predict better outcomes. One study of computerized cognitive training with young adults found that training time was positively related to improvements in depressive symptoms [114]. Another found a significant correlation between DMHI usage and improvements in social anxiety outcomes, but only for a mobile version, not a computer-based version [115]. But most studies examining the relationship between continuous measures of engagement and improved clinical outcomes fail to find an association between DMHI use and improvements in outcomes [9, 12, 21, 22, 116,117,118]. In fact, in a recent study, Peiper et al. examined trajectories of change in depressive symptoms among users engaging with a therapist-guided DMHI and found that the treatment profile associated with the highest volume of engagement (the moderately severe profile) actually had the smallest effect size in terms of changes in depressive symptoms [119]. Similarly, a small pilot study comparing two different DMHIs found that the DMHI with the lower frequency of logins had a stronger impact on depression, although there was no relationship between DMHI use and improvement in depression overall [120]. Some of the discrepancies in this literature may be attributable to the fact that different DMHIs likely rely on different hypothesized mechanisms of action (e.g., Cognitive-Behavioral Therapy [CBT], explicit training of neurocognitive functions, such as emotion recognition or attention mechanisms), and the relationship between engagement and outcomes may differ based on the mechanism of action. Unfortunately, too few studies are available to fully understand how varying mechanisms of action may impact engagement.

Another potential explanation for these non-significant relationships is that the relationship between engagement and improvement in clinical outcomes is not linear. Conceivably, a certain engagement threshold needs to be met to obtain a therapeutic benefit, but incremental benefits beyond that threshold may be small or even diminish over time. That is, beyond this threshold, engaging with the DMHI may help to maintain gains, rather than contributing to further improvements, in which case the trajectory of change would plateau rather than continue linearly. Some studies have employed categorical approaches to evaluating the relation between engagement with a DMHI and outcomes to overcome challenges associated with a pattern of non-linearity. Indeed, studies that categorize users based on engagement (rather than treating engagement as a continuous variable) have reported significantly greater improvements among users with higher levels of engagement. For example, Inkster et al. found that ‘high users’ (defined as users who engaged with the Wysa app on the two screening days and at least one day in between) had significantly greater improvements in depressive symptoms compared to ‘low users’ (defined as users who engaged with the app only on the two screening days) [121]. Our research has also found that users who engage at an optimal level (completing an average of at least two activities per week) report significantly greater improvements in outcomes relative to users engaging below the optimal level [39, 122, 123]. These findings are consistent with the fact that many studies that failed to find a relationship between engagement and improvements in outcomes reported relatively high engagement rates [9, 12, 21, 22]. Consequently, most of their participants may have surpassed this ‘optimal usage’ threshold and obtained the intended benefits, thereby restricting the range of scores and attenuating the relationship between engagement and outcomes.
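The threshold and range-restriction argument can be illustrated with a small simulation. The sketch below uses entirely simulated data and is not a reanalysis of any cited study. It assumes a hypothetical dose-response curve in which improvement rises until two activities per week and then plateaus; the effect size, noise level, sample size, and engagement range are arbitrary illustrative choices.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


THRESHOLD = 2.0  # activities per week; assumed plateau point
rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Simulated weekly engagement and a plateauing improvement score plus noise.
engagement = [rng.uniform(0, 10) for _ in range(5000)]
improvement = [3.0 * min(e, THRESHOLD) + rng.gauss(0, 2.0) for e in engagement]

# Continuous analysis across the full range of engagement.
full_r = pearson(engagement, improvement)

# Continuous analysis restricted to users at or above the threshold.
above = [(e, y) for e, y in zip(engagement, improvement) if e >= THRESHOLD]
restricted_r = pearson([e for e, _ in above], [y for _, y in above])

# Categorical analysis: mean improvement above versus below the threshold.
below_scores = [y for e, y in zip(engagement, improvement) if e < THRESHOLD]
above_scores = [y for _, y in above]
mean_gap = sum(above_scores) / len(above_scores) - sum(below_scores) / len(below_scores)

print(f"r (all users):        {full_r:.2f}")
print(f"r (above threshold):  {restricted_r:.2f}")
print(f"mean gap (high-low):  {mean_gap:.2f}")
```

Under these assumptions, the correlation among users who have already passed the threshold is close to zero even though the categorical comparison shows a clear difference, which mirrors the pattern described above for samples with uniformly high engagement.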

Another potential explanation is that different users require different dosage levels to receive similar benefits (e.g., some users may require lower levels of engagement, whereas others may require a greater volume, or longer periods of engagement). For example, some researchers have recently criticized the classification of patients who drop out of traditional face-to-face therapy as retention failures, arguing that some patients may discontinue services because they have achieved the desired therapeutic benefit [124]. Indeed, in their analysis of cases of youth attending a community clinic, 17% of patients initially classified as retention failures were reclassified as recovered because they met criteria for reliable change.
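One way to operationalize this kind of reclassification is sketched below. The source does not state which reliable change criterion was applied in [124]; the sketch assumes the widely used Jacobson and Truax reliable change index, and the scale standard deviation, reliability, and scores are hypothetical values chosen for illustration.

```python
from math import sqrt


def reliable_change_index(pre: float, post: float, sd: float, reliability: float) -> float:
    """Jacobson-Truax reliable change index (an assumed criterion, see lead-in).

    sd is the standard deviation of the measure in a reference sample and
    reliability is its test-retest reliability (between 0 and 1).
    """
    standard_error = sd * sqrt(1.0 - reliability)
    s_diff = sqrt(2.0) * standard_error
    return (post - pre) / s_diff


def reliably_improved(pre: float, post: float, sd: float, reliability: float) -> bool:
    """True if a symptom score (lower is better) dropped by a reliable amount."""
    return reliable_change_index(pre, post, sd, reliability) <= -1.96


# Hypothetical dropouts: (pre-treatment score, last observed score).
dropouts = {"user_a": (18, 8), "user_b": (18, 16)}
for user, (pre, post) in dropouts.items():
    status = "recovered" if reliably_improved(pre, post, sd=5.0, reliability=0.85) else "retention failure"
    print(user, status)
```

In this illustration, the first hypothetical user would be reclassified as recovered, whereas the second would remain classified as a retention failure because the change in score is within measurement error.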

To our knowledge, this kind of approach has not been used for DMHIs, but it highlights the importance of considering a user’s level of response to the intervention in addition to their level of engagement. One could argue that different patterns of disengagement with DMHIs may be indicative of different reasons for discontinued usage. For instance, in their study, Dahne et al. found a notable drop in active users between Week 2 and 3 of their 8-week trial, followed by a smaller, but notable drop again between Weeks 7 and 8 [9]. Another study conducted by Pham et al. found steep decreases in DMHI usage between Weeks 1 and 2 and Weeks 3 and 4, but relative stability in the number of users between Weeks 2 and 3 [125]. Users who discontinue usage shortly after signing up likely have different reasons for disengaging compared to users who disengage after several weeks of use. Indeed, as we discussed earlier, users who experience improvements in mental health outcomes may subsequently have less need for the DMHI, resulting in less frequent engagement over time.

This highlights another potential misconception about engagement with DMHIs: that sustained engagement is ideal. Although some DMHIs are prescriptive and time-limited, others have been designed to be available as needed, and on a long-term basis. Sustained engagement may not be the goal with the latter; rather, episodic engagement may be expected. If users begin engaging with a DMHI due to a perceived need and then discontinue (or reduce) use of that DMHI after experiencing some meaningful improvement, they may re-engage with the DMHI later if the perceived need returns. This concept is similar to ‘booster sessions’ utilized in the context of CBT wherein individuals who have completed a course of treatment return at a later date for additional support, if necessary [126]. To date, research on patterns of engagement has not explored this kind of episodic engagement and likely does not follow users long enough to observe this type of pattern.

Similarly, Weingarden et al.’s research showing that participants exhibited comparable levels of improvement regardless of whether they had more frequent, but briefer, periods of engagement versus less frequent, but longer, periods of engagement with a DMHI [24] suggests that optimal engagement does not reflect a one size fits all concept. Moving beyond the assumption that sustained engagement is always the goal, to explore other potentially beneficial patterns of engagement—particularly among DMHI responders—is critical to better understanding how to promote optimal engagement and improve outcomes.

What is a minimally effective dose?

Along with the assumption that sustained engagement with DMHIs is always the goal, there is also often an implicit assumption that more frequent engagement is always ideal. For example, one review of mHealth apps designed for people with noncommunicable diseases found that 69% of the apps included in their review were intended for daily usage [36]. Although frequent and consistent usage can help with habit formation [127], this also sets the bar for adherence to such DMHIs exceptionally high. More importantly, as noted above, the frequency of engagement does not reliably predict improvements in targeted outcomes.

Given the high levels of dropout during the initial phases of engaging with DMHIs, an alternative approach may be to design interventions that deliver a minimally effective dose as quickly as possible, increasing the number of users who can achieve meaningful improvement in outcomes. Although they are less common, brief DMHIs, including single-session interventions, have been shown to be effective and present an additional approach to scaling mental health support [128,129,130,131]. In fact, studies have shown that individuals flagged as struggling with their mental health based on their activity on social media platforms can successfully be directed to complete a brief, one-minute intervention. Moreover, individuals who completed this brief intervention subsequently reported reduced feelings of hopelessness (at least in the short term) and were more likely to engage with other recommended resources [132]. Although much of the work on single-session DMHIs has been conducted among youth [128, 133, 134], they have also been shown to be acceptable and feasible among young adults [130, 132].

Brief interventions may not solve the issue of engagement, as completion rates for single-session interventions still show room for improvement [128, 133], particularly in real-world settings [15]. Despite being brief in nature, studies suggest that as many as 65% of people who start a single-session intervention fail to complete it [133]. However, because these interventions are effective [128,129,130,131], scalable [131] and cost-effective [135], they warrant substantially more attention, particularly with regard to their utility among adults as well as their appropriateness for integration into new and existing DMHIs.

Are levels of engagement worse in DMHIs than in other contexts?

A final important consideration about the criticisms of DMHIs for low engagement and retention is that the patterns of engagement associated with DMHIs are not unique. Previous evaluations of the use of mobile Android applications have demonstrated a similar pattern of use wherein a substantial decrease in daily use is observed by Day 3 (a reduction of 77%), on average, and even among the Top 10 applications, daily use is approximately 50% by Day 90 [136]. Notably, this pattern of declining use occurs at a similar rate regardless of the popularity of the mobile application.

Not only are DMHIs and their rates of engagement often discussed in the context of other mobile applications, but DMHI engagement is also often framed, either implicitly or explicitly, in terms of how it fares relative to more traditional approaches to mental health care (e.g., face-to-face psychotherapy, psychopharmacological interventions). We believe this approach is misguided for a variety of reasons. First, it assumes that for a DMHI to work as intended, it must be utilized at rates comparable to traditional face-to-face or pharmacological interventions. However, not all DMHIs are intended to supplant existing approaches to mental health treatment; in fact, regulatory bodies like the Food and Drug Administration stipulate that prescription digital therapeutics be utilized as an adjunctive treatment to other, more traditional forms of therapy [137]. Consequently, intended (and optimal) levels of engagement may be very different across the care modalities.

Furthermore, this approach implicitly conveys that engagement with traditional forms of mental health care is superior or ideal. Engagement with these traditional forms of mental health care is rarely reviewed and not well characterized throughout the literature. Comparisons of engagement rates across these modalities may also be inappropriate as they are measuring different behaviors. Engagement rates with DMHIs may be easier to directly quantify, even from the point of initial interest, as engagement behavior can be tracked on an ongoing basis once a user signs up. In contrast, estimates of psychiatric medication or therapy dropout/non-adherence tend to rely on more subjective approaches to quantifying adherence (e.g., patient self-report; clinician’s judgment), and these vary from study to study, and provider to provider. Notably, however, technology is increasingly being integrated into the monitoring of metrics like medication adherence (e.g., “smart” bottle caps; AI that detects facial expressions and motion) to better capture data regarding these indicators of adherence [138].

In particular, there is a critical difference between “initiating” interest in a traditional intervention modality (e.g., obtaining a prescription, attending a visit with a mental health provider) and in a DMHI (e.g., downloading or signing up for a DMHI). The latter takes substantially less effort and, consequently, a higher level of initial attrition for DMHIs may be expected due to this easier entry point. If similar levels of disengagement occur within traditional forms of mental health care (such as an individual identifying a potential mental health provider via an Internet search but never making an appointment), these are not typically measured. This further highlights the problem we raised earlier with conceptualizations of engagement that presume that downloading a DMHI indicates a likelihood to engage. Measurement of engagement with more traditional forms of mental health care inherently begins with a more committed patient compared to DMHIs, which contributes to the perception that engagement and retention are worse in DMHIs.

Pharmacotherapy

For pharmacotherapy, engagement refers to treatment compliance, which could include the non-fulfillment adherence described earlier (i.e., not filling a prescription) as well as non-persistence (i.e., discontinuing a medication without a practitioner’s guidance) and non-conformance (i.e., not taking the medication as prescribed) [139]. Non-fulfillment adherence is less commonly reported, though national polls suggest that approximately 19% of U.S. adults do not fill a prescription due to cost (the percentage of unfilled prescriptions may be even higher when considering other reasons) [140]. Among patients who initiate treatment, research suggests a wide range of compliance rates, much like those observed with DMHIs. One study found compliance rates ranging from 40% to 90%, with an average compliance rate of 65% [141]. Other studies show that approximately 50% of patients exhibit non-persistence by discontinuing antidepressants prematurely [142], and one review of psychiatric medication adherence reported non-adherence rates ranging between 28% and 57% for MDD and anxiety disorders [143].

Psychotherapy

Engagement with in-person psychotherapy relies on a different delivery modality as well as different mechanisms of action depending on the underlying theoretical orientation (e.g., CBT, Acceptance and Commitment Therapy [ACT]). As a result, engagement in this context might examine how many appointments were adhered to, whether or not a patient engaged with ‘homework’ or followed through with practice of other skills recommended, and/or evaluate whether a 10–12 session treatment program was completed [113]. Research suggests a substantial proportion of patients drop out of traditional psychotherapy before, or shortly after, initiating treatment. In a review of 115 studies on CBT, pretreatment dropout ranged from 11.4% to 21.66% for individuals with an anxiety or depressive disorder [144]. Research further suggests that among patients who successfully have their first psychotherapy visit, 34% will not return for a second visit within 45 days [145]. When evaluating attrition during treatment, rates range from 19.6% to 36.4% for anxiety and depressive disorders, and the overall attrition rate, including both pretreatment and posttreatment rates, is approximately 35% [144]. Similarly, therapists report that approximately one-third of their patients terminate therapy [113, 146].

Thus, while criticisms of DMHI engagement often implicitly assume that engagement with other forms of mental health care is superior, the research suggests that rates of adherence to both psychotherapy and pharmacotherapy are variable and likely comparable to DMHI engagement. This is particularly true once we account for differences in the meaning of initiating care across modalities: while the highest level of attrition in DMHIs occurs following download or signup, this information cannot be captured adequately for traditional forms of mental health care. What is more, there are likely common barriers and facilitators to intervention adherence across modalities, including cost, perceived stigma, and time constraints. Combined efforts to assess these barriers empirically would be advantageous for improving access to mental health care.

Conclusion

Nearly two decades after Eysenbach’s seminal article [1], DMHIs continue to be criticized for low engagement and retention rates. Unfortunately, as we have reviewed here, there are few clear predictors of DMHI adoption, or of engagement or retention, and consensus has not been reached with respect to operational definitions of terms such as engagement and adherence. What is more, broad or general criticisms that DMHIs suffer from lower engagement relative to more traditional forms of intervention (e.g., in-person therapy, medication), or to other mobile applications, do not appear to be well supported. Engagement and retention rates are variable across studies and DMHIs; although some do suffer from low engagement, others show exceptionally high engagement and retention rates.

We believe it is important to move beyond the simple criticism that DMHI engagement rates are low and move towards a more nuanced understanding of engagement. It is our belief that current discussions of DMHI engagement are driven by several misconceptions: (1) that more frequent engagement is ideal, (2) that sustained engagement is ideal, and (3) that discontinued usage is considered a retention failure. Future work—both empirical and applied—needs to challenge these assumptions. To that end, we make several recommendations for future DMHI research in order to improve our understanding of optimal engagement:

1. Adopt clearly defined, common definitions of ‘engagement’ and ‘adherence’. One of the primary limitations of research on adoption of and engagement with DMHIs is the lack of consistent findings, making it difficult to identify predictors of adoption or engagement. This is largely driven by the inconsistent definitions of engagement and adherence across studies, some of which even confound engagement with adoption. There should be careful consideration of how engagement and adherence are operationally defined, with a particular emphasis on multidimensional definitions of engagement. Adopting a common definition of these constructs would likely help reduce heterogeneity in research findings and ultimately help to clarify what factors may predict engagement and retention.

2. Explore different patterns of engagement rather than assume a ‘one size fits all’ approach. Emerging research exploring different patterns of DMHI usage, or clusters of DMHI users, has shed light on how people may use DMHIs differently. It is unlikely that one way of engaging with a DMHI will be effective for all users; therefore, identifying effective patterns of engagement, and whether some people will benefit from a specific pattern, will allow for more individualized instructions for use and potentially increase the proportion of users benefiting from the intervention. Moreover, some patterns may be indicative of users who are at risk of drop out and could be used to determine effective means of re-engaging those users as well.

3. Define ‘success’ as the degree of improvement, rather than DMHI engagement. Most research defines adherence, or the extent to which a user engages with a DMHI as intended, in terms of the amount of DMHI content accessed or the number of weeks a DMHI user is active, rather than by how much they improved. However, ‘dosage’ for most of these interventions is arbitrary and not based on data that support its effectiveness. Consequently, treating intervention responsiveness as the outcome, rather than intervention adherence, is an important avenue to identifying patterns of optimal engagement and minimally effective doses. Additionally, as described in (2) above, studying the different patterns of engagement that may result in improvement among different types of users is particularly important for understanding optimal dosage for a particular user and for helping to optimize intervention responsiveness.