Abstract

Background

Speech perception in cochlear implant (CI) users is affected by frequency resolution, exposure time, and working memory. Frequency discrimination is especially difficult with a CI. Working memory is important for speech and language development and is expected to contribute to the wide variability in CI speech reception and expression outcomes. The aim of this study was to evaluate CI patients’ discrimination of consonants that vary in voicing, manner, and place of articulation, features that impart differences in pitch, time, and intensity, and to evaluate working memory status and its possible effect on consonant discrimination.

Results

Fifty-five CI patients were included in this study. Their aided thresholds were ≤ 40 dBHL. Consonant speech discrimination was assessed using Arabic consonant discrimination words. Working memory was assessed using the Test of Memory and Learning-2 (TOMAL-2). Subjects were divided according to the onset of hearing loss into prelingual children and postlingual adults and teenagers. The consonant classes studied were fricatives, stops, nasals, and laterals. Performance on the high-frequency-weighted CVC words was 64.23% ± 17.41 for prelinguals and 61.70% ± 14.47 for postlinguals. These scores were significantly lower than scores on the phonetically balanced word list (PBWL): 79.94% ± 12.69 for prelinguals and 80.80% ± 11.36 for postlinguals. The lowest scores were for the fricatives. Working memory scores were strongly and positively correlated with speech discrimination scores.

Conclusions

Consonant discrimination using high frequency weighted words can provide a realistic tool for assessment of CI speech perception. Working memory skills showed a strong positive relationship with speech discrimination abilities in CI.


Background

Speech recognition is the ultimate goal of cochlear implantation. Auditory speech perception in CI users depends on fundamental acoustic and psychoacoustic phenomena, including adequate frequency and temporal resolution of the acoustic signal and pitch and temporal discrimination. Auditory learning also plays an essential role in speech perception and depends on environmental exposure and memory processes [1].

Cochlear implant patients vary in their ability to perceive speech [2]. Many factors contribute to the variability in CI outcomes, such as differences in auditory perceptual resolution of the acoustic signal and in cognitive and linguistic capabilities [3]. One such higher-level process is working memory (WM), the temporary storage mechanism for signal awareness, sensory perception, and retrieval of information from long-term memory. Speech requires WM to encode, store, and retrieve the phonological and lexical representations of words for speech perception and production [4].

CI patients continue to show deficits in basic auditory processes, such as temporal and amplitude discrimination, gap detection, and frequency discrimination. Frequency discrimination is essential for speech perception, especially in demanding listening conditions, for the identification and the localization of auditory signals and for the appreciation of music.

Many studies have shown poor frequency discrimination in CI users, which can be explained by poor pitch perception. The limited number of electrodes may prevent accurate representation of the harmonics of the acoustic signal. Spectral resolution is also limited by the spread and interaction of current between adjacent electrodes and by uneven neural survival along the length of the cochlea [5].

The major limitation to frequency resolution is that electrical stimulation has a restricted capacity to convey temporal fine structure (TFS) cues, which contribute to the processing of linguistic information and to speech perception, especially in noisy situations [6].

These limited frequency discrimination abilities of CI users, together with the ceiling effects reached by currently used speech testing materials [7], have created a growing need for more difficult tests that provide fine-grained information on the perception of consonants and vowels. The Arabic consonant speech discrimination test is expected to provide a realistic tool for assessing speech perception and analyzing phoneme perception [8].

The aims of this study were to evaluate behavioral speech discrimination in CI using consonant discrimination materials and to evaluate working memory in those patients and assess its possible effect on their consonant discrimination abilities.

Methods

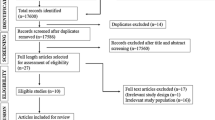

This study was conducted on 55 CI patients. They were divided according to the onset of hearing loss into two groups:

Group I: Prelinguals, comprising 35 children implanted before the age of 5 years who had been using their CI for at least 3 years. Their aided free field thresholds had to be ≤ 40 dBHL at 250, 500, 1000, 2000, and 4000 Hz. They had received audiological and phoniatric rehabilitation for at least 3 months. Their IQ levels were 80 or more on the Arabic version of the Stanford Binet test [9].

Group II: Postlinguals, comprising 20 CI patients with aided free field thresholds of ≤ 40 dBHL at 250, 500, 1000, 2000, and 4000 Hz. They had received audiological rehabilitation for at least 3 months, and their IQ levels were 80 or more on the Arabic version of the Stanford Binet test [9].

Medical ethics were considered, and the patients were informed that they would be part of a research study. Adult patients and children’s caregivers were asked to sign a written consent.

All tested patients were subjected to the following:

1. Complete history taking.
2. Measurement of aided free field thresholds at 250, 500, 1000, 2000, and 4000 Hz using a Madsen Itera audiometer in a sound-treated room.
3. Psychometry: intelligence quotient (IQ) using the Arabic version of the Stanford Binet test [9].
4. Speech recognition scores, as follows:

4.1. Phonetically balanced word lists. Each patient was tested with age-appropriate Arabic monosyllabic phonetically balanced words, using either the phonetically balanced word discrimination lists [10] or the phonetically balanced kindergarten word lists (PBKG) [11]. The words were presented through loudspeakers. Each test consists of 25 monosyllabic words, and the score is the number of correctly identified words expressed as percentage correct.

4.2. Arabic consonant discrimination lists. The original test consisted of 2 lists of high-frequency-weighted CVC words [12]. The consonant classes (fricatives, stops, nasals, and laterals) are represented in a sense word list of 120 words and a nonsense list of 156 words. All words of both lists were analyzed in the frequency domain and tested on patients with high-frequency hearing loss for validity and reliability.

A modified version of these lists was developed to shorten the test without changing how frequently each consonant and vowel is represented [8].

For the sensible word list, 24 words were chosen for fricatives, 18 for stops, 4 for nasals, and 4 for laterals, for a total of 50 words.

For the non-sensible word list, 26 words were chosen for fricatives, 18 for stops, 4 for nasals, and 4 for laterals, for a total of 52 words.

Although the original test includes both sensible and non-sensible syllables, only sensible syllables were used in this study. This choice was made because both prelingual and postlingual patients may have more difficulty with meaningless sounds, which could bias the error analysis.

The words were presented through loudspeakers at 40 dB SL, the level found to be most comfortable for all patients. Scores are the number of correctly identified phonemes and words, expressed as percentage correct.
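As a minimal sketch of how phoneme and word percentages can both be derived from the same responses, the Python below scores CVC items position by position. It assumes each item is transcribed as a tuple of three phoneme labels and that a word counts as correct only when all of its phonemes are correct; the function name, the scoring rule, and the example items are hypothetical and are not taken from the test lists.

```python
from typing import Sequence, Tuple

# One CVC item as (initial consonant, vowel, final consonant) phoneme labels.
CVC = Tuple[str, str, str]


def score_cvc(targets: Sequence[CVC], responses: Sequence[CVC]) -> Tuple[float, float]:
    """Return (phoneme percent correct, word percent correct) for one listener.

    Assumed scoring rule: a word is correct only when every phoneme matches.
    """
    if not targets or len(targets) != len(responses):
        raise ValueError("need at least one target and exactly one response per target")
    phonemes_correct = 0
    phonemes_total = 0
    words_correct = 0
    for target, response in zip(targets, responses):
        # Compare phonemes position by position within the item.
        hits = sum(t == r for t, r in zip(target, response))
        phonemes_correct += hits
        phonemes_total += len(target)
        if hits == len(target):
            words_correct += 1
    return (100.0 * phonemes_correct / phonemes_total,
            100.0 * words_correct / len(targets))
```

For four illustrative items where the listener misses one phoneme in each of two words, 10 of 12 phonemes (about 83.3%) but only 2 of 4 words (50%) are correct, which shows why phoneme scores are usually higher than word scores.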

Phonemic errors were categorized by consonant class and scored as follows:

Probability of error for each consonant class = \( \frac{\text{Total errors for that consonant class across subjects}}{\text{Total stimuli for that consonant class across subjects}} \times 100\% \)
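The formula above pools errors and stimuli across subjects before dividing. A minimal Python sketch of that calculation follows, assuming per-subject tallies for a single consonant class are available; the `ClassTally` type and the counts used below are hypothetical, not data from this study.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ClassTally:
    """Hypothetical per-subject tally for one consonant class."""
    errors: int   # stimuli of this class the subject misidentified
    stimuli: int  # stimuli of this class presented to the subject


def probability_of_error(tallies: Iterable[ClassTally]) -> float:
    """Pooled probability of error (%) for one consonant class across subjects."""
    tallies = list(tallies)
    if any(t.errors < 0 or t.errors > t.stimuli for t in tallies):
        raise ValueError("each tally needs 0 <= errors <= stimuli")
    total_errors = sum(t.errors for t in tallies)
    total_stimuli = sum(t.stimuli for t in tallies)
    if total_stimuli == 0:
        raise ValueError("no stimuli were presented for this class")
    return 100.0 * total_errors / total_stimuli
```

For three hypothetical listeners who each heard 24 fricative stimuli and missed 6, 8, and 4 of them, `probability_of_error([ClassTally(6, 24), ClassTally(8, 24), ClassTally(4, 24)])` gives 18/72, i.e. 25.0%. With equal numbers of stimuli per subject, the pooled value equals the mean of the per-subject percentages (25%, 33.3%, and 16.7% here); with unequal numbers, pooling weights each subject by the number of stimuli heard.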

Working memory assessment

Forty patients (20 prelinguals and 20 postlinguals) were assessed with items of the Test of Memory and Learning-2 (TOMAL-2) [13]. This test was designed to verify the presence of memory problems and to estimate their severity [14]. The original TOMAL-2 has been translated and modified to suit the Egyptian culture and environment and to overcome differences in language structure between English and Arabic [13].

Only the items testing auditory memory were used, as follows:

(1) Memory for stories (MFS), which requires verbal recall of a short story heard from the examiner. It provides a measure of meaningful recall.
(2) Word selective reminding (WSR), a verbal free-recall task in which the subject tries to repeat a word list presented verbally. It tests learning and immediate recall in verbal memory.
(3) Object recall (OR), in which the examinee is asked to recall a series of pictures presented to him/her.
(4) Paired recall (PR), a verbal paired-associate learning task in which the examinee is asked to recall a list of word pairs when given the first word of each pair.
(5) Digits forward (DF): DF measures low-level recall of a sequence of numbers.
(6) Digits backward (DB): the examinee recalls the numbers in reverse order.
(7) Letters forward (LF), a language-related analog of the digit span task using letters instead of numbers.
(8) Letters backward (LB), a language-related analog of the digits backward task using letters.
(9) Verbal delayed recall subtests: the patient is asked to recall the items presented in the MFS and WSR subtests after 30 min to assess learning and the decay of memory.

The following TOMAL-2 indices were calculated:

The verbal memory index (VMI), which assesses memory for information presented verbally and reproduced in a sequential manner. It is important in diagnosing learning disabilities with primary deficits in speech and language. This index comprises memory for stories, word selective reminding, object recall, and paired recall.

The associative recall index (ARI), which consists of memory for stories and paired recall.

The verbal delayed recall index (VDRI), which serves as a measure of “forgetting.” It assesses recall of verbally presented information (memory for stories and word selective reminding) after a delay.
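To make the composition of the three indices explicit, the sketch below encodes which subtests feed each index, as listed above, and sums a patient's subtest scaled scores. It is an illustration under stated assumptions: the abbreviations follow this paper (MFSD and WSRD for the delayed subtests), the helper name and scores are hypothetical, and, as is usual for standardized batteries, converting a sum of scaled scores to a standardized index is assumed to require the published TOMAL-2 norm tables, which are not reproduced here.

```python
from typing import Dict, Tuple

# Subtests contributing to each index, as described in the text.
INDEX_COMPONENTS: Dict[str, Tuple[str, ...]] = {
    "VMI": ("MFS", "WSR", "OR", "PR"),  # verbal memory index
    "ARI": ("MFS", "PR"),               # associative recall index
    "VDRI": ("MFSD", "WSRD"),           # verbal delayed recall index
}


def sum_of_scaled_scores(index: str, scaled: Dict[str, int]) -> int:
    """Sum the scaled scores of the subtests that make up `index`.

    Hypothetical helper: the sum would still need to be converted to a
    standard score with the test's norm tables (not included here).
    """
    components = INDEX_COMPONENTS[index]
    missing = [name for name in components if name not in scaled]
    if missing:
        raise KeyError(f"{index} requires scaled scores for: {', '.join(missing)}")
    return sum(scaled[name] for name in components)
```

Keeping the composition in one table makes it easy to see that MFS contributes to both the VMI and the ARI, so these two indices are not independent of each other.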

Statistical analysis of the data

Data were entered into a computer and analyzed using IBM SPSS software package version 20.0 (IBM Corp, Armonk, NY). Qualitative data were described using number and percent. Quantitative data were described using range (minimum and maximum), mean, and standard deviation. The chi-square test was used to compare categorical variables between the two groups, with Monte Carlo correction when more than 20% of the cells had an expected count of less than 5. Student’s t test was used to compare normally distributed quantitative variables between the two groups, and a paired t test was used to compare each group’s scores on the two word lists. Pearson’s coefficient was used to correlate two normally distributed quantitative variables. Significance of the obtained results was judged at the 5% level.
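The rule for switching to a Monte Carlo correction depends only on the expected cell counts under independence. The stdlib Python sketch below computes those expected counts and the Pearson chi-square statistic for a contingency table, and reports whether more than 20% of cells have an expected count below 5. The function names and the tables in the checks are hypothetical, and the Monte Carlo p-value itself (as computed by SPSS) is not reproduced.

```python
from typing import Sequence, Tuple


def chi_square_summary(table: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Return (Pearson chi-square statistic, fraction of cells with expected count < 5).

    Expected counts under independence:
    expected[i][j] = row_total[i] * column_total[j] / grand_total.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    if grand_total == 0 or 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    chi2 = 0.0
    small_cells = 0
    n_cells = 0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed - expected) ** 2 / expected
            if expected < 5:
                small_cells += 1
            n_cells += 1
    return chi2, small_cells / n_cells


def needs_monte_carlo(table: Sequence[Sequence[int]]) -> bool:
    """Hypothetical helper applying the '>20% of cells with expected count < 5' rule."""
    _, small_fraction = chi_square_summary(table)
    return small_fraction > 0.20
```

For a hypothetical 2 × 2 table such as `[[3, 2], [1, 4]]`, every expected count is below 5, so the asymptotic chi-square p-value would be replaced by a Monte Carlo estimate.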

Results

Demographic data of the studied cases (Table 1)

Age at implant, duration, and CI brand (Table 2)

Aided free field thresholds of studied cases (Fig. 1)

Speech discrimination scores (Tables 3, 4)

Figure 2 shows that, among all consonant classes tested with the modified Arabic discrimination lists, fricatives were the most affected, with a probability of error of 25.14 ± 13.11% for prelinguals and 32.43 ± 12.02% for postlinguals.

Working memory scores

Forty patients (20 prelinguals and 20 postlinguals) were assessed with the TOMAL-2 items that evaluate auditory memory. Figure 3 shows the performance of all prelingual and postlingual CI patients on memory for stories (MFS), word selective reminding (WSR), object recall (OR), paired recall (PR), digits forward (DF), letters forward (LF), digits backward (DB), letters backward (LB), memory for stories delayed (MFSD), and word selective reminding delayed (WSRD). Table 5 compares prelingual and postlingual working memory item scores. Scores were higher in postlinguals, although the difference reached significance only for digits forward, digits backward, and letters backward.
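The group comparison above relies on Student’s t test for independent samples. A minimal stdlib Python sketch of the pooled-variance t statistic and its degrees of freedom follows; the function name and the score lists in the checks are hypothetical, not the Table 5 data, and the p-value would be read from the t distribution with n1 + n2 - 2 degrees of freedom (for example via SPSS or SciPy’s `scipy.stats.ttest_ind`).

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence, Tuple


def student_t(group_a: Sequence[float], group_b: Sequence[float]) -> Tuple[float, int]:
    """Pooled-variance (Student) t statistic for two independent samples.

    Returns (t, degrees of freedom). Like Student's t test, it assumes
    approximately normal data with equal variances in both groups.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # statistics.variance uses the n - 1 (sample) denominator.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("both groups are constant; t is undefined")
    return (mean(group_a) - mean(group_b)) / standard_error, df
```

A positive t here means the first group scored higher on average; with the 20 prelinguals and 20 postlinguals tested on TOMAL-2, the degrees of freedom would be 38.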

Correlation between speech discrimination scores and working memory indices scores (Tables 6, 7, Figs. 4, 5)

The indices used were the verbal memory index (VMI), verbal delayed recall index (VDRI), and associative recall index (ARI). These indices were correlated with scores on the phonetically balanced word list (PBWL), and the results are shown in Table 6 and Fig. 4. The indices were also correlated with scores on the high-frequency discrimination list, and the results are shown in Table 7 and Fig. 5. These results show a positive correlation between working memory indices and speech perception scores on both lists. The correlation was much stronger with the high-frequency list than with the PBWL.
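The correlations above are Pearson coefficients between index scores and percent-correct scores. The minimal stdlib sketch below computes r together with the usual test statistic t = r·sqrt(n - 2)/sqrt(1 - r²) on n - 2 degrees of freedom, which is what a 5% significance judgment is based on. The function name and the paired values in the checks are hypothetical, not the data behind Tables 6 and 7; SciPy’s `scipy.stats.pearsonr` would also return the p-value.

```python
from math import copysign, inf, sqrt
from statistics import mean
from typing import Sequence, Tuple


def pearson_with_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, int]:
    """Return (r, t, df) for Pearson's correlation between paired samples x and y.

    t = r * sqrt(n - 2) / sqrt(1 - r**2) with df = n - 2; the p-value is read
    from the t distribution (not computed here).
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need paired samples with at least three observations")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    r = sxy / sqrt(sxx * syy)
    df = n - 2
    if abs(r) >= 1.0:
        # Perfect correlation: the t statistic diverges.
        return r, copysign(inf, r), df
    return r, r * sqrt(df) / sqrt(1 - r * r), df
```

Because t grows with both r and n, a moderate r can be significant in a larger sample while a stronger r in a small subgroup may not be.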

Discussion

Cochlear implants have enabled satisfactory progress in speech perception for children and adults with severe to profound hearing loss. However, speech perception and language development show considerable variability among CI users.

The goal of this study was to assess the consonant discrimination ability of CI users and to assess whether working memory recall abilities affect consonant discrimination performance.

The present results showed that PBWL speech discrimination scores in patients with CI are lower than those of normal hearing (NH) individuals, with wide variability, despite aided thresholds of 40 dB HL or better. The PBWL scores agree with those of Turgeon et al., who reported monosyllabic phonetically balanced word scores in adult CI users ranging from very poor (even zero) to excellent (92% correct), with a mean of 54% and a wide standard deviation of 33 [15]. Similarly, Zhang et al. found that consonant-nucleus-consonant (CNC) word scores varied across CI patients, with a mean ± standard deviation of 58.04% ± 28.66 [16].

A paired t test was used to compare the performance of pre- and postlingual CI patients on the age-appropriate phonetically balanced word lists and on the modified Arabic consonant discrimination lists. Scores on the high-frequency-weighted materials were significantly lower than those on the PBWL, and the most affected consonant class was the fricatives. Fricatives have a high-frequency spectrum, especially the voiceless ones, whose noise segment is wider than that of voiced fricatives [17]. The noise segment begins at 2000 Hz for /ς/ and below 3000 Hz for /s/, then extends upward to 10,000 Hz. The friction noise for /ɵ/ is very weak across the spectrum and begins at 4000 Hz. For /f/, the friction noise begins at 6000–8000 Hz. The energy of the voiced fricatives /ħ/, /x/, /δ/, /z/, /ε/, and /ʁ/ appears as formant-like bands that are continuous with those of the following vowel. The noise source of /ħ/ has a cutoff frequency of 2000 Hz, that of /z/ 4000 Hz, and that of /δ/ 6000 Hz [18].
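The paired comparison above contrasts each patient’s PBWL score with the same patient’s score on the high-frequency-weighted list. A minimal stdlib Python sketch of the paired t statistic (mean within-patient difference divided by its standard error, on n - 1 degrees of freedom) follows; the function name and the score pairs in the checks are hypothetical, and SciPy’s `scipy.stats.ttest_rel` performs the same test with a p-value.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(first: Sequence[float], second: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic for two scores measured on the same patients.

    Returns (t, degrees of freedom), where t = mean(d) / (sd(d) / sqrt(n))
    and d are the within-patient differences first - second.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    diffs = [a - b for a, b in zip(first, second)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean(diffs) / (sd / sqrt(len(diffs))), len(diffs) - 1
```

Working on within-patient differences removes between-patient variability, which is why a paired test suits the comparison of two lists given to the same listeners.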

Stieler et al. assessed the perception of the 5 Ling phonemes (aa, uu, ii, ss, and sh) in children with CI. The tested children had difficulty differentiating phonemes based on high-frequency fricatives (ss–sh), even with normal free-field thresholds (20–30 dB SPL; 0.25–6 kHz) [19].

Peng et al. found that children with bilateral CIs were able to discriminate consonant contrasts using fine-grained spectral-temporal cues above chance level, but more poorly than their NH peers. Although the electrodogram outputs suggest that CIs provide some access to the spectral cues that distinguish consonants, these cues were likely too coarse to be salient enough for NH-like performance [20].

The temporal information of the speech signal can be decomposed into envelope (2–50 Hz), periodicity (50–500 Hz), and temporal fine structure (500–10,000 Hz). The envelope consists of the slow variations in the speech signal. Periodicity corresponds to the vibrations of the vocal cords, which convey fundamental frequency (F0) information. Temporal fine structure (TFS) consists of the fast fluctuations in the signal and contributes to pitch perception, sound localization, and binaural segregation of sound sources. All stimulation strategies represent high-frequency sounds only by place coding. The stimulation rate of each implant is constant, between 500 and 3500 pulses/s. Low-frequency sounds are represented by both temporal and place coding [21].
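The three temporal categories above can be expressed as a simple lookup on the rate of fluctuation. The minimal sketch below uses the band edges quoted in the text; because the text gives shared edges at 50 Hz and 500 Hz, it assumes half-open bands that assign each shared edge to the higher band, and the function name is hypothetical.

```python
from typing import Tuple

# (name, lower edge Hz, upper edge Hz), using the ranges quoted in the text.
# Assumption: each band is half-open [lower, upper), so the shared edges at
# 50 Hz and 500 Hz go to the higher band; 10,000 Hz itself is kept in TFS.
TEMPORAL_BANDS: Tuple[Tuple[str, float, float], ...] = (
    ("envelope", 2.0, 50.0),
    ("periodicity", 50.0, 500.0),
    ("temporal fine structure", 500.0, 10_000.0),
)


def temporal_category(rate_hz: float) -> str:
    """Name the temporal category for a fluctuation rate in Hz (hypothetical helper)."""
    for name, lower, upper in TEMPORAL_BANDS:
        if lower <= rate_hz < upper:
            return name
    if rate_hz == TEMPORAL_BANDS[-1][2]:
        return TEMPORAL_BANDS[-1][0]
    raise ValueError(f"{rate_hz} Hz is outside the 2-10,000 Hz range described")
```

For instance, a voice F0 of about 120 Hz falls in the periodicity band, while fluctuations at 2000 Hz fall in the TFS band, which, as noted in the Background, electrical stimulation conveys poorly.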

Poorer consonant discrimination in CI users is attributed to difficulty in recognizing pitch differences compared with NH listeners, which also leads to difficulties in music appreciation, understanding speech in noise, and understanding tonal languages. This may be due to the lack of sharp frequency tuning in electric hearing and the limited ability of CI users to discriminate the fundamental frequency (F0) of complex sounds [22].

Among the most important cues for higher-level speech perception are voice characteristics, namely F0 and vocal tract length (VTL). VTL perception is severely impaired in CI users due to channel interactions and smeared spectral resolution [23]. This reduced resolution may also affect central processing and memory storage.

Assessment of working memory using the TOMAL-2 revealed that most patients in both groups fell into the deficient and very deficient categories, indicating that WM in these CI patients was poorer than normal. This may be attributed to the deficient auditory sensory input, which disrupts the accurate encoding of verbal information needed for phonological processing and memory [24]. One assumption is that WM processes poorly defined pitch contrasts during its short operating window, and these contrasts remain poorly defined in final storage and in later recall over longer time scales.

Davidson et al. compared WM in NH children and children with CI. Children with CIs scored significantly lower on simple and complex verbal WM tasks than their NH age mates, and these verbal WM deficits persisted even with good audibility. Children with CIs have deficits in WM related to storing and processing verbal information, and these deficits extend to receptive vocabulary and verbal reasoning [24]. In both prelingually and postlingually deaf individuals, auditory deprivation occurs after a period without sensory input. This process entails degeneration of the auditory system, both peripherally and centrally, including degradation of spiral ganglion neurons [25]. Auditory deprivation during critical developmental phases leads to atypical development of executive functions [26].

On comparison of memory scores between prelinguals and postlinguals, scores were higher in postlinguals, although the difference reached significance only for digits forward, digits backward, and letters backward. In postlingually deaf adults, the neural pathways in the brain have been shaped by acoustic sound perception before the onset of deafness. The degree of success with a CI depends on how the brain compares the new signal with what was heard previously [27].

Moberly et al. found that adults with CIs performed on par with NH peers on measures of verbal WM that did not explicitly tax phonological skills (forward and backward digit span). However, on a WM task that placed greater demands on phonological capacities (serial recall of words), CI users were less accurate, suggesting that poor phonological sensitivity accounted for the difference in performance. Thus, these deficits can be attributed to a problem in storage, not processing [28].

Functional neuroimaging studies show that in adults, a network of prefrontal, parietal, and anterior cingulate regions is activated in WM contexts and that activation in these regions increases with increasing WM load [29]. Many studies report less activation in these regions in children than in adults, with activation increasing with age and mirroring improvements in behavioral performance [30]. In contrast, other studies report more diffuse patterns of activation in children during WM tasks, suggesting that neural activation becomes progressively more focused with development [31].

We found a significant positive correlation between the WM indices (verbal memory index, associative recall index, and verbal delayed recall index) and speech perception scores on both lists. The correlation was much stronger with the high-frequency list than with the PBWL. Talebi et al. assessed memory performance, speech perception, and speech production in children with CIs. They found positive and significant relations between memory scores and auditory perception and between memory and speech intelligibility. These results emphasize that memory performance in children with CIs has a significant effect on their speech production [32].

Speech perception and language processing are dependent on fast and efficient phonological coding of auditory input in verbal short-term memory, i.e., stable phonological representations. Thus, verbal short-term memory operates as a linkage between auditory speech input and the stored language knowledge in the long-term memory [33].

The degraded auditory input of children with CIs results in underspecified phonological representations in their verbal short-term memory. Because phonological WM is important for rapidly encoding and processing the degraded, underspecified speech signals transmitted to the auditory nerve by a CI, this in turn would affect speech perception, verbal and visuospatial reasoning abilities, and ultimately language and academic performance [34].

Tao et al. found significant correlations between disyllable recognition and digit span scores in adult CI users. WM performance was significantly poorer for CI than for NH participants, suggesting that CI users experience limited WM capacity and efficiency [35]. In postlingual CI users, prolonged hearing loss can lead to degeneration of long-term phonological representations. Signal degradation impedes the ability of listeners to recover phonological representations, even when those representations remain intact internally [28].

Conclusions

The variability in CI users’ outcomes is reflected in a wide range of performance on speech perception tasks. CI patients still lag behind normal hearing peers in speech recognition, especially in consonant discrimination. Consonant discrimination testing with high-frequency-weighted words can provide a realistic tool for assessing speech perception in CI users.

Working memory skills appear to be impaired in CI users because of periods of auditory deprivation and the relatively degraded auditory signal delivered by the implant. Differences in WM seem to contribute to the large individual differences in the spoken language outcomes of adult and pediatric CI users.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- ARI: Associative recall index
- CI: Cochlear implants
- CNC: Consonant-nucleus-consonant
- CVC: Consonant-vowel-consonant
- DB: Digits backward
- dBHL: Decibel hearing level
- DF: Digits forward
- F0: Fundamental frequency
- Hz: Hertz
- IQ: Intelligence quotient
- LB: Letters backward
- LF: Letters forward
- MFS: Memory for stories
- MFSD: Memory for stories delayed
- NH: Normal hearing
- OR: Object recall
- PBKG: Phonetically balanced kindergarten word lists
- PBWL: Phonetically balanced word list
- PR: Paired recall
- TFS: Temporal fine structure
- TOMAL-2: Test of Memory and Learning-2
- VDRI: Verbal delayed recall index
- VMI: Verbal memory index
- VTL: Vocal tract length
- WM: Working memory
- WSR: Word selective reminding
- WSRD: Word selective reminding delayed

References

Kirk KI, Hudgins M (2016) Speech perception and spoken word recognition in children with cochlear implants. In: Young NM, Kirk KI (eds) Pediatric Cochlear Implantation. Springer, United States, pp 145–161

Xu L, Chen X, Lu H, Zhou N, Wang S, Liu Q et al (2011) Tone perception and production in pediatric cochlear implants users. Acta Otolaryngol 131(4):395–398

Forli F, Arslan E, Bellelli S, Burdo S, Mancini P, Martini A et al (2011) Systematic review of the literature on the clinical effectiveness of the cochlear implant procedure in paediatric patients. Acta Otorhinolaryngol Ital 31(5):281–298

Baddeley A (2012) Working memory: theories, models, and controversies. Annu Rev Psychol 63:1–29. https://doi.org/10.1146/annurev-psych-120710-100422

Fu Q-J, Chinchilla S, Galvin JJ (2004) The role of spectral and temporal cues in voice gender discrimination by normal-hearing listeners and cochlear implant users. J Assoc Res Otolaryngol 5(3):253–260. https://doi.org/10.1007/s10162-004-4046-1

D’Alessandro HD, Mancini P (2019) Perception of lexical stress cued by low-frequency pitch and insights into speech perception in noise for cochlear implant users and normal hearing adults. Eur Arch Otorhinolaryngol 276(10):2673–2680

Blamey P, Artieres F, Başkent D, Bergeron F, Beynon A, Burke E et al (2013) Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants: an update with 2251 patients. Audiol Neurootol 18(1):36–47

El Ghazaly M, Talaat M, Mourad M (2014) Evaluation of speech perception in patients with ski slope hearing loss using Arabic consonant speech discrimination lists. Adv Arab Acad Audio-Vestibul J 1(1):32–37. https://doi.org/10.4103/2314-8667.137563

Melika L (1998) Stanford Binet Intelligence Scale (4th Arabic version), 2nd edn. Victor Kiorlos Publishing, Cairo

Soliman S (1976) Speech discrimination audiometry using Arabic phonetically-balanced words. Ain Shams Med J 27:27–30

Soliman S, EL-Mahallawy T (1984) Simple speech test material as a predictor for speech reception threshold (SRT) in preschool children. Master Dissertation: Audiology Unit, Ain Shams, Egypt

Khairy G (2000) Arabic consonants discrimination test for high frequency hearing loss listeners. Master Dissertation: Faculty of Medicine: University of Alexandria

Hanafy M (2015) Application of the adapted learning and memory test for evaluating the Egyptian children having memory problems. Master Dissertation, Egypt: Faculty of Medicine, University of Alexandria

Reynolds CR, Voress JK (2007) Test of memory and learning, 2nd edn. PRO-ED, Austin, TX

Turgeon C, Champoux F, Lepore F, Ellemberg D (2015) Deficits in auditory frequency discrimination and speech recognition in cochlear implant users. Cochlear Implants Int 16(2):88–94

Zhang F, Underwood G, McGuire K, Liang C, Moore DR, Fu Q-J (2019) Frequency change detection and speech perception in cochlear implant users. Hear Res 379:12–20

Plack C (2005) A journey through the auditory system. In: Wilkinson E (ed) The sense of hearing. Lawrence Erlbaum Associates, New Jersey, pp 9–35

Moore BC (2007) Cochlear hearing loss: physiological, psychological and technical issues. John Wiley & Sons

Stieler O, Sekula A, Karlik M (2011) Audiologic-acoustic evaluation of speech perception in children with cochlear implants. Acta Physica Polonica A 119(6A):1077–1080

Peng ZE, Hess C, Saffran JR, Edwards JR, Litovsky RY (2019) Assessing fine-grained speech discrimination in young children with bilateral cochlear implants. Otol Neurotol 40(3):e191–e197

Wouters J, McDermott HJ, Francart T (2015) Sound coding in cochlear implants: from electric pulses to hearing. IEEE Signal Proc Mag 32(2):67–80

Carroll J, Zeng F-G (2007) Fundamental frequency discrimination and speech perception in noise in cochlear implant simulations. Hear Res 231(1-2):42–53

Gaudrain E, Başkent D (2015) Discrimination of vocal characteristics in cochlear implants. Proceedings of the 38th Annual Mid-winter Meeting of the Association for Research in Otolaryngology, Baltimore, MD

Davidson LS, Geers AE, Hale S, Sommers MM, Brenner C, Spehar B (2019) Effects of early auditory deprivation on working memory and reasoning abilities in verbal and visuospatial domains for pediatric cochlear implant recipients. Ear Hear 40(3):517–528

Feng G, Ingvalson EM, Grieco-Calub TM, Roberts MY, Ryan ME, Birmingham P et al (2018) Neural preservation underlies speech improvement from auditory deprivation in young cochlear implant recipients. Proc Natl Acad Sci U S A 115(5):E1022–E1031

Kronenberger WG, Beer J, Castellanos I, Pisoni DB, Miyamoto RT (2014) Neurocognitive risk in children with cochlear implants. JAMA Otolaryngol Head Neck Surg 140(7):608–615

Rødvik AK, Tvete O, Torkildsen JK, Wie OB, Skaug I, Silvola JT (2019) Consonant and vowel confusions in well-performing children and adolescents with cochlear implants, measured by a nonsense syllable repetition test. Front Physiol 10:1813

Moberly AC, Harris MS, Boyce L, Nittrouer S (2017) Speech recognition in adults with cochlear implants: the effects of working memory, phonological sensitivity, and aging. J Speech Lang Hear Res 60(4):1046–1061

D’Ardenne K, Eshel N, Luka J, Lenartowicz A, Nystrom LE, Cohen JD (2012) Role of prefrontal cortex and the midbrain dopamine system in working memory updating. Proc Natl Acad Sci U S A 109(49):19900–19909

Kharitonova M, Winter W, Sheridan MA (2015) As working memory grows: a developmental account of neural bases of working memory capacity in 5- to 8-year-old children and adults. J Cogn Neurosci 27(9):1775–1788

Finn AS, Sheridan MA, Kam CLH, Hinshaw S, D’Esposito M (2010) Longitudinal evidence for functional specialization of the neural circuit supporting working memory in the human brain. J Neurosci 30(33):11062–11067

Talebi S, Arjmandnia AA (2016) Relationship between working memory, auditory perception and speech intelligibility in cochlear implanted children of elementary school. Iran Rehabil J 14(1):35–42

de Hoog BE, Langereis MC, van Weerdenburg M, Keuning J, Knoors H, Verhoeven L (2016) Auditory and verbal memory predictors of spoken language skills in children with cochlear implants. Res Dev Disabil 57:112–124

Nittrouer S, Caldwell-Tarr A, Lowenstein JH (2013) Working memory in children with cochlear implants: problems are in storage, not processing. Int J Pediatr Otorhinolaryngol 77(11):1886–1898

Tao D, Deng R, Jiang Y, Galvin JJ III, Fu Q-J, Chen B (2014) Contribution of auditory working memory to speech understanding in Mandarin-speaking cochlear implant users. PLoS One 9(6):e99096

Acknowledgements

Not applicable.

Funding

None.

Author information


Contributions

ME analyzed and interpreted the patient data regarding speech discrimination scores and was a major contributor to writing the manuscript. MM contributed to the concept of the study and was a major contributor to editing the manuscript. NH collected the patient data regarding working memory test scores and helped analyze and interpret those data. MT contributed to cochlear implant data collection and to manuscript preparation. All authors have read and approved the manuscript, confirm that the requirements for authorship have been met, and believe that the manuscript represents honest work.


Ethics declarations

Ethics approval and consent to participate

Medical ethics were considered, and the patients were informed that they would be part of a research study; adult patients and children’s caregivers were asked to sign a written consent.

The study was approved by the ethics committee of the Faculty of Medicine, Alexandria University (IRB NO: 00007555-FWA NO: 00018699).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

El Ghazaly, M.M., Mourad, M.I., Hamouda, N.H. et al. Evaluation of working memory in relation to cochlear implant consonant speech discrimination. Egypt J Otolaryngol 37, 24 (2021). https://doi.org/10.1186/s43163-021-00078-w
