Abstract

Background

The Marshall design process is the most common method for estimating the optimum asphalt content (OAC), a process known as asphalt mix design. However, this method is time-consuming and labor-intensive, and its results are subject to variation.

Results

This paper employs artificial neural networks (ANNs) to estimate the Marshall test parameters (OAC, stability, flow, density, air voids, and voids in mineral aggregate) using the aggregate gradation as the input of the prediction process. Multiple ANNs are tested to optimize the network hyperparameters and produce accurate predictions: different activation functions, numbers of hidden layers, and numbers of neurons per hidden layer are tested, and heatmaps are generated to compare the performance of every ANN. Results show that the optimum ANN hyperparameters change depending on the predicted parameter. Finally, the deep ANN predicts the OAC, stability, flow, density, air voids, and voids in mineral aggregate with R values of 0.91, 0.80, 0.53, 0.65, 0.77, and 0.66, respectively.

Conclusion

The linear activation function is the most efficient activation function and generates more accurate results than the logistic and hyperbolic tangent functions. Additionally, the deep neural network approach is shown to be an innovative tool for predicting asphalt mix properties, as its results outperform those of the shallow ANN with a single hidden layer, which is the only approach used in previous studies. Thus, a deep ANN can be useful during the asphalt mix design phase because of its ability to predict the mix variables with high accuracy. For example, the ANN with 3 hidden layers, 16 neurons per layer, and the linear activation function predicts the OAC with high accuracy (R = 0.91), so it can be employed to estimate the OAC of the asphalt mix during the design process.

1 Background

Asphalt concrete was originally developed to carry the high pressures and heavy loads generated by aircraft [1]. In general, a pavement consists of multiple layers that transfer heavy loads to the soil without causing soil failure [2]. Asphalt pavement design consists of two main processes. The first is thickness design, which estimates the thickness of each pavement layer required to transfer traffic loads safely to the soil. The second, which is the main focus of this paper, is asphalt mix design, which estimates the optimum aggregate and bitumen characteristics of the mix [3,4,5,6,7,8]. The asphalt mix design process is usually carried out through laboratory tests with the goal of estimating the optimum asphalt content (OAC) of the mix [9], as the OAC has a significant influence on the final performance of the mix [10]. A low asphalt content leads to a harsh mix with durability issues, while a high asphalt content leads to rutting, flushing, and insufficient air voids [11].

The Marshall mix design method was first proposed in 1939 by Bruce Marshall of the Mississippi State Highway Department [1]. The Marshall test was widely adopted in 1948, with slight modifications from country to country [9], and it remains the most common way to estimate the optimum asphalt content [12, 13]. The procedure requires preparing at least fifteen samples at five different asphalt contents (three samples for each), drawing the design curves [14, 15], and estimating the OAC that satisfies predefined criteria based on maximum stability, maximum density (unit weight), a predefined air voids percentage, and a minimum value of voids in mineral aggregate [9]. The OAC is then estimated as the average of the asphalt contents that correspond to the maximum stability, the maximum unit weight, and the prespecified air voids percentage [14, 15]. Because it relies on averaging several different asphalt contents, the estimated OAC is subject to significant deviations. Additionally, the Marshall design method requires significant time for sample preparation and testing [14, 16]. Consequently, a large number of studies have focused on developing time-saving alternatives to the Marshall test.

Asphalt concrete (AC) is a composite material that consists of aggregate and bitumen. Aggregate makes up a high proportion of the volume and mass of the mixture (around 95% of the mix weight), so it is considered the most important constituent of asphalt concrete [17], and the characteristics of the asphalt mix depend mainly on the aggregate used and its gradation [18]. The aggregate properties are therefore expected to have a major impact on the mixture properties. Aggregate gradation is the distribution of particle sizes expressed as a percentage of the total weight [18, 19]. It is considered the central property of the aggregate and needs careful consideration because of its effect on the properties and performance of hot mix asphalt (HMA), including air voids, stability, stiffness, durability, permeability, workability, fatigue resistance, frictional resistance, and resistance to moisture damage [18, 20], as well as the rutting resistance of asphalt concrete under traffic and environmental loads [21]. Gradation is therefore a key parameter in mix design. For example, changing the aggregate gradation changes the required asphalt content, because the asphalt coats the aggregate surface and fills the voids between the aggregate particles [16]. Moreover, the main sources of mix strength (stability) are the friction and interlocking resistance between the aggregate particles and the consistency of the bitumen used [14, 17].
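The ANN input in this study is the aggregate gradation, which the abbreviation list expresses as %P(i), the percentage passing sieve i. A gradation of this kind can be computed from a sieve analysis as sketched below. This is a minimal Python illustration, assuming a dry sieve analysis reported as the mass retained on each sieve plus the mass in the pan; the sieve sizes and masses are hypothetical and are not data from this study.

```python
# Minimal sketch: cumulative percentage passing each sieve from a sieve analysis.
# Sieve sizes and retained masses below are hypothetical, for illustration only.
SIEVES_MM = [25.0, 19.0, 9.5, 4.75, 2.36, 0.3, 0.075]


def percent_passing(retained_g: list[float], pan_g: float) -> list[float]:
    """Return %P(i) for each sieve, ordered from coarsest to finest.

    retained_g[i] is the mass (g) retained on sieve i; pan_g is the mass in the pan.
    """
    total = sum(retained_g) + pan_g
    passing = []
    cumulative_retained = 0.0
    for mass in retained_g:
        cumulative_retained += mass
        passing.append(100.0 * (total - cumulative_retained) / total)
    return passing


retained = [0.0, 120.0, 300.0, 250.0, 150.0, 100.0, 40.0]
gradation = percent_passing(retained, pan_g=40.0)
for size, p in zip(SIEVES_MM, gradation):
    print(f"{size:>6} mm: {p:5.1f} % passing")
```

The resulting vector, one value per sieve, is the form of input a gradation-based ANN receives; the actual sieve set is defined by the 3D and 4C specifications and is not reproduced here.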

Therefore, the main objective of this paper is to test the effectiveness of ANNs for the accurate and fast prediction of asphalt mix properties (mechanical and volumetric) in order to enhance the laboratory mix design process. The ANNs developed in this study were trained and tested on laboratory data of HMA mixes with two aggregate gradations. The tests were carried out in the Highway and Airport Engineering Research Laboratory, Cairo University, Egypt, for the most common aggregate gradations (3D and 4C) and bitumen grade (60/70) used in Egypt.

Over the past decades, ANNs have been applied to many prediction problems, including in pavement engineering. Kaseko and Ritchie (1993) were among the first to employ an ANN, for the detection of pavement cracks [22]. Gagarin, Flood, and Albrecht (1994) then employed an ANN to estimate truck attributes from strain response readings on bridges [23]. In 1995, Cal employed an ANN that takes the plasticity index, water content, and liquid limit as inputs to predict the soil classification [24]. In 1998, Roberts and Attoh-Okine employed an ANN to predict pavement condition [25]. Similarly, Attoh-Okine (2001) proposed an ANN that uses the pavement characteristics and the surrounding conditions, such as weather, age, and traffic, to predict pavement condition [26]. In 2010, Tapkın et al. proposed an ANN for estimating Marshall test results for dense asphalt mixes modified with different types and percentages of polypropylene [9]. In 2014, Khuntia et al. predicted Marshall test results for polyethylene-modified mixes using two techniques, ANNs and support vector machines [27]. In 2016, Ozturk et al. proposed an ANN for predicting the volumetric properties of the asphalt mix [28]. In 2017, Ivica and Ivan proposed an ANN for predicting the air voids and asphalt content of asphalt mixes in Croatia [29]. In 2018, Baldo et al. used ANNs to predict the mechanical properties (stability and flow) of asphalt mixes [16]. In 2019, Nguyen et al. used artificial intelligence techniques to predict the characteristics of stone matrix asphalt [30].

Since 2006, when Hinton proposed several techniques for deep learning structures, deep networks with more than three layers have been trained with widespread success. An artificial neural network (ANN) is a learning algorithm inspired by the human nervous system. An ANN consists of multiple nodes, called neurons, that communicate through weighted connections (synapses). Typically, there are three types of layers, each playing a distinct role: the input layer receives the input information, the output layer yields the output signals, and the intermediate (hidden) layer receives signals from the input layer and processes the information before passing results to the output layer. An ANN model can have multiple hidden layers [31]. In general, ANNs can be defined as parallel distributed processors that can store learned knowledge and use it later. The fundamental unit of any ANN is the artificial neuron, which processes its input signals and modulates its response through an activation function, sometimes called the transfer function. The activation function determines whether the outgoing impulse is blocked or transmitted. Each neuron computes a weighted sum of the elements of the input vector (X) using the weights associated with its connections (W) [32]. The neuron output is then obtained by applying the assigned transfer function f to the weighted sum:

$$y = f\left(\sum_{i=1}^{n} {W}_{i}{X}_{i}\right)$$

There are many types of neural networks, such as feedforward and feedback networks, and many training paradigms depending on the data, such as supervised and unsupervised learning. In this study, supervised learning is employed for the proposed ANN. Backpropagation is the most common algorithm for training ANNs, so it is used to train the proposed ANN in this paper. Additionally, multiple ANN architectures are tested to optimize the ANN design.
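The weighted-sum neuron and backpropagation training described above can be sketched in plain Python. This is an illustrative example, not the author's implementation: the paper does not state the loss function, optimizer, learning rate, or software used, so the sketch assumes a squared-error loss, plain per-sample gradient descent, tanh hidden units, a linear output neuron, a bias term in each neuron (which the text does not mention), and a synthetic toy dataset. The names `neuron_output` and `train_mlp` are hypothetical.

```python
import math
import random


def neuron_output(x, w, b, f):
    """Weighted sum of the inputs plus a bias, passed through the activation f."""
    return f(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_mlp(data, n_hidden=4, lr=0.05, epochs=200, seed=0):
    """Train a one-hidden-layer network (tanh hidden units, linear output neuron)
    by backpropagation with per-sample gradient descent on the squared error.

    data is a list of (inputs, target) pairs. Returns the learned parameters
    and the mean squared error recorded during each epoch.
    """
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Random initial weights, as described in the text; biases start at zero.
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            # Forward pass: hidden neurons use tanh, the output neuron is linear.
            h = [neuron_output(x, w1[j], b1[j], math.tanh) for j in range(n_hidden)]
            y_hat = neuron_output(h, w2, b2, lambda s: s)
            err = y_hat - y
            total += err * err
            # Backward pass for the loss 0.5 * err**2; d tanh(s)/ds = 1 - tanh(s)**2.
            # Hidden-layer deltas are computed before any weight is changed.
            delta_h = [err * w2[j] * (1.0 - h[j] ** 2) for j in range(n_hidden)]
            for j in range(n_hidden):
                w2[j] -= lr * err * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * delta_h[j] * x[i]
                b1[j] -= lr * delta_h[j]
            b2 -= lr * err
        history.append(total / len(data))
    return (w1, b1, w2, b2), history


# Synthetic toy data (not Marshall test data): y = 0.5 * x1 - 0.3 * x2.
data_rng = random.Random(1)
toy_data = []
for _ in range(20):
    x = [data_rng.uniform(-1.0, 1.0), data_rng.uniform(-1.0, 1.0)]
    toy_data.append((x, 0.5 * x[0] - 0.3 * x[1]))

params, history = train_mlp(toy_data)
print(f"MSE in first epoch: {history[0]:.5f}, in last epoch: {history[-1]:.5f}")
```

A deeper network repeats the same delta computation layer by layer from the output back to the input; frameworks automate this, but the update rule is the same gradient-descent step shown here.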

2 Methods

2.1 Material selection

In general, two aggregate gradations are commonly used in Egypt (4C and 3D) [10–18]. Between 2015 and 2016, the Highway and Airport Engineering Research Laboratory, Cairo University, Egypt, collected 2073 asphalt mix samples from different locations across Egypt, of which 1186 were wearing course samples and 887 were binder course samples. The laboratory test results show that gradation 4C is the most common gradation in the wearing course, while gradation 3D is the most common in the binder course, followed by gradation 4C, as shown in Fig. 1. The figure also reveals poor quality control on site, as most of the collected samples do not satisfy any of the aggregate gradations specified in the Egyptian code for urban and rural roads. The most common bitumen (binder) grade used in Egypt is 60–70. These results agree with two recent studies in Egypt, by Mousa et al. (2018) and Othman and Abdelwahab (2021) [10, 18], which showed that binder grade 60–70 and aggregate gradations 4C and 3D are the most commonly used in Egypt.

2.2 Characteristics of the materials used

In this study, 102 asphalt mixes are prepared and tested using bitumen 60–70 and dolomite aggregate with gradations 4C and 3D. The properties of the aggregates used (3D and 4C) are summarized in Table 1, and their gradation limits are shown in the sieve size distribution graph in Fig. 2. The properties of the bitumen used (60/70) are summarized in Table 2.

2.3 Marshall test

For every asphalt mix, five binder contents were chosen, and three samples were prepared and tested at each binder content. Thus, at least 15 samples were prepared for each mix and tested in the standard Marshall test to estimate the stability, flow, and volumetric characteristics. For every binder content, the average of the three samples was used as the value of the stability, flow, and remaining volumetric properties. The five design curves were then drawn to estimate the OAC as the average of the asphalt contents corresponding to the maximum stability, the maximum density, and the specified percentage of air voids in the mix:

$$OAC = \frac{{AC}_{max\ stability} + {AC}_{max\ density} + {AC}_{air\ voids}}{3}$$

In this paper, the standard Marshall test is used to estimate the optimum asphalt content of 102 asphalt mixes prepared using bitumen 60–70 and aggregate gradations 3D and 4C. Of these 102 mixes, 63 were prepared using aggregate gradation 3D and 39 using aggregate gradation 4C.
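The averaging step of the Marshall procedure can be sketched in Python as follows. This simplified illustration makes several assumptions that the paper does not state: the peaks of the stability and density curves are taken at the tested binder contents rather than on smoothed design curves, the air voids curve is interpolated linearly, the target air voids is 4% (a common choice, not a value given here), and all test values are hypothetical. The function names are illustrative.

```python
def ac_at_max(ac, values):
    """Asphalt content at which a measured property peaks (tested points only)."""
    return max(zip(values, ac))[1]


def ac_at_target(ac, values, target):
    """Asphalt content at which `values` crosses `target`, by linear interpolation."""
    for a0, v0, a1, v1 in zip(ac, values, ac[1:], values[1:]):
        if v0 != v1 and (v0 - target) * (v1 - target) <= 0:
            return a0 + (target - v0) * (a1 - a0) / (v1 - v0)
    raise ValueError("target is not bracketed by the tested asphalt contents")


def marshall_oac(ac, stability, unit_weight, air_voids, target_av=4.0):
    """Average of the ACs at maximum stability, maximum unit weight, and target air voids."""
    return (
        ac_at_max(ac, stability)
        + ac_at_max(ac, unit_weight)
        + ac_at_target(ac, air_voids, target_av)
    ) / 3.0


# Hypothetical averages of three specimens at each of five binder contents.
ac = [4.5, 5.0, 5.5, 6.0, 6.5]                 # asphalt content, %
stability = [10.2, 11.5, 12.1, 11.6, 10.4]     # kN
unit_weight = [2.30, 2.33, 2.35, 2.36, 2.35]   # g/cm3
air_voids = [6.8, 5.6, 4.6, 3.6, 2.9]          # %

oac = marshall_oac(ac, stability, unit_weight, air_voids)
print(f"OAC = {oac:.2f} %")
```

Because the three contributing asphalt contents can differ by a percentage point or more, small scatter in any one curve shifts the average, which is the source of variability the Background section attributes to this procedure.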

2.4 ANN structure

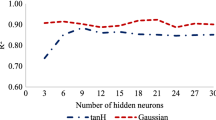

The accuracy of any ANN depends on the hyperparameter settings and on selecting an appropriate ANN architecture. However, to date there is no systematic method for selecting the hyperparameters or the architecture, so the optimum architecture is usually found by trial and error. Accordingly, in this study multiple ANNs are tested with different activation functions, different numbers of hidden layers, and different numbers of neurons per hidden layer.

The activation (transfer) function can take several analytical forms. In this study, three activation functions are tested. These functions are shown in Fig. 3 and can be expressed as follows:

- The rectified linear unit (ReLU) function, referred to in this paper as the linear activation function:

$$f\left(x\right)= \begin{cases} x & x>0\\ 0 & x\le 0\end{cases}$$

- The logistic activation function, also called the sigmoid function:

$$f\left(x\right)= \frac{1}{1+{e}^{-x}}$$

- The hyperbolic tangent activation function, also called the tanh function:

$$f\left(x\right)= \frac{{e}^{x}-{e}^{-x}}{{e}^{x}+{e}^{-x}}= \frac{2}{1+{e}^{-2x}}-1$$
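The three activation functions, and the identity that links tanh to the logistic function, can be checked numerically with the short Python sketch below. The function names are illustrative; note that the function this paper calls "linear" is the ReLU, which is piecewise linear rather than linear.

```python
import math


def relu(x: float) -> float:
    """The 'linear' activation of this paper: x for x > 0, otherwise 0."""
    return x if x > 0 else 0.0


def logistic(x: float) -> float:
    """Sigmoid 1 / (1 + e^-x), written so that large |x| cannot overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh_from_logistic(x: float) -> float:
    """tanh via 2 / (1 + e^(-2x)) - 1, which equals 2 * logistic(2x) - 1."""
    return 2.0 * logistic(2.0 * x) - 1.0


for x in (-2.0, 0.0, 1.5):
    print(x, relu(x), round(logistic(x), 4), round(tanh_from_logistic(x), 4), round(math.tanh(x), 4))
```

The last two printed columns agree, confirming the second form of the tanh equation; the logistic output lies in (0, 1) while tanh lies in (-1, 1), which is why the two saturating functions can behave differently on unscaled targets.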

For every activation function, multiple ANN architectures are tested with different numbers of hidden layers and different numbers of neurons per layer: the number of hidden layers varies from 1 to 4, and the number of neurons per hidden layer varies from 4 to 20, giving 68 architectures per activation function. Figure 4 shows the general architecture of the ANNs tested in this study. Additionally, although the initial weights of an ANN significantly influence its final performance and the speed of training, there is no specific approach for estimating the best initial weights. Thus, the initial weights are assigned randomly at the beginning of the training process.
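The search space described above can be enumerated as follows: 1 to 4 hidden layers and 4 to 20 neurons per layer give 4 × 17 = 68 architectures per activation function, or 204 in total, matching the count in the conclusions. This Python sketch covers the trial-and-error grid only and assumes that every hidden layer in an architecture has the same number of neurons, as the "neurons per layer" wording suggests; training and scoring are omitted. The tuple format `(neurons,) * layers` mirrors the `hidden_layer_sizes` convention of scikit-learn's `MLPRegressor`, but the paper does not state which software was used.

```python
from itertools import product

# Hyperparameter grid described in the text (labels here are illustrative).
ACTIVATIONS = ("linear (ReLU)", "logistic", "tanh")
HIDDEN_LAYERS = range(1, 5)   # 1 to 4 hidden layers
NEURONS = range(4, 21)        # 4 to 20 neurons per hidden layer

grid = [
    {
        "activation": activation,
        # Same width for every hidden layer, e.g. (11, 11) for 2 layers of 11 neurons.
        "hidden_layer_sizes": (n_neurons,) * n_layers,
    }
    for activation, n_layers, n_neurons in product(ACTIVATIONS, HIDDEN_LAYERS, NEURONS)
]

per_activation = len(HIDDEN_LAYERS) * len(NEURONS)
print(per_activation, len(grid))  # 68 204
```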

To train the ANNs, the dataset was randomly divided into two sets: a training set (61%, 62 samples) and a testing set (39%, 40 samples), and backpropagation was used to train each network for up to 500 iterations. Half of the testing samples were used to monitor the training process and avoid overfitting; this segment of the dataset is called the validation set. Early stopping was also employed to avoid overfitting. Overfitting occurs when the training set error declines while the validation or testing set error increases, so early stopping halts training once the validation set error starts to increase [34, 35]. However, training a neural network is a stochastic process, and the validation error may go up or down at any point, so the first point at which it rises is not necessarily the best point to stop. Instead, the performance of the ANN is monitored for a number of iterations: if the validation set error keeps increasing, training stops; if it declines again, training continues [36]. In this study, the validation set error is monitored for 100 iterations; if it declines again within these 100 iterations, training proceeds, otherwise training is halted.
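The data split and the patience-based early stopping rule can be sketched as below. This is a minimal Python illustration under stated assumptions: the shuffling seed, the function names, and the synthetic validation-error curve are hypothetical, and the paper does not say whether the weights from the best iteration are restored after stopping.

```python
import random


def split_dataset(n_samples: int, n_train: int = 62, seed: int = 0):
    """Randomly split sample indices into training, validation, and test sets.

    The samples not used for training are divided equally between validation and test.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    train, rest = indices[:n_train], indices[n_train:]
    half = len(rest) // 2
    return train, rest[:half], rest[half:]


def early_stopping_point(val_errors, patience: int = 100):
    """Return (stop_iteration, best_iteration) for a sequence of validation errors.

    Training continues while the validation error reaches a new minimum within
    `patience` iterations of the previous best; otherwise it stops.
    """
    best_error = float("inf")
    best_iter = 0
    for it, err in enumerate(val_errors):
        if err < best_error:
            best_error, best_iter = err, it
        elif it - best_iter >= patience:
            return it, best_iter
    return len(val_errors) - 1, best_iter


train_idx, val_idx, test_idx = split_dataset(102)
print(len(train_idx), len(val_idx), len(test_idx))  # 62 20 20

# Synthetic validation curve over at most 500 iterations: improves until iteration 149, then rises.
curve = [200.0 - i for i in range(150)] + [60.0 + i for i in range(350)]
print(early_stopping_point(curve))  # (249, 149)
```

A patience window tolerates short-lived rises in the validation error, which is the stochastic behavior the text describes, while still bounding the number of wasted iterations.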

3 Results

The selection of the optimum ANN hyperparameters should be based on evaluating the ANNs' ability to predict the outputs [37, 38] using statistical indicators. The main goal of the evaluation (testing) process is to estimate the ANN generalization error, where generalization is the ability of the model to return accurate results on unseen data that was not used in training. The most common statistical indicator is the coefficient of correlation (R), which measures the linear relationship between the ANN predictions (\({X}_{prediction}\)) and the actual target values (\({X}_{actual}\)). For n samples, it is calculated as follows:

$$R = \frac{\sum_{i=1}^{n}\left({X}_{prediction,i}-{\overline{X}}_{prediction}\right)\left({X}_{actual,i}-{\overline{X}}_{actual}\right)}{\sqrt{\sum_{i=1}^{n}{\left({X}_{prediction,i}-{\overline{X}}_{prediction}\right)}^{2}}\sqrt{\sum_{i=1}^{n}{\left({X}_{actual,i}-{\overline{X}}_{actual}\right)}^{2}}}$$

where the overbars denote mean values over the n samples.

The coefficients of correlation of the different ANNs on the testing set are shown in Tables 3, 4, 5, 6, 7 and 8. For visualization, the cells are colored from red (lowest R-value) to green (highest R-value).
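The coefficient of correlation used to score each ANN can be computed directly from its definition, the Pearson correlation between predictions and measured values. A dependency-free Python sketch is shown below; the sample values are hypothetical. On Python 3.10 and later, `statistics.correlation` computes the same quantity.

```python
import math


def correlation_r(predicted: list[float], actual: list[float]) -> float:
    """Pearson coefficient of correlation between ANN predictions and actual values."""
    if len(predicted) != len(actual) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_a = sum(actual) / n
    cov = sum((p - mean_p) * (a - mean_a) for p, a in zip(predicted, actual))
    ss_p = sum((p - mean_p) ** 2 for p in predicted)
    ss_a = sum((a - mean_a) ** 2 for a in actual)
    return cov / math.sqrt(ss_p * ss_a)


# Hypothetical OAC values (%), for illustration only.
actual_oac = [4.9, 5.2, 5.4, 5.1, 5.6]
predicted_oac = [5.0, 5.1, 5.5, 5.2, 5.5]
print(round(correlation_r(predicted_oac, actual_oac), 3))
```

Note that R measures linear association only: predictions that are consistently offset or scaled relative to the measured values can still reach R = 1, so it is often reported together with an error measure.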

3.1 OAC

Table 3 reports the R values in the prediction of the OAC for the different ANNs. Results show that the logistic activation function produces the highest R-value, and the highest R-value (= 0.91) is achieved using three hidden layers and 16 neurons per layer. Additionally, for the three activation functions, no specific pattern can be observed with respect to the number of neurons per hidden layer or the number of hidden layers in the ANN.

3.2 Stability

Table 4 reports the R-values for the prediction of the stability by the different ANNs. In general, the linear activation function produces better R-values than the other activation functions. However, the highest R-value (0.80) is achieved by an ANN with three hidden layers, 9 neurons per layer, and the hyperbolic tangent (tanh) activation function. For the logistic and tanh activation functions, no specific pattern can be observed with respect to the number of neurons per hidden layer or the number of hidden layers. For the linear activation function, however, the performance of the ANN gets worse as the number of hidden layers increases.

3.3 Flow

Table 5 reports the R-values for the prediction of the flow by the different ANNs. In general, the linear activation function produces better R-values than the other activation functions. However, the highest R-value (0.53) is achieved by an ANN with two hidden layers, 15 neurons per layer, and the logistic activation function. For all three activation functions, the performance of the ANN generally gets worse as the number of hidden layers increases.

3.4 Density

Table 6 reports the R-values for the prediction of the density by the different ANNs. In general, the linear activation function produces better R-values than the other activation functions, and the highest R-value (0.65) is achieved by an ANN with two hidden layers and 11 neurons per layer. For all three activation functions, the performance of the ANN generally gets worse as the number of hidden layers increases.

3.5 AV

Table 7 reports the R-values for the prediction of the AV by the different ANNs. In general, the linear activation function produces better R-values than the other activation functions. However, the highest R-value (0.77) is achieved by an ANN with four hidden layers, 8 neurons per layer, and the hyperbolic tangent (tanh) activation function. For the logistic and tanh activation functions, no specific pattern can be observed with respect to the number of neurons per hidden layer or the number of hidden layers. For the linear activation function, however, the performance of the ANN gets worse as the number of hidden layers increases.

3.6 VMA

Table 8 reports the R-values for the prediction of the VMA by the different ANNs. In general, the linear activation function produces better R-values than the other activation functions. However, the highest R-value (0.66) is achieved by an ANN with four hidden layers, 7 neurons per layer, and the hyperbolic tangent (tanh) activation function. For all three activation functions, no specific pattern can be observed with respect to the number of neurons per hidden layer or the number of hidden layers.

4 Discussion

The linear activation function generally produces the best R-values; however, it does not provide the highest R-value for every output, and the optimum ANN architecture changes with the desired output. This section therefore searches for a single ANN that produces the best overall results for all outputs.

4.1 Optimum ANN architecture for all output variables

Instead of choosing the ANN that optimizes performance for a single output, this section selects the ANN architecture that produces the best predictions for all outputs together. To do so, a weight is assigned to the R-value of each output, and the weighted sum is calculated for every single ANN. The new R-value is calculated as follows:

$$R_{new} = \sum_{k} {w}_{k}{R}_{k}$$

where \({R}_{k}\) is the R-value of output k (OAC, stability, flow, density, AV, or VMA) and \({w}_{k}\) is the weight assigned to it.

The updated R-values are shown in Table 9. In general, the linear activation function provides the best results across all predicted variables, followed by the logistic activation function and then the hyperbolic tangent (tanh) activation function. According to the updated table, the optimum ANN for all six predictions has two hidden layers, 11 neurons per layer, and the linear activation function, as shown in Fig. 5. Table 10 compares the best possible ANN architecture for each output with this optimum ANN for all predictions. The difference between the two is small for the OAC, stability, flow, density, and AV; however, for the VMA, the optimum ANN for all predictions is considerably less accurate than the optimum ANN for the VMA.
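The weighted-sum ranking used in this section, and with fewer outputs in Sections 4.2 and 4.3, can be sketched in Python as follows. The paper does not report the weights, so the sketch assumes equal weights by default; the candidate names and R-values are placeholders, not results from Tables 3 to 12.

```python
OUTPUTS = ("OAC", "stability", "flow", "density", "AV", "VMA")
GROUPS = {
    "all": OUTPUTS,
    "mechanical": ("stability", "flow"),
    "volumetric": ("AV", "VMA", "density"),
}


def weighted_r(r_by_output, outputs, weights=None):
    """Weighted sum of the R-values of the selected outputs (equal weights by default)."""
    if weights is None:
        weights = {name: 1.0 / len(outputs) for name in outputs}
    return sum(weights[name] * r_by_output[name] for name in outputs)


def best_architecture(results, outputs, weights=None):
    """Return the architecture key with the highest weighted R-value."""
    return max(results, key=lambda arch: weighted_r(results[arch], outputs, weights))


# Placeholder R-values for two hypothetical candidate architectures.
results = {
    "candidate_A": {"OAC": 0.8, "stability": 0.7, "flow": 0.5, "density": 0.6, "AV": 0.7, "VMA": 0.5},
    "candidate_B": {"OAC": 0.6, "stability": 0.6, "flow": 0.3, "density": 0.4, "AV": 0.6, "VMA": 0.7},
}
for group, outputs in GROUPS.items():
    print(group, best_architecture(results, outputs))
```

Passing a single output such as `("VMA",)` reduces the ranking to the per-output optimum, which illustrates how the best architecture for one output and the compromise architecture can differ.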

4.2 Optimum ANN for the prediction of the asphalt mix mechanical characteristics (stability and flow)

Similar to the previous section, this section selects the ANN architecture that optimizes the predictions of the asphalt mix mechanical properties. A weight is assigned to the R-value of each output, and the weighted sum is calculated for every single ANN. The new R-value is calculated as follows:

$$R_{new} = {w}_{stability}{R}_{stability} + {w}_{flow}{R}_{flow}$$

The updated R-values are shown in Table 11. In general, the linear activation function provides the best results, followed by the logistic activation function and then the hyperbolic tangent (tanh) activation function. The optimum ANN for predicting the asphalt mix mechanical properties is the same as the optimum ANN for all predictions: two hidden layers, 11 neurons per layer, and the linear activation function.

4.3 Optimum ANN for the prediction of the asphalt mix volumetric characteristics (AV, VMA, and density)

Similar to the previous two sections, this section selects the ANN architecture that optimizes the predictions of the asphalt mix volumetric properties. A weight is assigned to the R-value of each output, and the weighted sum is calculated for every single ANN. The new R-value is calculated as follows:

$$R_{new} = {w}_{AV}{R}_{AV} + {w}_{VMA}{R}_{VMA} + {w}_{density}{R}_{density}$$

The updated R-values are shown in Table 12. In general, the linear activation function provides the best results, followed by the logistic activation function and then the hyperbolic tangent (tanh) activation function. The optimum ANN for predicting the asphalt mix volumetric properties is the same as the optimum ANN for all predictions and the optimum ANN for the mechanical properties: two hidden layers, 11 neurons per layer, and the linear activation function.

5 Conclusions

This study predicts the OAC and the mechanical and volumetric properties of hot mix asphalt from the aggregate gradation using ANNs. A total of 204 ANNs were tested with different activation functions, numbers of hidden layers, and numbers of neurons per layer in order to choose the ANN that maximizes prediction accuracy, based on the results of 102 asphalt mixes prepared and tested in the standard Marshall test. The main outcomes of this study can be summarized as follows:

- The optimum ANN architecture changes depending on the desired output, as shown in Table 13.
- The hyperbolic tangent function provides the lowest accuracy, followed by the logistic activation function, while the linear activation function is the most efficient activation function.
- The optimum ANN for predicting all six output variables has two hidden layers of eleven neurons each and a linear activation function. This architecture is also the best for predicting either the mechanical or the volumetric properties of the mix.
- The results show that deep ANNs are an innovative tool for predicting asphalt mix properties, as the deep ANN proved useful for predicting each of the desired outputs, as summarized in Table 13.
- ANNs can be useful during the asphalt mix design phase because of their ability to predict the mix variables with high accuracy. For example, the ANN with 3 hidden layers, 16 neurons per layer, and the linear activation function predicts the OAC with high accuracy (R = 0.91), so it can be employed in the design of hot mix asphalt. In this case, the ANN predicts the OAC instead of following the standard Marshall test procedure, which requires preparing fifteen samples. Only three samples are then prepared and tested to estimate the design parameters and confirm that they meet the design criteria. This approach saves the time, resources, and effort required to estimate the OAC.

Availability of data and materials

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- OAC: Optimum asphalt content
- ANN: Artificial neural network
- %P(i): Percentage passing sieve i
- AV: Air voids
- VMA: Voids in mineral aggregate

References

[1] The Asphalt Institute (1988) Mix design methods for asphalt concrete and other hot mix types. Manual Series No. 2

[2] Rakaraddi PG, Gomarsi V (2015) Establishing relationship between CBR with different soil properties. IJRET 182–188

[3] Feiteira Dias JL, Picado-Santos LG, Capitão SD (2014) Mechanical performance of dry process fine crumb rubber asphalt mixtures placed on the Portuguese road network. Construct Build Mater 73:247–254

[4] Liu QT, Wu SP (2014) Effects of steel wool distribution on properties of porous asphalt concrete. Key Eng Mater 599:150–154

[5] García A, Norambuena-Contreras J, Bueno M, Partl MN (2014) Influence of steel wool fibers on the mechanical, thermal, and healing properties of dense asphalt concrete. J Test Evaluat 42(5):20130197

[6] Pasandín AR, Pérez I (2015) Overview of bituminous mixtures made with recycled concrete aggregates. Construct Build Mater 74:151–161

[7] Zaumanis M, Mallick RB, Frank R (2016) 100% hot mix asphalt recycling: challenges and benefits. Transp Res Procedia 14:3493–3502

[8] Wang L, Gong H, Hou Y, Shu X, Huang B (2017) Advances in pavement materials, design, characterisation, and simulation. Road Mater Pavement Design 18(3):1–11

[9] Tapkın S, Çevik A, Uşar Ü (2010) Prediction of Marshall test results for polypropylene modified dense bituminous mixtures using neural networks. Expert Syst Appl 37:4660–4670. https://doi.org/10.1016/j.eswa.2009.12.042

[10] Mousa KM, Abdelwahab HT, Hozayen HA (2018) Models for estimating optimum asphalt content from aggregate gradation. In: Proceedings of the Institution of Civil Engineers—Construction Materials. https://doi.org/10.1680/jcoma.18.00035

[11] Kandhal PS, Cross SA (2006) Effect of aggregate gradation on measured asphalt content. Transp Res Rec 1417:21–28

[12] Ozturk H, Saglik A, Demir B, Güngör A (2016) An artificial neural network base prediction model and sensitivity analysis for Marshall mix design. https://doi.org/10.14311/EE.2016.224

[13] Gomaa AE (2014) Marshall test results prediction using artificial neural network. MSc thesis, Arab Academy for Science and Technology, Cairo, Egypt

[14] Liu W, Li H, Tian B (2011) Research on designing optimum asphalt content of asphalt mixture by calculation and experimental method. Appl Mech Mater 97:23–27

[15] Aljassar AH, Ali MA, Alzaabi A (2002) Modelling Marshall test results for optimum asphalt-concrete mix design. Kuwait J Sci Engrg 29(1):181–195

[16] Baldo N, Manthos E, Pasetto M (2018) Analysis of the mechanical behaviour of asphalt concretes using artificial neural networks. Adv Civil Eng. https://doi.org/10.1155/2018/1650945

[17] Sangsefidi E, Ziari H, Sangsefidi M (2015) The effect of aggregate gradation limits consideration on performance properties and mixture design parameters of hot mix asphalt. KSCE J Civil Eng 20(1):385–392

[18] Othman K, Abdelwahab H (2021) Prediction of the optimum asphalt content using artificial neural networks. Metallurgical and Materials Engineering Journal, Association of Metallurgical Engineers of Serbia (AMES)

[19] Abukhettala ME (2006) The relationship between Marshall stability, flow and rutting of the new Malaysian hot-mix asphalt mixtures. MSc thesis, Faculty of Civil Engineering, Universiti Teknologi Malaysia

[20] Trang Nhat T (2008) The relationship of bitumen content, aggregate surface area, and extraction time using asphalt ignition furnace. MSc thesis, Faculty of Civil Engineering, Universiti Teknologi Malaysia

[21] Pan T, Tutumluer E, Carpenter SH (2006) Effect of coarse aggregate morphology on permanent deformation behavior of hot mix asphalt. J Transp Eng 132(7):580–589

[22] Kaseko MS, Ritchie SG (1993) A neural network based methodology for pavement crack detection. Transp Res C 1(4):275–291

[23] Gagarin N, Flood I, Albrecht P (1994) Computing truck attributes with artificial neural networks. J Comput Civil Eng ASCE 8(2):179–200

[24] Cal Y (1995) Soil classification by neural network. Adv Eng Softw 22:95–97

[25] Roberts CA, Attoh-Okine NO (1998) A comparative analysis of two artificial neural networks using pavement performance prediction. Comput Aided Civil Infrastruct Eng 13(5):339–348

[26] Attoh-Okine NO (2001) Grouping pavement condition variables for performance modeling using self-organizing maps. Comput Aided Civil Infrastruct Eng 16(2):112–125

[27] Khuntia S, Das A, Mohanty M, Panda M (2014) Prediction of Marshall parameters of modified bituminous mixtures using artificial intelligence techniques. Int J Transp Sci Technol 3:211–228. https://doi.org/10.1260/2046-0430.3.3.211

[28] Ozturk H, Saglik A, Demir B, Güngör A (2016) An artificial neural network base prediction model and sensitivity analysis for Marshall mix design. https://doi.org/10.14311/EE.2016.224

[29] Ivica A, Ivan M (2017) Development of ANN and MLR models in the prediction process of the hot mix asphalt (HMA) properties. Can J Civil Eng. https://doi.org/10.1139/cjce-2017-0300

[30] Nguyen H, Le T, Pham C, Le T, Ho L, Le V, Pham B, Ly H (2019) Development of hybrid artificial intelligence approaches and a support vector machine algorithm for predicting the Marshall parameters of stone matrix asphalt. Appl Sci. https://doi.org/10.3390/app9153172

[31] Haykin S (1994) Neural networks: a comprehensive foundation. Prentice Hall, New Jersey

[32] McCulloch W, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5:115–133

[33] ECP (Egyptian Code Provisions) (2008) ECP(104/4): Egyptian code for urban and rural roads. Part (4): Road material and its tests. Housing and Building National Research Center, Cairo, Egypt

[34] Goodfellow I, Bengio Y, Courville A (2016) Deep learning (adaptive computation and machine learning series). MIT Press, Cambridge

[35] Bishop CM (2006) Pattern recognition and machine learning (information science and statistics). Springer, Berlin

[36] Prechelt L (1998) Early stopping—but when? In: Orr GB, Müller KR (eds) Neural networks: tricks of the trade. Lecture notes in computer science, vol 1524. Springer, Berlin

[37] Hastie T, Friedman J, Tibshirani R (2009) Model assessment and selection. In: The elements of statistical learning: data mining, inference, and prediction, 2nd edn. Springer, New York, pp 219–249

[38] Raschka S (2018) Model evaluation, model selection, and algorithm selection in machine learning. arXiv:1811.12808v2

Acknowledgements

Not applicable.

Funding

Not applicable.

Author information

Contributions

(KO) literature search and review, research methodology, data preparation, data analysis, manuscript writing and reviewing. The author read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The author declares that there are no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Othman, K. Prediction of the hot asphalt mix properties using deep neural networks. Beni-Suef Univ J Basic Appl Sci 11, 40 (2022). https://doi.org/10.1186/s43088-022-00221-3
