Abstract

Background

There are challenges associated with measuring sustainment of evidence-informed practices (EIPs). First, the terms sustainability and sustainment are often conflated: sustainability assesses the likelihood of an EIP being in use in the future, while sustainment assesses the extent to which an EIP is (or is not) in use. Second, grant funding often ends before sustainment can be assessed.

The Veterans Health Administration (VHA) Diffusion of Excellence (DoE) program is one of few large-scale models of diffusion; it seeks to identify and disseminate practices across the VHA system. The DoE sponsors “Shark Tank” competitions, in which leaders bid on the opportunity to implement a practice with approximately 6 months of implementation support. As part of an ongoing evaluation of the DoE, we sought to develop and pilot a pragmatic survey tool to assess sustainment of DoE practices.

Methods

In June 2020, surveys were sent to 64 facilities that were part of the DoE evaluation. We began analysis by comparing alignment of quantitative and qualitative responses; some facility representatives reported in the open-text box of the survey that their practice was on a temporary hold due to COVID-19 but answered the primary outcome question differently. As a result, the team reclassified the primary outcome of these facilities to Sustained: Temporary COVID-Hold. Following this reclassification, the number and percent of facilities in each category was calculated. We used directed content analysis, guided by the Consolidated Framework for Implementation Research (CFIR), to analyze open-text box responses.

Results

A representative from each of 41 facilities (64%) completed the survey. Among responding facilities, 29/41 sustained their practice, 1/41 partially sustained their practice, 8/41 had not sustained their practice, and 3/41 had never implemented their practice. Sustainment rates increased across Cohorts 1–4.

Conclusions

The initial development and piloting of our pragmatic survey allowed us to assess sustainment of DoE practices. Planned updates to the survey will enable flexibility in assessing sustainment and its determinants at any phase after adoption. This assessment approach can flex with the longitudinal and dynamic nature of sustainment, including capturing nuances in outcomes when practices are on a temporary hold. If additional piloting illustrates the survey is useful, we plan to assess the reliability and validity of this measure for broader use in the field.

Background

Evaluating sustainment of evidence-informed practices is challenging

There is growing interest in sustainment of evidence-informed practices (EIPs) [1, 2]; however, the literature on how to best measure sustainment over time is still developing [3]. Understanding sustainment of EIPs is challenging, which Birken et al. suggest is due to a lack of conceptual clarity and methodological challenges [4].

First, the terms sustainability and sustainment are often used interchangeably [4]. While these terms are related, there are important distinctions. Sustainability assesses the likelihood of an EIP being in use at a future point in time; it is measured by assessing contextual determinants (i.e., factors which decisively affect the nature or outcome of something) [5]. For example, the EIP is perceived to have low sustainability due to inadequate funding or lack of priority. Operationally, the goal is to determine whether the conditions indicative of sustaining EIPs are in place, and if not, to guide efforts to put such conditions into place [6, 7].

In contrast, sustainment assesses the extent to which an EIP is (or is not) in use after a specific period of time after initial implementation; for example, the RE-AIM Framework specifies that the sustainment period begins at least 6 months after initial implementation is completed [8]. Sustainment is measured by assessing outcomes (i.e., the way a thing turns out; a consequence), e.g., the EIP is in use/not in use. Operationally, the goal is to determine if EIPs are still in place following the end of implementation support [9]. Distinguishing between sustainability and sustainment will help researchers develop shared language and advance implementation science [4, 10].

Second, grant funding periods often end after implementation is completed, so initial and long-term sustainment cannot be assessed due to time and resource constraints [4]. As a result, most measure development has focused on sustainability (which can be measured at any point in time during grant funding periods) rather than sustainment (which cannot be assessed until after a sufficient amount of time has elapsed) [11]. For example, systematic reviews have highlighted factors influencing sustainability [12,13,14], and the Program Sustainability Assessment Tool (PSAT) [15] and Program Sustainability Index [16] are frequently used instruments to assess sustainability of innovations, but they do not include measures for sustainment.

Limitations to current sustainment instruments

The existing literature conceptualizes a mix of items as sustainment outcomes, including the presence or absence of an EIP after implementation is completed, such as the continued use of the EIP (and its core components) [11, 17,18,19] or the level of institutionalization of the EIP [18, 19]. In addition, the literature discusses continued “attention to the issue or problem” addressed by the EIP, even when the specific EIP is no longer in use or is replaced by something else, as a sustainment outcome [18]. Furthermore, there are several outcomes referenced in the literature that have been used to measure both sustainability and sustainment, such as continued institutional support [11, 17,18,19] and continued funding for the EIP [11], as well as the continued benefit of the EIP [17,18,19] (see Table 1). In effect, there is overlap in the literature between sustainment determinants and sustainment outcomes, which increases confusion and hinders advancement in the field. Finally, most instruments do not include open-text boxes, which are an important “resource for improving quantitative measurement accuracy and qualitatively uncovering unexpected responses” [20].

Although existing literature offers a variety of single-item sustainment measures for researchers to use, there are few complete pragmatic multi-item instruments. A narrative review by Moullin et al. identified 13 instruments for measuring sustainment. However, they highlighted the need for more pragmatic approaches since many of the existing multi-item sustainment instruments were “overly intervention or context specific” and “lengthy and/or complex” [21]. For example, the Stages of Implementation Completion (SIC) is innovation specific [22] while the Sustainment Measurement System Scale (SMSS) contains 35 items [23]. Furthermore, most multi-item instruments were not well-suited for frontline employees to complete; they were more suited for individuals with expertise in implementation science frameworks [21]. Pragmatic instruments are needed to increase the likelihood participants will understand and respond to all items, especially when it is difficult to incentivize participants over time.

Organizational context and role of the authors

Our team is embedded within and employed by the United States (US) Veterans Health Administration (VHA), the largest integrated healthcare system in the US. VHA has over 1000 medical centers and community-based outpatient clinics; more information on VHA can be found at www.va.gov.

The VHA Diffusion of Excellence (DoE) is one of few large-scale models of diffusion; it seeks to identify and disseminate EIPs across the VHA system. DoE practices include innovations supported by evidence from research studies and administrative or clinical experience [24, 25] that strive to address patient, employee, and/or facility needs. The DoE sponsors “Shark Tank” competitions, in which regional and facility leaders bid on the opportunity to implement a practice with approximately 6 months of non-monetary external implementation support. Over 1,500 practices were submitted for consideration between Cohorts 1 and 4 (2016–2019) of Shark Tank; the DoE designated 45 as Promising Practices and these were adopted at 64 facilities (some practices were adopted by more than one facility). For additional detail on the VHA, the DoE, and promising practices, see Additional file 1 as well as previous publications [9, 26,27,28].

Our team was selected in 2016 to conduct an external evaluation of the DoE, to guide program improvements and assess the impact of the program on VHA (see previous publications and Additional file 1 for more information about the evaluation [9, 27,28,29]). In earlier phases of our evaluation, we focused on implementation and initial sustainment of DoE practices [28]. In brief, we conducted interviews after the 6-month external implementation support period to understand the level of implementation success as well as barriers and facilitators to implementation at the facilities [28]. Participants described a high level of successful implementation after the initial 6-month period of support. Due to extensive external implementation support, facilities were able to complete implementation unless significant barriers related to “centralized decision making, staffing, or resources” delayed implementation [28]. We then evaluated the initial sustainment of these practices by asking facilities to complete follow-up surveys (on average 1.5 years after external support ended). Over 70% of the initially successful teams reported their practice was still being used at their facility. Additionally, over 50% of the initially unsuccessful teams reported they had since completed implementation and their practice was still being used at their facility [28]. Although some of these initially unsuccessful facilities implemented their practice after external support ended, research suggests that many EIPs are not sustained once implementation support has ceased [30]. As a result, we shifted our focus to the evaluation of ongoing sustainment of DoE practices. The objective of this manuscript is to (1) describe the initial development and piloting of a pragmatic sustainment survey tool and (2) present results on ongoing practice sustainment.

Methods

Survey development

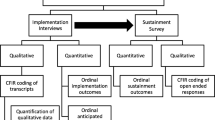

To assess the ongoing sustainment of DoE practices, we sought to develop a pragmatic survey that was (1) easy to understand for those without implementation science expertise (i.e., simple), (2) quick to complete (i.e., less than 10 min), and (3) appropriate for 45 different practices (i.e., generic) [21, 31]. Our primary evaluation question for the survey was: Is there ongoing sustainment of DoE practices? To assess this question, we used the last known status of a facility (based on the last interview or survey completed) and branching logic to route respondents through the survey based on their individual facility’s situation (see Fig. 1). Based on our working definition of sustainment, items were conceptualized as primary or secondary outcomes; secondary items were derived from the literature to enhance the survey and provide additional contextual information (see below and Table 1). Furthermore, “Please Describe” open-text boxes were included following all questions so participants could provide additional detail. Each outcome is briefly described below; see Table 1 for outcomes mapped to the literature and Additional file 2 for the complete survey.

Terms and definitions

Primary outcome

As described earlier, our primary outcome is used as the overarching benchmark to determine if a DoE practice is sustained.

- Practice sustainment: extent to which the DoE practice and its core components and activities are in use [11, 17,18,19]

Secondary outcomes

Given the importance of assessing more than whether the practice was in use, the survey included several items from the literature as secondary outcomes. These secondary outcomes provide additional information on the current status of the practice.

- Institutionalization: extent to which the DoE practice is part of routine care and work processes [18, 19]
- Priority: extent to which there is attention to the issue or problem addressed by the DoE practice, i.e., “heightened issue salience” [18]
- Buy-in/capacity/partnership: extent to which key stakeholders and partners support the DoE practice [11, 17,18,19]
- Funding: extent to which funding is provided to support the DoE practice [11]
- Benefit: extent to which the DoE practice is having the intended outcomes [17,18,19]
- Improvements/adaptation: extent to which the DoE practice is being improved and/or adapted [17]
- Spread/diffusion: extent to which the DoE practice is spreading or diffusing to other locations [18]

Additional survey questions

To assess the fluid and longitudinal nature of sustainment, if a respondent answered “No” to the primary outcome (i.e., the DoE practice was not in use; see above and Table 1), they were asked about future plans to re-implement. If a facility’s representative reported they planned to re-implement their DoE practice, they were retained in the sample for future sustainment surveys. In addition, because the sustainment survey was fielded only a few months after the Centers for Disease Control and Prevention (CDC) issued guidance to cancel and/or reschedule non-essential clinical activities [32], it included questions about the pandemic (see Additional file 2).
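The routing described in this section can be illustrated with a minimal sketch. It is not the survey's actual REDCap configuration: the function name, question identifiers, and status labels are hypothetical, and it assumes that facilities reporting no re-implementation skip directly to the re-implementation plans question. The actual items are in Additional file 2.

```python
from typing import List, Optional

# Hypothetical identifiers for the seven secondary outcome items.
SECONDARY_ITEMS = [
    "institutionalization", "priority", "buy_in", "funding",
    "benefit", "adaptation", "spread",
]


def route_questions(last_known_status: str,
                    reimplemented: Optional[bool] = None,
                    in_use: Optional[str] = None) -> List[str]:
    """Return the ordered question blocks a respondent would see (illustrative)."""
    questions: List[str] = []

    # Facilities last known to be not sustaining get an introductory question.
    if last_known_status == "not_sustained":
        questions.append("reimplemented_intro")
        if not reimplemented:
            # Assumption: no practice in place, so outcome items are skipped.
            questions += ["plans_to_reimplement", "pandemic"]
            return questions

    # Primary outcome: is the practice still being used or done at the site?
    questions.append("primary_in_use")
    if in_use == "No":
        # Not in use: ask about future plans; omit secondary outcomes.
        questions.append("plans_to_reimplement")
    else:
        questions += SECONDARY_ITEMS

    # Pandemic questions were included because of the survey timing.
    questions.append("pandemic")
    return questions
```

For example, `route_questions("sustained", in_use="No")` yields the primary outcome question, the re-implementation plans question, and the pandemic questions, with no secondary outcome items.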

Data collection

In June 2020, surveys were emailed to representatives of the 64 facilities in Cohorts 1–4 that adopted one of the 45 DoE Promising Practices. See Additional file 1 for practice descriptions. Survey follow-up periods ranged from 1 to 3 years, depending on the cohort (i.e., when the practice was adopted). Incentives were not provided to VHA employees because surveys were expected to be completed during working hours. The survey was piloted using the REDCap® platform. Per regulations outlined in VHA Program Guide 1200.21, this evaluation has been designated a non-research quality improvement activity.

Data analysis

We calculated the overall response rate and used descriptive statistics (number, percent) to summarize the multiple choice and Likert scale questions. We used directed content analysis, guided by the Consolidated Framework for Implementation Research (CFIR), to analyze open-text box responses [33]. The CFIR is a determinant framework that defines constructs across five domains of potential influences on adoption, implementation, and sustainment [5]: (1) characteristics of the intervention (e.g., evidence strength and quality), (2) outer setting (e.g., patient needs and resources), (3) inner setting (e.g., tension for change), (4) characteristics of individuals (e.g., self-efficacy), and (5) process (e.g., planning). As one of the most widely cited determinant frameworks, the CFIR was selected to guide analysis in order to facilitate the comparison and translation of our results with other projects. The codebook included deductive CFIR constructs as well as new inductive codes and domains that arose in the data, including relationships between constructs [34]. We used relationship coding to provide a high-level overview of how different constructs interact or relate to each other. See Table 2 for an excerpt of our CFIR-informed codebook. Using a consensus-based process [34], two evaluators (CR, AN) coded qualitative data from the open-text boxes and discussed to resolve discrepancies.

Primary outcome

We began analysis by comparing alignment of quantitative and qualitative responses to the primary outcome (i.e., “Is this practice still being used or done at your site?”) (see Table 1: Item 1). Seven facility representatives reported in the survey’s open-text box that their practice was on a temporary hold due to COVID-19 but answered the primary outcome question differently; two answered “Yes”, two answered “No”, and three answered “Partially”. As a result, the team reclassified the primary outcome of those facilities into a new category under Sustained: Temporary COVID-Hold (see Fig. 2). Following this reclassification, the number and percent of facilities in each sustainment category was calculated by cohort.
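The reclassification step can be sketched as a simple rule, shown here under stated assumptions. The function name and the boolean flag are hypothetical; in the evaluation the flag corresponds to a coder's judgment from content analysis of the open-text response, not an automated text match. The category labels other than the COVID-hold label are illustrative.

```python
from collections import Counter

COVID_HOLD = "Sustained: Temporary COVID-Hold"

# Illustrative mapping from the multiple-choice answer to an outcome category.
ANSWER_TO_CATEGORY = {
    "Yes": "Sustained: Ongoing",
    "Partially": "Partially Sustained",
    "No": "Not Sustained",
}


def reclassify(answer: str, covid_hold_in_text: bool) -> str:
    """Combine the multiple-choice answer with the coder's open-text flag."""
    if covid_hold_in_text:
        # The open-text explanation overrides the multiple-choice answer.
        return COVID_HOLD
    return ANSWER_TO_CATEGORY[answer]


# The seven flagged responses described above: two "Yes", two "No",
# and three "Partially".
flagged_answers = ["Yes"] * 2 + ["No"] * 2 + ["Partially"] * 3
final_categories = Counter(reclassify(a, True) for a in flagged_answers)
```

Under this rule, all seven flagged responses land in the same category regardless of how the multiple-choice question was answered, which is the consistency the reclassification was meant to provide.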

Secondary outcomes

We calculated the number and percent of facilities for Items 2–8 (see Table 1) within each of our primary outcome categories from Item 1 (sustained, partially sustained, not sustained). We also analyzed the concordance between Items 1 (practice sustainment) and 2 (practice institutionalization) in the survey.
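One simple way to express concordance between two items is percent agreement. The sketch below assumes that definition, which the text does not specify, and uses made-up toy responses rather than the evaluation data; the function and variable names are hypothetical.

```python
from typing import Sequence


def percent_agreement(item1: Sequence[str], item2: Sequence[str]) -> float:
    """Percent of facilities whose answers to two items match exactly."""
    if len(item1) != len(item2) or not item1:
        raise ValueError("need two equal-length, non-empty sequences")
    matches = sum(a == b for a, b in zip(item1, item2))
    return 100.0 * matches / len(item1)


# Made-up toy responses for illustration only; not the evaluation data.
toy_sustainment = ["Yes", "Yes", "Partially", "Yes"]
toy_institutionalization = ["Yes", "Yes", "Yes", "No"]
toy_concordance = percent_agreement(toy_sustainment, toy_institutionalization)
```

In the toy data, two of the four facilities give matching answers, so the function returns 50.0.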

Results

Primary outcome

A representative from forty-one facilities (41/64; 64%) completed the survey in the summer of 2020 while 23 (35.9%) facility representatives were lost to follow-up; the rate of missing data was lower after the first DoE cohort. Among responding facilities, 29/41 (70.7%) facilities were sustaining their practice, 1/41 (2.4%) facilities were partially sustaining their practice, 8/41 (19.5%) facilities were not sustaining their practice, and 3/41 (7.3%) facilities had never implemented their practice (see Table 3). Sustainment rates increased across Cohorts 1–4. The CFIR constructs and inductive codes associated with primary outcome text responses are included in parentheses below; the facilitates/leads to relationship is illustrated with “>” and the hinders/stops relationship is illustrated with “|”. Please refer to Table 2 for code definitions.
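The descriptive statistics for the primary outcome can be recomputed from the counts reported in this section. The following is a minimal sketch; the variable and function names are hypothetical, and only the counts come from the text.

```python
# Counts reported for the primary outcome among responding facilities.
surveyed = 64
counts = {
    "Sustained": 29,
    "Partially sustained": 1,
    "Not sustained": 8,
    "Never implemented": 3,
}
responded = sum(counts.values())


def pct(numerator: int, denominator: int) -> float:
    """Percent rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


response_rate = pct(responded, surveyed)
lost_to_follow_up = pct(surveyed - responded, surveyed)
category_percents = {name: pct(n, responded) for name, n in counts.items()}
```

Because each percentage is rounded separately, the four category percentages do not need to sum to exactly 100.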

Sustaining facilities

Twenty-nine facilities (N = 41, 70.7%) were sustaining their practice (see Table 3). Of these 29 facilities, 22 (75.9%) were ongoing during the COVID-19 pandemic while 7 (24.1%) were on a temporary COVID-Hold (see Table 4). The differences between these two sustaining groups of facilities are described below.

Sustaining facilities: ongoing

In late March 2020, the CDC issued guidance to cancel and/or reschedule “non-essential clinical activities, including elective procedures, face-to-face outpatient visits, diagnostic testing, and procedures” [32]. However, 22/29 facility representatives (75.9% of sustaining facilities) reported their practice was ongoing during this time. Many clinical practices were able to continue because they provided essential care for patients or were already virtual in nature (Innovation Type: Essential or Virtual & Tension for Change > Sustained: Ongoing). In fact, the pandemic served to increase the need and therefore the spread of virtual practices:

[Virtual care] is under a huge expansion. We are just now looking at adding Nursing [virtual care] clinics […] everything [virtual care] has expanded with COVID. (Facility 4_IF02a)

In contrast, other practices were ongoing during the pandemic because they adapted the practice’s in-person events to virtual events (External Policies & Incentives > Adapting > Sustained: Ongoing):

We are currently orchestrating our third annual Summit (virtually because of COVID). (Facility 3_IF09c)

The other ongoing practices were designed to benefit employees or represented administrative process changes that were not impacted by the pandemic (Employee Needs and Resources > Tension for Change > Sustained: Ongoing):

As a [department] we use this regularly and inform our employees of their current status as we continue to perform our normal tasks and duties. (Facility 2_IF06a)

Sustaining facilities: COVID-hold

Although the majority of sustaining facilities were ongoing, 7/29 facility representatives (24.1% of sustaining facilities) reported they placed their practice on a temporary hold following the CDC guidance [32] (External Policies & Incentives & Innovation Type: Non-Essential > Sustained: COVID-Hold). As illustrated in the following quotes, these facilities could not reasonably nor safely adapt their practice and offer it virtually (Patient Needs & Resources | Adapting > Sustained: COVID-Hold):

Due to COVID-19, we are unable to use this program at this time. We are currently being encouraged to do telehealth from home. We believe this program would carry additional risks [to Veterans] should it be used by telehealth rather than face to face. (Facility 2_IF11_2)

Other practices became less applicable when very few patients were present in the hospital, e.g., practices seeking patient feedback or reporting patient metrics (External Policies & Incentives | Tension for Change > Sustained: COVID-Hold).

Due to the pandemic we did not have the metrics to utilize the [practice] so it was placed on hold. (Facility 1_IF05)

Partially sustaining facility

Only one facility (N = 41, 2.4%) was partially sustaining their practice (see Table 3). The respondent explained partial sustainment by noting the practice was in use “in some specialty clinics, palliative care and hospice.” (Facility 3_IF04)

Not sustaining facilities

Eight facilities (N = 41, 19.5%) were not sustaining their practice (see Table 3). Within this group, 6/8 (75%) had a previous last known status of no sustainment and 2/8 (25%) had a previous last known status of sustained or partially sustained.

Not sustaining facilities: facilities that were previously not sustaining

As noted in the Methods section (see Survey Development), facilities that had a last known status of no sustainment were given an introductory question to determine if they had re-implemented their practice in the interim. Six facilities (N = 8, 75% of the not sustaining facilities) had not re-implemented for various reasons. Two of these facilities had not re-implemented due to losing necessary staffing and not having completed re-hiring (Engaging Key Stakeholders > Not Re-Implemented).

[The] person that initiated this practice left and it was not followed through with new staff. (Facility 2_IF07b)

Two other facilities had not re-implemented because the practice was incompatible with patient needs, facility resources, or existing workflows (Patient Needs & Resources & Available Resources | Compatibility > Not Re-Implemented).

[The practice] did not meet the needs of our Veterans in [service] [and there were] issues with [the equipment] maintaining network connection [which] slowed [service] workflow. (Facility 1_IF03c)

One facility had not re-implemented after a policy change disallowed the practice from continuing (External Policies & Incentives > Not Re-Implemented). There was no qualitative data explaining why the sixth facility did not re-implement.

Not sustaining facilities: facilities that were previously sustaining

In contrast, two of the currently not sustaining facilities (N = 8, 25% of not sustaining facilities) had a previous status of sustained or partially sustained. Representatives from these facilities reported a lack of sustainment occurred in the previous year due to losing necessary employees (Engaging Key Stakeholders > Not Sustained) or finding that the practice was ineffective in their community (Community Characteristics > Not Sustained).

One of our [employee] positions has been vacant since January and the other [employee] position was realigned under a specific specialty care service. (Facility 3_IF01a)

Not sustaining facilities: plans to re-implement practice

To better understand the fluid nature of sustainment, facilities that were not sustaining their practice were given a follow-up survey question to determine if they intended to re-implement their practice in the future. Three of the eight (38%) not sustaining facilities intended to re-implement their practice in the future (two previously not sustaining facilities and one newly not sustaining facility) (see Table 5).

Two of these facilities explained that while they had lost necessary staffing, they were in the process of replacing, or planning to replace, those staff in order to re-implement in the future (Engaging Key Stakeholders > Not Sustained).

We recently hired a new Provider and are in the processes of getting her setup with [service] access/equipment. (Facility 2_IF02b)

Secondary outcomes

The following sections describe results from secondary outcomes, which were used to contextualize the primary outcome. Of note, there was a high level of missing data for the secondary outcome questions; our branching logic omitted secondary outcome questions for facilities that did not have their practice in place, i.e., did not re-implement or sustain, including two facilities that were reclassified from Not Sustained to Sustained: COVID-Hold (see Tables 6 and 7; Footnote §). As a result, only practice effectiveness and practice institutionalization are presented below. The branching logic is illustrated in Fig. 1; reclassification of outcomes is illustrated in Fig. 2.

Practice institutionalization

Overall, there was a high level of concordance (96%) between sustainment and institutionalization outcomes (see Table 6). In addition, two of the three facility representatives that reported partial institutionalization also reported partial sustainment, reflecting initial concordance; however, those two facilities were reclassified from partially sustained to sustained: COVID-hold during analysis (see Table 6, Footnote † and Fig. 2).

Though less frequent, three facilities had discordant sustainment and institutionalization outcomes. The qualitative data from the survey provided additional context to explain some of the reasons for this discordance. For example, the facility representative that reported partial sustainment (see above) reported the practice was institutionalized where the practice was in use, but it was only in use “in some specialty clinics, palliative care and hospice” (see Table 6, Footnote *). Another facility representative reported the practice was sustained but not institutionalized; though the practice was in use where it was initially implemented, they stated “we want it to expand” (Facility 4_IF02a) (see Table 6, Footnote ‡).

Practice effectiveness

Of the 29 facilities sustaining their practice, 23 representatives (79.3%) reported the practice was demonstrating effectiveness (see Table 7). They reported using a variety of measures appropriate to their practices to track effectiveness, including patient-level (e.g., clinical measures, satisfaction rates), employee-level (e.g., turnover rates), and system-level metrics (e.g., time and cost savings). For example, one facility representative reported their practice led to a “decrease[d] LOS [length of stay for patients in the hospital] and higher patient satisfaction scores.” (Facility 4_IF07b).

One representative (N = 29, 3.4%) reported the practice was partially demonstrating effectiveness, stating they had received feedback from employees that the practice was not fully meeting their needs and they were considering adapting the practice to make it more effective at their facility (Facility 2_IF07a) (see Table 7, Footnote *). Two representatives (N = 29, 6.9%) reported the practice was not demonstrating effectiveness; one representative reported the practice “was found to be ineffective with our non-traditional patient population” and they were “transitioning to new presentation and process,” (Facility 4_IF09c) while the other reported they were “not tracking” and therefore were not able to demonstrate effectiveness (Facility 4_IF02a) (see Table 7, Footnote ‡).

Discussion

With the growing attention on sustainment of EIPs, there is a need for clarity in defining and measuring sustainability versus sustainment. Given that funding often ends before longer-term sustainment can be assessed, it is important for researchers to develop pragmatic sustainment measures that can be used when there are fewer resources and incentives for participants.

As part of an ongoing evaluation of the VHA DoE, we developed and piloted a pragmatic survey to assess ongoing sustainment across diverse practices. Based on the relatively high response rate (over 60%) and logical responses provided, we can discern several pragmatic features: it was short, easy to understand, and applicable across a wide range of practices [21, 31].

Survey results indicated a high rate (over 70%) of practice sustainment among responding facilities, which suggests that the VHA DoE is a promising large-scale model of diffusion. Sustainment rates increased across Cohorts 1–4, with later cohorts reporting higher rates of sustainment than earlier cohorts. Ongoing enhancements made to the VHA DoE processes over time (e.g., refining methods to select Promising Practices, better preparing facilities for implementation) may have helped improve sustainment rates over time. It is also possible that lower rates in Cohorts 1–2 (2016 and 2017) highlight challenges to sustainment over longer periods. However, only two additional facilities discontinued their practice in the year prior to the survey and these were part of Cohort 3 (2018). Future sustainment surveys with these and new cohorts will help build understanding about changes over time and factors that help or hinder ongoing sustainment. Our ability to continue following these practices is a unique strength of this evaluation.

Lessons learned

One: multiple-choice responses

There were several important lessons learned that will improve our ongoing evaluation efforts and subsequent surveys. First, our primary measure failed to capture nuance in the data related to practices being temporarily on hold. Our survey was piloted during the COVID-19 pandemic, during which the CDC issued guidance to cancel and/or reschedule non-essential in-person healthcare. As a result, several respondents used the open-text boxes to explain that their practice was in place but on hold during the pandemic, and that they planned to resume operations in the future. However, facility representatives were not consistent in how they answered the primary question; responses ranged from sustained to partially sustained to not sustained. Based on content analysis of open-text explanations, we systematically reclassified these responses as sustained: COVID-hold “to mitigate survey bias and ensure consistency” [20] (see Fig. 2). Though temporary holds were common in our evaluation due to the pandemic, EIPs may be paused for a variety of reasons that do not necessarily indicate discontinuation and lack of sustainment. For example, two facility representatives reported their practice was not sustained because they lost employees, but they were in the process of re-hiring; in effect, though the reason was different, these practices were on hold, similar to practices paused by the pandemic. It is important to note that turnover and gaps in staffing align with a key finding from our earlier work: when implementation and sustainment are achieved via the efforts of a single key employee, it is impossible to reliably sustain the practice when that person leaves or simply takes vacation [28].

In the future, we will add responses to capture whether the practice has been discontinued permanently or is temporarily not in use/not in place. In addition to better fitting the data, this refinement allows the measure to be used at any time point from initial adoption to sustainment; although adoption, implementation, and sustainment are defined differently based on the measurement point, they all assess whether the innovation is being used or delivered [5]. This refinement further shortens the survey by eliminating the need for a follow-up question about re-implementation of the practice.

Two: sustainment determinants and outcomes

Second, the sustainment literature often conflates sustainment determinants with sustainment outcomes. Table 1 lists measures conceptualized as outcomes in the literature that were included in our survey. However, if a facility representative reported the practice was not in use (our primary outcome), many of the secondary outcomes were not applicable to that facility. For example, if a practice was not in use, asking whether the practice was demonstrating effectiveness would be illogical; continued effectiveness is a determinant of successful sustainment, not an outcome. Since we did not include secondary outcomes for those who reported they were not sustaining their practice, there was a high rate of missing data for these items by design. Future versions of the survey will reconceptualize Items 3–7 in Table 1 as sustainment determinants, which aligns with the SMSS but with fewer items [23].

Item 2 (Practice Institutionalization) was correlated with our primary sustainment outcome (Item 1). Goodman and Steckler define institutionalization as the “long-term viability and integration of a new program within an organization” [35]. Institutionalization is conceptualized as a deeper, more mature form of sustainment, in which the practice is fully routinized and embedded into clinical practice, beyond just relying on the effort of a single person [36]. Basic sustainment (whether a practice is in use) would be a prerequisite for practice institutionalization. Finally, Item 9 (Practice Spread/Diffusion) will be conceptualized as a diffusion outcome. Rogers defines diffusion as “the process through which an innovation […] spreads via certain communication channels over time” [37] within and across organizations [38]. Survey respondents may report sustainment within their own setting with or without diffusion to additional sites. A key goal for the DoE is to widely diffuse effective practices across clinical settings within and outside VHA.

Three: open-text responses

Third, we used “please explain” as a prompt for our open-text boxes to provide respondents with an opportunity to contextualize their experiences. However, the information they provided often focused on the rationale for the response rather than barriers and facilitators that led to their reported outcome. For example, when a facility representative reported a practice was sustained, they provided a rationale for their answer (e.g., all core components were in place) vs. a description of facilitators that allowed them to sustain their practice (e.g., continued funding). Changing this prompt to “Why?” and reconceptualizing Items 3–7 of our survey as sustainment determinants (see above) will more directly assess relevant barriers and facilitators.

Four: sustainability

Fourth, we will add a sustainability question (i.e., elicit prospects for continued sustainment) to the survey for all respondents. Although we asked non-sustaining facilities a prospective question about plans to re-implement, we did not ask sustaining facilities a prospective question about continued sustainment. Our previous work indicated that predictions of sustainment were relatively accurate [28]. Sustainment is dynamic and may ebb and flow over time; those working most closely with the practice are best positioned to assess prospects for future sustainment as well as anticipated barriers. Low ratings of sustainability could provide an opportunity for early interventions to stave off future failure to sustain.

Pragmatic sustainment surveys and future directions

It is important to note that following piloting of our survey, Moullin et al. published the Provider REport of Sustainment Scale (PRESS); it contains three Likert Scale items: 1. Staff use [EIP] as much as possible when appropriate; 2. Staff continue to use [EIP] throughout changing circumstances; 3. [EIP] is a routine part of our practice [39]. To our knowledge, the PRESS is the first validated pragmatic sustainment instrument and addresses key issues highlighted by Moullin et al. in their previous narrative review [21] (see Background: Limitations to current instruments). Although the PRESS is an excellent instrument, we believe that our updated survey tool will offer unique strengths.

First, our measure is intended to be used annually; in order to maintain an up-to-date participant list, we need to know which practices are discontinued permanently vs. temporarily on hold. As a result, the multiple response options in our survey will allow us to capture important nuance in the data (see Lessons learned one). Second, a brief section on determinants will allow us to understand the barriers and facilitators that explain the sustainment status (see Lessons learned two). Third, the inclusion of a sustainability item (i.e., the likelihood of future sustainment) offers DoE leadership earlier opportunities to intervene when facility representatives predict their practice may fail to be sustained (see Lessons learned four). Finally, the inclusion of open-text responses contributes to measurement accuracy, provides additional contextual information, and facilitates uncovering unexpected themes [20], all of which are urgently needed in an increasingly uncertain world (see Lessons learned three). If additional piloting shows the survey is useful, we plan to assess the reliability and validity of this tool for broader use in the field.

Limitations

Although we tried to limit bias, this is a real-world quality improvement project, and there are several limitations that may have skewed our results: (1) the use of self-report, potential social desirability, and lack of fidelity assessment; (2) the size of our sample; and (3) the rate of missing data. Regarding the first limitation: Although self-report may be less objective than in-person observation, it is commonly used to assess sustainment (e.g., both the SMSS [23] and PRESS [39] rely on self-report), because it is more pragmatic and feasible [39]. Given travel and funding limitations, self-report was the only option in our project. To limit social desirability bias, we informed participants that we were not part of the DoE team, that the survey was completely voluntary, and that no one outside of the evaluation team would have access to their data. In addition, our survey was unable to include innovation-specific components of fidelity due to the diverse nature of DoE practices. However, the generic nature of the tool is one of its strengths; although this work has been limited to the VHA, it was used across a wide portfolio of diverse practices. Thus, the tool may be useful in other healthcare systems. Regarding the second limitation: Although the sample size was small, all representatives involved in implementation were invited to complete the survey. In addition, as more cohorts participate in the DoE, the sample size will increase, allowing us to pilot the survey with additional facilities and practices. Regarding the third limitation: Although the rate of missing data was 40%, it generally decreased with each new cohort, which may be a function of the shorter time elapsed since initial implementation. We plan to continue including non-responding facilities in future surveys until they have been lost to follow-up for 3 years.

Conclusions

We provide further clarity on the concepts of sustainability and sustainment and how each is measured. The initial development and piloting of our pragmatic survey allowed us to assess the ongoing sustainment of DoE practices, demonstrating that the DoE is a promising large-scale model of diffusion. If additional piloting illustrates the survey tool is useful, we plan to assess its reliability and validity for broader use in the field; given that our survey was used with a diverse portfolio of practices, it may serve as a useful survey tool for other evaluation efforts.

Availability of data and materials

The datasets generated and/or analyzed during the current evaluation are not available due to participant privacy but may be available from the corresponding author on reasonable request.

Abbreviations

- CFIR: Consolidated Framework for Implementation Research

- DoE: Diffusion of Excellence

- EIP: Evidence-informed practice

- VHA: Veterans Health Administration

References

Nevo I, Slonim-Nevo V. The myth of evidence-based practice: towards evidence-informed practice. Br J Soc Work. 2011;41:1176–97. https://doi.org/10.1093/bjsw/bcq149.

Kumah EA, McSherry R, Bettany-Saltikov J, van Schaik P. Evidence-informed practice: simplifying and applying the concept for nursing students and academics. Br J Nurs. 2022;31:322–30. https://doi.org/10.12968/bjon.2022.31.6.322.

Borst RAJ, Wehrens R, Bal R, Kok MO. From sustainability to sustaining work: What do actors do to sustain knowledge translation platforms? Soc Sci Med. 2022;296:114735. https://doi.org/10.1016/j.socscimed.2022.114735.

Birken SA. Advancing understanding and identifying strategies for sustaining evidence-based practices: a review of reviews, vol. 13; 2020.

Damschroder LJ, Reardon CM, Opra Widerquist MA, Lowery J. Conceptualizing outcomes for use with the Consolidated Framework for Implementation Research (CFIR): the CFIR Outcomes Addendum. Implement Sci. 2022;17:7. https://doi.org/10.1186/s13012-021-01181-5.

Fortune-Greeley A, Nieuwsma JA, Gierisch JM, Datta SK, Stolldorf DP, Cantrell WC, et al. Evaluating the Implementation and Sustainability of a Program for Enhancing Veterans’ Intimate Relationships. Mil Med. 2015;180:676–83.

Stolldorf DP, Fortune-Britt AK, Nieuwsma JA, Gierisch JM, Datta SK, Angel C, et al. Measuring sustainability of a grass-roots program in a large integrated healthcare delivery system: The Warrior to Soul Mate Program. J Mil Veteran Fam Health. 2018;4:81–90.

Glasgow RE, Harden SM, Gaglio B, Rabin B, Smith ML, Porter GC, et al. RE-AIM Planning and Evaluation Framework: Adapting to New Science and Practice With a 20-Year Review. Front Public Health. 2019;7:64. https://doi.org/10.3389/fpubh.2019.00064.

Jackson GL, Cutrona SL, White BS, Reardon CM, Orvek E, Nevedal AL, et al. Merging Implementation Practice and Science to Scale Up Promising Practices: The Veterans Health Administration (VHA) Diffusion of Excellence (DoE) Program. Jt Comm J Qual Patient Saf. 2021;47:217–27. https://doi.org/10.1016/j.jcjq.2020.11.014.

Chambers D. Building a Lasting Impact: Implementation Science and Sustainability; 2013.

Palinkas LA, Spear SE, Mendon SJ, Villamar J, Reynolds C, Green CD, et al. Conceptualizing and measuring sustainability of prevention programs, policies, and practices. Transl Behav Med. 2020;10:136–45. https://doi.org/10.1093/tbm/ibz170.

Whelan J, Love P, Millar L, Allender S, Bell C. Sustaining obesity prevention in communities: a systematic narrative synthesis review: Sustainable obesity prevention. Obes Rev. 2018;19:839–51. https://doi.org/10.1111/obr.12675.

Wiltsey Stirman S, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci. 2012;7:17. https://doi.org/10.1186/1748-5908-7-17.

Hailemariam M, Bustos T, Montgomery B, Barajas R, Evans LB, Drahota A. Evidence-based intervention sustainability strategies: a systematic review. Implement Sci. 2019;14:57. https://doi.org/10.1186/s13012-019-0910-6.

Luke DA, Calhoun A, Robichaux CB, Elliott MB, Moreland-Russell S. The Program Sustainability Assessment Tool: A New Instrument for Public Health Programs. Prev Chronic Dis. 2014;11:130184. https://doi.org/10.5888/pcd11.130184.

Mancini JA, Marek LI. Sustaining Community-Based Programs for Families: Conceptualization and Measurement*. Fam Relat. 2004;53:339–47. https://doi.org/10.1111/j.0197-6664.2004.00040.x.

Lennox L, Maher L, Reed J. Navigating the sustainability landscape: a systematic review of sustainability approaches in healthcare. Implement Sci. 2018;13:27. https://doi.org/10.1186/s13012-017-0707-4.

Scheirer MA, Dearing JW. An Agenda for Research on the Sustainability of Public Health Programs. Am J Public Health. 2011;101:2059–67. https://doi.org/10.2105/AJPH.2011.300193.

Shelton RC, Chambers DA, Glasgow RE. An Extension of RE-AIM to Enhance Sustainability: Addressing Dynamic Context and Promoting Health Equity Over Time. Front Public Health. 2020;8:134. https://doi.org/10.3389/fpubh.2020.00134.

Richards NK, Morley CP, Wojtowycz MA, Bevec E, Levandowski BA. Use of open-text responses to recode categorical survey data on postpartum contraception use among women in the United States: A mixed-methods inquiry of Pregnancy Risk Assessment Monitoring System data. PLOS Med. 2022;19:e1003878. https://doi.org/10.1371/journal.pmed.1003878.

Moullin JC. Advancing the pragmatic measurement of sustainment: a narrative review of measures, vol. 18; 2020.

Saldana L. The stages of implementation completion for evidence-based practice: protocol for a mixed methods study. Implement Sci. 2014;9:43. https://doi.org/10.1186/1748-5908-9-43.

Palinkas LA, Chou C-P, Spear SE, Mendon SJ, Villamar J, Brown CH. Measurement of sustainment of prevention programs and initiatives: the sustainment measurement system scale. Implement Sci. 2020;15:71. https://doi.org/10.1186/s13012-020-01030-x.

Kilbourne AM, Goodrich DE, Miake-Lye I, Braganza MZ, Bowersox NW. Quality Enhancement Research Initiative Implementation Roadmap: Toward Sustainability of Evidence-based Practices in a Learning Health System. Med Care. 2019;57:S286–93. https://doi.org/10.1097/MLR.0000000000001144.

Rycroft-Malone J, Harvey G, Kitson A, McCormack B, Seers K, Titchen A. Getting evidence into practice: ingredients for change. Nurs Stand. 2002;16:38–43.

Clancy C. Creating World-Class Care and Service for Our Nation’s Finest: How Veterans Health Administration Diffusion of Excellence Initiative Is Innovating and Transforming Veterans Affairs Health Care. Perm J. 2019:23. https://doi.org/10.7812/TPP/18.301.

Vega R, Jackson GL, Henderson B, Clancy C, McPhail J, Cutrona SL, et al. Diffusion of Excellence: Accelerating the Spread of Clinical Innovation and Best Practices across the Nation’s Largest Health System. Perm J. 2019:23. https://doi.org/10.7812/TPP/18.309.

Nevedal AL, Reardon CM, Jackson GL, Cutrona SL, White B, Gifford AL, et al. Implementation and sustainment of diverse practices in a large integrated health system: a mixed methods study. Implement Sci Commun. 2020;1:61. https://doi.org/10.1186/s43058-020-00053-1.

Jackson GL, Damschroder LJ, White BS, Henderson B, Vega RJ, Kilbourne AM, et al. Balancing reality in embedded research and evaluation: Low vs high embeddedness. Learn Health Syst. 2021. https://doi.org/10.1002/lrh2.10294.

Hunter SB, Han B, Slaughter ME, Godley SH, Garner BR. Predicting evidence-based treatment sustainment: results from a longitudinal study of the Adolescent-Community Reinforcement Approach. Implement Sci. 2017;12:75. https://doi.org/10.1186/s13012-017-0606-8.

Stanick CF, Halko HM, Nolen EA, Powell BJ, Dorsey CN, Mettert KD, et al. Pragmatic measures for implementation research: development of the Psychometric and Pragmatic Evidence Rating Scale (PAPERS). Transl Behav Med. 2021;11:11–20. https://doi.org/10.1093/tbm/ibz164.

Azam SA, Myers L, Fields BKK, Demirjian NL, Patel D, Roberge E, et al. Coronavirus disease 2019 (COVID-19) pandemic: Review of guidelines for resuming non-urgent imaging and procedures in radiology during Phase II. Clin Imaging. 2020;67:30–6. https://doi.org/10.1016/j.clinimag.2020.05.032.

Hsieh H-F, Shannon SE. Three Approaches to Qualitative Content Analysis. Qual Health Res. 2005;15:1277–88. https://doi.org/10.1177/1049732305276687.

Saldana J. The coding manual for qualitative researchers. 2nd ed: SAGE; 2015.

Goodman RM, Steckler A. A framework for assessing program institutionalization. Knowl Soc Int J Knowl Transfe. 1989;2:57–71.

Zakumumpa H, Kwiringira J, Rujumba J, Ssengooba F. Assessing the level of institutionalization of donor-funded anti-retroviral therapy (ART) programs in health facilities in Uganda: implications for program sustainability. Glob Health Action. 2018:11. https://doi.org/10.1080/16549716.2018.1523302.

Rogers EM. A Prospective and Retrospective Look at the Diffusion Model. J Health Commun. 2004;9:13–9. https://doi.org/10.1080/10810730490271449.

Lundblad JP. A review and critique of rogers’ diffusion of innovation theory as it applies to organizations. Organ Dev J. 2003;21:50–64.

Moullin JC, Sklar M, Ehrhart MG, Green A, Aarons GA. Provider REport of Sustainment Scale (PRESS): development and validation of a brief measure of inner context sustainment. Implement Sci. 2021;16:86. https://doi.org/10.1186/s13012-021-01152-w.

Acknowledgements

The opinions expressed in this article are those of the authors and do not represent the views of the Veterans Health Administration (VHA) or the US Government. The authors would like to thank Ms. Elizabeth Orvek, MS, MBA for help programming the survey and Ms. Jennifer Lindquist, MS and Mr. Rich Evans, MS for statistical analysis support. In addition, the authors want to express their sincere gratitude to the VHA employees who participated in this evaluation and shared their experiences with us.

Funding

This evaluation was funded by the Veterans Health Administration (VHA) Quality Enhancement Research Initiative (QUERI) [PEC-17-002] with additional funding subsequently provided by the VHA Office of Rural Health through the Diffusion of Excellence (DoE).

Author information

Contributions

All authors were engaged in the national evaluation of the Diffusion of Excellence (DoE). BH and RV lead the DoE and collaborate with the evaluation team. GJ, LD, SC, AG, HK, and GF designed and supervised the overall evaluation. MA, BW, and KDL provided project management support. LD, CR, AN, and MOW led data collection, analysis, and manuscript writing for this aspect of the evaluation. All authors were involved in the critical revision of the manuscript for intellectual content. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Per regulations outlined in VHA Program Guide 1200.21, this evaluation has been designated a non-research quality improvement activity.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Cohort 1 – 4 Practice Descriptions. This file provides descriptions of each of the Promising Practices that were included in this evaluation.

Additional file 2.

Sustainment Survey. This file is the survey that was piloted with participants in this evaluation.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Reardon, C.M., Damschroder, L., Opra Widerquist, M.A. et al. Sustainment of diverse evidence-informed practices disseminated in the Veterans Health Administration (VHA): initial development and piloting of a pragmatic survey tool. Implement Sci Commun 4, 6 (2023). https://doi.org/10.1186/s43058-022-00386-z

DOI: https://doi.org/10.1186/s43058-022-00386-z