Abstract

Low-dose computed tomography (LDCT) has gained increasing attention owing to its crucial role in reducing radiation exposure in patients. However, LDCT-reconstructed images often suffer from significant noise and artifacts, negatively impacting the radiologists’ ability to accurately diagnose. To address this issue, many studies have focused on denoising LDCT images using deep learning (DL) methods. However, these DL-based denoising methods have been hindered by the highly variable feature distribution of LDCT data from different imaging sources, which adversely affects the performance of current denoising models. In this study, we propose a parallel processing model, the multi-encoder deep feature transformation network (MDFTN), which is designed to enhance the performance of LDCT imaging for multisource data. Unlike traditional network structures, which rely on continual learning to process multitask data, the approach can simultaneously handle LDCT images within a unified framework from various imaging sources. The proposed MDFTN consists of multiple encoders and decoders along with a deep feature transformation module (DFTM). During forward propagation in network training, each encoder extracts diverse features from its respective data source in parallel and the DFTM compresses these features into a shared feature space. Subsequently, each decoder performs an inverse operation for multisource loss estimation. Through collaborative training, the proposed MDFTN leverages the complementary advantages of multisource data distribution to enhance its adaptability and generalization. Numerous experiments were conducted on two public datasets and one local dataset, which demonstrated that the proposed network model can simultaneously process multisource data while effectively suppressing noise and preserving fine structures. The source code is available at https://github.com/123456789ey/MDFTN.


Introduction

Computed tomography (CT) is a widely used medical imaging technique in clinical practice. Owing to its fast scanning speed, high image resolution, and ability to display fine lesions or tissue structures, it plays an increasingly important role in diagnosing lung diseases, neurological lesions, and cardiovascular abnormalities [1]. However, numerous clinical studies have demonstrated that superimposed X-ray scans can cause radiation damage to patients’ normal tissues and increase the risk of cancer [2,3,4,5]. The reduction in radiation dose during X-ray scanning has garnered significant attention from scholars. Various approaches, such as reducing the tube current and tube voltage and increasing the helical pitch, have been explored to lower the radiation dose of X-ray CT. However, these methods often produce images with significant noise and artifacts. Therefore, the development of low-dose CT (LDCT) imaging methods to enhance image quality is imperative [6].

In general, a practical method for enhancing the overall image quality is to establish a reasonable model that accurately simulates noisy anatomy. Prior to the widespread adoption of deep learning (DL) in low-dose CT imaging, researchers primarily concentrated on three approaches to noise reduction: sinogram-domain filtering methods [7,8,9,10], iterative reconstruction methods [11,12,13,14,15,16], and post-processing methods [17,18,19,20]. First, to restore the local structure of the sinogram domain, a distinct local filter kernel is generated for each input measurement. This approach aims to preserve edge information while reducing noise in the reconstructed image. Examples of such filters include the noise-adaptive bilateral [9] and structure-adaptive sinogram filters [10]. Iterative reconstruction methods are widely used [11, 12]. These methods combine prior information from the image domain with the data characteristics of the projection domain to reconstruct high-quality CT images. For example, total variation regularization [13] utilizes the L1 norm of the image gradient as an image constraint, which effectively suppresses noise in LDCT image reconstruction. To enhance the preservation of edge information, Yu et al. [14] introduced a method that utilizes the L0 norm as a regularization constraint and employs variable separation and alternate directions to solve nonconvex optimization problems. Other approaches such as dictionary learning methods [16] aim to extract local structural information from image patches and achieve high-quality image reconstruction. In comparison to the previous two methods, post-processing methods [19, 20] can be directly applied to reconstructed CT images without being influenced by the equipment or scanning system. Although these methods have been extensively used in various clinical imaging scenarios, there remain limitations in terms of detailed reconstructions.

With the increasing popularity of artificial intelligence technology, reconstruction methods using DL have been widely employed in LDCT and have demonstrated remarkable imaging performance [21,22,23,24,25,26,27,28,29,30,31,32]. Chen et al. [25] introduced a residual encoder-decoder convolutional neural network (RED-CNN) that demonstrates the potential of DL to reduce image noise related to anatomical structures. Shan et al. [27] proposed a modularized adaptive processing neural network (MAP-NN) for process-oriented image denoising. Du et al. [28] developed a modularized iterative network framework to address the issues of detail loss and vanishing gradients in MAP-NNs. Other researchers have explored self-supervised and unsupervised methods for LDCT image noise suppression [33,34,35,36]. To address the domain-shift image-denoising problem, Wang et al. [37] utilized noise estimation and transfer learning to propose a domain-adaptive denoising network, which showed promising results in addressing the issue of varying data distributions in clinical LDCT. Yang et al. [38] introduced a hypernetwork-based, physics-driven, personalized federated learning (FL) approach (HyperFed) to address domain shifts and privacy issues. Li et al. [39] adopted a Gaussian mixture model (GMM) to quantify the noise distribution in CT images. Based on this quantification, they proposed an unsupervised GMM-UNNET method to mitigate the issues related to noise distribution drift arising from varying scanning protocols. Li et al. [40] introduced a generative adversarial network with noise-encoding transfer learning, which effectively generates paired clinical LDCT images to address the domain-adaptation issue. Moreover, several researchers have integrated DL with iterative reconstruction techniques to further enhance the quality of LDCT images [41,42,43,44,45]. Zhang et al. [44] introduced a comprehensive learning-enabled adversarial reconstruction method for enhancing the structural fidelity and visual perception of LDCT images. Hu et al. [45] combined iterative optimization with DL using residual learning to improve the convergence and versatility of LDCT reconstruction.

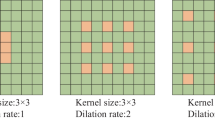

Although these methods can enhance the quality of LDCT images with uncertain noise, each is trained on a specific dataset and has a limited ability to simultaneously process LDCT images from multiple sources. CT devices from various manufacturers employ different low-dose scanning protocols, hardware devices, and data processing procedures, resulting in diverse distributions of LDCT images, as illustrated in Fig. 1. Consequently, researchers typically conduct multisource low-dose CT denoising with continual learning or domain-adaptive learning [46, 47]; however, this results in lower efficiency or catastrophic forgetting. Despite the extensive use of DL models in medical imaging, the inherent scarcity and imbalance of medical datasets significantly hinder their performance. Therefore, it is crucial to design a learning-once model that can effectively handle multisource LDCT images and address the challenges associated with small datasets. The objective of this study is to develop a robust model that handles multisource datasets simultaneously and surpasses the performance of individual models trained through continual learning.

In this study, a parallel-processing model called multi-encoder deep feature transformation network (MDFTN) is introduced, which is designed to denoise multisource, low-dose CT images. First, a multibranch parallel encoder is utilized to extract diverse features from multisource datasets. A deep feature transformation module (DFTM) then compresses these features into a shared feature space, enabling the mutual enhancement of features from different datasets. Finally, each decoder performs an inverse operation for multisource loss estimation and generates multisource LDCT images. During backward propagation in network training, joint loss functions are employed to calculate the gradient of each layer and update all the network weights accordingly. Through collaborative training, the proposed MDFTN leverages the complementary advantages of multisource data distribution to enhance its adaptability and generalization.

The remainder of this article is organized as follows. The Methods section presents a thorough description of the method employed, including the network framework and DFTM. In the Results section, the effectiveness of the proposed method is assessed using both multisource simulation and real-world clinical datasets; the experimental results are detailed and the relevant ablation experiments are discussed. Finally, a comprehensive discussion and conclusions are presented in the Discussion section.

Methods

CT denoising model

Regarding deep-learning-based LDCT denoising methods, the denoising model is considered a mapping function that transforms the LDCT input into a normal-dose CT (NDCT) output. Let \(x \in LDCT^{{H{ \times }W}}\) and \(y \in NDCT^{{H{ \times }W}}\) represent the LDCT and NDCT images, respectively, and \(W\) and \(H\) denote the width and height of the image matrix, respectively. Thus, the relationship between the two can be expressed as follows:

Here, \(m\) denotes a complex degradation process that primarily involves quantum and electronic noise, among other factors. CT denoising is therefore treated as an inverse problem, in which a deep network model is used to construct a mapping \(f\) that, given an LDCT image, estimates the NDCT image. This relationship is represented by Eq. (2).

In theory, DL-based methods have the potential to enhance denoising performance by extracting more comprehensive feature distributions from the network model. This allows the estimation of network parameters using DL techniques.
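The two relations referred to as Eqs. (1) and (2) can be sketched in a commonly used form. This is an illustrative reading based only on the symbols defined above (\(x\), \(y\), \(m\), and \(f\)); the squared L2 norm in the second line is an assumption and is not necessarily the exact expression used in this study.

```latex
% Assumed form of Eq. (1): the LDCT image is a degraded version of the NDCT image.
x = m(y)

% Assumed form of Eq. (2): the network f approximates the inverse of m
% by minimizing a discrepancy between its output and the NDCT image.
\hat{f} = \arg\min_{f} \left\| f(x) - y \right\|_{2}^{2}
```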

Network framework overview

Inspired by the excellent performance of the networks in refs. [48,49,50], a novel MDFTN with collaborative training is proposed for denoising multisource low-dose CT images, as illustrated in Fig. 2. The proposed MDFTN consists of multiple encoders and decoders along with a DFTM, each of which is responsible for a specific task. First, during forward propagation in network training, low-dose images from multisource datasets are fed into CNN-based encoders. These encoders independently extract diverse levels of image features in parallel from their respective data sources. Because the encoders extract features from all sources simultaneously, the risk of forgetting previously seen data is mitigated. This capability is particularly beneficial when dealing with multisource datasets, as it addresses the challenges associated with continual learning or domain-adaptive learning and enhances the efficiency and effectiveness of data processing. Second, the DFTM combines and compresses the various features into a shared feature space. This shared feature space allows the extraction of consistent features from different data sources, thereby complementing the features of different datasets. Finally, each decoder performs an inverse operation for multisource loss estimation to generate distinct high-resolution CT images from the shared features. During backward propagation in network training, joint loss functions are employed to calculate the gradient of each layer and update all the network weights accordingly. Through collaborative training, the proposed MDFTN leverages the complementary advantages of multisource data distribution to enhance its adaptability and generalization.

Encoders and decoders

As shown in Fig. 2, multisource image reconstruction tasks are performed with multiple parallel encoders. Each image is processed by its corresponding DL mapping function. The encoder and decoder are modified from the U-net network [51] and perform two downsampling and two upsampling operations. The encoders share the same network structure but receive different inputs, namely LDCT images from the multisource datasets. Here, residual blocks (Fig. 3a) are used instead of the cascaded convolutional layers of the classical U-net network [51]. A 1 \(\times\) 1 convolution is adopted to adaptively fuse a series of features at different levels, and a 3 \(\times\) 3 convolution is exploited to extract global information. To expedite network training, the encoders are designed to simultaneously learn and reconstruct information. It is assumed that the degraded LDCT images of the different sources are \(\begin{pmatrix} x_{a}^{1}, x_{a}^{2}, \ldots, x_{a}^{m} \\ x_{b}^{1}, x_{b}^{2}, \ldots, x_{b}^{m} \\ \cdots \\ x_{n}^{1}, x_{n}^{2}, \ldots, x_{n}^{m} \end{pmatrix} \in R^{H \times W}\) and that the features extracted by the encoders are \(\begin{pmatrix} F_{a} \\ F_{b} \\ \cdots \\ F_{n} \end{pmatrix} \in R^{\frac{H}{4} \times \frac{W}{4} \times 256}\). The multi-parallel coding task is defined as Eq. (4), where \(\theta_{1}\), \(\theta_{2}\), …, \(\theta_{n}\) are the parameters of encoders \(E_{1}\), \(E_{2}\), …, \(E_{n}\).
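The feature-map sizes stated above can be checked with a few lines of shape bookkeeping. The sketch below assumes that each of the two downsampling steps halves both spatial dimensions, which is consistent with the \(\frac{H}{4} \times \frac{W}{4} \times 256\) size given in the text; the function names are hypothetical and the intermediate channel widths are not modeled.

```python
def encoder_feature_shape(height, width, num_downsamplings=2, channels=256):
    """Return (H', W', C) for the output feature map of one encoder.

    Assumption: every downsampling step halves height and width.
    """
    factor = 2 ** num_downsamplings
    if height % factor or width % factor:
        raise ValueError("height and width must be divisible by %d" % factor)
    return (height // factor, width // factor, channels)


def all_encoder_shapes(source_names, height, width):
    """One feature shape per data source; all encoders share one structure."""
    return {name: encoder_feature_shape(height, width) for name in source_names}


# Full 512 x 512 slices from three sources (labels "a", "b", "c" are illustrative).
shapes = all_encoder_shapes(["a", "b", "c"], 512, 512)
print(shapes["a"])                    # (128, 128, 256)
# An 80 x 80 training patch.
print(encoder_feature_shape(80, 80))  # (20, 20, 256)
```

Because every encoder produces a feature map of the same size, the features from all sources can be concatenated along the channel axis before fusion.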

During network training, downsampling is achieved through an average pooling operation, whereas upsampling is achieved through a transposed convolution operation. During the decoding process, deep features from different image encoders are mapped and connected to the middle layer of the corresponding decoder, as shown in Fig. 2. Here, the decoders incorporate spatially enhanced kernel generation (SEKG) modules [52], which perform spatial attention using a simple 3 \(\times\) 3 depthwise separable convolution. In addition, they include channel attention, which utilizes average pooling and convolution mapping layers to generate the information weight \(\Theta\). The dimensions of \(\Theta\) are \((n \times c \times k \times k) \times h \times w\), where \(n\), \(c\), \(k\), \(h\), and \(w\) represent the batch size, number of channels, convolution kernel size, height, and width, respectively. The unfold operation extracts sliding local regions \(F_{unfold} \in \mathbb{R}^{c \times k^{2} \times h \times w}\) from the input feature \(x\) with a patch size of \(k = 3\) and a stride of \(s = 1\). The regional feature \(F_{unfold}\) is then adjusted using the spatial-channel weight \(\Theta\). Many researchers have demonstrated that attention mechanisms [53,54,55] can effectively adjust local information by identifying key features in an input image and assigning them higher weights. This enables the model to retain finer details. Therefore, the SEKG module [52] is added after the upsampling process to learn and utilize the features that are most useful for denoising, thereby enhancing the image quality. Shortcut connections are used to compensate for the information distortion caused by upsampling. Additionally, a parametric rectified linear unit (PReLU) [56] is introduced after each convolution to expedite the convergence of the network.
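To make the pooling and unfold operations concrete, the following pure-Python sketch works on a single-channel image stored as a nested list. Several details are assumptions made for illustration and are not taken from the SEKG reference: the 2 \(\times\) 2 pooling window, the zero padding of one pixel (chosen so that the unfolded maps keep the \(h \times w\) size stated above), and the summation over the \(k^{2}\) axis after weighting.

```python
def avg_pool_2x2(image):
    """2x2 average pooling with stride 2 (window size is an assumption)."""
    rows, cols = len(image), len(image[0])
    return [
        [
            (image[r][c] + image[r][c + 1]
             + image[r + 1][c] + image[r + 1][c + 1]) / 4.0
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


def unfold_3x3(image):
    """Gather the 3x3 neighbourhood of every pixel (stride 1, zero padding 1).

    Returns k*k = 9 maps, each with the same h x w size as the input.
    """
    rows, cols = len(image), len(image[0])
    patches = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            patches.append([
                [
                    image[r + dr][c + dc]
                    if 0 <= r + dr < rows and 0 <= c + dc < cols
                    else 0.0
                    for c in range(cols)
                ]
                for r in range(rows)
            ])
    return patches


def apply_spatial_weights(patches, weights):
    """Weight each unfolded map element-wise, then sum over the k*k axis."""
    rows, cols = len(patches[0]), len(patches[0][0])
    return [
        [sum(p[r][c] * w[r][c] for p, w in zip(patches, weights))
         for c in range(cols)]
        for r in range(rows)
    ]


image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
print(avg_pool_2x2(image))      # [[2.5, 4.5], [10.5, 12.5]]
print(len(unfold_3x3(image)))   # 9
```

With a weight map that is one at the centre position and zero elsewhere, `apply_spatial_weights` returns the input unchanged; learned weights instead emphasize or suppress individual neighbours at each pixel.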

DFTM

In recent years, researchers have designed feature fusion modules that integrate features from different sources to exploit the potential value of data more comprehensively. Zhang et al. [57] proposed a fast and flexible denoising network that integrates noise image estimation and noise level estimation to eliminate intricate noise patterns effectively. Gao et al. [58] introduced a multistream denoising network that incorporates a multiscale fusion module to effectively capture noise across various scales. Zhang et al. [50] proposed a transformer-integrated multiencoder network that uses a feature fusion module to compress and fuse features from image, prior, and transformer encoders to eliminate finite-angle artifacts. Based on the insights of these researchers, the DFTM was designed. As depicted in Fig. 2, the DFTM is used to fuse and compress diverse features. First, all the features are concatenated at the middle layer, \(\left[ F_{a}, F_{b}, \cdots, F_{n} \right] \in R^{\frac{H}{4} \times \frac{W}{4} \times (256 + 256 + \cdots + 256)}\). Subsequently, the intermediate features are condensed into shared features \(F_{share} \in R^{\frac{H}{4} \times \frac{W}{4} \times 256}\) through convolutional layers, and feature transformation modules (FTMs) of varying depths extract consistent features from the different sources to obtain shared features at different image levels. This facilitates the mutual complementation of features between different datasets.

Previous studies have indicated that incorporating residual structures [59,60,61] into models can improve the model performance while minimizing information loss. In this study, to transform the features extracted from a multisource dataset by the encoders into shared features, the residual structures are modified and implemented in the experiments. For the FTM (Fig. 3b), four convolution filters are used, and a 1 \(\times\) 1 convolution is used to fuse the features after global average pooling. A sigmoid activation function is used to constrain the feature weights to the range [0, 1], thereby adjusting the importance of the input features. All the channels have a size of 256. To avoid information loss, input features from the original LDCT image are connected to the output. The processing of the DFTM is described by the following formulas:

where \(F_{share}\) represents the shared features, and \(C\) and cat represent the 3 \(\times\) 3 convolution and concatenation operations, respectively. \(F_{CP}\) consists of two 3 \(\times\) 3 convolutions, followed by PReLU, to extract global residual information. \(C_{1}\) denotes a 1 \(\times\) 1 convolution layer and \(PC_{1}\) denotes PReLU followed by a 1 \(\times\) 1 convolution layer. \(AP\) represents average pooling, \(\sigma\) denotes the sigmoid activation function, and \(\otimes\) represents element-wise multiplication. \(F_{DM}\) refers to the final output.
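The gating step described for the FTM (global average pooling, 1 \(\times\) 1 convolutions, sigmoid, element-wise multiplication, and a skip connection) can be illustrated with a small pure-Python sketch. It is not the authors' implementation: the order of the layers, the fixed PReLU slope, and the function names are assumptions. The sketch relies on the fact that a 1 \(\times\) 1 convolution applied to a globally pooled feature reduces to a matrix-vector product over channels.

```python
import math


def prelu(value, slope=0.25):
    """PReLU with a fixed, illustrative slope for negative inputs."""
    return value if value >= 0 else slope * value


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def mat_vec(matrix, vector):
    """1x1 convolution on a pooled (1 x 1 x C) feature as a matrix-vector product."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def channel_gate(feature, w_first, w_second):
    """Rescale each channel of `feature` (a list of 2-D maps) by a learned gate.

    Assumed order: average pooling -> 1x1 conv -> PReLU -> 1x1 conv -> sigmoid.
    """
    # Global average pooling: one scalar per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    hidden = [prelu(h) for h in mat_vec(w_first, pooled)]
    gates = [sigmoid(g) for g in mat_vec(w_second, hidden)]  # each in (0, 1)
    # Element-wise scaling by the gate plus a skip connection from the input.
    return [
        [[px * gate + px for px in row] for row in ch]
        for ch, gate in zip(feature, gates)
    ]


# One channel, zero weights: the gate equals sigmoid(0) = 0.5.
print(channel_gate([[[2.0, 4.0]]], [[0.0]], [[0.0]]))  # [[[3.0, 6.0]]]
```

The sigmoid keeps every gate strictly between 0 and 1, so the gated branch can only attenuate a channel, while the skip connection preserves the original information.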

Loss function

Previous research has indicated that selecting an appropriate loss function can enhance the capability of the model to accurately capture the feature distribution of the data. Moreover, the loss function serves as a metric for assessing the training progress of the model and provides feedback for fine-tuning the predictive parameters and hyperparameters to minimize the loss function and achieve more precise predictions. The least absolute deviation (L1) loss function calculates the absolute difference between the estimated and true values and is insensitive to outliers, which is beneficial for maintaining the model stability when there are exceptional values. The structural similarity index measurement (SSIM) [62] measures the similarity of images from three perspectives: luminance, contrast, and structure. The higher the SSIM value, the more similar the two images. In image-denoising tasks, the SSIM loss function can better preserve the details and texture information of an image, and improve the quality of the reconstructed image. Therefore, in this study, L1 and SSIM have been selected to construct a composite loss function. The formulation of the loss function is expressed in Eq. (8).

where \(I_{pred}^{a}\), \(I_{pred}^{b}\), …, \(I_{pred}^{n}\) indicate the predicted denoising results from multiple sources, and \(I_{ref}^{a}\), \(I_{ref}^{b}\), …, \(I_{ref}^{n}\) represent the NDCT reference images. \(\alpha\) and \(\lambda\) are the hyperparameters of the different loss terms, and \(L_{SSIM}\) is the SSIM loss function.
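A composite L1 and SSIM loss for a single source can be sketched as follows. This is a simplified illustration rather than the study's exact Eq. (8): the combination \(\alpha L_{1} + \lambda (1 - \mathrm{SSIM})\) is an assumed form, the SSIM is computed from whole-image statistics instead of a sliding window, images are flattened lists of intensities scaled to [0, 1], and the defaults \(\alpha = 1\) and \(\lambda = 0.001\) are the values reported in the experimental details.

```python
def l1_loss(pred, ref):
    """Mean absolute difference between two flattened images."""
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(pred)


def global_ssim(pred, ref, data_range=1.0):
    """SSIM from whole-image statistics (no sliding window; a simplification)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    n = len(pred)
    mu_p = sum(pred) / n
    mu_r = sum(ref) / n
    var_p = sum((p - mu_p) ** 2 for p in pred) / n
    var_r = sum((r - mu_r) ** 2 for r in ref) / n
    cov = sum((p - mu_p) * (r - mu_r) for p, r in zip(pred, ref)) / n
    numerator = (2 * mu_p * mu_r + c1) * (2 * cov + c2)
    denominator = (mu_p ** 2 + mu_r ** 2 + c1) * (var_p + var_r + c2)
    return numerator / denominator


def composite_loss(pred, ref, alpha=1.0, lam=0.001):
    """Assumed form: alpha * L1 + lam * (1 - SSIM)."""
    return alpha * l1_loss(pred, ref) + lam * (1.0 - global_ssim(pred, ref))


reference = [0.1, 0.4, 0.6, 0.9]
noisy = [0.2, 0.3, 0.7, 0.8]
print(composite_loss(reference, reference))  # approximately zero for identical images
print(composite_loss(noisy, reference) > 0)  # True
```

With \(\lambda\) three orders of magnitude smaller than \(\alpha\), the L1 term dominates the loss value and the SSIM term acts as a mild structural regularizer.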

In this study, the design of the loss function is inspired by the training process of vertical FL [63], whose objective function is represented by Eq. (9).

where \(L = \sum\limits_{k = 1}^{K} L_{k}\), \(K\) is the total number of institutions, and \(x_{i}^{k}\) and \(y_{i}^{k}\) represent the LDCT and NDCT images of institution \(k\), respectively. Each institution has a model \(\{ f_{w^{k}} \}_{k = 1}^{K}\), and \(w^{k}\) is the corresponding weight. Vertical FL solves for the local parameters \(w^{1}, w^{2}, \cdots, w^{K}\) according to the global loss function \(L\) until the network converges. MDFTN also uses a joint loss function to solve for the parameter weights. Therefore, the total loss function \(L_{total}\) used in this study is given by Eq. (10).

where \(n\) is the total number of data sources and \(w^{n}\) is the corresponding weight for each data source.
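The idea of a joint loss over all sources can be sketched in a few lines. The example reads the joint loss as a plain sum of one loss term per source, which is an assumption about the form of Eq. (10); the mean absolute error stands in for the per-source loss, and the function names and source labels are hypothetical.

```python
def mean_absolute_error(pred, ref):
    """Stand-in per-source loss on flattened images."""
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(pred)


def joint_loss(predictions, references, loss_fn=mean_absolute_error):
    """Sum one loss term per data source (assumed form of the joint loss).

    `predictions` and `references` map a source label to a flattened image.
    """
    return sum(loss_fn(predictions[name], references[name]) for name in references)


predictions = {"a": [1.0, 2.0], "b": [0.0]}
references = {"a": [1.0, 4.0], "b": [1.0]}
print(joint_loss(predictions, references))  # 2.0
```

Because a single scalar is backpropagated, the gradient reaches every encoder and decoder branch as well as the shared module in one update, which is what allows the sources to be trained collaboratively rather than sequentially.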

Results

Dataset

Multisource synthesized clinical dataset

In the experiments, three datasets were utilized to validate the proposed method: the AAPM-Mayo [31], private synthetic clinical [40], and RPLHR-CT [64] datasets. These datasets were acquired using a Siemens CT scanner, a ScintCare CT128 scanner (Minfound Medical Co. Ltd., China), and Philips CT devices, respectively. The size of each image is 512 \(\times\) 512. To confirm the effectiveness of the proposed network, 6000 (2000 \(\times\) 3) pairs of normal-dose and corresponding low-dose images were randomly selected from the three LDCT datasets. The training, validation, and testing sets consisted of 4800 (1600 \(\times\) 3), 600 (200 \(\times\) 3), and 600 (200 \(\times\) 3) pairs, respectively. To test the robustness of the model, a new private dataset with high and low noise levels was added; it was synthesized from NDCT images of the AAPM-Mayo dataset [31] using the method described in ref. [65] and was used only for testing. From this dataset, 200 pairs of images with high and low noise levels were selected randomly. In addition, to assess the behavior of the model under domain shift, an external, independent synthesized dataset was employed, which was used only for testing and not for training. This dataset consists of paired synthesized CT images obtained using the method in ref. [65] from Siemens CT images with a slice thickness of 5 mm and a tube current of more than 200 mA. For the domain-shift test, 200 pairs were randomly selected.
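The split sizes above can be reproduced with a short sketch. It assumes a simple random split of 2000 pair indices per source with a fixed seed; whether the actual split was made per image or per patient is not stated, and the source labels are illustrative.

```python
import random


def split_pairs(pair_ids, n_train=1600, n_val=200, n_test=200, seed=0):
    """Randomly split image-pair identifiers into train/validation/test sets."""
    ids = list(pair_ids)
    if len(ids) != n_train + n_val + n_test:
        raise ValueError("split sizes must add up to the number of pairs")
    random.Random(seed).shuffle(ids)
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


sources = ["AAPM-Mayo", "Minfound-synthetic", "RPLHR-CT"]
splits = {name: split_pairs(range(2000)) for name in sources}

# Total number of pairs in the training, validation, and testing sets.
totals = [sum(len(splits[name][i]) for name in sources) for i in range(3)]
print(totals)  # [4800, 600, 600]
```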

Multisource real clinical dataset

In addition to verifying the effectiveness of the network on the synthesized datasets, validation was also conducted using real clinical LDCT images (the Siemens and Minfound clinical datasets) acquired from the Siemens CT scanner and the ScintCare CT128 scanner. These images were used only for testing and were not involved in the training process. The Siemens clinical dataset comprised 10 patients with a total of 2028 LDCT images. The tube current and slice thickness were 65 mA and 1.5 mm, respectively. For the Minfound clinical dataset, 584 LDCT images were obtained from two patients with a tube voltage of 120 kVp, tube currents of 80 mA and 40 mA, and slice thicknesses of 1.25 mm and 2.5 mm. The details of the real clinical datasets used in the experiment are listed in Table 1.

Experimental details

During the training process, 80 \(\times\) 80 patches were randomly cropped from the 512 \(\times\) 512 LDCT images. The mini-batch size was set to 16. The Adam [66] algorithm was utilized to optimize the network during training. The learning rate was set to 0.0001 and was multiplied by a drop rate of 0.5 every 20 epochs. The hyperparameters \(\alpha\) and \(\lambda\) of the loss function were set to 1 and 0.001, respectively. The network was trained for 100 iterations. The entire experiment was conducted using Python with the PyTorch framework on an NVIDIA TITAN V GPU.
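The step learning-rate schedule and the random patch cropping described above can be sketched without any framework. The function names are hypothetical, and cropping the LDCT and NDCT images at the same location is an assumption that follows from the use of paired training data.

```python
import random


def learning_rate(epoch, base_lr=1e-4, drop=0.5, step=20):
    """Step decay: multiply the learning rate by `drop` every `step` epochs."""
    return base_lr * drop ** (epoch // step)


def random_crop_origin(image_size=512, patch_size=80, rng=random):
    """Top-left corner of a patch that fits entirely inside the image."""
    top = rng.randint(0, image_size - patch_size)
    left = rng.randint(0, image_size - patch_size)
    return top, left


def crop(image, top, left, patch_size=80):
    """Extract a square patch from a 2-D list."""
    return [row[left:left + patch_size] for row in image[top:top + patch_size]]


# Learning rate at the start of epochs 0, 20, and 40.
print([learning_rate(epoch) for epoch in (0, 20, 40)])

# Crop the same region from a paired LDCT/NDCT image (dummy data here).
rng = random.Random(0)
ldct = [[0.0] * 512 for _ in range(512)]
ndct = [[1.0] * 512 for _ in range(512)]
top, left = random_crop_origin(rng=rng)
ldct_patch, ndct_patch = crop(ldct, top, left), crop(ndct, top, left)
print(len(ldct_patch), len(ldct_patch[0]))  # 80 80
```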

The network was compared with five neural network algorithms: RED-CNN [25], WGAN-VGG [26], WGAN-RAM [67], MAPNN [27], and MINFMCNN [28]. To provide a clearer understanding of these comparison algorithms as well as the proposed network, Table 2 presents the operational details, parameters, and runtime of each test example for each method. The runtime of a single image is the ratio of the total time to the number of images. The total time is the duration required to process the testing datasets (600 (200 \(\times\) 3) images) using the parameters of the trained model.

Given that the proposed network encodes multisource data in a parallel manner, it requires more parameters than the other methods. However, MDFTN has a shorter inference time because multisource data are processed simultaneously. To ensure a fair comparison, all the networks utilized the same training and test datasets as the proposed model. To quantitatively analyze the image quality after denoising, three quantitative evaluation indices were employed: peak signal-to-noise ratio (PSNR), structural similarity index measurement (SSIM), and root mean square error (RMSE). PSNR measures the denoising effect, SSIM measures the structural similarity between two images, and RMSE measures the pixel-wise error with respect to the reference. The larger the PSNR and SSIM values and the smaller the RMSE, the closer the results are to the ground truth images, indicating that higher-quality images are produced.
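For reference, RMSE and PSNR can be computed as in the following sketch, which operates on flattened images. The `data_range` argument (the span of valid intensities) is an assumption that must be chosen to match how the images are normalized; the value used in this study is not stated.

```python
import math


def rmse(pred, ref):
    """Root mean square error between two flattened images."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(pred))


def psnr(pred, ref, data_range):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = rmse(pred, ref)
    if error == 0:
        return float("inf")
    return 20.0 * math.log10(data_range / error)


reference = [0.0, 0.5, 1.0, 0.5]
denoised = [0.1, 0.5, 0.9, 0.5]
print(rmse(denoised, reference))
print(psnr(denoised, reference, data_range=1.0))
```

Since PSNR is a monotonically decreasing function of RMSE for a fixed data range, the two indices always rank methods identically on the same image; SSIM adds complementary structural information.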

Experimental results

Results of multisource synthesized datasets

This subsection presents the visual and quantitative results of the different methods on multiple source datasets. Figures 4, 5 and 6 show the visual results for each network and the corresponding quantitative results (PSNR/SSIM). Orange numbers indicate the highest quantitative indices. Figure 4 displays the visual results and enlarged views of the proposed network (MDFTN) and the comparison algorithms on the AAPM-Mayo synthesized dataset. Two representative lesion images were selected, and the corresponding zoomed-in regions of interest (ROIs) (indicated by red rectangles) were extracted from the predicted results and true images. The yellow circles denote metastases, whereas the blue and green arrows denote fine structures. From Fig. 4b1-b8, it is evident that all the methods are capable of reducing the noise level of the images and enhancing the distinction of metastasis to some extent. However, in the edge region indicated by the green arrow, the proposed algorithm demonstrates clear advantages in preserving the details. In cases where the CT images contain more tissue structures, such as abdominal CT images, the LDCT image in Fig. 4c2 exhibits more complex noise and artifacts than that in Fig. 4a2. It can be seen from Fig. 4c1-c8 that the image processed by the MDFTN exhibits clearer contrast than those of the other algorithms, particularly in the ROIs of Fig. 4d3-d8. When compared to the NDCT images, the image processed by the WGAN-RAM (Fig. 4d5) has more noise and artifacts, resulting in poor resolution near the ligamentum teres in the liver (indicated by the blue arrow). At this noise level, the overall images of the ROIs obtained by the WGAN-VGG (Fig. 4d4), MAPNN (Fig. 4d6), and MINFMCNN (Fig. 4d7) are relatively similar, but the details appear blurry. Notably, the detailed features of the ligamentum teres indicated by the blue arrows demonstrate that the MDFTN (Fig. 4d8) produces clearer results than the RED-CNN (Fig. 4d3). Overall, the MDFTN method preserves more detailed structures and achieves better quantitative indices, as shown in Fig. 4a8 and Fig. 4c8.

Fig. 4 Results and magnified views of data from the AAPM-Mayo synthesized dataset provided for comparison. a1-d1 NDCT; a2-d2 LDCT; a3-d3 RED-CNN; a4-d4 WGAN-VGG; a5-d5 WGAN-RAM; a6-d6 MAPNN; a7-d7 MINFMCNN; and a8-d8 MDFTN. The respective ROI for each predicted image is displayed below the image itself. A yellow circle denotes a metastasis, whereas blue and green arrows indicate fine structures. The orange number signifies the highest quantitative index. The display window is set at [-160, 240] HU

Fig. 5 Results and magnified views of data from a private synthesized dataset with different noise levels provided for comparison. a1-b8 High noise levels; c1-d8 Low noise levels; a1-d1 NDCT; a2-d2 LDCT; a3-d3 RED-CNN; a4-d4 WGAN-VGG; a5-d5 WGAN-RAM; a6-d6 MAPNN; a7-d7 MINFMCNN; and a8-d8 MDFTN. The corresponding ROI for each predicted image is shown below the image itself. The green arrows indicate some fine structure regions. The orange number represents the highest quantitative index. The display window is set at [-160, 240] HU

Fig. 6 Results and magnified views of data from the synthesized Minfound dataset and RPLHR-CT dataset provided for comparison. The corresponding ROI for each predicted image is shown below the image itself. The difference images between the predicted results of different methods and NDCT are labeled as (c1-c8) and (f1-f8), respectively. The orange number represents the highest quantitative index. The purple circles denote flat regions for noise suppression analysis, while the yellow circles highlight detailed structures for visual comparison. The display window is set at [-160, 240] HU

To further verify the robustness of the trained model in processing LDCT images with different noise levels, the test results of the model on a privately synthesized dataset with high and low noise levels are also presented. The dataset was obtained using the method described in ref. [65]. Figure 5 shows the visual results and enlarged views of MDFTN and the comparison algorithms. It is evident that Figs. 5a1-b8 exhibit more noise than Figs. 5c1-d8. Upon examining Fig. 5, it is apparent that the proposed MDFTN effectively suppresses noise while preserving the detailed structures (indicated by green arrows) under both high and low noise levels. Tables 3 and 4 present the quantitative outcomes of the different algorithms on the privately synthesized datasets with high and low noise levels, respectively. MDFTN delivers the highest PSNR and SSIM scores in both cases.

Figure 6 shows the visual results of the proposed network (MDFTN) along with the comparison algorithms for the synthesized Minfound and RPLHR-CT datasets. It includes enlarged views and difference maps for a detailed analysis. In Fig. 6, the differences among the methods are difficult to discern by eye. Therefore, difference images were generated between the predicted results of the different methods and the NDCT, as shown in Figs. 6c1-c8 and f1-f8. Figures 6a1-c8 present the visual results for the synthesized Minfound dataset. As shown in Figs. 6b1-b8 and c1-c8, all the methods effectively removed noise from the low-frequency region (indicated by the purple circle). However, the proposed method demonstrates a more pronounced denoising effect, resulting in a higher quantitative index. Figures 6d1-f8 show the visual results for the RPLHR-CT dataset, with a specific focus on the skeletal region. In terms of image detail, the WGAN-RAM result (Figs. 6e5 and f5) appears more blurred than those of the other algorithms. Upon analyzing the difference images in the bottom row of Fig. 6, it is evident that RED-CNN (Fig. 6f3), MINFMCNN (Fig. 6f7), and MDFTN (Fig. 6f8) demonstrate similar structure preservation. However, MINFMCNN and MDFTN exhibit fewer residual details, suggesting that they possess superior detail-preservation capabilities, as highlighted by the yellow circles.

In addition to visual comparisons with other algorithms, the PSNR, SSIM, and RMSE metrics were used to quantitatively evaluate the proposed MDFTN and the other methods. Tables 5, 6 and 7 show the PSNR, SSIM, and RMSE measures for the comparison methods applied to the different datasets. Compared with the unprocessed LDCT images, all methods improved the PSNR and SSIM to some extent and reduced the RMSE. MDFTN achieves the highest PSNR and SSIM and the lowest RMSE for both the AAPM-Mayo and the synthesized Minfound datasets. On the RPLHR-CT dataset, MINFMCNN achieves the highest PSNR and SSIM, followed by the proposed algorithm. However, on the other two datasets, MINFMCNN performs worse than the proposed method. Taken across the three datasets, the proposed method therefore exhibits better generalization and robustness than the other algorithms. This is attributed to the collaborative training of MDFTN across various datasets, which leverages the complementary advantages of multisource data distribution to enhance its adaptability and improve its generalization. In summary, the proposed MDFTN demonstrates promising performance in terms of noise reduction and structural preservation when processing multiple source datasets simultaneously.
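For reference, the RMSE and PSNR metrics used above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the evaluation code used in the study: the function names are hypothetical, and `data_range` (the peak-to-peak intensity range used in the PSNR) is an assumed parameter whose value is not stated in this section. SSIM is omitted for brevity.

```python
import numpy as np


def rmse(pred, ref):
    """Root mean square error between a predicted image and its reference."""
    pred = np.asarray(pred, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def psnr(pred, ref, data_range):
    """Peak signal-to-noise ratio in dB for an assumed intensity range."""
    err = rmse(pred, ref)
    if err == 0.0:
        return float("inf")  # identical images
    return float(20.0 * np.log10(data_range / err))


# Toy images: every pixel differs from the reference by exactly 10.
ref = np.zeros((4, 4))
pred = np.full((4, 4), 10.0)
toy_rmse = rmse(pred, ref)                    # 10.0
toy_psnr = psnr(pred, ref, data_range=400.0)  # 20 * log10(400 / 10)
```

A lower RMSE and a higher PSNR both indicate that the prediction is closer to the NDCT reference; because the PSNR depends on `data_range`, values are only comparable when the same range is used for all methods.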

Results of independent synthesized datasets

To further assess the behavior of the model under domain shift, an external, independently synthesized dataset was employed in this study. Figure 7 displays the visual results and enlarged views of the proposed network (MDFTN) and the comparison algorithms on the independently synthesized datasets. It can be seen from Figs. 7b1-b8 that the image processed by MDFTN is closer to the real image than those of the other algorithms, particularly in the border region indicated by the green arrow. Table 8 presents the quantitative results of the different models for the independently synthesized datasets. MDFTN achieves the highest PSNR and SSIM results and the lowest RMSE for the independently synthesized datasets.

Results and magnified views of data from independent synthesized datasets are provided for comparison. a1-b1 NDCT; a2-b2 LDCT; a3-b3 RED-CNN; a4-b4 WGAN-VGG; a5-b5 WGAN-RAM; a6-b6 MAPNN; a7-b7 MINFMCNN; and a8-b8 MDFTN. The corresponding ROI for each predicted image is shown below it. The green arrows indicate boundary texture. The orange number represents the highest quantitative index. The display window is [-160, 240] HU

Results of multisource real clinical datasets

Considering the feature distribution differences between the simulated and real clinical LDCT images, the universality and stability of the network were further verified using real Siemens and Minfound LDCT data. To ensure flexibility, clinical data were used exclusively for network testing, employing the optimal parameters trained on the synthesized datasets. Figures 8a1-b7 show the visualization results of the proposed network (MDFTN) and the comparison algorithms on real Siemens clinical LDCT data, and Figs. 8c1-d7 illustrate the visualization results of the Minfound LDCT data, with corresponding zoomed ROIs (represented by red rectangles) cropped from the predicted images. It can be observed from Figs. 8a1-d1 that the LDCT images contain significant amounts of noise and artifacts. Although all algorithms improve image quality, they still have limitations. Comparing the overall images in Figs. 8a1-a7, the result predicted by the MDFTN algorithm is more favorable for doctors owing to fewer residual artifacts. As depicted in Figs. 8b2-b7, WGAN-VGG (Fig. 8b3), WGAN-RAM (Fig. 8b4), and MAPNN (Fig. 8b5) still exhibit some noise and artifacts, potentially impacting doctors’ observations during clinical diagnosis. MDFTN effectively removes a significant amount of noise and artifacts, as indicated by the blue arrows. The marginal areas of the liver and spleen, indicated by green arrows (Fig. 8b7), are clearer than those of the other algorithms. On the real Minfound LDCT data (Figs. 8d2-d7), MDFTN (Fig. 8d7) exhibits a sharper contrast than MAPNN (Fig. 8d5) in the bone boundary region indicated by the green arrow. In the low-frequency region indicated by the blue arrow, RED-CNN (Fig. 8d2), WGAN-VGG (Fig. 8d3), and WGAN-RAM (Fig. 8d4) still contain some artifacts, resulting in poor contrast in this region. The results for MAPNN (Fig. 8d5), MINFMCNN (Fig. 8d6), and MDFTN (Fig. 8d7) are similar in the area indicated by the blue arrow. In summary, the tests on the real Siemens and Minfound LDCT datasets demonstrate that MDFTN has certain advantages in noise removal and artifact suppression compared with the other algorithms.

Results and magnified views of data from the real Siemens LDCT dataset and the Minfound LDCT dataset are provided for comparison. a1-d1 LDCT; a2-d2 RED-CNN; a3-d3 WGAN-VGG; a4-d4 WGAN-RAM; a5-d5 MAPNN; a6-d6 MINFMCNN; and a7-d7 MDFTN. The corresponding ROI for each predicted image is shown below it. The blue arrows denote flat regions while green arrows indicate high-contrast edge structures. The display window is [-160, 240] HU

Ablation experiment

In this subsection, the ablation studies of the proposed network are described. First, the denoising performance of the proposed network is examined in the single-source setting. Second, the effectiveness of the FTM and the attention mechanism SEKG [52] is verified. Third, the versatility of the proposed network framework is assessed using the RED-CNN network.

Results of single-source MDFTN

The full MDFTN framework uses collaborative training to improve LDCT denoising performance. To assess the effect of collaborative training, a single-source MDFTN was employed for comparison. Figure 9 shows the visualization results of the MDFTN with and without different components on the synthesized Minfound dataset. Figures 9a3-c3 and 9a6-c6 show the denoising results of the single- and multisource MDFTN, respectively. In the first row, the full MDFTN (Fig. 9a6) exhibits less noise and a higher evaluation index than the single-source MDFTN (Fig. 9a3). In the regions highlighted by yellow circles and green arrows, the complete MDFTN model demonstrates superior preservation of details in the soft tissue areas. Table 9 presents the averages of the quantitative results, which show that the full network achieves significant improvements in terms of PSNR, SSIM, and RMSE. The above results indicate that the full network can not only simultaneously process LDCT images from multiple sources but also effectively integrate advantageous features from different datasets during the training process.

Ablation results and magnified views of data from the synthesized Minfound dataset for the single-source-site model, the network without the FTM, and the network without the attention mechanism SEKG. a1-c1 NDCT; a2-c2 LDCT; a3-c3 Single Source-Site; a4-c4 No-FTM; a5-c5 No-SEKG; a6-c6 MDFTN. No-FTM means without the FTM. No-SEKG means without the attention mechanism SEKG [52]. The corresponding ROI for each predicted image is shown below it. c1-c6 denote the difference images between the predicted results of different methods and NDCT. The orange number represents the highest quantitative index. The yellow circles and green arrows indicate subtle details within low-contrast structured regions. The display window range is set at [-160, 240] HU

Effectiveness of FTM and SEKG

First, the effectiveness of the FTM in the model is verified. Figures 9a4-c4 show the visual results, enlarged views, and difference images of the network without the FTM on the synthesized Minfound dataset. Comparing MDFTN (Fig. 9b6) with No-FTM (Fig. 9b4), the full network clearly emphasizes the edges in the low-frequency region, as indicated by the green arrow, whereas the details in No-FTM (Fig. 9b4) appear unclear. In the third row, the difference images show that the results of the full network are closer to the NDCT in the heart region, marked by the yellow circle. The quantitative results for No-FTM and MDFTN in Table 9 also demonstrate the beneficial impact of the FTM on the overall network.

Subsequently, the effectiveness of the SEKG [52] introduced in the decoder was assessed on three synthesized datasets. Figures 9a5-c5 illustrate the visual results, enlarged views, and difference images of the network without SEKG on the synthesized Minfound dataset. The denoising result of MDFTN (Fig. 9c6) is closer to the background image than that of No-SEKG (Fig. 9c5) in the area indicated by the green arrow. Table 9 shows that the inclusion of the attention mechanism SEKG can improve the quantitative results of the PSNR and SSIM for the network across the three datasets. These results demonstrate that SEKG enhances the performance of the overall network.

The versatility of the proposed network framework

To further verify the versatility of the proposed network framework, the RED-CNN network was incorporated into the framework, resulting in RED-CNN-DFTM. Figure 10 shows the visualization results of the original RED-CNN and the modified RED-CNN (RED-CNN-DFTM) on the synthesized Minfound dataset. As can be observed in Fig. 10, the noise level of the RED-CNN-DFTM prediction (Fig. 10b4) is considerably lower than that of the RED-CNN prediction (Fig. 10b3). Moreover, the visibility of the low-density region marked by the yellow circle is noticeably improved relative to RED-CNN. In terms of high-contrast edge details, RED-CNN-DFTM outperforms RED-CNN in distinguishing the bone boundaries, as indicated by the green arrows. Compared with the difference map of RED-CNN (Fig. 10c3), that of RED-CNN-DFTM (Fig. 10c4) indicates a closer resemblance to the real image in terms of noise and textured background. To further observe the image-denoising performance across the three datasets, Table 10 presents the average quantitative results of RED-CNN-DFTM and RED-CNN. RED-CNN-DFTM improved the PSNR and SSIM of the images compared with RED-CNN. The above results show that the collaborative learning network architecture is indeed helpful for the simultaneous denoising of multisource datasets while achieving superior denoising performance.

Ablation results and magnified views of data from the synthesized Minfound dataset are used to verify the versatility of the proposed network framework. a1-c1 NDCT; a2-c2 LDCT; a3-c3 RED-CNN; a4-c4 RED-CNN-DFTM. The corresponding ROI to each predicted image is shown below itself. c1-c4 denote difference images between the predicted results of different methods and NDCT. The orange number represents the highest quantitative index. The yellow circles indicate the low-density region, while the green arrows indicate the high-contrast region. The display window is [-160, 240] HU

Convergence analysis

Because images produced by CT devices from different manufacturers exhibit distinct data distributions, it is crucial to assess the convergence of the MDFTN network, which is jointly trained on multiple source datasets simultaneously. Figure 11 shows the convergence of the PSNR and RMSE as functions of the epoch number during training on the multisource datasets. As depicted in Figs. 11a-c, the PSNR increases rapidly in the initial stages of training, followed by a gradual increase until it stabilizes after the 40th epoch. The RMSE follows a corresponding trend, stabilizing after the 40th epoch. As shown in Figs. 11c and f, the PSNR and RMSE values exhibit substantial fluctuations during the initial training stage on the RPLHR-CT dataset. This is primarily attributed to the distinct dataset variations when the network updates its parameters. Nevertheless, the network tends to converge after 40 epochs. These findings support the capability of the MDFTN to train on multiple source datasets simultaneously.
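One simple way to make a statement such as "stabilizes after the 40th epoch" operational is to look for the first run of consecutive epochs over which a metric varies by less than a tolerance. The sketch below is only an illustration of that idea, not the procedure used in this study; the function name, `window`, and `tolerance` are hypothetical, and the example curve is synthetic.

```python
def first_stable_epoch(values, window=5, tolerance=0.05):
    """Return the 1-based epoch that starts the first run of `window`
    consecutive values whose spread (max - min) is within `tolerance`.

    Returns None if no such run exists.
    """
    for start in range(len(values) - window + 1):
        chunk = values[start:start + window]
        if max(chunk) - min(chunk) <= tolerance:
            return start + 1
    return None


# Synthetic per-epoch PSNR curve (placeholder numbers, not measured results).
toy_psnr_curve = [20.0, 25.0, 28.0, 30.0, 30.01, 30.02, 30.0, 30.03, 30.02]
stable_from = first_stable_epoch(toy_psnr_curve, window=3, tolerance=0.05)
```

The same function can be applied to an RMSE curve, since it only measures the spread of the values and not their direction of change.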

Hyperparameter analysis

In this subsection, the impact of the loss-function hyperparameter λ in Eq. (8) on the network’s performance is investigated. Table 11 presents the quantitative results obtained by training with λ ∈ {0, 1, 0.1, 0.01, 0.005, 0.001, 0.0001} on the multisource datasets. The comparison shows that the highest average PSNR and SSIM results are achieved when λ = 0.001. Therefore, λ = 0.001 was chosen.

Discussion

Despite the widespread use of DL models in medical imaging, models that rely solely on a specific dataset have a limited ability to simultaneously process LDCT images from multiple sources. In practical clinical applications, specific networks are used to process CT data from different manufacturers, which may restrict the universality of the model. To address these issues, a learning-once model is presented that efficiently processes multisource LDCT images, allowing the network model to better process data from diverse imaging sources. Based on this, a novel MDFTN is proposed to improve LDCT imaging performance for multisource data. The proposed MDFTN comprises multiple encoders and decoders along with a DFTM. During forward propagation in network training, parallel encoders extract distinct features from their respective data sources, whereas the DFTM facilitates the mutual enhancement of multisource data features. In the backward propagation phase of network training, joint loss functions are used to calculate the gradient of each layer and subsequently update all the network weights accordingly. Through collaborative training, the proposed MDFTN leverages the complementary advantages of multisource data distribution to enhance its adaptability and generalization.
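The data flow summarized above (one encoder and one decoder per source, a transformation shared by all sources, and a joint loss) can be illustrated with a deliberately simplified NumPy sketch. Everything in it is an assumption made for illustration: the layers are random linear maps with a ReLU, the DFTM is stood in for by a single shared weight matrix, the joint loss is taken as a plain sum of per-source mean squared errors, and all names are hypothetical. It is not the released MDFTN implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
n_sources, n_pixels, n_features = 3, 16, 8


def make_linear(n_in, n_out):
    """Random weight matrix standing in for a learned layer (toy assumption)."""
    return rng.normal(scale=0.1, size=(n_in, n_out))


# One encoder and one decoder per data source.
encoders = [make_linear(n_pixels, n_features) for _ in range(n_sources)]
decoders = [make_linear(n_features, n_pixels) for _ in range(n_sources)]
# A single transformation shared by all sources, standing in for the DFTM.
shared_transform = make_linear(n_features, n_features)


def forward(ldct_batches):
    """Encode each source separately, map into the shared space, then decode."""
    outputs = []
    for x, enc, dec in zip(ldct_batches, encoders, decoders):
        features = np.maximum(x @ enc, 0.0)                    # source-specific
        shared = np.maximum(features @ shared_transform, 0.0)  # shared space
        outputs.append(shared @ dec)                           # source-specific
    return outputs


def joint_loss(predictions, targets):
    """Sum of per-source mean squared errors (assumed form of the joint loss)."""
    return sum(float(np.mean((p - t) ** 2)) for p, t in zip(predictions, targets))


# Random stand-ins for flattened LDCT/NDCT batches from three sources.
ldct = [rng.normal(size=(4, n_pixels)) for _ in range(n_sources)]
ndct = [rng.normal(size=(4, n_pixels)) for _ in range(n_sources)]
outputs = forward(ldct)
loss = joint_loss(outputs, ndct)
```

Because `shared_transform` appears in every source's path, a gradient of `loss` with respect to it would receive contributions from all sources, which is the sense in which the sources are trained collaboratively in this toy setting.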

Given the flexibility of MDFTN, the proposed network framework can be expanded to accommodate various multitask LDCT denoising applications. Unlike previous denoising methods that rely solely on specific datasets, the collaborative training mechanism augments the model’s capacity for generalization across a range of datasets. Furthermore, when addressing LDCT denoising across multiple institutions, privacy and security concerns regarding patient data may arise. In such cases, the DFTM can be removed and a shared global model introduced to render MDFTN more suitable for privacy-preserving imaging scenarios. Although the proposed MDFTN offers several advantages, it has certain limitations. First, when the DFTM combines and extracts shared features from each encoder, it may lose some globally significant information as the module depth increases. This is because vanishing or exploding gradients may occur during backpropagation as the module becomes deeper, making it difficult for the model to learn global information. Second, given the varying data distributions across different anatomical sites and CT devices, clinical CT scan data are highly complex. In this study, the datasets used for experimental verification mainly covered the chest and abdomen, and the model needs to be evaluated on more anatomical sites. In the future, more effective deep supervision and fusion methods will be explored to enhance the performance. In particular, an auxiliary loss function will be incorporated into the intermediate layers of the network, thereby enabling the model to consider both global and local information during training. This approach has the potential to significantly improve the model’s understanding of the data, leading to more accurate and comprehensive results. It is also crucial to broaden the clinical datasets for LDCT denoising, specifically by incorporating multidose, multiprotocol, and multianatomical site datasets.
In addition, considering the significance of LDCT image denoising in clinical practice, the potential applications of the proposed method in real clinical settings and the challenges that it may face are discussed. Although MDFTN can help doctors achieve rapid and precise diagnoses of various diseases by concurrently processing data from different hospitals, several challenges remain. Accurately paired data are essential for training highly generalized LDCT denoising models. However, obtaining a substantial number of high-quality, precisely matched CT images remains a significant challenge. Therefore, a critical future task is to enhance the capability of the model to denoise real, unlabeled clinical LDCT images by designing innovative domain-adversarial loss functions.

Conclusions

This study aims to address the challenges posed by the different distributions of multisource data and data scarcity through the use of DL. To this end, a learning-once model is proposed that incorporates multisource encoders and a DFTM. This model allows the simultaneous processing of multisource data and outperforms a single model with continual learning. Through collaborative training, the proposed MDFTN effectively leverages the complementary advantages of the features present in multisource data, resulting in improved imaging performance and generalization for multisource image denoising. Numerous experiments were conducted on two public datasets and one local dataset, demonstrating that the proposed network model can simultaneously process multisource data while effectively suppressing noise and preserving fine structures.

Availability of data and materials

The synthesized clinical datasets comprise the AAPM-Mayo dataset, the private synthetic clinical dataset, and the RPLHR-CT dataset. The AAPM-Mayo dataset is available at [31] http://www.aapm.org/GrandChallenge/LowDoseCT/, the private synthetic clinical dataset comes from [40] https://doi.org/10.1109/TMI.2023.3261822, and the RPLHR-CT dataset comes from [64] https://doi.org/10.1007/978-3-031-16446-0_33.

Clinical data are not publicly available because they contain private patient health information. Data supporting the findings of the present study are available upon reasonable request.

Abbreviations

- CT: Computed tomography
- DFTM: Deep feature transformation module
- DL: Deep learning
- FL: Federated learning
- GMM: Gaussian mixture model
- LDCT: Low-dose computed tomography
- MAP-NN: Modularized adaptive processing neural network
- MDFTN: Multi-encoder deep feature transformation network
- NDCT: Normal-dose computed tomography
- PReLU: Parametric Rectified Linear Unit
- PSNR: Peak signal-to-noise ratio
- SEKG: Spatially enhanced kernel generation
- SSIM: Structural similarity index measurement
- RMSE: Root mean square error
- ROIs: Regions of interest

References

Wang G, Yu HY, De Man B (2008) An outlook on X-ray CT research and development. Med Phys 35(3):1051-1064. https://doi.org/10.1118/1.2836950

De González AB, Mahesh M, Kim KP, Bhargavan M, Lewis R, Mettler F et al (2009) Projected cancer risks from computed tomographic scans performed in the United States in 2007. Arch Intern Med 169(22):2071-2077. https://doi.org/10.1001/archinternmed.2009.440

Smith-Bindman R, Lipson J, Marcus R, Kim KP, Mahesh M, Gould R et al (2009) Radiation dose associated with common computed tomography examinations and the associated lifetime attributable risk of cancer. Arch Intern Med 169(22):2078-2086. https://doi.org/10.1001/archinternmed.2009.427

Zondervan RL, Hahn PF, Sadow CA, Liu B, Lee SI (2013) Body CT scanning in young adults: examination indications, patient outcomes, and risk of radiation-induced cancer. Radiology 267(2):460-469. https://doi.org/10.1148/radiol.12121324

Miglioretti DL, Johnson E, Williams A, Greenlee RT, Weinmann S, Solberg LI et al (2013) The use of computed tomography in pediatrics and the associated radiation exposure and estimated cancer risk. JAMA Pediatr 167(8):700-707. https://doi.org/10.1001/jamapediatrics.2013.311

McLeavy CM, Chunara MH, Gravell RJ, Rauf A, Cushnie A, Staley Talbot C et al (2021) The future of CT: deep learning reconstruction. Clin Radiol 76(6):407-415. https://doi.org/10.1016/j.crad.2021.01.010

Wang J, Lu HB, Li TF, Liang ZR (2005) Sinogram noise reduction for low-dose CT by statistics-based nonlinear filters. In: Proceedings of the medical imaging 2005: image processing, SPIE, San Diego, 12-17 February 2005. https://doi.org/10.1117/12.595662

La Rivière PJ (2005) Penalized-likelihood sinogram smoothing for low-dose CT. Med Phys 32(6Part1):1676-1683. https://doi.org/10.1118/1.1915015

Manduca A, Yu LF, Trzasko JD, Khaylova N, Kofler JM, McCollough CM et al (2009) Projection space denoising with bilateral filtering and CT noise modeling for dose reduction in CT. Med Phys 36(11):4911-4919. https://doi.org/10.1118/1.3232004

Balda M, Hornegger J, Heismann B (2012) Ray contribution masks for structure adaptive sinogram filtering. IEEE Trans Med Imaging 31(6):1228-1239. https://doi.org/10.1109/TMI.2012.2187213

Li M, Zhang C, Peng CT, Guan YH, Xu P, Sun MS et al (2016) Smoothed l0 norm regularization for sparse-view X-ray CT reconstruction. Biomed Res Int 2016:2180457. https://doi.org/10.1155/2016/2180457

Komolafe TE, Wang K, Du Q, Hu T, Yuan G, Zheng J et al (2020) Smoothed L0-constraint dictionary learning for low-dose X-ray CT reconstruction. IEEE Access 8:116961-116973. https://doi.org/10.1109/ACCESS.2020.3004174

Sidky EY, Kao CM, Pan XC (2006) Accurate image reconstruction from few-views and limited-angle data in divergent-beam CT. J X-Ray Sci Technol 14(2):119-139

Yu W, Wang CX, Huang M (2017) Edge-preserving reconstruction from sparse projections of limited-angle computed tomography using ℓ0-regularized gradient prior. Rev Sci Instrum 88(4):043703. https://doi.org/10.1063/1.4981132

Zhang C, Zhang T, Zheng J, Li M, Lu YF, You JL et al (2015) A model of regularization parameter determination in low-dose X-ray CT reconstruction based on dictionary learning. Comput Math Methods Med 2015:831790. https://doi.org/10.1155/2015/831790

Zhang C, Zhang T, Li M, Peng CT, Liu ZB, Zheng J (2016) Low-dose CT reconstruction via L1 dictionary learning regularization using iteratively reweighted least-squares. BioMed Eng OnLine 15(1):66. https://doi.org/10.1186/s12938-016-0193-y

Romano Y, Elad M (2013) Improving K-SVD denoising by post-processing its method-noise. In: Proceedings of the 2013 IEEE international conference on image processing, IEEE, Melbourne, 15-18 September 2013. https://doi.org/10.1109/ICIP.2013.6738090

Geraldo RJ, Cura LMV, Cruvinel PE, Mascarenhas NDA (2017) Low dose CT filtering in the image domain using MAP algorithms. IEEE Trans Radiat Plasma Med Sci 1(1):56-67. https://doi.org/10.1109/TNS.2016.2635131

Sagheer SVM, George SN (2020) A review on medical image denoising algorithms. Biomed Signal Process Control 61:102036. https://doi.org/10.1016/j.bspc.2020.102036

Kang E, Yoo J, Ye JC (2018) Deep convolutional framelet denosing for low-dose CT via wavelet residual network. IEEE Trans Med Imaging 37(6):1358-1369. https://doi.org/10.1109/TMI.2018.2823756

Liu J, Zhang TY, Kang YQ, Wang Y, Zhang YK, Hu DL et al (2023) Deep residual constrained reconstruction via learned convolutional sparse coding for low-dose CT imaging. Biomed Signal Process Control 85:104868. https://doi.org/10.1016/j.bspc.2023.104868

Diwakar M, Kumar M (2018) A review on CT image noise and its denoising. Biomed Signal Process Control 42:73-88. https://doi.org/10.1016/j.bspc.2018.01.010

Zhang J, Zhou H, Niu Y, Lv JC, Chen J, Cheng Y (2021) CNN and multi-feature extraction based denoising of CT images. Biomed Signal Process Control 67:102545. https://doi.org/10.1016/j.bspc.2021.102545

Li SZ, Li Q, Li RR, Wu W, Zhao JJ, Qiang Y et al (2022) An adaptive self-guided wavelet convolutional neural network with compound loss for low-dose CT denoising. Biomed Signal Process Control 75:103543. https://doi.org/10.1016/j.bspc.2022.103543

Chen H, Zhang Y, Kalra MK, Lin F, Chen Y, Liao PX et al (2017) Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans Med Imaging 36(12):2524-2535. https://doi.org/10.1109/TMI.2017.2715284

Yang QS, Yan PK, Zhang YB, Yu HY, Shi YY, Mou XQ et al (2018) Low-dose CT image denoising using a generative adversarial network with Wasserstein distance and perceptual loss. IEEE Trans Med Imaging 37(6):1348-1357. https://doi.org/10.1109/TMI.2018.2827462

Shan HM, Padole A, Homayounieh F, Kruger U, Khera RD, Nitiwarangkul C et al (2019) Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction. Nat Mach Intell 1(6):269-276. https://doi.org/10.1038/s42256-019-0057-9

Du Q, Tang YF, Wang JP, Hou XW, Wu ZY, Li M et al (2023) X-ray CT image denoising with MINF: A modularized iterative network framework for data from multiple dose levels. Comput Biol Med 152:106419. https://doi.org/10.1016/j.compbiomed.2022.106419

Yin XR, Coatrieux JL, Zhao QL, Liu J, Yang W, Yang J et al (2019) Domain progressive 3D residual convolution network to improve low-dose CT imaging. IEEE Trans Med Imaging 38(12):2903-2913. https://doi.org/10.1109/TMI.2019.2917258

Geng MF, Meng XX, Yu JY, Zhu L, Jin LJ, Jiang Z et al (2022) Content-noise complementary learning for medical image denoising. IEEE Trans Med Imaging 41(2):407-419. https://doi.org/10.1109/TMI.2021.3113365

AAPM (2017) Low Dose CT Grand Challenge. http://www.aapm.org/GrandChallenge/LowDoseCT/. Accessed 1 June 2019

Tang YF, Du Q, Wang JP, Wu ZY, Li YX, Li M et al (2022) CCN-CL: A content-noise complementary network with contrastive learning for low-dose computed tomography denoising. Comput Biol Med 147:105759. https://doi.org/10.1016/j.compbiomed.2022.105759

Huang YQ, Xia WJ, Lu ZX, Liu Y, Chen H, Zhou JL et al (2021) Noise-powered disentangled representation for unsupervised speckle reduction of optical coherence tomography images. IEEE Trans Med Imaging 40(10):2600-2614. https://doi.org/10.1109/TMI.2020.3045207

Liao HF, Lin WA, Zhou SK, Luo JB (2020) ADN: Artifact disentanglement network for unsupervised metal artifact reduction. IEEE Trans Med Imaging 39(3):634-643. https://doi.org/10.1109/TMI.2019.2933425

Unal MO, Ertas M, Yildirim I (2022) An unsupervised reconstruction method for low-dose CT using deep generative regularization prior. Biomed Signal Process Control 75:103598. https://doi.org/10.1016/j.bspc.2022.103598

Wang JP, Tang YF, Wu ZY, Du Q, Yao LB, Yang XD et al (2023) A self-supervised guided knowledge distillation framework for unpaired low-dose CT image denoising. Comput Med Imaging Graph 107:102237. https://doi.org/10.1016/j.compmedimag.2023.102237

Wang JP, Tang YF, Wu ZY, Tsui BMW, Chen W, Yang XD et al (2023) Domain-adaptive denoising network for low-dose CT via noise estimation and transfer learning. Med Phys 50(1):74-88. https://doi.org/10.1002/mp.15952

Yang ZY, Xia WJ, Lu ZX, Chen YY, Li XX, Zhang Y (2023) Hypernetwork-based physics-driven personalized federated learning for CT imaging. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2023.3338867

Li DY, Bian ZY, Li S, He J, Zeng D, Ma JH (2022) Noise characteristics modeled unsupervised network for robust CT image reconstruction. IEEE Trans Med Imaging 41(12):3849-3861

Li M, Wang JP, Chen Y, Tang YF, Wu ZY, Qi YJ et al (2023) Low-dose CT image synthesis for domain adaptation imaging using a generative adversarial network with noise encoding transfer learning. IEEE Trans Med Imaging 42(9):2616-2630. https://doi.org/10.1109/TMI.2023.3261822

Zhang ZC, Liang XK, Dong X, Xie YQ, Cao GH (2018) A sparse-view CT reconstruction method based on combination of densenet and deconvolution. IEEE Trans Med Imaging 37(6):1407-1417. https://doi.org/10.1109/TMI.2018.2823338

He J, Chen SL, Zhang H, Tao X, Lin WH, Zhang SL et al (2021) Downsampled imaging geometric modeling for accurate CT reconstruction via deep learning. IEEE Trans Med Imaging 40(11):2976-2985. https://doi.org/10.1109/TMI.2021.3074783

Xia WJ, Lu ZX, Huang YQ, Liu Y, Chen H, Zhou JL et al (2021) CT reconstruction with PDF: Parameter-dependent framework for data from multiple geometries and dose levels. IEEE Trans Med Imaging 40(11):3065-3076. https://doi.org/10.1109/TMI.2021.3085839

Zhang YK, Hu DL, Zhao QL, Quan GT, Liu J, Liu QG et al (2021) CLEAR: Comprehensive learning enabled adversarial reconstruction for subtle structure enhanced low-dose CT imaging. IEEE Trans Med Imaging 40(11):3089-3101. https://doi.org/10.1109/TMI.2021.3097808

Hu DL, Zhang YK, Liu J, Luo SH, Chen Y (2022) DIOR: Deep iterative optimization-based residual-learning for limited-angle CT reconstruction. IEEE Trans Med Imaging 41(7):1778-1790. https://doi.org/10.1109/TMI.2022.3148110

McMahan HB, Moore E, Ramage D, Hampson S, Agüera y Arcas B (2017) Communication-efficient learning of deep networks from decentralized data. Artificial intelligence and statistics. PMLR 54:1273-1282. https://doi.org/10.48550/arXiv.1602.05629

Li T, Sahu AK, Talwalkar A, Smith V (2020) Federated learning: Challenges, methods, and future directions. IEEE Signal Process Mag 37(3):50-60. https://doi.org/10.1109/MSP.2020.2975749

Geng MF, Tian ZF, Jiang Z, You YF, Feng XM, Xia Y et al (2021) PMS-GAN: Parallel multi-stream generative adversarial network for multi-material decomposition in spectral computed tomography. IEEE Trans Med Imaging 40(2):571-584. https://doi.org/10.1109/TMI.2020.3031617

Huang ZX, Liu XF, Wang RP, Chen ZX, Yang YF, Liu X et al (2021) Learning a deep CNN denoising approach using anatomical prior information implemented with attention mechanism for low-dose CT imaging on clinical patient data from multiple anatomical sites. IEEE J Biomed Health Inform 25(9):3416-3427. https://doi.org/10.1109/JBHI.2021.3061758

Zhang YK, Hu DL, Yan ZH, Zhao QX, Quan GT, Luo SH et al (2023) TIME-Net: Transformer-Integrated Multi-Encoder Network for limited-angle artifact removal in dual-energy CBCT. Med Image Anal 83:102650. https://doi.org/10.1016/j.media.2022.102650

Ronneberger O, Fischer P, Brox T (2015) U-Net: Convolutional networks for biomedical image segmentation. In: Proceedings of the 18th international conference on medical image computing and computer-assisted intervention, Springer, Munich, 5-9 October 2015. https://doi.org/10.1007/978-3-319-24574-4_28

Shen H, Zhao ZQ, Zhang WD (2023) Adaptive dynamic filtering network for image denoising. In: Proceedings of the AAAI conference on artificial intelligence, Association for the Advancement of Artificial Intelligence, Washington, 7-14 February 2023. https://doi.org/10.1609/aaai.v37i2.25317

Lu JS, Xiong CM, Parikh D, Socher R (2017) Knowing when to look: Adaptive attention via a visual sentinel for image captioning. In: Proceedings of the 2017 IEEE conference on computer vision and pattern recognition, IEEE, Honolulu, 21-26 July 2017. https://doi.org/10.1109/CVPR.2017.345

Chen L, Zhang HW, Xiao J, Nie LQ, Shao J, Liu W et al (2017) SCA-CNN: Spatial and channel-wise attention in convolutional networks for image captioning. In: Proceedings of the 2017 IEEE conference on computer vision and pattern recognition, IEEE, Honolulu, 21-26 July 2017. https://doi.org/10.1109/CVPR.2017.667

Wang QL, Wu BG, Zhu PF, Li PH, Zuo WM, Hu QH (2020) ECA-Net: efficient channel attention for deep convolutional neural networks. In: Proceedings of the 2020 IEEE/CVF conference on computer vision and pattern recognition, IEEE, Seattle, 14-19 June 2020. https://doi.org/10.1109/CVPR42600.2020.01155

He KM, Zhang XY, Ren SQ, Sun J (2015) Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In: Proceedings of the 2015 IEEE international conference on computer vision, IEEE, Santiago, 7-13 December 2015. https://doi.org/10.1109/ICCV.2015.123

Zhang K, Zuo WM, Zhang L (2018) FFDNet: Toward a fast and flexible solution for CNN-based image denoising. IEEE Trans Image Process 27(9):4608-4622. https://doi.org/10.1109/TIP.2018.2839891

Gao Y, Gao F, Dong JY (2021) Hyperspectral image denoising based on multi-stream denoising network. In: Proceedings of the 2021 IEEE international geoscience and remote sensing symposium IGARSS, IEEE, Brussels, 11-16 July 2021. https://doi.org/10.1109/IGARSS47720.2021.9553548

He KM, Zhang XY, Ren SQ, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the 2016 IEEE conference on computer vision and pattern recognition, IEEE, Las Vegas, 27-30 June 2016. https://doi.org/10.1109/CVPR.2016.90

Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. In: Proceedings of the 2017 IEEE conference on computer vision and pattern recognition, IEEE, Honolulu, 21-26 July 2017. https://doi.org/10.1109/CVPR.2017.243

Zhang YL, Tian YP, Kong Y, Zhong BN, Fu Y (2021) Residual dense network for image restoration. IEEE Trans Pattern Anal Mach Intell 43(7):2480-2495. https://doi.org/10.1109/TPAMI.2020.2968521

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600-612. https://doi.org/10.1109/TIP.2003.819861

Zhou B, Xie HD, Liu Q, Chen XC, Guo XQ, Feng ZC et al (2023) FedFTN: Personalized federated learning with deep feature transformation network for multi-institutional low-count PET denoising. Med Image Anal 90:102993

Yu PX, Zhang HY, Kang H, Tang W, Arnold CW, Zhang RG (2022) RPLHR-CT dataset and transformer baseline for volumetric super-resolution from CT scans. In: Proceedings of the 25th international conference on medical image computing and computer assisted intervention, Springer, Singapore, 18-22 September 2022. https://doi.org/10.1007/978-3-031-16446-0_33

Yu LF, Shiung M, Jondal D, McCollough CH (2012) Development and validation of a practical lower-dose-simulation tool for optimizing computed tomography scan protocols. J Comput Assist Tomogr 36(4):477-487

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980. https://doi.org/10.48550/arXiv.1412.6980

Li M, Du Q, Duan LW, Yang XD, Zheng J, Jiang HC et al (2021) Incorporation of residual attention modules into two neural networks for low-dose CT denoising. Med Phys 48(6):2973-2990. https://doi.org/10.1002/mp.14856

Acknowledgements

Not applicable.

Funding

This work was supported in part by the National Key Research and Development Program of China, No. 2022YFC2404103; in part by the Jiangsu Provincial Key Research and Development Program Social Development Project, No. BE2022720; in part by the Natural Science Foundation of China, No. 62001471; and in part by the Suzhou Science and Technology Plan Project, No. SYG202345.

Author information

Contributions

LY contributed to the conception and design of the study; LY and JW contributed to data analysis; JW and ML contributed to the acquisition of data; LY drafted the manuscript; and ZW, QD, XY, ML and JZ supervised the study. All the authors have read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yao, L., Wang, J., Wu, Z. et al. Parallel processing model for low-dose computed tomography image denoising. Vis. Comput. Ind. Biomed. Art 7, 14 (2024). https://doi.org/10.1186/s42492-024-00165-8
