Abstract

Background

Facilitator-led debriefings are well-established for debriefing groups of learners in immersive simulation-based education. However, there has been emerging interest in self-led debriefings whereby individuals or groups of learners conduct a debriefing themselves, without the presence of a facilitator. How and why self-led debriefings influence debriefing outcomes remains undetermined.

Research aim

The aim of this study was to explore how and why in-person self-led debriefings influence debriefing outcomes for groups of learners in immersive simulation-based education.

Methods

An integrative review was conducted, searching seven electronic databases (PubMed, Cochrane, Embase, ERIC, SCOPUS, CINAHL Plus, PsycINFO) for peer-reviewed empirical studies investigating in-person self-led debriefings for groups of learners. Data were extracted and synthesised, then subjected to reflexive thematic analysis.

Results

Eighteen empirical studies identified through the search strategy were included in this review. There was significant heterogeneity with respect to study designs, aims, contexts, debriefing formats, learner characteristics, and data collection instruments. The synthesised findings of this review suggest that, across a range of debriefing outcome measures, in-person self-led debriefings for groups of learners following immersive simulation-based education are preferable to conducting no debriefing at all. In certain cultural and professional contexts, such as with postgraduate learners and those with previous debriefing experience, self-led debriefings can support effective learning and may provide educational outcomes equivalent to those of facilitator-led debriefings or combined self-led and facilitator-led strategies. Furthermore, there is some evidence that combined self-led and facilitator-led approaches may optimise participant learning, and this approach warrants further research. Reflexive thematic analysis of the data revealed four themes: promoting self-reflective practice; experience and background of learners; challenges of conducting self-led debriefings; and facilitation and leadership. As with facilitator-led debriefings, promoting self-reflective practice within groups of learners is fundamental to how and why self-led debriefings influence debriefing outcomes.

Conclusions

In circumstances where simulation resources for facilitator-led debriefings are limited, self-led debriefings can provide an alternative opportunity to safeguard effective learning. However, their true value within the scope of immersive simulation-based education may lie as an adjunctive method alongside facilitator-led debriefings. Further research is needed to explore how to best enable the process of reflective practice within self-led debriefings to understand how, and in which contexts, self-led debriefings are best employed and thus maximise their potential use.


Background

As a medium for deliberate reflective practice, debriefing is commonly cited as one of the most important aspects of learning in immersive simulation-based education (SBE) [1,2,3]. Defined as a ‘discussion between two or more individuals in which aspects of performance are explored and analysed’ ([4], p. 658), debriefing should occur in a psychologically safe environment for learners to reflect on actions, assimilate new information with previously constructed knowledge, and develop strategies for future improvement within their real-world context [5, 6]. Debriefings are typically led by facilitators who guide conversations to ensure content relevance and achievement of intended learning outcomes (ILOs) [7]. The quality of debriefing is thought to be highly reliant on the skills and expertise of the facilitator [8,9,10,11], with some commentators arguing that the skill of the facilitator is the strongest independent predictor of successful learning [2]. Literature from non-healthcare industries tends to support this notion, suggesting that facilitators enhance reflexivity, concentration, and goal setting, whilst contributing to the creation and maintenance of psychological safety, leading to improved debriefing effectiveness [12, 13]. However, this interpretation is not universally held and has been increasingly challenged [14,15,16,17,18].

It is in this context that self-led debriefings (SLDs) have emerged in SBE. There is currently no consensus definition of SLDs within the literature; the term encompasses a variety of heterogeneous practices, creating a confusing narrative for commentators to navigate as they report on debriefing practices. We have therefore defined ‘self-led debriefing’ as a debriefing conducted by the learners themselves without the immediate presence of a trained faculty member. Several reviews have investigated the overall effectiveness of debriefings, with a select few drawing comparisons between SLDs and facilitator-led debriefings (FLDs) as part of their analysis [2,3,4, 7, 10, 17, 19,20,21,22]. The consensus from these reviews is that there is limited evidence of the superiority of one approach over the other. However, only four of these reviews conducted a critical analysis of the presence of facilitators within debriefings [2, 19, 20, 22]. Moreover, in one review [19], a narrow search strategy identified only one study comparing SLDs with FLDs [14]. To our knowledge, only one published review has explored SLDs specifically, investigating whether the presence of a facilitator in individual learner debriefings, in-person or virtual, affected effectiveness [23]. Within these parameters, the review reported equivalent outcomes for well-designed SLDs and FLDs, but did not explore the influence of in-person SLDs on debriefing outcomes for groups of learners in immersive SBE. The value and place of SLDs within this context, either in isolation or in comparison with FLDs, therefore warrants further investigation.

Within the context of immersive SBE, and in-person group debriefings specifically, we challenge the concept of ‘one objective reality’, instead advocating for the existence of multiple subjective realities constructed by individuals or groups. The experiences of learners influence both their individual and group perceptions of reality and therefore different meanings may emerge from the same nominal simulated learning event (SLE) [24]. As such, this study has been undertaken through the lens of both constructionism and constructivism, with key elements deriving from both paradigms. Constructionism espouses the profound impact that societal and cultural norms have on determining how subjective experiences influence an individual’s formation of meaning within the world, or context, that they inhabit [25, 26]. Constructivism is a paradigm whereby, from their subjective experiences, individuals socially construct concepts and schemas to cultivate personal meanings and develop a deeper understanding of the world [26, 27]. In the context of in-person group debriefings, the creation of such meaning, and therefore learning, may be shaped and influenced by the presence or absence of facilitators [24].

The discourse surrounding requirements for facilitators and their level of expertise in debriefings has important implications due to the resources required to support faculty development programmes [2, 8, 9, 28]. SLDs are a relatively new concept offering a potential alternative to well-established FLD practices. Evidence exploring the role of in-person SLDs for groups of learners in immersive SBE is emerging but is yet to be appropriately synthesised. The aim of this integrative review (IR) is to collate, synthesise and analyse the relevant literature to address a gap in the evidence base, thereby informing simulation-based educators of best practices. The research question is: with comparison to facilitator-led debriefings, how and why do in-person self-led debriefings influence debriefing outcomes for groups of learners in immersive simulation-based education?

Methods

The traditional perception of systematic reviews as the gold-standard review type has been increasingly challenged, especially within health professions educational research [29]. An IR systematically examines and integrates findings from studies with diverse methodologies, including quantitative, qualitative, and theoretical datasets, allowing for deep and comprehensive interrogation of complex phenomena [30]. This approach is particularly relevant in SBE, where researchers employ a plethora of study designs from differing theoretical perspectives and paradigms. An IR is therefore best suited to answer our research question and help satisfy the need for new insights such that our understanding of SBE is not restricted [31].

This IR has been conducted according to Whittemore & Knafl’s framework [30] and involved the following five steps: (1) problem identification; (2) literature search; (3) data evaluation; (4) data analysis; and (5) presentation of findings. Whilst the key elements of this study’s methods are presented here, a detailed account of the review protocol has also been published [24]. The protocol highlights the rationale and justification of the chosen methodology, explores the underpinning philosophical paradigms, and critiques elements of the framework used [24].

Problem identification

We modified the PICOS (population, intervention/interest, comparison, outcome, study design) [32] framework to help formulate the research question for this study (Table 1), supplementing the ‘comparison’ arm with ‘context’ as described by Dhollande et al. [33]. This framework suited our study, in which the research question is situated within the context of well-established FLD practices within SBE. Simultaneously, it recognises that studies with alternative or no comparative arms can also contribute valuable insights into how and why SLDs influence debriefing outcomes.

Literature search

Search strategy

Using an extensive and broad strategy to optimise both the sensitivity and precision of the search, we searched seven electronic bibliographic databases (PubMed, Cochrane, Embase, ERIC, SCOPUS, CINAHL Plus, PsycINFO) up to and including October 2022. The search terms are presented in a logic grid (Table 2). Using a comparator/context arm minimised the risk of missing studies that describe SLDs by what they are not, i.e. as debriefings ‘without a facilitator’. A full delineation of the search strategy for each electronic database, including keywords and Boolean operators, is available (Additional file 1). Additionally, we manually searched the reference lists of relevant studies and SBE internet resources. We enlisted the expertise of a librarian to ensure appropriate focus and rigour [34, 35].
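To illustrate how a logic grid translates into a database query, the sketch below ORs the synonyms within each concept column and ANDs the columns together. It is a minimal sketch under stated assumptions: the terms in the hypothetical `LOGIC_GRID` are placeholders rather than the review's actual keywords (those are given in Table 2 and Additional file 1), the `*` truncation and double-quoted phrase syntax follow common database conventions but vary between interfaces, and placing self-led (intervention) and facilitator (comparator/context) terms in a single OR block is an assumption about how a comparator arm could retrieve studies that describe SLDs only as ‘without a facilitator’.

```python
# Hypothetical logic grid: keys are concept columns, values are synonyms.
# These terms are illustrative placeholders, NOT the review's actual search terms.
LOGIC_GRID = {
    "simulation": ["simulation", "simulated", "manikin"],
    "debriefing": ["debrief*", "reflection"],
    # Assumption: intervention (self-led) and comparator/context (facilitator)
    # terms share one OR block, so a study describing its debriefing only as
    # "without a facilitator" still matches this column.
    "self-led or facilitator": ["self-led", "peer-led", "facilitator", "instructor"],
}


def quote(term: str) -> str:
    """Wrap multi-word or hyphenated terms in double quotes for phrase searching."""
    return f'"{term}"' if (" " in term or "-" in term) else term


def build_query(grid: dict) -> str:
    """OR the synonyms within each concept column, then AND the columns together."""
    blocks = ["(" + " OR ".join(quote(t) for t in terms) + ")" for terms in grid.values()]
    return " AND ".join(blocks)


if __name__ == "__main__":
    print(build_query(LOGIC_GRID))
```

Running the script prints `(simulation OR simulated OR manikin) AND (debrief* OR reflection) AND ("self-led" OR "peer-led" OR facilitator OR instructor)`. Real database strategies would also add field tags and controlled vocabulary (such as MeSH headings in PubMed), which this sketch deliberately omits.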

Inclusion and exclusion criteria

Articles were included in this review if their content met the following criteria: (1) in-person debriefings following immersive simulated learning events; (2) debriefings including more than one learner; (3) healthcare professionals or students as learners; (4) published peer-reviewed empirical research; (5) reported in English. Forms of grey literature, such as doctoral theses, conference or poster abstracts, opinion or commentary pieces, letters, websites, blogs, instruction manuals and policy documents were excluded. Similarly, studies that described clinical event, individual, non-immersive or virtual debriefings were also excluded. Date of publication was not an exclusion criterion.

Study selection

Following removal of duplicates using the bibliographic software package EndNote™ 20, we screened the titles and abstracts of retrieved studies for eligibility. Full texts of eligible studies were then examined, and application of the inclusion and exclusion criteria determined which were appropriate for inclusion in this IR. We used a modified version of the Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA) reporting tool [36] to document this process.

Data evaluation

The process of assessing quality and risk of bias is complex in IRs due to the diversity of study designs, with each type of design generally necessitating differing criteria to demonstrate quality. In the context of this complexity, we used the Mixed Methods Appraisal Tool (MMAT) which details distinct criteria tailored across five study designs: qualitative, quantitative randomised-controlled trials (RCTs), quantitative non-RCTs, quantitative descriptive and mixed methods [37].

Data analysis

Data were analysed using a four-phase constant comparison method originally described for qualitative data analysis [38, 39]. Data are compared item by item so that similar data can be categorised and grouped together; further comparison between the resulting groups then allows an analytical synthesis of varied data originating from diverse methodologies. The four phases are (1) data reduction; (2) data display; (3) data comparison; and (4) conclusion drawing and verification [30, 38, 39]. Following data reduction and extraction, we performed reflexive thematic analysis (RTA) according to Braun & Clarke’s [40] framework to identify patterns, themes and relationships that could help answer our research question and form new perspectives and understandings of this complex topic [41]. RTA is an approach underpinned by qualitative paradigms, in which researchers have a central and active role in the interpretative analysis of patterns of data and their meanings, and thus in subsequent knowledge formation [40]. RTA is particularly suited to IRs exploring how and why complex phenomena might exist and relate to one another, as it enables researchers to analyse diverse datasets reflexively. It can therefore facilitate the construction of unique insights and perspectives that may otherwise be missed through other forms of data analysis. A comprehensive justification, explanation and critique of this process can be found in the accompanying IR protocol [24].
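The reduction, display and comparison phases of constant comparison can be pictured as bookkeeping over coded extracts: findings are reduced to short statements, displayed grouped by code, and compared across studies. The toy sketch below uses hypothetical study IDs (S1 to S3), findings, codes and helper names (`EXTRACTS`, `display_by_code`, `compare_groups`). It shows only that bookkeeping; in RTA the codes themselves, and the move to conclusion drawing and verification, come from the reviewers' interpretative work, which no script reproduces.

```python
from collections import defaultdict

# Hypothetical reduced extracts: (study_id, short finding, reviewer-assigned code).
# Codes are assumed to have been assigned by the reviewers beforehand.
EXTRACTS = [
    ("S1", "Video playback prompted discussion of unnoticed behaviours", "video playback"),
    ("S2", "Learners followed a printed Gather-Analyse-Summarise checklist", "structured framework"),
    ("S3", "Learners reviewed the recording before group discussion", "video playback"),
    ("S1", "Checklist kept the discussion on track", "structured framework"),
]


def display_by_code(extracts):
    """Data display: group extracted findings under their code so similar items sit together."""
    groups = defaultdict(list)
    for study, finding, code in extracts:
        groups[code].append((study, finding))
    return dict(groups)


def compare_groups(groups):
    """Data comparison: for each code, list the distinct studies contributing to it."""
    return {code: sorted({study for study, _ in items}) for code, items in groups.items()}


if __name__ == "__main__":
    for code, studies in compare_groups(display_by_code(EXTRACTS)).items():
        print(f"{code}: {', '.join(studies)}")
```

Run as a script, this prints `video playback: S1, S3` followed by `structured framework: S1, S2`, making visible which studies contribute to each grouping before any interpretative synthesis.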

Results

Study selection and quality assessment

The search revealed a total of 1301 publications, of which 357 were duplicates. After screening titles and abstracts, 69 studies were identified for full-text screening. From this, a total of 18 studies were included for data extraction and synthesis (Fig. 1). Reasons for study exclusion are listed in Additional file 2.

Fig. 1 Modified PRISMA flow diagram detailing summary report of search strategy [36]
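The intermediate stages of the flow diagram follow from the reported counts by subtraction. The minimal sketch below assumes that every de-duplicated record went to title and abstract screening and that no further records entered between stages; if the manual search of reference lists and SBE internet resources contributed records separately, Fig. 1 rather than this arithmetic is authoritative. The class name `PrismaFlow` is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrismaFlow:
    """Hypothetical container for the stage counts of a PRISMA-style flow diagram."""

    identified: int          # records identified by the search
    duplicates: int          # records removed as duplicates
    full_text_assessed: int  # studies retrieved for full-text screening
    included: int            # studies included in data extraction and synthesis

    @property
    def screened(self) -> int:
        """Records screened on title and abstract (assumes all de-duplicated records were screened)."""
        return self.identified - self.duplicates

    @property
    def excluded_at_screening(self) -> int:
        """Records excluded on title and abstract."""
        return self.screened - self.full_text_assessed

    @property
    def excluded_at_full_text(self) -> int:
        """Full texts excluded after applying the inclusion and exclusion criteria."""
        return self.full_text_assessed - self.included


if __name__ == "__main__":
    flow = PrismaFlow(identified=1301, duplicates=357, full_text_assessed=69, included=18)
    print(flow.screened, flow.excluded_at_screening, flow.excluded_at_full_text)
```

With the reported figures this gives 944 records screened, 875 excluded on title and abstract, and 51 excluded at full text.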

All 18 studies were appraised using the MMAT (Table 3). Five questions, adjusted for differing study designs, were asked of each study, and assessed as ‘yes’, ‘no’ or ‘can’t tell’. The methodological qualities and risk of bias within individual studies impacted the analysis of their data and the subsequent weighting and contribution to the results of this review. The quality assessment process therefore influences the interpretations that can be drawn from such a collective dataset. Whilst the studies demonstrated varying quality, scoring between 40 and 100% of ‘yes’ answers across the five questions, no studies were excluded from the review based on the quality assessment. There were wide discrepancies in the quality of different components of the mixed methods studies. For example, Boet et al. [15] scored 0% for the qualitative component and 100% for the quantitative component of their mixed methods study. The quantitative results were therefore weighted more significantly than the qualitative component in the data analysis and its incorporation into the results of this review. Meanwhile, Boet et al.’s [16] qualitative study scored 100%, thus strengthening the influence and contribution of the results from that study within this IR.
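The percentages above correspond to the share of the five criteria answered ‘yes’, so each criterion contributes 20 percentage points and a score can only be 0, 20, 40, 60, 80 or 100%. The sketch below is a minimal illustration, assuming that ‘no’ and ‘can’t tell’ both count as a criterion not met; the function name `percent_yes` and the example answers are hypothetical. Mixed methods components are scored separately, mirroring the Boet et al. [15] example of 0% for the qualitative and 100% for the quantitative component.

```python
# Allowed MMAT responses ("can't tell" written with a straight apostrophe).
ALLOWED = {"yes", "no", "can't tell"}


def percent_yes(answers: list) -> float:
    """Return the percentage of the five appraisal criteria answered 'yes'.

    Assumption: 'no' and "can't tell" both count as a criterion not met.
    """
    if len(answers) != 5:
        raise ValueError("expected one answer for each of the five criteria")
    unknown = [a for a in answers if a not in ALLOWED]
    if unknown:
        raise ValueError(f"unexpected answer(s): {unknown}")
    return 100 * sum(a == "yes" for a in answers) / len(answers)


if __name__ == "__main__":
    # Hypothetical mixed methods study: each component appraised on its own.
    components = {
        "qualitative": ["no", "no", "can't tell", "no", "no"],
        "quantitative": ["yes", "yes", "yes", "yes", "yes"],
    }
    for name, answers in components.items():
        print(f"{name}: {percent_yes(answers):.0f}%")
```

Run as a script, this prints `qualitative: 0%` and `quantitative: 100%`. Keeping component scores separate, rather than averaging them, is what allows the stronger component to be weighted more heavily in the synthesis.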

Study characteristics

Key characteristics of articles, including the study aim and design, sample characteristics, descriptions of SLE and SLD formats, data collection instruments, and key reported study findings, are summarised in Table 4. The search elicited one qualitative study, eight quantitative RCTs, six quantitative non-RCTs, one quantitative descriptive study and two mixed methods studies. All 18 studies originated from socio-economically developed countries, with six studies originating from South Korea [44,45,46, 51,52,53], five from the USA [42, 43, 48, 54, 55], and the remainder from Canada [15, 16], Australia [56, 57], Spain [49, 50], and Switzerland [47]. Two studies were multi-site [51, 52]. The immersive SLE activities were of varying formats, designs, and durations. Sixteen studies described team-based scenarios [15, 16, 42, 44,45,46,47,48,49,50,51,52, 54,55,56,57], whilst two used individual scenarios [43, 53], with learners then debriefing in groups of more than one learner. Four studies incorporated simulated participants in the scenarios [42, 43, 45, 46]. All studies obtained ethical approval and were published after 2013.

Learner characteristics

In total, the 18 studies recruited 2459 learners. Of these, the majority were undergraduate students of varying professional backgrounds: 1814 nursing, 210 medical, 158 dental, 73 occupational therapy, and 39 physiotherapy students. Only 165 learners were postgraduate professionals: 129 doctors and 26 nurses. In all but four studies [15, 16, 49, 54], learners worked with their own professional group rather than as part of an interprofessional team.

Self-led debriefing format

The specific debriefing activities, whether SLDs, FLDs or a combination of both, took several different formats and lasted between 3 and 90 min. Most SLDs utilised a written framework or checklist to guide learners through the debriefing, although this was unclear in two studies [42, 44]. Two studies required learners to independently self-reflect, via a written task, prior to commencing group discussion [49, 50]. Some studies included video playback within their debriefings [15, 16, 42, 43, 49,50,51,52].

Data collection instruments and outcome measures

In total, 38 different data collection instruments were used across the 18 studies. These are listed in Table 4, along with their components and incorporated scales where described in sufficient detail within the primary study. The validity and reliability of these instruments vary: 13 data collection instruments were developed by study authors without data on validity or reliability. Authors used one or more instruments to measure outcomes in five key domains (Table 5).

Key reported findings of studies

There was significant heterogeneity between the designs, aims, samples, SLD formats, outcome measures and contexts of the 18 studies, with findings that were often conflicting and inherently biased owing to the study designs and outcome measures used. Nine studies reported equivalent outcomes for some elements of debriefing quality, participant performance or competence, self-confidence or self-assessment of competence, or participant satisfaction [15, 45,46,47,48,49, 52, 53, 56]. However, of these nine, five also reported that SLDs were significantly less effective when assessed against other elements of the outcome measures [45, 46, 49, 52, 56]. In addition to these five, two studies reported decreased effectiveness of SLDs in comparison with FLDs or a combination of SLD + FLD [43, 50]. Conversely, only Lee et al. [52] and Oikawa et al. [48] reported significant improvements in selected outcome measures with SLDs compared with FLDs, whilst Kündig et al. [47] reported improvements in two performance parameters with SLDs when compared with no debriefing.

Four studies investigated a combination strategy of SLD + FLD and demonstrated either significantly improved or equivalent outcomes compared with SLDs or FLDs alone [49,50,51, 56]. Kang and Yu [51] reported significantly improved outcomes for problem-solving and debriefing satisfaction, but no differences in debriefing quality or team effectiveness. Other studies reported the opposite, with significantly improved team effectiveness and debriefing quality, but no improvements in problem-solving or debriefing experience [49, 50]. Tutticci et al. [56] reported both significant and non-significant improvements in reflective thinking, depending on which scoring tool was used. These findings, however, must be interpreted in the context of variable quality appraisal scores (Table 3), wide variation in SLD formats and data collection instruments, and improved outcomes regardless of the method of debriefing used.

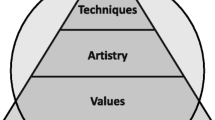

Thematic analysis results

We undertook reflexive thematic analysis (RTA) of the data set, revealing four themes and 11 subthemes (Fig. 2). The process of tabulating themes and an exemplar of coding strategy and theme development can be found in Additional files 3 and 4.

Theme 1: Promoting self-reflective practice

The analysis of data revealed that promoting self-reflective practice is the most fundamental component of how and why SLDs influence debriefing outcomes. Debriefings can encourage groups of learners to critically reflect on their shared simulated experiences, leading to enhanced cognitive, social, behavioural and technical learning [15, 16, 42, 43, 45,46,47,48, 50, 51, 53, 54, 56, 57]. Different components within SLDs, including structured frameworks, video playback, and debriefing content, may influence such self-reflective practice. Most authors advocated a printed framework or checklist to help guide learners through the SLD process; despite this, SLDs were found to be less structured than FLDs [16]. The Gather-Analyse-Summarise framework [59] was the most commonly used [46, 49,50,51, 53]. One study compared two locally developed debriefing instruments, the Team Assessment Scales (TAS) and Quick-TAS (Q-TAS), concluding that the Q-TAS was more effective in enabling the analysis of actions, but equivalent in all other measures [54].

Video playback offered a form of feedback for learners that encouraged reflective processing of scenarios [15, 16, 52]. One article concluded by quoting a learner: ‘I learned it’s worthwhile to revisit situations like this. I know I won’t always have video to critique, but being able to rethink through the appointment will be helpful to review which tactics helped and which ones need to be revised’ ([42], p. 929). In this way, video playback enables learners to perceive behaviours of which they were previously unaware [15]. Whilst many studies lacked interrogation of content within SLDs, Boet et al. [16] provided an extensive analysis, reporting that interprofessional SLDs centred on content such as situational awareness, leadership, communication, roles, and responsibilities. Furthermore, learners’ perceptions of their performance in relation to this content offered entry points into reflection [16]. Some studies required learners to document their thoughts and impressions [44, 45, 47, 48, 50, 53]; however, the influence of such documentation on promoting self-reflective practice was inconclusive.

Combined SLD + FLD strategies involved learner and faculty co-debriefing [56], or SLDs preceding FLDs [49,50,51]. Using the Reflective Thinking Instrument, one study reported that FLD and combined SLD + FLD groups demonstrated significantly higher levels of reflective thinking than SLD groups [56]. Within the limitations of a tool with poor validity and reliability, this study provides the best evidence that a combination approach to debriefing groups may be the most beneficial method for encouraging learners’ critical self-reflection. This finding is supported by results from three other studies showing improved outcomes with combined debriefing strategies across team effectiveness [49], debriefing quality [50], problem-solving processes [51] and satisfaction with debriefing [50, 51].

Theme 2: Experience and background of learners

The experience and background of learners have a profound impact on how and why SLDs influence debriefing outcomes. Previous SBE experience may significantly affect learners’ ability to engage meaningfully with the SLD process and may influence their expectations as to how a simulated scenario will progress [15, 16]. Furthermore, previous experience with FLDs may contribute positively to rich reflective discussion within SLDs, as learners are better placed to integrate FLD goals and processes within a new context [16]. Whilst its influence on the conduct of SLDs is less clear, Boet et al. [16] note that real-world clinical experience allows learners to recontextualise their simulated experiences more readily and may therefore act as an entry point into the reflective process. In teams from the same professional background, learners appreciated the value of learning from constructive exchanges of opinion between colleagues operating at the same level [42, 44, 45], and of role-modelling teamwork behaviours [48], whilst interprofessional SLDs may help break down traditional working silos and support learning in contexts that replicate clinical practice [15]. Finally, learners originated mainly from either South Korea or North America. Cultural differences between Korean and Western learners may affect debriefing practices, with Korean students being described as less expressive than their Western colleagues [46]. The impact of cultural diversity on SLD methods, however, was not specifically investigated [44, 46, 53].

Theme 3: Challenges of conducting SLDs

Challenges of conducting SLDs were constructed from the dataset, including closing knowledge gaps, reinforcement of erroneous information, and resource allocation. The absence of expert facilitators may represent a missed learning opportunity, whereby erroneous information could be discussed and consolidated, negatively affecting subsequent performance [44, 45, 47, 51] and potentially persisting into clinical practice [46]. There was a consistent student preference for FLDs over SLDs, which may indicate that learners seek expert reassurance and accurate debriefing content not readily available from peers [43, 50]. A significant motivating factor for investigating and employing SLDs is their potential to reduce costs by lessening the requirement for expensive faculty presence [15, 16, 44,45,46, 49, 57]. However, SLDs do not appear to negate the need for faculty presence completely, but rather limit the faculty role to specific elements within an SLE [15, 16]. Furthermore, the greatest impact on debriefing outcomes may come from incorporating SLDs in combination with, rather than at the expense of, FLDs [49,50,51, 56]. Finally, most articles integrated an FLD element within their SLE, thereby negating positive impacts on resource allocation [15, 16, 42,43,44,45,46, 49,50,51, 54,55,56,57].

Theme 4: Facilitation and leadership

The facilitation and leadership of SLDs may have a considerable impact on how and why SLDs influence debriefing outcomes. Only five articles described how learners were allocated as leaders and facilitators of SLDs [43, 54,55,56,57]. Learners were randomly allocated to lead and facilitate SLDs either prior to, or on the day of, the SLE [54,55,56]. In two studies, learners took turns leading the debrief such that all learners facilitated at least one SLD [43, 57]. No articles discussed how the leadership and facilitation role influenced learners, nor learners’ reactions, thoughts, or feelings towards the role, the content, or reflective learning in subsequent debriefings. In two articles describing the same learner sample, only one of 17 interprofessional SLDs was nurse-led, all others being led by a medical professional [15, 16]. Such situations may have unintended implications by reinforcing stereotypes and hierarchical power imbalances.

Learners were trained to lead the SLDs in only two studies. In one, learners were randomly allocated to lead the SLDs and were directed to online resources, including videos, checklists, and relevant articles, to help them prepare for this role prior to the SLE [56]. No information concerning learners’ engagement with the resources was documented. In the other, learners were given 60 min of training on providing constructive feedback to peers, which did not lead to improved outcomes for debriefing quality, performance, or self-confidence [43].

Discussion

The aim of this IR was to collate, synthesise and analyse the relevant literature to explore, with comparison to FLDs, how and why in-person SLDs influence debriefing outcomes for groups of learners in immersive SBE. The review identified 18 empirical studies with significant heterogeneity with respect to designs, contexts, learner characteristics, and data collection instruments. It is important to recognise that the review’s findings are limited by the variety and variable quality of the data collection instruments and debriefing outcome measures used in these studies, as well as by some of the study designs themselves. Nevertheless, the findings of this review suggest that, across a range of debriefing outcomes, in situations where resources for FLDs are limited, SLDs can provide an alternative opportunity to safeguard effective learning. In some cultural and professional contexts, and for certain debriefing outcome measures, SLDs and FLDs may provide equivalent educational outcomes. Additionally, a small number of studies suggests that combined SLD + FLD strategies may be the optimal approach. Furthermore, SLDs influence debriefing outcomes most powerfully by promoting self-reflection amongst groups of learners.

Promoting self-reflection

Aligned with social constructivist theory [80], the social interaction of collaborative group learning in a reflective manner can lead to the construction, promotion and sharing of a wide range of interpersonal and team-based skills [81, 82]. Currently, there is a lack of evidence concerning which frameworks are best suited to maximise such reflection [10], especially in SLDs. Whilst framework use is associated with improvements in debriefing quality and subsequent performance, some evidence suggests that, in terms of promoting reflective practice, the specific framework itself is of less importance than the skills of the facilitator using it and the context in which it is applied [7, 9, 10]. In SLDs, there is no facilitator to guide this process; one may therefore infer that the framework itself may have relatively more influence on debriefing outcomes and the reflective process of learners than it does in FLDs. Conversely, whichever framework was used, the quality of the SLDs was rated highly, implying that it may be the structure provided by a framework, as opposed to its content, that is the critical factor for promoting reflection. Based on the findings of their qualitative study, in which self-reflexivity, connectedness and social context informed learning within debriefings, Gum et al. [83] developed a reflective conceptual framework rooted in transformative learning theory [84], which purported to enable learners to engage in critical discourse and learning. By placing learners at the centre of the model, and by focusing on these three themes, this framework seems suited to groups of learners in SLDs. However, like many other debriefing frameworks, it remains untested in SLD contexts. In a study of business students, Eddy et al. [85] describe using an online tool that anonymously captured and analysed individual team members’ perceptions of an experience. The tool then prioritised reported themes to create a customised guide for teams to use in a subsequent in-person group SLD. The study reported that using this tool resulted in superior team processes and subsequently greater team performance when compared with SLDs using a generic debriefing guide only. Such tools may have a place in promoting self-reflection in healthcare SBE, for example with postgraduate learners with previous experience of debriefings or those who have undertaken training in debriefing facilitation.

Furthermore, other structures or techniques that may help promote self-reflection amongst groups of learners in SLDs remain untested in this context. For example, SLDs could take the form of in-person or online post-scenario reflective activities, in which learners work collaboratively on pre-determined tasks aligned to ILOs. Escape room activities in SBE, in which learners work together to solve puzzles and complete tasks through gamified scenarios, have drawn on concepts grounded in self-determination theory [86], with promising results in terms of improving self-reflection and learning outcomes [87, 88]. Meanwhile, individual virtual SLD interventions rooted in Kolb’s experiential learning theory [89] have been tested and are purported to enable critical reflection amongst users [90, 91]. Although such approaches may be relatively resource-intensive to create, they could be applied to SLDs for groups of learners in immersive SBE and may prove resource-efficient once established.

Video playback

In both individual and group SLD exercises, video playback can allow learners to self-reflect, analyse performance, minimise hindsight bias, and identify mannerisms or interpersonal behaviours that may otherwise remain hidden [15, 42, 52, 92,93,94,95]. These findings are supported by situated learning theory, whereby learning can be associated with repeated cycles of visualisation of, and engagement with, social interactions and interpersonal relationships that enable co-construction of knowledge amongst learners [96]. Conversely, in group SLD contexts, watching video playback may have unintended consequences for psychological safety, making learners feel self-conscious and anxious and negatively affecting their ability to engage meaningfully with reflective learning [93]. A systematic review concluded that the benefits of video playback depend largely on the skill of the facilitator rather than on the video playback itself [95]; as such, its role in influencing debriefing outcomes in SLDs remains uncertain.

Combining self-led and facilitator-led debriefings

The findings of this review suggest that combining SLDs and FLDs may optimise participant learning [49,50,51, 56], although this may also depend on other variables such as the expertise of debriefers and the contexts in which debriefings occur. Whilst the reported improvements in outcomes are situated in the context of in-person SLDs for groups of learners, they are supported by the wider literature. For example, a Canadian research group investigated combining in-person and virtual individual SLD formats with FLDs, reporting improved debriefing outcomes across multiple domains including knowledge gains, self-efficacy, maximising reflection, and debriefing experience [90, 97,98,99]. The SLD components of the combined strategy enable learners to reflect, build confidence, identify knowledge gaps, collect and organise their thoughts, and prepare for group interaction prior to an FLD [90, 97,98,99]. However, these studies are limited by the unreliability of their outcome measures.

Facilitation and leadership

Only two studies provided training for learners in how to facilitate debriefings and provide constructive feedback [43, 56]. This is surprising given the emphasis on faculty development in the SBE literature [6, 9, 28, 100]. RTA of the data highlighted how previous experience with FLDs may influence learners’ ability to actively engage in the reflective nature of the SLD process [15, 16]. This brings into question whether learners should have some familiarity with debriefing processes, either via previous experience or targeted training, prior to facilitating group SLDs.

Variables such as learners’ debriefing experiences and educational context have implications for the interpretation of the findings of this review. In particular, the possible influence of prior FLD experience on learners’ ability to engage reflectively in SLDs [15, 16] raises questions about whether SLDs may be more suitable for certain populations, such as students undergoing early training or postgraduates who are relatively more experienced in SBE. Training peers as facilitators, who then act in an ‘instructor’ role rather than as part of the learner group, has also been reported as an effective way to positively influence debriefing outcomes [101, 102]. However, training learners to facilitate SLDs involves significant resource commitments, thus negating some of the initial reasons for instigating SLDs.

Data collection instruments and outcome measures

The studies included in this review used multiple data collection tools to gauge the influence of SLDs on debriefing outcomes across five domains (Table 5). This diversity in approaches to outcome measurement is problematic because it impedes fair, effective and robust comparison between studies [103]. Certain instruments, such as the Debriefing Assessment for Simulation in Healthcare (Student Version) [68] and the Debriefing Experience Scale [69], are validated and reliable tools for assessing learner perceptions of, and feelings towards, debriefing quality in certain contexts. However, learner perceptions of debriefing quality do not necessarily translate into an objective evaluation of debriefing practices. Additionally, some studies relied on learner self-confidence and self-reported assessment questionnaires as outcome measures, despite self-perceived competence and confidence being poor surrogate markers for clinical competence [104]. Commonly used tools for measuring debriefing quality may not be suitable for SLDs, and a ‘one-size-fits-all’ approach could invalidate results [105]. To our knowledge, no validated or reliable tool is currently available that specifically assesses the debriefing quality of SLDs.

Psychological safety

One important challenge of conducting SLDs, which was not constructed through the RTA of this dataset, is ensuring the psychological safety of learners in debriefings. Psychological safety is defined as ‘a shared belief held by members of a team that the team is safe for interpersonal risk taking’ ([106], p. 350), and its establishment, maintenance and restoration in debriefings is of paramount importance for learners participating in SBE [107, 108]. Oikawa et al. [48] stated that ‘self-debriefing may augment reflection through the establishment of an inherently safe environment’ ([48], p. 130), although how safe environments are ‘inherent’ within SLDs is unclear. Tutticci et al. [56] cite secondary sources [83, 109] stating that peer groups can improve collegial relationships and engender safe learning environments that improve empathy whilst reducing the risk of judgement. Conversely, SLDs could instead foster psychologically unsafe environments, leading to unintended harmful practices. In interprofessional contexts, where historical power imbalances, hierarchies and professional divisions can exist [11, 110, 111], and in which facilitator skill has been the most frequently cited enabler of psychological safety [112], one can infer that threats to psychological safety may be accentuated in SLDs.

In contrast, researchers found that engaging in an individual SLD enhanced psychological safety by helping learners decrease their stress and anxiety, thus leading to more active engagement and meaningful dialogue in subsequent FLDs [99]. Another study reported that learners described the familiarity of connecting with known peers within SLDs as fostering psychological safety and enabling learning [98]. However, these studies were excluded from this review because they involved individual rather than group SLDs. Nevertheless, their finding that combined SLD + FLD strategies enable psychological safety may partially explain the findings of this review, and psychological safety may therefore be a central concept in understanding how and why SLDs influence debriefing outcomes.

For teams regularly working together in clinical contexts, their antecedent psychological safety has a major influence on any SLEs they undertake [113]. This subsequently impacts on how team members, both individually and collectively, experience psychological safety within their real clinical environment [113]. The place of SLDs in such contexts, along with their potential advantages and risks, remains undetermined.

Limitations

This review specifically investigates in-person group debriefings; therefore, the results may not be applicable to individual or virtual SLD contexts. The inclusion criteria were limited to published, peer-reviewed empirical research studies in English, excluding grey literature. This may introduce bias: some evidence suggests that excluding grey literature can lead to exaggerated conclusions [114, 115], and there are concerns regarding publication bias [116]. We also acknowledge that the choices made in constructing and implementing our search strategy (Additional file 1) may have affected the total number of articles identified for inclusion in this review. Finally, the heterogeneity of the included studies limits the certainty with which generalisable conclusions can be drawn. On the other hand, heterogeneity enables a diverse body of evidence to be analysed and better informs where gaps lie and where future research is needed.

Recommendations for future research

The findings of this review have highlighted several areas requiring further research. Firstly, the role of combining group SLDs with FLDs should be explored, both quantitatively and qualitatively, to establish its place within immersive SBE. Secondly, to inform best practice, different methods, structures and frameworks of group SLDs need to be investigated to assess what may work, for whom and in which contexts. This extends to further research with different groups, such as interprofessional learners, to ascertain whether certain contexts are more suitable for SLDs than others. Such work may feed into the production of guidelines to help standardise SLD practices across these differing contexts. Thirdly, data collection instruments require assessment and testing, as current tools are not fit for purpose. Clarification is needed of what is suitable and measurable in terms of debriefing quality and learning outcomes, especially in relation to group SLDs. Finally, whilst research into fostering psychological safety in FLDs is emerging, the same is not true of SLDs, and this needs to be explored to ensure that SLDs are not psychologically harmful for learners.

Conclusions

To our knowledge this is the first review to explore how and why in-person SLDs influence debriefing outcomes for groups of learners in immersive SBE. The findings address an important gap in the literature and have significant implications for simulation-based educators involved with group debriefings across a variety of contexts. The synthesised findings of this review suggest that, across a range of debriefing outcome measures, in-person SLDs for groups of learners following immersive SBE are preferable to conducting no debriefing at all. In certain cultural and professional contexts, such as postgraduate learners and those with previous debriefing experience, SLDs can support effective learning and may provide equivalent educational outcomes to FLDs or SLD + FLD combination strategies. Furthermore, there is some evidence to suggest that SLD + FLD combination approaches may optimise participant learning, with this approach warranting further research.

Under certain conditions, SLDs can enable learners to achieve suitable levels of critical self-reflection and learning. As with FLDs, promoting self-reflective practice within groups of learners is fundamental to how and why SLDs influence debriefing outcomes, because it is through this metacognitive skill that effective learning and behavioural consolidation or change can occur. However, more work is required to ascertain for whom and in what contexts SLDs may be most appropriate. Where resources for FLDs are limited, SLDs may provide an alternative opportunity to enable effective learning. Nevertheless, their true value within immersive SBE may lie as an adjunct to FLDs.

Availability of data and materials

The datasets used and/or analysed during the current study are available within the article and supplementary files or from the corresponding author on reasonable request.

References

Dieckmann P, Friis SM, Lippert A, Østergaard D. The art and science of debriefing in simulation: ideal and practice. Med Teach. 2009;31:e287–94.

Fanning RM, Gaba DM. The role of debriefing in simulation-based learning. Simul Healthc. 2007;2(2):115–25.

Levett-Jones T, Lapkin S. A systematic review of the effectiveness of simulation debriefing in health professional education. Nurse Educ Today. 2014;34:e58–63.

Cheng A, Eppich W, Grant V, Sherbino J, Zendejas B, Cook DA. Debriefing for technology-enhanced simulation: a systematic review and meta-analysis. Med Educ. 2014;48:657–66.

Al Sabei SD, Lasater K. Simulation debriefing for clinical judgement: a concept analysis. Nurse Educ Today. 2016;45:42–47.

Cheng A, Morse KJ, Rudolph J, Arab AA, Runnacles J, Eppich W. Learner-centered debriefing for healthcare simulation education: lessons for faculty development. Simul Healthc. 2016;11(1):32–40.

Sawyer T, Eppich W, Brett-Fleegler M, Grant V, Cheng A. More than one way to debrief: a critical review of healthcare simulation debriefing methods. Simul Healthc. 2016;11(3):209–17.

Cheng A, Palaganas J, Eppich W, Rudolph J, Robinson T, Grant V. Co-debriefing for simulation-based education: a primer for facilitators. Simul Healthc. 2015;10:69–75.

Cheng A, Grant V, Huffman J, Burgess G, Szyld D, Robinson T, et al. Coaching the debriefer: peer coaching to improve debriefing quality in simulation programs. Simul Healthc. 2017;12(5):319–25.

Endacott R, Gale T, O’Connor A, Dix S. Frameworks and quality measures used for debriefing in team-based simulation: a systematic review. BMJ Simul Technol Enhanc Learn. 2019;5:61–72.

Kumar P, Paton C, Simpson HM, King CM, McGowan N. Is interprofessional co-debriefing necessary for effective interprofessional learning within simulation-based education? IJoHS. 2021;1(1):49–55.

Tannenbaum SI, Cerasoli CP. Do team and individual debriefs enhance performance? A meta-analysis. Hum Factors. 2013;55(1):231–45.

Allen JA, Reiter-Palmon R, Crowe J, Scott C. Debriefs: teams learning from doing in context. Am Psychol. 2018;73(4):504–16.

Boet S, Bould MD, Bruppacher HR, Desjardins F, Chandra DB, Naik VN. Looking in the mirror: self-debriefing versus instructor debriefing for simulated crises. Crit Care Med. 2011;39(6):1377–81.

Boet S, Bould MD, Sharma B, Reeves S, Naik VN, Triby E, et al. Within-team debriefing versus instructor-led debriefing for simulation-based education: a randomized controlled trial. Ann Surg. 2013;258(1):53–8.

Boet S, Pigford A, Fitzsimmons A, Reeves S, Triby E, Bould MD. Interprofessional team debriefings with or without an instructor after a simulated crisis scenario: an exploratory case study. J Interprof Care. 2016;30(6):717–25.

Garden AL, Le Fevre DM, Waddington HL, Weller JM. Debriefing after simulation-based non-technical skill training in healthcare: a systematic review of effective practice. Anaesth Intensive Care. 2015;43(3):300–8.

Keiser NL, Arthur W Jr. A meta-analysis of the effectiveness of the after-action review (or debrief) and factors that influence its effectiveness. J Appl Psychol. 2021;106(7):1007–32.

Dufrene C, Young A. Successful debriefing- best methods to achieve positive learning outcomes: a literature review. Nurse Educ Today. 2014;34:372–6.

Kim Y, Yoo J. The utilization of debriefing for simulation in healthcare: a literature review. Nurse Educ Pract. 2020;43:102698.

Lee J, Lee H, Kim S, Choi M, Ko IS, Bae J, et al. Debriefing methods and learning outcomes in simulation nursing education: a systematic review and meta-analysis. Nurse Educ Today. 2020;87:104345.

Niu Y, Liu T, Li K, Sun M, Sun Y, Wang X, et al. Effectiveness of simulation debriefing methods in nursing education: a systematic review and meta-analysis. Nurse Educ Today. 2021;107:105113.

MacKenna V, Díaz DA, Chase SK, Boden CJ, Loerzel V. Self-debriefing in healthcare simulation: an integrative literature review. Nurse Educ Today. 2021;102:104907.

Kumar P, Somerville SG. Exploring self-led debriefings in simulation-based education: an integrative review protocol. IJoHS. 2023;1–10.

Rees CE, Crampton PES, Monrouxe LV. Revisioning academic medicine through a constructionist lens. Acad Med. 2020;95(6):846–50.

Crotty M. The foundations of social research: Meaning and perspective in the research process. Sage Publications; 2003.

Brown MEL, Dueñas AN. A medical science educator’s guide to selecting a research paradigm: Building a basis for better research. Med Sci Educ. 2020;30:545–53.

Kumar P, Collins K, Paton C, McGowan N. Continuing professional development for faculty in simulation-based education. IJoHS. 2021;1(1):63.

Norman G, Sherbino J, Varpio L. The scope of health professions education requires complementary and diverse approaches to knowledge synthesis. Perspect Med Educ. 2022;11(3):139–43.

Whittemore R, Knafl K. The integrative review: updated methodology. J Adv Nurs. 2005;52(5):546–53.

Eppich W, Reedy G. Advancing healthcare simulation research: innovations in theory, methodology, and method. Adv Simul. 2022;7:23.

Methley AM, Campbell S, Chew-Graham C, McNally R, Cheraghi-Sohi S. PICO, PICOS and SPIDER: a comparison study of specificity and sensitivity in three search tools for qualitative systematic reviews. BMC Health Serv Res. 2014;14(1):579.

Dhollande S, Taylor A, Meyer S, Scott M. Conducting integrative reviews: a guide for novice nursing researchers. J Res Nurs. 2021;26(5):427–38.

Aromataris E, Riitano D. Constructing a search strategy and searching for evidence: a guide to the literature search for a systematic review. Am J Nurs. 2014;114(5):49–56.

Lefebvre C, Glanville J, Briscoe S, Featherstone R, Littlewood A, Marshall C, et al. Chapter 4: Searching for and selecting studies. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane handbook for systematic reviews of interventions (version 6.3). 2022. https://training.cochrane.org/handbook. Accessed 5 Jul 2023.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Hong QN, Pluye P, Fàbregues S, Bartlett G, Boardman F, Cargo M, et al. Mixed Methods Appraisal Tool (MMAT), Version 2018 User Guide. McGill University, Department of Family Medicine, Montreal. 2018. http://mixedmethodsappraisaltoolpublic.pbworks.com/w/file/fetch/146002140/MMAT_2018_criteria-manual_2018-08-08c.pdf. Accessed 5 Jul 2023.

Miles MB, Huberman AM. Qualitative data analysis: an expanded sourcebook. 2nd ed. Sage Publications; 1994.

Miles MB, Huberman AM, Saldana J. Qualitative data analysis: a methods sourcebook. 3rd ed. Sage Publications; 2014.

Braun V, Clarke V. Thematic analysis: a practical guide. Sage Publications; 2021.

Kutcher AM, LeBaron VT. A simple guide for completing an integrative review using an example article. J Prof Nurs. 2022;40:13–9.

Quick KK. The role of self- and peer-assessment in dental students’ reflective practice using standardized patient encounters. J Dent Educ. 2016;80(8):924–9.

Andrews E, Dickter DN, Stielstra S, Pape G, Aston SJ. Comparison of dental students’ perceived value of faculty vs. peer feedback on non-technical clinical competency assessments. J Dent Educ. 2019;83(5):536–45.

Ha E. Effects of peer-led debriefing using simulation with case-based learning: written vs. observed debriefing. Nurse Educ Today. 2020;84:104249.

Ha E, Lim EJ. Peer-led written debriefing versus instructor-led oral debriefing: using multimode simulation. Clin Simul Nurs. 2018;18:38–46.

Kim SS, De Gagne JC. Instructor-led vs. peer-led debriefing in preoperative care simulation using standardized patients. Nurse Educ Today. 2018;71:34–9.

Kündig P, Tschan F, Semmer NK, Morgenthaler C, Zimmerman J, Holzer E, et al. More than experience: a post-task reflection intervention among team members enhances performance in student teams confronted with a simulated resuscitation task- a prospective randomised trial. BMJ Simul Technol Enhanc Learn. 2020;6(2):81–6.

Oikawa S, Berg B, Turban J, Vincent D, Mandai Y, Birkmire-Peters D. Self-debriefing vs instructor debriefing in a pre-internship simulation curriculum: night on call. Hawaii J Med Public Health. 2016;75(5):127–32.

Rueda-Medina B, Gómez-Urquiza JL, Molina-Rivas E, Tapia-Haro R, Aguilar-Ferrándiz ME, Correa-Rodríguez M. A combination of self-debriefing and instructor-led debriefing improves team effectiveness in health science students. Nurse Educ. 2020;46(1):E7–E11.

Rueda-Medina B, Schmidt-RíoValle J, González-Jiménez E, Fernández-Aparicio Á, Aguilar-Ferrándiz ME, Correa-Rodríguez M. Peer debriefing versus instructor-led debriefing for nursing simulation. J Nurs Educ. 2021;60(2):90–5.

Kang K, Yu M. Comparison of student self-debriefing versus instructor debriefing in nursing simulation: a quasi-experimental study. Nurse Educ Today. 2018;65:67–73.

Lee M, Kim S, Kang K, Kim S. Comparing the learning effects of debriefing modalities for the care of premature infants. Nurs Health Sci. 2020;22:243–53.

Na YH, Roh YS. Effects of peer-led debriefing on cognitive load, achievement emotions, and nursing performance. Clin Simul Nurs. 2021;55:1–9.

Paige JT, Kerdolff KE, Roger CL, Garbee DD, Yu Q, Cao W, et al. Improvement in student-led debriefing analysis after simulation-based team training using a revised teamwork assessment tool. Surgery. 2021;170(6):1659–64.

Schreiber J, Delbert T, Huth L. High fidelity simulation with peer debriefing: influence of student observation and participant roles on student perception of confidence with learning and feedback. J Occup Ther Educ. 2020;4(2):8.

Tutticci N, Coyer F, Lewis PA, Ryan M. Student facilitation of simulation debrief: Measuring reflective thinking and self-efficacy. Teach Learn Nurs. 2017;12(2):128–35.

Curtis E, Ryan C, Roy S, Simes T, Lapkin S, O’Neil B, et al. Incorporating peer-to-peer facilitation with a mid-level fidelity student led simulation experience for undergraduate nurses. Nurse Educ Pract. 2016;20:80–4.

Jaye P, Thomas L, Reedy G. ‘The diamond’: a structure for simulation debrief. Clin Teach. 2015;12(3):171–5.

Phrampus PE, O’Donnell JM. Debriefing using a structured and supported approach. In: Levine AI, DeMaria S, Schwartz AD, Sim AJ, editors. The comprehensive textbook of healthcare simulation. Springer; 2013. p. 73–84.

Kim J, Neilipovitz D, Cardinal P, Chiu M, Clinch J. A pilot study using high-fidelity simulation to formally evaluate performance in the resuscitation of critically ill patients: The University of Ottawa Critical Care Medicine, High-Fidelity Simulation, and Crisis Resource Management I Study. Crit Care Med. 2006;34(8):2167–74.

Do S, Son K, Byun J, Lim J. Development and construct validation of the Korean Achievement Emotions Questionnaire (K-AEQ). Kor J Educ Psychol. 2011;25(4):945–70.

Pekrun R, Goetz T, Frenzel AC, Barchfeld P, Perry RP. Measuring emotions in students’ learning and performance: the Achievement Emotions Questionnaire (AEQ). Contemp Educ Psychol. 2011;36(1):36–48.

Kim AY, Park IY. Construction and validation of academic self-efficacy scale. Kor J Educ Res. 2001;39(1):95–123.

Dickter DN, Stielstra S, Mackintosh S, Garner S, Finocchio VA, Aston SJ. Development of the Ambulatory Team Observed Structured Clinical Evaluation (ATOSCE). Med Sci Educ. 2013;23(3S):554–8.

Frankel A, Gardner R, Maynard L, Kelly A. Using the Communication And Teamwork Skills (CATS) assessment to measure health care team performance. Jt Comm J Qual Patient Saf. 2007;33(9):549–58.

Baptista RCN, Martins JCA, Pereira MFCR, Mazzo A. Satisfacción de los estudiantes con las experiencias clínicas simuladas: validación de escala de evaluación [Students’ satisfaction with simulated clinical experiences: validation of an assessment scale]. Rev Lat Am Enfermagem. 2014;22(5):709–15.

Josephsen J. Cognitive load measurement, worked-out modelling, and simulation. Clin Simul Nurs. 2018;23:10–5.

Simon R, Raemer DB, Rudolph JW. Debriefing Assessment for Simulation in Healthcare (DASH)© - Student version, short form. Boston, Massachusetts: Center for Medical Simulation; 2010. https://harvardmedsim.org/debriefing-assessment-for-simulation-in-healthcare-dash/. Accessed 5 Jul 2023.

Reed SJ. Debriefing experience scale: development of a tool to evaluate the student learning experience in debriefing. Clin Simul Nurs. 2012;8(6):e211–7.

Hur GH. Construction and validation of a global interpersonal communication competence scale. KSJCS. 2003;47(6):380–408.

Luszczynska A, Scholz U, Schwarzer R. The general self-efficacy scale: multicultural validation studies. J Psychol. 2005;139(5):439–57.

Ko IS, Kim HS, Kim IS, Kim SS, Oh EG, Kim EJ, et al. Development of scenario and evaluation for simulation learning of care for patients with asthma in emergency units. J Korean Acad Fundam Nurs. 2010;17(3):371–81.

Arora S, Ahmed M, Paige J, Nestel D, Runnacles J, Hull L, et al. Objective structured assessment of debriefing: bringing science to the art of debriefing in surgery. Ann Surg. 2012;256(6):982–9.

Heppner PP, Petersen CH. The development and implications of a personal problem-solving inventory. J Couns Psychol. 1982;29(1):66–75.

Lee WS, Park SH, Choi EY. Development of a Korean problem solving process inventory for adults. J Korean Acad Fundam Nurs. 2008;15(4):548–57.

Kember D, Leung DYP, Jones A, Loke AY, McKay J, Sinclair K, et al. Development of a questionnaire to measure the level of reflective thinking. Assess Eval High Educ. 2000;25(4):381–95.

Mann K, Gordon J, MacLeod A. Reflection and reflective practice in health professions education: a systematic review. Adv Health Sci Educ. 2009;14(4):595–621.

Jeffries PR. A framework for designing, implementing, and evaluating simulations used as teaching strategies in nursing. Nurs Educ Perspect. 2005;26(2):96–103.

Cooper S, Cant R, Porter J, Sellick K, Somers G, Kinsman L, et al. Rating medical emergency teamwork performance: Development of the Team Emergency Assessment Measure (TEAM). Resuscitation. 2010;81(4):446–52.

Vygotsky LS. In: Cole M, John-Steiner V, Scribner S, Souberman E, editors. Mind in Society: Development of Higher Psychological Processes. Harvard University Press; 1978.

Arabi AN, Kennedy CA. The perceptions and experiences of undergraduate healthcare students with debriefing methods. Simul Healthc. 2023;18(3):191–202.

Shabani K, Khatib M, Ebadi S. Vygotsky’s zone of proximal development: Instructional implications and teachers’ professional development. Engl Lang Teach. 2010;3(4):237–48.

Gum L, Greenhill J, Dix K. Sim TRACT™: a reflective conceptual framework for simulation debriefing. J Transform Educ. 2011;9(1):21–41.

Mezirow J. Transformative dimensions in adult learning. Jossey-Bass; 1991.

Eddy ER, Tannenbaum SI, Mathieu JE. Helping teams to help themselves: comparing two team-led debriefing methods. Pers Psychol. 2013;66:975–1008.

Ten Cate OTJ, Kusurkar RA, Williams GC. How self-determination theory can assist our understanding of the teaching and learning processes in medical education. AMEE Guide No. 59. Med Teach. 2011;33(12):961–73.

Guckian J, Eveson L, May H. The great escape? The rise of the escape room in medical education. Future Healthc J. 2020;7(2):112–5.

Valdes B, Mckay M, Sanko JS. The impact of an escape room simulation to improve nursing teamwork, leadership and communication skills: a pilot project. Simul Gaming. 2020;52(1):54–61.

Kolb DA. Experiential learning: experience as the source of learning and development. Prentice Hall; 1984.

Verkuyl M, Hughes M, Atack L, McCulloch T, Lapum JL, Romaniuk D, et al. Comparison of self-debriefing alone or in combination with group debrief. Clin Simul Nurs. 2019;37:32–9.

Verkuyl M, MacKenna V, St-Amant O. Using self-debrief after a virtual simulation: The process. Clin Simul Nurs. 2021;57:48–52.

Bussard ME. Self-reflection of video-recorded high-fidelity simulations and development of clinical judgement. J Nurs Educ. 2016;55(9):522–7.

Ha E. Attitudes toward video-assisted debriefing after simulation in undergraduate nursing students: an application of Q methodology. Nurse Educ Today. 2014;34:978–84.

Wilbanks BA, McMullan S, Watts PI, White T, Moss J. Comparison of video-facilitated reflective practice and faculty-led debriefings. Clin Simul Nurs. 2020;42:1–7.

Zhang H, Mörelius E, Goh SHL, Wang W. Effectiveness of video-assisted debriefing in simulation-based health professions education. Nurse Educ. 2019;44(3):E1–6.

Lave J, Wenger E. Situated learning: legitimate peripheral participation. Cambridge University Press; 1991.

Verkuyl M, Atack L, Larcina T, Mack K, Cahuas D, Rowland C, et al. Adding self-debrief to an in-person simulation: a mixed methods study. Clin Simul Nurs. 2020;47:32–9.

Verkuyl M, Lapum JL, St-Amant O, Hughes M, Romaniuk D, McCulloch T. Exploring debriefing combinations after a virtual simulation. Clin Simul Nurs. 2020;40:36–42.

Verkuyl M, Richie S, Cahuas D, Rowland C, Ndondo M, Larcina T, et al. Exploring self-debriefing plus group-debriefing: a focus group study. Clin Simul Nurs. 2020;43:3–9.

Cheng A, Eppich W, Kolbe M, Meguerdichian M, Bajaj K, Grant V. A conceptual framework for the development of debriefing skills: a journey of discovery, growth, and maturity. Simul Healthc. 2020;15(1):55–60.

Brown J, Collins G, Gratton O. Exploring the use of student-led simulated practice learning in pre-registration nursing programmes. Nurs Stand. 2017;32(4):50–8.

Lairamore C, Reed CC, Damon Z, Rowe V, Baker J, Griffith K, et al. A peer-led interprofessional simulation experience improves perceptions of teamwork. Clin Simul Nurs. 2019;34:22–9.

Williamson PR, Altman DG, Blazeby JM, Clarke M, Devane D, Gargon E, et al. Developing core outcome sets for clinical trials: issues to consider. Trials. 2012;13:132.

Yates N, Gough S, Brazil V. Self-assessment: with all its limitations, why are we still measuring and teaching it? Lessons from a scoping review. Med Teach. 2022;44(11):1296–302.

Ali AA, Musallam E. Debriefing quality evaluation in nursing simulation-based education: an integrative review. Clin Simul Nurs. 2018;16:15–24.

Edmondson A. Psychological safety and learning behaviour in work teams. Adm Sci Q. 1999;44:350–83.

Kolbe M, Eppich W, Rudolph J, Meguerdichian M, Catena H, Cripps A, et al. Managing psychological safety in debriefings: a dynamic balancing act. BMJ Simul Technol Enhanc Learn. 2020;6(3):164–71.

Rudolph JW, Raemer DB, Simon R. Establishing a safe container for learning in simulation: the role of the presimulation briefing. Simul Healthc. 2014;9(6):339–49.

Lincoln M, McAllister L. Peer learning in clinical education. Med Teach. 1993;15(1):17–26.

Bunderson JS, Reagans RE. Power, status, and learning in organizations. Organ Sci. 2011;22(5):1182–94.

Palaganas JC, Epps C, Raemer DB. A history of simulation-enhanced interprofessional education. J Interprof Care. 2014;28(2):110–5.

Lackie K, Hayward K, Ayn C, Stilwell P, Lane J, Andrews C, et al. Creating psychological safety in interprofessional simulation for health professional learners: a scoping review of the barriers and enablers. J Interprof Care. 2022;11:1–16.

Purdy E, Borchert L, El-Bitar A, Isaacson W, Bills L, Brazil V. Taking simulation out of its “safe container”- exploring the bidirectional impacts of psychological safety and simulation in an emergency department. Adv Simul. 2022;7:5.

Hopewell S, McDonald S, Clarke M, Egger M. Grey literature in meta-analyses of randomized trials of health care interventions. Cochrane Database Syst Rev. 2007;(2):MR000010. https://doi.org/10.1002/14651858.MR000010.pub3. Accessed 5 Jul 2023.

McAuley L, Pham B, Tugwell P, Moher D. Does the inclusion of grey literature influence estimates of intervention effectiveness reported in meta-analyses? Lancet. 2000;356(9237):1228–31.

Dalton JE, Bolen SD, Mascha EJ. Publication bias: the elephant in the review. Anesth Analg. 2016;123(4):812–3.

Acknowledgements

Firstly, we would like to thank Scott McGregor, University of Dundee librarian, for his expertise and invaluable advice when formulating and refining the search strategy for this review. We would also like to thank Kathleen Collins and Kathryn Sharp for their scholarly insights into the project.

Funding

No funding declared.

Author information

Contributions

P.K. is the lead author and led the conception and design of this study as part of his Master's in Medical Education research project at the University of Dundee. S.S. supervised the project. Both authors contributed to the writing and editing of this manuscript and have reviewed and approved the final article.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

All authors give consent for this manuscript to be published.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

Final search strategies of electronic bibliographic databases.

Additional file 2.

List of studies identified for full-text screening and reasons for exclusion.

Additional file 3.

Tabulating themes developed via reflexive thematic analysis.

Additional file 4.

Exemplar of coding strategy and theme development.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Kumar, P., Somerville, S. Exploring in-person self-led debriefings for groups of learners in simulation-based education: an integrative review. Adv Simul 9, 5 (2024). https://doi.org/10.1186/s41077-023-00274-z

DOI: https://doi.org/10.1186/s41077-023-00274-z