Abstract

Background

Prehospital (ambulance) care can reduce morbidity and mortality from trauma. Yet, there is a dearth of effective evidence-based interventions and implementation strategies. Emergency Medical Services Traumatic Shock Care (EMS-TruShoC) is a novel bundle of five core evidence-based trauma care interventions. High-Efficiency EMS Training (HEET) is an innovative training and sensitization program conducted during clinical shifts in ambulances. We assess the feasibility of implementing EMS-TruShoC using the HEET strategy, and feasibility of assessing implementation and clinical outcomes. Findings will inform a main trial.

Methods

We conducted a single-site, prospective cohort, multi-methods pilot implementation study in the Western Cape EMS system of South Africa. Of the 120 providers at the study site, 12 were trainers and the remainder were eligible learners. Feasibility of implementation was guided by the RE-AIM (reach, effectiveness, adoption, implementation, and maintenance) framework. Feasibility of assessing clinical outcomes was evaluated using shock indices and clinical quality of care scores, collected via abstraction of patients’ prehospital trauma charts. Thresholds for progression to a main trial were developed a priori.

Results

The average of all implementation indices was 83% (standard deviation = 10.3). Reach of the HEET program was high, with 84% of learners completing at least 75% of training modules. Comparing the proportion of learners attaining perfect scores in post- versus pre-implementation assessments, there was an 8-fold (52% vs. 6%) improvement in knowledge, 3-fold (39% vs. 12%) improvement in skills, and 2-fold (42% vs. 21%) increase in self-efficacy. Clinical outcomes data were successfully calculated; there were clinically significant improvements in shock indices and quality of prehospital trauma care in the post- versus pre-implementation phases. Adoption of HEET was good, evidenced by 83% facilitator participation in trainings, and 100% of surveyed stakeholders indicating good programmatic fit for their organization. Stakeholders responded that HEET was a sustainable educational solution that aligned well with their organization. Implementation fidelity was very high; 90% of the HEET intervention and 77% of the implementation strategy were delivered as originally planned. Participants provided very positive feedback, and explained that on-the-job timing enhanced their participation. Maintenance was not relevant to assess in this pilot study.

Conclusions

We successfully implemented the EMS-TruShoC educational intervention using the HEET training strategy in a single-site pilot study conducted in a low-resource international setting. All clinical outcomes were successfully calculated. Overall, this pilot study suggests high feasibility of our future, planned experimental trial.

Background

Trauma is a leading global cause of mortality in persons between 5 and 44 years of age [1]. Each year, there are over 6 million trauma deaths worldwide. Furthermore, injured persons in low-and-middle income countries experience a disproportionately large burden (over 90%) of post-injury death and disability [1,2,3,4]. The global incidence of traumatic injuries and the associated mortality are expected to continue to rise, necessitating additional effective strategies to manage this growing global public health crisis [3].

High-quality prehospital (i.e., ambulance-based) care is a critical component of trauma care. Prehospital care can avert 54% of all mortality from emergency conditions, including trauma, in low-and-middle income countries [5]. Despite the existence of evidence-based interventions, such as on-scene hemorrhage control and maintaining short scene times, few effective implementation strategies exist to introduce these interventions into clinical practice in this setting [6,7,8].

In low-and-middle income countries, limited resources and strained clinical services often mean traditional educational models (e.g., classroom and simulation training) are difficult to implement and poorly attended, and result in variable outcomes [9,10,11]. Continuing education can be a cost-effective and sustainable strategy to improve the quality of prehospital trauma care in resource-limited settings [5, 9,10,11]. Yet, well-described evidence-based interventions and implementation strategies tailored to low-and-middle-income countries are lacking in the scientific literature, with less than 2% of Emergency Medicine guidelines being developed in low-and-middle income countries [11, 12]. More evidence is needed regarding effectiveness of interventions (the “what”) and implementation strategies (the “how”) to impact provider- and patient-level outcomes in prehospital trauma care in resource-limited settings globally [13].

In 2016, we developed a novel educational intervention of bundled trauma care (termed EMS Traumatic Shock Care [EMS-TruShoC]), which is implemented using a novel training strategy (termed High-Efficiency EMS Training [HEET]) based on adult-learner principles. In general, bundling of intervention components, usually 3 to 5 items, helps increase clinical uptake [14]. The EMS-TruShoC bundle was developed using an international expert panel consensus process to select and assemble existing evidence-based interventions relevant to prehospital trauma care in resource-limited settings [15]. HEET is a novel low-dose, high-frequency training and sensitization program designed to improve trauma knowledge, attitudes, and skills efficiently during clinical shifts in the back of the ambulance. HEET was modeled after Helping Babies Breathe™, a globally acclaimed training program associated with a 46% reduction in mortality from perinatal asphyxia among neonates in resource-limited settings [16].

South Africa was the initial test site for EMS-TruShoC and HEET. South Africa, a middle-income country with high income inequality, has an exceptionally high prevalence of inter-personal violence resulting in 7 times the global mean trauma mortality rate, and loss of 1 million disability adjusted life years (DALYs) in 2000 [2, 17]. The Western Cape Province, approximately 130,000 km² with over 6 million people in 2011, has over 1 million persons estimated to live in dense, informal settlements, where gang warfare and interpersonal violence are major contributors to the burden of trauma [18, 19].

The Western Cape Government Department of Health operates a public emergency medical services (EMS) system that provides prehospital (ambulance) services for the Western Cape [20]. Western Cape EMS transports over 500,000 patients per year, with 40% due to trauma. The system employs about 2000 providers and operates 250 ambulances, distributed over 10 health districts. Western Cape EMS has a well-delineated and distributed management structure, and continuing education is a top organizational priority. Currently, a quarterly 2-day training program, delivered by experienced EMS educators, is the cornerstone of continuing education in Western Cape EMS. Trainings are conducted at each ambulance base, and providers are encouraged to participate on their off-days in exchange for educational credits. Success of this program is limited by poor attendance, limited programmatic reach, and limited training resources.

The overarching purpose of this pilot study is to evaluate the feasibility of implementation and the feasibility of assessing outcomes. If the pilot study satisfies predetermined criteria for success, we will subsequently conduct an experimental trial to gain robust evidence regarding implementation of HEET and clinical effectiveness of EMS-TruShoC.

Methods

Objectives

The primary objective is to assess the feasibility of implementing EMS-TruShoC using the HEET strategy. The secondary objective is to evaluate feasibility of assessing implementation and clinical effectiveness outcomes.

Design

This is a single-site, prospective cohort, pilot study using a multi-method outcomes assessment. The delivery and feasibility assessment of HEET implementation was guided by an implementation science framework, RE-AIM (reach, effectiveness, adoption, implementation, maintenance) [21, 22]. Clinical outcomes were assessed using a previously developed standardized chart abstraction and analysis procedure [23, 24].

Logic model

The logic model underpinning this implementation science project is congruent with PRECEDE-PROCEED, a widely used model in public health for bringing change in behavior. The model suggests that behavior change is influenced by both individual and environmental factors [25]. We posit that effective implementation of EMS-TruShoC, using the HEET strategy, should improve providers’ trauma knowledge-attitudes-skills, thereby translating to improvements in their clinical quality of trauma care, and ultimately improving patients’ clinical outcomes (Fig. 1).

Setting

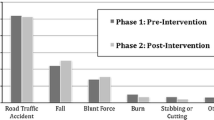

The pilot was conducted at one busy ambulance base, called “Northern Division,” within the Cape Town metropolitan area. The Northern Division base operates 13–15 ambulances daily, each staffed by a pair of providers, in 12-h shifts beginning at 07:00 and 19:00. In 2017, providers at the Northern Division transported about 7000 trauma patients, with 39% due to gunshot wounds and 43% from assaults and transport related injuries [26]. Traumatic shock comprises 6–7% of total annual trauma volume.

Participants

Northern Division has about 120 providers at the ranks of basic, intermediate, and advanced life support (BLS, ILS, ALS, respectively). All received international standard training in trauma care in their foundational education. Staff attrition is about 5% per year, and providers generally feel burned out and frustrated by the strained trauma care system [27]. Burnout and de-sensitization contribute to poor quality of trauma care [27]. In the HEET program, all ALS and ILS providers are eligible to be trainers, and all non-trainers are learners. We also included EMS charts of patients older than 17 years who were treated by providers in the study. Since the study focus is traumatic hemorrhage, we excluded injuries with the following mechanisms: drownings, hangings, poisonings, toxic ingestions, burns, bites, or stings.

Intervention components

EMS-TruShoC is a bundle of 5 core, evidence-based, trauma interventions (derived from a prior international expert panel consensus process). The EMS-TruShoC core bundle includes the following: (i) on-scene hemorrhage control, (ii) scene time less than 10 min, (iii) large bore intravenous catheter insertion, (iv) high-flow oxygen administration, and (v) transport to a trauma center [15]. Each component is standard of care and achievable in Western Cape EMS with existing resources.

Implementation

HEET consists of structured trainings, divided into 8 sessions or modules, which were delivered by peer trainers (called facilitators) in 15-min sessions occurring every other week, during shifts, in the back of ambulances. A small subset of the available ambulances was reserved for training, and these ambulances were protected from dispatch during training time. Using peer facilitators in a 2:1 learner-to-facilitator ratio, HEET promotes learning intimacy and relationship building. Furthermore, training is conducted within the patient compartment of the ambulance so as to better approximate clinical context and environment while minimizing operational impact. Content is intentionally repeated to encourage retention. For example, the components of the core bundle are defined in each training session. Training sessions have a deliberate focus on the three domains of learning: knowledge transfer, skills practice, and self-efficacy enhancement. Assessments similarly focus on all three domains. Training modules use common clinical case scenarios to promote relevance and enhance adult learning. In this pilot, HEET facilitators were ILS or ALS providers who elected to participate and were specially trained in a 2-day program. A Western Cape EMS-appointed HEET Steering Committee oversaw the program. The Committee comprised six senior educators and two quality improvement managers, each with over 15 years of experience in Western Cape EMS. A subset of this committee most familiar with Northern Division (the “HEET Team”) conducted the pilot implementation.

Outcomes

Feasibility of implementation was the primary outcome, and feasibility of assessing implementation and clinical outcomes data was the secondary outcome. We assessed implementation outcomes using the RE-AIM framework [21, 22, 28] (except for the maintenance dimension, which was not relevant to this initial, shorter-term pilot) and intervention outcomes (clinical effectiveness) using shock indices and clinical quality of care [29, 30].

Sampling

We planned to enroll all EMS providers working clinical shifts during the pilot study since the future study will pragmatically enroll all providers at participating ambulance bases; hence, no sample size estimates were calculated for this pilot study. The HEET Team solicited 12 volunteer facilitators through word of mouth to all ALS and ILS providers. The only requirements for facilitators were a keen interest and availability at work during the planned implementation months. Benefits of facilitation, emphasized by the HEET Team, included gaining educational credits, satisfying maintenance of certification requirements, and relationship-building with learners. Although facilitators volunteered, all remaining BLS and ILS staff on duty at the Northern Division were required to participate as learners since this was an on-the-job activity.

Procedures and data collection

Facilitators were trained by the HEET Team in September, 2017. Implementation was conducted at the Northern Division from October to December, 2017. There were 5 sources for quantitative data:

- (i) Learner assessments: assessed knowledge, skills, and attitudes using verbal case vignettes, observation, and written questionnaires, respectively; collected pre- and post-training
- (ii) Training rosters: collected names, training dates/times; completed by shift managers after each training session
- (iii) Training evaluations: provided anonymous feedback on quality of content, satisfaction, relevance, and length of training; confidentially completed by learners and facilitators after each training session
- (iv) Exit survey: assessed overall program experience, satisfaction, problems, willingness to adopt; completed by learners, facilitators, and base managers upon completion of program
- (v) EMS clinical charts: retrospectively abstracted by a research associate using a validated chart abstraction tool; charts were completed by Northern Division providers during routine trauma care

Investigators (NM, JD) and trained research associates collected qualitative data upon program completion. We conducted interviews with learners, facilitators, base managers, and the HEET Steering Committee regarding their experiences with the program. Interviews were one-on-one, private, and semi-structured. We took detailed notes during interviews, then transcribed notes into a Microsoft Word (version 10, Redmond, WA) document. All data collectors were blinded to clinical outcome.

Analysis

Overall criteria for evaluating success (feasibility) of the pilot study were defined as follows: (i) continue to main study without modifications, i.e., feasible as-is; (ii) continue with modifications and/or close monitoring; (iii) stop—main study not feasible [31].

RE-AIM provided a framework to collect and organize implementation data. Quantitative and qualitative data were collected for 4 of the 5 RE-AIM dimensions: Reach, Effectiveness, Adoption, and Implementation Fidelity. Maintenance, defined as the existence of the institutionalized program after 6 months, was not applicable to this pilot study (as trainings lasted 10 weeks), and was therefore not assessed. Each RE-AIM dimension contained one or more indices (Table 1). Data quality, difficulty of collecting data, and missing data were also tracked and documented. RE-AIM data were used to evaluate both implementation feasibility (the primary outcome) and implementation data collection (a secondary outcome). Feasibility of implementation was assessed by averaging 11 key feasibility indices within the RE-AIM framework (Table 1). Clinical and educational effectiveness data are not key feasibility indices and were therefore excluded from this average. Bands for the average score for the primary outcome were defined a priori, via deliberation and consensus decision among the investigators, as:

- 80–100% is excellent implementation feasibility,
- 60–79.9% is good implementation feasibility,
- 40–59.9% is fair implementation feasibility, and
- < 40% is poor implementation feasibility.

The feasibility of assessing implementation effectiveness (secondary outcome) was assessed by appraising the completeness of qualitative and quantitative data within the RE-AIM framework. The presence of data in most (defined as ≥ 80%) indices would favorably suggest progression to main study without any modifications, whereas presence of data in some (40–79%) indices would suggest modest modifications needed, while the presence of data in only a few (< 40%) indices would suggest very poor feasibility of implementation outcomes data collection in the future trial.
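The two sets of a priori thresholds described above can be sketched as simple lookup functions. This is a minimal illustration, not the investigators' analysis code: the function names and return labels are hypothetical, and the data-completeness bands are treated as continuous (the text lists 40–79% and ≥ 80%, so values between 79% and 80% are assumed here to fall in the middle band).

```python
def classify_implementation_feasibility(mean_index_pct: float) -> str:
    """Map the mean of the key RE-AIM feasibility indices (0-100) to a band."""
    if not 0 <= mean_index_pct <= 100:
        raise ValueError("mean_index_pct must be between 0 and 100")
    if mean_index_pct >= 80:
        return "excellent"
    if mean_index_pct >= 60:
        return "good"
    if mean_index_pct >= 40:
        return "fair"
    return "poor"


def classify_data_completeness(indices_with_data: int, total_indices: int) -> str:
    """Map the share of RE-AIM indices that have data to a progression signal."""
    if total_indices <= 0 or not 0 <= indices_with_data <= total_indices:
        raise ValueError("counts must satisfy 0 <= indices_with_data <= total_indices")
    share_pct = 100 * indices_with_data / total_indices
    if share_pct >= 80:
        return "proceed without modifications"
    if share_pct >= 40:
        return "proceed with modest modifications"
    return "very poor feasibility of data collection"


# Example: a mean index of 83% falls in the top band.
print(classify_implementation_feasibility(83))
```

Keeping the bands in one place makes it easy to see that the primary outcome is a single averaged percentage, while the secondary outcome is a count of indices for which data exist.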

Qualitative data were thematically analyzed, by reviewing interview notes, to provide participant and stakeholder experiences. This was not a formal mixed-method study as there was no a priori plan to integrate or co-analyze quantitative with qualitative data. Considering the pilot nature of this study, a formal deductive and thematic analysis, using independent reviewers and consensus discussion, was not conducted.

Feasibility of calculating clinical outcomes in the future trial was assessed in our pilot study by the success of calculating the average change in patients’ shock index, and separately, the average change in providers’ quality of traumatic shock care score. Shock index is heart rate divided by systolic blood pressure (calculated at the scene and on hospital arrival) [29]. Providers’ quality of traumatic shock care score is a validated score calculated by assigning unweighted points for execution of each core component of the bundle of care [23]. Comparisons between the pre- and post-intervention clinical cases were performed on categorical variables using chi square, Mantel-Haenszel chi square, or Fisher’s exact test, as appropriate. Since continuous variables were not normally distributed, comparisons were made using Wilcoxon tests. The statistical software package used for analysis was SAS/STAT version 9.4 (Copyright 2017 SAS Institute, Inc.). Feasibility was interpreted on a range, from favorable to poor, with favorable being the successful calculation of both shock index and quality of care score, and poor being the inability to calculate both, with no realistic solution for the main trial.
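The two clinical measures defined above can be illustrated with a short sketch. Several details are assumptions made only for illustration: the function and field names are hypothetical, the change in shock index is taken as arrival minus scene (so a negative value is an improvement), the 0.1 threshold for a clinically meaningful improvement is the one reported in the Results, and the quality score is shown as one point per documented core bundle item. The validated scoring rubric itself is described in the cited reference [23] and may differ in detail.

```python
from typing import Mapping

# The five core EMS-TruShoC bundle items (labels are illustrative).
BUNDLE_ITEMS = (
    "hemorrhage_control",
    "scene_time_under_10_min",
    "large_bore_iv",
    "high_flow_oxygen",
    "transport_to_trauma_center",
)


def shock_index(heart_rate: float, systolic_bp: float) -> float:
    """Shock index = heart rate divided by systolic blood pressure."""
    if systolic_bp <= 0:
        raise ValueError("systolic_bp must be positive")
    return heart_rate / systolic_bp


def delta_shock_index(scene_hr: float, scene_sbp: float,
                      arrival_hr: float, arrival_sbp: float) -> float:
    """Change in shock index from scene to hospital arrival (assumed sign: arrival - scene)."""
    return shock_index(arrival_hr, arrival_sbp) - shock_index(scene_hr, scene_sbp)


def is_clinically_meaningful_improvement(delta_si: float, threshold: float = 0.1) -> bool:
    """True when shock index decreased by at least `threshold`."""
    return delta_si <= -threshold


def quality_of_care_score(care: Mapping[str, bool]) -> int:
    """Unweighted score: one point per core bundle item recorded as performed."""
    return sum(1 for item in BUNDLE_ITEMS if care.get(item, False))


# Hypothetical patient: HR 120 / SBP 100 at scene, HR 100 / SBP 100 on arrival.
delta = delta_shock_index(120, 100, 100, 100)
print(round(delta, 2), is_clinically_meaningful_improvement(delta))
```

A chart missing either set of vital signs cannot yield a change in shock index, which is why a second set of vitals matters for this outcome.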

The primary feasibility outcome dictated the overall feasibility of the pilot study, while the secondary feasibility outcomes helped qualify whether modifications would be needed for the future main trial.

Results

In total, 121 Northern Division providers participated: 12 were trained as facilitators (4 ILS, 8 ALS), and 109 providers (56 BLS, 43 ILS, 10 ALS) were on duty during the implementation period and eligible to participate as learners (Fig. 2). Sixty-five (54%) of all providers were male. The mean age was 37.7 years (standard deviation, SD = 8). All eight EMS-TruShoC modules were delivered by facilitators, resulting in 539 learner encounters and generating 143 h of training time. Learners and facilitators returned completed evaluation forms following 99% and 96% of training sessions, respectively. The average of all implementation indices was 83% (SD = 10.3). All results are summarized in Table 1.

Reach

Ninety-two of 109 (84%) learners completed at least 6 of 8 (75%) training modules. During interviews, learners explained that training during work time facilitated attendance, and was a major factor for high participation rates (Table 2). Learners contrasted the convenience of on-shift training with traditional CME trainings that occur on their days off, which makes attendance challenging. Facilitators noted learner hesitancy to participate early in the program rollout (Table 3). Reasons for non-participation of 17 learners are in Fig. 2. Lack of advance notice to learners, and the base managers being hands-off, may have allowed some learners to not participate (Table 4).

Effectiveness

Educational outcomes

Pre- vs. post-implementation assessments indicated improvements in trauma resuscitation knowledge, skills, and self-efficacy. Eightfold more learners attained perfect assessment scores for traumatic shock knowledge post-training (35 out of 68; 52%) versus pre-training (4 out of 68; 6%). There was a 3-fold improvement in the proportion of learners achieving perfect performance of traumatic shock skills post-training (26 out of 67; 39%) compared with pre-training (8 out of 68; 12%). There was a 2-fold improvement in the proportion with high self-efficacy in recognizing and managing traumatic shock, assessed by the written questionnaire, in the post-training (28 out of 67; 42%) versus pre-training (14 out of 68; 21%) phase. Participants mentioned that the peer-led format, the case-based discussion, and hands-on nature of trainings enhanced knowledge and skills transfer (Tables 2 and 3). Additionally, facilitators reported that the focus on sensitization helped improve learners’ feelings towards trauma care, although there were some items that were out of the control of care providers (e.g., scene time).

Clinical outcomes

A total of 1588 clinical trauma cases were screened, of which 739 (47%) met study criteria and were fully abstracted into the study database. Of those cases abstracted, 501 (68%) were excluded for multiple reasons (Fig. 2), including 243 (33%) that were missing a second set of vital signs, which prevented us from calculating delta shock index (∆SI). In our sensitivity analysis, there was no difference in provider or patient characteristics between the patients excluded from and those included in our final analysis. Of the 238 included trauma patients, 143 (60%) received care pre-intervention and 95 (40%) received care post-intervention (Table 5). The proportion of patients experiencing a clinically meaningful improvement in shock index (i.e., an absolute decrease in shock index of at least 0.1) was 7 percentage points higher in the post-training phase than in the pre-training phase (34% versus 27%). Of note, initial shock indices were similar in the pre- versus post-training phases (median (IQR) 0.89 (0.77–1.06) and 0.93 (0.77–1.12), respectively). We observed a clinically significant increase in quality of care in the post- versus pre-training phase (Table 6). Specifically, modest improvements were noted in the following core bundle areas: scene time less than 10 min; delivery of oxygen; transport to a trauma center. There was no clinically meaningful improvement noted in cases with IV catheter placement or controlling external hemorrhage. Overall, 12% of patients received 3 or 4 core bundle items pre-training and 20% received 3 or 4 core bundle items post-training. Learners and facilitators explained that case-based and simulation-based training content felt very clinically and contextually relevant, thereby making it easy to readily apply training concepts and skills to clinical care (Tables 2, 3, and 4). We did encounter frequently missing data, particularly in two variables. Of 127 patients with at least 1 IV catheter inserted, no site or IV size was documented in 34 (27%) and 29 (23%) cases, respectively. In the 51 cases in which hemorrhage control was recorded as being performed, the specific method of control was not documented in 22 (43%) cases.
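The case-flow figures reported above can be cross-checked with a few lines of arithmetic. The sketch below only restates counts given in the text; the variable names and the `pct` helper are illustrative and are not part of the study's SAS analysis.

```python
def pct(part: int, whole: int) -> int:
    """Percentage of `part` in `whole`, rounded to the nearest whole number."""
    return round(100 * part / whole)


screened = 1588               # trauma cases screened
abstracted = 739              # met study criteria and were abstracted
excluded = 501                # excluded after abstraction (Fig. 2)
missing_second_vitals = 243   # no second set of vital signs
included = abstracted - excluded
pre_training, post_training = 143, 95

# Consistency checks on the reported case flow.
assert included == pre_training + post_training

print("abstracted / screened:", pct(abstracted, screened), "%")
print("excluded / abstracted:", pct(excluded, abstracted), "%")
print("missing second vitals / abstracted:", pct(missing_second_vitals, abstracted), "%")
print("included cases:", included)

# Documentation gaps in the two frequently missing variables.
print("IV site missing:", pct(34, 127), "%")
print("IV size missing:", pct(29, 127), "%")
print("hemorrhage control method missing:", pct(22, 51), "%")
```

Laying the counts out this way shows that missing second vital signs account for roughly half of all post-abstraction exclusions (243 of 501).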

Adoption

Ten of 12 (83%) facilitators actively participated. Two ALS facilitators could not participate in trainings: one went on extended sick leave, and the other was promoted to management. Overall, the participating facilitators were representative (in gender, rank, and years of experience) of the larger pool of eligible facilitators. Qualitative data indicate that, similar to learners, facilitators felt that the on-the-job timing, the relative simplicity of training, and the enjoyable format facilitated their high rates of participation. Survey data from key stakeholders (managers and the HEET Steering Committee) showed that an overwhelming majority (92%) support the adoption and incorporation of this program into Western Cape EMS (Table 1). Stakeholders felt HEET was a sound, effective, and sustainable educational solution that aligned well with their EMS organization, and they would adopt the program.

Implementation Fidelity

The main issue experienced was that 2 modules required implementation in rapid succession during a catch-up phase. Facilitators explained that early in the program, administrative delays and learners “dragging their heels” extended the number of weeks allocated to completing modules 1 and 2. The implementation was adapted by running modules 3 and 4 back-to-back (i.e., “stacked training sessions”), and the intervention was adapted by excluding non-core (airway and breathing management) content to shorten modules 3 and 4. Both adaptations allowed a catch-up phase, and from module 5 onwards, administrators and participants were accustomed to the training regimen, allowing the program to run and conclude on schedule. Overall, 90% of the intervention (i.e., training content) was delivered as intended; about 10% of non-core (“airway” and “breathing”) content was removed from training materials to accelerate the overall program. Overall, 77% of the implementation strategy was delivered as originally planned, calculated by averaging the following three implementation metrics (Table 1): (i) 80% of training sessions achieved the a priori goal of completion in under 20 min, i.e., 15 min for training plus 5 min for logistics; (ii) 77% of sessions started within ±15 min of shift change, a goal set to minimize disruptions to normal EMS operations; (iii) 75% (6 out of 8) of modules were delivered as planned. Purposeful adaptations were made to approximately 23% of our implementation strategy and 10% of our intervention content [32, 33].
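The fidelity figure for the implementation strategy is a simple unweighted mean of the three metrics listed above. The following sketch reproduces that arithmetic; the dictionary keys are illustrative labels, and equal weighting is assumed because the text describes the figure as an average of the three metrics.

```python
from statistics import mean

# The three implementation-strategy metrics reported in the text (percentages).
fidelity_metrics_pct = {
    "sessions_completed_in_under_20_min": 80,
    "sessions_started_within_15_min_of_shift_change": 77,
    "modules_delivered_as_planned": 100 * 6 / 8,  # 6 of 8 modules
}

# Unweighted mean of the three metrics.
strategy_fidelity_pct = mean(list(fidelity_metrics_pct.values()))

# Share of the strategy that was purposefully adapted.
strategy_adapted_pct = 100 - strategy_fidelity_pct

print(f"strategy delivered as planned: {strategy_fidelity_pct:.1f}%")
print(f"strategy adapted: {strategy_adapted_pct:.1f}%")
```

Rounded to whole numbers, this gives the 77% fidelity and approximately 23% adaptation reported for the implementation strategy.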

Discussion

In our single-site pilot study, conducted in a resource-limited, high-trauma, international setting, we found excellent feasibility for both our primary and secondary endpoints, suggesting we can progress to conduct the main study with existing implementation and data collection strategies. This pilot is the first study to demonstrate that the implementation of EMS-TruShoC using the HEET strategy is highly feasible, and that implementation and clinical outcomes should be more rigorously evaluated in a larger trial. RE-AIM proved to be an excellent framework to guide the implementation and evaluation of this work. We learned four key lessons from the pilot that are useful to inform the design, and augment efficient conduct, of the future trial: (1) the on-site training intervention reached a very high percentage of providers and required only minimal to modest training time; (2) some clinical outcomes data were missing with high frequency; (3) collecting clinical effectiveness data consumed a large amount of effort; and (4) the implementation strategy needs to more formally include managers at the ambulance base.

First, the on-site training intervention reached a very large proportion of providers and required only minimal to modest training time (under 20 min, including logistics, in 80% of trainings). The major contributory factor for high participation was the scheduling of training sessions during work hours, and the inclusion of HEET as part of change of shift procedures. Coupled with the enjoyable training format, this allowed learners and facilitators to participate frequently. Further, training times were kept short via tight monitoring by EMS shift managers (who released ambulances into service as soon as possible), and by facilitators who were trained to be sensitive to training time.

Second, we found that specific clinical data were missing with high frequency. In our future study, our primary outcome is the change in shock index scores [29]. We noted that 33% of cases were missing data necessary to compute this outcome (Fig. 2). An additional 17% of cases were excluded because of concern for significant head injuries (an a priori exclusion criterion, given the different clinical management approaches for head injuries versus hemorrhagic shock). We will need to increase our final sample size by at least 50% in the future study, and tighten our screening criteria, to successfully assess our clinical outcomes. Additionally, we plan to assess other robust secondary endpoints, including a plan for sensitivity analyses, given missing data.

Third, collecting clinical effectiveness data consumed the largest proportion of research effort. We required multiple steps to collect clinical data, starting with a query of the EMS database, followed by a manual screening and exclusion procedure, and ending with abstraction into our study database. We excluded 68% of the 739 cases originally abstracted (for failing our inclusion criteria; in about one-third of abstracted cases our primary variable, change in shock index, was missing; Fig. 2). Cumulatively, these processes consumed more time and personnel effort than any other single research activity. This tedious experience predicts that in future research, collecting clinical data will be relatively more complicated and time consuming than collecting implementation or educational data. This experience may explain why most published studies of emergency care educational interventions report proximal (educational) outcomes and fail to assess clinically relevant outcomes [7, 34]. Notwithstanding, we surmounted this challenge and collected all relevant proximal and distal outcomes in this pilot. We may implement strategies to ensure higher screening efficiency for clinical data capture in our future trial.

Last, we repeatedly received feedback, from facilitators and the HEET Team, that the challenges experienced implementing HEET (including reluctance by some learners, logistic challenges, and facilitators feeling pressure to go into service) stemmed from slow engagement by shift managers at the base. Shift managers are responsible for ensuring vehicles are staffed and leave the ambulance base on schedule, among other logistic responsibilities. In this pilot, while shift managers were informed about the implementation, they were not formally included in the planning and logistics until implementation. As a result, feedback suggests that while they were supportive of the program, they were disconnected from the details and not adequately invested, resulting in poor oversight and ownership of HEET. The future trial will be modified to formally include managers early and consistently in the implementation process, as recommended in “designing for dissemination” and stakeholder engagement practices [35].

The RE-AIM framework was successfully applied to this work. RE-AIM is a well-known public health framework useful to guide both program and delivery strategy planning and evaluation of implementation outcomes [21, 22]. RE-AIM has been used in a few global health reports from India, Kenya, and Australia, mostly focused on primary care and preventative interventions; however, we were unable to find published reports of its prior use in prehospital emergency care or trauma care internationally [22]. Hence, we have demonstrated a proof of use of RE-AIM in a new context, which helps fill a gap in implementation science. Overall, we found RE-AIM easy to apply given its inherent simplicity and practicality as an implementation science framework [36]. We anticipate RE-AIM will offer similar ease of application in our future, planned large-scale study. Of note, we do plan to assess the fifth RE-AIM dimension (maintenance) in our future study, which will have a longer follow-up period.

Our experience from this pilot study demonstrates three important concepts: first, that EMS-TruShoC can be practically implemented with HEET, and evaluated, in a busy EMS base in Africa; second, that relevant clinical outcomes data for EMS-TruShoC (shock index and quality of care scores) are feasible to collect; and third, that there is a modest clinical outcomes benefit that warrants further assessment. We received generally positive and enthusiastic feedback from participants and stakeholders about the intervention and implementation strategy. Our pilot experience holds promise for future implementation and research efforts.

Limitations and strengths

Study strengths included the multi-method (qualitative and quantitative) approach, the systematic assessment of important implementation issues using the RE-AIM framework, and inclusion of both learner and clinical patient outcomes. There are a few limitations of this pilot study. First, the study was conducted in only one site and we did not formally assess organizational context at the Northern Division base—while the future study will be conducted in the same EMS organization but with multiple sites, defining and understanding local context may be important for replication. Second, we did not assess cost-effectiveness—while we have a gross estimation of the resources and inputs, a formal micro-costing analysis may help predict costs of the future trial [37]. Third, we did not assess maintenance of the intervention in this pilot—this program is deliberately designed to have sustainable effectiveness, and although we do not foresee challenges to maintenance in our future trial, we cannot be certain as maintenance was not measured.

Conclusions

In this single-site pilot study, we successfully implemented the EMS-TruShoC educational intervention using the HEET training strategy in a low-resource international setting. We successfully collected and analyzed quantitative and qualitative data on implementation, educational, and clinical effectiveness that will help enhance the conduct of our future trial. Overall, this pilot study suggests excellent feasibility and value of our planned future experimental trial.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- EMS: Emergency medical services
- HEET: High-efficiency EMS training
- RE-AIM: Reach, efficacy, adoption, implementation fidelity, maintenance
- TruShoC: Traumatic shock care

References

1. World Health Organization. Injuries and violence: the facts. Geneva: World Health Organization; 2010.

2. Norman R, Matzopoulos R, Groenewald P, Bradshaw D. The high burden of injuries in South Africa. Bull World Health Organ. 2007;85(9):695–702.

3. Haagsma JA, Graetz N, Bolliger I, Naghavi M, Higashi H, Mullany EC, et al. The global burden of injury: incidence, mortality, disability-adjusted life years and time trends from the Global Burden of Disease study 2013. Inj Prev. 2016;22(1):3–18.

4. Krug EG. World Health Organization. Injury: a leading cause of the global burden of disease. 1999.

5. Thind A, Hsia R, Mabweijano J, Hicks ER, Zakariah A, Mock CN. Prehospital and emergency care. In: Debas HT, Donkor P, Gawande A, Jamison DT, Kruk ME, Mock CN, editors. Essential Surgery: Disease Control Priorities, Third Edition (Volume 1). Washington (DC); 2015.

6. Mock C, Kobusingye O, Joshipura M, Nguyen S, Arreola-Risa C. Strengthening trauma and critical care globally. Curr Opin Crit Care. 2005;11(6):568–75.

7. Mould-Millman NK, Sasser SM, Wallis LA. Prehospital research in sub-saharan Africa: establishing research tenets. Acad Emerg Med. 2013;20(12):1304–9.

8. Sasser SM, Varghese M, Joshipura M, Kellermann A. Preventing death and disability through the timely provision of prehospital trauma care. Bull World Health Organ. 2006;84(7):507.

9. Mould-Millman NK, Dixon JM, Sefa N, Yancey A, Hollong BG, Hagahmed M, et al. The state of emergency medical services (EMS) systems in Africa. Prehosp Disaster Med. 2017;32(3):273–83.

10. Nicholson B, McCollough C, Wachira B, Mould-Millman NK. Emergency medical services (EMS) training in Kenya: findings and recommendations from an educational assessment. Afr J Emerg Med. 2017;7(4):157–9.

11. Rowe AK, Rowe SY, Peters DH, Holloway KA, Chalker J, Ross-Degnan D. Effectiveness of strategies to improve health-care provider practices in low-income and middle-income countries: a systematic review. Lancet Glob Health. 2018;6(11):e1163–e75.

12. McCaul M, Clarke M, Bruijns SR, Hodkinson PW, de Waal B, Pigoga J, et al. Global emergency care clinical practice guidelines: a landscape analysis. Afr J Emerg Med. 2018;8(4):158–63.

13. Kobusingye OC, Hyder AA, Bishai D, Hicks ER, Mock C, Joshipura M. Emergency medical systems in low- and middle-income countries: recommendations for action. Bull World Health Organ. 2005;83(8):626–31.

14. Shafi S, Collinsworth AW, Richter KM, Alam HB, Becker LB, Bullock MR, et al. Bundles of care for resuscitation from hemorrhagic shock and severe brain injury in trauma patients-translating knowledge into practice. J Trauma Acute Care Surg. 2016;81(4):780–94.

15. Mould Millman NK, Dixon J, Lamp A, Lesch P, Lee M, Kariem B, Philander W, Mackier A, Williams C, de Vries S, Burkholder T, Ginde AA. Implementation effectiveness of a novel emergency medical services trauma training program in South Africa. Society for Academic Emergency Medicine annual meeting. Indianapolis: SAEM; 2018.

16. Niermeyer S. From the neonatal resuscitation program to Helping Babies Breathe: global impact of educational programs in neonatal resuscitation. Semin Fetal Neonatal Med. 2015;20(5):300–8.

17. The World Bank. Country Profile: South Africa 2018 [Available from: http://pubdocs.worldbank.org/en/798731523331698204/South-Africa-Economic-Update-April-2018.pdf].

18. Stats South Africa. Census 2011 Provincial Profile: Western Cape, Report 03-01-70. 2011 [Available from: http://www.statssa.gov.za/publications/Report-03-01-70/Report-03-01-702011.pdf].

19. Pillay-van Wyk V, Msemburi W, Laubscher R, Dorrington RE, Groenewald P, Glass T, et al. Mortality trends and differentials in South Africa from 1997 to 2012: second National Burden of Disease Study. Lancet Glob Health. 2016;4(9):e642–53.

20. Western Cape Government, Department of Health. Emergency Medical Services: Overview [Available from: https://www.westerncape.gov.za/your_gov/151].

21. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7.

22. Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103(6):e38–46.

23. Mould-Millman NK, Dixon J, Thomas J, Burkholder T, Oberfoell N, Oberfoell S, McDaniel K, Meese H, de Vries S, Wallis L, Ginde A. Measuring the quality of shock care-validation of a chart abstraction instrument. Qual Improv Res Pulmon Crit Care Med. 2018;A1482–A.

24. Gilbert EH, Lowenstein SR, Koziol-McLain J, Barta DC, Steiner J. Chart reviews in emergency medicine research: where are the methods? Ann Emerg Med. 1996;27(3):305–8.

25. Gielen AC, McDonald EM, Gary TL, Bone LR. Using the precede-proceed model to apply health behavior theories. Health Behav Health Educ Theory Res Pract. 2008;4:407–29.

26. Personal Communication: Western Cape Government DoH, Emergency Medical Services. In: Mould-Millman N-K, editor. Email ed. 2018.

27. Stassen W, Van Nugteren B, Stein C. Burnout among advanced life support paramedics in Johannesburg, South Africa. Emerg Med J. 2013;30(4):331–4.

28. Glasgow RE, Harden SM, Gaglio B, Rabin B, Smith ML, Porter GC, et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front Public Health. 2019;7:64.

29. Bruijns SR, Guly HR, Bouamra O, Lecky F, Lee WA. The value of traditional vital signs, shock index, and age-based markers in predicting trauma mortality. J Trauma Acute Care Surg. 2013;74(6):1432–7.

30. Bruijns SR, Guly HR, Bouamra O, Lecky F, Wallis LA. The value of the difference between ED and prehospital vital signs in predicting outcome in trauma. Emerg Med J. 2014;31(7):579–82.

31. Thabane L, Ma J, Chu R, Cheng J, Ismaila A, Rios LP, et al. A tutorial on pilot studies: the what, why and how. BMC Med Res Methodol. 2010;10:1.

32. Rabin BA, McCreight M, Battaglia C, Ayele R, Burke RE, Hess PL, et al. Systematic, multimethod assessment of adaptations across four diverse health systems interventions. Front Public Health. 2018;6:102.

33. Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65.

34. Haan CK, Edwards FH, Poole B, Godley M, Genuardi FJ, Zenni EA. A model to begin to use clinical outcomes in medical education. Acad Med. 2008;83(6):574–80.

35. Brownson RC, Colditz GA, Proctor EK. Dissemination and implementation research in health: translating science to practice. Oxford University Press; 2018.

36. Glasgow RE, Estabrooks PE. Pragmatic applications of RE-AIM for health care initiatives in community and clinical settings. Prev Chronic Dis. 2018;15:E02.

37. Keel G, Savage C, Rafiq M, Mazzocato P. Time-driven activity-based costing in health care: a systematic review of the literature. Health Policy. 2017;121(7):755–63.

Acknowledgements

We would like to express our immense gratitude to all the operational staff and managers at the Northern Division of Western Cape Government Emergency Medical Services. Additionally, we are appreciative of the substantive contributions to this project by the Western Cape EMS Human Resources Development and Continuous Quality Improvement teams.

Funding

Falck Foundation Research Grant (PI: Mould-Millman); Department of Emergency Medicine Seed grant, University of Colorado School of Medicine (PI: Mould-Millman); NIH NHLBI K12 IMPACT (IMPlementation to Achieve Clinical Transformation), NIH Grant Number 1K12HL137862-01 (PIs: Russel E. Glasgow and Edward Paul Havranek; Scholar: Mould-Millman).

Author information

Contributions

NM and AG designed the study. NM and JD drafted the manuscript. All other authors participated in reviewing and editing the manuscript. BB and KC performed the analysis. NM takes responsibility for the manuscript as a whole. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Institutional approval was obtained from Western Cape Government Emergency Medical Services. Ethics approval was obtained, prior to study participation, from University of Cape Town Human Research Ethics Committee (FWA00001637; IRB 00001938; reference 080/2017), and the Colorado Multiple Institutional Review Board (FWA00005070; IRB IORG0000433; protocol no. 17-0284).

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Mould-Millman, NK., Dixon, J., Lamp, A. et al. A single-site pilot implementation of a novel trauma training program for prehospital providers in a resource-limited setting. Pilot Feasibility Stud 5, 143 (2019). https://doi.org/10.1186/s40814-019-0536-0
