Abstract

We introduce the sparse operator compression to compress a self-adjoint higher-order elliptic operator with rough coefficients and various boundary conditions. The operator compression is achieved by using localized basis functions, which are energy-minimizing functions on local patches. On a regular mesh with mesh size h, we show that localized basis functions with supports of diameter \(O(h\log (1/h))\) achieve the optimal compression rate of the solution operator. From the perspective of the generalized finite element method to solve elliptic equations, the localized basis functions have the optimal convergence rate \(O(h^k)\) for a (2k)th-order elliptic problem in the energy norm. From the perspective of the sparse PCA, our results show that a large set of Matérn covariance functions can be approximated by a rank-n operator with a localized basis and with the optimal accuracy.

1 Background

1.1 Main objectives and the problem setting

The main purpose of this paper is to develop a general strategy to compress a class of self-adjoint higher-order elliptic operators by localized basis functions that give optimal approximation property of the solution operator. To be more specific, suppose \({\mathcal {L}}\) is a self-adjoint elliptic operator in the divergence form

$$\begin{aligned} {\mathcal {L}}u = \sum _{0 \le |\sigma |, |\gamma | \le k} (-1)^{|\sigma |} D^{\sigma } \left( a_{\sigma \gamma }(x) D^{\gamma } u\right) , \end{aligned}$$(1.1)

where the coefficients \(a_{\sigma \gamma }\in L^{\infty }(D) \), D is a bounded domain in \(\mathbb {R}^d\), \(\sigma = (\sigma _1, \ldots , \sigma _d)\) is a d-dimensional multiindex. We ask the question: given an integer n, what is the best rank-n compression of the operator \({\mathcal {L}}\) with localized basis functions? This question arises in many different contexts.

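Consider the elliptic equation with the homogeneous Dirichlet boundary conditions

$$\begin{aligned} {\mathcal {L}}u = f \quad \text {in } D, \qquad u \in H_0^k(D), \end{aligned}$$(1.2)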

where the load \(f \in L^2(D)\). For a self-adjoint, positive definite operator \({\mathcal {L}}\), Eq. (1.2) has a unique weak solution, denoted as \({\mathcal {L}}^{-1} f\). We define the operator compression error of the basis \({\varPsi }\) as follows:

$$\begin{aligned} E_{{\mathrm {oc}}}({\varPsi }; {\mathcal {L}}^{-1}) := \min _{K_n \in \mathbb {R}^{n \times n},\, K_n \succeq 0} \left\| {\mathcal {L}}^{-1} - {\varPsi } K_n {\varPsi }^T \right\| _2, \end{aligned}$$(1.3)

which is the optimal approximation error of \({\mathcal {L}}^{-1}\) among all positive semidefinite operators with range space spanned by \({\varPsi }\). Using \(E_{{\mathrm {oc}}}({\varPsi }; ({\mathcal {L}}+ \lambda _G)^{-1})\) for some \(\lambda _G > 0\) to quantify the compression error is useful for operators that are not invertible, such as \(-\Delta \) with periodic boundary conditions.

Without imposing the sparsity constraints on the basis \({\varPsi }\), the compression error \(E_{{\mathrm {oc}}}({\varPsi }; {\mathcal {L}}^{-1})\) achieves its minimum \(\lambda _{n+1}({\mathcal {L}}^{-1})\) if we use the first n eigenfunctions of \({\mathcal {L}}^{-1}\) to form \({\varPsi }\) (\(\lambda _n\) is the nth eigenvalue arranged in a descending order). However, the eigenfunctions are expensive to compute and do not have localized support [20, 40, 49]. In many cases, localized/sparse basis functions are preferred. For example, in the multiscale finite element method [12], localized basis functions lead to sparse linear systems and thus result in more efficient algorithms, see, e.g., [1, 2, 5, 10, 11, 22, 23, 28, 36, 39, 44]. In quantum chemistry, localized basis functions like the Wannier functions have better interpretability of the local interactions between particles (see, e.g., [26, 29, 30, 40, 47]), and also lead to more efficient algorithms [15]. In statistics, the sparse principal component analysis (SPCA) looks for sparse vectors to span the eigenspace of the covariance matrix, which leads to better interpretability compared with the PCA, see, e.g., [8, 25, 45, 46, 49].
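The unconstrained optimum can be checked on a finite-dimensional stand-in. The following minimal sketch (Python with NumPy) uses a finite-difference discretization of a one-dimensional second-order operator with a randomly generated rough coefficient. The discretization, the helper name `rough_stiffness`, and all parameter values are illustrative assumptions and are not part of the construction in this paper. The sketch compares the spectral-norm error left by truncating the eigen-expansion of the discrete \({\mathcal {L}}^{-1}\) after n terms with the (n+1)th eigenvalue.

```python
import numpy as np

def rough_stiffness(N, seed=0):
    """Finite-difference matrix for -(a u')' on (0, 1) with Dirichlet
    conditions and a rough (random) coefficient a; illustrative only."""
    rng = np.random.default_rng(seed)
    a = rng.uniform(0.1, 10.0, size=N + 1)   # one coefficient per grid edge
    A = np.diag(a[:-1] + a[1:]) - np.diag(a[1:-1], 1) - np.diag(a[1:-1], -1)
    return A * (N + 1) ** 2                  # scale by 1/h^2 with h = 1/(N+1)

N, n = 64, 8
K = np.linalg.inv(rough_stiffness(N))        # discrete solution operator
K = 0.5 * (K + K.T)                          # remove round-off asymmetry

lam, E = np.linalg.eigh(K)                   # eigenvalues in ascending order
lam, E = lam[::-1], E[:, ::-1]               # reorder to descending

Psi = E[:, :n]                               # first n eigenvectors
K_n = Psi @ np.diag(lam[:n]) @ Psi.T         # rank-n positive semidefinite approximation
err = np.linalg.norm(K - K_n, 2)             # spectral-norm compression error

print(err, lam[n])                           # lam[n] is the (n+1)th eigenvalue

# Crude support measure: fraction of non-negligible entries in the eigenvectors.
support_fraction = np.mean(np.abs(Psi) > 1e-8 * np.abs(Psi).max())
print(support_fraction)
```

The printed support fraction gives a rough indication of how far the eigenvector basis is from being sparse in this particular discrete example.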

1.2 Summary of our main results

In this paper, we study operator compression for higher-order elliptic operators. We assume that the self-adjoint elliptic operator \({\mathcal {L}}\) is coercive, bounded and strongly elliptic (to be made precise in Sect. 6.2). Under these assumptions, we construct n basis functions \({\varPsi }^{{\mathrm {loc}}} = [\psi _1^{{\mathrm {loc}}}, \ldots , \psi _n^{{\mathrm {loc}}}]\) that achieve nearly optimal performance on both ends in the accuracy–sparsity trade-off (1.10).

1. They are optimally localized up to a logarithmic factor, i.e.,

$$\begin{aligned} \left| \text {supp}(\psi _i^{{\mathrm {loc}}})\right| \le \frac{C_l \log (n)}{n} \quad \forall 1 \le i \le n. \end{aligned}$$(1.4)

Here, \(|\text {supp}(\psi _i^{{\mathrm {loc}}})|\) denotes the area/volume of the support of the localized function \(\psi _i^{{\mathrm {loc}}}\) in \(\mathbb {R}^d\), and the constant \(C_l\) is independent of n.

2. If we use a generalized finite element method [1, 10, 22, 44] to solve the elliptic equations, we achieve the optimal convergence rate in the energy norm, i.e.,

$$\begin{aligned} \Vert {\mathcal {L}}^{-1} f - {\varPsi }^{{\mathrm {loc}}} L_n^{-1} ({\varPsi }^{{\mathrm {loc}}})^T f \Vert _H \le C_e \sqrt{\lambda _{n}({\mathcal {L}}^{-1})} \Vert f\Vert _2 \quad \forall f \in L^2(D), \end{aligned}$$(1.5)

where \(L_n\) is the stiffness matrix under the basis \({\varPsi }^{{\mathrm {loc}}}\), \(\Vert \cdot \Vert _H\) is the associated energy norm, and \(C_e\) is independent of n.

3. For the sparse operator compression problem, we achieve the optimal approximation error up to a constant, i.e.,

$$\begin{aligned} E_{{\mathrm {oc}}}({\varPsi }^{{\mathrm {loc}}}; {\mathcal {L}}^{-1}) \le C_e^2 \lambda _n({\mathcal {L}}^{-1}), \end{aligned}$$(1.6)

where \(E_{{\mathrm {oc}}}({\varPsi }^{{\mathrm {loc}}}; {\mathcal {L}}^{-1})\) is the operator compression error defined in Eq. (1.3).

We will focus on the theoretical analysis of the approximation accuracy (1.5) and the localization of the basis functions (1.4).

1.3 Our construction

To construct such localized basis functions \({\varPsi }^{{\mathrm {loc}}} = [\psi _1^{{\mathrm {loc}}}, \ldots , \psi _n^{{\mathrm {loc}}}]\), we first partition the physical domain D using a regular partition \(\{\tau _i\}_{i=1}^m\) with mesh size h. We pick \(\{\varphi _{i,q}\}_{q=1}^Q\) to be a set of orthogonal basis functions of \({\mathcal {P}}_{k-1}(\tau _i)\), which is the space of all d-variate polynomials of degree at most \(k-1\) on the patch \(\tau _i \subset D\), and \(Q = \left( {\begin{array}{c}k+d-1\\ d\end{array}}\right) \) is the dimension of the space \({\mathcal {P}}_{k-1}(\tau _i)\). For \(r > 0\), let \(S_r\) be the union of the subdomains \(\tau _j\) that intersect with \(B(x_i, r)\) (for some \(x_i \in \tau _i\)) and let \(\psi _{i,q}^{{\mathrm {loc}}}\) be the minimizer of the following quadratic problem:

$$\begin{aligned} \begin{aligned} \psi _{i,q}^{{\mathrm {loc}}} = \mathop {\mathrm {arg\,min}}\limits _{\psi \in H} \quad&\Vert \psi \Vert _H^2 \\ \text {s.t.} \quad&\int _{S_r} \psi \, \varphi _{j,q'} = \delta _{iq,jq'} \quad \forall 1 \le j \le m, \ 1 \le q' \le Q, \\&\psi (x) \equiv 0, \quad x \in D \backslash S_r. \end{aligned} \end{aligned}$$(1.7)

Here, the space \(H = \{{\mathcal {L}}^{-1} f : f\in L^2(D)\}\) is the solution space of the operator \({\mathcal {L}}\), and \(\Vert \cdot \Vert _H\) is the energy norm associated with \({\mathcal {L}}\) and the prescribed boundary condition. It is important to point out that the boundary condition of the elliptic problem is already incorporated in the above optimization problem through the solution space H and the definition of the energy norm \(\Vert \cdot \Vert _H\). This variational formulation is very general and can take into account lower-order terms very easily.

Collecting all the \(\psi _{i,q}^{{\mathrm {loc}}}\) for \(1\le i\le m\) and \(1\le q\le Q\) together, we get our basis \({\varPsi }^{{\mathrm {loc}}}\). We will prove that for \(r = \mathcal {O}( h \log (1/h))\),

1. they achieve the optimal convergence rate to solve the elliptic equation, i.e.,

$$\begin{aligned} \Vert {\mathcal {L}}^{-1} f - {\varPsi }^{{\mathrm {loc}}} L_n^{-1} ({\varPsi }^{{\mathrm {loc}}})^T f \Vert _H \le C_e h^k \Vert f\Vert _2 \quad \forall f \in L^2(D), \end{aligned}$$(1.8)

where the constant \(C_e\) is independent of n.

2. they achieve the optimal approximation error to approximate the elliptic operator, i.e.,

$$\begin{aligned} E_{{\mathrm {oc}}}({\varPsi }^{{\mathrm {loc}}}; {\mathcal {L}}^{-1}) \le C_e^2 h^{2k}. \end{aligned}$$(1.9)

For \(n = m Q\), we can show that the nth largest eigenvalue of \({\mathcal {L}}^{-1}\) is of the order \(h^{2k}\), i.e., \(\lambda _n({\mathcal {L}}^{-1}) = \mathcal {O}(h^{2k})\). Therefore, the optimality above is exactly the optimality described in Eqs. (1.5) and (1.6).

1.4 Comparison with other existing methods

Our approach for operator compression originates from the MsFEM and numerical homogenization, where localized multiscale basis functions are constructed to approximate the solution space of some elliptic PDEs with multiscale coefficients; see [1, 2, 5, 10, 12, 22, 28, 35, 36, 39, 44]. Specifically, our work is inspired by the work presented in [28, 36], in which multiscale basis functions with support size \(O(h\log (1/h))\) are constructed for second-order elliptic equations with rough coefficients and homogeneous Dirichlet boundary conditions. In this paper, we generalize the construction in [36] and propose a general framework to compress higher-order elliptic operators with optimal compression accuracy and optimal localization.

We remark that although we use the framework presented in [36] as the direct template for our method, to the best of our knowledge, the local orthogonal decomposition (LOD) [28], in the context of multidimensional numerical homogenization, contains the first rigorous proof of optimal exponential decay rates with a priori estimates (leading to localization to subdomains of size \(h \log (1/h)\), with basis functions derived from the Clément interpolation operator). The idea of using the preimage of some continuous or discontinuous finite element space under the partial differential operator to construct localized basis functions in Galerkin-type methods was used even earlier, e.g., in [16], although it did not provide a constructive local basis. In addition to establishing the exponential decay of the basis for general nonconforming measurements of the solution (a result whose proof we will generalize to higher-order PDEs and measurements formed by local polynomials), a major contribution of [36] was to introduce a multiresolution operator decomposition for second-order elliptic PDEs with rough coefficients.

There are several new ingredients in our analysis that are essential for us to obtain our results for higher-order elliptic operators with rough coefficients. First of all, we prove an inverse energy estimate for functions in \(\Psi \), which is crucial in proving the exponential decay. In particular, Lemma 4.1 is an essential step to obtaining the inverse energy estimate for higher-order PDEs that is found in neither [28] nor [36]. We remark that Lemma 3.12 in [36] provides such an estimate for second-order elliptic operators, by utilizing a relation between the Laplacian operator \(\Delta \) and the d-dimensional Brownian motion. It is not straightforward to extend this probabilistic argument to higher-order cases. In contrast, our inverse energy estimate is valid for any 2kth-order elliptic operator and is tighter than the estimate in [36] for the second-order case. Secondly, we prove a projection-type polynomial approximation property in \(H^k(D)\). This polynomial approximation property plays an essential role in both estimating the compression accuracy and in localizing the basis functions. Thirdly, we propose the notion of strong ellipticity to analyze the higher-order elliptic operators and show that strong ellipticity is only slightly stronger than the standard uniform ellipticity. Very recently, the authors of [37] introduced the Gaussian cylinder measure and successfully generalized the probabilistic framework in [35, 36] to a much broader class of operators, including higher-order elliptic operators without requiring the strong ellipticity.

As in [28, 36], the error bound in our convergence analysis blows up for fixed oversampling ratio r/h. To achieve the desired \(O(h^k)\) accuracy in the energy norm, we require \(r/h=O(\log (1/h))\). There have been some previous attempts to study the convergence of MsFEM using oversampling techniques with r/h being fixed, see, e.g., [18, 41]. In particular, the authors of [18, 41] showed that if the oversampling ratio r/h is fixed, the accuracy of the numerical solution will depend on the regularity of the solution and cannot be guaranteed for problems with rough coefficients. By imposing \(r/h=O(\log (1/h))\), the authors of [18, 41] proved that the MsFEM with constrained oversampling converges with the desired accuracy O(h).

There has been some previous work for second-order elliptic PDEs by using basis functions of support size O(h), see, e.g., [2, 21]. However, they need to use \(O(\log (1/h))\) basis functions associated with each coarse finite element to recover the O(h) accuracy. The computational complexity of this approach is comparable to the one that we present in this paper. It is worth mentioning that the authors of [21] use a local oversampling operator to construct the optimal local boundary conditions for the nodal multiscale basis and enrich the nodal multiscale basis with optimal edge multiscale basis. Moreover, the method in [21] allows an explicit control of the approximation accuracy in the offline stage by truncating the SVD of the oversampling operator. In [21], the authors demonstrated numerically that this method is robust to high-contrast problems and the number of basis functions per coarse element is typically small. We remark that the recently developed generalized multiscale finite element method (GMsFEM) [5, 10] has provided another promising approach in constructing multiscale basis functions with support size O(h).

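Another popular way to formulate the operator compression problem is to solve the following \(l^1\) penalized variational problem:

$$\begin{aligned} \min _{\psi _1, \ldots , \psi _n \in H} \ \sum _{i=1}^n \Vert \psi _i\Vert _{H}^2 + \lambda \sum _{i=1}^n \Vert \psi _i\Vert _1 \quad \text {s.t.} \quad (\psi _i, \psi _j) = \delta _{i,j} \quad \forall 1 \le i, j \le n, \end{aligned}$$(1.10)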

where \(\Vert \psi _i\Vert _{H}\) is the energy norm induced by the operator \({\mathcal {L}}\). In problem (1.10), enforcing \(\Vert \psi _i\Vert _{H}\) to be small leads to a small compression error, enforcing \(\Vert \psi _i\Vert _1\) to be small leads to a sparse basis function, and \(\lambda >0\) is a parameter to control the trade-off between the accuracy and sparsity.

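The sparse PCA (SPCA) is closely related to the above \(l^1\)-based optimization problem. Given a covariance function K(x, y), the SPCA solves a variational problem similar to Eq. (1.10):

$$\begin{aligned} \min _{\psi _1, \ldots , \psi _n} \ -\sum _{i=1}^n (\psi _i, {\mathcal {K}}\psi _i) + \lambda \sum _{i=1}^n \Vert \psi _i\Vert _1 \quad \text {s.t.} \quad (\psi _i, \psi _j) = \delta _{i,j} \quad \forall 1 \le i, j \le n, \end{aligned}$$(1.11)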

where \((\psi _i, {\mathcal {K}}\psi _i) := \int _D \int _D K(x,y) \psi _i(x) \psi _i(y)\mathrm {d}x\,\mathrm {d}y\). In the SPCA (1.11), we have the minus sign in front of the variational term because we are interested in the eigenspace corresponding to the largest n eigenvalues. Although the \(l^1\) approach performs well in practice, neither Problem (1.10) nor the SPCA (1.11) is convex, and one needs to use some sophisticated techniques to solve the non-convex optimization problem or its convex relaxation; see, e.g., [8, 26, 40, 45, 49].

In comparison with the \(l^1\)-based optimization method or the SPCA, our approach has the advantage that the construction guarantees that \(\psi _{i,q}\) decays exponentially fast away from \(\tau _i\). This exponential decay justifies the local construction of the basis functions in Eq. (1.7). Moreover, our construction (1.7) is a quadratic optimization with linear constraints, which can be solved as efficiently as solving an elliptic problem on the local domain \(S_r\). The computational complexity to obtain all n localized basis functions \(\{\psi _i^{{\mathrm {loc}}}\}_{i=1}^n\) is only of order \(N\log ^{3d}(N)\) if a multilevel construction is employed, where N is the degree of freedom in the discretization of \({\mathcal {L}}\); see [36]. In contrast, the orthogonality constraint in Eq. (1.10) is not convex, which introduces additional difficulties in solving the problem. Finally, our construction of \(\{\psi _i^{{\mathrm {loc}}}\}_{i=1}^n\) is completely decoupled, while all the basis functions in Eq. (1.10) are coupled together. This decoupling leads to a simple parallel execution and thus makes the computation of \(\{\psi _i^{{\mathrm {loc}}}\}_{i=1}^n\) even more efficient.

The rest of the paper is organized as follows. In Sect. 2, we introduce the abstract framework of the sparse operator compression. In Sect. 3, we prove a projection-type polynomial approximation property for the Sobolev spaces, which can be seen as a generalization of the Poincaré inequality for functions with higher regularity. This polynomial approximation property is critical in our analysis of the higher-order case. It plays a role similar to that of the Poincaré inequality in the analysis of the second-order elliptic operator. In Sect. 4, we prove the inverse energy estimate by scaling. In Sect. 5, we use the second-order elliptic PDE to illustrate the main idea of our analysis. In Sect. 6, we first introduce the notion of strong ellipticity and then prove the exponential decay of the constructed basis function for strongly elliptic operators. In Sect. 7, we localize the basis functions and provide the convergence rate for the corresponding MsFEM and the compression rate for the corresponding operator compression. Finally, we present several numerical results to support the theoretical findings in Sect. 8. Some concluding remarks are made in Sect. 9 and a few technical proofs are deferred to the “Appendix.”

2 Operator compression

In this section, we provide an abstract and general framework to compress a bounded self-adjoint positive semidefinite operator \({\mathcal {K}}: X \rightarrow X\), where X can be any separable Hilbert space with inner product \((\cdot , \cdot )\). In the case of operator compression of an elliptic operator \({\mathcal {L}}\), \({\mathcal {K}}\) plays the role of the solution operator \({\mathcal {L}}^{-1}\) and \(X = L^2(D)\). In the case of the SPCA, \({\mathcal {K}}\) plays the role of the covariance operator. In Sect. 2.1, we introduce the Cameron–Martin space, which plays the role of the solution space of \({\mathcal {L}}\). In Sect. 2.2, we provide our main theorem to estimate the compression error. We will use this abstract framework to compress elliptic operators in the rest of the paper.

2.1 The Cameron–Martin space

Suppose \(\{(\lambda _n, e_n)\}_{n=1}^{\infty }\) are the eigenpairs of the operator \({\mathcal {K}}\) with the eigenvalues \(\{\lambda _n\}_{n=1}^{\infty }\) in a descending order. We have \(\lambda _n\ge 0\) for all n since \({\mathcal {K}}\) is self-adjoint and positive semidefinite. From the spectral theorem of a self-adjoint operator, we know that \(\{e_n\}_{n=1}^{\infty }\) forms an orthonormal basis of X.

Lemma 2.1

Let \({\mathcal {K}}(X)\) be the range space of \({\mathcal {K}}\). We have

1. \({\mathcal {K}}(X)\) is an inner product space with inner product defined by

$$\begin{aligned} ( {\mathcal {K}}\varphi _1, {\mathcal {K}}\varphi _2 )_H = ({\mathcal {K}}\varphi _1, \varphi _2) \qquad \forall \varphi _1, \varphi _2 \in X. \end{aligned}$$(2.1)

2. \({\mathcal {K}}(X)\) is continuously imbedded in X.

3. \({\mathcal {K}}(X)\) is dense in X if the null space of \({\mathcal {K}}\) only contains the origin, i.e., \(\mathrm {null}({\mathcal {K}}) = \{\varvec{0}\}\).

Proof

1. Since \({\mathcal {K}}\) is self-adjoint, we have \(( {\mathcal {K}}\varphi _1, {\mathcal {K}}\varphi _2 )_H = ( {\mathcal {K}}\varphi _2, {\mathcal {K}}\varphi _1 )_H\). The linearity and nonnegativity are obvious. Finally, if \(( {\mathcal {K}}\varphi , {\mathcal {K}}\varphi )_H = 0\) for some \(\varphi \in X\), then \(({\mathcal {K}}\varphi , \varphi ) = 0\). Suppose that \(\varphi = \sum _n \alpha _n e_n\) by expanding \(\varphi \) with eigenvectors of \({\mathcal {K}}\). Then, we have \(({\mathcal {K}}\varphi , \varphi ) = \sum _n \lambda _n \alpha _n^2 = 0\). Therefore, \(\alpha _n = 0\) for all \(\lambda _n > 0\). Equivalently, we obtain \(\varphi \in \mathrm {null}({\mathcal {K}})\), i.e., \({\mathcal {K}}\varphi = 0\).

2. Since \(\lambda _n^2 \le \lambda _1 \lambda _n\) for all \(n\in \mathbb {N}\), we have \({\mathcal {K}}^2 \preceq \lambda _1 {\mathcal {K}}\). Then, we obtain

$$\begin{aligned} \sqrt{({\mathcal {K}}\varphi , {\mathcal {K}}\varphi )} \le \sqrt{ \lambda _1 ({\mathcal {K}}\varphi , \varphi )} = \sqrt{ \lambda _1} \sqrt{ ( {\mathcal {K}}\varphi , {\mathcal {K}}\varphi )_H}, \end{aligned}$$(2.2)

where we have used the definition of \(( \cdot , \cdot )_H\) in Eq. (2.1) in the last step.

3. If \(\mathrm {null}({\mathcal {K}}) = \{\varvec{0}\}\), we have \(\text {span}\{e_n, n\ge 1\} \subset {\mathcal {K}}(X)\). Then, \({\mathcal {K}}(X)\) is dense in X. \(\square \)

We define the Cameron–Martin space H as the completion of \({\mathcal {K}}(X)\) with respect to the norm \(\sqrt{( \cdot , \cdot )_H}\). Then, H is a separable Hilbert space and we have the following lemma.

Lemma 2.2

1. H can be continuously embedded into X.

2. H is dense in X if \(\mathrm {null}({\mathcal {K}}) = \{\varvec{0}\}\).

3. For all \(\psi \in X\) and all \(f \in H\), we have

$$\begin{aligned} (f, {\mathcal {K}}\psi )_H = (f, \psi ). \end{aligned}$$(2.3)

Proof

1. By the continuous imbedding from \({\mathcal {K}}(X)\) to X, we know that a Cauchy sequence in \({\mathcal {K}}(X)\) is also a Cauchy sequence in X. Therefore, we have \(H \subset X\). By Eq. (2.2) and the continuity of norms, we have \((\psi , \psi ) \le \lambda _1 (\psi , \psi )_H\) for any \(\psi \in H\).

2. It is obvious from item 3 in Lemma 2.1.

3. If \(f \in {\mathcal {K}}(X)\), Eq. (2.3) is exactly the definition of \((\cdot , \cdot )_H\) in Eq. (2.1). By the continuity of the inner product, Eq. (2.3) is true for any \(f \in H\). \(\square \)
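The identities in Lemmas 2.1 and 2.2 can be illustrated in finite dimensions. The sketch below is a minimal check in Python with NumPy, assuming \(X = \mathbb {R}^N\) with the Euclidean inner product and a randomly generated symmetric positive definite matrix standing in for \({\mathcal {K}}\). In that setting the H inner product reduces to \((u, v)_H = u^T {\mathcal {K}}^{-1} v\). The matrix, the dimension, and the helper `inner_H` are hypothetical choices made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 40
B = rng.standard_normal((N, N))
K = B @ B.T / N + 0.1 * np.eye(N)   # symmetric positive definite stand-in for K
A = np.linalg.inv(K)                # (u, v)_H = u^T K^{-1} v in finite dimensions

def inner_H(u, v):
    """H inner product of two vectors in the range of K."""
    return u @ A @ v

phi1, phi2, psi = rng.standard_normal((3, N))
f = K @ rng.standard_normal(N)      # an element of K(X)

# Eq. (2.1): (K phi1, K phi2)_H = (K phi1, phi2)
lhs_21 = inner_H(K @ phi1, K @ phi2)
rhs_21 = (K @ phi1) @ phi2

# Eq. (2.2): ||K phi||_X <= sqrt(lambda_1) ||K phi||_H
lam1 = np.linalg.eigvalsh(K)[-1]    # largest eigenvalue of K
u = K @ phi1
norm_X = np.sqrt(u @ u)
bound = np.sqrt(lam1 * inner_H(u, u))

# Eq. (2.3): (f, K psi)_H = (f, psi)
lhs_23 = inner_H(f, K @ psi)
rhs_23 = f @ psi

print(lhs_21 - rhs_21, norm_X <= bound, lhs_23 - rhs_23)
```

Since this stand-in for \({\mathcal {K}}\) is invertible, the completion step in the definition of H is trivial here; the sketch only exercises the algebraic identities.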

2.2 Operator compression

Suppose H is an arbitrary separable Hilbert space and \(\Phi \subset H\) is an n-dimensional subspace of H with basis \(\{\varphi _i\}_{i=1}^n\). In the rest of the paper, \({\mathcal {P}}_{\Phi }^{(H)}\) denotes the orthogonal projection from a Hilbert space H to its subspace \(\Phi \). With this notation, we present our theorem for error estimates below.

Theorem 2.1

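Suppose there is an n-dimensional subspace \(\Phi \subset X\) with basis \(\{\varphi _i\}_{i=1}^n\) such that

$$\begin{aligned} \Vert u - {\mathcal {P}}_{\Phi }^{(X)} u \Vert _X \le k_n \Vert u\Vert _H \qquad \forall u \in H. \end{aligned}$$(2.4)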

Let \(\Psi \) be the n-dimensional subspace in H (also in X) spanned by \(\{{\mathcal {K}}\varphi _i\}_{i=1}^n\). Then

1. For any \(u \in {\mathcal {K}}(X)\) and \(u = {\mathcal {K}}f\), we have

$$\begin{aligned} \Vert u - {\mathcal {P}}_{\Psi }^{(H)} u \Vert _{H} \le k_n \Vert f\Vert _X. \end{aligned}$$(2.5)

2. For any \(u \in {\mathcal {K}}(X)\) and \(u = {\mathcal {K}}f\), we have

$$\begin{aligned} \Vert u - {\mathcal {P}}_{\Psi }^{(H)} u \Vert _{X} \le k_n^2 \Vert f\Vert _X. \end{aligned}$$(2.6)

3. We have

$$\begin{aligned} \Vert {\mathcal {K}}- {\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}\Vert \le k_n^2, \end{aligned}$$(2.7)

where \(\Vert \cdot \Vert \) is the induced operator norm on \(\mathcal {B}(X,X)\). Moreover, the rank-n operator \({\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}: X \rightarrow X \) is self-adjoint.

In Theorem 2.1, by using a projection-type approximation property of \(\Phi \) in H, i.e., Eq. (2.4), we obtain the error estimates of the multiscale finite element method with finite element basis \(\{{\mathcal {K}}\varphi _i\}_{i=1}^n\) in the energy norm, i.e., Eq. (2.5). We will take \(\Phi \) as the discontinuous piecewise polynomial space later, which is a poor finite element space for elliptic equations with rough coefficients. However, after smoothing \(\Phi \) with the solution operator \({\mathcal {K}}\), the smoothed basis functions \(\{{\mathcal {K}}\varphi _i\}_{i=1}^n\) have the optimal convergence rate. This data-dependent methodology to construct finite element spaces was pioneered by the generalized finite element method (GFEM) [1, 44], the multiscale finite element method (MsFEM) [12, 22, 24], and numerical homogenization [28, 36].

Our error analysis is different from the traditional finite element error analysis in two aspects. First of all, the traditional error analysis relies on an interpolation type approximation property where higher regularity is required. For example, the error analysis for the FEM with standard linear nodal basis functions for the Poisson equation requires the following interpolation type approximation:

$$\begin{aligned} \Vert u - \mathcal {I}_h u \Vert _{H^1(D)} \le C h \Vert u\Vert _{H^2(D)}, \end{aligned}$$(2.8)

where \(\mathcal {I}_h u\) is the piecewise linear interpolation of the solution u. In Eq. (2.8), one assumes \(u \in H^2(D)\), but this is not the case for elliptic operators with rough coefficients. Secondly, in our projection-type approximation property (2.4) the error is measured by the “weaker” \(\Vert \cdot \Vert _X\) norm, while in the traditional interpolation type approximation property the error is measured by the “stronger” \(\Vert \cdot \Vert _H\) norm. In this sense, our error estimate relies on weaker assumptions. As far as we know, this kind of error estimate was first introduced in Proposition 3.6 in [36].

Proof of Theorem 2.1

1. For an arbitrary \(v \in \Psi \), due to the definition of \(\Psi \), we can write \(v = {\mathcal {K}}( \sum _{i=1}^n c_i \varphi _i )\), and thus we get \(u - v = {\mathcal {K}}( f - \sum _{i=1}^n c_i \varphi _i )\). By Lemma 2.2, we have

$$\begin{aligned} \begin{aligned} \Vert u - v\Vert _{H}^2&= \left( u - v, f - \sum _{i=1}^n c_i \varphi _i\right) \\&= \left( u - v - {\mathcal {P}}_{\Phi }^{(X)} (u-v), f - \sum _{i=1}^n c_i \varphi _i\right) + \left( {\mathcal {P}}_{\Phi }^{(X)} (u-v), f - \sum _{i=1}^n c_i \varphi _i\right) . \end{aligned} \end{aligned}$$

By choosing \(c_i\) such that \(\sum _{i=1}^n c_i \varphi _i = {\mathcal {P}}_{\Phi }^{(X)} (f)\), the second term vanishes. Then, we obtain

$$\begin{aligned} \begin{aligned} \Vert u - v\Vert _{H}^2&= \left( u - v - {\mathcal {P}}_{\Phi }^{(X)} (u-v), f - \sum _{i=1}^n c_i \varphi _i\right) \\&\le \Vert u - v - {\mathcal {P}}_{\Phi }^{(X)} (u-v)\Vert _X \Vert f - {\mathcal {P}}_{\Phi }^{(X)} (f)\Vert _X \le k_n \Vert u - v\Vert _{H} \Vert f\Vert _X \end{aligned} \end{aligned}$$

Therefore, we conclude \(\Vert u - v\Vert _{H} \le k_n \Vert f\Vert _X\).

2. We use the Aubin–Nitsche duality argument to get the estimate in item 2. Let \(v = {\mathcal {K}}( u - {\mathcal {P}}_{\Psi }^{(H)} u )\). On one hand, we get

$$\begin{aligned} (u - {\mathcal {P}}_{\Psi }^{(H)} u, v - {\mathcal {P}}_{\Psi }^{(H)} v)_{H} = (u - {\mathcal {P}}_{\Psi }^{(H)} u, v)_{H} = (u - {\mathcal {P}}_{\Psi }^{(H)} u, u - {\mathcal {P}}_{\Psi }^{(H)} u)_{X} = \Vert u - {\mathcal {P}}_{\Psi }^{(H)} u\Vert _{X}^2. \end{aligned}$$

On the other hand, we obtain

$$\begin{aligned} (u - {\mathcal {P}}_{\Psi }^{(H)} u, v - {\mathcal {P}}_{\Psi }^{(H)} v)_{H} \le \Vert u - {\mathcal {P}}_{\Psi }^{(H)} u\Vert _{H} \Vert v - {\mathcal {P}}_{\Psi }^{(H)} v\Vert _{H} \le k_n \Vert f\Vert _X \, k_n \Vert u - {\mathcal {P}}_{\Psi }^{(H)} u\Vert _X. \end{aligned}$$

We have used the result of item 1 in the last step. Combining these two estimates, the result follows.

3. From the last item, we obtain that \(\Vert {\mathcal {K}}f - {\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}f \Vert _X \le k_n^2 \Vert f\Vert _X\) for any \(f \in X\). Therefore, we conclude \(\Vert {\mathcal {K}}- {\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}\Vert \le k_n^2\). Now, we prove that \({\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}\) is self-adjoint. For any \(x_1, x_2 \in X\), by definition of H-norm we have

$$\begin{aligned} (x_1, {\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}x_2) = ({\mathcal {K}}x_1, {\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}x_2)_H. \end{aligned}$$

Since \({\mathcal {P}}_{\Psi }^{(H)}\) is self-adjoint in H, we have

$$\begin{aligned} ({\mathcal {K}}x_1, {\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}x_2)_H = ({\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}x_1, {\mathcal {K}}x_2)_H = ({\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}x_1, x_2), \end{aligned}$$

where we have used the definition of H-norm again in the last step. \(\square \)
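The bound (2.7) can be checked numerically on a small example. The following minimal sketch (Python with NumPy) assumes \(X = \mathbb {R}^N\) with the Euclidean inner product, a finite-difference discretization of a one-dimensional second-order operator with a random rough coefficient, and \(\Phi \) spanned by normalized piecewise constants. In this finite-dimensional setting, \(k_n^2\) equals the spectral norm of \((I - {\mathcal {P}}_{\Phi }^{(X)}) {\mathcal {K}} (I - {\mathcal {P}}_{\Phi }^{(X)})\), and \({\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}\) has the matrix form \({\mathcal {K}}\Phi (\Phi ^T {\mathcal {K}}\Phi )^{-1} \Phi ^T {\mathcal {K}}\). The helper names and all parameters are hypothetical.

```python
import numpy as np

def rough_stiffness(N, seed=0):
    """Finite-difference matrix for -(a u')' on (0, 1) with Dirichlet
    conditions and a rough (random) coefficient a; illustrative only."""
    rng = np.random.default_rng(seed)
    a = rng.uniform(0.1, 10.0, size=N + 1)
    A = np.diag(a[:-1] + a[1:]) - np.diag(a[1:-1], 1) - np.diag(a[1:-1], -1)
    return A * (N + 1) ** 2

def piecewise_constants(N, n):
    """Orthonormal indicator vectors of n equal blocks (N divisible by n)."""
    block = N // n
    Phi = np.zeros((N, n))
    for i in range(n):
        Phi[i * block:(i + 1) * block, i] = 1.0 / np.sqrt(block)
    return Phi

N, n = 64, 8
K = np.linalg.inv(rough_stiffness(N))
K = 0.5 * (K + K.T)                       # remove round-off asymmetry
Phi = piecewise_constants(N, n)

# Best constant in Eq. (2.4): k_n^2 = ||(I - P_Phi) K (I - P_Phi)||_2.
R = np.eye(N) - Phi @ Phi.T
kn2 = np.linalg.norm(R @ K @ R, 2)

# Matrix form of P_Psi^(H) K, with Psi spanned by the columns of K Phi.
Theta = Phi.T @ K @ Phi
K_n = K @ Phi @ np.linalg.solve(Theta, Phi.T @ K)
err = np.linalg.norm(K - K_n, 2)          # left-hand side of Eq. (2.7)

print(err, kn2)                           # Theorem 2.1 predicts err <= kn2
```

The compressed operator `K_n` is symmetric by construction, in line with the self-adjointness statement of item 3.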

Although the basis functions \(\{{\mathcal {K}}\varphi _i\}_{i=1}^n\) have good approximation accuracy, they are typically not localized. Therefore, we construct another set of basis functions \(\{\psi _i\}_{i=1}^{n}\) for \(\Psi \) via the following variational approach, which results in basis functions with good localization properties. For any given \(i \in \{1, 2, \ldots , n\}\), consider the following quadratic optimization problem

$$\begin{aligned} \begin{aligned} \psi _i = \mathop {\mathrm {arg\,min}}\limits _{\psi \in H} \quad&\Vert \psi \Vert _H^2 \\ \text {s.t.} \quad&(\varphi _j, \psi ) = \delta _{i,j}, \quad j = 1, 2, \ldots , n. \end{aligned} \end{aligned}$$(2.9)

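Define \(\Theta \in \mathbb {R}^{n \times n}\) by

$$\begin{aligned} \Theta _{i,j} := (\varphi _i, {\mathcal {K}}\varphi _j), \qquad 1 \le i, j \le n. \end{aligned}$$(2.10)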

It is easy to verify that \(\{{\mathcal {K}}\varphi _i\}_{i=1}^n\) are linearly independent if and only if \(\Theta \) is invertible. We will write \(\Theta ^{-1}\) as its inverse and \(\Theta ^{-1}_{i,j}\) as the (i, j)th entry of \(\Theta ^{-1}\). It is not difficult to prove the following properties of \(\psi _i\), which is defined as the unique minimizer of Eq. (2.9).

Theorem 2.2

If \(\mathrm {null}({\mathcal {K}}) \cap \Phi = \{\varvec{0}\}\) holds true, then we have

1. The optimization problem (2.9) admits a unique minimizer \(\psi _i\), which can be written as

$$\begin{aligned} \psi _i = \sum _{j=1}^n \Theta _{i,j}^{-1} {\mathcal {K}}\varphi _j. \end{aligned}$$(2.11)

2. For \(w \in \mathbb {R}^n\), \(\sum _{i=1}^n w_i\psi _i\) is the minimizer of \(\Vert \psi \Vert _{H}\) subject to \((\varphi _j, \psi ) = w_j\) for \(j = 1,2, \ldots , n\). Moreover, for any \(\psi \) which satisfies \((\varphi _j, \psi ) = w_j\) for \(j = 1,2, \ldots , n\), we have

$$\begin{aligned} \Vert \psi \Vert _H^2 = \left\| \sum _{i=1}^n w_i\psi _i\right\| _H^2 + \left\| \psi - \sum _{i=1}^n w_i\psi _i\right\| _H^2. \end{aligned}$$(2.12)

3. \((\psi _i, \psi _j)_H = \Theta ^{-1}_{i,j}\).
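The three statements of Theorem 2.2 can be verified on a small discrete example. The sketch below (Python with NumPy) again assumes \(X = \mathbb {R}^N\) with the Euclidean inner product, a finite-difference stiffness matrix `A` with a random rough coefficient so that \({\mathcal {K}} = A^{-1}\) and \((u, v)_H = u^T A v\), and normalized piecewise constants for \(\{\varphi _i\}\). These choices, and the helper names, are illustrative assumptions.

```python
import numpy as np

def rough_stiffness(N, seed=0):
    """Finite-difference matrix for -(a u')' on (0, 1) with Dirichlet
    conditions and a rough (random) coefficient a; illustrative only."""
    rng = np.random.default_rng(seed)
    a = rng.uniform(0.1, 10.0, size=N + 1)
    A = np.diag(a[:-1] + a[1:]) - np.diag(a[1:-1], 1) - np.diag(a[1:-1], -1)
    return A * (N + 1) ** 2

def piecewise_constants(N, n):
    """Orthonormal indicator vectors of n equal blocks (N divisible by n)."""
    block = N // n
    Phi = np.zeros((N, n))
    for i in range(n):
        Phi[i * block:(i + 1) * block, i] = 1.0 / np.sqrt(block)
    return Phi

N, n = 64, 8
A = rough_stiffness(N)
K = np.linalg.inv(A)
Phi = piecewise_constants(N, n)

Theta = Phi.T @ K @ Phi                       # Eq. (2.10)
Theta_inv = np.linalg.inv(Theta)
Psi = K @ Phi @ Theta_inv                     # column i is psi_i from Eq. (2.11)

constraints = Phi.T @ Psi                     # should be the identity matrix
gram_H = Psi.T @ A @ Psi                      # should equal Theta^{-1} (item 3)

# Item 2: any feasible psi splits H-orthogonally as in Eq. (2.12).
rng = np.random.default_rng(2)
w = rng.standard_normal(n)
z = rng.standard_normal(N)
z -= Phi @ (Phi.T @ z)                        # perturbation with (phi_j, z) = 0
psi_min = Psi @ w
psi = psi_min + z                             # still satisfies (phi_j, psi) = w_j
lhs_212 = psi @ A @ psi
rhs_212 = psi_min @ A @ psi_min + z @ A @ z

print(np.abs(constraints - np.eye(n)).max(), lhs_212 - rhs_212)
```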

With a good choice of the space \(\Phi \) and its basis \(\{\varphi _i\}_{i=1}^n\), the energy-minimizing basis \(\psi _i\), defined in Eq. (2.9), enjoys good localization properties. We will prove that the energy-minimizing basis function \(\psi _i\) decays exponentially fast away from its associated patch. The localization property justifies the following local construction of the basis functions:

$$\begin{aligned} \begin{aligned} \psi _i^{{\mathrm {loc}}} = \mathop {\mathrm {arg\,min}}\limits _{\psi \in H} \quad&\Vert \psi \Vert _H^2 \\ \text {s.t.} \quad&(\varphi _j, \psi ) = \delta _{i,j}, \quad j = 1, 2, \ldots , n, \\&\psi (x) \equiv 0, \quad x \in D \backslash S_i, \end{aligned} \end{aligned}$$(2.13)

where \(S_i \subset D\) is a neighborhood of the patch that \(\psi _i\) is associated with. Compared with Eq. (2.9), the localized basis \(\psi _{i}^{{\mathrm {loc}}}\) is obtained by solving exactly the same quadratic problem but on a local domain \(S_i\).
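A one-dimensional discrete analogue of the local problem can be sketched as follows (Python with NumPy). It assumes a finite-difference stiffness matrix `A` with a random rough coefficient, normalized piecewise constants \(\varphi _j\) on equal blocks, and a patch \(S_i\) made of the block of \(\psi _i\) plus a given number of neighboring blocks on each side. Restricting to the patch amounts to taking the principal submatrix of `A`, and the constrained minimizer is obtained from the Lagrange conditions. The function `local_basis` and its parameters are hypothetical names for this illustration only.

```python
import numpy as np

def rough_stiffness(N, seed=0):
    """Finite-difference matrix for -(a u')' on (0, 1) with Dirichlet
    conditions and a rough (random) coefficient a; illustrative only."""
    rng = np.random.default_rng(seed)
    a = rng.uniform(0.1, 10.0, size=N + 1)
    A = np.diag(a[:-1] + a[1:]) - np.diag(a[1:-1], 1) - np.diag(a[1:-1], -1)
    return A * (N + 1) ** 2

def piecewise_constants(N, n):
    """Orthonormal indicator vectors of n equal blocks (N divisible by n)."""
    block = N // n
    Phi = np.zeros((N, n))
    for i in range(n):
        Phi[i * block:(i + 1) * block, i] = 1.0 / np.sqrt(block)
    return Phi

def local_basis(A, Phi, i, layers, block):
    """Minimize psi^T A psi subject to Phi^T psi = e_i and psi = 0
    outside the patch of blocks i - layers, ..., i + layers."""
    n = Phi.shape[1]
    lo, hi = max(i - layers, 0), min(i + layers + 1, n)
    idx = np.arange(lo * block, hi * block)   # grid points inside the patch
    A_S = A[np.ix_(idx, idx)]                 # stiffness matrix on the patch
    Phi_S = Phi[idx, lo:hi]                   # measurement functions in the patch
    Z = np.linalg.solve(A_S, Phi_S)
    e = np.zeros(hi - lo)
    e[i - lo] = 1.0
    coef = np.linalg.solve(Phi_S.T @ Z, e)    # Lagrange multipliers
    psi = np.zeros(A.shape[0])
    psi[idx] = Z @ coef                       # zero extension outside the patch
    return psi

N, n = 128, 16
block = N // n
A = rough_stiffness(N)
Phi = piecewise_constants(N, n)
i = n // 2

psi_global = local_basis(A, Phi, i, layers=n, block=block)  # patch = whole domain
energy = np.sqrt(psi_global @ A @ psi_global)

rel_errors = []
for layers in range(1, 6):
    d = local_basis(A, Phi, i, layers, block) - psi_global
    rel_errors.append(np.sqrt(d @ A @ d) / energy)
print(rel_errors)   # energy-norm error of psi_i^loc for growing patches
```

Because the feasible set grows with the patch, the orthogonal splitting (2.12) implies that the printed energy-norm errors cannot increase as layers are added; how quickly they decrease in this toy setting depends on the random coefficient.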

To compress elliptic operators with order 2k, we take \(\Phi \) as the space of (discontinuous) piecewise polynomials, with degree no more than \(k-1\). We take its basis as \(\{\varphi _{i,q}\}_{i=1, q=1}^{m, Q}\), where \(Q := \left( {\begin{array}{c}k+d-1\\ d\end{array}}\right) \) is the dimension of the d-variate polynomial space with degree no more than \(k-1\) and \(\{\varphi _{i,q}\}_{q=1}^{Q}\) is an orthonormal basis of the polynomial space on the patch \(\tau _i\). Two main theoretical results in this paper are as follows.

1. The basis function \(\psi _i\) decays exponentially fast away from its associated patch; see Theorems 6.3 and 6.4.

2. The localized basis function \(\psi _i^{{\mathrm {loc}}}\) approximates \(\psi _i\) accurately; see Theorem 7.1. Meanwhile, the compression rate \(E_{{\mathrm {oc}}}({\varPsi }^{{\mathrm {loc}}}; {\mathcal {L}}^{-1})\) is the same as \(E_{{\mathrm {oc}}}({\varPsi }; {\mathcal {L}}^{-1})\); see Theorem 7.2 and Corollary 7.3.

3 A projection-type polynomial approximation property

The following projection-type polynomial approximation property in the Sobolev space \(H^k(D)\) plays an essential role in both obtaining the optimal approximation error and proving the exponential decay of the energy-minimizing basis functions. It can be viewed as a generalized Poincaré inequality.

Theorem 3.1

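Suppose \(\Omega \subset \mathbb {R}^d\) is affine equivalent to \(\widehat{\Omega }\), i.e., there exists an invertible affine mapping

$$\begin{aligned} F: \widehat{x} \in \mathbb {R}^d \mapsto F(\widehat{x}) = B \widehat{x} + b \in \mathbb {R}^d \end{aligned}$$(3.1)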

such that \(F(\widehat{\Omega }) = \Omega \). Let h be the diameter of \(\Omega \) and \(\delta h\) be the maximum diameter of a ball inscribed in \(\Omega \). Let the mapping \(\Pi : H^{k+1}(\Omega ) \rightarrow {\mathcal {P}}_k(\Omega )\) be the projection onto the polynomial space with degree no greater than k in \(L^2(\Omega )\). Then, there exists a constant \(C(k, \widehat{\Omega })\) such that for any \(u \in H^{k+1}(\Omega )\) and any \(0 \le p \le k+1\)

To prove Theorem 3.1, we use a basic result about Sobolev spaces, due to J. Deny and J.L. Lions, which pervades the mathematical analysis of the finite element method: over the quotient space \(H^{k+1}(D)/{\mathcal {P}}_k(D)\), the seminorm \(|\cdot |_{k+1, D}\) is a norm equivalent to the quotient norm. We will use the following theorem (Theorem 3.1.4 in [6]) to prove Theorem 3.1.

Theorem 3.2

For some integers \(k \ge 0\) and \(m \ge 0\), let \(H^{k+1}(\widehat{\Omega })\equiv W^{k+1,2}(\widehat{\Omega })\) and \(H^m(\widehat{\Omega })\equiv W^{m,2}(\widehat{\Omega })\) be Sobolev spaces satisfying the inclusion

and let \(\widehat{\Pi } : H^{k+1}(\widehat{\Omega }) \rightarrow H^m(\widehat{\Omega })\) be a continuous linear mapping such that

For any open set \(\Omega \) which is affine equivalent to the set \(\widehat{\Omega }\) (see Eq. (3.1)), let the mapping \(\Pi _{\Omega }\) be defined by

for all functions \(\widehat{v} \in H^{k+1}(\widehat{\Omega })\) and \(v \in H^{k+1}(\Omega )\) in the correspondence \((\widehat{v}: \widehat{\Omega }\rightarrow \mathbb {R}) \rightarrow (v = \widehat{v}\circ F^{-1}: \Omega \rightarrow \mathbb {R})\). Then, there exists a constant \(C(\widehat{\Pi }, \widehat{\Omega })\) such that, for all affine-equivalent sets \(\Omega \),

where \(h = \text {diam}(\Omega )\) and \(\delta h\) is the diameter of the biggest ball contained in \(\Omega \).

By specializing the operator \(\widehat{\Pi }\) to be the projection of \(H^{k+1}(\widehat{\Omega })\) to the polynomial space \({\mathcal {P}}_k(\widehat{\Omega })\) in \(L^2(\widehat{\Omega })\), we can prove Theorem 3.1.

Proof of Theorem 3.1

Let \(\widehat{\Pi }: H^{k+1}(\widehat{\Omega }) \rightarrow {\mathcal {P}}_k(\widehat{\Omega })\) be the orthogonal projection in \(L^2(\widehat{\Omega })\). Let \(F:\widehat{\Omega } \rightarrow \Omega \) be the invertible affine map and write \(F(\widehat{x}) = B \widehat{x} + b\). Define \(\Pi _{\Omega }\) as

for all functions \(\widehat{v} \in H^{k+1}(\widehat{\Omega })\) and \(v \in H^{k+1}(\Omega )\) in the correspondence induced by the affine mapping F. In the following, we prove that \(\Pi _{\Omega }:H^{k+1}(\Omega ) \rightarrow H^{k+1}(\Omega )\) is indeed the orthogonal projection from \(H^{k+1}(\Omega )\) to \({\mathcal {P}}_k(\Omega )\) in \(L^2(\Omega )\).

First of all, we have \(\Pi _{\Omega } v = (\widehat{\Pi } \widehat{v}) \circ F^{-1}\) from definition. Since \(\widehat{\Pi } \widehat{v} \in {\mathcal {P}}_k(\widehat{\Omega })\), we have \(\Pi _{\Omega } v \in {\mathcal {P}}_k(\Omega )\). Secondly, for any \(v \in {\mathcal {P}}_k(\Omega )\), \(\widehat{v} = v\circ F \in {\mathcal {P}}_k(\widehat{\Omega })\), and thus \(\widehat{\Pi } \widehat{v} = \widehat{v}\) by the definition of \(\widehat{\Pi }\). Therefore, we have \(\Pi _{\Omega } v = \widehat{v} \circ F^{-1} = v\) for any \(v \in {\mathcal {P}}_k(\Omega )\). Thirdly, by changing variable with \(x = F(\widehat{x})\), for any \(v \in H^{k+1}(\Omega )\) and any \(p(x) \in {\mathcal {P}}_k(\Omega )\), we have

In the last equality, we have used the fact that \(\widehat{p} \in {\mathcal {P}}_k(\widehat{\Omega })\) if \(p \in {\mathcal {P}}_k(\Omega )\) and the fact that \(\widehat{\Pi }: H^{k+1}(\widehat{\Omega }) \rightarrow {\mathcal {P}}_k(\widehat{\Omega })\) is the orthogonal projection in \(L^2(\widehat{\Omega })\). Therefore, the kernel space of \(\Pi _{\Omega }\) is orthogonal to its range space, i.e., \({\mathcal {P}}_k(\Omega )\). With the three points above, we have proved that \(\Pi _{\Omega }\) is the orthogonal projection from \(H^{k+1}(\Omega )\) to \({\mathcal {P}}_k(\Omega )\) in \(L^2(\Omega )\).

Finally, applying Theorem 3.2 with \(\widehat{\Pi }\) and \(\Pi _{\Omega }\) above, we prove Theorem 3.1 with the constant \(C(k, \widehat{\Omega }) := C(\widehat{\Pi }, \widehat{\Omega })\) in Eq. (3.3). \(\square \)
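As a numerical illustration, the following sketch checks the \(p=0\) case in one dimension, assuming the estimate takes the standard form \(\Vert u - \Pi u\Vert _{L^2(\Omega )} \le C h^{k+1} |u|_{k+1,2,\Omega }\). It computes the \(L^2\) projection on the reference interval \([-1,1]\) and maps it to \(\Omega = (0,h)\), which is legitimate because the projection commutes with the affine map, as shown in the proof. The test function \(u = e^x\) and the helper names are choices made for this example only.

```python
import numpy as np
from numpy.polynomial import legendre


def projection_error(u, h, k, nquad=40):
    """L2(0,h) error of the orthogonal projection of u onto polynomials of degree <= k."""
    t, w = legendre.leggauss(nquad)                 # Gauss-Legendre rule on [-1, 1]
    x = 0.5 * h * (t + 1.0)                         # affine map F: [-1, 1] -> [0, h]
    ux = u(x)
    V = legendre.legvander(t, k)                    # P_0..P_k at the nodes, shape (nquad, k+1)
    norms = 2.0 / (2.0 * np.arange(k + 1) + 1.0)    # squared L2 norms of P_j on [-1, 1]
    coef = (V.T @ (w * ux)) / norms                 # Legendre coefficients of the projection
    residual = ux - V @ coef
    return np.sqrt(0.5 * h * np.sum(w * residual**2))


def scaled_ratio(h, k):
    """error / (h^(k+1) |u|_{k+1,2,(0,h)}) for u = exp, whose derivatives all equal exp."""
    seminorm = np.sqrt((np.exp(2.0 * h) - 1.0) / 2.0)
    return projection_error(np.exp, h, k) / (h ** (k + 1) * seminorm)


# If the h^(k+1) scaling holds, this ratio stays roughly constant as h is halved.
ratios = [scaled_ratio(h, k=1) for h in (0.5, 0.25, 0.125)]
print(ratios)
```

A ratio that stays nearly constant under refinement is consistent with a constant that depends only on k and the reference domain, not on h.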

We also give the following theorem, which is a direct consequence of Friedrichs’ inequality; see, e.g., [34].

Theorem 3.3

Let \(\Omega _h\) be a smooth, bounded, open subset of \(\mathbb {R}^d\) with diameter at most h. There exists a positive constant \(C_f\) such that

Here, \(C_f = C_f(d,k)\) depends only on the physical dimension d and the order of the derivative k.

4 An inverse energy estimation by scaling

In the sparse operator compression, we will show that for a large set of compact operators, the basis functions \(\{\psi _i\}_{i=1}^n\) constructed in (2.9) have exponentially decaying tails, which makes localization of these basis functions possible. The following lemma plays a key role in proving such exponential decay property.

Lemma 4.1

Let \(\Omega _{h}\) be a smooth, bounded, open subset of \(\mathbb {R}^d\) with diameter at most h and \(B(0, \delta h/2) \subset \Omega _{h}\) for some \(\delta > 0\). For \(k \in \mathbb {N}\), consider the operator \({\mathcal {L}}= (-1)^k \sum _{|\sigma | = k} D^{2 \sigma }\) with the homogeneous Dirichlet boundary condition on \(\partial \Omega _{h}\), i.e.,

Let \({\mathcal {P}}_{s}\) be the space of polynomials with degree not greater than s. For \(\gamma \ge 0\), there exists \(C(k, s, d, \delta ) > 0\), such that

Proof

Let \(G_h\) be the Green’s function of Eq. (4.1). After multiplying both sides of Eq. (4.1) by \(u_h\) and integrating by parts, we have \(| u_h|_{k,2,\Omega _{h}}^2 = \int _{\Omega _{h}} u_h(x) f(x)\mathrm {d}x\). Recall that \({\mathcal {L}}u_h \in {\mathcal {P}}_{s-1}\), and thus Eq. (4.2) is equivalent to

Let \(\{p_1, p_2, \ldots , p_Q\}\) be all the monomials that span \({\mathcal {P}}_{s-1}\). It is easy to see \(Q = \left( {\begin{array}{c}s+d-1\\ d\end{array}}\right) \). For convenience, we assume that \(\{p_i\}_{i=1}^Q\) are in non-decreasing order with respect to their degrees. Specifically, \(p_1 = 1\). Let \(u_{h,i}\) be the solution of Eq. (4.1) with right-hand side \(p_i\), and \(S_h, M_h \in \mathbb {R}^{Q\times Q}\) be defined as follows:

Then, Eq. (4.3) is equivalent to

where \(A \preceq B\) means that \(B-A\) is positive semidefinite. The change of variable \(x = h z\) leads to \(u_{h,i}(x) = h^{2k + o_i} u_{1,i}(z)\) where \(u_{1,i}\) is the solution of the following PDE on \(\Omega _1 \equiv \{x/h~:~x \in \Omega _h\}\):

and \(o_i\) is the degree of \(p_i\). Therefore, it is easy to check that

where \(S_1(i,j) = \int _{\Omega _{1}} \int _{\Omega _{1}} G_1 p_i p_j = \int _{\Omega _{1}} u_{1,i} p_j\) and \(M_1(i,j) = \int _{\Omega _{1}} p_i p_j\), which are independent of h. Notice that both \(S_1\) and \(M_1\) are symmetric positive definite, and let \(\lambda _{\max }(M_1, S_1) > 0\) be the largest generalized eigenvalue of \(M_1\) and \(S_1\). By choosing

we have

Combining (4.7) and (4.9), Eq. (4.5) naturally follows. In “Appendix A,” we prove that \(C(k, s, d, \Omega _1)\) can be bounded by \(C(k, s, d, \delta )\), and this proves the lemma. \(\square \)

For the case \(s = k = 1\), we can take

as proved in Proposition A.1. In this case, we have the estimate

where \(|\Omega _{h}|\) is the volume of \(\Omega _h\). The above bound is tight: when \(\Omega _h\) is a ball with diameter h, the equality holds true. Making use of the mean exit time of a Brownian motion, the author of [36] obtained a different bound

where \(V_d\) is the volume of a unit d-dimensional ball. The two estimates have the same order in \(\delta \) and h, but our estimate from Lemma 4.1 is much tighter. Moreover, Lemma 4.1 gives estimates for any order k and any degree s, which plays a key role in proving the exponential decay in higher-order cases, whereas the argument based on the mean exit time of a Brownian motion is difficult to generalize to these higher-order results.
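The tightness claim for a ball can be checked directly in the case \(s = k = 1\) with \({\mathcal {L}}= -\Delta \) and \(f \equiv 1\). The sketch below uses the explicit radial solution \(u(r) = (R^2 - r^2)/(2d)\) on a ball of radius \(R = h/2\) and evaluates \(h \Vert f\Vert _{L^2}/|u|_{1,2}\) by radial quadrature. It compares the result with \(\sqrt{4d(d+2)}\), which is the constant quoted in Lemma 5.1 for \(c \equiv 0\) when \(\delta = 1\); identifying that constant with the present setting is an assumption of this example.

```python
import numpy as np


def inverse_estimate_constant(d, h=1.0, nquad=20):
    """Return h * ||f||_{L2} / |u|_{1,2} for -Laplace(u) = f = 1 on a ball of diameter h."""
    R = 0.5 * h
    t, w = np.polynomial.legendre.leggauss(nquad)
    r = 0.5 * R * (t + 1.0)                  # quadrature nodes on [0, R]
    wr = 0.5 * R * w                         # matching quadrature weights
    u = (R**2 - r**2) / (2.0 * d)            # radial solution with u = 0 on the sphere
    # The surface area of the unit sphere cancels in the ratio, so it is omitted.
    f_sq = np.sum(wr * r ** (d - 1))         # proportional to ||f||^2
    energy = np.sum(wr * u * r ** (d - 1))   # proportional to |u|_1^2 = integral of u * f
    return h * np.sqrt(f_sq / energy)


for d in (1, 2, 3):
    print(d, inverse_estimate_constant(d), np.sqrt(4 * d * (d + 2)))
```

The two printed columns agree for each d and for any h, which reflects the \(h^{-1}\) scaling obtained from the change of variable \(x = hz\).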

5 Exponential decay of basis functions: the second-order case

The analysis for a general higher-order elliptic PDE is quite technical. In this section, we will prove that the basis function \(\psi _i\) for a second-order elliptic PDE has exponential decay away from \(\tau _i\). When \(c \equiv 0\), this problem has been studied in [36]. When \(c \ne 0\), it has been recently studied in [38] independently of our work. The results presented in this second-order case are not new [36]. We would like to use the simpler second-order elliptic PDE example to illustrate the main ingredients in the proof of exponential decay for a higher-order elliptic PDE, namely the recursive argument, the projection-type approximation property and the inverse energy estimate.

Consider the following second-order elliptic equation:

where D is an open bounded domain in \(\mathbb {R}^d\), the potential \(c(x) \ge 0\) and the diffusion coefficient a(x) is a symmetric, uniformly elliptic \(d\times d\) matrix with entries in \(L^{\infty }(D)\). For simplicity, we consider the homogeneous Dirichlet boundary condition here. We emphasize that all our analysis can be carried over for other types of homogeneous boundary conditions. We assume that there exist \(0 < a_{\min } \le a_{\max }\) and \(c_{\max }\) such that

To simplify our notation, for any \(\psi \in H\) and any subdomain \(S \subset D\), \(\Vert \psi \Vert _{H(S)}\) denotes \(\left( \int _{S} \nabla \psi \cdot a \nabla \psi + c \psi ^2\right) ^{1/2}\). For the second-order case, the projection-type approximation property is simply the Poincaré inequality. The following lemma provides us with the inverse energy estimate. It is a special case of Lemma 6.2 and can be proved by using Lemma 4.1.

Lemma 5.1

For any domain partition with \(h \le h_0 \equiv \pi \sqrt{\frac{a_{\max }}{2 c_{\max }}}\), we have

where \(C(d, \delta ) = \sqrt{8 d(d+2)} \delta ^{-1-d/2}\). If \(c_{\max } = 0\), i.e., \(c(x) \equiv 0\), Eq. (5.3) holds true for all \(h > 0\) and \(C(d, \delta ) = \sqrt{4 d(d+2)} \delta ^{-1-d/2}\).

Now, we are ready to prove the exponential decay of the basis function \(\psi _i\).

Theorem 5.1

For \(h \le h_0 \equiv \pi \sqrt{\frac{a_{\max }}{2 c_{\max }}}\), it holds true that

with \(l = \frac{e-1}{\pi }(1+C(d, \delta ))\sqrt{\frac{a_{\max }}{a_{\min }}}\) and \(C(d, \delta ) = \sqrt{8 d(d+2)} (1/\delta )^{d/2+1}\). If \(c_{\max } = 0\), i.e., \(c(x) \equiv 0\), Eq. (5.4) holds true for all \(h > 0\) with \(l = \frac{e-1}{\pi }(1+C(d, \delta ))\sqrt{\frac{a_{\max }}{a_{\min }}}\) and \(C(d, \delta ) = \sqrt{4 d(d+2)} \delta ^{-1-d/2}\).

Proof

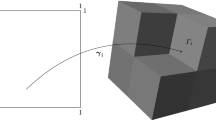

Let \(k\in \mathbb {N}\), \(l > 0\) and \(i \in \{1, 2, \ldots , m\}\). Let \(S_0\) be the union of all the domains \(\tau _j\) that are contained in the closure of \(B(x_i, k l h) \cap D\), let \(S_1\) be the union of all the domains \(\tau _j\) that are not contained in the closure of \(B(x_i, (k+1) l h) \cap D\) and let \(S^*=S_0^c \cap S_1^c \cap D\) (be the union of all the remaining elements \(\tau _j\) not contained in \(S_0\) or \(S_1\)), as illustrated in Fig. 1.

Let \(b_k := \Vert \psi _i\Vert _{H(S_0^c)}^2\), and from definition we have \(b_{0} = \Vert \psi _i\Vert _{H(D)}^2\), \(b_{k+1} = \Vert \psi _i\Vert _{H(S_1)}^2\) and \(b_{k}-b_{k+1} = \Vert \psi _i\Vert _{H(S^*)}^2\). The strategy is to prove that for any \(k \ge 1\), there exists a constant C such that \(b_{k+1} \le C(b_{k} - b_{k+1})\). Then, we have \(b_{k+1} \le \frac{C}{C+1} b_k\) for any \(k \ge 1\) and thus we get the exponential decay \(b_k \le (\frac{C}{C+1})^{k-1} b_1 \le (\frac{C}{C+1})^{k-1} b_0\). We will choose l such that \(C \le \frac{1}{\mathrm {e}- 1}\) and thus get \(b_k \le \mathrm {e}^{1-k} b_0\), which gives the result (5.4). We start from \(k=1\) because we want to make sure that \(\tau _i \subset S_0\); otherwise, \(S_0 = \emptyset \) and \(\tau _i \subset S^*\).
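The recursive argument can be illustrated with a few lines of arithmetic. The sketch below takes the worst case in which \(b_{k+1} = C(b_k - b_{k+1})\) holds with equality at every step and confirms that \(C = 1/(\mathrm {e}-1)\) yields the contraction factor \(1/\mathrm {e}\); the function names are illustrative.

```python
import math


def contraction_factor(C: float) -> float:
    """b_{k+1} <= C (b_k - b_{k+1}) rearranges to b_{k+1} <= C / (C + 1) * b_k."""
    return C / (C + 1.0)


def worst_case_energies(C: float, b1: float, steps: int) -> list:
    """Energies b_1, b_2, ... when the inequality holds with equality at every step."""
    b = [b1]
    for _ in range(steps):
        b.append(contraction_factor(C) * b[-1])
    return b


C = 1.0 / (math.e - 1.0)
b = worst_case_energies(C, b1=1.0, steps=5)
# b[j] stores b_{j+1}, so b[j] = e^{-j} * b_1 corresponds to b_k = e^{1-k} * b_1.
print(contraction_factor(C), b)
```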

Now, we prove that for any \(k \ge 1\), there exists a constant C such that \(b_{k+1} \le C(b_{k} - b_{k+1})\), i.e., \( \Vert \psi _i\Vert _{H(S_1)}^2 \le C \Vert \psi _i\Vert _{H(S^*)}^2\). Let \(\eta \) be the function on D defined by \(\eta (x) = {\mathrm {dist}}(x, S_0)/\left( {\mathrm {dist}}(x, S_0) + {\mathrm {dist}}(x, S_1)\right) \). Observe that (1) \(0 \le \eta \le 1\), (2) \(\eta \) is equal to zero on \(S_0\), (3) \(\eta \) is equal to one on \(S_1\), and (4) \(\Vert \nabla \eta \Vert _{L^{\infty }(D)} \le \frac{1}{l h}\) (see Footnote 1).

By integration by parts, we obtain

Since \(a \succeq 0\) and \(c \ge 0\), the left-hand side gives an upper bound for \(\Vert \psi _i\Vert _{H(S_1)}^2\). Combining \(\nabla \eta \equiv 0\) on \(S_0 \cup S_1\) and the Cauchy–Schwarz inequality, we obtain

We have used \(c \ge 0\) to get \(\left( \int _{S^*} \nabla \psi _i \cdot a \nabla \psi _i \right) ^{1/2} \le \Vert \psi _i\Vert _{H(S^*)}\) in the last inequality. By the construction of \(\psi _i\) (2.9), we have \(\int _D \psi _i \varphi _j = 0\) for \(i \ne j\). Thanks to (2.11), we have \(-\nabla \cdot (a \nabla \psi _i ) + c \psi _i \in \Phi \). Therefore, we have \(\int _{S_1} \eta \psi _i (-\nabla \cdot (a \nabla \psi _i ) + c \psi _i) = 0\). Denoting \(\eta _j\) as the volume average of \(\eta \) over \(\tau _j\), we have

Up to now, \(I_1\) and \(I_2\) are quantities that depend on \(\psi _i\) purely on \(S^*\), and we only need to prove that both of them can be bounded by \(\Vert \psi _i\Vert _{H(S^*)}^2\) (up to a constant). By applying the Poincaré inequality, we can easily do this for \(I_1\), as we will see soon. However, \(I_2\) involves the high-order term \(\Vert {\mathcal {L}}\psi _i\Vert _{L^2(\tau _j)}\) which in general may not be bounded by the lower-order term \(\Vert \psi _i\Vert _{H(S^*)}\). Fortunately, this can be proved since \({\mathcal {L}}\psi _i \in \Phi \), the piecewise constant function space. For the current operator \({\mathcal {L}}u= -\nabla \cdot (a(x) \nabla u) + c(x)u\) with rough coefficient a and nonzero potential c, Lemma 5.1 implies \(\Vert {\mathcal {L}}\psi _i\Vert _{L^2(\tau _j)} \le \sqrt{a_{\max }}C(d, \delta ) h^{-1} \Vert \psi _i\Vert _{H(\tau _j)}\) when \(h \le h_0\equiv \pi \sqrt{\frac{a_{\max }}{2 c_{\max }}}\). Then, we obtain

By the construction of \(\psi _i\) (2.9), we have \(\int _{\tau _j} \psi _i = 0\) for all \(\tau _j \subset S^*\). By the Poincaré inequality, we have \(\Vert \psi _i\Vert _{L^2(\tau _j)}\le \Vert \nabla \psi _i\Vert _{L^2(\tau _j)} h/\pi \), and then we obtain

By taking \(l \ge \frac{\mathrm {e}-1}{\pi }(1+C(d, \delta ))\sqrt{\frac{a_{\max }}{a_{\min }}}\), we have the constant \(\frac{1 + C(d, \delta )}{\pi l} \sqrt{\frac{a_{\max }}{a_{\min }}} \le \frac{1}{\mathrm {e}- 1}\). With the iterative argument given before, we have proved the exponential decay. \(\square \)
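The decay proved above can be observed in a small one-dimensional experiment. The following sketch discretizes \(-(a u')' = f\) on \((0,1)\) with homogeneous Dirichlet conditions by finite differences, takes \(\Phi \) to be piecewise constants on m equal patches, and computes the energy-minimizing functions subject to the constraints \(\int _D \psi _i \varphi _j = \delta _{ij}\). The grid sizes, the simple quadrature rule, and the function name `energy_minimizing_basis` are assumptions of this example and not part of the paper.

```python
import numpy as np


def energy_minimizing_basis(m=16, p=16, a=None):
    """Discrete energy-minimizing basis for -(a u')' on (0,1) with m patches of p nodes each."""
    N = m * p                                   # number of interior fine-grid nodes
    H = 1.0 / (N + 1)                           # fine mesh size
    if a is None:
        a = np.ones(N + 1)                      # coefficient on each of the N + 1 fine cells
    # Stiffness matrix of -(a u')' with u(0) = u(1) = 0.
    A = (np.diag(a[:-1] + a[1:])
         - np.diag(a[1:-1], 1)
         - np.diag(a[1:-1], -1)) / H
    h = p * H                                   # approximate patch size
    C = np.zeros((m, N))
    for j in range(m):
        # Quadrature for the integral of psi * phi_j, with phi_j = h^(-1/2) on patch j.
        C[j, j * p:(j + 1) * p] = H / np.sqrt(h)
    X = np.linalg.solve(A, C.T)                 # A^{-1} C^T
    G = C @ X                                   # C A^{-1} C^T
    Psi = X @ np.linalg.inv(G)                  # column i minimizes psi^T A psi s.t. C psi = e_i
    return Psi, C, A


Psi, C, A = energy_minimizing_basis()
i = 8
# Largest absolute value of psi_i on each patch; it shrinks away from patch i.
amp = np.abs(Psi[:, i]).reshape(16, 16).max(axis=1)
print(amp / amp[i])
```

Passing a rough coefficient array `a` of length `m * p + 1` allows the same experiment with oscillatory or high-contrast coefficients.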

Remark 5.1

We point out that boundary conditions may be important in several applications. For example, the Robin boundary condition is useful in the application of the SPCA. The periodic boundary condition is useful in compressing a Hamiltonian with a periodic boundary condition in quantum physics.

The above proof can be applied to the operator \({\mathcal {L}}\) in (5.1) with other boundary conditions as long as the corresponding problem \({\mathcal {L}}u = f\) has a unique solution \(u \in H^k(D)\) for every \(f\in L^2(D)\). For other homogeneous boundary conditions, the Cameron–Martin space is not \(H_0^1(D)\). Instead, we should use the solution space associated with the corresponding boundary condition. The proof of Theorem 5.1 can be easily carried over to other homogeneous boundary conditions, and the only difference is that a different boundary condition leads to a slightly different integration by parts in (5.5). For the homogeneous Neumann boundary condition or the periodic boundary condition, the proof is exactly the same because the integration by parts (5.5) can be carried out in exactly the same way. For the problems with the Robin boundary condition, i.e.,

where \(\alpha (x) \ge 0\), the Cameron–Martin space is the subspace of \(H^1(D)\) in which all elements satisfy the Robin boundary condition and the associated energy norm is defined as

In this case, for a subdomain \(S \subset D\), the local energy norm on S should be modified as follows:

Similarly, we can define the Cameron–Martin space and the associated energy norm for the homogeneous mixed boundary conditions.

6 Exponential decay of basis functions: the higher-order case

In this section, we will study the case when \({\mathcal {K}}:L^2(D) \rightarrow L^2(D)\) is the solution operator of the following higher-order elliptic equation:

Here, we only consider the case when \({\mathcal {L}}\) (thus \({\mathcal {K}}\)) is self-adjoint, i.e.,

The corresponding symmetric bilinear form on \(H_0^k(D)\) is denoted as

We assume that B is an inner product on \(H_0^k(D)\) and the induced norm \(\left( B(u,u)\right) ^{1/2}\) is equivalent to the \(H_0^k(D)\) norm, i.e., there exists \(0 < a_{\min } \le a_{\max }\) such that

Thanks to the Riesz representation lemma, Eq. (6.1) has a unique weak solution in \(H_0^k(D)\) for \(f \in L^2(D)\).

6.1 Construction of basis functions and the approximation rate

Suppose D is divided into elements \(\{\tau _i\}_{1\le i \le m}\), where each element \(\tau _i\) is a triangle or a quadrilateral in 2D, or a tetrahedron or hexahedron in 3D. Denote the maximum element diameter by h. We also assume that the subdivision is regular [6]. This means that if \(h_i\) denotes the diameter of \(\tau _i\) and \(\rho _i\) denotes the maximum diameter of a ball inscribed in \(\tau _i\), there is a constant \(\delta >0\) such that

Applying Theorem 3.1 to \(\Omega = \tau _i\), for any \(u \in H^k(D)\) and any \(0 \le p \le k\), we have

where \(\Pi _i:H^k(\tau _i) \rightarrow {\mathcal {P}}_{k-1}(\tau _i)\) is the orthogonal projection to the polynomial space \({\mathcal {P}}_{k-1}(\tau _i)\) in \(L^2(\tau _i)\), and \(\widehat{\tau }_i\) is some reference domain that is affine equivalent to \(\tau _i\). Notice that the constant \(C(k-1, \widehat{\tau }_i) \delta ^{-p}\) can be bounded from above by a constant \(C_p\) for all the elements \(\{\tau _i\}_{1\le i \le m}\), because all elements in \(\{\tau _i\}_{1\le i \le m}\) are affine equivalent to an equilateral triangle or a square in 2D, or to an equilateral 3-simplex or a cube in 3D. Therefore, for any \(u \in H^k(D)\), any \(1 \le i \le m\) and any \(0 \le p \le k\), we have

Specifically for \(p=0\), \(\widetilde{u} \in L^2(D)\) with \(\widetilde{u}|_{\tau _i} = \Pi _i u\), we conclude that

Let \(X = L^2(D)\) and \(H = H_0^k(D)\). We use the standard inner product for \(L^2(D)\) and use the inner product \(\langle u, v \rangle = B(u,v)\) for H. Further, we denote \({\mathcal {K}}: L^2(D) \rightarrow L^2(D)\) as the operator mapping f to the solution u in Eq. (6.1). Let \(\{\varphi _{i,q}\}_{q=1}^{Q}\) be an orthogonal basis of \({\mathcal {P}}_{k-1}(\tau _i)\) with respect to the inner product in \(L^2(\tau _i)\), where \(Q = \left( {\begin{array}{c}k+d-1\\ d\end{array}}\right) \) is the number of d-variate monomials with degree at most \(k-1\). We take

Without loss of generality, we normalize these basis functions such that

A set of basis functions of \(\Psi \) is defined by Eq. (2.9) accordingly, i.e.,

Combining Eqs. (6.4) and (6.6), we have

Applying Theorem 2.1 with X and H defined above, we have

1. For any \(u \in H\) and \({\mathcal {L}}u = f\), we have

   $$\begin{aligned} \Vert u - {\mathcal {P}}_{\Psi }^{(H)} u \Vert _{H} \le \frac{C_p h^k}{\sqrt{a_{\min }}} \Vert f\Vert _{L^2(D)}. \end{aligned} \tag{6.11}$$

   Here, \(C_p\) plays the role of the Poincaré constant \(1/\pi \).

2. For any \(u \in H\) and \({\mathcal {L}}u = f\), we have

   $$\begin{aligned} \Vert u - {\mathcal {P}}_{\Psi }^{(H)} u \Vert _{L^2(D)} \le \frac{C_p^2 h^{2 k}}{a_{\min }} \Vert f\Vert _{L^2(D)}. \end{aligned} \tag{6.12}$$

3. We have

   $$\begin{aligned} \Vert {\mathcal {K}}- {\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}\Vert \le \frac{C_p^2 h^{2 k}}{a_{\min }}. \end{aligned} \tag{6.13}$$

Notice that the eigenvalues of the operator \({\mathcal {L}}\) (with the homogeneous Dirichlet boundary conditions) in (6.1) grow like \(\lambda _n({\mathcal {L}}) \sim n^{2 k/d}\) (see, e.g., [7, 32]), and thus, the eigenvalues of \({\mathcal {K}}\) decay like \(\lambda _n({\mathcal {K}}) \sim n^{-2 k/d}\). Meanwhile, the rank of the operator \({\mathcal {P}}_{\Psi }^{(H)} {\mathcal {K}}\), denoted as n, roughly scales like \(Q/h^d\) where \(1/h^d\) is roughly the number of patches. Plugging \(n = Q/h^d\) into Eq. (6.13), we have

Therefore, our construction of the n-dimensional subspace \(\Psi \) approximates \({\mathcal {K}}\) at the optimal rate. In Sect. 6.2, we introduce the concept of strong ellipticity that enables us to prove exponential decay results. In Sect. 6.4, we will prove that the basis functions \(\psi _{i,q}\) defined in Eq. (6.9) have exponential decay away from \(\tau _i\).
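The rate argument above amounts to substituting \(h = (Q/n)^{1/d}\) into the bound (6.13). The following sketch carries out this arithmetic; the values \(C_p = a_{\min } = 1\) are placeholders chosen only for illustration, and the function names are hypothetical.

```python
from math import comb, isclose


def operator_error_bound(h, k, C_p=1.0, a_min=1.0):
    """Right-hand side of (6.13): C_p^2 h^(2k) / a_min."""
    return C_p**2 * h ** (2 * k) / a_min


def approximate_rank(h, k, d):
    """n ~ Q / h^d with Q = C(k + d - 1, d) basis functions per patch."""
    return comb(k + d - 1, d) / h**d


k, d = 2, 3
Q = comb(k + d - 1, d)
for h in (0.2, 0.1, 0.05):
    n = approximate_rank(h, k, d)
    # bound * n^(2k/d) should equal Q^(2k/d), independent of h.
    scaled = operator_error_bound(h, k) * n ** (2 * k / d)
    assert isclose(scaled, Q ** (2 * k / d), rel_tol=1e-12)
    print(f"h={h}: n={n:.0f}, bound={operator_error_bound(h, k):.3e}")
```

Since the bound behaves like \(n^{-2k/d}\), it matches the decay rate of the eigenvalues \(\lambda _n({\mathcal {K}})\) stated above, up to a constant.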

6.2 The strong ellipticity condition

In our proof, we need the following strong ellipticity condition of the operator \({\mathcal {L}}\) to obtain the exponential decay.

Definition 6.1

An operator in the divergence form \({\mathcal {L}}u := \sum \nolimits _{0 \le |\sigma |, |\gamma | \le k} (-1)^{|\sigma |} D^{\sigma } (a_{\sigma \gamma }(x) D^{\gamma } u)\) is strongly elliptic if there exists \(\theta _{k,\min } > 0\) such that

where \(\varvec{\zeta }_{\sigma }\) and \(\varvec{\zeta }_{\gamma }\) are the \(\sigma \)’th and \(\gamma \)’th entries of \(\varvec{\zeta }\), respectively. One can check that \(\left( {\begin{array}{c}k+d-1\\ k\end{array}}\right) \) is exactly the number of all possible kth derivatives, i.e., \(\#\{D^{\sigma } u: |\sigma | = k\}\).

For a 2kth-order partial differential operator \({\mathcal {L}}u = (-1)^k \sum \nolimits _{|\alpha |\le 2k} a_{\alpha } D^{\alpha } u\), \({\mathcal {L}}\) is strongly elliptic if there exists a strongly elliptic operator in the divergence form \(\widetilde{{\mathcal {L}}}\) such that \({\mathcal {L}}u = \widetilde{{\mathcal {L}}} u\) for all \(u \in C^{2k}(D)\).

Remark 6.1

For a 2kth-order partial differential operator \({\mathcal {L}}u = (-1)^k \sum \nolimits _{|\alpha |\le 2k} a_{\alpha } D^{\alpha } u\), its divergence form may not be unique. It is possible that it has two divergence forms, and one does not satisfy the strong ellipticity condition (6.15) while the other does. For example, the biharmonic operator \({\mathcal {L}}= \Delta ^2\) in two space dimensions has the following two different divergence forms:

where

when \(\{D^{\sigma } u: |\sigma | = 2\}\) is ordered as \((\partial _{x_1}^2, \partial _{x_2}^2, \partial _{x_1} \partial _{x_2})\). Obviously, the first one does not satisfy the strong ellipticity condition (6.15) while the second one does. These two divergence forms correspond to two bilinear forms on \(H_0^2(D)\):

where \(D^2 u : D^2 v = \sum _{i,j} \frac{\partial ^2 u}{\partial x_i \partial x_j} \frac{\partial ^2 v}{\partial x_i \partial x_j}\).
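The difference between the two divergence forms can be seen from the smallest eigenvalue of the coefficient matrix. The sketch below assumes that the two forms are those induced by the bilinear forms \(\int (\Delta u)(\Delta v)\) and \(\int D^2 u : D^2 v\), written in the ordering \((\partial _{x_1}^2, \partial _{x_2}^2, \partial _{x_1} \partial _{x_2})\); the matrices are written out under that assumption.

```python
import numpy as np

# Coefficients a_{sigma gamma} for |sigma| = |gamma| = 2, ordered as (d11, d22, d12).
A_laplacian = np.array([[1.0, 1.0, 0.0],
                        [1.0, 1.0, 0.0],
                        [0.0, 0.0, 0.0]])    # from (Laplace u)(Laplace v)
A_hessian = np.diag([1.0, 1.0, 2.0])         # from D^2 u : D^2 v (d12 is counted twice)


def strong_ellipticity_constant(A):
    """Largest theta with zeta^T A zeta >= theta |zeta|^2, i.e., the smallest eigenvalue."""
    return float(np.linalg.eigvalsh(A).min())


print(strong_ellipticity_constant(A_laplacian))   # zero: no positive theta exists
print(strong_ellipticity_constant(A_hessian))     # positive: strongly elliptic
```

Both matrices generate \(\Delta ^2\) after summing \(D^{\sigma }(a_{\sigma \gamma } D^{\gamma } u)\), so the operator is the same and only the chosen divergence form differs.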

The strong ellipticity condition guarantees that for any local subdomain \(S \subset D\), the seminorm \(|\cdot |_{k,2,S}\) can be controlled by the local energy norm \(\Vert \cdot \Vert _{H(S)}\).

Lemma 6.1

Suppose \({\mathcal {L}}u = \sum \nolimits _{0 \le |\sigma |, |\gamma | \le k} (-1)^{|\sigma |} D^{\sigma } (a_{\sigma \gamma }(x) D^{\gamma } u)\) is self-adjoint. Assume that \(a_{\sigma \gamma }(x) \in L^{\infty }(D)\) for all \(0 \le |\sigma |, |\gamma | \le k\) and that for any \(x \in D\)

- \({\mathcal {L}}\) is nonnegative, i.e.,

  $$\begin{aligned} \sum _{ 0 \le |\sigma |, |\gamma | \le k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \ge 0 \qquad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d\\ k\end{array}}\right) }, \end{aligned} \tag{6.19}$$

- \({\mathcal {L}}\) is bounded, i.e., there exist \(\theta _{0,\max }\ge 0\) and \(\theta _{k,\max } > 0\) such that

  $$\begin{aligned} \sum _{ 0 \le |\sigma |, |\gamma | \le k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \le \theta _{k,\max } \sum _{|\sigma | = k} \varvec{\zeta }_{\sigma }^2 + \theta _{0,\max } \sum _{|\sigma | < k} \varvec{\zeta }_{\sigma }^2 \qquad \forall x \in D \quad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d\\ k\end{array}}\right) }, \end{aligned} \tag{6.20}$$

- and \({\mathcal {L}}\) is strongly elliptic, i.e., there exists \(\theta _{k,\min } > 0\) such that

  $$\begin{aligned} \sum _{ |\sigma | = |\gamma | = k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \ge \theta _{k,\min } \sum _{|\sigma | = k} \varvec{\zeta }_{\sigma }^2 \qquad \forall x \in D \quad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d-1\\ k\end{array}}\right) }. \end{aligned} \tag{6.21}$$

For any subdomain \(S \subset D\) and any \(\psi \in H^{k}(D)\), define

Then, the following two claims hold true.

- If \({\mathcal {L}}\) contains only highest order terms, i.e., \({\mathcal {L}}u = \sum \nolimits _{|\sigma | = |\gamma | = k} (-1)^{|\sigma |} D^{\sigma } (a_{\sigma \gamma }(x) D^{\gamma } u)\), then we have

  $$\begin{aligned} |\psi |_{k,2,S} \le \theta _{k,\min }^{-1/2} \Vert \psi \Vert _{H(S)} \qquad \forall \psi \in H^{k}(D). \end{aligned} \tag{6.23}$$

- If \({\mathcal {L}}\) contains low-order terms, for any regular domain partition \(D = \cup _{i=1}^m \tau _i\) with diameter \(h>0\) satisfying \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{\theta _{k,\min }^2}{16 \theta _{0,\max }\theta _{k,\max } C_p^2}\), and any subdomain \(S = \cup _{j\in \Lambda } \tau _j\), we have

  $$\begin{aligned} |\psi _{i,q}|_{k,2,S} \le \left( 2/\theta _{k,\min }\right) ^{1/2} \Vert \psi _{i,q}\Vert _{H(S)} \qquad \forall \tau _i \not \in \mathcal {S},\quad 1\le q\le Q. \end{aligned} \tag{6.24}$$

  Here, \(\Lambda \) is any subset of \(\{1, 2, \ldots , m\}\), and \(\psi _{i,q}\) is defined by Eq. (6.9).

Proof

The first point can be obtained directly from the definition of strong ellipticity. In the following, we provide the proof of the second point. For S stated in the second point and any \(\psi \in H^{k}(D)\), we have

From the strong ellipticity (6.21), we have

From the nonnegativity (6.19), we have

Combining the nonnegativity (6.19) and the boundedness (6.20), we can prove that

Therefore, using the Cauchy–Schwarz inequality, we obtain

Thanks to the polynomial approximation property, for any \(\tau _i \not \in \mathcal {S}\) and \(1\le q\le Q\), we have

Combining Eqs. (6.28) and (6.29), for \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{\theta _{k,\min }^2}{16 \theta _{0,\max }\theta _{k,\max } C_p^2}\), we have

Combining Eqs. (6.25), (6.26), (6.27) and (6.30), we prove the second point. \(\square \)

Remark 6.2

When \({\mathcal {L}}\) contains low-order terms but there is no cross term between \(D^{\sigma } u\) (\(|\sigma | = k\)) and \(D^{\gamma } u\) (\(|\gamma | < k\)), i.e., \(J_3 = 0\), we can directly get the same bound in Eq. (6.23) for all \(h >0\).

The strong ellipticity condition above is different from the standard uniformly elliptic condition (see Definition 9.2 in [42]), i.e., a linear partial differential operator \({\mathcal {L}}u= (-1)^k \sum \nolimits _{|\alpha |\le 2k} a_{\alpha } D^{\alpha } u\) is uniformly elliptic if there exists a constant \(\theta _{k,\min }>0\) such that

On the one hand, it is obvious that a strongly elliptic operator with smooth coefficients is uniformly elliptic, by taking \(\varvec{\zeta }_{\sigma } := \varvec{\xi }^{\sigma }\) in Eq. (6.15). On the other hand, the relation between uniform ellipticity and strong ellipticity turns out to be closely related to the relation between nonnegative polynomials and sum-of-squares (SOS) polynomials. In fact, the strong ellipticity condition (6.15) holds if and only if there exists \(\theta _{k,\min } > 0\) such that

Using Hilbert’s famous theorem (1888) on nonnegative polynomials and SOS polynomials, we have the following theorem. Readers can find the proof and more discussion in [48].

Theorem 6.2

Let \(a_{\alpha } \in C^{|\alpha |-k}(\overline{D})\) for \(k < |\alpha | \le 2k\), \(a_{\alpha } \in C(\overline{D})\) for \(|\alpha | \le k\), and \({\mathcal {L}}u = (-1)^k \sum \nolimits _{|\alpha |\le 2k} a_{\alpha } D^{\alpha } u\) for all \(u \in C^{2 k} (D)\). Then in the following two cases, if \({\mathcal {L}}\) is uniformly elliptic it is also strongly elliptic.

- \(d=1\) or 2: one- or two-dimensional physical domain,

- \(k=1\): second-order partial differential operators.

For the case \((d, k)=(3, 2)\), i.e., fourth-order partial differential operators in 3-dimensional physical domain, all uniformly elliptic operators with constant coefficients are also strongly elliptic.

For the case \((d, k)= (3, 2)\), we are not able to prove that strong ellipticity is equivalent to uniform ellipticity for elliptic operators with smooth and multiscale coefficients, but we suspect that it is true. For all other cases, there are operators that are uniformly but not strongly elliptic. Fortunately, for small physical dimensions d and differential orders k, strongly elliptic operators approximate uniformly elliptic operators well and counterexamples are difficult to construct.

6.3 Exponential decay of basis functions I

In this subsection, we prove the exponential decay of basis functions constructed in Eq. (6.9) for higher-order elliptic operators that contain only the highest order terms. We will leave the proof for the general operators to the next subsection. The proof follows exactly the same structure as that in the second-order elliptic case.

Theorem 6.3

Let \({\mathcal {L}}u = (-1)^k \sum \nolimits _{|\sigma |=|\gamma |=k} D^{\sigma }(a_{\sigma \gamma } D^{\gamma } u)\) and \(a_{\sigma \gamma }(x) \in L^{\infty }(D)\) for all \(|\sigma |= |\gamma | = k\). Assume that for any \(x \in D\)

- \({\mathcal {L}}\) is bounded, i.e., there exists a nonnegative \(\theta _{k,\max }\) such that

  $$\begin{aligned} \sum _{ |\sigma |= |\gamma |= k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \le \theta _{k,\max } \sum _{|\sigma | = k} \varvec{\zeta }_{\sigma }^2 \qquad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d-1\\ k\end{array}}\right) }, \end{aligned} \tag{6.32}$$

- and \({\mathcal {L}}\) is strongly elliptic, i.e., there exists \(\theta _{k,\min } > 0\) such that

  $$\begin{aligned} \sum _{ |\sigma | = |\gamma | = k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \ge \theta _{k,\min } \sum _{|\sigma | = k} \varvec{\zeta }_{\sigma }^2 \qquad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d-1\\ k\end{array}}\right) }. \end{aligned} \tag{6.33}$$

Then for any \(1\le i \le m\) and \(1\le q\le Q\), it holds true that

with \(\sqrt{l^2-1} \ge (e-1) C_{\eta } C_p (C_1 + C(k,d,\delta )) \sqrt{\frac{\theta _{k,\max }}{\theta _{k,\min }}}\). Here, \(C_1\) and \(C_{\eta }\) only depend on k and d, \(C_p\) is the constant in Eq. (6.5) and \(C(k, d, \delta ) := C(k, k, d, \delta )\) is the constant from Lemma 4.1.

Proof

The proof follows the same structure as that of Theorem 5.1 and [36] (Thm. 3.9). Let \(k\in \mathbb {N}\), \(l > 0\) and \(i \in \{1, 2, \ldots , m\}\). Let \(S_0\) be the union of all the domains \(\tau _j\) that are contained in the closure of \(B(x_i, k l h) \cap D\), let \(S_1\) be the union of all the domains \(\tau _j\) that are not contained in the closure of \(B(x_i, (k+1) l h) \cap D\) and let \(S^*=S_0^c \cap S_1^c \cap D\) (be the union of all the remaining elements \(\tau _j\) not contained in \(S_0\) or \(S_1\)). In the following, we will prove that for any \(k \ge 1\), there exists a constant C such that \(\Vert \psi _{i,q}\Vert ^2_{H(S_1)} \le C \Vert \psi _{i,q}\Vert ^2_{H(S^*)}\). Then, the same recursive argument as in the proof of Theorem 5.1 can be used to prove the exponential decay.

Let \(\eta (x)\) be a smooth function which satisfies (1) \(0 \le \eta \le 1\), (2) \(\eta |_{B(x_i, k l h)} = 0\), (3) \(\eta |_{B^c(x_i, (k+1) l h)} = 1\) and (4) \(\Vert D^{\sigma } \eta \Vert _{L^{\infty }(D)} \le \frac{C_{\eta }}{(l h)^{|\sigma |}}\) for all \(\sigma \).
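One standard way to realize such a cutoff is to compose a \(C^{\infty }\) step function with the rescaled distance to \(x_i\). The sketch below is only an illustration and is not taken from the paper: the names `smooth_step` and `cutoff` are hypothetical, and the particular step function (built from \(e^{-1/t}\)) is an assumed choice. Since the step is applied to \((|x - x_i| - klh)/(lh)\), the chain rule gives derivatives of order \(|\sigma |\) that scale like \((lh)^{-|\sigma |}\), which is the shape of property (4).

```python
import math

def smooth_step(t):
    """C-infinity step: equals 0 for t <= 0 and 1 for t >= 1."""
    def g(s):
        # exp(-1/s) extended by zero; all derivatives vanish at s = 0
        return math.exp(-1.0 / s) if s > 0 else 0.0
    # the denominator is positive because t > 0 or 1 - t > 0 always holds
    return g(t) / (g(t) + g(1.0 - t))

def cutoff(x, x_i, k, l, h):
    """Hypothetical eta: 0 on B(x_i, k*l*h), 1 outside B(x_i, (k+1)*l*h)."""
    r = math.dist(x, x_i)  # Euclidean distance to the patch center
    return smooth_step((r - k * l * h) / (l * h))
```

For \(k \ge 1\) the function vanishes in a neighborhood of \(x_i\), so the non-smoothness of \(|x - x_i|\) at the center does not matter.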

By integration by parts, we have

Making use of the binomial (Leibniz) formula \(D^{\sigma }(\eta \psi _{i,q}) = \eta D^{\sigma } \psi _{i,q} + \sum _{\mathop {|\sigma _1| \ge 1}\limits ^{\sigma _1 + \sigma _2 = \sigma }} \left( {\begin{array}{c}\sigma \\ \sigma _1\end{array}}\right) D^{\sigma _1} \eta D^{\sigma _2} \psi _{i,q}\), we obtain

Since \(\sum \nolimits _{|\sigma |= |\gamma | = k} a_{\sigma \gamma }(x) D^{\sigma }\psi _{i,q} D^{\gamma } \psi _{i,q} \ge 0\) for every \(x\in D\), the left-hand side gives an upper bound for \(\Vert \psi _{i,q}\Vert _{H(S_1)}^2\). Since \(D^{\sigma _1} \eta = 0\) (\(|\sigma _1| \ge 1\)) on both \(S_0\) and \(S_1\), we obtain

Here, \(C_1\) is a constant depending only on k and d. We have used the Cauchy–Schwarz inequality and the bound (6.32) in Eq. (6.37). We will defer the proof of the last step in Eq. (6.38) to the “Appendix.” Since \(\psi _{i,q} \perp {\mathcal {P}}_{k-1}\) locally in \(L^2\), we obtain from Theorem 3.1 that

Therefore, we get

In the last inequality, we have used \(\sum _{s'=1}^k l^{- 2s'} = \frac{1-l^{-2k}}{l^2 -1} \le \frac{1}{l^2 -1}\).
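For completeness, this identity is the finite geometric sum with ratio \(r := l^{-2}\) (the shorthand r is introduced here only for this check), where \(l > 1\) as required by the constraint on l:

$$\begin{aligned} \sum _{s'=1}^k l^{-2s'} = \sum _{s'=1}^k r^{s'} = \frac{r(1-r^{k})}{1-r} = \frac{l^{-2}(1-l^{-2k})}{1-l^{-2}} = \frac{1-l^{-2k}}{l^2-1} \le \frac{1}{l^2-1}. \end{aligned}$$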

By the construction of \(\psi _{i,q}\) given in (6.9), we have \(\int _D \psi _{i,q} \varphi _{j,q'} = 0\) for \(i \ne j\). Thanks to (2.11), we have \({\mathcal {L}}\psi _{i,q} \in \Phi \). Therefore, we get \(\int _{S_1} \eta \psi _{i,q} {\mathcal {L}}\psi _{i,q} = 0\). Denoting \(\eta _j\) as the volume average of \(\eta \) over \(\tau _j\), we obtain

By using Lemma 6.2, which is stated in the beginning of Sect. 6.5, we have \(\Vert {\mathcal {L}}\psi _{i,q}\Vert _{L^2(\tau _j)} \le \sqrt{\theta _{k,\max }}C(k, d, \delta ) h^{-k} \Vert \psi _{i,q}\Vert _{H(\tau _j)}\) for any \(h > 0\) because \({\mathcal {L}}\) contains only the highest order derivatives. Then, we obtain

where we have used Eq. (6.5) in the last step.

Combining Eqs. (6.40) and (6.42), we obtain

By the strong ellipticity (6.33) and Eq. (6.23), we have \(|\psi _{i,q}|_{k,2,S^*} \le \theta _{k,\min }^{-1/2} \Vert \psi _{i,q}\Vert _{H(S^*)}\). Therefore, we have

By taking \(\sqrt{l^2-1} \ge (e-1) C_{\eta } C_p (C_1 + C(k,d,\delta )) \sqrt{\frac{\theta _{k,\max }}{\theta _{k,\min }}}\), the exponential decay naturally follows. \(\square \)
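The role of the factor \((e-1)\) can be seen from the following sketch of the recursion. It assumes that the one-step estimate has the form \(\Vert \psi _{i,q}\Vert ^2_{H(S_1)} \le C \Vert \psi _{i,q}\Vert ^2_{H(S^*)}\) with \(C \le \frac{1}{e-1}\), which is presumably what the constraint on l guarantees. The shorthand \(b_k := \Vert \psi _{i,q}\Vert ^2_{H(D \setminus S_0)}\), with \(S_0\) taken at level k, is introduced here for illustration only. Since \(D \setminus S_0 = S^* \cup S_1\) and \(S_1\) at level k coincides with \(D \setminus S_0\) at level \(k+1\), we have \(\Vert \psi _{i,q}\Vert ^2_{H(S^*)} = b_k - b_{k+1}\) and

$$\begin{aligned} b_{k+1} \le C (b_k - b_{k+1}) \quad \Longrightarrow \quad b_{k+1} \le \frac{C}{1+C}\, b_k \le \frac{1}{e}\, b_k \quad \Longrightarrow \quad b_{k+1} \le e^{-k} b_1 \le e^{-k} \Vert \psi _{i,q}\Vert ^2_{H(D)}. \end{aligned}$$

The middle inequality uses that \(C/(1+C)\) is increasing in C and equals \(1/e\) at \(C = \frac{1}{e-1}\).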

6.4 Exponential decay of basis functions II

The following theorem gives the exponential decay property of \(\psi _{i,q}\) for an operator \({\mathcal {L}}\) with lower-order terms. The proof is similar to that of Theorem 6.3, except that we need the polynomial approximation property (6.5) and the Friedrichs’ inequality (3.4) to bound the lower-order terms, and we get an extra factor of 2 in our error bound.

Theorem 6.4

Suppose \({\mathcal {L}}u = \sum \nolimits _{0 \le |\sigma |, |\gamma | \le k} (-1)^{|\sigma |} D^{\sigma } (a_{\sigma \gamma }(x) D^{\gamma } u)\) is self-adjoint. Assume that \(a_{\sigma \gamma }(x) \in L^{\infty }(D)\) for all \(0 \le |\sigma |, |\gamma | \le k\) and that for any \(x \in D\)


\({\mathcal {L}}\) is nonnegative, i.e.,

$$\begin{aligned} \sum _{ 0 \le |\sigma |, |\gamma | \le k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \ge 0, \qquad \forall x \in D \quad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d\\ k\end{array}}\right) }, \end{aligned}$$(6.44)

\({\mathcal {L}}\) is bounded, i.e., there exist \(\theta _{0,\max }\ge 0\) and \(\theta _{k,\max } > 0\) such that

$$\begin{aligned} \sum _{ 0 \le |\sigma |, |\gamma | \le k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \le \theta _{k,\max } \sum _{|\sigma | = k} \varvec{\zeta }_{\sigma }^2 + \theta _{0,\max } \sum _{|\sigma | < k} \varvec{\zeta }_{\sigma }^2 \qquad \forall x \in D , \quad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d\\ k\end{array}}\right) }, \end{aligned}$$(6.45)

and \({\mathcal {L}}\) is strongly elliptic, i.e., there exists \(\theta _{k,\min } > 0\) such that

$$\begin{aligned} \sum _{ |\sigma | = |\gamma | = k} a_{\sigma \gamma }(x) \varvec{\zeta }_{\sigma } \varvec{\zeta }_{\gamma } \ge \theta _{k,\min } \sum _{|\sigma | = k} \varvec{\zeta }_{\sigma }^2, \qquad \forall \varvec{\zeta } \in \mathbb {R}^{\left( {\begin{array}{c}k+d-1\\ k\end{array}}\right) }. \end{aligned}$$(6.46)

Then there exists \(h_0 > 0\) such that for any \(h \le h_0\), \(1\le i \le m\) and \(1\le q\le Q\), it holds true that

with \(\sqrt{l^2-1} \ge 2 (e-1) C_{\eta } C_p (C_1 + C(k,d,\delta )) \sqrt{\frac{\theta _{k,\max }}{\theta _{k,\min }}}\). Here, \(C_1\) and \(C_{\eta }\) depend on k and d only, \(C_p\) is the constant given in Eq. (6.5), \(C(k, d, \delta ) := C(k, k, d, \delta )\) is given in Lemma 4.1 and \(\theta _{k,\max } := \max (\theta _{0,\max }, \theta _{k,\max })\). The constant \(h_0\) can be taken as

where \(C_f\) is the constant in the Friedrichs’ inequality (3.4).

Proof

The proof follows the same structure as the proof of Theorem 6.3. All we need to do is to use the polynomial approximation property (6.5) and the Friedrichs’ inequality (3.4) to bound the lower-order terms when they appear. First, the \(I_1\) in Eq. (6.35) contains all the lower-order terms and its estimation should be modified as follows:

Here, \(\theta _{k,\max } := \max (\theta _{0,\max }, \theta _{k,\max })\). We have used the Cauchy–Schwarz inequality and the bound (6.45) in Eq. (6.49). We will defer the proof of the last step in Eq. (6.50) to the “Appendix.” Since \(\psi _{i,q} \perp {\mathcal {P}}_{k-1}\) locally in \(L^2\), we obtain from Theorem 3.1 that

Therefore, we have

If we compare the above estimate with Eq. (6.40), we conclude that Eq. (6.52) contains all the lower-order terms. We will use the polynomial approximation property (6.5) and take \(\frac{h^2-h^{2k}}{1-h^2} \le 1/C_p^2\) to guarantee that Eq. (6.53) is valid. When \({\mathcal {L}}\) contains lower-order terms, by Lemma 6.2, we have \(\Vert {\mathcal {L}}\psi _{i,q}\Vert _{L^2(\tau _j)} \le \sqrt{2 \theta _{k,\max }}C(k, d, \delta ) h^{-k} \Vert \psi _{i,q}\Vert _{H(\tau _j)}\) for any \(h >0\) satisfying \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{\theta _{k,\max }}{2 \theta _{0,\max } C_f^2}\). Therefore, using Eq. (6.42) we get

when h satisfies \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{\theta _{k,\max }}{2 \theta _{0,\max } C_f^2}\). Finally, we need to use Eq. (6.24) instead of Eq. (6.23) to bound \(|\psi _{i,q}|_{k,2,S^*}\). We get

where we have imposed another condition on h, i.e., \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{\theta _{k,\min }^2}{16 \theta _{0,\max }\theta _{k,\max } C_p^2}\). By taking \(\sqrt{l^2-1} \ge 2 (e-1) C_{\eta } C_p (C_1 + C(k,d,\delta )) \sqrt{\frac{\theta _{k,\max }}{\theta _{k,\min }}}\), we prove the exponential decay. \(\square \)

Remark 6.3

As we have pointed out in Remark 6.2, when \({\mathcal {L}}\) contains low-order terms but there is no cross term between \(D^{\sigma } u\) (\(|\sigma | = k\)) and \(D^{\gamma } u\) (\(|\gamma | < k\)), Eq. (6.23) can be used to bound \(|\psi _{i,q}|_{k,2,S^*}\). In this case, the constraint on l is

and the \(h_0\) can be taken as

6.5 Lemmas

In this subsection, we will prove the following lemma, which is used in the proof of Theorem 6.3 and Theorem 6.4.

Lemma 6.2

Let \({\mathcal {L}}\) be defined as in Eq. (6.1) and let the space \(\Psi \) be defined as above. Assume that for any \(x \in D\)

Let \(C_f\) be the constant in the Friedrichs’ inequality (3.4). Then for any domain partition with \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{\theta _{k,\max }}{2 \theta _{0,\max } C_f^2}\), we have

where \(C(k, d, \delta ) = C(k,k,d,\delta )\) from Lemma 4.1.

If the operator \({\mathcal {L}}\) contains only the highest order terms, i.e., \({\mathcal {L}}u = (-1)^k \sum \nolimits _{|\sigma |=|\gamma |=k} D^{\sigma }(a_{\sigma \gamma } D^{\gamma } u)\), we have \(\Vert {\mathcal {L}}v\Vert _{L^2(\tau _j)} \le \sqrt{\theta _{k,\max }} C(k,d,\delta ) h^{-k} \Vert v\Vert _{H(\tau _j)}\) for all \(h > 0\).

We will use Lemma 4.1 to prove this result, but we need to deal with the variable coefficients \(a_{\sigma \gamma }\) and the low-order terms \(a_{\sigma \gamma }\) with \(|\sigma |+|\gamma | < 2 k\) before we can apply Lemma 4.1. Our strategy is to transfer the variable coefficients to constant ones by the variational formulation (see Lemma 6.3) and to use the polynomial approximation property to deal with the low-order terms; see Lemma 6.4. For this purpose, we first introduce the following two lemmas.

Lemma 6.3

Let \(\Omega \) be a smooth, bounded, open subset of \(\mathbb {R}^d\). Let \({\mathcal {L}}u = \sum \nolimits _{0 \le |\sigma |, |\gamma | \le k} (-1)^{|\sigma |} D^{\sigma } (a_{\sigma \gamma }(x) D^{\gamma } u)\) and \({\mathcal {M}}u = \sum \nolimits _{0 \le |\sigma |, |\gamma | \le k} (-1)^{|\sigma |} D^{\sigma } (b_{\sigma \gamma }(x) D^{\gamma } u)\) be two symmetric operators on \(H_0^k(\Omega )\). Moreover, we assume that the bilinear forms induced by both \({\mathcal {L}}\) and \({\mathcal {M}}\) are equivalent to the standard norm on \(H_0^k(\Omega )\). Let \(G_{{\mathcal {L}}}\) and \(G_{{\mathcal {M}}}\) be the Green’s functions of \({\mathcal {L}}\) and \({\mathcal {M}}\), respectively. If for any \(x \in \Omega \) we have

then for all \(f \in L^2(\Omega )\),

Proof

Let \(f\in L^2(\Omega )\). Let \(\psi _{{\mathcal {L}}}\) and \(\psi _{{\mathcal {M}}}\) be the weak solutions of \({\mathcal {L}}\psi _{{\mathcal {L}}} = f\) and \({\mathcal {M}}\psi _{{\mathcal {M}}} = f\) with the homogeneous Dirichlet boundary conditions on \(\partial \Omega \). Observe that \(\psi _{{\mathcal {L}}}\) and \(\psi _{{\mathcal {M}}}\) are the unique minimizers of \(I_{{\mathcal {L}}}(u, f)\) and \(I_{{\mathcal {M}}}(u, f)\) with

At the minima \(\psi _{{\mathcal {L}}}\) and \(\psi _{{\mathcal {M}}}\), we have

Observe that

where the first inequality is true because \(\psi _{{\mathcal {L}}}\) is the minimizer of \(I_{{\mathcal {L}}}\), and the second inequality is true because \(I_{{\mathcal {L}}}(u,f)\le I_{{\mathcal {M}}}(u,f)\) for any \(u \in H_0^k(\Omega )\). Combining Eq. (6.61) and (6.62), we obtain \(\int _{\Omega } \psi _{{\mathcal {M}}} f \le \int _{\Omega } \psi _{{\mathcal {L}}} f\). This proves the lemma. \(\square \)
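A finite-dimensional analogue may help make the monotonicity concrete. In the sketch below, which is an illustration and not part of the paper, symmetric positive definite matrices `A` and `B` stand in for \({\mathcal {L}}\) and \({\mathcal {M}}\), the assumption \(I_{{\mathcal {L}}} \le I_{{\mathcal {M}}}\) becomes "\(B - A\) is positive semidefinite", and the Green's function quadratic forms become \(f^T A^{-1} f\) and \(f^T B^{-1} f\). The function name, the random construction of the matrices and the sizes are all hypothetical.

```python
import numpy as np

def green_quadratic_forms(n=8, seed=0):
    """Return (f^T A^{-1} f, f^T B^{-1} f) for SPD A, B with B - A PSD."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, n))
    A = X @ X.T + n * np.eye(n)       # SPD stand-in for the operator L
    Y = rng.standard_normal((n, n))
    B = A + Y @ Y.T                   # B - A is PSD, so u^T A u <= u^T B u
    f = rng.standard_normal(n)
    psi_L = np.linalg.solve(A, f)     # discrete analogue of L psi_L = f
    psi_M = np.linalg.solve(B, f)     # discrete analogue of M psi_M = f
    return float(f @ psi_L), float(f @ psi_M)

g_L, g_M = green_quadratic_forms()
print(g_M <= g_L)  # the larger energy form has the smaller Green's form
```

The same variational argument as in the proof applies verbatim to the matrices, with \(I_A(u, f) = \frac{1}{2} u^T A u - u^T f\).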

Lemma 6.4

Let \(\Omega _h\) be a smooth, convex, bounded, open subset of \(\mathbb {R}^d\) with diameter at most h. Let \(G_{h}\) be the Green’s function of \({\mathcal {L}}u = (-1)^k \sum _{|\sigma | = k} D^{2 \sigma } u + c \sum _{|\sigma | < k} (-1)^{|\sigma |} D^{2 \sigma } u\) with the homogeneous Dirichlet boundary condition on \(\partial \Omega _h\) and \(G_{h,0}\) be the Green’s function of \({\mathcal {L}}_0 u = (-1)^k \sum _{|\sigma | = k} D^{2 \sigma } u\) with the homogeneous Dirichlet boundary condition on \(\partial \Omega _h\). Here, \(c > 0\) is a constant. Then, for any \(f\in L^2(\Omega _h)\)

Moreover, \(\frac{\int _{\Omega _h} \int _{\Omega _h} G_{h}(x,y) f(x) f(y) \mathrm {d}x\,\mathrm {d}y}{\int _{\Omega _h} \int _{\Omega _h} G_{h,0}(x,y) f(x) f(y)\mathrm {d}x\,\mathrm {d}y} \ge 1/2\) for all \(h>0\) such that \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{1}{2 c C_f^2}\).

Proof

Let \(\psi _{h}\) be the solution of \({\mathcal {L}}\psi _{h} = f\) with the homogeneous Dirichlet boundary conditions on \(\partial \Omega _h\) and \(\psi _{h,0}\) be the solution of \({\mathcal {L}}_0 \psi _{h,0} = f\) with the homogeneous Dirichlet boundary conditions on \(\partial \Omega _h\). Let

At the minima \(\psi _{h}\) and \(\psi _{h,0}\), we have

Note that Eq. (6.65) implies that \(I_{{\mathcal {L}}_0}(\psi _{h,0}, f) < 0\). By the definition of Green’s function, we further have

Since \(I_{{\mathcal {L}}_0}(u, f) \le I_{{\mathcal {L}}}(u, f)\) for any \(u \in H_0^k(\Omega _h)\), we have \(\frac{\int _{\Omega _h} \int _{\Omega _h} G_{h}(x,y)f(x) f(y) \mathrm {d}x\,\mathrm {d}y}{\int _{\Omega _h} \int _{\Omega _h} G_{h,0}(x,y) f(x) f(y) \mathrm {d}x\,\mathrm {d}y} \le 1\) for any \(h > 0\). Applying the Friedrichs’ inequality (3.4) to \(\Vert \psi _{h,0}\Vert _{k-1,2,\Omega _h}^2\), we get

Here, we have used Eq. (6.66) in the last equality. Therefore, we have

where we have used \(I_{{\mathcal {L}}}(\psi _{h}, f) \le I_{{\mathcal {L}}}(\psi _{h,0}, f)\) in the first inequality. By using the above upper bound, we prove the lemma. \(\square \)
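The two-sided bound can be observed numerically in the simplest case \(k = 1\), \(d = 1\), where \({\mathcal {L}}u = -u'' + c u\) and \({\mathcal {L}}_0 u = -u''\) on an interval of length h. The sketch below is an illustration under assumptions not in the paper: it uses a standard three-point finite-difference discretization with Dirichlet boundary conditions, a random right-hand side, and a hypothetical function name `green_ratio`. The quadrature weight of the discrete \(L^2\) inner product cancels in the ratio and is therefore omitted.

```python
import numpy as np

def green_ratio(h, c, n=200, seed=0):
    """Ratio (f, G_h f) / (f, G_{h,0} f) for -u'' + c u versus -u'' on (0, h)."""
    dx = h / (n + 1)                  # n interior grid points
    # three-point Dirichlet discretization of -u''
    A0 = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / dx**2
    A = A0 + c * np.eye(n)            # adds the lower-order term c * u
    f = np.random.default_rng(seed).standard_normal(n)
    num = f @ np.linalg.solve(A, f)   # discrete (f, G_h f)
    den = f @ np.linalg.solve(A0, f)  # discrete (f, G_{h,0} f)
    return float(num / den)

print(green_ratio(h=0.1, c=10.0))    # close to 1 when c * h**2 is small
```

In this discrete setting the ratio is at most 1 for every h, and it is at least \(1/(1 + c/\lambda _{\min })\), where \(\lambda _{\min } \approx \pi ^2/h^2\) is the smallest eigenvalue of the discrete \(-u''\); this mirrors the smallness condition on h in the lemma.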

Now, we are ready to prove Lemma 6.2.

Proof of Lemma 6.2

Let \(v = \sum _{i=1}^m \sum _{q=1}^{Q} c_{i,q} \psi _{i,q}\). Thanks to Eq. (2.11), we have

Let \(g_{j} = \sum _{q'=1}^{Q} \sum _{i,q} c_{i,q} \Theta _{iq,jq'}^{-1} \varphi _{j,q'}\). Due to the construction of \(\varphi _{j,q'}\), we have

Furthermore, v can be decomposed over \(\tau _j\) as \(v = v_1 + v_2\), where \(v_1\) solves \({\mathcal {L}}v_1 = g_j(x)\) in \(\tau _j\) with \(v_1 \in H_0^k(\tau _j)\), and \(v_2\) solves \({\mathcal {L}}v_2 = 0\) with \(v_2 - v \in H_0^k(\tau _j)\). It is easy to check that \(\Vert v\Vert _{H(\tau _j)}^2 = \Vert v_1\Vert _{H(\tau _j)}^2 + \Vert v_2\Vert _{H(\tau _j)}^2\). We denote \(G_j\) as the Green’s function of the operator \({\mathcal {L}}\) with the homogeneous Dirichlet boundary condition on \(\tau _j\), then

Thanks to Lemma 6.3, we have

where \(G_j^*\) is the Green’s function of the operator \((-1)^k \sum \nolimits _{|\sigma | = k} D^{2 \sigma } u + \frac{\theta _{0,\max }}{\theta _{k,\max }} \sum \nolimits _{|\sigma | < k} (-1)^{|\sigma |} D^{2 \sigma } u\) with the homogeneous Dirichlet boundary condition on \(\partial \tau _j\). Thanks to Lemma 6.4 with \(c = \theta _{0,\max }/\theta _{k,\max }\), for all \(h>0\) such that \(\frac{h^2(1-h^{2k})}{1-h^2} \le \frac{\theta _{k,\max }}{2 \theta _{0,\max } C_f^2}\) we have