Abstract

In this work, we study the convergence of an efficient iterative method, the fast sweeping method (FSM), for numerically solving static convex Hamilton–Jacobi equations. First, we show the convergence of the FSM on arbitrary meshes. Then we illustrate that the combination of a contraction property of monotone upwind schemes with proper orderings can provide fast convergence for iterative methods. We show that this mechanism produces different behavior from that for elliptic problems as the mesh is refined. An equivalence between the local solver of the FSM and the Hopf formula under linear approximation is also proved. Numerical examples are presented to verify our analysis.


1 Background

Efficient and robust iterative methods are highly desirable for solving a variety of static hyperbolic partial differential equations (PDEs) numerically. The most important property of hyperbolic problems is that the information propagates along the characteristics. For linear hyperbolic problems, the characteristics are known a priori and do not intersect. For nonlinear hyperbolic problems, the characteristics are not known a priori and may intersect. Consequently, the information that propagates along different characteristics has to compromise in certain ways when the characteristics intersect in order to render the desired weak solution. When discretizing hyperbolic PDEs, monotone upwind schemes are an important class of schemes that use stencils following the characteristics in the direction from which the information comes (e.g., see [39]). An effective iterative method for hyperbolic problems should also fully exploit these properties. We use the fast sweeping method (FSM) [43] as an example to show that the combination of monotone upwind schemes with Gauss–Seidel iterations and proper orderings can provide fast convergence. The convergence mechanism is different from that of iterative methods for elliptic problems, where relaxation is the underlying mechanism for convergence and the key point is how to deal with long-range interactions through short-range interactions efficiently by using techniques such as multigrid and/or effective preconditioners. We present both analysis and examples to explain this different convergence behavior as the mesh is refined.

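In this work, we consider the following static Hamilton–Jacobi equation

$$\begin{aligned} \left\{ \begin{array}{ll} H(\mathbf x ,\nabla u(\mathbf x ))=0, &{} \mathbf x \in \Omega , \\ u(\mathbf x )=g(\mathbf x ), &{} \mathbf x \in \partial \Omega , \end{array}\right. \end{aligned}$$(1.1)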

where \(\Omega \) is a bounded domain in \(R^d\) with d the dimension, and H is the Hamiltonian that satisfies the following assumptions:

- (A1) Continuity: \(H\in C({\overline{\Omega }}\times R^d)\),

- (A2) Convexity: \(H(\mathbf x ,\mathbf p )\) is convex in \(\mathbf p \in R^d\) for all \(\mathbf x \in {\overline{\Omega }}\),

- (A3) Coercivity: \(H(\mathbf x ,\mathbf p ) \rightarrow \infty \) as \(|\mathbf p |\rightarrow \infty \) uniformly for \(\mathbf x \in {\overline{\Omega }}\),

- (A4) Compatibility of Hamiltonian: \(H(\mathbf x ,0)\le 0\) for all \(\mathbf x \in \Omega \),

- (A5) Compatibility of Dirichlet data: \(g(\mathbf x )-g(\mathbf y )\le l(\mathbf x ,\mathbf y )\) for all \(\mathbf x ,\mathbf y \in \partial \Omega \), where \(l(\mathbf x ,\mathbf y )\) is the optical distance defined by [7, 22],

$$\begin{aligned} \begin{array}{l} l(\mathbf x ,\mathbf y )=\inf \left\{ \int _0^1\rho (\xi (t),-\xi '(t))\mathrm{{d}}t : \xi \in C^{0,1}([0,1],{\overline{\Omega }}), \xi (0)=\mathbf x , \xi (1)=\mathbf y \right\} , \\ \\ \text{ with }~\rho (\mathbf x ,\mathbf q )=\max \limits _{H(\mathbf x ,\mathbf p )=0}\langle \mathbf p , \mathbf q \rangle \text{ the support function. } \end{array} \end{aligned}$$(1.2)

- (A6) g is Lipschitz continuous.
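As an illustration of these assumptions (a worked special case added here for orientation, not part of the analysis itself), consider the Eikonal Hamiltonian \(H(\mathbf x ,\mathbf p )=|\mathbf p |-f(\mathbf x )\), assuming \(f\) is continuous and positive on \({\overline{\Omega }}\). It is continuous, convex and coercive in \(\mathbf p \), and \(H(\mathbf x ,0)=-f(\mathbf x )\le 0\), so (A1)–(A4) hold. The zero level set is the circle \(|\mathbf p |=f(\mathbf x )\), and the support function and optical distance in (1.2) reduce to

$$\begin{aligned} \rho (\mathbf x ,\mathbf q )=\max \limits _{|\mathbf p |=f(\mathbf x )}\langle \mathbf p ,\mathbf q \rangle =f(\mathbf x )\,|\mathbf q |, \qquad l(\mathbf x ,\mathbf y )=\inf _{\xi }\int _0^1 f(\xi (t))\,|\xi '(t)|\,\mathrm{{d}}t, \end{aligned}$$

where the maximum is attained at \(\mathbf p =f(\mathbf x )\,\mathbf q /|\mathbf q |\) for \(\mathbf q \ne 0\). In this case \(l(\mathbf x ,\mathbf y )\) is the \(f\)-weighted length of the shortest path in \({\overline{\Omega }}\) joining \(\mathbf x \) and \(\mathbf y \); for \(f\equiv 1\) and a convex domain it is simply \(|\mathbf x -\mathbf y |\).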

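The characteristic equations for (1.1) are given by

$$\begin{aligned} \dot{\mathbf x }=\nabla _\mathbf p H(\mathbf x ,\mathbf p ), \qquad \dot{\mathbf p }=-\nabla _\mathbf x H(\mathbf x ,\mathbf p ), \qquad \dot{u}=\langle \mathbf p ,\nabla _\mathbf p H(\mathbf x ,\mathbf p )\rangle , \end{aligned}$$(1.3)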

with appropriate initial conditions \(\mathbf x (0) = \mathbf x _0, \mathbf p (0) = \mathbf p _0\) and \(u(0) = u(\mathbf x _0)\). Due to the nonlinearity, the characteristics may intersect and a classical solution of (1.1) does not exist in general. We consider the viscosity solution [10, 11]: a function \(u\in C^{0,1}({\overline{\Omega }})\) is a viscosity subsolution (supersolution, resp.) if, for all \(v\in C_0^{\infty }(\Omega )\) such that \(u-v\) attains a local maximum (minimum, resp.) at some \(\mathbf x _0\in \Omega \),

$$\begin{aligned} H(\mathbf x _0,\nabla v(\mathbf x _0))\le 0 \quad (\ge 0,\ \text{resp.}). \end{aligned}$$(1.4)

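A viscosity solution is both a viscosity subsolution and a viscosity supersolution. The mathematical definition of the viscosity solution can be interpreted as the Hopf formula [8, 19, 22],

$$\begin{aligned} u(\mathbf x )=\inf _{\mathbf y \in \partial \Omega }\left\{ g(\mathbf y )+l(\mathbf x ,\mathbf y )\right\} , \end{aligned}$$(1.5)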

where \(u(\mathbf x )\) is the value function for an appropriate optimal control problem.

The fast sweeping method is an efficient iterative method for solving (1.1). It has been developed and used successfully for various hyperbolic problems (e.g., see [6, 7, 14, 15, 17, 20, 23, 26, 28, 30, 34, 35, 40, 43–45] and references therein for development, and [3, 18, 21, 24, 25, 27, 29, 31] and references therein for applications to different problems). The key ingredients for the success of the FSM are the following:

- An appropriate upwind discretization scheme (or local solver) that is consistent with the underlying PDE and guarantees the numerical solution converges to the desired weak solution.

- Gauss–Seidel iterations with enforced causality: combined with an appropriate upwind scheme it means that (1) the information propagates along the characteristics efficiently, and (2) all newly updated information is used in a correct way and the intersection of different characteristics can be resolved.

- Alternating orderings that can cover the propagation of the information in all directions in a systematic and efficient way.

The above properties can result in optimal complexity for the FSM, i.e., the number of iterations is finite and independent of the grid size. Previous studies on the convergence of the FSM were mainly based on the method of characteristics [34, 35, 43]. The assumption is that all characteristics start from the boundary, where the data are explicitly given, and are of finite length. Convergence is then achieved in a finite number of iterations that is independent of the grid size, because any characteristic can be divided into a finite number of pieces and the information propagation along each piece is covered by one of the sweeping directions [43]. Another view, in terms of linear algebra, is that the system of discretized equations can be turned into a triangular system if the right ordering is followed, so only one iteration is needed if the ordering is known. For nonlinear problems the ordering depends on the solution, which is not available a priori. However, since an upwind scheme is used, all grid points can be divided into a few connected domains according to their upwind directions. Each connected domain with similar upwind dependence can be turned into a triangular system in one of the systematic orderings.
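The triangular-system view can be illustrated with a minimal sketch. It assumes a toy 1D problem, \(|u'|=1\) on [0, 1] with \(u(0)=0\), discretized by the first-order upwind update \(u_i=\min (u_{i-1},u_{i+1})+h\); the problem and the helper names `sweep` and `count_sweeps` are illustrative assumptions, not taken from the paper. Sweeping in the direction of the characteristics solves the system in one pass, while sweeping against them moves the information by only one grid point per pass.

```python
def sweep(u, h, order):
    """One Gauss-Seidel sweep in the given order; returns True if any value changed."""
    changed = False
    n = len(u)
    for i in order:
        if i == 0:
            continue  # boundary value u(0) = 0 stays fixed
        right = u[i + 1] if i + 1 < n else float("inf")
        candidate = min(u[i - 1], right) + h  # upwind update for |u'| = 1
        if candidate < u[i]:
            u[i] = candidate
            changed = True
    return changed


def count_sweeps(n, ascending):
    """Count the sweeps that still change the solution, for one fixed ordering."""
    h = 1.0 / (n - 1)
    u = [0.0] + [float("inf")] * (n - 1)  # large initial guess away from the boundary
    order = list(range(n)) if ascending else list(range(n - 1, -1, -1))
    sweeps = 0
    while sweep(u, h, order):
        sweeps += 1
    return sweeps, u


if __name__ == "__main__":
    print(count_sweeps(11, ascending=True)[0])   # ordering follows the characteristics
    print(count_sweeps(11, ascending=False)[0])  # ordering opposes the characteristics
```

With 11 grid points, the left-to-right ordering needs a single updating sweep, whereas the right-to-left ordering needs 10, one per interior grid point. Both reach the same discrete solution.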

In this paper we first explain the monotonicity and consistency of the FSM and show that it has a contraction property. Then we prove the convergence that is implied by the contraction property. We prove the convergence in two ways: one follows the usual convergence proof as in [5] by monotonicity and consistency; the other is through its equivalence to the discretized Hopf formula in the framework of linear approximation. More importantly, we show that the contraction property implies a local truncation error estimate. Furthermore, we study the contraction property of the FSM for hyperbolic problems and use it to explain the fast convergence. Through the contraction property we study the convergence of Gauss–Seidel iterations with proper orderings. By using an example with periodic boundary conditions (see examples in Sect. 4), we analyze and explain a phenomenon for hyperbolic problems which is different from that for elliptic problems: fewer iterations are needed as the mesh is refined. Since periodic boundary conditions are imposed, the discretized system cannot be put into a triangular system due to the cyclic dependence, and the argument based on the method of characteristics cannot be used.

The paper is organized as follows. In Sect. 2 we recall monotone upwind schemes and the fast sweeping method. In Sect. 3 we first show the contraction property of monotone upwind schemes; then, we prove the convergence of the fast sweeping method. In Sect. 4 we show some numerical examples to verify our analysis. Concluding remarks are given at the end.

2 Monotone upwind schemes

The first step to solve the Hamilton–Jacobi equation (1.1) numerically with finite difference schemes on a mesh/grid is the discretization of the differential operators that reduces a continuous problem of infinite dimension to a discrete problem of finite dimension. The discretization must be consistent, which means it approximates the PDE with certain accuracy such that the local truncation error goes to zero as the grid size approaches zero. In an abstract form, a discretization scheme establishes an equation for the solution at each grid point and its neighbors that is consistent with the PDE. Assume the equation at grid i has the general form

after discretization, where \(F_i\) denotes a relation of the solution at grid i and its neighbors \(j\in N(i)\).

Remark 2.1

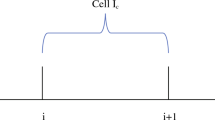

A time-dependent problem can be regarded as a special case in which the characteristic has a fixed direction in one variable, i.e., the time. This special case usually leads to the simplification of the above general relation, e.g., with explicit time stepping,

i.e., the solution at time \(t_{n+1}\) depends on the solution at previous times (see Fig. 1a). However, this explicit scheme has to satisfy a CFL (Courant–Friedrichs–Lewy) condition to guarantee the causality of the true solution, i.e., the numerical domain of dependence must include the true domain of dependence [9]. Geometrically this means that if one traces the characteristic through \(\mathbf x _i\) at \(t_{n+1}\) backward in time, the characteristic will go through the interior of the polygon formed by \(\mathbf x _j, j\in N(i)\) at time \(t^n\) (see Fig. 1a). Numerically this means that there is a constraint on the time step such that \(c\frac{\delta t}{\delta x}\le 1\) where c is the speed of the characteristic. One can also develop unconditionally stable implicit schemes by allowing the characteristics to come in from the side (see Fig. 1b). In this case the solution at mesh points at \(t_{n+1}\) may depend on each other, which essentially turns into the general form (2.1) except that we know the solution at \(t_{n+1}\) does not depend on the solution at \(t_{n+2}\) or later time.

Although implicit schemes still require a time step of the same order as that given by the CFL condition for accuracy reasons, unconditionally stable implicit schemes have a few advantages:

- The time step can still be relaxed by a factor as explained above and in Fig. 1. Using an efficient implicit solver, such as the fast sweeping method, one can still gain computational efficiency.

- The constant in the CFL condition for explicit schemes may not be easy to estimate for nonlinear problems. For example, when the Hamiltonian is nonlinear, the flux velocity depends on the solution itself and varies significantly such that a sharp global bound of the velocity is unavailable. This usually leads to either inefficiency or instability.

The consistency of a numerical scheme is usually easy to satisfy. However, it does not guarantee the stability and convergence of the numerical solution. For hyperbolic PDEs, an important class of discretization schemes is monotone schemes. The monotonicity is equivalent to requiring that \(F_i\) is nondecreasing in its first variable and nonincreasing in the remaining variables or vice versa. For nonlinear hyperbolic problems the monotonicity is usually necessary for the convergence of the numerical solutions to the correct weak solutions [5, 12]. Although monotone schemes are at most first-order accurate (e.g., see [32, 39]), they provide the most robust schemes in practice as well as the starting point for high-order schemes [6, 33, 38, 42]. The simplest way to construct a monotone scheme is the Lax–Friedrichs scheme, which uses central differences to approximate the derivatives and an explicit numerical viscosity term with a coefficient proportional to the grid size, e.g., the discrete Laplacian, to achieve the monotonicity. The main advantage of the Lax–Friedrichs scheme is its simplicity and generality. However, it is not upwind, which makes iterative methods converge slowly because the underlying equation has changed from a hyperbolic problem to an elliptic problem with singular perturbation. The convergence to the correct viscosity solution is through the vanishing viscosity as the grid size decreases. Therefore we focus on upwind monotone schemes, which are more desirable for iterative methods. In particular, we study the FSM on a general mesh [34, 35, 43]. For simplicity we restrict our discussion to 2D. Extension to higher dimensions is straightforward.

The key point of the discretization for the FSM is to use the PDE locally to enforce the consistency and the causality condition. The discretization is detailed as follows. We consider meshes that satisfy the following regularity requirement. Given a mesh \(\Omega _h\) covering the domain \(\Omega \), for each triangle/simplex of the mesh, we denote the diameter of this triangle by \(h_1\) and the minimal height of its vertices by \(h_0\), and let h be the maximum diameter among all triangles of the mesh. Then we assume the mesh satisfies the assumption:

where \(\theta \) is some constant. Given a vertex \(\mathbf C \) and its local mesh \(D_h^\mathbf C \) which consists of all triangles that include \(\mathbf C \) as a common vertex (see Fig. 2), the local discretization scheme first uses the PDE to find a possible value at \(\mathbf C \) that satisfies both the consistency and the causality condition on each triangle and then picks the minimum one among all the possible values according to the control interpretation (or the Hopf formula) of the viscosity solution.

For a typical triangle/simplex \(\triangle \mathbf A \mathbf B \mathbf C \) with vertices \(\mathbf A =(x_A, y_A)\), \(\mathbf B =(x_B, y_B)\) and \(\mathbf C =(x_C, y_C)\), angles \(\angle \mathbf{A }=\alpha \), \(\angle \mathbf{B }=\beta \) and \(\angle \mathbf{C }=\gamma \) and sides \(|\overline{\mathbf{A \mathbf B }}|=c\), \(|\overline{\mathbf{A \mathbf C }}|=b\) and \(|\overline{\mathbf{B \mathbf C }}|=a\), we use linear Taylor expansion,

to approximate \(\nabla u(\mathbf C )\) as

Inserting \(\nabla u (\mathbf C )\) into the Hamilton–Jacobi equation (1.1) at point \(\mathbf C \), we have a consistent discretization of the equation on the triangle \(\triangle \mathbf A \mathbf B \mathbf C \),

which yields an equation for \(u(\mathbf A ), u(\mathbf B )\) and \(u(\mathbf C )\), i.e.,

where \({\hat{H}}\) is the numerical Hamiltonian. Given values \(u(\mathbf A )\) and \(u(\mathbf B )\), we solve (2.7) for \(u(\mathbf C )\). Since \(\nabla u(\mathbf C )\) is approximated as a linear vector function in \(u(\mathbf C )\) by (2.5), the solution corresponds to the intersection(s) of a straight line (parameterized by \(u(\mathbf C )\)) with the level set \(\{\mathbf{p }: H(\mathbf{C },\mathbf{p })=0\}\) in the phase space \(\mathbf p =\nabla u \in R^2\). Since H is convex in \(\mathbf p \), the level set \(\{\mathbf{p }: H(\mathbf{C },\mathbf{p })=0\}\) is also convex. There are only three scenarios (see Fig. 3):

1. There are no solutions for \(u(\mathbf C )\) from (2.7), i.e., the triangle does not support any consistent candidate for \(u(\mathbf C )\), e.g., Fig. 3a.

2. There is only one solution for \(u(\mathbf C )\) from (2.7), i.e., the triangle supports one consistent candidate for \(u(\mathbf C )\), e.g., Fig. 3b.

3. There are two solutions for \(u(\mathbf C )\) from (2.7), i.e., the triangle supports two consistent candidates for \(u(\mathbf C )\), e.g., Fig. 3c.

For scenario 1 we choose

where \(l(\cdot ,\cdot )\) is the optical distance between two points defined by (1.2). For scenario 2 or 3, we need to further check whether a candidate value for \(u(\mathbf C )\) that is consistent with the PDE satisfies the following causality condition: the characteristic passing through \(\mathbf C \) is in between the two vectors \(\overrightarrow{\mathbf{A \mathbf C }}\) and \(\overrightarrow{\mathbf{B \mathbf C }}\). This turns out to be a crucial condition for the monotonicity of the scheme, which will be discussed later. For a value \(u(\mathbf C )\) we compute \(\nabla u(\mathbf C )\) from (2.5) and check whether the vector \( \nabla _{ \mathbf p }H(\mathbf C ,\nabla u(\mathbf C ))\) is in between \(\overrightarrow{\mathbf{A \mathbf C }}\) and \(\overrightarrow{\mathbf{B \mathbf C }}\). We call \(u(\mathbf C )\) a feasible candidate if it satisfies both the consistency and the causality condition. We can use the same procedure to find feasible candidates for \(u(\mathbf C )\) from other triangles that include \(\mathbf C \) as one of their vertices. Now the question is: if there is more than one candidate, which one should be chosen? One can imagine that when different characteristics intersect, each characteristic will provide a possible candidate. The viscosity solution will pick only one of them. Although we have used the local information provided by the PDE, which gives us the relations among all the neighboring vertices and reduces the possible candidates to a few, we need extra information, usually global, to determine which candidate corresponds to the viscosity solution. In this situation, the PDE definition of the viscosity solution (1.4) is difficult to use. On the other hand, the optimal control interpretation (or the Hopf formula) (1.5) gives a convenient criterion: among all the possible candidates of \(u(\mathbf C )\) we choose the minimum one. Although the control interpretation is global, the optimality condition can be applied easily and locally using the dynamic programming principle [4, 22]. In this way, the upwind information determined by the characteristic is chosen correctly.
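The procedure on a single triangle can be sketched as follows. This is a minimal illustration that assumes the Eikonal case \(H(\mathbf C ,\mathbf p )=|\mathbf p |-f\) with \(f\) constant on the triangle, so that the consistency equation becomes a quadratic in \(u(\mathbf C )\), the characteristic direction is parallel to \(\nabla u(\mathbf C )\), and the edge cost is \(f\) times the edge length. The function name `solve_triangle_eikonal` and its argument layout are hypothetical.

```python
import math


def solve_triangle_eikonal(A, B, C, uA, uB, f):
    """Candidate value for u(C) from triangle ABC for |grad u| = f (f > 0 constant)."""
    # Rows of M are C - A and C - B; the linear Taylor expansion reads
    # M p = (uC - uA, uC - uB) with p approximating grad u(C).
    m11, m12 = C[0] - A[0], C[1] - A[1]
    m21, m22 = C[0] - B[0], C[1] - B[1]
    det = m11 * m22 - m12 * m21
    # Values obtained by travelling along the edges AC and BC (always available).
    best = min(uA + f * math.hypot(m11, m12), uB + f * math.hypot(m21, m22))
    if det == 0.0:
        return best  # degenerate triangle: only the edges can be used
    # p = uC * a - b, with a = M^{-1} (1, 1) and b = M^{-1} (uA, uB).
    a = ((m22 - m12) / det, (m11 - m21) / det)
    b = ((m22 * uA - m12 * uB) / det, (m11 * uB - m21 * uA) / det)
    # Consistency |p|^2 = f^2 gives qa * uC^2 + qb * uC + qc = 0.
    qa = a[0] ** 2 + a[1] ** 2
    qb = -2.0 * (a[0] * b[0] + a[1] * b[1])
    qc = b[0] ** 2 + b[1] ** 2 - f * f
    disc = qb * qb - 4.0 * qa * qc
    if disc < 0.0:
        return best  # scenario 1: no consistent candidate from this triangle
    for sign in (1.0, -1.0):  # scenarios 2 and 3: one or two roots
        uC = (-qb + sign * math.sqrt(disc)) / (2.0 * qa)
        p = (uC * a[0] - b[0], uC * a[1] - b[1])
        # Causality: p = l1 * (C - A) + l2 * (C - B) with l1, l2 >= 0.
        l1 = (m22 * p[0] - m21 * p[1]) / det
        l2 = (m11 * p[1] - m12 * p[0]) / det
        if l1 >= 0.0 and l2 >= 0.0:
            best = min(best, uC)  # keep the minimum feasible candidate
    return best


if __name__ == "__main__":
    # Compatible data: the feasible root of the quadratic is selected.
    print(solve_triangle_eikonal((1.0, 0.0), (0.0, 1.0), (1.0, 1.0), 1.0, 1.0, 1.0))
    # Incompatible data: no root exists and the value comes through the edge AC.
    print(solve_triangle_eikonal((1.0, 0.0), (0.0, 1.0), (1.0, 1.0), 0.0, 10.0, 1.0))
```

Taking the minimum over the edge values and the feasible roots mirrors the selection rule described above; a full local solver would repeat this over every triangle that has \(\mathbf C \) as a vertex.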

Now we relate the above scheme to the one derived in [7] using the optimal control formulation based on the following fact: if the Hamiltonian is convex and homogeneous in space, i.e., \(H(\mathbf x ,\mathbf p )=H(\mathbf p )\), it can be shown that the optimal path between two points \(\mathbf x , \mathbf y \in \Omega \) is the straight line \(\overline{\mathbf{x \mathbf y }}\) connecting these two points if \(\overline{\mathbf{x \mathbf y }}\subset \Omega \) [7, 22]. Let

then \(\xi '(t)=\mathbf y -\mathbf x \), and

It is easy to see that for a smooth and convex Hamiltonian \(H(\mathbf p )\), \(\max _{H(\mathbf p )=0}\langle \mathbf p ,\mathbf q \rangle \) is obtained at a unique \({\hat{\mathbf{p }}}\) such that

One can solve the above equation for \({\hat{\mathbf{p }}}\) and get

In our first-order approximation, we assume \(H(\mathbf x ,\mathbf p )\approx H(\mathbf C ,\mathbf p )\) on the local mesh \(D_h^\mathbf C \) of \(\mathbf C \). The above scheme is equivalent to finding the optimal value \(u(\mathbf C )\) with the boundary value defined as the linear interpolation of the nodal values on each line segment of \(\partial D_h^\mathbf C \). In particular, for each triangle, e.g., \(\triangle \mathbf A \mathbf B \mathbf C \), if the values at \(\mathbf B \) and \(\mathbf C \) are compatible, i.e., \(|u(\mathbf B )-u(\mathbf C )|\le l(\mathbf B ,\mathbf C )\), then scenario 2 or 3 occurs. The corresponding characteristic is the optimal path in this triangle. However, if the values at \(\mathbf B \) and \(\mathbf C \) are not compatible, the optimal path is one of the edges. That is, for example, if the value at one vertex, say \(\mathbf A \), is much smaller than the values at the other vertices, then \(u(\mathbf A )+l(\mathbf A ,\mathbf C )\) may provide a smaller value for \(u(\mathbf C )\) than any other triangle or edge. This is important when one starts from an arbitrary initial guess, i.e., when the values at vertices on or near the boundary are set according to the boundary conditions, while all other vertices are assigned a large value.

By denoting the minimum value among those possible candidates satisfying the consistency and causality through each triangle on \(D_h^\mathbf C \) by \({\hat{u}}(\mathbf C )\), and the minimum value among those values going through the edges connecting to \(\mathbf C \) by

our local solver of the discretization scheme for \(u(\mathbf C )\) is

This scheme is consistent, monotone and upwind. The scheme is upwind because the value of \(u(\mathbf C )\) is determined by either one triangle or one edge, which is the upwind direction. The consistency and monotonicity guarantee that the numerical solution will converge to the correct viscosity solution as the grid size approaches zero [5, 12]. We will show a few important properties of this scheme in more detail in Sect. 3.1.

The discretization at each vertex results in a nonlinear equation like (2.7). Since we have a boundary value problem, all the vertices are coupled together and a large system of nonlinear equations needs to be solved, which is the second step in designing the method. The key idea behind the FSM is using causality enforced Gauss–Seidel iterations with alternating orderings.

We summarize the method:

1. Initial guess: For vertices on or near the boundary, their values are set according to the given boundary conditions, and they are fixed during iterations. All other vertices are assigned a large value, e.g., infinity.

2. Causality enforced Gauss–Seidel iterations with alternating orderings (sweepings):

   - Update during each iteration: at a vertex \(\mathbf C \), the updated value \(u^\mathrm{{new}}(\mathbf C )\) at \(\mathbf C \) is

     $$\begin{aligned} u^\mathrm{{new}}(\mathbf C )=\min \{u^\mathrm{{old}}(\mathbf C ), {\tilde{u}}(\mathbf C )\}, \end{aligned}$$(2.12)

     where \(u^\mathrm{{old}}(\mathbf C )\) is the current value at \(\mathbf C \) and \({\tilde{u}}(\mathbf C )\) is the value at \(\mathbf C \) computed from the current given neighboring values according to (2.7) and (2.11).

   - Orderings: all vertices are ordered according to a finite number of different orderings alternately during Gauss–Seidel iterations. The orderings are designed in a way to cover all the directions of the characteristics systematically and efficiently. For example, in 2D cases, if a Cartesian grid is used, four alternating orderings are needed and given by

     $$\begin{aligned} \begin{array}{l} (1)~ i=1{:}I; j=1{:}J; \qquad (2)~ i=I{:}1; j=1{:}J;\\ (3)~ i=I{:}1; j=J{:}1; \qquad (4)~ i=1{:}I; j=J{:}1. \end{array} \end{aligned}$$(2.13)

     If a triangulated mesh is used, we can choose the orderings designed in [34, 35], where the distances to a few fixed reference points are used to order all the vertices.
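The two steps summarized above can be sketched on a Cartesian grid. The following is a minimal illustration, not the triangulated-mesh solver analyzed in this paper: it assumes the Eikonal equation \(|\nabla u|=f\) with constant \(f\) on the unit square, uses the standard first-order upwind (Godunov) update on a Cartesian grid from the fast sweeping literature as the local solver, fixes the values only at given source nodes, and cycles through the four orderings of (2.13). The function name `fast_sweep_eikonal` and its parameters are hypothetical.

```python
import math


def fast_sweep_eikonal(n, sources, f=1.0, max_rounds=50, tol=1e-12):
    """Sweep an n-by-n grid on [0, 1]^2; returns (u, number of sweeps performed)."""
    h = 1.0 / (n - 1)
    big = 1e10  # the large value assigned away from the fixed nodes
    u = [[big] * n for _ in range(n)]
    fixed = set(sources)
    for (i, j) in fixed:
        u[i][j] = 0.0  # values at the source nodes stay fixed
    fwd = list(range(n))
    bwd = fwd[::-1]
    # The four alternating orderings of (2.13).
    orderings = [(fwd, fwd), (bwd, fwd), (bwd, bwd), (fwd, bwd)]
    sweeps = 0
    for _ in range(max_rounds):
        for rows, cols in orderings:
            change = 0.0
            for i in rows:
                for j in cols:
                    if (i, j) in fixed:
                        continue
                    # Smallest neighbor in each coordinate direction (upwind choice).
                    a = min(u[i - 1][j] if i > 0 else big,
                            u[i + 1][j] if i < n - 1 else big)
                    b = min(u[i][j - 1] if j > 0 else big,
                            u[i][j + 1] if j < n - 1 else big)
                    if abs(a - b) >= f * h:
                        cand = min(a, b) + f * h  # information from one direction
                    else:
                        cand = 0.5 * (a + b + math.sqrt(2.0 * f * f * h * h - (a - b) ** 2))
                    if cand < u[i][j]:  # causality enforcement as in (2.12)
                        change = max(change, u[i][j] - cand)
                        u[i][j] = cand
            sweeps += 1
            if change <= tol:
                return u, sweeps  # a sweep with no change means a fixed point
    return u, sweeps


if __name__ == "__main__":
    u, sweeps = fast_sweep_eikonal(41, [(20, 20)])
    print(sweeps, u[20][40])
```

The returned sweep count includes the final sweep that only confirms that nothing changes. For a single source node the count stays small and does not grow with `n`, in line with the grid-independent iteration count discussed in Sect. 1.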

Remark 2.2

This scheme can be formulated in different ways such as the variational formulation [7], the Legendre-transform formulation [20] and the fast marching method [36, 37]. For more types of discretizations, please refer to [1] and references therein.

3 Properties of FSM and its convergence

In this section, we analyze the fast convergence of the FSM and the convergence of its numerical solution to the viscosity solution as the grid size approaches zero. First, we prove the monotonicity and consistency of the scheme and, in particular, that it has a contraction property. Second, we show the convergence of the FSM iterations and the convergence of the numerical solution to the viscosity solution as the grid size approaches zero. We also prove its equivalence to the discretized Hopf formula. Finally, we analyze how the contraction property contracts the local truncation error, which also implies the fast convergence with optimal complexity.

3.1 Monotonicity, consistency and contraction property

We show that the scheme is consistent and monotone and, in particular, that it has a contraction property.

Lemma 3.1

The discretization scheme (2.7) along with the causality condition is consistent and monotone. Moreover, if \(u(\mathbf C )\) depends on \(u(\mathbf A )\) and \(u(\mathbf B )\), i.e., \(u(\mathbf C )\) is computed through the triangle \(\triangle \mathbf A \mathbf B \mathbf C \) or along one of the two edges, \(\overline{\mathbf{A \mathbf C }}\) or \(\overline{\mathbf{B \mathbf C }}\), then the following contraction condition is satisfied:

Proof

The consistency and monotonicity have been shown in [34, 35] if \(u(\mathbf C )\) is computed through one triangle. Taking the minimum among all the triangles will maintain the monotonicity. In particular if \(u(\mathbf C )\) is determined from \(\triangle \mathbf A \mathbf B \mathbf C \), we have

as in (2.7). The causality condition implies that \(\nabla _\mathbf p H\) can be represented as a convex combination of \(\overrightarrow{\mathbf{A \mathbf C }}\) and \(\overrightarrow{\mathbf{B \mathbf C }}\), which gives

Then the monotonicity is guaranteed, i.e.,

with \(\mathbf q \) defined in (2.5). If we differentiate the equation \({\hat{H}}(\mathbf C ,\mathbf q )=0\) with respect to \(u(\mathbf A )\) and \(u(\mathbf B )\), we have

and similarly,

Hence the last statement of the lemma holds. This completes the proof.

The next lemma shows a special property of the scheme if the Hamilton–Jacobi equation is of the Eikonal type,

$$\begin{aligned} |\nabla u(\mathbf x )|=f(\mathbf x ), \end{aligned}$$(3.3)

where \(f(\mathbf x )\) is a positive continuous function that is bounded above and bounded away from zero. This property allows the causality condition to be enforced by a sorting algorithm, which is the basis of Dijkstra-type algorithms [13].

Lemma 3.2

For the Eikonal equation (3.3), if \(u(\mathbf C )\) is computed through the triangle \(\triangle \mathbf A \mathbf B \mathbf C \) and \(\angle \mathbf{C }=\gamma < \frac{\pi }{2}\), we have

where h is the size of the triangle and \(\delta (\gamma )>0\) is a constant depending on \(\gamma \).

Proof

The result comes directly from Fermat’s principle. Without loss of generality, we assume \(u(\mathbf B )\ge u(\mathbf A )\), and let \(\theta \) be the angle formed by \(\overrightarrow{\mathbf{A \mathbf C }}\) and the characteristic; then we have (see Fig. 4),

which implies

This completes the proof.

Remark 3.3

Tsitsiklis [41] proved the same result on a Cartesian grid.

As a consequence of the monotonicity of the scheme and (3.1), we have the following lemma.

Lemma 3.4

The fast sweeping algorithm is monotone in the initial data, i.e., if at iteration k, \(u^{(k)} \le v^{(k)}\) at every grid point, then at any later iteration, say \(n>k\), \(u^{(n)} \le v^{(n)}\) at every grid point.

With the above properties for the discretization scheme, we prove the convergence of the iterative method and the convergence of the numerical solution to the viscosity solution as the grid size approaches zero. Besides, we prove the equivalence between the FSM discretization and the Hopf formula under linear approximations.

3.2 Convergence of the FSM

We first show the convergence of the FSM, which is implied by the contraction property and monotonicity. Here we use subscript h to indicate the numerical solutions.

Theorem 3.5

Given a mesh \(\Omega _h\) with size h for the domain \(\Omega \), assume that the initial guess enforces the boundary condition \(u_h^{(0)}(\mathbf x ) = g(\mathbf x )\) for mesh points \(\mathbf x \in \partial \Omega _h\), and assigns \(u_h^{(0)}(\mathbf x ) = M\) for some \(M>0\) large enough at all other mesh points, then the sequence of solutions \(\left\{ u_h^{(n)}\right\} _{n=0}^\infty \) computed by the FSM corresponding to Gauss–Seidel iterations will converge to a solution \(u_h\) of the discretized equation (2.7) with \(u_h|_{\partial \Omega _h} = g\). Moreover,

Proof

The causality enforcement (2.12) during iterations and the monotonicity in the initial data from Lemma 3.4 imply

at all mesh points. At any iteration n,

where the first inequality is due to the enforcement in (2.12), the second inequality is due to the control interpretation (or the Hopf formula) of the viscosity solution (see Lemma 3.8 in Sect. 3.3), and the equality is the result of fixing the boundary conditions during iterations.

Then the sequence \(\left\{ u_h^{(n)}\right\} _{n=0}^\infty \) is monotone and bounded, which implies \(u_h^{(n)}(\mathbf x ) \rightarrow u_h(\mathbf x )\) as \(n \rightarrow \infty \) for some function \(u_h\) at any mesh point \(\mathbf x \). By the Lipschitz dependence of the numerical Hamiltonian on the mesh values (3.2), \(u_h\) satisfies the discretized equation (2.7) with \(u_h|_{\partial \Omega _h} = g\). And

Remark 3.6

The large number \(M>0\) only needs to be larger than the maximum value of \(u_h(\mathbf x )\) that has an upper bound given as the optimal cost among all the paths passing through edges to the boundary,

where \(\xi _h^e(\mathbf x ,\mathbf y )\) is any path through edges of the mesh starting from \(\mathbf x \) and ending at \(\mathbf y \in \partial \Omega _h\), and \(l(\xi _h^e(\mathbf x , \mathbf y ))\) is defined by (2.9) on each edge segment.

Now we prove that the numerical solution of the FSM converges to the viscosity solution as the grid size approaches zero.

Theorem 3.7

As \(h\rightarrow 0\), the numerical solution \(\{u_h\}\) of the FSM converges uniformly to the viscosity solution of (1.1).

Proof

The proof consists of two steps: (1) \(\{u_h\}\) is equi-continuous and uniformly bounded (Remark 3.6) such that by the theorem of Arzelà–Ascoli \(\{u_h\}\) converges to some function u satisfying (1.1) as \(h\rightarrow 0\) (e.g., see [7]); (2) u is the viscosity solution by the monotonicity (e.g., see [5]).

We first prove \(\{u_h\}\) is Lipschitz continuous by the following three steps: (a) for two vertices \(\mathbf x \) and \(\mathbf y \) belonging to the same triangle, \(|u_h(\mathbf x )-u_h(\mathbf y )|\le K_1|\mathbf x -\mathbf y |\) for some \(K_1>0\) independent of the mesh size h; (b) for any two points \(\mathbf x \) and \(\mathbf y \) in the same triangle, \(|u_h(\mathbf x )-u_h(\mathbf y )|\le K_2|\mathbf x -\mathbf y |\) for some \(K_2>0\) independent of the mesh size h; and (c) for any two points \(\mathbf x \) and \(\mathbf y \) on the mesh, \(|u_h(\mathbf x )-u_h(\mathbf y )|\le K|\mathbf x -\mathbf y |\) for some \(K>0\) independent of the mesh size h.

For (a), the convexity and coercivity of the Hamiltonian imply that, for any \(\mathbf p \), \(H(\mathbf x ,\mathbf p )\ge c_1 |\mathbf p | - c_2\) for some positive constants \(c_1\) and \(c_2\). Hence every \(\mathbf p \) with \(H(\mathbf x ,\mathbf p )=0\) satisfies \(|\mathbf p |\le c_2/c_1\), and from (2.9) we have \(\rho (\mathbf q )\le K_1 |\mathbf q |\) with \(K_1=c_2/c_1\). Using the control interpretation (or the Hopf formula) of the viscosity solution (also see Lemma 3.8 in Sect. 3.3), we get

hence,

For (b), let us first assume \(\mathbf x \) and \(\mathbf y \) are in a triangle denoted as \(\Delta \mathbf A \mathbf B \mathbf C \), \(\mathbf x \) is on edge \(\overline{\mathbf{A \mathbf B }}\), and \(\mathbf y \) is on edge \(\overline{\mathbf{B \mathbf C }}\). Then

for some \(\lambda \in [0,~1]\), and

for some \(\mu \in [0,~1]\), following which we have

and

Hence we have

By denoting the internal angle at \(\mathbf B \) of \(\Delta \mathbf A \mathbf B \mathbf C \) as \(\theta _\mathbf B \), we have

And by the assumption (2.3), \(\theta _\mathbf B \ge \theta _{*} > 0\) for some constant \(\theta _*\) independent of h, which implies there exists some constant \(K_3>0\) independent of h such that

Since \(u_h(\mathbf x )\) is linear on \(\Delta \mathbf A \mathbf B \mathbf C \) and \(|u_h(\mathbf x ) - u_h(\mathbf y )|\le K_2|\mathbf x -\mathbf y |\) for any two points on edges of the triangle, it is true for any two points in the interior of the triangle.

For (c), for any two vertices \(\mathbf x ,~ \mathbf y \in \Omega _h\), there is a Lipschitz path \(\xi \in C^{0,1}([0,~1],\Omega _h)\) linking \(\mathbf x \) and \(\mathbf y \) with arc-length \(l_\xi \) such that

for some constant \(K_\Omega \) depending on \(\Omega \) (see [2]). Let \(0=t_0<t_1<\ldots <t_l=1\) be a partition of [0, 1] such that \(\xi (t_i)\) and \(\xi (t_{i+1})\) are points belonging to a common triangle, which can be chosen as the intersections of \(\xi \) with the mesh \(\Omega _h\). Then we have

where the first inequality is by the triangle inequality, the second inequality is due to (b), the third inequality is by the fact that the total length of the polygonal path through \(\{\xi (t_i)\}_{i=0}^l\) is bounded by \(l_\xi \), and the last inequality is due to (3.7). Letting \(K= K_\Omega K_2\), we conclude that \(\{u_h\}\) is Lipschitz continuous with a Lipschitz constant independent of h.
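
Written out, the chain of inequalities described above would read as follows. This is a reconstruction from the four justifications given in the text, assuming that (3.7) is the bound \(l_\xi \le K_\Omega |\mathbf x -\mathbf y |\); it is a sketch rather than the originally typeset display.

$$\begin{aligned} |u_h(\mathbf x )-u_h(\mathbf y )|&\le \sum _{i=0}^{l-1} |u_h(\xi (t_i))-u_h(\xi (t_{i+1}))| \\&\le K_2 \sum _{i=0}^{l-1} |\xi (t_i)-\xi (t_{i+1})| \\&\le K_2\, l_\xi \\&\le K_2 K_\Omega |\mathbf x -\mathbf y |. \end{aligned}$$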

The boundedness of \(\{u_h\}\) discussed in Remark 3.6, the Lipschitz continuity proved above, and the Arzelà–Ascoli theorem imply that there exists a subsequence \(\{ u_{h_k} \}\) of \(\{u_{h}\}\) such that

where u is some function satisfying (1.1).

Next we follow the arguments in [5, 7] to prove that u is the viscosity solution. We first prove u is a viscosity subsolution. Following the perturbed test function method in [16], let \(\phi \in C^\infty _0(\Omega )\) and \(\mathbf x _0\in \Omega \) such that \(u-\phi \) attains a strict local maximum at \(\mathbf x _0\). Then there is a sequence of points \(\{\mathbf{C }_{h_{k'}} \in {\Omega }_{h_{k'}}\} \) such that \(\mathbf C _{h_{k'}} \rightarrow \mathbf x _0\), \(h_{k'}\rightarrow 0\) as \(k'\rightarrow \infty \), and \(u_{h_{k'}} - \phi \) attains a local maximum at \(\mathbf C _{h_{k'}}\). That is,

for all \(\mathbf x _{h_{k'}}\) belonging to the local mesh of \(\mathbf C _{h_{k'}}\) (see Fig. 2). Let us focus on the upwind triangle, say \(\Delta \mathbf A _{h_{k'}} \mathbf B _{h_{k'}} \mathbf C _{h_{k'}}\), and follow the notations in Lemma 3.1, and we have

and

Then we have

and

Following the causality and monotonicity in Lemma 3.1, we have

Consequently we have

following the consistency. Hence we prove u is a viscosity subsolution. Similarly we can prove u is a viscosity supersolution. Therefore, u is the viscosity solution.

Due to the uniqueness of the viscosity solution [10, 11], the above proof shows that every subsequence of \(\{ u_h\}\) has a subsequence which converges uniformly to u. Therefore, \(\{ u_h\}\) converges uniformly to u as \(h\rightarrow 0\). This completes the proof.

In conclusion, we show that the FSM is convergent and its numerical solution converges to the viscosity solution as the grid size approaches zero.

3.3 Hopf formula and FSM

Here we show that, with piecewise linear approximation, the local solver based on the Hopf formula introduced in [7, 37] and the local solver, (2.7) + (2.11) + (2.12), of the FSM are equivalent. The subscript h is omitted in this part for notational simplicity.

The Hopf formula under piecewise linear approximation on a local mesh \(D_h^\mathbf C \) at \(\mathbf C \) (see Fig. 2) is given as,

where the boundary value \(u(\mathbf F )\) is determined by linear interpolations of vertex values on each line segment of \(\partial D_h^\mathbf C \).

Lemma 3.8

The local solver (3.8) based on the Hopf formula using piecewise linear approximation is equivalent to the local solver, (2.7) \(+\) (2.11) \(+\) (2.12), of the FSM.

Proof

Note that the local solver (3.8) enforces exactly the method of characteristics along the direction \(\overrightarrow{\mathbf{F \mathbf C }}\) and chooses the minimum over all such paths. That is,

Now we show that on each triangle \(\triangle \mathbf A \mathbf B \mathbf C \), the local solver of the FSM, (2.7), (2.11) and (2.12), picks the minimum in the Hopf formula, and therefore the minimum over all triangles.

For each point \(\mathbf F \in \overline{\mathbf{A \mathbf B }}\), by linear interpolations,

Define

where \({\hat{H}}(\mathbf p (\lambda ))=0\), and \(\nabla {\hat{H}}(\mathbf p (\lambda )) \text{ is } \text{ parallel } \text{ to } (\mathbf C -\mathbf F )\). Then, for \(\lambda \in (0,~1)\),

Since \({\hat{H}}(\mathbf p (\lambda ))=0\) and \(\nabla {\hat{H}}(\mathbf p (\lambda )) \text{ is } \text{ parallel } \text{ to } (\mathbf C -\mathbf F )\), we know

Then,

First we show that the solution from the local solver of the FSM, (2.7), (2.11) and (2.12), denoted as \(\{\lambda _M, \mathbf p (\lambda _M)\}\), satisfies

To prove this, we use the notations from the proof of Lemma 3.1. We have

Therefore, \(\{\lambda _M, \mathbf p (\lambda _M)\}\) is a critical point of \(G(\lambda )\).

Next we prove that \(G(\lambda )\) is convex by showing

We show that,

And we see that

since \(\nabla {\hat{H}}(\mathbf p (\lambda ))\cdot \frac{\partial ^2\mathbf p (\lambda )}{\partial \lambda ^2}\le 0\) implies \((\mathbf C -\mathbf F )\cdot \frac{\partial ^2\mathbf p (\lambda )}{\partial \lambda ^2}\le 0\). Hence \(G(\lambda )\) is convex when \(\lambda \in (0,~1)\).

Since \(G(\lambda )\) is convex in (0, 1), the global minimum of \(G(\lambda )\) on [0, 1] is attained either at an interior critical point or at one of the two end points. If \(\lambda _M\) is one of the two end points, \(\{\lambda _M, \mathbf p (\lambda _M)\}\) is a global minimum point according to (2.7), (2.11) and (2.12). If \(\lambda _M \in (0,~1)\), since \(\frac{\mathrm{{d}}G(\lambda _M)}{\mathrm{{d}}\lambda }=0\), \(\{\lambda _M, \mathbf p (\lambda _M)\}\) is a global minimum point.

In conclusion, the local solver (3.8) based on the Hopf formula and that of the FSM, (2.7), (2.11) and (2.12), are equivalent under piecewise linear approximation. The proof is complete.
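
The equivalence can be checked numerically in the simplest setting. The sketch below assumes the isotropic eikonal Hamiltonian \(H(\mathbf p )=|\mathbf p |-s\), for which the Hopf formula on one triangle reduces to Fermat's principle: minimize \(\lambda u(\mathbf A )+(1-\lambda )u(\mathbf B )+s|\mathbf C -\mathbf F (\lambda )|\) over \(\lambda \in [0,1]\). It compares that minimum with the well-known closed-form upwind update on a right triangle with legs h. The function names, the golden-section search and the sample values are illustrative choices, not taken from the paper.

```python
import math

def hopf_local_update(A, B, C, uA, uB, s=1.0, iters=200):
    """Minimise G(lam) = lam*uA + (1-lam)*uB + s*|C - F(lam)| over lam in [0, 1],
    where F(lam) = lam*A + (1-lam)*B lies on the edge AB (isotropic case only)."""
    def G(lam):
        fx = lam * A[0] + (1.0 - lam) * B[0]
        fy = lam * A[1] + (1.0 - lam) * B[1]
        return lam * uA + (1.0 - lam) * uB + s * math.hypot(C[0] - fx, C[1] - fy)

    # Golden-section search; it is valid here because G is convex on [0, 1].
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        x1 = hi - inv_phi * (hi - lo)
        x2 = lo + inv_phi * (hi - lo)
        if G(x1) < G(x2):
            hi = x2
        else:
            lo = x1
    lam = 0.5 * (lo + hi)
    # Also evaluate the end points so that boundary minima are returned exactly.
    return min(G(lam), G(0.0), G(1.0))

def upwind_update(uA, uB, s, h):
    """Closed-form first-order upwind update on a right triangle with legs h."""
    if abs(uA - uB) >= s * h:
        # Characteristic leaves the triangle: fall back to the better edge.
        return min(uA, uB) + s * h
    return 0.5 * (uA + uB + math.sqrt(2.0 * (s * h) ** 2 - (uA - uB) ** 2))

h = 0.1
A, B, C = (h, 0.0), (0.0, h), (0.0, 0.0)
interior = (hopf_local_update(A, B, C, 0.30, 0.35), upwind_update(0.30, 0.35, 1.0, h))
endpoint = (hopf_local_update(A, B, C, 0.30, 0.50), upwind_update(0.30, 0.50, 1.0, h))
print(interior, endpoint)
```

The first pair exercises an interior minimizer, the second an end-point minimizer; in both cases the two numbers in each pair should agree to rounding error.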

3.4 Local truncation error and error estimate

Lemma 3.1 shows the monotonicity and consistency of the local solver of the FSM, especially the contraction property (3.1). Here we study the relation for the errors at vertices of the same triangle. In contrast to error estimates for linear problems, the main difficulty in error estimates for nonlinear problems is that the errors do not satisfy the same equation as the solution.

Under the assumptions (A1)–(A7), let u be the exact viscosity solution of (1.1) and \(u_h\) be the numerical solution computed by the FSM on \(\Omega _h\). Assume that \(\nabla _\mathbf p H\) satisfies

on \(\Omega _h\) for both u and \(u_h\) with given constants \(v_{\min }\) and \(v_{\max }\). Denote the error by \(e(x,y) = u(x,y) - u_h(x,y)\) on \(\Omega _h\). In a typical triangle \(\Delta \mathbf A \mathbf B \mathbf C \in \Omega _h\) as shown in Fig. 5a, assume \(u_h(\mathbf C )\) is determined by \(u_h(\mathbf A ), u_h(\mathbf B )\) and u is twice differentiable.

Denote \(\mathbf q = (q_1,q_2)\) and \(\mathbf q ^h = (q_1^h, q_2^h)\) with

where

Here \({\hat{u}}_{xx},{\hat{u}}_{xy}, {\hat{u}}_{yy}\) and \({\tilde{u}}_{xx},{\tilde{u}}_{xy}, {\tilde{u}}_{yy}\) are evaluated at appropriate points on \(\overline{\mathbf{A \mathbf C }}\) and \(\overline{\mathbf{B \mathbf C }}\), respectively, and \(\mathbf p = \mathbf P ^{-1} \mathbf q \) and \(\mathbf p ^h = \mathbf P ^{-1} \mathbf q ^h\) with \(\mathbf P \) defined in (2.4). It is clear that \(|\gamma _\mathbf{A \mathbf C }| \le D(u) h^2\) and \(|\gamma _\mathbf{B \mathbf C }| \le D(u) h^2\), where D depends on the regularity of u. Since u is twice differentiable everywhere, \(D \le M < \infty \) for some constant M that is independent of h. We know

Let us also denote

where the partial derivative of \(u(\mathbf C )\) with respect to values at \(\mathbf A \) and \(\mathbf B \) is defined as in the proof of Lemma 3.1. Since \(H(\mathbf C , \mathbf p )\) is convex in the second argument, on the level set \(\{ \mathbf p : H(\mathbf C ,\mathbf p ) = 0\}\) at \(\mathbf C \) as shown in Fig. 5b, there is only one point on the level set, denoted as \(\mathbf p ^\mu \), such that \(\nabla _\mathbf p H(\mathbf C , \mathbf p ^\mu )\) is perpendicular to the line segment connecting \(\mathbf p \) and \(\mathbf p ^h\), i.e.,

and \(\mathbf p ^\mu \) must be on the curve between \(\mathbf p \) and \(\mathbf p ^h\). Then we know \(\nabla _\mathbf p H(\mathbf C , \mathbf p ^\mu )\) is in between \(\nabla _\mathbf p H(\mathbf C , \mathbf p )\) and \(\nabla _\mathbf p H(\mathbf C , \mathbf p ^h)\). Equation (3.11) implies,

where \(\mathbf q ^\mu = \mathbf P \mathbf p ^\mu \), and \(\alpha ^\mu _\mathbf C \) and \(\beta ^\mu _\mathbf C \) are defined at \(\mathbf q ^\mu \) as in the proof of Lemma 3.1. We rewrite the above equation as

with

If the true characteristic \(\nabla _\mathbf p H(\mathbf C , \mathbf p )\) through \(\mathbf C \) is inside the triangle as well, as demonstrated in Fig. 5c, from Lemma 3.1, we know

\(\alpha ^\mu _\mathbf C \) is between \(\alpha ^h_\mathbf C \) and \(\alpha _\mathbf C \), and \(\beta ^\mu _\mathbf C \) is between \(\beta ^h_\mathbf C \) and \(\beta _\mathbf C \). Equation (3.12) can also be written as

Moreover, in addition to the above error relation, we can get a relation among the function values. With Fermat’s principle (see Fig. 5a, c), we have

for \(\mathbf F =\alpha _C \mathbf A + \beta _C \mathbf B \) and \(\mathbf F ^h=\alpha ^h_C \mathbf A + \beta ^h_C \mathbf B \), where \(v_\mathbf{F \mathbf C } = |\nabla _\mathbf{p } H(\mathbf C , \nabla u(\mathbf C ))|\) (\(v^h_\mathbf{F \mathbf C } = |\nabla _\mathbf{p } H(\mathbf C , \nabla u_h(\mathbf C ))|\), resp.) is the group velocity along the direction \(\mathbf F \rightarrow \mathbf C \) (\(\mathbf F ^h \rightarrow \mathbf C \), resp.). Then we have

and

Due to the Lipschitz continuity of u and \(u_h\), there exists a constant \(0< L < \infty \) such that

for any \(\mathbf x , ~\mathbf y \in \Omega _h\).

Define

where \(\theta \) is given in (A7). For h sufficiently small, from (3.16) and (3.17) and the Lipschitz continuity, we have:

The above analysis shows that in a triangle in which the causality of the true solution and the causality of the numerical solution are consistent, the global error has a stable accumulation of local truncation error as shown in (3.14). Moreover, this stable accumulation of local truncation error can be traced back to the boundary following a strict descent order of the solution in \(O(1/h_0)\) steps as shown in (3.18).

3.5 Convergence study through contraction property

Using the contraction property of monotone upwind schemes, we study error reduction during Gauss–Seidel iterations with proper orderings. The convergence mechanism differs from relaxation, which is the mechanism for elliptic problems. We analyze and explain a few interesting phenomena as the mesh is refined for hyperbolic problems using examples with different boundary conditions. We start by using the following linear convection equation as an illustration. For the problem

where a, b are constants and \(a>b>0\), the first-order monotone upwind scheme is

Define \( e^n_{j,i}=u^n_{j,i}-u_{j,i}\) as the error at the nth iteration. With the right ordering (i.e., \(i=1:I,~j=1:J\)) for Gauss–Seidel iterations, the update at every point gives the following recursive relation in the error,

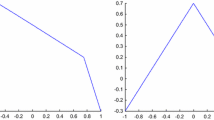
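
For reference, a sketch of the scheme and the resulting error relation, under the assumption that (3.19) is the constant-coefficient convection equation \(a u_x + b u_y = 0\) discretized on a uniform grid of size h, with i indexing x and j indexing y. The index convention is inferred from the first-line relation \(e^n_{1,i}=\frac{a}{a+b}e^n_{1,i-1}\) quoted for the partially periodic case; the displays are a reconstruction, not the originally typeset equations.

$$\begin{aligned} a\,\frac{u_{j,i}-u_{j,i-1}}{h}+b\,\frac{u_{j,i}-u_{j-1,i}}{h}=0 \quad \Longleftrightarrow \quad u_{j,i}=\frac{a}{a+b}\,u_{j,i-1}+\frac{b}{a+b}\,u_{j-1,i}, \end{aligned}$$

$$\begin{aligned} e^n_{j,i}=\frac{a}{a+b}\,e^n_{j,i-1}+\frac{b}{a+b}\,e^n_{j-1,i}. \end{aligned}$$

Both neighbors on the right-hand side carry the superscript n because, with the ordering above, they have already been updated in the current sweep. A source term on the right-hand side of the PDE would not change the error relation, since it cancels when the exact discrete solution is subtracted.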

We analyze three convergence scenarios (see Fig. 6).

- Case 1: The information propagates directly from the inflow boundary. Then the convergence can be obtained in a finite number of iterations independent of the grid size for Gauss–Seidel iterations that use upwind schemes with proper orderings, e.g., the fast sweeping method. For this example, one sweep is enough if the boundary condition is given on the left and bottom sides.

- Case 2: There is circular dependence, e.g., periodic boundary conditions.

  - Partial circular dependence, e.g., periodic boundary condition in x and given boundary condition on the bottom. This is equivalent to solving the PDE on a cylinder with boundary conditions given on the bottom. The characteristics wind from the bottom to the top of the cylinder. For this example, grid points on each horizontal line are coupled. The update at each grid point on the first line above the bottom side has the following error contraction from (3.21),

    $$\begin{aligned} e^n_{1,i}=\frac{a}{a+b}e^n_{1,i-1}. \end{aligned}$$

    In each sweep with the right ordering, the error on the first line is contracted by a factor of \(\alpha ^{\frac{1}{h}}\) with \(\alpha =\frac{a}{a+b}<1\). Very few iterations, say n, are needed to get \({\left( \alpha ^{\frac{1}{h}}\right) }^n \backsim h^p\) for any p. Moreover, the smaller the h, the faster the convergence. The convergence due to the contraction follows similarly for other horizontal grid lines.

  - Full circular dependence, e.g., periodic boundary conditions in both x and y with the value fixed at one point. This is equivalent to solving the PDE on a torus. The characteristics can be infinitely long or closed depending on a and b. If \(\frac{b}{a}\) is rational, each characteristic forms a closed curve. These closed curves are parallel to each other and do not have a starting point from which the information originates, except the characteristic that passes through the point with fixed value to make the solution unique. For the discrete system, all grid points are coupled together due to the circular dependence. In this case, the characteristic that passes through the given point will converge first and quickly. The solution has to compromise among the characteristics as well as propagate along the characteristics gradually due to the relaxation from the monotone scheme (3.20), i.e., the value at a grid point is the average of its neighbors’ values. When the grid is refined by half, twice as many characteristics are involved. Hence the number of iterations is almost doubled, as shown by numerical tests in Sect. 4. If \(\frac{b}{a}\) is irrational, the characteristic is infinitely long and covers the whole torus. Again all grid points are coupled together and the convergence is due to the relaxation from the scheme. When the grid is refined by half, the characteristic is twice as long as before with respect to the grid size. So the number of iterations is also doubled.
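
The three scenarios can be reproduced with a few lines of code. The sketch below is illustrative only: it assumes the model problem is \(a u_x + b u_y = 0\) with zero data, so the exact discrete solution is zero and the iterate itself is the error, and it uses a single sweep ordering with the first-order upwind update. The function name `count_sweeps`, the tolerance and the choice \(a=2,\ b=1\) are hypothetical and not taken from the paper.

```python
import numpy as np

def count_sweeps(n, a=2.0, b=1.0, bc="inflow", tol=1e-12, max_iter=20000):
    """Count Gauss-Seidel sweeps until the error of the upwind scheme is below tol.

    Assumed model: a*u_x + b*u_y = 0 with zero data, so e[j, i] is the error.
    bc = "inflow":  e = 0 on the left column and the bottom row;
    bc = "partial": periodic in x, e = 0 on the bottom row;
    bc = "full":    periodic in x and y, e = 0 pinned at one grid point.
    """
    alpha, beta = a / (a + b), b / (a + b)
    e = np.ones((n, n))                  # arbitrary initial error
    if bc in ("inflow", "partial"):
        e[0, :] = 0.0                    # bottom row holds boundary data
    if bc == "inflow":
        e[:, 0] = 0.0                    # left column holds boundary data
    if bc == "full":
        e[0, 0] = 0.0                    # pinned value makes the solution unique
    j_start = 0 if bc == "full" else 1
    i_start = 1 if bc == "inflow" else 0
    for sweep in range(1, max_iter + 1):
        for j in range(j_start, n):      # j indexes y (rows), swept upward
            for i in range(i_start, n):  # i indexes x (columns), swept rightward
                if bc == "full" and i == 0 and j == 0:
                    continue             # keep the pinned point fixed
                # Index -1 wraps to the last row/column, which realises periodicity.
                e[j, i] = alpha * e[j, i - 1] + beta * e[j - 1, i]
        if np.abs(e).max() < tol:
            return sweep
    return max_iter

for bc in ("inflow", "partial", "full"):
    print(bc, [count_sweeps(n, bc=bc) for n in (8, 16)])
```

With inflow data the first sweep already removes the error. In the partially periodic case the count should not grow as n increases, and in the fully periodic case it should grow with n, in line with the discussion above; the exact counts depend on the assumed tolerance and coefficients.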

Remark 3.9

The above discussion for the convergence of iterative methods for linear convection problems provides some insight into nonlinear problems (Lemma 3.1), except for a few key differences:

1. The ordering, the upwind finite difference scheme and the contraction rate are unknown a priori (all depend on the solution). That is why sweeping in four directions alternately is needed.

2. Nonlinear stability: for each point, due to the update with causality enforcement, e.g., accepting the smallest/best value propagated to this point so far, an incorrect value at this point, no matter how large the error is, can be corrected when the correct information arrives during a sweep with the right ordering; hence the decay of the error may be better than geometric during iterations.

3. For the full periodic case, the behavior of the characteristics is much more complicated. The convergence depends on the ergodicity of the characteristics on the torus. So when the mesh is refined, more iterations may or may not be needed for convergence, as indicated by the numerical examples in Sect. 4.

Numerical examples are shown in the next section to verify the above study.

4 Numerical examples

In this section, we first use a few examples to show the general error estimate and the fast convergence. Then we use various tests on the linear convection equation as well as nonlinear Hamilton–Jacobi equations to demonstrate the convergence in different scenarios as discussed in Sect. 3.5. We record the number of iterations. One iteration means one sweep over all grid points.

Example 1: Eikonal equation. In this example, we consider the isotropic Eikonal equation,

where s is the slowness field and different boundary conditions are considered. The tests are performed on a rectangular mesh.

1. We consider \(s \equiv 1\), and the domain is \([0, ~1]^2\). We first compute the distance to a source point. The boundary condition is imposed at the source point. The solution is not differentiable at the source, i.e., there is a source singularity. Table 1 shows the results. The accuracy is \(O(|h\log h|)\) due to the source singularity, which verifies the error estimate in [43]. Then we compute the distance to two disjoint circles of radius 0.1 and 0.15, respectively. Table 2 shows the results. We record maximum errors both inside and outside the circles. Although there are shocks both inside and outside the circles, they are different. Inside the circles, the shocks are located at the centers of the circles. All characteristics converge to the centers. The second derivatives blow up like \(\frac{1}{d}\), where d is the distance to the centers. So the accumulation of local truncation error behaves in the same way as near a source singularity, which gives an error estimate of the form \(O(|h\log h|)\). Outside the circles, the shock is a smooth curve (at the equal distance locations). As long as the shock is a smooth curve without end points, the second derivatives are uniformly bounded except at the shock. So the local truncation error is still \(O(h^2)\) all the way up to the shock. At the shock, the local truncation error is O(h). So the global error is still O(h), which is verified by the numerical tests.

2. We consider \(s(x,y) = 0.5 - (y-0.25)\) and the domain is \([0,~0.5]^2\). For point-source conditions, the exact solution can be derived as in [17]. Table 3 shows the results with one point source. When the boundary condition is enforced only at the source, the maximum error is \(O(|h\log h|)\) since the solution has a source singularity. When the exact solution is enforced on a disk of radius 0.1 centered at the source and the computation is performed outside the disk, the maximum error is O(h) since the solution is smooth outside the disk. Table 4 shows the results with two source points and with the same treatment at the sources. Similar accuracy is observed.
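
A minimal sketch of the first test (distance to a single source point with \(s \equiv 1\) on \([0,1]^2\)) is given below. It uses the standard rectangular-grid upwind update from the fast sweeping literature [43] rather than the triangle-based local solver analyzed in this paper, and it places the source at the grid point nearest \((0.5, 0.5)\); the function name, the large initial value and the stopping tolerance are assumptions made for illustration.

```python
import numpy as np

def fsm_point_source(n, s=1.0, max_sweeps=40, tol=1e-12):
    """Fast sweeping for |grad u| = s on [0,1]^2, u = 0 at the grid point nearest (0.5, 0.5)."""
    h = 1.0 / (n - 1)
    big = 1e10                                   # stands in for "not yet computed"
    u = np.full((n, n), big)
    src = (round(0.5 / h), round(0.5 / h))
    u[src] = 0.0
    fwd, bwd = range(n), range(n - 1, -1, -1)
    orderings = [(fwd, fwd), (fwd, bwd), (bwd, fwd), (bwd, bwd)]
    for sweep in range(1, max_sweeps + 1):
        change = 0.0
        i_order, j_order = orderings[(sweep - 1) % 4]   # alternate the four orderings
        for i in i_order:
            for j in j_order:
                if (i, j) == src:
                    continue
                # Smallest neighbor value in each coordinate direction.
                a = min(u[i - 1, j] if i > 0 else big, u[i + 1, j] if i < n - 1 else big)
                b = min(u[i, j - 1] if j > 0 else big, u[i, j + 1] if j < n - 1 else big)
                if abs(a - b) >= s * h:
                    cand = min(a, b) + s * h     # information arrives from one direction
                else:
                    cand = 0.5 * (a + b + np.sqrt(2.0 * (s * h) ** 2 - (a - b) ** 2))
                if cand < u[i, j]:               # causality: keep the smallest value so far
                    change = max(change, u[i, j] - cand)
                    u[i, j] = cand
        if change < tol:
            return u, sweep                      # last sweep changed nothing
    return u, max_sweeps

def max_error(n):
    u, sweeps = fsm_point_source(n)
    x = np.linspace(0.0, 1.0, n)
    exact = np.hypot(x[:, None] - 0.5, x[None, :] - 0.5)
    return np.abs(u - exact).max(), sweeps

for n in (21, 41, 81):
    print(n, max_error(n))
```

Each iteration counted here is one sweep over all grid points, matching the convention used in this section; the final sweep only confirms that nothing changes.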

Example 2: Linear problem (3.19). In this example, we show convergence tests corresponding to the three scenarios of boundary conditions discussed in Sect. 3.5. All the iterations use one ordering \(i=1{:}I,~j=1{:}J\) since all the characteristics point up and to the right from the boundary with \(a>0, b>0\). Table 5 shows the results. For explicit inflow boundary conditions, one iteration is needed (the second iteration only checks convergence). For partial periodic boundary conditions, the number of iterations depends on the contraction rate, which depends on a and b. The number of iterations decreases as the mesh is refined. For full periodic boundary conditions, the number of iterations increases almost linearly as the mesh is refined.

Example 3: Anisotropic Eikonal equation. In this example, we consider the anisotropic Eikonal equation given by

where \(M_\theta = \left( \begin{array}{cc}\cos \theta &{}\quad -\sin \theta \\ \sin \theta &{}\quad \cos \theta \\ \end{array}\right) M_0 \left( \begin{array}{cc}\cos \theta &{}\quad -\sin \theta \\ \sin \theta &{}\quad \cos \theta \\ \end{array}\right) ^t ~\text{ with } \text{ given }~M_0\). The domain is \([0,~1]^2\). The tests are performed on a rectangular mesh. We choose \(M_0\) as one of the following two forms:

- (a) without spatial variation:

  $$\begin{aligned}M_0=\left( \begin{array}{cc}1/4 &{} \quad 0\\ 0 &{} \quad 1\\ \end{array}\right) , \end{aligned}$$

- (b) with spatial variation:

  $$\begin{aligned}M_0=\left( \begin{array}{cc}1/4(1-0.5\sin \pi x \sin \pi y) &{} \quad 0\\ 0 &{} \quad 1+0.5\sin \pi x \sin \pi y\\ \end{array}\right) . \end{aligned}$$
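
As a small illustration of how \(M_\theta \) is assembled from \(M_0\), the sketch below builds both forms and rotates them. The helper names `m_theta`, `m0_constant` and `m0_variable` are hypothetical. Because \(|0.5\sin \pi x \sin \pi y|\le 0.5\), both diagonal entries of form (b) stay positive, so \(M_\theta \) remains symmetric positive definite, and the rotation leaves the eigenvalues of \(M_0\) unchanged.

```python
import numpy as np

def m_theta(theta, m0):
    """Rotate the 2x2 matrix m0 by the angle theta: R(theta) @ m0 @ R(theta)^T."""
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    return rot @ m0 @ rot.T

def m0_constant():
    """Form (a): no spatial variation."""
    return np.diag([0.25, 1.0])

def m0_variable(x, y):
    """Form (b): spatially varying diagonal entries."""
    w = 0.5 * np.sin(np.pi * x) * np.sin(np.pi * y)
    return np.diag([0.25 * (1.0 - w), 1.0 + w])

theta = np.pi / 6
m_a = m_theta(theta, m0_constant())
m_b = m_theta(theta, m0_variable(0.5, 0.5))
print(np.linalg.eigvalsh(m_a), np.linalg.eigvalsh(m_b))
```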

We test the following cases with different boundary conditions and show both similarities and differences (see Remark 3.9) between linear and nonlinear problems.

1. With Dirichlet boundary condition

   $$\begin{aligned} u(x,0)=(\alpha +1) - \alpha \cos (\pi x). \end{aligned}$$

   For this case, the characteristics from the boundary are oriented in one direction, either from lower left to upper right or from lower right to upper left, which can be pre-determined. Hence one ordering is needed for convergence. Table 6 shows the ordering and the test results.

2. With Dirichlet boundary condition

   $$\begin{aligned} u(x,0)=(\alpha +1) - \alpha \cos (2\pi x), \end{aligned}$$

   and partial periodic boundary condition: periodic in x. For this case, the characteristics from the boundary are oriented in two directions: from lower left to upper right and from lower right to upper left. Hence two orderings are needed for convergence. Table 7 shows the orderings and the test results. The number of iterations does not increase as the mesh is refined.

3. With point-source condition

   $$\begin{aligned} u(1/2,1/2)= 0. \end{aligned}$$

   For this case, the characteristics from the source point are oriented in all directions. Hence four orderings (\(i=1{:}I,~j=1{:}J;~i=1{:}I,~j=J{:}1; ~i=I{:}1,~j=1{:}J; ~i=I{:}1,~j=J{:}1\)) are needed for convergence. Table 8 shows the orderings and the test results. The number of iterations does not increase as the mesh is refined.

4. With partial periodic boundary condition: u is periodic in x and

   $$\begin{aligned} u(1/2,1/2)= 0. \end{aligned}$$

   For this case, the characteristics from the source point are oriented in all directions. Hence four orderings (\(i=1{:}I,~j=1{:}J;~i=1{:}I,~j=J{:}1; ~i=I{:}1,~j=1{:}J; ~i=I{:}1,~j=J{:}1\)) are needed for convergence. Table 9 shows the orderings and the test results. The number of iterations does not increase as the mesh is refined.

5. With full periodic boundary condition: u is periodic in x and y, and

   $$\begin{aligned} u(1/2,1/2)= 0. \end{aligned}$$

   For this case, the characteristics from the source point are oriented in all directions. Hence four orderings (\(i=1{:}I,~j=1{:}J;~i=1{:}I,~j=J{:}1; ~i=I{:}1,~j=1{:}J; ~i=I{:}1,~j=J{:}1\)) are needed for convergence. Table 10 shows the orderings and the test results. The number of iterations does not increase as the mesh is refined, which differs from the linear case of Example 2 because the characteristics originating at the source propagate in all directions.

Example 5: A time-dependent problem. In this example, we use the fast sweeping method as a fully implicit scheme for solving a time-dependent problem as follows,

The solution is \(u(x,y,t)=\sqrt{(x^2+y^2)}-0.2-t\) for \(t\in [0,~ 1]\). From time \(t_n\) to \(t_{n+1}\), we treat the time-dependent problem as a stationary problem whose characteristic has a fixed direction in time (see Remark 2.1). The implicit scheme is unconditionally stable. At each time step, we use the FSM to solve the problem. The test is performed on a rectangular mesh. The iterations use four orderings, \(i=1{:}I,~j=1{:}J;~i=1{:}I,~j=J{:}1; ~i=I{:}1,~j=1{:}J; \text{ and } i=I{:}1,~j=J{:}1\), alternately. The time step we choose is \(\delta t=0.02\) or \(\delta t=0.04\). We test the example on two meshes: \(101\times 101\) and \(201\times 201\). The time step is large enough that the CFL condition is violated on both meshes, so an explicit scheme breaks down. On both meshes, the number of iterations is 6 at each time step for the implicit scheme. Figure 7 shows the zero level set \(\{(x,y): u_h(x,y,t)=0\}\) of the numerical solutions computed by the FSM. For comparison, we also solve the same problem with the same setup by a forward Euler scheme with either the Lax–Friedrichs numerical Hamiltonian or the Godunov numerical Hamiltonian [33]. Since the CFL condition is not satisfied, that scheme is unstable.

5 Conclusion

We investigate the convergence of iterative methods for hyperbolic problems such as the boundary value problems of static convex Hamilton–Jacobi equations using the fast sweeping method as an example. We prove the convergence and show that the contraction property of monotone upwind schemes combined with Gauss–Seidel iterations and proper orderings can provide fast convergence for such hyperbolic problems. The mechanism is different from that for elliptic problems and may render different behavior when the mesh is refined. The study is verified by various numerical examples.

Acknowledgements

S. Luo is partially supported by NSF Grant DMS 1418908. H. Zhao is partially supported by NSF Grant DMS 1418422.

References

1. Abgrall, R.: Numerical discretization of the first-order Hamilton–Jacobi equations on triangular meshes. Comm. Pure Appl. Math. 49, 1339–1377 (1996)

2. Alt, H.W.: Lineare Funktionalanalysis, 3rd edn. Springer, Berlin (1999)

3. Aslam, T., Luo, S., Zhao, H.: A static PDE approach to multi-dimensional extrapolations using fast sweeping methods. SIAM J. Sci. Comput. 36(6), A2907–A2928 (2014)

4. Bardi, M., Capuzzo-Dolcetta, I.: Optimal control and viscosity solutions of Hamilton–Jacobi–Bellman equations. Birkhäuser, Boston (1997)

5. Barles, G., Souganidis, P.E.: Convergence of approximation schemes for fully nonlinear second order equations. Asymptot. Anal. 4, 271–283 (1991)

6. Benamou, J.D., Luo, S., Zhao, H.: A compact upwind second order scheme for the eikonal equation. J. Comput. Math. 28, 489–516 (2010)

7. Bornemann, F., Rasch, C.: Finite-element discretization of static Hamilton–Jacobi equations based on a local variational principle. Comput. Vis. Sci. 9, 57–69 (2006)

8. Conway, E.D., Hopf, E.: Hamilton’s theory and generalized solutions of the Hamilton–Jacobi equations. J. Math. Mech. 13(6), 939–986 (1964)

9. Courant, R., Friedrichs, K., Lewy, H.: On the partial difference equations of mathematical physics. IBM J. Res. Dev. 11(2), 215–234 (1967)

10. Crandall, M.G., Evans, L.C., Lions, P.-L.: Some properties of viscosity solutions of Hamilton–Jacobi equations. Trans. Am. Math. Soc. 282(2), 487–502 (1984)

11. Crandall, M.G., Lions, P.-L.: Viscosity solutions of Hamilton–Jacobi equations. Trans. Am. Math. Soc. 277, 1–42 (1983)

12. Crandall, M.G., Lions, P.-L.: Two approximations of solutions of Hamilton–Jacobi equations. Math. Comput. 43, 1–19 (1984)

13. Dijkstra, E.W.: A note on two problems in connection with graphs. Numer. Math. 1, 269–271 (1959)

14. Engquist, B., Froese, B.D., Tsai, Y.-H.R.: Fast sweeping methods for hyperbolic systems of conservation laws at steady state. J. Comput. Phys. 255, 316–338 (2013)

15. Engquist, B., Froese, B.D., Tsai, Y.-H.R.: Fast sweeping methods for hyperbolic systems of conservation laws at steady state II. J. Comput. Phys. 286, 70–86 (2015)

16. Evans, L.C.: The perturbed test function method for viscosity solutions of nonlinear PDE. Proc. R. Soc. Edinburgh Sect. A Math. 111(3–4), 359–375 (1989)

17. Fomel, S., Luo, S., Zhao, H.: Fast sweeping method for the factored eikonal equation. J. Comput. Phys. 228(17), 6440–6455 (2009)

18. Gao, H., Zhao, H.: A fast forward solver of radiative transfer equation. Transp. Theory Stat. Phys. 38, 149–192 (2009)

19. Hopf, E.: Generalized solutions of non-linear equations of first order. J. Math. Mech. 14(6), 951–973 (1965)

20. Kao, C.Y., Osher, S., Tsai, Y.H.: Fast sweeping method for static Hamilton–Jacobi equations. SIAM J. Numer. Anal. 42, 2612–2632 (2005)

21. Leung, S., Qian, J.: An adjoint state method for three-dimensional transmission traveltime tomography using first-arrivals. Commun. Math. Sci. 4(1), 249–266 (2006)

22. Lions, P.-L.: Generalized Solutions of Hamilton–Jacobi Equations. Pitman, Boston (1982)

23. Luo, S.: A uniformly second order fast sweeping method for eikonal equations. J. Comput. Phys. 241, 104–117 (2013)

24. Luo, S., Guibas, L.-J., Zhao, H.: Euclidean skeletons using closest points. Inv. Probl. Imag. 5(1), 95–113 (2011)

25. Luo, S., Leung, S., Qian, J.: An adjoint state method for numerical approximation of continuous traffic congestion equilibria. Commun. Comput. Phys. 10(5), 1113–1131 (2011)

26. Luo, S., Qian, J.: Fast sweeping method for factored anisotropic eikonal equations: multiplicative and additive factors. J. Sci. Comput. 52(2), 360–382 (2012)

27. Luo, S., Qian, J., Burridge, R.: Fast Huygens sweeping methods for Helmholtz equations in inhomogeneous media in the high frequency regime. J. Comput. Phys. 270, 378–401 (2014)

28. Luo, S., Qian, J., Burridge, R.: High-order factorizations and high-order schemes for point-source eikonal equations. SIAM J. Numer. Anal. 52(1), 23–44 (2014)

29. Luo, S., Qian, J., Stefanov, P.: Adjoint state method for the identification problem in SPECT: recovery of both the source and the attenuation in the attenuated X-ray transform. SIAM J. Imag. Sci. 7(2), 696–715 (2014)

30. Luo, S., Qian, J., Zhao, H.: Higher-order schemes for 3D first-arrival traveltimes and amplitudes. Geophysics 77(2), T47 (2012)

31. Luo, S., Yu, Y., Zhao, H.: A new approximation for effective Hamiltonians for homogenization of a class of Hamilton–Jacobi equations. Multiscale Model. Simul. 9(2), 711–734 (2011)

32. Oberman, A.M.: Convergent difference schemes for degenerate elliptic and parabolic equations: Hamilton–Jacobi equations and free boundary problems. SIAM J. Numer. Anal. 44(2), 879–895 (2006)

33. Osher, S., Shu, C.-W.: High-order essentially nonoscillatory schemes for Hamilton–Jacobi equations. SIAM J. Numer. Anal. 28(4), 907–922 (1991)

34. Qian, J., Zhang, Y.-T., Zhao, H.: A fast sweeping method for static convex Hamilton–Jacobi equations. J. Sci. Comput. 31(1/2), 237–271 (2007)

35. Qian, J., Zhang, Y.-T., Zhao, H.: Fast sweeping methods for eikonal equations on triangulated meshes. SIAM J. Numer. Anal. 45, 83–107 (2007)

36. Sethian, J.A., Vladimirsky, A.: Ordered upwind methods for static Hamilton–Jacobi equations. Proc. Natl. Acad. Sci. 98, 11069–11074 (2001)

37. Sethian, J.A., Vladimirsky, A.: Ordered upwind methods for static Hamilton–Jacobi equations: theory and algorithms. SIAM J. Numer. Anal. 41, 325–363 (2003)

38. Shu, C.-W.: High order numerical methods for time dependent Hamilton–Jacobi equations. IMS lecture notes series, mathematics and computation in imaging science and information processing, vol. 11, pp. 47–91 (2007)

39. Thomas, J.W.: Numerical Partial Differential Equations: Finite Difference Methods. Springer, New York (1995)

40. Tsai, Y.-H.R., Cheng, L.-T., Osher, S., Zhao, H.: Fast sweeping algorithms for a class of Hamilton–Jacobi equations. SIAM J. Numer. Anal. 41, 673–694 (2003)

41. Tsitsiklis, J.N.: Efficient algorithms for globally optimal trajectories. IEEE Trans. Automat. Control 40, 1528–1538 (1995)

42. Zhang, Y.-T., Zhao, H., Qian, J.: High order fast sweeping methods for static Hamilton–Jacobi equations. J. Sci. Comput. 29, 25–56 (2006)

43. Zhao, H.: A fast sweeping method for eikonal equations. Math. Comput. 74, 603–627 (2005)

44. Zhao, H.: Parallel implementations of the fast sweeping method. J. Comput. Math. 25, 421–429 (2007)

45. Zhao, H., Osher, S., Merriman, B., Kang, M.: Implicit and non-parametric shape reconstruction from unorganized points using variational level set method. Comput. Vis. Image Underst. 80, 295–319 (2000)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Luo, S., Zhao, H. Convergence analysis of the fast sweeping method for static convex Hamilton–Jacobi equations. Res Math Sci 3, 35 (2016). https://doi.org/10.1186/s40687-016-0083-8

DOI: https://doi.org/10.1186/s40687-016-0083-8

Keywords

- Fast sweeping method

- Hamilton–Jacobi equation

- Contraction property

- Iterative method

- Fast convergence

- Error estimate