Abstract

Background

The ability to navigate obstacles and embrace iteration following failure is a hallmark of a scientific disposition and is hypothesized to increase students’ persistence in science, technology, engineering, and mathematics (STEM). However, this ability is often not explicitly explored or addressed by STEM instructors. Recent collective interest brought together STEM instructors, psychologists, and education researchers through the National Science Foundation (NSF) research collaborative Factors affecting Learning, Attitudes, and Mindsets in Education network (FLAMEnet) to investigate intrapersonal elements (e.g., individual differences, affect, motivation) that may influence students’ STEM persistence. One such element is fear of failure (FF), a complex interplay of emotion and cognition occurring when a student believes they may not be able to meet the needs of an achievement context. A validated measure for assessing FF, the Performance Failure Appraisal Inventory (PFAI), exists in the psychological literature. However, this measure was validated in community, athletic, and general undergraduate samples, which may not accurately reflect the motivations, experiences, and diversity of undergraduate STEM students. Given the potential role of FF in STEM student persistence and motivation, we felt it important to determine if this measure accurately assessed FF for STEM undergraduates, and if not, how we could improve upon or adapt it for this purpose.

Results

Using exploratory and confirmatory factor analysis and cognitive interviews, we re-validated the PFAI with a sample of undergraduates enrolled in STEM courses, primarily introductory biology and chemistry. Results indicate that a modified 15-item four-factor structure is more appropriate for assessing levels of FF in STEM students, particularly among those from groups underrepresented in STEM.

Conclusions

In addition to presenting an alternate factor structure, our data suggest that using the original form of the PFAI measure may significantly misrepresent levels of FF in the STEM context. This paper details our collaborative validation process and discusses implications of the results for choosing, using, and interpreting psychological assessment tools within STEM undergraduate populations.

Introduction

The ability to navigate obstacles and embrace an iterative process in response to failure is considered a hallmark of the scientific disposition and has been hypothesized to increase students’ persistence in STEM (Harsh et al. 2011; Laursen et al. 2010; Lopatto et al. 2008; Simpson and Maltese 2017; Thiry et al. 2012). The ways in which this ability can be fostered through undergraduate science, technology, engineering, or mathematics (STEM) education is a topic both historically underexplored by researchers and under-addressed by explicit instructor-driven curricula (Simpson and Maltese 2017; Traphagen 2015). However, recent increased interest in investigating the effects of various intrapersonal attributes on STEM students’ ability to navigate scientific obstacles has set the stage for promising educational research in this arena. Intrapersonal elements, previously referred to more broadly as “noncognitive factors” (Henry et al. 2019), include a subset of one’s individual competencies not related to one’s intelligence or knowledge. For example, intrapersonal elements include mindsets, attitudes, and beliefs, among other elements related to one’s understanding of their own experience (this is contrasted with interpersonal elements, such as empathy and other social skills, which involve recognizing others’ perspectives and experiences; Farrington 2019; National Research Council 2012). Many of these intrapersonal elements—such as fear of failure (FF), the topic of this work—are predicted to influence students’ engagement with challenges, responses to failure, and subsequent academic success (Henry et al. 2019). Yet, unlike some predictors of success that can be measured directly (e.g., prior achievement), most intrapersonal elements consist of latent variables, which cannot be directly observed or measured. 
Such variables must be assessed using multiple metrics (often multiple questions on a survey) that together allow us to estimate levels of the underlying construct (Knekta et al. 2019). Unfortunately, measures for these elements often either do not exist or may not be valid for our population of interest, STEM undergraduates. This is the case for FF. In this study, we build on prior work that describes the validity of a measure of FF, the Performance Failure Appraisal Inventory (PFAI). We investigate the validity of this instrument and work to improve and modify it for use with STEM undergraduates. Our aim was to provide a suitable revision of the PFAI and make it available to instructors and education researchers to measure FF in undergraduate STEM contexts.

Fear of failure

FF involves a complex interplay of emotion (Martin and Marsh 2003), personality (Noguera et al. 2013), and cognition (Conroy 2001). Historically, research has focused separately on these three aspects. Past researchers have described FF as either (a) purely affective, consisting of feelings of anxiety, nervousness, or worry when considering future failures; (b) an aspect of personality, for example having a high degree of neuroticism that consistently contributes to FF across all contexts; or (c) a context-specific cognitive assessment that evaluates a given situation as a threat to success (e.g., evaluation of failing a class as being a determinant of admission to medical school, and given this, fear of failing). However, more recent work recognizes that all of these domains are interrelated and contribute to the most comprehensive explanation of FF (Henry et al. 2019). Specifically, Cacciotti (2015) defined FF as a “temporary cognitive and emotional reaction towards environmental stimuli that are apprehended as threats in achievement contexts” (p. 59). An achievement context includes any situation in which (1) some task must be performed, (2) the task will be evaluated against standards or expectations, and (3) one must have certain competencies in order to carry out the task to those standards (Cacciotti 2015). In other words, FF is manifested in anxiety-based thoughts and emotions when one believes they may be unable to meet the demands of an achievement context. It is important to distinguish this multidimensional view of FF from constructs that solely describe emotion, such as anxiety. While these have been used as analogs for FF in the past, our modern understanding of FF recognizes that focusing only on the emotional aspects of the experience provides an incomplete understanding. 
For example, focusing only on emotion fails to recognize the cognitive appraisals of an achievement context that are often the root cause of affective states and may constitute specific targets for interventions seeking to alleviate FF. In other words, exploring the emotions related to FF (like anxiety) is necessary, but not sufficient, for a complete understanding of FF (Henry et al. 2019).

A key component of this definition is that one’s level of FF may change based upon the specific details of the achievement context or other contextual factors (Conroy 2001). For example, if two students enrolled in introductory biology have different goals, they will create different achievement contexts based on those expectations. If Student A is only enrolled because the course fulfills a general education requirement, the demands to satisfy the achievement context may be relatively low (e.g., just pass the class). The student is therefore less likely to experience FF. However, Student B, who is pursuing graduate study or a health career, is likely to judge the achievement context to be much more demanding (e.g., anything less than an A is unacceptable). While these students may generally differ to some extent in their baseline levels of FF, Student B will also likely experience greater FF, in part because they perceive the stakes of failure to be higher.

FF is broadly recognized as an element that can lead to avoidance of challenge, lower motivation, and self-impeding behaviors (e.g., making excuses, reduced effort, etc.; Chen et al. 2009). It has been studied extensively in K-12 contexts (e.g., Caraway et al. 2003; De Castella et al. 2013; Pelin and Subasi 2020) and in certain nonacademic contexts, such as entrepreneurship (e.g., Cacciotti et al. 2016) and sports (e.g., Conroy et al., 2001; Sagar et al., 2010). It has also been studied extensively in undergraduate students broadly (Bartels and Herman 2011; Bledsoe and Baskin 2014; Chen et al. 2009; Elliot and Church 1997; Elliot and Church 2003; Elliot and McGregor 2001; Elliot and Thrash 2004). Yet, despite its potential to impact achievement, FF has not been studied extensively in STEM undergraduate contexts. This is surprising given that “embracing failure” is seen as a necessary skill for professional scientists and that STEM individuals are known to view failures in ways distinct from those in other fields (Simpson and Maltese 2017). Although studies are few in number, several lines of evidence suggest that STEM students experience FF and that this may either limit engagement in STEM learning or, in some cases, prevent engagement altogether. Researchers have found that FF positively predicts procrastination behaviors for pre-health undergraduates (Zhang et al., 2018) and that this relationship extends to STEM graduate students in statistics courses (Onwuegbuzie, 2004). Similarly, work on understanding the causes of student anxiety during active learning in STEM classrooms cites a closely related construct, fear of negative evaluation by others, as an important cause of anxiety that can hamper students’ motivation to participate in class (Cooper et al. 2018; Downing et al. 2020). 
Ceyhan and Tillotson (2020) found that undergraduate STEM majors weighed their FF as an element influencing their motivation to engage in undergraduate research, citing the emotional cost of engagement. This is especially notable considering the enthusiasm for and movement towards research-based courses that are more likely to expose students to scientific failures (Auchincloss et al. 2014; Corwin et al. 2015; Gin et al. 2018). Indeed, FF may become more salient to students engaged with these new pedagogies. Prior work also suggests that FF differs among male- and female-identified STEM undergraduate students (Nelson et al., 2021), suggesting that we need to consider differential effects of FF across identities in STEM. Finally, and most importantly, FF may predict whether or not students ultimately choose a STEM major or choose to remain a STEM major after their first semester at college (Nelson et al. 2019). FF may contribute to the extensive drop in STEM majors typically seen after the first year in STEM fields (Nelson et al. 2019; Seymour and Hunter 2019). STEM instructors recognize that assisting students in coping with failure and alleviating FF are important priorities when training future scientists (Gin et al. 2018; Henry et al. 2019; Simpson and Maltese 2017). However, we must be able to accurately measure the effects of these efforts if we are to understand how teaching practices can serve to alleviate FF.

Measuring fear of failure

Given the complex nature of FF, it can be difficult to conceptualize a valid measure which fully captures all of its properties. For example, risk aversion was historically used as a proxy for FF, but contemporary researchers now acknowledge that, while risk aversion may tap into some of the emotional aspects of FF, it likely does not fully represent the personality or cognitive aspects (Noguera et al. 2013), nor is it responsive to changes in context (Conroy 2001). Beginning in 2001, Conroy and colleagues addressed these concerns by attempting to understand the causes of FF at the individual level, resulting in the creation and refinement of a multidimensional assessment measure: the PFAI.

To characterize FF, Conroy et al. (2001) conducted interviews with eight adult elite athletes and eight adult elite performing artists (50% female). In these interviews, subjects provided insight into their definitions of failure, what situations or contexts they considered “failures” in the past, and how they reacted to experiencing those situations (Conroy et al. 2001). Based on a content analysis of these interviews, Conroy et al. (2001) created 89 items that could be classified under ten broad sources of FF (e.g., fear of an uncertain future). They then asked 396 high school and college-aged student-athletes (mean age = 19.3 years, SD = 4.3) to respond to these 89 items. Each statement evoked a situation in which the student was “failing” or “not succeeding,” and students rated each item on a scale of “Do not believe [this to be true] at all (−2)” to “Believe [this to be true] 100% of the time (+2)”. For example, students read the statement “When I am failing, my future seems uncertain” and then selected whether they believed this to be true and to what degree. Conroy and colleagues then used factor analysis, which groups items according to statistical relationships that correspond to psychological constructs (in this case, types of FF). This work narrowed the number of PFAI items down to 41, loading strongly onto five factors. That is, the items cluster broadly into five meaningful categories or dimensions rather than the originally proposed ten (see Knekta et al. (2019) for an explanation of how factors are formed). Subsequent factor analyses with samples of only college student participants further reduced the number of items on the PFAI from 41 to 25, with five items measuring each dimension, or reason for demonstrating FF (Table 1; Conroy et al. 2002).

The current work

Addressing FF is especially important when considering the broader challenge of finding and validating measures of intrapersonal elements for STEM undergraduates (Knekta et al. 2019; Rowland et al. 2019) and the more specific challenge of assessing the influence of intrapersonal elements on students’ resilience, motivation to engage in challenging tasks, and ability to navigate obstacles when they arise (Henry et al. 2019). Previous research utilizing the PFAI as an assessment measure has found that increased FF is related to reduced challenge engagement (Bledsoe and Baskin 2014). This can most clearly be seen in high-FF students who demonstrate self-impeding behaviors by reducing effort or making excuses before failure occurs, thereby protecting their self-worth in the short term at the risk of long-term success (Berglas and Jones 1978; Chen et al. 2009; Cox 2009; Zuckerman and Tsai 2005). While these results were found in academic, community, and broad college contexts, they have not yet been investigated specifically in undergraduate STEM education, a context in which challenge engagement is likely critical for progress (Henry et al. 2019) but where failure is also a commonly accepted part of the process (Simpson and Maltese 2017). The contextual nature of both FF (Cacciotti 2015) and human cognitive appraisals (Schwarz 1999) suggests that FF is likely to manifest in significantly different ways depending upon the achievement context(s) one is assessing. Therefore, college students in a STEM context are likely to experience FF differently than students in high school or non-STEM courses. Many students enrolled in STEM courses enter with intentions of pursuing graduate study or health careers (e.g., Gasiewski et al. 2012), which can make achievement contexts more salient. 
In addition, the active research that students may engage in during the course of STEM education often includes tasks that have a higher likelihood of failure or not achieving a stated research goal (Auchincloss et al. 2014), and this may represent one of the first times students encounter an academic situation in which judging success against the achievement context is difficult or unclear. All of these contextual factors influence how STEM students experience FF and also how they will respond to assessments of FF. To investigate and understand FF in STEM contexts, we need to ensure that we can accurately measure FF for STEM students.

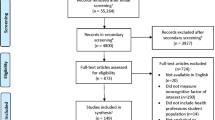

Any education research conducted on a topic will only be as strong as the assessment tools used for the constructs of interest (Cronbach and Meehl 1955). While the PFAI was originally constructed using a sample that included some college students, these students were only used for factor analyses to reduce the number of items and refine the measure for certain types of FF. They were not interviewed as part of the initial creation of the items or to ascertain how they interpreted the items and if this interpretation matched the assumptions and definitions of FF researchers. This reveals a critical unmet need, because there is much about college contexts—and undergraduate STEM achievement contexts more specifically—which could affect students’ responses and/or alter response patterns to the PFAI. Such contextual factors could make Conroy’s proposed factor structure inappropriate and invalid for these specialized populations of interest. This is important, because if the PFAI is not valid for undergraduate STEM samples, using it for education research could lead to misrepresentation of FF levels and faulty conclusions about levels of FF present in STEM classrooms or the efficacy of interventions that aim to reduce FF within that context. As such, we set out to build upon the work of Conroy et al. (2002) to (a) ascertain specifically how STEM undergraduates interpret PFAI questions related to Conroy et al.’s (2002) proposed dimensions of FF and (b) refine the PFAI for assessing FF in undergraduate STEM contexts. First, we used confirmatory factor analysis (CFA) to test whether Conroy’s proposed five-factor model is appropriate for measuring STEM undergraduates’ FF. Then, we used exploratory factor analysis (EFA) to consider alternative factor structures and evaluate whether any offer a better fit for data from an undergraduate STEM sample. 
Once we identified the “strongest” factor structure for the initial sample of STEM students, we performed additional CFAs in several other samples to confirm this structure. We then used an additional CFA to ask whether that factor structure remains a good fit for science PEERs (persons excluded because of their ethnicity or race; Asai 2020). Finally, we conducted a series of cognitive interviews among students to assess the face and content validity of our final revised measure to (a) ensure that students find all items clear and easy to understand and (b) better characterize the nuance with which STEM undergraduate students interpret the PFAI items. The progression of our analyses and their basic goals is outlined in Fig. 1, with more details about each analysis’ sample and the location of specific results detailed in Table 2. Below, we describe each of these steps, their methods, results, and a brief discussion of their findings before concluding with a broad discussion of our modified measure and its suitability for assessing FF in undergraduate STEM student populations.

Step 1: Confirmatory factor analyses (CFAs) of existing models

CFAs serve to test whether a measure of a construct (in this case, the PFAI) is consistent with the proposed understanding of that construct (i.e., FF) and its components. A good “fit” of the data collected with a particular measure to the proposed conceptual model indicates that the measure is accurate with regard to the researchers’ understanding of the construct and its components. When there is strong a priori and/or empirical evidence supporting a conceptual model, as in the case of the PFAI, it is best to start with CFAs when exploring measure utility for new populations (Knekta et al. 2019). Thus, the purpose of our initial CFAs was twofold: (a) to investigate whether data collected from a sample of undergraduate STEM students fit the current factor structure (twenty-five items on five factors) proposed by Conroy et al. (2002) and (b) to assess whether or not a change in item wording to prompt students to consider struggles and failures specifically in STEM contexts improves the model fit. We reasoned that the alternate wording would result in an improved model fit, as it addresses the specific, unique (as discussed above) STEM education context in which students find themselves frequently confronting academic challenges and failures. We explored this possibility with the aim of creating a version of the PFAI best suited to assess STEM-related FF in undergraduate students.

Methods

Participants

Four hundred and twenty-three undergraduate students were recruited for this study during Spring/Summer 2018. Students were recruited with the aid of STEM instructors at a diverse group of institutions—public and private; rural and urban; liberal arts and research-intensive—across multiple regions of the USA. Recruiting instructors were members of FLAMEnet, an NSF-funded research collaborative which brings together diverse STEM instructors, education researchers, and social scientists to conduct research and create resources aimed at fostering the next generation of resilient and innovative scientists (https://qubeshub.org/community/groups/flamenet/). Instructors announced the research opportunity to students either during class, via the course learning management system, via email, or on social media. All recruited students were enrolled in a STEM course at the time of the study. Two hundred and thirty-five students in this volunteer sample provided complete surveys and were included in the final data set. Students in this sample predominantly identified as female (68.1%) and Caucasian (81.7%), with a majority describing themselves as STEM majors (90%). A full breakdown of participant characteristics can be found in Table 3, column 3.

Instruments

Fear of failure

The 25-item version of the PFAI was employed (Conroy et al. 2002; the original measure can be viewed in Table S1). Using this measure, we asked participants to endorse certain beliefs regarding the likely consequence(s) of failure on a scale of 1 (“I believe this is never true of me”) to 5 (“I believe this is true of me all of the time”). This scale was modified from the original 5-point scale of −2 (“Do not believe at all”) to +2 (“Believe 100% of the time”) based on anecdotal preliminary feedback from undergraduate research assistants that the modified response scale is easier to understand. Evidence of the validity and reliability of this 25-item version of the PFAI (Conroy 2001) has previously been gathered in a general college sample. In that study, Conroy et al. (2002) produced a final well-fitting model that accounted for the five proposed dimensions: fear of shame or embarrassment (FSE), fear of devaluing one’s self-estimate (FDSE), fear of having an uncertain future (FUF), fear of important others losing interest (FIOLI), and fear of upsetting important others (FUIO). Each dimension consists of a group of five questions, and a higher-order overall FF score can be derived by averaging the five subscales. (From here forward, we refer to these groups of questions as “dimensions” since they are inseparable from the FF dimensions. When discussing the mathematical fit of items to these dimensions resulting from factor analysis, we use the term “factors”.) Fit statistics for this original model are provided at the top of Table 4. Internal reliability for this form of the PFAI has previously been demonstrated to be high, with Cronbach’s alpha of all twenty-five items at 0.91 and for the higher-order FF dimension derived by the mean of the five factors at 0.82 (Conroy et al.
2002; Cronbach’s alpha is a statistical measure which assesses the degree to which items on a scale are correlated with each other, with values closer to one indicating stronger relationships. If items on a scale do, in fact, all measure the same construct, one would expect to see high consistency as measured by Cronbach’s alpha; Cronbach 1951). In this study, PFAI items were framed in two ways. In the “general” condition, the PFAI items were introduced by asking students to “consider the way you feel and act when you face failures and challenges.” The PFAI items themselves were introduced with the following: “For the following questions, please consider challenges and failures that you face in general. For each question, indicate how often you believe each statement is true of you.” By contrast, our “STEM” condition introduced this section of the survey by asking respondents to “consider the way you feel and act when you face failures and challenges in your STEM courses”. And the questions themselves were introduced by reminding students to “please consider challenges and failures that you face specifically in your STEM course(s). For each question, indicate how often you believe each statement is true of you.” Students also provided qualitative responses to a set of questions that asked them to describe a recent time when they experienced a failure or challenge (again, either in general or in a STEM context specifically) and how upsetting they found this event on a scale from 0 to 10, with 10 being the most upsetting. These qualitative items are discussed more in Step 7 below.
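As an illustration of the internal reliability statistic described above, the following minimal sketch computes Cronbach’s alpha from a respondents-by-items table using the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores). The function name `cronbach_alpha` and the `ratings` data are hypothetical and are not taken from the study; the sketch is not the software used by the authors.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row per respondent, one column per item)."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-5 ratings from five respondents on three items (illustrative only).
ratings = [
    [1, 2, 1],
    [2, 2, 3],
    [3, 4, 3],
    [4, 4, 5],
    [5, 5, 5],
]
alpha = cronbach_alpha(ratings)
print(round(alpha, 3))
```

Because the three hypothetical items rise and fall together across respondents, alpha is close to one; items that shared little variance would pull the value toward zero.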

STEM anxiety

To quantify the overall level of anxiety surrounding the academic context of STEM courses, we asked students the following: “On a scale from 0 to 10, how anxious are you about your performance in your STEM classes?” Here, 0 indicated “not at all anxious” while 10 indicated “extremely anxious.” Students utilized the entire range of possible responses for this question, with a mean response of 6.37 (SE 0.175; variance 6.670). Cognitive interviews (see Step 7) were used to assess the face validity of this question, with students indicating that they found it simple and straightforward.

Procedures

All activities conducted during this and all subsequent research steps were completed with the approval of the Emory University IRB (Protocols IRB00105275 and IRB00114138). Subjects were recruited from STEM courses over the Spring/Summer 2018 semester. Any student enrolled in a participating STEM undergraduate course was eligible to participate. After agreeing to participate in the study, subjects were randomly assigned to one of two groups following a planned missingness design. That is, we intentionally provided only half of our items to 50% of the sample and the other half of the items to the other 50%. While this creates a large amount of missing data, as long as random assignment is used to decide which participants receive which half of the questions, responses for the remaining items can be imputed (Little 2013; Little and Rhemtulla 2013; Rhemtulla and Little 2012). This method of data collection was chosen to avoid survey fatigue. Students in this sample were asked to answer survey items related to the FF measure discussed in this paper and also to provide survey data on a larger number of other intrapersonal constructs of interest (i.e., coping behaviors, growth mindset). Taken together, asking students to respond to all of these items would have resulted in a survey exceeding the recommended length of 10–20 min (Cape and Phillips 2015; Revilla and Ochoa 2017). To ensure we collected high-quality data from students on all measures, a planned missingness design was judged the best approach. We also randomly assigned participants to either the “general” or “STEM” group. The “general” group received the PFAI items as they were validated by Conroy et al. (2002). The “STEM” group received versions of these same items that were preceded by language that prompted respondents to consider failures and challenges in STEM contexts specifically. 
Both groups rated the items on a scale of 1 “I believe this is never true of me” to 5 “I believe this is true of me all of the time”. We randomly assigned these versions of the survey to two groups because we wished to avoid survey fatigue by not asking students to respond to both versions and because we wished to investigate whether or not the language prompting students to explicitly consider STEM contexts influenced FF responses. After responding to the FF questions, all students were asked to rate their level of anxiety specifically related to taking STEM courses. Finally, participants completed demographic questions. We intentionally placed the demographic questions at the end of the survey to mitigate any effects of stereotype threat that can be introduced by such questions.
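The two random assignments described above (which half of the items a participant receives, and which framing of the PFAI they see) can be sketched as follows. This is a hypothetical illustration only: the function name, the labels "A"/"B", and the exact half-and-half split are assumptions, and the survey platform the authors used may have randomized differently.

```python
import random


def assign_conditions(participant_ids, seed=None):
    """Randomly assign each participant an item block and a PFAI framing."""
    rng = random.Random(seed)
    ids = list(participant_ids)

    def split_in_half(labels):
        # Shuffle a copy of the IDs, then give the first half one label
        # and the remainder the other label.
        shuffled = ids[:]
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        return {pid: (labels[0] if i < half else labels[1])
                for i, pid in enumerate(shuffled)}

    block = split_in_half(("A", "B"))             # planned missingness: half of the items each
    framing = split_in_half(("general", "STEM"))  # wording condition for the PFAI
    return {pid: {"item_block": block[pid], "framing": framing[pid]} for pid in ids}


plan = assign_conditions(range(10), seed=1)
```

Because the two shuffles are independent, item block and framing are crossed at random, which is what allows the unadministered items to be treated as missing by design rather than by participant choice.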

Results

Preliminary results

Missingness

To confirm that the intentional patterns of missingness (that is, what data are missing from participants) created as part of our planned missingness design (Little 2013; Little and Rhemtulla 2013; Rhemtulla and Little 2013) are missing completely at random (MCAR), we computed Little’s MCAR Test (Little 1988). Results are consistent with data that are missing completely at random (χ²(256) = 127, p > .05), so it is appropriate to impute missing values. Missing values were imputed with five iterations (Schafer et al. 1997) and the imputed datasets were used for all further analyses. We calculated estimates separately for each imputed dataset and then averaged those estimates to derive final model estimates based on Rubin’s rules for multiple imputation (Rubin 1978).
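The pooling step can be illustrated with a short sketch of Rubin’s rules for a single parameter: the pooled estimate is the mean of the per-dataset estimates, and the total variance combines the average within-imputation variance with the between-imputation variance inflated by (1 + 1/m). The function name and the five estimates below are hypothetical values chosen for illustration, not results from this study.

```python
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Pool one parameter across m imputed datasets using Rubin's rules."""
    m = len(estimates)
    q_bar = mean(estimates)    # pooled point estimate
    within = mean(variances)   # average squared standard error
    between = variance(estimates)  # variance of estimates across imputations (m - 1 denominator)
    total = within + (1 + 1 / m) * between
    return q_bar, total


# Hypothetical factor loading estimated in five imputed datasets,
# each with a standard error of 0.05 (variance 0.0025).
loadings = [0.50, 0.52, 0.48, 0.51, 0.49]
pooled_estimate, pooled_variance = pool_rubin(loadings, [0.05 ** 2] * 5)
pooled_se = pooled_variance ** 0.5
```

The pooled standard error is slightly larger than any single-dataset standard error, reflecting the added uncertainty that comes from imputing rather than observing the missing responses.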

Descriptive analyses

Outliers in the dataset were identified using the outlier labeling method (Hoaglin and Iglewicz 1987; Hoaglin et al. 1986; Tukey 1977), which labels identified outliers as “missing” to exclude them from further analysis without removing them entirely from a dataset. Visual inspection of skewness and kurtosis (George and Mallery 2016), as well as Shapiro-Wilk testing, indicated that our data were not normally distributed (p < .05; Shapiro and Wilk 1965), so the robust maximum likelihood (MLR) estimator was the most appropriate for main analyses in MPlus (Asparouhov and Muthén 2018; Muthén and Muthén 1998-2018). Preliminary analyses also indicated that students in our sample reported relatively low levels of FF, with dimension averages ranging from 1.46 (SE = 0.030; FSE) to 3.30 (SE = 0.30; FUF) on a 5-point scale where higher scores indicate more FF.
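A minimal sketch of the outlier labeling approach follows. Values falling outside fences placed a multiple g of the interquartile range beyond the quartiles are replaced with a missing marker rather than deleted. The multiplier g = 2.2 follows the recommendation usually attributed to Hoaglin and Iglewicz (1987); the text above does not state which multiplier was used, so this value, the quartile method, and the example scores are assumptions.

```python
from statistics import quantiles


def label_outliers(values, g=2.2):
    """Return a copy of values with outliers replaced by None (treated as missing)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    spread = q3 - q1
    lower, upper = q1 - g * spread, q3 + g * spread
    # Keep in-range values; mark out-of-range values as missing instead of dropping them.
    return [v if lower <= v <= upper else None for v in values]


# Hypothetical scores with one extreme value (illustrative only).
scores = [1, 2, 2, 3, 3, 3, 4, 4, 5, 40]
labeled = label_outliers(scores)
```

Keeping the list the same length preserves each respondent’s row, so a labeled value can be handled like any other missing response in later analyses.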

Main results

Main analyses were carried out in MPlus v. 8.1 (Muthén and Muthén 1998-2018). Factor analyses examined the fit of a variety of nested models, beginning with a CFA of the five-factor model of FF proposed by Conroy et al. (2002). In accordance with recommendations from Knekta et al. (2019), model fit was assessed using Akaike’s Information Criterion (AIC), Root Mean Square Error of Approximation (RMSEA), Comparative Fit Index (CFI), and Standardized Root Mean Square Residual (SRMR). AIC compares each proposed model to a theoretical “true” model, calculating how far data fit to the model fall from this theoretical ideal. AIC also allows for comparison of the fit between models fit to the same sample; the AIC value for each model will be that respective model’s distance from the “true” fit for the data. So, the model with the lowest AIC represents the best fit for those data (Akaike 1998; Kenny 2020). RMSEA values describe the “badness of fit,” so once again a lower number is preferred. CFI assesses incremental improvements in model fit above a baseline model; thus, higher values indicate better fit (Kline 2010; Taasoobshirazi and Wang 2016). Finally, SRMR represents the standardized difference between the correlations predicted by the model and the actual observed correlations. Since a smaller difference between these correlation values would indicate closer convergence between prediction and observation, a smaller SRMR value indicates good fit (Kline 2010; Taasoobshirazi and Wang 2016). See Knekta et al. (2019) for more complete descriptions of how each metric is calculated and their meaning. Fit statistics for all models can be found in Table 4, along with cut-off criteria used to assess goodness of fit. For all models discussed below, changes made for earlier models are carried over to later models unless otherwise stated.
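To make the direction of each index concrete, the sketch below computes RMSEA, CFI, and AIC from a model’s chi-square, degrees of freedom, and log-likelihood using common textbook formulas. These are not necessarily the exact expressions MPlus applies (for example, some programs use N rather than N − 1 in the RMSEA denominator, and robust estimators apply scaling corrections), and all input values are hypothetical.

```python
import math


def rmsea(chi2, df, n):
    """Root Mean Square Error of Approximation; lower values indicate better fit."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative Fit Index relative to a baseline model; higher values indicate better fit."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, 0.0)
    denom = max(d_model, d_base)
    return 1.0 if denom == 0 else 1.0 - d_model / denom


def aic(log_likelihood, n_params):
    """Akaike's Information Criterion; compare only models fit to the same data."""
    return -2.0 * log_likelihood + 2.0 * n_params


# Hypothetical values for a five-factor model (illustrative only).
example_rmsea = rmsea(chi2=500.0, df=265, n=235)
example_cfi = cfi(chi2_model=500.0, df_model=265, chi2_base=3000.0, df_base=300)
example_aic = aic(log_likelihood=-1000.0, n_params=10)
```

In this hypothetical case the model’s chi-square exceeds its degrees of freedom by 235, which yields an RMSEA of about 0.06 and a CFI of about 0.91. SRMR is omitted here because it requires the full observed and model-implied correlation matrices.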

Model A: CFA of Conroy’s structure

As described above, Conroy et al. (2002) proposed a five-factor structure to capture different sources of FF (Table 1). CFA was used to determine whether items in the college STEM sample loaded on the factors proposed a priori by Conroy et al. (2002; i.e. supported the proposed conceptual model). Fit statistics suggest that this model has weak to mediocre fit for students in STEM contexts (Table 4). Analysis of standardized factor loadings for individual items indicates that Question # 9 (“When I am failing, I lose the trust of people who are important to me”) does not load onto the FUIO factor (β = 0.450, p > .05) as proposed by Conroy et al. (2002). Further investigation of beta output provided by the MPlus program suggests that, in our sample, the following items do not load onto any of the proposed factors: 1 (“When I am failing, it is often because I am not smart enough to perform successfully”; FDSE), 2 (“When I am failing, my future seems uncertain”; FUF), 3 (“When I am failing, it upsets important others”; FUIO), 9, and 10 (“When I am not succeeding, I am less valuable than when I succeed”; FSE).

Model B: Modified factor structure

Based on the results of Model A, items 1, 2, 3, 9, and 10 were removed from the item inventory, and the CFA based on Conroy’s PFAI model was rerun. Model fit improved substantially (see Table 4) though overall fit was still considered to be “poor” and individual factor loadings did not suggest that model fit would be improved by the further removal of items.

Model C: Using modified factor structure to predict overall FF

Conroy et al. (2002) also hypothesized that their instrument would explain differences in students’ overall FF. Model C tests that hypothesis using our modified factor structure (with items 1, 2, 3, 9, and 10 dropped from their respective dimensions). For this model, an additional step was added in which, after individual items predicted factor formation, the factors together predicted overall mean level of FF. We see (Table 4) that model fit worsens, but not back to the level of Model A. Also, examination of standardized beta weights suggests that the negatively coded item 12 (“When I am failing, I am not worried about it affecting my future plans”) on the FUF factor is a poor fit for this sample of undergraduate STEM students when predicting overall FF (β = −0.331, p > .05).

Model D: Modified overall model

Removing item 12 from the overall model improves model fit (Table 4) and does not yield any further suggestions for improved model fit for either the individual composition of factors or to increase the model’s ability to predict overall FF.

Model E: Modified model with STEM-specific items

Our final model in this step tested our hypothesis that question wording which primed students to think specifically about STEM contexts when completing the PFAI survey would lead to a better model fit. To test this, we took our best-fitting model from our work with the existing PFAI items (Model D) and substituted data from our STEM-specific questions. We assessed the effect of STEM-specific language after finding the best overall model fit with Conroy’s original items because we wished to see if this change affected model fit above and beyond other modifications. When these STEM-specific item variants were used, model fit improved to its highest level. While we would still not classify this as a strong model fit, it is nonetheless markedly improved and better represents the population of interest.

Convergent validity

Convergent validity of the PFAI in general—that is, the degree to which FF measured by the PFAI is correlated with other constructs which, theoretically, should be related to FF—has been extensively addressed by Conroy and colleagues in their original validation protocol (see Conroy et al. 2001; Conroy et al. 2002). Another variable within our dataset which addresses affective components thought to be related to FF is STEM anxiety, measured via one question: “On a scale from 0 to 10, how anxious are you about your performance in your STEM classes?” Assuming that our refined model for the PFAI has good convergent validity, we would expect mean overall FF and STEM anxiety to be highly correlated. Overall, this sample reported moderate levels of STEM anxiety (M = 6.37, SE = 0.175). Responses ranged from 0 to 10 with a variance of 6.670, indicating that this question has sufficient variance to be used in assessments of convergent validity. Overall FF obtained by our measure is significantly correlated with STEM anxiety (r = 0.568, p < .0001), supporting the convergent validity of the modified PFAI.
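The convergent validity check above rests on a Pearson correlation between two per-student scores. A minimal sketch of that calculation follows; the function name and the six paired values are hypothetical and do not come from the study data.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical paired scores: mean overall FF (1-5) and STEM anxiety (0-10).
overall_ff = [1.8, 2.4, 2.9, 3.1, 3.6, 2.2]
stem_anxiety = [3, 6, 7, 5, 9, 4]
r = pearson_r(overall_ff, stem_anxiety)
```

A positive r of moderate to large size is what one would expect if the two measures tap related constructs; an r near 1 would instead suggest they are redundant.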

Brief discussion

Our initial CFA demonstrated that the PFAI best reflects university STEM students’ fear of failure when the language of the survey specifically directs them to consider their experiences within the STEM academic context. This use of STEM-specific language significantly improved model fit above the original model; however, it still did not result in a model that was well-fitting overall (Akaike 1998; Kline 2010; Taasoobshirazi and Wang 2016). This implies that the underlying model structure of the PFAI might be inappropriate to assess FF in undergraduate STEM students. To explore this possibility, and to find the model structure with the greatest efficiency for measuring FF in undergraduate STEM samples, an EFA was conducted next.

Step 2: Exploratory factor analysis (EFA) to define new model structure

In contrast to the CFA described above, EFA frees individual items from any a priori organizational constraints, allowing them to reorganize into new factors based on responses of participants, rather than researchers’ pre-formed hypotheses regarding how the items should cluster together. Thus, compared to CFA, which investigates whether data “fit” an existing conceptual model, EFAs suggest new models that best fit the data (Knekta et al., 2019). We hypothesized that EFA with the STEM-specific items would yield a well-fitting model of the PFAI for undergraduate STEM students by allowing removal or reorganization of some of the items among Conroy’s (2001) five proposed dimensions (described above in “Introduction”) or organization into new factors representing different dimensions. Our justification of this hypothesis is that students in STEM contexts may view failures differently than other undergraduate students. STEM professionals view failure in unique ways not generalizable to all populations (Simpson & Maltese, 2014), and STEM students describe FF as occurring as a result of specific STEM contexts and not as a more general cross-context fear (e.g., Ceyhan and Tillotson 2020; Cooper et al. 2018; Onwuegbuzie 2004), supporting the idea that FF is highly context-specific (Cacciotti 2015). Thus, the constructs proposed for other undergraduate populations may require revision for STEM undergraduate populations.

Participants and procedures

In accordance with best practices in psychometrics, especially with regard to statistical power (Knekta et al. 2019), data for this EFA were acquired from a new dataset that included approximately 1800 undergraduate STEM students. These participants were drawn from the same research network as those in “Step 1: Confirmatory factor analyses (CFAs) of existing models,” which was expanded to include more minority serving and 2-year institutions. These data were collected in Fall 2018 as part of a pre-survey completed by students within the first month of the semester, prior to the first major assessment in their participating STEM course. The vast majority of courses included in this sample were traditionally targeted for first- or second-year students. Once the data were cleaned (e.g., outliers truncated, cases with majority missing data deleted), a sample of 1309 college students in STEM contexts remained for analyses (Hoaglin and Iglewicz 1987; Hoaglin et al. 1986; Tukey 1977). Demographics for this sample can be viewed in Table 3, column 4. Because the STEM-specific items provided a better fit in the initial CFA study (see “Step 1: Confirmatory factor analyses (CFAs) of existing models”), students in this study were only asked to complete versions of the original twenty-five items of the PFAI which had been modified to be STEM-specific. Independent samples t-tests comparing the key demographics of this sample to the sample in our first analyses found no significant differences between participants on race, parents’ level of education, or reported STEM anxiety (all p’s > .05). There were significant differences observed between participants on age, class standing, and gender, with participants in this sample tending to be older, less academically advanced, and male. However, these differences were relatively small (see Table S2). Table 3 displays frequencies of other key demographic variables for all samples.

Results

Eigenvalues and Scree plots are first steps in EFA that are used to determine how many factors a researcher should consider including in their measurement model by exploring how much variance might be explained by the addition of more factors. Eigenvalues provide a basic measure of how much unique information each assumed factor provides. For that reason, factors with higher eigenvalues are considered more useful; in general, researchers should only include factors with eigenvalues above one in their models (Knekta et al., 2019). Scree plots help provide a visual aid for this determination by plotting eigenvalues against the number of factors. Researchers should limit the number of factors at the point in the Scree plot where the curve experiences its first sharp drop (Cattell 1966; Knekta et al., 2019). These general guidelines can be widely interpreted and are meant only to help researchers limit the beginning number of factors considered for EFAs. It is important to carefully examine the quantitative fit statistics (e.g., AIC, RMSEA) generated for all potential models before making conclusions regarding the optimum number of factors or goodness of fit for any model. It is also important to consider the theory underlying the generation of survey items and the ultimate proposed use of a measurement (Knekta et al., 2019). Exploration of the Scree plot (see Fig. 2) and eigenvalues suggested that a model having between one and five factors would be the most effective for this sample of STEM undergraduates. This determination was based on established criteria of visual inspection of the Scree plot for initial leveling of slope (Kaiser 1960) and eigenvalues greater than 1.0 (Cattell 1978). MPlus v. 8.1 (Muthén & Muthén, 1998–2018) was used to successfully carry out EFA for each of the proposed factor structures.
Model fit was assessed using Akaike’s Information Criterion (AIC), Root Mean Square Error of Approximation (RMSEA), Comparative Fit Index (CFI), and Standardized Root Mean Square Residual (SRMR) as described in the “Results” section of “Step 1: Confirmatory factor analyses (CFAs) of existing models,” above (Kline 2010; Taasoobshirazi and Wang 2016). Model fit statistics are in Table 5.
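The eigenvalue and Scree criteria described above can be sketched in a few lines. The eigenvalues below are hypothetical (they are not the values plotted in Fig. 2), and the helper names are illustrative only; the sketch shows how the two rules of thumb bound the range of candidate factor counts rather than select a single answer.

```python
def kaiser_count(eigenvalues, threshold=1.0):
    """Number of factors whose eigenvalue exceeds the threshold."""
    return sum(1 for value in eigenvalues if value > threshold)


def scree_drops(eigenvalues):
    """Successive decreases between adjacent eigenvalues, i.e. the slopes
    a reader inspects visually on a Scree plot."""
    return [a - b for a, b in zip(eigenvalues, eigenvalues[1:])]


# Hypothetical leading eigenvalues, sorted from largest to smallest.
eigenvalues = [8.9, 2.4, 1.7, 1.3, 1.1, 0.8, 0.7]

upper_bound = kaiser_count(eigenvalues)   # factors with eigenvalue > 1
drops = scree_drops(eigenvalues)          # largest drop marks the elbow
elbow_after = drops.index(max(drops)) + 1
```

With these made-up values the eigenvalue rule admits up to five factors while the steepest drop follows the first factor, which mirrors the situation in the text where one to five factors were carried forward for comparison on fit statistics.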

Both the four-factor model and five-factor model provide a good fit of the PFAI items for STEM undergraduate students (Table 5). Therefore, to further investigate fit, we examined the factor structures themselves. Any item that loaded onto a factor with a loading above 0.40 and a distance of at least 0.20 from any cross-loadings was retained on that factor (Masaki 2010). Using these criteria, items that failed to load clearly onto a unique factor were dropped from the measure. From this evaluation of the factor structures, the four-factor model emerged as both conceptually and practically stronger than the five-factor model, as the five-factor model contained two factors having only one item each and a total of twelve dropped items. In contrast, the four-factor model required dropping only ten items, and the remaining fifteen PFAI items were more evenly distributed across factors that echo the original dimensions proposed by Conroy et al. (2001). Our revised form of the PFAI can be viewed in Fig. 3, and the factor loadings, R², and residual variances for the four-factor model are displayed in Table 6. Correlations among latent factors can be seen in Table S3 and are within acceptable bounds (Brown 2015; Watkins 2018).
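The item retention rule above (primary loading above 0.40 and at least 0.20 away from any cross-loading) can be expressed as a short sketch. Comparing absolute values of loadings is an assumption made here, and the function name and example loadings are hypothetical rather than taken from Table 6.

```python
def assign_item(loadings, min_loading=0.40, min_gap=0.20):
    """Return the index of the factor an item is retained on, or None if
    the item is dropped. Loadings are compared by absolute value (assumed)."""
    magnitudes = [abs(value) for value in loadings]
    primary = max(range(len(magnitudes)), key=magnitudes.__getitem__)
    cross = [m for i, m in enumerate(magnitudes) if i != primary]
    if magnitudes[primary] <= min_loading:
        return None  # no sufficiently strong primary loading
    if cross and magnitudes[primary] - max(cross) < min_gap:
        return None  # cross-loading is too close to the primary loading
    return primary


# Hypothetical loadings for four items on a four-factor solution.
clean_item = assign_item([0.72, 0.10, 0.05, 0.08])
cross_loaded_item = assign_item([0.45, 0.38, 0.02, 0.11])
weak_item = assign_item([0.31, 0.22, 0.12, 0.05])
third_factor_item = assign_item([0.05, 0.12, 0.66, 0.30])
```

Applying such a rule to every item in both candidate solutions yields the counts of retained and dropped items that the comparison between the four- and five-factor models relies on.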

Brief discussion

Use of EFAs allowed us to further refine the PFAI for use in college-aged STEM student samples. Our best-fitting model significantly reduced the number of items from twenty-five to fifteen, which may aid with increasing compliance and decreasing cognitive load when surveying college-aged STEM students (reviewed in Peytchev and Peytcheva 2017). Based on a series of qualitative cognitive interviews with STEM undergraduates (see Step 7, below), it appears that several of the dropped items contained words or phrases that made them unclear or ambiguous to students. One dimension, FDSE, contained items with the words “talent,” “hate,” and “not in control” to describe situations in which students might devalue their own self-estimate. In cognitive interviews, students objected to these words, and ultimately, FDSE was not supported by factor analysis as a unique dimension. It is likely the wording of items did not align with STEM students’ views of themselves when responding to failures. Interestingly, one item from the original FDSE dimension was retained in the scale: “When I am failing, I blame my lack of talent” now loads onto the FUF factor. This suggests that perhaps STEM students at the college level view talent as a potential advantage (or stumbling block) for future success, rather than a reflection on their current self-estimate.

Ultimately, four of the five Conroy-proposed dimensions were still represented in the final fifteen-item revised model, and a majority of items present loaded onto their “original” dimension (Fig. 3), with four items assessing Fear of an Uncertain Future (FUF), five items assessing Fear of Important Others Losing Interest (FIOLI), three items assessing Fear of Upsetting Important Others (FUIO), and three items assessing Fear of Shame and Embarrassment (FSE). It is worth noting that several residual variances for the individual items in the model remain high (Table 6). This suggests that there is still some variability in students’ responses to the PFAI items that is not explained by the current model. Thus, it is possible that more factors could remain to be extracted (Pett et al., 2003; Watkins 2018). More qualitative work within a STEM context could elucidate additional FF dimensions relevant for STEM undergraduates which could augment the current instrument in future iterations of scale development.

Steps 3–5: CFAs to confirm fit of new factor structure

After determining a new factor structure, it was important to verify that the structure fit well in more than only the sample of STEM students used to conduct the EFA. We subsequently performed a series of CFAs using similar methods to those described above on this newly suggested structure.

Step 3

We first verified the fit of our model within the sample of students used for the EFA in Step 2. This sample included 1309 students recruited from the FLAMEnet research network during Fall 2018 (Table 3, column 4). CFA within this sample yielded excellent model fit (Table 5, row 7).

Step 4

We next wanted to confirm the revised factor structure in a separate sample of STEM undergraduates to verify the stability of the model. FLAMEnet participants during Fall 2019 provided data on 433 students (see Table 3, column 5 for demographics). This analysis also proved to have excellent fit (Table 5, row 8).

Step 5

Finally, we wanted to return to our original sample of 235 students recruited during Summer of 2018 (Table 3, column 3) to see if the revised model provided good fit given that our efforts with CFA in “Step 1: Confirmatory factor analyses (CFAs) of existing models” improved model fit significantly but did not reach the threshold of good fit. The model demonstrated excellent fit in this sample as well (Table 5, row 9).

Brief discussion

We conducted three separate CFAs to verify that the new model structure for the PFAI indicated by the EFA (Step 2) could be replicated in multiple samples of STEM undergraduates. In all three cases, fit statistics indicated excellent model fit. Independent samples t-tests among the various samples identified some significant differences among demographic variables (see Table S2). These differences, while statistically significant, were small, and the model’s continual good fit despite them demonstrates its robustness as an assessment tool across undergraduate STEM samples.

Step 6: Model fit among persons excluded because of their ethnicity or race (PEERs)

The work described thus far represents a novel presentation of the PFAI which we have shown to be a stronger fit for undergraduates’ actual conceptualization of FF in STEM contexts. While work aimed at improving the validity and applicability of interventions and assessment represents a worthy goal of service for all students, it is especially salient for PEER students, who are more likely to leave STEM academic programs (Asai 2020; National Science Board 2018; Steele 1997; Stinebrickner and Stinebrickner 2014). Factors such as FF are likely to be important leverage points for improving STEM students’ ability to persevere through academic challenges and failures. The implied long-term impact of aligning pedagogical practices to reduce FF is increased inclusion and success in STEM education and careers (e.g., retention within STEM majors, Nelson et al. 2019). For the PFAI to be an effective assessment tool, then, it is critically important to ensure that the same factor structure is valid for people at higher risk of leaving STEM, such as PEERs (Asai 2020). Additionally, previous intervention studies with psychological constructs that influence students’ responses to challenge and failure (e.g., mindset) suggest that these interventions may be most effective for PEER students (Aronson et al. 2002; Fink et al. 2018; Yeager et al. 2016). Thus, we conducted separate model fit analyses with a sample of only PEER undergraduate STEM students to explore how this instrument functions when assessing this critically important population.

Participants and procedures

Participants for this analysis were drawn from the same dataset of 1309 undergraduate STEM students described in the previous EFA section (Step 2), along with two other datasets collected in Fall 2019 (Step 4) and Summer 2018 (Step 1). While these data were pulled from surveys collected at different times, there were no differences in the method by which surveys were presented. These data were then coded to classify each student as either a “PEER” (1) or “not a PEER” (0). Any student who self-identified as “White/Caucasian” or “Asian” on a demographic survey question was not considered a PEER; all other students were coded as a PEER. This classification was based on data from the NSF which indicates that Asian students are not typically underrepresented in STEM and health-related sciences in the USA (Asai 2020; National Science Foundation 2020). In total, 280 PEER students were identified. Full demographics for this sample are included in Table 3, column 6. In our sample, PEER students identified as belonging to African American or Black; American Indian or Alaskan Native; Arabic or Middle Eastern; Hispanic or Latinx; and/or some other racial or ethnic group. All further analyses described in this section have been conducted with only those students classified as PEERs. Independent samples t-tests (see Table S2) verify that this combined sample of PEER students contained a significantly higher number of PEER students than the samples from which it was drawn (by an average factor of 10). Small differences existed among other demographics, but in general PEER demographics were intermediate or roughly equivalent to other samples (Table S2) with all differences being small. The only difference which might also have impacted students’ FF was that PEER students reported that their parents received a lower overall level of education compared to students in our other samples.
This has often been used as a proxy for socioeconomic status (SES; Pascarella and Terenzini 1991; Snibbe and Markus 2005) and could mean PEER students are under financial stress, making them more likely to fear failure. However, PEERs, in general, are more likely to hail from first-generation or lower SES backgrounds in the USA (Cullinane 2009; Kuh et al. 2006); thus, this observed difference is not surprising. Indeed, differences in educational background that correlate with race and ethnicity, in part, contribute to the need to understand, study, and create measures specific to PEER groups. PEER students also reported equivalent levels of STEM anxiety, which suggests they are not, overall, more anxious about STEM courses than non-PEER STEM students.
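The binary PEER coding described in this section can be sketched as follows. The two non-PEER labels come from the text; the function name, the handling of missing responses (left uncoded), and the example responses are assumptions made for illustration.

```python
# Self-identification labels that the text codes as "not a PEER" (0).
NON_PEER_CATEGORIES = {"White/Caucasian", "Asian"}


def code_peer(self_identification):
    """Return 1 for PEER, 0 for not a PEER.

    Missing responses return None; this handling is an assumption, since
    the text does not describe how missing demographic data were coded.
    """
    if self_identification is None:
        return None
    return 0 if self_identification in NON_PEER_CATEGORIES else 1


# Hypothetical survey responses.
responses = ["Asian", "Hispanic or Latinx", "White/Caucasian",
             "African American or Black", None]
codes = [code_peer(response) for response in responses]
peer_only = [r for r, c in zip(responses, codes) if c == 1]
```

Filtering on the resulting code gives the PEER-only subsample on which the subsequent CFA is run.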

Results

MPlus v. 8.1 (Muthén & Muthén, 1998-2018) was used to conduct CFA for the four-factor, 15-item model described above (see Tables 4 and 5). Model fit was assessed using AIC, RMSEA, CFI, and SRMR as described in the “Results” section of “Step 1: Confirmatory factor analyses (CFAs) of existing models” above (Kline 2010; Taasoobshirazi and Wang 2016). Model fit statistics are displayed in Table 5, row 10. All fit statistics are within acceptable ranges for a “good” fitting model (Kline 2010; Taasoobshirazi and Wang 2016). While the RMSEA value of 0.071 slightly exceeds our established criterion of RMSEA < 0.06 for a “good” fitting model, the 90% confidence interval does include this value. In addition, disagreement abounds regarding appropriate cut-off points for fit indices (e.g., Hayduk et al., 2007), with some researchers arguing that RMSEA can rise as high as 0.08 before a model is considered a “poor” fit (MacCallum et al. 1996). RMSEA, along with SRMR, is also the fit index perhaps most susceptible to inflation with small sample sizes (Kenny et al. 2015). The difference in sample size between samples in Step 2 (N = 1309 in the full EFA) and Step 6 (N = 280 in our CFA with PEER students) may partially explain the increase in RMSEA.

Brief discussion

By conducting this sub-analysis, we demonstrate that our 15-item modified form of the PFAI does provide a statistically good fit for PEER students in STEM. Given past research on both the disproportionate difficulties of pursuing a STEM career as a PEER student and the increased effectiveness of interventions for PEER students (e.g., Sisk et al. 2018), this implies that the modified PFAI could be an especially powerful assessment tool for future research. More research is needed to assess if this is a broad effect across all classes of underrepresented and excluded identities. Our sample was restricted to racial and ethnic exclusion and, even then, all possible identities were not represented (e.g., we did not collect data on subgroups within the broad category “Asian”). In addition, other types of underrepresentation and exclusion, such as gender, sexual orientation, first-generation status, and religious affiliation, likely influence FF and may affect responses on the PFAI. Future studies should investigate the fit of our modified PFAI among these groups and for students with identities that intersect multiple underrepresented groups.

Step 7: Cognitive interviews

Cognitive interviews were conducted to assess face validity of all twenty-five items proposed by Conroy et al. (2001). Face validity describes the extent to which a test or survey measures what it purports to measure. Cognitive interviews are an excellent way to assess face validity of survey questions measuring latent intrapersonal constructs because they allow the researcher to directly ask participants about their interpretation of survey items and to then assess whether this interpretation matches with the intended purpose of the item. They also assess participants’ understanding of the content of the instrument in addition to elucidating what the participant is thinking and feeling while responding, which can often influence the valence of responses (Willis 2015). For our study, we used cognitive interviews as the last step in our data collection to (a) check the face validity of our items (were the items interpreted by STEM undergraduates as we intended), (b) help elucidate potential reasons that certain items did not have good fit in our CFA and EFA analyses, and (c) provide clarity and additional information about how students were interpreting certain phrases that were more ambiguous in the PFAI items.

Participants and procedures

In accordance with results from our initial CFA (Step 1), students who participated in cognitive interviews were asked to consider the wording of the PFAI questions specifically in the context of their STEM courses and research (e.g., “For the following questions, please consider challenges and failures that you face specifically in your STEM course(s) and research”). Eleven students completed interviews of approximately 20 min each via Zoom in return for a $20 Amazon gift card. The research opportunity was announced to students by FLAMEnet instructor partners. Interested students completed an initial screening questionnaire. From this information, the research team selected students to participate in a deliberate attempt to achieve a sample with approximately equal gender and racial distribution and who represented STEM fields similar to those seen in our larger sample(s). Demographic characteristics of these students are described in Table 7. During these interviews, students were asked (a) if the meaning of each question was clear and how they interpreted the question, (b) if answer choices seemed appropriate, (c) if there were any suggestions for improving the question, and (d) if they had any other thoughts. In addition, students were asked to clarify their thought process related to the somewhat ambiguous phrase “important others,” which appears in many of the items (e.g., “Which specific people come to mind when they hear this phrase?” “Is it the same or different for each question which uses this phrase?” etc.). Students were also asked to provide their thoughts on three questions added by the researchers prior to the PFAI items on the survey. These items asked respondents to describe a time when they recently encountered a challenge or failure in their STEM course(s) and then to rate how upsetting they found that event on a scale from 0 to 10, with 10 being the most upsetting. 
Students were also asked to report how anxious they were about their performance in their STEM course(s) on a scale from 0 to 10, with 10 indicating the highest levels of anxiety (see “STEM anxiety” under “Step 1: Confirmatory factor analyses (CFAs) of existing models”, above).

Results

Overall, students found the survey instructions clear and appropriate. Students reported that our additional questions, which asked them to describe a recent challenge or failure in a STEM context and to rate how upsetting that experience was, along with their general levels of anxiety in STEM contexts, did not prompt any confusion, discomfort, or concern during the interviews.

Student responses to the ten items dropped through EFA (Step 2) both support the removal of these items from the measure and provide some explanations for why these items may not fit well for students in undergraduate STEM contexts. With a majority of these items, there appear to be specific words or phrases that generate confusion for the respondent. For example, with item 7, “When I am failing, I am afraid that I might not have enough talent,” students expressed hesitation with the word “talent,” especially since they felt it did not describe STEM contexts.

Why “talent”? I would expect to see “intelligence.” I’m not used to thinking about talent in this context [science and STEM].—CE

Talent—is that specific for that subject? It’s not as clear as “smart enough”? Talent is associated more with singing, dancing, etc.—MB

Similarly, for item 16, “When I am failing, I hate the fact that I am not in control of the outcome,” students objected to the use of “hate,” often stating that hate was too “strong” a word. They also felt that “not having control” was inappropriate in this context.

My initial reaction is that you’re always in control a little bit; I just don’t think anyone is not in control of the outcome. Is there a different way to word this?—CE

For the other dropped items, students expressed similar objections to specific words or phrases they found confusing.

General themes in students’ interview responses also provide important insight into how students interpreted the survey items. Throughout the measure, the item stems “When I am failing” and “When I am not succeeding” are alternated and, presumably, are thought to be synonymous. However, students said that they interpret these two phrases differently and would actually have responded to some questions differently, had the opposite stem been used.

“Not succeeding” is more broad than “failing.” Failing is an “F” vs. not succeeding is not getting straight 100s when [you] wanted to. Depending on what your standard was for “succeeding,” it might change [your] response.—UN

One student expressed that these changing stems were useful because they could respond about a broader range of experiences instead of only responding about the more extreme scenario of failing, which they narrowly defined as getting an “F.”

I like the changing stems because ‘failing’ and ‘not succeeding’ are two different things. You can ‘not succeed’ without ‘failing’. You could just be doing not as good as you thought you could do. [Getting a] ‘B’ instead of an ‘A’. [You’re] not failing though, because it’s not an ‘F’. I like that both are assessed with these questions.—PO

This conflict can especially be seen in student responses to item 10, the first time that the phrase “not succeeding” is introduced as an alternative question stem to “failing” in the original Conroy structure: “When I am not succeeding, I am less valuable than when I succeed.”

Wording change to ‘not succeeding’ is weird. I had to read it twice.—MB

This change could explain why this item was one of the ten items dropped by EFA. Multiple students expressed confusion at this word change and a need to reread the question. However, as indicated by the above students’ responses, students were generally able to interpret this phrase after considering it and used it to broaden the scope of scenarios they responded about.

Finally, these interviews help provide insight into the identities and roles of individuals the students called to mind when they encountered the phrase “important others.” Interestingly, student responses suggest that the specific people brought to mind by this phrase may change depending on the actions being attributed to those important others. When important others were described as losing interest (e.g., items 11 or 21), students described current or future professors, research supervisors, and employers (“they may ‘give up’ on you”—CE) or friends and classmates (“maybe [they] wouldn’t want to study with you anymore”—LF). Describing important others as upset prompted students to think more broadly, with answers including professors, family, and friends. However, the language of some of these items specifically pointed students towards family. For example, item # 3, “When I am failing, it upsets important others,” elicited the wide range of responses previously mentioned. However, item # 19, “When I am failing, important others are disappointed,” keyed students into thinking more specifically of “mostly family and relatives/caretakers” (PW). Similarly, item 6, “When I am failing, I expect to be criticized by important others,” was largely associated specifically with instructors and others with academic authority such as “teachers, professors, and mentors” (CE) and also “academic advisors, tutors, etc.” (MZ). In general, it appears that the most salient “important others” are brought to mind for various aspects of the STEM academic context with these items. That is, students tend to think of the important others that are most likely, in their estimation, to experience a given emotion or respond in a specific way to their failures or lack of success.

Brief discussion

Overall, results from our cognitive interviews support the general structure of the revised survey. There were no major confusions or issues with instructions or overall question wordings. Student responses supported the statistical decision to remove dropped items. In exploring student responses to items involving “important others,” we found that students may think of different people depending on the particular aspects of the STEM context that are evoked by the action phrases of the item (e.g., “criticize” vs. “upset” vs. “disappoint”). This ability of items to draw on the most salient people in students’ minds grants flexibility to these questions and reduces concerns that the phrasing of “important others” might be restrictive or otherwise confusing for respondents. This phrasing allows students to consider a broad range of relationships and histories between an individual student and those they consider to be “important.” However, it restricts survey interpretation in some ways because we cannot know the exact identity of the “important other” that comes to mind for students. We can only assume, based on these results, that this important other is a person whom the student also perceives as likely to respond in accordance with the question language (e.g., being disappointed, upset, critical). Finally, these interviews suggest that some students do not view the phrases “when I am failing” and “when I am not succeeding” as interchangeable. However, this may, in fact, be a benefit of the measure. In most cases, students view “failing” as more negative, damaging, and permanent than “not succeeding.” By using the milder “not succeeding” items, this measure may allow one to assess FF (or fear of not performing to a specific standard) in students who have rigid definitions for what constitutes “failure.”

Limitations

As with any research aimed at instrument validation, this work has several limitations. A priori power analysis using GPower 3.0 (Erdfelder et al. 1996) indicated that a sample size of 500 would be ideal for our initial planned CFA. While we recruited close to 500 participants in Step 1 (N = 423), only 54% of these participants (N = 235) provided data that were complete enough for analysis. This sample size limits our power to detect small yet meaningful differences (Little 2013), which are increasingly recognized as large effects in the educational community (Kraft 2020). This may have affected model fit in our initial CFA, as fit indices are influenced by sample size (the precise effect varies by fit index; Kyriazos 2018). However, since our total sample for the initial CFA still exceeded the level at which the most conservative fit statistics begin to be affected (n = 200) and our CFA model had many indicators estimating each factor (5 items per factor in the original model), it is unlikely that our sample size influenced fit statistics to such an extent that erroneous conclusions were drawn (Boomsma and Hoogland 2001; Marsh and Hau 1999). In addition, our knowledge of likely recruitment difficulties led to our choice to use a planned missingness design (Little and Rhemtulla 2013; Rhemtulla and Little 2013), which resulted in the imputation of large sections of our data. This limitation is not present in our EFAs, which had a much larger sample size of 1309 and involved no data imputation. Likewise, our samples for the CFAs of our modified factor structure in a novel mixed sample and PEER-only sample did not involve multiple imputation or planned missingness. However, they both fell short of 500 participants (N = 433 and N = 280, respectively).

All of our mixed samples (used for Steps 1–5 and 7) contained low levels of academic, racial, and gender diversity. In particular, our samples contain a majority of students identifying as female. While women do currently comprise approximately half of the undergraduate STEM population and these percentages are higher in the life sciences (National Science Board 2018), female students are still likely overrepresented in our sample. While we were able to conduct a separate fit analysis for PEER students, the racial and ethnic diversity of the other samples overall was not completely representative of national trends (U.S. Department of Education, 2012) and there may be finer-grained variations between students from different racial and ethnic groups that our data are not able to elucidate. In addition, in our PEER analysis Asian students were treated as non-PEERs based on NSF’s definition of Asian as not underrepresented in STEM. While this is true for the broad category, it does not take into account different Asian subgroups (e.g., Korean, Vietnamese) which may be underserved and excluded in STEM. If the goal is to assess interventions which would target underserved populations in STEM, it is especially important that assessment measures, such as the PFAI, accurately assess members of all underserved populations. Our investigation of the modified PFAI fit for PEERs begins this work, but only scratches the surface. Future studies of the utility of the modified PFAI should consider nuances among PEER groups and other types of underserved groups (e.g., first-generation students). In addition, our sample contained a majority of Biology and Chemistry students and did not represent as many students from other STEM disciplines (e.g., Physics, Geoscience, Computer Science, Psychology). This should be taken into account when interpreting the results of this work.
Also, a significant majority of participants across all samples reported pursuing a STEM major (e.g., Biology, Chemistry, Engineering). While the language modifying the PFAI is not specific to STEM majors and simply asks respondents to consider their responses to failure and challenges within STEM contexts, which may be equally applicable to STEM majors and students pursuing other majors who enroll in STEM classes to fulfill graduation requirements, it is nonetheless possible that the reason for enrolling in the course influences students’ goals within the STEM context and affects their responses to the PFAI. Future work should more carefully consider this possibility.

Finally, none of these samples were randomly selected. Participation was voluntary, with announcements of the research opportunity disseminated by instructors who value good pedagogy and may have previously encouraged more adaptive outcomes (like lower FF) in their classrooms. This, combined with possible self-selection of the most motivated or achievement-driven students among these classrooms, may have biased our participant group. However, our concerted efforts to collect data across multiple disciplines and multiple institutions representing diverse contexts may have partially mitigated this selection bias.

Discussion

The aim of this study was to evaluate, revise, and present a modified version of an existing instrument, the PFAI, for STEM undergraduate populations. This work is particularly important since prior evidence suggests that FF may contribute to STEM student procrastination (Onwuegbuzie, 2004; Zhang et al., 2018), threaten motivation (Ceyhan and Tillotson 2020), and even lead to attrition from STEM (Nelson et al., 2019). Our results support the modification of the original version of the PFAI to effectively measure STEM-specific FF. Our analyses supported a more parsimonious reduced scale: fifteen items as opposed to twenty-five items and four factors corresponding to different dimensions as opposed to five. In addition, we found support for the hypothesis that STEM-specific items provide the best fit for STEM undergraduate students. Notably, our reduced, STEM-specific scale functions well for both PEER and non-PEER samples and has good face validity. We also present evidence that our reduced, STEM-specific scale estimates different levels of FF in STEM student samples than the original Conroy measure—a finding supporting the importance of the scale’s revision and modification. Our results can be used to guide the use and interpretation of our new STEM-specific version of the PFAI within STEM undergraduate contexts.

A reduced, more parsimonious, version of the PFAI

Shorter, more parsimonious scales are generally preferred as they help to mitigate survey fatigue, improve response rates, and increase measure accuracy (reviewed in Peytchev and Peytcheva 2017). Based on our factor analyses, we were able to drop ten of Conroy’s original twenty-five items, including one entire dimension (FDSE) from the measure, resulting in a shorter, more parsimonious, scale of fifteen items. Our final best-fitting model of the PFAI specifically includes the FUF, FIOLI, FUIO, and FSE scales. We assert that Conroy’s (2001) original definitions of these dimensions continue to be appropriate for use in STEM populations since our cognitive interviews indicated that the items retained in the scale had reasonable face validity.

After our final examination of the EFA, the FDSE dimension proposed by Conroy et al. (2001) did not emerge among the responses of STEM undergraduates. This may mean that, within STEM academic contexts, undergraduates are not worried about damage to their self-estimate as a result of failure. However, this is not well supported in the literature, which implies that students who identify with a field of study may experience lower self-efficacy as a result of STEM failures (Bandura et al., 1999; Pajares 2005). Alternatively, it could suggest that the current PFAI items for this dimension (e.g., “When I am failing, I am afraid that I may not have enough talent.”) do not accurately articulate threats to students’ self-estimate within STEM contexts. Indeed, responses to cognitive interviews support this latter view, as students expressed confusion over considering “talent” in regard to STEM, as opposed to a more arts-based environment. Several studies provide evidence that people have different views of whether “talent” or similar attributes such as “brilliance” are determinants of success in certain fields (Leslie et al. 2015; Storage et al. 2016). It could be that, for the STEM fields included in this study, “talent” is not seen as a determinant of failure or success, and therefore, it did not make sense when included in some items. However, it is of note that one item that was originally on the FDSE factor (“When I am failing, I blame my lack of talent”) loaded onto the FUF factor based on STEM students’ responses. So, while some students were uncertain if “talent” was the right word to use when discussing science, their responses to the survey nevertheless indicate that feeling as if they possess a lack of talent in STEM contexts is linked to future uncertainty. 
Additional studies could address whether STEM students do, or do not, experience fear of devaluing their self-estimate when experiencing STEM failures and how students might describe this using words other than “talent” in order to generate potential new items within this dimension.

Interestingly, while all of Conroy’s other proposed dimensions still emerged from students’ responses, we noted that items dropped from the scale often included items with particularly strong and direct wording (e.g., “When I am failing, I expect to be criticized by important others” or “When I am failing, I believe that everybody knows I am failing”). Responses to such questions may have been impacted by individuals’ tendency to, knowingly or unknowingly, underreport thoughts, feelings, or behaviors which run contrary to established social norms or are perceived to invade their privacy (Gnambs and Kaspar 2014). For example, Fisher (1993) showed that social desirability bias in survey responses was affected by direct versus indirect questioning. Indirect questions, which ask subjects to respond from the perspective of another person, alleviated social desirability bias while direct questions did not. In addition, research has found that bias tends to be enhanced when respondents view survey questions as sensitive or seeking to invade their privacy (Gnambs and Kaspar 2014; Krumpal 2013).

While the PFAI questions are not indirect, it could be that the most strongly worded PFAI questions do not load well onto factors because they more blatantly confront the respondent with constructs which are viewed as too personal or extreme to endorse. In addition, if respondents associate FF constructs with social norms and a related potential to generate personal discomfort or negative reactions, they could be less likely to endorse these beliefs (Fisher, 1993). Our cognitive interviews support these conclusions as students were often opposed to strongly valenced words such as “hate,” words that carried specific connotations such as “talent,” and phrases that brought into question their personal agency or privacy such as “not in control” and “everybody knows.” Given our findings from cognitive interviews and EFAs, in addition to findings from other studies, we feel that removal of the ten items improves the modified PFAI scale not only because it makes it shorter, but also because it may avoid introducing biases as a result of emotional reactions to question wording.

A STEM-specific version of the PFAI