Abstract

Amidst the technological evolution shaping the landscape of education, this research critically examines the key factors influencing the design of language-teaching chatbots in Thai language classrooms. Employing a two-pronged methodology, our study examines chatbot design by engaging with a diverse participant pool of pre-service teachers, in-service teachers, and educators. The study included the chatbot design opinion survey, a validated scale assessing attitudes toward chatbot design, and semi-structured interviews with teachers, educators, and experts to offer qualitative insights. Our findings reveal five key factors influencing chatbot design: learner autonomy and self-directed learning, content and interaction design for language skill development, implementation and usage considerations, alternative learning approaches and flexibility, and content presentation and format. Concurrently, thematic analysis of the interviews yielded five overarching themes: enhancing interactive language learning, motivational learning experience, inclusive language learning journey, blended learning companion, and communicative proficiency coaching. These findings inform the development of effective chatbots for Thai language classrooms within the evolving landscape of technology-driven education.

Introduction

A chatbot, a computer program adept at simulating conversations with human users, particularly online, has become a pivotal component in the realm of artificial intelligence (AI). Its functionality is underscored by AI capabilities and natural language processing (NLP) (Zumstein & Hundertmark, 2017), enabling it to comprehend and respond to user inquiries or prompts. The integration of AI in education has witnessed substantial growth and emerged as a key area of interest (Roos, 2018; Wiboolyasarin et al., 2024; Yuan, 2023). Among the myriad AI technologies employed to enhance teaching and learning activities, chatbot systems stand out prominently (Okonkwo & Ade-Ibijola, 2020).

In the contemporary landscape, where technological advancements dictate communication trends, the use of chatbots has gained momentum, especially in educational settings. These systems play a crucial role in augmenting student interaction, aligning perfectly with the prevalent reliance on online platforms. Given the widespread ownership of smartphones among higher education students, chatbot systems are often implemented as mobile web applications to facilitate learning (Okonkwo & Ade-Ibijola, 2021). Moreover, their versatility allows integration into various platforms such as websites or messaging applications, offering automated assistance, answering queries, performing tasks, and engaging in meaningful conversations. As a result, chatbots significantly enhance user experiences, streamline interactions, and automate specific functions, making them increasingly prevalent in user support, information retrieval, and various other online services.

Extensive research explores the application of chatbots in education, primarily focusing on their effectiveness in language learning (Annamalai et al., 2023a, 2023b; Green & O’Sullivan, 2019; Jeon, 2024; Yuan et al., 2023; Yuan, 2023) and student motivation (Laeeq & Memon, 2019; Liu et al., 2023; Yin et al., 2021; Zhang et al., 2023c). However, a critical gap exists in the literature. Prior research often relies on fragmented literature reviews that fail to comprehensively analyze existing knowledge on educational chatbot design principles. Additionally, these reviews frequently encompass various educational approaches while neglecting broader design considerations beyond pedagogy (Chen et al., 2020).

While previous studies have examined the efficacy of chatbots in language learning and motivation (Annamalai et al., 2023a), a crucial gap exists in comprehensive qualitative investigations that delve specifically into the design of chatbots for secondary school students within language classrooms. Furthermore, there is a lack of research considering how chatbots are designed for both mainstream languages like English and less commonly taught languages like Thai. Moreover, as highlighted in review studies by Hew et al. (2023) and Hwang and Chang (2023), chatbot research in education has primarily employed quantitative approaches in design.

To design more personalized and engaging chatbot-based language learning experiences, gaining a deeper understanding of how chatbots can effectively support users in language classrooms is paramount. This necessitates a convergent mixed methods approach (Creswell & Creswell, 2022) to deepen our contextualized understanding of chatbot design. Thus, this study aims to address this research gap by exploring users’ experiences with chatbots for language learning. This exploration seeks to identify the factors influencing the design of language-teaching chatbots in the context of language classrooms and how to incorporate these factors to optimize their effectiveness and student engagement.

Our research questions are specifically formulated to address this need:

- Research Question 1: What factors influence the design of language-teaching chatbots in the context of language classrooms?

- Research Question 2: How should chatbots be designed for the language classroom to optimize their effectiveness and student engagement?

Literature review

Chatbots, conversational computer programs powered by AI and NLP, are gaining traction in language education. These educational chatbots leverage NLP and machine learning algorithms to understand and respond to user inputs, offering an interactive learning tool for language acquisition (Liu et al., 2022; Yuan et al., 2023). This technology boasts several advantages, including immediate feedback, personalized learning experiences, and consistent practice opportunities.

Recognizing the pedagogical potential of chatbots, researchers and educators are actively exploring their application in educational settings. Studies suggest positive student attitudes towards chatbot integration due to features like instant feedback, human-like interactions, and reduced anxiety (Al-Shafei, 2024; Blut et al., 2021; Guo et al., 2023; Kohnke, 2022; Zhang et al., 2023, 2023b). Moreover, empirical evidence demonstrates positive impacts on student learning outcomes (Baha et al., 2024; Guo et al., 2024; Jasin et al., 2023; Liu et al., 2022; Yuan et al., 2023).

For example, Liu et al. (2022) investigated the affordances of an AI-powered chatbot designed as a book talk companion. Their study explored the role of chatbot interaction in fostering student engagement and reading interest. The findings revealed a strong student-perceived social connection with the chatbot, evidenced by stable situational interest during conversations. Additionally, a positive correlation emerged between perceived social connection and reading engagement. Similarly, Yuan et al. (2023) found that students in an AI-assisted learning group demonstrated significant improvement in oral English proficiency and communication willingness compared to a control group using conventional methods. These studies highlight chatbots’ potential as powerful facilitators in language learning, not only enhancing proficiency but also creating supportive, non-judgemental practice environments that reduce language anxiety and boost student confidence.

While research has examined learner attitudes and the impact of chatbot use on learning outcomes (Zhang et al., 2023, 2023c), the aspect of user experience informing chatbot design remains underexplored. Although Annamalai et al. (2023b) explored user experiences with chatbots for English language learning, revealing factors influencing positive and negative experiences, the study, like many others, overlooks the design principles underpinning chatbot effectiveness (Kuhail et al., 2023). Many studies focus on specific functionalities like implementing the 5E learning model (Liu et al., in press) and micro-learning (Yin et al., 2021), or incorporating scaffolding strategies (Winkler et al., 2020), but fail to delve into the broader design considerations that inform these functionalities. This lack of focus on design principles hinders the development of truly effective educational chatbots.

Drawing attention to these foundational elements, Martha and Santoso (2019) emphasized the role and appearance of the chatbot as crucial in design, similar to Okonkwo and Ade-Ibijola (2021) and Winkler and Söllner (2018), who posit that chatbot design directly affects its effectiveness in education. A comprehensive understanding of these design principles is essential for educators and practitioners aiming to develop chatbots that are not only functionally sound but also pedagogically engaging for students.

Method

Our research methodology involved a two-pronged approach: an online survey conducted at the project’s outset, encompassing pre-service teachers, in-service teachers, and educators from both basic and higher education communities, and semi-structured interviews with selected teachers, educators, and experts aligned with our research questions (RQs). This mixed methods approach was essential to provide a comprehensive understanding of chatbot design for language learning, combining quantitative and qualitative analyses to capture a full spectrum of insights.

Participants

For our investigation, we engaged a diverse population in the field of language education in Thailand, including pre-service teachers, in-service teachers, and educators. Collaboration with colleagues and acquaintances teaching in the Faculty of Education facilitated access to our sample of student teachers. The demographic breakdown revealed a majority of female participants (n = 564, 75.40%) and a significant representation of male participants (n = 184, 24.60%). Most participants identified as pre-service teachers (n = 345, 46.12%), with others being in-service teachers (n = 246, 32.89%) and educators (n = 157, 20.99%) in Thailand. Geographically, participants were distributed across various regions, with Bangkok and surrounding areas (n = 201, 26.87%) having the highest affiliation, followed by the Central Region (n = 188, 25.13%), the Northern Region (n = 158, 21.12%), and smaller numbers in the Northeast (n = 102, 13.64%), Eastern (n = 67, 8.96%), and Southern (n = 32, 4.28%) Regions. Participants exhibited a broad spectrum of learning or teaching experience, with 386 (51.60%) having 1–9 years and 362 (48.40%) having 10 years or more in the field. Regarding technology ownership, participants possessed various devices, including tablets (Android: n = 58, 7.75%; iOS: n = 446, 59.63%) and smartphones (Android: n = 280, 37.43%; iOS: n = 472, 63.12%), along with other miscellaneous devices (n = 52, 6.95%). Participants reported using the LINE application on both tablets (Android: n = 48, 6.42%; iOS: n = 416, 55.61%) and smartphones (Android: n = 272, 36.36%; iOS: n = 456, 60.96%). Preliminary exploration into participants’ daily digital habits revealed diverse patterns, with a majority accessing devices for more than 5 h daily (n = 432, 57.75%), while others reported varying durations of usage.

To recruit participants for interviews, we employed two methods: Firstly, notices in education groups within the LINE application aimed to reach a diverse set of individuals from various roles within the education sector. Secondly, personal invitations to colleagues in Teacher Education programs ensured the inclusion of individuals with specialized knowledge and expertise. Through these methods, we recruited 20 interviewees, consisting of 10 teachers, 5 educators, and 5 experts. This targeted approach allowed us to gain insights from individuals with diverse perspectives and experiences related to the use and design of chatbots in language education. Prior to interviews, participants received project summaries, consent forms, and encouragement to ask questions. Those not previously surveyed completed a pre-interview questionnaire. The interviews were conducted online via Zoom, audio-recorded, and transcribed for analysis.

Questionnaire development

The Chatbot Design Opinion Survey (CDOS) is a 50-item scale designed to measure responses toward a chatbot’s design on a five-point scale, ranging from “Strongly agree” to “Strongly disagree.” Derived from previous literature in relevant fields (Annamalai et al., 2023a, 2023b; Chen et al., 2020; Guo et al., 2023; Yuan et al., 2023; Zhang et al., 2023c), the question items were translated into Thai to ensure response validity, following recommended steps of forward translation, backward translation, reconciliation, and pretesting (Beaton et al., 2000). Content validity was evaluated by a panel of 10 subject matter experts specializing in language teaching (n = 5) and instructional technology (n = 5), selected based on specific academic criteria, including a doctorate in a relevant field and ten or more years of teaching experience with pre-service teachers. A package containing a cover letter, questionnaire, and content validity survey was distributed to the raters. Content validity was quantitatively assessed using two forms of content validity index (CVI): item-level CVI (I-CVI) and scale-level CVI (S-CVI). A prototype version of the questionnaire demonstrated good item-specific content validity (all I-CVI values of 1.00) and strong overall content validity (S-CVI/UA = 1.00 and S-CVI/Ave = 1.00). To assess the reliability of the questionnaire, pretesting was conducted on a representative sample of 30 respondents, who were excluded from the main study. Cronbach’s alpha for internal consistency was calculated on this pretest sample. The resulting reliability level was 0.971, indicating relatively homogeneous items (Abbady et al., 2021; Abraham & Barker, 2015; Wiboolyasarin et al., 2023), with no omissions.
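The validity and reliability indices named above can be illustrated with a minimal sketch. It assumes the conventional procedure in which each expert rates item relevance on a 4-point scale and ratings of 3 or 4 count as "relevant"; the rating scale, the function names, and all data below are hypothetical and are not taken from the study.

```python
from statistics import variance


def item_cvi(ratings, relevant=(3, 4)):
    """I-CVI: proportion of experts who rate the item as relevant.

    Assumes a 4-point relevance scale where 3 and 4 mean 'relevant'.
    """
    return sum(r in relevant for r in ratings) / len(ratings)


def scale_cvi(rating_matrix):
    """Return (S-CVI/UA, S-CVI/Ave) for a list of per-item expert ratings.

    S-CVI/UA  = share of items with universal agreement (I-CVI == 1.0).
    S-CVI/Ave = mean of the I-CVI values.
    """
    i_cvis = [item_cvi(item) for item in rating_matrix]
    ua = sum(v == 1.0 for v in i_cvis) / len(i_cvis)
    ave = sum(i_cvis) / len(i_cvis)
    return ua, ave


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical panel: 10 experts rate each of 50 items as 3 or 4.
expert_ratings = [[4, 4, 3, 4, 4, 3, 4, 4, 4, 3] for _ in range(50)]
print(scale_cvi(expert_ratings))  # (1.0, 1.0)

# Hypothetical pretest data: 5 respondents x 3 items on a five-point scale.
pilot = [[5, 4, 5], [4, 4, 4], [2, 3, 2], [3, 3, 3], [1, 2, 1]]
print(round(cronbach_alpha(pilot), 3))
```

When every expert rates every item as relevant, both scale-level indices equal 1.00, which is the pattern reported for the prototype questionnaire.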

Semi-structured interviews

In parallel with the survey, we developed research materials, such as consent forms, project summary sheets, initial information request forms, and interview templates for teachers, educators, and experts. Questions for educators and experts covered chatbot descriptions, design factors, features, learning activities, modifications, and experiences, while teacher questions focused on the specific design of chatbots for secondary school students and implementing chatbots in language classrooms. These materials were submitted to the Ethics Committee for approval. Our RQs provided the lens for data analysis, which adopted an inductive thematic approach (Braun & Clarke, 2006, 2021). Two team members independently analyzed the interviews, identifying recurring themes and subthemes. The article focuses on a core subset of the data, presenting themes, subthemes, and their frequencies. Design considerations and principles for chatbot development were extrapolated from these subthemes, establishing connections with quantitative data, and are consolidated in Table 4 within the “Implications and Suggestions” section.

Data collection

The initial phase involved constructing and administering an online survey using the Microsoft Forms application. The survey served a dual purpose: firstly, to gather data on user needs and factors influencing language learning experiences relevant to designing a language classroom chatbot, and secondly, to identify potential participants for follow-up interviews. A total of 748 questionnaires were completed, with 20 respondents expressing interest in participating in interviews. Concurrently, we prepared research materials specific to different participant groups: teachers, educators, and experts. These materials included consent forms, project summaries, initial information request forms, and interview templates tailored to each group’s expertise. The survey data informed the development of interview questions focusing on chatbot descriptions, design factors, features, learning activities, potential modifications, and user experiences.

Data analysis rationale

The rationale for employing a mixed methods approach lies in the complementary strengths of quantitative and qualitative methods. The quantitative analysis, specifically exploratory factor analysis (EFA), provided a robust statistical framework for identifying underlying factors influencing chatbot design. EFA helped in extracting significant patterns from the survey data, which were crucial for understanding broad trends and correlations within the dataset. The reliability of the results is underscored by the use of rigorous statistical techniques, such as the Kaiser–Meyer–Olkin (KMO) measure and Bartlett’s test of sphericity, which confirmed the suitability of the data for factor analysis.

Conversely, the qualitative analysis, conducted through thematic analysis of semi-structured interviews, offered in-depth insights into individual experiences and perceptions that quantitative methods alone could not capture. Thematic analysis allowed us to explore the nuanced aspects of user interactions with chatbots, uncovering detailed and context-specific factors that impact the design and implementation of chatbot systems in educational settings.

By integrating EFA and thematic analysis, we ensured a comprehensive understanding of chatbot design principles. The quantitative data offered a macro-level view, identifying key factors and their interrelationships, while the qualitative data provided a micro-level understanding of personal experiences and contextual influences. This mixed methods approach enabled us to triangulate findings, enhancing the validity and reliability of our results and offering a well-rounded perspective on the effective design of chatbots for language learning. This robust methodological integration ensures that the resulting design principles are both statistically grounded and richly informed by user experiences, making them highly relevant and practical for implementation in educational contexts.

Results

Exploratory factor analysis

This study aimed to identify key factors influencing the design of chatbots for language classrooms. In a survey study, an EFA was conducted on 50 items using principal components analysis (PCA) with varimax rotation, Kaiser normalization, and an eigenvalue cut-off of 1. This analysis revealed five distinct factors accounting for 45.87% of the variance. Item loadings exceeding 0.40, the absence of cross-loadings, and the number of items per factor were considered for interpretation (Costello & Osborne, 2005).
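The eigenvalue cut-off can be illustrated with a short sketch of Kaiser's criterion. For a correlation matrix the eigenvalues sum to the number of items, so each eigenvalue divided by that total gives the share of variance a component explains. The function name and the eigenvalues below are hypothetical and are not the study's values.

```python
def kaiser_retention(eigenvalues, cutoff=1.0):
    """Apply Kaiser's criterion to a list of eigenvalues.

    Returns the number of components with eigenvalue > cutoff and the
    percentage of total variance those components explain.
    """
    total = sum(eigenvalues)  # equals the item count for a correlation matrix
    retained = [ev for ev in eigenvalues if ev > cutoff]
    explained_pct = 100 * sum(retained) / total
    return len(retained), explained_pct


# Hypothetical eigenvalues for a 5-item scale (they sum to 5).
n_components, pct = kaiser_retention([3.0, 1.5, 0.3, 0.1, 0.1])
print(n_components, pct)
```

In practice the eigenvalues would come from the statistical package used for the PCA; the sketch only shows how the cut-off and the variance percentage relate.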

Prior to the EFA, univariate descriptive analysis confirmed the suitability of the data for factor analysis. The sample size of 748 yielded a subject-to-item ratio of roughly 15:1, above the recommended 10:1 threshold. Additionally, the KMO measure of sampling adequacy was 0.956, indicating excellent sampling adequacy. Bartlett’s test of sphericity was statistically significant (χ² = 12,409.207, df = 1225, p < 0.001), further confirming the appropriateness of using factor analysis (Hair et al., 2019).
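As a rough illustration of Bartlett's test of sphericity, the sketch below computes the test statistic from a correlation matrix using the standard formula χ² = −(n − 1 − (2p + 5)/6) · ln|R| with df = p(p − 1)/2, where n is the sample size and p the number of items. The function names and the toy matrix are hypothetical; the p-value step is omitted to keep the sketch within the standard library.

```python
import math


def log_determinant(matrix):
    """Log of |det| via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    log_det = 0.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        log_det += math.log(abs(a[col][col]))
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return log_det


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square statistic, degrees of freedom)."""
    p = len(corr)
    chi_square = -(n_obs - 1 - (2 * p + 5) / 6) * log_determinant(corr)
    df = p * (p - 1) // 2
    return chi_square, df


# Toy 2-item correlation matrix with r = 0.5 and 100 respondents.
print(bartlett_sphericity([[1.0, 0.5], [0.5, 1.0]], n_obs=100))

# Degrees of freedom and subject-to-item ratio for 50 items, 748 respondents.
print(50 * 49 // 2, 748 / 50)
```

For a 50-item scale the formula gives df = 1225, which matches the degrees of freedom reported above, and 748 respondents over 50 items gives a ratio of 14.96.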

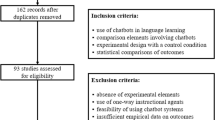

To investigate factor retention, a PCA extraction approach with varimax rotation was used on the 50-item questionnaire. Initially, the EFA generated a model with six components that account for 65.99% of the shared variance and had eigenvalues greater than one, which was sufficient for further analysis based on Kaiser’s criterion. Item communalities (retaining items larger than 0.40), factor loadings (retaining items higher than 0.40), and the number of items per factor (retaining factors with at least three items) were examined to evaluate items and factors (Hair et al., 2019). Ultimately, no items were deleted due to low communalities or insufficient factor loadings, but the sixth factor was eliminated because it contained no items with loadings above 0.40, as presented in Table 1.
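The retention rules described here (loadings above 0.40, no cross-loadings, at least three items per factor) can be expressed as a small sketch. The function name, the data layout, and the loadings are hypothetical and serve only to show how the rules interact; they are not the study's rotated solution.

```python
def apply_retention_rules(loadings, threshold=0.40, min_items=3):
    """Sort items by the retention rules applied to a rotated loading matrix.

    loadings maps an item label to its list of loadings, one per factor.
    Returns (retained_factors, cross_loaded_items, weak_items), where
    retained_factors maps a factor index to its item labels.
    """
    factors = {}
    cross_loaded = []
    weak = []
    for item, row in loadings.items():
        salient = [i for i, value in enumerate(row) if abs(value) > threshold]
        if not salient:
            weak.append(item)            # no loading above the threshold
        elif len(salient) > 1:
            cross_loaded.append(item)    # loads on more than one factor
        else:
            factors.setdefault(salient[0], []).append(item)
    # A factor with too few salient items is dropped.
    retained = {f: items for f, items in factors.items() if len(items) >= min_items}
    return retained, cross_loaded, weak


# Hypothetical two-factor loadings for six items.
toy_loadings = {
    "q1": [0.72, 0.10],
    "q2": [0.65, 0.05],
    "q3": [0.58, 0.20],
    "q4": [0.12, 0.61],
    "q5": [0.45, 0.48],
    "q6": [0.10, 0.22],
}
print(apply_retention_rules(toy_loadings))
```

In the toy data the second factor is dropped because only one item loads on it uniquely, which mirrors the logic by which a component without salient items is eliminated.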

The final analysis of the 50-item scale using EFA confirmed the presence of the five factors initially identified. Furthermore, a clear elbow point in the scree plot (Fig. 1) supported the extraction of five significant factors. These extracted factors represent broad categories with high relevance to chatbot design for language learning, as detailed in Table A-1 of Appendix 1. Following the analysis, we assigned descriptive labels to the factors to enhance clarity, as follows:

Factor 1: learner autonomy and self-directed learning

This factor underscores the importance of features that empower learners and foster a sense of ownership over their learning journey. Immediate feedback allows learners to self-assess and adjust their strategies, while personalized learning paths cater to individual needs and learning styles. Learners gain agency and motivation through the flexibility to choose content, the order of engagement, and the level of difficulty, further promoting self-directed learning. Additionally, self-paced learning empowers learners to access the chatbot and materials at their convenience, accommodating individual schedules and learning styles.

Factor 2: content and interaction design for language skill development

This factor emphasizes how content and interaction elements are designed to actively promote the development of various language skills. The chatbot targets specific skills like listening, speaking, reading, and writing through diverse activities and exercises. Learners actively engage with the chatbot through interactive elements like responding to prompts, participating in dialogues, and completing tasks, fostering skill development through practice and application. The use of multimedia elements like audio, video, and images enhances engagement and caters to diverse learning styles, while aligning content with real-life contexts makes learning relevant and applicable to practical scenarios.

Factor 3: implementation and usage considerations

This factor addresses practical considerations for integrating chatbots effectively within the learning environment. Usage guidelines specify aspects like recommended session durations, synchronous or asynchronous completion of activities, and specific instructions for using the chatbot. Determining the language used in the chatbot content, whether solely the target language or a combination with the learners’ native language, is crucial for comprehension and accessibility. Defining the intended role of the chatbot within the broader curriculum is essential, whether as the primary learning platform, a supplementary resource, or for specific phases like introductions, lesson delivery, or summaries.

Factor 4: alternative learning approaches and flexibility

This factor highlights features that cater to diverse learning styles and preferences, ensuring an inclusive and adaptable learning experience. Learning time flexibility allows learners to extend sessions beyond predetermined limits if needed, accommodating individual learning paces and preferences. Asynchronous learning empowers learners to engage with materials and activities at their convenience, independent of fixed schedules or synchronous sessions. Additionally, providing diverse content formats and options, such as incorporating the International Phonetic Alphabet (IPA) alongside the target language, caters to different learning styles and addresses specific needs.

Factor 5: content presentation and format

This factor focuses on the various ways information is presented within the chatbot, catering to diverse learning preferences and enhancing content delivery. Descriptive videos provide visual learners with engaging and informative content that complements textual explanations. Clear and concise textual explanations ensure comprehension for learners who prefer reading-based learning. Combining audio narration with visual or textual content creates a more immersive and engaging experience for auditory learners.

Thematic analysis

To gain deeper insights and validate the findings from the EFA, we conducted semi-structured interviews with a diverse group of 20 participants. To ensure a comprehensive range of perspectives on chatbot use and design in language education, a targeted recruitment approach was employed. This strategy involved posting project notices within education groups on the LINE application, reaching a broad audience of educators familiar with online platforms. Additionally, personal invitations were extended to colleagues in Teacher Education programs, guaranteeing participation from individuals with specialized knowledge and expertise in language pedagogy. Prior to the interviews, all participants received a project summary, consent form, and the opportunity to ask clarifying questions about the study’s objectives. The interviews themselves were conducted online via Zoom, audio-recorded for later transcription and analysis.

Thematic analysis (Wiboolyasarin & Jinowat, 2024) was employed to identify recurring themes, patterns, and insights within the qualitative interview data from pre-service teachers, in-service teachers, and educators. This approach, utilizing an inductive or thematic coding scheme (Braun & Clarke, 2006, 2021), provided a comprehensive understanding of participants’ perspectives on chatbot design for language learning. Two team members independently analyzed the interviews, identifying recurring themes and subthemes, as illustrated in Table 2.

The triangulation of quantitative (EFA) and qualitative (thematic analysis) data provides a holistic understanding of the factors influencing chatbot design for language learning. The EFA results align with the thematic analysis, reinforcing the importance of learner autonomy, effective content and interaction design, practical implementation considerations, flexibility, and diverse content presentation and formats. These findings are further detailed in Table 3, which presents a coding scheme derived from the thematic analysis of semi-structured interviews. This coding scheme categorizes user perspectives into specific design principles that can inform the development of effective chatbots for language learning.

Leveraging a convergent mixed methods approach (Creswell & Creswell, 2022), we sought to integrate the quantitative statistical results with the qualitative textual data to achieve a comprehensive understanding of chatbot design. This entailed merging the five factors identified through EFA with the five themes and 16 subthemes derived from the qualitative analysis. Figure 2 illustrates the data merging process, which considered the relevance and interrelationships between these elements.

The design considerations for a language-teaching chatbot are intricately interconnected, emphasizing a holistic and learner-centric approach to language learning. This ensures a more engaging and effective learning experience. The alignment of the five identified factors with the overarching themes is as follows:

Factor 1: Learner Autonomy and Self-Directed Learning aligns with Theme 1: Enhancing Interactive Language Learning.

- Subtheme 1.1: Immediate Interaction and Feedback underscores the significance of real-time feedback in aligning with learner autonomy by enabling self-assessment and strategy adjustments.

- Subtheme 1.3: Adaptability to User Needs supports personalized learning paths, granting learners autonomy in selecting content, practicing skills in their preferred order, and adjusting difficulty levels.

- Subtheme 1.4: Promotion of Fun and Additional Learning Resources fosters self-directed learning by offering engaging features and encouraging exploration of additional resources, contributing to a more enjoyable and self-driven experience.

Factor 2: Content and Interaction Design for Language Skill Development further aligns with Theme 1.

- Subtheme 1.2: Enhanced Communication Skills highlights the chatbot’s role in actively engaging learners through practical interaction and dialogue, promoting communication skill development.

Factor 3: Implementation and Usage Considerations connects with Theme 3: Inclusive Language Learning Journey.

- Subtheme 3.1: User-Friendly Design and Accessibility addresses practical considerations for effective chatbot integration, emphasizing usability and accessibility for all learners.

- Subtheme 3.2: Relevance to Digital Citizen Life Skills emphasizes defining the chatbot’s role within the curriculum to ensure alignment with broader educational objectives and digital skills.

- Subtheme 3.3: Gradual Difficulty Progression and Continuous Story suggests the chatbot’s ability to provide lessons with increasing difficulty and a continuous narrative, which can maintain user engagement.

- Subtheme 3.4: Multilingual Learning Options showcases the chatbot’s inclusivity by offering learning in multiple languages, catering to a diverse audience.

Factor 4: Alternative Learning Approaches and Flexibility integrates with Theme 4: Blended Learning Companion.

- Subtheme 4.1: Self-Learning Outside of the Classroom supports flexible learning time and asynchronous engagement, providing options for self-paced and independent learning outside of class.

- Subtheme 4.2: Integration with Classroom Activities positions the chatbot as a versatile companion, supporting both in-class and independent learning in a flexible and integrated manner.

Factor 5: Content Presentation and Format influences both Theme 2: Motivational Learning Experience and Theme 5: Communicative Proficiency Coaching.

- Subtheme 2.1: Stimulation of Interest and Motivation emphasizes the chatbot’s ability to spark curiosity and maintain user engagement through diverse content presentation and formats.

- Subtheme 2.2: Promotion of Self-Development underscores the chatbot’s role in facilitating users’ learning and improvement through engaging content.

- Subtheme 2.3: Problem-Solving and Simulation offers opportunities for users to practice problem-solving skills through interactive simulations.

- Subtheme 2.4: Positive Feedback in Learning reinforces the chatbot’s role in providing positive reinforcement and encouragement, motivating users to persist in their learning journey.

- Subtheme 5.1: Emphasis on Communication Skills highlights how the chatbot utilizes various formats, such as videos, text, and audio, to support communication skill development.

- Subtheme 5.2: Immediate Feedback and Diverse Assessment Options underscores the chatbot’s ability to provide immediate feedback and diverse assessment methods to enhance both learning and communication proficiency.
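The factor-to-subtheme alignment listed above can also be captured as a simple lookup structure, which makes it easy to check that all 16 subthemes are assigned exactly once. The sketch below is only an illustrative encoding: the factor labels and subtheme numbers come from the alignment above, while the variable and function names are hypothetical.

```python
# Subtheme numbers assigned to each EFA factor, as listed in the alignment.
FACTOR_TO_SUBTHEMES = {
    "Factor 1: Learner Autonomy and Self-Directed Learning": ["1.1", "1.3", "1.4"],
    "Factor 2: Content and Interaction Design for Language Skill Development": ["1.2"],
    "Factor 3: Implementation and Usage Considerations": ["3.1", "3.2", "3.3", "3.4"],
    "Factor 4: Alternative Learning Approaches and Flexibility": ["4.1", "4.2"],
    "Factor 5: Content Presentation and Format": ["2.1", "2.2", "2.3", "2.4", "5.1", "5.2"],
}


def subthemes_by_theme(mapping):
    """Regroup the subthemes by theme number (the part before the dot)."""
    themes = {}
    for subthemes in mapping.values():
        for code in subthemes:
            themes.setdefault(code.split(".")[0], []).append(code)
    return {theme: sorted(codes) for theme, codes in sorted(themes.items())}


all_codes = [code for codes in FACTOR_TO_SUBTHEMES.values() for code in codes]
print(len(all_codes), len(set(all_codes)))  # 16 16
print(subthemes_by_theme(FACTOR_TO_SUBTHEMES))
```

Regrouping by theme shows that Theme 1 is shared by Factors 1 and 2, while Factor 5 spans Themes 2 and 5.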

Discussion

Our study delves into the intricate design considerations for language-teaching chatbots, employing a comprehensive mixed-methods approach. The quantitative phase, leveraging EFA, identified five key factors encapsulating crucial aspects of effective chatbot design for language learning. Subsequent thematic analysis of qualitative data from semi-structured interviews complemented these findings, offering a holistic exploration of participants’ perspectives and experiences regarding language-teaching chatbot design.

The EFA yielded five distinct factors, each significantly contributing to the observed data variability. Factor 1, labeled “Learner Autonomy and Self-Directed Learning,” accentuates features empowering learners to take ownership of their educational journey. Immediate feedback, personalized learning paths, and self-paced learning emerged as vital components, all aligning harmoniously with the overarching theme of “Enhancing Interactive Language Learning.” By facilitating active knowledge exploration and construction (Chang et al., 2022), chatbots designed with this ethos allow students to comprehend instructional materials (Fidan & Gencel, 2022; Kohnke, 2023b) and receive personalized feedback, ultimately augmenting overall learning effectiveness (Grossman et al., 2019). Prior studies (Vazquez-Cano et al., 2021; Wu et al., 2020; Yin et al., 2021) have underscored the positive impact of chatbots on self-paced learning, offering effective feedback, and improving learning performance, highlighting the importance of real-time feedback and user-adaptive features within a self-directed learning framework.

Factor 2, “Content and Interaction Design for Language Skill Development,” actively promotes the development of diverse language skills through interactive activities. Recognized as interactive agents delivering instantaneous responses (Okonkwo & Ade-Ibijola, 2020; Smutny & Schreiberova, 2020), chatbots play a pivotal role in enriching human interaction, especially in a technology-driven world heavily reliant on online platforms (Okonkwo & Ade-Ibijola, 2021). Learners can engage with chatbots through interactive elements, seamlessly aligning with the overarching theme of “Enhancing Interactive Language Learning.”

Chatbots excel at providing standardized information instantly, covering course contents (AlKhayat, 2017; Cunningham-Nelson et al., 2019; Divekar et al., 2022), responding to student queries (Essel et al., 2022; Jasin et al., 2023), and serving as instructional partners (Fryer et al., 2019; Kim et al., 2019; Liu et al., 2022; Yang et al., 2022). Beyond enhancing student engagement and support, these systems significantly alleviate the administrative workload of instructors, allowing them to concentrate on curriculum development and research (Cunningham-Nelson et al., 2019). Despite the prevalence of various modes of interaction in education, chatbot technology uniquely facilitates individualized learning experiences, offering students a more personalized and engaging learning environment (Abbas et al., 2022; Benotti et al., 2017; Studente et al., 2020). This factor underscores the significance of practical interaction, dialogue, and multimedia elements in fostering skill development and ensuring learning remains relevant and applicable to real-life scenarios.

Factor 3, “Implementation and Usage Considerations,” pragmatically addresses chatbot integration within the learning environment, resonating with the theme of an “Inclusive Language Learning Journey.” It emphasizes user-friendly design, accessibility, gradual difficulty progression, and the provision of multilingual learning options. Prior research has lauded chatbots for facilitating swift access to educational information (Ciupe et al., 2019; Murad et al., 2019; Wu et al., 2020). This expeditious access not only saves time but also maximizes students’ learning potential and achievements (Clarizia et al., 2018; Murad et al., 2019; Ranoliya et al., 2017). Furthermore, chatbots exhibit advantages as language partners, enriching the learning process for L2 students (Guo et al., 2022). Practical considerations, including session durations, language choices, and the chatbot’s role within the curriculum, play a pivotal role in ensuring effective integration, enhancing usability and relevance to optimize the overall language learning experience.

Factor 4, “Alternative Learning Approaches and Flexibility,” emphasizes features tailored to diverse learning styles, ensuring an inclusive and adaptable learning experience. Chatbots’ 24/7 accessibility on learners’ personal mobile devices provides education at convenient times and locations. Information is delivered in manageable, bite-sized portions, a format well-suited to the fast-paced lives of contemporary students (Dokukinaa & Gumano, 2020). As advocated by Kohnke (2023a), an effective L2 instructional chatbot should inspire students to complete extracurricular assignments, enhance class preparedness, and enable studying at preferred times and locations. This aligns seamlessly with the theme of a “Blended Learning Companion,” highlighting the importance of flexible learning schedules, asynchronous participation, and integration with both classroom and independent learning environments.

Factor 5, “Content Presentation and Format,” focuses on diverse methods of presenting information, tailored to accommodate various learning preferences. Its impact extends to both the “Motivational Learning Experience” and “Communicative Proficiency Coaching” themes, underscoring varied content presentation and formats, immediate feedback mechanisms, and a range of assessment methods. The integration of rich media design, incorporating text, pictures, and videos, empowers students to choose preferred modes of engagement (Yin et al., 2021), fostering motivation in language learning. Previous research demonstrates the utility of chatbots in supporting language learning by delivering prompt feedback during conversations (Annamalai et al., 2023a, 2023b; Guo et al., 2022) and conducting language assessments (Jia et al., 2012). Incorporating these features ensures a dynamic and engaging language learning experience, aligning with the objectives of this factor.

Implications and suggestions

The integration of specific design principles within a language learning chatbot holds significant implications for enhancing pedagogical practices and learner outcomes. Each factor, as outlined in Table 3, contributes to a comprehensive framework for designing chatbots for language classrooms. Table 4 sets out the implications of these factors, together with suggestions for educators, instructional designers, and developers to further refine and apply these principles effectively.

Conclusions, limitations, and future directions

This study significantly advances the field of language learning chatbot design by offering a nuanced understanding of the pivotal factors influencing their effectiveness in classrooms. Our meticulous mixed-methods approach, combining quantitative and qualitative data, provides a comprehensive view for educators, designers, and researchers, empowering them to make informed decisions concerning the development and implementation of language-teaching chatbots.

As digital technology reshapes education, our research establishes a robust foundation for the intentional integration of chatbots into language learning environments. These chatbots can cultivate engaging, adaptive, and effective educational experiences for learners, aligning perfectly with the demands of contemporary language education. This research is particularly significant considering the ever-evolving landscape of instructional technologies and the growing need for innovative and effective language learning solutions.

Our empirical research, while insightful, encountered limitations in participant diversity. The perspectives primarily stemmed from a restricted pool of pre-service teachers, limiting the scope compared to a broader range that includes experienced teachers and educators. Expanding the pre-service teacher sample could have provided a more comprehensive understanding of user experiences from their unique standpoint. Additionally, the limited sample size might have hindered the depth of insights into the nuances of their experiences.

To address this limitation and enhance the robustness of our findings, future studies should incorporate a more extensive and diverse group of pre-service teachers. Longitudinal studies are also crucial to capture user experiences over extended periods, shedding light on evolving interrelationships between user learning and chatbot design.

Another critical limitation is that the proposed design considerations have not yet been evaluated. We plan to implement them in a future chatbot designed for secondary school students. This implementation, using a LINE Bot platform, will allow us to gather user feedback on the applicability and usefulness of the design considerations while assessing language improvement outcomes. This iterative approach will contribute to a more comprehensive and refined understanding of the dynamics between user experience and chatbot design.

Future research should also explore the integration of advanced AI capabilities, such as Generative AI (GenAI) and Large Language Models (LLMs), to further enhance the adaptability and interactivity of chatbots. The use of GenAI and LLMs can significantly impact the effectiveness and acceptance of chatbots in educational settings, as evidenced by recent research (Mahapatra, 2024; Tlili et al., 2023). These studies underscore the importance of carefully designing chatbot interactions to maximize educational benefits while minimizing potential drawbacks. By integrating these advanced AI technologies, future chatbots can provide more nuanced, context-aware responses, making the learning experience more engaging and effective. Continuous refinement of chatbot design based on user feedback and technological advancements will ensure these tools remain relevant and impactful in supporting language education.

Availability of data and materials

The data used in this study will be made available upon request.

References

Abbady, A. S., El-Gilany, A.-H., El-Dabee, F. A., Elsadek, A. M., ElWasify, M., & Elwasify, M. (2021). Psychometric characteristics of the of COVID stress scales-Arabic version (CSS-Arabic) in Egyptian and Saudi university students. Middle East Current Psychiatry, 28(14), 1–9. https://doi.org/10.1186/s43045-021-00095-8

Abbas, N., Whitfield, J., Atwell, E., Bowman, H., Pickard, T., & Walker, A. (2022). Online chat and chatbots to enhance mature student engagement in higher education. International Journal of Lifelong Education, 41(3), 308–326. https://doi.org/10.1080/02601370.2022.2066213

Abraham, J., & Barker, K. (2015). Exploring gender difference in motivation, engagement and enrolment behaviour of senior secondary physics students in New South Wales. Research in Science Education, 45(1), 59–73. https://doi.org/10.1007/s11165-014-9413-2

AlKhayat, A. (2017). Exploring the effectiveness of using chatbots in the EFL classroom. In P. Hubbard & S. Ioannou-Georgiou (Eds.), Teaching English reflectively with technology (pp. 20–36). IATEFL.

Al-Shafei, M. (2024). Navigating human-chatbot interactions: An investigation into factors influencing user satisfaction and engagement. International Journal of Human–Computer Interaction. https://doi.org/10.1080/10447318.2023.2301252

Annamalai, N., Eltahir, M. E., Zyoud, S. H., Soundrarajan, D., Zakarneh, B., & Salhi, N. R. A. (2023a). Exploring English language learning via Chabot: A case study from a self determination theory perspective. Computers and Education: Artificial Intelligence, 5, 1–8. https://doi.org/10.1016/j.caeai.2023.100148

Annamalai, N., Rashid, R. A., Hashmi, U. M., Mohamed, M., Alqaryouti, M. H., & Sadeq, A. E. (2023b). Using chatbots for English language learning in higher education. Computers and Education: Artificial Intelligence, 5, 1–9. https://doi.org/10.1016/j.caeai.2023.100153

Baha, T. A., Hajji, M. E., Es‑Saady, Y., & Fadili, H. (2024). The impact of educational chatbot on student learning experience. Education and Information Technologies, 29, 10153–10176. https://doi.org/10.1007/s10639-023-12166-w

Beaton, D. E., Bombardier, C., Guillemin, F., & Ferraz, M. B. (2000). Guidelines for the process of cross-cultural adaptation of self-report measures. Spine, 25(24), 3186–3191. https://doi.org/10.1097/00007632-200012150-00014

Benotti, L., Martínez, M. C., & Schapachnik, F. (2017). A tool for introducing computer science with automatic formative assessment. IEEE Transactions on Learning Technologies, 11(2), 179–192. https://doi.org/10.1109/TLT.2017.2682084

Blut, M., Wang, C., Wünderlich, N. V., & Brock, C. (2021). Understanding anthropomorphism in service provision: A meta-analysis of physical robots, chatbots, and other AI. Journal of the Academy of Marketing Science, 49(4), 632–658. https://doi.org/10.1007/s11747-020-00762-y

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Braun, V., & Clarke, V. (2021). Thematic analysis: A practical guide. SAGE.

Chang, C. Y., Hwang, G. J., & Gau, M. L. (2022). Promoting students’ learning achievement and self-efficacy: A mobile chatbot approach for nursing training. British Journal of Educational Technology, 53(1), 171–188. https://doi.org/10.1111/bjet.13158

Chen, H.-L., Widarso, G. V., & Sutrisno, H. (2020). A ChatBot for learning Chinese: Learning achievement and technology acceptance. Journal of Educational Computing Research, 58(6), 1161–1189. https://doi.org/10.1177/0735633120929622

Ciupe, A., Mititica, D. F., Meza, S., & Orza, B. (2019). Learning agile with intelligent conversational agents [paper presentation]. In The 2019 IEEE Global Engineering Education Conference (EDUCON), Dubai, UAE. https://doi.org/10.1109/EDUCON.2019.8725192

Clarizia, F., Colace, F., Lombardi, M., Pascale, F., & Santaniello, D. (2018). Chatbot: An education support system for student [paper presentation]. In The 10th international symposium, CSS 2018, Amalfi, Italy. https://doi.org/10.1007/978-3-030-01689-0_23

Costello, A. B., & Osborne, J. W. (2005). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research, and Evaluation, 10(7), 1–9. https://doi.org/10.7275/jyj1-4868

Creswell, J. W., & Creswell, D. (2022). Research design: Qualitative, quantitative, and mixed methods approaches (6th ed.). SAGE.

Cunningham-Nelson, S., Boles, W., Trouton, L., & Margerison, E. (2019). A review of chatbots in education: Practical steps forward. In The 30th annual conference for the Australasian Association for Engineering Education (AAEE 2019) conference, Brisbane, Australia.

Divekar, R. R., Drozdal, J., Chabot, S., Zhou, Y., Su, H., & Chen, Y. (2022). Foreign language acquisition via artificial intelligence and extended reality: Design and evaluation. Computer Assisted Language Learning, 35(9), 2332–2360. https://doi.org/10.1080/09588221.2021.1879162

Dokukinaa, I., & Gumano, J. (2020). The rise of chatbots—New personal assistants in foreign language learning. Procedia Computer Science, 169, 542–546. https://doi.org/10.1016/j.procs.2020.02.212

Essel, H. B., Vlachopoulos, D., Tachie-Menson, A., Johnson, E. E., & Baah, P. K. (2022). The impact of a virtual teaching assistant (chatbot) on students’ learning in Ghanaian higher education. International Journal of Educational Technology in Higher Education, 19, 1–19. https://doi.org/10.1186/s41239-022-00362-6

Fidan, M., & Gencel, N. (2022). Supporting the instructional videos with chatbot and peer feedback mechanisms in online learning: The effects on learning performance and intrinsic motivation. Journal of Educational Computing Research, 60(7), 1716–1741. https://doi.org/10.1177/07356331221077901

Fryer, L. K., Nakao, K., & Thompson, A. (2019). Chatbot learning partners: Connecting learning experiences, interest and competence. Computers in Human Behavior, 93, 279–289. https://doi.org/10.1016/j.chb.2018.12.023

Green, A., & O’Sullivan, B. (2019). Language learning gains among users of English Liulishuo. LAIX. http://hdl.handle.net/10547/623198

Grossman, J., Lin, Z., Sheng, H., Wei, J. T.-Z., Williams, J. J., & Goel, S. (2019). MathBot: Transforming online resources for learning math into conversational interactions. http://logical.ai/story/papers/mathbot.pdf

Guo, K., Li, Y., Li, Y., & Chu, S. K. W. (2024). Understanding EFL students’ chatbot-assisted argumentative writing: An activity theory perspective. Education and Information Technologies, 29, 1–20. https://doi.org/10.1007/s10639-023-12230-5

Guo, K., Wang, J., & Chu, S. K. W. (2022). Using chatbots to scaffold EFL students’ argumentative writing. Assessing Writing, 54, 1–6. https://doi.org/10.1016/j.asw.2022.100666

Guo, K., Zhong, Y., Li, D., & Chu, S. K. W. (2023). Effects of chatbot-assisted in-class debates on students’ argumentation skills and task motivation. Computers and Education, 203, 1–19. https://doi.org/10.1016/j.compedu.2023.104862

Guo, K., Zhong, Y., Li, D., & Chu, S. K. W. (2023). Investigating students’ engagement in chatbot-supported classroom debates. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2207181

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2019). Multivariate data analysis (8th ed.). Cengage Learning.

Hew, K. F., Huang, W., Du, J., & Jia, C. (2023). Using chatbots to support student goal setting and social presence in fully online activities: Learner engagement and perceptions. Journal of Computing in Higher Education, 35, 40–68. https://doi.org/10.1007/s12528-022-09338-x

Hong, J.-C., Lin, C.-H., & Juh, C.-C. (2023). Using a Chatbot to learn English via Charades: The correlates between social presence, hedonic value, perceived value, and learning outcome. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2273485

Hwang, G.-J., & Chang, C.-Y. (2023). A review of opportunities and challenges of chatbots in education. Interactive Learning Environments, 31(7), 4099–4112. https://doi.org/10.1080/10494820.2021.1952615

Jasin, J., Ng, H. T., Atmosukarto, I., Iyer, P., Osman, F., Wong, P. Y. K., Pua, C. Y., & Cheow, W. S. (2023). The implementation of chatbot-mediated immediacy for synchronous communication in an online chemistry course. Education and Information Technologies, 28, 10665–10690. https://doi.org/10.1007/s10639-023-11602-1

Jeon, J. (2024). Exploring AI chatbot affordances in the EFL classroom: Young learners’ experiences and perspectives. Computer Assisted Language Learning, 37(1–2), 1–26. https://doi.org/10.1080/09588221.2021.2021241

Jia, J., Chen, Y., Ding, Z., & Ruan, M. (2012). Effects of a vocabulary acquisition and assessment system on students’ performance in a blended learning class for English subject. Computers and Education, 58(1), 63–76. https://doi.org/10.1016/j.compedu.2011.08.002

Kim, H., Shin, D. K., Yang, H., & Lee, J. H. (2019). A study of AI chatbot as an assistant tool for school English curriculum. Journal of Learner-Centered Curriculum and Instruction, 19(1), 89–110. https://doi.org/10.22251/jlcci.2019.19.1.89

Kohnke, L. (2022). A qualitative exploration of student perspectives of chatbot use during emergency remote teaching. International Journal of Mobile Learning and Organisation, 16(4), 475–488. https://doi.org/10.1504/IJMLO.2022.125966

Kohnke, L. (2023a). A pedagogical chatbot: A supplemental language learning tool. RELC Journal, 54(3), 828–838. https://doi.org/10.1177/00336882211067054

Kohnke, L. (2023b). L2 learners’ perceptions of a chatbot as a potential independent language learning tool. International Journal of Mobile Learning and Organisation, 17(1/2), 214–226. https://doi.org/10.1504/IJMLO.2023.128339

Kuhail, M. A., Alturki, N., Alramlawi, S., & Alhejori, K. (2023). Interacting with educational chatbots: A systematic review. Education and Information Technologies, 28, 973–1018. https://doi.org/10.1007/s10639-022-11177-3

Laeeq, K., & Memon, Z. A. (2019). Scavenge: An intelligent multi-agent based voice-enabled virtual assistant for LMS. Interactive Learning Environments, 29(6), 954–972. https://doi.org/10.1080/10494820.2019.1614634

Liu, C.-C., Liao, M.-G., Chang, C.-H., & Lin, H.-G. (2022). An analysis of children’ interaction with an AI chatbot and its impact on their interest in reading. Computers and Education, 189, 1–16. https://doi.org/10.1016/j.compedu.2022.104576

Liu, C.-C., Wang, H.-J., Wang, D., Tu, Y.-F., Hwang, G.-J., & Wang, Y. (2023). An interactive technological solution to foster preservice teachers’ theoretical knowledge and instructional design skills: A chatbot-based 5E learning approach. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2277761

Mahapatra, S. (2024). Impact of ChatGPT on ESL students’ academic writing skills: A mixed methods intervention study. Smart Learning Environments, 11, 1–18. https://doi.org/10.1186/s40561-024-00295-9

Martha, A. S. D., & Santoso, H. B. (2019). The design and impact of the pedagogical agent: A systematic literature review. Journal of Educators Online, 16(1), 1–15. https://doi.org/10.9743/JEO.2019.16.1.8

Murad, D. F., Irsan, M., Akhirianto, P. M., Fernando, E., Murad, S. A., & Wijaya, M. H. (2019). Learning support system using chatbot in “kejar c package” homeschooling program [paper presentation]. In The 2019 international conference on information and communications technology (ICOIACT), Yogyakarta, Indonesia. https://doi.org/10.1109/ICOIACT46704.2019.8938479

Okonkwo, C. W., & Ade-Ibijola, A. (2020). Python-bot: A chatbot for teaching python programming. Engineering Letters, 29(1), 1–10.

Okonkwo, C. W., & Ade-Ibijola, A. (2021). Chatbots applications in education: A systematic review. Computers and Education: Artificial Intelligence, 2, 1–10. https://doi.org/10.1016/j.caeai.2021.100033

Ranoliya, B. R., Raghuwanshi, N., & Singh, S. (2017). Chatbot for university related faqs [paper presentation]. In The 2017 international conference on advances in computing, communications and informatics (ICACCI), Udupi, India. https://doi.org/10.1109/ICACCI.2017.8126057

Roos, S. (2018). Chatbots in education: A passing trend or a valuable pedagogical tool? Department of Informatics and Media, Uppsala University. http://www.diva-portal.org/smash/get/diva2:1223692/FULLTEXT01.pdf

Smutny, P., & Schreiberova, P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook messenger. Computers and Education, 151, 1–11. https://doi.org/10.1016/j.compedu.2020.103862

Studente, S., Ellis, S., & Garivaldis, S. (2020). Exploring the potential of chatbots in higher education: A preliminary study. International Journal of Educational and Pedagogical Sciences, 14(9), 768–771.

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., & Agyemang, B. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learning Environments, 10, 1–24. https://doi.org/10.1186/s40561-023-00237-x

Vazquez-Cano, E., Mengual-Andrés, S., & López-Meneses, E. (2021). Chatbot to improve learning punctuation in Spanish and to enhance open and flexible learning environments. International Journal of Educational Technology in Higher Education, 18, 1–20. https://doi.org/10.1186/s41239-021-00269-8

Wiboolyasarin, W., & Jinowat, N. (2024). Exploring teachers’ experiences in bilingual education for young learners: Implications for dual-language learning apps design. Iranian Journal of Language Teaching Research, 12(2), 45–64. https://doi.org/10.30466/ijltr.2024.121417

Wiboolyasarin, W., Kamonsawad, R., Wiboolyasarin, K., & Jinowat, N. (2023). Digital school or online game? Factors determining 3D virtual worlds in language classrooms for pre-service teachers. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2172588

Wiboolyasarin, W., Wiboolyasarin, K., Suwanwihok, K., Jinowat, N., & Muenjanchoey, R. (2024). Synergizing collaborative writing and AI feedback: An investigation into enhancing L2 writing proficiency in wiki-based environments. Computers and Education: Artificial Intelligence, 6, 1–10. https://doi.org/10.1016/j.caeai.2024.100228

Winkler, R., Hobert, S., Salovaara, A., Söllner, M., & Leimeister, J. M. (2020). Sara, the lecturer: Improving learning in online education with a scaffolding-based conversational agent [paper presentation]. In The 2020 CHI conference on human factors in computing systems, Honolulu, USA.

Winkler, R., & Söllner, M. (2018). Unleashing the potential of chatbots in education: A state-of-the-art analysis [paper presentation]. In The 78th annual meeting of the academy of management, Chicago, USA. https://doi.org/10.5465/AMBPP.2018.15903abstract

Wu, E. H.-K., Lin, C.-H., Ou, Y.-Y., Liu, C.-Z., Wang, W.-K., & Chao, C.-Y. (2020). Advantages and constraints of a hybrid model K-12 e-learning assistant chatbot. IEEE Access, 8, 77788–77801. https://doi.org/10.1109/ACCESS.2020.2988252

Yang, H., Kim, H., Lee, J., & Shin, D. (2022). Implementation of an AI chatbot as an English conversation partner in EFL speaking classes. ReCALL, 34(3), 327–343. https://doi.org/10.1017/S0958344022000039

Yin, J., Goh, T.-T., Yang, B., & Xiaobin, Y. (2021). Conversation technology with micro-learning: The impact of chatbot-based learning on students’ learning motivation and performance. Journal of Educational Computing Research, 59(1), 154–177. https://doi.org/10.1177/0735633120952067

Yuan, C.-C., Li, C.-H., & Peng, C.-C. (2023). Development of mobile interactive courses based on an artificial intelligence chatbot on the communication software LINE. Interactive Learning Environments, 31(6), 3562–3576. https://doi.org/10.1080/10494820.2021.1937230

Yuan, Y. (2023). An empirical study of the efficacy of AI chatbots for English as a foreign language learning in primary education. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2282112

Zhang, R., Zou, D., & Cheng, G. (2023a). Chatbot-based training on logical fallacy in EFL argumentative writing. Innovation in Language Learning and Teaching, 17(5), 932–945. https://doi.org/10.1080/17501229.2023.2197417

Zhang, R., Zou, D., & Cheng, G. (2023b). A review of chatbot-assisted learning: Pedagogical approaches, implementations, factors leading to effectiveness, theories, and future directions. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2202704

Zhang, R., Zou, D., & Cheng, G. (2023c). Chatbot-based learning of logical fallacies in EFL writing: Perceived effectiveness in improving target knowledge and learner motivation. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2220374

Zumstein, D., & Hundertmark, S. (2017). Chatbots—An interactive technology for personalized communication, transactions and services. IADIS International Journal on WWW/Internet, 15(1), 96–109.

Funding

The research outlined in this article has received support from Mahidol University’s Fundamental Fund, specifically the Basic Research Fund type, during the fiscal year 2024 (Grant No.: FF-181/2567).

Author information

Contributions

WW designed the research, verified the accuracy of the data, and revised the manuscript. PT implemented the research procedure. PB and NJ developed and contributed the research tool. KW analysed the data using inferential statistics. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This research project was conducted in accordance with, and with the approval of, the Institutional Review Board of Mahidol University (COA No. 2023/182.0812). Participants were volunteers.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wiboolyasarin, W., Wiboolyasarin, K., Tiranant, P. et al. Designing chatbots in language classrooms: an empirical investigation from user learning experience. Smart Learn. Environ. 11, 32 (2024). https://doi.org/10.1186/s40561-024-00319-4

DOI: https://doi.org/10.1186/s40561-024-00319-4