Abstract

The detection of hate speech (HS) in online platforms has become extremely important for maintaining a safe and inclusive environment. While significant progress has been made in English-language HS detection, methods for detecting HS in other languages, such as Bengali, have not been explored much like English. In this survey, we outlined the key challenges specific to HS detection in Bengali, including the scarcity of labeled datasets, linguistic nuances, and contextual variations. We also examined different approaches and methodologies employed by researchers to address these challenges, including classical machine learning techniques, ensemble approaches, and more recent deep learning advancements. Furthermore, we explored the performance metrics used for evaluation, including the accuracy, precision, recall, receiver operating characteristic (ROC) curve, area under the ROC curve (AUC), sensitivity, specificity, and F1 score, providing insights into the effectiveness of the proposed models. Additionally, we identified the limitations and future directions of research in Bengali HS detection, highlighting the need for larger annotated datasets, cross-lingual transfer learning techniques, and the incorporation of contextual information to improve the detection accuracy. This survey provides a comprehensive overview of the current state-of-the-art HS detection methods used in Bengali text and serves as a valuable resource for researchers and practitioners interested in understanding the advancements, challenges, and opportunities in addressing HS in the Bengali language, ultimately assisting in the creation of reliable and effective online platform detection systems.

Similar content being viewed by others

Introduction

Hate speech (HS) is a form of expression that spreads negativity and incites violence against people or groups based on their inborn traits, including religion, race, ethnicity, sexual preference, and gender. The increasing prevalence of social media platforms has facilitated the spread of HS by providing a medium for individuals to communicate with one another, regardless of their psychological traits and backgrounds. The global population is now estimated at almost 7.7 billion people, and approximately 3.484 billion people are active on social media [1].

Conflicts arise among individuals with diverse mindsets, as one may find it challenging to tolerate the thoughts of another. Social media has evolved into a platform for HS under the guise of freedom of speech. Text serves as one method of communication. When text is used to insult others, it is considered inaccurate and defamatory and could lead to harassment, religious conflicts, and eve-teasing on social media platforms [2]. In severe cases, HS can even threaten and incite people against society and the government. In addition to text, other media such as images, audio, videos, and image/graphical representations can all be used to propagate unpleasant or hateful content on social media. Moreover, the use of hashtags facilitates the transformation of text into clickable links, enabling information to be transmitted more quickly.

As there are no universal guidelines or comprehensive rules for defining HS, controlling and detecting hateful and offensive language pose major challenges in today’s society. While social media offers a platform for all users to share their thoughts, instances of offensive and harmful content are not uncommon and can severely impact users’ experience and even the civility of a community [3]. HS may also have significant cultural impacts depending on one’s cultural background.

Automated HS identification is critical in addressing the spread of HS, especially on social media. Numerous methods have been developed for this task, including a recent increase in the development of deep learning (DL)-based approaches. Technology companies, scholars, and policymakers have been developing natural language processing (NLP) tools to identify HS and thus mitigate unlawful activities [4]. NLP is a large subdomain of artificial intelligence that helps computers understand, translate, and control human language [5].

To date, most hate speech research has focused on addressing the challenges posed by languages with abundant linguistic resources. As English is the most widely used language on the internet, extensive research has been conducted on English-language hate speech detection [6, 7]. To promote additional studies in this area, researchers created the Arabic datasets annotated for religious hate detection and the Arabic lexicon of religious hate terms [8, 9]. Some researchers have also worked on multilingual projects [10]. One researcher proposed a robust neural classifier for an HS binary classification task, with comparisons across different languages, namely, English, Italian and German, focusing on freely available Twitter datasets for HS detection [11].

According to statistics from 2018, Bengali (endonym “Bangla”) is the sixth most widely spoken native language, with an estimated 300 million native speakers worldwide [12]. Bengali is Bangladesh’s national, official, and most widely spoken language and the second-most commonly used language in India.

Social media usage has become increasingly prevalent in Bangladesh, with an estimated 50.3 million active users engaging with various online platforms in 2022. This figure represents approximately 29.7% of the population, a substantial proportion of the country’s total population. There are several benefits to the use of online media and the internet, e.g., uniting people from all different backgrounds and allowing them to communicate and interact with one another [11]. As a result, people with diverse psychological and sociocultural backgrounds can share their thoughts, ideas, and opinions easily.

Among Bangladesh’s social media users, there are nearly 46 million users on Facebook and around 29 million users on YouTube. According to UNICEF, approximately 32 percent of children in Bangladesh are vulnerable to cyberbullying. A study conducted by the Cyber Crime Awareness Foundation, an NGO, revealed that 73.71% of cybercrime victims are women [13]. The situation worsens when individuals resort to suicide [14]. Despite this large user base, the availability of comprehensive and linguistically diverse datasets for Bengali HS has been severely limited [15].

The use of vulgar language is influenced by sociocultural context and demographic factors [16], and exploring its prevalence in languages other than English [17] is important. As more people express their thoughts online via social media, HS has continued spread. Given that discriminatory speech may detrimentally impact society, the development of detection and prevention systems can benefit governments and social networking sites. In this study, we explore a solution to the problem of HS detection and prevention in Bengali text by providing a thorough summary of field research.

Collecting and preparing data, extracting features, constructing and deploying models, and obtaining results are all processes involved in detecting HS. The general steps for identifying HS in Bengali text are shown in Fig. 1 and explained in "Hate Speech Detection Methodologies" section. Note that there may be variations to these steps, and the framework may not always be the same.

-

Data Collection: The first step in HS detection is data collection. Text, image/graphic, audio, and video datasets are the primary Bengali datasets used for HS detection and classification. Text datasets are mainly employed for HS detection because they are easily accessible through social media posts and comments, and social media users use text more often than other types of data.

-

Data Preprocessing: The data preprocessing step includes labeling and cleaning data, as well as eliminating tokens, hashtags, emojis, and other irrelevant information from raw data. The quality and interpretability of the original data are enhanced, and the ability to detect relevant information is improved through data preprocessing.

-

Feature Extraction: The large amount of raw data collected above is separated and reduced to more manageable categories through feature extraction processes, significantly simplifying the procedure. Several feature extraction approaches can be utilized in this phase, e.g., the TF-IDF, CountVectorizer, bag of words, n-grams, Word2Vec, FastText, word embedding, and part-of-speech tagging approaches.

-

Model Training: Machine learning is used to train a model that accurately recognizes HS in Bengali. However, choosing an appropriate model can be arduous. Researchers have utilized several different models in HS detection studies. One can employ classical machine learning (ML), deep learning (DL), hybrid (a combination of classical ML and DL), and ensemble models to detect HS in Bengali text.

-

HS Classification Using the Model: Classification involves partitioning a set of data into groups with related traits. After training the model, researchers can carry out a classification to detect HS in real Bengali text. In this step, text can be classified into labels, e.g., “HS”, “Not HS”, or “Neutral”, and HS can be identified easily through automation.

In this study, we conduct a systematic review of the use of cutting-edge technology to identify HS in Bengali on online social networking sites. We examine the most popular and widely used natural language processing (NLP) methods that have helped to identify HS automatically. We also discuss the findings of various related research works and their limitations. Finally, we provide ideas and recommendations for additional research while examining the current issues. The overall contributions of our study are as follows:

-

1.

To our best knowledge, this is the first comprehensive survey on hate speech detection in the Bengali language. While some surveys on HS detection research in English have been published [7, 18, 19], there are no existing surveys on HS detection in Bengali. In our survey, we carefully review the available research articles from academic journals/conferences, datasets, and other resources for the purpose of detecting hate speech in Bengali.

-

2.

The aim of this survey is to investigate various machine learning (ML) and deep learning (DL) methods for the detection of HS in Bengali. We have developed a comprehensive taxonomy of the ML and DL methods employed for Bengali HS detection. We have also outlined the basic architectures that are used and performed a comparative study to clarify the advantages and disadvantages of various approaches.

-

3.

We thoroughly review text preprocessing techniques, including a range of tools and packages to preprocess text for subsequent learning tasks. We also explore feature extraction techniques, explaining their models and architectures, and summarize the benefits and drawbacks of each.

-

4.

We have carefully described the current restrictions and challenges in detecting hate speech in Bengali and outlined their possible solutions. In particular, we investigate the topic of aspect-based hate speech analysis, which could be transformative in the area of HS detection. However, this approach is not well-known to researchers in the context of Bengali text. We offer a brief explanation of aspect-based hate speech detection, highlighting its subtleties and suggesting potential approaches for its successful deployment.

The components of this study are visualized in Fig. 2.

This survey is designed for three primary groups: NLP researchers, computer scientists and engineers, and policymakers. NLP researchers are expected to provide insights into the theoretical and research challenges associated with detecting hate speech in Bengali text, including the nuances of language and the effectiveness of various models. Computer scientists and engineers are targeted for their expertise in the technical implementation, development, and optimization of algorithms and classifiers for hate speech detection, focusing on practical challenges and performance issues. Policymakers are included to address the broader societal impact, ethical considerations, and regulatory implications of deploying automated hate speech detection tools. Clearly defining these groups in the introduction helps tailor the survey questions to each audience’s specific interests and expertise, ensuring relevant and actionable feedback that can guide future research, technical improvements, and policy development.

Background

In the realms of ML, NLP, and data science, HS detection is a promising area of study. While several surveys have been published on this topic, none have focused explicitly on the Bengali language. However, notable studies have been conducted in other languages, such as English and Arabic, providing valuable insights into HS detection techniques. Following are a few examples, by no means complete, of HS detection research in other languages.

-

English: In a recent study, researchers provided a short and analytical survey of HS detection in NLP, specifically focusing on the English language [7]. They began by defining essential terms for studying HS and analyzed the commonly used features in this field. They also explored research on bullying and its applications, particularly in predicting social unrest. Finally, the authors addressed data and categorization issues and presented various approaches to address these issues.

In another survey [19], the authors critically analyzed HS, mainly focusing on “cyber hate” in social media and on the internet (primarily for the English text). By examining the nature and impact of this phenomenon, the authors emphasized the need for proactive measures to combat HS online, urging the active involvement of social media platforms to create a safer and more inclusive digital space.

In another article [18], the authors provided more precise definitions of HS and offered an overview of the field’s development in recent years. They adopted a systematic method to analyze existing data collections that is more comprehensive than previous methods. This study highlights the increasing awareness of the spread of HS through networks in Arabic regions and worldwide. Many countries are now actively working to regulate and counter such speech.

Some of the other recent surveys for HS detection in English are [20,21,22,23].

-

Arabic: In the context of Arabic social media platforms, several studies have been conducted to detect offensive language and HS. One study utilized a multitask learning (MTL) model trained on diverse datasets representing different types of offensive and hateful text. The developed MTL model outperformed existing models and achieved superior performance in detecting Arabic offensive language and HS [24].

In another innovative approach, a DL framework was proposed that combines a CNN and LSTM network to automatically detect cyber HS on Arabic Twitter. This approach, which employs word embeddings, yielded positive outcomes in terms of accurately classifying HS tweets using different evaluation metrics [25].

Similarly, the effectiveness of a convolutional neural network (CNN), a CNN with long short-term memory (CNN-LSTM), and a bidirectional LSTM (BiLSTM)-CNN was explored in terms of automatically detecting hateful content on social media using the Arabic Hate Speech (ArHS) dataset, which consists of 9,833 annotated tweets. Different types of experiments were conducted, addressing binary, ternary, and multiclass classification for different categories of HS [26].

Furthermore, another study focused on developing an automated system to detect HS in Arabic, aiming to monitor online behavior for threats to national security and cyberbullying. Deep recurrent neural networks (DRNNs) were employed for HS classification, utilizing a unique dataset of 4,203 comments categorized into seven HS classes [27].

-

Danish: A Danish dataset capturing offensive language from platforms such as Reddit and Facebook was created, and four automatic classification systems were developed for both the English and Danish languages [28].

-

Hindi: Similarly, the growing problem of online HS and the need for automatic detection methods were emphasized, explicitly focusing on code-mixed Hindi-English datasets. This study surveyed advancements in neural-based models designed to address HS in this context [29].

Moreover, the issue of HS in user-generated social media content was addressed by focusing on code-mixed social media texts. A code-mixed dataset was used to assess two architectures for HS detection: a subword-level LSTM and a phonemic subword-level hierarchical LSTM with attention [30].

-

Urdu: In another study, a vocabulary of hostile words and an annotated dataset named RUHSOLD were used for automatic HS and offensive language detection in Roman Urdu (RU). It was concluded that transfer learning is effective, and a CNN-gram model that exhibited greater robustness compared to that of baseline approaches was proposed [31].

-

Bahasa Indonesia: In Indonesia, where limited research on HS detection exists, researchers aimed to create a new dataset encompassing various aspects of HS, targeting religion, race, ethnicity, and gender. A preliminary study was conducted to compare machine learning algorithms and features. It was found that word n-grams performed better than character n-grams in detecting HS [32].

Overall, these studies and surveys shed light on HS detection techniques in various languages and highlight the significance of addressing this issue to foster a more inclusive and respectful online environment. While research in HS detection in the Bengali language is still limited, the insights gained from the studies conducted in other languages can serve as a foundation for future research and development in this area.

Definition of hate speech

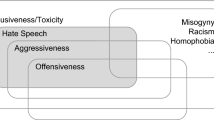

The definition of HS lacks a universal consensus, leading to disagreements among parties, organization, and individuals. Detecting HS is a challenging task. HS is characterized by language that uses stereotypes to express a hateful mindset. To annotate a corpus and create a consistent language model, addressing numerous difficulties related to defining HS is essential [33].

Some organizations and authors have defined HS as follows:

-

1.

European Union: “Any public incitement to roughness or abomination against an individual or a member of a group of individuals characterized by their race, color, religion, ancestry, nationality, or ethnicity” [34].

-

2.

International Minorities Association: “Any criminal activity that targets individuals because of their actual or perceived membership in a certain group is considered a hate crime. The crimes can take many different forms, including rape, property destruction, blackmail, physical and psychological intimidation, and hostility and violence. A language that disparages or insults a group on the basis of their race, ethnicity, religion, disability, gender, age, sexual orientation, or gender identity” [35].

-

3.

Facebook: “We define hate speech as an outright attack on an individual based on one or more of the categories we refer to as protected characteristics, such as race, ethnicity, national origin, disability, religion, caste, sexual orientation, sex, and significant medical conditions. Attacks include what we define as aggressive or dehumanizing rhetoric, damaging stereotypes, claims of inferiority, expressions of scorn, disgust, or dismissal, profanity, and calls for segregation or exclusion. When used in conjunction with another protected trait, age is regarded as a protected characteristic. Although we permit opinion and criticism of immigration laws, we also shield refugees, migrants, immigrants, and asylum seekers from the harshest assaults. In a similar vein, we offer certain safeguards for traits like occupation when they are mentioned with a protected trait” [36].

-

4.

Twitter (now X): “You aren’t allowed to incite or harass others based on their race, ethnicity, national origin, caste, sexual preference, gender, or gender identity, their age, handicap, or a serious illness” [37].

-

5.

YouTube: “We eliminate material that calls for harm to people or groups based on any of the following characteristics: Age, caste, handicap, ethnicity, gender expression, nationality, race, immigration status, religion, sex/gender, and sexual orientation, as well as veteran status and those who have experienced a significant act of violence and their family members, are also taken into consideration” [38].

After analyzing the terms used in the aforementioned definitions to comprehend the meaning of HS more clearly, we find that each definition emphasizes that HS targets a specific entity, such as a person, group, or nationality. The majority of these definitions also address themes of violence, racism, and gender discrimination and issues related to race and ethnicity. Less common standards for measuring hate speech include the use of profane language and issues related to disability, property damage, advanced age, and significant illness. Table 1 presents common hate speech categories and a few examples of hate targets in each category.

Importance of HS detection

In recent years, HS has attracted the attention of researchers and has become popular in the NLP field. We specify why automatic HS detection is essential below.

-

Social Media Safety: Detecting HS is essential to ensure the safety of social media users. HS can be defined as any form of speech that targets and dehumanizes a particular group based on factors such as race, ethnicity, religion, sexual orientation, and gender identity [24]. The consequences of HS can be severe, ranging from promoting violence and discrimination to potentially inciting genocide. In addition, HS can harm and threaten individuals and communities who are already marginalized and vulnerable [40]. An investigation was conducted in five Bangladeshi areas in 2020 by “Ain O Salish Kendra” (ASK), a rights and legal assistance NGO. They discovered that during the COVID-19 pandemic, many young students experienced online harassment. In particular, an alarming 30% of the 108 children, 61 girls and 47 boys, polled said they had experienced internet harassment [41].

We can assist in defending these groups and fostering a safer online environment by identifying and preventing HS. HS has the potential to undermine civic dialogue, fostering prejudice, polarization, and division. Detecting and suppressing HS can play a crucial role in nurturing civil discourse and encouraging productive online discussions. HS often infringes upon human rights, including freedom of expression, nondiscrimination, and equality. While information sharing has become more accessible, the rise of cyberbullying poses a growing concern. Moreover, the harmful effects of cyberbullying on children were discussed in one another, and it was demonstrated in another study that victims of such behavior are more likely to consider suicide than non-victims [42]. Upholding fundamental rights and preventing the spread of harmful and discriminatory content is crucial for creating a safe social media environment.

Conversely, social media platforms that allow HS to thrive risk damaging their reputation and losing users. By actively detecting HS, platforms can demonstrate their commitment to fostering a safe and respectful online environment and thereby attract more users. One of the most significant challenges in detecting HS is that it exists in diverse forms, including subtle and implicit expressions [2]. Traditional methods for detecting offensive language, such as keyword-based filtering or manual moderation, often need to be revised to capture all instances of HS. Social media platforms can implement additional measures to enhance the safety. These measures include giving users more control over their feeds, creating reporting mechanisms that allow users to report HS more efficiently, and enforcing strict community guidelines.

-

Mental Health: HS promotes violence and erodes social harmony and tolerance, and history is replete with examples of the destructive effects of hatred. Online platforms have quickly evolved into forums for divisive and hateful statements on a global scale, endangering peace and harmony. In today’s digital world, HS can escalate, exerting a much more significant influence than ever before.

-

Hate Crime Prevention: Hate crimes are those in which the victim is targeted because of their actual or perceived race, color, religion, disability, sexual orientation, or national origin [43]. To gather data, the United States Federal Bureau of Investigation (FBI) defines a hate crime as a “criminal offense against a person or property motivated in whole or in part by an offender’s bias against a race, religion, disability, sexual orientation, ethnicity, gender, or gender identity” [44]. By decreasing the rate of HS, the rate of hate crimes could also be reduced.

Survey methodology

The method used to conduct this survey is the “systematic literature review” (SLR) method developed by Kitchenham and colleagues [45, 46], which involves the comprehensive identification, critical evaluation, and systematic interpretation of all existing research studies that are pertinent to the specific research question, topic area, or phenomenon under investigation. The processes involved in conducting an SLR are categorized into three distinct phases: defining the scope of the review, conducting the literature search, and filtering the documents. Each of these phases will be discussed in more detail in the following sections.

Defining the scope of the review

The first step in this study involved determining the extent and boundaries of the review, including the specific aspects of Bengali text hate speech detection that we wanted to cover, e.g., the types of hate speech, the methods used for detection, the datasets used for training and evaluation, and the performance metrics). After selecting the inclusion and exclusion criteria, we searched for the most relevant papers, articles, and studies that would be suitable for the survey. Our focus was primarily on the ACM, IEEE, Springer, Elsevier, and arXiv databases. Once we completed this step, we began the study.

Research questions

The fundamental research questions that we composed are as follows:

-

What role does hate speech detection play in the digital sphere?

-

How does its effectiveness impact the reduction of online toxicity and the promotion of welcoming online spaces?

-

What are the linguistic indicators or criteria frequently used in the identification and detection of hate speech within diverse contexts?

-

How do various organizations and councils define hate speech?

-

What kinds of datasets are frequently used to identify HS in Bengali text?

-

What linguistic resources and features are commonly employed in creating models that effectively identify HS in Bengali text?

-

Which are the current approaches frequently used for feature extraction, classification, and preprocessing in text-based Bengali HS detection?

-

How are HS detection systems generally assessed in light of the data’s various linguistic and cultural contexts?

-

How well do the models used for Bengali HS detection perform?

-

What are the obstacles and constraints faced in Bengali HS detection today?

-

In what creative ways can these problems be solved to overcome the challenges they face?

-

What directions might future researchers explore to improve HS detection systems’ efficacy?

The above research questions are addressed in "Background", "Dataset Description", "Hate Speech Detection Methodologies", and "Challenges" sections of this survey.

Conducting the literature search

To conduct the literature search, we posed a primary research query: “What are the current techniques and approaches utilized for recognizing hate speech in the Bengali language, as well as the important datasets utilized by the research community?” To answer these questions, we thoroughly explored the existing literature using multiple academic databases, such as the ACM Digital Library (which also indexes papers from Association for Computational Linguistics, ACL), IEEE Xplore, SpringerLink, ScienceDirect (Elsevier), arXiv, and Google Scholar. To collect papers for this study, we used keywords including “Bengali hate speech,” “machine learning,” “deep learning,” “natural language processing,” “Bengali text classification,” “Bengali hate speech detection,” “AI-based hate speech detection in Bengali” on Google Scholar. These keyword searches allowed us to identify some of the critical research articles and reviews on this topic.

An exact search was conducted on specific repositories such as IEEE, ACM, Springer, Elsevier, and arXiv. However, we obtained fewer articles due to their indexing methods. For example, our search on IEEE Xplore only returned a few papers, of which only a small fraction was relevant to this work. A similar outcome was observed on Springer, which also yielded only a few articles.

On the other hand, Google Scholar was found to be more inclusive and accessible, facilitating the discovery of all relevant content in one result. As a result, this platform was used for this study. Next, we used specific search terms to narrow the search results further. Finally, we searched for articles that cited the critical research articles identified during the literature search to identify additional relevant articles.

Research type

The research type specifies the category of written materials, which may include scholarly publications, conference or workshop papers, book chapters, or dissertations. The total number of journals and conference papers published in this field and used in our survey are shown graphically in Fig. 3.

Publication year and type

At the outset of this study, 150 papers were collected from various sources, and 38 papers were selected for the survey. Over 90% of these papers were published from 2010 to 2023. Consequently, we incorporated more recent articles to revise and augment this review.

Document filtering

Inclusion and exclusion criteria are typically used to determine whether participants should be included or eliminated in a study. These criteria aid researchers in ensuring that the participants used in their study are representative of the population under investigation and that they can provide relevant insights into the research topic. After applying inclusion and exclusion criteria to this study, we found that many of the research articles we retrieved were relevant to this study. The following inclusion criteria were employed:

-

The paper is only from a peer-reviewed journal, conference proceeding, or arXiv preprint.

-

The full text is available in a digital database.

-

The paper includes the proposal of a model, various machine learning or deep learning techniques, or a framework.

-

The paper is written in English and focuses on Bengali hate speech detection.

In contrast, papers that were duplicates, appearing in multiple academic databases, reviews, book chapters, magazine articles, theses, and interview-based articles, not relevant to Bengali hate speech detection or did not have full-text access were excluded from our study.

We followed the “Preferred Reporting Items for Systematic Reviews and Meta-Analyses” (PRISMA) protocol [47], as depicted in Fig. 4.

Dataset description

In this section, we discuss the sources of data for Bengali HS detection. We found that each of the included papers focuses on specific or several related themes, such as politics, religion, or other relevant topics, when collecting data. Furthermore, we analyzed how data was extracted from these sources. Finally, we provide a table that summarizes the datasets used by various authors. Table 2 includes information on the dataset source, the data collection method employed, and the data size and availability.

Data sources

The effectiveness of HS detection models relies on the quality and diversity of the datasets utilized for training and evaluation. In this section, we present an overview of the data sources used in the studies reviewed in this survey. Most studies reviewed in our survey relied on social media platforms as their primary data source for detecting HS in the Bengali language. Facebook was the most frequently utilized among these platforms, followed by YouTube. A significant portion of the studies utilized Facebook [41, 48, 52, 57, 58, 60, 63, 64] as the main data source, while in a few works [5, 14, 40], Facebook comments were collected along with tweets.

Several studies utilized datasets of comments extracted from Bangladeshi news websites for training their HS detection models [2, 5, 14], in addition to comments from Facebook and YouTube. Users’ comments on the Facebook pages of newspapers were collected in [65], and [15, 49, 53, 55] used comments from controversial YouTube videos uploaded on Facebook.

Publicly available datasets were used in other studies [50, 54, 59, 61, 66]. In contrast, some researchers created their own datasets by collecting data from existing datasets [15, 48, 49, 52, 58, 67]. In one study, two Bengali corpora consisting of 7,245 reviews and comments obtained from YouTube were created. These datasets were made available to the public for further use and analysis [17].

In other studies, diverse data collection methods were utilized. Some of these methods include keyword-based searches or targeted crawling of specific accounts [52, 58, 60, 65, 68]. In contrast, some studies focused on particular types of HS, such as religious, political, racist, and sexual HS [14, 41, 51, 61].

The widespread utilization of social media platforms as the primary data source highlights the need for developing methods to handle social media data’s dynamic and noisy nature for HS detection in the Bengali language. The distribution of data sources for the collected datasets is presented in Fig. 5 in graphical form.

Data categories

The detection and classification of HS in the Bengali language is an essential area of research due to the limited availability of data compared to that of other languages, such as English. Most of the existing Bengali datasets were manually prepared, and data were grouped into multiple categories based on different criteria. Data collection for HS detection research has primarily been conducted through social media platforms such as Facebook, YouTube, and Twitter. Researchers have collected data based on various categories, including politics, religion, sports, entertainment, crime, memes, and TikTok videos [49].

The number of dataset categories varied across studies. However, use of five to seven categories was most common [15, 49, 53]. While the categories differed between authors, categories related to politics, religion, and celebrities were commonly included [50]. The results of these studies indicated that HS is more prevalent in specific categories than others. For example, HS related to religion and sports had higher occurrence rates than those of other categories. Categorizing data based on different classes provided valuable insight into the nature and prevalence of HS in Bengali.

-

Politics: In the context of political HS, derogatory language is often used to attack political opponents based on their political ideology, party affiliation, or personal characteristics. The following is an example of HS in Bengali related to politics: "যারা শুধু আবার বাংলাদে শে র প্রতি দুর্ন ীতি চালান োর চে ষ্টা করে তাদে র হঠাৎ ধরে মার দি তে হবে‡" (Those who are attempting to defame Bangladesh should be suddenly caught and beaten up) [58].

-

Religion: In the context of religious HS, people often use derogatory language to insult and discriminate against people of different faiths or sects. An example of HS in Bengali related to religion is as follows: "মুসলমানরা হৃদয়হীন ও জাহি ল" (Muslims are heartless and ignorant).

-

Sports: In the context of sports-related HS, fans of opposing teams often use derogatory language and insults to belittle each other. An example of HS in Bengali related to sports is as follows:"ভারতীয় পাগলদে র বি রুদ্ধে জয় হবে ইনশাআল্লাহ" (Inshallah, we will win against the crazy Indians).

-

Entertainment: HS related to entertainment can include derogatory remarks and personal attacks against actors, actresses, and other celebrities.

-

Crime: HS related to a crime can include derogatory remarks and personal attacks against individuals accused of committing crimes.

-

Memes: Memes in Bengali may contain derogatory remarks and HS against certain groups or individuals.

Data collection methods

There are various methods available to extract data, which refers to the process of collecting text data from social media sources. Different authors have used different approaches to extract data. Subsequently, the extracted data instances are annotated with different class labels (e.g., “HS”, “Not HS”, or “Neutral”) in preparation for supervised learning.

Data extraction

The most commonly used data extraction methods are described below.

a. FacePager: FacePager is an open-source software tool designed to facilitate data collection from websites and social media platforms such as Facebook, YouTube, and Twitter by leveraging APIs and web scraping methods. This tool offers a user-friendly interface that enables researchers to retrieve data based on specific search criteria, such as hashtags, keywords, or user profiles. Researchers can use FacePager to define extraction projects focused on collecting Bengali text data linked to HS. By setting search parameters, individuals can extract posts, comments, and other pertinent data from social media sites. This application controls pagination, automates querying, and stores retrieved data in an SQLite database that can be exported to CSV format for further analysis.

For example, the authors in [15, 49, 53] utilized FacePager to collect public comments related to Bengali HS from Facebook and YouTube. Although FacePager facilitates data collection, it is important to remember that manual setup and management are still required. Users are accountable for defining search criteria and specifying the data they wish to extract.

b. Web Scraping: Web scraping tools are software programs or libraries designed to automate the process of extracting data from websites. This approach involves extracting data from web pages, social media platforms, and other online sources [69].

For example, a researcher may scrape data from social media sites such as Facebook, Twitter, or Instagram to build a dataset for HS detection in Bengali [48]. To scrape HS data from websites and social media platforms, researchers need to use web scraping tools that are designed to work with these specific platforms. Several web scraping tools, such as BeautifulSoup, Selenium or Scrapy, extract and filter text data based on predefined criteria such as hashtags, keywords, or user profiles. Researchers also can utilize APIs to obtain HS datasets from various social media platforms. There are a number of studies in which the authors used web scraping for HS data collection [70,71,72].

-

BeautifulSoup: BeautifulSoup, a Python library, extracts data from HTML and XML documents and can be used to facilitate web scraping activities on various websites, including social media platforms such as Facebook, Twitter, and Instagram [2]. To perform data scraping with BeautifulSoup, the website(s) from which one wants to scrape HS data must be identified. Next, one must inspect the website(s) to understand the HTML structure of the web pages containing HS data. Then, BeautifulSoup it used to extract the relevant HTML elements containing HS data. The extracted data must be cleaned and preprocessed to remove unwanted characters, formatting, or duplicates and analyzed to identify trends, patterns, and insights. The HTML content of the website containing HS data is retrieved using the requests library, and then BeautifulSoup parses the HTML content and finds all HTML elements that include HS data. The text content of each HS element is then extracted and stored in a list for further analysis.

-

Scrapy: The scrapy tool is a Python framework for web scraping that provides tools and libraries for building web scrapers. Scrapy can scrape data from websites, including social media platforms. First, the URLs or APIs that contain the HS data one wants to extract for each platform are identified, and then a new Scrapy project and spider are created for each platform [73].

-

Selenium: Selenium is a tool that automates web browsers, allowing developers to simulate user interactions with websites, which helps to scrape data from websites and social media platforms [74].

It is important to ensure that the web scraping procedure conforms with the terms and conditions of the website and respects user privacy and rights when utilizing web scraping technologies to gather HS data from social media platforms. To eliminate false positives and ensure that the data are trustworthy and pertinent to the research issue, data preprocessing and filtering may be needed.

c. Social Media APIs: Application programming interfaces (APIs) of social media platforms such as Twitter, Facebook, and Instagram can be used to collect Bengali text data related to HS. Several Python libraries can extract HS data from social media platforms such as Facebook, Twitter, and Instagram. For instance, the official Facebook Graph API can extract data from Facebook pages, groups, and profiles.

An API provides access to various data, including posts, comments, likes, reactions, and user profiles, which can be filtered to extract data relevant to HS [60]. Libraries such as Facebook-SDK, Facebook-scraper, social media API, and PySocialWatcher can interact with the Facebook API. Facebook-SDK is a Python wrapper for the Facebook Graph API that allows the user to authenticate requests and retrieve data from Facebook’s platform. One can use this library to extract posts, comments, and other text data from Facebook pages and groups and apply NLP techniques to analyze the text for HS.

PySocialWatcher is a Python library for monitoring social media platforms, including Facebook, for potentially harmful content, such as HS. This library uses machine learning algorithms to analyze text data and flag posts and comments that contain HS or other problematic content. In contrast, Facebook-scraper is a Python library for scraping Facebook pages and groups to extract data such as posts, comments, and reactions. Using NLP techniques, one can use this library to filter data using specific keywords or hashtags and analyze text data for HS. Moreover, the official Twitter API can extract data from Twitter, including tweets, user profiles, and other metadata. This API provides access to various endpoints, such as search APIs and streaming APIs, which can be used to extract relevant data.

Libraries such as Tweepy, Twython, and Python-twitter can be used to interact with the Twitter API [75, 76]. Tweepy is a Python library that provides convenient access to the Twitter API, offering an easy-to-use interface for sending requests to the Twitter API, handling rate limits, and interacting with Twitter data. With Tweepy, one can easily authenticate an application, search for tweets, retrieve user information, post tweets, and more. Tweepy is widely used for social media analysis, sentiment analysis, and other data science applications involving Twitter data. Python-twitter is a collection of Python scripts and libraries for accessing the Twitter API, providing a simple and flexible interface for working with Twitter data.

Moreover, Instagram Graph API can extract data from Instagram accounts, hashtags, and locations. This API provides access to various data, including posts, comments, likes, and user profiles, which can be filtered to extract data relevant to HS [77]. Libraries such as Instaloader and Instagram API can be used to interact with the Instagram API.

Installer is a third-party Python library for downloading and processing data from Instagram. This library provides a user-friendly interface for effortlessly downloading images, videos, and metadata from Instagram. The collected data can be filtered based on relevant keywords and hashtags and then preprocessed and labeled for training an HS detection model [78].

Hate speech annotation

HS annotation is largely a manual process. It typically involves a team of human annotators who read text and make a determination regarding whether the text contains language that is meant to denigrate, intimidate, or incite violence against a particular individual or group based on their race, religion, gender, sexuality, or other characteristics. Manual labeling of Bengali text for HS detection was reported in [15].

One could hire human annotators who are proficient in the Bengali language to identify Bengali HS. The annotators would read through text and label it as HS or non-HS based on predetermined criteria [79]. A research team may select a set of Bengali text documents, such as social media posts, news articles, or online comments, and have a team of expert annotators manually label them. The criteria for labeling could include explicit HS terms, offensive language, discriminatory language, or threatening language [80].

Crowdsourcing is another manual data collection and labeling method. Crowdsourcing platforms have been utilized to annotate Bengali text data for HS [81, 82]. A researcher can post annotation tasks on a platform such as Amazon Mechanical Turk [83] or CrowdFlower [84] and receive annotations from many annotators. However, the annotation quality may be inconsistent, and it may be challenging to ensure the label accuracy.

Active learning

Active Learning (AL) is a sophisticated method of data annotation that leverages model uncertainty to prioritize and select the most informative samples for labeling. Rather than passively annotating data, active learning actively involves the model in identifying which data points, when annotated, would most enhance its performance. It is a machine learning strategy that improves the process of collecting data by iteratively choosing the most useful examples to be annotated. It is also known as query learning. AL involves minimizing the quantity of labeled data needed to understand the target variable. To accomplish this, the user is asked to provide labels for the most valuable instances, resulting in a more efficient learning process with fewer examples [85].

Traditional data gathering approaches typically require substantial amounts of annotated data to train supervised ML models. Whereas AL focuses on querying samples that the model finds most uncertain or complex [86]. This method entails training an initial model using a limited amount of labeled data. The model is then used to identify data points where its predictions are least certain. Human annotators are then employed to provide labels for these data points [87]. By incorporating these newly labeled data points into the training set and retraining the model, learning becomes faster and performance increases.

Studies [88, 89] used the active learning approach for data annotation. Bengali is a low-resource language that lacks a substantial amount of data corpora. AL can be utilized to develop a domain-specific Bangla data corpus. Another study [90] adopted the AL technique to expand their labeled samples and automatically detect hate speech regarding Rohingyas (refugees in the border areas with Myanmar).

Hate speech detection methodologies

In "Introduction" section, we discussed some of the general steps in HS detection. In this section, we provide a detailed explanation of every step.

Text preprocessing

We discuss the most commonly used text preprocessing methods below.

Stemming: Stemming in Bengali text preprocessing involves reducing Bengali words to their basic form. This process involves eliminating common suffixes from Bengali words to derive their stem, a helpful technique to minimize the vocabulary size and improve the efficiency of NLP tasks such as text classification, sentiment analysis, and information retrieval. However, stemming in Bengali is a difficult task due to the complex morphology of the language.

For example, the Bengali word "করতে‡" can be stemmed to "কর" by removing the suffix "তে‡". Similarly, the word "পরে র" can be stemmed to "পর" by removing the suffix "এর". Stemming can also help to reduce dataset redundancy, making the dataset easier to analyze and interpret. In contrast, stemming may lead to the loss of information as it reduces words to their root form, resulting in the loss of nuance and context [54].

Lemmatization: Lemmatization is a technique used in Bengali text preprocessing to identify a word’s base form (lemma) by considering its inflectional and derivational morphology. Unlike stemming, which involves reducing words to their root form by removing common suffixes, lemmatization leads to more accurate results by considering the sentence’s grammatical structure.

For example, the Bengali word "লি খে ছি লে ন¨" can be lemmatized to "লি খা" by identifying its base form and considering its inflectional morphology (the suffix "ছি লে ন"). Similarly, the word "চলছে‡" can be lemmatized to "চলা" by identifying its base form and considering its inflectional morphology (the suffix "ছে‡"). There are different approaches to lemmatization in Bengali text preprocessing, including rule-based and ML-based approaches. Rule-based approaches rely on hand-crafted rules to identify the base form of a word, while ML-based approaches use statistical models trained on large corpora of Bengali text to achieve this task.

Normalization: Normalization in Bengali text preprocessing refers to converting text into a standard or canonical form that computer algorithms can easily process [91]. This preprocessing method involves several techniques, such as Unicode normalization, punctuation removal, digit normalization, and case folding. Normalization aims to ensure consistency and reduce the variability of the input text, thereby improving the accuracy of natural language processing tasks.

Tokenization: Tokenization in Bengali text preprocessing refers to breaking down a piece of text into smaller units, known as tokens, such as words, phrases, or symbols [55], that computer algorithms can efficiently process. The goal of tokenization is to simplify the text and enable more effective NLP tasks, such as information retrieval, text classification, and sentiment analysis.

For example, consider the following Bengali sentence: "আমি বাংলাদে শে থাকি ।" (I live in Bangladesh). In word tokenization, this sentence is broken down into individual words: "আমি ", "বাংলাদে শে ","থাকি ". In phrase tokenization, this sentence is broken down into phrases: "আমি বাংলাদে শে ", "থাকি ". In symbol tokenization, any symbols in the sentence, such as punctuation marks or emojis, are identified and treated as separate tokens.

Stop Word Removal: Stop word removal is a common technique used in Bengali text preprocessing that involves removing commonly used words, known as stop words, from the input text. These words are usually short, function words that do not carry much meaning and are commonly used in natural language.

Examples of some stop words in Bengali include "একটি " (a), "একটা" (one), "একজন", "তার" (her/his), "সে " (he/she), and "এবং" (and), "যে " (which), "করে " (do), "করতে " (to do), "সব" (all), "বা" (or), "কি ছু" (some), "না" (no/not), "আমরা" (we), and "তুমি " (you).

For example, in the sentence "আমি একজন শি ক্ষক।" (I am a teacher), the stop words "একজন" (one person) and "এক" (one) would be removed during the stop word removal process, as they do not convey much information on their own. The goal of stop word removal in Bengali text preprocessing is to simplify the text and improve the accuracy of NLP tasks [57]. By removing commonly used stop words, the vocabulary size and noise are minimized, enabling a more effective analysis of the meaningful words in the text.

Stop word removal is typically performed after tokenization, as it involves identifying and removing specific tokens from the input text. Stop word lists are commonly used for Bengali text preprocessing. These lists include a predefined set of stop words that are commonly used in Bengali text. In addition, these lists can be customized based on the specific context or domain of the text to ensure that relevant stop words are removed from the input text.

Emoticons and Punctuation Marks: Emoticons and punctuation marks can be essential in Bengali text preprocessing, as they convey important information about the text’s tone, sentiment, and intent. Emoticons are graphical representations of facial expressions commonly used in electronic communications to convey emotions or attitudes.

For example, in the Bengali sentence "আমি খুশি ! :-)", the exclamation mark and emoticon indicate a positive emotional tone. Punctuation marks, such as commas (,), periods (|), and exclamation (!) points, also play an essential role in Bengali text preprocessing. For example, in the sentence "আমি বাসায় রয়ে ছি ।", the period indicates the end of the sentence.

During Bengali text preprocessing, emoticons and punctuation marks may be retained or removed depending on the specific task and context [59]. For example, emoticons may be useful in sentiment analysis tasks to help identify the emotional tone of text. At the same time, punctuation marks may be removed during tokenization to simplify the text for further analysis.

Hashtag Removal: Hashtag removal is a common preprocessing technique in Bengali natural language processing to remove hashtags from text data. Hashtags are words or phrases that are preceded by the symbol ‘#’, which is commonly used in social media platforms such as Twitter, Instagram, and Facebook to categorize and organize content [2].

For example, in the Bengali tweet "#আমার_ভাল ো_লাগা_গান সে ই সুরে র মাঝে মাঝে মন খুলে যায়।" (I love the song "#আমার_ভাল ো_লাগা_গান," it opens my heart), the hashtag "#আমার_ভাল ো_লাগা_গান" can be removed during preprocessing to simplify the text to "সে ই সুরে র মাঝে মাঝে মন খুলে যায়।" (It opens my heart among those tunes). This process can help to focus on the sentiment expressed in the text without the distraction of the hashtag.

Removing hashtags during preprocessing can help simplify text and make it easier to analyze certain tasks, such as text classification, sentiment analysis, or topic modeling, where hashtags may not be relevant.

Duplicate Removal and Padding: Duplicate removal and padding are two text preprocessing techniques used in Bengali language text data to improve the data quality and reduce noise. Duplicate removal involves identifying and removing exact duplicate copies of text data that may exist within a dataset. This operation is important because duplicate text can unnecessarily increase the size of the dataset and lead to skewed results.

For example, consider the following Bengali text: "আমি বাংলা ভাল োবাসি । আমি বাংলা ভাল োবাসি । আমি বাংলা ভাল োবাসি ।" (I love Bengali. I love Bengali. I love Bengali.). After removing the duplicate text, we have: "আমি বাংলা ভাল োবাসি ।" (I love Bengali.). Padding, conversely, is a technique used to ensure that all text data are of equal length. This operation is important when working with machine learning models that require input data of uniform length.

Padding involves adding extra characters or spaces to shorter text data to make it the same length as that of more comprehensive text data. If we want to pad all text to the same length, we could add extra spaces to the end of the shorter sentence to match the length of the longest sentence in the dataset.

For example: Original text: "আমি বাংলা ভাল োবাসি ।"; Padded text: "আমি বাংলা ভাল োবাসি । ". Here, we added extra spaces to the end of the sentence to match the length of the longest sentence in the dataset. This process ensures that all the text data is of uniform length, which is required for some ML models.

Feature extraction

Now, we discuss feature extraction methods.

CountVectorizer: CountVectorizer is a feature extraction method that converts a collection of text documents into a matrix of token counts. This method considers each document as a vector of token counts, where each element represents the count of a particular word (token) in a document. The matrix contains one row per document and one column per unique token in the entire corpus. CountVectorizer, which is essentially a bag of word statistics across documents, is a simple and efficient method that can handle large datasets and is relatively easy to interpret. This method can identify Bengali terms that appear more frequently in HS documents than in non-HS documents [5]. In one study, text was tokenized using CountVectorizer, which builds a lexicon of recognized terms, and a new document was encoded using this lexicon [48].

Researchers used the machine learning-based algorithm CountVectorizer [14] to identify offensive Bengali texts. Moreover, two different vectorization methods were utilized for the experiments in one research work; one method was CountVectorizer, which considers the frequency of features. This study demonstrated that an SVM with a linear kernel performed best in terms of accuracy while an SVM with a sigmoid kernel performed the worst [42].

Term Frequency-Inverse Document Frequency (TF-IDF): TF-IDF is a powerful statistical metric for evaluating the importance of words within a corpus or document. This measure provides a score that accounts for a term’s rarity across the entire corpus as well as its frequency in a specific text, providing a thorough assessment of its importance [55]. The term frequency (TF) is derived by Eq. 1 [28].

The inverse document frequency (IDF), on the other hand, is computed using the logarithm of the ratio of the total number of texts in the sample to the number of documents where a certain word appears [48].

Finally, the TF-IDF may be formed by multiplying the TF by the IDF, which will have normalized weights.

The TF-IDF feature extraction approach removes irrelevant features, which helps minimize the corpus’s dimensionality, leading to better classification results [55]. This approach transforms textual data into a numerical format, making it easier to feed it into ML models for further analysis [67]. Moreover, one study utilized the sklearn TF-IDF vectorizer to represent communal hate towards a minority community [58].

The n-grams Method: The n-grams method refers to sequences of n words extracted from a text corpus and is a feature extraction method commonly used in NLP and ML. During an experiment [91], three types of string features, uni-gram, bi-gram, and tri-gram features, were extracted. Uni-gram features consider each word in a sentence independently, without considering the relationships between words. This type of feature does not consider the relevancy of words within a single sentence but can identify highly abusive words. On the other hand, bi-gram features consider the relationship between two consecutive words in a sentence. In the case of tri-gram features, the relationship between three successive words in a sentence is considered. This type of feature finds more of the context and meaning in a sentence, allowing it to better identify and flag abusive language [42].

A tri-gram model and word tokenization were used for text-based feature extraction [92], and a range of n-grams (\(n=1,3\)) was applied for extracting text [14]. Sazzed et al. [17] extracted unigrams and bigrams from text data and calculated the TF-IDF. The TF-IDF scores were then used as inputs for CML classifiers. In addition, Sarker et al. [56] incorporated n-grams modeling to detect antisocial comments using TF-IDF weights [58]; using this method, the authors more precisely captured the context and meaning of the text by looking at sequences of n words in comments. Another study [57] utilized an MNB classifier with TF-IDF weights for n-grams up to three.

Scholars have incorporated n-grams modeling to more accurately identify the presence of HS by considering combinations of words instead of individual words. While the word "তুই‡" may not be regarded as hateful or dehumanizing, it cannot be classified as hateful speech when analyzed using unigrams (\(n=1\)). However, when combined with other words like "খানকি র বাচ্চা" to form bigrams or trigrams (\(n=2,3\)), this word can be classified as HS. Graphical visualizations of n-grams are shown in Fig. 6.

Word2Vec: When using a neural network-based method named Word2Vec, words are represented as dense vectors that capture both their semantic and syntactic meaning. This method can represent Bengali text as a numerical vector, where each element in the vector represents embedding a unique comment in the text corpus [61]. Word2Vec is an algorithm used to generate word embeddings, which are fixed-length vector representations of words in a corpus [59]. One of the fundamental equations in the Word2Vec model is the skip-gram objective function, which is used to train a neural network. The skip-gram objective function is defined below.

where T is the total number of words in the corpus, m is the size of the context window, \(w_t\) is the center word, and \(w_{t+j}\) is the context word. The probability \(p(w_{t+j}|w_t)\) is computed using the softmax function, which inputs the dot product of the vector representations of \(w_t\) and \(w_{t+j}\).

The Word2Vec model can also be visualized using a diagram that shows how the neural network is structured and how the different layers are connected. This diagram typically includes input and output layers, one or more hidden layers, and various activation functions and weights that transform the input data into meaningful embeddings. One author used the Word2Vec model with the gensim module to train on a 30k dataset using the CBoW method, and the embedding dimension was set to 300 [49]. In another analysis, the embedding dimension was set to 16 to create the Word2Vec model, and 19,469 vocabulary words were accounted for. As a consequence, words mostly appear on two opposing edges [52]. Two Word2Vec models, referred to as Word2Vec skip-gram (W2V SG) and Word2Vec continuous bag of words (W2V CBOW) were trained using informal text and are hence referred to as informal embedding approaches. The training procedures employed 1.47 million Bengali comments from Facebook and YouTube from eight different categories [53]. The Word2Vec embedding architecture is shown in Fig. 7.

FastText: FastText is a variant of Word2Vec that can capture subword information. This approach is beneficial in languages where words can be constructed from multiple morphemes. The advantage of FastText is that it can understand the meaning of rare or misspelled words and can be used to represent out-of-vocabulary words [93]. In research work, researchers used the largest pretrained Bengali word embedding model based on FastText (BengFastTest) to test how the model performed on YouTube data [49]. Then, with the use of informal texts, two FastText embeddings, denoted as FastText skip-gram (FT SG) and FastText continuous bag of words (FT CBOW), were trained on YouTube comments [53]. Additionally, the FastText model was evaluated using lemmatization in one research project, where it was trained on Bengali articles for a classification benchmark study and showed somewhat greater accuracy. The sequences were limited to 100 words by truncating larger texts and padding shorter ones with zeros to prevent padding in convolutional layers with numerous blank vectors [50].

BERT: Bidirectional encoder representations from transformers (BERT) is a type of deep learning model where each output unit is connected to each input unit, and the strengths of the connections are determined dynamically through calculations. BERT consists of three layers (Fig. 8). Collections of tokens, special symbols, words, etc., are input into the first layer. Following the input layer, the coding layer consists of diverse multilayer transformers [94], which are based on the attention mechanism [95]. These two-way transformers are employed to encrypt input text and generate the associated output matrices. BERT is another prominent pretrained unigram strategy used to construct token embeddings.

As shown in Fig. 8, BERT allows word vectors, segment embedding vectors, and several forms of position embedding vectors. BERT models include BERT-base and BERT-large. The BERT-base model uses 12 transformer encoder blocks with 768 hidden layers and 12 self-attention heads, for a total of approximately 110 million parameters. The BERT-large model, in contrast, consists of 24 transformer encoder blocks with 24 heads of self-attention layers, and generates over 340 million parameters. The accuracy of BERT is model-dependent, with BERT-large achieving higher accuracy than that of BERT-base. However, using BERT-large requires more extensive resources, making it more computationally expensive. BERT has several advantages, including its bidirectional ability to handle contextual information extraction. Additionally, this model trains faster than many other language models and has been successfully applied in various language modeling applications [96].

Data must undergo comprehensive preprocessing before Bengali texts containing political, personal, geopolitical, and religious HS can be classified. This preprocessing step involves cleaning and transforming collected raw text data to a standardized format that can be further analyzed and modeled. Some BERT architectures used for Bengali HS detection include the monolingual Bangla BERT-base [97], multilingual BERT-cased/uncased [98], and XLM-RoBERTa models [99].

The Bangla BERT-base model is a language model that is pretrained on a large corpus of Bengali text data using the BERT architecture. RoBERTa is an enhanced version of BERT that has been optimized by using larger batch sizes and dynamic masking and training on even larger datasets. XLM-RoBERTa can understand and generate text in multiple languages, including Bengali [50]. To detect aggression in text data written in the English, Hindi, and Bengali languages, the BERT and RoBERTa models were utilized [100]. Because XLM-RoBERTa and multilingual mBERT are pre-trained on data from multiple languages, including low-resource ones such as Bengali, they have a particularly large impact. They can transfer knowledge from high-resource languages to low-resource languages thanks to this multilingual training, which enhances performance on tasks like text translation and classification.

Meta-embedding: Combining different word embeddings to produce a cohesive representation that can use each embedding’s unique advantages is known as meta-embedding. Vectors representing words in a continuous vector space-where similar words have similar representations-are called word embeddings. Combining various embedding techniques (e.g., Word2Vec, GloVe, FastText, BERT, etc.) can result in richer representations as they capture different aspects of word semantics. For languages with limited resources, meta-embedding combines multiple pre-trained embeddings from high-resource or multilingual embeddings to create a comprehensive word representation. This approach uses the semantic information encoded in pre-existing embeddings to improve word vector quality for a language with constrained linguistic resources. Let \(\textbf{E}_1, \textbf{E}_2, \ldots , \textbf{E}_n\) represent the embeddings from different sources. The meta-embedding \(\textbf{M}\) for a word w can be computed using techniques like concatenation \(\textbf{M}(w) = [\textbf{E}_1(w); \textbf{E}_2(w); \ldots ; \textbf{E}_n(w)]\), averaging \(\textbf{M}(w) = \frac{1}{n} \sum _{i=1}^{n} \textbf{E}_i(w)\), or more advanced methods like canonical correlation analysis (CCA) which finds transformations \(\textbf{W}_i\) that maximize the correlation between pairs of embeddings: \(\textbf{M}(w) = \sum _{i=1}^{n} \textbf{W}_i \textbf{E}_i(w)\). This approach effectively transfers the richness of high-resource embeddings to the target language, enhancing its word representations and compensating for the lack of native linguistic data. Hossain et al. [101] used the average meta embedding for text classification in the resource-constrained language in terms of increased accuracy. For the domain-specific text classification meta embedding application is also promising [102].

Table 3 summarizes text preprocessing and feature engineering methods used in the papers on Bengali HS detection that we surveyed.

Detection models

We identified several models that can detect HS in the Bengali language. In this section, we provide additional information regarding these models. As mentioned in "Introduction" section, model training is an essential task in Bengali language HS detection. Furthermore, an in-depth discussion of the algorithms employed by these models will be presented. In addition, graphs will be used to demonstrate the detection and classification architectures of the models. The taxonomy of each model is provided in Fig. 9. In Table 5, we provide information on the models and algorithms used as well as their performance in relation to the evaluation criteria. In Table 4, we highlight the advantages and disadvantages of each model.

Classical machine learning models (CMLMs)

In the past few years, there has been significant research in Bengali HS detection. Most studies in this area have utilized machine learning techniques to classify Bengali texts in a dataset [40, 42, 58, 63, 92]. The most commonly used approaches are the Naïve Bayes, SVM, k-nearest neighbors, decision tree, random forest, and combined techniques.

Naive Bayes (NB): NB is a widely used supervised ML algorithm based on the Bayesian theorem. A key characteristic of this classifier is its reliance on probability-based predictions, which determine the likelihood of an object being part of a particular category or class. The posterior probability P(A|B) is calculated using the Bayes theorem [103] and the equation is given as follows:

The NB classification technique examines the relationship between each attribute and the class for each instance to determine the conditional probability for their interrelationship. This probability is then used to predict an instance’s class based on its attributes’ values. In several practical applications, such as text classification and spam detection, NB classifiers have demonstrated remarkable performance [104] and can work well with a small dataset.

Support Vector Machine (SVM): The SVM is one of the most commonly used supervised machine learning techniques for classification. SVMs examine data to find patterns and are utilized for performing classification and regression analysis [105]. This method aims to identify a linear separator (hyperplane) in multidimensional space that can effectively divide the data points of two different classes until a large minimum distance is discovered. This approach is well-suited for analyzing high-dimensional data, as an accurately constructed hyperplane can lead to superior performance [106]. For instance, Akhter et al. [92], used an SVM classifier for cyberbullying detection in Bengali text, and it performed the best among other classifiers.

k-Nearest Neighbors (KNN): The KNN approach is a lazy way of learning that may be applied to situations requiring classification as well as regression-based prediction. When using an instance-based learning approach, computation is performed until the classification task is completed. In this approach, local approximation is accounted for, and it is assumed that similar data are close together [107]. The KNN classifier records all the existing data in a given dataset and classifies a new data point based on its similarity to the existing data. The classical KNN classifier utilizes a distance function such as the Euclidean distance to measure the distance between two data points [108]. The equation of the Euclidean distance between two data points A and B is given by:

where d is the number of dimensions of the data points. By calculating the distances between data points, we can obtain the nearest points, i.e., neighbors.

Logistic Regression (LR): LR is a statistical technique that uses a probabilistic approach in which the classifier uses a logistic function to evaluate the relationship between a dependent variable as a target class and one or more independent variables or features for a given dataset [109]. A logistic function is also referred to as a sigmoid function. This approach provides probabilistic values that lie between 0 and 1. LR is a more effective substitute for linear regression, which applies linear models to each class and makes forecasts for new instances based on majority voting [110]. Logistic regression is applied mainly to solve classification problems. In several studies [40, 59], LR was implemented along with other algorithms to identify toxic and abusive Bengali comments.

Decision Tree (DT): A DT is a supervised learning algorithm that employs a tree-like structure to represent decisions and their potential outcomes by consolidating a set of data-derived classification rules [111]. Internal nodes in a decision tree represent dataset features, branches represent decision rules, and each leaf node represents an outcome. To classify an instance, the process begins at the root of the decision tree using a top-down approach. Based on the outcome of the test, the algorithm moves down the tree to the appropriate child node, and the process is repeated until a leaf node is reached, which provides the final classification or prediction. To make sound decisions on each subset, DT algorithms recursively divide the training set by using superior feature values [112]. However, decision trees are prone to overfitting, which is a major problem. In addition, decision trees are not appropriate for continuous variables [113].

Ensemble models

Ensemble methods are a collection of techniques used to enhance the accuracy of models by combining multiple models rather than relying on a single model. By leveraging the collective predictions of these models, ensemble methods can yield significantly improved results. The use of ensemble models has contributed to the widespread adoption and popularity of ensemble methods in the field of machine learning. An ensemble method employs various modeling algorithms or uses different training datasets. The ensemble model then combines the predictions from each model to generate a final prediction for new, previously unseen data. The primary objective of ensemble modeling is to reduce the generalization error and enhance the prediction accuracy. Ensemble approaches effectively mitigate prediction errors by ensuring that the base models are diverse and independent. This approach leverages the collective wisdom of the models to arrive at more robust predictions. Despite comprising multiple base models, an ensemble model functions and performs as a unified entity. Consequently, ensemble modeling techniques are widely employed in practical data mining solutions.

Ensemble methods are widely regarded as the most advanced solution for numerous machine learning problems [114]. Ensemble models are commonly used in data science and machine learning applications because they can produce more accurate and reliable results than those of any single model alone. To enhance the accuracy of a multiclass classifier, researchers [52] have utilized ensemble model techniques that involve binary classifiers. Multiple models were trained, and their predicted results leveraged for all comments. Several supervised machine learning algorithms were employed, including the random forest, SVM, KNN, and Naïve Bayes algorithms. Notably, the SVM algorithm outperformed the other classifiers, achieving an improved accuracy of 85%.

Ensemble architecture with two levels of classifiers for HS detection [115]

Random Forest (RF): The random forest (RF) classifier is an ensemble of decision trees used for regression, classification, and other tasks. This classifier trains a large number of decision trees and then outputs the class that represents the mode of the classes (in the case of classification) or mean prediction of the individual trees (in the case of regression) [116]. By increasing the number of decision trees in a random forest, one can improve the model’s accuracy and reduce the likelihood of overfitting. In text classification, RF classifiers are well suited for dealing with high-dimensional noisy data [117]. In addition, an RF classifier can assist in determining feature importance, which can aid in better understanding underlying data patterns.

Tuning RF hyperparameters, such as the maximum tree depth, is critical for attaining optimal performance for a specific task. However, hyperparameter tuning can be computationally expensive and time-consuming, particularly when working with large datasets or high-dimensional data. In a study by Islam et al. [63], an RF with a TF-IDF vectorizer was applied to detect abusive text and HS in Bengali text on social media platforms. There are several ensemble models. Some of these models are discussed here. Figure 10 shows the most popular ensemble methods graphically. The architecture used by [115] for Bangali HS detection is shown in Fig. 11.

Extreme Gradient Boosting (XGBoost): XGBoost is a tree-based ensemble method that utilizes a gradient-boosting machine learning framework [118] for solving regression and classification problems. Unlike the random forest (RF) approach, XGBoost employs distinct strategies for constructing and ordering of decision trees, handling feature importance, and combining the results of multiple trees. XGBoost follows a level-wise approach to constructing trees, beginning with a single root node and incrementally expanding the tree in a breadth-first manner. In contrast, an RF method builds trees independently and combines their predictions through voting or averaging.