Abstract

A high-dimensional and incomplete (HDI) matrix is a typical representation of big data. However, advanced HDI data analysis models tend to have many extra parameters. Manual tuning of these parameters, generally adopting the empirical knowledge, unavoidably leads to additional overhead. Although variable adaptive mechanisms have been proposed, they cannot balance the exploration and exploitation with early convergence. Moreover, learning such multi-parameters brings high computational time, thereby suffering gross accuracy especially when solving a bilinear problem like conducting the commonly used latent factor analysis (LFA) on an HDI matrix. Herein, an efficient annealing-assisted differential evolution for multi-parameter adaptive latent factor analysis (ADMA) is proposed to address these problems. First, a periodic equilibrium mechanism is employed using the physical mechanism annealing, which is embedded in the mutation operation of differential evolution (DE). Then, to further improve its efficiency, we adopt a probabilistic evaluation mechanism consistent with the crossover probability of DE. Experimental results of both adaptive and non-adaptive state-of-the-art methods on industrial HDI datasets illustrate that ADMA achieves a desirable global optimum with reasonable overhead and prevails competing methods in terms of predicting the missing data in HDI matrices.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

An increasing number of industrial applications have focused on exploring high-dimensional and incomplete (HDI) matrices in the artificial intelligence era including e-commerce recommendation systems, social networks analysis, biometric feature recognition etc. [1,2,3,4,5]. The missing items in an HDI matrix are probably the most valuable information that can be exploited by latent factor analysis [6], matrix factorization [7], collaborative filtering [8], and neural network-related models [9]. They are constructed to approximate the high-dimensional data with low-dimensional features that can represent the unobserved data in the HDI matrix.

Latent factor analysis (LFA) model is a nontrivial model for big data analysis problems, which represents the HDI matrix with magnitude lower-dimensional latent factor matrices. From the learned features of users and items in the latent matrices, missing items of interested users can be acquired when meeting a goal built as an objective function. To date, there are symmetric, non-negative, dynamic, and deep latent factor models, etc. [10,11,12,13,14]. Song et al. [10] integrate triple-factorization-based symmetric into a nonnegative latent factor model for a lower expenditure of time and storage space. Luo et al. [11] establish an ingenious method of unconstrained non-negative latent factor analysis method through a single-element-dependent mapping function. Bong et al. [12] focus on the cross-correlations among the latent variables in neural recordings, the dynamic high-dimensional time series data, to capture target factors. Wu et al. [14] provide a deep-structured model that successively connects multiple latent factor models with computational complexity linearly related to its layer count.

In practical application, however, extra parameters in these models unavoidably affect their performance. Recently, evolutionary computation-based methods, one of the most suitable and effective adaptive techniques with the swarm intelligence, arose. Differential evolution (DE), innovatively proposed by Storn and Price for solving the Chebyshev polynomials, is a simple yet efficient global numerical optimization evolutionary algorithm [15]. Instead of mimicking biological or social behaviors of ants [16], birds [17], bees [18], or humans [19], DE directly inherits the mechanisms of evolutionary: mutation, crossover, and selection. In fact, these three mechanisms are also included in a genetic algorithm (GA) [20], but DE directly employs the parent individuals to generate the offspring in which the gene representation process is omitted. Mutation, the decisive mechanism of DE, determines the range of generated offspring. For instance, with different selection mechanism of fundamental individual, there are standard DE (DE/rand/1), DE/best/1, DE/cur-to-best/1, DE/best/2, DE/rand/2, DE/rand-to-best/1, and DE/rand-to-best/1 schemes [21]. However, offspring random selection operation in mutation mechanism restricts its exploration and exploitation abilities, thereby inducing search stagnation and premature convergence. Engaged by this problem, many studies propose new models like DEHM [22], GA-KMEANS [23], and NBOLDE [24]. All these methods enhance the performance of convergence more or less, but they did not focus on the internal operation of mutation mechanism.

To solve multi-parameter adaptive latent factor analysis on an HDI matrix with the accuracy and efficiency, this paper presents an efficient annealing-assisted differential evolution for adaptive multi-parameter latent factor analysis (ADMA) utilizing the standard DE to train parameters. To further improve its efficiency, with the probability of the crossover in DE, some unchanged individuals will not participate in the evaluation process, which efficiently reduces the total computational time in DE. In addition, a physical mechanism annealing is further incorporated to help the model overstep its local saddle points, thereby receiving a desirable performance in predicting missing data. Contributions of this study can be summarized as follows:

-

1)

An ADMA algorithm, an adaptive multi-parameter latent factor analysis on an HDI matrix, witch adopts the philosophy of annealing mechanism to improve the exploration and exploitation abilities of DE;

-

2)

Efficient self-adaptive mechanism for multi-parameter estimation in the dynamic training process of the proposed latent factor analysis model;

-

3)

Improved DE for analyzing HDI matrices in real industrial applications.

The rest of this paper is organized as follows. Section II illustrates the preliminaries, Section III introduces the ADMA model, Section IV analyzes the performance of the ADMA algorithm as well as the state-of-the-art, and lastly, the work is conducted in Section V.

Related work

Parameter selection has been revealed as one of the most significant challenges especially in big data analysis tasks [25,26,27,28]. Manual fine-tuning of the parameters is inevitably time consuming and cannot achieve high accuracy in capricious industrial applications. Along this line, parameter adaptable algorithms are proposed as a promising approach to address the problem. Various techniques have been developed for parameter adaptive. Pertaining to traditional optimization algorithm, Miao et al. [29] proposed a novel parameter-adaptive variational mode decomposition (VMD) by grasshopper optimization algorithm (GOA) applied to compound-fault diagnosis, which can acquire a competitive decomposition results more efficiently. It can significant decline the overhead triggered by the long-term and harsh operation environment. Moreover, machine learning related methods are also utilized to solve this problem. For example, Xiao et al. [30] employ an unsupervised reinforcement learning to dynamically acquire the parameters in navigation systems of robots.

Among the parameter adaptive algorithms, three main categories of their adaptive mechanisms, which is mentioned in Table 1, can be concluded as follows:

-

1)

Analytical approach-based. The motivation of analytical approach is controlling the updating process by analyzing the objective function in a big data analysis task. El-Naggar et al. [31] firstly propose a simulated annealing approach-based solar cell model parameters estimation method. Na et al. [32] estimate the time-varying parameters from parameter estimation errors that drive several new adaptive laws with a faster convergence rate. Noticeable, analytical approach-based mechanisms are efficient and concise, but it is unavoidable to set internal assumptions precisely in advance.

-

2)

Dynamic optimization method-based. For a more intelligent parameter adaptation, some dynamic optimization methods are employed into the parameters training, such as the Newton–Euler (NE) method and the stochastic gradient descent (SGD) method. Yang et al. [33] identify dynamic parameters of robot systems by a robot control scheme based on the NE method accelerating the convergence speed. Kapetina et al. [34] propose a gradient-based parameter estimation technique that can track sufficiently slow changes in process and parameters. Although dynamic optimization method-based methods are intelligent in searching optimal solutions, the searching results are highly sensitive to their initialization and searching knowledge.

-

3)

Evolutionary computation-based. Despite the methods based on dynamic optimization rules, using evolutionary algorithms such as ant colony optimization (ACO) [35], particle swarm optimization (PSO) [36], gene and brain storm optimization (BSO) [37], etc. is an advanced genre in parameter adaptable algorithms. For example, Luo et al. [36] incorporate PSO into the latent factor analysis that makes the big data analysis convergence faster than traditional SGD-based mechanism. The evolutionary computation-based method neither tracks error with bound nor require the knowledge of dynamics. Unfortunately, the evolution process is time consuming and has premature convergence.

Peliminaries

Problem statement

The key problem of finding an LFA on an HDI scoring matrix is stated as follows:

Definition 1.

Suppose M and N are entity sets of users and items respectively, each of the entity m ∈ M grades the entity n ∈ N with the score zm,n that constitutes the HDI scoring matrix Z|M|×|N|; The entities in an HDI matrix can be divided into two sets Λ and Γ, where the known entry set Λ is far less in size than that of the unknown entry set Γ, namely, |Λ| ≪|Γ|.

Definition 2.

Suppose there are P|M|×D and Q|N|×D latent factor matrices for M and N respectively, from which a rank-d matrix Ẑ = PQT is learned to approximate Z via an LFA model. With these latent factor matrices, the dimensional space of the approximation matrix Ẑ of an HDI matrix is far less than that of entities of users and items, i.e., D ≪ min {|M|, |N|}.

The objective of the LFA model is to predict unknown entities in Γ via learned latent factor matrices P and Q from the limited known entry set Λ. Along this line, the unknown information in an HDI matrix can be acquired, which is theoretically expressed as solving the following Euclidean distance measure problem [10, 11]:

where zm,n represents a known element in HDI matrix Z, pm,d demotes the m-th row vector of P in the d-th dimension, while qn,d denotes the n-th row vector of Q in the d-th dimension.

Due to the ill-posed characteristic of solving (1), the commonly used Tikhonov regularization is employed to fight against the disturbance from the model. Along this line, the fundamental Eq. (1) is accordingly extended into:

where ẑm,n = ∑D d = 1 pm,dqn,d, λP and λQ are set as regularization constants of P and Q respectively, and ||||2 denotes L2norm.

An SGD-based LFA model

An LFA model is commonly addressed by the stochastic gradient descent (SGD) algorithm that shows the stability, flexibility, and advanced feedback control in solving big data analysis issues, especially on HDI data. Based on the SGD algorithm, matrices P and Q in (2) can be updated as follows:

where εm,n = (zm,n–ẑm,n)2 + λP||pm||2 2 + λQ||qn||2 2, in which pmdemotes the m-th row vector of P and qn denotes the n-th row vector of Q, η denotes the learning rate in the SGD, and the detailed update process for (2) in (3) is derived as follows:

where errm,n = zm,n–ẑm, denotes an instant error. As noted above, there are three hyper-parameters that need to be tuned including the learning rate η in SGD and the regularization parameters λP and λQ. The model can fast converge with impressive performance when these hyper-parameters are properly tuned.

Differential evolution

As a particle swarm optimization algorithm, original differential evolution (DE) conducts its mutation, crossover, and update operations on NC particles that can be represented as set X = {xi = [xi,1,xi,2,…,xi,K]| xi ∊ Ω, 1 ≤ i ≤ NC} where Ω = RK is the feasible solution space. The mutation operation in t-th iteration generates a new particle \({\varvec{v}}_{i}^{t} { = }\left( {v_{{i,{1}}}^{t} ,v_{i,2}^{t} ,...,v_{i,K}^{t} } \right)\). In the original DE, the new particle is generated based on a random particle\(x_{r1,k}^{t - 1}\) in X, and a differential value of the other two random particles \(x_{r2,k}^{t - 1}\) and \(x_{r3,k}^{t - 1}\):

where r1, r2, r3 are different random constants in the range of [1,NC], γ is a scaling factor between 0 and 1.

Crossover decides whether the new particle can be inherited. With the probability of Pselect or when the operation is on the iselect-th particle, the particle \({\varvec{u}}_{i}^{t} { = }\left( {u_{{i,{1}}}^{t} ,u_{i,2}^{t} ,...,u_{i,K}^{t} } \right)\) is replaced by the mutation particle. Instead, it will inherit the original particles. It is processed as follows:

where iselect is a fixed constant in the range of [1,NC]. This guarantees that at least one particle will be inherited by the new particle.

Selection operation chooses the better particle as\({\varvec{x}}_{i}^{t}\) in the iteration t from \({\varvec{u}}_{i}^{t}\) and \({\varvec{x}}_{i}^{{t{ - 1}}}\) after comparing the evaluation results of them, which is defined as:

where f is the objective function.

After limited iterations for all particles, such as, meeting the maximal iteration count or multiple rounds of updates unchanged, the algorithm meets the termination criteria and acquires a final solution.

Methods

An annealing-assisted differential evolution algorithm

To further improve the performance of DE, the cosine anneal mechanism is incorporated into the original DE algorithm for adjusting the parental particles in mutation operation. Following the definition of the original ED, the cosine annealing mechanism can be defined as a period function within minimal and maximal value of the target xmin and xmax:

where \(x_{anneal}^{t}\) is the anneal value at t-th iteration, and the maximal iteration time is T.

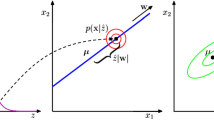

In the mutation operation, two of three parents are selected to calculate their differential values between the anneal values, and the equilibrium factor μ is set to balance them and the original differential value between the selected parents, which are defined as:

where \({\varvec{v}}_{i}^{t} { = }\left( {v_{{i,{1}}}^{t} ,v_{i,2}^{t} ,...,v_{i,K}^{t} } \right)\), r1, r2, r3 are different random constants in the range of [1,NC], and γ is the scaling factor in the range of (0,1).

To further improve its efficiency, evaluation operation in HDI data analysis task need to be conducted more concisely, which is consistent with estimating particles. Specifically, after the crossover operation, some particles are unchanged, whose evaluation results can be reserved without further estimation, in contrast, other renewed particles need to be evaluated exactly.

An ADMA model

From the previous information of the improved DE, the flowchart of the proposed ADMA can be illustrated as Fig. 1, where the red boxes represent the improvements. In order to make parameters adaptive in solving the LFA model on an HDI matrix, three-dimensional particles (learning rate η and regularization constants λP and λQ) need to learn using the improved DE, i.e., the problem dimension K = 3 in this part which means X = {xi = [xi,1,xi,2,xi,3]| xi ∊ Ω, 1 ≤ i ≤ NC} where Ω = R3.

As discussed in Sect. 3.1, the mutation particle \({\varvec{v}}_{i}^{t} { = }\left( {v_{{i,{1}}}^{t} ,v_{i,2}^{t} ,v_{i,3}^{t} } \right)\) is limited in the lower and upper bounds:

where empirical settings of these parameters’ boundaries are xmax,1 = 2–8, xmin,1 = 2–12, and xmax,2 = xmax,3 = 2–3, xmin,2 = xmin,3 = 2–7, respectively in the vector xmin and xmax.

Crossover operation is the same as the original one, which is:

where \({\varvec{u}}_{i}^{t} { = }\left( {u_{{i,{1}}}^{t} ,u_{i,2}^{t} ,u_{{i,{3}}}^{t} } \right)\), iselect is in the range of [1,NC], and Pselect is the probability of selecting mutation particles.

Based on Eqs. (3) and (4), when the crossover particle is replaced by the mutation particle, the update of P and Q is completed in the evaluation operation as follows:

where \(u_{{i,{1}}}^{t}\) represents the learning rate η on the SGD-based model, \(u_{i,2}^{t}\) and \(u_{i,3}^{t}\) denote the regularization constants λP and λQof P and Q respectively.

Selection operation chooses the particle with a better evaluation value between the replaced particle and the original one, which is defined as:

where \({\varvec{x}}_{i}^{t} { = }\left( {x_{{i,{1}}}^{t} ,x_{i,2}^{t} ,x_{i,3}^{t} } \right)\), f is the RMSE or MAE evaluation function in this method.

In the end, if the model meets the termination criteria that the best evaluation value is unchanged for five iterations or the maximum iteration time 1000 is reached, the latest optimum latent factor matrices are returned as the approximate results of the model.

Algorithm design and analysis

As is shown in Fig. 1, the flowchart of algorithm ADMA demonstrates that there are two additional mechanisms: an anneal mechanism and a screening mechanism of evaluation compared with the traditional DE algorithm. Evaluation for latent factor matrices learned from an HDI matrix in this model is the most time-consuming part, which can also be shown in the Algorithm ADMA.

Time complexity

To train the latent factor matrices in ADMA, the following steps are sequentially performed at each iteration: cosine anneal for each dimension, mutation, crossover, evaluation screening, evaluation, and replace for particles, whose total time complexity is:

where C is a constant, D represents latent feature dimension, NC denotes the particles in DE algorithm. From (14) we know that the size of the known entry set |Λ| major can affect the overall time consumption, but it is far less than the size of entity size |M| and |N|.

Space complexity

Large-scale data processing requires high storage space in most cases. Therefore the space complexity is another significant indicator of the algorithm. As for the ADMA algorithm, there are latent factor matrices and differential evolution particles that take the most space to store. More specifically, the dimension of the target latent factors P and Q, the dimension of the parameters, and the number of the particles are the primary source of storage costs, which is confirmed as follows:

where D is the latent feature dimension and |M| and |N| are the entity size of users and items. The result in (15) indicates that the storage space of ADMA is far less than that of the original rating matrix.

Experimental results and analysis

General settings

Evaluation metrics

Numerical comparison for predicting missing data in HDI matrices commonly performs via two evaluation measures: mean squared error (RMSE) and mean absolute error (MAE) [6, 38], which are:

where ẑm,n is the approximation of zm,n ∈ Γ. It is worth noting that from the previous illustration of function (13), the evaluation function is consistent with the RMSE and MAE evidencing the affinity of the evaluation function and metrics.

Experimental design

To illuminate the exploration and exploitation abilities of the proposed method, a standard scheme with a fundamental model SGD and its modified one Moment SGD are compared to show the searching ability. And to describe the adaptive searching efficiency, a general adaptive model ALF, and the standard DE adaptive model DELF are also compared in this part. Datasets from industrial applications with different density under 0.54% are adopted in our experiments. These sparse data form the target high-dimensional and incomplete matrix to be analyzed.

Datasets

As shown in Table 2, five popular datasets of industrial HDI matrices, including Douban, MovieLlens 20 M (ML20M), Flixter, Yahoo-R2, and Epinion [39,40,41,42] are used in this paper. Detailed information is described as follows:

-

1)

D1: Douban. It is composed of 16,830,839 ratings from 58,541 items in a large Chinese website Douban where 129,490 users grade the movies, music, books, etc. The data density of Douban is about 0.22% that subjects to an HDI matrix.

-

2)

D2: MovieLens 20 M (ML20M). There are 26,744 movies in the MovieLens system constituted by GroupLens. Within the ML20M dataset collected in the system, 138,493 users grade the movies 20,000,263 times scaling from 0.5 to 5, whose density is 0.54% and size is 20 M.

-

3)

D3: Flixter. The Flixter commercial website dataset has 8,196,077 ratings ranging from 0.5 to 5 on 48,794 movies graded by 147,612 users. The data density of the dataset is around 0.11%.

-

4)

D4: Yahoo-R2. Yahoo-R2 termed Yahoo is a large dataset that contains 200,000 users who rate 136,736 songs with 76,344,627 ratings ranging from 1 to 5. Its density is 0.28%.

-

5)

D5: Epinion. In the website Trustlet, Epinion dataset is collected in which 120,492 users grade 775,760 articles with 13,668,320 known entries. Notably, the density of Epinion is only 0.015%.

Conventional data assignments are also implemented in this paper that randomly divide the data into ten independent parts on average and allocate the train, validation, and test part into a ratio of 7:1:2.

Moreover, each model on these datasets are conducted ten times. Then, the averages of ten experimental results under the same conditions are analyzed in the end, which can dismiss the possible outliers.

Model settings

We compare our model with five representative state-of-the-art SGD-based LFA models on HDI datasets are compared in the following part. M1 (SGD) and M2 (Moment SGD) are LFA methods based on traditional SGD. M3 (Adam), M4 (ALF), M5 (DELF) [37, 38], and M6 (ADMA) are methods of parameter adaptive SGD-based LFA models. All model descriptions are illustrated in Table 3

The experimental parameter settings in the aforementioned methods are set in advance as follows:

-

1)

For all models, latent factor features in an LFA model are constructed with a fixed dimension D = 20, which can both maximize the representation ability and balance the computing effects.

-

2)

In M1–M4, their hyper-parameters are set as the optimal value of each model, for instance, λP = λQ = 0.03 in M1-M4; η = 0.003 in M1 and M2; the adaptive boundary of the learning rate η is from 2–12 to 2–8 in M3 and M4; For PSO mechanism in M4, model parameters are c1 = c2 = 2, the number of particles is set as 20, and the velocity boundary is vmax = 1, vmin = -−1 etc.

-

3)

In M5-M6, the learning rate η and regularization constants λP and λQ are all self-adaptive. Other parameters used in the adaptive learning process are empirically set asγ = μ = 0.1, Pselect = 0.7, NC = 20, iselect = NC/4, xmax,1 = 2–8, xmin,1 = 2–12, and xmax,2 = xmax,3 = 2–3, xmin,2 = xmin,3 = 2–7.

-

4)

Experimental termination criteria is the best evaluation value unchanged in five rounds or the reach of the maximum iteration number—1000.

Performance comparison

Experiments intuitively show the numerical performance of SGD (M1), Moment SGD (M2), Adam (M3), ALF (M4), DELF (M5), and our method GASL (M6) on industrial datasets Douban (D1), ML20M (D2), Flixter (D3), Yahoo (D4), and Epinion (D5). Table 4 and Table 5 report all their results regarding total iterations, total training time with variance, and the prediction precision evaluated by RMSE and MAE respectively. Noticeabley, the precision and total training time are in bold in each experiments. Their training processes are compared in Fig. 2 and Fig. 3 with RMSE and MAE respectively. The follwing observations can be made based on these results:

-

1)

Our model ADMA predicts the missing data in an HDI matrix more accurately than both adaptive and non-adaptive LFA models. Overall, Table 4 illustrates that the RMSE evaluation results of M6 are more accurately than M1-M5 on all five datasets D1-D5. And from Table 5, the MAE evaluation results of our model M6 are also more accurately than M1-M5 on D1-D4, and M2-M3 on D5. Take the non-adaptive model M2 as an example, the prediction accuracy is 2.648%, 1.494%, 0.7289%, 1.027%, and 0.2983% lower than that of our model on D1-D5 respectively in RMSE results. Andcompared with the adaptive model M3, the MAE prediction errors of our model M6 are 3.959%, 3.661%, 3.464%, 3.019%, and 0.7723% more accurately on D5, D1, D3, D2, and D4 respectively. Although there is an inferior MAE performace of M6 on D5, its RMSE performace is superior. Resonalble explaination for the precise prediction is that the physical mechanism annealing helps the model overstep its local saddle points, thereby achieving a desirable performance in terms of prediction accuracy.

-

2)

ADMA saves a great deal of computational time without degrading prediction accuracy. From the total training iterations shown in Table 4 and Table 5, the iteration time of ADMA plummets from previous researches, which is neither too much to be trained fully, nor too little to save the computational time. From the average results, the RMSE iteration number of our model M6, as shown in Table 4, is less than 84.53% of M1-M3 on D1-D5 without degrading the performances of prediction accuracy. Similarly, the MAE iteration of our model M6, as shown in Table 5, is less than 80.00% of M1-M3 on D1-D5 except for the iteration time of M3 on D5 that is also less than 71.55% with fast convergence. Although, the iteration of our model M6 is slightly more than that of M4, our model breaks the bottleneck of the premature shortcoming in M4 that makes the model stop early before reaching its optimal value.

-

3)

ADMA is increasing rapidly in convergence with relatively higher computational efficiency. Another advantage of an ADMA is that it converges fastly. From the convergence curves drawn in Fig. 2 and Fig. 3 for RMSE and MAE training process respectively, the yellow triangle convergent curves represents our model M6, which is plummeting with limited number of iterations in a relatively short time on all HDI datasets. On the contrary, M1-M3 colored as purple, light blue, and blue have a slower rate of convergence on D1-D5, where the preset maximun is even reached forcing to stop training untimely and resulting in suboptimal prediction accuracy. The M4 that adapts the learning rate of an LFA model colored as brown in Fig. 2 and Fig. 3 is the fastest converged algorithm. Unfortunately, the accuracy of M4 is lower than our model M6 and other models in a number of cases.

-

4)

Compared with traditional DE algorithm, the improved DE algorithm in ADMA avoids its premature convergence while at the same time requiring less training time. One typical improvement of an algorithm is to avoid premature convergence, thus reducing total training time. Surprisingly, they are both realized in our method M6. From the RMSE results in Table 4, the convergence time of M6 is 16.84% and 38.66% smaller than that of M5 on D2 and D3 respectively, while its prediction error is also 0.5878% and 1.313% on each data. The same situation is also shown in Table 5, the MAE convergence results of M6 is lower 17.16% and 32.52% lower than M5 on D2 and D3 respectively, while its prediction error is lower 1.131% and 1.485% on each data. It shows that the physical mechanism cosine annealing dose help the original DE-based model overstep its local saddle points, and the improved differential evolution (DE) mechanism achieves a desirable prediction efficiency.

Summary

The aforementioned results show that the proposed model ADMA (M6) can balance the exploration and exploitation well, and has better prediction accuracy when solving latent factor analysis (LFA) on HDI matrices. It also helps reduce a great number of iterations without degrading prediction accuracy. Moreover, the convergence of an ADMA is increasing rapidly, thereby maintaining a relatively higher computational efficiency. The model overstep its local optimum effectively with the support of the annealing mechanism. And the improved DE algorithm in ADMA avoids its premature convergence. The resulting ADMA is an efficient parameter self-adaptive HDI data analysis model.

Discussion

On the one hand, ADMA adaptively learn latent factors by the use of DE algorithm firstly. Although the basic offspring random selection operation in mutation mechanism restricts its exploration and exploitation abilities, we innovatively control the internal operation by a periodic equilibrium mechanism thereby improving its search and convergence abilities. Compared with other non-adaptive and adaptive related methods, the proposed method has better properties in terms of predicting the missing data in HDI matrices with reasonable overhead. On the other hand, ADMA estimates parameters basically under the stochastic mechanism. However, the SGD mechanism is easy to fall into the local optimum. Therefore, other learning mechanisms like Newton methods, alternating least squares (ALS), and alternating direction method (ADM) can be employed to dismiss the disadvantage of the mechanism used in ADMA. We plan to explore these alternative mechanisms to further enhance our model ADMA in our future work.

Conclusion

An efficient annealing-assisted differential evolution for multi-parameter adaptive latent factor analysis (ADMA) is proposed in this paper. By incorporating the physical mechanism annealing, it efficiently improves the fundamental differential evolution (DE) mechanism and helps the latent factor analysis (LFA) model analyze high-dimensional and incomplete (HDI) matrix adaptively. Experiments on five popular industrial HDI datasets show that our proposed model ADMA has better performance in terms of prediction accuracy and convergence speed. Integrating the annealing mechanism, ADMA assists the model overstep its local optimum and the improved DE algorithm effectively, which is increasing rapidly in convergence and reduces a great number of iterations without degrading prediction accuracy. Although on some datasets, ADMA has relatively longer training time, it substantially increases the prediction accuracy without requiring the extremely costly multi-parameter computing. As a result, ADMA outperforms the state-of-the-art in terms of predicting missing data in HDI matrices in real industrial applications.

In the future, there are at least three research directions worth studying. First, with time perspective, this method focus on a periodic equilibrium mechanism. To further improve particles searching accuracy, space related accelerate mechanisms can also be analyzed and incorporated into the method. Second, as regard to adaptive learning efficiency, we employ DE algorithm as the main update strategy. Meanwhile, other state of the art algorithms that have different advantages is worth studying according to different tasks. And last, but certainly not least, fundamental stochastic gradient descent mechanism, generally used in the HDI data analysis methods, could also be improved, and then strengthen the big data analysis field.

Availability of data and materials

The dataset has no restrictions that all the data can be acquired in the related site.

References

Thudumu S, Branch P, Jin J, Singh JJ. A comprehensive survey of anomaly detection techniques for high dimensional big data. J Big Data. 2020;7(1):1–30.

Angskun J, Tipprasert S, Angskun T. Big data analytics on social networks for real-time depression detection. J Big Data. 2022;9(1):1–15.

Tarus JK, Niu Z, Mustafa G. Knowledge-based recommendation: a review of ontology-based recommender systems for e-learning. Artif Intell Rev. 2018;50(1):21–48.

Peng S, Wang G, Xie D. Social influence analysis in social networking big data: opportunities and challenges. IEEE Network. 2016;31(1):11–7.

Giatrakos N, Alevizos E, Artikis A, Deligiannakis A, Garofalakis M. Complex event recognition in the big data era: a survey. VLDB J. 2020;29(1):313–52.

Luo X, Liu H, Gou G, Xia Y, Zhu Q. A parallel matrix factorization based recommender by alternating stochastic gradient decent. Eng Appl Artif Intell. 2012;25(7):1403–12.

Bisot V, Serizel R, Essid S, Richard G. Feature learning with matrix factorization applied to acoustic scene classification. IEEE/ACM Trans Audio Speech Lang Process. 2017;25(6):1216–29.

Adomavicius G, Tuzhilin A. Toward the next generation of recommender systems: a survey of the state-of-the-art and possible extensions. IEEE Trans Knowl Data Eng. 2005;17(6):734–49.

Y. Chen, X. Hu and Y. Hui. Correlation filter network model performance analysis. 2020 International Conference on Computer Network, Electronic and Automation (ICCNEA), 2020. P. 254–258.

Song Y, Li M, Luo X, Yang G, Wang C. Improved symmetric and nonnegative matrix factorization models for undirected, sparse and large-scaled networks: a triple factorization-based approach. IEEE Trans Industr Inform. 2020;16(5):3006–17.

Luo X, Zhou M, Li S, Wu D, Liu Z, Shang M. Algorithms of unconstrained non-negative latent factor analysis for recommender systems. IEEE Trans Big Data. 2021;7(1):227–40.

H. Bong, Z. Liu, Z. M. A. Ren, Smith, V. Ventura, and R. E. Kass. Latent dynamic factor analysis of high-dimensional neural recordings. In: NeurIPS, 2020.

Li Q, Xiong DW, Shang MS. Adjusted stochastic gradient descent for latent factor analysis. Inf Sci. 2022;588:196–213.

Wu D, Luo X, Shang M, He Y, Wang G, Zhou M. A deep latent factor model for high-dimensional and sparse matrices in recommender systems. IEEE Trans Syst Man Cybern Syst. 2021;51(7):4285–96.

Price KV. Differential evolution. Handbook of optimization. Heidelberg: Springer; 2013. p. 187–214.

Dorigo M, Birattari M, Stutzle T. Ant colony optimization. IEEE Comput Intell Mag. 2006;1(4):28–39.

Poli R, Kennedy J, Blackwell T. Particle swarm optimization. Swarm Intell. 2007;1(1):33–57.

DT Pham, A Ghanbarzadeh, E Koç, S Otri, S Rahim, and M Zaidi. The bees algorithm—a novel tool for complex optimization problems. Intelligent production machines and systems. Amsterdam: Elsevier Science Ltd. 2006.

Shi YH, Brain storm optimization algorithm in objective space. IEEE Congress on Evolutionalry Computation (CEC). Sendai. 2015;2015:1227–34.

Choi K, Jang D, Kang S, Lee J, Chung T, Kim H. Hybrid algorithm combing genetic algorithm with evolution strategy for antenna design. IEEE Trans Magn. 2016;52(3):1–4.

Das S, Suganthan PN. Differential evolution: a survey of the state-of-the-art. IEEE Trans Evol Comput. 2011;15(1):4–31.

Zhou Z, Abawajy J, Shojafar M, Chowdhury M. DEHM: an improved differential evolution algorithm using hierarchical multistrategy in a cybertwin 6G network. IEEE Trans Industr Inform. 2022;18(7):4944–53.

Baldassi C. Recombinator-k-means: an evolutionary algorithm that exploits k-means++ for recombination. IEEE Trans Evol Comput. 2022. https://doi.org/10.1109/TEVC.2022.3144134.

Deng W, Shang S, Cai X, Zhao H, Song Y, Xu J. An improved differential evolution algorithm and its application in optimization problem. Soft Comput. 2021;25(7):5277–98.

Zhang X, Ge Z. Local parameter optimization of LSSVM for industrial soft sensing with big data and cloud implementation. IEEE Trans Industr Inform. 2020;16(5):2917–28.

Zhang Q, Yang LT, Chen Z, Li P, Bu F. An adaptive dropout deep computation model for industrial IoT big data learning with crowdsourcing to cloud computing. IEEE Trans Industr Inform. 2019;15(4):2330–7.

Fikri N, Rida M, Abghour N, Moussaid K, El Omri A. An adaptive and real-time based architecture for financial data integration. J Big Data. 2019;6(1):1–25.

Taheri S, Goudarzi M, Yoshie O. Learning-based power prediction for geo-distributed Data Centers: weather parameter analysis. J Big Data. 2020;7(1):1–16.

Miao Y, Zhao M, Lin J. Identification of mechanical compound-fault based on the improved parameter-adaptive variational mode decomposition. ISA Trans. 2019;84:82–95.

Xiao X, Liu B, Warnell G, Fink J, Stone P. Appld: Adaptive planner parameter learning from demonstration. IEEE Robot Autom Lett. 2020;5(3):4541–7.

El-Naggar KM, AlRashidi MR, AlHajri MF, Al-Othman AK. Simulated annealing algorithm for photovoltaic parameters identification. Sol Energy. 2012;86(1):266–74.

Na J, Xing Y, Costa-Castelló R. Adaptive estimation of time-varying parameters with application to roto-magnet plant. IEEE Trans Syst Man Cybernetics Syst. 2021;51(2):731–41.

Yang C, Jiang Y, He W, Na J, Li Z, Xu B. Adaptive parameter estimation and control design for robot manipulators with finite-time convergence. IEEE Trans Industr Elect. 2018;65(10):8112–23.

Kapetina MN, Rapaić MR, Pisano A, Jeličić ZD. Adaptive parameter estimation in LTI systems. IEEE Trans Automatic Control. 2019;64(10):4188–95.

Kumar A, Kumar A. Adaptive management of multimodal biometrics fusion using ant colony optimization. Inform Fusion. 2016;32:49–63.

Luo X, Yuan Y, Chen S, Zeng N, Wang Z. Position-transitional particle swarm optimization-incorporated latent factor analysis. IEEE Trans Knowled Data Eng. 2020. https://doi.org/10.1109/TKDE.2020.3033324.

Li Q, Shang M. BALFA: A brain storm optimization-based adaptive latent factor analysis model. Inf Sci. 2021;578:913–29.

S. -L Chen, Y. Yuan, and J. Wang. An adaptive latent factor model via particle swarm optimization for high-dimensional and sparse matrices. 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), 2019. p. 1738–1743.

M. Shang, Y. Yuan, X. Luo and M. Zhou. An α -β -Divergence-Generalized Recommender for Highly Accurate Predictions of Missing User Preferences. In: IEEE Transactions on Cybernetics, 2021.

Luo X, Qin W, Dong A, Sedraoui K, Zhou M. Efficient and high-quality recommendations via momentum-incorporated parallel stochastic gradient descent-based learning”. IEEE/CAA J Automatica Sinica. 2020;8(2):403–11.

Takács G, Pilászy I, Németh B, Tikky D. Scalable collaborative filtering approaches for large recommender systems. J Mach Learn Res. 2009;10:623–56.

Koren Y, Bell R, Volinsky C. Matrix-factorization techniques for recommender systems. IEEE Comput. 2009;42(8):30–7.

Acknowledgements

We thank Chongqing University of Posts and Telecommunications, Singapore Management University, and Chongqing Key Laboratory of Big Data and Intelligent Computing, Chongqing Institute of Green and Intelligent Technology for financing this study. Then we thank all the staff members of Chongqing Key Laboratory of Big Data and Intelligent Computing, Chongqing Institute of Green and Intelligent Technology. Finally, I want to thank my parents and friends who always accompany and support me.

Funding

This research is supported in part by grants from the Key Cooperation Project of Chongqing Municipal Education Commission (HZ2021017) and nsfc 62072429. The collaborative fund is from Chongqing University of Posts and Telecommunications, and Chongqing Key Laboratory of Big Data and Intelligent Computing, Chongqing Institute of Green and Intelligent Technology.

Author information

Authors and Affiliations

Contributions

QL: Investigation, conceptualization, visualization, formal analysis, methodology, software, validation, writing- original draft preparation, writing-reviewing and editing. GP: writing-reviewing and editing, supervision. MS: resources, data curation, supervision, funding acquisition. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Q., Pang, G. & Shang, M. An efficient annealing-assisted differential evolution for multi-parameter adaptive latent factor analysis. J Big Data 9, 95 (2022). https://doi.org/10.1186/s40537-022-00638-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s40537-022-00638-8