Abstract

We propose in this paper a Proper Generalized Decomposition (PGD) solver for reduced-order modeling of linear elastodynamic problems. It primarily focuses on enhancing the computational efficiency of a previously introduced PGD solver based on the Hamiltonian formalism. The novelty of this work lies in the implementation of a solver that is halfway between Modal Decomposition and the conventional PGD framework, so as to accelerate the fixed-point iteration algorithm. Additional procedures such as Aitken’s delta-squared process and mode orthogonalization are incorporated to ensure the convergence and stability of the algorithm. Numerical results on the accuracy, time complexity, and scalability of the reduced-order model (ROM) are provided to demonstrate the performance of the new solver when applied to the dynamic simulation of a three-dimensional structure.


Introduction

Despite remarkable progress achieved in Computational Sciences and Engineering over the past decades, it is still necessary to develop innovative numerical methods to simplify models and make them easier to interpret for researchers and engineers in design offices. In linear structural dynamics, Modal Decomposition [15] with truncation undoubtedly remains the most popular technique among engineering analysis tools. It relies on computing the eigenvectors that describe the natural response of a given system. Unfortunately, not all eigenvectors are necessarily relevant to the structural response under external loads; conversely, a large number of them may be required to describe the mechanical behavior, which is undesirable in reduced-order modeling. Alternative approaches have been proposed for model reduction in structural dynamics, such as the Proper Orthogonal Decomposition (POD) and the Proper Generalized Decomposition (PGD). The POD method [4, 19, 21] has been successfully applied to linear and nonlinear structures subjected to transient loads and can be viewed as an a posteriori approach, in the sense that it takes as input the states of the full-order model at different time steps, the so-called snapshots, in order to extract the dominant spatial and temporal modes in the data. By contrast, the PGD method [5, 7, 12] constructs a reduced basis on the fly, eliminating the need for prior knowledge of the solution. In that respect, the PGD method is an a priori approach and can be regarded as a solver: one simultaneously solves the problem and constructs a reduced approximation subspace.

The strength of the PGD strategy resides in the way it reduces high-dimensional problems into subproblems of lower dimensions. The theoretical complexity of PGD solvers is also lower than that of conventional solvers. Indeed, following [12], one observes that if a solution is sought in a space of dimension d, the complexity of conventional solvers scales exponentially with d while that of PGD solvers scales linearly. However, the performance of the PGD approach using a space-time separation for transient structural dynamics has often been considered unsatisfactory [5, 7]. One reason is that the fixed-point algorithm employed by Galerkin-based PGD solvers tends to exhibit poor convergence, if it converges at all [7]. In fact, it is open to question whether the PGD framework using space-time separability is suitable for solving the wave equation or, more generally, second-order hyperbolic problems. The authors in [6, 7] have proposed an alternative to the Galerkin-based PGD, namely the minimal residual PGD, which consistently converges, as proved in [3].

On another note, the PGD framework has also been extended to perform basic operations, such as divisions, or more complex operations, such as solving linear systems of algebraic equations, by leveraging the principle of variable separation. The authors in [13] have thus created a versatile toolbox of PGD algebraic operators, which has been used in a non-intrusive manner to solve parametric eigenproblems arising, for instance, in automotive applications [10]. Furthermore, notable advances in the development of the PGD framework have been achieved using separated representations with respect to the space and frequency variables [22, 24]. Moreover, the PGD framework provides a means to take into account the parametric variability of a system due to, for example, material properties or geometric topology. In this respect, the advantage of PGD solvers seems clear when geometric or material parameter separation is at stake, offering a considerable reduction of the computational complexity [5, 12]. However, the space-frequency formulation does not necessarily provide direct insight into the transient behavior of the system. While it determines the response at specific frequencies, it may fail to accurately capture time-dependent loads or dynamical events.

This was the motivation for our previous work [28], in which we introduced a new space-time Galerkin-based PGD solver built on the Hamiltonian formalism, leading to an algorithm that was shown to be more stable than the Galerkin-based solver mentioned above. The novelty of that solver lay in the implementation of procedures that ensure the linear independence of the modes and the stability of the reduced-order model while the new modes are progressively computed. However, the relevance of a reduced-order modeling technique stems from its ability to outperform a conventional Finite Element (FE) model in computational efficiency, while incurring a relatively low error with respect to the FE solution of the full model. Although space-time PGD solvers have so far demonstrated a satisfactory level of accuracy with a rather low number of modes, their computational efficiency is far from competitive [5]. In this paper, we develop a novel space-time PGD solver with a focus on computational efficiency. The integration of the PGD strategy within the Hamiltonian formalism is revisited, and we comment on the preservation of the symplectic structure with respect to the time parameter by the reduced model. The Aitken transformation [2] is then introduced to accelerate the convergence of the fixed-point algorithm; we will show that it significantly reduces the number of iterations required for convergence. Additionally, a new orthogonal projection, more robust than the one formerly implemented, is performed on the spatial modes to enforce their linear independence and ensure the stability of the algorithm. Yet, the computational cost of such solvers stems mainly from the problem in space, which needs to be assembled and factorized at each fixed-point iteration. An original approach has been developed to avoid repeated matrix factorizations. It consists in precomputing approximate eigenpairs of the operators, namely the Ritz pairs [27], which provide a subspace in which the problem in space remains diagonal throughout the fixed-point iterations. In the manner of Modal Decomposition, all computations are then carried out in the subspace spanned by the Ritz vectors [16], which drastically decreases the computational burden while capturing most of the information from the full model with only a small number of modes. Numerical examples dealing with the dynamical behavior of a 3D structure are presented to demonstrate the efficiency of the proposed approach.

The paper is organized as follows: in “Model problem” section, we describe the model problem and its spatial Finite Element approximation. In “The Hamiltonian formalism” section, we present the Hamiltonian formalism and its symplectic structure. The PGD approaches are described in “PGD reduced-order modeling” section along with the Aitken acceleration and the orthogonal projectors applied to the fixed-point algorithm, as well as the projection of the PGD approximation onto the subspace spanned by the Ritz vectors. The numerical experiments are presented in “Numerical examples and discussion” section to illustrate the performance of the proposed approach. We finally provide some concluding remarks in “Conclusions” section.

Model problem

Strong formulation

The model problem we shall consider is that of elastodynamics in three dimensions under the assumption of infinitesimal deformation. Let \(\Omega \) be an open bounded subset of \({\mathbb {R}}^{3}\), with Lipschitz boundary \(\partial \Omega \), and let \({\mathcal {I}}= (0, T)\) denote the time interval. The boundary \(\partial \Omega \) is assumed to be decomposed into two portions, \(\partial \Omega _D\) and \(\partial \Omega _N\), such that \(\partial \Omega = \overline{\partial \Omega _D \cup \partial \Omega _N}\). The displacement field \(u: \bar{\Omega } \times \bar{{\mathcal {I}}} \rightarrow {\mathbb {R}}^{3}\) satisfies the following partial differential equation:

$$\rho \frac{\partial ^2 u}{\partial t^2} - \nabla \cdot \sigma (u) = f \quad \text {in } \Omega \times {\mathcal {I}}, \tag{1}$$

where, in the case of infinitesimal deformation, the stress tensor \(\sigma (u)\) and strain tensor \(\varepsilon (u)\) are given by:

$$\sigma (u) = {\mathbb {E}} : \varepsilon (u), \qquad \varepsilon (u) = \frac{1}{2} \left( \nabla u + \nabla u^{T} \right) , \tag{2}$$

and is subjected to the initial conditions:

$$u(\cdot , 0) = u_{0}, \qquad \frac{\partial u}{\partial t}(\cdot , 0) = v_{0} \quad \text {in } \Omega ,$$

as well as to the boundary conditions:

$$u = 0 \quad \text {on } \partial \Omega _{D} \times {\mathcal {I}}, \qquad \sigma (u) \cdot n = g_{N} \quad \text {on } \partial \Omega _{N} \times {\mathcal {I}},$$

where n denotes the outward unit normal to \(\partial \Omega \). The functions \(f: {\Omega } \times {{\mathcal {I}}} \rightarrow {\mathbb {R}}^{3}\), \(u_{0}: {\Omega } \rightarrow {\mathbb {R}}^{3}\), \(v_{0}: {\Omega } \rightarrow {\mathbb {R}}^{3}\), and \(g_{N}: \partial \Omega _{N} \times {{\mathcal {I}}} \rightarrow {\mathbb {R}}^{3} \) are assumed to be sufficiently regular to yield a well-posed problem. The medium occupied by \(\bar{\Omega }\) is assumed to be isotropic, with density \(\rho \) and Lamé coefficients \(\lambda \), \(\mu \) (the material parameters may vary in space). The constitutive equation (2), written above in terms of the tensor of elasticity \({\mathbb {E}}\), thus reduces to:

$$\sigma (u) = \lambda \, \text {tr}\big ( \varepsilon (u) \big ) I_{3} + 2 \mu \, \varepsilon (u),$$

where \(I_{3} \in {\mathbb {R}}^{3 \times 3}\) is the identity matrix. In the following, we will denote the first and second time derivatives by \({\dot{u}} = \partial u /\partial t\) and \(\ddot{u} = \partial ^2 u/\partial t^2\).

Semi-weak formulation

We consider here the semi-weak formulation with respect to the spatial variable in order to construct the discrete problem in space using the Finite Element method. Multiplying (1) by an arbitrary smooth function \(u^{*}=u^{*}(x)\) and integrating over the whole domain \(\Omega \), one obtains:

By virtue of \(-\left( \nabla \cdot \sigma (u) \right) \cdot u^{*} = \sigma (u): \nabla u^{*} - \nabla \cdot \left( \sigma ( u ) \cdot u^{*} \right) \), Eq. (8) can be recast as:

Since \(\sigma (u)\) is a symmetric tensor:

and substituting the constitutive equation for \(\sigma (u)\), one gets:

Applying the divergence theorem and the boundary conditions, and choosing the test function such that \(u^*= 0\) on \(\partial \Omega _D\), the semi-weak formulation of the problem then reads: Find \(u=u(\cdot ,t) \in V\), for all \(t\in \bar{\mathcal I}\), such that:

and:

where V is the vector space of vector-valued functions defined on \(\Omega \):

Spatial discretization

We partition the domain into \(N_e\) elements \(K_e\) such that \(\overline{\Omega } = \cup _{e=1}^{N_e} K_e\) and \(\text {Int}(K_i) \cap \text {Int}(K_j) = \varnothing \), \(\forall i,j=1,\ldots ,N_e\), \(i\ne j\). We then associate with the mesh the finite-dimensional Finite Element space \(W^h\), \(\text {dim}\ W^h = s\), of scalar-valued continuous and piecewise polynomial functions defined on \(\Omega \), that is:

where \({\mathbb {P}}_k(K_e)\) denotes the space of polynomial functions of degree k on \(K_e\). Let \(\phi _i\), \(i=1,\ldots ,s\), denote the basis functions of \(W^h\), i.e. \(W^{h} = \text {span}\{\phi _{i}\}\). We then introduce the finite element subspace \(V^h\) of V such that:

and search for finite element solutions \(u_h=u_h(\cdot , t) \in V^h\), \(\forall t \in \bar{{\mathcal {I}}}\), in the form:

where the vectors of degrees of freedom, \(q_{j} \in {\mathbb {R}}^{3}\), depend on time. We introduce the set of \(n=3s\) vector-valued basis functions as:

Using the Galerkin method, the Finite Element problem thus reads:

where \(u_{0,h}\) and \(v_{0,h}\) are interpolants or projections of \(u_0\) and \(v_0\) in the space \(V^h\). The above problem can be conveniently recast in compact form as:

where M and K are the global mass and stiffness matrices, respectively, both being symmetric and positive definite:

\({\varvec{f}}(t)\) is the load vector at time t whose components are given by:

\({\varvec{q}}(t)\) is the global vector of degrees of freedom:

and \({\varvec{u}}_{0}\) and \({\varvec{v}}_{0}\) are the initial vectors:

Note that \(u_{0,i} \in {\mathbb {R}}^{3}\) and \(v_{0,i} \in {\mathbb {R}}^{3}\), \(i=1,\ldots ,s\), are vectors whose components are the initial displacements and velocities in the three spatial directions.
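As a much smaller illustration of the assembly that produces M and K, the sketch below builds the global matrices of a hypothetical one-dimensional bar discretized with linear (P1) elements, rather than the three-dimensional elasticity operators of the text. The function name `assemble_bar_p1` and its parameters are illustrative assumptions; the point is only to show element-by-element assembly and the symmetric positive definite matrices obtained once the Dirichlet node is removed.

```python
import numpy as np


def assemble_bar_p1(n_el, length=1.0, rho=1.0, E=1.0):
    """Global mass and stiffness matrices of a 1D bar with P1 elements.

    Hypothetical scalar toy analogue of M and K (not the 3D operators of
    the text). The node at x = 0 is clamped, so n_el unknowns remain.
    """
    h = length / n_el
    Me = rho * h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])  # consistent element mass
    Ke = E / h * np.array([[1.0, -1.0], [-1.0, 1.0]])        # element stiffness
    M = np.zeros((n_el + 1, n_el + 1))
    K = np.zeros((n_el + 1, n_el + 1))
    for e in range(n_el):
        dofs = [e, e + 1]                  # global indices of the element nodes
        M[np.ix_(dofs, dofs)] += Me
        K[np.ix_(dofs, dofs)] += Ke
    return M[1:, 1:], K[1:, 1:]            # eliminate the clamped (Dirichlet) node
```

For a bar clamped at one end with unit properties, the smallest eigenvalue of \(K {\varvec{u}}= \lambda M {\varvec{u}}\) approaches \((\pi /2)^2\) as the mesh is refined, which offers a quick sanity check of the assembly.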

The Hamiltonian formalism

Hamilton’s weak principle

The Hamiltonian formalism consists in modeling the motion of the system along a trajectory in the phase space by introducing the generalized coordinates \({\varvec{q}}\) and their generalized (or conjugate) momenta \({\varvec{p}}\) as independent variables. For the problem at hand, the Hamiltonian functional \(h\) reads:

Given the Hamiltonian functional \(h\) of the system, the action functional, denoted by \({\mathcal {S}}[{\varvec{q}}, {\varvec{p}}]\), is defined as:

Hamilton’s weak principle then states that the trajectory \(({\varvec{q}}, {\varvec{p}})\) of the system in the phase space should satisfy:

where \({\mathcal {S}}'[{\varvec{q}}, {\varvec{p}}]({\varvec{q}}^{*}, {\varvec{p}}^{*})\) denotes the Gâteaux derivative of \({\mathcal {S}}[{\varvec{q}}, {\varvec{p}}]\) with respect to a variation \(({\varvec{q}}^{*}, {\varvec{p}}^{*}) \in {\mathcal {Z}} \times {\mathcal {Z}}\) such that:

After Gâteaux derivation and integration by parts with respect to time, we get:

that is,

or, equivalently,

The last weak formulation of (16) leads to the so-called Hamilton’s equations:

This formulation is consistent with (12) in the sense that if we differentiate the second equation with respect to time and substitute for \(\dot{{\varvec{p}}}\) the expression given by the first equation, we recover exactly (12).
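The elimination of \({\varvec{p}}\) described above can be written out explicitly. Assuming, for illustration, the standard quadratic Hamiltonian of a linear elastic system, \(h = \tfrac{1}{2} {\varvec{p}}^{T} M^{-1} {\varvec{p}} + \tfrac{1}{2} {\varvec{q}}^{T} K {\varvec{q}} - {\varvec{q}}^{T} {\varvec{f}}(t)\) (an assumption consistent with the orthonormalization of the modes with respect to K and \(M^{-1}\) used later, not a reproduction of (15)), Hamilton’s equations and the elimination read:

$$
\begin{aligned}
\dot{{\varvec{p}}} &= -\frac{\partial h}{\partial {\varvec{q}}} = -K {\varvec{q}} + {\varvec{f}}, \qquad
\dot{{\varvec{q}}} = \frac{\partial h}{\partial {\varvec{p}}} = M^{-1} {\varvec{p}} \;\Longleftrightarrow\; {\varvec{p}} = M \dot{{\varvec{q}}}, \\
\dot{{\varvec{p}}} &= M \ddot{{\varvec{q}}} \;\Longrightarrow\; M \ddot{{\varvec{q}}} + K {\varvec{q}} = {\varvec{f}}, \qquad {\varvec{q}}(0) = {\varvec{u}}_{0}, \quad {\varvec{p}}(0) = M {\varvec{v}}_{0}.
\end{aligned}
$$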

Symplectic structure

Let us introduce \({\varvec{z}}\in {\mathcal {Z}}^2\) that vertically concatenates \({\varvec{q}}\) and \({\varvec{p}}\) such that:

The gradient of the Hamiltonian (15) then reads:

In the symplectic framework, the dynamics of the structure is modeled by the trajectory in the symplectic vector space \(({\mathbb {R}}^{2n}, \omega )\) of dimension \(2n\) for linear systems, where \(\omega \) is the so-called symplectic form defined as:

$$\omega ({\varvec{x}}, {\varvec{y}}) = {\varvec{x}}^{T} J_{2n} {\varvec{y}}, \qquad \forall \, {\varvec{x}}, {\varvec{y}} \in {\mathbb {R}}^{2n},$$

with \(J_{2n}\) the skew-symmetric operator such that:

$$J_{2n} = \begin{bmatrix} 0 & I_{n} \\ -I_{n} & 0 \end{bmatrix},$$

and \(J_{2n}^{2} = - I_{2n}\). It is then possible to recast (17) as:

$$\dot{{\varvec{z}}} = \nabla ^{\omega } h,$$

where \(\nabla ^{\omega } = J_{2n} \nabla _{\!z}\) is defined as the symplectic gradient. The Hamiltonian can be written as a sum of a quadratic form on \({\mathbb {R}}^{2n}\) and the external energy term:

with H the Hessian operator of \(h\) and \({\varvec{f}}_{z}\) such that:

It follows that one can rewrite the weak formulation (16) as:

We now introduce the notion of symplectic mapping. A symplectic mapping is a linear transformation that preserves the symplectic form \(\omega \), i.e.:

$$\omega (A{\varvec{x}}, A{\varvec{y}}) = \omega ({\varvec{x}}, {\varvec{y}}), \qquad \forall \, {\varvec{x}}, {\varvec{y}} \in {\mathbb {R}}^{2n}.$$

As a consequence, such a mapping A verifies:

$$A^{T} J_{2n} A = J_{2n}.$$

The notion can actually be generalized to rectangular matrices with the symplectic Stiefel manifold, denoted \(S_{p}(2r, 2n)\), such that:

$$S_{p}(2r, 2n) = \left\{ A \in {\mathbb {R}}^{2n \times 2r} \;:\; A^{T} J_{2n} A = J_{2r} \right\} . \tag{19}$$

Let \(({\mathbb {R}}^{2r}, \gamma )\) be a symplectic vector space, \(A \in S_{p}(2r, 2n)\) a symplectic mapping, and \({\varvec{y}}\in {\mathbb {R}}^{2r}\) such that \({\varvec{x}}= A {\varvec{y}}\). One can define a Hamiltonian for \({\varvec{y}}\):

with G its Hessian operator and \({\varvec{f}}_{y}\) the projection of the external loads on the symplectic subspace (in the case \(r\leqslant n\)), such that:

The preservation of the symplectic structure implies that \({\varvec{y}}\) is governed by Hamilton’s canonical equations, expressed hereinafter in terms of \(\gamma \) (symplectic form on \({\mathbb {R}}^{2r}\)) and g such that:

with \(\nabla ^{\gamma } = J_{2r} \nabla _{\! y}\) and Hamilton’s weak principle (16) then reads:

Discretization in time of the Hamiltonian problem

The time domain \({\mathcal {I}}\) is divided into \(n_{t}\) subintervals \({\mathcal {I}}^{i} = \left[ t^{i-1},t^{i} \right] \), \(i=1,\ldots ,n_{t}\), of size \(h_{t}=t^{i} - t^{i-1}\). The Crank–Nicolson method is then applied to (17) as detailed in the previous work [28]. The solutions given by the FEM in space, integrated with Crank–Nicolson in time, will be used as reference solutions when assessing the results of the PGD solvers.
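For readers who wish to reproduce a reference solution, the following sketch applies the Crank–Nicolson scheme to Hamilton’s equations, assuming the standard quadratic Hamiltonian \(h = \tfrac{1}{2} {\varvec{p}}^{T} M^{-1} {\varvec{p}} + \tfrac{1}{2} {\varvec{q}}^{T} K {\varvec{q}} - {\varvec{q}}^{T} {\varvec{f}}\) (an assumption here; the implementation of [28] is not reproduced). Dense matrices and the hypothetical function name `crank_nicolson_hamiltonian` are used for brevity. The q-equation is multiplied by M so that \(M^{-1}\) is never formed, and the constant left-hand side is factorized once and reused at every step.

```python
import numpy as np
from scipy.linalg import lu_factor, lu_solve


def crank_nicolson_hamiltonian(M, K, f, q0, v0, T, nt):
    """Crank-Nicolson integration of q' = M^{-1} p, p' = -K q + f(t).

    Uses nt uniform steps of size T / nt and returns the time grid and
    arrays q, p of shape (nt + 1, n).
    """
    n = M.shape[0]
    ht = T / nt
    t = np.linspace(0.0, T, nt + 1)
    eye = np.eye(n)
    # The q-equation is multiplied by M so that M^{-1} is never formed:
    #   M q^i - ht/2 p^i   = M q^{i-1} + ht/2 p^{i-1}
    #   ht/2 K q^i + p^i   = -ht/2 K q^{i-1} + p^{i-1} + ht/2 (f^i + f^{i-1})
    lhs = lu_factor(np.block([[M, -0.5 * ht * eye], [0.5 * ht * K, eye]]))
    rhs = np.block([[M, 0.5 * ht * eye], [-0.5 * ht * K, eye]])
    q = np.zeros((nt + 1, n))
    p = np.zeros((nt + 1, n))
    q[0], p[0] = q0, M @ v0  # initial momentum p = M v
    for i in range(1, nt + 1):
        load = np.concatenate([np.zeros(n), 0.5 * ht * (f(t[i]) + f(t[i - 1]))])
        z = lu_solve(lhs, rhs @ np.concatenate([q[i - 1], p[i - 1]]) + load)
        q[i], p[i] = z[:n], z[n:]
    return t, q, p
```

Because the scheme coincides with the implicit midpoint rule for a linear Hamiltonian system, the discrete energy is conserved up to round-off when \({\varvec{f}} = 0\), which is one practical manifestation of the symplectic character of the scheme.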

Although not the primary focus of this article, we acknowledge the relevance of symplectic integrators in the case of Hamiltonian mechanics. These integrators are particularly robust to compute long-time evolution of Hamiltonian systems [20, 25, 26]. In addition, the preservation of the symplectic structure by the reduced model is the subject of numerous studies [1, 8, 23]. We will also discuss this property on the time parameter with respect to our PGD solver in “Temporal update and symplectic structure” section.

PGD reduced-order modeling

The Proper Generalized Decomposition method applied within the Hamiltonian framework aims at approximating both the generalized coordinates \({\varvec{q}}\) and their generalized momenta \({\varvec{p}}\) in separated form. We thus seek a space-time separated representation of \({\varvec{z}}\) as:

with:

where \(\Phi _{i}\) is a \((2 n \times 2)\) matrix and \(\varvec{\psi }_{i}\) a \((2 \times 1)\) vector, while \(\Psi _{i}\) is a \((2 n \times 2 n)\) matrix and \(\varvec{\varphi }_{i}\) a \((2 n \times 1)\) vector. The two notations are mathematically equivalent; each is convenient depending on whether the weak formulation is solved for \(\varvec{\varphi }\) (spatial problem) or \(\varvec{\psi }\) (temporal problem). The vector-valued functions \((\varvec{\varphi }_{i}^{q})_{1 \leqslant i \leqslant m}\) and \((\varvec{\varphi }_{i}^{p})_{1 \leqslant i \leqslant m}\) provide the spatial bases for the generalized coordinates and conjugate momenta, respectively:

For the sake of clarity in the presentation, we shall drop from now on the subscript i and write the decomposition of rank m of \({\varvec{z}}\) as:

The approach considered here is the so-called greedy rank-one update algorithm, in which the separated representation is computed progressively by adding one pair of modes \(\varvec{\varphi }\) and \(\varvec{\psi }\) at each enrichment. The goal of this section is to construct the separated spatial and temporal problems that the enrichment modes \(\varvec{\varphi }\) and \(\varvec{\psi }\), the new unknowns of the problem, must satisfy, assuming that the previous approximation \({\varvec{z}}_{m - 1}\) has already been computed.
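To make the shapes above concrete, the sketch below evaluates a rank-m separated approximation on a time grid and checks, for a single mode, that the two notations \(\Phi _i \varvec{\psi }_i\) and \(\Psi _i \varvec{\varphi }_i\) coincide. It assumes that \(\Phi _i\) places \(\varvec{\varphi }_i^q\) and \(\varvec{\varphi }_i^p\) in a block-diagonal \(2n \times 2\) matrix and that \(\Psi _i = \text {diag}(\psi _i^q I_n, \psi _i^p I_n)\), which is consistent with the dimensions stated above; the function names are hypothetical.

```python
import numpy as np


def assemble_separated(Sq, Sp, Psi_q, Psi_p):
    """Evaluate z_m(t) = sum_i Phi_i psi_i(t) on a time grid.

    Sq, Sp: (n, m) spatial modes for q and p.
    Psi_q, Psi_p: (m, n_times) temporal modes sampled on the grid.
    Returns z of shape (2n, n_times), q stacked on top of p.
    """
    q = Sq @ Psi_q  # sum_i phi_i^q psi_i^q(t)
    p = Sp @ Psi_p  # sum_i phi_i^p psi_i^p(t)
    return np.vstack([q, p])


def mode_pair_two_ways(phi_q, phi_p, psi_q, psi_p):
    """Return Phi_i psi_i and Psi_i phi_i for one mode at one time instant."""
    n = phi_q.size
    Phi = np.zeros((2 * n, 2))                     # (2n x 2), block diagonal
    Phi[:n, 0], Phi[n:, 1] = phi_q, phi_p
    psi = np.array([psi_q, psi_p])                 # (2 x 1)
    Psi = np.diag(np.concatenate([np.full(n, psi_q), np.full(n, psi_p)]))  # (2n x 2n)
    phi = np.concatenate([phi_q, phi_p])           # (2n x 1)
    return Phi @ psi, Psi @ phi
```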

Fixed-point strategy

Computing a separated representation of \({\varvec{q}}\) and \({\varvec{p}}\) demands an adequate solution strategy for the weak formulation (18). Substituting the trial solution \({\varvec{z}}_{m}\) for \({\varvec{z}}\) in (18) leads to a nonlinear formulation for the modes \(\varvec{\varphi }\) and \(\varvec{\psi }\). Several iterative schemes could be used to solve such a problem. The fixed-point algorithm is considered here, which proceeds as follows:

1. Solve (18) for \(\varvec{\varphi }\) with \(\varvec{\psi }\) known. This step is referred to as the spatial problem and is written in a generic format as:

$$\begin{aligned} A(\varvec{\psi }) \varvec{\varphi }= {\varvec{b}}(\varvec{\psi }, {\varvec{z}}_{m - 1}), \end{aligned} \tag{20}$$

where the matrix \(A(\varvec{\psi })\) and vector \({\varvec{b}}(\varvec{\psi }, {\varvec{z}}_{m - 1})\) will be specified in “Problem in space” section. More precisely, in order to enhance robustness, we propose to force the new spatial mode to preserve the linear independence of the spatial bases \((\varvec{\varphi }_{i}^{q})_{1 \leqslant i \leqslant m}\) and \((\varvec{\varphi }_{i}^{p})_{1 \leqslant i \leqslant m}\), which can formally be written as:

$$\begin{aligned} \varvec{\varphi }= P_m A(\varvec{\psi })^{-1} {\varvec{b}}(\varvec{\psi }, {\varvec{z}}_{m - 1}), \end{aligned}$$

where \(P_m\) is the orthogonal projector (for a well-chosen inner product) onto the orthogonal complement of the subspace spanned by the previous modes.

2. Solve (18) for \(\varvec{\psi }\) with \(\varvec{\varphi }\) known. The temporal problem corresponds to the system of first-order differential equations:

$$\begin{aligned} \dot{\varvec{\psi }}= f_{{\mathcal {T}}}(\varvec{\psi }, \varvec{\varphi }, {\varvec{z}}_{m - 1}), \end{aligned} \tag{21}$$

where the vector-valued function \(f_{{\mathcal {T}}}\) will be explicitly provided in “Problem in time” section.

Steps 1 and 2 are repeated until a convergence criterion is fulfilled. It is noteworthy that (20) is a linear system of size \(2 n\) associated with the space discretization, similar to that of a steady-state FEM problem. Equation (21) is a system of two first-order scalar ordinary differential equations in time, solved for \(\psi _{q}\) and \(\psi _{p}\). Both problems are described in the next sections.
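The alternating structure of steps 1 and 2, and the greedy enrichment around them, can be illustrated on a much simpler problem than (20) and (21). The sketch below swaps in a toy problem, the least-squares rank-one approximation of a given space-time matrix U, for which the spatial and temporal updates have closed forms; it is not the Hamiltonian solver of this paper, and all names are hypothetical. Each fixed-point iteration solves for \(\varvec{\varphi }\) with \(\varvec{\psi }\) frozen, then for \(\varvec{\psi }\) with \(\varvec{\varphi }\) frozen, and stops when the normalized spatial mode stagnates.

```python
import numpy as np


def rank_one_fixed_point(U, tol=1e-12, k_max=500, seed=0):
    """Alternating fixed-point computation of one pair (phi, psi) minimizing
    ||U - phi psi^T||_F (toy stand-in for the spatial/temporal problems)."""
    rng = np.random.default_rng(seed)
    psi = rng.standard_normal(U.shape[1])  # initial temporal mode
    direction = np.zeros(U.shape[0])
    for k in range(1, k_max + 1):
        phi = U @ psi / (psi @ psi)        # "spatial problem": psi fixed
        psi = U.T @ phi / (phi @ phi)      # "temporal problem": phi fixed
        new_direction = phi / np.linalg.norm(phi)
        if np.linalg.norm(new_direction - direction) < tol:  # stagnation test
            break
        direction = new_direction
    return phi, psi, k


def greedy_enrichment(U, m):
    """Greedy rank-one updates: each new pair is fitted to the current residual."""
    R = np.array(U, dtype=float)
    modes = []
    for _ in range(m):
        phi, psi, _ = rank_one_fixed_point(R)
        modes.append((phi, psi))
        R = R - np.outer(phi, psi)
    return modes, R
```

On this toy problem the iteration reduces to a power method on \(U U^T\), so its convergence rate is governed by the ratio of the two largest squared singular values. No such guarantee is available for the space-time Hamiltonian problem, which motivates the acceleration and projection procedures described in this section.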

Problem in space

We assume that \(\varvec{\psi }\) is known and search for the new spatial mode \(\varvec{\varphi }\). We substitute \({\varvec{z}}_{m - 1} + \Psi \varvec{\varphi }\) for \({\varvec{z}}\) in (18) and choose test functions in the form \({\varvec{z}}^{*}= \Psi \varvec{\varphi }^{*}\). Equation (18) reduces to:

which, since \(\varvec{\varphi }^{*}\) and \(\varvec{\varphi }\) are independent of time, can be rewritten as:

This leads to the following linear system:

with:

and (see Appendix for the explicit form of the time operators):

The operator \(M^{-1}\) is not computed explicitly. Instead, the Schur complement of \(M^{-1}\) in \(A_{{\mathcal {S}}}\) is considered. Equation (22) can thus be expanded as:

so that:

Therefore, the solution of (22) amounts to solving (23) for \(\varvec{\varphi }_{q}\) by factorization of the sparse symmetric matrix:

and inserting the solution \(\varvec{\varphi }_{q}\) into (24) to determine \(\varvec{\varphi }_{p}\).

At the \(m\text {th}\) enrichment, the spatial modes \(\varvec{\varphi }_{q}\) and \(\varvec{\varphi }_{p}\) are subsequently projected to ensure that any new mode is sought in a direction orthogonal to the subspaces generated by the previous modes, respectively \((\varvec{\varphi }_{i}^{q})_{1 \leqslant i \leqslant m - 1}\) and \((\varvec{\varphi }_{i}^{p})_{1 \leqslant i \leqslant m - 1}\). At any given m, we want \((\varvec{\varphi }_{i}^{q})_{1 \leqslant i \leqslant m}\) and \((\varvec{\varphi }_{i}^{p})_{1 \leqslant i \leqslant m}\) to be orthogonal with respect to K and \(M^{-1}\), respectively. Let \(S_{q}\) and \(S_{p}\) be defined as:

A classical approach consists in using the orthogonal projections:

At any enrichment step, the previous modes \((\varvec{\varphi }_{i}^{q})_{1 \leqslant i \leqslant m - 1}\) and \((\varvec{\varphi }_{i}^{p})_{1 \leqslant i \leqslant m - 1}\) are orthogonal and normalized with respect to K and \(M^{-1}\), respectively. Thus, the projectors above simplify as:

Therefore, if we denote by \(\varvec{\varphi }_{q}^{\circ }\) and \(\varvec{\varphi }_{p}^{\circ }\) the modes initially obtained from Eqs. (23) and (24) and by \(\varvec{\varphi }_{q}\) and \(\varvec{\varphi }_{p}\) the modes that one retains after orthonormalization, the procedure reads:

It is noteworthy that, in practice, the inverse of M is never evaluated. Instead, one performs a Cholesky factorization to obtain the decomposition \(M = L L^{T}\). In particular, the normalization of \(\varvec{\varphi }_{p}\) is done by forward and backward substitution, whose cost is negligible with respect to the overall complexity of the algorithm. Indeed, the main bottleneck is the factorization of \(A_{q}\) (25), which needs to be performed at each iteration of the fixed point algorithm. We propose below two approaches that aim at:

- Reducing the number of fixed-point iterations needed to reach convergence (see “Aitken acceleration” section);
- Avoiding repeated factorization of \(A_{q}\) by carrying out computations in a subspace provided by the Ritz vectors, which are approximations of the eigenvectors of the generalized eigenproblem \(K {\varvec{u}}= \lambda M {\varvec{u}}\) (see “Projection in Ritz subspace” section).
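The projection and normalization of the new spatial modes described earlier in this section can be sketched as follows, assuming the simplified projectors \(I - S_q S_q^{T} K\) and \(I - S_p S_p^{T} M^{-1}\) that apply when the previous modes are already orthonormal. The Cholesky factor of M is computed once and \(M^{-1}\) is applied only through forward and backward substitution, as in the text; the function names are hypothetical and dense matrices are used for brevity.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve


def orthonormalize_q(phi_q, Sq, K):
    """K-orthogonalize phi_q against the K-orthonormal columns of Sq, then K-normalize."""
    phi = phi_q - Sq @ (Sq.T @ (K @ phi_q))       # (I - Sq Sq^T K) phi_q
    return phi / np.sqrt(phi @ (K @ phi))


def orthonormalize_p(phi_p, Sp, M_cho):
    """Same with the M^{-1} inner product; M^{-1} is applied via the Cholesky factor."""
    Minv_phi = cho_solve(M_cho, phi_p)            # M^{-1} phi_p (forward/backward substitution)
    phi = phi_p - Sp @ (Sp.T @ Minv_phi)          # (I - Sp Sp^T M^{-1}) phi_p
    return phi / np.sqrt(phi @ cho_solve(M_cho, phi))
```

A single projection pass (classical Gram–Schmidt) is used here; when many modes are accumulated, repeating the projection once more is a common safeguard against loss of orthogonality.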

Problem in time

We assume here that \(\varvec{\varphi }\) is known and search for a new temporal mode \(\varvec{\psi }\). We substitute \({\varvec{z}}_{m - 1} + \Phi \varvec{\psi }\) for \({\varvec{z}}\) in (18) and choose test functions in the form \({\varvec{z}}^{*}= \Phi \varvec{\psi }^{*}\), with \(\varvec{\psi }^{*}\in {\mathcal {Y}}^2\), where:

Equation (18) reduces in this case to:

which simplifies to:

The above equation is discretized using the Crank–Nicolson time-marching scheme such that, given \(\varvec{\psi }^{0}\), one computes the solution at the \(i\text {th}\) time step (\(i > 0\)) as:

where:

and:

For each time step, Eq. (26) represents a \(2 \times 2\) linear system that can be explicitly solved for \(\varvec{\psi }^{i}\). Overall, the time problem is relatively cheap to solve, as its cost is linear in the number of time steps \(n_{t}\). As previously mentioned, \(\varvec{\varphi }_{q}\) and \(\varvec{\varphi }_{p}\) are normalized after (22) is solved, so that \(k_{x} = m_{x} = 1\) and only \(c_{x}\) needs to be updated.
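Since the operators of (26) are detailed in the Appendix, the sketch below only illustrates the structure of the temporal solve under an explicit assumption: the projected temporal problem is taken to have the generic Hamiltonian form \(c_x J_{2}^{-1} \dot{\varvec{\psi }} = \text {diag}(k_x, m_x) \varvec{\psi } + {\varvec{r}}(t)\), where \({\varvec{r}}(t)\) is a hypothetical right-hand side gathering the loads and the contribution of \({\varvec{z}}_{m-1}\). The constant \(2 \times 2\) matrix is inverted once by Cramer’s rule, so the cost is linear in \(n_t\); the function name is hypothetical.

```python
import numpy as np


def temporal_mode_cn(cx, kx, mx, r, psi0, T, nt):
    """Crank-Nicolson marching for the assumed 2x2 temporal problem
        cx * Jinv @ dpsi/dt = diag(kx, mx) @ psi + r(t),  Jinv = [[0, -1], [1, 0]],
    i.e. dpsi_q/dt = (mx psi_p + r_p) / cx and dpsi_p/dt = -(kx psi_q + r_q) / cx.
    Returns the time grid and psi of shape (nt + 1, 2)."""
    ht = T / nt
    t = np.linspace(0.0, T, nt + 1)
    Jinv = np.array([[0.0, -1.0], [1.0, 0.0]])  # inverse of J_2 = [[0, 1], [-1, 0]]
    D = np.diag([kx, mx])
    lhs = cx * Jinv - 0.5 * ht * D
    rhs = cx * Jinv + 0.5 * ht * D
    det = lhs[0, 0] * lhs[1, 1] - lhs[0, 1] * lhs[1, 0]  # = cx^2 + ht^2 kx mx / 4
    lhs_inv = np.array([[lhs[1, 1], -lhs[0, 1]], [-lhs[1, 0], lhs[0, 0]]]) / det
    psi = np.zeros((nt + 1, 2))
    psi[0] = psi0
    for i in range(1, nt + 1):
        psi[i] = lhs_inv @ (rhs @ psi[i - 1] + 0.5 * ht * (r(t[i]) + r(t[i - 1])))
    return t, psi
```

With \(k_x = m_x = c_x = 1\) and no right-hand side, the assumed problem reduces to a unit-frequency oscillator, and the quadratic form \(k_x \psi _q^2 + m_x \psi _p^2\) is preserved by the scheme.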

Aitken acceleration

In the context of PGD reduced-order modeling, the number of iterations performed by the fixed-point algorithm has a direct impact on the efficiency of the approach. We propose here to employ Aitken’s \(\Delta ^2\) process to reduce the number of iterations necessary to reach convergence.

Let \(\text {lin}(n)\) denote the complexity associated with solving one linear system of \(n\) algebraic equations in \(n\) unknown variables (\(\text {lin}(n) \approx {\mathcal {O}}(n^3)\) for fully-populated matrices). In structural dynamics simulations, the usual approach is to discretize the continuous equations with respect to the spatial variables using the finite element method and then obtain a system of \(n\) ordinary differential equations in the time variable \(t \in {\mathcal {I}}\). The system is thereafter discretized in time by means of an (implicit) integration scheme (e.g. Euler, Newmark, Crank–Nicolson, Hilber–Hughes–Taylor, ...). The degrees of freedom are then evaluated at each time step by solving a linear system of size \(n\). In the case of \(n_{t}\) time steps, the complexity of the approach amounts to \(n_{t} \text {lin}(n)\).

In the PGD framework, the solutions of the problems in space and time are decoupled such that, at each fixed-point iteration, one system of size \(n\) is solved for the spatial mode (23) and one system of size two is solved \(n_{t}\) times (marching scheme) for the temporal mode (26). The complexity of one fixed-point iteration can thus be assumed to be of the order of \(\text {lin}(n) + n_{t}\). It follows that the overall complexity of the PGD algorithm is at most \(m k_{\max } ( \text {lin}( n) + n_{t} )\), where m denotes the rank of the decomposition, i.e. the number of modes, and \(k_{\max }\) is the maximal number of iterations allowed in the fixed-point algorithm, whether or not convergence is reached. It can be inferred that a space-time separated PGD algorithm is competitive against a classical full-order solver whenever the following inequality holds:

$$m \, k_{\max } \big ( \text {lin}(n) + n_{t} \big ) < n_{t} \, \text {lin}(n),$$

highlighting the fact that the efficiency of the PGD algorithm strongly depends on the number of fixed-point iterations.
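The competitiveness criterion above is easy to evaluate once a cost model for \(\text {lin}(n)\) is chosen. The sketch below, with hypothetical function names, checks the inequality and returns the largest admissible \(k_{\max }\) using exact integer arithmetic. The example assumes the dense estimate \(\text {lin}(n) = n^3\); sparse direct solvers typically scale better, which lowers the admissible \(k_{\max }\) and makes the criterion harder to satisfy.

```python
def pgd_is_competitive(n_t: int, m: int, k_max: int, lin_n: int) -> bool:
    """True if m * k_max * (lin(n) + n_t) < n_t * lin(n)."""
    return m * k_max * (lin_n + n_t) < n_t * lin_n


def max_admissible_iterations(n_t: int, m: int, lin_n: int) -> int:
    """Largest k_max satisfying the strict inequality (exact integer arithmetic)."""
    k = n_t * lin_n // (m * (lin_n + n_t))
    if m * k * (lin_n + n_t) == n_t * lin_n:  # equality is not enough
        k -= 1
    return k


# Example: n = 1000 unknowns with lin(n) = n**3, n_t = 1000 steps, m = 10 modes
print(max_admissible_iterations(1000, 10, 1000**3))  # 99
```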

The computation of an enrichment mode involves the following operators, formally written, at any given fixed-point iteration k:

- \({\mathcal {S}}^{(k)}: \varvec{\psi }^{(k - 1)} \mapsto \varvec{\varphi }^{(k)}\), the operator that solves the system (22) for \(\varvec{\varphi }^{(k)}\) with \(\varvec{\psi }^{(k - 1)}\) given;
- \({\mathcal {T}}^{(k)}: \varvec{\varphi }^{(k)} \mapsto \varvec{\psi }^{(k)}\), the operator that solves the system (26) for \(\varvec{\psi }^{(k)}\) with \(\varvec{\varphi }^{(k)}\) given.

As a result, the fixed-point algorithm computes two sequences \(\big ( \varvec{\varphi }^{(k)} \big )_{1 \leqslant k \leqslant k_{\max }}\) and \(\big ( \varvec{\psi }^{(k)} \big )_{1 \leqslant k \leqslant k_{\max }}\) until convergence. These sequences can be defined by recurrence relations as follows:

$$\varvec{\varphi }^{(k)} = \big ( {\mathcal {S}}^{(k)} \circ {\mathcal {T}}^{(k - 1)} \big ) \big ( \varvec{\varphi }^{(k - 1)} \big ), \qquad \varvec{\psi }^{(k)} = \big ( {\mathcal {T}}^{(k)} \circ {\mathcal {S}}^{(k)} \big ) \big ( \varvec{\psi }^{(k - 1)} \big ).$$

The fixed-point convergence hinges upon the contraction property of the operators \({\mathcal {S}}^{(k)} \circ {\mathcal {T}}^{(k - 1)}\) and \({\mathcal {T}}^{(k)} \circ {\mathcal {S}}^{(k)}\) for \(\varvec{\varphi }^{(k)}\) and \(\varvec{\psi }^{(k)}\), respectively. One common way to improve fixed-point iterations is to use relaxation techniques, which can help recover a contraction property and usually enhance the convergence rate. The introduction of relaxation parameters \(\omega _{\varphi }\) and \(\omega _{\psi }\) leads to the following formulation of a fixed-point iteration:

In practice, it is preferable to adapt \(\omega _{\varphi }\) and \(\omega _{\psi }\) at each iteration. The so-called Aitken’s delta-squared method provides a useful heuristic for determining the sequences \(\omega _{\varphi }^{(k)}\) and \(\omega _{\psi }^{(k)}\). One can also choose to enforce relaxation on the generalized coordinate modes and the conjugate momentum modes separately. In Algorithm 1, Aitken acceleration is applied to the spatial modes only, and separately for \(\varvec{\varphi }_{q}\) and \(\varvec{\varphi }_{p}\). Note that steps 15 and 16 of Algorithm 1 are not implemented in practice; instead, the space-time separation should be leveraged to efficiently compute the stagnation coefficients in step 17.
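The dynamic relaxation described above can be sketched generically. The example below implements the classical vector form of Aitken’s relaxation (often attributed to Irons and Tuck) for a fixed-point map \(x = G(x)\), with residual \(r^{(k)} = G(x^{(k)}) - x^{(k)}\), update \(x^{(k+1)} = x^{(k)} + \omega ^{(k)} r^{(k)}\), and relaxation factor \(\omega ^{(k)} = -\omega ^{(k-1)} \, r^{(k-1)} \cdot (r^{(k)} - r^{(k-1)}) / \Vert r^{(k)} - r^{(k-1)}\Vert ^2\). It is demonstrated on a hypothetical linear contraction rather than on the spatial modes \(\varvec{\varphi }_{q}\) and \(\varvec{\varphi }_{p}\), and it is not a transcription of Algorithm 1, whose details may differ.

```python
import numpy as np


def fixed_point(G, x0, tol=1e-10, k_max=5000, aitken=True, omega0=1.0):
    """Iterate x = G(x) until ||G(x) - x|| < tol.

    With aitken=True the update is x <- x + omega * r, r = G(x) - x, and omega
    is adapted with the vector Aitken formula; otherwise x <- G(x).
    Returns the final iterate and the number of residual evaluations."""
    x = np.asarray(x0, dtype=float)
    omega, r_prev = omega0, None
    for k in range(1, k_max + 1):
        r = G(x) - x
        if np.linalg.norm(r) < tol:
            return x, k
        if aitken:
            if r_prev is not None:
                dr = r - r_prev
                denom = dr @ dr
                if denom > 0.0:  # keep the previous omega if the residual did not change
                    omega = -omega * (r_prev @ dr) / denom
            x = x + omega * r
            r_prev = r
        else:
            x = x + r  # plain fixed-point step, x <- G(x)
    return x, k_max
```

On a contraction such as \(G(x) = Bx + c\) with \(B = \text {diag}(0.95, 0.8, 0.5, 0.2)\), the plain iteration needs several hundred iterations because of the contraction factor 0.95, whereas the relaxed iteration converges in considerably fewer.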

Temporal update and symplectic structure

Greedy algorithms generally incorporate an update procedure that consists in updating all the temporal modes for a given set of spatial modes. For a decomposition of rank m, the spatial modes can be conveniently stored in the matrix S of size \(2 n \times 2\,m\), defined as:

while the temporal modes can be vertically stored in the time-dependent vector \(\varvec{\psi }\) of size \(2 m \times 1\), defined as:

such that the decomposition of rank m of \({\varvec{z}}\) reads:

The temporal update is performed by substituting \(S \varvec{\psi }\) for \({\varvec{z}}\) in (18) and choosing test functions in the form \({\varvec{z}}^{*}= S \varvec{\psi }^{*}\). Equation (18) thus reduces to:

which can be rewritten in matrix form, with \({\varvec{f}}_{\psi } = S^{T}{\varvec{f}}_{z}\), as:

Time discretization of the above equation using the Crank–Nicolson marching scheme leads to:

with:

and:

The orthonormalization of \(( \varvec{\varphi }_{i}^{q} )_{1 \leqslant i \leqslant m}\) and \(( \varvec{\varphi }_{i}^{p} )_{1 \leqslant i \leqslant m}\) with K and \(M^{-1}\), respectively, results in \(K_{x} = M_{x} = I_{m}\).

The update procedure can be interpreted as projecting Hamilton’s equations onto the subspace generated by the vectors of S. The system to be solved is governed by the Hamiltonian g whose Hessian is \(G = S^{T}H S\). This Hessian can be interpreted as the rank-2m reduced counterpart of the Hessian operator H, such that:

and the full-order vector is given by \({\varvec{z}}\simeq {\varvec{z}}_{m} = S \varvec{\psi }\). Assuming that the Hamiltonian g is canonical, Hamilton’s canonical equations of such a reduced-order system read:

where the symplectic gradient is given by:

It follows that the Hamilton’s equations can be written as:

Multiplying both sides of this equation by \(J_{2 m}\) (recall that \(J_{2 m} J_{2 m} = - I_{2 m}\)) and rearranging the terms leads to:

Recalling here Eq. (27):

one observes that the two equations are identical if and only if \(S^{T}J_{2n} S = J_{2 m}\), i.e. if S is a symplectic mapping, according to the definition of the symplectic Stiefel manifold (19). However, in general, S is not symplectic, nor is g a canonical Hamiltonian. The product \(S^{T}J_{2 n} S\) reads:

This suggests that the symplectic property could be enforced by biorthogonalization of \(( \varvec{\varphi }_{i}^{q} )_{1 \leqslant i \leqslant m}\) and \(( \varvec{\varphi }_{i}^{p} )_{1 \leqslant i \leqslant m}\), such that:

However, this property is not ensured in the current algorithm since we chose to orthogonalize \(( \varvec{\varphi }_{i}^{q} )_{1 \leqslant i \leqslant m}\) and \(( \varvec{\varphi }_{i}^{p} )_{1 \leqslant i \leqslant m}\) with respect to K and \(M^{-1}\), respectively. Yet, it can be enforced via a post-processing procedure. Let P and Q be two matrices of size \(m \times m\) such that:

It follows that:

In other words, the matrices Q and P recombine the columns of \(S_{q}\) and \(S_{p}\) such that \(\left( \hat{\varvec{\varphi }}_{i}^{q} \right) _{1 \leqslant i \leqslant m}\) and \(\left( \hat{\varvec{\varphi }}_{i}^{p} \right) _{1 \leqslant i \leqslant m}\) form a biorthogonal system. We can readily conceive two approaches, among others, to enforce (29):

- The LU factorization \(S_{q}^{T}S_{p} = LU\) allows one to define \(Q = L^{-T}\) and \(P = U^{-1}\);
- The Singular Value Decomposition \(S_{q}^{T}S_{p} = U \Sigma V^{T}\) allows one to define \(Q = U^{-T}\Sigma ^{- 1/2}\) and \(P = V^{-T}\Sigma ^{- 1/2}\) (\(\Sigma ^{- 1/2}\) is defined as the diagonal matrix whose coefficients are given by the square root of the inverse of the singular values if different from zero, and zero otherwise).
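Both recombinations can be sketched in a few lines of NumPy/SciPy. The sketch assumes the block layout \(S = \mathrm{blockdiag}(S_{q}, S_{p})\) and \(J_{2k} = \begin{pmatrix} 0 & I_{k} \\ -I_{k} & 0 \end{pmatrix}\), so that \(S^{T}J_{2n}S = J_{2m}\) reduces to \(S_{q}^{T}S_{p} = I_{m}\); all function names are hypothetical. Because `scipy.linalg.lu` applies partial pivoting (\(S_{q}^{T}S_{p} = \Pi L U\)), the LU variant uses \(Q = (\Pi L)^{-T}\), which coincides with \(Q = L^{-T}\) when no row exchange occurs.

```python
import numpy as np
from scipy.linalg import lu, svd


def canonical_J(k):
    """Canonical symplectic matrix J_{2k} = [[0, I_k], [-I_k, 0]]."""
    Z, I = np.zeros((k, k)), np.eye(k)
    return np.block([[Z, I], [-I, Z]])


def is_symplectic(Sq, Sp, atol=1e-8):
    """Check S^T J_{2n} S = J_{2m} for the assumed layout S = blockdiag(Sq, Sp)."""
    n, m = Sq.shape
    Z = np.zeros((n, m))
    S = np.block([[Sq, Z], [Z, Sp]])
    return np.allclose(S.T @ canonical_J(n) @ S, canonical_J(m), atol=atol)


def biorthogonalize_lu(Sq, Sp):
    """Recombine columns so that (Sq Q)^T (Sp P) = I, using Sq^T Sp = Pi L U."""
    Pi, L, U = lu(Sq.T @ Sp)      # SciPy's LU uses partial pivoting
    Q = np.linalg.inv(Pi @ L).T   # reduces to L^{-T} when Pi = I
    P = np.linalg.inv(U)
    return Sq @ Q, Sp @ P


def biorthogonalize_svd(Sq, Sp, rtol=1e-12):
    """Same goal via Sq^T Sp = U diag(s) V^T, with U and V orthogonal."""
    U, s, Vt = svd(Sq.T @ Sp)
    inv_sqrt = np.zeros_like(s)
    keep = s > rtol * s.max()
    inv_sqrt[keep] = 1.0 / np.sqrt(s[keep])  # Sigma^{-1/2}, zero for null singular values
    Q = U * inv_sqrt      # U^{-T} = U for orthogonal U; scales the columns
    P = Vt.T * inv_sqrt   # V^{-T} = V
    return Sq @ Q, Sp @ P


# Usage on hypothetical random modes (n = 40, m = 6)
rng = np.random.default_rng(1)
Sq, Sp = rng.standard_normal((40, 6)), rng.standard_normal((40, 6))
Sq_lu, Sp_lu = biorthogonalize_lu(Sq, Sp)
Sq_svd, Sp_svd = biorthogonalize_svd(Sq, Sp)
```

For generic modes the check fails on \((S_{q}, S_{p})\) and passes on both recombined pairs, while the column spaces are unchanged since only \(m \times m\) recombinations are applied.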

We note that the two procedures are computationally efficient since they are performed on reduced matrices (\(m \ll n\)). Therefore, it is possible to construct a symplectic basis by post-processing the basis calculated by the PGD solver.

Projection in Ritz subspace

As previously mentioned, the main bottleneck of the PGD solver is the solution of (22), which requires one to factorize the operator \(A_{q}\) at each fixed-point iteration. Even though Aitken acceleration does reduce the PGD solver time, the cost of these repeated factorizations makes the solver prohibitively expensive when the dimension of the finite element space is large.

We recall here the problem in space (22), expressed now at a given fixed-point iteration indexed by parameter k:

with:

where \(m_{t}^{(k)}\), \(k_{t}^{(k)}\), \(c_{t}^{(k)}\) and \(d_{t}^{(k)}\) are computed from the temporal modes \(\psi _{q}^{(k - 1)}\) and \(\psi _{p}^{(k - 1)}\), as defined in the “Problem in space” section. In particular:

Therefore, at each fixed-point iteration, the weights associated with the stiffness and mass operators K and M, respectively, have to be modified and a new factorization of \(A_{q}^{(k)}\) needs to be obtained.

Although \(A_{q}^{(k)}\) varies from one iteration to the next, its spectral content remains similar because the operator is a linear combination of K and M, both of which remain constant. The proposed approach takes advantage of the latter observation and consists of projecting Eq. (23) onto the subspace of approximate eigenvectors, namely the Ritz vectors, which satisfy the following properties (with \(m \leqslant r \ll n\)):

where the Ritz values and the Ritz vectors are:

We now introduce the mapping R:

and remark that \(R \in \mathrm{Sp}(2 r, 2 n)\), i.e. R is a symplectic mapping. In other words, the structure of the equations presented above holds and can be written in terms of \(\hat{{\varvec{z}}} \in {\mathbb {R}}^{2r}\), which satisfies \({\varvec{z}}= R \hat{{\varvec{z}}}\), and the Hamiltonian \({\mathcal {G}}\) defined as:

with:

For the Hamiltonian \({\mathcal {G}}\), the problem in space (22) using \(\varvec{\varphi }^{(k)} = R \hat{\varvec{\varphi }}^{(k)}\) can thus be rewritten as:

with:

and (23) becomes a diagonal system expressed as:

The complexity of the spatial problem (22) is now linear in terms of the dimension r of the Ritz subspace. The number of Ritz vectors r has to be chosen sufficiently high with respect to the expected rank m of the PGD approximation. Depending on the external load, one can compute the Ritz vectors associated with the Ritz values corresponding to the frequency band of interest. Here, we chose to retain the Ritz vectors whose Ritz values have the lowest magnitudes, as is conventional in structural dynamics [15].
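The projection can be illustrated on a small sparse pencil. This sketch assumes that the spatial operator has the form \(A_{q}^{(k)} = \alpha K + \beta M\), with scalar weights \(\alpha, \beta\) standing in for the temporal coefficients, and uses the mapping \(R = \mathrm{blockdiag}(X, M X)\) as an illustrative lift that is symplectic whenever \(X^{T} M X = I_{r}\); it is not necessarily the definition of R used in the paper. SciPy's `scipy.sparse.linalg.eigsh` wraps ARPACK's implicitly restarted Lanczos method; with `sigma=0` it works in shift-invert mode and returns the eigenpairs closest to zero, i.e. the lowest ones for a positive-definite K. The 1D chain matrices are hypothetical stand-ins for the finite element operators.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import eigsh


def ritz_pairs(K, M, r):
    """Approximate the r lowest eigenpairs of K x = lam M x (shift-invert about 0).

    Returns (lam, X) with X^T M X = I_r and X^T K X = diag(lam) up to round-off.
    """
    _, X = eigsh(K, k=r, M=M, sigma=0.0, which="LM")
    X = X / np.sqrt(np.einsum("ij,ij->j", X, M @ X))  # M-normalize each column
    lam = np.einsum("ij,ij->j", X, K @ X)              # Rayleigh quotients (Ritz values)
    order = np.argsort(lam)
    return lam[order], X[:, order]


def solve_in_ritz_subspace(lam, X, alpha, beta, f):
    """Galerkin solve of (alpha K + beta M) phi = f restricted to span(X).

    X^T (alpha K + beta M) X = diag(alpha * lam + beta), so the reduced system
    is diagonal and costs O(r) once X^T f is available.
    """
    phi_hat = (X.T @ f) / (alpha * lam + beta)
    return X @ phi_hat, phi_hat


def ritz_mapping(X, M):
    """Illustrative symplectic lift R = blockdiag(X, M X), so that z = R z_hat."""
    n, r = X.shape
    Z = np.zeros((n, r))
    return np.block([[X, Z], [Z, M @ X]])


# Hypothetical 1D chain standing in for the finite element matrices
n, r = 200, 10
K = sp.diags([-1.0, 2.0, -1.0], [-1, 0, 1], shape=(n, n), format="csc")
M = sp.diags(np.linspace(1.0, 2.0, n), 0, format="csc")
lam, X = ritz_pairs(K, M, r)
```

The Ritz pairs are computed once in pre-processing; afterwards, each fixed-point iteration only rescales a diagonal, whatever the current weights \(\alpha\) and \(\beta\).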

Numerical examples and discussion

Test case: asymmetric triangle wave Neumann boundary condition

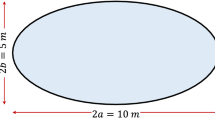

The test case is inspired by an example found in [14] and is of interest because it exhibits a transient phase followed by a steady-state harmonic regime. A 3D beam is considered, whose domain \(\Omega = (0, 6) \times (0, 1) \times (0, 1)\) (in meters) is a parallelepiped with a square cross-section (see Fig. 1). Its response to an external load on its top surface is computed over \({\mathcal {I}}= (0, 5)\) (in seconds):

with:

Moreover, the beam is subjected to homogeneous initial conditions:

and to the boundary conditions:

In other words, the beam is clamped at its left end \(\partial \Omega _{D} = \{0 \} \times (0, 1) \times (0, 1)\), and an external load \(g_{N} \cdot n\) is applied on its top surface \(\partial \Omega _{N} = (0, 6) \times \{ 1 \} \times (0, 1)\) such that:

where \(t_{1} = 0.625\) and \(t_{2} = 0.75\). In other words, the external load pulls the beam upwards for \(t \in [0, t_{1})\) and pushes it downwards for \(t \in [t_{1}, t_{2})\) (see Fig. 2). Finally, the beam is free on the remainder of the boundary \(\partial \Omega _{0} = \partial \Omega \backslash (\partial \Omega _{D} \cup \partial \Omega _{N})\). In the space-discrete, time-continuous Hamiltonian formalism, the problem reads:

with:

where the stiffness and mass matrices, K and M respectively, result from the enforcement of the homogeneous Dirichlet boundary conditions by eliminating the corresponding rows and columns; the right-hand side \({\varvec{f}}\) is computed from the prescribed Neumann boundary conditions.
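Eliminating the rows and columns associated with the clamped degrees of freedom amounts to extracting the sub-matrices on the free DOFs. A minimal sketch with SciPy sparse matrices, in which the helper names and the DOF numbering are hypothetical:

```python
import numpy as np
import scipy.sparse as sp


def eliminate_dirichlet(K, M, f, fixed_dofs):
    """Keep only the free DOFs (homogeneous Dirichlet BCs by row/column removal)."""
    n = K.shape[0]
    free = np.setdiff1d(np.arange(n), fixed_dofs)
    K, M = sp.csr_matrix(K), sp.csr_matrix(M)
    K_free = K[free][:, free]
    M_free = M[free][:, free]
    return K_free, M_free, np.asarray(f)[free], free


def expand_to_full(u_free, free, n):
    """Re-insert zeros at the clamped DOFs, e.g. for post-processing."""
    u = np.zeros(n)
    u[free] = u_free
    return u
```

Because the prescribed values are zero, no lifting term has to be added to the right-hand side; non-homogeneous conditions would require one.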

The values of the parameters are chosen as follows:

and the Lamé coefficients are evaluated as:

The time domain \({\mathcal {I}}\) is divided into \(n_{t}= 4800\) sub-intervals of equal size. The space domain \(\Omega \) is partitioned into linear tetrahedral elements, and five discretizations are considered, such that the number of spatial degrees of freedom (DOF) \(2 n\) is chosen in \(\{ 1302, 6204, 36\,774, 67\,032, 244\,926 \}\). The number of Ritz vectors is set to \(r = 300\) regardless of the spatial discretization. Unless otherwise stated, the reduced-order models are assessed on solutions involving \(m = 50\) modes.
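For reference, the Lamé coefficients of an isotropic material are conventionally obtained from Young's modulus \(E\) and Poisson's ratio \(\nu\) through \(\lambda = E\nu / ((1+\nu)(1-2\nu))\) and \(\mu = E / (2(1+\nu))\). The sketch below assumes these standard relations; the values in the usage line are illustrative and are not the parameters of this study. With \(n_{t} = 4800\) sub-intervals over \((0, 5)\) s, the time step is \(h_{t} = 5/4800 \approx 1.04 \times 10^{-3}\) s.

```python
def lame_coefficients(E, nu):
    """Isotropic Lamé coefficients (lambda, mu) from Young's modulus and Poisson's ratio."""
    if not -1.0 < nu < 0.5:
        raise ValueError("Poisson's ratio must lie in (-1, 0.5)")
    lam = E * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))
    mu = E / (2.0 * (1.0 + nu))
    return lam, mu


# Illustrative values only: nu = 1/4 gives lambda = mu = E / 2.5
lam, mu = lame_coefficients(1.0, 0.25)
```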

Comparison method and performance criteria

We shall report the results based on the following four features:

1. The number of fixed-point iterations without and with Aitken acceleration;
2. The accuracy of the PGD approximations with respect to full-order solutions, namely the FEM solutions described in the “Spatial discretization” section;
3. The actual execution time of the different approaches and algorithms. The time efficiency of the PGD solvers will be detailed for the successive phases of the computation, namely the pre-processing, the fixed-point algorithm, the Gram–Schmidt algorithm, and the temporal update procedure;
4. The scalability of the approaches with respect to the size of the spatial discretization.

The relative error \(\epsilon _{\text { {ROM}}}\) in the reduced-order approximations with respect to the full-order solutions computed by the FEM is given by:

where the norm is the energy norm, defined as:

More precisely, in the space-discrete Hamiltonian framework, the energy norm will be evaluated as follows:

Note that the full-order solution computed by the FEM is obtained using the same discretization parameters.
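A sketch of how such a relative error could be evaluated, under two assumptions: the energy norm of \({\varvec{z}} = ({\varvec{q}}, {\varvec{p}})\) is taken as \({\varvec{q}}^{T} K {\varvec{q}} + {\varvec{p}}^{T} M^{-1} {\varvec{p}}\) (consistent with orthonormalizing the modes with K and \(M^{-1}\)), and the squared norms are summed over the time nodes (a uniform time step cancels in the ratio). The dense Cholesky factorization of M via `scipy.linalg.cho_factor` is for illustration only; a sparse factorization would be used at scale. Function names are hypothetical.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve


def energy_sq(Q, P, K, M_chol):
    """Sum over time nodes of q^T K q + p^T M^{-1} p.

    Q, P have shape (n, n_t): displacements and momenta, one column per time node.
    M_chol is the Cholesky factorization of M returned by scipy.linalg.cho_factor.
    """
    return np.sum(Q * (K @ Q)) + np.sum(P * cho_solve(M_chol, P))


def relative_energy_error(Q_fom, P_fom, Q_rom, P_rom, K, M):
    """Relative energy-norm error of a ROM trajectory with respect to the full-order one."""
    M_chol = cho_factor(M)
    num = energy_sq(Q_fom - Q_rom, P_fom - P_rom, K, M_chol)
    den = energy_sq(Q_fom, P_fom, K, M_chol)
    return np.sqrt(num / den)
```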

The reduced-order approximations considered are the Singular Value Decomposition (SVD) of the full-order solution; the PGD LU, which factorizes the spatial operator by LU decomposition at each fixed-point iteration; and the PGD Ritz, which computes the reduced-order model in the subspace spanned by the Ritz vectors. More precisely, we will present the errors with respect to the number of modes m in the PGD solutions and compare them with those obtained by performing, a posteriori, an SVD of the full-order solutions.
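The SVD baseline can be sketched as a truncated SVD of a snapshot matrix whose columns are the full-order states at the time nodes. By the Eckart–Young theorem, the rank-m truncation is optimal in the Frobenius norm, and its error is given by the discarded singular values. This sketch uses the Euclidean inner product; an SVD in the energy norm would first weight the snapshots (e.g. with Cholesky factors of the energy weighting), and which variant is used for the comparison is not specified in this section. The function name is hypothetical.

```python
import numpy as np


def truncated_svd_errors(Z, ranks):
    """Relative Frobenius error of the rank-m truncated SVD of a snapshot matrix Z."""
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    norm_Z = np.sqrt(np.sum(s**2))          # equals ||Z||_F
    errors = {}
    for m in ranks:
        Z_m = (U[:, :m] * s[:m]) @ Vt[:m]   # best rank-m approximation
        errors[m] = np.linalg.norm(Z - Z_m) / norm_Z
    return s, errors
```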

As far as computer times are concerned, all computations were run on a computer with the following configuration:

- CPU: AMD Ryzen 7 PRO 4750U @ 1.7 GHz per core (8 cores, 16 threads);
- RAM: 38 GB;
- OS: Arch Linux.

The code was written using Python 3.9.17 with NumPy 1.25.0 [17] and SciPy 1.10.1 [29] built from sources and linked against BLAS/LAPACK and SuiteSparse [11].

Numerical results

Aitken acceleration. The relaxation technique significantly reduces the number of fixed-point iterations (see Fig. 3). For 20 modes, Aitken acceleration saves five iterations per enrichment on average, and over 100 iterations in total for the full computation. Moreover, without Aitken acceleration, the fixed-point procedure sometimes terminates without reaching convergence, as is the case for modes 2, 4, and 6 in Fig. 3. Indeed, the maximum number of iterations in this example is set to 35: if convergence is not reached within 35 iterations, the fixed-point procedure is aborted and the last computed mode is retained. Thus, Aitken acceleration not only increases computational efficiency but also allows the convergence criterion to be met where it might not be otherwise. Slight discrepancies in the temporal modes may be noticeable between the results obtained with and without acceleration (see Fig. 4). In contrast, Aitken acceleration causes no significant difference in the spatial modes for either of the two PGD approaches, as illustrated in Fig. 8.

Visualization [9] of the first three temporal modes (normalized) with and without Aitken acceleration, herein denoted \(\widetilde{\psi }_{i}^{q}\) and \(\psi _{i}^{q}\), respectively
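The effect of the acceleration on a fixed-point loop can be sketched with a vector form of Aitken's delta-squared process, often called Aitken dynamic relaxation (Irons–Tuck update), in which a relaxation factor is updated from two successive residuals. This is an illustrative variant, not necessarily the exact update used by the solver; the helper name and the linear test map are hypothetical. The default cap of 35 iterations mirrors the limit described above, after which the last iterate is returned unconverged.

```python
import numpy as np


def fixed_point(g, x0, tol=1e-10, max_iter=35, aitken=True, omega0=1.0):
    """Iterate x <- g(x), optionally with Aitken dynamic relaxation.

    Returns (x, iterations, converged). With r = g(x) - x the fixed-point residual,
    the relaxation factor is updated as
    omega_k = -omega_{k-1} * r_{k-1}.(r_k - r_{k-1}) / ||r_k - r_{k-1}||^2.
    """
    x = np.array(x0, dtype=float)
    omega, r_prev = omega0, None
    for k in range(max_iter):
        r = g(x) - x
        if np.linalg.norm(r) <= tol:
            return x, k, True
        if aitken and r_prev is not None:
            dr = r - r_prev
            dr_sq = dr @ dr
            if dr_sq > 0.0:
                omega = -omega * (r_prev @ dr) / dr_sq
        x = x + omega * r  # relaxed update (plain iteration when omega = 1)
        r_prev = r
    return x, max_iter, False


# Hypothetical slowly contracting linear map x -> B x + b
B = np.diag(np.linspace(0.5, 0.9, 20))
b = np.ones(20)
x_plain, k_plain, ok_plain = fixed_point(lambda x: B @ x + b, np.zeros(20), max_iter=1000, aitken=False)
x_acc, k_acc, ok_acc = fixed_point(lambda x: B @ x + b, np.zeros(20), max_iter=1000, aitken=True)
```

On this example, the plain iteration contracts only by a factor of about 0.9 per step, whereas the relaxed version reaches the same tolerance in far fewer iterations.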

ROM accuracy. Figure 5 shows the errors of the reduced-order models with respect to the FEM solutions for \(2n = 244\,926\) spatial degrees of freedom. We observe that the errors decrease significantly for both the PGD LU and PGD Ritz approaches over the first 20 modes. In fact, the accuracy of the PGD Ritz solution is similar to that of the PGD LU solution. Moreover, the convergence of the two PGD approximations is comparable to that of the SVD, at least for the first 20 modes, before reaching a plateau.

Execution time and scalability. Figures 6 and 7 show the total and detailed wall-clock execution times of the different methods, respectively. We remark that the PGD solver is not competitive when the number of degrees of freedom remains low. We also observe that, except in the case with 1302 spatial degrees of freedom, the PGD Ritz outperforms all other methods. On the one hand, the SVD, as an a posteriori method, requires full-order snapshots to build a reduced-order model; moreover, extracting the principal components from the data takes as much time as the full-order computation itself. On the other hand, the Ritz version of the PGD solver, as an a priori method, requires no prior knowledge of the full-order solution and reaches an error comparable to that of the SVD for the first 20 modes. Admittedly, the PGD Ritz does not reach an error as low as that of the SVD. However, the difference in error is small enough, in view of the speedup, to justify using the PGD Ritz rather than the SVD (see Table 1). Conversely, the use of the PGD LU in this context cannot really be justified over the SVD.

Regarding the detailed execution times, the pre-processing phase appears to have a comparable cost for the two PGD solvers. In other words, computing a Cholesky factorization of M is about as costly as computing the Ritz pairs. Nevertheless, carrying out the PGD computation in the subspace spanned by the Ritz vectors drastically improves the performance of each of the subsequent phases, namely the fixed-point, Gram–Schmidt, and temporal update procedures.

Further discussion

The PGD Ritz solver is overall much more efficient than the other approaches and offers a remarkably good compromise in terms of error decay. Moreover, this novel approach displays good scalability with respect to the number of spatial degrees of freedom, with a reasonable error for a relatively small number of modes, which is highly suitable in model-order reduction. The PGD Ritz solver could be interpreted as a hybrid approach between classic PGD solvers and Modal Decomposition methods. In that respect, the relevance of the PGD Ritz over classic PGD solvers is unequivocal in a space-time separated context. Yet, its advantage over Modal Decomposition must be discussed, as well as its potential to perform well if separation with additional parameters (material, geometric, etc.) had to be accounted for.

Around the 20th mode, we observe in Fig. 5 that the error decay slows down or even stagnates for the PGD Ritz. Since computations are carried out in the subspace spanned by the Ritz vectors, it is intuitively understandable that the quality of the PGD approximation is bounded by the information contained in these vectors. Figure 5 illustrates this idea: after the first 20 enrichments, the error in the PGD Ritz solution matches the error in the response computed by Modal Decomposition (MD) with \(r = 300\) modes (the number of Ritz vectors). On the one hand, Ritz vectors describe the natural response of the system, so not all of them are relevant to describe the structural response under external loads. Mode participation factors, or methods such as sensitivity analysis or mode shape analysis, may provide insight for selecting a set of vectors that capture a given dynamic behavior. However, these approaches can be tedious, as they may require user intervention to interpret the results, which makes the process subjective and less repeatable. On the other hand, the PGD solver inherently accounts for the external loads to compute relevant modes that accurately describe the structural response. In the PGD Ritz framework, this translates into finding linear combinations of the Ritz vectors that satisfy the PGD spatial formulation (22), which derives from the Galerkin finite element formulation. This is well illustrated by Fig. 8: the first three modes of Modal Decomposition are the dominant deformation modes for the beam geometry, namely vertical bending, lateral bending, and torsion. However, for the given external load, lateral bending and torsion are not relevant.
We can thus see that, like Modal Decomposition, the PGD solvers compute a first mode corresponding to vertical bending, but the subsequent modes also contribute to the description of vertical bending, which is indeed the dominant mode in the structure's response to the prescribed load.
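For comparison, an MD response with r retained modes can be sketched as undamped modal superposition: with M-orthonormal modes X and eigenvalues \(\lambda_{i}\), \({\varvec{q}}(t) \approx X \varvec{\eta}(t)\) with \(\ddot{\eta}_{i} + \lambda_{i} \eta_{i} = ({\varvec{x}}_{i})^{T}{\varvec{f}}(t)\). The sketch assumes zero initial conditions, as in the test case, and advances each modal oscillator with Crank–Nicolson in first-order form; the time integrator actually used for the MD reference is an assumption here, and the function name is hypothetical.

```python
import numpy as np


def modal_response_cn(lam, X, load, t):
    """Undamped modal superposition q(t) ~ X eta(t), eta'' + lam * eta = X^T f(t).

    lam: (r,) eigenvalues; X: (n, r) M-orthonormal modes; load(s) -> f(s) of shape (n,).
    Each modal oscillator is written in first-order form (eta, pi = eta') and
    advanced with Crank-Nicolson from rest. Returns Q of shape (n, len(t)).
    """
    eta = np.zeros_like(lam, dtype=float)
    pi = np.zeros_like(lam, dtype=float)
    g_old = X.T @ load(t[0])
    Q = np.zeros((X.shape[0], t.size))
    for i in range(1, t.size):
        h = t[i] - t[i - 1]
        g_new = X.T @ load(t[i])
        # CN equations per mode: eta1 - h/2 pi1 = a and pi1 + h/2 lam eta1 = b
        a = eta + 0.5 * h * pi
        b = pi - 0.5 * h * lam * eta + 0.5 * h * (g_old + g_new)
        eta = (a + 0.5 * h * b) / (1.0 + 0.25 * h * h * lam)  # closed-form 2x2 solve
        pi = b - 0.5 * h * lam * eta
        g_old = g_new
        Q[:, i] = X @ eta
    return Q
```

Since the modal equations are decoupled, the cost per time step is linear in r, but every retained mode is integrated whether or not the load excites it.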

Figure 5 also shows that, while the error in the PGD Ritz solution reaches a plateau, the error in the PGD LU solution keeps decreasing as the number of modes increases. Thus, if error stagnation is detected while the error remains above a given tolerance, two strategies can be considered:

- Restarting the Arnoldi algorithm to compute additional Ritz vectors (i.e. increasing r), so as to enrich the search space for new PGD modes;
- Switching back to the PGD LU algorithm.

The methodology can be straightforwardly extended to viscoelastic systems modeled with Rayleigh damping, allowing for the construction of a parametric reduced-order model with respect to the Rayleigh damping coefficients. Indeed, since Rayleigh damping is a linear combination of K and M, the damping term does not change the matrix pattern of the system (22) to be solved for the spatial mode. In [10], the parametric eigenproblem \(K_{\mu } {\varvec{u}}(\varvec{\mu }) = \lambda (\varvec{\mu }) M_{\mu } {\varvec{u}}(\varvec{\mu })\) is solved for applications in structural dynamics, where the stiffness \(K_{\mu }\) and mass \(M_{\mu }\) operators depend on material or geometric parameters \(\varvec{\mu }\). The authors introduce an original method to solve this parametric eigenproblem within the PGD framework, so as to find approximations of the eigenpairs \(\left( \lambda (\varvec{\mu }), {\varvec{u}}(\varvec{\mu }) \right) \) in a parameter-separated format. Their approach could be used to provide a parametrized subspace onto which the spatial problem (22) can be projected to recover a diagonal structure as in (31). The PGD Ritz would then optimize the selection of the eigenvectors that contribute to the structural response. Therefore, the PGD Ritz could be an effective tool to compute the dynamic response of structures subjected to time-dependent loads, even in a parametrized setting.

Furthermore, the possibility of choosing a symplectic time integrator, combined with the preservation of the symplecticity of the spatial modes, offers an appropriate foundation for extending this work. It may allow for the development of a reduction technique suited to elastodynamics problems that involve large rotations and small strains, as presented in [26]. Finally, the proposed approach makes it possible to consider the construction of a PGD Ritz aimed at minimizing the error with respect to a Quantity of Interest (QoI) [18]. The PGD subproblems would be modified so that combinations of the Ritz vectors are sought so as to minimize a residual over a QoI. These topics will be studied in future works.

Conclusion

The PGD solver developed here combines good accuracy and efficiency, even with an increased number of degrees of freedom. Computing the PGD modes in the subspace spanned by the Ritz vectors proves effective, as it substantially accelerates the computation without introducing a significant approximation error. Aitken acceleration and the orthogonalization procedures matter less for computational efficiency, but they ensure convergence and stability properties that are essential to the solver. In addition, the solver, which is based on the Hamiltonian formalism, builds reduced models for both the generalized coordinates and the conjugate momenta. It has been shown that it allows the construction of a symplectic reduced basis, thus respecting the structure of canonical Hamiltonian mechanics. This is an interesting feature, as it opens up a variety of avenues related to this fundamental structure in dynamics. The numerical results also show great promise regarding the viability of this approach for solving linear elastodynamics problems on three-dimensional structures.

Data availability

All data generated or analysed during this study are included in this published article or available from the corresponding author on reasonable request.

References

Afkham BM, Hesthaven JS. Structure preserving model reduction of parametric Hamiltonian systems. SIAM J Sci Comput. 2017;39(6):A2616–44.

Aitken AC. XII.—Further numerical studies in algebraic equations and matrices. Proc R Soc Edinb. 1932;51:80–90.

Ammar A, Chinesta F, Falcó A. On the convergence of a greedy rank-one update algorithm for a class of linear systems. Arch Comput Methods Eng. 2010;17:473–86.

Bamer F, Bucher C. Application of the proper orthogonal decomposition for linear and nonlinear structures under transient excitation. Acta Mechanica. 2012;223:2549–63.

Boucinha L. Réduction de modèle a priori par séparation de variables espace-temps: application en dynamique transitoire. Theses, INSA de Lyon; 2013. p. 163–6.

Boucinha L, Ammar A, Gravouil A, Nouy A. Ideal minimal residual-based proper generalized decomposition for non-symmetric multi-field models—application to transient elastodynamics in space-time domain. Comput Methods Appl Mech Eng. 2014;273:56–76.

Boucinha L, Gravouil A, Ammar A. Space-time proper generalized decompositions for the resolution of transient elastodynamic models. Comput Methods Appl Mech Eng. 2013;255:67–88.

Buchfink P, Glas S, Haasdonk B. Optimal bases for symplectic model order reduction of canonizable linear Hamiltonian systems. IFAC-PapersOnLine (10th Vienna international conference on mathematical modelling MATHMOD 2022). 2022;55(20):463–8.

Cadiou C. Matplotlib label lines. Version v0.5.1. 2022. https://doi.org/10.5281/zenodo.7428071.

Cavaliere F, Zlotnik S, Sevilla R, Larrayoz X, Díez P. Nonintrusive parametric solutions in structural dynamics. Comput Methods Appl Mech Eng. 2022;389: 114336.

Chen Y, Davis TA, Hager WW, Rajamanickam S. Algorithm 887: CHOLMOD, supernodal sparse Cholesky factorization and update/downdate. ACM Trans Math Softw. 2008;35(3):1–4.

Chinesta F, Leygue A, Bordeu F, Aguado JV, Cueto E, González D, Alfaro I, Ammar A, Huerta A. PGD-based computational Vademecum for efficient design, optimization and control. Arch Comput Methods Eng. 2013;20:31–59.

Díez P, Zlotnik S, García A, Huerta A. Encapsulated PGD algebraic toolbox operating with high-dimensional data. Arch Comput Methods Eng. 2019;27:11.

Fischer H, Roth J, Wick T, Chamoin L, Fau A. MORe DWR: space-time goal-oriented error control for incremental POD-based ROM. 2023;04 .

Géradin M, Rixen DJ. Mechanical vibrations: theory and application to structural dynamics. Newark: Wiley; 1994.

Gosselet P, Rey C, Pebrel J. Total and selective reuse of Krylov subspaces for the resolution of sequences of nonlinear structural problems. Int J Numer Methods Eng. 2013;94(1):60–83.

Harris CR, Millman KJ, van der Walt SJ, Gommers R, Virtanen P, Cournapeau D, Wieser E, Taylor J, Berg S, Smith NJ, Kern R, Picus M, Hoyer S, van Kerkwijk MH, Brett M, Haldane A, del Río JF, Wiebe M, Peterson P, Gérard-Marchant P, Sheppard K, Reddy T, Weckesser W, Abbasi H, Gohlke C, Oliphant TE. Array programming with NumPy. Nature. 2020;585(7825):357–62.

Kergrene K, Chamoin L, Laforest M, Prudhomme S. On a goal-oriented version of the proper generalized decomposition method. J Sci Comput. 2019;81:92–111.

Kerschen G, Golinval J-C, Vakakis A, Bergman L. The method of proper orthogonal decomposition for dynamical characterization and order reduction of mechanical systems: an overview. Nonlinear Dyn. 2005;41:147–69.

Lew AJ, Marsden JE, Ortiz M, West M. An overview of variational integrators. In: Finite element methods: 1970’s and beyond. Barcelona: CIMNE; 2004.

Lu K, Jin Y, Chen Y, Yang Y, Hou L, Zhang Z, Li Z, Fu C. Review for order reduction based on proper orthogonal decomposition and outlooks of applications in mechanical systems. Mech Syst Signal Process. 2019;123:264–97.

Malik MH, Borzacchiello D, Aguado JV, Chinesta F. Advanced parametric space-frequency separated representations in structural dynamics: a harmonic-modal hybrid approach. Comptes Rendus Mécanique. 2018;346(7):590–602.

Peng L, Mohseni K. Symplectic model reduction of Hamiltonian systems. SIAM J Sci Comput. 2016;38:A1–27.

Quaranta G, Argerich Martin C, Ibañez R, Duval J L, Cueto E, Chinesta F. From linear to nonlinear PGD-based parametric structural dynamics. Comptes Rendus Mécanique. 2019;347(5):445–54.

Razafindralandy D, Hamdouni A, Chhay M. A review of some geometric integrators. Adv Model Simul Eng Sci. 2018;5:1–67.

Simo J, Tarnow N. The discrete energy-momentum method. Conserving algorithms for nonlinear elastodynamics. Zeitschrift für angewandte Mathematik und Physik ZAMP. 1992;43:757–92.

Sorensen DC. Implicitly restarted Arnoldi/Lanczos methods for large scale eigenvalue calculations. Dordrecht: Springer; 1997. p. 119–65.

Vella C, Prudhomme S. PGD reduced-order modeling for structural dynamics applications. Comput Methods Appl Mech Eng. 2022;402: 115736.

Virtanen P, Gommers R, Oliphant TE, Haberland M, Reddy T, Cournapeau D, Burovski E, Peterson P, Weckesser W, Bright J, van der Walt SJ, Brett M, Wilson J, Millman KJ, Mayorov N, Nelson ARJ, Jones E, Kern R, Larson E, Carey CJ, Polat İ, Feng Y, Moore EW, VanderPlas J, Laxalde D, Perktold J, Cimrman R, Henriksen I, Quintero EA, Harris CR, Archibald AM, Ribeiro AH, Pedregosa F, van Mulbregt P, SciPy 1.0 Contributors. SciPy 1.0: fundamental algorithms for scientific computing in python. Nat Methods. 2020;17:261–72.

Acknowledgements

Not applicable.

Funding

Clément Vella acknowledges the funding awarded by the French Ministry of National Education, Higher Education, Research and Innovation referred to as “Specific Doctoral Contracts for Normaliens”. Serge Prudhomme is grateful for the support by a Discovery Grant from the Natural Sciences and Engineering Research Council of Canada [Grant Number RGPIN-2019-7154].

Author information


Contributions

Clément Vella: conceptualization, methodology, software, validation, formal analysis, investigation, writing—original draft, visualization. Pierre Gosselet: conceptualization, methodology, formal analysis, writing—review and editing. Serge Prudhomme: conceptualization, formal analysis, writing—review and editing, supervision, funding acquisition, discussions.


Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Time operators


The computation of time integrals is required to evaluate the coefficients of the problem in space presented in “Problem in space” section, i.e. \(k_{t}\), \(c_{t}\), \(d_{t}\), and \(m_{t}\). Let \(u = u(t)\) and \(v = v(t)\) be two functions of time and assume they are sufficiently regular. We consider continuous, piecewise linear approximations of u and v, which read in the case of u, and in a similar manner for v:

with \(h_{t} = t^{i} - t^{i - 1}\). We can now define the vectors \({\varvec{u}}, {\varvec{v}}\in {\mathbb {R}}^{n_{t}}\) as:

The time integrals are then approximated as:

with \(A_{t}\) and \(C_{t}\) the time operators such that:
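As an illustration of such operators, consider hat functions on a uniform grid \(t^{i} = i\,h_{t}\) with the node \(t^{0}\) removed (homogeneous initial value, leaving \(n_{t}\) unknowns). The integrals \(\int u v \, dt\) and \(\int \dot{u} v \, dt\) are then computed exactly by a tridiagonal "time mass" matrix and a nearly skew-symmetric "time derivative" matrix. The sketch below assumes this setting; which of these matrices correspond to \(A_{t}\) and \(C_{t}\), and in which orientation, is an assumption rather than the appendix's definition, and the function name is hypothetical.

```python
import numpy as np
import scipy.sparse as sp


def p1_time_operators(n_t, h):
    """Time matrices for hat functions on a uniform grid t^i = i*h, i = 1..n_t.

    The node t^0 is excluded (homogeneous initial value). With u, v the nodal
    vectors, u @ (mass @ v) = int u v dt and u @ (deriv @ v) = int u' v dt
    over (0, n_t*h), both exact for piecewise linear u and v.
    """
    off = np.ones(n_t - 1)
    mass_diag = np.full(n_t, 2.0 * h / 3.0)
    mass_diag[-1] = h / 3.0   # last hat function only has a left half
    mass = sp.diags([h / 6.0 * off, mass_diag, h / 6.0 * off], [-1, 0, 1], format="csr")
    deriv_diag = np.zeros(n_t)
    deriv_diag[-1] = 0.5      # int phi' phi over the last interval
    deriv = sp.diags([0.5 * off, deriv_diag, -0.5 * off], [-1, 0, 1], format="csr")
    return mass, deriv


# Usage: the linear function u(t) = t on (0, 1) with n_t = 10
n_t, T = 10, 1.0
h = T / n_t
t_nodes = h * np.arange(1, n_t + 1)
mass, deriv = p1_time_operators(n_t, h)
int_uu = t_nodes @ (mass @ t_nodes)      # int_0^1 t^2 dt = 1/3
int_du_u = t_nodes @ (deriv @ t_nodes)   # int_0^1 1 * t dt = 1/2
```

The sum of the derivative matrix and its transpose only retains the end-point term \(u(T)v(T)\), which is the discrete counterpart of integration by parts with a zero initial value.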

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vella, C., Gosselet, P. & Prudhomme, S. An efficient PGD solver for structural dynamics applications. Adv. Model. and Simul. in Eng. Sci. 11, 15 (2024). https://doi.org/10.1186/s40323-024-00269-z


DOI: https://doi.org/10.1186/s40323-024-00269-z