Abstract

In this paper, we propose a new feature descriptor, named local mesh quantized extrema patterns (LMeQEP), for image indexing and retrieval. Standard local quantized patterns capture spatial relationships in the form of larger or deeper texture patterns based on the relative variations in the gray values of the center pixel and its neighbors. Directional local extrema patterns explore the directional information in the 0°, 90°, 45° and 135° directions for a pixel positioned at the center. A mesh structure is created from the quantized extrema to derive significant textural information. First, the directional quantized data from the mesh structure are extracted to form the LMeQEP of a given image. Then, an RGB color histogram is built and integrated with the LMeQEP to enhance the performance of the system. To test the proposed method, experiments are conducted on the benchmark image repositories MIT VisTex and Corel-1k. Average retrieval rate (ARR) and average retrieval precision (ARP) are used as the evaluation metrics. The experimental results show a considerable improvement over other recent image retrieval techniques.


Background

Due to the proliferation of digital technologies and allied areas, large numbers of images are created every moment, and hence there is a pressing need for systems that can organize these images efficiently for effective use. In text-based retrieval, images are manually annotated so that they can be organized and retrieved from repositories. However, the amount of labor involved and the subjectivity of interpretation are the major limitations of text-based methods. The varied, rich content of images is the bottleneck in perceiving their information; in other words, human perception of an image varies from person to person. Content-based image retrieval (CBIR) has been a significant approach to addressing the issues of text-based methods: the inherent contents of an image, such as shape, color and texture, are used to retrieve images from the repository. Detailed surveys of image retrieval methods are available in Gai et al. (2013), Shi et al. (2011), Smeulders et al. (2000), Tang et al. (2013) and Rui and Huang (1999).

The color content of an image provides substantial information about a scene, and this information can be used to retrieve images. Researchers have proposed many color-based search techniques, a few of which are outlined in this section. Swain and Ballard (1991) proposed a method based on color histograms and histogram intersection. Stricker and Orengo (1995) introduced two color indexing methods using cumulative color histograms and color moments. Idris and Panchanathan (1997) proposed a vector quantization method to obtain code words that form the feature vector. Similarly, Lu and Burkhardt (2005) introduced a color image retrieval method that combines vector quantization with the discrete cosine transform. Qiu (2003) used block truncation coding (BTC), a popular image compression technique, to derive two features: the block pattern histogram and the block color co-occurrence matrix. Huang et al. (1997) introduced the color correlogram for image retrieval. By incorporating spatial information, Pass and Zabih (1996) devised color coherence vectors (CCV). To capture spatial information, Rao et al. (1999) modified the color histogram into three variants: annular, angular and hybrid color histograms. To handle changes in illumination, orientation and viewpoint geometry with a shorter feature vector, Chang et al. (2005) proposed a modified color co-occurrence matrix.

Another prominent feature in CBIR is texture. Smith and Chang (1996) used the mean and variance of wavelet coefficients as texture features for image retrieval. Ahmadian and Mostafa (2003) used the Gabor wavelet transform for texture classification. Subrahmanyam et al. (2011) modified the concept of the correlogram using wavelets and rotated wavelets (WC + RWC). The local binary pattern (LBP), a spatial-domain operator, was introduced by Ojala et al. (1996) and later extended to rotation-invariant texture classification (Ojala et al. 2002). Pietikainen et al. (2000) proposed rotation-invariant texture classification using feature distributions. Zhao and Pietikainen (2007) applied LBP to dynamic texture recognition and facial expression analysis. Heikkilä and Pietikainen (2006) used LBP for background modeling and moving-object detection. Li and Staunton (2008) combined LBP and Gabor filters for texture segmentation. Treating LBP as a non-directional first-order spatial pattern, Zhang et al. (2010) proposed the local derivative pattern for face recognition. Heikkilä et al. (2009) combined a modified version of LBP, called center-symmetric LBP, with the scale invariant feature transform (SIFT) to describe interest regions. Jabid et al. (2010) proposed the local directional pattern, an extension of the LBP concept based on edge responses. Subrahmanyam et al. designed several spatial-pattern-based feature descriptors for natural and texture image retrieval: local tetra patterns (LTrP) (Subrahmanyam et al. 2012a), local maximum edge binary patterns (LMEBP) (Subrahmanyam et al. 2012b) and directional local extrema patterns (DLEP) (Subrahmanyam et al. 2012c). For biomedical image retrieval, they proposed directional binary wavelet patterns (DBWP) (Subrahmanyam et al. 2012d), local mesh patterns (LMeP) (Subrahmanyam and Wu 2014) and local ternary co-occurrence patterns (LTCoP) (Subrahmanyam and Wu 2013).
Reddy and Reddy (2014) added magnitude information to DLEP to improve retrieval performance. To address some issues of LBP, Hussain and Triggs (2012) introduced local quantized patterns (LQP) for visual recognition. Verma et al. (2015a, b) proposed local-pattern-based descriptors for color, texture, face and biomedical image retrieval, and Koteswara Rao and Venkata Rao (2015) proposed local quantized extrema patterns (LQEP) for natural and texture image retrieval. Vipparthi et al. (2015) integrated local patterns and Gabor features to propose a feature descriptor.

Recently, combinations of texture and color features have proved effective in image retrieval. Jhanwar et al. (2004) introduced the motif co-occurrence matrix (MCM) for content-based image retrieval and applied it to the red (R), green (G) and blue (B) color planes. Vadivel et al. (2007) proposed an integrated color and intensity co-occurrence matrix; after analyzing the properties of the HSV color space, they suggested appropriate weights to extract the relative contributions of the color and intensity levels of a pixel.

Recent spatial-pattern methods, LQP (Hussain and Triggs 2012) and DLEP (Subrahmanyam et al. 2012c), have motivated us to propose local mesh quantized extrema patterns (LMeQEP) for image indexing and retrieval. The primary contributions of this work are as follows: (1) the proposed method extracts a mesh-quantized HVDA5 structure from an image; (2) directional extrema information is collected from the set of mesh patterns to create the LMeQEP; (3) the LMeQEP and an RGB color histogram are integrated to improve performance; and (4) experiments are conducted on standard image databases for natural and texture image retrieval.

The paper is organized as follows. A brief review of image retrieval and related work is given above. Section “A review of existing spatial local patterns” reviews existing feature extractors based on local patterns, and Sect. “Local quantized extrema patterns (LQEP)” summarizes LQEP. The proposed descriptor is described in Sect. “Local mesh quantized extrema patterns (LMeQEP)”, and the retrieval framework and similarity measures in Sect. “Proposed image retrieval system”. Experimental results and discussion are given in Sect. “Experimental results and discussion”, and conclusions are drawn in Sect. “Conclusions”.

A review of existing spatial local patterns

Local binary patterns (LBP)

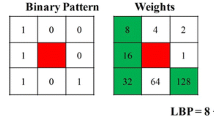

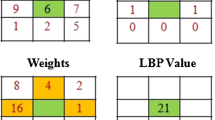

Ojala et al. (1996) proposed the LBP operator, a modified form of the texture unit, for texture analysis. Its speed (no parameters need to be set) and simplicity have made LBP prominent in many research directions. LBP has been applied to face recognition and analysis (Pietikainen et al. 2000; Zhao and Pietikainen 2007), object tracking (Subrahmanyam et al. 2012b), texture classification (Ojala et al. 1996, 2002; Pietikainen et al. 2000; Zhao and Pietikainen 2007), content-based retrieval systems (Subrahmanyam et al. 2012a, b, c, d; Subrahmanyam and Wu 2014, 2013; Reddy and Reddy 2014) and fingerprint recognition. In a small 3 × 3 spatial neighborhood, the center pixel serves as the threshold that yields a local binary pattern. The LBP of a center pixel with respect to its neighbors is computed according to Eqs. (1) and (2):
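Written with the symbols defined below, the standard LBP formulation (Ojala et al. 2002) that Eqs. (1) and (2) express is the following; indexing the neighbors as p = 1, …, X is the usual convention and is assumed here:

```latex
LBP_{X,Y} = \sum_{p=1}^{X} 2^{(p-1)} \, f_1\left(I(n_p) - I(n_c)\right) \qquad (1)

f_1(x) = \begin{cases} 1, & x \ge 0 \\ 0, & \text{otherwise} \end{cases} \qquad (2)
```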

where \(I(n_c)\) is the gray value of the center pixel, \(I(n_p)\) is the gray value of its pth neighbor, X is the number of neighbors and Y is the radius of the neighborhood.
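A minimal NumPy sketch of Eqs. (1) and (2) for the 3 × 3 case (X = 8, Y = 1) is given below. The neighbor ordering (counter-clockwise from the right-hand neighbor) and skipping border pixels are assumptions of the sketch; any fixed ordering yields an equivalent descriptor up to a consistent relabeling of the codes.

```python
import numpy as np


def lbp_3x3(gray: np.ndarray) -> np.ndarray:
    """LBP codes (X = 8 neighbors, Y = 1) for every interior pixel; border pixels are skipped."""
    img = np.asarray(gray, dtype=np.int64)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    # (row, col) offsets of neighbors n_1 .. n_8, counter-clockwise from the right (assumed order)
    offsets = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]
    codes = np.zeros_like(center)
    for p, (dr, dc) in enumerate(offsets):
        neighbor = img[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        # f1(I(n_p) - I(n_c)) = 1 when the neighbor is at least as bright as the center;
        # the (p + 1)-th neighbor contributes weight 2 ** p
        codes += (neighbor >= center).astype(np.int64) << p
    return codes


patch = np.array([[6, 5, 2],
                  [7, 6, 1],
                  [9, 8, 7]])
print(lbp_3x3(patch))  # [[248]]
```

In this patch the five neighbors that are at least 6 occupy bit positions 3 to 7, so the code is 8 + 16 + 32 + 64 + 128 = 248.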

After the LBP is computed for each pixel (j, k), a histogram is built to represent the whole image according to Eq. (3):
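A standard form of this histogram, with an indicator function written here as Eq. (4), counts how many pixels carry each code l:

```latex
H_{LBP}(l) = \sum_{j=1}^{X_1} \sum_{k=1}^{X_2} f_2\left(LBP(j,k),\, l\right), \qquad l \in [0,\, 2^{X} - 1] \qquad (3)

f_2(x, y) = \begin{cases} 1, & x = y \\ 0, & \text{otherwise} \end{cases} \qquad (4)
```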

where the size of the input image is \(X_1 \times X_2\).

Figure 1 illustrates the calculation of LBP for a 3 × 3 neighborhood. The LBP histogram reflects the occurrence and distribution of edges in the image.
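Eqs. (3) and (4) amount to counting code occurrences, which `np.bincount` does directly. A minimal sketch, assuming the code map holds non-negative integers below `num_bins` (256 for X = 8); `pattern_histogram` is a hypothetical helper name:

```python
import numpy as np


def pattern_histogram(code_map: np.ndarray, num_bins: int) -> np.ndarray:
    """Count how many pixels carry each code l = 0 .. num_bins - 1 (Eqs. 3-4)."""
    codes = np.asarray(code_map, dtype=np.int64).ravel()
    if codes.size and (codes.min() < 0 or codes.max() >= num_bins):
        raise ValueError("codes must lie in [0, num_bins)")
    return np.bincount(codes, minlength=num_bins)


hist = pattern_histogram(np.array([[0, 3], [3, 255]]), 256)
print(hist[3], hist.sum(), hist.shape)  # 2 4 (256,)
```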

Center-symmetric LBP (CS_LBP)

Heikkilä et al. (2009) compared center-symmetric pairs of pixels instead of comparing each neighbor with the center pixel. CS_LBP is computed according to Eq. (5):
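With the notation of the LBP equations, a formulation consistent with this description compares each neighbor with the diametrically opposite one, so only X/2 bits (2^{X/2} codes) are produced. Heikkilä et al. (2009) compare the difference against a small threshold T; the form below assumes the zero threshold of f1:

```latex
CS\_LBP_{X,Y} = \sum_{p=1}^{X/2} 2^{(p-1)} \, f_1\left(I(n_p) - I(n_{p+X/2})\right) \qquad (5)
```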

After CS_LBP is computed for each pixel (j, k), a histogram is built in the same way as for LBP, and this histogram is used as the feature vector for retrieval.
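A minimal sketch of CS_LBP and its histogram for X = 8, Y = 1 follows, under the assumptions that neighbors are ordered counter-clockwise from the right-hand neighbor (so neighbor p + 4 is opposite neighbor p) and that the zero-threshold comparison is used. With four comparisons per pixel the histogram has 16 bins, against 256 for LBP:

```python
import numpy as np

# Assumed neighbor order: counter-clockwise from the right; index p + 4 is opposite index p.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def cs_lbp_3x3(gray: np.ndarray) -> np.ndarray:
    """4-bit CS_LBP code per interior pixel: bit p is set when I(n_p) >= I(n_{p+4})."""
    img = np.asarray(gray, dtype=np.int64)
    h, w = img.shape

    def shifted(dr, dc):
        return img[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]

    codes = np.zeros((h - 2, w - 2), dtype=np.int64)
    for p in range(4):
        a = shifted(*OFFSETS[p])
        b = shifted(*OFFSETS[p + 4])
        codes += (a >= b).astype(np.int64) << p
    return codes


def cs_lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """16-bin histogram of CS_LBP codes, used as the feature vector."""
    return np.bincount(cs_lbp_3x3(gray).ravel(), minlength=16)


patch = np.array([[6, 5, 2], [7, 6, 1], [9, 8, 7]])
print(cs_lbp_3x3(patch), cs_lbp_3x3(patch[::-1, ::-1]))  # [[0]] [[15]]
```

Rotating the patch by 180° swaps every center-symmetric pair, which flips all four comparisons in this example.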

Local directional pattern (LDP)

Jabid et al. (2010) presented LDP for human face recognition. The key idea behind LDP is the relative edge response of a pixel in different directions. Eight Kirsch masks are used to obtain edge responses in eight directions; a high response in a particular direction indicates the presence of an edge or corner in that direction. To retain spatial location information along with the pattern, the image is divided into regions. After the local pattern is derived, a histogram with 56 bins is built to represent the values of the encoded image.
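A 56-bin histogram corresponds to keeping the k = 3 strongest of the eight responses, since C(8, 3) = 56. The sketch below assumes the standard Kirsch masks ordered counter-clockwise from east, ranks absolute responses, and omits the division into regions; `ldp_codes` and `ldp_histogram` are illustrative names, not an API from Jabid et al. (2010).

```python
from itertools import combinations

import numpy as np

# Border positions of a 3x3 window, listed clockwise from the top-left corner.
RING = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def kirsch_masks():
    """Eight Kirsch masks: index 0 = east, then counter-clockwise (NE, N, NW, ...)."""
    masks = []
    for k in range(8):
        m = np.full((3, 3), -3, dtype=np.int64)
        m[1, 1] = 0
        for j in (2, 3, 4):  # the east mask has +5 on ring positions 2, 3, 4
            r, c = RING[(j - k) % 8]
            m[r, c] = 5
        masks.append(m)
    return masks


def ldp_codes(gray, k=3):
    """Set bit i when |response_i| is among the k largest of the eight responses."""
    img = np.asarray(gray, dtype=np.int64)
    h, w = img.shape
    responses = np.zeros((8, h - 2, w - 2), dtype=np.int64)
    for i, m in enumerate(kirsch_masks()):
        for r in range(3):
            for c in range(3):
                responses[i] += m[r, c] * img[r:r + h - 2, c:c + w - 2]
    responses = np.abs(responses)
    top = np.argsort(-responses, axis=0, kind="stable")[:k]  # ties: lower mask index wins
    codes = np.zeros((h - 2, w - 2), dtype=np.int64)
    for idx in top:
        codes |= np.left_shift(1, idx)
    return codes


# Map each 3-of-8 code to one of the C(8, 3) = 56 histogram bins.
CODE_TO_BIN = {sum(1 << i for i in combo): b
               for b, combo in enumerate(combinations(range(8), 3))}


def ldp_histogram(gray):
    """56-bin LDP histogram of the whole image (k = 3)."""
    hist = np.zeros(len(CODE_TO_BIN), dtype=np.int64)
    for code in ldp_codes(gray).ravel():
        hist[CODE_TO_BIN[int(code)]] += 1
    return hist
```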

Local quantized patterns (LQP)

Hussain and Triggs (2012) introduced LQP for visual recognition. Directional geometric information is extracted from horizontal, vertical, diagonal and anti-diagonal strips of pixels, in addition to the conventional circular and disk-shaped regions. Figure 2 shows the possible quantized geometric structures for LQP calculation. Further details of LQP are available in Vipparthi et al. (2015).

Directional local extrema patterns (DLEP)

To capture the spatial structure of local texture, Subrahmanyam et al. (2012c) proposed directional local extrema patterns (DLEP) for image retrieval. The key idea behind DLEP is to extract the directional local extrema of a center pixel \(g_c\).

In DLEP, the local extrema in the 0°, 90°, 45° and 135° directions are extracted from the local differences between the center pixel and its neighbors, as given below:

The local extrema are calculated according to Eq. (7):
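Following the formulation of Subrahmanyam et al. (2012c), with the eight neighbors \(g_1, \ldots, g_8\) indexed counter-clockwise from the 0° neighbor (an assumed convention), the differences and the directional extremum test can be written as below. The center counts as an extremum in direction β when both neighbors along β are higher than it, or both lower (a zero difference also passes), so the two differences have a non-negative product:

```latex
I'(g_i) = I(g_i) - I(g_c), \qquad i = 1, 2, \ldots, 8 \qquad (6)

\hat{I}_{\beta}(g_c) = f_3\left(I'(g_j),\, I'(g_{j+4})\right), \qquad j = 1 + \beta/45^{\circ}, \quad \beta \in \{0^{\circ}, 45^{\circ}, 90^{\circ}, 135^{\circ}\}

f_3(x, y) = \begin{cases} 1, & x \cdot y \ge 0 \\ 0, & \text{otherwise} \end{cases} \qquad (7)
```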

Subsequently, DLEP is defined for β = 0°, 45°, 90° and 135° as given below:
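A minimal NumPy sketch of the DLEP computation, under stated assumptions: neighbors are indexed counter-clockwise from the 0° neighbor; the center is an extremum along β when its differences to the two opposite neighbors along β have a non-negative product; and the 9-bit code for direction β packs the extremum bit of the center (bit 0) and of its eight neighbors (bits 1 to 8), giving 512 bins per direction and 2048 in total. The bit order and the helper names are illustrative, not taken from Subrahmanyam et al. (2012c).

```python
import numpy as np

# (row, col) offsets of g_1 .. g_8: g_1 is the 0-degree (right) neighbor, then
# counter-clockwise. This indexing is an assumption of the sketch.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]
ANGLES = (0, 45, 90, 135)


def _shift(a, dr, dc):
    """View of `a` offset by (dr, dc), cropped to the interior (one-pixel border dropped)."""
    h, w = a.shape
    return a[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]


def extrema_map(img, beta):
    """Sign-product extremum test along direction beta at every interior pixel."""
    j = beta // 45                                 # 0-based position of g_j in OFFSETS
    center = _shift(img, 0, 0)
    d1 = _shift(img, *OFFSETS[j]) - center         # I'(g_j)
    d2 = _shift(img, *OFFSETS[j + 4]) - center     # I'(g_{j+4}), the opposite neighbor
    return (d1 * d2 >= 0).astype(np.int64)


def dlep_codes(gray, beta):
    """9-bit code: extremum bit of the center (bit 0) and of g_1..g_8 (bits 1..8)."""
    e = extrema_map(np.asarray(gray, dtype=np.int64), beta)
    code = _shift(e, 0, 0).copy()
    for p, (dr, dc) in enumerate(OFFSETS, start=1):
        code |= _shift(e, dr, dc) << p
    return code                                    # values in [0, 511]; two-pixel border dropped


def dlep_feature(gray):
    """Concatenate the four 512-bin directional histograms (length 2048)."""
    return np.concatenate([np.bincount(dlep_codes(gray, b).ravel(), minlength=512)
                           for b in ANGLES])
```

On a horizontal intensity ramp, every pixel lies between its left and right neighbors, so no bit is set for β = 0°, while the constant columns make every pixel pass the test for β = 90°.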

Local quantized extrema patterns (LQEP)

The DLEP (Subrahmanyam et al. 2012c) and LQP (Hussain and Triggs 2012) operators are integrated in LQEP (Koteswara Rao and Venkata Rao 2015) for image retrieval. First, the possible geometric structures are extracted from the pattern under consideration. Then, the local extrema method is applied to the extracted geometric structures in four major directions. As shown in Fig. 3, the pixels of a 5 × 5 pattern are labeled with arbitrary indices for ease of reference. The HVDA5 geometric structure is used for feature extraction in this work.

Local mesh quantized extrema patterns (LMeQEP)

The proposed LMeQEP collects spatial texture information from an image based on the mesh and LQEP concepts. Forming the mesh from pixels at alternate positions allows more discriminative information to be collected without losing the connectivity of pixel information. The LMeQEP calculation is illustrated in Fig. 4.

Figure 4 also shows how the HVDA5 geometric structure is collected for a given center pixel (P) in an image I. The directional extrema in the four directions 0°, 90°, 45° and 135° are derived as follows.

where,

Subsequently, LMeQEP is defined based on Eqs. (10)–(13) as follows.

Next, the given image is converted to an LMeQEP map with values from 0 to 4095 (12-bit codes). A histogram, defined by Eq. (16), is built to represent the frequency of occurrence of the LMeQEP values.

Proposed image retrieval system

In this paper, we propose a novel feature descriptor for image retrieval that applies the mesh concept to the extracted geometric structure. First, the image is loaded and, if it is an RGB image, converted to grayscale. The four-directional HVDA5 structure is collected using the LQP method. The extrema in the 0°, 90°, 45° and 135° directions are calculated on the mesh as given in Eqs. (10)–(13). Finally, the LMeQEP feature is generated by constructing the histogram. To further enhance the performance of the proposed method, the LMeQEP is integrated with an RGB color histogram.

The algorithm for LMeQEP is given below, and the schematic representation of the proposed technique is given in Fig. 5.

Algorithm:

1. Convert the RGB image into a grayscale image.
2. Extract the HVDA5 structure for a given center pixel.
3. Compute the local extrema in the 0°, 90°, 45° and 135° directions.
4. Derive the 12-bit LMeQEP from the four directional extrema.
5. Construct a histogram of the 12-bit LMeQEP.
6. Construct an RGB histogram from the RGB image.
7. Generate a feature vector by concatenating the RGB and LMeQEP histograms.
8. Compare the query image with each database image using Eq. (20).
9. Retrieve the images based on the best matches.
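Steps 1 and 5 to 7 of the algorithm can be sketched as follows. Steps 2 to 4 depend on the HVDA5 mesh indexing of Fig. 4, which is not reproduced here, so the sketch takes a precomputed 12-bit LMeQEP map (values 0 to 4095) as input. The grayscale weights (ITU-R BT.601), the 16 bins per color channel and the per-histogram normalization are assumptions, and `feature_vector` is a hypothetical helper name.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Step 1: RGB -> grayscale, assuming ITU-R BT.601 luma weights."""
    rgb = np.asarray(rgb, dtype=float)
    return 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]


def rgb_histogram(rgb: np.ndarray, bins_per_channel: int = 16) -> np.ndarray:
    """Step 6: one histogram per 8-bit color channel (the bin count is an assumed choice)."""
    edges = np.linspace(0, 256, bins_per_channel + 1)
    return np.concatenate([np.histogram(rgb[..., ch], bins=edges)[0] for ch in range(3)])


def feature_vector(rgb: np.ndarray, lmeqep_map: np.ndarray,
                   bins_per_channel: int = 16) -> np.ndarray:
    """Steps 5 and 7: 4096-bin LMeQEP histogram concatenated with the RGB histogram.

    `lmeqep_map` is assumed to hold the precomputed 12-bit codes (0..4095) of steps 2-4.
    Each part is normalized to sum to 1 (an assumption) so that image size does not matter.
    """
    codes = np.asarray(lmeqep_map, dtype=np.int64).ravel()
    h_tex = np.bincount(codes, minlength=4096).astype(float)
    h_col = rgb_histogram(rgb, bins_per_channel).astype(float)
    return np.concatenate([h_tex / h_tex.sum(), h_col / h_col.sum()])
```

Normalizing each part separately keeps the texture and color histograms on the same scale regardless of how many bins each has; other weightings are equally plausible.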

Query matching

After feature extraction, the feature vector of the query image is represented as \(f_R = (f_{R_1}, f_{R_2}, \ldots, f_{R_{Lg}})\). Similarly, the feature vector of each image in the database is represented as \(f_{DS_j} = (f_{DS_{j1}}, f_{DS_{j2}}, \ldots, f_{DS_{jLg}});\ j = 1, 2, \ldots, |DS|\). The goal is to identify the n database images that most closely resemble the query image. This is achieved by measuring the distance between the query image and each image in the database DS. Four different distance metrics are used, as given in Eqs. (17)–(20).

Here, \(f_{DS_{ji}}\) is the ith feature of the jth image in the database DS, and Lg is the length of the feature vector.
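Eqs. (17)–(20) are not reproduced in this text. As a sketch, the code below assumes four measures that are common in local-pattern retrieval work: Manhattan, Euclidean, Canberra and the d1 distance \(\sum_i |f_{DS_{ji}} - f_{R_i}| / (1 + f_{DS_{ji}} + f_{R_i})\). Which of these correspond to Eqs. (17)–(20) is an assumption, and the d1 form presumes non-negative features such as histograms.

```python
import numpy as np


def manhattan(q, db):
    return np.abs(db - q).sum(axis=-1)


def euclidean(q, db):
    return np.sqrt(((db - q) ** 2).sum(axis=-1))


def canberra(q, db):
    num = np.abs(db - q)
    den = np.abs(db) + np.abs(q)
    # Terms where both features are zero (0/0) contribute nothing.
    return np.where(den > 0, num / np.where(den > 0, den, 1), 0).sum(axis=-1)


def d1(q, db):
    return (np.abs(db - q) / (1 + db + q)).sum(axis=-1)


def retrieve(f_query, f_db, n, metric=d1):
    """Indices j of the n database images (rows of f_db) closest to the query."""
    q = np.asarray(f_query, dtype=float)     # shape (Lg,)
    db = np.asarray(f_db, dtype=float)       # shape (|DS|, Lg)
    return np.argsort(metric(q, db), kind="stable")[:n]
```

For example, `retrieve([1, 0, 0], [[0, 0, 1], [1, 0, 0], [0.9, 0.1, 0]], 2)` ranks the identical vector first and the near-duplicate second, giving indices 1 and 2.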

Experimental results and discussion

To analyze the performance of the LMeQEP method, experiments are conducted on two standard image databases: Corel-1k (Corel 1000) and MIT VisTex (http://vismod.media.mit.edu/pub/).

In the experiments, each image in the database is used as a query image. For each query, the system returns n database images \(M = (m_1, m_2, \ldots, m_n)\) ranked by the distance of Eq. (17). A retrieved image \(m_i\) (i = 1, 2, …, n) counts as a correct retrieval if it belongs to the same category as the query image; otherwise it counts as a failure.

Average retrieval precision (ARP) and average retrieval rate (ARR) are used to evaluate the performance of the proposed LMeQEP, as shown below:

The precision for a query image is specified as follows:
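The standard definitions behind these metrics, written with the retrieved set \(M = (m_1, \ldots, m_n)\) introduced above, are given below. The category function φ(·), the indicator δ and \(N_R\) (the number of database images in the query's category, e.g. 100 for Corel-1k) are notation introduced here for illustration:

```latex
P(q, n) = \frac{1}{n} \sum_{i=1}^{n} \delta\left(\phi(m_i), \phi(q)\right), \qquad
R(q, n) = \frac{1}{N_R} \sum_{i=1}^{n} \delta\left(\phi(m_i), \phi(q)\right)

\delta(a, b) = \begin{cases} 1, & a = b \\ 0, & \text{otherwise} \end{cases}

\mathrm{ARP} = \frac{1}{|DS|} \sum_{q=1}^{|DS|} P(q, n), \qquad
\mathrm{ARR} = \frac{1}{|DS|} \sum_{q=1}^{|DS|} R(q, n)
```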

Experiment no. 1

The MIT VisTex database, which contains images of forty different textures (http://vismod.media.mit.edu/pub/), is used in this experiment. As stated earlier, each image in the database is treated as a query image, and the results are compared using ARP and ARR.

Figure 6 compares the ARR of different methods on the MIT VisTex database; the proposed LMeQEP achieves a higher ARR than the related approaches. Figure 7 shows query results of the proposed LMeQEP on the MIT VisTex database.

Experiment no. 2

The Corel-1k database, which contains images with varied content (Verma et al. 2015), is used in this experiment. It consists of 1000 images from 10 different domains, with 100 images per domain, categorized by domain experts. The ARP given in Eq. (22) is used to evaluate the performance of the proposed method. Figure 8 shows sample images from the Corel-1k database.

Figure 9 compares the ARP of the proposed LMeQEP and other existing methods on the Corel-1k database; LMeQEP achieves higher ARP values than the other recent methods. Figure 10 compares precision versus recall for various methods on the Corel-1k database and shows that the proposed LMeQEP outperforms the other existing methods. Figure 11 shows the images retrieved for a query image from the Corel-1k database.
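The evaluation protocol can be sketched as follows for a labelled database such as Corel-1k (10 categories of 100 images): every image is used as a query, precision is the fraction of the n retrieved images that share the query's category, and recall divides the same count by the category size. Counting the query itself among the retrieved images (it is a database image at distance zero) and the default L1 distance are assumptions of this sketch.

```python
import numpy as np


def l1(q, db):
    """Manhattan distance from one feature vector to every row of `db`."""
    return np.abs(db - q).sum(axis=-1)


def arp_arr(features, labels, n, metric=l1):
    """Average retrieval precision and rate when every database image serves as a query."""
    feats = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    precisions, recalls = [], []
    for q in range(len(labels)):
        top = np.argsort(metric(feats[q], feats), kind="stable")[:n]
        hits = int(np.sum(labels[top] == labels[q]))
        n_relevant = int(np.sum(labels == labels[q]))  # e.g. 100 per Corel-1k category
        precisions.append(hits / n)
        recalls.append(hits / n_relevant)
    return float(np.mean(precisions)), float(np.mean(recalls))
```

With two well-separated pairs of images, retrieving n = 2 returns each query and its partner, so both ARP and ARR equal 1.0; with n = 4, ARP drops to 0.5 while ARR stays 1.0.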

Conclusions

This paper presents a novel image feature descriptor, local mesh quantized extrema patterns (LMeQEP), for texture and natural image retrieval. A mesh is created from the quantized geometric structure, and the directional extrema information in the specified directions is extracted from the quantized mesh of the pattern. Creating a mesh from a pattern was found to extract more discriminative information associated with each pixel. To further enhance the performance of the proposed method, color information in the form of an RGB histogram is added to the feature vector used by the retrieval system. The effectiveness of the proposed method was tested through experiments on standard databases. The retrieval results show a considerable increase in ARR and ARP compared with other recent related methods.

References

Ahmadian A, Mostafa A (2003) An efficient texture classification algorithm using Gabor wavelet. In: 25th annual international conference of the IEEE EMBS. Cancun, Mexico, pp 930–933

Chang MH, Pyun JY, Ahmad MB, Chun JH, Park JA (2005) Modified color co-occurrence matrix for image retrieval. Lect Notes Comput Sci 3611:43–50

Corel 1000 and Corel 10000 image database. [Online]. http://wang.ist.psu.edu/docs/related.shtml

Gai S, Yang G, Zhang S (2013) Multiscale texture classification using reduced quaternion wavelet transform. Int J Electron Commun (AEÜ) 67:233–241

Heikkilä M, Pietikainen M (2006) A texture based method for modeling the background and detecting moving objects. IEEE Trans Pattern Anal Mach Intell 28(4):657–662

Heikkilä M, Pietikainen M, Schmid C (2009) Description of interest regions with local binary patterns. Pattern Recognit 42:425–436

Huang J, Kumar S, Mitra M, Zhu W, Zabih R (1997) Image indexing using color correlograms. In: Proceedings of computer vision and pattern recognition. San Juan, Puerto Rico, pp 762–768

Hussain S, Triggs B (2012) Visual recognition using local quantized patterns. In: ECCV 2012, part II, LNCS 7573, Italy, pp 716–729

Idris F, Panchanathan S (1997) Image and video indexing using vector quantization. Mach Vis Appl 10:43–50

Jabid T, Kabir MH, Chae OS (2010) Local directional pattern (LDP) for face recognition. In: IEEE international conference on consumer electronics, pp 329–330

Jhanwar N, Chaudhuri S, Seetharaman G, Zavidovique B (2004) Content-based image retrieval using motif co-occurrence matrix. Image Vis Comput 22:1211–1220

Koteswara Rao L, Venkata Rao D (2015) Local quantized extrema patterns for content-based natural and texture image retrieval. Hum Cent Comput Inf Sci 5:26. doi:10.1186/s13673-015-0044

Li M, Staunton RC (2008) Optimum Gabor filter design and local binary patterns for texture segmentation. J Pattern Recognit 29:664–672

Lu ZM, Burkhardt H (2005) Colour image retrieval based on DCT domain vector quantisation index histograms. Electron Lett 41:956–957

MIT Vision and Modeling Group, Vision Texture. [Online]. http://vismod.media.mit.edu/pub/

Ojala T, Pietikainen M, Harwood D (1996) A comparative study of texture measures with classification based on feature distributions. J Pattern Recognit 29(1):51–59

Ojala T, Pietikainen M, Maenpaa T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 24(7):971–987

Pass G, Zabih R (1996) Histogram refinement for content-based image retrieval. In: Proceedings of the IEEE workshop on applications of computer vision, pp 96–102

Pietikainen M, Ojala T, Scruggs T, Bowyer KW, Jin C, Hoffman K, Marques J, Jacsik M, Worek W (2000) Overview of the face recognition using feature distributions. J Pattern Recognit 33(1):43–52

Qiu G (2003) Color image indexing using BTC. IEEE Trans Image Process 12:93–101

Rao A, Srihari RK, Zhang Z (1999) Spatial color histograms for content-based image retrieval. In: Proceedings of the eleventh IEEE international conference on tools with artificial intelligence. Chicago, IL, USA, pp 183–187

Reddy PVB, Reddy ARM (2014) Content based image indexing and retrieval using directional local extrema and magnitude patterns. Int J Electron Commun (AEÜ) 68(7):637–643

Rui Y, Huang TS (1999) Image retrieval: current techniques, promising directions and open issues. J Vis Commun Image Represent 10:39–62

Shi F, Wang J, Wang Z (2011) Region-based supervised annotation for semantic image retrieval. Int J Electron Commun (AEÜ) 65:929–936

Smeulders AWM, Worring M, Santini S, Gupta A, Jain R (2000) Content-based image retrieval at the end of the early years. IEEE Trans Pattern Anal Mach Intell 22(12):1349–1380

Smith JR, Chang SF (1996) Automated binary texture feature sets for image retrieval. In: Proceedings of the IEEE international conference on acoustics, speech and signal processing. Columbia University, New York, pp 2239–2242

Stricker M, Orengo M (1995) Similarity of color images. In: Proceedings of the SPIE storage and retrieval for image and video databases III. San Jose, Wayne Niblack, pp 381–392.

Subrahmanyam M, Wu QMJ (2013) Local ternary co-occurrence patterns: a new feature descriptor for MRI and CT image retrieval. Neurocomputing 119(7):399–412

Subrahmanyam M, Wu QMJ (2014) Local mesh patterns versus local binary patterns: biomedical image indexing and retrieval. IEEE J Biomed Health Informat 18(3):929–938

Subrahmanyam M, Maheshwari RP, Balasubramanian R (2011) A correlogram algorithm for image indexing and retrieval using wavelet and rotated wavelet filters. Int J Signal Imag Syst Eng 4(1):27–34

Subrahmanyam M, Maheshwari RP, Balasubramanian R (2012a) Local Tetra patterns: a new feature descriptor for content based image retrieval. IEEE Trans Image Process 21(5):2874–2886

Subrahmanyam M, Maheshwari RP, Balasubramanian R (2012b) Local maximum edge binary patterns: a new descriptor for image retrieval and object tracking. Signal Process 92:1467–1479

Subrahmanyam M, Maheshwari RP, Balasubramanian R (2012c) Directional local extremal patterns: a new descriptor for content based image retrieval. Int J Multimed Inf Retr 1(3):191–203

Subrahmanyam M, Maheshwari RP, Balasubramanian R (2012d) Directional binary wavelet patterns for biomedical image indexing and retrieval. J Med Syst 36(5):2865–2879

Swain MJ, Ballard DH (1991) Color indexing. Int J Comput Vis 7:11–32

Tang Z, Zhang X, Dai X, Yang J, Wu T (2013) Robust image hash function using local color features. Int J Electron Commun (AEÜ) 67:717–722

Vadivel A, Sural S, Majumdar AK (2007) An integrated color and intensity co-occurrence matrix. Pattern Recognit Lett 28:974–983

Verma M, Raman B, Murala S (2015a) Local extrema co-occurrence pattern for color and texture image retrieval. Neurocomputing 165:255–269

Verma M, Raman B, Murala S (2015b) Centre symmetric local binary co-occurrence pattern for texture, face and bio-medical image retrieval. J Vis Commun Image Represent 32:224–236

Vipparthi SK, Murala S, Nagar SK, Gonde AB (2015) Local Gabor maximum edge position octal patterns for image retrieval. Neurocomputing 167:336–345

Zhang B, Gao Y, Zhao S, Liu J (2010) Local derivative pattern versus local binary pattern: face recognition with higher-order local pattern descriptor. IEEE Trans Image Process 19(2):533–544

Zhao G, Pietikainen M (2007) Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans Pattern Anal Mach Intell 29(6):915–928

Authors’ contributions

All authors listed have contributed sufficiently to be included as authors. In brief, Mr. LKR conceptualized LMeQEP algorithm, involved in experimentation. Dr. DVR carried out the experimentation and systematic manuscript preparation. Dr. LPR contributed to the analysis, interpretation, logical sequence of the research work. All authors read and approved the final manuscript.

Acknowledgements

The authors declare that they have no acknowledgements for the current research work.

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Koteswara Rao, L., Venkata Rao, D. & Reddy, L.P. Local mesh quantized extrema patterns for image retrieval. SpringerPlus 5, 976 (2016). https://doi.org/10.1186/s40064-016-2664-9
