Abstract

In this paper, a model of branching processes with random control functions and affected by viral infectivity in independent and identically distributed random environments is established, and the Markov property of the model and a sufficient condition for its certain extinction under some additional assumptions are discussed. Then, the limit properties of the model are studied. Under the normalization factor \(\{S_{n}:n\in N\}\), the normalized processes \(\{\hat{W}_{n}:n\in N\}\) are studied, and sufficient conditions for the a.s., \(L^{1}\), and \(L^{2}\) convergence of \(\{\hat{W}_{n}:n\in N\}\) are given; a sufficient condition and a necessary condition for convergence to a random variable that is nondegenerate at zero are obtained. Under the normalization factor \(\{I_{n}:n\in N\}\), the normalized processes \(\{\bar{W}_{n}:n\in N\}\) are studied, and sufficient conditions for the a.s. and \(L^{1}\) convergence of \(\{\bar{W}_{n}:n\in N\}\) are obtained.


1 Introduction

As an extension of the classical branching process, Sevast’yanov and Zubkov ([1]) introduced the branching process controlled by a real-valued function and studied its extinction and nonextinction probabilities. Subsequently, Zubkov and Yanev ([2, 3]) generalized the model to a controlled branching process with random control functions and discussed conditions for its extinction and nonextinction. Yanev, Yanev, and Holzheimejr ([4–6]) studied properties of controlled branching processes in random environments, such as the extinction probability and extinction conditions. Using the properties of a conditional probability generating function, Bi and Li ([7]) obtained a sufficient condition for the certain extinction of a controlled branching process in random environments. Fang, Yang, and Li ([8]) studied the rate of convergence to the limit of a normalized controlled branching process with random control functions in a varying environment. Li et al. ([9]) discussed the Markov property of a controlled branching process in random environments and the limit properties of the suitably normalized process, such as conditions for almost sure convergence and for convergence in \(L^{1}\) and \(L^{2}\). Further research on controlled branching processes in random environments can be found in [10–14]. The reproduction of species is affected by many factors, such as the natural and the social environment, and highly infectious viruses such as influenza, SARS, and the novel coronavirus all have direct or indirect effects on it. Around 50 million people worldwide died of influenza in 1918, and according to the WHO, around 6.3 million people had died of the novel coronavirus as of June 30, 2022. Motivated by these issues, Ren et al. ([15, 16]) studied branching processes affected by viral infectivity in random environments. They established the Markov property and limit properties of the normalized processes, such as sufficient conditions for almost sure convergence and for convergence in \(L^{1}\). They also studied the bisexual branching process affected by viral infectivity in random environments, giving its Markov property, the properties of its probability generating function, and its extinction conditions.

In this paper, we mainly study the Markov property, the extinction probability, and some limit properties of the branching process with random control functions and affected by viral infectivity in random environments. In particular, we discuss the limit properties of the normalized processes \(\{\hat{W}_{n},n\in N\}\) and \(\{\bar{W}_{n},n\in N\}\), such as conditions for almost sure convergence and for convergence in \(L^{1}\) and \(L^{2}\).

The remainder of this paper is organized as follows. In Sect. 2, some notation, definitions, and conventions are introduced. Section 3 establishes the Markov property, Sect. 4 studies the extinction probability, and Sects. 5 and 6 present limit properties of the normalized processes; Sect. 7 concludes the paper.

2 Preliminaries

In this section we present the conventions, notation, and basic definitions used in the remainder of the paper.

Let \((\Omega ,\mathfrak{F},P)\) be a probability space and \((\Theta ,\Sigma )\) a measurable space. Let \(\vec{\xi}=\{\xi _{0},\xi _{1},\ldots \}\) be an independent and identically distributed (i.i.d.) sequence of random variables mapping \((\Omega ,\mathfrak{F},P)\) to \((\Theta ,\Sigma )\), and let \(N=\{0,1,2,\ldots \}\) and \(N^{+}=\{1,2,\ldots \}\). Let T be the shift operator, \(T(\vec{\xi})=\{\xi _{1},\xi _{2},\ldots \}\). Let \(\{X_{nj},n\in N,j\in N^{+}\}\) be a family of random variables mapping \((\Omega ,\mathfrak{F},P)\) to N, and let \(\{I_{nj},n\in N,j\in N^{+}\}\) be a family of random variables taking values in \(\{0,1\}\). Let \(\{P_{i}(\theta ):\theta \in \Theta , i\in N\}\), \(\{Q(\theta ;k,i):\theta \in \Theta ,k,i\in N\}\), and \(\{\alpha ^{x}(\theta )(1-\alpha (\theta ))^{1-x}: \theta \in \Theta ,x=0,1\}\) be probability distribution sequences. Let \(\{\phi _{n}(k):n,k\in N\}\) be a family of random functions from N to N that are i.i.d. with respect to n, with distribution \(Q(\xi _{n};k,i)=P(\phi _{n}(k)=i|\vec{\xi})\), \(i\in N\).

Definition 2.1

If \(\{Z_{n},n\in N\}\) satisfies

(i) \(Z_{0}=N_{0}\), \(Z_{n+1}=\sum_{j=1}^{\phi _{n}(Z_{n})}X_{nj}I_{nj}\), \(n\in N\), where \(N_{0}\in N^{+}\);

(ii) \(P(X_{nj}=r|\vec{\xi})=P_{r}(\xi _{n})\), \(r, n\in N\), \(j\in N^{+}\), and \(P(I_{nj}=x|\vec{\xi})=\alpha ^{x}(\xi _{n})(1-\alpha (\xi _{n}))^{1-x}\), \(x=0 \text{ or } 1\), \(n\in N\), \(j\in N^{+}\);

(iii) \(P(X_{nj}=r_{nj},1\leq j\leq l,0\leq n\leq m|\vec{\xi})= \prod_{n=0}^{m}\prod_{j=1}^{l}P(X _{nj}=r_{nj}| \vec{\xi} )\), \(r_{nj}\in N\), \(1\leq j \leq l\), \(0\leq n \leq m\), \(m\in N\), \(l\in N^{+}\);

(iv) given \(\overrightarrow{\xi}\), the families \(\{X_{nj}:n\in N,j\in N^{+}\}\), \(\{I_{nj}:n\in N,j\in N^{+}\}\), and \(\{\phi _{n}(k):n,k\in N\}\) are conditionally mutually independent; furthermore, for each fixed n, \(\{(X_{nj},I_{nj}): j\in N^{+}\}\) is a sequence of i.i.d. two-dimensional random variables.

Then, \(\{Z_{n}, n\in N\}\) is called a branching process with random control functions and affected by viral infectivity in random environments.

In this model, \(X_{nj}\) represents the number of offspring produced by the jth particle of the nth generation. We set \(I_{nj}=0\) when the jth particle of the nth generation dies of a viral infection, so that it does not take part in producing the next generation. \(I_{nj}=1\) means that this particle is not infected or has been cured, so that it takes part in reproduction normally. Thus \(\alpha (\xi _{n})\) is the probability that an nth-generation particle is not affected by the virus. \(Z_{n+1}\) is the total number of particles in the \((n+1)\)th generation. \(\phi _{n}(\cdot )\) is the control function for the reproduction of the nth generation: \(\phi _{n}(k)=i\) means that when the nth generation contains k particles in total, i of them take part in producing offspring.
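To make the roles of \(\phi _{n}\), \(X_{nj}\), and \(I_{nj}\) concrete, the following Python sketch simulates one path of the process in Definition 2.1. It is an illustration under assumptions, not the authors' construction. The two-state environment space, the numerical offspring laws, the values of \(\alpha (\theta )\), and the control rule in `sample_control` are all hypothetical choices. That rule also sets \(\phi _{n}(0)=0\), so that 0 is absorbing.

```python
import random

# Hypothetical finite environment space (illustrative numbers only): each state
# carries an offspring pmf {r: P_r(theta)} and a non-infection probability
# alpha(theta); they satisfy 0 < P_0 + P_1 < 1 and 0 < alpha < 1.
ENV_STATES = {
    "mild": {"offspring": {0: 0.2, 1: 0.3, 2: 0.5}, "alpha": 0.9},
    "severe": {"offspring": {0: 0.3, 1: 0.3, 2: 0.4}, "alpha": 0.6},
}
ENV_PROBS = {"mild": 0.7, "severe": 0.3}  # common law of each xi_n (i.i.d.)


def sample_from_pmf(pmf, rng):
    """Draw one value from a finite pmf given as {value: probability}."""
    values, weights = zip(*pmf.items())
    return rng.choices(values, weights=weights)[0]


def sample_control(k, rng):
    """Hypothetical control phi_n(k): phi_n(0) = 0; for k >= 1 keep k particles
    with probability 1/2, otherwise admit k + 1 or k - 1 (1/4 each)."""
    if k == 0:
        return 0
    u = rng.random()
    if u < 0.5:
        return k
    return k + 1 if u < 0.75 else k - 1


def simulate(n_generations, n0, seed=0):
    """Return ([xi_0, ..., xi_{n-1}], [Z_0, ..., Z_n]) following Definition 2.1."""
    rng = random.Random(seed)
    # The environment is drawn first; particle-level draws are then conditional on it.
    env = [sample_from_pmf(ENV_PROBS, rng) for _ in range(n_generations)]
    z = [n0]
    for n in range(n_generations):
        state = ENV_STATES[env[n]]
        admitted = sample_control(z[-1], rng)              # phi_n(Z_n)
        total = 0
        for _ in range(admitted):
            x = sample_from_pmf(state["offspring"], rng)   # X_{nj}
            not_infected = rng.random() < state["alpha"]   # I_{nj} = 1
            total += x if not_infected else 0              # X_{nj} * I_{nj}
        z.append(total)
    return env, z
```

For example, `simulate(10, 5, seed=1)` returns the sampled environment labels and \(Z_{0},\ldots ,Z_{10}\) with \(Z_{0}=5\). The function draws the environment first and then the particle-level variables conditionally on it, which mirrors the conditional independence in Definition 2.1(iv).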

We further introduce some conventions and notation, which will be used in the following discussion.

To avoid trivial cases, we assume throughout that

- \((A_{1})\): For any \(n\in N\), it holds that

$$ 0< P_{0}(\xi _{n})+P_{1}(\xi _{n})< 1, \qquad 0< P\bigl(\phi _{n}(k)=k|\vec{\xi}\bigr)< 1,\quad \text{a.s.},k \in N^{+}; $$

- \((A_{2})\): For any \(n\in N\), it holds that

$$ 0< \alpha (\xi _{n})< 1,\quad \text{a.s.} $$

If instead \(\alpha (\xi _{n})=1\) a.s. for all \(n\in N\), the model reduces to the controlled branching process in random environments studied in [9].
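Suppose the environment space is finite and each state has positive probability. Then the a.s. statements in \((A_{1})\) and \((A_{2})\) reduce to checks made state by state. The sketch below performs these checks. The dictionary layout and the `control_pmf(theta, k)` interface are hypothetical. Because \((A_{1})\) concerns every \(k\in N^{+}\), the loop up to `k_max` can only detect violations; it cannot certify the condition for all k.

```python
def check_assumptions(env_states, control_pmf, k_max=50):
    """Return a list of (state, message) pairs for violations of (A1)/(A2).

    env_states:  {theta: {"offspring": {r: P_r(theta)}, "alpha": alpha(theta)}}
    control_pmf: hypothetical callable, control_pmf(theta, k) -> {i: P(phi(k) = i | theta)}
    """
    problems = []
    for theta, spec in env_states.items():
        # (A1), first part: 0 < P_0 + P_1 < 1
        p01 = spec["offspring"].get(0, 0.0) + spec["offspring"].get(1, 0.0)
        if not 0.0 < p01 < 1.0:
            problems.append((theta, "A1: P0 + P1 not in (0, 1)"))
        # (A1), second part: 0 < P(phi(k) = k) < 1, checked only for k <= k_max
        for k in range(1, k_max + 1):
            p_kk = control_pmf(theta, k).get(k, 0.0)
            if not 0.0 < p_kk < 1.0:
                problems.append((theta, f"A1: P(phi(k)=k) not in (0, 1) at k={k}"))
                break
        # (A2): 0 < alpha < 1
        if not 0.0 < spec["alpha"] < 1.0:
            problems.append((theta, "A2: alpha not in (0, 1)"))
    return problems
```

A state with \(\alpha (\theta )=1\) is reported under \((A_{2})\). This is exactly the situation in which, by the remark above, the model collapses to the one in [9].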

We introduce the following notation.

3 Markov property

Definition 3.1

If for any \(x,n\in N\), it holds that

Then X⃗ is called a Markov chain in the random environment ξ⃗.

Theorem 3.2

\(\{Z_{n},n\geq 0\}\) is a Markov chain in random environment ξ⃗ with the one-step transition probabilities

Proof

From the definition of \(\{Z_{n},n\geq 0\}\), we have \(P(Z_{0}=N_{0}|\vec{\xi})=P(Z_{0}=N_{0}|\xi _{0})\), namely equation (3.1) holds.

We now prove equation (3.2). Given ξ⃗, for any \(n\in N\) and \(k\in N^{+}\), the variables \(\phi _{n}(k)\), \(X_{nk}\), and \(I_{nk}\) are conditionally mutually independent; hence, for any \(i,j,i_{1},\ldots ,i_{n-1}\in N^{+}\), we obtain

By Definition 3.1, \(\{Z_{n},n\geq 0\}\) is therefore a Markov chain in the random environment ξ⃗ with one-step transition probabilities

□

Lemma 3.3

For any \(n\in N\), the following hold:

(i) \(E(Z_{n+1}|\mathfrak{F}_{n}(\vec{\xi}))=Z_{n}m(\xi _{n})\alpha (\xi _{n})\varepsilon (\xi _{n},Z_{n})\) a.s. In particular,

$$ N_{0}\prod_{i=0}^{n-1}m(\xi _{i})\alpha (\xi _{i})\varepsilon _{1}( \xi _{i})\leq E(Z_{n}|\vec{\xi})\leq N_{0}\prod _{i=0}^{n-1}m(\xi _{i}) \alpha (\xi _{i})\varepsilon (\xi _{i}); $$

(ii) \(\operatorname{Var}(Z_{n+1}|\mathfrak{F}_{n}(\vec{\xi}))=Z_{n}\varepsilon (\xi _{n},Z_{n})\operatorname{Var}(X_{n1}I_{n1}| \vec{\xi})+m^{2}(\xi _{n})\alpha ^{2}(\xi _{n})\delta ^{2}(\xi _{n},Z_{n})\) a.s.
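Lemma 3.3 is the mean and variance formula for a random sum of \(\phi _{n}(Z_{n})\) conditionally i.i.d. terms \(X_{nj}I_{nj}\). The sketch below checks it with exact rational arithmetic for one fixed environment state. It compares the moments of the exactly enumerated law of \(Z_{n+1}\) with the right-hand sides of (i) and (ii). The notation display is missing from this text, so the sketch assumes \(\varepsilon (\xi _{n},k)=E(\phi _{n}(k)|\vec{\xi})/k\), \(\delta ^{2}(\xi _{n},k)=\operatorname{Var}(\phi _{n}(k)|\vec{\xi})\), and \(m(\xi _{n})=\sum_{r}rP_{r}(\xi _{n})\); this reading is consistent with both formulas of the lemma. The offspring law, \(\alpha \), and control law used in the test are hypothetical.

```python
from fractions import Fraction as F


def pmf_moments(pmf):
    """Mean and variance of a finite pmf {value: probability}."""
    mean = sum(v * p for v, p in pmf.items())
    second = sum(v * v * p for v, p in pmf.items())
    return mean, second - mean ** 2


def convolve(a, b):
    """Law of the sum of two independent variables with pmfs a and b."""
    out = {}
    for i, p in a.items():
        for j, q in b.items():
            out[i + j] = out.get(i + j, 0) + p * q
    return out


def next_generation_law(k, offspring, alpha, control):
    """Exact law of Z_{n+1} given Z_n = k in a fixed environment state:
    a sum of phi_n(k) i.i.d. copies of X*I with I ~ Bernoulli(alpha)."""
    y = {0: 1 - alpha}                     # law of X_{n1} I_{n1}
    for r, p in offspring.items():
        y[r] = y.get(r, 0) + alpha * p
    law = {}
    for i, p_i in control(k).items():      # condition on phi_n(k) = i
        s = {0: F(1)}
        for _ in range(i):
            s = convolve(s, y)
        for z, p in s.items():
            law[z] = law.get(z, 0) + p_i * p
    return law


def lemma_3_3(k, offspring, alpha, control):
    """Right-hand sides of Lemma 3.3 (i)-(ii) at Z_n = k, assuming
    eps(xi, k) = E(phi(k)|xi)/k and delta^2(xi, k) = Var(phi(k)|xi)."""
    m, var_x = pmf_moments(offspring)
    mean_phi, var_phi = pmf_moments(control(k))
    eps_k = mean_phi / k
    var_xi = alpha * (var_x + m ** 2) - (alpha * m) ** 2   # Var(X I | xi)
    mean = k * m * alpha * eps_k
    var = k * eps_k * var_xi + m ** 2 * alpha ** 2 * var_phi
    return mean, var
```

With offspring law \(\{0:1/4,1:1/4,2:1/2\}\), \(\alpha =4/5\), and \(\phi (k)\in \{k,k+1\}\) equally likely, both routes give mean \(5/2\) and variance \(9/4\) at \(k=2\). The identity is exact, so rational arithmetic avoids any tolerance.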

Proof

(i) Theorem 3.2 implies that

Since

the recurrence relation of equation (3.3) gives

By the definitions of \(\varepsilon (\xi _{n})\) and \(\varepsilon _{1}(\xi _{n})\), we deduce that

(ii) Using Theorem 3.2 gives

Thus, it holds that

□

4 The extinction probability of \(\{Z_{n},n\in N\}\)

An important tool in the analysis of branching processes in random environments is the generating function. To discuss the extinction probability of the model, we first introduce its conditional probability generating functions as follows:

For any \(n\in N\), \(i\in N^{+}\), from the independence of \(X_{ni}\) and \(I_{ni}\), we obtain

and we let \(B=\{w:Z_{n}(w)=0 \text{ for some } n\in N^{+}\}\) denote the extinction event, \(q(\vec{\xi})=P(B|\vec{\xi},Z_{0}=N_{0})\), and \(q=P(B| Z_{0}=N_{0})\); then \(q=E(q(\vec{\xi}))\).
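The generating function of a single product \(X_{ni}I_{ni}\) follows directly from Definition 2.1(ii) and (iv). Assume \(f_{\xi _{n}}(s)=\sum_{r\geq 0}P_{r}(\xi _{n})s^{r}\) denotes the conditional offspring generating function; the symbol appears in Lemma 4.2, but its definition is not reproduced in this text. Under that assumption, a short derivation is:

```latex
\begin{aligned}
E\bigl(s^{X_{ni}I_{ni}}\mid\vec{\xi}\bigr)
&=P(I_{ni}=0\mid\vec{\xi})+P(I_{ni}=1\mid\vec{\xi})\,E\bigl(s^{X_{ni}}\mid\vec{\xi}\bigr)\\
&=1-\alpha(\xi_{n})+\alpha(\xi_{n})f_{\xi_{n}}(s),\qquad 0\le s\le 1,\\
E\bigl(s^{Z_{n+1}}\mid Z_{n}=k,\ \phi_{n}(k)=l,\ \vec{\xi}\bigr)
&=\bigl(1-\alpha(\xi_{n})+\alpha(\xi_{n})f_{\xi_{n}}(s)\bigr)^{l}.
\end{aligned}
```

Differentiating the first identity at \(s=1\) gives \(E(X_{ni}I_{ni}|\vec{\xi})=\alpha (\xi _{n})f'_{\xi _{n}}(1)\). This is the factor \(m(\xi _{n})\alpha (\xi _{n})\) in Lemma 3.3 when \(m(\xi _{n})=f'_{\xi _{n}}(1)\).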

If \(q=1\), we say that \(\{Z_{n},n\in N\}\) is certainly extinct; otherwise, \(\{Z_{n},n\in N\}\) is not certainly extinct.

Lemma 4.1

If there exists a sequence of i.i.d. random variables \(\{\eta _{n},n\in N\}\) such that for any \(n\in N\), \(\sup_{k\geq 1}\frac{\phi _{n}(k)}{k}\leq \eta _{n}\) a.s., then

Proof

From the assumed condition, the properties of conditional probability generating functions, and the fact that, for each fixed n, the variables \(X_{nj}I_{nj}\), \(j\in N^{+}\), are i.i.d., it follows that

that is, (4.1) holds for \(n=1\). Suppose that (4.1) holds for \(n=k\); then, for \(n=k+1\), we deduce

that is, (4.1) holds for \(n=k+1\), which proves (4.1) by induction.

By the properties of generating functions,

and

Thus, the limit \(\mu (\vec{\xi},\vec{\eta})=\lim_{n\rightarrow \infty}\mu _{n}( \vec{\xi},\vec{\eta})\) exists a.s., and

Since \(q(\vec{\xi})=\lim_{n\rightarrow \infty}\Pi _{\xi _{n}}(0)\), it follows from (4.1) that

□

Lemma 4.2

Suppose that, for any \(n\in N\), the following conditions hold:

(a) there exists a sequence of i.i.d. random variables \(\{\eta _{n},n\in N\}\) such that

$$ \sup_{k\geq 1}\frac{\phi _{n}(k)}{k}\leq \eta _{n}\quad \textit{a.s.}; $$

(b) \(E((\log \eta _{0}N_{0}\alpha (\xi _{0})f'_{ \xi _{0}}(1))^{+})<\infty \), and \(\frac{1-(1-\alpha (\xi _{0})+\alpha (\xi _{0})f_{\xi _{0}}(s))^{\eta _{0}N_{0}}}{1-s}\) is strictly increasing in s on \((0,1]\).

Then, on \(\{q(\vec{\xi})<1\}\), the following hold:

(i) \(E(| \log \frac{1-\mu (\vec{\xi},\vec{\eta})}{1-\mu (T\vec{\xi},T\vec{\eta})} | )<\infty \) and \(E(\log \frac{1-\mu (\vec{\xi},\vec{\eta})}{1-\mu (T\vec{\xi},T\vec{\eta})})=0\);

(ii) \(E(| \log N_{0}\eta _{0}\alpha (\xi _{0})f'_{\xi _{0}}(1) | )<\infty \) and \(E(\log N_{0}\eta _{0}\alpha (\xi _{0})f'_{\xi _{0}}(1))>0\).
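Condition (b) asks that the ratio \((1-g(s))/(1-s)\), with \(g(s)=(1-\alpha (\xi _{0})+\alpha (\xi _{0})f_{\xi _{0}}(s))^{\eta _{0}N_{0}}\), be strictly increasing. This ratio is the slope of the chord from \((s,g(s))\) to \((1,1)\). It is therefore strictly increasing when g is strictly convex, and constant when g is linear. The sketch below checks the condition on a grid for one realized value of \(\xi _{0}\) and of \(c=\eta _{0}N_{0}\). The function names and the example generating functions are hypothetical, and a grid check is numerical evidence, not a proof.

```python
def chord_ratio(s, f, alpha, c):
    """(1 - (1 - alpha + alpha*f(s))**c) / (1 - s) for 0 < s < 1; c plays the
    role of a realised value of eta_0 * N_0 and f of f_{xi_0}."""
    g = (1.0 - alpha + alpha * f(s)) ** c
    return (1.0 - g) / (1.0 - s)


def increasing_on_grid(f, alpha, c, points=200, tol=1e-12):
    """Grid check of the strict monotonicity required in Lemma 4.2(b).
    True is evidence only; False flags a (near-)violation on the grid."""
    grid = [(j + 1) / (points + 1) for j in range(points)]  # interior of (0, 1)
    values = [chord_ratio(s, f, alpha, c) for s in grid]
    return all(b - a > tol for a, b in zip(values, values[1:]))
```

With \(f(s)=\frac{1}{4}+\frac{1}{4}s+\frac{1}{2}s^{2}\), \(\alpha =0.8\), and \(c=2\), g is a nonlinear generating function and the check passes. With \(f(s)=s\) and \(c=1\), g is linear, the ratio is constantly \(\alpha \), and the check fails.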

Proof

To prove (i), by Lemma 4.1, we obtain

hence,

If

then

Denote

then

Since

then

and iterating this gives

Hence, on \(\{q(\vec{\xi})<1\}\), by the nonnegativity of \(h(\vec{\xi},\vec{\eta})\), we arrive at

i.e.,

From the monotonicity of \(\frac{1-(1-\alpha (\xi _{0})+\alpha (\xi _{0})f_{\xi _{0}}(s))^{\eta _{0}N_{0}}}{1-s}\), it follows that

On \(\{q(\vec{\xi})<1\}\), it holds that \(\lim_{n\rightarrow \infty}n^{-1}h(\vec{\xi},\vec{\eta})=0\). Since \((\vec{\xi},\vec{\eta})\) are i.i.d., according to (4.2), we arrive at

By the law of large numbers, \(E(f^{+}(\vec{\xi},\vec{\eta}))<\infty \), and hence \(E(|f(\vec{\xi},\vec{\eta})|)<\infty \). Moreover, since \(\lim_{n\rightarrow \infty}n^{-1}h(\vec{\xi},\vec{\eta})=0\) and \((\vec{\xi},\vec{\eta})\) are i.i.d., we have \(\lim_{n\rightarrow \infty}n^{-1}h(T^{n+1}\vec{\xi},T^{n+1} \vec{\eta})=0\).

Thus, we have \(E(f(\vec{\xi},\vec{\eta}))=0\), which completes the proof of (i).

We now prove (ii). It suffices to show that

A direct calculation gives

If

then

and, since \(E(f(\vec{\xi},\vec{\eta}))=0\), it follows that

From the assumed monotonicity it follows that

and

unless \(P(P_{1}(\xi _{n})=1)=1\), which contradicts

Thus, it holds that

□

Theorem 4.3

Suppose that, for any \(n\in N\), the following conditions hold:

(i) there exists a sequence of i.i.d. random variables \(\{\eta _{n},n\in N\}\) such that

$$ \sup_{k\geq 1}\frac{\phi _{n}(k)}{k}\leq \eta _{n}\quad \textit{a.s.}; $$

(ii) \(E((\log \eta _{0}N_{0}\alpha (\xi _{0})f'_{\xi _{0}}(1))^{+})< \infty \), and \(\frac{1-(1-\alpha (\xi _{0})+\alpha (\xi _{0})f_{\xi _{0}}(s))^{\eta _{0}N_{0}}}{1-s}\) is strictly increasing in s on \((0,1]\).

Then, if \(E(\log \eta _{0}N_{0}\alpha (\xi _{0})f'_{\xi _{0}}(1))\leq 0\), we have \(P(q(\vec{\xi})=1)=1\); that is, \(\{Z_{n},n\in N\}\) is certainly extinct.
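For a finitely supported joint law of \((\eta _{0},\xi _{0})\), the mean-log quantity in Theorem 4.3 is a finite sum. The first part of its hypothesis can therefore be evaluated directly; the integrability of the positive part is automatic in this case. The sketch below does this. The tuple layout and the numbers are hypothetical, and the monotonicity requirement in condition (ii) must still be checked separately.

```python
import math


def mean_log_criterion(states, n0):
    """Evaluate E(log eta_0 N_0 alpha(xi_0) f'_{xi_0}(1)) for a finitely supported
    joint law of (eta_0, xi_0).

    states: iterable of (probability, eta0, alpha, m) with m = f'_{xi_0}(1).
    Returns (value, value <= 0); the flag covers only the mean-log part of the
    hypothesis of Theorem 4.3, not its monotonicity condition.
    """
    states = list(states)
    if not math.isclose(sum(p for p, _, _, _ in states), 1.0):
        raise ValueError("probabilities must sum to 1")
    value = sum(p * math.log(eta * n0 * alpha * m) for p, eta, alpha, m in states)
    return value, value <= 0.0
```

In the first test case, \(\eta _{0}N_{0}\alpha (\xi _{0})f'_{\xi _{0}}(1)\) takes the values \(0.5\) and \(1.5\) with probability \(1/2\) each. Its arithmetic mean is 1, yet its mean logarithm is \(\frac{1}{2}\log 0.75<0\), so the criterion applies. By Jensen's inequality, the mean of the logarithm is never larger than the logarithm of the mean, which is why random environments favor extinction here.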

Proof

We argue by contradiction. Suppose that \(E(\log \eta _{0}N_{0}\alpha (\xi _{0})f'_{\xi _{0}}(1))\leq 0\) but \(P(q(\vec{\xi})=1)<1\). Then

Since conditions (i) and (ii) of this theorem hold, Lemma 4.2 gives, on \(\{q(\vec{\xi})<1\}\),

which contradicts our assumption and completes the proof. □

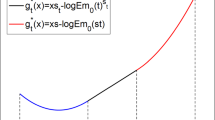

Since the conditional expectation \(E(Z_{n}|\vec{\xi})\) cannot in general be computed explicitly, we use the upper and lower bounds given by Lemma 3.3 to define two random sequences \(\{S_{n},n\in N\}\) and \(\{I_{n},n\in N\}\), where

$$ S_{n}=N_{0}\prod_{i=0}^{n-1}m(\xi _{i})\alpha (\xi _{i})\varepsilon (\xi _{i}), \qquad I_{n}=N_{0}\prod_{i=0}^{n-1}m(\xi _{i})\alpha (\xi _{i})\varepsilon _{1}(\xi _{i}), $$

so that \(S_{0}=I_{0}=N_{0}\). Regarding \(S_{n}\) and \(I_{n}\) as normalizing factors, we define the random sequences \(\hat{W}_{n}=Z_{n}S_{n}^{-1}\) and \(\bar{W}_{n}=Z_{n}I_{n}^{-1}\), \(n\in N\).
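The normalizing factors can be computed along one realized environment. The sketch below assumes \(S_{n}=N_{0}\prod_{i=0}^{n-1}m(\xi _{i})\alpha (\xi _{i})\varepsilon (\xi _{i})\) and \(I_{n}=N_{0}\prod_{i=0}^{n-1}m(\xi _{i})\alpha (\xi _{i})\varepsilon _{1}(\xi _{i})\). These match the bounds of Lemma 3.3 and the condition \(S_{0}=I_{0}=N_{0}\). The input lists are hypothetical numerical values of these environment functions.

```python
def normalizing_factors(n0, m, alpha, eps, eps1):
    """Return ([S_0, ..., S_n], [I_0, ..., I_n]) for one realised environment.

    m[i], alpha[i], eps[i], eps1[i] stand for m(xi_i), alpha(xi_i),
    eps(xi_i) (upper bound) and eps_1(xi_i) (lower bound), i = 0..n-1.
    """
    S, I = [n0], [n0]
    for mi, ai, ei, e1i in zip(m, alpha, eps, eps1):
        S.append(S[-1] * mi * ai * ei)    # S_{i+1} = S_i m alpha eps
        I.append(I[-1] * mi * ai * e1i)   # I_{i+1} = I_i m alpha eps_1
    return S, I


def normalized(z, S, I):
    """W_hat_n = Z_n / S_n and W_bar_n = Z_n / I_n along one path."""
    w_hat = [zn / sn for zn, sn in zip(z, S)]
    w_bar = [zn / i_n for zn, i_n in zip(z, I)]
    return w_hat, w_bar
```

Since \(\varepsilon _{1}\leq \varepsilon \), we always have \(I_{n}\leq S_{n}\) and hence \(\bar{W}_{n}\geq \hat{W}_{n}\). The last check in the test confirms this for the example path \(Z=(2,3,9)\).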

In what follows, we discuss the limit properties of \(\{\hat{W}_{n},n\in N\}\) and \(\{\bar{W}_{n},n\in N\}\).

5 The limit properties of \(\{\hat{W}_{n},n\in N\}\)

Theorem 5.1

\(\{\hat{W}_{n},\mathfrak{F}_{n}(\vec{\xi}),n \in N \}\) is a nonnegative supermartingale, and there exists a nonnegative finite random variable Ŵ such that

and

Proof

From Lemma 3.3 we obtain

that is, \(\{\hat{W}_{n},\mathfrak{F}_{n}(\vec{\xi}),n\in N \}\) is a nonnegative supermartingale. By Doob's martingale convergence theorem, there exists a nonnegative, finite random variable Ŵ satisfying

Taking the conditional expectation with respect to ξ⃗ on both sides of (5.1), we obtain recursively

Fatou's lemma gives

which completes the proof. □
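The supermartingale property in Theorem 5.1 can be made explicit in one step. Assume \(S_{n+1}=S_{n}m(\xi _{n})\alpha (\xi _{n})\varepsilon (\xi _{n})\), with \(\varepsilon (\xi _{n})\geq \varepsilon (\xi _{n},k)\) for all \(k\in N^{+}\), which is consistent with the bounds of Lemma 3.3. Since \(S_{n}\) is \(\mathfrak{F}_{n}(\vec{\xi})\)-measurable, Lemma 3.3(i) gives the following; on \(\{Z_{n}=0\}\), read the right-hand side as 0, as in Lemma 3.3(i):

```latex
E\bigl(\hat{W}_{n+1}\mid\mathfrak{F}_{n}(\vec{\xi})\bigr)
=\frac{Z_{n}m(\xi_{n})\alpha(\xi_{n})\varepsilon(\xi_{n},Z_{n})}
      {S_{n}m(\xi_{n})\alpha(\xi_{n})\varepsilon(\xi_{n})}
=\hat{W}_{n}\,\frac{\varepsilon(\xi_{n},Z_{n})}{\varepsilon(\xi_{n})}
\le \hat{W}_{n}.
```

Equality holds exactly when \(\varepsilon (\xi _{n},Z_{n})=\varepsilon (\xi _{n})\), so \(\{\hat{W}_{n}\}\) is a martingale only when the control is, on average, proportional to the generation size.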

Theorem 5.2

If \(\sum_{i=0}^{\infty}E( \frac{m_{2}(\xi _{i})}{S_{i}m^{2}(\xi _{i})\alpha (\xi _{i})\varepsilon (\xi _{i})})< \infty \) and \(\sum_{i=0}^{\infty}E( \frac{\delta ^{2}(\xi _{i})}{S_{i}^{2}\varepsilon ^{2}(\xi _{i})})< \infty \), then \(\{\hat{W}_{n},n\in N\}\) is bounded in \(L^{2}\) and converges in \(L^{1}\) to Ŵ.

Proof

From Lemma 3.3 and the fact that, given ξ⃗, \(X_{nk}\) and \(I_{nk}\) are conditionally independent for any \(n\in N\) and \(k\in N^{+}\), we derive

Taking the conditional expectation on both sides of (5.2) and combining with Theorem 5.1, we have

Taking the expectation on both sides of (5.3), it is deduced recursively that

Owing to the assumed condition, we obtain that \(\{E\hat{W}_{n}^{2},n\in N\}\) is bounded, namely \(\{ \hat{W}_{n},n\in N\}\) is bounded in \(L^{2}\). Hence, \(\{ \hat{W}_{n},n\in N\}\) is uniformly integrable, which combined with Theorem 5.1 yields the desired result that \(\{\hat{W}_{n},n\in N\}\) converges in \(L^{1}\) to Ŵ. □

We now give a condition under which the limit Ŵ of \(\{\hat{W}_{n},n\in N\}\) is nondegenerate at zero, beginning with a lemma.

Lemma 5.3

([10])

Let \(R^{+}=(0,+\infty )\). Given ξ⃗, for any fixed \(n\in N\), the following hold:

(i) If \(\{a_{j}(\xi _{n}),j\in N^{+}\}\) is a nondecreasing sequence, there exists a nondecreasing function \(\varphi _{\xi _{n}}(\cdot )\) on \(R^{+}\) such that \(\varphi _{\xi _{n}}(x)\geq a_{1}(\xi _{n})\), \(x>0\); \(\varphi _{\xi _{n}}(j) \leq a_{j}(\xi _{n})\), \(j\in N^{+}\); and \(\varphi _{\xi _{n}}^{\ast}(x)\equiv x\cdot \varphi _{\xi _{n}}(x)\), \(x>0\), is convex.

(ii) If \(\{a_{j}(\xi _{n}),j\in N^{+}\}\) is a nonincreasing sequence, there exists a nonincreasing function \(\psi _{\xi _{n}}(\cdot )\) on \(R^{+}\) such that \(\psi _{\xi _{n}}(x)\leq a_{1}(\xi _{n})\), \(x>0\); \(\psi _{\xi _{n}}(j)\geq a_{j}( \xi _{n})\), \(j\in N^{+}\); and \(\psi _{\xi _{n}}^{\ast}(x)\equiv x\cdot \psi _{\xi _{n}}(x)\), \(x>0\), is concave.

For any fixed \(n\in N\), let \(\{\varepsilon (\xi _{n},k):k\in N^{+}\}\) be a nondecreasing sequence. Then, by Lemma 5.3, there exists a nondecreasing function \(\varphi _{\xi _{n}}(\cdot )\) on \(R^{+}\) such that \(\varphi _{\xi _{n}}(x)\geq \varepsilon (\xi _{n},1)\), \(x>0\); \(\varphi _{ \xi _{n}}(j)\leq \varepsilon (\xi _{n},j)\), \(j\in N^{+}\); and \(\varphi _{\xi _{n}}^{\ast}(x)\equiv x\varphi _{\xi _{n}}(x)\), \(x>0\), is convex.

Theorem 5.4

For any fixed \(n\in N\), if \(\{\varepsilon (\xi _{n},k):k\in N^{+}\} \) is a nondecreasing sequence and

then \(E(\hat{W})>0\), i.e., \(P(\hat{W}>0)>0\).

Proof

From Lemmas 3.3 and 5.3, we obtain

Since, for any \(n\in N\), \(\varphi _{\xi _{n}}(\cdot )\) is nondecreasing and \(\varphi _{\xi _{n}}^{\ast}(\cdot )\) is convex, taking the conditional expectation on both sides of (5.5) and combining Jensen's inequality with Lemma 3.3 yields

Iterating (5.6) with respect to n, we obtain

By the assumption of Theorem 5.4 and Fatou's lemma, we deduce that

from which \(E(\hat{W})>0\) follows. This completes the proof. □

Theorem 5.5

If \(P(\hat{W}>0)>0\), then on \(\{\hat{W}>0\}\) it holds that

Proof

For any \(n\in N\), Lemma 3.3 implies

Hence,

Since \(E(\hat{W}_{0})=1\), iterating (5.7) gives

In (5.8), letting \(n\rightarrow \infty \), we arrive at

Thus,

From (5.9), for almost every \(w\in \{\hat{W}>0\}\), we have

Since \(\lim_{n\rightarrow \infty}\hat{W}_{n}(w)=\hat{W}(w)>0 \), by the sign-preserving property of limits there exist \(k(w)\) with \(0< k(w)<\hat{W}(w)\) and \(n_{0}(w)\in N^{+}\) such that, for \(n>n_{0}(w)\), it holds that

Therefore, on \(\{\hat{W}>0\}\), we have \(\sum_{k=0}^{\infty}[1- \frac{\varepsilon (\xi _{k},Z_{k})}{\varepsilon (\xi _{k})}]<\infty \) a.s. □
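A Monte Carlo sketch illustrates Theorems 5.1 and 5.5 in a hypothetical constant environment, in which every \(\xi _{n}\) equals one fixed state. The control rule sets \(\phi (k)=k\) or \(k+1\) with probability \(1/2\) each, so \(\varepsilon (\xi ,k)=(k+1/2)/k\), whose supremum over \(k\in N^{+}\) is \(\varepsilon (\xi )=3/2\). This assumes, as elsewhere, that \(\varepsilon (\xi ,k)=E(\phi (k)|\vec{\xi})/k\) and \(S_{n}=N_{0}(m\alpha \varepsilon )^{n}\). The ratio \(\varepsilon (\xi ,Z_{k})/\varepsilon (\xi )=2/3+1/(3Z_{k})\) is at most \(5/6\) once \(Z_{k}\geq 2\). The series appearing in Theorem 5.5 therefore diverges on survival, and the limit Ŵ must be degenerate. The estimated means should start at 1, decrease as the supermartingale property requires, and decay toward 0. The environment, offspring law, \(\alpha \), control rule, path count, and seed are all hypothetical choices.

```python
import random

# Hypothetical fixed environment state, used in every generation.
OFFSPRING = {0: 0.2, 1: 0.3, 2: 0.5}            # P_r, so m = 1.3
ALPHA = 0.9                                      # alpha(xi)
M = sum(r * p for r, p in OFFSPRING.items())     # m(xi)
EPS_SUP = 1.5  # sup_k E(phi(k))/k for the control below, attained at k = 1


def control(k, rng):
    """Hypothetical phi(k): k or k + 1 with probability 1/2 each; phi(0) = 0."""
    if k == 0:
        return 0
    return k + (1 if rng.random() < 0.5 else 0)


def mean_w_hat(n_generations, n0, paths=3000, seed=0):
    """Monte Carlo estimates of E(W_hat_n | xi) for n = 0..n_generations,
    with S_n = n0 * (M * ALPHA * EPS_SUP) ** n."""
    rng = random.Random(seed)
    values, weights = zip(*OFFSPRING.items())
    growth = M * ALPHA * EPS_SUP
    totals = [0.0] * (n_generations + 1)
    for _ in range(paths):
        z = n0
        totals[0] += 1.0                         # W_hat_0 = Z_0 / S_0 = 1
        for n in range(1, n_generations + 1):
            admitted = control(z, rng)           # phi(Z_{n-1})
            offspring = rng.choices(values, weights=weights, k=admitted)
            # keep X_j only when I_j = 1 (particle not killed by the virus)
            z = sum(x for x in offspring if rng.random() < ALPHA)
            totals[n] += z / (n0 * growth ** n)
    return [t / paths for t in totals]
```

For instance, `mean_w_hat(6, 5)` gives estimates that begin at 1 and fall to a small value by \(n=6\). By Fatou's lemma, \(E(\hat{W})\leq \liminf_{n}E(\hat{W}_{n})\), so decay of these means toward 0 is consistent with \(\hat{W}=0\) a.s.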

Next, we give a sufficient condition for the convergence in \(L^{2}\) of \(\{\hat{W}_{n},n\in N\}\).

Theorem 5.6

Under the condition of Theorem 5.2, if

and

then \(\{\hat{W}_{n},n\in N\} \) converges in \(L^{2}\) to Ŵ.

Proof

Since \(\{\hat{W}_{n},\mathfrak{F}_{n}(\vec{\xi}),n\in N\}\) is a nonnegative supermartingale, the Doob decomposition theorem gives, for any \(n\in N\), \(\hat{W}_{n}=Y_{n}-T_{n}\), where \(\{Y_{n},\mathfrak{F}_{n}(\vec{\xi}),n\in N\}\) is a martingale and \(\{T_{n},n\in N\}\) is a predictable increasing process with

We next show that \(\{T_{n},n\in N\}\) is bounded in \(L^{2}\).

Since

from (5.4) we can derive

Thus,

Therefore,

By the assumption of Theorem 5.6, \(\{T_{n},n\in N\}\) is bounded in \(L^{2}\). Since it is also nonnegative and increasing, monotone convergence shows that \(\{T_{n},n\in N\}\) converges in \(L^{2}\). By Theorem 5.2, \(\{\hat{W}_{n},n\in N\}\) is bounded in \(L^{2}\), and hence so is \(\{Y_{n},n\in N\}\). Since \(\{Y_{n},\mathfrak{F}_{n}(\vec{\xi} ),n\in N\} \) is a martingale bounded in \(L^{2}\), it converges in \(L^{2}\). Therefore \(\{\hat{W}_{n},n\in N\}\) converges in \(L^{2}\) to Ŵ. □
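Assume, as in Lemma 3.3's upper bound, that \(S_{k+1}=S_{k}m(\xi _{k})\alpha (\xi _{k})\varepsilon (\xi _{k})\). The one-step identity \(E(\hat{W}_{k+1}|\mathfrak{F}_{k}(\vec{\xi}))=\hat{W}_{k}\varepsilon (\xi _{k},Z_{k})/\varepsilon (\xi _{k})\) then makes the increasing process of the Doob decomposition explicit. What follows is a sketch obtained from the standard decomposition formula, not a quotation of the display omitted above:

```latex
T_{0}=0,\qquad
T_{n}=\sum_{k=0}^{n-1}\Bigl(\hat{W}_{k}-E\bigl(\hat{W}_{k+1}\mid\mathfrak{F}_{k}(\vec{\xi})\bigr)\Bigr)
=\sum_{k=0}^{n-1}\hat{W}_{k}\Bigl(1-\frac{\varepsilon(\xi_{k},Z_{k})}{\varepsilon(\xi_{k})}\Bigr),
\qquad Y_{n}=\hat{W}_{n}+T_{n}.
```

Each summand is nonnegative and \(\mathfrak{F}_{k}(\vec{\xi})\)-measurable, so \(T_{n}\) is increasing and predictable. Bounding \(E(T_{n}^{2})\) thus amounts to controlling how far \(\varepsilon (\xi _{k},Z_{k})\) falls below \(\varepsilon (\xi _{k})\).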

6 The limit properties of \(\{\bar{W}_{n},n\in N\}\)

Theorem 6.1

If \(E(\prod_{k=0}^{\infty} \frac{\varepsilon (\xi _{k})}{\varepsilon _{1}(\xi _{k})})<\infty\), then there exists a nonnegative, finite random variable W̄ such that \(\lim_{n\rightarrow \infty} \bar{W}_{n}=\bar{W}\) a.s. and \(E(\bar{W})<\infty \).
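Assume \(I_{n+1}=I_{n}m(\xi _{n})\alpha (\xi _{n})\varepsilon _{1}(\xi _{n})\) with \(\varepsilon _{1}(\xi _{n})\leq \varepsilon (\xi _{n},k)\leq \varepsilon (\xi _{n})\) for \(k\in N^{+}\); these are the lower and upper bounds used in Lemma 3.3. The submartingale property and the moment bound behind Theorem 6.1 can then be written out as follows. The sketch uses Lemma 3.3 and the \(\sigma (\vec{\xi})\)-measurability of \(I_{n}\):

```latex
\begin{aligned}
E\bigl(\bar{W}_{n+1}\mid\mathfrak{F}_{n}(\vec{\xi})\bigr)
&=\bar{W}_{n}\,\frac{\varepsilon(\xi_{n},Z_{n})}{\varepsilon_{1}(\xi_{n})}\ \ge\ \bar{W}_{n},\\
E(\bar{W}_{n})=E\Bigl(\frac{E(Z_{n}\mid\vec{\xi})}{I_{n}}\Bigr)
&\le E\Bigl(\prod_{k=0}^{n-1}\frac{\varepsilon(\xi_{k})}{\varepsilon_{1}(\xi_{k})}\Bigr)
\le E\Bigl(\prod_{k=0}^{\infty}\frac{\varepsilon(\xi_{k})}{\varepsilon_{1}(\xi_{k})}\Bigr).
\end{aligned}
```

The last inequality holds because every factor \(\varepsilon (\xi _{k})/\varepsilon _{1}(\xi _{k})\) is at least 1. This is why the hypothesis of Theorem 6.1 yields \(\sup_{n}E(\bar{W}_{n})<\infty \).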

Proof

From Lemma 3.3, it follows that

Namely, \(\{\bar{W}_{n},\mathfrak{F}_{n}(\vec{\xi}),n\in N\}\) is a nonnegative submartingale and

Taking expectation on both sides of (6.1), we arrive at

An immediate consequence of the assumed condition of Theorem 6.1 is \(\sup_{n\geq 0}E(\bar{W}_{n})<\infty \). By the submartingale convergence theorem, there exists a nonnegative random variable W̄ such that

and \(E(\bar{W})<\infty \). □

Next, we discuss conditions under which \(\{\bar{W}_{n},n\in N\}\) converges in \(L^{1}\).

We set

then it holds that \(E(\frac{|\bar{W}_{n+1}-\bar{W}_{n}|}{\bar{W}_{n}}|Z_{n},\mathfrak{F}_{n}( \vec{\xi}))=(\varepsilon _{1}(\xi _{n})m(\xi _{n}))^{-1}r_{Z_{n}}( \xi _{n})\). For fixed \(n\in N\), let \(\{r_{k}(\xi _{n}),k\in N^{+}\}\) be a nonincreasing sequence. In other words, the conditional mean of the absolute relative increment of \(\bar{W}_{n}\) is required not to increase as the number of particles increases. By Lemma 5.3, there exists a nonincreasing function \(\psi _{\xi _{n}}(\cdot )\) on \(R^{+}\) such that \(\psi _{\xi _{n}}(x)\leq r_{1}(\xi _{n})\), \(x>0\); \(\psi _{\xi _{n}}(j)\geq r_{j}( \xi _{n})\), \(j\in N^{+}\); and \(\psi _{\xi _{n}}^{\ast }(x)\equiv x\psi _{\xi _{n}}(x)\), \(x>0\), is concave.

Lemma 6.2

Suppose

and, for each n, \(\{r_{k}(\xi _{n}):k\in N^{+}\}\) is a nonincreasing sequence. Then \(\{\bar{W}_{n},n\in N\}\) converges in \(L^{1}\) to a nonnegative, finite random variable W̄.

Proof

We first prove that \(\{\bar{W}_{n},n\in N\} \) is a Cauchy sequence in \(L^{1}\). In view of Lemma 5.3, it suffices to show that

Since \(\psi _{\xi _{n}}(\cdot )\) is nonincreasing and \(\psi _{\xi _{n}}^{\ast}(\cdot )\) is concave, Jensen's inequality gives

Lemma 3.3 implies that

Thus, we have

Summing (6.2) with respect to n gives

By the assumption of Lemma 6.2, it follows immediately that

That is, \(\{\bar{W}_{n},n\in N\}\) is a Cauchy sequence in \(L^{1}\). By the completeness of \(L^{1}\), it converges in \(L^{1}\) to a nonnegative, finite random variable W̄. □

7 Conclusion

A model of branching processes with random control functions and affected by viral infectivity in an i.i.d. random environment is established. The Markov property of the model, sufficient conditions for its certain extinction, and some limit properties of the normalized processes are studied. These results extend the corresponding conclusions for branching processes and broaden their range of application. In future work, we intend to study the limit theory of the model further, such as the rate of convergence to the limit and the central limit theorem. We also intend to study branching processes with random control functions and affected by viral infectivity in independent but not identically distributed random environments and in stationary ergodic random environments, and to give application examples.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Sevast’yanov, B.A., Zubkov, A.M.: Controlled branching process. Theory Probab. Appl. 19(1), 12–24 (1974)

Yanev, N.M.: Conditions for degeneracy of φ-branching processes with random φ. Theory Probab. Appl. 20, 421–428 (1975)

Zubkov, A.M., Yanev, N.M.: Conditions for extinction of controlled branching processes. Math. Educ. Math. 12, 550–555 (1989)

Yanev, N.M.: Controlled branching processes in random environments. Math. Balk. 7, 137–156 (1977)

Yanev, G.P., Yanev, N.M.: Extinction of controlled branching processes in random environment. Math. Balk. 4, 368–380 (1990)

Holzheimejr, J.: ϕ-Branching processes in a random environment. Zastos. Mat. 18, 351–358 (1984)

Bi, Q.X., Li, J.F.: Controlled branching process in random environments. J. Math. 23(4), 437–442 (2003)

Fang, L., Yang, X.Q., Li, Y.Q.: Convergence rates for a controlled branching process with random control functions in the varying environment. J. Univ. Chin. Acad. Sci. 31(2), 160–164 (2014)

Li, Y.Q., Li, D.R., Pan, S., Peng, X.L.: Limit theorems for controlled branching processes in random environments. Acta Math. Sinica (Chin. Ser.) 61(2), 317–326 (2018)

Rosenkranz, G.: Diffusion approximation of controlled branching processes with random environment. Stoch. Anal. Appl. 3(3), 363–377 (1985)

Wang, W.G., Hu, D.H.: Stability of controlled branching processes in random environment. J. Math. 29(3), 237–241 (2009)

Hu, Y.L., Wang, J., Wang, H.S.: Controlled branching processes with random control function. J. Math. 30(2), 333–337 (2010)

Wang, Y.P., Peng, Z.H., Li, N.S.: The convergence rate of controlled branching process in random environment. J. Hunan Univ. Arts Sci. (Sci. Technol.) 30(4), 8–12 (2018)

Tan, K., Chen, Y., Wang, Y.P.: Limit properties of controlled branching process in a random environment. J. Hunan Univ. Arts Sci. (Sci. Technol.) 32(1), 1–3 (2020)

Ren, M.: Limit properties for branching process affected by communicable diseases in random environments. J. Zhejiang Univ. Sci. 49(1), 53–59 (2022)

Ren, M., Wang, J.J., Wang, Y.P.: Probability generating functions and extinction conditions for the bisexual branching processes affected by infectivity of virus in random environments. Wuhan Univ. J. Nat. Sci. 67(3), 263–269 (2021)

Acknowledgements

The authors want to express their sincere thanks to the referee for his or her valuable remarks and suggestions, which made this paper more readable.

Funding

This work is supported by the National Natural Science Foundation of China (Grant No. 11971034), the Natural Science Foundation of Anhui Universities (Grant Nos. 2022AH051370, KJ2021A1101, and KJ2020A0731), and the Humanities and Social Science Foundation of Anhui Universities (Grant No. SK2020A0527).

Author information


Contributions

MR was a major contributor in writing the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ren, M., Zhang, G. Some properties of branching processes with random control functions and affected by viral infectivity in random environments. Adv Cont Discr Mod 2023, 26 (2023). https://doi.org/10.1186/s13662-023-03775-3

