Abstract

In this paper, an efficient numerical method based on Haar wavelets is presented for solving nonlinear stochastic Itô–Volterra integral equations. Using the properties of Haar wavelets and the operational matrices of stochastic integration, the approximate solution of nonlinear stochastic Itô–Volterra integral equations can be found. An error analysis is also established. Finally, two numerical examples are given to demonstrate the validity and precision of the presented method.


1 Introduction

Stochastic integral equations are widely applied in engineering, biology, oceanography, the physical sciences, etc. These systems depend on a noise source, such as Gaussian white noise. Many stochastic Volterra integral equations do not have exact solutions, so it makes sense to look for more precise approximate solutions. Several numerical methods exist for stochastic Volterra integral equations, for example, orthogonal basis methods [1,2,3,4,5,6,7,8,9,10], Walsh series methods [11, 12], and polynomial methods [13,14,15,16].

In [1], Mohammadi studied linear stochastic Itô–Volterra integral equations (SIVIEs) through Haar wavelets (HWs). In [3], Maleknejad et al. considered the same integral equations by applying block pulse functions (BPFs). In [9], Heydari et al. solved linear SIVIEs by generalized hat basis functions. Using the same hat functions, Hashemi et al. presented a numerical method for nonlinear SIVIEs driven by fractional Brownian motion [8]. Moreover, Jiang et al. applied BPFs to solve two-dimensional nonlinear SIVIEs [7]. More generally, Zhang studied the existence and uniqueness of solutions to stochastic Volterra integral equations with singular kernels and constructed an Euler-type approximate solution [17, 18].

Inspired by the discussion above, we use HWs to solve the following nonlinear SIVIE:

$$ x(v)=x_{0}(v)+ \int_{0}^{v}k(u,v)\sigma \bigl(x(u) \bigr)\,du+ \int_{0}^{v}r(u,v)\rho \bigl(x(u) \bigr)\,dB(u),\quad v\in[0,1), $$(1)

where \(x(v)\) is an unknown stochastic process defined on some probability space \((\varOmega,\mathcal{F},P)\), \(k(u,v)\) and \(r(u,v)\) are kernel functions for \(u, v\in[0,1)\), and \(x_{0}(v)\) is an initial value function. \(B(u)\) is a Brownian motion and \(\int _{0}^{v}r(u,v)\rho(x(u))\,dB(u)\) is an Itô integral. σ and ρ are analytic functions that satisfy certain boundedness and Lipschitz conditions.

Compared with the papers above [1, 3, 7,8,9], this paper differs in the following respects. Firstly, we construct a preparation theorem to deal with the nonlinear analytic functions. Secondly, the error analysis is rigorously proved. Finally, compared with reference [8], the numerical solution is more accurate and the calculation is simpler because of the use of HWs. Moreover, the rationality and effectiveness of this method are further supported by two examples.

The structure of the article is as follows.

In Sect. 2, some preliminaries of BPFs and HWs are given. In Sect. 3, the relationship between HWs and BPFs is shown. In Sect. 4, the approximate solutions of (1) are derived. In Sect. 5, the error analysis of the numerical method is demonstrated. In Sect. 6, the validity and efficiency of the numerical method are verified by two examples.

2 Preliminaries

BPFs and HWs have been widely studied. For details, see references [1, 3].

2.1 Block pulse functions

BPFs are defined as

$$ \psi_{i}(v)= \begin{cases} 1, & ih\leq v< (i+1)h, \\ 0, & \text{otherwise}, \end{cases} $$

for \(i=0,\ldots,m-1\), \(m=2^{L}\) for a positive integer L and \(h=\frac {1}{m}\), \(v\in[0,1)\).

The basic properties of BPFs are shown as follows:

- (i) disjointness:

$$ \psi_{i}(v)\psi_{j}(v)=\delta_{ij} \psi_{i}(v), $$(2)

where \(v\in[0,1)\), \(i, j=0,1,\ldots,m-1\), and \(\delta_{ij}\) is the Kronecker delta;

- (ii) orthogonality:

$$ \int_{0}^{1}\psi_{i}(v) \psi_{j}(v)\,dv=h\delta_{ij}; $$

- (iii) completeness: for every \(g\in L^{2}[0,1)\), Parseval's identity holds:

$$ \int_{0}^{1}g^{2}(v)\,dv=\lim _{m\to\infty}\sum_{i=0}^{m-1}(g_{i})^{2} \bigl\Vert \psi _{i}(v) \bigr\Vert ^{2}, $$(3)

where

$$g_{i}=\frac{1}{h} \int_{0}^{1}g(v)\psi_{i}(v)\,dv. $$
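Properties (i) and (ii) can be checked numerically. The following is a minimal Python sketch, assuming the usual definition of \(\psi_{i}\) as the indicator of \([ih,(i+1)h)\); the helper names `bpf` and `inner_product` and the choice \(m=4\) are illustrative only and do not come from the paper.

```python
def bpf(i, v, m):
    """i-th block pulse function on [0, 1): 1 on [i*h, (i+1)*h), 0 elsewhere."""
    return 1.0 if 0.0 <= v < 1.0 and int(v * m) == i else 0.0


def inner_product(i, j, m, samples_per_block=8):
    """Midpoint-rule value of the integral of psi_i * psi_j over [0, 1).

    The integrand is piecewise constant on the blocks, so the midpoint
    rule on a grid that refines the blocks gives the exact integral.
    """
    n = m * samples_per_block
    step = 1.0 / n
    total = 0.0
    for q in range(n):
        v = (q + 0.5) * step
        total += bpf(i, v, m) * bpf(j, v, m)
    return total * step


m = 4
# Gram matrix of the BPFs; orthogonality predicts h on the diagonal, 0 elsewhere.
gram = [[inner_product(i, j, m) for j in range(m)] for i in range(m)]
for row in gram:
    print(row)
```

With \(m=4\) the diagonal entries equal \(h=0.25\), in line with property (ii).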

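The set of BPFs can be represented by the following m-dimensional vector:

$$ \varPsi_{m}(v)= \bigl(\psi_{0}(v),\psi_{1}(v), \ldots,\psi_{m-1}(v) \bigr)^{T},\quad v\in[0,1). $$(4)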

From the above description, it follows that

$$ \varPsi_{m}(v)\varPsi_{m}^{T}(v)F_{m}= \mathbf{D}_{F_{m}}\varPsi_{m}(v), $$

where \(F_{m}= (f_{0},f_{1},\ldots,f_{m-1} )^{T}\) and \(\mathbf {D}_{F_{m}}=\operatorname{diag}(F_{m})\).

Furthermore, for an \(m\times m\) matrix M, it follows that

$$ \varPsi_{m}^{T}(v)\mathbf{M}\varPsi_{m}(v)= \hat{M}^{T}\varPsi_{m}(v), $$

where M̂ is an m-dimensional vector and its entries equal the main diagonal entries of M.

In accordance with BPFs, every square integrable function \(x(v)\) on the interval \([0,1)\) can be approximated as follows:

$$ x(v)\simeq x_{m}(v)=\sum_{i=0}^{m-1}x_{i} \psi_{i}(v), $$

where the function \(x_{m}(v)\) is an approximation of the function \(x(v)\) and

$$ x_{i}=\frac{1}{h} \int_{0}^{1}x(v)\psi_{i}(v)\,dv. $$

Similarly, every function \(k(u,v)\) defined on \([0,1)\times[0,1)\) can be written as

where \(\mathbf{K}= (k_{ij} )_{m_{1}\times m_{2}}\) with

and \(h_{1}=\frac{1}{m_{1}}\), \(h_{2}=\frac{1}{m_{2}}\).
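As an illustration of the one-dimensional BPF approximation, the coefficients are simply the averages of the function over the blocks. The sketch below assumes this cell-average formula (the same form as \(g_{i}\) in property (iii)); the function names and the test function \(x(v)=v^{2}\) are hypothetical choices, and the integrals are approximated with a midpoint rule.

```python
def bpf_coefficients(x, m, quad_points=200):
    """Cell averages x_i = (1/h) * integral of x over [i*h, (i+1)*h)."""
    h = 1.0 / m
    step = h / quad_points
    coeffs = []
    for i in range(m):
        # Midpoint rule on the i-th block.
        total = sum(x(i * h + (q + 0.5) * step) for q in range(quad_points))
        coeffs.append(total * step / h)
    return coeffs


def bpf_approximation(coeffs, v):
    """Evaluate x_m(v) = sum_i x_i * psi_i(v) for v in [0, 1)."""
    m = len(coeffs)
    return coeffs[min(int(v * m), m - 1)]


coeffs = bpf_coefficients(lambda v: v * v, 4)
print(coeffs)                          # block averages of v**2 for m = 4
print(bpf_approximation(coeffs, 0.3))  # value of x_m on the block containing 0.3
```

The approximation is piecewise constant, so its accuracy is governed by the block width \(h=1/m\).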

2.2 Haar wavelets

The notation and definition of HWs are introduced in this section (also see [1]). The set of orthogonal HWs is defined as follows:

where \(h_{0}(v)=1\), \(v \in[0,1)\), and

For HWs \(h_{n}(v)\) defined in \([0,1)\), we have

$$ \int_{0}^{1}h_{i}(v)h_{j}(v)\,dv= \delta_{ij}, $$

where \(\delta_{ij}\) is the Kronecker delta.

In accordance with HWs, every square integrable function \(x(v)\) can be approximated as follows:

where

We can see that when \(m=2^{L}\), equation (8) can be rewritten as

Obviously, the vector form is as follows:

where \(H_{m}= (h_{0}(v),h_{1}(v),\ldots,h_{m-1}(v) )^{T}\) and \(C_{m}= (c_{0},c_{1},\ldots,c_{m-1} )^{T}\) are HWs and Haar coefficients, respectively.
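The Haar expansion can be sketched in a few lines of Python. The sketch assumes the orthonormal Haar family, in which \(h_{n}\) with \(n=2^{i}+j\) takes the values \(\pm2^{i/2}\) on the two halves of \([j/2^{i},(j+1)/2^{i})\), and coefficients \(c_{n}=\int_{0}^{1}x(v)h_{n}(v)\,dv\); this indexing and the function names are assumptions made for illustration, and the integrals are computed with a midpoint rule.

```python
def haar(n, v):
    """Orthonormal Haar function h_n on [0, 1), with n = 2**i + j for n >= 1."""
    if not 0.0 <= v < 1.0:
        return 0.0
    if n == 0:
        return 1.0
    i = n.bit_length() - 1      # level i, so that 2**i <= n < 2**(i+1)
    j = n - 2 ** i              # shift j within level i
    t = v * 2 ** i - j          # position inside the support, rescaled to [0, 1)
    if 0.0 <= t < 0.5:
        return 2.0 ** (i / 2)
    if 0.5 <= t < 1.0:
        return -(2.0 ** (i / 2))
    return 0.0


def haar_coefficients(x, m, quad_points=4096):
    """c_n = integral of x * h_n over [0, 1), n = 0..m-1, by the midpoint rule."""
    step = 1.0 / quad_points
    grid = [(q + 0.5) * step for q in range(quad_points)]
    return [sum(x(v) * haar(n, v) for v in grid) * step for n in range(m)]


def haar_approximation(coeffs, v):
    """Evaluate x_m(v) = C_m^T H_m(v)."""
    return sum(c * haar(n, v) for n, c in enumerate(coeffs))


coeffs = haar_coefficients(lambda v: v, 4)
print(coeffs)
print(haar_approximation(coeffs, 0.1))  # average of v over [0, 0.25)
```

For \(x(v)=v\) and \(m=4\), the reconstructed value on each quarter interval is the average of \(v\) over that interval, which shows that the m-term Haar approximation and the m-term BPF approximation describe the same piecewise-constant function.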

Similarly, every function \(k(u,v)\) defined on \([0,1)\times[0,1)\) can be approximated as follows:

where \(\mathbf{K}= (k_{ij} )_{m\times m}\) with

3 Haar wavelets and BPFs

Some lemmas about HWs and BPFs are introduced in this section. For a detailed description, see the reference [1].

Lemma 3.1

Suppose that \(H_{m}(v)\) and \(\varPsi_{m}(v)\) are respectively given in (10) and (4). Then \(H_{m}(v)\) can be written in terms of BPFs as follows:

$$ H_{m}(v)=\mathbf{Q}\varPsi_{m}(v), $$(11)

where \(\mathbf{Q}= (Q_{ij} )_{m \times m}\) and

Proof

See [1]. □

Lemma 3.2

Suppose that \(\mathbf{Q}\) is given in (11). Then we have

$$ \mathbf{Q}\mathbf{Q}^{T}=m\mathbf{I}, $$

where \(\mathbf{I}\) is the \(m \times m\) identity matrix.

Proof

See [1]. □
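The relation between \(\mathbf{Q}\) and its transpose can be verified numerically. The sketch below assumes that \(\mathbf{Q}\) collects the values of the orthonormal Haar functions at the block midpoints, \(Q_{nc}=h_{n}((c+\frac{1}{2})/m)\) with zero-based indices; this convention and the helper names are assumptions, not taken from the paper.

```python
def haar_matrix(m):
    """Matrix Q with Q[n][c] = h_n((c + 0.5) / m), for m a power of two."""
    Q = [[1.0] * m]                       # h_0 is identically 1
    level = 0
    while 2 ** level < m:
        width = m // 2 ** level           # number of blocks in each support
        value = 2.0 ** (level / 2)
        for j in range(2 ** level):
            row = [0.0] * m
            start = j * width
            for c in range(start, start + width // 2):
                row[c] = value            # first half of the support
            for c in range(start + width // 2, start + width):
                row[c] = -value           # second half of the support
            Q.append(row)
        level += 1
    return Q


def gram(Q):
    """Return Q * Q^T as a list of lists."""
    return [[sum(a * b for a, b in zip(r1, r2)) for r2 in Q] for r1 in Q]


m = 8
Q = haar_matrix(m)
QQt = gram(Q)
print([round(QQt[n][n], 12) for n in range(m)])  # diagonal of Q Q^T
```

Under this convention the product is \(m\) times the identity, so \(\mathbf{Q}^{-1}=\frac{1}{m}\mathbf{Q}^{T}\), which is why the factor \(\frac{1}{m}\) appears in the operational matrices of the later lemmas.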

Lemma 3.3

Suppose that F is an m-dimensional vector. Then we have

$$ H_{m}(v)H_{m}^{T}(v)F=\tilde{\mathbf{F}}H_{m}(v), $$

where \(\tilde{\mathbf{F}}\) is an \(m \times m\) matrix and \(\tilde {\mathbf{F}}=\mathbf{Q}\bar{\mathbf{F}}\mathbf{Q^{-1}}\), \(\bar {\mathbf{F}}=\operatorname{diag}(\mathbf{Q}^{T}F)\).

Proof

See [1]. □

Lemma 3.4

Suppose that \(\mathbf{M}\) is an \(m\times m\) matrix. Then we have

$$ H_{m}^{T}(v)\mathbf{M}H_{m}(v)=\hat{M}H_{m}(v), $$

where \(\hat{M}=N^{T}\mathbf{Q}^{-1}\) is an m-dimensional vector and the entries of the vector N are the diagonal entries of the matrix \(\mathbf{Q}^{T}\mathbf{MQ}\).

Proof

See [1]. □

Lemma 3.5

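Suppose that \(\varPsi_{m}(v)\) is given in (4). Then we have

$$ \int_{0}^{v}\varPsi_{m}(u)\,du\simeq \mathbf{P}\varPsi_{m}(v), $$

where

$$ \mathbf{P}=\frac{h}{2} \begin{pmatrix} 1 & 2 & 2 & \cdots& 2 \\ 0 & 1 & 2 & \cdots& 2 \\ 0 & 0 & 1 & \cdots& 2 \\ \vdots& \vdots& \vdots& \ddots& \vdots \\ 0 & 0 & 0 & \cdots& 1 \end{pmatrix}_{m\times m}. $$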

Proof

Lemma 3.6

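Suppose that \(\varPsi_{m}(v)\) is given in (4). Then we have

$$ \int_{0}^{v}\varPsi_{m}(u)\,dB(u)\simeq \mathbf{P}_{B}\varPsi_{m}(v), $$

where

$$ (\mathbf{P}_{B})_{ij}= \begin{cases} B ( (i+\frac{1}{2} )h )-B(ih), & j=i, \\ B ( (i+1)h )-B(ih), & j>i, \\ 0, & j< i, \end{cases}\quad i,j=0,1,\ldots,m-1. $$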

Proof

Lemma 3.7

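Suppose that \(H_{m}(v)\) is given in (10). Then we have

$$ \int_{0}^{v}H_{m}(u)\,du\simeq \boldsymbol{\varLambda}H_{m}(v), $$

where \(\mathbf{Q}\) and \(\mathbf{P}\) are respectively given in (11) and Lemma 3.5, and \(\boldsymbol{\varLambda}=\frac{1}{m}\mathbf {Q}\mathbf{P}\mathbf{Q}^{T}\).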

Proof

Lemma 3.8

Suppose that \(H_{m}(v)\) is given in (10). Then we have

$$ \int_{0}^{v}H_{m}(u)\,dB(u)\simeq \boldsymbol{\varLambda}_{B}H_{m}(v), $$

where \(\mathbf{Q}\) and \(\mathbf{P}_{B}\) are respectively given in (11) and Lemma 3.6, and \(\boldsymbol{\varLambda}_{B}=\frac {1}{m}\mathbf{Q}\mathbf{P}_{B}\mathbf{Q}^{T}\).

Proof
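The operational matrices of this section can be assembled as in the following Python sketch. It assumes the standard BPF forms used in [3]: \(\mathbf{P}\) has \(h/2\) on the diagonal and \(h\) above it, and \(\mathbf{P}_{B}\) has \(B((i+\frac{1}{2})h)-B(ih)\) on the diagonal and \(B((i+1)h)-B(ih)\) above it. It also assumes that \(\mathbf{Q}\) holds midpoint values of orthonormal Haar functions. All function names are hypothetical, and the Brownian path is simulated on a half-step grid.

```python
import math
import random


def haar_matrix(m):
    """Q[n][c] = value of the orthonormal Haar function h_n on block c."""
    Q, level = [[1.0] * m], 0
    while 2 ** level < m:
        width, value = m // 2 ** level, 2.0 ** (level / 2)
        for j in range(2 ** level):
            row = [0.0] * m
            for c in range(j * width, j * width + width // 2):
                row[c] = value
            for c in range(j * width + width // 2, (j + 1) * width):
                row[c] = -value
            Q.append(row)
        level += 1
    return Q


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def integration_matrix(m):
    """P: h/2 on the diagonal, h above it, 0 below it."""
    h = 1.0 / m
    return [[h / 2 if j == i else (h if j > i else 0.0) for j in range(m)]
            for i in range(m)]


def stochastic_integration_matrix(m, rng):
    """P_B built from one Brownian path sampled at n*h/2, n = 0..2m."""
    h = 1.0 / m
    path = [0.0]
    for _ in range(2 * m):
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(h / 2)))
    PB = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(i, m):
            end = 2 * i + 1 if j == i else 2 * i + 2   # half step or full step
            PB[i][j] = path[end] - path[2 * i]
    return PB


def to_haar_basis(Q, M):
    """Return (1/m) * Q * M * Q^T."""
    m = len(Q)
    Qt = [list(col) for col in zip(*Q)]
    return [[entry / m for entry in row] for row in matmul(matmul(Q, M), Qt)]


m = 4
Q = haar_matrix(m)
Lam = to_haar_basis(Q, integration_matrix(m))
Lam_B = to_haar_basis(Q, stochastic_integration_matrix(m, random.Random(0)))
# Integrating the constant function 1 (Haar coefficients e_0) should give
# the block-midpoint values of v: row 0 of Lam, mapped back to the blocks.
print(matmul([Lam[0]], Q)[0])
```

The printed row gives the approximation of \(\int_{0}^{v}1\,du=v\) on each block, namely the block midpoints \((j+\frac{1}{2})h\).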

4 Numerical method

For convenience, we set \(m_{1}=m_{2}=m\) and solve the nonlinear SIVIE (1) by HWs. Firstly, a useful result for HWs is proved.

Theorem 4.1

For the analytic functions \(\sigma (v)=\sum a_{j}v^{j}\), \(\rho(v)=\sum b_{j}v^{j}\), where j is a positive integer, we have

where \(H_{m}(v)\) and \(C_{m}\) are derived in (10),

Proof

According to the disjointness property of HWs, we can deduce

thus,

Similarly,

The proof is completed. □
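One way to read this result numerically: since \(x_{m}(v)=C_{m}^{T}H_{m}(v)\) is constant on each block, applying σ to it amounts to applying σ to the block values \(\mathbf{Q}^{T}C_{m}\) and transforming back. The Python sketch below illustrates this reading under the assumption that \(\mathbf{Q}\) holds midpoint values of orthonormal Haar functions (so \(\mathbf{Q}^{-1}=\frac{1}{m}\mathbf{Q}^{T}\)). It is not claimed to reproduce the exact formula of Theorem 4.1, and the names are hypothetical.

```python
import math


def haar_matrix(m):
    """Q[n][c] = value of the orthonormal Haar function h_n on block c."""
    Q, level = [[1.0] * m], 0
    while 2 ** level < m:
        width, value = m // 2 ** level, 2.0 ** (level / 2)
        for j in range(2 ** level):
            row = [0.0] * m
            for c in range(j * width, j * width + width // 2):
                row[c] = value
            for c in range(j * width + width // 2, (j + 1) * width):
                row[c] = -value
            Q.append(row)
        level += 1
    return Q


def values_on_cells(C):
    """Block values Q^T C of the function C^T H_m(v)."""
    m = len(C)
    Q = haar_matrix(m)
    return [sum(Q[n][c] * C[n] for n in range(m)) for c in range(m)]


def apply_nonlinearity(sigma, C):
    """Haar coefficients (1/m) * Q * sigma(Q^T C) of sigma(C^T H_m(v))."""
    m = len(C)
    Q = haar_matrix(m)
    transformed = [sigma(x) for x in values_on_cells(C)]
    return [sum(Q[n][c] * transformed[c] for c in range(m)) / m for n in range(m)]


C = [0.5, -0.25, 0.1, 0.2]                       # illustrative coefficients
D = apply_nonlinearity(math.sin, C)
print([math.sin(x) for x in values_on_cells(C)])  # sigma applied block by block
print(values_on_cells(D))                         # expansion with coefficients D
```

Both printed lists agree up to rounding, which shows that a nonlinear function of a Haar expansion is again a Haar expansion with explicitly computable coefficients.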

Now, in order to solve (1), we approximate \(x(v)\), \(x_{0}(v)\), \(k(u,v)\), and \(r(u,v)\) in the following forms by HWs:

where \(C_{m}\) and \({C_{0}}_{m}\) are HWs coefficient vectors, K and R are HWs coefficient matrices. Substituting approximations (12)–(17) into (1), we have

By Lemma 3.3, we get

Applying Lemmas 3.7 and 3.8, we get

then by Lemma 3.4, we derive

where \(\mathbf{A}_{0}=\mathbf{K}^{T}\tilde{\boldsymbol{\sigma}(C_{m})} \boldsymbol{\varLambda}\) and \(\mathbf{B}_{0}=\mathbf{R}^{T} \tilde{\boldsymbol{\rho}(C_{m})} \boldsymbol{\varLambda}_{B}\).

The nonlinear system (18) can be solved by standard iterative methods from numerical analysis, such as Newton's method. In this paper, the fsolve function in MATLAB is used to solve (18).
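The structure of the resulting nonlinear system can be illustrated with a short Python sketch. It is not the authors' system (18): it works in the equivalent BPF coordinates (the block values of \(x_{m}\)), replaces kernel coefficients by kernel values at block midpoints, and uses a fixed-point iteration instead of fsolve. The names `brownian_path` and `solve_sivie` and the BPF operational matrices from [3] are assumptions of this sketch.

```python
import math
import random


def brownian_path(m, rng):
    """Sample B(n*h/2), n = 0..2m, on the half-step grid of [0, 1]."""
    h = 1.0 / m
    path = [0.0]
    for _ in range(2 * m):
        path.append(path[-1] + rng.gauss(0.0, math.sqrt(h / 2)))
    return path


def solve_sivie(x0, k, r, sigma, rho, m, path, tol=1e-12, max_iter=200):
    """Block values X_j of the approximate solution of
    x(v) = x0(v) + int_0^v k(u,v) sigma(x(u)) du + int_0^v r(u,v) rho(x(u)) dB(u).
    """
    h = 1.0 / m
    mid = [(i + 0.5) * h for i in range(m)]
    X = []
    for j in range(m):
        # Contribution of the blocks i < j, whose values are already known.
        known = x0(mid[j])
        for i in range(j):
            dB = path[2 * i + 2] - path[2 * i]            # B((i+1)h) - B(ih)
            known += k(mid[i], mid[j]) * h * sigma(X[i])
            known += r(mid[i], mid[j]) * dB * rho(X[i])
        # Diagonal block: X_j appears on both sides, solved by fixed-point iteration.
        dB_half = path[2 * j + 1] - path[2 * j]           # B((j+1/2)h) - B(jh)
        kjj, rjj = k(mid[j], mid[j]), r(mid[j], mid[j])
        xj = known
        for _ in range(max_iter):
            new = known + kjj * (h / 2) * sigma(xj) + rjj * dB_half * rho(xj)
            converged = abs(new - xj) < tol
            xj = new
            if converged:
                break
        X.append(xj)
    return X


# Noise-free sanity check: x(v) = 1 + int_0^v x(u) du has the solution exp(v).
m = 32
X = solve_sivie(lambda v: 1.0, lambda u, v: 1.0, lambda u, v: 0.0,
                lambda x: x, lambda x: 0.0, m, [0.0] * (2 * m + 1))
print(X[-1], math.exp((m - 0.5) / m))

# One simulated trajectory with an illustrative noise kernel.
Y = solve_sivie(lambda v: 1.0, lambda u, v: 1.0, lambda u, v: 0.1,
                lambda x: x, math.sin, m, brownian_path(m, random.Random(1)))
```

Because the operational matrices are upper triangular, the unknowns decouple block by block and only the diagonal term is implicit. A general-purpose solver such as fsolve treats all unknowns simultaneously and does not rely on this structure.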

5 Error analysis

In contrast to the articles [1, 3], we give a rigorous error analysis in this section. Firstly, we recall two useful lemmas.

Lemma 5.1

Suppose that the function \(x(u)\), \(u\in[0,1)\), satisfies the bounded condition and \(e(u)=x(u)-x_{m}(u)\), where \(x_{m}(u)\) is the m-term HW approximation of \(x(u)\). Then

Proof

See [1]. □

Lemma 5.2

Suppose that the function \(x(u,v)\), defined on \(\mathbf{D}=[0,1)\times[0,1)\), satisfies the bounded condition and \(e(u,v)=x(u,v)-x_{m}(u,v)\), where \(x_{m}(u,v)\) is the m-term HW approximation of \(x(u,v)\). Then

Proof

See [1]. □

Secondly, let \(e(v)=x(v)-x_{m}(v)\), where \(x_{m}(v)\), \(x_{0_{m}}(v)\), \(k_{m}(u,v)\), and \(r_{m}(u,v)\) are the m-term Haar wavelet approximations of \(x(v)\), \(x_{0}(v)\), \(k(u,v)\), and \(r(u,v)\), respectively.

Lastly, the main convergence theorem is proved.

Theorem 5.1

Suppose that the analytic functions σ and ρ satisfy the following conditions:

- (i) \(|\sigma(x)-\sigma(y)| \leq l_{1}|x-y|\), \(| \rho(x)-\rho(y)|\leq l_{3} |x-y|\);

- (ii) \(|\sigma(x)|\leq l_{2}\), \(|\rho(y)|\leq l_{4}\);

- (iii) \(|k(u,v)|\leq l_{5}\), \(|r(u,v)|\leq l_{6}\),

where \(x,y\in\mathbb{R}\), constant \(l_{i}>0\), \(i=1, 2, \ldots, 6\). Then

Proof

For (21), we have

On the basis of Lipschitz continuity, Itô isometry, and Cauchy–Schwarz inequality, it yields

Then we can get

or

Let \(f(v)=\mathbb{E} ( |e_{m}(v) |^{2} )\), we get

By Gronwall’s inequality, it follows that

Then

By using (19) and (20), we have

So we can get

where constant \(w_{i}>0\), \(i=1, 2, \ldots, 6\).

The proof is completed. □

6 Numerical examples

In this section, some examples are given to verify the validity and rationality of the above method.

Example 6.1

Consider the nonlinear SIVIE [6, 8]

where

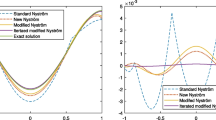

In this example, \(a=\frac{1}{30}\) and \(x_{0}(v)=\frac{1}{10}\). The error means \(E_{m}\), error standard deviations \(E_{s}\), and confidence intervals of Example 6.1 for \(m=2^{4}\) and \(m=2^{5}\) are shown in Table 1 and Table 2, respectively. The error means \(E_{m}\) and error standard deviations \(E_{s}\) are computed from \(10^{4}\) trajectories. Compared with Table 2 in [8], \(E_{m}\) is smaller and the confidence interval is narrower at the same confidence level. Moreover, the exact and approximate solutions of Example 6.1 for \(m=2^{4}\) and \(m=2^{5}\) are compared in Fig. 1 and Fig. 2, respectively.

Figure 1: \(m=2^{4}\), simulation result of the approximate solution and exact solution for Example 6.1

Figure 2: \(m=2^{5}\), simulation result of the approximate solution and exact solution for Example 6.1
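The summary statistics reported for the examples can be computed as in the following Python sketch. It assumes that one error value is recorded per simulated trajectory and that the confidence interval is the usual normal-approximation interval for the mean; the 95% level (z = 1.96) and the function name are assumptions, since the confidence level is not stated here.

```python
import math
import statistics


def error_summary(errors, z=1.96):
    """Return (mean, sample standard deviation, confidence interval for the mean)."""
    n = len(errors)
    mean = statistics.mean(errors)
    std = statistics.stdev(errors)           # sample standard deviation (n - 1)
    half_width = z * std / math.sqrt(n)      # normal-approximation half width
    return mean, std, (mean - half_width, mean + half_width)


# Illustrative numbers only, not results from the paper.
mean, std, interval = error_summary([0.1, 0.2, 0.3])
print(mean, std, interval)
```

The interval narrows like \(1/\sqrt{n}\) as the number of trajectories n grows, so the width of the reported intervals reflects both the spread of the errors and the number of simulated paths.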

Example 6.2

Consider the nonlinear SIVIE [17, 18]

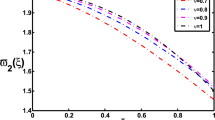

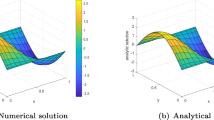

The mean and approximate solutions of Example 6.2 for \(m=2^{4}\) and \(m=2^{5}\) are given in Fig. 3 and Fig. 4, respectively, where the mean solution is computed from \(10^{4}\) trajectories.

Figure 3: \(m=2^{4}\), simulation result of the approximate solution and mean solution for Example 6.2

Figure 4: \(m=2^{5}\), simulation result of the approximate solution and mean solution for Example 6.2

References

[1] Mohammadi, F.: Numerical solution of stochastic Itô–Volterra integral equations using Haar wavelets. Numer. Math., Theory Methods Appl. 9, 416–431 (2016)

[2] Reihani, M.H., Abadi, Z.: Rationalized Haar functions method for solving Fredholm and Volterra integral equations. J. Comput. Appl. Math. 200, 12–20 (2007)

[3] Maleknejad, K., Khodabin, M., Rostami, M.: Numerical solution of stochastic Volterra integral equation by a stochastic operational matrix based on block pulse function. Math. Comput. Model. 55, 791–800 (2012)

[4] Maleknejad, K., Basirat, B., Hashemizadeh, E.: Hybrid Legendre polynomials and block-pulse functions approach for nonlinear Volterra–Fredholm integro-differential equations. Comput. Math. Appl. 61, 2821–2828 (2011)

[5] Maleknejad, K., Khodabin, M., Rostami, M.: A numerical method for solving m-dimensional stochastic Itô–Volterra integral equations by stochastic operational matrix. Comput. Math. Appl. 63, 133–143 (2012)

[6] Ezzati, R., Khodabin, M., Sadati, Z.: Numerical implementation of stochastic operational matrix driven by a fractional Brownian motion for solving a stochastic differential equation. Abstr. Appl. Anal. 2014, Article ID 523163 (2014)

[7] Jiang, G., Sang, X.Y., Wu, J.H., Li, B.W.: Numerical solution of two-dimensional nonlinear stochastic Itô–Volterra integral equations by applying block pulse function. Adv. Pure Math. 9, 53–66 (2019)

[8] Hashemi, B., Khodabin, M., Maleknejad, K.: Numerical solution based on hat functions for solving nonlinear stochastic Itô–Volterra integral equations driven by fractional Brownian motion. Mediterr. J. Math. 14, 1–15 (2017)

[9] Heydari, M.H., Hooshmandasl, M.R., Ghaini, F.M.M., Cattani, C.: A computational method for solving stochastic Itô–Volterra integral equations based on stochastic operational matrix for generalized hat basis functions. J. Comput. Phys. 270, 402–415 (2014)

[10] Mirzaee, F., Hadadiyan, E.: Numerical solution of Volterra–Fredholm integral equations via modification of hat functions. Appl. Math. Comput. 280, 110–123 (2016)

[11] Balakumar, V., Murugesan, K.: Single-term Walsh series method for systems of linear Volterra integral equations of the second kind. Appl. Math. Comput. 228, 371–376 (2014)

[12] Blyth, W.F., May, R.L., Widyaningsih, P.: Volterra integral equations solved in Fredholm form using Walsh functions. ANZIAM J. 45, 269–282 (2003)

[13] Mohamed, D.S., Taher, R.A.: Comparison of Chebyshev and Legendre polynomials methods for solving two dimensional Volterra–Fredholm integral equations. J. Egypt. Math. Soc. 25, 302–307 (2017)

[14] Maleknejad, K., Sohrabi, S., Rostami, Y.: Numerical solution of nonlinear Volterra integral equations of the second kind by using Chebyshev polynomials. Appl. Math. Comput. 188, 123–128 (2007)

[15] Ezzati, R., Najafalizadeh, S.: Application of Chebyshev polynomials for solving nonlinear Volterra–Fredholm integral equations system and convergence analysis. Indian J. Sci. Technol. 5, 2060–2064 (2012)

[16] Asgari, M., Hashemizadeh, E., Khodabin, M., Maleknejad, K.: Numerical solution of nonlinear stochastic integral equation by stochastic operational matrix based on Bernstein polynomials. Bull. Math. Soc. Sci. Math. Roum. 57(105), 3–12 (2014)

[17] Zhang, X.C.: Euler schemes and large deviations for stochastic Volterra equations with singular kernels. J. Differ. Equ. 244, 2226–2250 (2008)

[18] Zhang, X.C.: Stochastic Volterra equations in Banach spaces and stochastic partial differential equation. J. Funct. Anal. 258, 1361–1425 (2010)

Availability of data and materials

No availability of data and material.

Funding

This work was funded by the NSF of China (Grant 11471105) and the Innovation Team of the Educational Department of Hubei Province (T201412). This support is greatly appreciated.


Contributions

The authors contributed equally to this work. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, J., Jiang, G. & Sang, X. Numerical solution of nonlinear stochastic Itô–Volterra integral equations based on Haar wavelets. Adv Differ Equ 2019, 503 (2019). https://doi.org/10.1186/s13662-019-2440-6
