Abstract

Partial differential equations with nonlocal boundary conditions have been widely applied in various fields of science and engineering. In this work, we first construct a high-accuracy difference scheme for the Poisson equation with two integral boundary conditions. We then prove, by applying the discrete Fourier transform, that the scheme attains an asymptotically optimal error estimate in the maximum norm. Finally, numerical experiments confirm the theoretical results and demonstrate the stability of the scheme.

1 Introduction

Partial differential equations with nonlocal boundary conditions have been widely used to build mathematical models in various fields of science and engineering, such as thermoelasticity, physics, medical science, and chemical engineering (see [1–6]).

This work is concerned with the following two-dimensional Poisson equation with two integral boundary conditions:

where \(f(x,y)\), \(\mu_{i}(y)\ (i=1,2)\), \(\mu_{j}(x)\ (j=3,4)\) are given smooth functions, and \(\xi_{1}\), \(\xi_{2}\) are constants such that \(0<\xi_{1}<\xi_{2}<1\).

The finite difference method (FDM) is favored by many researchers because of its simple formulation and ease of programming. Recently, Sapagovas [7] presented a fourth-order difference scheme for the Poisson equation with two integral boundary conditions. The author also studied its solvability and justified an iterative method for solving the resulting difference system. Berikelashvili [8] constructed difference schemes for a Poisson problem with one integral condition and estimated their rate of convergence. For the Poisson equation with Bitsadze–Samarskii nonlocal boundary conditions, a new method that discretizes the Laplace operator with the five-point difference scheme was developed in [9]. There are also works on nonlinear and higher-order elliptic problems with nonlocal boundary conditions. In [10, 11], the authors presented iterative methods for solving the system of difference equations arising from a nonlinear elliptic equation with an integral condition. Pao and Wang [12, 13] used the finite difference method, based on a coupled system of two second-order equations, for fourth-order elliptic equations with nonlocal boundary conditions.

In recent years, the radial basis function (RBF) collocation method has become popular for the numerical solution of PDEs, especially elliptic equations with nonlocal boundary conditions [14–16]. However, the results of the RBF collocation method are often sensitive to the choice of the shape parameter and are affected by the large condition number of the collocation matrix. For other numerical methods for elliptic equations with nonlocal boundary conditions, e.g., the finite element method (FEM), we refer the reader to [17–19].

To our knowledge, few studies both construct high-accuracy difference schemes with optimal or asymptotically optimal error estimates, supported by theoretical proofs, and report the corresponding numerical tests for the Poisson problem with nonlocal boundary conditions. Designing a high-accuracy scheme and proving that it attains an optimal or asymptotically optimal error estimate is therefore a significant challenge. In this work, we consider a two-dimensional Poisson problem with two integral conditions. The first novel idea is to build a high-accuracy difference scheme by introducing equivalent relations that make the two nonlocal conditions convenient to discretize. The second is to apply the discrete Fourier transform (DFT) to reduce the two-dimensional error problem to one-dimensional problems, one for each Fourier mode. With these tools, we prove that the difference scheme attains an asymptotically optimal error estimate in the maximum norm. Numerical examples confirm the theoretical results.
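The dimension reduction described above can be illustrated on a small grid. The sketch below assumes, purely for illustration, that the interior equation is discretized by the standard five-point Laplacian; the helper names `sine_dft_matrix` and `second_difference` are hypothetical. It checks that the matrix \(\sqrt{2h}(\sin k\pi x_{i})\) used in Sect. 3 is orthogonal and that applying it in the x direction splits the two-dimensional operator into \(N-1\) uncoupled one-dimensional operators in y, the k-th one having diagonal entry \(-(2+4\sin^{2}\theta_{k})\) with \(\theta_{k}=\frac{k\pi h}{2}\).

```python
import numpy as np

def sine_dft_matrix(N):
    """S[k-1, i-1] = sqrt(2h) * sin(k*pi*x_i) for i, k = 1..N-1, with h = 1/N."""
    h = 1.0 / N
    idx = np.arange(1, N)
    return np.sqrt(2.0 * h) * np.sin(np.pi * h * np.outer(idx, idx))

def second_difference(N):
    """Tridiagonal (1, -2, 1): h^2 times the 1D second difference with zero end values."""
    n = N - 1
    return -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)

N = 16
S = sine_dft_matrix(N)
D = second_difference(N)
theta = np.pi * np.arange(1, N) / (2 * N)      # theta_k = k*pi*h/2
Lam = np.diag(-4.0 * np.sin(theta) ** 2)       # eigenvalues of D

# S is symmetric and orthogonal, hence its own inverse.
print(np.allclose(S @ S.T, np.eye(N - 1)), np.allclose(S, S.T))

# Assumption: h^2 * (five-point Laplacian of E) = D E + E D, with E[i-1, j-1] ~ e_{i,j}.
rng = np.random.default_rng(0)
E = rng.standard_normal((N - 1, N - 1))
lhs = S @ (D @ E + E @ D)          # transform in x of the 2D operator
E_hat = S @ E                      # E_hat[k-1, j-1] ~ transformed error for mode k
rhs = Lam @ E_hat + E_hat @ D      # row k involves only mode k: a 1D problem in y
print(np.allclose(lhs, rhs))
```

Because the x-direction part becomes diagonal with entries \(-4\sin^{2}\theta_{k}\), each Fourier mode k can then be analyzed as a separate one-dimensional problem in y.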

This paper is organized as follows. In Sect. 2, we present a finite difference scheme for Problem (1.1a)–(1.1e). In Sect. 3, we analyze the error equations of the scheme with the DFT and present the corresponding error estimates. In Sect. 4, we report numerical results that support our conclusions. Finally, Sect. 5 summarizes the paper and discusses future work.

2 The finite difference discretization

To facilitate the discretization of the integral boundary conditions, we first establish the following equivalent relations, whose proof is straightforward.

Lemma 2.1

Suppose that the solution \(u\) of Problem (1.1a)–(1.1e) satisfies \(u\in C^{2}(\bar{\Omega})\), and that \(\mu_{i}(y)\ (i=1,2)\) and \(\mu_{j}(x)\ (j=3,4)\) satisfy the following consistency conditions:

Then the integral boundary conditions (1.1d) and (1.1e) are equivalent to the nonlocal boundary conditions

and

respectively, where

Proof

Integrating both sides of (1.1a) with respect to y over the interval \([0,\xi_{1}]\) and using (1.1d), we have

i.e.,

which yields (2.3).

Conversely, when (2.3) holds, together with (1.1a), we obtain

Integrating both sides of the above expression twice with respect to x, we have

where \(C_{1}\) and \(C_{2}\) are two constants.

Setting \(x=0\) and \(x=1\) in the above equation and using (1.1b)–(1.1c) and (2.1), we get

which yields

Therefore, (1.1d) is equivalent to (2.3).

Similarly, from (1.1a)–(1.1c) and (2.2), we can derive that (1.1e) is equivalent to (2.4). This completes the proof. □
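The first step of the proof, integrating (1.1a) in y over \([0,\xi_{1}]\), can be checked numerically on a smooth test function. The sketch below assumes that (1.1a) reads \(-\Delta u=f\) and that (1.1d) prescribes \(\mu_{3}(x)=\int_{0}^{\xi_{1}}u(x,y)\,dy\); both forms are assumptions for illustration, and the helper `integrated_residual` is hypothetical. Under these assumptions the fundamental theorem of calculus gives \(u_{y}(x,\xi_{1})-u_{y}(x,0)=-\mu_{3}''(x)-\int_{0}^{\xi_{1}}f(x,y)\,dy\), a relation between y-derivatives at \(y=0\) and \(y=\xi_{1}\) of the kind a nonlocal condition such as (2.3) expresses.

```python
import numpy as np

def integrated_residual(u, f, xi1, x, d=1e-4, nq=20):
    """Residual of u_y(x, xi1) - u_y(x, 0) + mu3''(x) + int_0^xi1 f(x, y) dy.

    Assumption (illustrative): -Lap(u) = f and mu3(x) = int_0^xi1 u(x, y) dy.
    """
    nodes, weights = np.polynomial.legendre.leggauss(nq)
    y = 0.5 * xi1 * (nodes + 1.0)        # Gauss nodes mapped to [0, xi1]
    w = 0.5 * xi1 * weights

    def mu3(s):
        return np.sum(w * u(s, y))       # quadrature for the integral condition

    mu3_xx = (mu3(x + d) - 2.0 * mu3(x) + mu3(x - d)) / d**2

    def u_y(s, t):
        return (u(s, t + d) - u(s, t - d)) / (2.0 * d)

    return u_y(x, xi1) - u_y(x, 0.0) + mu3_xx + np.sum(w * f(x, y))

def u_exact(x, y):
    """Exact solution of Example 4.2."""
    return np.exp(x) * (x + y**2)

def f_source(x, y):
    """Source term implied by the assumed form -Lap(u) = f."""
    return -np.exp(x) * (x + y**2 + 4.0)

for x in (0.2, 0.5, 0.9):
    print(x, integrated_residual(u_exact, f_source, 0.25, x))   # should be near zero
```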

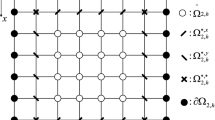

Based on Lemma 2.1, we are now in a position to present the finite difference scheme for Problem (1.1a)–(1.1e). Divide Ω into an \(N\times N\) mesh by

where \(x_{i}=i h, y_{j}=j h\), \(h=\frac{1}{N}\) is the step size in both the x and y directions, and N is the number of subintervals in each direction. For convenience, we only discuss Problem (1.1a)–(1.1e) under the assumption that \(\xi_{1}\) and \(\xi_{2}\) are rational. Moreover, we assume that \(\xi_{m}= N_{m} h\) \((m=1,2)\), where \(N_{1},N_{2}\) are integers with \(0< N_{1}< N_{2}< N\).
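Since \(\xi_{m}=N_{m}h\) requires \(N\xi_{m}\) to be an integer, N must be a multiple of the denominators of \(\xi_{1}\) and \(\xi_{2}\). The sketch below (hypothetical helper names) computes \(N_{1},N_{2}\) for a given N with exact rational arithmetic and returns the smallest admissible N.

```python
from fractions import Fraction
from math import lcm

def mesh_indices(xi1, xi2, N):
    """Return (h, N1, N2) with xi_m = N_m * h, or None if N is not admissible."""
    xi1, xi2 = Fraction(xi1), Fraction(xi2)
    N1, N2 = xi1 * N, xi2 * N
    if N1.denominator != 1 or N2.denominator != 1:
        return None                      # xi_1 or xi_2 does not lie on a grid line
    N1, N2 = int(N1), int(N2)
    if not 0 < N1 < N2 < N:
        return None
    return Fraction(1, N), N1, N2

def smallest_admissible_N(xi1, xi2):
    """Smallest N for which both xi_1 and xi_2 are grid lines y_{N_m}."""
    return lcm(Fraction(xi1).denominator, Fraction(xi2).denominator)

print(mesh_indices("1/4", "3/4", 16))        # h = 1/16, N1 = 4, N2 = 12
print(mesh_indices("1/3", "1/2", 16))        # None: 16/3 is not an integer
print(smallest_admissible_N("1/3", "1/2"))   # 6
```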

Let U be the finite difference approximation of u. Denote \(u_{i,j} = u(x_{i}, y_{j})\), \(U_{i,j}=U(x_{i},y_{j})\), \(f_{i,j}=f(x_{i},y_{j})\), \((\mu_{m})_{j}=\mu_{m}(y_{j})\), and \((\phi_{m})_{i}=\phi_{m}(x_{i})\) \((m=1,2)\). Then the governing equation (1.1a) and the two local boundary conditions (1.1b) and (1.1c) are discretized as follows:

Based on Lemma 2.1, the two nonlocal boundary conditions (1.1d) and (1.1e) are discretized as follows:

3 Error estimate

Denote the error at the point \((x_{i},y_{j})\) by \(e_{i,j}:=U_{i,j}-u_{i,j}\). Suppose that the exact solution satisfies \(u\in C^{4}(\bar{\Omega})\). Then, from (2.5) and (2.6), we have

and

where \(\alpha_{i,j}\) and \((\beta_{m})_{i}\ (m=1,2)\) are the corresponding local truncation errors satisfying

To estimate the errors \(e_{i,j}\ (i,j=1,2,\ldots,N-1)\), we introduce the following DFT formula:

Taking the DFT of \(\alpha_{i,j}\) and \((\beta_{m})_{i}\), respectively, we find

Since \(\sqrt{2h} (\sin k\pi x_{i})_{(N-1)\times(N-1)}\) is an orthogonal matrix, the following inverse DFT formulas hold:

Hence, from (3.3) and (3.5), we can derive

Substituting (3.4) into the first equation of (3.1) and (3.2), respectively, we have

where

Let \(\varepsilon_{k,j}=\widehat{e}_{k,j}+h^{2}p_{k,j}\), where \(p_{k,j}\) satisfies

From (3.7) and (3.9), one can see that

where \((\widetilde{\beta}_{m})_{k}\) \((m=1,2)\) are defined by

Let \(\Vert \widehat{\alpha}_{k}\Vert =\max_{j=1,\ldots,N-1} |\widehat{\alpha}_{k,j}| \). Now, we can obtain the following estimates.

Lemma 3.1

Suppose that \(p_{k,j}\) satisfies (3.9). Then we have

and

Proof

Let \(|p_{k,\ell}|=\max_{j=1,\ldots,N-1} |p_{k,j}|\). Then, from (3.8) and (3.9), we have

Recalling that \(\theta_{k}=\frac{k\pi h}{2}\), \(h=\frac{1}{N}\), and \(1\leq k\leq N-1\), we can derive

Therefore, one can easily infer (3.11).
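The bound used in the preceding step is not displayed. One elementary bound that follows from \(\theta_{k}=\frac{k\pi h}{2}\), \(h=\frac{1}{N}\), and \(1\leq k\leq N-1\), given here as an illustration rather than as the exact inequality of the proof, is \(h\leq\sin\theta_{1}\leq\sin\theta_{k}\leq\cos\frac{\pi h}{2}<1\): since \(\theta_{k}\in[\frac{\pi h}{2},\frac{\pi}{2}-\frac{\pi h}{2}]\), the minimum is attained at \(k=1\), and Jordan's inequality \(\sin x\geq\frac{2x}{\pi}\) on \([0,\frac{\pi}{2}]\) gives \(\sin\frac{\pi h}{2}\geq h\). The helper `sin_theta_range` is hypothetical.

```python
import numpy as np

def sin_theta_range(N):
    """Min and max of sin(theta_k), theta_k = k*pi*h/2, k = 1..N-1, h = 1/N."""
    h = 1.0 / N
    theta = np.arange(1, N) * np.pi * h / 2.0
    s = np.sin(theta)
    return s.min(), s.max()

for N in (4, 16, 128, 1024):
    h = 1.0 / N
    smin, smax = sin_theta_range(N)
    # Jordan's inequality: sin(pi*h/2) >= h, so 4 h^2 <= 4 sin^2(theta_k) < 4.
    print(N, smin >= h, smax < 1.0, smin / h)   # smin / h tends to pi/2 as N grows
```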

Let \(\delta_{k,i}=\widehat{\alpha}_{k,i}-4\sin^{2}{\theta_{k}}p_{k,i}\), \(i=1,\ldots,N-1\). From (3.13), we have

From (3.9), we get

Then, summing the above equations over i from 1 to j (\(1\leq j\leq N-1\)), we obtain

Furthermore, summing (3.16) over j from 1 to \(N-1\) and noting that \(p_{k,N}=p_{k,0}=0\), we get

From (3.15) and the above equation, we have

Therefore, using (3.15) again together with (3.16), we obtain (3.12), which completes the proof. □
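The two summation steps in this proof follow a standard telescoping argument for grid functions with zero end values. Assuming, for illustration, that the summed quantity is the second difference \(g_{i}=p_{i+1}-2p_{i}+p_{i-1}\) (the mode index k is dropped), summing over \(i\leq j\) gives \((p_{j+1}-p_{j})-(p_{1}-p_{0})\), and a further sum over j together with \(p_{0}=p_{N}=0\) gives \(-Np_{1}\), which isolates \(p_{1}\). The sketch below (hypothetical helper name) reconstructs p from its second differences this way.

```python
import numpy as np

def recover_from_second_differences(g):
    """Recover p_0..p_N with p_0 = p_N = 0 from g_i = p_{i+1} - 2 p_i + p_{i-1}, i = 1..N-1."""
    g = np.asarray(g, dtype=float)
    N = g.size + 1
    G = np.cumsum(g)            # G_j = sum_{i<=j} g_i = (p_{j+1} - p_j) - (p_1 - p_0)
    p1 = -G.sum() / N           # sum over j = 1..N-1 equals -N p_1 when p_0 = p_N = 0
    steps = p1 + G[:-1]         # p_{j+1} - p_j for j = 1..N-2
    p = np.zeros(N + 1)
    p[1] = p1
    p[2:N] = p1 + np.cumsum(steps)
    return p

rng = np.random.default_rng(1)
N = 12
p = np.zeros(N + 1)
p[1:N] = rng.standard_normal(N - 1)
g = p[2:] - 2.0 * p[1:-1] + p[:-2]
print(np.allclose(recover_from_second_differences(g), p))
```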

Now we present the convergence theorem for Problem (1.1a)–(1.1e).

Theorem 3.1

Suppose that \(u\in C^{4}(\overline{\Omega})\). Then, for \(i,j=1,\ldots,N-1\), we have

Proof

Let \(\lambda_{k}=(\sqrt{1+\sin^{2}{\theta_{k}}}+\sin{\theta_{k}})^{2}, k=1,\ldots, N-1\). Obviously,

From (3.10), we have

where \(\eta= \frac{3}{2}-2\lambda_{k} +\frac{1}{2}\lambda^{2}_{k}, \overline{\eta} = \frac{3}{2}-2\lambda^{-1}_{k} +\frac{1}{2}\lambda^{-2}_{k}\),

\(C_{1} = \frac{h(\widetilde{\beta}_{2})_{k}}{(1-\lambda ^{N_{1}-N_{2}-N}_{k})(\lambda^{N}_{k}\overline{\eta}+\lambda^{N_{2}}_{k} \eta)}\) and \(C_{2} = \frac{h(\widetilde{\beta}_{1})_{k}}{(1-\lambda ^{N+N_{2}-N_{1}}_{k})(\lambda^{N_{1}}_{k}\overline{\eta}+\eta)}\).

From the definition of \((\widetilde{\beta}_{m})_{k}\) \((m=1,2)\), Lemma 3.1, and (3.6), we obtain

Note that

Then we have

From the above inequality, (3.19), (3.20), and (3.21), we get

From (3.18), (3.21), and (3.14), we have

Similar to the above estimation, we can derive

Therefore, we get

From the definition of \(\varepsilon_{k,j}\), Lemma 3.1, and (3.6), we have

Together with (3.4), we finally obtain (3.17), which completes the proof. □
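The relation introduced by "Obviously" in this proof is not displayed. One identity that follows directly from the definition of \(\lambda_{k}\) is \(\lambda_{k}+\lambda_{k}^{-1}=2+4\sin^{2}\theta_{k}\), because \(\sqrt{\lambda_{k}}=\sqrt{1+\sin^{2}\theta_{k}}+\sin\theta_{k}\) and \(1/\sqrt{\lambda_{k}}=\sqrt{1+\sin^{2}\theta_{k}}-\sin\theta_{k}\). Consequently \(\lambda_{k}^{j}\) and \(\lambda_{k}^{-j}\) solve the three-term recurrence \(g_{j+1}-(2+4\sin^{2}\theta_{k})g_{j}+g_{j-1}=0\); reading this as the homogeneous equation in y produced by a five-point scheme after the DFT in x is our assumption. The check below also confirms the factorization \(\eta=\frac{1}{2}(\lambda_{k}-1)(\lambda_{k}-3)\).

```python
import numpy as np

N = 32
theta = np.pi * np.arange(1, N) / (2 * N)          # theta_k = k*pi*h/2, h = 1/N
s2 = np.sin(theta) ** 2
lam = (np.sqrt(1.0 + s2) + np.sin(theta)) ** 2     # lambda_k as defined in the proof

# lambda_k > 1 and lambda_k + 1/lambda_k = 2 + 4 sin^2(theta_k).
print(np.all(lam > 1.0), np.allclose(lam + 1.0 / lam, 2.0 + 4.0 * s2))

# lambda_k**j and lambda_k**(-j) satisfy g_{j+1} - (2 + 4 sin^2 theta_k) g_j + g_{j-1} = 0.
res_max = []
for sign in (1, -1):
    g = lam ** (sign * np.arange(0, 7)[:, None])   # rows: j = 0..6, columns: k
    res = g[2:] - (2.0 + 4.0 * s2) * g[1:-1] + g[:-2]
    res_max.append(np.abs(res).max())
print(res_max)

# eta = 3/2 - 2 lambda_k + lambda_k^2 / 2 factors as (lambda_k - 1)(lambda_k - 3) / 2.
eta = 1.5 - 2.0 * lam + 0.5 * lam ** 2
print(np.allclose(eta, 0.5 * (lam - 1.0) * (lam - 3.0)))
```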

4 Numerical experiments

In this section, we present two typical examples to demonstrate the theoretical results and compare our numerical results with those of the RBF collocation method [15].

Example 4.1

Consider Problem (1.1a)–(1.1e), and let

One can check that the exact solution is \(u(x,y)=\sin{\pi x}\sin{\pi y}\).

In this experiment, we use a uniform partition of Ω and employ the preconditioned conjugate gradient method to solve the difference equations (2.5) and (2.6). Numerical results are shown in Tables 1 and 2, where the norms \(\| u-U\|_{m}\ (m= 2, \infty)\) are defined by \(\| u-U\|_{2} = \frac{1}{N} (\sum_{i=1}^{N} \sum_{j=1}^{N} |{u_{i,j}-U_{i,j}}|^{2} )^{\frac{1}{2}}\) and \(\|u-U\|_{\infty} = \max_{1\leq i,j \leq N} |{u_{i,j}-U_{i,j}}|\), respectively. To illustrate the pointwise error, we choose four typical points and list the corresponding errors in Table 2. The results show that the convergence order is close to two, which is consistent with the theoretical results.
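The two norms and the observed convergence order can be computed as follows. This is a minimal sketch with hypothetical helper names; the usage line feeds it synthetic errors proportional to \(h^{2}\), not the values reported in Tables 1 and 2.

```python
import numpy as np

def error_norms(u, U):
    """||u - U||_2 and ||u - U||_inf as defined above.

    u, U: arrays of shape (N + 1, N + 1) indexed [i, j], i, j = 0..N;
    the sums and the maximum run over i, j = 1..N.
    """
    N = u.shape[0] - 1
    diff = np.abs(u[1:, 1:] - U[1:, 1:])
    return np.sqrt(np.sum(diff ** 2)) / N, diff.max()

def observed_orders(errors):
    """log2(e(h) / e(h/2)) for errors on meshes h, h/2, h/4, ..."""
    e = np.asarray(errors, dtype=float)
    return np.log2(e[:-1] / e[1:])

# Synthetic errors scaling like h^2: each error ratio is 4, i.e. order 2.
hs = np.array([1 / 16, 1 / 32, 1 / 64, 1 / 128])
print(observed_orders(0.3 * hs ** 2))
```

An error ratio of about 4 between successive meshes therefore corresponds to an observed order of \(\log_{2}4=2\).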

Next, we apply the RBF collocation method to Example 4.1. As in [15], we examine the efficiency of the method using a regular node distribution and the multiquadric (MQ) RBF, i.e.,

where ϵ is the shape parameter. We take \(\epsilon=2\); the results are shown in Tables 3 and 4, where \(\kappa(A)\) and \(U_{\mathrm{RBF}}\) denote the condition number of the discretized linear system and the corresponding approximate solution, respectively. Comparing Tables 3 and 4 with Tables 1 and 2 as h decreases from \(1/16\) to \(1/128\), we observe two facts. On the one hand, the RBF collocation method approximates the exact solution more accurately than our method. On the other hand, \(\kappa(A)\) grows rapidly, which can make the discretized linear system practically unsolvable, and the ratio of successive error norms for the RBF collocation method drops rapidly toward zero. In contrast, the ratio of successive error norms for our method remains at about 4, i.e., second-order convergence, for both large and small h. We therefore conclude that our method is far more stable than the RBF collocation method and that its approximation to the exact solution keeps improving as h decreases.
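To illustrate how the conditioning of MQ-based systems deteriorates as h decreases, the sketch below computes \(\kappa(A)\) for a plain MQ interpolation matrix on regular grids. Two assumptions apply: the MQ is taken in the form \(\phi(r)=\sqrt{1+(\epsilon r)^{2}}\), which may differ from the normalization used in [15], and the interpolation matrix is only a proxy for the collocation matrix, which also contains rows for the Laplacian and for the boundary and nonlocal conditions. The helper name is hypothetical.

```python
import numpy as np

def mq_interpolation_matrix(n, eps):
    """A[p, q] = sqrt(1 + (eps * |x_p - x_q|)^2) on a regular n x n grid of [0, 1]^2.

    Assumption: this MQ normalization and this matrix only stand in for the
    collocation system of [15].
    """
    t = np.linspace(0.0, 1.0, n)
    X, Y = np.meshgrid(t, t, indexing="ij")
    pts = np.column_stack([X.ravel(), Y.ravel()])
    r = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    return np.sqrt(1.0 + (eps * r) ** 2)

for n in (5, 9, 17):                          # h = 1/4, 1/8, 1/16
    A = mq_interpolation_matrix(n, eps=2.0)
    print(f"h = 1/{n - 1}: cond(A) = {np.linalg.cond(A):.2e}")
```

The printed condition numbers should grow quickly as the grid is refined; once they approach the reciprocal of machine precision, the computed solutions of such systems are no longer reliable.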

Example 4.2

Consider Problem (1.1a)–(1.1e), and let

One can verify that the exact solution is \(u(x,y)=e^{x}(x+y^{2})\).

In this example, we use the same partitions as in Example 4.1 and set the shape parameter to \(\epsilon=6\). The numerical results of our method are shown in Tables 5 and 6, and those of the RBF collocation method in Tables 7 and 8. Tables 5 and 6 show that the convergence order is close to two, which again confirms the theoretical results. Tables 7 and 8 show that the approximate solution obtained by the RBF collocation method is very close to the exact solution when \(h=1/16, 1/32\) and \(1/64\). However, the ratio of error norms drops sharply toward zero when \(h=1/128\). Compared with Tables 5 and 6, this demonstrates once again that our approach is more stable than the RBF collocation method.

5 Summary and conclusions

In this paper, we construct a high-accuracy difference scheme for the Poisson equation with two integral boundary conditions and prove that the scheme attains an asymptotically optimal error estimate. Numerical results confirm the theoretical analysis. In the future, we will design other high-order difference schemes (e.g., fourth-order nonstandard compact finite difference schemes [20] or sixth-order implicit finite difference schemes [21]) for Poisson problems with other nonlocal boundary conditions. We will also try to apply other analytical methods to error estimation, e.g., the homotopy analysis transform method [22, 23] or Lie symmetry analysis [24, 25].

References

[1] Shi, P., Shillor, M.: On design of contact patterns in one-dimensional thermoelasticity. In: Theoretical Aspects of Industrial Design (Wright–Patterson Air Force Base), SIAM Proc., pp. 76–82. Society for Industrial and Applied Mathematics, Philadelphia (1992)

[2] Cannon, J.R.: The solution of the heat equation subject to specification of energy. Q. Appl. Math. 21, 155–160 (1963)

[3] Nakhushev, A.M.: An approximate method for solving boundary value problems for differential equations and its application to the dynamics of ground moisture and ground water. Differ. Equ. 18(1), 72–81180 (1982)

[4] Diaz, J.I., Padial, J.F., Rakotoson, J.M.: Mathematical treatment of the magnetic confinement in a current carrying stellarator. Nonlinear Anal. 34(6), 857–887 (1998)

[5] Capasso, V., Kunisch, K.: A reaction-diffusion system arising in modelling man-environment diseases. Q. Appl. Math. 46(3), 431–450 (1988)

[6] Dagan, G.: The significance of heterogeneity of evolving scales to transport in porous formations. Water Resour. Res. 30(12), 3327–3336 (1994)

[7] Sapagovas, M.P.: Difference method of increased order of accuracy for the Poisson equation with nonlocal conditions. Differ. Equ. 44(7), 1018–1028 (2008)

[8] Berikelashvili, G.: Finite difference schemes for some mixed boundary value problems. In: Proceedings of A. Razmadze Mathematical Institute, vol. 127, pp. 77–87 (2001)

[9] Volkov, E.A., Dosiyev, A.A., Buranay, S.C.: On the solution of a nonlocal problem. Comput. Math. Appl. 66(3), 330–338 (2013)

[10] Čiupaila, R., Sapagovas, M., Štikonienė, O.: Numerical solution of nonlinear elliptic equation with nonlocal condition. Nonlinear Anal., Model. Control 4(4), 412–426 (2013)

[11] Sapagovas, M., Griškonienė, V., Štikonienė, O.: Application of M-matrices theory to numerical investigation of a nonlinear elliptic equation with an integral condition. Nonlinear Anal., Model. Control 22(4), 489–504 (2017)

[12] Pao, C.V., Wang, Y.M.: Numerical methods for fourth-order elliptic equations with nonlocal boundary conditions. J. Comput. Appl. Math. 292(C), 447–468 (2016)

[13] Pao, C.V., Wang, Y.M.: Nonlinear fourth-order elliptic equations with nonlocal boundary conditions. J. Math. Anal. Appl. 372(2), 351–365 (2010)

[14] Siraj-ul-Islam, Aziz, I., Ahmad, M.: Numerical solution of two-dimensional elliptic PDEs with nonlocal boundary conditions. Comput. Math. Appl. 69(3), 180–205 (2015)

[15] Sajavičius, S.: Radial basis function method for a multidimensional linear elliptic equation with nonlocal boundary conditions. Comput. Math. Appl. 67(7), 1407–1420 (2014)

[16] Sajavičius, S.: Optimization, conditioning and accuracy of radial basis function method for partial differential equations with nonlocal boundary conditions—a case of two-dimensional Poisson equation. Eng. Anal. Bound. Elem. 37(4), 788–804 (2013)

[17] Lobjanidze, G.: On variational formulation of some nonlocal boundary value problems by symmetric continuation operation of a function. Appl. Math. Inform. Mech. 11(2), 15–2290 (2006)

[18] Nie, C., Yu, H.: Some error estimates on the finite element approximation for two-dimensional elliptic problem with nonlocal boundary. Appl. Numer. Math. 68, 31–38 (2013)

[19] Nie, C., Shu, S., Yu, H., An, Q.: A high order composite scheme for the second order elliptic problem with nonlocal boundary and its fast algorithm. Appl. Math. Comput. 227, 212–221 (2014)

[20] Hajipour, M., Jajarmi, A., Baleanu, D.: On the accurate discretization of a highly nonlinear boundary value problem. Numer. Algorithms (2017). https://doi.org/10.1007/s11075-017-0455-1

[21] Hajipour, M., Jajarmi, A., Malek, A., Baleanu, D.: Positivity-preserving sixth-order implicit finite difference weighted essentially non-oscillatory scheme for the nonlinear heat equation. Appl. Math. Comput. 325, 146–158 (2018)

[22] Singh, J., Kumar, D., Qurashi, M.A., Baleanu, D.: A novel numerical approach for a nonlinear fractional dynamical model of interpersonal and romantic relationships. Entropy 19(7), 375 (2017)

[23] Kumar, D., Singh, J., Baleanu, D.: A new analysis for fractional model of regularized long wave equation arising in ion acoustic plasma waves. Math. Methods Appl. Sci. 40, 5642–5653 (2017)

[24] Inc, M., Yusuf, A., Aliyu, A.I., Baleanu, D.: Lie symmetry analysis, explicit solutions and conservation laws for the space-time fractional nonlinear evolution equations. Physica A 496, 371–383 (2018)

[25] Baleanu, D., Inc, M., Yusuf, A., Aliyu, A.I.: Lie symmetry analysis, exact solutions and conservation laws for the time fractional Caudrey–Dodd–Gibbon–Sawada–Kotera equation. Commun. Nonlinear Sci. Numer. Simul. 59, 222–234 (2018)

Acknowledgements

The authors are grateful to the anonymous referees for their useful suggestions and comments that improved the presentation of this paper.

Funding

This work is partially supported by NSFC Project (Grant Nos. 11571293, 11171281 and 61603322), the Key Project of Scientific Research Fund of Hunan Provincial Science and Technology Department (Grant No. 2011FJ2011), Hunan Provincial Natural Science Foundation of China (Grant No. 2016JJ2129), Hunan Provincial Civil Military Integration Industrial Development Project “Adaptive Multilevel Solver and Its Application in ICF Numerical Simulation” and Open Foundation of Guangdong Provincial Engineering Technology Research Center for Data Science (Grant No. 2016KF03).

Author information

Contributions

All authors have participated in the sequence alignment and drafted the manuscript. They have approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhou, L., Yu, H. Error estimate of a high accuracy difference scheme for Poisson equation with two integral boundary conditions. Adv Differ Equ 2018, 225 (2018). https://doi.org/10.1186/s13662-018-1682-z

DOI: https://doi.org/10.1186/s13662-018-1682-z