Abstract

In this paper, we consider a multi-term variable-order fractional diffusion equation on a finite domain, which involves the Caputo variable-order time fractional derivative of order \(\alpha(x,t) \in(0,1) \) and the Riesz variable-order space fractional derivatives of orders \(\beta(x,t) \in (1,2)\) and \(\gamma(x,t)\in(0,1)\). Approximating the temporal derivative by the L1 algorithm and the spatial derivatives by the shifted Grünwald formula (for order \(\beta\)) and the standard Grünwald formula (for order \(\gamma\)), we propose a finite difference scheme. The stability and convergence of the scheme are analyzed via mathematical induction. Numerical experiments are provided to show the efficiency of the proposed scheme.

1 Introduction

The theory of fractional partial differential equations (FPDEs) has become a popular and effective mathematical tool in many scientific and engineering problems, because such equations describe well the memory and hereditary properties of different substances [1–5]. For instance, multi-term FPDEs have been used in models of practical processes such as oxygen delivery through a capillary to tissues [6], underlying processes with loss [7], and anomalous diffusion in highly heterogeneous aquifers and complex viscoelastic materials [8]. For further examples, one may refer to [1, 9–13].

Recently, researchers have found that many important dynamic processes exhibit fractional-order behavior that may vary with time and/or space, which motivates the concept of variable-order calculus. Variable-order calculus has been applied in many fields, such as viscoelastic mechanics [14], geographic data [15], signal processing [16], and diffusion processes [17]. Since the kernel of a variable-order operator has a variable exponent, analytical solutions to variable-order fractional differential equations are more difficult to obtain. Therefore, the development of numerical methods for such equations is a timely and important problem. Numerical methods for variable-order fractional differential equations, which mainly include finite difference methods [18–26], spectral methods [27–29], matrix methods [30, 31], and reproducing kernel methods [32, 33], have been studied extensively.

Roughly speaking, the fractional models can be classified into three principal kinds: space-fractional differential equation, time-fractional differential equation, and time-space fractional differential equation. Lately, Shen et al. [18] proposed numerical techniques for the variable-order time fractional diffusion equation. Zhang et al. studied an implicit Euler numerical method for the time variable fractional-order mobile-immobile advection-dispersion model in [19]. Lin et al. [20] investigated the stability and convergence of an explicit finite-difference approximation for the variable-order nonlinear fractional diffusion equation. Zhuang et al. [21] proposed explicit and implicit Euler approximations for the variable-order fractional advection-diffusion equation with a nonlinear source term. Sweilam et al. used an explicit finite difference method to study the variable-order nonlinear space fractional wave equation [22]. Zhuang et al. [23] proposed an implicit Euler approximation for the time and space variable fractional-order advection-dispersion model with first-order temporal and spatial accuracy.

However, the existing literature contains little work on higher-order numerical methods for multi-term time-space variable-order fractional differential equations, because the numerical analysis is more involved.

In this paper, we consider the following multi-term time-space variable-order fractional diffusion equations with initial-boundary value problem:

Here, the operator \(P_{\alpha,\alpha_{1},\ldots,\alpha_{S}}({}^{C}_{0}D_{t})u(x,t)\) is defined by

where \({}^{C }_{0}D^{\alpha_{s}(x,t)}_{t} (s=0,1,\ldots,S)\) are left-hand side variable-order Caputo fractional derivatives defined by [21]

\(0<\alpha_{S}(x,t)<\alpha_{S-1}(x,t)<\cdots<\alpha_{1}(x,t)<\alpha_{0}(x,t), 0<\underline{\alpha}\leq\alpha_{0}(x,t)\leq\overline{\alpha}<1, a_{s}(x,t)\geq0\). The space fractional derivatives \(_{x}R^{\beta(x,t)}, _{x}R^{\gamma(x,t)}\) are generalized Riesz fractional derivatives defined by [20, 21]

Here, left-hand side and right-hand side variable-order Riemann–Liouville fractional derivatives are defined by

where n is a positive integer and \(n-1<\zeta(x,t)< n\). We assume \(1<\underline{\beta}\leq\beta(x,t)\leq\overline{\beta}< 2, 0<\underline {\gamma}\leq\gamma(x,t)\leq\overline{\gamma}<1, \rho\geq0, \sigma\geq0, \rho+\sigma=1\), \(p(x,t) \geq0, q(x,t)\geq 0, c(x,t)\geq0\). We use the L1 algorithm to approximate the temporal derivative and the shifted and standard Grünwald formulas to approximate the spatial derivatives of orders \(\beta\) and \(\gamma\), respectively, and propose an unconditionally stable finite difference scheme. Furthermore, we prove the convergence of the scheme by an error estimate and obtain the convergence rate \(O(\tau+h)\).

The remainder of the paper is organized as follows. In Sect. 2, we give some preliminaries, which are necessary for the subsequent analysis, and propose a finite difference scheme for equations (1)–(3). The solvability, unconditional stability, and convergence of the scheme are proved in Sect. 3. Numerical examples are given in Sect. 4 to demonstrate the effectiveness of the scheme. Finally, we conclude the paper in Sect. 5.

2 Preliminaries and discretization of the diffusion equation

Let \(t_{k}=k\tau, k=0,1, 2, \ldots, n, x_{i}=ih, i=0,1,2,\ldots,m\), where \(\tau= T/n\) and \(h=L/m\) are time and space steps, respectively. For an arbitrary function of two variables \(u(x,t)\), we denote \(u^{k}_{i}=u(x_{i},t_{k})\). Let \(P(x,t)=p(x,t)\times(-\operatorname{sec}\beta(x,t)\pi/2), Q(x,t)=q(x,t)\times (-\operatorname{sec}\gamma(x,t)\pi/2)\).
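The grid and the coefficients \(P\), \(Q\) can be set up as in the following minimal sketch. It assumes that \(\operatorname{sec}\beta(x,t)\pi/2\) means \(\sec(\beta(x,t)\pi/2)\), borrows the orders \(\beta\), \(\gamma\) from Example 4.1, and takes \(p=q=1\) purely for illustration; the helper names `make_grid` and `riesz_factor` are hypothetical.

```python
import math

def make_grid(L, T, m, n):
    """Return spatial nodes x_i = i*h, time levels t_k = k*tau, and the steps."""
    h, tau = L / m, T / n
    x = [i * h for i in range(m + 1)]
    t = [k * tau for k in range(n + 1)]
    return x, t, h, tau

def riesz_factor(order):
    """-sec(order*pi/2), the factor multiplying p or q in P and Q (assumed reading)."""
    return -1.0 / math.cos(order * math.pi / 2.0)

# Variable orders taken from Example 4.1; p = q = 1 is a hypothetical choice.
beta = lambda x, t: 1.8 + 0.01 * x**2 * t**2
gamma = lambda x, t: 0.8 + 0.01 * x**2 * math.sin(t)

x, t, h, tau = make_grid(L=1.0, T=1.0, m=10, n=10)
P = [[riesz_factor(beta(xi, tk)) for xi in x] for tk in t]   # P[k][i] = P_i^k
Q = [[riesz_factor(gamma(xi, tk)) for xi in x] for tk in t]  # Q[k][i] = Q_i^k
```

Under these assumptions \(P>0\) because \(\cos(\beta\pi/2)<0\) for \(\beta\in(1,2)\), while \(Q<0\) because \(\cos(\gamma\pi/2)>0\) for \(\gamma\in(0,1)\); these signs matter for the diagonal dominance argument in Sect. 3.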

For a variable-order Caputo derivative, the authors proposed the L1 operator in [19] as follows:

where \(G^{\alpha,k+1}_{i,j}=(j+1)^{1-\alpha^{k+1}_{i}} -j^{1-\alpha^{k+1}_{i}}\), and gave the following approximation result.
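As an illustration, the weights \(G^{\alpha,k+1}_{i,j}\) and an L1-type operator can be sketched in Python. The sketch assumes the commonly used form \(\frac{\tau^{-\alpha}}{\Gamma(2-\alpha)}\sum_{j=0}^{k}G_{j}\,(u^{k+1-j}-u^{k-j})\) with \(\alpha=\alpha^{k+1}_{i}\) frozen at the current node; this form and the function names are assumptions, not a transcription of the operator in [19].

```python
import math

def l1_weights(alpha, k):
    """G_j = (j+1)^(1-alpha) - j^(1-alpha) for j = 0, ..., k."""
    return [(j + 1) ** (1 - alpha) - j ** (1 - alpha) for j in range(k + 1)]

def l1_caputo(u_vals, alpha, tau):
    """L1-type approximation of the Caputo derivative at t_{k+1}.

    u_vals holds u(t_0), ..., u(t_{k+1}) at one spatial node, and alpha is
    the order frozen at (x_i, t_{k+1}).
    """
    k = len(u_vals) - 2
    G = l1_weights(alpha, k)
    total = sum(G[j] * (u_vals[k + 1 - j] - u_vals[k - j]) for j in range(k + 1))
    return tau ** (-alpha) / math.gamma(2 - alpha) * total

# For u(t) = t the weighted sum telescopes, so the formula is exact:
tau, alpha = 0.1, 0.8
approx = l1_caputo([k * tau for k in range(11)], alpha, tau)
exact = 1.0 ** (1 - alpha) / math.gamma(2 - alpha)  # Caputo derivative of t at t = 1
```

For a linear function the sketch reproduces the exact Caputo derivative \(t^{1-\alpha}/\Gamma(2-\alpha)\) up to rounding; for smoother but nonlinear functions an error that decays with \(\tau\) is expected.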

Lemma 2.1

([19])

Suppose that \(\frac{\partial^{2}u(x,t)}{\partial t^{2}}\in C(\Omega)\) and \(0<\alpha(x,t)< 1\). Then we have

For a variable-order Grünwald–Letnikov fractional derivative, the following “standard” and “shifted” operators were proposed in [21, 34]:

where \(g^{j}_{\zeta^{k}_{i}}\) are the Grünwald weights defined by \(g^{j}_{\zeta^{k}_{i}}=\frac{{\Gamma(j-\zeta_{i}^{k})}}{ {\Gamma(-\zeta_{i}^{k})}\Gamma(j+1)}, j=0,1,2,\ldots\) .
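In practice the Gamma-function formula is rarely evaluated directly. Dividing consecutive weights gives the recurrence \(g^{0}=1\), \(g^{j}=g^{j-1}\,(j-1-\zeta)/j\), which the following sketch uses; the function names are hypothetical, and the direct formula is included only as a cross-check for non-integer \(\zeta\).

```python
import math

def grunwald_weights(zeta, n):
    """Return g_0, ..., g_n via g_0 = 1, g_j = g_{j-1} * (j - 1 - zeta) / j."""
    g = [1.0]
    for j in range(1, n + 1):
        g.append(g[-1] * (j - 1 - zeta) / j)
    return g

def grunwald_weight_gamma(zeta, j):
    """Direct evaluation of Gamma(j - zeta) / (Gamma(-zeta) * Gamma(j + 1))."""
    return math.gamma(j - zeta) / (math.gamma(-zeta) * math.gamma(j + 1))

g = grunwald_weights(1.8, 5)  # g[0] = 1, g[1] = -1.8, g[2] = 1.8 * 0.8 / 2
```

The recurrence avoids overflow of \(\Gamma(j+1)\) for large \(j\) and costs one multiplication per weight.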

Suppose that the function \(f(x)\) is \((m-1)\)-times continuously differentiable in the interval \([0, L]\) and that \(f^{(m)}(x)\) is integrable in \([0, L]\). Then, for every \(\zeta(x,t)\ (0\leq m-1<\zeta(x,t)< m)\), the Riemann–Liouville fractional derivative exists and coincides with the Grünwald–Letnikov fractional derivative [1, 35]. So the Grünwald–Letnikov operator can be used to approximate the Riemann–Liouville derivative.

Define the function space as follows:

where \(F[u(x,t)](\omega)=\int\mathrm {e}^{i\omega x}u(x,t)\,dx\) denotes the Fourier transform of \(u\) with respect to \(x\). Then the following results can be obtained.

Lemma 2.2

For \(0 \leq n-1<\xi(x,t)< n\), if \(u(x,t)\in\Theta^{1+\xi}\) and \({}^{R }_{0}D^{\xi(x,t)}_{x}u(x,t), {}^{R }_{x}D^{\xi (x,t)}_{L}u(x,t)\in C^{1}(\Omega)\), then we can obtain the “standard” Grünwald approximation

and the “shifted” Grünwald approximation

It was shown in [34] that, when \(\beta\in(1,2)\), a scheme based on the “standard” Grünwald formula is unconditionally unstable. We therefore adopt the “shifted” Grünwald formula to approximate the space fractional derivative \(_{x}R^{\beta(x,t)}u(x,t)\) and the “standard” Grünwald formula to approximate the space fractional derivative \(_{x}R^{\gamma(x,t)}u(x,t)\).
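The first-order accuracy of both formulas can be observed on a simple test function. The sketch below assumes a constant order, the left-sided operator only, and \(u(x)=x^{3}\), whose left Riemann–Liouville derivative is \(\frac{\Gamma(4)}{\Gamma(4-\zeta)}x^{3-\zeta}\); the function names and the test setup are illustrative choices, not taken from the paper.

```python
import math

def grunwald_weights(zeta, n):
    """g_0 = 1, g_j = g_{j-1} * (j - 1 - zeta) / j for j = 1, ..., n."""
    g = [1.0]
    for j in range(1, n + 1):
        g.append(g[-1] * (j - 1 - zeta) / j)
    return g

def left_grunwald(u, i, h, zeta, shift):
    """Approximate the left Riemann-Liouville derivative of order zeta at x_i = i*h.

    shift = 0 gives the standard formula, shift = 1 the shifted one; the sum
    stops at the left boundary x_0 = 0.
    """
    n = i + shift
    g = grunwald_weights(zeta, n)
    return h ** (-zeta) * sum(g[j] * u((i - j + shift) * h) for j in range(n + 1))

def exact_rl_cubic(x, zeta):
    """Left Riemann-Liouville derivative of x^3: Gamma(4)/Gamma(4-zeta) * x^(3-zeta)."""
    return math.gamma(4) / math.gamma(4 - zeta) * x ** (3 - zeta)

def errors_at_half(zeta, shift, ms=(50, 100, 200)):
    """Absolute errors at x = 0.5 for u(x) = x^3 on grids with m cells, h = 1/m."""
    out = []
    for m in ms:
        h = 1.0 / m
        approx = left_grunwald(lambda x: x ** 3, m // 2, h, zeta, shift)
        out.append(abs(approx - exact_rl_cubic(0.5, zeta)))
    return out

print(errors_at_half(1.8, shift=1))  # shifted formula, order in (1, 2)
print(errors_at_half(0.8, shift=0))  # standard formula, order in (0, 1)
```

Halving \(h\) should roughly halve each error, in line with the \(O(h)\) accuracy of both formulas. The experiment says nothing about stability, which is the actual reason for choosing the shifted formula when the order lies in \((1,2)\).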

At the end of this section, we give the following discretization scheme for Eqs. (1)–(3):

This is a linear implicit scheme: at each time level, a system of linear algebraic equations must be solved. We now rewrite scheme (4) in vector form. To this end, we introduce the following notation.

Let \(b^{k+1}_{i}=\tau^{\alpha^{k+1}_{0i}}\Gamma(2-\alpha^{k+1}_{0i})\) and

\(E^{k+1}_{i}=(M^{k+1}_{i,0})^{-1}b^{k+1}_{i}P^{k+1}_{i}, F^{k+1}_{i}=(M^{k+1}_{i,0})^{-1}b^{k+1}_{i}Q^{k+1}_{i}, H^{k+1}_{i}=(M^{k+1}_{i,0})^{-1}b^{k+1}_{i}c^{k+1}_{i}\).

For \(i=1,2,\ldots, m-1\), \(j=1,2,\ldots, m-1\), \(k=0,1,2,\ldots, n \),

For \(i=1,2,\ldots, m-1, k=1,2,\ldots, n \),

Denote \(A^{k}=(A^{k}_{ij})\), \(B^{k}=(B_{1}^{k},B_{2}^{k},\ldots, B_{m-1}^{k})^{T} \), \(V^{k}=(v^{k}_{1}, v^{k}_{2},\ldots, v^{k}_{m-1})^{T} \), \(\phi=(\phi_{1}, \phi_{2}, \ldots, \phi_{m-1})^{T}\).

Therefore, the discrete scheme (4) can be expressed in the following vector form:

3 Solvability, stability and convergence

In this section, we consider the solvability, stability and convergence of the discrete scheme (4) or (8). For this purpose, we give the following two lemmas.

Lemma 3.1

Suppose \(0<\underline{\alpha}\leq\alpha_{s}(x,t)\leq\overline{\alpha }<1\ (s=0,1,2,\ldots,S)\), and let \(M^{k}_{i,j}\) be given by (5). Then

for \(k=1,2,\ldots, n \).

Proof

Let \(f(x)=(x+1)^{1-\alpha(x_{i},t_{k})}-{x}^{1-\alpha(x_{i},t_{k})}\). Then we have

so the function \(f(x)\) is strictly decreasing. Therefore, for \(j>0\), we have \(f(j+1)< f(j)\), namely

It is easy to see that \(M^{k}_{i,0}>M^{k}_{i,1}\). Moreover, for \(j=1, \ldots , m-1\), we have

In addition, noting that \((M^{k}_{i,0})^{-1}M^{k}_{i,0}=1\), we can obtain the second formula of the lemma. The proof is completed. □
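The monotonicity used in this proof is easy to check numerically. The sketch below tests only the single-term weights \(G_{j}=(j+1)^{1-\alpha}-j^{1-\alpha}\) for a constant \(\alpha\); it is an assumption that the multi-term coefficients \(M^{k}_{i,j}\) inherit the same ordering as nonnegative combinations of such weights. The function names are hypothetical.

```python
def l1_weights(alpha, k):
    """G_j = (j+1)^(1-alpha) - j^(1-alpha) for j = 0, ..., k."""
    return [(j + 1) ** (1 - alpha) - j ** (1 - alpha) for j in range(k + 1)]

def check_l1_weights(alpha, k=50):
    """Return (is strictly decreasing and positive, telescoped sum of the weights)."""
    G = l1_weights(alpha, k)
    decreasing = all(G[j] > G[j + 1] > 0 for j in range(k))
    # Telescoping identity: sum_{j=0}^{k-1} (G_j - G_{j+1}) + G_k = G_0 = 1.
    total = sum(G[j] - G[j + 1] for j in range(k)) + G[k]
    return decreasing, total

for alpha in (0.1, 0.5, 0.9):
    print(alpha, check_l1_weights(alpha))
```

The telescoped sum equals \(G_{0}=1\), which is what makes the right-hand side of the time-stepping formula a convex combination of earlier time levels.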

Lemma 3.2

([23])

For \(1<\underline{\beta}\leq\beta(x,t)\leq\overline{\beta}<2, 0<\underline{\gamma}\leq\gamma(x,t)\leq\overline{\gamma}<1\), the coefficients \(g^{j}_{\beta^{k}_{i}}\) and \(g^{j}_{\gamma^{k}_{i}}\) \((i=1,2,\ldots, m, k=1,2,\ldots, n)\) satisfy
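For orientation, the properties usually stated for Grünwald coefficients in the literature are: for an order in \((1,2)\), \(g^{0}=1\), \(g^{1}=-\beta<0\), \(g^{j}>0\) for \(j\geq2\), and every partial sum \(\sum_{j=0}^{l}g^{j}\) with \(l\geq1\) is negative; for an order in \((0,1)\), \(g^{0}=1\), \(g^{j}<0\) for \(j\geq1\), and every partial sum is positive. Whether this list matches the lemma term by term is an assumption; the sketch below only checks these standard facts numerically, with hypothetical function names.

```python
from itertools import accumulate

def grunwald_weights(zeta, n):
    """g_0 = 1, g_j = g_{j-1} * (j - 1 - zeta) / j for j = 1, ..., n."""
    g = [1.0]
    for j in range(1, n + 1):
        g.append(g[-1] * (j - 1 - zeta) / j)
    return g

def check_order_in_1_2(beta, n=200):
    """Sign pattern and negative partial sums for an order in (1, 2)."""
    g = grunwald_weights(beta, n)
    partial = list(accumulate(g))
    return (g[0] == 1.0 and g[1] == -beta
            and all(gj > 0 for gj in g[2:])
            and all(s < 0 for s in partial[1:]))

def check_order_in_0_1(gamma, n=200):
    """Sign pattern and positive partial sums for an order in (0, 1)."""
    g = grunwald_weights(gamma, n)
    partial = list(accumulate(g))
    return (g[0] == 1.0
            and all(gj < 0 for gj in g[1:])
            and all(s > 0 for s in partial))

print(all(check_order_in_1_2(b) for b in (1.2, 1.5, 1.8)))
print(all(check_order_in_0_1(c) for c in (0.2, 0.5, 0.8)))
```

The partial-sum signs follow from the identity \(\sum_{j=0}^{l}g^{j}_{\zeta}=g^{l}_{\zeta-1}\), which holds for the weights defined in Sect. 2.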

3.1 Solvability analysis

Using Lemma 3.2 and a simple computation yields

Namely, matrix A is strictly diagonally dominant with positive diagonal terms and nonpositive off-diagonal terms. Therefore, matrix A is invertible. So the following theorem can be obtained.
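The diagonal dominance can be checked on a simplified model matrix. The sketch assumes constant orders and coefficients, left-sided operators only (\(\rho=1\), \(\sigma=0\)), and a matrix of the form \((1+H)I-E\,h^{-\beta}S_{\beta}-F\,h^{-\gamma}S_{\gamma}\) with \(E>0\) and \(F<0\), matching the signs of \(P\) and \(Q\); the exact entries of \(A\) in the scheme may differ, and the function names are hypothetical.

```python
def grunwald_weights(zeta, n):
    """g_0 = 1, g_j = g_{j-1} * (j - 1 - zeta) / j for j = 1, ..., n."""
    g = [1.0]
    for j in range(1, n + 1):
        g.append(g[-1] * (j - 1 - zeta) / j)
    return g

def model_matrix(m, h, beta, gamma, E, F, H=0.0):
    """Model matrix for interior nodes i = 1, ..., m-1 (left-sided terms only)."""
    gb = grunwald_weights(beta, m)
    gg = grunwald_weights(gamma, m)
    A = [[0.0] * (m - 1) for _ in range(m - 1)]
    for i in range(1, m):
        for l in range(1, m):
            a = (1.0 + H) if i == l else 0.0
            if l <= i + 1:   # shifted formula reaches one node to the right of x_i
                a -= E * h ** (-beta) * gb[i - l + 1]
            if l <= i:       # standard formula uses nodes up to x_i
                a -= F * h ** (-gamma) * gg[i - l]
            A[i - 1][l - 1] = a
    return A

def is_strictly_diagonally_dominant(A):
    """True if |a_ii| exceeds the sum of |a_ij|, j != i, in every row."""
    return all(abs(row[i]) > sum(abs(v) for j, v in enumerate(row) if j != i)
               for i, row in enumerate(A))

A = model_matrix(m=20, h=0.05, beta=1.8, gamma=0.8, E=0.03, F=-0.02)
print(is_strictly_diagonally_dominant(A))
```

In this model the diagonal is \(1+H+E\,h^{-\beta}\beta+|F|\,h^{-\gamma}\), while the off-diagonal magnitudes sum to less than \(E\,h^{-\beta}\beta+|F|\,h^{-\gamma}\) by the coefficient properties of Lemma 3.2, so the dominance holds for any \(h\).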

Theorem 3.1

Scheme (8) has a unique solution.

3.2 Stability analysis

Theorem 3.2

Suppose \(v^{k}_{i}\) and \(\widetilde{v}^{k}_{i}\) are solutions of scheme (4) with the initial values \(V^{0}\) and \(\widetilde{V}^{0}\), respectively. Then

where \(\Vert V^{k}-\widetilde{V}^{k} \Vert _{\infty}=\max_{1\leq i \leq m-1} \vert v^{k}_{i}-\widetilde{v}^{k}_{i} \vert \).

Proof

Denote \(X^{k}=V^{k}-\widetilde{V}^{k}=(\varepsilon^{k}_{1}, \ldots, \varepsilon ^{k}_{m-1})^{T}\), then

where the component of \(B^{k+1}\) is

We now prove the theorem by mathematical induction.

For \(k=1\), suppose \(\Vert X^{1} \Vert _{\infty}= \vert \varepsilon ^{1}_{l} \vert \). Considering the lth equation of (10), we have

namely

Due to (9) and (11), we can obtain

Assume that \(\Vert X^{j} \Vert _{\infty}\leq \Vert X^{0} \Vert _{\infty}\ (j=1,\ldots, k)\), similar to the case of \(k=1\). Suppose \(\Vert X^{k+1} \Vert _{\infty}= \vert \varepsilon ^{k+1}_{l} \vert \), according to (9), (11), and Lemma 3.1, we have

Due to the principle of mathematical induction, the proof is completed. □
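The bound \(\Vert X^{k} \Vert _{\infty}\leq \Vert X^{0} \Vert _{\infty}\) can be observed on a reduced model problem. The sketch assumes a single-term equation with constant orders \(\alpha\), \(\beta\), a constant coefficient \(K>0\), the left-sided shifted Grünwald operator only, and homogeneous boundary values; it is a simplified stand-in for scheme (4), and all names are hypothetical.

```python
import math

def grunwald_weights(zeta, n):
    """g_0 = 1, g_j = g_{j-1} * (j - 1 - zeta) / j for j = 1, ..., n."""
    g = [1.0]
    for j in range(1, n + 1):
        g.append(g[-1] * (j - 1 - zeta) / j)
    return g

def solve(A, b):
    """Gaussian elimination without pivoting (adequate for strictly diagonally dominant A)."""
    n = len(b)
    A = [row[:] for row in A]
    b = b[:]
    for c in range(n):
        for r in range(c + 1, n):
            f = A[r][c] / A[c][c]
            if f != 0.0:
                for j in range(c, n):
                    A[r][j] -= f * A[c][j]
                b[r] -= f * b[c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(A[r][j] * x[j] for j in range(r + 1, n))
        x[r] = (b[r] - s) / A[r][r]
    return x

def evolve(X0, alpha, beta, K, h, tau, steps):
    """March the homogeneous model scheme and return [X^0, X^1, ..., X^steps]."""
    m1 = len(X0)  # number of interior nodes, m - 1
    g = grunwald_weights(beta, m1 + 1)
    r = K * tau ** alpha * math.gamma(2 - alpha) * h ** (-beta)
    A = [[(1.0 if i == l else 0.0) - (r * g[i - l + 1] if l <= i + 1 else 0.0)
          for l in range(m1)] for i in range(m1)]
    G = [(j + 1) ** (1 - alpha) - j ** (1 - alpha) for j in range(steps + 1)]
    hist = [X0[:]]
    for k in range(steps):
        # rhs_i = sum_{j=0}^{k-1} (G_j - G_{j+1}) X_i^{k-j} + G_k X_i^0
        rhs = [G[k] * hist[0][i]
               + sum((G[j] - G[j + 1]) * hist[k - j][i] for j in range(k))
               for i in range(m1)]
        hist.append(solve(A, rhs))
    return hist

X0 = [math.sin(3 * i) for i in range(1, 20)]  # arbitrary initial perturbation
hist = evolve(X0, alpha=0.8, beta=1.8, K=1.0, h=0.05, tau=0.1, steps=10)
norms = [max(abs(v) for v in X) for X in hist]
```

In this model the right-hand side is a convex combination of earlier levels and the matrix has nonpositive off-diagonal entries with row sums of at least 1, so the maximum norm cannot grow, whatever the ratio of \(\tau\) to \(h\).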

3.3 Convergence analysis

Theorem 3.3

Suppose that problem (1) has a smooth solution \(u(x, t)\). Let \(v^{k}_{i}\) be the numerical solution computed by (4). Then there is a positive constant C independent of τ and h such that

Proof

Denote \(Y^{k}=U^{k}-V^{k}=(\eta^{k}_{1}, \ldots, \eta^{k}_{m-1})^{T}\). Then, according to (1) and (4), we obtain the following error equation:

where

We rewrite it in the following vector form:

where the component of \(B^{k+1}\) is

similar to the proof of Theorem 3.2.

For \(k=1\), suppose \(\Vert Y^{1} \Vert _{\infty}= \vert \eta^{1}_{l} \vert \). From Lemma 2.1, Lemma 2.2, the definitions of \(M_{i,j}^{k}, b_{i}^{k}\) and (13), we have

Assume that \(\Vert Y^{j} \Vert _{\infty}\leq C (\tau+h)\ (j=1,\ldots,k)\). Suppose \(\Vert Y^{k+1} \Vert _{\infty}= \vert \eta^{k+1}_{l} \vert \). Similar to the case of \(k=1\), we have

By the definitions of \(M^{k+1}_{i,k}\) and \(b_{i}^{k+1}\), we have

namely

Substituting the above inequality into (14), we have

Due to the principle of mathematical induction, the theorem is proved. □

4 Numerical examples

In this section, three examples are presented to illustrate the practical application of our numerical method. Let \(V^{k}=(v_{0}^{k},\ldots,v_{m}^{k})\), where \(v^{k}_{i}\) is the approximate solution at \(x_{i}=i h\), \(i=0,1,\ldots, m\), and time \(t_{k}\), and let \(U^{k}=(u_{0}^{k},\ldots, u_{m}^{k})\), where \(u_{i}^{k}\) is the exact solution. The error is measured in the \(l_{\infty}\) norm:
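A minimal sketch of this error measure follows, assuming the usual definition \(\max_{0\leq i\leq m}\vert u^{k}_{i}-v^{k}_{i}\vert\). The observed-order helper is an addition for convenience, and the perturbed vector `V` is synthetic data used only to exercise the functions; it is not a result of the scheme.

```python
import math

def max_error(U, V):
    """l_infinity distance between the exact values U and the numerical values V."""
    return max(abs(u - v) for u, v in zip(U, V))

def observed_order(err_coarse, err_fine, ratio=2.0):
    """Estimated convergence order when the step sizes are reduced by `ratio`."""
    return math.log(err_coarse / err_fine) / math.log(ratio)

# Exact solution of Example 4.1 at t = 1 on a grid with h = 0.1.
h = 0.1
U = [5 * (1.0 ** 2 + 1) * (i * h) ** 2 * (1 - i * h) for i in range(11)]
V = [u + 0.5 * h for u in U]  # synthetic perturbation, not computed by the scheme
print(max_error(U, V))
```

For a first-order scheme such as the one analyzed in Sect. 3, halving both \(\tau\) and \(h\) should give an observed order close to 1.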

Example 4.1

Consider the following fractional differential equation:

where

We take \(\alpha(x,t)=0.8+0.01 \sin(5xt), \beta(x,t)=1.8+0.01x^{2}t^{2}, \gamma(x,t)=0.8+0.01x^{2}\sin t\), \(\rho=1, \sigma=0, \tau=h=0.1\). The above problem has the exact solution \(u(x,t)=5(t^{2}+1)x^{2}(1-x)\).

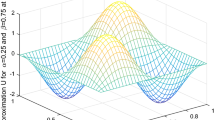

Table 1 lists the maximum errors between the exact solution and the numerical solution of problem (15) at \(T=1\). Figure 1 shows the exact and numerical solutions of problem (15) at \(t = 0\), \(t = 0.5\), and \(t = 1\); the numerical solution is in good agreement with the exact solution. Figure 2 shows three-dimensional plots of the numerical and exact solutions of problem (15) for \(T= 1\), which are again very similar. The results in Table 1 and in Figs. 1 and 2 indicate that the proposed method is efficient and gives numerical solutions in close agreement with the exact solution.

Figure 1. The solution behavior of (15) at \(t=0\), \(t=0.5\), \(t=1\)

Figure 2. Three-dimensional numerical solution (left) and the exact solution (right) of (15)

Example 4.2

Consider the following fractional differential equation:

where

We take \(\alpha(x,t)=0.5+0.01 \sin(5xt), \beta(x,t)=1.5+0.01x^{2}t^{2}, \gamma(x,t)=1\), \(\rho=1, \sigma=0, \tau=0.05, h=0.1\). The above problem has the exact solution \(u(x,t)=\frac{(t+1)x^{2}(8-x)}{80}\).

A comparison of the numerical solution of the proposed method with the exact solution of problem (16) is listed in Table 2 and shown in Fig. 3. The numerical solution is in close agreement with the exact solution.

Figure 3. The solution behavior of (16) at \(t=0\), \(t=0.5\), \(t=1\)

Example 4.3

Consider the following two-term variable-order (VO) fractional differential equation:

where

We take \(\alpha(x,t)=1-0.5e^{-xt}, \beta(x,t)=1.7+0.1e^{-\frac {x^{2}}{1000}-\frac{t}{50}-1}\), \(\rho=\sigma=\frac{1}{2},\tau=h=0.1\). The above problem has the exact solution \(u(x,t)=(1+t^{2})x^{2}(1-x)^{2}\).

Table 3 gives the numerical solution, the exact solution, and the absolute error for (17) at \(T=1\). Figure 4 shows the solution behavior of (17) at \(t=0.25\), \(t=0.75\), and \(t=1\). It can be seen that the numerical solution is in good agreement with the exact solution.

Figure 4. The solution behavior of (17) at \(t=0.25\), \(t=0.75\), \(t=1\)

5 Conclusion

In this paper, a finite difference scheme has been proposed to solve a multi-term time-space variable-order fractional diffusion equation. Its unconditional stability and its convergence of order \(O(\tau+h)\) have been proved by mathematical induction. Numerical examples are provided to show that the finite difference scheme is computationally efficient. The techniques used for the numerical scheme and its analysis can be applied to other variable-order fractional (in space and/or in time) partial differential equations.

References

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

Trujillo, J.J., Kilbas, A.A., Srivastava, H.M.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Ross, B., Miller, K.S.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Meerschaert, M.M., Benson, D.A., Wheatcraft, S.W.: Application of a fractional advection-dispersion equation. Water Resour. Res. 36, 1403–1412 (2000)

Hilfer, R.: Applications of Fractional Calculus in Physics. World Scientific, Singapore (1999)

Srivastava, V., Rai, K.N.: A multi-term fractional diffusion equation for oxygen delivery through a capillary to tissues. Math. Comput. Model. 51, 616–624 (2010)

Luchko, Y.: Initial-boundary-value problems for the generalized multi-term time-fractional diffusion equation. J. Math. Anal. Appl. 374, 538–548 (2011)

Liu, Y., Zhou, Z., Jin, B., Lazarov, R.: The Galerkin finite element method for a multi-term time-fractional diffusion equation. J. Comput. Phys. 281, 825–843 (2015)

McGough, R., Zhuang, P., Liu, Q., Liu, F., Meerschaert, M.M.: Numerical methods for solving the multi-term time fractional wave equations. Fract. Calc. Appl. Anal. 16, 9–25 (2013)

Anh, V., Turner, I., Ye, H., Liu, F.: Maximum principle and numerical method for the multi-term time-space Riesz–Caputo fractional differential equations. Appl. Math. Comput. 227, 531–540 (2014)

Bai, Y.R., Liu, Z.H., Zeng, S.D.: Maximum principles for multi-term space-time variable-order fractional diffusion equation and their applications. Fract. Calc. Appl. Anal. 19, 188–211 (2016)

Jia, X.Z., Du, D.H., Li, G.S., Sun, C.L.: Numerical solution to the multi-term time fractional diffusion equation in a finite domain. Numer. Math., Theory Methods Appl. 9, 337–357 (2016)

Sun, Z.Z., Gao, G.H., Alikhanov, A.A.: The temporal second order difference schemes based on the interpolation approximation for solving the time multi-term and distributed-order fractional sub-diffusion equations. J. Sci. Comput. 73, 93–121 (2017)

Coimbra, C.F.M.: Mechanics with variable-order differential operators. Ann. Phys. 12, 692–703 (2003)

Cowan, D.R., Cooper, G.R.J.: Filtering using variable order vertical derivatives. Comput. Geosci. 30, 455–459 (2004)

Tseng, C.C.: Design of variable and adaptive fractional order FIR differentiators. Signal Process. 86, 2554–2566 (2006)

Chen, Y., Sun, H., Chen, W.: Variable-order fractional differential operators in anomalous diffusion modeling. Physica A 388, 4586–4592 (2009)

Chen, J., Turner, I., Anh, V., Shen, S., Liu, F.: Numerical techniques for the variable order time fractional diffusion equation. Appl. Math. Comput. 218, 10861–10870 (2012)

Phanikumar, M.S., Meerschaert, M.M., Zhang, H., Liu, F.: A novel numerical method for the time variable fractional order mobile-immobile advection-dispersion model. Comput. Math. Appl. 66, 693–701 (2013)

Anh, V., Lin, R., Liu, F., Turner, I.: Stability and convergence of a new explicit finite-difference approximation for the variable-order nonlinear fractional diffusion equation. Appl. Math. Comput. 212, 435–445 (2009)

Anh, V., Turner, I., Zhuang, P., Liu, F.: Numerical methods for the variable-order fractional advection-diffusion equation with a nonlinear source term. SIAM J. Numer. Anal. 47, 1760–1781 (2009)

Almarwm, H.M., Sweilam, N.H., Khader, M.M.: Numerical studies for the variable-order nonlinear fractional wave equation. Fract. Calc. Appl. Anal. 15, 669–683 (2012)

Zhuang, P., Turner, I., Anh, V., Zhang, H., Liu, F.: Numerical analysis of a new space-time variable fractional order advection-dispersion equation. Appl. Math. Comput. 242, 541–550 (2014)

Anh, V., Turner, I., Chen, C.M., Liu, F.: Numerical schemes with high spatial accuracy for a variable-order anomalous subdiffusion equation. SIAM J. Sci. Comput. 32, 1740–1760 (2010)

Qiu, Y.N., Cao, J.X.: A compact finite difference scheme for variable order subdiffusion equation. Commun. Nonlinear Sci. Numer. Simul. 48, 140–149 (2017)

Karniadakis, G.E., Zhao, X., Sun, Z.Z.: Second-order approximations for variable order fractional derivatives: algorithms and applications. J. Comput. Phys. 293, 184–200 (2015)

Zaky, M.A., Bhrawy, A.H.: Numerical algorithm for the variable-order Caputo-fractional functional differential equation. Nonlinear Dyn. 85, 1818–1823 (2016)

Karniadakis, G.E., Zayernouri, M.: Fractional spectral collocation methods for linear and nonlinear variable order FPDEs. J. Comput. Phys. 293, 312–338 (2015)

Zaky, M.A., Bhrawy, A.H.: Highly accurate numerical schemes for multi-dimensional space variable-order fractional Schrödinger equations. Comput. Math. Appl. 73, 1100–1117 (2017)

Chen, Y.M., Wei, Y.Q., Liu, D.Y., Yu, H.: Numerical solution for a class of nonlinear variable order fractional differential equations with Legendre wavelets. Appl. Math. Lett. 46, 83–88 (2015)

Li, B.F., Sun, Y.N., Chen, Y.M., Li, L.Q.: Numerical solution for the variable order linear cable equation with Bernstein polynomials. Appl. Math. Comput. 238, 329–341 (2014)

Wu, B.Y., Li, X.Y.: A numerical technique for variable fractional functional boundary value problems. Appl. Math. Lett. 43, 108–113 (2015)

Wu, B.Y., Li, X.Y.: A new reproducing kernel method for variable order fractional boundary value problems for functional differential equations. J. Comput. Appl. Math. 311, 387–393 (2017)

Tadjeran, C., Meerschaert, M.M.: Finite difference approximations for fractional advection-dispersion flow equations. J. Comput. Appl. Math. 172, 65–77 (2004)

Marichev, O.I., Samko, S.G., Kilbas, A.A.: Fractional Integrals and Derivatives: Theory and Applications. Gordon and Breach, Amsterdam (1993) (Translation from the Russian)

Acknowledgements

The work was supported by the National Natural Science Foundation of China (Nos. 11672011, 11272024).

Author information

Contributions

TX wrote the first draft and SL corrected and improved the final version. Both authors read and approved the final draft.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xu, T., Lü, S., Chen, W. et al. Finite difference scheme for multi-term variable-order fractional diffusion equation. Adv Differ Equ 2018, 103 (2018). https://doi.org/10.1186/s13662-018-1544-8
