Abstract

In this paper, we consider the nonergodic Ornstein-Uhlenbeck process driven by the weighted fractional Brownian motion \(B_{t}^{a,b}\) with parameters a and b. Our goal is to estimate the unknown parameter \(\theta>0\) based on discrete observations of the process. We construct two estimators \(\hat{\theta}_{n}\) and \(\check{\theta}_{n}\) of θ and show their strong consistency and rate consistency.

1 Introduction

The fractional Brownian motion (fBm for short) has been widely applied in hydrology, traffic volume prediction, estimation of the Hurst exponent of seismic signals, finance, and various other areas owing to properties such as long-range dependence, self-similarity, and stationarity of its increments. However, fBm is not sufficient for some random phenomena, so many researchers have chosen more general stochastic processes to construct stochastic models. For instance, Azzaoui and Clavier [1] studied the impulse response of the 60-GHz channel by using α-stable processes. Lin and Lin [2] studied the pricing of debt value under stochastic interest rates by using Lévy processes. Meanwhile, the weighted fractional Brownian motion (wfBm), which generalizes the fBm, can also be used for modeling.

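We recall that the wfBm \(B_{t}^{a,b}\) with parameters \((a,b)\) such that \(a>-1\), \(\vert b\vert <1\), and \(\vert b\vert < a+1\) is a centered self-similar Gaussian process, with long- or short-range dependence depending on the parameters, and with covariance function

$$\begin{aligned} R^{a,b}(t,s):=E \bigl[B_{t}^{a,b}B_{s}^{a,b} \bigr]= \int_{0}^{s\wedge t}u^{a} \bigl[(t-u)^{b}+(s-u)^{b} \bigr]\,du,\quad s,t\geq0. \end{aligned}$$(1)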

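When \(a=b=0\), \(B_{t}^{a,b} \) is, up to a multiplicative constant, the standard Brownian motion \(B_{t}\). When \(a=0\), we have

$$\begin{aligned} E \bigl[B_{t}^{0,b}B_{s}^{0,b} \bigr]=\frac{1}{b+1} \bigl[t^{b+1}+s^{b+1}-\vert t-s\vert ^{b+1} \bigr], \end{aligned}$$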

which is, up to the constant factor \(\frac{2}{b+1}\), the covariance function of the fBm with Hurst index \(\frac{b+1}{2}\) when \(-1< b<1\). Therefore, the wfBm is an extension of the fBm. It is known that the process \(B_{t}^{a,b}\) introduced in Bojdecki et al. [3] is neither a semimartingale nor a Markov process unless \(a=0\) and \(b=0\), so many standard tools of stochastic analysis do not apply to \(B_{t}^{a,b}\). More studies on the wfBm can be found in Bojdecki et al. [4, 5], Garzón [6], Shen, Yan, and Cui [7], Yan, Wang, and Jing [8], and the references therein.
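The reduction to the fBm case can be checked numerically. The following is a minimal sketch, assuming the wfBm covariance \(\int_{0}^{s\wedge t}u^{a}[(t-u)^{b}+(s-u)^{b}]\,du\) of Bojdecki et al. [3] and, for simplicity, \(0\leq b<1\). The function names, the substitution \(w=u^{a+1}\), and the midpoint rule are illustrative choices and are not taken from the paper.

```python
import math


def wfbm_cov(t, s, a, b, n_steps=20000):
    """Approximate R(t, s) = int_0^{min(s,t)} u^a [(t-u)^b + (s-u)^b] du.

    Assumes a > -1 and 0 <= b < 1. The substitution w = u^(a+1) removes the
    singularity of u^a at the origin; a midpoint rule is then applied in w.
    """
    m = min(t, s)
    if m <= 0.0:
        return 0.0
    w_max = m ** (a + 1.0)
    h = w_max / n_steps
    total = 0.0
    for k in range(n_steps):
        w = (k + 0.5) * h               # midpoint in the w variable
        u = w ** (1.0 / (a + 1.0))      # back to the original variable
        total += (t - u) ** b + (s - u) ** b
    # u^a du = dw / (a + 1)
    return total * h / (a + 1.0)


def fbm_like_cov(t, s, b):
    """Closed form of R(t, s) in the special case a = 0."""
    return (t ** (b + 1) + s ** (b + 1) - abs(t - s) ** (b + 1)) / (b + 1)
```

For example, `wfbm_cov(2.0, 1.0, 0.0, 0.5)` and `fbm_like_cov(2.0, 1.0, 0.5)` should agree to several decimal places, and `wfbm_cov(t, t, a, b)` approximates \(2t^{a+b+1}B(a+1,b+1)\), where B is the beta function.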

Kleptsyna and Le Breton [9] first studied the maximum likelihood estimator (MLE) of the fractional Ornstein-Uhlenbeck (fOU) process \(X_{t}\) and proved the convergence of the MLE. Hu and Nualart [10] studied the drift parameter estimation by a least squares approach and obtained the consistency and the asymptotic properties of the estimator based on continuous observations \(\{X_{t}, t \in[0, T]\}\). In the ergodic case, Azmoodeh and Morlanes [11], Azmoodeh and Viitasaari [12], Hu and Song [13], and Jiang and Dong [14] studied the statistical inference for several models. In addition, Belfadli, Es-Sebaiy, and Ouknine [15], El Machkouri, Es-Sebaiy, and Ouknine [16], Es-Sebaiy and Ndiaye [17], and Shen, Yin, and Yan [18] studied the parameter estimation in the nonergodic case for fOU processes. Liu and Song [19] considered minimum distance estimation for fOU processes. Xiao et al. [20] considered the fOU processes with a discretization procedure of an integral transform.

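Motivated by these studies, the present paper is concerned with the parameter estimation problem for the nonergodic Ornstein-Uhlenbeck (O-U) process driven by the wfBm

$$\begin{aligned} X_{0}=0,\qquad dX_{t}=\theta X_{t}\,dt+dB_{t}^{a,b},\quad t\geq0, \end{aligned}$$(2)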

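where \(\theta>0\) is an unknown parameter. Hu and Nualart [10] used the least squares estimation (LSE) technique to define the estimator of the unknown parameter as follows:

$$\begin{aligned} \widehat{\theta}_{t}=\frac{\int^{t}_{0}X_{s}\,dX_{s}}{\int^{t}_{0}X^{2}_{s}\,ds},\quad t>0, \end{aligned}$$(3)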

where the integral \(\int^{t}_{0}X_{s}\,dX_{s}\) is interpreted in the Young sense (see Young [21]). In fact, \(\widehat{\theta }_{t}=\frac{\int^{t}_{0}X_{s}\,dX_{s}}{\int^{t}_{0}X^{2}_{s}\,ds}\) formally minimizes the quadratic function of θ

$$\begin{aligned} \int_{0}^{t}\vert \dot{X}_{s}-\theta X_{s}\vert ^{2}\,ds. \end{aligned}$$

Note that ElOnsy, Es-Sebaiy, and Ndiaye [22] studied parameter estimation for nonergodic fOU processes of the second kind with discrete observations. They proved the strong consistency of the estimators and obtained their rate consistency. In this paper, we study the parameter estimation problem for nonergodic O-U processes driven by the weighted fractional Brownian motion with discrete observations. We construct two estimators, prove their strong consistency, and obtain their rate consistency. As in ElOnsy, Es-Sebaiy, and Ndiaye [22], we obtain both the consistency and the rate consistency of the estimators; the difference lies in the driving process.

From a practical standpoint, it is more realistic and interesting to consider asymptotic properties of estimators based on discrete observations of \(X_{t}\). We suppose that the Ornstein-Uhlenbeck process \(X_{t}\) is observed at equidistant times with step size \(\Delta_{n}\): \(t_{i}=i\Delta_{n}\), \(i=0,1,\dots,n\), so that \(t_{n}=T_{n}=n\Delta_{n}\), where \(T_{n}\) denotes the length of the ‘observation window.’ The goal is to construct two estimators for θ that converge at rate \(\sqrt{n\Delta_{n}}\) based on the observational data \(X_{t_{i}}\), \(i=0,1,\dots,n\).

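Since \(\int_{0}^{t} X_{s}\,dX_{s}\) is a Young integral (in the pathwise sense) and \(X_{0}=0\), we have \(\int_{0}^{t}X_{s}\,dX_{s}=\frac{1}{2}X_{t}^{2}\). Thus, we obtain

$$\begin{aligned} \tilde{\theta}_{T_{n}}=\frac{\int_{0}^{T_{n}}X_{s}\,dX_{s}}{\int_{0}^{T_{n}}X_{s}^{2}\,ds}=\frac{X_{T_{n}}^{2}}{2\int_{0}^{T_{n}}X_{s}^{2}\,ds}. \end{aligned}$$(4)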

In the following, we construct two different discrete versions of \(\tilde{{\theta}}_{T_{n}}\). In (4), let us replace \(\int_{0}^{T_{n}}X_{s}\,dX_{s}\) by \(\sum_{i=1}^{n}X_{t_{i-1}}(X_{t_{i}}-X_{t_{i-1}})\) and \(\int _{0}^{T_{n}}X_{s}^{2}\,ds\) by \(\Delta_{n}\sum_{i=1}^{n}X_{t_{i-1}}^{2}\).

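Then the estimators of θ are as follows:

$$\begin{aligned} \hat{\theta}_{n}=\frac{\sum_{i=1}^{n}X_{t_{i-1}}(X_{t_{i}}-X_{t_{i-1}})}{\Delta_{n}\sum_{i=1}^{n}X_{t_{i-1}}^{2}} \end{aligned}$$(5)

and

$$\begin{aligned} \check{\theta}_{n}=\frac{X_{T_{n}}^{2}}{2\Delta_{n}\sum_{i=1}^{n}X_{t_{i-1}}^{2}}. \end{aligned}$$(6)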

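Denote \(S_{n}=\Delta_{n}\sum_{i=1}^{n}X_{t_{i-1}}^{2}\). Then (5) and (6) can be rewritten respectively as

$$\begin{aligned} \hat{\theta}_{n}=\frac{\sum_{i=1}^{n}X_{t_{i-1}}(X_{t_{i}}-X_{t_{i-1}})}{S_{n}} \end{aligned}$$(7)

and

$$\begin{aligned} \check{\theta}_{n}=\frac{X_{T_{n}}^{2}}{2S_{n}}. \end{aligned}$$(8)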

The paper is organized as follows. In Section 2, some preliminaries for the wfBm \(B_{t}^{a,b}\) and the main lemmas are provided. In Section 3, the strong consistency of \(\hat{\theta}_{n}\) and \(\check{\theta}_{n}\) is proved. In Section 4, we show the rate consistency of \(\hat{\theta}_{n}\) and \(\check{\theta}_{n}\). Finally, we present simulations to show the performance of the two estimators \(\hat{\theta }_{n}\) and \(\check{\theta}_{n}\).
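Both estimators need only the discrete observations: \(\hat{\theta}_{n}\) is the ratio of \(\sum_{i=1}^{n}X_{t_{i-1}}(X_{t_{i}}-X_{t_{i-1}})\) to \(S_{n}\), and \(\check{\theta}_{n}\) is \(X_{T_{n}}^{2}/(2S_{n})\). A minimal sketch of this computation follows; the function name `estimators` and the list-based input format are assumptions made for illustration, not part of the paper.

```python
def estimators(x, delta):
    """Return (theta_hat, theta_check) from equidistant observations.

    x[i] is the observation at time t_i = i * delta for i = 0, ..., n
    (so len(x) = n + 1), and delta is the step size Delta_n.
    """
    n = len(x) - 1
    if n < 1:
        raise ValueError("need at least two observations")
    # S_n = Delta_n * sum_{i=1}^n X_{t_{i-1}}^2
    s_n = delta * sum(x[i - 1] ** 2 for i in range(1, n + 1))
    if s_n == 0.0:
        raise ValueError("S_n is zero; the estimators are undefined")
    # Discrete counterpart of the integral of X dX
    increments_sum = sum(x[i - 1] * (x[i] - x[i - 1]) for i in range(1, n + 1))
    theta_hat = increments_sum / s_n
    theta_check = x[n] ** 2 / (2.0 * s_n)
    return theta_hat, theta_check
```

As a sanity check on a hypothetical noise-free path \(x_{i}=e^{\theta i\Delta_{n}}\), the first estimator returns \((e^{\theta\Delta_{n}}-1)/\Delta_{n}\), which is close to θ when \(\Delta_{n}\) is small.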

2 Preliminaries and main lemmas

Let \(B_{t}^{a,b}\) be a wfBm defined on a complete probability space \((\Omega,\mathcal{F},P)\) with parameters a, b (\(a>-1\), \(0< b<1\), \(b< a+1\)). A stochastic calculus with respect to the wfBm \(B_{t}^{a,b}\) can be developed by means of the Malliavin calculus; see Nualart [23] and the references therein. Here we review the basic concepts and some results of the Malliavin calculus that we need.

The crucial ingredient is the canonical Hilbert space \(\mathcal{H}\) (also called the reproducing kernel Hilbert space) associated with the wfBm \(B_{t}^{a,b}\) defined as the closure of the linear space \(\mathcal {E}\) generated by the indicator functions \(\{\mathbf{1}_{[0,t]}, t\in [0,T]\}\) with respect to the scalar product

$$\begin{aligned} \langle\mathbf{1}_{[0,t]},\mathbf{1}_{[0,s]}\rangle_{\mathcal{H}}=E \bigl[B_{t}^{a,b}B_{s}^{a,b} \bigr]. \end{aligned}$$

The mapping \(\mathcal{E} \ni\varphi \mapsto B^{a,b}(\varphi)=\int_{0}^{T} \varphi(s)\,dB_{s}^{a,b}\) (\(B^{a,b}(\varphi)\) is a Gaussian process on \(\mathcal{H}\), and \(E[B^{a,b}(\varphi)B^{a,b}(\psi)]=\langle\varphi, \psi\rangle_{\mathcal{H}}\) for all \(\varphi, \psi\in\mathcal{H}\)) is an isometry from the space \(\mathcal{E}\) to the Gaussian space generated by the wfBm \(B_{t}^{a,b}\), and it can be extended to the Hilbert space \(\mathcal{H}\).

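We can find a linear space of functions contained in \(\mathcal{H}\) in the following way. Let \(\vert {\mathcal{H}}\vert \) be the linear space of measurable functions φ on \([0, T]\) such that

$$\begin{aligned} \Vert \varphi \Vert _{\vert \mathcal{H}\vert }^{2}:= \int_{0}^{T} \int_{0}^{T}\bigl\vert \varphi(s)\bigr\vert \bigl\vert \varphi(r)\bigr\vert \phi(s,r)\,ds\,dr< \infty \end{aligned}$$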

with \(\phi(s,r)=b(s\wedge r)^{a}(s\vee r-s\wedge r)^{b-1}\).

It can be proved that \((\vert {\mathcal{H}}\vert , \langle \cdot, \cdot\rangle_{\vert {\mathcal{H}}\vert })\) is a Banach space (see Shen et al. [18] and Pipiras and Taqqu [24]). Moreover,

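Furthermore, for all \(\varphi, \psi\in{ \vert \mathcal{H}\vert }\) (see Shen et al. [18]),

$$\begin{aligned} E \biggl[ \int_{0}^{T}\varphi(s)\,dB_{s}^{a,b} \int_{0}^{T}\psi(s)\,dB_{s}^{a,b} \biggr]= \int_{0}^{T} \int_{0}^{T}\varphi(s)\psi(r)\phi(s,r)\,ds\,dr. \end{aligned}$$(10)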

For every \(n \geq1\), we denote by \(\mathcal{H}_{n}\) the nth Wiener chaos of \(B_{t}^{a,b}\). Namely, \(\mathcal{H}_{n}\) is the closed linear subspace of \(L^{2}(\Omega)\) generated by the random variables \(\{ H_{n}(B^{a,b}(f)),f \in\mathcal{H},{\Vert f \Vert _{\mathcal{H}}=1}\}\), where \(H_{n}\) is the nth Hermite polynomial. The mapping \(I_{n}(f^{\otimes n})=n!H_{n}(B^{a,b}(f))\) gives a linear isometry between the symmetric tensor product \(\mathcal {H}^{\odot n}\) and \(\mathcal{H}_{n}\), where \(\mathcal{H}^{\odot n}\) is equipped with the modified norm \(\Vert \cdot \Vert _{\mathcal{H}^{\odot n}}=\frac{1}{\sqrt {n!}}\Vert \cdot \Vert _{\mathcal{H}^{\otimes n}}\), \(\mathcal{H}^{\otimes n}\) denotes the tensor product, and \(f^{\otimes n}\in \mathcal{H}^{\otimes n}\). For every \(f,g \in\mathcal{H}^{\odot n}\), we have the following formula:

where \(I_{n}(f) \) is the multiple stochastic integral of a function f. It has the following property:

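Consequently, for any \(F\in\oplus_{i=1}^{q}\mathcal{H}_{i}\) and any \(p\geq2\), we have

$$\begin{aligned} \bigl(E\vert F\vert ^{p} \bigr)^{\frac{1}{p}}\leq c_{p,q} \bigl(E\vert F\vert ^{2} \bigr)^{\frac{1}{2}}. \end{aligned}$$(11)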

To prove the consistency of the estimators \(\hat{\theta}_{n}\) and \(\check{\theta}_{n}\), we will use the following lemmas.

Lemma 1

(Kloeden and Neuenkirch [25])

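Let \(\gamma>0\) and \(p_{0}\in\mathbf{N}\). Moreover, let \(\{Z_{n}\}_{n\in \mathbf{N}}\) be a sequence of random variables. Suppose that, for every \(p\geq p_{0}\), there exists a constant \(c_{p} >0\) such that, for all \(n\in\mathbf{N}\),

$$\begin{aligned} \bigl(E\vert Z_{n}\vert ^{p} \bigr)^{\frac{1}{p}}\leq c_{p} n^{-\gamma}. \end{aligned}$$

Then, for all \(\varepsilon>0\), there exists a random variable \(\eta _{\varepsilon}\) such that, for any \(n\in\mathbf{N}\),

$$\begin{aligned} \vert Z_{n}\vert \leq\eta_{\varepsilon} n^{-\gamma+\varepsilon}\quad \mbox{a.s.} \end{aligned}$$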

Moreover, \(E\vert \eta_{\varepsilon} \vert ^{p}<\infty\) for all \({p\geq1}\).

Lemma 2

Assume that \(-1< a<0\), \(0< b< a+1\), \(a+b>0\), and \(\Delta_{n}\rightarrow0\) and \(T_{n}\rightarrow\infty\) as \(n\rightarrow\infty\). Then, for any \(\alpha>0\),

Moreover, if \(n\Delta_{n}^{1+\beta}\rightarrow\infty\) for some \(\beta>0 \) as \(n\rightarrow\infty\), then

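In particular, as \(n\rightarrow\infty\),

$$\begin{aligned} e^{-2\theta T_{n}}S_{n}\rightarrow\frac{\eta_{\infty}^{2}}{2\theta}\quad \mbox{a.s.}, \end{aligned}$$(14)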

where \(\eta_{\infty}:=\int_{0}^{\infty}{e^{-\theta s}\, d{B_{s}^{a,b}}}\). Here the small-o notation \(o(\bullet)\) denotes a quantity that is negligible relative to • as \(n\rightarrow\infty\).

Proof

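First, it is easy to get the solution of SDE (2):

$$\begin{aligned} X_{t}=e^{\theta t} \int_{0}^{t}e^{-\theta s}\,dB_{s}^{a,b},\quad t\geq0. \end{aligned}$$(15)

Denote

$$\begin{aligned} \eta_{t}:= \int_{0}^{t}e^{-\theta s}\,dB_{s}^{a,b},\quad t\geq0. \end{aligned}$$

Then

$$\begin{aligned} X_{t}=e^{\theta t}\eta_{t}. \end{aligned}$$(16)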

Applying (16), we have

Next, we need to deal with the term \(e^{-2\theta T_{n}}S_{n}\):

Hence,

Because \(e^{2\theta\Delta_{n}}-1=2\theta\Delta_{n}+o(\Delta_{n})\), we have

where \(F_{n}:=\sum_{i=1}^{n-1}(\eta_{t_{i}}^{2}-\eta _{t_{i-1}}^{2})e^{2\theta(i-n)\Delta_{n}}\). From the equality

applying the Minkowski inequality, we have

By the Cauchy-Schwarz inequality we can rewrite (18) as

According to (10), we can calculate

Letting \(s=(u+i-1)\Delta_{n}\) and \(r=(v+i-1)\Delta_{n}\) (\(i=1,2,3,\dots ,n\)), we have

where \(M=2B(a+1,b+1)\). Hence,

where \(c(a,b,\theta)\) is a positive constant depending on a, b, θ, and its value may be different in different cases. Therefore, for any \(\alpha> 0\),

According to (11) and Lemma 1, there exists a random variable \(X_{\alpha}\) such that

for all \(n \in\mathbf{N}\). Moreover, \(E\vert X_{\alpha} \vert ^{p}< \infty\) for all \(p\geq1\). Therefore equality (12) is satisfied.

For the convergence (13), we assume that \(n\Delta _{n}^{1+\beta}\rightarrow\infty\) for some \(\beta>0 \) as \(n\rightarrow\infty\). Then

Note that \(T_{n}^{\alpha+\frac{2\alpha+1-(a+b)}{2\beta}}e^{-\theta T_{n}}\rightarrow0\) as \(n\rightarrow\infty\) and

Hence, using (12), we get the convergence (13).

For the convergence (14), note that \(\frac{\Delta_{n}}{e^{2\theta\Delta_{n}}-1} \rightarrow \frac{1}{2\theta}\) as \(n \rightarrow\infty\), and using \(\eta_{t} \rightarrow \eta_{\infty}: =\int_{0}^{\infty}e^{-\theta s}\,dB_{s}^{a,b}\) as \(t\rightarrow\infty\), we can easily obtain it by (13). □

3 Establishment and strong consistency of the estimators

In this section, we construct two estimators \(\hat{\theta}_{n}\) and \(\check{\theta}_{n}\) and prove the strong consistency.

Using \(t_{i}=i \Delta_{n}\) (\(i=1,2,\dots,n\)) and (16), we have

and

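Applying (22) and (23), we can write the estimator \(\hat {\theta}_{n}\) in (7) as

$$\begin{aligned} \hat{\theta}_{n}=\frac{e^{\theta\Delta_{n}}-1}{\Delta_{n}}+\frac{G_{n}}{S_{n}}, \end{aligned}$$(24)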

where \(G_{n}:=\sum_{i=1}^{n}e^{\theta t_{i}}(\eta_{t_{i}}-\eta _{t_{i-1}})X_{t_{i-1}}\).

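Substituting (16) into (8), we can write the other estimator \(\check{\theta}_{n}\) of θ as

$$\begin{aligned} \check{\theta}_{n}=\frac{\eta_{T_{n}}^{2}}{2e^{-2\theta T_{n}}S_{n}}. \end{aligned}$$(25)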

Theorem 1

Let \(-1< a<0\), \(0< b< a+1\), \(a+b>0\). Assume that \(\theta>0\) and that \(\Delta_{n}\rightarrow0 \) and \(n\Delta _{n}^{1+\beta}\rightarrow\infty\) for some \(\beta>0 \) as \(n\rightarrow\infty\). Then

and

Proof

To prove (26), we need to show that \(\frac {G_{n}}{S_{n}}\rightarrow0\) a.s. as \(n\rightarrow\infty\).

According to (14), it suffices to show that

By the Minkowski inequality and (20) we have

Noting that \(\Delta_{n}^{\frac{a+b-1}{2}}e^{-\theta T_{n}}=o(n^{-\alpha})\), \(\alpha>0\), as \(n \rightarrow\infty\), we have that, for any \(\gamma>0\),

and thus (29) can be written as follows:

By (11) and Lemma 1 there exists a random variable \(X_{\alpha}\) such that

for all \(n \in\mathbf{N}\). Moreover, \(E\vert X_{\alpha} \vert ^{p}< \infty\) for all \(p\geq1\). Hence, the convergence (28) is satisfied. Observe that \(\frac{e^{\theta\Delta_{n}}-1}{\Delta_{n}}\rightarrow \theta\) as \(n \rightarrow\infty\), and thus the convergence (26) is proved.

Now, it remains to show that the convergence (27) is satisfied.

Since \(\eta_{t} \rightarrow\eta_{\infty}: =\int_{0}^{\infty }e^{-\theta s}\,dB_{s}^{a,b}\) a.s. as \(t\rightarrow\infty\), the convergence (27) follows from (14) and (25). This completes the proof. □

4 Rate consistency of the estimators

In this section, we show that \(\sqrt{T_{n}}(\hat{\theta}_{n}-\theta)\) and \(\sqrt{T_{n}}(\check{\theta}_{n}-\theta)\) are bounded in probability.

Theorem 2

Let \(-1< a<0\), \(0< b< a+1\), \(a+b>0\). Assume that \(\Delta_{n}\rightarrow0 \), \(T_{n}\rightarrow\infty\), and \(n\Delta_{n}^{1+\beta }\rightarrow\infty\) for some \(\beta>0\) as \(n\rightarrow\infty\). Then:

(1) for any \(q\geq0\),

$$\begin{aligned} \Delta_{n}^{q} e^{\theta T_{n}}(\hat{ \theta}_{n}-\theta) \textit{ is not bounded in probability}; \end{aligned}$$(30)

(2) if \(n\Delta_{n}^{3}\rightarrow0\) as \(n\rightarrow\infty\), then

$$\begin{aligned} \sqrt{T_{n}}(\hat{\theta}_{n}-\theta) \textit{ is bounded in probability}. \end{aligned}$$(31)

Proof

(1) First, we consider the case \(q=1\).

According to (24), we have

Because \(e^{\theta\Delta_{n}}-1-\theta\Delta_{n}\sim\frac{\theta ^{2}}{2}\Delta_{n}^{2} \) as \(\Delta_{n}\rightarrow0 \), we have

Because \(n\Delta_{n}^{1+\beta}\rightarrow\infty\) as \(n\rightarrow \infty\) for some \(\beta>0\) and \(\frac{e^{\theta T_{n}}}{T_{n}^{\frac {2}{\beta}}} \rightarrow\infty\) as \(n\rightarrow\infty\), we obtain that, as \(n\rightarrow\infty\),

Applying (29), we have that, as \(n\rightarrow\infty\),

Therefore we get the result (30) when \(q=1\) by combining (14), (32), (33), and (34). Next, we consider the case of \(q>1\).

According to (32), we have

Because \(e^{\theta\Delta_{n}}-1-\theta\Delta_{n}\sim\frac{\theta ^{2}}{2}\Delta_{n}^{2} \) as \(\Delta_{n}\rightarrow0 \), we have

Because \(n\Delta_{n}^{1+\beta}\rightarrow\infty\) as \(n\rightarrow \infty\) for some \(\beta>0\) and \(\frac{e^{\theta T_{n}}}{T_{n}^{\frac {1+q}{\beta}}}\rightarrow\infty\) as \(n\rightarrow\infty\), we obtain that, as \(n\rightarrow\infty\),

Then by using (14), (34), (35), and (36) the result (30) is obtained when \(q>1\).

Finally, the case of \(0\leq q<1\) is a direct result. Thus the proof of (30) is completed.

(2) According to (32), we have

where \(o(\Delta_{n}^{2})\) is an infinitesimal of higher order than \(\Delta_{n}^{2}\) as \(n\rightarrow\infty\).

Because \(\Delta_{n}\rightarrow0\) and \(n\Delta_{n}^{3}\rightarrow 0\) as \(n\rightarrow\infty\), we have

Let us now show that, as \(n\rightarrow\infty\),

Applying (14), it remains to prove \(\sqrt{T_{n}}e^{-2\theta T_{n}}G_{n}\rightarrow0\) as \(n\rightarrow\infty\) in probability.

Using (37), we have

Then

The last convergence (39) follows from \(n\Delta_{n}^{1+\beta }\rightarrow\infty\) as \(n\rightarrow\infty\). Applying the Markov inequality, as \(n\rightarrow\infty\), we have

Thus, combining (14), (38), and (39), we deduce the conclusion (2). □
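The role of the condition \(n\Delta_{n}^{3}\rightarrow0\) can be illustrated numerically. The sketch below assumes the decomposition \(\hat{\theta}_{n}=\frac{e^{\theta\Delta_{n}}-1}{\Delta_{n}}+\frac{G_{n}}{S_{n}}\), which follows from \(X_{t}=e^{\theta t}\eta_{t}\), and looks only at its deterministic part. The design \(\Delta_{n}=n^{-1/2}\) and the value \(\theta=1\) are hypothetical choices made for illustration.

```python
import math


def lse_bias(theta, delta):
    """Deterministic part of theta_hat - theta: (e^{theta*delta} - 1)/delta - theta."""
    # expm1 avoids cancellation when theta * delta is small
    return math.expm1(theta * delta) / delta - theta


def scaled_bias(theta, n, delta):
    """sqrt(T_n) times the deterministic bias, where T_n = n * delta."""
    return math.sqrt(n * delta) * lse_bias(theta, delta)


theta = 1.0  # hypothetical true parameter
for n in (10**3, 10**5, 10**7):
    # Hypothetical design: delta -> 0, n*delta -> infinity, n*delta^3 -> 0,
    # and n*delta^(1+beta) -> infinity for any 0 < beta < 1.
    delta = n ** -0.5
    leading_term = 0.5 * theta**2 * math.sqrt(n * delta**3)
    print(n, delta, scaled_bias(theta, n, delta), leading_term)
```

Since \(e^{\theta\Delta_{n}}-1-\theta\Delta_{n}\sim\frac{\theta^{2}}{2}\Delta_{n}^{2}\), the scaled bias behaves like \(\frac{\theta^{2}}{2}\sqrt{n\Delta_{n}^{3}}\), so the printed values shrink as n grows under this design.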

Theorem 3

Let \(-1< a<0\), \(0< b< a+1\), \(a+b>0\). Assume that \(\Delta_{n}\rightarrow0 \), \(T_{n}\rightarrow\infty\), and \(n\Delta_{n}^{1+\beta}\rightarrow \infty\) for some \(\beta>0\) as \(n\rightarrow\infty\). Then:

(1) for any \(q\geq0\),

$$\begin{aligned} \Delta_{n}^{q} e^{\theta T_{n}}(\check{{ \theta }}_{n}-\theta) \textit{ is not bounded in probability}; \end{aligned}$$(40)

(2) if \(n\Delta_{n}^{3}\rightarrow0\) as \(n\rightarrow\infty\), then

$$\begin{aligned} \sqrt{T_{n}}(\check{{\theta}}_{n}-\theta) \textit{ is bounded in probability}. \end{aligned}$$(41)

Proof

(1) First, we shall prove the case of \(q=\frac{1}{2}\). By using (33) we calculate

Using the Minkowski and Cauchy inequalities, by (20) and (21) we have

The right-hand side of the last inequality converges to 0 a.s. as \(n\rightarrow\infty\).

Thus by the Markov inequality and (14) we have that, as \(n\rightarrow\infty\),

Furthermore,

Noting that \(n\Delta_{n}^{1+\beta}\rightarrow\infty\) as \(n\rightarrow\infty\) for some \(\beta>0\), \(\frac{e^{\theta T_{n}}}{T_{n}^{\frac{3}{2\beta}}}\rightarrow\infty\) as \(n\rightarrow \infty\), and \(\frac{e^{2\theta\Delta_{n}}-1-2\theta\Delta _{n}}{\Delta_{n}^{2}}\rightarrow2\theta^{2}\) and \(\frac{\Delta _{n}}{e^{2\theta\Delta_{n}}-1}\rightarrow\frac{1}{2\theta}\) as \(\Delta_{n}\rightarrow0\), we obtain that, as \(n\rightarrow\infty\),

Then we get (40) when \(q=\frac{1}{2}\) by combining (14), (43), and (44).

Similarly, we can prove (40) when \(q>\frac{1}{2}\) and \(0\leq q<\frac{1}{2}\).

Thus we have the conclusion (1) of Theorem 3.

(2) Now, we calculate

where equation (45) comes from (17).

Using the Minkowski and Cauchy inequalities, from (20) and (21) we have

The last term converges to 0 almost surely since \(n\Delta _{n}^{1+\beta}\rightarrow\infty\) as \(n\rightarrow\infty\). Thus by the Markov inequality and (14) we have that, as \(n\rightarrow\infty\),

Next, we consider the convergence of \(\sqrt{T_{n}}(1-\frac{2\theta \Delta_{n}}{e^{2\theta\Delta_{n}}-1})\):

Because \(n\Delta_{n}^{3}\rightarrow0\), \(\frac{e^{2\theta\Delta _{n}}-1-2\theta\Delta_{n}}{\Delta_{n}^{2}}\rightarrow2\theta^{2}\), and \(\frac{\Delta_{n}}{e^{2\theta\Delta_{n}}-1}\rightarrow\frac {1}{2\theta}\) as \(n\rightarrow\infty\), we obtain that, as \(n\rightarrow\infty\),

Therefore, combining (14), (46), and (47), we have the conclusion (2) of Theorem 3. □
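The way the condition \(n\Delta_{n}^{3}\rightarrow0\) enters can be made explicit by a short computation. The following worked step uses only the two limits quoted above and \(T_{n}=n\Delta_{n}\); it is offered as a reading aid rather than as the authors' own display:

$$\begin{aligned} \sqrt{T_{n}} \biggl(1-\frac{2\theta\Delta_{n}}{e^{2\theta\Delta_{n}}-1} \biggr) &=\sqrt{n\Delta_{n}}\cdot\Delta_{n}\cdot\frac{e^{2\theta\Delta_{n}}-1-2\theta\Delta_{n}}{\Delta_{n}^{2}}\cdot\frac{\Delta_{n}}{e^{2\theta\Delta_{n}}-1} \\ &=\sqrt{n\Delta_{n}^{3}}\cdot\frac{e^{2\theta\Delta_{n}}-1-2\theta\Delta_{n}}{\Delta_{n}^{2}}\cdot\frac{\Delta_{n}}{e^{2\theta\Delta_{n}}-1}\longrightarrow0\cdot2\theta^{2}\cdot\frac{1}{2\theta}=0. \end{aligned}$$

In other words, the deterministic discretization error of \(\check{\theta}_{n}\) is of order \(\theta\Delta_{n}\), and it remains negligible at scale \(\sqrt{T_{n}}\) exactly when \(\sqrt{n\Delta_{n}^{3}}\rightarrow0\).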

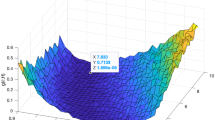

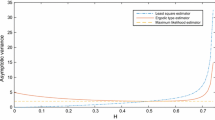

5 Numerical simulations

In this section, we study the efficiency of the estimators \(\hat{\theta }_{n}\) and \(\check{\theta}_{n}\) of θ based on simulated paths of \(X_{t}\) for different values of a, b, θ. We simulate 200 sample paths of \(X_{t}\) on the time interval \([0,1]\) with equidistant step size \(\Delta_{n}=0.0001\). For each path, we compute the two estimators \(\hat{\theta}_{n}\) and \(\check {\theta}_{n}\) by (5) and (6). The simulation results for \(\hat {\theta}_{n}\) and \(\check{\theta}_{n}\) are given in Table 1.

According to Table 1, the standard deviations of the estimators \(\hat{\theta}_{n}\) and \(\check{\theta }_{n}\) are small, and the means and medians of the estimators are close to the true parameter values. The numerical simulations are therefore consistent with the theoretical results.
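The summary statistics discussed here (mean, median, and standard deviation over the simulated paths) can be computed as in the following minimal sketch. The helper name `summarize` is an assumption for illustration, and no values from Table 1 are reproduced.

```python
import statistics


def summarize(estimates):
    """Return the mean, median and sample standard deviation of the estimates.

    `estimates` holds one estimator value per simulated path
    (at least two values are required for the standard deviation).
    """
    return {
        "mean": statistics.mean(estimates),
        "median": statistics.median(estimates),
        "std": statistics.stdev(estimates),
    }
```

Applying `summarize` separately to the values of \(\hat{\theta}_{n}\) and \(\check{\theta}_{n}\) over all paths gives one row of such a table per parameter configuration.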

References

[1] Azzaoui, N, Clavier, L: An impulse response model for the 60 Ghz channel based on spectral techniques of alpha-stable processes. In: Proceeding of IEEE International Conference Communications, pp. 5040-5045 (2007)

[2] Lin, S, Lin, T: An application of Lévy process with stochastic interest rate in structural model. In: International Conference on Innovative Computing Information and Control, p. 491 (2008)

[3] Bojdecki, T, Gorostiza, LG, Talarczyk, A: Occupation time limits of inhomogeneous Poisson systems of independent particles. Stoch. Process. Appl. 118, 28-52 (2008)

[4] Bojdecki, T, Gorostiza, LG, Talarczyk, A: Some extensions of fractional Brownian motion and sub-fractional Brownian motion related to particle system. Electron. Commun. Probab. 12, 161-172 (2007)

[5] Bojdecki, T, Gorostiza, LG, Talarczyk, A: Self-similar stable processes arising from high density limits of occupation times of particle systems. Potential Anal. 28, 71-103 (2008)

[6] Garzón, J: Convergence to weighted fractional Brownian sheets. Commun. Stoch. Anal. 3, 1-14 (2009)

[7] Shen, G-J, Yan, L-T, Cui, J: Berry-Esséen bounds and almost sure CLT for quadratic variation of weighted fractional Brownian motion. J. Inequal. Appl. 2013, 275 (2013)

[8] Yan, L-T, Wang, Z, Jing, H: Some path properties of weighted fractional Brownian motion. Stochastics 86, 721-758 (2014)

[9] Kleptsyna, M, Le Breton, A: Statistical analysis of the fractional Ornstein-Uhlenbeck type process. Stat. Inference Stoch. Process. 5, 229-248 (2002)

[10] Hu, Y-Z, Nualart, D: Parameter estimation for fractional Ornstein-Uhlenbeck process. Stat. Probab. Lett. 80, 1030-1038 (2010)

[11] Azmoodeh, E, Morlanes, JI: Drift parameter estimation for fractional Ornstein-Uhlenbeck process of the second kind. Statistics 49, 1-18 (2015)

[12] Azmoodeh, E, Viitasaari, L: Parameters estimation based on discrete observations of fractional Ornstein-Uhlenbeck process of the second kind. Stat. Inference Stoch. Process. 18, 205-227 (2015)

[13] Hu, Y-Z, Song, J: Parameter estimation for fractional Ornstein-Uhlenbeck processes with discrete observations. In: Viens, F, Feng, J, Hu, Y-Z, Nualart, E (eds.) Malliavin Calculus and Stochastic Analysis: A Festschrift in Honor of David Nualart. Springer Proceedings in Mathematics and Statistics, vol. 34, pp. 427-442 (2013)

[14] Jiang, H, Dong, X: Parameter estimation for the non-stationary Ornstein-Uhlenbeck process with linear drift. Stat. Pap. 56, 1-12 (2015)

[15] Belfadli, R, Es-Sebaiy, K, Ouknine, Y: Parameter estimation for fractional Ornstein-Uhlenbeck process: non-ergodic case. Front. Sci. Eng. 1, 1-16 (2011)

[16] El Machkouri, M, Es-Sebaiy, K, Ouknine, Y: Least squares estimator for non-ergodic Ornstein-Uhlenbeck processes driven by Gaussian processes. J. Korean Stat. Soc. 45, 329-341 (2016)

[17] Es-Sebaiy, K, Ndiaye, D: On drift estimation for non-ergodic fractional Ornstein-Uhlenbeck processes with discrete observations. Afr. Stat. 9, 615-625 (2014)

[18] Shen, G-J, Yin, X-W, Yan, L-T: Least squares estimation for Ornstein-Uhlenbeck processes driven by the weighted fractional Brownian motion. Acta Math. Sci. 36, 394-408 (2016)

[19] Liu, Z, Song, N: Minimum distance estimation for fractional Ornstein-Uhlenbeck type process. Adv. Differ. Equ. 2014, 137 (2014)

[20] Xiao, W, Zhang, W, Xu, W: Parameter estimation for fractional Ornstein-Uhlenbeck processes at discrete observation. Appl. Math. Model. 35, 4196-4207 (2011)

[21] Young, LC: An inequality of the Hölder type connected with Stieltjes integration. Acta Math. 67, 251-282 (1936)

[22] ElOnsy, B, Es-Sebaiy, K, Ndiaye, D: Parameter estimation for discretely observed non-ergodic fractional Ornstein-Uhlenbeck processes of the second kind. Braz. J. Probab. Stat. (2017) Accepted

[23] Nualart, D: Malliavin Calculus and Related Topics. Springer, Berlin (2006)

[24] Pipiras, V, Taqqu, MS: Integration questions related to fractional Brownian motion. Probab. Theory Relat. Fields 118, 251-291 (2000)

[25] Kloeden, P, Neuenkirch, A: The pathwise convergence of approximation schemes for stochastic differential equations. LMS J. Comput. Math. 10, 235-253 (2007)

Acknowledgements

We thank two anonymous referees and the editor for their very careful reading and suggestions, which have led to significant improvements in the presentation of our results.

Funding

This research is supported by the Distinguished Young Scholars Foundation of Anhui Province (1608085J06), the National Natural Science Foundation of China (11271020), the Natural Science Foundation of Universities of Anhui Province (KJ2017A426, KJ2016A527), and the Natural Science Foundation of Chuzhou University (2016QD13).

Contributions

All authors contributed equally to the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Cheng, P., Shen, G. & Chen, Q. Parameter estimation for nonergodic Ornstein-Uhlenbeck process driven by the weighted fractional Brownian motion. Adv Differ Equ 2017, 366 (2017). https://doi.org/10.1186/s13662-017-1420-y
