Abstract

This paper is concerned with the \(H_{\infty}\) state estimation problem for a class of delayed static neural networks. The aim is to design a delay-dependent state estimator such that the dynamics of the error system are globally exponentially stable with a prescribed \(H_{\infty}\) performance. Improved delay-dependent conditions are established by using a delay partitioning method and the free-matrix-based integral inequality. The gain matrix and the optimal performance index are obtained by solving a convex optimization problem subject to linear matrix inequalities (LMIs). Numerical examples are provided to illustrate the effectiveness of the proposed method in comparison with some existing results.


1 Introduction

Neural networks (NNs) have attracted a great deal of attention because of their extensive applications in fields such as associative memory, pattern recognition, combinatorial optimization, and adaptive control [1, 2]. Owing to the finite switching speed of amplifiers, time delays are inevitable in electronic implementations of neural networks (such as Hopfield neural networks and cellular neural networks). Time delay is often a source of instability, and delay systems have been widely studied (see [3–9] and the references therein). The stability and passivity problems of delayed NNs have also been widely reported (see [1, 2, 10–14]).

This paper focuses on static neural networks (SNNs), which are a type of recurrent neural network. Depending on whether the neuron states or the local field states of neurons are chosen as basic variables, neural network models can be classified as static neural networks or local field neural networks. As mentioned in [15, 16], local field neural networks and SNNs are not always equivalent. Compared with local field neural networks, the stability analysis of SNNs has attracted relatively little attention, although many interesting results on this topic have been reported in the literature [2, 17–21].

Meanwhile, state estimation of neural networks is an important issue: in many applications, the states of the neurons must be estimated before the neural network can be utilized. Recently, the state estimation problem for neural networks has been investigated in [22–37]. Among these works, \(H_{\infty}\) state estimation of static neural networks with time delay was studied in [31, 33–37]. In [31], a delay partition approach was proposed to deal with the state estimation problem for a class of static neural networks with time-varying delay. In [33], the state estimation problem with guaranteed \(H_{\infty}\) and \(H_{2}\) performance for static neural networks was considered. Further improved results were obtained in [34, 35, 37] by using the convex combination approach. The exponential state estimation of time-varying delayed neural networks was studied in [36]. All of these works use the Lyapunov-Krasovskii functional (LKF) method, in which conservativeness comes from two sources: the choice of the functional and the bound on its derivative. A delay partitioning LKF reduces the conservativeness of a simple LKF by containing more delay information. There are two main techniques for dealing with the integrals that appear in the derivative: the free-weighting matrix method and the integral inequality method. However, the inequalities used may introduce some conservatism. Moreover, the information on the neuron activation functions has not been adequately taken into account in [31, 33–37]. Therefore, the guaranteed performance state estimation problem has not yet been fully studied, and there remains room for improvement.

This paper studies the \(H_{\infty}\) state estimation problem for a class of delayed SNNs. Our aim is to design a delay-dependent state estimator such that the filtering error system is globally exponentially stable with a prescribed \(H_{\infty}\) performance. Augmented LKFs are constructed by using the delay equal-partitioning method. In [21], the lower bound of the delay is partitioned into several components, so the case in which the lower bound is zero cannot be handled; moreover, the effectiveness of that delay partitioning method is not evident when the lower bound is very small. Different from the delay partitioning method used in [21], we decompose the delay interval into multiple equidistant subintervals, which makes the method more general for interval time-varying delays. Then the free-weighting matrix technique is used to obtain a tighter upper bound on the derivatives of the LKFs. As mentioned in Remark 3.3, conservatism is further reduced by taking advantage of the information on the activation function. Moreover, the free-matrix-based integral inequality is used to derive improved delay-dependent criteria. Compared with the existing results in [34–36], the criteria in this paper are less conservative.

The remainder of this paper is organized as follows. The state estimation problem is formulated in Section 2. Section 3 deals with the design of the state estimator for delayed static neural networks. In Section 4, two numerical examples are provided to show the effectiveness of the results. Finally, some conclusions are made in Section 5.

Notations: The notations used throughout the paper are fairly standard. \(\mathbb{R}^{n}\) denotes the n-dimensional Euclidean space; \(\mathbb{R}^{n\times m}\) is the set of all \(n\times m\) real matrices; the notation \(A > 0\) (<0) means A is a symmetric positive (negative) definite matrix; \(A^{-1}\) and \(A^{T}\) denote the inverse of matrix A and the transpose of matrix A, respectively; I represents the identity matrix with proper dimensions; a symmetric term in a symmetric matrix is denoted by (∗); \(\operatorname{sym}\{A\}\) represents \((A + A^{T})\); \(\operatorname{diag}\{\cdot\}\) stands for a block-diagonal matrix. Matrices, if their dimensions are not explicitly stated, are assumed to be compatible for algebraic operations.

2 Problem formulation

Consider the delayed static neural network subject to noise disturbances described by

where \(x(t)=[x_{1}(t), x_{2}(t), \ldots, x_{n}(t)]^{T}\in \mathbb{R}^{n}\) is the state vector of the neural network, \(y(t)\in \mathbb{R}^{m}\) is the measured output of the neural network, \(z(t)\in \mathbb{R}^{r}\), the signal to be estimated, is a linear combination of the state, \(w(t)\in \mathbb{R}^{n}\) is the noise input belonging to \(L_{2}[0,\infty)\), \(f(\cdot)\) denotes the neuron activation function, \(A= \operatorname{diag}\{a_{1}, a_{2}, \ldots, a_{n}\}\) with \(a_{i}> 0\), \(i=1, 2, \ldots, n\), is a positive diagonal matrix, \(B_{1}\), \(B_{2}\), C, D, and H are known real matrices with appropriate dimensions, \(W\in \mathbb{R}^{n\times n}\) denotes the connection weight matrix, \(h(t)\) is the time-varying delay, J is the exogenous input vector, the function \(\phi(t)\) is the initial condition, and \(\tau= \sup_{t\geq 0}\{h(t)\}\).

In this paper, the time-varying delay satisfies

\[
0\leq d_{1}\leq h(t)\leq d_{2},\qquad \dot{h}(t)\leq \mu,
\]

where \(d_{1}\), \(d_{2}\), and μ are constants.
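As a quick numerical illustration of these bounds, the following Python sketch samples the delay used in Example 2, \(h(t)=0.5+0.5\sin(0.8t)\), together with its analytic derivative, and recovers \(d_{1}\), \(d_{2}\), and μ as the sampled minimum, maximum, and maximum rate. The helper name `delay_bounds`, the horizon, and the grid size are illustrative assumptions; sampling only approximates the true infimum and supremum, so analytic values should be preferred when they are available.

```python
import numpy as np


def delay_bounds(h, h_dot, t_end=50.0, num=200_001):
    """Sampled estimates of d1 = inf h(t), d2 = sup h(t), and mu = sup h_dot(t)."""
    t = np.linspace(0.0, t_end, num)
    values, rates = h(t), h_dot(t)
    return values.min(), values.max(), rates.max()


def h(t):
    # Delay from Example 2
    return 0.5 + 0.5 * np.sin(0.8 * t)


def h_dot(t):
    # Analytic derivative of h(t)
    return 0.4 * np.cos(0.8 * t)


d1, d2, mu = delay_bounds(h, h_dot)
print(f"d1 = {d1:.4f}, d2 = {d2:.4f}, mu = {mu:.4f}")
```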

In order to conduct the analysis, the following assumptions are necessary.

Assumption 2.1

For any \(x, y \in \mathbb{R}\) (\(x\neq y\)) and \(i \in \{1, 2, \ldots, n\}\), the activation function satisfies

\[
k_{i}^{-}\leq\frac{f_{i}(x)-f_{i}(y)}{x-y}\leq k_{i}^{+},
\]

where \({k}_{i}^{-}\), \({k}_{i}^{+}\) are constants, and we define \(K^{-}= {\operatorname{diag}} \{k^{-}_{1}, k^{-}_{2}, \ldots, k^{-}_{n}\}\), \(K^{+}= {\operatorname{diag}} \{k^{+}_{1}, k^{+}_{2}, \ldots, k^{+}_{n}\}\).
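For a concrete activation, this sector condition can be spot-checked numerically. The sketch below assumes \(f=\tanh\) with \(k_{i}^{-}=0\) and \(k_{i}^{+}=1\), as in Example 2, evaluates the difference quotients on random pairs, and also evaluates the equivalent product form \((f(x)-f(y)-k_{i}^{-}(x-y))(k_{i}^{+}(x-y)-(f(x)-f(y)))\geq0\), obtained by multiplying both bounds by \((x-y)^{2}\). The sample range, seed, and the helper `sector_bounds` are hypothetical choices; a finite sample cannot prove the bound, it only checks it on the drawn points.

```python
import numpy as np


def sector_bounds(f, x, y):
    """Smallest and largest difference quotient (f(x)-f(y))/(x-y) over pairs with x != y."""
    mask = x != y
    q = (f(x[mask]) - f(y[mask])) / (x[mask] - y[mask])
    return q.min(), q.max()


rng = np.random.default_rng(0)
x = rng.uniform(-5.0, 5.0, 100_000)  # hypothetical sample range
y = rng.uniform(-5.0, 5.0, 100_000)
k_minus, k_plus = 0.0, 1.0           # sector bounds for tanh (Example 2)

q_min, q_max = sector_bounds(np.tanh, x, y)

# Product form of the same condition: nonnegative whenever k_minus <= q <= k_plus.
df, dx = np.tanh(x) - np.tanh(y), x - y
product = (df - k_minus * dx) * (k_plus * dx - df)

tol = 1e-12  # allowance for floating-point rounding
print(q_min >= k_minus - tol, q_max <= k_plus + tol, product.min() >= -tol)
```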

We construct a state estimator for estimation of \(z(t)\):

where \(\hat{x}(t)\in \mathbb{R}^{n}\) is the estimated state vector of the neural network, \(\hat{z}(t)\) and \(\hat{y}(t)\) denote the estimates of \(z(t)\) and \(y(t)\), respectively, and K is the gain matrix to be determined. Defining the errors \(e(t)=x(t)-\hat{x}(t)\) and \(\bar{z}(t)=z(t)-\hat{z}(t)\), we obtain the error system:

where \(g(W{e}(t))=f(Wx(t)+J)-f(W\hat{x}(t)+J)\).

Definition 2.1

[35] For any finite initial condition \(\phi(t) \in \mathcal{C}^{1}([-\tau,0];\mathbb{R}^{n})\), the error system (4) with \(w(t)=0\) is said to be globally exponentially stable with decay rate β if there exist constants \(\lambda >0\) and \(\beta >0\) such that

Given a prescribed disturbance attenuation level \(\gamma > 0\), the error system is said to be globally exponentially stable with \(H_{\infty}\) performance γ if it is globally exponentially stable and, under the zero initial condition, the response \(\bar{z}(t)\) satisfies

\[
\|\bar{z}\|_{2}<\gamma\|w\|_{2}
\]

for every nonzero \(w(t) \in L_{2}[0,\infty)\), where \(\|\phi\|_{2}=\sqrt{\int_{0}^{\infty}{\phi^{T}(t)\phi(t) \,\mathrm{d}t}}\).
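The \(H_{\infty}\) criterion compares two \(L_{2}\) norms. The Python sketch below approximates \(\|\cdot\|_{2}\) on a truncated horizon with the trapezoid rule and forms the ratio \(\|\bar{z}\|_{2}/\|w\|_{2}\) for hypothetical signals; the signals, the horizon \([0,20]\), and \(\gamma=1\) are illustrative assumptions, not data from this paper. A single disturbance only yields a lower estimate of the worst-case gain, whereas the definition requires the bound for every nonzero \(w(t)\).

```python
import numpy as np


def l2_norm(signal, t):
    """Trapezoid approximation of sqrt(int_0^T s^T(t) s(t) dt).

    `signal` has shape (dim, N) and is sampled on the grid `t` of length N.
    """
    power = np.sum(np.atleast_2d(signal) ** 2, axis=0)  # s^T(t) s(t) per sample
    dt = np.diff(t)
    return np.sqrt(np.sum(0.5 * (power[1:] + power[:-1]) * dt))


t = np.linspace(0.0, 20.0, 20_001)           # truncated horizon [0, 20]
w = np.vstack([np.exp(-t) * np.sin(t),       # hypothetical disturbance w(t)
               np.exp(-2.0 * t)])
z_bar = 0.5 * w[:1]                          # hypothetical output error z_bar(t)

gamma = 1.0
ratio = l2_norm(z_bar, t) / l2_norm(w, t)
print(f"||z_bar||_2 / ||w||_2 = {ratio:.4f}, below gamma: {ratio < gamma}")
```

For these particular signals the exact ratio is \(0.5/\sqrt{3}\approx0.289\), since \(\int_{0}^{\infty}e^{-2t}\sin^{2}t\,\mathrm{d}t=1/8\) and \(\int_{0}^{\infty}e^{-4t}\,\mathrm{d}t=1/4\).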

Lemma 2.1

[38]

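For any constant matrix \(Z \in \mathbb{R}^{n \times n}\) with \(Z=Z^{T}>0\), scalars \(h_{2}>h_{1}>0\), and vector function \(x: [h_{1},h_{2}]\to \mathbb{R}^{n}\) such that the following integrations are well defined,

\[
(h_{2}-h_{1})\int_{h_{1}}^{h_{2}}x^{T}(s)Zx(s)\,\mathrm{d}s\geq\Bigl(\int_{h_{1}}^{h_{2}}x(s)\,\mathrm{d}s\Bigr)^{T}Z\Bigl(\int_{h_{1}}^{h_{2}}x(s)\,\mathrm{d}s\Bigr).
\]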
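The Jensen-type bound of Lemma 2.1 can be spot-checked numerically. The Python sketch below assumes a randomly generated \(Z=Z^{T}>0\), the interval \([h_{1},h_{2}]=[0.2,1.7]\), and a hypothetical vector function \(x(s)\), and compares \((h_{2}-h_{1})\int_{h_{1}}^{h_{2}}x^{T}(s)Zx(s)\,\mathrm{d}s\) with \((\int_{h_{1}}^{h_{2}}x(s)\,\mathrm{d}s)^{T}Z(\int_{h_{1}}^{h_{2}}x(s)\,\mathrm{d}s)\). All concrete values are illustrative, and the integrals are approximated with the trapezoid rule.

```python
import numpy as np


def trapezoid(values, s):
    """Trapezoid rule along the last axis of `values` on the grid `s`."""
    ds = np.diff(s)
    return np.sum(0.5 * (values[..., 1:] + values[..., :-1]) * ds, axis=-1)


rng = np.random.default_rng(1)
n = 3
L = rng.standard_normal((n, n))
Z = L @ L.T + n * np.eye(n)                  # hypothetical Z = Z^T > 0

h1, h2 = 0.2, 1.7
s = np.linspace(h1, h2, 10_001)
x = np.vstack([np.sin(3 * s), s ** 2, np.exp(-s)])  # hypothetical x(s), shape (n, N)

quad = np.einsum("it,ij,jt->t", x, Z, x)     # x^T(s) Z x(s) at each grid point
lhs = (h2 - h1) * trapezoid(quad, s)
x_int = trapezoid(x, s)                      # approximates the integral of x(s)
rhs = x_int @ Z @ x_int
print(f"lhs = {lhs:.6f}, rhs = {rhs:.6f}, inequality holds: {lhs >= rhs}")
```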

Lemma 2.2

Schur complement

Given constant matrices \(S_{1}\), \(S_{2}\), and \(S_{3}\), where \(S_{1}=S_{1}^{T}\) and \(S_{2}=S_{2}^{T}>0\), then \(S_{1}+S_{3}^{T}S_{2}^{-1}S_{3}<0\) if and only if

\[
\begin{bmatrix} S_{1} & S_{3}^{T}\\ S_{3} & -S_{2}\end{bmatrix}<0.
\]
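The equivalence in Lemma 2.2 can be illustrated numerically: the block matrix \(\begin{bmatrix} S_{1} & S_{3}^{T}\\ S_{3} & -S_{2}\end{bmatrix}\) is negative definite exactly when \(S_{1}+S_{3}^{T}S_{2}^{-1}S_{3}<0\), given \(S_{2}>0\). In the Python sketch below, \(S_{2}\) and \(S_{3}\) are random and \(S_{1}\) is a hypothetical choice that makes the reduced matrix equal to \(-I\) (negative definite) or \(0.5I\) (not negative definite); both tests should then agree in each case.

```python
import numpy as np


def is_negative_definite(S):
    """True if the symmetric matrix S has only negative eigenvalues."""
    return bool(np.linalg.eigvalsh(S).max() < 0)


rng = np.random.default_rng(2)
n, p = 3, 2
S3 = rng.standard_normal((p, n))
B = rng.standard_normal((p, p))
S2 = B @ B.T + np.eye(p)                     # S2 = S2^T > 0
coupling = S3.T @ np.linalg.solve(S2, S3)    # S3^T S2^{-1} S3

results = []
for shift in (-1.0, 0.5):
    # Hypothetical S1 chosen so that S1 + S3^T S2^{-1} S3 = shift * I.
    S1 = shift * np.eye(n) - coupling
    S1 = 0.5 * (S1 + S1.T)                   # remove rounding asymmetry
    reduced = S1 + coupling
    block = np.block([[S1, S3.T], [S3, -S2]])
    results.append((is_negative_definite(reduced), is_negative_definite(block)))

print(results)
```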

Lemma 2.3

[39]

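For a differentiable signal \(x:[\alpha,\beta]\rightarrow \mathbb{R}^{n}\), symmetric matrices \(R\in \mathbb{R}^{n \times n}\) and \(Y_{1},Y_{3}\in \mathbb{R}^{3n \times 3n}\), and any matrices \(Y_{2}\in \mathbb{R}^{3n \times 3n}\) and \(M_{1},M_{2}\in \mathbb{R}^{3n \times n}\) satisfying

\[
\begin{bmatrix} Y_{1} & Y_{2} & M_{1}\\ \ast & Y_{3} & M_{2}\\ \ast & \ast & R \end{bmatrix}\geq 0,
\]

the following inequality holds:

\[
-\int_{\alpha}^{\beta}\dot{x}^{T}(s)R\dot{x}(s)\,\mathrm{d}s\leq \varpi^{T}\Omega\varpi,
\]

where

\[
\Omega=(\beta-\alpha)\Bigl(Y_{1}+\frac{1}{3}Y_{3}\Bigr)+\operatorname{sym}\{M_{1}\Pi_{1}+M_{2}\Pi_{2}\},\qquad \Pi_{1}=\bar{e}_{1}-\bar{e}_{2},\qquad \Pi_{2}=2\bar{e}_{3}-\bar{e}_{1}-\bar{e}_{2},
\]

and

\[
\bar{e}_{1}=[I\ 0\ 0],\quad \bar{e}_{2}=[0\ I\ 0],\quad \bar{e}_{3}=[0\ 0\ I],\quad \varpi=\Bigl[x^{T}(\beta),\ x^{T}(\alpha),\ \frac{1}{\beta-\alpha}\int_{\alpha}^{\beta}x^{T}(s)\,\mathrm{d}s\Bigr]^{T}.
\]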
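A numerical spot-check of Lemma 2.3, under stated assumptions: for \(R>0\) and arbitrary \(M_{1},M_{2}\), the choice \(\begin{bmatrix} Y_{1} & Y_{2}\\ Y_{2}^{T} & Y_{3}\end{bmatrix}=\begin{bmatrix} M_{1}\\ M_{2}\end{bmatrix}R^{-1}\begin{bmatrix} M_{1}\\ M_{2}\end{bmatrix}^{T}\) makes the lemma's block matrix positive semidefinite (by a Schur complement argument), so the free-matrix-based inequality of [39] predicts \(-\int_{\alpha}^{\beta}\dot{x}^{T}R\dot{x}\,\mathrm{d}s\leq\varpi^{T}\Omega\varpi\), with \(\Omega=(\beta-\alpha)(Y_{1}+\frac{1}{3}Y_{3})+\operatorname{sym}\{M_{1}\Pi_{1}+M_{2}\Pi_{2}\}\), \(\Pi_{1}=\bar{e}_{1}-\bar{e}_{2}\), \(\Pi_{2}=2\bar{e}_{3}-\bar{e}_{1}-\bar{e}_{2}\), and \(\varpi\) stacking \(x(\beta)\), \(x(\alpha)\), and the average of \(x\) over \([\alpha,\beta]\). The test signal, interval, dimension, and seed are hypothetical, and integrals use the trapezoid rule.

```python
import numpy as np


def trapezoid(values, s):
    """Trapezoid rule along the last axis of `values` on the grid `s`."""
    ds = np.diff(s)
    return np.sum(0.5 * (values[..., 1:] + values[..., :-1]) * ds, axis=-1)


def sym(A):
    """sym{A} = A + A^T, as in the Notations."""
    return A + A.T


rng = np.random.default_rng(3)
n = 2
I, O = np.eye(n), np.zeros((n, n))
e1, e2, e3 = np.hstack([I, O, O]), np.hstack([O, I, O]), np.hstack([O, O, I])
Pi1, Pi2 = e1 - e2, 2 * e3 - e1 - e2

# R > 0 and arbitrary M1, M2 in R^{3n x n} (hypothetical random values).
L = rng.standard_normal((n, n))
R = L @ L.T + np.eye(n)
M1 = rng.standard_normal((3 * n, n))
M2 = rng.standard_normal((3 * n, n))

# [Y1 Y2; Y2^T Y3] = [M1; M2] R^{-1} [M1; M2]^T makes the block matrix PSD.
Ms = np.vstack([M1, M2])
Y = Ms @ np.linalg.solve(R, Ms.T)
Y = 0.5 * (Y + Y.T)
Y1, Y3 = Y[:3 * n, :3 * n], Y[3 * n:, 3 * n:]
Phi = np.block([[Y, Ms], [Ms.T, R]])

# Hypothetical differentiable signal x(s) on [alpha, beta] and its derivative.
alpha, beta = 0.0, 1.5
s = np.linspace(alpha, beta, 20_001)
x = np.vstack([np.sin(2 * s), np.cos(s) + s])
xdot = np.vstack([2 * np.cos(2 * s), 1 - np.sin(s)])

lhs = -trapezoid(np.einsum("it,ij,jt->t", xdot, R, xdot), s)
varpi = np.concatenate([x[:, -1], x[:, 0], trapezoid(x, s) / (beta - alpha)])
Omega = (beta - alpha) * (Y1 + Y3 / 3) + sym(M1 @ Pi1 + M2 @ Pi2)
rhs = varpi @ Omega @ varpi

print(np.linalg.eigvalsh(Phi).min() >= -1e-9, lhs <= rhs)
```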

3 State estimator design

In this section, a delay partition approach is proposed to design a state estimator for the static neural network (1). For convenience of presentation, we denote

where \(d=d_{2}-d_{1}\).
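The equidistant partition splits \([d_{1},d_{2}]\) at the points \(d_{1}+\frac{j-1}{m}d\), \(j=1,\ldots,m+1\), and the proof below locates the index k with \(h(t)\in[\frac{k-1}{m}d+d_{1},\frac{k}{m}d+d_{1}]\). A small Python sketch of both computations follows; the function names are hypothetical, and because adjacent subintervals share endpoints, a delay value lying exactly on a partition point may legitimately be assigned to either neighbouring subinterval.

```python
import math


def partition_points(d1, d2, m):
    """Equidistant points d1 + (j - 1) * d / m, j = 1, ..., m + 1, with d = d2 - d1."""
    d = d2 - d1
    return [d1 + (j - 1) * d / m for j in range(1, m + 2)]


def subinterval_index(h, d1, d2, m):
    """Return k in {1, ..., m} with h in [d1 + (k - 1) d / m, d1 + k d / m]."""
    if not d1 <= h <= d2:
        raise ValueError("h must lie in [d1, d2]")
    d = d2 - d1
    if d == 0:
        return 1
    k = math.floor((h - d1) * m / d) + 1
    return min(k, m)  # h = d2 belongs to the last subinterval


print(partition_points(0.0, 1.0, 4))
print(subinterval_index(0.6, 0.0, 1.0, 4))
```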

Theorem 3.1

Under Assumption 2.1, for given scalars μ, \(0\leqslant d_{1}\leqslant d_{2}\), \(\gamma>0\), \(\alpha \geq0\), and integers \(m\geq1\), \(m\geq k\geq1\), the system (4) is globally exponentially stable with \(H_{\infty}\) performance γ if there exist matrices \(P>0\) and \(R_{i}>0\) (\(i=1,2,\ldots,m+1\)) in \(\mathbb{R}^{n\times n}\), \(Q_{1}>0\) in \(\mathbb{R}^{2mn\times 2mn}\), \(Q_{2}>0\) in \(\mathbb{R}^{2n\times 2n}\), symmetric matrices \(X_{1},X_{3},Y_{1},Y_{3},X_{4},X_{6},Y_{4},Y_{6}\in \mathbb{R}^{3n\times 3n}\), positive diagonal matrices Γ, \(\Lambda_{i}\) (\(i=1,2,\ldots,m+1\)), and \(\Lambda_{m+2}\), and matrices M, G, \(X_{2}\), \(X_{5}\), \(Y_{2}\), \(Y_{5}\), \(N_{j}\) (\(j=1,2,\ldots,8\)) of appropriate dimensions, such that the following LMIs hold:

where

the estimator gain matrix is given by \(K=M^{-1}G\).
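Once an LMI solver returns M and G, the gain follows from \(K=M^{-1}G\). Numerically it is preferable to solve \(MK=G\) rather than form \(M^{-1}\) explicitly, as in this Python sketch; the matrices below are hypothetical placeholders, not the solution reported in Example 2, and M is assumed nonsingular.

```python
import numpy as np


def estimator_gain(M, G):
    """Recover K = M^{-1} G by solving M K = G (M assumed nonsingular)."""
    return np.linalg.solve(M, G)


# Hypothetical values standing in for an LMI solution.
M = np.array([[2.0, 0.5],
              [0.1, 1.5]])
G = np.array([[1.0],
              [0.3]])

K = estimator_gain(M, G)
print(K.ravel(), np.allclose(M @ K, G))
```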

Proof

Construct a Lyapunov-Krasovskii functional candidate as follows:

where

Calculating the derivative of \(V(t,e_{t})\) along the trajectories of system (4), we obtain

According to Lemma 2.3, it follows that

There exists a positive integer \(k\in\{1,2,\ldots,m\}\) such that \(h(t)\in[\frac{k-1}{m}d+d_{1},\frac{k}{m}d+d_{1}]\),

For \(i\neq k\), we also have the following inequality by Lemma 2.1:

According to Assumption 2.1, we obtain

where Γ, \(\Lambda_{i}\) are positive diagonal matrices.

According to the system equation, the following equality holds:

Combining the equalities and inequalities from (12) to (23), we obtain

where \(\hat{\zeta}_{t}\) is defined as

Based on Lemma 2.2, one can deduce that

where \(\bar{H}=[0,0,0,0,H,0 ,0,0,0,0,0,0,0,0]\).

If the LMI (6) holds, then

Since \(\alpha V(t,e_{t})\geq0\), we obtain

Since \(V(t,e_{t})>0\), under the zero initial condition we have

Therefore, the error system (4) guarantees \(H_{\infty}\) performance γ according to Definition 2.1. In the sequel, we show the global exponential stability of the estimation error system with \(w(t)=0\). When \(w(t)=0\), the error system (4) becomes

and equation (23) becomes

Considering the same Lyapunov-Krasovskii functional candidate and calculating its time derivative along the solution of (27), we can derive

where Ξ is obtained by deleting the terms in Ξ̂ associated with \(w(t)\),

Letting \(G=MK\), it is clear that \(\hat{\Xi}<0\) implies \(\Xi<0\), so we get

Integrating inequality (30), we obtain

From (11), we have

where

Combining (31), (32), and (33) yields

Hence, the error system (4) is globally exponentially stable. In summary, if \(\hat{\Xi}<0\), then the state estimator for the static neural network achieves the prescribed \(H_{\infty}\) performance and guarantees the global exponential stability of the error system. This completes the proof. □

Remark 3.1

Two means are used to reduce conservativeness: a good choice of the Lyapunov-Krasovskii functional and the use of a less conservative integral inequality. To make the Lyapunov-Krasovskii functional contain more detailed information on the time delay, the delay partitioning method is employed. The free-weighting matrix method is also used to obtain a tighter upper bound on the derivative of the LKF. However, the number of free-weighting matrices grows as the number of partitions increases, which adds complexity and computational burden. Hence, the partitioning number should be chosen properly.
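The computational burden mentioned in Remark 3.1 can be made concrete by counting scalar unknowns. The Python sketch below counts only the Theorem 3.1 variables whose dimensions are stated explicitly (P, the \(R_{i}\), \(Q_{1}\), \(Q_{2}\), the eight symmetric \(3n\times3n\) matrices, and the \(m+3\) positive diagonal matrices Γ, \(\Lambda_{1},\ldots,\Lambda_{m+2}\)); \(X_{2}\), \(X_{5}\), \(Y_{2}\), \(Y_{5}\), M, G, and the \(N_{j}\) are omitted because their sizes are only given as "appropriate", so the totals are lower bounds. The function names are hypothetical.

```python
def sym_count(k):
    """Free scalars in a k x k symmetric matrix."""
    return k * (k + 1) // 2


def stated_decision_variables(n, m):
    """Lower bound on the scalar unknowns in Theorem 3.1 for state dimension n and m partitions."""
    count = sym_count(n)                 # P
    count += (m + 1) * sym_count(n)      # R_1, ..., R_{m+1}
    count += sym_count(2 * m * n)        # Q_1 in R^{2mn x 2mn}
    count += sym_count(2 * n)            # Q_2 in R^{2n x 2n}
    count += 8 * sym_count(3 * n)        # X1, X3, Y1, Y3, X4, X6, Y4, Y6
    count += (m + 3) * n                 # Gamma, Lambda_1, ..., Lambda_{m+2} (diagonal)
    return count


for m in (1, 2, 4):
    print(m, stated_decision_variables(3, m))
```

For \(n=3\) this gives 432, 498, and 738 unknowns for \(m=1\), 2, and 4; the \(Q_{1}\) term alone grows quadratically in m.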

Remark 3.2

Condition (6) in Theorem 3.1 depends on the time-varying delay h(t). Since \(\hat{\Xi}\) depends affinely on h(t), a convexity argument shows that the condition is satisfied for all \(0\leqslant d_{1}\leqslant h(t) \leqslant d_{2}\) if \(\hat{\Xi}|_{h(t)=d_{1}}<0\) and \(\hat{\Xi}|_{h(t)=d_{2}}<0\).
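The endpoint test rests on convexity: any matrix function affine in h is a convex combination of its values at \(d_{1}\) and \(d_{2}\), and the largest eigenvalue of a convex combination never exceeds the larger of the endpoint values. The Python sketch below illustrates this with hypothetical random negative definite endpoint matrices standing in for \(\hat{\Xi}|_{h(t)=d_{1}}\) and \(\hat{\Xi}|_{h(t)=d_{2}}\); it is not the actual \(\hat{\Xi}\) of Theorem 3.1.

```python
import numpy as np

rng = np.random.default_rng(4)
size, d1, d2 = 4, 0.0, 1.0


def random_negative_definite(k):
    """Hypothetical stand-in for an endpoint matrix Xi_hat(d1) or Xi_hat(d2)."""
    A = rng.standard_normal((k, k))
    return -(A @ A.T + 0.1 * np.eye(k))


Xi_d1 = random_negative_definite(size)
Xi_d2 = random_negative_definite(size)


def Xi(h):
    """Any matrix affine in h equals this interpolation of its endpoint values."""
    lam = (h - d1) / (d2 - d1)
    return (1.0 - lam) * Xi_d1 + lam * Xi_d2


grid = np.linspace(d1, d2, 101)
max_eig = max(np.linalg.eigvalsh(Xi(h)).max() for h in grid)
endpoint_max = max(np.linalg.eigvalsh(Xi_d1).max(), np.linalg.eigvalsh(Xi_d2).max())
print(max_eig < 0, max_eig <= endpoint_max + 1e-12)
```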

Remark 3.3

In some previous works [33, 35, 36], the condition \({k}_{i}^{-}\leq\frac{f_{i}(x)}{x}\leq {k}_{i}^{+}\), which is a special case of \({k}_{i}^{-}\leq\frac{f_{i}(x)-f_{i}(y)}{x-y}\leq {k}_{i}^{+}\), was used to reduce the conservatism. In our proof, not only \({k}_{i}^{-}\leq\frac{g_{i}(W_{i}e(t))}{W_{i}e(t)}\leq {k}_{i}^{+}\) but also \({k}_{i}^{-}\leq\frac{g_{i}(W_{i}e(t-h(t)))}{W_{i}e(t-h(t))}\leq {k}_{i}^{+}\) and \({k}_{i}^{-}\leq\frac{g_{i}(W_{i}e(t-d_{1}-\frac{j-1}{m}d))}{W_{i}e(t-d_{1}-\frac{j-1}{m}d)}\leq {k}_{i}^{+}\), \(j \in \{1, 2, \ldots, m+1\}\), are used, and these play an important role in reducing the conservatism.

4 Numerical examples

In this section, numerical examples are provided to illustrate the effectiveness of the developed method for the state estimation of static neural networks.

Example 1

Consider the neural networks (1) with the following parameters:

To compare with the existing results, we let \(\alpha=0\) and \(d_{1}=0\). The optimal \(H_{\infty}\) performance index γ obtained for different values of the delay bound \(d_{2}\) and μ is summarized in Table 1.

From Table 1, our method yields a smaller optimal γ than the existing results, that is, better \(H_{\infty}\) performance. In addition, the optimal \(H_{\infty}\) performance index γ decreases as the partitioning number increases, which shows that the delay partitioning method reduces the conservatism effectively.

Example 2

Consider the neural networks (1) with the following parameters:

The activation function is \(f(x)=\tanh(x)\), so \(K^{-}=0\) and \(K^{+}=I\). We set \(\gamma=1\), \(\alpha=0\), and \(h(t)=0.5+0.5\sin(0.8t)\), so the delay bounds are \(d_{1}=0\), \(d_{2}=1\), and \(\mu=0.4\). The noise disturbance is assumed to be

Using the MATLAB LMI Toolbox, we obtain the gain matrix of the estimator:

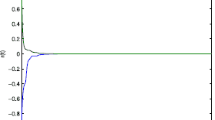

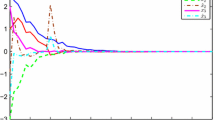

Figure 1 presents the state variables of the neural network (1) and their estimates, starting from the initial values \([0.3,-0.5,0.2]^{T}\). Figure 2 shows the state response of the error system (4) under the initial condition \(e(0)=[0.3,-0.5,0.2]^{T}\). It is clear that \(e(t)\) converges rapidly to zero. The simulation results reveal the effectiveness of the proposed approach to the design of the state estimator for static neural networks.

5 Conclusions

In this paper, we investigated the \(H_{\infty}\) state estimation problem for a class of delayed static neural networks. By constructing augmented Lyapunov-Krasovskii functionals, new delay-dependent conditions were established. The estimator gain matrix was obtained by solving a set of LMIs, which guarantee the global exponential stability of the error system with a prescribed \(H_{\infty}\) performance level. Finally, numerical examples were provided to illustrate the effectiveness of the proposed method in comparison with some existing results.

References

Kwon, OM, Lee, SM, Park, JH: Improved results on stability analysis of neural networks with time-varying delays: novel delay-dependent criteria. Mod. Phys. Lett. B 24(8), 775-789 (2010)

Du, B, Lam, J: Stability analysis of static recurrent neural networks using delay-partitioning and projection. Neural Netw. 22(4), 343-347 (2009)

Lu, J, Wang, Z, Cao, J, Ho, DW, Kurths, J: Pinning impulsive stabilization of nonlinear dynamical networks with time-varying delay. Int. J. Bifurc. Chaos 22(7), 1250176 (2012)

Zhang, B, Lam, J, Xu, S: Relaxed results on reachable set estimation of time-delay systems with bounded peak inputs. Int. J. Robust Nonlinear Control 26(9), 1994-2007 (2015)

Lu, R, Xu, Y, Xue, A: \(H_{\infty}\) filtering for singular systems with communication delays. Signal Process. 90(4), 1240-1248 (2010)

Wu, Z-G, Shi, P, Su, H, Lu, R: Dissipativity-based sampled-data fuzzy control design and its application to truck-trailer system. IEEE Trans. Fuzzy Syst. 23(5), 1669-1679 (2015)

Ali, MS, Saravanan, S, Cao, J: Finite-time boundedness, \(L_{2}\)-gain analysis and control of Markovian jump switched neural networks with additive time-varying delays. Nonlinear Anal. Hybrid Syst. 23, 27-43 (2017)

Ali, MS, Yogambigai, J: Synchronization of complex dynamical networks with hybrid coupling delays on time scales by handling multitude Kronecker product terms. Appl. Math. Comput. 291, 244-258 (2016)

Cheng, J, Park, JH, Zhang, L, Zhu, Y: An asynchronous operation approach to event-triggered control for fuzzy Markovian jump systems with general switching policies. IEEE Trans. Fuzzy Syst. (2016, to appear)

Arunkumar, A, Sakthivel, R, Mathiyalagan, K, Park, JH: Robust stochastic stability of discrete-time fuzzy Markovian jump neural networks. ISA Trans. 53(4), 1006-1014 (2014)

Shi, K, Zhu, H, Zhong, S, Zeng, Y, Zhang, Y: Less conservative stability criteria for neural networks with discrete and distributed delays using a delay-partitioning approach. Neurocomputing 140, 273-282 (2014)

Shi, K, Zhu, H, Zhong, S, Zeng, Y, Zhang, Y: New stability analysis for neutral type neural networks with discrete and distributed delays using a multiple integral approach. J. Franklin Inst. 352(1), 155-176 (2015)

Kwon, OM, Park, MJ, Park, JH, Lee, SM, Cha, EJ: Improved approaches to stability criteria for neural networks with time-varying delays. J. Franklin Inst. 350(9), 2710-2735 (2013)

Ali, MS, Arik, S, Rani, ME: Passivity analysis of stochastic neural networks with leakage delay and Markovian jumping parameters. Neurocomputing 218, 139-145 (2016)

Xu, Z-B, Qiao, H, Peng, J, Zhang, B: A comparative study of two modeling approaches in neural networks. Neural Netw. 17(1), 73-85 (2004)

Qiao, H, Peng, J, Xu, Z-B, Zhang, B: A reference model approach to stability analysis of neural networks. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 33(6), 925-936 (2003)

Li, P, Cao, J: Stability in static delayed neural networks: a nonlinear measure approach. Neurocomputing 69(13), 1776-1781 (2006)

Zheng, C-D, Zhang, H, Wang, Z: Delay-dependent globally exponential stability criteria for static neural networks: an LMI approach. IEEE Trans. Circuits Syst. II 56(7), 605-609 (2009)

Shao, H: Delay-dependent stability for recurrent neural networks with time-varying delays. IEEE Trans. Neural Netw. 19(9), 1647-1651 (2008)

Li, X, Gao, H, Yu, X: A unified approach to the stability of generalized static neural networks with linear fractional uncertainties and delays. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 41(5), 1275-1286 (2011)

Wu, Z-G, Lam, J, Su, H, Chu, J: Stability and dissipativity analysis of static neural networks with time delay. IEEE Trans. Neural Netw. Learn. Syst. 23(2), 199-210 (2012)

Shi, K, Liu, X, Tang, Y, Zhu, H, Zhong, S: Some novel approaches on state estimation of delayed neural networks. Inf. Sci. 372, 313-331 (2016)

Li, T, Fei, S, Zhu, Q: Design of exponential state estimator for neural networks with distributed delays. Nonlinear Anal., Real World Appl. 10(2), 1229-1242 (2009)

Mahmoud, MS: New exponentially convergent state estimation method for delayed neural networks. Neurocomputing 72(16), 3935-3942 (2009)

Zheng, C-D, Ma, M, Wang, Z: Less conservative results of state estimation for delayed neural networks with fewer LMI variables. Neurocomputing 74(6), 974-982 (2011)

Zhang, D, Yu, L: Exponential state estimation for Markovian jumping neural networks with time-varying discrete and distributed delays. Neural Netw. 35, 103-111 (2012)

Chen, Y, Zheng, WX: Stochastic state estimation for neural networks with distributed delays and Markovian jump. Neural Netw. 25, 14-20 (2012)

Rakkiyappan, R, Sakthivel, N, Park, JH, Kwon, OM: Sampled-data state estimation for Markovian jumping fuzzy cellular neural networks with mode-dependent probabilistic time-varying delays. Appl. Math. Comput. 221, 741-769 (2013)

Huang, H, Huang, T, Chen, X: A mode-dependent approach to state estimation of recurrent neural networks with Markovian jumping parameters and mixed delays. Neural Netw. 46, 50-61 (2013)

Lakshmanan, S, Mathiyalagan, K, Park, JH, Sakthivel, R, Rihan, FA: Delay-dependent \(H_{\infty}\) state estimation of neural networks with mixed time-varying delays. Neurocomputing 129, 392-400 (2014)

Huang, H, Feng, G, Cao, J: State estimation for static neural networks with time-varying delay. Neural Netw. 23(10), 1202-1207 (2010)

Huang, H, Feng, G: Delay-dependent \(H_{\infty}\) and generalized \(H_{2}\) filtering for delayed neural networks. IEEE Trans. Circuits Syst. I 56(4), 846-857 (2009)

Huang, H, Feng, G, Cao, J: Guaranteed performance state estimation of static neural networks with time-varying delay. Neurocomputing 74(4), 606-616 (2011)

Duan, Q, Su, H, Wu, Z-G: \(H_{\infty}\) state estimation of static neural networks with time-varying delay. Neurocomputing 97, 16-21 (2012)

Huang, H, Huang, T, Chen, X: Guaranteed \(H_{\infty}\) performance state estimation of delayed static neural networks. IEEE Trans. Circuits Syst. II 60(6), 371-375 (2013)

Liu, Y, Lee, SM, Kwon, OM, Park, JH: A study on \(H_{\infty}\) state estimation of static neural networks with time-varying delays. Appl. Math. Comput. 226, 589-597 (2014)

Ali, MS, Saravanakumar, R, Arik, S: Novel \(H_{\infty}\) state estimation of static neural networks with interval time-varying delays via augmented Lyapunov-Krasovskii functional. Neurocomputing 171, 949-954 (2016)

Gu, K, Chen, J, Kharitonov, VL: Stability of Time-Delay Systems. Springer, Berlin (2003)

Zeng, H-B, He, Y, Wu, M, She, J: Free-matrix-based integral inequality for stability analysis of systems with time-varying delay. IEEE Trans. Autom. Control 60(10), 2768-2772 (2015)

Acknowledgements

This work was supported by the Sichuan Science and Technology Plan (2017GZ0166).


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors have made equal contributions to the writing of this paper. All authors have read and approved the final version of the manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wen, B., Li, H. & Zhong, S. New results on \(H_{\infty}\) state estimation of static neural networks with time-varying delay. Adv Differ Equ 2017, 17 (2017). https://doi.org/10.1186/s13662-016-1056-3


DOI: https://doi.org/10.1186/s13662-016-1056-3