Abstract

This paper proposes an observer design for T-S fuzzy singular systems. First, we give a general model of nonlinear singular systems, use the T-S fuzzy method to form a T-S fuzzy singular system, and give its augmented system and compact form. Second, we design a T-S fuzzy observer for the augmented system. To prove that the parameter and state estimation errors of the T-S fuzzy observer are globally stable, we construct a Lyapunov function in T-S fuzzy form. We then give a sufficient condition under which the fuzzy control system is globally exponentially stable, together with the controller gains. Finally, we give two numerical examples, and the simulation results, compared with the observer from the literature (Zulfiqar et al. in Appl. Math. Model. 40(3):2301-2311, 2016), demonstrate the effectiveness of the proposed observer for the nonlinear singular system.

1 Introduction

State feedback plays an important role in solving many complex problems in control systems. Many control objectives, such as stabilization, decoupling, tracking without static error, and optimal control, can be achieved by introducing proper state feedback. However, because the system state often cannot be measured directly, or because measuring equipment is limited by cost or practicality, it is often impossible to obtain all the state variables, which makes a physical implementation of state feedback very difficult. The performance requirements of state feedback are thus at odds with what can be physically implemented. One way to solve this problem is to reconstruct the state of the system and use the reconstructed state in place of the real state in the feedback law. This state reconstruction problem is the problem of observer design. In this paper, we design effective state observers for nonlinear systems using the T-S fuzzy control method.

In the past 20 years, scholars have done a great deal of research on the design of nonlinear observers. Beginning in the early 1960s, the well-known Kalman filter [2] and Luenberger observer [3] led to a complete design method for state observers of linear systems. Unlike the linear case, nonlinear observer design is more complex, and there is still no unified analysis method for nonlinear systems. A currently popular approach is to classify the systems first and then study the existence and design of state observers for each class. For example, classified by the form of the nonlinearity, the well-studied classes include Lipschitz nonlinear systems [4, 5], systems whose nonlinearities depend only on the output [6, 7], multivariable nonlinear systems satisfying the circle criterion [8], and strict-feedback stochastic nonlinear systems [9].

At present, observer design for nonlinear systems is aimed at particular classes of nonlinear systems. Yi and Zhang [10] developed an extended updated-gain high gain observer to make a tradeoff between reconstruction speed and measurement noise attenuation. Huang et al. [11] proposed a new method to design observers for Lur’e differential inclusion systems. Generally speaking, the design methods for nonlinear observers can be grouped into several classes: Lyapunov function methods [12, 13], differential geometric methods [14, 15], the extended Luenberger observer method [16, 17], and the extended Kalman filtering method [18, 19]. Chadli and Karimi dealt with the observer design for Takagi-Sugeno (T-S) fuzzy models subject to unknown inputs and disturbances affecting both the states and the outputs of the system [20]. Yin et al. presented an approach for the data-driven design of fault diagnosis systems [21]. Aouaouda et al. were concerned with robust sensor fault detection observer (SFDO) design for uncertain and disturbed discrete-time Takagi-Sugeno (T-S) systems using the \(H_{-} /H_{\infty}\) criterion [22]. Zhao et al. proposed an input-output approach to the stability and stabilization of uncertain Takagi-Sugeno (T-S) fuzzy systems with time-varying delay [23].

Singular systems, also called differential algebraic systems, have produced many theoretical results over nearly 30 years and have found many applications in aviation, aerospace, robotics, power systems, electronic networks, chemistry, biology, economics, and other fields [24–26]. At present, the study of singular systems remains an area of great interest in control theory at home and abroad. Singular systems describe a more extensive class of practical system models. In particular, singular systems can exhibit impulse behavior, which makes the related research more complex and novel. The topic therefore has important academic value and a broad application background. In 1999, Taniguchi et al. extended the normal fuzzy system to a more general setting and put forward the fuzzy singular system [27, 28], which uses multiple local linear singular systems to approximate a global nonlinear system, so that the control problem for global nonlinear singular systems can be solved with the help of linear system analysis and control methods.

In this paper, we first apply the T-S fuzzy method to nonlinear singular systems and form a T-S fuzzy singular system. Second, to obtain better state feedback, we use the Lyapunov function method to design an observer in T-S fuzzy form. We then prove that the parameter and state estimation errors of the T-S fuzzy observer are globally stable, give a sufficient condition under which the fuzzy control system is globally exponentially stable, and give the controller gains. Finally, simulation results illustrate the correctness of the results and the effectiveness of the observer.

Notations

The symbol R denotes the set of real numbers, and \(R^{n}\) denotes the n-dimensional Euclidean space. The superscript ‘T’ stands for matrix transposition. \(A > 0\) and \(A < 0\) denote that A is symmetric positive definite and symmetric negative definite, respectively. For a matrix A, \(A^{ - 1}\), \(\Vert A \Vert \), \(\lambda_{\min} (A)\), and \(\lambda _{\max}(A)\) denote its inverse, induced norm, minimum eigenvalue, and maximum eigenvalue, respectively. \(C^{0}\) and \(C^{1}\) represent the classes of continuous and continuously differentiable functions, respectively. \(\Sigma^{ +}\) represents the generalized inverse of the matrix Σ.

2 Preliminaries

Lemma 1

For matrices \(v_{1}\) and \(v_{2}\) of appropriate dimensions, a positive scalar k, and an arbitrary matrix norm \(\Vert \bullet \Vert \), the following relations hold:

Lemma 2

[29]

The singular value decomposition of \(C^{*}\) is

$$ C^{*} = U \begin{bmatrix} \Sigma & 0 \\ 0 & 0 \end{bmatrix} H^{T}, $$

where \(U \in R^{q \times q}\) is an orthogonal matrix, \(\Sigma= \operatorname{diag} [ \sigma_{1}(C^{*} ), \ldots,\sigma_{r_{c}}(C^{*} ) ]\), \(\sigma_{k}(C^{*} )\), \(k = 1,2, \ldots,r_{c}\), are the nonzero singular values of \(C^{*}\), \(r_{c} = \operatorname{rank}(C^{*} ) \le q\), and \(H \in R^{n \times n}\) is an orthogonal matrix. Then the pseudo-inverse of \(C^{*}\), which is denoted by \(C^{+}\), is

$$ C^{+} = H \begin{bmatrix} \Sigma^{-1} & 0 \\ 0 & 0 \end{bmatrix} U^{T}. $$
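The construction in Lemma 2 can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the function name `pinv_via_svd`, the tolerance `tol`, and the rank-deficient matrix `C_star` are hypothetical illustrations and are not taken from the paper.

```python
import numpy as np

def pinv_via_svd(C, tol=1e-12):
    """Moore-Penrose pseudo-inverse of C assembled from its SVD."""
    # NumPy factors C = U @ S @ Ht with U (q x q) and Ht (n x n) orthogonal;
    # Ht plays the role of H^T in Lemma 2.
    U, s, Ht = np.linalg.svd(C, full_matrices=True)
    r = int(np.sum(s > tol))              # numerical rank r_c
    q, n = C.shape
    middle = np.zeros((n, q))             # block [[Sigma^{-1}, 0], [0, 0]], size n x q
    middle[:r, :r] = np.diag(1.0 / s[:r])
    return Ht.T @ middle @ U.T            # C^+ = H [Sigma^{-1} 0; 0 0] U^T

# Hypothetical rank-1 example with q = 2 rows and n = 3 columns.
C_star = np.array([[1.0, 2.0, 0.0],
                   [2.0, 4.0, 0.0]])
C_plus = pinv_via_svd(C_star)
```

Only the nonzero singular values are inverted, which is why the formula still works when \(C^{*}\) does not have full rank.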

Lemma 3

For any positive a, b, the following equation holds true:

Lemma 4

Rayleigh-Ritz theorem

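Let \(A^{*} \in R^{n \times n}\) be a positive definite, real, and symmetric matrix. Then \(\forall x \in R^{n}\), the following inequality holds:

$$ \lambda_{\min} \bigl(A^{*} \bigr) \Vert x \Vert ^{2} \le x^{T}A^{*}x \le\lambda_{\max} \bigl(A^{*} \bigr) \Vert x \Vert ^{2}. $$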
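As a quick numerical illustration of the Rayleigh-Ritz bounds, the sketch below samples random vectors and checks that the quadratic form stays between the two eigenvalue bounds. The matrix `A_star` and the helper name `rayleigh_ritz_bounds` are hypothetical; NumPy is assumed.

```python
import numpy as np

def rayleigh_ritz_bounds(A, x):
    """Return (lower, quad, upper) for a real symmetric matrix A and vector x."""
    eigvals = np.linalg.eigvalsh(A)       # eigenvalues in ascending order
    norm_sq = float(x @ x)                # squared Euclidean norm of x
    quad = float(x @ A @ x)               # quadratic form x^T A x
    return eigvals[0] * norm_sq, quad, eigvals[-1] * norm_sq

# Hypothetical symmetric positive definite example.
A_star = np.array([[2.0, 1.0],
                   [1.0, 3.0]])
rng = np.random.default_rng(0)
samples = [rayleigh_ritz_bounds(A_star, rng.standard_normal(2)) for _ in range(100)]
# Small slack absorbs floating-point rounding.
bounds_hold = all(lo - 1e-12 <= q <= hi + 1e-12 for lo, q, hi in samples)
```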

3 Problem statement

Consider a singular nonlinear system described by

where \(x(t) \in R^{n}\) is the state variable, \(y(t) \in R^{p}\) is the measured output, and \(u(t) \in R^{m}\) is the control input. \(f:R^{n} \times R^{m} \times R \to R^{f}\) is an unknown continuous nonlinear function, \(E \in R^{n \times n}\) is a singular matrix, and \(A \in R^{n \times n}\), \(B \in R^{n \times m}\), \(C \in R^{p \times n}\), and \(D \in R^{n \times f}\) are known constant matrices.

System (3.1) is a classic nonlinear model. Because nonlinear models are difficult to handle directly, we use the T-S fuzzy approach to approximate the nonlinear system by linear systems, so that the problem for the nonlinear system is transformed into a problem for linear systems.

Model rule i:

If \(\xi_{1}(t)\) is \(M_{i1}\) and ⋯ and \(\xi_{q}(t)\) is \(M_{iq}\)

then

$$ \left \{ \textstyle\begin{array}{l} E\dot{x}(t) = A_{i}x(t) + B_{i}u(t), \\ y = C_{i}x(t), \end{array}\displaystyle \right .\quad i = 1,2, \ldots,r, $$(3.2)

where \(M_{ij}\) is a fuzzy set, r is the number of rules, \(A_{i} \in R^{n \times n}\), \(B_{i} \in R^{n \times m}\), \(C_{i} \in R^{p \times n}\), and \(\xi_{1}(t),\xi_{2}(t), \ldots,\xi_{q}(t)\) are known premise variables that may be functions of the state variables. We use \(\xi(t)\) to denote the vector containing all individual elements \(\xi_{1}(t),\xi_{2}(t), \ldots,\xi_{q}(t)\). \(\beta_{i}(\xi(t)) = \prod_{j = 1}^{q} M_{ij}(\xi_{j}(t))\) is the membership function of system (3.2) with respect to the ith plant rule.
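The rule weights can be sketched in code as follows. This is a minimal illustration, assuming the usual T-S weighted-average step in which each \(\beta_{i}\) is divided by the sum of all \(\beta_{j}\); that normalization, the triangular-style membership functions, the bound `d`, and the matrices `A1`, `A2` are assumptions made for the example, not values from the paper.

```python
import numpy as np

def rule_weights(xi, memberships):
    """Normalized firing strengths; memberships[i][j] is a callable M_ij."""
    # beta_i = prod_j M_ij(xi_j), one value per rule
    beta = np.array([np.prod([M(v) for M, v in zip(row, xi)])
                     for row in memberships])
    return beta / beta.sum()              # assumed normalization: weights sum to 1

def blend(weights, matrices):
    """Weighted sum of local matrices, e.g. sum_i w_i A_i."""
    return sum(w * M for w, M in zip(weights, matrices))

d = 10.0                                  # hypothetical bound on the premise variable
memberships = [
    [lambda s: 0.5 * (1.0 - s / d)],      # rule 1, hypothetical M_11
    [lambda s: 0.5 * (1.0 + s / d)],      # rule 2, hypothetical M_21
]
A1 = np.array([[0.0, 1.0], [-1.0, -1.0]]) # hypothetical local models
A2 = np.array([[0.0, 1.0], [-2.0, -1.0]])

w = rule_weights([5.0], memberships)      # premise variable xi_1 = 5
A_blend = blend(w, [A1, A2])
```

With \(\xi_{1} = 5\) the two rules fire with strengths 0.25 and 0.75, so the blended matrix lies three quarters of the way from `A1` to `A2`.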

According to the characteristics of T-S fuzzy approximation, we can approximate the global nonlinear function with a number of local linear functions. So we can get the following model:

where

According to the system (3.3), the following augmented system is considered:

and its compact form is defined by

where \(z(t) = [ x^{T}(t) \ \dot{x}^{T}(t)]^{T} \in R^{2n}\) is the augmented state, \(\overline{E} = \bigl [{\scriptsize\begin{matrix}{} I_{n} & 0 \cr 0 & 0\end{matrix}} \bigr ] \in R^{2n \times2n}\), \(\overline{A}_{i} = \bigl [{\scriptsize\begin{matrix}{}0 & I_{n} \cr A_{i} & - E\end{matrix}} \bigr ] \in R^{2n \times2n}\), \(\overline{B}_{i} = \bigl [{\scriptsize\begin{matrix}{} 0 \cr B_{i}\end{matrix}} \bigr ] \in R^{2n \times m}\), \(\overline{C}_{i} = \bigl [{\scriptsize\begin{matrix}{} C_{i} & 0 \cr 0 & C_{i}\end{matrix}} \bigr ] \in R^{2p \times 2n}\), \(i = 1,2, \ldots,r\).
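The block structure of the augmented matrices can be verified on a small case. The sketch below builds \(\overline{E}\), \(\overline{A}_{i}\), \(\overline{B}_{i}\), \(\overline{C}_{i}\) for a single rule and checks that a point satisfying \(E\dot{x} = A_{i}x + B_{i}u\) also satisfies \(\overline{E}\dot{z} = \overline{A}_{i}z + \overline{B}_{i}u\). The numerical matrices and the helper name `augment` are hypothetical; NumPy is assumed.

```python
import numpy as np

def augment(E, A_i, B_i, C_i):
    """Build the augmented matrices for one fuzzy rule from (E, A_i, B_i, C_i)."""
    n, m, p = E.shape[0], B_i.shape[1], C_i.shape[0]
    I, Z = np.eye(n), np.zeros((n, n))
    E_bar = np.block([[I, Z], [Z, Z]])                 # 2n x 2n
    A_bar = np.block([[Z, I], [A_i, -E]])              # 2n x 2n
    B_bar = np.vstack([np.zeros((n, m)), B_i])         # 2n x m
    C_bar = np.block([[C_i, np.zeros((p, n))],
                      [np.zeros((p, n)), C_i]])        # 2p x 2n
    return E_bar, A_bar, B_bar, C_bar

# Hypothetical singular example with n = 2, m = 1, p = 1.
E = np.array([[1.0, 0.0], [0.0, 0.0]])
A = np.array([[0.0, 1.0], [1.0, -1.0]])
B = np.array([[0.0], [1.0]])
C = np.array([[0.0, 1.0]])
E_bar, A_bar, B_bar, C_bar = augment(E, A, B, C)

# A consistent point: E @ xdot equals A @ x + B @ u for these values.
x, xdot, u = np.array([1.0, 3.0]), np.array([3.0, 0.5]), np.array([2.0])
z = np.concatenate([x, xdot])
zdot = np.concatenate([xdot, np.zeros(2)])  # second half is multiplied by zero in E_bar
residual = E_bar @ zdot - (A_bar @ z + B_bar @ u)
```

The first block row of the augmented equation is the identity \(\dot{x} = \dot{x}\), and the second block row restates the original singular dynamics as an algebraic constraint on \(z\).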

In order to facilitate the design of the observer, we make the following assumptions:

1. The T-S fuzzy augmented system (3.5) is solvable and impulse free.

2. The first-order derivative of the output vector is available.

3. \(\operatorname{rank}\Bigl[ {\scriptsize\begin{matrix}{} \overline{E} \cr \Gamma\overline{A}_{i} \cr \overline{C}_{i}\end{matrix}} \Bigr] = 2n\), \(i = 1,2, \ldots,r\), where the matrix \(\Gamma\in R^{r_{1} \times2n}\) has full row rank and \(\Gamma[ \overline{E} \ 0 \ 0] = 0\), with \(\operatorname{rank}([ \overline{E} \ 0 \ 0]) = n + p\).
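The rank condition in the third assumption can be tested numerically for each rule. The sketch below is an illustration only: the choice \(\Gamma = [0 \ I_{n}]\), which satisfies \(\Gamma\overline{E} = 0\), and all numerical matrices are hypothetical, and the paper's own Γ may have a different row dimension. NumPy is assumed.

```python
import numpy as np

def rank_condition_holds(E_bar, A_bar, C_bar, Gamma):
    """Check rank([E_bar; Gamma @ A_bar; C_bar]) == 2n for one rule."""
    stacked = np.vstack([E_bar, Gamma @ A_bar, C_bar])
    return bool(np.linalg.matrix_rank(stacked) == E_bar.shape[1])

def c_bar(C):
    """Block-diagonal augmented output matrix [[C, 0], [0, C]]."""
    zero = np.zeros_like(C)
    return np.block([[C, zero], [zero, C]])

n = 2
I, Z = np.eye(n), np.zeros((n, n))
E = np.array([[1.0, 0.0], [0.0, 0.0]])    # hypothetical singular E
A = np.array([[0.0, 1.0], [1.0, -1.0]])   # hypothetical A_i
E_bar = np.block([[I, Z], [Z, Z]])
A_bar = np.block([[Z, I], [A, -E]])
Gamma = np.hstack([Z, I])                 # hypothetical Gamma with Gamma @ E_bar = 0

# Measuring x_2 satisfies the condition; measuring x_1 does not in this example.
ok_case = rank_condition_holds(E_bar, A_bar, c_bar(np.array([[0.0, 1.0]])), Gamma)
bad_case = rank_condition_holds(E_bar, A_bar, c_bar(np.array([[1.0, 0.0]])), Gamma)
```

For this particular Γ the condition reduces to requiring that E stacked on \(C_{i}\) has rank n, which is why the output must see the direction that E annihilates.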

4 Main results

In 2008, Karimi [30] presented a convex optimization method for observer-based mixed \(H_{2}/H_{\infty}\) control design of linear systems with time-varying state, input, and output delays, and gave delay-dependent sufficient conditions, in the form of linear matrix inequalities, for the design of the desired observer-based control. Kao et al. [31] focused on designing a sliding-mode control for a class of neutral-type stochastic systems with Markovian switching parameters and nonlinear uncertainties.

Consider the following continuous observer for the ‘subsystem’ of the augmented system (3.5):

Below we will explain the meaning of the parameters of system (4.1):

\(\theta(t) \in R^{q}\) is the observer state vector, \(\bar{z}(t)\) represents the estimation of \(\bar{y}(t)\). \(L_{i}\) is the estimator gain and continuous:

where \(\tilde{\bar{y}}(t) = \bar{z}(t) - \bar{y}(t)\) is the output reconstruction error,

and \(\gamma_{1}\) satisfies the following conditions:

where \(N_{i}\) is a Hurwitz matrix, and \(X_{i}\) and \(Q_{1}\) are symmetric positive definite matrices.
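The Hurwitz property and the existence of a positive definite Lyapunov matrix can be illustrated as follows. This sketch solves the generic Lyapunov equation \(N^{T}X + XN = -Q\), which is a standard consequence of N being Hurwitz; it is not the paper's condition on \(\gamma_{1}\), \(X_{i}\), and \(Q_{1}\). The matrices `N` and `Q` and the helper names are hypothetical; NumPy is assumed.

```python
import numpy as np

def is_hurwitz(N):
    """True if every eigenvalue of N has a strictly negative real part."""
    return bool(np.all(np.linalg.eigvals(N).real < 0))

def solve_lyapunov(N, Q):
    """Solve N^T X + X N = -Q by vectorization (intended for small matrices)."""
    n = N.shape[0]
    I = np.eye(n)
    # Kronecker form of the linear map X -> N^T X + X N acting on vec(X)
    M = np.kron(I, N.T) + np.kron(N.T, I)
    X = np.linalg.solve(M, -Q.reshape(-1)).reshape(n, n)
    return 0.5 * (X + X.T)                # symmetrize against rounding

N = np.array([[-1.0, 2.0],
              [0.0, -3.0]])               # hypothetical Hurwitz matrix
Q = np.eye(2)                             # hypothetical positive definite Q
X = solve_lyapunov(N, Q)
X_is_pd = bool(np.all(np.linalg.eigvalsh(X) > 0))
```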

According to the literature [32], we get the relevant parameters:

\(R \in R^{q \times2n}\) is a full row rank matrix,

where \(r_{1} = n - p\), Y, Z, W are arbitrary matrices of appropriate dimension.

Theorem 1

For the T-S fuzzy observer (4.1), the parameters and state estimation errors are globally stable, if the following equations (4.3)-(4.5) hold:

and there exist positive \(\gamma_{1}\), \(X_{i} = X_{i}^{T} > 0\), satisfying the following matrix inequality:

Proof

First we define ε as the error of θ and \(T_{i}\overline{E}z\). That is \(\varepsilon= \theta- T_{i}\overline{E}z\). According to (3.5) and (4.1), we can obtain

According to (4.3) and the process of solving \(H_{i}\), we can get

By considering the equation

we have the equation

So

That is,

Using equation (4.8), θ and z̄, we can get

which can be rewritten as

According to equation (4.4), the augmented state observation error is obtained:

where \(\tilde{z} = \bar{z} - z\).

We now carry out the stability analysis. First, we construct a positive definite function as a Lyapunov function:

By using (4.6), the time derivative of V is written:

According to Lemma 1, we can get

Then \(X_{i} = S_{i}\overline{C}_{i}K_{i}\), \(i = 1,2, \ldots,r\), where \(S_{i}\) is computed using Lemma 2. So

Using equation (4.2), we can obtain

Because \(h_{2i}(t) > 0\) and \(\sup\dot{h}_{1}(t) < 0\), combining with Lemma 3 gives

\(h_{2i}(t)\) should satisfy this inequality:

The result is the following inequality:

Because

and by applying the Schur complement lemma and the Rayleigh-Ritz theorem, we can get

Therefore, the derivative of V is negative semidefinite. According to the Lyapunov theorem, ε, z̃, and the parameter estimation errors are globally stable.

According to the above analysis, we proved the theorem. □

In view of the system (4.1), we give a kind of fuzzy control scheme.

Model rule i:

If \(\xi_{1}(t)\) is \(M_{i1}\) and ⋯ and \(\xi_{q}(t)\) is \(M_{iq}\)

then \(u(t) = K_{l}x(t)\),

where \(l \in L: = \{ 1,2, \ldots,r\}\), which can be rewritten as

The fuzzy control system consisting of the fuzzy system (4.1) and smooth controller (4.14) can be rewritten as

Theorem 2

The fuzzy control system (4.15) is globally exponentially stable if there exist a set of matrices \(Q_{l}\), \(l \in L\), a set of symmetric matrices \(\Phi_{l}\), \(l \in L\), a set of matrices \(\Phi_{li} = \Phi_{il}^{T}\), \(i,l \in L\), \(l < i\), and a positive definite matrix X satisfying the following LMIs:

and the controller gains can be determined by

Proof

First of all, we define the Lyapunov function

where the matrix X is positive definite [33].

By applying the Schur complement to (4.16) and (4.17), respectively, one has

We define \(A_{li} = N_{l} + H_{l}K_{i}\) and \(B = L_{l}(a,b,c,\bar{y}) + J_{l}\bar{y}\), and we consider the Lyapunov function defined in (4.20). Because the form of the following function is a sum, in order to facilitate the subsequent proof, we give the discrete form for the Lyapunov function:

where \(k > 0\).

Thus the fuzzy control system (4.15) is globally exponentially stable and the controller gains can be determined by (4.8). The proof is thus completed. □
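The Schur complement step used in the proofs can be illustrated numerically. The sketch below checks the standard equivalence for a symmetric block matrix: it is positive definite exactly when the lower-right block and its Schur complement are both positive definite. The paper's LMIs are not reproduced here; the blocks `A_blk`, `B_blk`, `C_blk` and the helper names are hypothetical, and NumPy is assumed.

```python
import numpy as np

def is_pd(M):
    """True if the symmetric matrix M is positive definite."""
    return bool(np.all(np.linalg.eigvalsh(M) > 0))

def schur_test(A_blk, B_blk, C_blk):
    """Compare direct and Schur-complement tests for [[A, B], [B^T, C]] > 0."""
    full = np.block([[A_blk, B_blk], [B_blk.T, C_blk]])
    # Schur complement of C: A - B C^{-1} B^T, computed without forming C^{-1}
    complement = A_blk - B_blk @ np.linalg.solve(C_blk, B_blk.T)
    return is_pd(full), is_pd(C_blk) and is_pd(complement)

A_blk = 2.0 * np.eye(2)
C_blk = np.array([[1.0]])
pd_case = schur_test(A_blk, np.array([[1.0], [0.0]]), C_blk)   # small coupling
not_pd_case = schur_test(A_blk, np.array([[2.0], [0.0]]), C_blk)  # large coupling
```

Both tests agree in each case, which is the property that lets a nonlinear matrix inequality be rewritten as a linear one in the unknown blocks.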

5 Examples

Example 1

In 1984, Mikania micrantha first appeared in Shenzhen. Mikania micrantha is a vine with a very strong reproductive ability. It climbs shrubs and trees, quickly covers the whole plant, and suffocates the host by blocking its photosynthesis. Mikania micrantha can also produce allelochemicals that inhibit the growth of other plants.

According to [34], we give the model of the invasion of Mikania micrantha:

where \(x(t)\), \(y(t)\), \(E(t)\) denote the density of native species, the density of alien species (Mikania micrantha), and the capture of the alien species (Mikania micrantha), respectively; \(r_{1}\) and \(r_{2}\) denote the intrinsic growth rate of native species and Mikania micrantha, respectively; k represents environmental capacity of native species; m represents the cost of artificial capture of Mikania micrantha; c represents unit capture cost of Mikania micrantha.

The values of the settings in [34] are \(r_{2} = 0.1\), \(k = 20\), \(c = 0.03\), \(r_{1} = 0.5\), \(m = 4\). So we can get

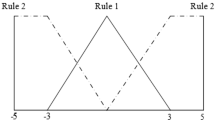

Because the species population densities saturate, it is reasonable to suppose \(x(t) \in[ - d_{1},d_{1}]\), \(y(t) \in[- d_{2},d_{2}]\), with \(d_{1} = 10\), \(d_{2} = 10\). On this domain, the fuzzy state model, which is appropriate for describing model system (4.15), can be written as follows:

Rule 1: If \(x(t)\) is \(M_{1}\) and \(y(t)\) is \(M_{3}\), then

Rule 2: If \(x(t)\) is \(M_{1}\) and \(y(t)\) is \(M_{4}\), then

Rule 3: If \(x(t)\) is \(M_{2}\) and \(y(t)\) is \(M_{3}\), then

Rule 4: If \(x(t)\) is \(M_{2}\) and \(y(t)\) is \(M_{4}\), then

Here

In order to observe the state variables, we add an output variable:

where

According to the definitions and results of fuzzy models, the integral fuzzy model is inferred as follows:

where \(\tilde{\lambda}_{1}(X(t)) = \frac{1}{4}(1 - \frac{x(t)}{d_{1}})\), \(\tilde{\lambda}_{2}(X(t)) = \frac{1}{4}(1 + \frac{x(t)}{d_{1}})\), \(\tilde{\lambda}_{3}(X(t)) = \frac{1}{4}(1 - \frac{y(t)}{d_{2}})\), \(\tilde{\lambda}_{4}(X(t)) = \frac{1}{4}(1 + \frac{y(t)}{d_{2}})\).
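The four weighting functions above can be checked to form a valid convex combination on the assumed domain. The following sketch evaluates them on a grid over \([-d_{1},d_{1}] \times[-d_{2},d_{2}]\) with \(d_{1} = d_{2} = 10\) as stated in the example; the function name `lambda_tilde` and the grid resolution are illustrative choices, and NumPy is assumed.

```python
import numpy as np

D1, D2 = 10.0, 10.0                       # bounds d_1, d_2 from the example

def lambda_tilde(x, y, d1=D1, d2=D2):
    """The four weights lambda_tilde_1..4 evaluated at the point (x, y)."""
    return np.array([
        0.25 * (1.0 - x / d1),
        0.25 * (1.0 + x / d1),
        0.25 * (1.0 - y / d2),
        0.25 * (1.0 + y / d2),
    ])

# Evaluate on a grid covering the whole box.
grid = np.linspace(-10.0, 10.0, 21)
values = np.array([lambda_tilde(x, y) for x in grid for y in grid])
sums_to_one = bool(np.allclose(values.sum(axis=1), 1.0))
nonnegative = bool(np.all(values >= 0.0))
```

The \(x\) terms and the \(y\) terms each cancel in the sum, so the weights add up to one everywhere, and they stay nonnegative as long as the states remain inside the box.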

According to (3.5), we can obtain matrices \(\overline{A}_{i}\), E̅, \(\overline{B}_{i}\), \(\overline{C}_{i}\), R, and Γ, \(i = 1,2,3,4\),

Applying the results of Section 4 gives \(N_{i}\), \(H_{i}\), \(L_{i}\), \(J_{i}\), \(K_{i}\), \(F_{i}\), \(S_{i}\), and \(Q_{i}\), \(i = 1,2,3,4\).

In addition, the following X and γ must satisfy the Schur complement condition:

Below we give the functions \(h_{1}(t)\), \(h_{2i}(t)\) (\(i = 1,2,3,4\)) and the initial value:

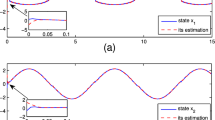

We give the state responses of the system in Figures 1, 2, and 3. In Figure 1, the red line represents the state response of \(x(t)\), and the blue line indicates the observer’s estimate. Figure 2 shows the state response of \(y(t)\), and the blue line indicates the observer’s estimate. In Figure 3, the red line indicates the state response of \(E(t)\), and the blue line indicates the observer’s estimate.

To illustrate the superiority of our observer design, we also apply the state observer of [1] to estimate the three states of the nonlinear singular system. In Figure 4, the red line shows the actual state response of \(x(t)\), and the blue line shows the estimate of \(x(t)\) by the literature observer. In Figure 5, the red line represents the actual state response of \(y(t)\), and the blue line shows the estimate of \(y(t)\) by the literature observer. In Figure 6, the red line shows the actual state of \(E(t)\), and the blue line shows the estimate of \(E(t)\) by the literature observer.

Figure 4. The comparison of the actual and estimated states of \(x(t)\) by the observer of [1].

Figure 5. The comparison of the actual and estimated states of \(y(t)\) by the observer of [1].

Figure 6. The comparison of the actual and estimated states of \(E(t)\) by the observer of [1].

Example 2

In view of system (5.2), we give the controller of the system (4.15).

Rule 1: If \(x(t)\) is \(M_{1}\) and \(y(t)\) is \(M_{3}\), then

Rule 2: If \(x(t)\) is \(M_{1}\) and \(y(t)\) is \(M_{4}\), then

Rule 3: If \(x(t)\) is \(M_{2}\) and \(y(t)\) is \(M_{3}\), then

Rule 4: If \(x(t)\) is \(M_{2}\) and \(y(t)\) is \(M_{4}\), then

Here

After adding the controller, the state responses of system (5.2) are shown in Figures 7, 8, and 9.

6 Conclusions

In this paper, we design a state observer for T-S fuzzy singular systems. We use the T-S fuzzy method to form a T-S fuzzy singular system, and then give its augmented system and compact form. We then design a T-S fuzzy observer for the system. To verify the global stability of the parameter and state estimation errors of the T-S fuzzy observer, we construct a Lyapunov function in T-S fuzzy form. Finally, we consider two numerical examples and compare, in simulation, the actual states with the states estimated by our observer. The comparison shows that the proposed observer is effective. In future work, we intend to consider the following two approaches from the literature: Kao et al. [35] investigated the problem of robust sliding-mode control for a class of uncertain Markovian jump linear time-delay systems with generally uncertain transition rates (GUTRs), and Noroozi et al. [36] gave semiglobal practical integral input-to-state stability (SP-iISS) for a feedback interconnection of two discrete-time subsystems.

References

Zulfiqar, A, Rehan, M, Abid, M: Observer design for one-sided Lipschitz descriptor systems. Appl. Math. Model. 40(3), 2301-2311 (2016)

Kalman, RE: A new approach to linear filtering and prediction problems. J. Basic Eng. 82, 35-45 (1960)

Luenberger, DG: An introduction to observer. IEEE Trans. Autom. Control 16(6), 596-602 (1971)

Rajamani, R: Observers for Lipschitz nonlinear systems. IEEE Trans. Autom. Control 43(3), 397-401 (1998)

Cho, YM, Rajamani, R: A systematic approach to adaptive observer synthesis for nonlinear systems. IEEE Trans. Autom. Control 12(4), 531-537 (1997)

Marino, R, Tomei, P: Adaptive observer for single output nonlinear system. IEEE Trans. Autom. Control 35(9), 1051-1058 (1990)

Marino, R, Tomei, P: Global adaptive observer for nonlinear systems via filtered transformations. IEEE Trans. Autom. Control 37(8), 1239-1245 (1992)

Arcak, M, Kokotovic, P: Nonlinear observers: a circle criterion design and robustness analysis. Automatica 37(12), 1923-1930 (2001)

Lin, YG, Zhang, JF: Reduced-order observer-based control design for stochastic nonlinear systems. Syst. Control Lett. 52(2), 123-135 (2004)

Yi, B, Zhang, WA: A nonlinear updated gain observer for MIMO systems: design, analysis and application to marine surface vessels. ISA Trans. 64, 129-140 (2016)

Huang, J, Gao, Y, Yu, L: Novel observer design method for Lur’e differential inclusion systems. Int. J. Syst. Sci. 47(9), 2128-2138 (2016)

Tsinias, J: Observer for nonlinear systems. Syst. Control Lett. 13(2), 135-142 (1989)

Tsinias, J: Further results on the observer design problem. Syst. Control Lett. 14(5), 411-418 (1990)

Krener, AJ, Respondek, M: Nonlinear observer with linearizable error dynamics. SIAM J. Control Optim. 23(2), 197-216 (1985)

Bestle, D, Zeitz, M: Canonical form observer with linearizable error dynamics. Int. J. Control 38(2), 419-431 (1983)

Zeitz, M: The extended Luenberger observer for nonlinear systems. Syst. Control Lett. 9(3), 149-156 (1987)

Birks, J, Zeitz, M: Extended Luenberger observer for nonlinear multivariable systems. Int. J. Control 47(5), 1823-1836 (1988)

Song, Y, Grizzle, JW: The extended Kalman filter as a local asymptotic observer for nonlinear discrete-time systems. J. Math. Syst. Estim. Control 5(1), 59-78 (1995)

Boutayeb, M, Aubry, D: A strong tracking extended Kalman observer for nonlinear discrete-time systems. IEEE Trans. Autom. Control 44(8), 1550-1556 (1999)

Chadli, M, Karimi, HR: Robust observer design for unknown inputs Takagi-Sugeno models. IEEE Trans. Fuzzy Syst. 21(1), 158-164 (2013)

Yin, S, Yang, X, Karimi, HR: Data-driven adaptive observer for fault diagnosis. Math. Probl. Eng. 2012, Article ID 832836 (2012)

Aouaouda, S, Chadli, M, Shi, P, Karimi, HR: Discrete-time \(H_{-}/H_{\infty}\) sensor fault detection observer design for nonlinear systems with parameter uncertainty. Int. J. Robust Nonlinear Control 25, 339-361 (2015)

Zhao, L, Gao, H, Karimi, HR: Robust stability and stabilization of uncertain T-S fuzzy systems with time-varying delay: an input-output approach. IEEE Trans. Fuzzy Syst. 21(5), 8285-8290 (2011)

Dai, L: Singular Control System. Springer, Berlin (1989)

Liu, YQ, Wang, W, Li, YQ: The Basic Theory and Application of the Solution of Delay Singular Systems. South China University of Technology Press, Guangzhou (1997)

Zhang, QL, Yang, DM: Analysis and Synthesis of Uncertain Singular Systems. Northeastern University press, Shenyang (2003)

Taniguchi, T, Tanaka, K, Wang, HO: Fuzzy descriptor systems: stability analysis and design via LMIs. In: Proceedings of the American Control Conference, vol. 3, pp. 1827-1831 (1999)

Taniguchi, T, Tanaka, K, Wang, HO: Fuzzy descriptor systems and nonlinear model following control. IEEE Trans. Fuzzy Syst. 8(4), 442-452 (2000)

Bernstein, DS: Matrix Mathematics. Princeton University Press, Princeton (2005)

Karimi, HR: Observer-based mixed \(H_{2}/H_{\infty}\) control design for linear systems with time-varying delays: an LMI approach. Int. J. Control. Autom. Syst. 6(1), 1-14 (2008)

Kao, Y, Xie, J, Wang, C, Karimi, HR: A sliding mode approach to \(H_{\infty}\) non-fragile observer-based control design for uncertain Markovian neutral-type stochastic systems. Automatica 52, 218-226 (2015)

Tanaka, K, Wang, HO: Fuzzy Control Systems Design and Analysis: A Linear Matrix Inequality Approach. Wiley, New York (2001)

Darouach, M, Boutat-Baddas, L: Observers for class of nonlinear singular systems. IEEE Trans. Autom. Control 53(11), 2627-2633 (2008)

Zhang, Y, Zhang, QL, Zheng, GF: \(H_{\infty}\) control of T-S fuzzy fish population logistic model with the invasion of alien species. Neurocomputing 73, 724-733 (2016)

Kao, Y, Xie, J, Zhang, L, Karimi, HR: A sliding mode approach to robust stabilisation of Markovian jump linear time-delay systems with generally incomplete transition rates. Nonlinear Anal. Hybrid Syst. 17, 70-80 (2015)

Noroozi, N, Khayatian, A, Karimi, HR: Semiglobal practical integral input-to-state stability for a family of parameterized discrete-time interconnected systems with application to sampled-data control systems. Nonlinear Anal. Hybrid Syst. 17(8), 10-24 (2015)

Acknowledgements

This work was supported by National Natural Science Foundation of China under Grant No. 61273008, National Natural Science Foundation of Liaoning Province under Grant No. 2015020007, Science and Technology Research Fund of Liaoning Education Department under Grant No. L2013051 and Jiangsu Planned Projects for Postdoctoral Research Funds under Grant No. 1401044, Doctor Startup Fund of Liaoning Province under Grant No. 20141069.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally and significantly in writing this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhang, Y., Jin, Z. & Zhang, Q. Observer design for a class of T-S fuzzy singular systems. Adv Differ Equ 2017, 4 (2017). https://doi.org/10.1186/s13662-016-1052-7

DOI: https://doi.org/10.1186/s13662-016-1052-7