Abstract

This paper presents a new method for the numerical solution of multiterm variable-order fractional differential equations (MTVOFDEs) with initial conditions, based on a class of modified shifted Jacobi polynomials (MSJPs). The defining feature of the MSJPs is that they satisfy the given initial conditions. The method relies on constructing operational matrices (OMs) for the ordinary derivatives (ODs) and variable-order fractional derivatives (VOFDs) of the MSJPs and on applying the spectral collocation method (SCM). These constructions enable efficient and accurate numerical computation. We establish the error analysis and the convergence of the proposed algorithm, providing theoretical guarantees for its effectiveness. To demonstrate the applicability and accuracy of the method, we present six numerical examples and compare the results with other published results; the comparisons confirm the advantage of our method in terms of accuracy and efficiency. The approximate and exact solutions agree very closely, as shown in tables and graphs.

1 Introduction

Fractional calculus has attracted significant interest from researchers across various disciplines over the past few decades. This interest stems from the fact that fractional operators offer a universal perspective on system evolution. As a result, fractional derivatives provide a more accurate description of certain physical phenomena [1–4]. Numerous definitions of fractional differentiation exist in the literature (for more information, we refer to [5–7]).

A recent advancement in fractional calculus extends the theory to accommodate VOFDs, which enables a more flexible and dynamic characterization of systems. The properties of VOFD operators were explored in [8]; see also [9, 10].

Variable-order fractional calculus (VOFC) provides a powerful framework for capturing the nonlocal characteristics exhibited by various systems, and it has been widely applied in physics, mechanics, control, and signal processing to describe real-world phenomena [11–15]. In the field of engineering mechanics, VOFC has found numerous applications. For instance, in [16], VOFD operators were utilized to model the microscopic structure of materials. The Riesz–Caputo fractional derivative of space-dependent order was employed in continuum elasticity, as demonstrated in [17]. The nonlinear viscoelastic behavior of fractional systems of time-dependent fractional order was investigated in [18, 19]. These examples highlight the diverse applications of VOFC in engineering mechanics, illustrating its ability to capture complex behaviors and phenomena.

Finding analytical solutions of fractional differential equations (FDEs) is challenging, so researchers rely on numerical approximations in most cases. Consequently, numerous numerical methods have been introduced and developed to obtain approximate solutions for this class of equations. Previous works have constructed numerical solutions of FDEs using orthogonal and non-orthogonal polynomials (see, for instance, [20–29]), and Bernstein polynomials were employed in [30, 31]. A numerical scheme based on Fourier analysis was proposed in [32], and schemes based on finite difference approximations were discussed in [33]. These references illustrate the diverse range of numerical methods used to approximate solutions of FDEs, including Jacobi polynomials (JPs), Legendre polynomials, Legendre wavelets, operational matrices, Chebyshev polynomials, and Bernstein polynomials.

Orthogonal JPs [34–37] possess numerous properties that make them highly valuable in the numerical solution of various types of differential equations (DEs), particularly through spectral methods. Their key characteristics include orthogonality, exponential accuracy, and the presence of two parameters that offer flexibility in shaping the approximate solutions. In the current research, we use MSJPs that satisfy the given initial conditions to develop an SCM capable of addressing linear and nonlinear FDEs of variable order. The algorithm is based on building two types of OMs, one for the ODs and one for the VOFDs of the MSJPs. Another advantage of the presented method is that it does not require uniqueness of the solution. This is important because many differential equations have multiple solutions, and the collocation method can still be used to approximate them. To elaborate, the collocation method approximates the solution by requiring the equation to be satisfied at a set of collocation points. Even if the solution is not unique, the method still produces an approximation that is accurate at the collocation points. As the number of collocation points increases, the approximation becomes more accurate and converges to the exact solution.

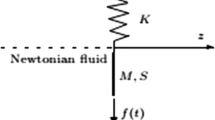

Here we examine the following general form of an MTVOFDE:

subject to the initial conditions

where n is the smallest positive integer number such that \(0<\nu _{1}(t)<\nu _{2}(t)<\cdots <\nu _{m}(t)<\nu (t)\le n\) for all \(t\in [0,\ell ]\), and \(D^{\nu (t)}y(t)\), \(D^{\nu _{i}(t)}y(t)\) \((i=1,2,\dots,m)\) are the VOFDs defined in the Caputo sense. Equations of the form (1.1) and (1.2) hold significant practical relevance, as they find applications in various domains. Specifically, these equations have been employed in noise reduction and signal processing [38, 39], geographical data processing [40], and signature verification [41]. These applications highlight the broad range of fields where these equations play a crucial role in addressing real-world challenges.
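For orientation, a problem of the class described here can be sketched in the following generic form. The right-hand side symbol \(F\) and the exact layout are assumptions made for illustration; the constants \(\beta_{j}\) are the initial data referred to in Sect. 5.1.

```latex
\begin{aligned}
& D^{\nu(t)}y(t)=F\bigl(t,\,y(t),\,D^{\nu_{1}(t)}y(t),\,\dots,\,D^{\nu_{m}(t)}y(t)\bigr),
\qquad t\in[0,\ell],\\
& y^{(j)}(0)=\beta_{j},\qquad j=0,1,\dots,n-1 .
\end{aligned}
```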

We address problem (1.1)–(1.2) by constructing a new Galerkin operational matrix (OM) of ODs and a new OM of VOFDs in the Caputo sense, both designed for the MSJP basis vector. These OMs serve as a powerful tool for achieving accurate numerical solutions through the SCM. To the best of our knowledge, this is the first instance in the literature of a method for solving a broad class of MTVOFDEs based on the Caputo derivative of the proposed basis vector. This methodology opens up new avenues for obtaining numerical solutions for this class of FDEs.

This paper is structured as follows. In Sect. 2, we review the fundamental notions and principles of VOFC. In Sect. 3, we present certain characteristics of the shifted JPs and MSJPs. In Sect. 4, we construct new OMs for the ODs and VOFDs of MSJPs, which are used to solve the problem given by equations (1.1) and (1.2). In Sect. 5, we apply the newly constructed OMs together with the SCM as a numerical approach to this problem. The error estimate for the numerical solution obtained through this new scheme is presented in Sect. 6. To illustrate the effectiveness of the proposed method, Sect. 7 includes six examples and comparisons with various other methods available in the literature. Finally, in Sect. 8, we summarize the main findings and draw conclusions based on our study.

2 Basic definition of Caputo variable-order fractional derivatives

In this section, we present the essential notions and fundamental tools necessary for developing the proposed technique. These notions and tools form the foundation upon which our approach is built, enabling us to effectively address the problem at hand.

Definition 2.1

[23, 31, 42] The Caputo VOFDs for \(h(t) \in C^{m}[0,\ell ]\) are defined as

In the context of a perfect beginning time and \(0< \mu (t)< 1\), we get the following:

Definition 2.2

[23, 31, 42] At the beginning time, we have

The Caputo VOFD operator has the following properties:

As shown in [23, 31], equation (2.1) yields

Remark 2.1

The interested reader can refer to [4, pp.35–42] for numerous definitions and more properties related to the VOFDs.
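As an illustration of how the Caputo VOFD acts on monomials, the following Python sketch evaluates the power rule commonly reported for this operator (for example in [23, 31]): the derivative vanishes for integer powers below \(\lceil \mu (t)\rceil \), and \(D^{\mu (t)}t^{k}=\frac{\Gamma (k+1)}{\Gamma (k+1-\mu (t))}t^{k-\mu (t)}\) otherwise. The function name `caputo_vo_power` is hypothetical, and the sample order is borrowed from Problem 7.3 purely as an example.

```python
import math

def caputo_vo_power(k, mu, t):
    """Caputo variable-order derivative of t**k evaluated at t (power rule).

    k  : non-negative integer exponent
    mu : callable returning the order mu(t) > 0
    """
    order = mu(t)
    if k < math.ceil(order):
        # integer powers below ceil(mu(t)) are annihilated, as for constants
        return 0.0
    return math.gamma(k + 1) / math.gamma(k + 1 - order) * t ** (k - order)

# sample variable order with 0 < mu(t) < 1 on [0, 1]
mu = lambda t: (t + 2.0 * math.exp(t)) / 7.0

value = caputo_vo_power(2, mu, 0.5)   # D^{mu(t)} t^2 at t = 0.5
```

With a constant order equal to 1 the rule reduces to the ordinary derivative, which gives a quick sanity check of the implementation.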

3 An overview on the shifted JPs and their modified ones

This section concentrates on presenting some elementary properties of the JPs and their shifted ones. Furthermore, we will introduce a new kind of orthogonal polynomials, which we call MSJPs.

3.1 An overview on the shifted JPs

The orthogonal JPs, \(P^{(\alpha,\beta )}_{n}(x), \alpha, \beta >-1\), satisfy the orthogonality relation [43]

where \(w^{\alpha,\beta}(x)=(1-x)^{\alpha}(1+x)^{\beta}\) and \(h^{(\alpha,\beta )}_{n}= \frac {2^{\lambda}\Gamma (n+\alpha +1)\Gamma (n+\beta +1)}{n!(2n+\lambda )\Gamma (n+\lambda )}, \lambda =\alpha +\beta +1\).

The so-called shifted JPs, \(P^{(\alpha,\beta )}_{\ell,n}(t)=P^{(\alpha,\beta )}_{n}(2t/\ell -1)\), satisfy the relation

where \(w^{\alpha,\beta}_{\ell}(t)=(\ell -t)^{\alpha} t^{\beta}\).
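The orthogonality of the shifted JPs can be checked numerically. The sketch below evaluates \(P^{(\alpha,\beta )}_{\ell,n}(t)\) through a standard explicit sum for Jacobi polynomials and approximates the weighted integral with a composite Simpson rule. It assumes that the normalization on \([0,\ell ]\) is \((\ell /2)^{\lambda}h^{(\alpha,\beta )}_{n}\), which follows from the substitution \(x=2t/\ell -1\); all function names are hypothetical, and the quadrature is only adequate for smooth weights (here integer \(\alpha,\beta \ge 0\)).

```python
import math

def shifted_jacobi(n, alpha, beta, ell, t):
    """P^{(alpha,beta)}_n(2t/ell - 1) via a standard explicit sum."""
    z = t / ell - 1.0                      # equals (x - 1)/2 with x = 2t/ell - 1
    pref = math.gamma(alpha + n + 1) / (
        math.factorial(n) * math.gamma(alpha + beta + n + 1))
    return pref * sum(
        math.comb(n, m)
        * math.gamma(alpha + beta + n + m + 1) / math.gamma(alpha + m + 1)
        * z ** m
        for m in range(n + 1))

def h_shifted(n, alpha, beta, ell):
    """Assumed normalization constant on [0, ell]: (ell/2)**lam * h_n."""
    lam = alpha + beta + 1
    h = (2 ** lam * math.gamma(n + alpha + 1) * math.gamma(n + beta + 1)
         / (math.factorial(n) * (2 * n + lam) * math.gamma(n + lam)))
    return (ell / 2) ** lam * h

def inner(m, n, alpha, beta, ell, panels=2000):
    """Composite Simpson approximation of the weighted inner product."""
    def f(t):
        return ((ell - t) ** alpha * t ** beta
                * shifted_jacobi(m, alpha, beta, ell, t)
                * shifted_jacobi(n, alpha, beta, ell, t))
    step = ell / panels
    total = f(0.0) + f(ell)
    for i in range(1, panels):
        total += (4 if i % 2 else 2) * f(i * step)
    return total * step / 3

off_diagonal = inner(1, 2, 1, 2, 2.0)      # expected to be close to zero
diagonal = inner(2, 2, 1, 2, 2.0)          # compare with h_shifted(2, 1, 2, 2.0)
```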

The power-form representation of \(P^{(\alpha,\beta )}_{\ell,n}(t)\) is as follows:

where

Alternatively, the expression for \(t^{k}\) in relation to \(P^{(\alpha,\beta )}_{\ell,r}(t)\) has the form

where

3.2 Introducing MSJPs

In this section, it is advantageous to introduce a definition for the polynomials \(\{\phi ^{(\alpha,\beta )}_{n,j}(t)\}_{j \ge 0}\) to satisfy the given form of homogeneous initial conditions:

Subsequently, the polynomials \(\phi ^{(\alpha,\beta )}_{n,j}(t)\) satisfy the orthogonality relation, as follows:

where \(w^{\alpha,\beta}_{n,\ell}(t)=\frac {1}{t^{2n}}(\ell -t)^{\alpha} t^{ \beta}\).

Remark 3.1

For \(n=0\), we have

and thus \(\phi ^{(\alpha,\beta )}_{n,i}(t)\) are generalizations of \(P^{(\alpha,\beta )}_{\ell,i}(t)\).
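The weight \(w^{\alpha,\beta}_{n,\ell}(t)\) and Remark 3.1 are consistent with taking \(\phi ^{(\alpha,\beta )}_{n,j}(t)=t^{n}P^{(\alpha,\beta )}_{\ell,j}(t)\). Assuming this form (it is inferred from the surrounding text rather than quoted), the homogeneous conditions and the orthogonality follow directly, with \(h^{(\alpha,\beta )}_{j}\) and \(\lambda \) as in Sect. 3.1:

```latex
\begin{aligned}
\frac{d^{k}}{dt^{k}}\phi^{(\alpha,\beta)}_{n,j}(t)\Big|_{t=0} &= 0,
\qquad k=0,1,\dots,n-1,\\
\int_{0}^{\ell} w^{\alpha,\beta}_{n,\ell}(t)\,
\phi^{(\alpha,\beta)}_{n,i}(t)\,\phi^{(\alpha,\beta)}_{n,j}(t)\,dt
&= \int_{0}^{\ell}(\ell-t)^{\alpha}t^{\beta}\,
P^{(\alpha,\beta)}_{\ell,i}(t)\,P^{(\alpha,\beta)}_{\ell,j}(t)\,dt
= \Bigl(\frac{\ell}{2}\Bigr)^{\lambda} h^{(\alpha,\beta)}_{j}\,\delta_{ij}.
\end{aligned}
```

The factor \(t^{-2n}\) in the weight cancels the two factors \(t^{n}\) contributed by the basis functions, which is why the MSJPs inherit the orthogonality of the shifted JPs.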

4 Two OMs for ODs and VOFDs of \(\phi ^{(\alpha,\beta )}_{n,i}(t)\)

In this section, we present two OMs for ODs and VOFDs of \(\phi ^{(\alpha,\beta )}_{n,i}(t)\), with \(n=0,1,2,\dots \). To accomplish this, we first start with the following theorem.

Theorem 4.1

The first derivative of \(\phi ^{(\alpha,\beta )}_{n,i}(t)\) for all \(i\ge 0\) can be written in the form

where \(\epsilon _{n,i}(t)=\frac {1}{i!}(-1)^{i} n (\beta +1)_{i} t^{n-1}\), and

where

Proof

In view of relations (3.1) and (3.3), following the same procedures as in [44, Theorem 1], formula (4.1) can be proved. □
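The structure of Theorem 4.1 can also be explored numerically. The sketch below assumes \(\phi ^{(\alpha,\beta )}_{n,i}(t)=t^{n}P^{(\alpha,\beta )}_{\ell,i}(t)\) and a standard power-form coefficient formula for the shifted JPs (requiring \(\alpha +\beta >-1\)), and it builds the matrix \(G_{n}\) by re-expanding polynomials in the Jacobi basis instead of using the closed-form entries. It is an illustrative construction with hypothetical function names, not the formula of the theorem.

```python
import math

def jacobi_coeffs(i, alpha, beta, ell):
    """Monomial coefficients c_0..c_i of the shifted Jacobi polynomial of degree i."""
    lam = alpha + beta + 1
    return [(-1) ** (i - k) * math.gamma(i + beta + 1) * math.gamma(i + k + lam)
            / (ell ** k * math.factorial(k) * math.factorial(i - k)
               * math.gamma(k + beta + 1) * math.gamma(i + lam))
            for k in range(i + 1)]

def expand_in_jacobi(q, basis):
    """Coefficients g_j such that sum_j g_j P_j(t) equals sum_k q[k] t**k."""
    q = list(q)
    g = [0.0] * len(q)
    for j in range(len(q) - 1, -1, -1):
        g[j] = q[j] / basis[j][j]          # match the leading power t**j
        for k in range(j + 1):
            q[k] -= g[j] * basis[j][k]
    return g

def derivative_matrix(N, n, alpha, beta, ell):
    """G with d/dt phi_i = sum_j G[i][j] phi_j + n * P_i(0) * t**(n-1)."""
    basis = [jacobi_coeffs(i, alpha, beta, ell) for i in range(N + 1)]
    G = [[0.0] * (N + 1) for _ in range(N + 1)]
    for i in range(1, N + 1):
        c = basis[i]
        # d/dt (t^n P_i) = n c_0 t^(n-1) + t^n * sum_k (n+k+1) c_{k+1} t^k
        q = [(n + k + 1) * c[k + 1] for k in range(i)]
        G[i][:i] = expand_in_jacobi(q, basis)
    return G, basis

def phi(i, n, basis, t):
    return t ** n * sum(c * t ** k for k, c in enumerate(basis[i]))

def check(N=4, n=2, alpha=0.0, beta=1.0, ell=1.0, t=0.3):
    """Largest mismatch between the exact derivative and the matrix form."""
    G, basis = derivative_matrix(N, n, alpha, beta, ell)
    worst = 0.0
    for i in range(N + 1):
        exact = sum((n + k) * c * t ** (n + k - 1)
                    for k, c in enumerate(basis[i]) if n + k > 0)
        eps = n * basis[i][0] * t ** (n - 1) if n > 0 else 0.0
        approx = sum(G[i][j] * phi(j, n, basis, t) for j in range(N + 1)) + eps
        worst = max(worst, abs(exact - approx))
    return worst
```

The remainder term used in `check` is \(n\,P^{(\alpha,\beta )}_{\ell,i}(0)\,t^{n-1}\), which coincides with \(\epsilon _{n,i}(t)\) as stated in the theorem because \(P^{(\alpha,\beta )}_{\ell,i}(0)=(-1)^{i}(\beta +1)_{i}/i!\).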

Now we have reached the main desired two results in this section, which are the two mentioned OMs of

The first result is given in Corollary 4.1, which is a direct consequence of Theorem 4.1, and the second one is proved in Theorem 4.2 as follows.

Corollary 4.1

The mth derivative of the vector \(\boldsymbol{\Phi}^{(\alpha,\beta )}_{n,N}(t)\) has the form

with \(\boldsymbol{\eta}^{(m)}_{n,N}(t)=\sum_{k=0}^{m-1}G^{k}_{n} \boldsymbol{\epsilon}^{(m-k-1)}_{n,N}(t)\), where \({\boldsymbol{\epsilon}_{n,N}}(t)= [\epsilon _{n,0}(t),\epsilon _{n,1}(t), \dots,\epsilon _{n,N}(t) ]^{T}\textit{ and } G_{n}= (g_{i,j}(n) )_{0\le i,j\le N}\),

Theorem 4.2

\(D^{\mu (t)}\phi ^{(\alpha,\beta )}_{n,i}(t)\) for all \(i\ge 0\) can be written in the form

and, consequently, the VOFD of \(\boldsymbol{\Phi}^{(\alpha,\beta )}_{n,N}(t)\) has the form

where \({\mathbf{D}}_{n}^{(\mu (t))}=(d_{i,j}^{(n)}(\mu (t)))\) is the matrix of order \((N+1)\times (N+1)\) explicitly expressed as

where

and

Proof

In view of (3.1), using (2.4b), we have

Employing relation (3.3), (4.10) can be expressed in the form (4.5), which can be written as follows:

and this expression leads to the proof of (4.6). □
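The idea of the proof can be mirrored in a few lines of Python. The sketch below is restricted to \(\alpha =\beta =0\) (shifted Legendre polynomials) and assumes \(\phi _{n,i}(t)=t^{n}P_{\ell,i}(t)\) with \(\lceil \mu (t)\rceil \le n\). It applies the power rule term by term and re-expands the result in the basis, so that \(D^{\mu (t)}\phi _{n,i}(t)=t^{-\mu (t)}\sum_{j}d_{i,j}\,\phi _{n,j}(t)\) at each fixed \(t\). This factorization and the function names are assumptions made for illustration; the closed-form entries of the theorem are not reproduced.

```python
import math

def legendre_coeffs(i, ell=1.0):
    """Monomial coefficients of the shifted Legendre polynomial of degree i."""
    return [(-1) ** (i + k) * math.comb(i, k) * math.comb(i + k, k) / ell ** k
            for k in range(i + 1)]

def vofd_matrix(N, n, order, ell=1.0):
    """Matrix D for a frozen order value `order` = mu(t), with ceil(order) <= n."""
    basis = [legendre_coeffs(i, ell) for i in range(N + 1)]
    D = [[0.0] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        # power rule applied to each term c_k * t**(n + k)
        s = [c * math.gamma(n + k + 1) / math.gamma(n + k + 1 - order)
             for k, c in enumerate(basis[i])]
        # re-expand sum_k s_k t**k in the Legendre basis by back substitution
        for j in range(i, -1, -1):
            D[i][j] = s[j] / basis[j][j]
            for k in range(j + 1):
                s[k] -= D[i][j] * basis[j][k]
    return D, basis

def vofd_phi(i, n, mu, t, N=4, ell=1.0):
    """Evaluate D^{mu(t)} phi_{n,i}(t) through the matrix form."""
    order = mu(t)
    D, basis = vofd_matrix(N, n, order, ell)
    phis = [t ** n * sum(c * t ** k for k, c in enumerate(b)) for b in basis]
    return t ** (-order) * sum(D[i][j] * phis[j] for j in range(N + 1))

# N = 4 and mu(t) = t as in the instance mentioned in the text; n = 1 is assumed
sample = vofd_phi(2, 1, lambda t: t, 0.5)
```

Setting the order to the constant 1 must reproduce the ordinary first derivative, which provides a simple consistency check between the two OMs.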

For instance, if \(N=4, \alpha =\beta =0\), and \(\mu (t)=t\), then we get

and

5 Numerical handling for MTVOFDE subject to initial conditions

In this section, we utilize the OMs derived in Corollary 4.1 and Theorem 4.2 to get numerical solutions for MTVOFDE (1.1) subject to initial conditions (1.2).

5.1 Homogeneous initial conditions

Suppose that the initial conditions (1.2) are homogeneous, that is, \(\beta _{j}=0, j=0,1,2,\dots,n-1\). We can consider an approximate solution to \(y(t)\) in the form

where \({\mathbf{A}}= [c_{0}, c_{1},\dots,c_{N} ]^{T}\).

Corollary 4.1 and Theorem 4.2 enable us to approximate the derivatives \(y^{(\mu (t))}(t)\) in matrix form:

In this method, approximations (5.2) allow us to write the residual of equation (1.1) as

In this section, we propose a spectral approach, referred to as the modified shifted Jacobi collocation operational matrix method (MSJCOPMM), to obtain the numerical solution of equation (1.1) under the initial conditions specified in (1.2) (with \(\beta _{j}=0, j=0,1,\dots,n-1\)). The collocation points for this method are chosen as the \(N+1\) zeros of \(P^{(\alpha,\beta )}_{\ell,N+1}(t)\) or, alternatively, as the points \(t_{i}=\frac{\ell (i+1)}{N+2}\), \(i=0,1,\dots,N\). These points serve as the basis for performing the spectral approximation in our proposed numerical approach. So we have

By solving a set of \(N+1\) linear or nonlinear algebraic equations (5.4) using an appropriate solver, the unknown coefficients \(c_{i}\) (where \(i=0,1,\dots,N\)) can be determined. These coefficients play a crucial role in obtaining the desired numerical solution (5.1).
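The collocation step can be sketched on a small linear model problem. The example below is not taken from the paper: it assumes the hypothetical test equation \(D^{\mu (t)}y(t)+y(t)=g(t)\), \(y(0)=0\), on \([0,1]\) with \(\mu (t)=0.5+0.25t\), and \(g\) chosen so that \(y(t)=t^{2}\). It uses \(\alpha =\beta =0\), \(n=1\), the equispaced points \(t_{i}=\ell (i+1)/(N+2)\), and it evaluates the derivatives of the basis functions term by term rather than through the OMs. All function names are illustrative.

```python
import math

def legendre_coeffs(i, ell):
    """Monomial coefficients of the shifted Legendre polynomial of degree i."""
    return [(-1) ** (i + k) * math.comb(i, k) * math.comb(i + k, k) / ell ** k
            for k in range(i + 1)]

def solve_linear(A, b):
    """Gaussian elimination with partial pivoting (pure Python)."""
    m = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, m):
            f = M[r][col] / M[col][col]
            for c in range(col, m + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * m
    for r in range(m - 1, -1, -1):
        x[r] = (M[r][m] - sum(M[r][c] * x[c] for c in range(r + 1, m))) / M[r][r]
    return x

def collocate(N, n, mu, g, ell=1.0):
    """Collocation for D^{mu(t)} y + y = g with y_N = sum_i c_i t^n P_i(t)."""
    basis = [legendre_coeffs(i, ell) for i in range(N + 1)]
    nodes = [ell * (i + 1) / (N + 2) for i in range(N + 1)]
    A = []
    for t in nodes:
        order = mu(t)
        row = []
        for b in basis:
            phi = sum(c * t ** (n + k) for k, c in enumerate(b))
            dphi = sum(c * math.gamma(n + k + 1) / math.gamma(n + k + 1 - order)
                       * t ** (n + k - order) for k, c in enumerate(b))
            row.append(dphi + phi)          # residual operator applied to phi_i
        A.append(row)
    coeffs = solve_linear(A, [g(t) for t in nodes])

    def y_N(t):
        return sum(ci * sum(c * t ** (n + k) for k, c in enumerate(b))
                   for ci, b in zip(coeffs, basis))
    return coeffs, y_N

mu = lambda t: 0.5 + 0.25 * t
g = lambda t: 2.0 / math.gamma(3.0 - mu(t)) * t ** (2.0 - mu(t)) + t ** 2
coeffs, y_N = collocate(N=2, n=1, mu=mu, g=g)
```

Because \(t^{2}=\frac{1}{2}(\phi _{1,0}(t)+\phi _{1,1}(t))\) for this basis, the computed coefficients should be close to \((1/2, 1/2, 0)\), in line with the observation in Sect. 7 that polynomial solutions are recovered exactly. For a nonlinear equation the linear solve would be replaced by a nonlinear solver.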

5.2 Nonhomogeneous initial conditions

A crucial aspect of developing the proposed algorithm involves transforming equation (1.1) together with the nonhomogeneous conditions (1.2) into an equivalent form with homogeneous conditions. This transformation is achieved through the following conversion:

Thus it is sufficient to solve the following modified equation, simplifying the problem at hand:

subject to the homogeneous conditions

Then
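One standard way to carry out such a conversion subtracts the Taylor polynomial that matches the initial data. The symbols \(q_{n}(t)\) and \(\bar{y}(t)\) are assumptions introduced for this sketch:

```latex
\begin{aligned}
q_{n}(t) &= \sum_{j=0}^{n-1}\frac{\beta_{j}}{j!}\,t^{j},
\qquad \bar{y}(t)=y(t)-q_{n}(t),\\
\bar{y}^{(j)}(0) &= y^{(j)}(0)-q_{n}^{(j)}(0)=\beta_{j}-\beta_{j}=0,
\qquad j=0,1,\dots,n-1,\\
D^{\nu(t)}y(t) &= D^{\nu(t)}\bar{y}(t)+D^{\nu(t)}q_{n}(t).
\end{aligned}
```

Because the Caputo VOFD is linear, every derivative of \(y\) in (1.1) splits in this way, so the modified equation involves only \(\bar{y}\) and known functions of \(t\). Under this assumption the approximate solution of the original problem is recovered by adding \(q_{n}(t)\) back to the approximation of \(\bar{y}(t)\).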

Remark 5.1

We present an algorithm to solve multiple numerical examples in Sect. 7. The computations were performed using Mathematica 13.3 on a computer system equipped with an Intel(R) Core(TM) i9-10850 CPU operating at 3.60 GHz, featuring 10 cores and 20 logical processors. The algorithmic steps for solving the MTVOFDE using MSJCOPMM are expressed as follows:

6 Convergence and error analysis

Within this section, we investigate the convergence and error estimates of the proposed approach. We focus on the space \(S_{n,N}\) defined as follows:

Additionally, the error between \(y(t)\) and its approximation \(y_{N}(t)\) can be defined by

In the paper, we analyze the error of the numerical scheme by using the \(L_{2}\) norm error estimate

and the \(L_{\infty}\) norm error estimate

Theorem 6.1

Assume that \(y(t)=t^{n} u(t)\) and suppose that \(y_{N}(t)\) has the form (5.1) and represents the best possible approximation for \(y(t)\) out of \(S_{n,N}\). Then there is a constant K such that

and

where \(q=\max \{\alpha,\beta,-1/2\}< N+1\) and \(K=\max_{t\in [0,\ell ]} | \frac {d^{N+1} u(\eta )}{d t^{N+1}} |, \eta \in [0,\ell ]\).

Proof

Using Theorem 3.3 in [45, p. 109], we can write the function \(u(t)\) as

where \(u_{N}(t)\) is the interpolating polynomial for \(u(t)\) at the points \(t_{k}, k=0,1,\dots,N\), which are the roots of \(P^{(\alpha,\beta )}_{\ell,N+1}(t)\) such that \(N>q-1\). Then we get

where \(c^{N+1}_{N+1}= \frac {\Gamma (2N+\lambda +2)}{\ell ^{N+1} (N+1)! \Gamma (N+\lambda +1)}\).

In view of formula [43, formula (7.32.2)], we obtain

and hence

By using the asymptotic result (see [46, pp. 232–233])

inequality (6.9) takes the form

Now consider the approximation \(y(t)\simeq Y_{N}(t)=t^{n}u_{N}(t)\). Then

Since the approximate solution \(y_{N}(t)\in S_{n,N}\) represents the best possible approximation to \(y(t)\), we get

and

Therefore

and

□

The following corollary shows that the obtained error has a very rapid rate of convergence.

Corollary 6.1

For all \(N> q-1\), we have the following two estimates:

and

The next theorem emphasizes the stability of error by making an estimate of error propagation.

Theorem 6.2

For any two successive approximations of \(y(t)\), we have

where ≲ means that there exists a generic constant d such that \(|y_{N+1}-y_{N}|\le d (e \ell /4)^{N} N^{q-N-1}\).

Proof

In view of Theorem 6.1, it is not difficult to obtain (6.19). □
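To get a feeling for the rate in Theorem 6.2, the N-dependent factor \((e \ell /4)^{N} N^{q-N-1}\) can simply be tabulated. The sketch below does this in Python; the function name `decay_factor` is hypothetical, and since the generic constant d is unknown, only the decay with N is illustrated, not actual error values.

```python
import math

def decay_factor(N, ell=1.0, alpha=0.0, beta=0.0):
    """Value of (e*ell/4)**N * N**(q - N - 1) with q = max(alpha, beta, -1/2)."""
    q = max(alpha, beta, -0.5)
    return (math.e * ell / 4.0) ** N * N ** (q - N - 1)

factors = [decay_factor(N) for N in range(1, 13)]
for N, f in enumerate(factors, start=1):
    print(f"N = {N:2d}   factor = {f:.3e}")
```

For \(\ell =1\) and \(\alpha =\beta =0\) the factor decreases faster than any fixed geometric rate, which matches the rapid convergence asserted in Corollary 6.1.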

7 Numerical simulations

In this section, we give six examples to demonstrate the applicability and high efficiency of the method established in Sect. 5. The maximum absolute error (MAE) between the exact and approximate solutions is used for evaluation. We show that MSJCOPMM gives the exact solution if the given problem has a polynomial solution of degree N, as shown in Examples 7.1–7.3. This solution can be found by combining \(\phi ^{(\alpha,\beta )}_{0,i}(t), \dots,\phi ^{(\alpha,\beta )}_{N-2,i}(t)\).

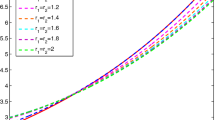

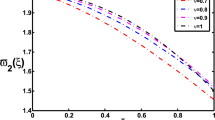

Furthermore, the errors of the numerical solutions \(y_{N}(t)\) computed by MSJCOPMM for \(N = 1,\dots,12\) are presented in Tables 3 and 5, which show excellent computational results. Comparisons of MSJCOPMM with the techniques in [23–25, 28, 47, 48] are presented in Tables 1, 2, 4, and 6. These tables confirm that MSJCOPMM provides more precise results than the other techniques. In addition, as Figs. 1 and 3 show, the exact and approximate solutions in Examples 7.4 and 7.5 are in excellent agreement. Figures 2(a), 4(a) and Figs. 2(b), 4(b) display the absolute errors and log-errors, respectively, for various values of N and of \(\alpha, \beta \), demonstrating the convergence and stability of the solutions to Problems 7.4 and 7.5 when MSJCOPMM is applied. Example 7.6 illustrates a technique for assessing accuracy when the exact solution is not available. Together with the results in Table 7, Figs. 5 and 6 show that MSJCOPMM produces highly accurate solutions.

Figure 1. Approximate and exact solutions for Example 7.4 using \(\alpha =\beta =0\)

Figure 2. Errors \(E_{N}\) obtained using various N and \(\alpha =\beta =0\) for Example 7.4

Figure 3. Approximate and exact solutions for Example 7.5 using \(\alpha =0\) and \(\beta =1\)

Figure 4. Errors \(E_{N}\) obtained using various N, \(\alpha =0\), and \(\beta =1\) for Example 7.5

Figure 5. Error plot for Example 7.6 using \(N=14, \alpha =0\), and \(\beta =0\)

Figure 6. Approximate solutions for Example 7.6 using \(N=0,3,5\) and \(\alpha =\beta =0\)

Problem 7.1

Consider the differential equation [23, 24]

where \(g(t)\) is chosen such that the exact solution is \(y(t)=2-\frac{t^{2}}{2}\).

Applying the proposed method MSJCOPMM gives the exact solution in the form

where \(c_{0}=-1/2\) and \(c_{i}=0, i=1,2,\dots,N\).

Problem 7.2

Consider Bagley–Torvik equation [24, 49]

where the exact solution is \(y(t)=t^{2}\).

Applying the proposed method MSJCOPMM gives the exact solution in the form

where \(c_{0}=1\) and \(c_{i}=0, i=1,2,\dots,N\).

Remark 7.1

It is worth noting that the exact solution of (7.2) is obtained using \(N=0, \alpha,\beta >-1\), whereas the authors in [24] presented the exact solution using \(N=2\). Moreover, the authors in [49] show that the exact solution is obtained as \(N\rightarrow \infty \), and the best error obtained was \(2\times 10^{-10}\).

Problem 7.3

Consider the differential equation [24, 25, 28]

where \(\mu (t)=\frac {t+2e^{t}}{7}\). The exact solution is \(y(t)=5(1+t)^{2}\).

Applying the proposed method MSJCOPMM gives the exact solution in the form

where \(c_{0}=5\) and \(c_{i}=0, i=1,2,\dots,N\).

Problem 7.4

Consider the nonlinear initial value problem [47, 50]

where the exact solution is \(y(t)=t^{7/2}\). The numerical solutions agree with this solution to an accuracy of \(10^{-8}\) at \(N=12\), as shown in Table 3.

Problem 7.5

Consider the initial value problem [47, 48]

where \(g(t)\) is chosen such that the exact solution is \(y(t)=e^{t}\). The numerical solutions agree with this solution to an accuracy of \(10^{-16}\) at \(N=11\), as shown in Table 5.

Problem 7.6

Consider the fractional-order nonlinear equation

where the explicit exact solution is not available, so the following error norm is used to check the accuracy in this case:

The application of MSJCOPMM with different choices of α and β and \(N=0,3,6,9,12,14\) gives the numerical results shown in Table 7.

8 Conclusions

In this work, we have introduced a modified version of shifted JPs that satisfy homogeneous initial conditions. By utilizing the OMs derived in Sect. 4 along with the SCM, we have developed an approximation technique for the given MTVOFDEs. The proposed method, referred to as MSJCOPMM, has been applied and tested on six different examples, demonstrating its high accuracy and efficiency. We recognize the potential for extending our results to boundary value problems (BVPs), where the investigation of the system behavior at boundary conditions would provide valuable insights and further enhance the applicability of our findings. Additionally, we believe that the theoretical findings presented in this paper can be further employed to address other types of FDEs.

Availability of data and materials

No data is associated with this research.

References

[1] Maayah, B., Arqub, O.A., Alnabulsi, S., Alsulami, H.: Numerical solutions and geometric attractors of a fractional model of the cancer-immune based on the Atangana–Baleanu–Caputo derivative and the reproducing kernel scheme. Chin. J. Phys. 80, 463–483 (2022)

[2] Berredjem, N., Maayah, B., Arqub, O.A.: A numerical method for solving conformable fractional integrodifferential systems of second-order, two-points periodic boundary conditions. Alex. Eng. J. 61(7), 5699–5711 (2022)

[3] Arqub, O.A., Rabah, A.B., Momani, S.: A spline construction scheme for numerically solving fractional Bagley–Torvik and Painlevé models correlating initial value problems concerning the Caputo–Fabrizio derivative approach. Int. J. Mod. Phys. C 34(9), 2350115 (2023)

[4] Almeida, R., Tavares, D., Torres, D.F.M.: The Variable-Order Fractional Calculus of Variations. Springer, Cham (2019)

[5] Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications. Mathematics in Science and Engineering, vol. 198. Academic Press, San Diego (1999)

[6] Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations, vol. 204. Elsevier, Amsterdam (2006)

[7] Mainardi, F.: Fractional Calculus and Waves in Linear Viscoelasticity. Imperial College Press, London (2010)

[8] Samko, S.G., Ross, B.: Integration and differentiation to a variable fractional order. Integral Transforms Spec. Funct. 1(4), 277–300 (1993)

[9] Odzijewicz, T., Malinowska, A.B., Torres, D.F.M.: Noether’s theorem for fractional variational problems of variable order. Cent. Eur. J. Phys. 11(6), 691–701 (2013)

[10] Chen, S., Liu, F., Burrage, K.: Numerical simulation of a new two-dimensional variable-order fractional percolation equation in non-homogeneous porous media. Comput. Math. Appl. 67, 1673–1681 (2014)

[11] Coimbra, C.F.M., Soon, C.M., Kobayashi, M.H.: The variable viscoelasticity operator. Ann. Phys. 14, 378–389 (2005)

[12] Odzijewicz, T., Malinowska, A.B., Torres, D.F.M.: Fractional variational calculus of variable order. In: Advances in Harmonic Analysis and Operator Theory: Advances and Applications (2013)

[13] Ostalczyk, P.W., Duch, P., Brzeziński, D.W., Sankowski, D.: Order functions selection in the variable-, fractional-order PID controller. In: Advances in Modelling and Control of Non-integer-Order Systems. Lect. Notes Electr. Eng., vol. 320, pp. 159–170 (2015)

[14] Rapaić, M.R., Pisano, A.: Variable-order fractional operators for adaptive order and parameter estimation. IEEE Trans. Autom. Control 59(3), 798–803 (2013)

[15] Izadi, M., Yüzbasi, S., Adel, W.: Two novel Bessel matrix techniques to solve the squeezing flow problem between infinite parallel plates. Comput. Math. Math. Phys. 61(12), 2034–2053 (2021)

[16] Patnaik, S., Semperlotti, F.: Variable-order particle dynamics: formulation and application to the simulation of edge dislocations. Philos. Trans. R. Soc. Lond. A 378(2172), 20190290 (2020)

[17] Blaszczyk, T., Bekus, K., Szajek, K., Sumelka, W.: Approximation and application of the Riesz–Caputo fractional derivative of variable order with fixed memory. Meccanica 57, 861–870 (2022)

[18] Di Paola, M., Alotta, G., Burlon, A., Failla, G.: A novel approach to nonlinear variable-order fractional viscoelasticity. Philos. Trans. R. Soc. Lond. A 378, 20190296 (2020)

[19] Burlon, A., Alotta, G., Di Paola, M., Failla, G.: An original perspective on variable-order fractional operators for viscoelastic materials. Meccanica 56, 769–784 (2021)

[20] Sheikhi, S., Matinfar, M., Firoozjaee, M.A.: Numerical solution of variable-order differential equations via the Ritz-approximation method by shifted Legendre polynomials. Int. J. Appl. Comput. Math. 7(1), 22 (2021)

[21] Izadi, M., Yüzbasi, S., Adel, W.: A new Chelyshkov matrix method to solve linear and nonlinear fractional delay differential equations with error analysis. Math. Sci. 17(3), 267–284 (2023)

[22] Izadi, M., Sene, N., Adel, W., El-Mesady, A.: The Layla and Majnun mathematical model of fractional order: stability analysis and numerical study. Results Phys. 51, 106650 (2023)

[23] Liu, J., Li, X., Wu, L.: An operational matrix of fractional differentiation of the second kind of Chebyshev polynomial for solving multiterm variable order fractional differential equation. Math. Probl. Eng. 2016, Article ID 7126080 (2016)

[24] El-Sayed, A.A., Baleanu, D., Agarwal, P.: A novel Jacobi operational matrix for numerical solution of multi-term variable-order fractional differential equations. J. Taibah Univ. Sci. 14(1), 963–974 (2020)

[25] Nagy, A.M., Sweilam, N.H., El-Sayed, A.A.: New operational matrix for solving multiterm variable order fractional differential equations. J. Comput. Nonlinear Dyn. 13(1), 011001 (2018)

[26] El-Sayed, A.A., Agarwal, P.: Numerical solution of multiterm variable-order fractional differential equations via shifted Legendre polynomials. Math. Methods Appl. Sci. 42(11), 3978–3991 (2019)

[27] Wang, L.F., Ma, Y.P., Yang, Y.Q.: Legendre polynomials method for solving a class of variable order fractional differential equation. Comput. Model. Eng. Sci. 101(2), 97–111 (2014)

[28] Chen, Y.M., Wei, Y.Q., Liu, D.Y., Yu, H.: Numerical solution for a class of nonlinear variable order fractional differential equations with Legendre wavelets. Appl. Math. Lett. 46, 83–88 (2015)

[29] Youssri, Y.H., Abd-Elhameed, W.M., Ahmed, H.M.: New fractional derivative expression of the shifted third-kind Chebyshev polynomials: application to a type of nonlinear fractional pantograph differential equations. J. Funct. Spaces 2022, Article ID 3966135 (2022)

[30] Bushnaq, S., Shah, K., Tahir, S., Ansari, K.J., Sarwar, M., Abdeljawad, T.: Computation of numerical solutions to variable order fractional differential equations by using non-orthogonal basis. AIMS Math. 7(6), 10917–10938 (2022)

[31] Chen, Y.M., Liu, L.Q., Li, B.F., Sun, Y.: Numerical solution for the variable order linear cable equation with Bernstein polynomials. Appl. Math. Comput. 238, 329–341 (2014)

[32] Shen, S., Liu, F., Chen, J., Turner, I., Anh, V.: Numerical techniques for the variable order time fractional diffusion equation. Appl. Math. Comput. 218(22), 10861–10870 (2012)

[33] Moghaddam, B.P., Machado, J.A.T.: Extended algorithms for approximating variable order fractional derivatives with applications. J. Sci. Comput. 71, 1351–1374 (2017)

[34] Shen, J., Tang, T., Wang, L.: Spectral Methods: Algorithms, Analysis and Applications, vol. 41. Springer, Berlin (2011)

[35] Ashry, H., Abd-Elhameed, W.M., Moatimid, G.M., Youssri, Y.H.: Robust shifted Jacobi–Galerkin method for solving linear hyperbolic telegraph type equation. Palest. J. Math. 11, 504–518 (2022)

[36] Abd-Elhameed, W.M., Ahmed, H.M., Youssri, Y.H.: A new generalized Jacobi Galerkin operational matrix of derivatives: two algorithms for solving fourth-order boundary value problems. Adv. Differ. Equ. 2016(1), 1 (2016)

[37] Abd-Elhameed, W.M., Ahmed, H.M.: Tau and Galerkin operational matrices of derivatives for treating singular and Emden–Fowler third-order-type equations. Int. J. Mod. Phys. C 33(5), 2250061 (2022)

[38] Eghbali, A., Johansson, H., Saramäki, T.: A method for the design of Farrow-structure based variable fractional-delay FIR filters. Signal Process. 93(5), 1341–1348 (2013)

[39] Yu, C., Teo, K.L., Dam, H.H.: Design of allpass variable fractional delay filter with signed powers-of-two coefficients. Signal Process. 95, 32–42 (2014)

[40] Cooper, G.R.J., Cowan, D.R.: Filtering using variable order vertical derivatives. Comput. Geosci. 30(5), 455–459 (2004)

[41] Tseng, C.-C.: Design of variable and adaptive fractional order FIR differentiators. Signal Process. 86(10), 2554–2566 (2006)

[42] Coimbra, C.F.M.: Mechanics with variable-order differential operators. Ann. Phys. 515(11–12), 692–703 (2003)

[43] Szegő, G.: Orthogonal Polynomials, 4th edn. Colloquium Publications, vol. XXIII. AMS, Providence (1975)

[44] Abd-Elhameed, W.M., Al-Harbi, M.S., Amin, A.K., Ahmed, H.M.: Spectral treatment of high-order Emden–Fowler equations based on modified Chebyshev polynomials. Axioms 12(2), 1–17 (2023)

[45] Burden, R.L., Faires, J.D., Burden, A.M.: Numerical Analysis. Cengage Learning (2015)

[46] Jeffrey, A., Dai, H.H.: Handbook of Mathematical Formulas and Integrals, 4th edn. Elsevier, Amsterdam (2008)

[47] Nemati, S., Lima, P.M., Torres, D.F.M.: Numerical solution of variable-order fractional differential equations using Bernoulli polynomials. Fractal Fract. 5(4), 219 (2021)

[48] Sabermahani, S., Ordokhani, Y., Lima, P.M.: A novel Lagrange operational matrix and tau-collocation method for solving variable-order fractional differential equations. Iran. J. Sci. Technol., Trans. A, Sci. 44, 127–135 (2020)

[49] Irandoust-Pakchin, S., Kheiri, H., Abdi-Mazraeh, S.: Efficient computational algorithms for solving one class of fractional boundary value problems. Comput. Math. Math. Phys. 53, 920–932 (2013)

[50] Hassani, H., Dahaghin, M.S., Heydari, H.: A new optimized method for solving variable-order fractional differential equations. J. Math. Ext. 11, 85–98 (2017)

Acknowledgements

The author expresses his gratitude to the editor for providing valuable assistance throughout the publication process. Additionally, the referees’ comments and suggestions have greatly contributed to the enhancement of the paper in its current form.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB). No funding was received to assist with the preparation of this manuscript.

Author information

Contributions

H.M. Ahmed wrote the main manuscript text, prepared all figures and reviewed the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmed, H.M. Enhanced shifted Jacobi operational matrices of derivatives: spectral algorithm for solving multiterm variable-order fractional differential equations. Bound Value Probl 2023, 108 (2023). https://doi.org/10.1186/s13661-023-01796-1
