Abstract

This manuscript deals with two general systems of nonlinear ordered variational inclusion problems. We also construct some new iterative algorithms for finding approximate solutions of these general systems of nonlinear ordered variational inclusions and prove the convergence of the sequences generated by the schemes. The results presented in the manuscript are new and improve some well-known results in the literature.


1 Introduction

A great deal of work has been devoted to the theory of variational inequalities since it was initiated by Lions and Stampacchia [24]. Owing to their wide applications in physics and the applied sciences, classical variational inequalities have been studied extensively, in different ways, by many researchers [1, 4, 5, 7–10].

A useful and important generalization of the variational inequality problem is the variational inclusion problem, which was introduced and studied by Hassouni and Moudafi [16]. They also proposed a perturbed iterative algorithm for solving the variational inclusion problem.

Fang and Huang [12] introduced and studied H-monotone operators, which they used to define a resolvent operator and to prove its Lipschitz continuity. They also introduced a class of variational inclusions in Hilbert spaces. Fang et al. [13] further presented another class of generalized monotone operators, called \((H,\eta)\)-monotone operators, which generalize the classes of maximal monotone, maximal η-monotone and H-monotone operators.

Lan et al. [17] introduced the notion of \((A,\eta )\)-accretive mappings, which generalize the existing monotone and accretive operators, and studied some of their properties. They examined a class of variational inclusions using the resolvent operator associated with \((A,\eta)\)-accretive mappings.

Amann [6] studied the number of fixed points of a continuous operator \(A:[x,y] \rightarrow[x,y]\) on a bounded order interval \([x,y] \subset\mathcal{E}\), where \(\mathcal{E}\) is an ordered Banach space. Fixed point theory for nonlinear mappings and its applications have been widely studied in ordered Banach spaces [4, 14, 15]. It is therefore natural to study generalized nonlinear ordered variational inclusions (ordered equations).

Li et al. have carried out a great deal of research on ordered equations and ordered variational inequalities in ordered Banach spaces; see [21, 23]. Many problems concerning ordered variational inclusions have been solved by the resolvent technique associated with RME set-valued mappings [19], \((\alpha, \lambda)\)-NODM set-valued mappings [20], \((\gamma_{G}, \lambda)\)-weak RRD mappings [2] and \((\alpha, \lambda)\)-weak ANODD set-valued mappings with a strongly comparison mapping A [21]; see, e.g., [3, 22, 25, 26, 29] and the references therein.

In this work, we use the resolvent operator technique to study the approximate solvability of implicit systems of generalized nonlinear ordered variational inclusions in real ordered Hilbert spaces.

2 Preliminaries

In this section, we recall some basic notions and results that will be used throughout the manuscript.

Let \(\mathcal{E}\) be a real ordered Hilbert space endowed with a norm \(\|\cdot\|\) and an inner product \(\langle\cdot,\cdot\rangle\), let d be the metric induced by the norm \(\|\cdot\|\), let \(CB(\mathcal{E})\) be the collection of all nonempty closed and bounded subsets of \(\mathcal{E}\), and let \(D(\cdot, \cdot)\) be the Hausdorff metric on \(CB(\mathcal{E})\) defined by

$$D(M,N)= \max \Bigl\{ \sup_{x \in M} d(x, N), \sup_{y \in N} d(M, y) \Bigr\}, $$

where \(M, N \in CB(\mathcal{E})\), \(d(x, N)= \inf_{y \in N}d(x, y)\) and \(d(M, y)= \inf_{x \in M}d(x, y)\).
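To make the Hausdorff metric concrete, the following Python sketch computes \(D(M,N)\) for finite subsets of \(\mathbb{R}^{n}\) with the Euclidean metric; finite sets are closed and bounded, so they belong to \(CB(\mathbb{R}^{n})\). The choice \(\mathcal{E}=\mathbb{R}^{n}\) and the helper names are illustrative assumptions, not part of the manuscript.

```python
import math

def dist(x, y):
    """Euclidean metric d(x, y) = ||x - y|| on R^n."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def point_to_set(x, N):
    """d(x, N) = inf_{y in N} d(x, y); for a finite set the infimum is a minimum."""
    return min(dist(x, y) for y in N)

def hausdorff(M, N):
    """D(M, N) = max{sup_{x in M} d(x, N), sup_{y in N} d(M, y)} for finite M, N."""
    return max(max(point_to_set(x, N) for x in M),
               max(point_to_set(y, M) for y in N))

M = [(0.0, 0.0), (1.0, 0.0)]
N = [(0.0, 0.0), (3.0, 0.0)]
print(hausdorff(M, N))  # 2.0
```

Here every point of M is within distance 1 of N, but the point (3, 0) of N is at distance 2 from M, so the two one-sided suprema differ and D takes the larger one.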

Definition 2.1

Let \(\mathfrak{C}\) be a nonempty closed convex subset of \(\mathcal{E}\). Then \(\mathfrak{C}\) is called a cone if

(a) \(x\in\mathfrak{C}\) and \(\kappa>0\) imply \(\kappa x \in\mathfrak{C}\);

(b) \(x \in\mathfrak{C}\) and \(-x\in\mathfrak{C}\) imply \(x=\Theta\), the zero element of \(\mathcal{E}\).

Definition 2.2

([11])

A cone \(\mathfrak{C}\) is said to be normal iff there exists \(\lambda _{N_{C}} >0\) with \(0\leq x \leq y\) implying \(\|x\| \leq\lambda_{N_{C}} \|y\|\), where \(\lambda_{N_{C}}\) is called a normal constant of \(\mathfrak{C}\).

Definition 2.3

A relation ≤ defined by \(x \leq y\) if and only if \(y-x \in\mathfrak{C}\), for \(x, y \in\mathcal{E}\), is called the partial order relation induced by \(\mathfrak{C}\) in \(\mathcal{E}\); then \((\mathcal{E}, \leq)\) is called a real ordered Hilbert space.

Definition 2.4

([27])

Elements \(x, y \in\mathcal{E}\) satisfying \(x \leq y\) or \(y \leq x\) are said to be comparable with each other (denoted by \(x \propto y\)).

Definition 2.5

([27])

For arbitrary elements \(x, y \in\mathcal{E}\), \(\operatorname{lub}\{x, y\}\) and \(\operatorname{glb}\{ x, y\}\) denote the least upper bound and the greatest lower bound of the set \(\{x, y\}\), respectively. Suppose \(\operatorname{lub}\{x, y\}\) and \(\operatorname{glb}\{x, y\}\) exist; then the following binary operations are defined:

(a) \(x \vee y = \operatorname{lub}\{x, y\}\);

(b) \(x \wedge y = \operatorname{glb}\{x, y\}\);

(c) \(x \oplus y = (x-y) \vee(y-x)\);

(d) \(x \odot y = (x-y) \wedge(y-x)\).

The operations ∨, ∧, ⊕ and ⊙ are called OR, AND, XOR and XNOR operations, respectively.
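In the simplest example, assumed here only for illustration, \(\mathcal{E}=\mathbb{R}^{n}\) is ordered by the nonnegative orthant \(\mathfrak{C}=\{x : x_{k}\geq 0 \text{ for all } k\}\), which is a cone in the sense of Definition 2.1. Then \(x\leq y\) holds componentwise, lub and glb are the componentwise maximum and minimum, and the XOR and XNOR operations reduce to the componentwise \(|x-y|\) and \(-|x-y|\). The Python sketch below (hypothetical helper names) implements Definitions 2.3–2.5 in this setting.

```python
# Illustrative assumption: E = R^n ordered by the cone C = {x : x_k >= 0 for all k},
# so x <= y iff y - x lies in C, i.e. the order is componentwise.

def leq(x, y):
    """x <= y  iff  y - x belongs to the nonnegative orthant."""
    return all(b - a >= 0 for a, b in zip(x, y))

def comparable(x, y):
    """x ∝ y: x <= y or y <= x."""
    return leq(x, y) or leq(y, x)

def vee(x, y):    # x ∨ y = lub{x, y}: componentwise maximum
    return tuple(max(a, b) for a, b in zip(x, y))

def wedge(x, y):  # x ∧ y = glb{x, y}: componentwise minimum
    return tuple(min(a, b) for a, b in zip(x, y))

def sub(x, y):
    return tuple(a - b for a, b in zip(x, y))

def xor(x, y):    # x ⊕ y = (x - y) ∨ (y - x)
    return vee(sub(x, y), sub(y, x))

def xnor(x, y):   # x ⊙ y = (x - y) ∧ (y - x)
    return wedge(sub(x, y), sub(y, x))

x, y = (1.0, 4.0), (3.0, 2.0)
print(comparable(x, y))  # False: y - x = (2, -2) has mixed signs
print(xor(x, y))         # (2.0, 2.0), the componentwise |x - y|
print(xnor(x, y))        # (-2.0, -2.0)
```

Note that x ⊕ y is defined even when x and y are not comparable; comparability only enters in the properties listed in Propositions 2 and 3.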

Proposition 1

([11])

For any positive integer n, if \(x \propto y_{n}\) and \(y_{n} \rightarrow y^{\ast}\) (\(n \rightarrow\infty\)), then \(x \propto y^{\ast}\).

Proposition 2

Let ⊕ (XOR) and ⊙ (XNOR) be the operations on \(\mathcal{E}\) given in Definition 2.5. Then, for all \(x, y, u, v \in\mathcal{E}\) and \(\alpha, \beta, \lambda\in\mathbb{R}\), the following hold:

(a) \(x \odot x = 0\), \(x \odot y= y \odot x= -(x \oplus y)=-(y \oplus x)\);

(b) \((\lambda x) \oplus(\lambda y)= |\lambda| (x \oplus y)\);

(c) \(x \odot0 \leq0\), if \(x \propto0\);

(d) \(0 \leq x \oplus y \), if \(x \propto y\);

(e) if \(x \propto y\), then \(x \oplus y= 0\) if and only if \(x=y\);

(f) \((x+y) \odot(u+v) \geq(x \odot u) + (y \odot v)\);

(g) \((x+y) \odot(u+v) \geq(x \odot v) + (y \odot u)\);

(h) \(\alpha x \oplus\beta x= |\alpha- \beta|x\), if \(x \propto0\).
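Assuming, for illustration only, that \(\mathcal{E}=\mathbb{R}^{n}\) carries the componentwise order (the cone being the nonnegative orthant), \(x\oplus y\) and \(x\odot y\) are the componentwise \(|x-y|\) and \(-|x-y|\), and properties (a), (b), (f) and (g) of Proposition 2 can be spot-checked on random vectors. This is a numerical sanity check in one concrete ordered Hilbert space, not a proof; the helper names are hypothetical.

```python
import random

def xor(x, y):   # x ⊕ y = (x - y) ∨ (y - x): componentwise |x - y|
    return [max(a - b, b - a) for a, b in zip(x, y)]

def xnor(x, y):  # x ⊙ y = (x - y) ∧ (y - x): componentwise -|x - y|
    return [min(a - b, b - a) for a, b in zip(x, y)]

def add(x, y):
    return [a + b for a, b in zip(x, y)]

def close(x, y, tol=1e-9):
    return all(abs(a - b) <= tol for a, b in zip(x, y))

def geq(x, y, tol=1e-9):
    """Componentwise x >= y (the order induced by the orthant), up to rounding."""
    return all(a >= b - tol for a, b in zip(x, y))

def check_proposition_2(trials=1000, n=4, seed=0):
    """Spot-check Proposition 2 (a), (b), (f), (g) on random vectors in R^n."""
    rng = random.Random(seed)

    def vec():
        return [rng.uniform(-5.0, 5.0) for _ in range(n)]

    for _ in range(trials):
        x, y, u, v = vec(), vec(), vec(), vec()
        lam = rng.uniform(-3.0, 3.0)
        assert all(t == 0.0 for t in xnor(x, x))                               # (a)
        assert close(xnor(x, y), [-t for t in xor(x, y)])                      # (a)
        assert close(xor([lam * t for t in x], [lam * t for t in y]),
                     [abs(lam) * t for t in xor(x, y)])                        # (b)
        assert geq(xnor(add(x, y), add(u, v)), add(xnor(x, u), xnor(y, v)))    # (f)
        assert geq(xnor(add(x, y), add(u, v)), add(xnor(x, v), xnor(y, u)))    # (g)
    return True

print(check_proposition_2())  # True
```

In this setting (f) and (g) are simply the triangle inequality \(|a+b|\leq|a|+|b|\) applied to each coordinate.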

Proposition 3

([11])

Let \(\mathfrak{C}\) be a normal cone in \(\mathcal{E}\) with normal constant \(\lambda_{N_{C}}\). Then, for each \(x, y \in\mathcal{E}\), the following hold:

(a) \(\|0+0\|= \|0\|=0\);

(b) \(\|x \vee y\| \leq\|x\|\vee\|y\| \leq\|x\|+ \|y\|\);

(c) \(\|x \oplus y\|\leq\|x-y\|\leq\lambda_{N_{C}}\|x \oplus y\|\);

(d) if \(x \propto y\), then \(\|x \oplus y\|= \|x-y\|\).

Definition 2.6

([20])

Let \(A:\mathcal{E} \rightarrow\mathcal{E}\) be a single-valued map.

(a) A is called a δ-order non-extended map if there is a constant \(\delta> 0\) such that

$$\delta(x \oplus y) \leq A(x) \oplus A(y) \quad \mbox{for all } x, y \in \mathcal{E}; $$

(b) A is called a strongly comparison map if it is a comparison map and \(A(x) \propto A(y)\) if and only if \(x \propto y\), for all \(x, y \in\mathcal{E}\).

Definition 2.7

([2])

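A single-valued map \(A: \mathcal{E}\rightarrow\mathcal{E}\) is called a β-ordered compression map if it is a comparison map and there exists a constant \(0<\beta<1\) such that

$$A(x) \oplus A(y) \leq\beta(x \oplus y) \quad \mbox{for all } x, y \in \mathcal{E}. $$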

Definition 2.8

([18])

A map \(A:\mathcal{E}\times\mathcal{E}\rightarrow\mathcal{E}\) is called an \((\alpha_{1},\alpha_{2})\)-restricted-accretive map if it is a comparison map and there exist constants \(0 \leq\alpha_{1}, \alpha_{2} \leq1\) such that

where I is the identity map on \(\mathcal{E}\).

Lemma 2.1

([28])

Let \(\theta\in(0, 1)\) be a constant. Then the function \(f(\lambda )=1-\lambda+\lambda\theta\), \(\lambda\in[0,1]\), is nonnegative and strictly decreasing, and \(f(\lambda) \in[0, 1]\). Furthermore, if \(\lambda\neq0\), then \(f(\lambda) \in(0,1)\).
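The properties claimed in Lemma 2.1 follow from a short computation, since f is affine in λ:

$$f(0)=1,\qquad f(1)=\theta,\qquad f^{\prime}(\lambda)=\theta-1<0, $$

so f is strictly decreasing on \([0,1]\) and \(\theta=f(1)\leq f(\lambda)\leq f(0)=1\), that is, \(f(\lambda)\in[\theta,1]\subset[0,1]\). If \(\lambda\in(0,1]\), strict monotonicity gives \(f(\lambda)<f(0)=1\), hence \(f(\lambda)\in[\theta,1)\subset(0,1)\).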

Lemma 2.2

([30])

Let \(\{a_{n}\}\) and \(\{b_{n}\}\) be two sequences of nonnegative real numbers such that

$$a_{n+1} \leq\theta a_{n} + b_{n} \quad \mbox{for all } n \geq0, $$

where \(\theta\in(0,1)\) and \(\lim_{n\rightarrow\infty}b_{n} = 0\). Then \(\lim_{n\rightarrow\infty} a_{n}=0\).
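The content of Lemma 2.2 can be illustrated numerically. Assuming the recursive inequality has the standard form \(a_{n+1}\leq\theta a_{n}+b_{n}\), the Python sketch below runs the extremal case \(a_{n+1}=\theta a_{n}+b_{n}\) with the hypothetical choices \(\theta=0.5\), \(a_{0}=10\) and \(b_{n}=1/(n+1)\rightarrow0\); the iterates decay to 0 as the lemma predicts. This is an illustration, not a proof.

```python
def run_recursion(theta, a0, b, steps):
    """Return a_steps for a_{n+1} = theta * a_n + b(n), the extremal case of the inequality."""
    a = a0
    for n in range(steps):
        a = theta * a + b(n)
    return a

# Hypothetical data: theta = 0.5 and b_n = 1/(n + 1), which tends to 0.
a_final = run_recursion(0.5, 10.0, lambda n: 1.0 / (n + 1), 10_000)
print(a_final < 1e-3)  # True
```

Here \(a_{n}\) behaves roughly like \(b_{n}/(1-\theta)=2/n\), so it tends to 0 only as fast as \(b_{n}\) does; when \(b_{n}\equiv0\) the decay is geometric with ratio θ.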

3 Ordered weak-ARD mapping in ordered Hilbert spaces

Definition 3.1

Let \(A: \mathcal{E}\rightarrow\mathcal{E}\) be a strong comparison and β-ordered compression mapping and \(M:\mathcal{E}\rightarrow CB(\mathcal{E})\) be a set-valued mapping. Then

(a) M is said to be a comparison mapping if, for any \(v_{x} \in M(x)\), \(x \propto v_{x}\), and if \(x \propto y\), then \(v_{x} \propto v_{y}\) for any \(v_{x} \in M(x)\) and any \(v_{y} \in M(y)\), for all \(x, y \in\mathcal{E}\);

(b) a comparison mapping M is said to be ordered rectangular if, for each \(x, y \in\mathcal{E}\), there exist \(v_{x} \in M(x)\) and \(v_{y} \in M(y)\) such that

$$\bigl\langle v_{x}\odot v_{y}, -(x\oplus y)\bigr\rangle =0; $$

(c) a comparison mapping M is said to be \(\gamma_{A}\)-ordered rectangular with respect to A if there exists a constant \(\gamma_{A} > 0\) such that, for any \(x, y \in\mathcal{E}\), there exist \(v_{x}\in M(A(x))\) and \(v_{y}\in M(A(y))\) satisfying

$$\bigl\langle v_{x}\odot v_{y}, -\bigl(A(x)\oplus A(y) \bigr)\bigr\rangle \geq\gamma_{A} \bigl\Vert A(x)\oplus A(y) \bigr\Vert ^{2}, $$

where \(v_{x}\) and \(v_{y}\) are called \(\gamma_{A}\)-elements, respectively;

(d) M is said to be a weak comparison mapping with respect to A if, for any \(x, y \in\mathcal{E}\) with \(x \propto y\), there exist \(v_{x}\in M(A(x))\) and \(v_{y}\in M(A(y))\) such that \(x \propto v_{x}\) and \(y \propto v_{y}\), where \(v_{x}\) and \(v_{y}\) are called weak comparison elements, respectively;

(e) M is said to be a λ-weak ordered different comparison mapping with respect to A if there exists a constant \(\lambda> 0\) such that, for any \(x, y \in\mathcal{E}\), there exist \(v_{x}\in M(A(x))\) and \(v_{y}\in M(A(y))\) with \(\lambda(v_{x}-v_{y})\propto (x-y)\), where \(v_{x}\) and \(v_{y}\) are called λ-elements, respectively;

(f) a weak comparison mapping M is said to be a \((\gamma_{A}, \lambda)\)-weak ARD mapping with respect to A if M is a \(\gamma_{A}\)-ordered rectangular and λ-weak ordered different comparison mapping with respect to A, \((A+\lambda M)(\mathcal{E})= \mathcal{E}\) for \(\lambda> 0\), and there exist \(v_{x}\in M(A(x))\) and \(v_{y}\in M(A(y))\) that are \((\gamma_{A}, \lambda)\)-elements, respectively.

Definition 3.2

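A set-valued mapping \(A:\mathcal{E} \rightarrow CB(\mathcal{E})\) is said to be \(\delta_{A}\)-Lipschitz continuous if there exists a constant \(\delta_{A}>0\) such that, for each \(x, y \in\mathcal{E}\) with \(x \propto y\),

$$D\bigl(A(x), A(y)\bigr) \leq\delta_{A} \Vert x \oplus y \Vert , $$

where D is the Hausdorff metric on \(CB(\mathcal{E})\).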

Definition 3.3

Let \(M:\mathcal{E}\rightarrow CB(\mathcal{E})\) be a set-valued mapping, \(A:\mathcal{E}\rightarrow\mathcal{E}\) be a single-valued mapping and \(I:\mathcal{E}\rightarrow\mathcal{E}\) be an identity mapping. Then a weak comparison mapping M is said to be a \((\gamma^{\prime}, \lambda)\)-weak-ARD mapping with respect to \((I-A)\), if M is a \(\gamma^{\prime}\)-ordered rectangular and λ-weak ordered different comparison mapping with respect to \((I-A)\) and \([(I-A)+\lambda M](\mathcal{E})= \mathcal {E}\), for \(\lambda> 0\) and there exist \(v_{x} \in M((I-A)(x))\) and \(v_{y} \in M((I-A)(y))\) such that \(v_{x}\) and \(v_{y}\) are called \((\gamma^{\prime}, \lambda)\)-elements, respectively.

Definition 3.4

Let \(\mathfrak{C}\) be a normal cone with normal constant \(\lambda _{N_{C}}\) and let \(M:\mathcal{E}\rightarrow CB(\mathcal{E})\) be a weak-ARD set-valued mapping. Let \(I:\mathcal{E}\rightarrow\mathcal {E}\) be the identity mapping and \(A:\mathcal{E}\rightarrow\mathcal{E}\) be a single-valued mapping. The relaxed resolvent operator \(R_{M,\lambda}^{(I-A)}: \mathcal {E}\rightarrow\mathcal{E}\) associated with I, A and M is defined by

$$R_{M,\lambda}^{(I-A)}(x)= \bigl[(I-A)+\lambda M\bigr]^{-1}(x) \quad \mbox{for all } x \in\mathcal{E}, $$(1)

where \(\lambda>0\) is a constant. Under the conditions of Propositions 4–6 below, the relaxed resolvent operator defined by (1) is single-valued, a comparison mapping and Lipschitz continuous.
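As a hedged illustration of the relaxed resolvent in Definition 3.4 (not of the ordered weak-ARD setting itself), consider the hypothetical linear case \(\mathcal{E}=\mathbb{R}^{2}\), \(A(x)=ax\) with \(a<1\), and single-valued \(M(x)=\{Kx\}\) with K a symmetric positive semidefinite \(2\times2\) matrix. Then \((I-A)+\lambda M\) is the matrix \((1-a)I+\lambda K\), whose eigenvalues are at least \(1-a>0\), so the resolvent is single-valued and Lipschitz continuous with constant \(1/(1-a)\) in the Euclidean norm. The Python sketch evaluates it by Cramer's rule.

```python
def resolvent(x, a, lam, K):
    """Hypothetical linear instance of R(x) = [(I - A) + lam*M]^{-1}(x) on R^2.

    Assumes A(x) = a*x with a < 1 and M(x) = {K x} with K symmetric positive
    semidefinite, so (I - A) + lam*M is the invertible matrix (1 - a)I + lam*K.
    Returns the unique y with ((1 - a)I + lam*K) y = x, computed by Cramer's rule.
    """
    (k11, k12), (k21, k22) = K
    m11, m12 = (1 - a) + lam * k11, lam * k12
    m21, m22 = lam * k21, (1 - a) + lam * k22
    det = m11 * m22 - m12 * m21
    if det == 0:
        raise ValueError("(I - A) + lam*M is not invertible")
    x1, x2 = x
    return ((m22 * x1 - m12 * x2) / det, (m11 * x2 - m21 * x1) / det)

# Usage: with a = 0.5, lam = 1 and K = diag(2, 1) the matrix is diag(2.5, 1.5).
print(resolvent((5.0, 3.0), a=0.5, lam=1.0, K=((2.0, 0.0), (0.0, 1.0))))  # (2.0, 2.0)
```

Single-valuedness here comes from invertibility of a matrix; in the ordered set-valued setting of the manuscript it is the content of Proposition 4.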

Proposition 4

([2])

Let \(A:\mathcal{E}\rightarrow\mathcal{E}\) be a β-ordered compression mapping and \(M:\mathcal{E}\rightarrow CB(\mathcal{E})\) be a set-valued ordered rectangular mapping. Then the resolvent \(R_{M,\lambda}^{(I-A)}: \mathcal{E}\rightarrow\mathcal{E}\) is single-valued for all \(\lambda > 0\).

Proposition 5

([2])

Let \(M:\mathcal{E}\rightarrow CB(\mathcal{E})\) be a \((\gamma_{A}, \lambda)\)-weak-ARD set-valued mapping with respect to \(R_{M,\lambda}^{(I-A)}\). Let \(A:\mathcal{E}\rightarrow\mathcal{E}\) be a strongly comparison mapping with respect to \(R_{M,\lambda}^{(I-A)}\) and \(I: \mathcal{E}\rightarrow\mathcal{E}\) be the identity mapping. Then the resolvent operator \(R_{M,\lambda}^{(I-A)}: \mathcal{E}\rightarrow\mathcal{E}\) is a comparison mapping.

Proposition 6

([2])

Let \(M:\mathcal{E}\rightarrow CB(\mathcal{E})\) be a \((\gamma_{A}, \lambda)\)-weak-ARD set-valued mapping with respect to \(R_{M,\lambda}^{(I-A)}\). Let \(A:\mathcal{E}\rightarrow\mathcal{E}\) be a strongly comparison and β-ordered compression mapping with respect to \(R_{M,\lambda}^{(I-A)}\) with condition \(\lambda\gamma_{A}> \beta+1\). Then the following condition holds:

4 Formulation of the problems

Let \(F_{i}: \mathcal{E}_{1}\times\mathcal{E}_{2}\times\cdots\times \mathcal{E}_{m}\rightarrow\mathcal{E}_{i}\), \(A_{i}: \mathcal{E}_{i} \rightarrow\mathcal{E}_{i}\) and \(g_{i}: \mathcal{E}_{i}\rightarrow \mathcal{E}_{i}\) be single-valued mappings, let \(U_{ij}: \mathcal{E}_{j} \rightarrow CB(\mathcal{E}_{j})\) be set-valued mappings and let \(M_{i}: \mathcal{E}_{i}\rightarrow CB(\mathcal {E}_{i})\) be set-valued weak-ARD mappings, for \(i, j= 1,2,3,\ldots, m\). We consider the following problem:

Find \((x_{1}^{\ast},x_{2}^{\ast}, \ldots, x_{m}^{\ast}) \in\mathcal {E}_{1}\times\mathcal{E}_{2}\times\cdots\times\mathcal{E}_{m}\) and \(u_{ij}^{\ast} \in U_{ij}(x_{j}^{\ast})\), for \(i, j= 1,2,3,\ldots, m\), such that

where \(\rho_{i}\) and \(\lambda_{i}\) are given positive constants. Problem (2) is called a generalized set-valued system of nonlinear ordered variational inclusions involving weak-ARD mappings.

If \(U_{ij}= T_{ij}\) is a single-valued mapping, then problem (2) reduces to the following problem:

Find \(x_{j} \in\mathcal{E}_{j}\) such that

This problem is called a generalized system of nonlinear ordered variational inclusions involving weak-ARD mappings.

Remark

We now discuss some special cases of problem (2) that were studied by Li et al.

Case 1. If \(i = j=1\) (that is, \(m=1\)), \(\rho_{1} = 1\), \(\lambda_{1} = 1\) and \(U_{11}= g_{1} =I\), then problem (2) reduces to finding \(x \in\mathcal{E}_{1}\) such that

$$ 0 \in F_{1}(x)\oplus M_{1}(x). $$(4)

This problem was considered by Li et al. [23], who called it a general nonlinear mixed-order quasi-variational inclusion (GNMOQVI) involving the ⊕ operator in an ordered Banach space.

Case 2. If \(F_{1}=0\) (the zero mapping), then problem (4) reduces to finding \(x \in\mathcal{E}_{1}\) such that

$$ 0 \in M_{1}(x). $$(5)

This problem was considered by Li for ordered RME set-valued mappings [19] and \((\alpha, \lambda)\)-NODM set-valued mappings [20].

Lemma 4.1

Let \((x_{1}^{\ast},x_{2}^{\ast}, \ldots, x_{m}^{\ast}) \in\mathcal {E}_{1}\times\mathcal{E}_{2}\times\cdots\times\mathcal{E}_{m}\) and \(u_{ij}^{\ast} \in U_{ij}(x_{j}^{\ast})\) for \(i, j= 1,2,3,\ldots, m\). Then \((x_{1}^{\ast},x_{2}^{\ast}, \ldots, x_{m}^{\ast}, u_{11}^{\ast}, u_{12}^{\ast}, \ldots, u_{1m}^{\ast}, \ldots, u_{m1}^{\ast},u_{m2}^{\ast}, \ldots, u_{mm}^{\ast})\) is a solution of problem (2) if and only if it satisfies

where \(J_{\lambda_{i}, M_{i}}^{I_{i}-A_{i}}(x)= [(I_{i}-A_{i})+ \lambda _{i} M_{i}]^{-1}(x)\) and \(\rho_{i}, \lambda_{i} > 0\) for \(i=1, 2,\ldots , m\).

Proof

The proof follows from the definition of the relaxed resolvent operator. □

6 Main results

Theorem 6.1

Let \(A_{i}: \mathcal{E}_{i}\rightarrow\mathcal{E}_{i}\), \(g_{i}: \mathcal{E}_{i}\rightarrow\mathcal{E}_{i}\) and \(F_{i}: \mathcal {E}_{1}\times\mathcal{E}_{2}\times\cdots\times\mathcal {E}_{m}\rightarrow\mathcal{E}_{i}\) be single-valued mappings such that \(A_{i}\) is a \(\lambda_{A_{i}}\)-ordered compression mapping, \(g_{i}\) is a \(\lambda_{g_{i}}\)-ordered compression and \((\alpha_{1}^{i},\alpha _{2}^{i})\)-ordered restricted-accretive mapping, and \(F_{i}\) is a \(\lambda_{F_{ij}}\)-ordered compression mapping with respect to the jth argument. Let \(U_{ij}: \mathcal{E}_{j} \rightarrow CB(\mathcal{E}_{j})\) be a \(D_{i}\)-\(\delta_{ij}\)-ordered Lipschitz continuous set-valued mapping, and let \(M_{i}: \mathcal{E}_{i}\rightarrow CB(\mathcal{E}_{i})\) be a \((\gamma_{A_{i}}, \lambda_{i})\)-weak rectangular different comparison mapping with respect to \(A_{i}\). Suppose that \(x_{i} \propto y_{i}\) implies \(J_{\lambda_{i}, M_{i}}^{I_{i}-A_{i}}(x_{i}) \propto J_{\lambda_{i}, M_{i}}^{I_{i}-A_{i}}(y_{i})\) and that, for all \(\lambda_{i}, \rho_{i} > 0\), the following condition holds:

for all \(j=1,2,3, \ldots,m\). Then problem (2) admits a solution \((x_{1}^{\ast},x_{2}^{\ast},\ldots, x_{m}^{\ast}, u_{11}^{\ast},u_{12}^{\ast},\ldots,u_{1m}^{\ast},\ldots,u_{m1}^{\ast},\ldots,u_{mm}^{\ast})\), where \((x_{1}^{\ast},x_{2}^{\ast},\ldots,x_{m}^{\ast}) \in\mathcal{E}_{1}\times\mathcal{E}_{2}\times \cdots\times\mathcal{E}_{m}\) and \(u_{ij}^{\ast} \in U_{ij}(x_{j}^{\ast})\). Moreover, the iterative sequences \(\{x_{j}^{n}\}\) and \(\{u_{ij}^{n}\}\) generated by Algorithm 1 converge strongly to \(x_{j}^{\ast}\) and \(u_{ij}^{\ast}\), respectively, for \(i, j=1, 2,\ldots,m\).

Proof

Using Algorithm 1 and Proposition 2, for \(i=1, 2,\ldots, m\), we have

Using Definition 2.2, Proposition 6 and Eq. (10), we get

Now, from Eq. (11), we compute

By the definition of \(F_{i}\) as a \(\lambda_{F_{ij}}\)-ordered compression map with respect to the jth argument, we have

Using Proposition 6 and Eq. (13) in Eq. (11), we obtain

which implies that

where

and

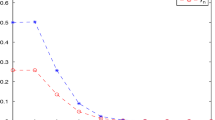

From Eq. (14), we know that the sequence \(\{\theta_{j}^{n}\}\) is monotonically decreasing and \(\theta_{j}^{n}\rightarrow\theta_{j}\) as \(n\rightarrow\infty\). Thus, \(f(\lambda)= \lim_{n\rightarrow\infty}f_{n}(\lambda)=\max_{1\leq j\leq m}\{1-\lambda+\lambda\theta_{j}\}\). Since \(0 < \theta_{j} < 1\) for \(j=1, 2,\ldots, m\), we get \(\theta= \max_{1\leq j \leq m}\{\theta_{j}\} \in(0, 1)\), and since \(\lambda\geq0\), \(f(\lambda)= 1- \lambda+ \lambda\theta\). By Lemma 2.1, \(f(\lambda) \in (0, 1)\) for \(\lambda\in(0,1]\); hence, from Eq. (14), \(\{x_{j}^{n}\}\) is a Cauchy sequence, and by the completeness of \(\mathcal{E}_{j}\) there exists \(x_{j}^{\ast} \in\mathcal{E}_{j}\) such that \(x_{j}^{n} \rightarrow x_{j}^{\ast}\) as \(n \rightarrow\infty\) for \(j = 1, 2,\ldots, m\). Next, we show that \(u_{ij}^{n} \rightarrow u_{ij}^{\ast} \in U_{ij}(x_{j}^{\ast})\) as \(n \rightarrow\infty\) for \(i, j= 1, 2,\ldots, m\). It follows from Eq. (13) that the sequences \(\{u_{ij}^{n}\}\) are also Cauchy sequences. Hence, there exists \(u_{ij}^{\ast} \in\mathcal{E}_{j}\) such that \(u_{ij}^{n} \rightarrow u_{ij}^{\ast}\) as \(n\rightarrow\infty\) for \(i, j= 1, 2,\ldots, m\). Furthermore,

Since \(U_{ij}(x_{j}^{\ast})\) is closed for \(i, j=1, 2,\ldots, m\), we have \(u_{ij}^{\ast} \in U_{ij}(x_{j}^{\ast})\) for \(i, j=1, 2,\ldots, m\). By continuity, \((x_{1}^{\ast}, x_{2}^{\ast}, \ldots, x_{m}^{\ast}) \in\mathcal{E}_{1}\times\mathcal{E}_{2}\times\cdots\times\mathcal {E}_{m}\) and \(u_{ij}^{\ast} \in U_{ij}(x_{j}^{\ast})\) satisfy Eq. (6), and so, by Lemma 4.1, \((x_{1}^{\ast},x_{2}^{\ast}, \ldots, x_{m}^{\ast}, u_{11}^{\ast}, u_{12}^{\ast}, \ldots, u_{1m}^{\ast}, \ldots, u_{m1}^{\ast},u_{m2}^{\ast}, \ldots, u_{mm}^{\ast})\) is a solution of problem (2). This completes the proof. □
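The role of the factor \(f(\lambda)=1-\lambda+\lambda\theta\) in the proof above can be seen in a toy computation. Assume, purely for illustration, the relaxed iteration \(x^{n+1}=(1-\lambda)x^{n}+\lambda Q(x^{n})\), where Q is a hypothetical contraction on \(\mathbb{R}^{3}\) with constant θ in the sum norm; this is a generic relaxed scheme, not Algorithm 1 of the manuscript. Since \(x^{\ast}=Q(x^{\ast})\), each step satisfies \(\|x^{n+1}-x^{\ast}\|\leq(1-\lambda)\|x^{n}-x^{\ast}\|+\lambda\theta\|x^{n}-x^{\ast}\|=f(\lambda)\|x^{n}-x^{\ast}\|\), and the sketch checks this bound step by step.

```python
def sum_norm(x):
    """Sum norm ||x||_1 = |x_1| + ... + |x_m| on R^m."""
    return sum(abs(t) for t in x)

def make_Q(theta, c):
    """Hypothetical map Q(x)_j = theta * x_{(j+1) mod m} + c_j.

    A cyclic shift preserves the sum norm, so Q is a contraction with constant theta.
    """
    m = len(c)
    return lambda x: [theta * x[(j + 1) % m] + c[j] for j in range(m)]

def relaxed_iteration(Q, x0, lam, steps):
    """Return the iterates of x^{n+1} = (1 - lam) x^n + lam Q(x^n)."""
    xs = [list(x0)]
    for _ in range(steps):
        x = xs[-1]
        xs.append([(1 - lam) * a + lam * b for a, b in zip(x, Q(x))])
    return xs

theta, lam = 0.5, 0.5
x_star = [1.0, 2.0, 3.0]
# Choose c so that x_star is the fixed point: x_star = Q(x_star).
c = [x_star[j] - theta * x_star[(j + 1) % 3] for j in range(3)]
xs = relaxed_iteration(make_Q(theta, c), [0.0, 0.0, 0.0], lam, steps=100)
errs = [sum_norm([a - b for a, b in zip(x, x_star)]) for x in xs]
f = 1 - lam + lam * theta  # = 0.75, the factor f(lambda) of Lemma 2.1
# Each step shrinks the error by at most f (up to rounding).
print(all(e1 <= f * e0 + 1e-12 for e0, e1 in zip(errs, errs[1:])), errs[-1] < 1e-9)  # True True
```

Smaller λ makes each step more conservative but pushes \(f(\lambda)\) toward 1; with \(\lambda=1\) the scheme is plain Picard iteration with factor θ.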

Theorem 6.2

Suppose that \(A_{i}, g_{i}\) and \(M_{i}\) are the same as in Theorem 6.1 for \(i=1, 2,\ldots,m\). Let \(T_{ij}: \mathcal{E}_{j}\rightarrow\mathcal{E}_{j}\) be \(\gamma _{ij}\)-Lipschitz continuous and \(F_{i}:\mathcal{E}_{1}\times \mathcal{E}_{2}\times\cdots\times\mathcal{E}_{m}\rightarrow\mathcal {E}_{i}\) be a \(\lambda_{F_{ij}}\)-ordered compression mapping with respect to the jth argument. Suppose further that there exist constants \(\lambda_{j} >0\), \(j=1,2,\ldots,m\), such that

Then problem (3) has a unique solution \((x_{1}^{\ast},x_{2}^{\ast },\ldots,x_{m}^{\ast})\in\mathcal{E}_{1}\times\mathcal{E}_{2}\times \cdots\times\mathcal{E}_{m}\). Moreover, the iterative sequence \(\{ x_{j}^{n}\}\) generated by Algorithm 2 converges strongly to \(x_{j}^{\ast }\) for \(j=1,2,\ldots,m\).

Proof

Let us define a norm \(\|\cdot\|_{\ast}\) on the product space \(\mathcal {E}_{1}\times\mathcal{E}_{2}\times\cdots\times\mathcal{E}_{m}\) by

Then it can easily be seen that \((\mathcal{E}_{1}\times\mathcal {E}_{2}\times\cdots\times\mathcal{E}_{m}, \|\cdot\|_{\ast})\) is a Banach space.

Setting

Define a mapping \(Q:\mathcal{E}_{1}\times\mathcal{E}_{2}\times\cdots \times\mathcal{E}_{m} \rightarrow\mathcal{E}_{1}\times\mathcal {E}_{2}\times\cdots\times\mathcal{E}_{m}\) as

For any \((x_{1}^{1},x_{2}^{1},\ldots,x_{m}^{1})\), \((x_{1}^{2},x_{2}^{2},\ldots,x_{m}^{2})\in\mathcal{E}_{1}\times\mathcal {E}_{2}\times\cdots\times\mathcal{E}_{m}\) we have

We first estimate \(y_{i}^{1} \oplus y_{i}^{2}\) as follows:

From Definition 2.2 and Proposition 3, we have

Further, we calculate

We now estimate the inner term of the above expression, using the properties of the mappings \(F_{i}\) for \(i=1, 2,\ldots ,m\). We have

Using the \(\gamma_{ij}\)-Lipschitz continuity of the mappings \(T_{ij}\) in Eq. (19), we have

Substituting Eq. (20) into Eq. (18), and then the result into Eq. (17), we have

Now, Eq. (16) can be rewritten as

where \(\theta= \max_{1\leq j \leq m}\theta_{j}\). Finally, from Eq. (22), Eq. (16) can be written as

It follows from condition (9) that \(0 < \theta< 1\). Hence \(Q: \mathcal{E}_{1}\times\mathcal{E}_{2}\times\cdots \times\mathcal{E}_{m} \rightarrow\mathcal{E}_{1}\times\mathcal {E}_{2}\times\cdots\times\mathcal{E}_{m}\) is a contraction on the Banach space \((\mathcal{E}_{1}\times\mathcal{E}_{2}\times\cdots\times\mathcal{E}_{m}, \|\cdot\|_{\ast})\), and by the Banach contraction principle there exists a unique \((x_{1}^{\ast},x_{2}^{\ast},\ldots, x_{m}^{\ast}) \in\mathcal {E}_{1}\times\mathcal{E}_{2}\times\cdots\times\mathcal{E}_{m}\) such that \(Q(x_{1}^{\ast},x_{2}^{\ast},\ldots, x_{m}^{\ast})=(x_{1}^{\ast },x_{2}^{\ast},\ldots, x_{m}^{\ast})\). Thus, \((x_{1}^{\ast},x_{2}^{\ast },\ldots, x_{m}^{\ast})\) is the unique solution of problem (3). Now, we prove that \(x_{i}^{n}\rightarrow x_{i}^{\ast}\) as \(n \rightarrow\infty\) for \(i=1,2,\ldots, m\). In fact, it follows from Eq. (8) and the Lipschitz continuity of the relaxed resolvent operator that

From the previous calculations, we have

where \(a_{n}=\sum_{j=1}^{m}\|x_{j}^{n} - x_{j}^{\ast}\|\), \(b_{n}=\sum_{j=1}^{m}\|w_{j}^{n}\|\). Algorithm 2 yields \(\lim_{n\rightarrow\infty}b_{n}=0\). Now, Lemma 2.2 implies that \(\lim_{n\rightarrow\infty}a_{n}=0\), and so \(x_{j}^{n} \rightarrow x_{j}^{\ast}\) as \(n\rightarrow\infty\) for \(j=1,2,\ldots,m\). This completes the proof. □
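The final step of the proof combines a contraction with vanishing errors. As an illustration under hypothetical choices (not Algorithm 2 itself), the Python sketch iterates \(x^{n+1}=Q(x^{n})+w^{n}\) for a contraction Q on \(\mathbb{R}^{2}\) with constant 1/2 in the sum norm and errors \(w^{n}\rightarrow0\). With \(a_{n}=\|x^{n}-x^{\ast}\|_{1}\) one has \(a_{n+1}\leq\frac{1}{2}a_{n}+\|w^{n}\|_{1}\), which is the situation covered by Lemma 2.2, and the iterates approach the unique fixed point \(x^{\ast}=(2/3,-2/3)\).

```python
def perturbed_picard(Q, x0, errors, steps):
    """Iterate x^{n+1} = Q(x^n) + w^n, where errors(n) returns the error vector w^n."""
    x = list(x0)
    for n in range(steps):
        w = errors(n)
        x = [q + e for q, e in zip(Q(x), w)]
    return x

def Q(x):
    """Hypothetical contraction: ||Q(x) - Q(y)||_1 = 0.5 ||x - y||_1, fixed point (2/3, -2/3)."""
    x1, x2 = x
    return [0.5 * x2 + 1.0, 0.5 * x1 - 1.0]

def dist1(x, y):
    """Sum-norm distance ||x - y||_1."""
    return sum(abs(a - b) for a, b in zip(x, y))

x_star = [2.0 / 3.0, -2.0 / 3.0]
# Errors w^n = (1/(n+1), -1/(n+1)) tend to 0, so b_n = ||w^n||_1 = 2/(n+1) -> 0.
x_noisy = perturbed_picard(Q, [0.0, 0.0], lambda n: [1.0 / (n + 1), -1.0 / (n + 1)], 10_000)
# Without errors the scheme is plain Picard iteration for the contraction Q.
x_exact = perturbed_picard(Q, [0.0, 0.0], lambda n: [0.0, 0.0], 60)
print(dist1(x_noisy, x_star) < 1e-2, dist1(x_exact, x_star) < 1e-12)  # True True
```

The error-free run converges geometrically, while the perturbed run converges only as fast as the errors vanish, in line with Lemma 2.2.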

7 Conclusion

Two of the most challenging and important issues related to inclusions are the formulation of generalized inclusions and the development of iterative algorithms. In this article, we introduced and studied two systems of ordered variational inclusions that are more general than many existing systems of generalized ordered variational inclusions in the literature. Iterative algorithms involving weak-ARD mappings were constructed to approximate solutions of these systems, and their convergence was established.

We remark that our results are new and useful for further investigations. Much more work is needed in all of these areas to address applications of systems of general ordered variational inclusions in engineering and the physical sciences.

References

Adly, S.: Perturbed algorithm and sensitivity analysis for a general class of variational inclusions. J. Math. Anal. Appl. 201, 609–630 (1996)

Ahmad, I., Ahmad, R., Iqbal, J.: A resolvent approach for solving a set-valued variational inclusion problem using weak-RRD set-valued mapping. Korean J. Math. 24(2), 199–213 (2016)

Ahmad, I., Ahmad, R., Iqbal, J.: Parametric ordered generalized variational inclusions involving NODSM mappings. Adv. Nonlinear Var. Inequal. 19(1), 88–97 (2016)

Ahmad, I., Mishra, V.N., Ahmad, R., Rahaman, M.: An iterative algorithm for a system of generalized implicit variational inclusions. SpringerPlus 5(1283), 1–16 (2016). https://doi.org/10.1186/s40064-016-2916-8

Ahmad, R., Ansari, Q.H.: An iterative algorithm for generalized nonlinear variational inclusions. Appl. Math. Lett. 13(5), 23–26 (2000)

Amann, H.: On the number of solutions of nonlinear equations in ordered Banach space. J. Funct. Anal. 11, 346–384 (1972)

Ansari, Q.H., Yao, J.C.: A fixed point theorem and its applications to a system of variational inequalities. Bull. Aust. Math. Soc. 59(3), 433–442 (1999)

Bianchi, M.: Pseudo P-Monotone Operators and Variational Inequalities, Report 6, Istituto di Econometria e Matematica per le Decisioni Economiche, Università Cattolica del Sacro Cuore, Milan (1993)

Cohen, G., Chaplais, F.: Nested monotonicity for variational inequalities over a product of spaces and convergence of iterative algorithms. J. Optim. Theory Appl. 59, 360–390 (1988)

Ding, X.P.: Perturbed proximal point algorithms for generalized quasi-variational inclusions. J. Math. Anal. Appl. 210, 88–101 (1997)

Du, Y.H.: Fixed points of increasing operators in ordered Banach spaces and applications. Appl. Anal. 38, 1–20 (1990)

Fang, Y.P., Huang, N.J.: H-Monotone operator and resolvent operator technique for variational inclusions. Appl. Math. Comput. 145, 795–803 (2003)

Fang, Y.P., Huang, N.J., Thompson, H.B.: A new system of variational inclusions with \((H,\eta )\)-monotone operators in Hilbert spaces. Comput. Math. Appl. 49, 365–374 (2005)

Ge, D.J.: Fixed points of mixed monotone operators with applications. Appl. Anal. 31, 215–224 (1988)

Ge, D.J., Lakshmikantham, V.: Coupled fixed points of nonlinear operators with applications. Nonlinear Anal. TMA 11(5), 623–632 (1987)

Hassouni, A., Moudafi, A.: A perturbed algorithm for variational inclusions. J. Math. Anal. Appl. 185, 706–712 (1994)

Lan, H.Y., Cho, Y.J., Verma, R.U.: Nonlinear relaxed cocoercive inclusions involving \((A,\eta )\)-accretive mappings in Banach spaces. Comput. Math. Appl. 51, 1529–1538 (2006)

Li, H.G.: Approximation solution for generalized nonlinear ordered variational inequality and ordered equation in ordered Banach space. Nonlinear Anal. Forum 13(2), 205–214 (2008)

Li, H.G.: Nonlinear inclusion problems for ordered RME set-valued mappings in ordered Hilbert spaces. Nonlinear Funct. Anal. Appl. 16(1), 1–8 (2011)

Li, H.G.: A nonlinear inclusion problem involving \((\alpha, \lambda )\)-NODM set-valued mappings in ordered Hilbert space. Appl. Math. Lett. 25, 1384–1388 (2012)

Li, H.G., Li, L.P., Jin, M.M.: A class of nonlinear mixed ordered inclusion problems for ordered \((\alpha_{A}, \lambda)\)-ANODM set-valued mappings with strong comparison mapping A. Fixed Point Theory Appl. 2014, 79 (2014)

Li, H.G., Pan, X.B., Deng, Z.Y., Wang, C.Y.: Solving GNOVI frameworks involving \((\gamma_{G}, \lambda )\)-weak-GRD set-valued mappings in positive Hilbert spaces. Fixed Point Theory Appl. 2014, 146 (2014)

Li, H.G., Qui, D., Zou, Y.: Characterization of weak-ANODD set-valued mappings with applications to approximate solution of GNMOQV inclusions involving ⊕ operator in ordered Banach space. Fixed Point Theory Appl. 2013, 241 (2013). https://doi.org/10.1186/1687-1812-2013-241

Lions, J.L., Stampacchia, G.: Variational inequalities. Commun. Pure Appl. Math. 20, 493–519 (1967)

Salahuddin: Solvability for a system of generalized nonlinear ordered variational inclusions in ordered Banach spaces. Korean J. Math. 25(3), 359–377 (2017). https://doi.org/10.11568/kjm.2017.25.3.359

Sarfaraz, M., Ahmad, M.K., Kiliçman, A.: Approximation solution for system of generalized ordered variational inclusions with ⊕ operator in ordered Banach space. J. Inequal. Appl. 2017, 81 (2017). https://doi.org/10.1186/s13660-017-1351-x

Schaefer, H.H.: Banach Lattices and Positive Operators. Springer, Berlin (1974). https://doi.org/10.1007/978-3-642-65970-6

Xiong, T.J., Lan, H.Y.: New general systems of set-valued variational inclusions involving relative \((A,\eta)\)-maximal monotone operators in Hilbert spaces. J. Inequal. Appl. 2014(407), 1 (2014)

Xiong, T.J., Lan, H.Y.: Strong convergence of new two-step viscosity iterative approximation methods for set-valued nonexpansive mappings in \(\operatorname{CAT}(0)\) spaces. J. Funct. Spaces 2018, Article ID 1280241 (2018)

Xu, Y.: Ishikawa and Mann iterative processes with errors for nonlinear strongly accretive operator equations. J. Math. Anal. Appl. 224(1), 91–101 (1998)

Acknowledgements

The authors are thankful to Aligarh Muslim University, Aligarh and Department of Mathematics, College of Arts and Sciences at Wadi Addawasir, Prince Sattam Bin Abdulaziz University, Riyadh Region, Kingdom of Saudi Arabia for providing excellent facilities to carry out this work.

Funding

No funding available.

Author information


Contributions

The authors contributed equally and significantly to writing this paper. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sarfaraz, M., Nisar, K.S., Morsy, A. et al. New generalized systems of nonlinear ordered variational inclusions involving ⊕ operator in real ordered Hilbert spaces. J Inequal Appl 2018, 252 (2018). https://doi.org/10.1186/s13660-018-1846-0


DOI: https://doi.org/10.1186/s13660-018-1846-0