Abstract

Let X be an observable random variable with unknown distribution function \(F(x) = \mathbb{P}(X \leq x)\), \(- \infty< x < \infty\), and let

\[\theta= \sup \bigl\{ r \geq0: \mathbb{E} \vert X \vert ^{r} < \infty \bigr\} .\]

We call θ the power of moments of the random variable X. Let \(X_{1}, X_{2}, \ldots, X_{n}\) be a random sample of size n drawn from \(F(\cdot)\). In this paper we propose the following simple point estimator of θ and investigate its asymptotic properties:

\[\hat{\theta}_{n} = \frac{\log n}{\log\max_{1 \leq k \leq n} \vert X_{k} \vert },\]

where \(\log x = \ln(e \vee x)\), \(- \infty< x < \infty\). In particular, we show that

This means that, under very reasonable conditions on \(F(\cdot)\), \(\hat {\theta}_{n}\) is actually a consistent estimator of θ.

1 Motivation

The motivation of the current work arises from the following problem concerning parameter estimation. Let X be an observable random variable with unknown distribution function \(F(x) = \mathbb{P}(X \leq x)\), \(- \infty< x < \infty\), and let

\[\theta= \sup \bigl\{ r \geq0: \mathbb{E} \vert X \vert ^{r} < \infty \bigr\} .\]

We call θ the power of moments of the random variable X. Clearly θ is a parameter of the distribution of the random variable X. Now let \(X_{1}, X_{2}, \ldots, X_{n}\) be a random sample of size n drawn from the random variable X; i.e., \(X_{1}, X_{2}, \ldots, X_{n}\) are independent and identically distributed (i.i.d.) random variables whose common distribution function is \(F(\cdot)\). It is natural to pose the following question: Can we estimate the parameter θ based on the random sample \(X_{1}\), …, \(X_{n}\)?

This is a serious and important problem. For example, if \(\theta> 2\) and the distribution of X is nondegenerate, then \(0 < \operatorname{Var} X < \infty \), and so by the classical Lévy central limit theorem, the distribution of

\[\frac{\sum_{k=1}^{n} X_{k} - n \mu}{\sqrt{n}}\]

is approximately normal (for all sufficiently large n) with mean 0 and variance \(\sigma^{2} = \operatorname{Var}X = \mathbb{E}(X - \mu)^{2}\), where \(\mu = \mathbb{E}X\). Thus the problem we face is how to conclude with a high degree of confidence that \(\theta> 2\).

In this paper we propose the following point estimator of θ and will investigate its asymptotic properties:

\[\hat{\theta}_{n} = \frac{\log n}{\log\max_{1 \leq k \leq n} \vert X_{k} \vert }.\]

Here and below \(\log x = \ln(e \vee x)\), \(- \infty< x < \infty\).
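
As a minimal sketch of how the estimator \(\hat{\theta}_{n} = \log n / \log\max_{1 \leq k \leq n} \vert X_{k} \vert \) (the form recorded in the proof of Theorem 2.5) could be computed from data, the following Python code evaluates it with the truncated logarithm \(\log x = \ln(e \vee x)\). The function names `trunc_log` and `power_of_moments_estimate` are illustrative choices and are not notation from the paper.

```python
import math


def trunc_log(x):
    """Truncated logarithm log x = ln(max(e, x)); it is at least 1 for every real x."""
    return math.log(max(math.e, x))


def power_of_moments_estimate(sample):
    """Return log(n) / log(max_k |X_k|) for a non-empty sample of real numbers."""
    n = len(sample)
    if n == 0:
        raise ValueError("sample must be non-empty")
    largest = max(abs(x) for x in sample)
    # The truncation keeps the denominator >= 1, so no division by zero can occur.
    return trunc_log(n) / trunc_log(largest)


# Hypothetical toy data: n = 3 and max |X_k| = e^4, so the estimate is ln(3) / 4.
sample = [1.0, math.exp(2), -math.exp(4)]
print(power_of_moments_estimate(sample))
```

Because \(\log x \geq1\) for every real x, the ratio is well defined even when all observations are small in absolute value; in that case the estimate reduces to \(\log n\).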

Our main results will be stated in Sect. 2 and they all pertain to a sequence of i.i.d. random variables \(\{X_{n}; n \geq1\}\) drawn from the distribution function \(F(\cdot)\) of the random variable X. The proofs of our main results will be provided in Sect. 3.

2 Statement of the main results

Throughout, X is a random variable with unknown distribution \(F(x) = \mathbb{P}(X \leq x)\), \(-\infty< x < \infty\) and write

Clearly, just as θ as defined in Sect. 1 is a parameter of the distribution \(F(\cdot)\) of the random variable X, so are \(\rho_{1}\) and \(\rho_{2}\). These parameters satisfy

The main results of this paper are Theorems 2.1–2.5.

Theorem 2.1

Let \(\{X_{n}; n \geq1\}\) be a sequence of i.i.d. random variables drawn from the distribution function \(F(\cdot)\) of the random variable X. Then

and there exists an increasing positive integer sequence \(\{l_{n}; n \geq1 \}\) (which depends on the probability distribution of X when \(\rho_{1} < \infty\)) such that

Theorem 2.2

Let \(\{X_{n}; n \geq1\}\) be a sequence of i.i.d. random variables drawn from the distribution function \(F(\cdot)\) of the random variable X. Then

and there exists an increasing positive integer sequence \(\{m_{n}; n \geq1 \}\) (which depends on the probability distribution of X when \(\rho_{2} > 0\)) such that

Remark 2.1

We must point out that (2.2) and (2.4) are two interesting conclusions. To see this, let \(\{U_{n}; n \geq1 \}\) be a sequence of independent random variables with

Since

it follows from the Borel–Cantelli lemma that

However, for any sequences \(\{l_{n}; n \geq1\}\) and \(\{m_{n}; n \geq1 \}\) of increasing positive integers,

Remark 2.2

For an observable random variable X, it is often the case that \(\rho _{1} = \rho_{2}\). However, for any given constants \(\rho_{1}\) and \(\rho_{2}\) with \(0 \leq\rho_{1} < \rho_{2} \leq\infty\), one can construct a random variable X such that

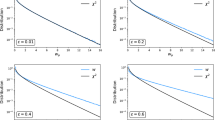

For example, if \(0 < \rho_{1} < \rho_{2} < \infty\), a random variable X can be constructed having probability distribution given by

where \(d_{n} = 2^{ (\rho_{2}/\rho_{1} )^{n}}\), \(n \geq1\) and

Combining Theorems 2.1 and 2.2, we establish a law of large numbers for \(\log\max_{1 \leq k \leq n} X_{k}\), \(n \geq1\) as follows.

Theorem 2.3

Let \(\{X_{n}; n \geq1\}\) be a sequence of i.i.d. random variables drawn from the distribution function \(F(\cdot)\) of the random variable X and let \(\rho\in[0, \infty]\). Then the following four statements are equivalent:

If \(0 \leq\rho< \infty\), then any one of (2.5)–(2.8) holds if and only if there exists a function \(L(\cdot): (0, \infty) \rightarrow(0, \infty)\) such that

The following result concerns convergence in distribution for \(\log\max _{1 \leq k \leq n}X_{k}\), \(n \geq1\).

Theorem 2.4

Let \(\{X_{n}; n \geq1\}\) be a sequence of i.i.d. random variables drawn from the distribution function \(F(\cdot)\) of the random variable X. Suppose that there exist constants \(0 < \rho< \infty\) and \(-\infty< \tau< \infty\) and a monotone function \(h(\cdot): [0, \infty) \rightarrow(0, \infty)\) with \(\lim_{x \rightarrow\infty}h(x^{2})/h(x) = 1\) such that

Then

Also, by Theorems 2.1–2.3, we have the following result for the point estimator \(\hat{\theta}_{n}\).

Theorem 2.5

Let \(\{X_{n}; n \geq1\}\) be a sequence of i.i.d. random variables drawn from the distribution function \(F(\cdot)\) of the random variable X. Let

Then we have

and the following three statements are equivalent:

If \(0 \leq\theta< \infty\), then any one of (2.12)–(2.14) holds if and only if there exists a function \(L(\cdot): (0, \infty) \rightarrow(0, \infty)\) such that

Remark 2.3

Let \(\{X_{n}; n \geq1\}\) be a sequence of i.i.d. random variables drawn from the distribution function \(F(\cdot)\) of some nonnegative random variable X. For each \(n \geq1\), let \(X_{n,1} \leq X_{n,2} \leq\cdots\leq X_{n,n}\) denote the order statistics based on \(X_{1}, X_{2}, \ldots, X_{n}\). To estimate the tail index of \(F(\cdot)\), the well-known Hill estimator, proposed by Hill [1], is defined by

where \(\{k_{n}; n \geq1 \}\) is a sequence of positive integers satisfying

Mason [2, Theorem 2] showed that, for some constant \(\theta\in (0, \infty)\),

if and only if

Since \(L(\cdot)\) defined in (2.17) is a slowly varying function,

is always true and hence (2.15) follows from (2.17). However, the following example shows that (2.15) does not imply (2.17). Thus condition (2.15) is weaker than (2.17).

Example 2.1

Let \(\{X_{n}; n \geq1\}\) be a sequence of i.i.d. random variables drawn from the distribution function \(F(\cdot)\) of some nonnegative random variable X given by

where \(\theta\in(0, \infty)\) is the tail index of the distribution and \([t]\) denotes the integer part of t. Then (2.15) holds but (2.17) is not satisfied. To see this, let

Then

Since, for \(x \geq e\), \(0 \leq\ln x -[\ln x] \leq1\), we have

and hence (2.15) holds. However, for \(1 < a < e\) and \(x_{n} = e^{n}\), \(n \geq1\), we have

Thus, for \(\theta\in(0, \infty)\),

and hence

i.e., \(L(\cdot)\) is not a slowly varying function. Thus (2.17) is not satisfied and hence, for this example, the well-known Hill estimator cannot be used to estimate the tail index θ.
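
For comparison, a minimal sketch of the Hill estimator in its standard textbook form is given below. The sketch assumes that \(\hat{\alpha}_{n}\) is the reciprocal of the mean log-excess of the k largest order statistics over \(X_{n,n-k}\), so that it estimates the tail index θ; the exact normalization in Hill [1] and Mason [2] may differ. The name `hill_estimate` and the parameter `k` (playing the role of \(k_{n}\)) are illustrative.

```python
import math


def hill_estimate(sample, k):
    """Textbook Hill estimate of the tail index from the k largest order statistics."""
    n = len(sample)
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < n")
    ordered = sorted(sample)        # X_{n,1} <= ... <= X_{n,n}
    threshold = ordered[n - k - 1]  # X_{n,n-k}
    if threshold <= 0:
        raise ValueError("the threshold order statistic must be positive")
    # Mean of ln(X_{n,n-i+1} / X_{n,n-k}) over i = 1, ..., k.
    mean_log_excess = sum(math.log(x / threshold) for x in ordered[n - k:]) / k
    if mean_log_excess == 0:
        raise ValueError("the k largest observations all equal the threshold")
    return 1.0 / mean_log_excess


# Hypothetical toy data: threshold 2, top values 4 and 8, so the estimate is 2 / ln(8).
print(hill_estimate([1.0, 2.0, 4.0, 8.0], k=2))
```

Unlike \(\hat{\theta}_{n}\), this estimator requires a choice of k and uses the k + 1 largest observations rather than the maximum alone.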

3 Proofs of the main results

Let \(\{A_{n}; n \geq1 \}\) be a sequence of events. As usual the abbreviation \(\{A_{n} \mbox{ i.o.} \}\) stands for the case that the events \(A_{n}\) occur infinitely often. That is,

\[\{A_{n} \mbox{ i.o.} \} = \bigcap_{n=1}^{\infty} \bigcup_{j=n}^{\infty} A_{j}.\]

For events A and B, we say \(A = B\) a.s. if \(\mathbb{P}(A \mathrel{\Delta} B) = 0\) where \(A \mathrel{\Delta}B = (A \setminus B) \cup(B \setminus A)\). To prove Theorem 2.1, we use the following preliminary result, which can be found in Chandra [3, Example 1.6.25(a), p. 48].

Lemma 3.1

Let \(\{b_{n}; n \geq1 \}\) be a nondecreasing sequence of positive real numbers such that

and let \(\{V_{n}; n \geq1 \}\) be a sequence of random variables. Then

Proof of Theorem 2.1

Case I: \(0 < \rho_{1} < \infty\). For given \(\epsilon> 0\), let \(r(\epsilon) = (\frac{1}{\rho_{1}} + \epsilon )^{-1}\). Then

and hence

By the Borel–Cantelli lemma, (3.1) implies that

By Lemma 3.1, we have

and hence

Thus

Letting \(\epsilon\searrow0\), we get

By the definition of \(\rho_{1}\), we have

which is equivalent to

Then, inductively, we can choose positive integers \(l_{n}\), \(n \geq1\) such that

Note that, for any \(0 \leq z \leq1\), \(1 - z \leq e^{-z}\). Thus, for all sufficiently large n, we have

Since \(\sum_{n=1}^{\infty} n^{-2} < \infty\), by the Borel–Cantelli lemma, we get

which ensures that

Clearly, (2.1) and (2.2) follow from (3.2) and (3.3).
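
For orientation, the elementary inequality \(1 - z \leq e^{-z}\) is what typically converts a tail probability into a bound for the maximum of i.i.d. random variables. The following display is a generic sketch of that step, in which \(x_{n}\) denotes an arbitrary threshold (an illustrative symbol, not the specific sequence chosen in the proof):

\[
\mathbb{P} \Bigl( \max_{1 \leq k \leq n} X_{k} \leq x_{n} \Bigr) = \bigl( 1 - \mathbb{P}(X > x_{n}) \bigr)^{n} \leq\exp \bigl( - n \mathbb{P}(X > x_{n}) \bigr).
\]

In particular, whenever \(n \mathbb{P}(X > x_{n}) \geq2 \ln n\), the right-hand side is at most \(n^{-2}\), which is summable; this is the kind of bound to which the Borel–Cantelli lemma applies.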

Case II: \(\rho_{1} = \infty\). Using the same argument used in the first half of the proof for Case I, we get

and hence

Note that

We thus have

It thus follows from (3.4) and (3.5) that

proving (2.1) and (2.2) (with \(l_{n} = n\), \(n \geq1\)).

Case III: \(\rho_{1} = 0\). By the definition of \(\rho_{1}\), we have

which is equivalent to

Then, inductively, we can choose positive integers \(l_{n}\), \(n \geq1\) such that

Thus, for all sufficiently large n, we have by the same argument as in Case I

and hence by the Borel–Cantelli lemma

which ensures that

Thus (2.1) and (2.2) hold. This completes the proof of Theorem 2.1. □

Proof of Theorem 2.2

Case I: \(0 < \rho_{2} < \infty\). For given \(\rho_{2} < r < \infty\), let \(r_{1} = (r + \rho_{2} )/2\) and \(\tau= 1 - (r_{1}/r)\). Then \(\rho _{2} < r_{1} < r < \infty\) and \(\tau> 0\). By the definition of \(\rho_{2}\), we have

and hence, for all sufficiently large x,

Thus, for all sufficiently large n,

and hence

Since

by the Borel–Cantelli lemma, we have

which implies that

Letting \(r \searrow\rho_{2}\), we get

Again, by the definition of \(\rho_{2}\), we have

which is equivalent to

Then, inductively, we can choose positive integers \(m_{n}\), \(n \geq1\) such that

Then we have

Thus, by the Borel–Cantelli lemma, we get

which ensures that

Clearly, (2.3) and (2.4) follow from (3.6) and (3.7).

Case II: \(\rho_{2} = \infty\). By the definition of \(\rho_{2}\), we have

which is equivalent to

Then, inductively, we can choose positive integers \(m_{n}\), \(n \geq1\) such that

Thus

and hence by the Borel–Cantelli lemma

which ensures that

It is clear that

It thus follows from (3.8) and (3.9) that

Case III: \(\rho_{2} = 0\). Using the same argument used in the first half of the proof for Case I, we get

Letting \(r \searrow0\), we get

Thus

and hence (2.3) and (2.4) hold with \(m_{n} = n\), \(n \geq1\). □

Proof of Theorem 2.3

It follows from Theorems 2.1 and 2.2 that

Since (2.6) follows from (2.5), we only need to show that (2.6) implies (2.8). It follows from (2.6) that

Since, for \(n \geq3\),

and

it follows from (3.10) that

which is equivalent to (2.8).

For \(0 \leq\rho< \infty\), note that

We thus see that, if \(0 \leq\rho< \infty\), then (2.8) is equivalent to

(We leave it to the reader to work out the details of the proof.) We thus see that (2.8) implies (2.9) with \(L(x) = x^{\rho} \mathbb{P}(X > x)\), \(x > 0\). It is easy to verify that (2.8) follows from (2.9). This completes the proof of Theorem 2.3. □

Proof of Theorem 2.4

For fixed \(x \in(-\infty, \infty)\), write

Then

Since \(h(\cdot): [0, \infty) \rightarrow(0, \infty)\) is a monotone function with \(\lim_{x \rightarrow\infty}h(x^{2})/h(x) = 1\), \(h(\cdot)\) is a slowly varying function such that \(\lim_{x \rightarrow \infty} h(x^{r})/h(x) = 1\) \(\forall r > 0\) and hence

Clearly,

It thus follows from (2.10) that, as \(n \rightarrow\infty\),

so that

i.e., (2.11) holds. □

Proof of Theorem 2.5

Since \(\hat{\theta}_{n} = \frac{\log n}{\log\max_{1 \leq k \leq n} \vert X_{k} \vert }\), \(n \geq1\), Theorem 2.5 follows immediately from Theorems 2.1–2.3. □
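
To illustrate the consistency statement numerically, the following sketch simulates \(\hat{\theta}_{n}\) for a hypothetical Pareto-type distribution with \(\mathbb{P}(X > x) = x^{-\theta}\), \(x \geq1\), for which \(\mathbb{E} \vert X \vert ^{r} < \infty\) exactly when \(r < \theta\). The distribution, the seed, the sample sizes, and all function names are assumptions made for illustration; they do not come from the paper.

```python
import math
import random


def trunc_log(x):
    """Truncated logarithm log x = ln(max(e, x))."""
    return math.log(max(math.e, x))


def power_of_moments_estimate(sample):
    """Return log(n) / log(max_k |X_k|) for a non-empty sample."""
    return trunc_log(len(sample)) / trunc_log(max(abs(x) for x in sample))


def pareto_sample(theta, n, rng):
    """Draw n i.i.d. values with P(X > x) = x**(-theta) for x >= 1 (inverse-CDF method)."""
    # 1 - rng.random() lies in (0, 1], which avoids raising 0 to a negative power.
    return [(1.0 - rng.random()) ** (-1.0 / theta) for _ in range(n)]


rng = random.Random(2018)  # fixed seed so that the run is repeatable
theta = 2.0
for n in (10**3, 10**4, 10**5):
    estimate = power_of_moments_estimate(pareto_sample(theta, n, rng))
    print(n, round(estimate, 3))
```

In this example \(\max_{1 \leq k \leq n} X_{k}\) is of order \(n^{1/\theta}\), so the estimates should settle near θ, although the error decays only at a rate of order \(1/\log n\); a single run can therefore still be visibly off for moderate n.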

4 Conclusions

In this paper we propose the following simple point estimator of θ, the power of moments of the random variable X, and investigate its asymptotic properties:

\[\hat{\theta}_{n} = \frac{\log n}{\log\max_{1 \leq k \leq n} \vert X_{k} \vert }.\]

In particular, we show that

This means that, under very reasonable conditions on \(F(\cdot)\), \(\hat {\theta}_{n}\) is actually a consistent estimator of θ. From Remark 2.3 and Example 2.1, we see that, for a nonnegative random variable X, \(\hat{\theta}_{n}\) is a consistent estimator of θ whenever the well-known Hill estimator \(\hat{\alpha}_{n}\) is a consistent estimator of θ. However, the converse is not true.

References

[1] Hill, B.M.: A simple general approach to inference about the tail of a distribution. Ann. Stat. 3, 1163–1174 (1975)

[2] Mason, D.M.: Laws of large numbers for sums of extreme values. Ann. Probab. 10, 754–764 (1982)

[3] Chandra, T.K.: The Borel–Cantelli Lemma. Springer, Heidelberg (2012)

Acknowledgements

The authors are grateful to the referee for carefully reading the manuscript and for offering helpful suggestions and constructive criticism which enabled them to improve the paper. The research of Shuhua Chang was partially supported by the National Natural Science Foundation of China (Grant #: 91430108 and 11771322) and the research of Deli Li was partially supported by a grant from the Natural Sciences and Engineering Research Council of Canada (Grant #: RGPIN-2014-05428).

Contributions

All authors contributed equally and significantly in writing this article. All the authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chang, S., Li, D., Qi, Y. et al. A method for estimating the power of moments. J Inequal Appl 2018, 54 (2018). https://doi.org/10.1186/s13660-018-1645-7
