Abstract

Background

The need for systematic methods for reviewing evidence is continuously increasing. Evidence mapping is one emerging method. There are no authoritative recommendations for what constitutes an evidence map or what methods should be used, and anecdotal evidence suggests heterogeneity in both. Our objectives are to identify published evidence maps and to compare and contrast the presented definitions of evidence mapping, the domains used to classify data in evidence maps, and the form the evidence map takes.

Methods

We conducted a systematic review of publications that presented results with a process termed “evidence mapping” or included a figure called an “evidence map.” We identified publications from searches of ten databases through 8/21/2015, reference mining, and consulting topic experts. We abstracted the research question, the unit of analysis, the search methods and search period covered, and the country of origin. Data were narratively synthesized.

Results

Thirty-nine publications met inclusion criteria. Published evidence maps varied in their definition and the form of the evidence map. Of the 31 definitions provided, 68 % described the purpose as identification of gaps and 58 % referenced a stakeholder engagement process or user-friendly product. All evidence maps explicitly used a systematic approach to evidence synthesis. Twenty-six publications referred to a figure or table explicitly called an “evidence map,” eight referred to an online database as the evidence map, and five stated they used a mapping methodology but did not present a visual depiction of the evidence.

Conclusions

The principal conclusion of our evaluation of studies that call themselves “evidence maps” is that the implied definition of what constitutes an evidence map is a systematic search of a broad field to identify gaps in knowledge and/or future research needs that presents results in a user-friendly format, often a visual figure or graph, or a searchable database. Foundational work is needed to better standardize the methods and products of an evidence map so that researchers and policymakers will know what to expect of this new type of evidence review.

Systematic review registration

Although an a priori protocol was developed, no registration was completed; this review did not fit the PROSPERO format.

Background

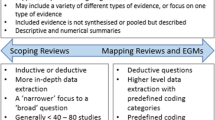

There is growing variation in evidence synthesis methodology to meet the different objectives evidence synthesis can support. The classic systematic review and meta-analysis are both rigorous and produce detailed information about narrow questions, but they are resource intense and the work burden limits the scope of what can be covered [1]. To meet a variety of user needs, offshoots of the classic model have been developed within the evidence synthesis realm; for example, rapid reviews cater to more urgent deadlines but may not adhere to all the methods of a systematic review [2], scoping reviews accommodate larger bodies of literature for which detailed synthesis is not needed [3], and realist reviews specialize in exploring how complex interventions work and frequently include evidence excluded from classic systematic reviews [4].

These new variants on the classical systematic review are at various phases in development. Determining the unique contributions and methods of each of these new synthesis method offshoots is a challenge. Systematic reviews and meta-analyses have a standardized process for conduct and reporting, codified in the Institute of Medicine standards and Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) reporting guidelines [5, 6]. The Realist And Meta-narrative Evidence Syntheses: Evolving Standards (RAMESES) publication standards for realist syntheses and meta-narrative reviews were published in 2013 [7, 8], and scoping review reporting guidance is underway as of 2014 using the Enhancing the QUALity and Transparency Of health Research (EQUATOR) Network [3]. Rapid reviews, also sometimes referred to as evidence summaries [9], have received increased interest, with a journal series and dedicated summit in 2015 [10, 11], as well as multiple articles on rapid review methodology [9, 12, 13], but no official standards have been released.

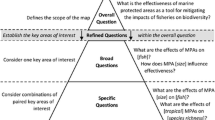

Evidence mapping is the newest of these evidence review products. In 2002, there were no published evidence maps, and as recently as 2010, only ten such publications could be identified. Evidence mapping has yet to undergo the scrutiny and development applied to these other methodologies, and it is not clear whether authors who use the term are using a methodology distinct from other developing methods. Both evidence maps and scoping reviews set out to map the literature. Colquhoun and colleagues recently described a scoping review as “a form of knowledge synthesis that addresses an exploratory research question aimed at mapping key concepts, types of evidence, and gaps in research related to a defined area or field by systematically searching, selecting, and synthesizing existing knowledge” [3]. They recommend a process described by Arksey and colleagues and enhanced by Levac and colleagues; these steps were also referenced in other publications comparing methods of evidence synthesis as the standard for scoping reviews [14–16]. Thus, there is some consensus surrounding the scoping review method and its components. A 2013 publication attempting to distinguish between the two methodologies concluded that scoping reviews include “a descriptive narrative summary of the results” whereas evidence maps identify evidence gaps, and that both use a tabular format to depict a summary of literature characteristics [14]. Furthermore, multiple publications lay out differing recommendations for evidence maps, complicating this effort [14, 17, 18]. In addition, earlier attempts by Schmucker [14] and Snilstveit [15] to characterize evidence map methods and products relied on small numbers of evidence map publications and reached conflicting conclusions. Schmucker and colleagues' review included seven evidence maps [14]; Snilstveit and colleagues discussed three examples while primarily focusing on distinguishing these from what they termed an evidence gap map [15]. Since then, more evidence maps have been published, increasing the ability to determine what constitutes an “evidence map,” in terms of either methods or products. In 2014 alone, eleven evidence maps were published.

Our objectives are to identify and systematically review published evidence maps, to assess their commonality and heterogeneity, and to determine whether additional work is warranted to standardize methods and reporting. We compare and contrast the presented definitions of evidence mapping, the domains used to classify data in evidence maps, and the form the evidence map takes.

Methods

Literature search

The librarian on our team, RS, conducted an electronic search of ten databases (PubMed, PsycINFO, Embase, CINAHL, Cochrane Database of Systematic Reviews (CDSR), Cochrane Central Register of Controlled Trials (CENTRAL), Cochrane Database of Abstracts of Reviews of Effects (DARE), Cochrane Methodology Register (CMR), SCOPUS, and Web of Science) from inception to 8/21/2015 for publications relating to our objective by using the search terms "evidence map" OR "evidence mapping" OR "evidence maps" OR "mapping evidence" OR “evidence map*” in a search of titles and abstracts. Because evidence mapping is a relatively new method in the biomedical literature synthesis repertoire, no Medical Subject Headings (MeSH) term is available. There were no language restrictions or restrictions on study design. Additional studies were identified through reference mining of identified studies and expert recommendations.

Study selection

Two reviewers independently screened titles and abstracts for relevance and obtained full text articles of publications deemed potentially relevant by at least one reviewer. Full text articles were screened against predetermined inclusion criteria by two independent reviewers, and any disagreements were reconciled through team discussion. To be included, publications must have presented results (i.e., no protocols were included) using a process called evidence mapping or a figure called an evidence map. Because of the methodological focus of this review, any patient population, intervention, comparator, outcome, and setting were included.

Data abstraction

For all included publications, the following data were abstracted: use and definition of the term “evidence map,” research question or aim, search methods and years, number of citations included in the evidence map, and country of origin. The unit of analysis was also abstracted, since some maps considered all literature citations for inclusion, whereas others included systematic reviews only or aggregated all publications originating from the same study into one unit. For publications presenting maps, the domains used to classify studies in the map were also abstracted (e.g., interventions, outcomes, and literature size). Data were abstracted by one reviewer using a standardized form and verified by the second reviewer. The form was piloted and refined by both reviewers prior to abstraction. We applied no quality assessment criteria since we are unaware of any that exist for evidence map methods.

Data synthesis

Data were narratively synthesized in three sections, discussing key characteristics of definitions presented, domains used to classify literature in the mapping process (e.g., interventions), and what form an evidence map or evidence mapping methodology takes. Within this latter section, publications were grouped by whether they (1) presented a figure or table explicitly called an “evidence map” or called the results evidence mapping in the publication itself, (2) referred to an online database as the evidence map, or (3) said they used a mapping methodology but did not present a figure or table. No statistical analysis was planned or executed, given the focus on reporting of methods rather than a specific outcome and the heterogeneous nature of the included health topics. We created tables to summarize data on included articles to support the narrative synthesis.

Results

The total search results identified 145 titles through 8/21/2015. Reference mining and expert recommendations yielded one title each for a total of 147 titles for screening. Of these titles, we included 53 potentially relevant publications after title and abstract screening. Fourteen of these publications were rejected after full text review. Four of the excluded publications identified themselves as another type of synthesis (i.e., scoping review, realist synthesis, or systematic review) in their titles [19–22]. Three excluded publications presented discussions of evidence mapping and have been incorporated into discussion where relevant [14, 15, 23]. Two publications used the term “evidence map” outside the evidence synthesis methodology context (e.g., in the context of reporting the results of a hazard assessment) [24, 25]. Another two publications used evidence from an evidence map that was created in a separate project [26, 27], one full text publication was not available [28], one publication was a duplicate citation with another previously screened citation [29], and the final study excluded explicitly stated that they used a standard systematic review protocol developed according to systematic review guidelines [30]. Having met inclusion criteria, thirty-nine publications were included at the full text review. Of these, 34 publications presented evidence maps explicitly and five publications used a mapping methodology without presenting a map [18, 31–34] (see Fig. 1 for literature flow and Additional files 1 and 2 for PRISMA checklist and flow). The publications with explicit maps included those that presented the map in the publication (N = 26) [35–60], and those that discussed a map only available online (N = 8) [16, 17, 61–66].
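The literature flow reported above can be checked with simple arithmetic. The following is a minimal sketch that tallies only the counts stated in this section; the variable names and exclusion labels are ours and purely illustrative.

```python
# Counts taken from the text of this section; names are illustrative only.
database_titles = 145
reference_mining = 1
expert_recommendations = 1
screened = database_titles + reference_mining + expert_recommendations

full_text_reviewed = 53

# Reasons for exclusion at full text review, as listed above.
excluded = {
    "identified as another synthesis type": 4,
    "discussion of evidence mapping": 3,
    "term used outside evidence synthesis": 2,
    "used a map created in a separate project": 2,
    "full text not available": 1,
    "duplicate citation": 1,
    "standard systematic review protocol": 1,
}
total_excluded = sum(excluded.values())
included = full_text_reviewed - total_excluded

# Included publications split by the form the map takes.
map_in_publication = 26
map_online_only = 8
methodology_only = 5
forms_total = map_in_publication + map_online_only + methodology_only

print(f"screened: {screened}")
print(f"excluded at full text: {total_excluded}")
print(f"included: {included}")
print(f"forms sum to included: {forms_total == included}")
```

Each stage of the flow reconciles with the next, which is the same consistency check a PRISMA flow diagram is meant to make visible.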

Included publications came from the USA (N = 19), Australia (N = 10), the UK (N = 7), Canada (N = 2), and Japan (N = 1). Most publications are very recent, with no publications before 2003 (see Fig. 2). Since the last systematic review of evidence maps, conducted by Schmucker and colleagues during 2013, an additional 24 evidence maps have been published, doubling the literature quantity. More details on data extracted from included publications can be found in Table 1.

Although a variety of topics are represented in the 39 included evidence map publications, four broad topical areas account for nearly three quarters of the publications: mental health and learning disability related topics account for 11 of the publications (28 %), complementary and alternative medicine and nutrition-related topics account for seven publications each (18 % each), and traumatic brain injury and spinal cord injury account for four publications (10 %). Within the mental health group, four of the 11 publications come from the headspace research group, described in more detail below. Six of the seven complementary and alternative medicine publications come from the Veterans Affairs Evidence Synthesis Program, of which the authors of this review are a part. Three of the seven nutrition-related publications come from a research group based at Tufts University, and all four of the traumatic brain injury/spinal cord injury publications are a part of the Global Evidence Mapping (GEM) project. Taken together, these four research groups account for 44 % of the published evidence maps included in this review (17/39).

Definition of evidence map

Of the 39 included studies, eight were associated with two evidence mapping projects: GEM [16, 62, 64, 65] and headspace [17, 61, 63, 66]; these publications were grouped by project in the following discussion since publications from the same project employ the same definition. In two instances, there were two publications from a single mapping project, and these were also grouped, resulting in two evidence maps from these four publications [41, 48, 49, 58]. One publication provided neither definition nor citation [32]. Most studies did not explicitly outline a definition of “evidence map,” and often the research aims were used to capture an implicit definition (see Table 1 for research questions or aims for all included studies).

Thus, of the 31 evidence maps with elements of a definition, the most commonly stated component of the definition was a review of evidence to identify gaps or future research needs (68 %, 21/31). Another common component was that the process engage the audience and/or produce user-friendly products (58 %, 18/31). In emphasizing the user-friendly aspect, one definition stated that evidence maps should “provide access to user-friendly summaries of the included studies” [15], while another described evidence maps as “mak[ing] these vast bodies of literature accessible, digestible, and useable” [44]. Many definitions also qualified evidence maps as capturing a broad field (55 %, 17/31). Two components were less often explicitly stated in the definition: having a systematic process and having a visual depiction (48 %, 15/31 and 23 %, 7/31, respectively). However, all included publications used a systematic process (i.e., documented search strategy and inclusion criteria), and most incorporated a visual depiction of the data as well (84 %, 26/31).

Only one evidence map definition explicitly stated all five of these components (i.e., identify gaps or needs, audience engagement/user-friendly products, broad field, systematic process, and visual depiction) [49]. Three other evidence maps met all criteria when their inclusion of a visual depiction was considered a part of the definition implicitly [39, 57, 63]. Seventeen of the evidence maps included four of the five definition components, including those that used a systematic process or visual depiction without explicitly stating this [16, 35–38, 40–42, 44–47, 51–53, 56]. Thus, 68 % (21/31) evidence maps with definitions included four or five of the five most common components.
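As a quick check on the proportion reported above, the sketch below adds up the maps that met four or five of the five components. It uses only the counts stated in this section; the variable names are illustrative.

```python
TOTAL_MAPS = 31  # evidence maps with elements of a definition

# Number of maps by how many of the five components they met, as reported above.
all_five_explicit = 1
all_five_with_implicit_visual = 3
four_of_five = 17

four_or_five = all_five_explicit + all_five_with_implicit_visual + four_of_five

# Share of maps, rounded to the nearest whole percent.
share = round(100 * four_or_five / TOTAL_MAPS)
print(f"{four_or_five}/{TOTAL_MAPS} maps ({share} %) met four or five components")
```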

Nearly half of the 31 evidence maps did not provide any citations when referencing evidence mapping methodology (N = 15). Citations occurring more than twice included Arksey and colleagues [67] (N = 8), the GEM project [16] (N = 7), the headspace project [17] (N = 5), and Katz and colleagues [18] (N = 3). The publication by Arksey and colleagues provides a description of scoping review methods but was still the most cited article. The GEM and headspace projects are both large initiatives that have funded the exploration of a very broad topic, neurotrauma and mental health disorders affecting youth, respectively. The publication by Katz and colleagues, the oldest of the publications we identified, was published in 2003, at least 4 years before any of the other identified mapping publications [18]. This publication introduces the term, as well as a nine-step process: identify and convene the appropriate experts, apply expert opinion to define the region of evidence to be mapped, establish the coordinates to be used for positioning within the map, define the map boundaries in terms of pertinent coordinates, search the relevant “terrain,” draw the map, study the map to identify any needed revisions and to establish priorities for detailed assessments, perform detailed assessments in priority areas, and generate reports summarizing the “lay of the land.”

Domains used to classify data in evidence maps

Only publications that presented an evidence map as a figure or online database were considered relevant in determining domains, or data elements used to classify literature, since these publications were the ones with data presented for such domains. Of the 34 publications, 26 presented the maps in the publication itself, and eight were associated with an online map. As in the definition section, duplicate publications from the same evidence mapping project were collapsed together, leaving a total of 26 evidence maps with domains for this synthesis.

Thirteen evidence maps categorized their literature by the amount of literature relevant to a particular domain (literature size domain, 50 %, see Table 2). Other popular domains included intervention (N = 12, 46 %), study design (N = 10, 38 %), sample size (N = 10, 38 %), disorder/condition (N = 9, 35 %), and outcomes (N = 9, 35 %). Some maps grouped literature in subdomains within these larger domains, such as groups of conditions and then individual conditions within those groups. The average number of domains captured in an evidence map was four, the smallest number being two (i.e., outcomes and elements of service delivery) and the largest being seven (i.e., population characteristics, intervention, outcomes, setting, study design, sample size, and disorder/condition).
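The domain frequencies above follow directly from the counts out of 26 evidence maps. A minimal sketch of that calculation, using only the figures given in this section (the function and variable names are illustrative):

```python
TOTAL_MAPS = 26  # evidence maps with domains

# Number of maps using each domain, as reported above.
domain_counts = {
    "literature size": 13,
    "intervention": 12,
    "study design": 10,
    "sample size": 10,
    "disorder/condition": 9,
    "outcomes": 9,
}

def percent(count, total=TOTAL_MAPS):
    """Share of maps using a domain, rounded to the nearest whole percent."""
    return round(100 * count / total)

domain_shares = {domain: percent(n) for domain, n in domain_counts.items()}

# List domains from most to least frequently used.
for domain, share in sorted(domain_shares.items(), key=lambda kv: -kv[1]):
    print(f"{domain:<20} {domain_counts[domain]:>2}  {share} %")
```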

Form the evidence map takes once completed

Three main versions of an evidence map were found: publications with a visual representation of data in the publication (N = 26); publications that referenced a database housing data virtually that can be queried (N = 8); and publications that employed a process or methodology leading to recommendations or synthesized result (N = 5).

Visual representation of data

Twenty-six publications explicitly include figure(s) or table(s) which are referred to as evidence maps. They display a range of potential map formats, some quite similar to a classic systematic review evidence table or literature flow diagram. These formats incorporate various numbers of characteristics and types of characteristics. Details of map formats can be found in Table 2. As in prior sections, two projects with two publications each are collapsed into two groups [41, 48, 49, 58], and thus, 24 evidence maps are discussed in this section.

The most common format for the main findings among the 24 published evidence maps was some variant of a cross-tabulation, with counts or sums of publications arrayed across various domains (N = 10, 42 %). Eight bubble plots (33 %), two flow charts (8 %), and two bar charts (8 %) were used as the main findings diagram in evidence mapping publications. The remaining two publications (8 %) arrayed the included studies on a conceptual framework and mapped the evidence on the relationships described in the model. Most publications presented data in more than one table or graphic (N = 16, 67 %), either with subsets of data in each map (e.g., one table for condition A and another for condition B) or with different domains covered in the different maps (e.g., one graphic describing population demographics covered by the literature and another with outcomes cross-tabulated with interventions of included studies). Figure 3 presents a simulated bubble plot style evidence map as an example of one of the more commonly used formats.

Fig. 3 Evidence map of acupuncture for pain. The bubble plot shows an estimate of the evidence base for pain-related indications judging from systematic reviews and recent large trials. The plot depicts the estimated number of RCTs (size of the bubble), the effect size (x-axis), and the strength of evidence (y-axis)
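The cross-tabular format, the most common one found, can be illustrated with a short sketch. The study records below are hypothetical and are not data from any included evidence map; the two domains (intervention and outcome) are simply examples of domains named in this review.

```python
from collections import Counter

# Hypothetical study records (not data from any included evidence map).
studies = [
    {"intervention": "Intervention A", "outcome": "Pain"},
    {"intervention": "Intervention A", "outcome": "Pain"},
    {"intervention": "Intervention A", "outcome": "Function"},
    {"intervention": "Intervention B", "outcome": "Pain"},
    {"intervention": "Intervention B", "outcome": "Quality of life"},
]

# Count publications in each (intervention, outcome) cell.
cells = Counter((s["intervention"], s["outcome"]) for s in studies)

rows = sorted({s["intervention"] for s in studies})
cols = sorted({s["outcome"] for s in studies})

# Print the cross-tabulation, one row per intervention.
print(f"{'':<16}" + "".join(f"{c:>17}" for c in cols))
for r in rows:
    print(f"{r:<16}" + "".join(f"{cells[(r, c)]:>17}" for c in cols))

# Cells with no publications are the evidence gaps the map makes visible.
gaps = [(r, c) for r in rows for c in cols if cells[(r, c)] == 0]
print("gaps:", gaps)
```

Empty cells in such a table correspond to the gaps in knowledge that most definitions name as the purpose of an evidence map.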

Map as online database

Eight publications discuss evidence maps in the context of online searchable databases. Four publications discuss GEM [16, 62, 64, 65] and four publications discuss headspace [17, 61, 63, 66]. Of the four publications discussing GEM, one provides the overview of the initiative and lays out the GEM mapping method [16], one discusses search strategies that the GEM group has developed [65], and another discusses the process of developing priority research areas for which evidence maps can be generated [62]. For this group of publications, there is one product: the searchable online GEM database.

In the case of the headspace publications, there is an overview publication that lays out the history and scope of the headspace program and their mapping methods [17], but each of the three additional publications presents data on a subsection of the overall evidence map [61, 63, 66]. While the overall program aims to improve evidence-based practice in youth mental health, the subtopics of interventions for depression, suicidal and self-harming behaviors, and psychosis are explored in depth in the latter publications, making it less clear if the overall database is the evidence map, or if the data presented within the topic-specific publications are the evidence maps. Although none of the figures are titled “evidence map,” they appear similar to some of those included in the prior section.

Use of evidence mapping as methodology

Five publications used a mapping methodology without presenting an explicit map [18, 31–34]. Whether or not they produced either of the first two forms of evidence map, all included publications presented or discussed this third form, evidence mapping as a methodology. The process, either discussed or executed, usually included a search of multiple databases with standard search terms, discussion of inclusion criteria, and inclusion of stakeholders in defining and/or refining the scope of the product.

Discussion

The principal conclusion of our evaluation of studies that call themselves “evidence maps” is that the implied definition, as defined by a majority of studies reporting individual components, of what constitutes an evidence map is a systematic search of a broad field to identify gaps in knowledge and/or future research needs that presents results in a user-friendly format, often a visual figure or graph, or a searchable database. However, only four of 31 studies (13 %) satisfied all five of these components. Thus, the heterogeneity in methods and stated definitions means that stakeholders cannot necessarily know what to expect if they commission an evidence map or seek to identify existing maps.

Of all literature synthesis methods, this evidence mapping definition shares many similarities with the definition or goals of a scoping review. Both seek to review broad topics, often with the stated goal of identifying research gaps and areas for future research. In evidence mapping publications, the most often cited article for evidence mapping methods is a scoping methods publication [67]. Compared to a scoping review, using the framework suggested by Colquhoun and colleagues [3], the methods used in the evidence mapping publications are, on the whole, very similar. The main distinctions seem to be the involvement of stakeholders early in the research process, the rigor of the search strategy (e.g., all mapping publications describing systematic searches of online databases), and the production of a visual or searchable database, with the stated goal that such products are more “user-friendly” or digestible. Because neither methodology has established reporting guidelines, it is difficult to determine where one method ends and the other begins.

In terms of the “map,” the most common ways of organizing the data into a visual representation were using a cross-tabular format and categorizing literature according to interventions and/or study designs present. However, the domains chosen to display and means of presentation will necessarily vary for any particular map according to the aims of the review.

Limitations

Because there is no standard search term or repository for evidence maps, we may not have been able to identify all evidence maps through our search methods. Thus, our findings may be biased to represent those maps that were readily available on the ten searched databases or were cited in other sources we found.

Future research

With growing numbers of publications using the term “evidence map,” clarifying scoping and evidence mapping methods is an important topic for stakeholders so that they will know what to expect when commissioning, conducting, and interpreting results of an “evidence map.” A key part of this effort will be developing reporting guidelines for these methods. This is already underway for scoping reviews [3], but given the similarities between the two methods, working on both methods in tandem may produce clearer and more distinct results.

A key strength of the evidence mapping method is the use of visuals or interactive, online databases. Keeping these data up to date and available online may prove to be a challenge in rapidly growing fields, and new audiences may be exposed to these resources who are unfamiliar with how to best make use of the products. As evidence synthesis methods evolve to meet modern demands, they must also meet new challenges.

Conclusions

The principal conclusion of our evaluation is that the implied definition of what constitutes an evidence map is a systematic search of a broad field to identify gaps in knowledge and/or future research needs that presents results in a user-friendly format, often a visual figure or graph, or a searchable database. However, there is diversity in methods and stated definitions, so that, as it stands now, stakeholders cannot necessarily know what to expect if they commission an evidence map or seek to identify existing maps.

Abbreviations

- GEM: Global Evidence Mapping

- MeSH: Medical Subject Headings

- PROSPERO: International Prospective Register of Systematic Reviews

References

Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010;7(9):e1000326.

Tricco AC, Antony J, Zarin W, Strifler L, Ghassemi M, Ivory J, et al. A scoping review of rapid review methods. BMC Med. 2015;13(1):224.

Colquhoun HL, Levac D, O'Brien KK, Straus S, Tricco AC, Perrier L, et al. Scoping reviews: time for clarity in definition, methods, and reporting. J Clin Epidemiol. 2014;67(12):1291–4.

Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review–a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005;10 suppl 1:21–34.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9.

Institute of Medicine. Finding what works in health care: standards for systematic reviews. Washington, DC; 2011. Available from: https://iom.nationalacademies.org/Reports/2011/Finding-What-Works-in-Health-Care-Standards-for-Systematic-Reviews/Standards.aspx. Accessed 9 February 2016.

Wong G, Greenhalgh T, Westhorp G, Buckingham J, Pawson R. RAMESES publication standards: realist syntheses. BMC Med. 2013;11(1):21.

Wong G, Greenhalgh T, Westhorp G, Buckingham J, Pawson R. RAMESES publication standards: meta-narrative reviews. BMC Med. 2013;11(1):20.

Khangura S, Konnyu K, Cushman R, Grimshaw J, Moher D. Evidence summaries: the evolution of a rapid review approach. Syst Rev. 2012;1(1):1–9.

Polisena J, Garritty C, Kamel C, Stevens A, Abou-Setta AM. Rapid review programs to support health care and policy decision making: a descriptive analysis of processes and methods. Syst Rev. 2015;4(1):1–7.

Varker T, Forbes D, Dell L, Weston A, Merlin T, Hodson S, et al. Rapid evidence assessment: increasing the transparency of an emerging methodology. J Eval Clin Pract. 2015.

Ganann R, Ciliska D, Thomas H. Expediting systematic reviews: methods and implications of rapid reviews. Implement Sci. 2010;5(1):56.

Harker J, Kleijnen J. What is a rapid review? A methodological exploration of rapid reviews in Health Technology Assessments. Int J Evid‐Based Healthc. 2012;10(4):397–410.

Schmucker C, Motschall E, Antes G, Meerpohl JJ. Methods of evidence mapping. A systematic review. Bundesgesundheitsblatt, Gesundheitsforschung, Gesundheitsschutz. 2013;56(10):1390–7. doi:10.1007/s00103-013-1818-y.

Snilstveit B, Vojtkova M, Bhavsar A, Gaarder M. Evidence gap maps—a tool for promoting evidence-informed policy and prioritizing future research. 2013.

Bragge P, Clavisi O, Turner T, Tavender E, Collie A, Gruen RL. The global evidence mapping initiative: scoping research in broad topic areas. BMC Med Res Methodol. 2011;11:92. doi:10.1186/1471-2288-11-92.

Hetrick SE, Parker AG, Callahan P, Purcell R. Evidence mapping: illustrating an emerging methodology to improve evidence-based practice in youth mental health. J Eval Clin Pract. 2010;16(6):1025–30. doi:10.1111/j.1365-2753.2008.01112.x.

Katz DL, Williams AL, Girard C, Goodman J, Comerford B, Behrman A, et al. The evidence base for complementary and alternative medicine: methods of evidence mapping with application to CAM. Altern Ther Health Med. 2003;9(4):22–30.

Costello LA, Lithander FE, Gruen RL, Williams LT. Nutrition therapy in the optimisation of health outcomes in adult patients with moderate to severe traumatic brain injury: findings from a scoping review. Injury. 2014. doi:10.1016/j.injury.2014.06.004.

Brown HE, Atkin AJ, Panter J, Corder K, Wong G, Chinapaw MJ, et al. Family-based interventions to increase physical activity in children: a meta-analysis and realist synthesis protocol. BMJ Open. 2014;4(8):e005439. doi:10.1136/bmjopen-2014-005439.

Boeni F, Spinatsch E, Suter K, Hersberger KE, Arnet I. Effect of drug reminder packaging on medication adherence: a systematic review revealing research gaps. Syst Rev. 2014;3:29. doi:10.1186/2046-4053-3-29.

Althuis MD, Weed DL, Frankenfeld CL. Evidence-based mapping of design heterogeneity prior to meta-analysis: a systematic review and evidence synthesis. Syst Rev. 2014;3:80. doi:10.1186/2046-4053-3-80.

John BE. Evidence-based practice in human-computer interaction and evidence maps. Proceedings of the 2005 workshop on Realising evidence-based software engineering. St. Louis, Missouri: ACM; 2005:1–5.

Wiedemann P, Schutz H, Spangenberg A, Krug HF. Evidence maps: communicating risk assessments in societal controversies: the case of engineered nanoparticles. Risk Anal. 2011;31(11):1770–83. doi:10.1111/j.1539-6924.2011.01725.x.

Chorpita BF, Daleiden EL. Mapping evidence-based treatments for children and adolescents: application of the distillation and matching model to 615 treatments from 322 randomized trials. J Consult Clin Psychol. 2009;77(3):566–79. doi:10.1037/a0014565.

Bayley MT, Teasell RW, Wolfe DL, Gruen RL, Eng JJ, Ghajar J, et al. Where to build the bridge between evidence and practice?: results of an international workshop to prioritize knowledge translation activities in traumatic brain injury care. J Head Trauma Rehabil. 2014;29(4):268–76. doi:10.1097/htr.0000000000000053.

Scott J. What interventions can be used in high risk offspring? An evidence map of currently available therapies, and their potential for preventing disease onset or progression. Int J Neuropsychopharmacol. 2014;17:14.

Clavisi O, Gruen R, Bragge P, Tavender E. A systematic method of identifying and prioritising research questions. Oral presentation at the 16th Cochrane Colloquium: Evidence in the era of globalisation; 2008 Oct 3–7; Freiburg, Germany [abstract]. Zeitschrift für Evidenz, Fortbildung und Qualität im Gesundheitswesen 2008.

Liu P, Parker A, Hetrick S, Purcell R. Evidence mapping for early psychotic disorders in young people. Schizophr Res. 2010;117(2–3):438–9.

Voigt-Radloff S, Ruf G, Vogel A, van Nes F, Hull M. Occupational therapy for elderly: evidence mapping of randomised controlled trials from 2004–2012. Z Gerontol Geriatr. 2013. doi:10.1007/s00391-013-0540-6.

Chapman E, Reveiz L, Chambliss A, Sangalang S, Bonfill X. Cochrane systematic reviews are useful to map research gaps for decreasing maternal mortality. J Clin Epidemiol. 2013;66(1):105–12. doi:10.1016/j.jclinepi.2012.09.005.

Wysocki A, Butler M, Shamliyan T, Kane RL. Whole-body vibration therapy for osteoporosis. 2011. Rockville MD.

Curran C, Burchardt T, Knapp M, McDaid D, Li B. Challenges in multidisciplinary systematic reviewing: a study on social exclusion and mental health policy. Social Policy Adm. 2007;41(3):289–312.

Frampton GK, Harris P, Cooper K, Cooper T, Cleland J, Jones J, et al. Educational interventions for preventing vascular catheter bloodstream infections in critical care: evidence map, systematic review and economic evaluation. Health Technol Assess. 2014;18(15):1–365. doi:10.3310/hta18150.

Althuis MD, Weed DL. Evidence mapping: methodologic foundations and application to intervention and observational research on sugar-sweetened beverages and health outcomes. Am J Clin Nutr. 2013;98(3):755–68. doi:10.3945/ajcn.113.058917.

Nihashi T, Dahabreh IJ, Terasawa T. PET in the clinical management of glioma: evidence map. AJR Am J Roentgenol. 2013;200(6):W654–60. doi:10.2214/ajr.12.9168.

Jaramillo A, Welch VA, Ueffing E, Gruen RL, Bragge P, Lyddiatt A, et al. Prevention and self-management interventions are top priorities for osteoarthritis systematic reviews. J Clin Epidemiol. 2013;66(5):503–10.e4. doi:10.1016/j.jclinepi.2012.06.017.

Greer N, Brasure M, Wilt TJ. Wheeled mobility (wheelchair) service delivery. 2012. Rockville MD.

Chung M, Dahabreh IJ, Hadar N, Ratichek SJ, Gaylor JM, Trikalinos TA, et al. Emerging MRI Technologies for Imaging Musculoskeletal Disorders Under Loading Stress [Internet]. Rockville (MD): Agency for Healthcare Research and Quality (US); 2011. http://www.ncbi.nlm.nih.gov/books/NBK82287/. Accessed 9 February 2016.

Anstee S, Price A, Young A, Barnard K, Coates B, Fraser S, et al. Developing a matrix to identify and prioritise research recommendations in HIV prevention. BMC Public Health. 2011;11:381. doi:10.1186/1471-2458-11-381.

Hempel S, Taylor SL, Solloway MR, Miake-Lye IM, Beroes JM, Shanman R, et al. Evidence Map of Acupuncture [Internet]. Washington (DC): Department of Veterans Affairs; 2014 Jan. http://www.ncbi.nlm.nih.gov/books/NBK185072/. Accessed 9 February 2016.

DeFrank JT, Barclay C, Sheridan S, Brewer NT, Gilliam M, Moon AM, et al. The psychological harms of screening: the evidence we have versus the evidence we need. J Gen Intern Med. 2014. doi:10.1007/s11606-014-2996-5.

Brennan LK, Brownson RC, Orleans CT. Childhood obesity policy research and practice: evidence for policy and environmental strategies. Am J Prev Med. 2014;46(1):e1–e16. doi:10.1016/j.amepre.2013.08.022.

Bailey AP, Parker AG, Colautti LA, Hart LM, Liu P, Hetrick SE. Mapping the evidence for the prevention and treatment of eating disorders in young people. J Eat Disord. 2014;2:5. doi:10.1186/2050-2974-2-5.

Berger S, Wang D, Sackey J, Brown C, Chung M. Sugars and health: Applying evidence mapping techniques to assess the evidence. FASEB Journal. 2014;28(1):Supplement 630.10.

Bonell C, Jamal F, Harden A, Wells H, Parry W, Fletcher A, et al. Public Health Research. Systematic review of the effects of schools and school environment interventions on health: evidence mapping and synthesis. Southampton (UK): NIHR Journals Library; 2013.

Coast E, Leone T, Hirose A, Jones E. Poverty and postnatal depression: a systematic mapping of the evidence from low and lower middle income countries. Health Place. 2012;18(5):1188–97.

Coeytaux RR, McDuffie J, Goode A, Cassel S, Porter WD, Sharma P, et al. Evidence map of yoga for high-impact conditions affecting veterans. Washington (DC): Department of Veterans Affairs (US); 2014.

Duan-Porter W, Coeytaux RR, McDuffie J, Goode A, Sharma P, Mennella H, et al. Evidence map of yoga for depression, anxiety and post-traumatic stress disorder. J Phys Act Health. 2015. doi:10.1123/jpah.2015-0027.

El-Behadli AF, Neger EN, Perrin EC, Sheldrick RC. Translations of developmental screening instruments: an evidence map of available research. J Dev Behav Pediatr. 2015;36(6):471–83. doi:10.1097/dbp.0000000000000193.

Hempel S, Taylor SL, Marshall NJ, Miake-Lye IM, Beroes JM, Shanman R, et al. Evidence map of mindfulness. VA evidence-based synthesis program reports. Washington (DC): Department of Veterans Affairs (US); 2014.

Hempel S, Taylor SL, Solloway MR, Miake-Lye IM, Beroes JM, Shanman R, et al. Evidence map of Tai Chi. Washington (DC): Department of Veterans Affairs (US); 2014.

Hitch D. Better access to mental health: mapping the evidence supporting participation in meaningful occupations. Advances in Mental Health. 2012;10(2):181–9.

Kadiyala S, Harris J, Headey D, Yosef S, Gillespie S. Agriculture and nutrition in India: mapping evidence to pathways. Ann N Y Acad Sci. 2014;1331:43–56. doi:10.1111/nyas.12477.

Northway R, Davies R, Jenkins R, Mansell I. Evidencing good practice in adult protection: informing the protection of people with learning disabilities from abuse. J Adult Prot. 2005;7(2):28–36.

Sawicki C, Livingston K, Obin M, Roberts S, Chung M, McKeown N. Dietary fiber and the human gut microbiome: application of evidence mapping methodology. FASEB Journal. 2015;29(1):Supplement 736.27.

Singh K, Ansari M, Galipeau J, Garritty C, Keely E, Malcolm J, et al. An evidence map of systematic reviews to inform interventions in prediabetes. Can J Diabetes. 2012;36(5):281–91.

Taylor S, Hempel S, Solloway M, Miake-Lye I, Beroes J, Shekelle P. An evidence map of the effects of acupuncture. J Altern Complement Med. 2014;20(5):A91–2.

Vallarino M, Henry C, Etain B, Gehue LJ, Macneil C, Scott EM, et al. An evidence map of psychosocial interventions for the earliest stages of bipolar disorder. Lancet Psychiatry. 2015;2(6):548–63.

Wang DD, Shams-White M, Bright OJ, Chung M. Low-calorie sweeteners and health. FASEB Journal. 2015;29(1):Supplement 587.12.

De Silva S, Parker A, Purcell R, Callahan P, Liu P, Hetrick S. Mapping the evidence of prevention and intervention studies for suicidal and self-harming behaviors in young people. Crisis. 2013;34(4):223–32. doi:10.1027/0227-5910/a000190.

Clavisi O, Bragge P, Tavender E, Turner T, Gruen RL. Effective stakeholder participation in setting research priorities using a Global Evidence Mapping approach. J Clin Epidemiol. 2013;66(5):496–502.e2. doi:10.1016/j.jclinepi.2012.04.002.

Callahan P, Liu P, Purcell R, Parker AG, Hetrick SE. Evidence map of prevention and treatment interventions for depression in young people. Depress Res Treat. 2012;2012:820735. doi:10.1155/2012/820735.

Bragge P, Chau M, Pitt VJ, Bayley MT, Eng JJ, Teasell RW, et al. An overview of published research about the acute care and rehabilitation of traumatic brain injured and spinal cord injured patients. J Neurotrauma. 2012;29(8):1539–47. doi:10.1089/neu.2011.2193.

Parkhill AF, Clavisi O, Pattuwage L, Chau M, Turner T, Bragge P, et al. Searches for evidence mapping: effective, shorter, cheaper. J Med Libr Assoc. 2011;99(2):157–60. doi:10.3163/1536-5050.99.2.008.

Liu P, Parker AG, Hetrick SE, Callahan P, de Silva S, Purcell R. An evidence map of interventions across premorbid, ultra-high risk and first episode phases of psychosis. Schizophr Res. 2010;123(1):37–44. doi:10.1016/j.schres.2010.05.004.

Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32.

Acknowledgements

We were funded by the VA Evidence Synthesis Program.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

IML participated in the conception, design, data acquisition, and data analysis and drafted the manuscript. PGS participated in the conception, design, data analysis, and drafting of the manuscript. SH participated in the data interpretation and revising the manuscript. RS participated in data acquisition and helped to revise the manuscript. All authors read and approved the final manuscript.

Additional files

Additional file 1: PRISMA Checklist. (DOC 63 kb)

Additional file 2: PRISMA Flow. (DOC 57 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Miake-Lye, I.M., Hempel, S., Shanman, R. et al. What is an evidence map? A systematic review of published evidence maps and their definitions, methods, and products. Syst Rev 5, 28 (2016). https://doi.org/10.1186/s13643-016-0204-x
