Abstract

Contrast enhancement algorithms have evolved over the last decades to meet the requirements of their objectives. There are two main objectives when enhancing the contrast of an image: (i) improving its appearance for visual interpretation and (ii) facilitating or increasing the performance of subsequent tasks (e.g., image analysis, object detection, and image segmentation). Most contrast enhancement techniques are based on histogram modifications, which can be performed globally or locally. Contrast Limited Adaptive Histogram Equalization (CLAHE) is a method that can overcome the limitations of global approaches by performing local contrast enhancement. However, this method relies on two essential hyperparameters: the number of tiles and the clip limit. An improper hyperparameter selection may heavily degrade the image quality. Considering the lack of methods to efficiently determine these hyperparameters, this article presents a learning-based hyperparameter selection method for the CLAHE technique. The proposed supervised method was built and evaluated using contrast distortions from well-known image quality assessment datasets. We also introduce a more challenging dataset containing over 6200 images with a large range of contrast and intensity variations. The results show the efficiency of the proposed approach in predicting CLAHE hyperparameters, with RMSE as low as 0.014 and R2 as high as 0.935. Our method also outperforms both evaluated baselines by enhancing image contrast while keeping its natural aspect.


1 Introduction

Image enhancement consists of image quality improvement processes, allowing a better visual and computational analysis [1]. It is widely used in several applications due to its capability to overcome some of the limitations presented by image acquisition systems [2]. Deblurring, noise removal, and contrast enhancement are some examples of image enhancement operations. The idea behind contrast enhancement is to increase the dynamic range of the gray levels in the image being processed [3]. It plays a major role in digital image processing, computer vision, and pattern recognition [4].

Besides providing a better visual interpretation by improving the image appearance, contrast enhancement may also be used to improve the performance of succeeding tasks, such as image analysis, object detection, and image segmentation [2, 4, 5]. In fact, it has contributed to a variety of fields, such as medical image analysis, high-definition television (HDTV), industrial X-ray imaging, microscopic imaging, and remote sensing [6].

Most contrast enhancement techniques are based on histogram adjustments, due to their straightforward and intuitive implementation [5]. A comprehensive review of histogram-based techniques may be found in [6]. These techniques are often categorized as global or local. Global contrast enhancement may not be suitable for images whose local details are necessary or for images containing varying lighting conditions. On the other hand, if the process is applied locally, it is possible to overcome those limitations [4, 7].

The Contrast Limited Adaptive Histogram Equalization (CLAHE) [8] is a popular method for local contrast enhancement that has proven powerful and useful for several applications [4, 9, 10]. CLAHE has been extensively used to enhance image contrast in several computer vision and pattern recognition applications. In the medical field, it was successfully applied in breast ultrasound and mammography image enhancement [11, 12], in cell image segmentation [13, 14], in retinal vessel image processing [15, 16], and in enhancement of bone fracture images [17]. Beyond the medical field, CLAHE was applied to enhance underwater images [18, 19], to perform fruit segmentation in agricultural systems [20, 21], and to assist driving systems by improving vehicle detection [22], traffic sign detection [23], and pedestrian detection [24].

The basic idea of CLAHE consists of performing the histogram equalization of non-overlapping sub-areas of the image, using interpolation to correct inconsistencies between borders [8, 25]. CLAHE also has two important hyperparameters: the clip limit (CL) and the number of tiles (NT). The first one (CL) is a numeric value that controls the noise amplification. Once the histogram of each sub-area is calculated, it is redistributed in such a way that its height does not exceed a desired “clip limit.” Then, the cumulative histogram is calculated to perform the equalization [7]. The second (NT) is an integer value that controls the number of non-overlapping sub-areas: based on its value, the image is divided into several (usually square) non-overlapping regions of equal size. According to [7], for 512 × 512 images, the number of regions is generally selected to be equal to 64 (NT=[8,8]). Both parameters (CL and NT) are exemplified in Fig. 1.
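The clipping and equalization steps described above can be illustrated with a minimal Python sketch for a single tile. It is not the implementation used in this work: the function names are hypothetical, the clip limit is expressed as an absolute bin count (an assumption; the CL values used in this paper are normalized to [0,1]), and the excess is redistributed in a single pass.

```python
def clip_histogram(hist, clip_limit):
    """Clip each bin at clip_limit and spread the excess evenly over all bins.

    Single-pass redistribution: bins may end slightly above clip_limit once
    the excess is added back, which some implementations fix by iterating.
    """
    excess = sum(max(h - clip_limit, 0) for h in hist)
    clipped = [min(h, clip_limit) for h in hist]
    bonus = excess / len(hist)
    return [h + bonus for h in clipped]


def equalization_map(hist):
    """Build the gray-level mapping of a tile from its cumulative histogram."""
    levels = len(hist)
    total = sum(hist)
    mapping, running = [], 0.0
    for h in hist:
        running += h
        mapping.append(round((levels - 1) * running / total))
    return mapping


# Toy 8-level tile histogram (48 pixels) with one dominant bin.
hist = [0, 2, 40, 6, 0, 0, 0, 0]
clipped = clip_histogram(hist, clip_limit=10)
mapping = equalization_map(clipped)
```

Clipping lowers the dominant bin, so the cumulative histogram rises less steeply there and the resulting mapping stretches that gray level less aggressively. The interpolation between neighboring tiles is omitted from this sketch.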

Thus, the main drawback of CLAHE, as reported in [26, 27], is that an improper hyperparameter selection leads to a decrease in image quality. The authors also state that the quality of the enhanced image depends mostly on the CL hyperparameter.

Most works rely on fixed hyperparameter values empirically chosen to solve a specific problem [20, 28–30]. Nonetheless, an entropy-based method to automatically determine CLAHE’s hyperparameters was proposed in [26]. It takes advantage of the characteristics of two entropy curves (CL per entropy and NT per entropy) and determines the CL and NT values as the points of maximum curvature on each entropy curve. Obtaining these curves requires calculating the entropy of every CLAHE output over all possible combinations of CL and NT. Although the experimental results showed that the approach is capable of enhancing the image contrast with low deterioration, this exhaustive evaluation makes the process computationally impractical. Also, the proposed hyperparameter suggestion was poorly tested and validated, since only three images were used in the experiments.

A hyperparameter tuning method for CLAHE based on a multi-objective meta-heuristic was proposed in [25]. In addition to entropy, as proposed in [26], the authors used the Structural Similarity Index (SSIM) to find the most promising CLAHE hyperparameters. Since SSIM is related to the level of image distortion, the goal was to maximize the information gain using the entropy while minimizing the image distortion via SSIM. The authors found that, although these objective functions are contradictory, the use of different contrast levels could highlight different structures present in medical images, allowing specialists to handle different visualization options automatically.

At the time this research was conducted, besides [26], no other method to automatically determine adequate CLAHE hyperparameters for a given image was found. Considering the lack of solutions to this problem, especially fast ones, in this work, we present a learning-based hyperparameter selection method for the CLAHE algorithm called learning-based Contrast Limited Adaptive Histogram Equalization (LB-CLAHE). One of the main reasons that led us to choose a supervised machine learning approach to solve this problem was performance. While training a supervised classification or regression model is a computationally complex task, the prediction task itself is substantially faster. Further, supervised machine learning methods have proven to be powerful in solving image-related problems [31–33]. Thus, we propose a new supervised model that is able to automatically determine CLAHE’s hyperparameters for images with different contrast distortions and scenarios.

It is important to mention that we are not proposing a new or improved version of CLAHE, but a method to find CLAHE’s hyperparameters. Therefore, in the experiments, our method is compared with other CLAHE parametrization approaches.

The remainder of this work is organized into three sections. Section 2 describes the methodology used to build and validate the proposed technique. Section 3 presents the results and discussion, and Section 4 closes the work.

2 Materials and methods

Supervised learning is a machine learning (ML) task of inducing models from labeled data. In other words, using a training set composed of data samples with a defined target, it is possible to induce a model to predict the target values for new unseen samples. In the ML research field, there is a wide range of algorithms able to deal with supervised classification and regression tasks [34]. These tasks differ in how they represent the target feature. In a classification task, the target is one among several categorical values, also called classes. If just two different values are provided, it defines a binary classification problem. If there are more than two, it defines a multi-class problem. On the other hand, in a regression task, the target is a continuous variable predicted by the model. Both types of problems may be evaluated on new unseen data (the test set) to analyze the predictive performance.

In this paper, we aim to build a well-performing regression model robust enough to predict the most promising CLAHE hyperparameters to adjust an image based on its features. Thus, a suitable training set was required for the model induction.

In the computer vision field, obtaining the desired output/label to compose the training data is a roadblock. In fact, the labeling of image datasets is usually done manually by experts from a very specific application. This task is known to be difficult, especially in situations where a huge number of examples is required to build the model [35].

Therefore, to create an adequate labeled training set, we automatically extract a set of features from images and the expected optimal CLAHE hyperparameters. The process relied on generating contrast distorted images from ideal contrast ones. We defined a grid with CLAHE’s hyperparameters CL and NT, and for each original image, we evaluated every combination of (CL, NT). The CLAHE hyper-space considered in the experiments is presented in Table 1. The selected values for CL range from 0 to 1 in steps of 0.001. For NT, the values range from 2 to 32 in steps of 2. Thus, each image was evaluated with 1001×16=16016 different hyperparameter settings. The pair of hyperparameters whose output image was the most similar to the original one was defined as the target value.
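The size of this grid can be checked with a short Python sketch. The variable names are illustrative only; the ranges and steps are the ones stated above.

```python
from itertools import product

# CL: 0.000, 0.001, ..., 1.000 (built from integers to avoid float drift).
cl_values = [i / 1000 for i in range(1001)]
# NT: 2, 4, ..., 32 tiles per side.
nt_values = list(range(2, 33, 2))

# Every (CL, NT) combination evaluated for one image.
grid = list(product(cl_values, nt_values))
print(len(cl_values), len(nt_values), len(grid))  # 1001 16 16016
```

Building the CL values from integer indices, rather than by repeatedly adding 0.001, keeps the last value exactly equal to 1.0.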

The overall flow of the proposed approach and experimental methods, showing the dataset labeling process, supervised model building, and validation steps, and the LB-CLAHE usage, can be seen in Fig. 2. It is important to highlight that the final application of the proposed approach depends on just the induced model. In other words, a given new image is adjusted using the hyperparameters predicted from the previously built model, induced only once.

The experimental methods adopted in this paper are presented in the following subsections. All the datasets and source code developed are freely available (see Footnote 1).

2.1 Image datasets specification

Two image datasets were used in our experiments. The first one, dataset 1, was composed of 246 contrast distorted images derived from 54 original ones. We merged two well-known image quality assessment (IQA) datasets to build dataset 1: CSIQ [36] and TID2013 [37]. We built dataset 1 from the most popular IQA datasets in order to evaluate our method in different scenarios with quality distortions. Also, the CSIQ and TID datasets were used in several recent contrast-related works [5, 38, 39].

In order to improve the generalizability of the proposed approach, and also to validate it on more challenging scenes and distortions, we created another dataset (dataset 2). Unlike dataset 1, for this dataset we created the distortions ourselves for every ideal image provided in the following IQA datasets: [40–46]. For each one of the 149 ideal images available, we created a set of 42 distortions. Thus, dataset 2 was composed of 6258 contrast distorted images.

The set of 42 distortions was built using contrast and intensity variations. Each original image, considered ideal in the literature, had its contrast changed by histogram compression at six levels: {−50%, 10%, 30%, 50%, 70%, 90%}. Furthermore, the mean intensity of every contrast level was also shifted by six offsets: 10, 30, and 60 histogram bins, to both the left and the right. In Fig. 3, all the contrast distortions from a given original image (without intensity shift) may be seen, while Fig. 4 depicts all intensity shifts of a contrast distorted image.

The histogram compression procedure, used to build the contrast distorted images, started by identifying the range of image intensities, that is, the distance between the leftmost and the rightmost occupied histogram bins. Then, this distance was decreased or increased to generate contrast distortions. We employed a linear distribution to obtain the new left, right, and middle points of the compressed histogram. The Open Source Computer Vision Library (OpenCV; see Footnote 2) was used to implement all distortions of the dataset 2 images.
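A minimal Python sketch of such a distortion is given below. It is only one possible reading of the procedure, not the OpenCV implementation used to build dataset 2: it assumes that the occupied range is rescaled linearly around its midpoint, that a negative level widens the range, and that the 42 distortions are the six contrast levels combined with no shift plus the six intensity shifts. The function name and the toy pixel values are hypothetical.

```python
def compress_contrast(pixels, level, shift=0, max_value=255):
    """Linearly rescale intensities around the midpoint of their range.

    level: fraction by which the occupied range shrinks (0.5 halves it;
           a negative value widens it).
    shift: offset, in gray levels, added to every pixel afterwards.
    """
    lo, hi = min(pixels), max(pixels)
    mid = (lo + hi) / 2
    scale = 1.0 - level
    out = []
    for p in pixels:
        value = mid + (p - mid) * scale + shift
        # Keep the result inside the valid gray-level interval.
        out.append(int(min(max(round(value), 0), max_value)))
    return out


pixels = [40, 80, 160, 200]                 # flattened toy image
half = compress_contrast(pixels, 0.5)       # range shrinks from 160 to 80
moved = compress_contrast(pixels, 0.5, 10)  # same contrast, brighter mean

# Assumed layout of the distortion set: 6 levels x (no shift + 6 shifts).
levels = [-0.5, 0.1, 0.3, 0.5, 0.7, 0.9]
shifts = [0, -60, -30, -10, 10, 30, 60]
variants = [(lv, sh) for lv in levels for sh in shifts]
```

Under this reading, the number of variants per image is 42, which agrees with the 6258 images obtained from 149 originals.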

It is important to mention that, although we used only the intensity (grayscale) channel from the hue, saturation, and intensity (HSI) color space in our experiments, LB-CLAHE can also be applied to color images in the red, green, and blue (RGB) color space. In this case, the enhanced intensity channel must be converted back to RGB along with the original hue and saturation channels. This process can be seen in Fig. 5.

2.2 Dataset labeling

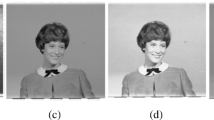

The key point of our labeling process is to automatically associate the best pair of CLAHE hyperparameters with a given contrast distorted image, aiming at its correction. To do so, we applied CLAHE with all of its hyperparameter combinations to the distorted images. Next, the outputs were compared with the original image, searching for the most similar image pair. In other words, we tried all possible hyperparameter settings to correct the distorted versions, using the original image as the reference to find the best pair h={CL, NT}. Figure 6 depicts the impact of the hyperparameter values chosen for a given distorted image, with (a) being the original/ideal, (b) being the distorted one, and (c–e) being CLAHE outputs. Contrast was increased less than desired in (c), optimally increased in (d), and over increased in (e). This shows the importance of selecting adequate CLAHE hyperparameters for a given image.

Fig. 6 Automatic labeling process to find the best CLAHE hyperparameter pair. Original image (a). Distorted image (b). CLAHE with inaccurate hyperparameters: CL=0.01 and NT=[8,8] (c). CLAHE with ideal hyperparameters: CL=0.025 and NT=[4,4] (d). CLAHE with inaccurate hyperparameters: CL=0.468 and NT=[16,16] (e)

This process is analogous to image similarity analysis, which can be performed from different perspectives. In this work, the most important one was the similarity related to the image quality, especially regarding the contrast. The IQA techniques, which match this objective, can be split into three groups: (i) full reference, (ii) reduced reference, and (iii) no reference [38, 47]. In a scenario where a reference image is available for comparison, the full-reference techniques are preferred [38]. Therefore, we used three common full-reference IQA evaluation measures: mean squared error (MSE), peak signal-to-noise ratio (PSNR), and the structural similarity index (SSIM) [47, 48].
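As an illustration, MSE and PSNR can be sketched in a few lines of Python using their standard definitions. The function names are illustrative, images are assumed to be flattened lists of 8-bit gray levels, and SSIM is omitted because its windowed computation does not fit a short example.

```python
import math


def mse(reference, candidate):
    """Mean squared error between two images of equal size."""
    return sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)


def psnr(reference, candidate, max_value=255):
    """Peak signal-to-noise ratio in decibels; infinite for identical images."""
    error = mse(reference, candidate)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)
```

Lower MSE and higher PSNR both indicate a CLAHE output closer to the reference image.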

It is known that the entropy value (a statistical measure of randomness) of a gray-level image is closely related to its contrast [26, 38, 49]. Thus, the entropy difference between both images (corrected and original) may be useful to identify images with similar contrast [49]. Here, we call it the gray level entropy difference (GLED).
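A minimal sketch of this measure in Python follows. It uses the Shannon entropy of the gray-level distribution and takes GLED as the absolute difference between the two entropies; the absolute value and the function names are assumptions made for illustration.

```python
import math
from collections import Counter


def gray_entropy(pixels):
    """Shannon entropy (in bits) of the gray-level distribution."""
    counts = Counter(pixels)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def gled(original, corrected):
    """Gray level entropy difference, assumed here to be an absolute value."""
    return abs(gray_entropy(original) - gray_entropy(corrected))
```

A flat image has zero entropy, while an image that uses two gray levels equally often has an entropy of one bit, so a low-contrast output tends to show a larger GLED with respect to a well-contrasted original.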

The MSE, PSNR, SSIM, and GLED performance measures present values in different ranges and scales. To obtain a reliable indication from the four metrics together, we ranked the candidate hyperparameter pairs under each metric and calculated the average rank. Thus, the best-ranked sample represents the pair of hyperparameters whose CLAHE output is the most similar to the ideal image.
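The average-rank selection can be sketched as follows in Python. The metric values are invented toy numbers for three candidate settings, and the tie handling (tied candidates share the mean of their positions) is an assumption, since it is not specified in the text.

```python
def ranks(values, higher_is_better):
    """Rank 1 = best; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i],
                   reverse=higher_is_better)
    result = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1
        for i in order[pos:end + 1]:
            result[i] = shared
        pos = end + 1
    return result


def average_rank(metrics, higher_is_better):
    """Average, per candidate, of its rank under every metric."""
    per_metric = [ranks(metrics[name], higher_is_better[name]) for name in metrics]
    return [sum(col) / len(col) for col in zip(*per_metric)]


# Toy scores for three candidate (CL, NT) settings; not measured values.
scores = {
    "MSE": [120.0, 35.0, 300.0],
    "PSNR": [27.3, 32.7, 23.4],
    "SSIM": [0.81, 0.93, 0.60],
    "GLED": [0.40, 0.05, 0.90],
}
direction = {"MSE": False, "PSNR": True, "SSIM": True, "GLED": False}
avg = average_rank(scores, direction)
best = min(range(len(avg)), key=lambda i: avg[i])  # index of the label
```

Ranking removes the scale of each metric, so no metric dominates the decision merely because its numeric range is larger.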

The automatic labeling process is computationally expensive. A computer cluster composed of 18 computers (Intel Xeon E5-2430v2 and 24 GB RAM), with Windows 7 and Matlab, was used for this purpose.

2.3 Image features

Once our samples are properly labeled with the best pair of CLAHE’s hyperparameters, each distorted image needs to be described. Thus, the ML algorithms may be used to build models from the relations between image features and their labels.

Features extracted from images already provide useful information for automatic classification and regression [50]. In our proposal, we explore a set of 28 features to effectively predict the CL and NT values. These features may be divided into four main sub-groups: spatial, histogram, texture, and image quality.

As spatial image features (i.e., features extracted from each (x,y) pixel of the 2D image), two statistical moments (mean and standard deviation) and gray level entropy were selected. Based on the image histogram (tonal distribution of a digital image), we extracted the second (standard deviation), third (skewness), and fourth (kurtosis) statistical moments as suggested in [3, 51].

Image texture features provide information about the spatial arrangement of intensities in an image. They have been applied in a wide variety of image classification applications [52]. We used three common texture descriptors: local binary patterns (LBP) [53], gray-level co-occurrence matrix (GLCM) [52], and fast Fourier transform (FFT) frequency domain features [50, 54].

Full-reference IQA techniques cannot be used to compose the feature set, since an ideal image is required to compute them. In other words, when an image needs to be corrected in a real-life problem, the ideal image is unknown. Thus, we used three no-reference contrast-related IQA techniques to compose our feature vector: statistical naturalness measure (SNM) [55], Measurement of Enhancement by Entropy (EME) [56], and global contrast factor (GCF) [57]. The complete list of the image features used in the experiments is described in Table 2.

All the image features were scaled to the interval [0,1] before performing our regression task. This normalization avoids any influence that the different magnitudes of the features could have during the model induction step. Regarding the computational cost of extracting the image features, the spatial and histogram feature groups have a time cost of O(P) [58], where P is the number of pixels in the considered image. In the texture group, both LBP and GLCM also present linear complexity in the number of image pixels (O(P)) [58]. The FFT requires O(P logP) operations to obtain the frequency domain representation [58]. All features in the no-reference IQA group present time complexity of O(N) [55–57]. Therefore, the time complexity of extracting the image features is asymptotically dominated by the FFT calculation, i.e., our feature extraction step has a time complexity of O(NP logP), considering that N images with the same spatial resolution are going to be processed.
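The scaling step can be sketched in Python as a min-max normalization applied to each feature column. This is an illustrative assumption about how the [0,1] interval was reached; the function name and the handling of constant columns are not taken from the original work.

```python
def min_max_scale(column):
    """Map the values of one feature to [0, 1]; constant columns map to 0."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


scaled = min_max_scale([2, 4, 6])  # [0.0, 0.5, 1.0]
```

When a model is applied to new images, the minimum and maximum found on the training data would normally be reused, so that new feature values are placed on the same scale.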

2.4 Supervised regression algorithms

We evaluated a total of five different regression algorithms to determine which one would achieve the best performance in the automatic prediction of CLAHE’s hyperparameters. The choice was based on their extensive application in multiple predictive tasks and on their different learning biases. The following algorithms were used in our experiments: classification and regression tree (CART) [59], multilayer perceptron (MLP) artificial neural network [60], support vector machine (SVM) [61], random forest (RF) [62], and Extreme Gradient Boosting (XGBoost) [63]. All the regression techniques were run with their standard hyperparameter settings, as defined in the corresponding R packages. The following sections briefly describe each one of the evaluated regression techniques.

2.4.1 CART

Classification and regression tree (CART) [59] is a decision tree (DT) induction algorithm that builds binary trees by recursively partitioning the data space into orthogonal hyper-planes and fitting a simple prediction model within each partition. DTs present good predictive performance on several domains and model interpretability [64]. Regression trees are used for dependent variables that assume continuous or ordered discrete values, with prediction error typically measured by the difference between the observed and predicted values. CART uses a generalization of the binomial variance called the Gini index [59]. In our experiments, we used the R package rpart [65] for building CART models.

2.4.2 MLP

Multilayer perceptron (MLP) feed-forward network [60] is an important class of artificial neural networks able to deal with classification and regression problems. MLPs are composed of multiple units (neurons), responsible for computing and propagating information through the network. These neurons are organized in layers, usually an input layer (which just receives the input values), one (or more) hidden layer(s), and an output layer. The information is propagated through the network feed-forwardly until the output layer. The back-propagation algorithm is often used to train the MLP networks. MLPs have been used to solve complex and diverse problems due to the generality of their application [60]. The R RSNNS package [66] was applied in our experiments.

2.4.3 SVM

Support vector machines (SVMs) [61] are kernel-based algorithms that perform non-linear classification/regression using a hyperspace transformation: they map the inputs into a high-dimensional feature space where the problem is linearly separable. SVMs are known to be robust in handling a wide variety of problems, presenting high accuracy and the capacity to treat high-dimensional data. In this work, the SVM implementation from the e1071 R package [67] was used.

2.4.4 RF

Random forest (RF) [62] is an ensemble algorithm which consists of independently growing decision tree-based predictors, such as CART, on different subsets of training data. Different training sets are built for each tree using random sampling with replacement (bagging). Each tree also uses a subset of randomly chosen features. In each tree, the samples not randomly chosen for training are called out-of-bag (OOB) cases. They could be used to calculate an unbiased, internal, prediction error metric called out-of-bag error (OOBE), also employed in variables’ importance assessment [62]. For regression problems, the outcomes are formed by taking the average result over all trees in the forest. The randomForest R package was used in experiments.

2.4.5 XGBoost

Extreme Gradient Boosting (XGBoost) [63] is a scalable end-to-end tree ensemble boosting machine learning system based on the gradient boosting machine (GBM) framework that has provided state-of-the-art results on many problems. New trees are sequentially added to the ensemble aiming to minimize the current error gradient (boosting), along with a regularization term to avoid overfitting. In fact, XGBoost was reported as the best prediction technique in several data mining competitions. We performed our experiments using the XGBoost R package.

2.5 Evaluation measures

For model assessment, we used the root mean square error (RMSE) and the determination coefficient (R2) evaluation measures. The RMSE was computed between the ideal CLAHE hyperparameters determined through the dataset labeling and the values predicted by the regression model. This metric is defined as the square root of the mean of the squared differences between the true observed (\(y_{i}\)) values and the obtained (\(\hat {y}_{i}\)) ones for each sample i of the n evaluated cases [68], as defined by Eq. 1. In our case, the y and \(\hat {y}\) values represent, respectively, the desired CLAHE parameters and their estimates made by the machine learning models. It measures how close the predictions made by the induced model are to the expected values.

\[\text{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_{i} - \hat {y}_{i}\right)^{2}} \qquad (1)\]

The r-squared or R2 expresses the amount of the total variation associated with the use of an independent variable. Its values range from 0 to 1: the closer R2 is to 1, the higher the proportion of the total variation in the output that is explained by introducing an independent variable in the regression scheme [69]. In this way, R2 shows how similar the predicted values are to the expected ones, which in our case are the CLAHE hyperparameters. All this information regarding the experimental setup is also presented in Table 3.
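Both measures can be sketched in Python as follows. The RMSE follows the definition given in the text; for R2, the sketch uses one common definition (one minus the ratio between the residual and the total sum of squares), which is an assumption, since the exact formula used by the R packages is not stated here.

```python
import math


def rmse(y_true, y_pred):
    """Square root of the mean squared difference between targets and predictions."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r_squared(y_true, y_pred):
    """Coefficient of determination as 1 - SS_res / SS_tot (assumed definition)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot
```

With this definition, a model that always predicts the mean of the targets obtains R2 = 0, and a perfect model obtains R2 = 1 and RMSE = 0.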

For dataset 1, regarding CL, the best results were achieved by XGBoost, followed by RF. When predicting the NT hyperparameter, however, the best results were achieved by SVM, closely followed by RF. For dataset 2, RF obtained the best results for both hyperparameters, followed by XGBoost. In general, the ensemble methods achieved better results. The worst results were obtained by MLP, probably because it depends on hyperparameter tuning to perform well.

The overall predictive performance was not high when predicting NT. This is expected, since image quality depends mainly on CL rather than on NT [26]. Thus, different NT values are capable of producing adequate images.

The RMSE values support the comparison of the algorithms through Friedman’s statistical test at a significance level of α=0.05. The null hypothesis states that the algorithms perform equivalently, considering the averaged RMSE on each dataset. Although we applied the test to look for a possible superiority of one algorithm, no statistically significant difference was observed between them (p value = 0.1712). To select an algorithm to support the discussions related to image features and contrast enhancement, we chose RF because it achieved the best position in Friedman’s ranking and because the model can be interpreted through the RF variable importance.
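For reference, the Friedman statistic can be sketched in Python as below. The sketch ranks the algorithms within each evaluation block (rank 1 for the lowest RMSE), ignores ties, and does not compute the p value; the RMSE table is a toy example, not the values obtained in the experiments.

```python
def friedman_statistic(table):
    """Mean ranks and Friedman chi-square for a blocks x algorithms table.

    Each row holds the RMSE of every algorithm on one block; lower is better.
    Ties are not handled in this sketch.
    """
    n, k = len(table), len(table[0])
    rank_sums = [0.0] * k
    for row in table:
        order = sorted(range(k), key=lambda j: row[j])
        for rank, j in enumerate(order, start=1):
            rank_sums[j] += rank
    mean_ranks = [s / n for s in rank_sums]
    chi2 = 12 * n / (k * (k + 1)) * (
        sum(r ** 2 for r in mean_ranks) - k * (k + 1) ** 2 / 4
    )
    return mean_ranks, chi2


# Toy table: three blocks, three algorithms, always in the same order.
toy = [[0.10, 0.20, 0.30], [0.11, 0.19, 0.35], [0.09, 0.25, 0.31]]
mean_ranks, chi2 = friedman_statistic(toy)
```

The statistic is compared with a chi-square distribution with k − 1 degrees of freedom; when the mean ranks are close to each other, as the reported p value suggests for the compared algorithms, the null hypothesis of equivalent performance is not rejected.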

In this sense, the time complexity of building prediction models for the CLAHE hyperparameters is related to the RF training cost. Non-pruned versions of traditional decision tree algorithms, such as CART, present a time complexity of O(vN logN), where v represents the number of explanatory features and N the number of training examples [59]. CART models are employed to compose the RF tree ensemble. Nonetheless, at each split point of the t trees in the forest, only s features, selected at random, are explored. The RF hyperparameters t and s are provided by the user. Thus, the time complexity of training an RF predictor is O(tsN logN). In our solution, RF is trained twice, once for each of the CLAHE hyperparameters. Therefore, the computational cost of our solution combines the computations needed to extract the image features and to induce the regression models. Thus, the total asymptotic time complexity of our proposal is O(NP logP+2tsN logN), when considering N images with the same spatial resolution.

The experiments were performed using the 10-fold cross-validation strategy in order to support a fair comparison and to reduce the risk of overfitting.
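A minimal Python sketch of how samples can be partitioned for 10-fold cross-validation is shown below. The function name, the shuffling, and the fixed seed are illustrative assumptions; the experiments themselves relied on R packages.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Return k (train, test) index pairs; every sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        test = folds[i]
        train = [j for fold in folds[:i] + folds[i + 1:] for j in fold]
        splits.append((train, test))
    return splits


splits = k_fold_indices(246)  # dataset 1 has 246 distorted images
```

Each model is trained on nine folds and evaluated on the remaining one, and the reported measure is averaged over the ten test folds.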

3 Results and discussion

In this section, we present our main experimental results and findings. First, we present the results regarding the predictive performance of models from different ML algorithms. Afterwards, based on the best model performance, some results are discussed in the image enhancement context. Finally, a visual comparison of our method with [26] was performed.

3.1 Predictive performance

Table 4 presents results regarding the predictive performance of the induced models for dataset 1 and for dataset 2. There, we present RMSE and R2 values achieved by the selected ML algorithms.

3.2 Image features importance

When using RF, it is possible to assess the importance of each variable for the prediction error by permuting the values of a feature in the OOB samples and recalculating the OOBE over the whole ensemble. In other words, if replacing the values of a particular feature with random values increases the error, the feature contributes positively to describing the problem. On the other hand, if the resulting importance is negative, the evaluated feature disrupts the task description and should be removed from modeling. This procedure can be performed for each feature to explain its impact [62].
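The permutation idea can be sketched in Python as follows. This is a generic illustration rather than the randomForest procedure: it uses any prediction function and a held-out sample instead of the OOB cases of each tree, and the toy model and data are hypothetical.

```python
import random


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, rows, targets, feature, seed=0):
    """Increase in MSE after shuffling the values of one feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(targets, [predict(r) for r in rows])
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    # Rebuild every row with the shuffled value in place of the original one.
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    permuted = mean_squared_error(targets, [predict(r) for r in shuffled])
    return permuted - baseline


# Toy setting: the target depends only on feature 0.
rows = [[float(i), float(i % 3)] for i in range(10)]
targets = [2 * r[0] for r in rows]


def toy_model(row):
    return 2 * row[0]


used = permutation_importance(toy_model, rows, targets, feature=0)
ignored = permutation_importance(toy_model, rows, targets, feature=1)
```

Shuffling the feature that the model relies on increases the error, while shuffling the feature it ignores leaves the error unchanged, which mirrors the interpretation of positive and null importance given above.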

We used the RF importance to investigate the contribution of each image feature in explaining the best CLAHE hyperparameter settings. In Fig. 7, the RF variable importance of each feature is presented. Since no negative importance was obtained, no disruptive feature was used to build the model.

For CL, the statistical moments of the intensity image histogram (features 4–6) were important. It is interesting to note that feature 4 was important only for dataset 1, while features 5 and 6 were more important for dataset 2. Other features, such as 19 (energy of gray-level co-occurrence matrix), 22 (FFT energy), and 23 (FFT entropy), were also important for both datasets. Despite the importance peak of feature 10 in dataset 1, LBP features achieved average importance values when predicting the CL hyperparameter. Contrary to expectations, two of the entropy-based features did not achieve high importance values: 3 (entropy of intensity) and 17 (entropy of gray-level co-occurrence matrix).

For the NT hyperparameter, the results were somewhat similar, with 22 (FFT energy) being the most important feature considering both datasets. Other peaks were presented by features 1 (mean value of intensity image) and 2 (standard deviation of intensity image), suggesting that a good NT value may have some correlation with image intensity.

Overall, no feature was completely useless. To obtain a faster feature extraction process, removing only the least important features may not pay off. A better option is to perform group-based feature selection, since removing a complete group may have a higher impact on the feature extraction time.

3.3 Full-reference image quality assessment

In addition to the four IQA techniques used in the dataset labeling step, two more were used to evaluate the similarity between the ideal (original) and obtained (contrast enhanced) images: absolute mean brightness error (AMBE) [70] and visual information fidelity (VIF) [71]. According to [72], most of the existing contrast enhancement evaluation measures are not related to human perception of enhancement quality. Thus, we selected AMBE and VIF due to being full-reference techniques and exhibiting positive correlations with perceptual quality of contrast enhancement.
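Of these two measures, AMBE is simple enough to be sketched in Python. The sketch assumes its usual definition, the absolute difference between the mean intensities of the two images, and a flattened list representation; VIF is considerably more involved and is not reproduced here.

```python
def ambe(reference, enhanced):
    """Absolute mean brightness error between two images."""
    mean_reference = sum(reference) / len(reference)
    mean_enhanced = sum(enhanced) / len(enhanced)
    return abs(mean_reference - mean_enhanced)


error = ambe([10, 20], [20, 30])  # 10.0
```

A value close to zero indicates that the enhancement preserved the mean brightness of the reference image.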

Moreover, for comparison purposes, we used two baselines: (i) CLAHE with fixed hyperparameters and (ii) global histogram equalization (HE). We fixed the CLAHE hyperparameters as CL = 0.01 and NT = [8,8] (default of Matlab and others). Results of this comparison, for dataset 1, can be seen in Fig. 8. Regarding GLED, MSE, and AMBE, where lower values are desired, it is visible that LB-CLAHE achieved better results in most cases. On the other hand, regarding PSNR, SSIM, and VIF, higher values are desired. Likewise, LB-CLAHE achieved noticeably better results in most cases.

Results were similar for dataset 2. As will be discussed further in this section, there was a small set of images for which LB-CLAHE was not superior. However, considering average values, LB-CLAHE outperformed the baselines on all six IQA techniques evaluated in both datasets. These values can be seen in Table 5. In general, there was no significant discrepancy between the six IQA techniques regarding the performance of the three compared methods.

Figure 9 shows samples for which the LB-CLAHE output is highly similar to the original image according to the IQA techniques, across different contrast scenarios. When the contrast is already adequate or excessive (D), LB-CLAHE simply does not increase it. On the other hand, it properly enhances low-contrast images (A, B, and C) while giving them a natural aspect. Meanwhile, global HE and fixed CLAHE over-enhanced or under-enhanced the images in the four observed scenarios.

Fig. 9 LB-CLAHE’s high performance according to the IQA techniques in different contrast scenarios. Heavy decrease in contrast (A), mean decrease in contrast (B), slight decrease in contrast (C), increase in contrast (D). Ideal image (1), distorted image (2), global HE (3), fixed CLAHE with CL = 0.01 and NT = [8,8] (4), proposed LB-CLAHE (5)

As seen in Fig. 8, there is a small set of images for which LB-CLAHE was not superior according to the majority of IQA techniques. As shown in Fig. 10, in the first two scenarios (A and B), global HE was the best on most metrics, while in the last two (C and D), CLAHE with fixed hyperparameters was the best option. It is important to mention that, even in these scenarios, LB-CLAHE achieved an adequate visual aspect. Also, for all images where LB-CLAHE was not superior, it ranked second, with values very close to those of the best option on all metrics. Average similarity values between the ideal (original) and obtained (contrast-enhanced) images are presented in Table 5. For all images evaluated in our experiments, LB-CLAHE achieved a suitable visual aspect.

3.4 Visual comparison

We applied our method to the same set of images used in [26]. Although a full-reference comparison is not possible because their work could not be reproduced, Fig. 11 shows that both methods achieved similar visual results on all images.

Fig. 11 Visual comparison between the entropy-based method proposed in [26] and LB-CLAHE. Office1 image (a), aerial image (b), announce image (c)

Nevertheless, the method in [26] is computationally costly, since it executes CLAHE with all possible hyperparameter combinations to reach the result for a single image. In contrast, our method extracts image features to predict the hyperparameters and performs CLAHE only once. Also, we demonstrated our method’s ability to enhance thousands of images across different contrast and illumination scenarios, whereas the method in [26] was not extensively tested.
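The difference in cost can be sketched by counting CLAHE executions under each strategy. Everything below is a hypothetical stand-in: `fake_clahe`, `fake_score`, the candidate grids, and the constant "models" are placeholders chosen only to make the call counts visible, not the procedure of [26] or the trained LB-CLAHE regressors.

```python
from itertools import product


def select_by_exhaustive_search(image, clip_limits, tile_grids, enhance, score):
    """Run the enhancer for every (CL, NT) pair and keep the best-scoring pair."""
    best = None
    for cl, nt in product(clip_limits, tile_grids):
        candidate = (score(enhance(image, cl, nt)), cl, nt)
        if best is None or candidate[0] > best[0]:
            best = candidate
    return best[1], best[2]


def select_by_prediction(image, extract_features, predict_cl, predict_nt):
    """Predict (CL, NT) from image features without running the enhancer."""
    features = extract_features(image)
    return predict_cl(features), predict_nt(features)


calls = {"clahe": 0}


def fake_clahe(image, cl, nt):
    """Placeholder enhancer that only counts how often it is invoked."""
    calls["clahe"] += 1
    return (cl, nt)


def fake_score(result):
    """Placeholder quality score that peaks at CL = 0.02 and NT = (4, 4)."""
    cl, nt = result
    return -abs(cl - 0.02) - abs(nt[0] - 4)


clip_limits = [0.01, 0.02, 0.03]
tile_grids = [(2, 2), (4, 4), (8, 8)]

searched = select_by_exhaustive_search(
    None, clip_limits, tile_grids, fake_clahe, fake_score
)
search_runs = calls["clahe"]

calls["clahe"] = 0
cl, nt = select_by_prediction(
    None, lambda img: [0.0], lambda f: 0.02, lambda f: (4, 4)
)
fake_clahe(None, cl, nt)  # the single CLAHE execution
predicted_runs = calls["clahe"]
print(search_runs, predicted_runs)
```

With a 3 by 3 grid the search already needs nine CLAHE runs per image, and the count grows with the product of the grid sizes, while the prediction route always needs one run plus feature extraction.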

4 Conclusions

In this paper, we proposed LB-CLAHE: a learning-based hyperparameter selection method for CLAHE using image features. We investigated the performance of our proposal on well-known image sets and on a large dataset of contrast-distorted images. Several supervised regression algorithms were compared to build a suitable prediction model. The best results were achieved by ensemble-based models induced with the RF and XGBoost algorithms. Regarding the impact of each image feature on hyperparameter prediction, the RF importance showed that no feature significantly stood out from the others. The experimental results confirmed that LB-CLAHE achieved superior performance in comparison with histogram equalization and CLAHE with fixed, standard hyperparameters. Our method was capable of adjusting images from different scenarios and with different contrast and illumination distortions. Furthermore, once the model is built, it can be applied to quickly suggest CLAHE hyperparameters for a new image, with superior performance over the compared methods.

4.1 Limitations and future work

Although experiments were conducted with thousands of real images with different contrast levels and complex scenarios, our method may need some adaptation to be successfully applied in more specific computer vision applications. In future work, we intend to test our method’s ability to improve the performance of subsequent computer vision tasks (especially object detection). We also intend to further investigate group-based feature selection, aiming to make our solution faster and more suitable for real-time applications.

Notes

http://www.uel.br/grupo-pesquisa/remid/?page_id=145

Abbreviations

- AMBE: Absolute mean brightness error
- CART: Classification and regression tree
- CL: Clip limit
- CLAHE: Contrast Limited Adaptive Histogram Equalization
- DT: Decision tree
- EME: Measurement of enhancement by entropy
- FFT: Fast Fourier transform
- GBM: Gradient boosting machine
- GCF: Global contrast factor
- GLCM: Gray-level co-occurrence matrix
- GLED: Gray-level entropy difference
- HDTV: High-definition television
- HE: Histogram equalization
- HSI: Hue, saturation, and intensity
- IQA: Image quality assessment
- LB-CLAHE: Learning-based contrast limited adaptive histogram equalization
- LBP: Local binary patterns
- ML: Machine learning
- MLP: Multilayer perceptron
- MSE: Mean squared error
- NT: Number of tiles
- OOB: Out-of-bag
- OOBE: Out-of-bag error
- OpenCV: Open source computer vision library
- PSNR: Peak signal-to-noise ratio
- RF: Random forest
- RGB: Red, green, and blue
- RMSE: Root mean square error
- SNM: Statistical naturalness measure
- SSIM: Structural similarity index
- SVM: Support vector machine
- VIF: Visual information fidelity
- XGBoost: Extreme Gradient Boosting

References

[1] M. H. Asmare, V. S. Asirvadam, A. F. M. Hani, Image enhancement based on contourlet transform. SIViP. 9(7), 1679–1690 (2015).

[2] H. Hiary, R. Zaghloul, A. Al-Adwan, M. B. Al-Zoubi, Image contrast enhancement using geometric mean filter. SIViP. 11, 833–840 (2016).

[3] R. C. Gonzalez, R. E. Woods, Digital image processing (Pearson/Prentice Hall, Upper Saddle River, 2008).

[4] T. V. H. Laksmi, T. Madhu, K. C. S. Kavya, S. E. Basha, Novel image enhancement technique using CLAHE and wavelet transforms. Int. J. Sci. Eng. Technol. 5(11), 507–511 (2016).

[5] A. Saleem, A. Beghdadi, B. Boashash, Image fusion-based contrast enhancement. EURASIP J. Image. Video Process. 2012(1), 1–17 (2012).

[6] S. Gupta, Y. Kaur, Review of different local and global contrast enhancement techniques for a digital image. Int. J. Comput. Appl. 100(18), 18–23 (2014).

[7] A. M. Reza, Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 38(1), 35–44 (2004).

[8] K. Zuiderveld, in Graphics Gems IV, ed. by P. S. Heckbert. Contrast limited adaptive histogram equalization (Academic Press Professional, Inc., San Diego, 1994), pp. 474–485.

[9] M. Sundaram, K. Ramar, N. Arumugam, G. Prabin, Histogram modified local contrast enhancement for mammogram images. Appl. Soft Comput. 11(8), 5809–5816 (2011).

[10] S. E. Kim, J. J. Jeon, I. K. Eom, Image contrast enhancement using entropy scaling in wavelet domain. Signal Proc. 127, 1–11 (2016).

[11] K. Akila, L. S. Jayashree, A. Vasuki, Mammographic image enhancement using indirect contrast enhancement techniques – a comparative study. Procedia Comput. Sci. 47, 255–261 (2015). Graph Algorithms, High Performance Implementations and Its Applications (ICGHIA 2014).

[12] W. G. Flores, W. C. de Albuquerque Pereira, A contrast enhancement method for improving the segmentation of breast lesions on ultrasonography. Comput. Biol. Med. 80, 14–23 (2017).

[13] Y. M. George, B. M. Bagoury, H. H. Zayed, M. I. Roushdy, Automated cell nuclei segmentation for breast fine needle aspiration cytology. Signal Proc. 93(10), 2804–2816 (2013). Signal and Image Processing Techniques for Detection of Breast Diseases.

[14] A. Tareef, Y. Song, W. Cai, H. Huang, H. Chang, Y. Wang, M. Fulham, D. Feng, M. Chen, Automatic segmentation of overlapping cervical smear cells based on local distinctive features and guided shape deformation. Neurocomputing. 221, 94–107 (2017).

[15] N. P. Singh, R. Srivastava, Retinal blood vessels segmentation by using Gumbel probability distribution function based matched filter. Comput. Methods Prog. Biomed. 129, 40–50 (2016).

[16] S. Aslani, H. Sarnel, A new supervised retinal vessel segmentation method based on robust hybrid features. Biomed. Signal Process. Control. 30, 1–12 (2016).

[17] N. R. S. Parveen, M. M. Sathik, in 2009 International Conference on Computer Technology and Development, vol. 2. Enhancement of bone fracture images by equalization methods, (2009), pp. 391–394.

[18] L. Zheng, H. Shi, S. Sun, in 2016 IEEE International Conference on Information and Automation (ICIA). Underwater image enhancement algorithm based on CLAHE and USM, (2016), pp. 585–590.

[19] X. Qiao, J. Bao, H. Zhang, L. Zeng, D. Li, Underwater image quality enhancement of sea cucumbers based on improved histogram equalization and wavelet transform. Inf. Process. Agric. 4, 206–213 (2017).

[20] E. A. Murillo-Bracamontes, M. E. Martinez-Rosas, M. M. Miranda-Velasco, H. L. Martinez-Reyes, J. R. Martinez-Sandoval, H. Cervantes-de-Avila, Implementation of Hough transform for fruit image segmentation. Procedia Eng. 35, 230–239 (2012). International Meeting of Electrical Engineering Research 2012.

[21] W. Ji, Z. Qian, B. Xu, Y. Tao, D. Zhao, S. Ding, Apple tree branch segmentation from images with small gray-level difference for agricultural harvesting robot. Optik - Int. J. Light Electron Opt. 127(23), 11173–11182 (2016).

[22] L. Unzueta, M. Nieto, A. Cortes, J. Barandiaran, O. Otaegui, P. Sanchez, Adaptive multicue background subtraction for robust vehicle counting and classification. IEEE Trans. Intell. Transp. Syst. 13(2), 527–540 (2012).

[23] A. Gudigar, S. Chokkadi, U. Raghavendra, U. R. Acharya, Local texture patterns for traffic sign recognition using higher order spectra. Pattern Recogn. Lett. 94, 202–210 (2017).

[24] X. Wang, X. Liu, H. Guo, Q. Guo, N. Liu, Research on pedestrian detection method with motion and shape features. J. Comput. Theor. Nanosci. 13(9), 5788–5793 (2016).

[25] L. G. Moré, M. A. Brizuela, H. L. Ayala, D. P. Pinto-Roa, J. L. V. Noguera, in 2015 IEEE International Conference on Image Processing (ICIP). Parameter tuning of CLAHE based on multi-objective optimization to achieve different contrast levels in medical images, (2015), pp. 4644–4648.

[26] B. S. Min, D. K. Lim, S. J. Kim, J. H. Lee, A novel method of determining parameters of CLAHE based on image entropy. Int. J. Softw. Eng. Appl. 7(5), 113–120 (2013).

[27] H. Hiary, R. Zaghloul, A. Al-Adwan, M. B. Al-Zoubi, Image contrast enhancement using geometric mean filter. SIViP. 11(5), 833–840 (2017).

[28] M. Sepasian, W. Balachandran, C. Mares, in Proceedings of the World Congress on Engineering and Computer Science. Image enhancement for fingerprint minutiae-based algorithms using CLAHE, standard deviation analysis and sliding neighborhood, (2008), pp. 22–24.

[29] A. Łoza, D. R. Bull, P. R. Hill, A. M. Achim, Automatic contrast enhancement of low-light images based on local statistics of wavelet coefficients. Digit. Signal Proc. 23(6), 1856–1866 (2013).

[30] N. M. Sasi, V. Jayasree, Contrast limited adaptive histogram equalization for qualitative enhancement of myocardial perfusion images. Engineering. 5, 326 (2013).

[31] P. Li, L. Dong, H. Xiao, M. Xu, A cloud image detection method based on SVM vector machine. Neurocomputing. 169, 34–42 (2015). Learning for Visual Semantic Understanding in Big Data ESANN 2014 Industrial Data Processing and Analysis.

[32] M. Quintana, J. Torres, J. M. Menéndez, A simplified computer vision system for road surface inspection and maintenance. IEEE Trans. Intell. Transp. Syst. 17(3), 608–619 (2016).

[33] S. U. Sharma, D. J. Shah, A practical animal detection and collision avoidance system using computer vision technique. IEEE Access. 5, 347–358 (2017).

[34] T. M. Mitchell, Machine learning (McGraw Hill, New York, 1997).

[35] Y. Yao, J. Zhang, F. Shen, X. Hua, J. Xu, Z. Tang, A new web-supervised method for image dataset constructions. Neurocomputing. 236, 23–31 (2017).

[36] E. C. Larson, D. M. Chandler, Most apparent distortion: full-reference image quality assessment and the role of strategy. J. Electron. Imaging. 19(1), 011006 (2010).

[37] N. Ponomarenko, O. Ieremeiev, V. Lukin, K. Egiazarian, L. Jin, J. Astola, B. Vozel, K. Chehdi, M. Carli, F. Battisti, et al., in Visual Information Processing (EUVIP), 2013 4th European Workshop On. Color image database TID2013: Peculiarities and preliminary results (IEEE, 2013), pp. 106–111.

[38] A. Shokrollahi, A. Mahmoudi-Aznaveh, M.-N. B. Maybodi, Image quality assessment for contrast enhancement evaluation. AEU - Int. J. Electron. Commun. 77, 61–66 (2017).

[39] A. Saleem, A. Beghdadi, B. Boashash, A distortion-free contrast enhancement technique based on a perceptual fusion scheme. Neurocomputing. 226, 161–167 (2017).

[40] A. Zarić, N. Tatalović, N. Brajković, H. Hlevnjak, M. Lončarić, E. Dumić, S. Grgić, VCL@ FER image quality assessment database. Automatika: Časopis Za Automatiku, Mjerenje, Elektroniku, Računarstvo I Komunikacije. 53(4), 344–354 (2012).

[41] J. Y. Lin, S. Hu, H. Wang, P. Wang, I. Katsavounidis, A. Aaron, C.-C. J. Kuo, Statistical study on perceived JPEG image quality via MCL-JCI dataset construction and analysis. Electron. Imaging. 2016(13), 1–9 (2016).

[42] S. Hu, H. Wang, C.-C. J. Kuo, in Acoustics, Speech and Signal Processing (ICASSP), 2016 IEEE International Conference On. A GMM-based stair quality model for human perceived JPEG images (IEEE, 2016), pp. 1070–1074.

[43] J. Y. Lin, L. Jin, S. Hu, I. Katsavounidis, Z. Li, A. Aaron, C.-C. J. Kuo, in SPIE Optical Engineering+ Applications. Experimental design and analysis of JND test on coded image/video (International Society for Optics and Photonics, 2015), pp. 95990–95990.

[44] P. Le Callet, F. Autrusseau, Subjective quality assessment IRCCyN/IVC database (2005). http://www2.irccyn.ecnantes.fr/ivcdb/.

[45] H. R. Sheikh, M. F. Sabir, A. C. Bovik, A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 15(11), 3440–3451 (2006).

[46] Z. Wang, A. C. Bovik, H. R. Sheikh, E. P. Simoncelli, Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004).

[47] H.Y.V., H. Y. Patil, A survey on image quality assessment techniques, challenges and databases. IJCA Proc. Natl. Conf. Adv. Comput. NCAC 2015(7), 34–38 (2015).

[48] N. Thakur, S. Devi, A new method for color image quality assessment. Int. J. Comput. Appl. 15(2), 10–17 (2011).

[49] K. Gu, G. Zhai, W. Lin, M. Liu, The analysis of image contrast: From quality assessment to automatic enhancement. IEEE Trans. Cybern. 46(1), 284–297 (2016).

[50] M. S. Nixon, A. S. Aguado, Feature Extraction & Image Processing for Computer Vision (Academic Press, Oxford, 2012).

[51] D. Li, N. Li, J. Wang, T. Zhu, Pornographic images recognition based on spatial pyramid partition and multi-instance ensemble learning. Knowl.-Based Syst. 84, 214–223 (2015).

[52] R. M. Haralick, K. Shanmugam, I. Dinstein, Textural features for image classification. IEEE Trans. Syst. Man Cybern. SMC-3(6), 610–621 (1973).

[53] T. Ojala, M. Pietikäinen, T. Mäenpää, Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern. Anal. Mach. Intell. 24(7), 971–987 (2002).

[54] H. Shen, P. Chen, L. Chang, Automated steel bridge coating rust defect recognition method based on color and texture feature. Autom. Constr. 31, 338–356 (2013).

[55] H. Yeganeh, Z. Wang, Objective quality assessment of tone-mapped images. IEEE Trans. Image Process. 22(2), 657–667 (2013).

[56] S. S. Agaian, K. Panetta, A. M. Grigoryan, Transform-based image enhancement algorithms with performance measure. IEEE Trans. Image Process. 10(3), 367–382 (2001).

[57] K. Matković, L. Neumann, A. Neumann, T. Psik, W. Purgathofer, in Proceedings of the First Eurographics Conference on Computational Aesthetics in Graphics, Visualization and Imaging. Global contrast factor - a new approach to image contrast (Eurographics Association, Aire-la-Ville, 2005), pp. 159–167.

[58] O. A. Penatti, E. Valle, R. da S. Torres, Comparative study of global color and texture descriptors for web image retrieval. J. Vis. Commun. Image Represent. 23(2), 359–380 (2012).

[59] L. Breiman, J. Friedman, C. J. Stone, R. A. Olshen, Classification and Regression Trees (CRC press, New York, 1984).

[60] S. Haykin, Neural networks: a comprehensive foundation (1999).

[61] V. N. Vapnik, The Nature of Statistical Learning Theory (Springer, New York, 1995).

[62] L. Breiman, Random forests. Mach. Learn. 45(1), 5–32 (2001).

[63] T. Chen, C. Guestrin, in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. XGBoost: A scalable tree boosting system (ACM, New York, 2016), pp. 785–794.

[64] L. Rokach, O. Maimon, Data Mining With Decision Trees: Theory and Applications, 2nd edn (World Scientific Publishing Co., Inc., River Edge, 2014).

[65] T. Therneau, B. Atkinson, B. Ripley, Rpart: recursive partitioning and regression trees (2015). R package version 4.1-10. https://CRAN.R-project.org/package=rpart.

[66] C. Bergmeir, J. M. Benítez, Neural networks in R using the Stuttgart neural network simulator: RSNNS. J. Stat. Softw. 46(7), 1–26 (2012).

[67] D. Meyer, E. Dimitriadou, K. Hornik, A. Weingessel, F. Leisch, E1071: Misc Functions of the Department of Statistics, Probability Theory Group (Formerly: E1071), TU Wien (2017). R package version 1.6-8. https://CRAN.R-project.org/package=e1071.

[68] T. Chai, R. R. Draxler, Root mean square error (RMSE) or mean absolute error (MAE)? Geosci. Model Dev. Discuss. 7, 1525–1534 (2014).

[69] J. A. Cornell, R. D. Berger, Factors that influence the value of the coefficient of determination in simple linear and nonlinear regression models. Phytopathology. 77(1), 63–70 (1987).

[70] S.-D. Chen, A. R. Ramli, Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Trans. Consum. Electron. 49(4), 1310–1319 (2003).

[71] H. R. Sheikh, A. C. Bovik, Image information and visual quality. IEEE Trans. Image Process. 15(2), 430–444 (2006).

[72] M. A. Qureshi, A. Beghdadi, M. Deriche, Towards the design of a consistent image contrast enhancement evaluation measure. Signal Process. Image Commun. 58, 212–227 (2017).

Acknowledgements

The authors would like to thank the Scientific Computing Lab at Londrina State University and Professor Taufik Abrão for providing the computer cluster that made this work possible, and the São Paulo Research Foundation (FAPESP) for grant #2012/23114-9.

Funding

Not applicable.

Availability of data and materials

All the data supporting our findings, including the datasets and the source code developed, are freely available at http://www.uel.br/grupo-pesquisa/remid/?page_id=145.

Author information

Contributions

All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional information

Authors’ information

Not applicable.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Campos, G., Mastelini, S., Aguiar, G. et al. Machine learning hyperparameter selection for Contrast Limited Adaptive Histogram Equalization. J Image Video Proc. 2019, 59 (2019). https://doi.org/10.1186/s13640-019-0445-4

DOI: https://doi.org/10.1186/s13640-019-0445-4