Abstract

The diverse devices and communication technologies that make up the Internet of Things (IoT) support dynamic situations and applications. Even so, communication backlogs are common because of rising network demand and insufficient resource allocation. This study addresses resource allocation problems using Mutable Resource Allocation (MRA) and Distributed Federated Learning (DFL). Resource insufficiencies and backlogs are identified at the edge of the network. In this procedure, the resources that cannot be allocated and their associated deficiencies are identified independently, and edge devices are then assigned to link resources and users. The allocation is mutable: it adapts to demand and to learning recommendations. The learning recommendations classify resources as sufficient or insufficient, which helps avoid backlogs. Edge devices can then choose between allocation and response, and prioritizing insufficient resource allocation improves network flexibility. Accordingly, the recommendation factor periodically modifies the edge connection and its interaction with the IoT platform. The recommendation is particularly effective in situations with changing backlogs, where it ensures that subsequent resource allocations align with preference-based learning. The proposed approach aims to improve connectivity, service, and resource allocation while reducing backlogs and allocation time.

1 Introduction

Allocating resources dynamically expands the kinds of computer systems and applications that may be used cheaply. System feasibility and efficiency are guaranteed by dynamic resource allocation. Edge networks built on the Internet of Things (IoT) exploit dynamic resource allocation [1]. The allocation of resources makes use of a wide variety of approaches. IoT edge networks mostly use deep reinforcement learning (DRL) algorithms for dynamic resource allocation. When applied to a network, DRL may identify the critical values and patterns needed to complete a job [2]. Resource allocation uses the retrieved key values to decrease scheduling and allocation delay. IoT edge networks use a data-driven, dynamic cloud and edge system (D3CES). Gathering information vital to carrying out network operations is the primary goal of D3CES. Decision-making errors are minimized using D3CES's effective resource management decisions. D3CES increases the quality of service (QoS) and performance range of systems by reducing the time and energy consumption ratio in allocation [3, 4]. System and application failure due to inadequate resource allocation is a major issue with the IoT. IoT uses certain methods and algorithms to address issues with inadequate resource allocation [5]. Server errors and a lack of resources are the most common causes of inadequate resource allocation. Every program that does a certain job within the specified time frame uses appropriate resource allocation strategies [6]. IoT systems often use blockchain-based resource allocation. As a resource allocation mechanism, blockchain uses DRL to pinpoint which application contents and resources are needed to complete a job [7]. DRL oversees the allocation of resources and ensures that they remain consistent and within an efficient range [8]. By reducing the amount of resources needed for the allocation process, the blockchain technique improves the overall accuracy of resource allocation [9].

IoT applications may also benefit from the service level agreement (SLA) approach to lessen the impact of inadequate resource allocation. When it comes time to allocate resources, SLA finds all the crucial characteristics and aspects. IoT systems benefit from SLA's enhanced energy efficiency and cost-effectiveness. An application's performance to QoS ratio is also enhanced by SLA [10]. Several domains use distributed learning techniques to improve system efficacy and dependability. The distributed learning approach also allocates resources in edge networks that the IoT enables. Distributed learning approaches are mostly used in resource allocation to address issues that arise during computing. The distributed learning approach may identify association issues and provide workable remedies for IoT networks [11, 12].

The backpropagation neural network algorithms are one method for distributing learning and obtaining useful datasets for allocating resources. These algorithms resolved the dispersed difficulties that arise in edge networks based on the IoT. IoT networks' energy efficiency and practicality are both enhanced by backpropagation neural network algorithms [13, 14]. The distributed learning approach also uses the deep neural network algorithm to lessen the time it takes to allocate resources. As part of allocating resources, this algorithm determines what distributed material is necessary to complete a given activity. IoT edge networks benefit from deep neural networks' enhanced resource distribution accuracy [15].

This paper presents a unique approach to decentralized model convergence and dynamic edge resource scheduling in IoT edge networks by integrating distributed federated learning (DFL) with mutable resource allocation (MRA). Resource reallocation decisions are adaptively driven by real-time variations in model training demands, device heterogeneity, and network fluctuations in this study's tightly coupled framework, which differs from traditional approaches that treat learning and resource allocation as separate optimization problems. Traditional approaches based on deep reinforcement learning sometimes fail to account for communication and computing limitations during continuous federated updates; however, the MRA mechanism allows for fine-grained allocation modifications at the edge node level. To further alleviate synchronization difficulties, the framework uses asynchronous scheduling. This enables scalable deployment even when dealing with non-IID data distributions and erratic task arrival patterns.

The main objectives of the study are stated as:

1. To evaluate the efficiency of distributed federated learning (DFL) and mutable resource allocation (MRA) in the resource allocation process.
2. To improve network adaptability through recommendation-driven changes.
3. To establish how the recommendation factor affects the adjustments made to edge connections and the interactions across IoT platforms.

A summary of the research is provided below. In Sect. 2, the current literature and study techniques are thoroughly examined. The research strategy, methodology, and processing procedures are detailed in Sect. 3. The results analysis is covered in Sect. 4. Section 5 explores the main conclusion and future work.

2 Related works

Ahmed et al. [16] introduced a Stackelberg game-based dynamic resource allocation (SBG-DRA) for edge federated 5G networks. Stackelberg game is mostly used to take advantage of the database presented edge resources. Realistic resources may be allocated when significant behaviors and factors have been identified. Here, additional features leverage mobile edge computing (MEC), making allocating resources faster and more accurate. The new allocation approach boosts network efficiency by decreasing the total time consumption ratio in allocation.

Gao et al. [17] observed that limited resources make data processing and transmission difficult in the Internet of Vehicles. MEC may work with the cloud to solve these problems, but previous offloading solutions failed to handle task dependence and resource competition. The authors propose a new MEC offloading approach based on multi-agent reinforcement learning to improve performance. Multiple agents learn environmental changes, such as mobile devices and task queues, and adopt reinforcement learning strategies. Experiments on the Alibaba Cluster Dataset show improved energy usage.

Vergara et al. [18] declared that the rising number of linked items has spurred new applications in numerous sectors. The ever-changing nature of cloud and fog computing, as well as the heterogeneity of its devices, makes resource allocation a difficult task. Strategy, network properties, and decision-making variables are all covered in this literature review on resource allocation in the fog-cloud continuum. It also proposes future paths for academics and professionals involved in resource allocation and edge computing while highlighting unanswered research questions.

Tushar et al. [19] discussed cyber-physical systems (CPS), which now operate in many different industries thanks to the proliferation of IoT, artificial intelligence (AI), and communication technology. CPS employ game theory in their interactions to accomplish their goals. More in-depth research on CPS types, their characteristics, and the use of game theory to model CPS is still required. The work discusses several kinds of CPS and game-theoretic techniques and identifies key research challenges for subsequent work.

To help IoT networks that use MECs, Bolettieri et al. [20] developed an application-aware resource allocation method. Improved efficiency in data management systems is a direct result of the suggested method's precise allocation of resources. This article pinpoints the precise data flow between sensors and IoT services to aid in allocating resources. Results from experiments demonstrate that the suggested allocation strategy improves the QoS range in IoT networks as a whole.

Du et al. [21] proposed a latency-aware computation offloading-based resource allocation for software-defined networks-enabled MEC systems. This implementation of MEC resource allocation also uses the Deep Q-network algorithm. Optimization issues may be identified and addressed by reducing computing delay via offloading. The suggested latency-aware approach uses less time and energy during optimization and offloading than existing techniques.

Xie et al. [22] developed a DRL approach for resource allocation in 5G networks. Network slicing issues in multi-tenant networks are the primary target of the suggested method. Optimization issues may be addressed by identifying relevant characteristics and attributes. When it comes to making decisions, DRL is quite accurate. The suggested DRL method improves system performance and efficiency by decreasing the time and energy consumption range of network slicing.

Nematollahi et al. [23] noted that the IoT has led to a proliferation of mobile and portable devices; however, these devices struggle to meet deadlines because of limited resources. The study proposes IMOAO, an improved multi-objective Aquila optimizer, to offload tasks from IoT devices to fog nodes. The algorithm finds the best solutions by diversifying the population via opposition-based learning. IMOAO outperformed the other optimization techniques tested, including particle swarm optimization and the firefly algorithm, in terms of average response time and failure rate.

Liu et al. [24] created a multi-access edge computing (MEC)-based multi-objective resource allocation model (MRAM) for IoT settings. The model uses the Pareto archived evolution strategy to identify efficient ways to allocate resources across several objectives. The primary goal of this Pareto strategy is to lessen the financial and environmental impact of MEC servers. Expanding the efficient range of resource allocation is another benefit of a multi-objective resource allocation model. The suggested model improves the efficiency of IoT systems by allocating resources precisely.

Xiang et al. [25] proposed a new resource allocation method for IoT applications. The proposed method is widely used for IoT in edge-cloud networks. The study identifies the precise resources and variables to provide practical data for resource allocation procedures. An allocation that decreases the complexity range performs edge-cloud cooperation and functions. The suggested strategy enhances the performance and resilience of IoT applications compared to prior approaches.

Chen et al. [26] developed an energy-efficient task offloading and resource allocation for augmented reality in MEC networks. This case uses the DRL method, which finds resources for distribution and decision-making. The AR model gives the best answers to joint optimization issues in MEC systems. By decreasing the energy consumption ratio during job offloading, the suggested solution enhances the efficiency of augmented reality in MEC systems.

Feng et al. [27] designed a dynamic subchannel allocation and resource allocation (DSARA) for MEC systems. The suggested approach aims to boost the speed of MEC systems' central processing units. Subchannel modifications can fix issues with data flows and traffic. The suggested approach enhances system performance by decreasing allocation complexity and delay. In MEC systems, the suggested allocation technique optimizes the energy-efficiency range.

Wang et al. [28] introduced a distributed deep learning-based computation offloading and resource allocation (DDL-CORA) for software-defined and MEC. Computing offloading issues in MEC systems are identified using DDL. Regarding optimizing resource allocation, DDL offers the best methods to minimize delay. According to the findings of the experiments, the newly implemented DDL-CORA simplifies the allocation process, allowing software-defined MEC to achieve its QoS goals.

Huang et al. [29] developed a strategy for moving MEC's resources around depending on market forces. Here, evolutionary game theory forecasts how mobile edge servers will act. The retrieved behaviors provide practical information that may be used to lessen resource allocation mistakes. The suggested technique enhances MEC systems' performance and practicability, which decreases the energy usage and time consumption of resource allocation.

Han Wang et al. [30] presented the Training Channel-Based Method for Channel Parameter Estimation of mmWave MIMO System in Urban Traffic Scene. Given the sparsity of the subcarrier multi-channels and the realistic mmWave MIMO channel, the author formulated a multipath simultaneous matching tracking estimation technique by seeing the channel estimation issue as sparse channel recovery. Assuming a correlation exists between the practical channels' noise, this correlation influences the choice of the best atomic support set during channel recovery. The proposed method incorporates noise weighting as a result. According to the simulation results, this suggested method is validated in frequency-selective mmWave MIMO channels. Better local performance than traditional classical methods can be achieved using the suggested method without increasing the algorithm's complexity.

Xingwang Li et al. [31] suggested the reconfigurable intelligent surface (RIS) and simultaneous transmitting and reflecting RIS (STAR-RIS) for Wireless Communications with Randomly Distributed Blockages. The author calculates warden detection error probability in random wireless networks. To enhance the covert communication rate, the author improves STAR-RIS' passive beamforming for direct and indirect line-of-sight (LOS) connections. To solve this, the author uses a semi-definite programming (SDP)-based alternating optimization (AO) approach. Finally, numerical findings show that large-scale STAR-RIS deployment improves covert capabilities.

Lin Zhi et al. [32] proposed securing satellite-terrestrial integrated networks (STIN) using self-powered absorptive reconfigurable intelligent surfaces. This paper's objective is to optimize the earth station's (ES) possible secrecy rate within the restrictions of signal reception, the harvesting power threshold at the RIS, and the overall transmit power budget. The proposed dual decomposition approach, which is based on penalty functions, first converts the original issue into a two-layer optimization problem and then solves the non-convex problem. The external and internal issues are then addressed by calculating the reflective coefficient matrix and beamforming weight vectors using the Lagrange dual, Rayleigh quotient, and successive convex approximation techniques. The simulation results confirm that the suggested strategy successfully improves SDC security.

Zhi Lin et al. [33] recommended the Refracting RIS-Aided Hybrid Satellite-Terrestrial Relay Networks. The goal of the design is to decrease the satellite and BS's overall transmit power while still assuring customers' rate needs, considering the restricted onboard power resource. The author offers an alternating optimization scheme that iteratively optimizes the phase shifters using Taylor expansion and penalty function methods and optimizes the beamforming weight vectors using singular value decomposition and uplink–downlink duality. This is necessary because the optimized beamforming weight vectors at the satellite and BS and the phase shifters at the RIS are coupled, creating a mathematically intractable optimization problem.

Zhi Lin et al. [34] discussed the satellite-terrestrial integrated networks using secrecy-energy efficient hybrid beamforming. To maximize the achievable secrecy-energy efficiency while satisfying the signal-to-interference-plus-noise ratio constraints of both the earth stations (ESs) and cellular users, the hybrid beamformer at the base station and the digital beamformers at the satellite work together, assuming imperfect angles of departure for the wiretap channels. This study provides two resilient BF techniques to find approximation solutions with minimal complexity, given that the specified optimization problem is non-convex and theoretically intractable. This study first makes the non-convex issue solvable to get the BF weight vectors by combining the Charnes-Cooper method with an iterative search technique. This works for the case of a single ES. Using the sequential convex approximation approach, we transform the original issue into a linear one with numerous matrix inequalities and second-order cone constraints when dealing with many ESs. This study then gets a solution that performs well. Simulations employing actual terrestrial and satellite downlink channel models confirm the efficacy and excellence of the suggested strong BF design strategies.

The suggested method combines distributed federated learning (DFL) with mutable resource allocation (MRA) to alleviate communication congestion in IoT edge environments. However, the approach looks more like a structural integration of current techniques than a methodological innovation. Prior research on optimizing resources and training distributed models in edge networks has heavily used MRA and DFL. Here, the contribution is confined to merging two existing frameworks without adding new algorithmic features or architectural improvements. In contrast with the DFL implementation, which lacks advanced aggregation schemes, compression processes, or convergence optimization strategies beyond the standard protocols, the MRA strategy adheres to traditional principles of adaptive allocation without redefining the utility functions or optimization criteria.

3 Proposed resource allocation method

Communication backlogs are prevalent because of inadequate resource allocation and rising network demand. The IoT paradigm covers a wide variety of devices and communication technologies, which allows for ever-changing situations and applications. This paper presents an MRA method that uses DFL to remedy inadequate resource allocation. Bottlenecks and resource shortages are detected at the edge of the connected network, and during this procedure the unavailable resources and the shortfall are identified separately. DFL trains a learning algorithm across many users or decentralized IoT edge devices that each hold their own data samples. It exchanges model parameters with the server while keeping each dataset private to its IoT edge device. DFL thereby contrasts with typical centralized learning methods, which transfer all local data to a single server, and with classical decentralized approaches, which often assume that local datasets are identically distributed. Because different users can build a shared model without disclosing their data, DFL addresses critical concerns such as data security, privacy, and access rights. Edge computing moves computation and data storage closer to the data sources, so using DFL at the edge of a network may improve both response time and resource availability. Edge computing serves user requests with minimal latency and simplifies deploying and running various functions on servers located at the edge. This helps when searching for available and flexible resources, and the IoT edge devices return the result to the user as a response through DFL. The MRA-DFL method is illustrated in Fig. 1.
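The exchange between edge devices and the server described above can be illustrated with a minimal sketch. It assumes standard sample-size-weighted averaging of client parameters (FedAvg-style); the paper does not specify its aggregation rule, and the function name `federated_average` and the example values are hypothetical.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Sample-size-weighted mean of client parameter vectors.

    Assumption: plain FedAvg-style aggregation. Only parameters are
    shared; the local datasets never leave the edge devices.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need exactly one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    merged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share one parameter shape")
        for k, w in enumerate(weights):
            merged[k] += w * size / total
    return merged


# Three hypothetical edge devices with different local dataset sizes.
global_model = federated_average(
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
    [10, 30, 60],
)
```

Devices holding more samples contribute proportionally more to the shared model, which is one common way to handle unevenly sized local datasets.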

Here, the user uses DFL to request edge devices via the IoT to verify resource availability. Once the edge device is aware of its availability and deficiency, it allocates resources using the DFL method. When resource allocation is inadequate, DFL is used to rectify the situation. At the periphery of the connected network, bottlenecks and shortages of resources are detected. During this procedure, the unavailable resources and the shortfall are detected separately. With this information, edge devices may be more efficiently distributed to link users and resources. This distribution may be changed according to suggestions from learning and demand. The learning suggestion is categorized according to the resources that are available or not accessible, which helps to avoid backlogs. DFL is used to verify the user's demand and the resource's connection. To ensure that users can access flexible resources, it will allocate additional edge devices if its allocation is inadequate. To improve the network's flexibility, this procedure favors allocating inadequate resources and allows the edge device to choose between allocation and response. As a result, the recommendation factor is used to regularly update the edge connection and its interaction with the IoT platform. The technique of device reallocation is accomplished by including more edge devices. This suggestion is strong for dynamic settings when the backlog is present; the learning paradigm's sequential resource allocation is preference-dependent. To allocate resources using the DFL, the user knows when such resources are available and requests the edge computing devices via the IoT devices. The procedure of sending the request to the edge devices through the IoT for resource allocation is explained by the following equation given below:

where (\({S}_{x})\) is denoted as the request sent by the user, \(\left({A}_{1},{B}_{1}\right)\) is denoted as the process done by the IoT to send the request to the edge computing devices, (\(\eta )\) is denoted as the process of identifying the resources, (\(a,b)\) is denoted as the calculation of the number of edge devices for the process of resource allocation, and (\({\theta }^{2})\) is denoted as the estimation of the availability of the resources. The request has been sent to the DFL to search for available resources. This also helps address the insufficiency of resource allocation and helps reduce the process's time lags. Resource insufficiency and backlogs are identified at the edge of the connecting network. After finding the resources for the process, the learning algorithm is classified based on the availability and insufficiency of the resources. This allocation is mutable based on demand and learning recommendations.

A user's demand and the learning recommendation determine how the allocations made by the DFL learning algorithm change. The DFL verifies that the user can connect to the necessary resources to proceed with the procedure. A user's request, the amount of available resources, and other factors might cause the DFL allocation to change. Through this learning process, the resources that cannot be allocated and their deficiencies are identified separately. The edge computing devices that link the user to the resources are allocated based on this identification. Through the IoT, the DFL algorithm finds the resources that meet the needs of edge devices according to user demand. The IoT facilitates allocating resources for edge computing devices by linking users with available resources. Figure 2 displays the first allocation evaluation that was conducted using the DFL.

When MRA finds that resources are underutilized, it employs the DFL algorithm to discover why. The learning algorithm also aids in making the most of the user's relationship with the available resources. The DFL algorithm can show the user which resources are available, and for resource allocation to be successful, the edge devices are loaded to their limits. To enhance the network's adaptability, the DFL can identify the resources users need by learning when they are available and when they are not. Device communication backlogs are also reduced with this DFL (Fig. 2). Through the IoT, edge computing devices may assign resources to consumers based on availability. In addition to determining the resource availability, this procedure aids in determining the user connection level. The process of allocating the resources by using the DFL is explained by the following equations given below:

where (\(\sigma )\) is denoted as the process of the learning algorithm, (\({P}_{H\sim s})\) is denoted as the identification of the deficiency of the resources, (\({\varphi }_{i})\) is denoted as the identification of the backlogs of the resources, (\(O)\) is denoted as the process of resource allocation by the DFL, (\(\phi )\) is denoted as the network demand for the process of resource allocation, (\({\varepsilon }_{\sigma }f\left(\sigma \right))\) is denoted as the amount of connectivity between the user and the resources, (\(X)\) is denoted as the user demand for the process, and (\({V}_{i})\) is denoted as the communication between the devices. Now, the DFL helps search for available resources according to user demands. Resource insufficiency and backlogs are identified at the edge of the connecting network. In this process, the resources that cannot be allocated and the deficiencies are independently identified. The identification helps to allocate edge devices for connecting resources and users.

This distribution may be changed according to suggestions from learning and demand. The learning algorithm helps users make the most of available resources by allocating resources to users and optimizing their connections. By examining its backlogs, the DFL may distribute the resources that the user has requested. When allocating resources, we will check for communication backlogs between edge computing devices and take measures to eliminate them. The connected network's edge is used to determine where resources are lacking in response to user requests in devices via the IoT. More edge devices are added to accomplish the reallocation as part of the user's resource allocation method. Using DFL, the study identifies where our resources are lacking and prevents communication backlogs on the edge devices. Edge computing is used to provide users with requests with minimal latency. This learning system has the potential to amplify the connection between users and resources. Subsequently, it is possible to identify the unavailable resources and shortfalls independently. This simplifies deploying and playing various functionalities on servers located at the edge. Identification makes allocating edge devices to link resources and users easier. The process of identifying the resources according to the user request by using the learning algorithm is explained by the following equations given below:

where (\({\varepsilon }_{{\sigma }_{1},\dots ,{\sigma }_{X}})\) is denoted as finding the resources in accordance with the user demand, (\(N)\) is denoted as the calculation of the communication backlogs that occurred, and (\({\sigma }^{-Q})\) is denoted as enhancing the connection between the user and the resources. The learning algorithm may now determine when resources are available and when they are insufficient. With this information, edge devices may be more efficiently distributed to link users and resources. This distribution may be changed according to recommendations from learning and demand. The learning recommendation is categorized according to whether resources are available or unavailable, which helps to avoid backlogs. The learning algorithm finds out how many resources are available and then gives them to the user based on their needs. Both the resource availability and the user's demand determine how resources are distributed to the user. The learning for availability check is illustrated in Fig. 3.
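The categorization of resources described above can be sketched as follows. This is an illustration only, not the paper's learning algorithm: it assumes a simple threshold rule (capacity at least equal to demand counts as sufficient), and the `Resource` fields, function names, and numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Resource:
    name: str
    capacity: float  # units currently available (hypothetical)
    demand: float    # units requested by users (hypothetical)


def classify(resources: List[Resource]) -> Dict[str, List[str]]:
    """Label each resource as sufficient or insufficient for its demand."""
    labels: Dict[str, List[str]] = {"sufficient": [], "insufficient": []}
    for r in resources:
        key = "sufficient" if r.capacity >= r.demand else "insufficient"
        labels[key].append(r.name)
    return labels


def allocation_order(resources: List[Resource]) -> List[str]:
    """Order resources so the largest shortfall is handled first.

    Assumption: 'prioritizing insufficient resource allocation' is read
    here as sorting by (capacity - demand) in ascending order.
    """
    ranked = sorted(resources, key=lambda r: r.capacity - r.demand)
    return [r.name for r in ranked]


pool = [
    Resource("cpu", capacity=8, demand=5),
    Resource("bandwidth", capacity=10, demand=25),
    Resource("storage", capacity=4, demand=6),
]
labels = classify(pool)
order = allocation_order(pool)
```

In this toy pool, bandwidth has the largest shortfall and is therefore placed first in the allocation order.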

The learning algorithm identifies the available resources so that they can be allocated to users based on their demands. Using the DFL for the user allows for critical data resource allocation and improves the interaction between the user and the resources. With this information, edge devices may be more efficiently distributed to link users and resources. Demand and learning recommendations may change this allocation (Fig. 3). Resource availability aids in allocating resources in response to user requests. If there is no connection between the user and the resources, additional edge computing devices are added during allocation to increase connectivity. Assigning resources according to user needs puts a heavy burden on the edge devices. Changes to the user's demands and to the learning recommendations may cause this distribution of resources to change. At the periphery of the connected network, bottlenecks and shortages of resources are detected. The DFL is assumed to help with the problem of inadequate resource allocation, and it may help decrease backlogs by making more resources available to users during resource allocation. The process of finding the availability of the resources by using the learning algorithm is explained by the following equation given below:

where (\({\mu }^{-Q})\) is denoted as the process of finding the availability of the resources by using the learning algorithm, (\(\alpha ,\beta\)) is represented as the calculation of the new edges for the connectivity, (\(K)\) is denoted as the process of finding the feasible resources for the user, and (\({\sigma }_{i}^{K-I+t})\) is denoted as the identification of the learning recommendations. Now, the resource insufficiency is found using the learning algorithm. With this information, edge devices may be more efficiently distributed to link users and resources. This distribution may be changed according to suggestions from learning and demand. The learning suggestion is categorized according to the resources that are available or not accessible, which helps to avoid backlogs. To improve the network's flexibility, this procedure favors allocating inadequate resources and allows the edge device to choose between allocation and response.

Reassigning edge devices to existing edge computing devices for resource allocation is done if inadequate resources are discovered. Backlogs in the process are caused by inadequate resource allocation and hint at no response or service for the user. The response time would be delayed or timed out if insufficient resources were allocated to the user based on their requests. It is preferable to initially allocate resources insufficiently to increase the network's flexibility. The learning suggestion is categorized according to the resources that are available or not accessible, which helps to avoid backlogs. Adding more computer devices to the network's periphery improves the network's adaptability and the user's connection to the resources. Figure 4 shows the method of identifying deficiency.
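The reallocation step, in which extra devices are added at the periphery when resources are insufficient, can be illustrated with a small calculation. This sketch assumes every added edge device contributes the same fixed capacity, which the paper does not state; the function name and numbers are hypothetical.

```python
import math


def extra_devices_needed(demand: float,
                         existing_capacity: float,
                         per_device_capacity: float) -> int:
    """Number of additional edge devices required to cover a shortfall.

    Assumption: each new device adds `per_device_capacity` units.
    Returns 0 when the existing devices already meet the demand.
    """
    if per_device_capacity <= 0:
        raise ValueError("per_device_capacity must be positive")
    shortfall = demand - existing_capacity
    if shortfall <= 0:
        return 0
    return math.ceil(shortfall / per_device_capacity)


# Hypothetical case: 25 units requested, 10 available, 4 units per new device.
added = extra_devices_needed(demand=25, existing_capacity=10,
                             per_device_capacity=4)
```

Rounding up ensures the shortfall is fully covered, at the cost of some spare capacity on the last added device.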

For decentralized edge environments, the hyperparameters of the DFL-MRA learning model were chosen using iterative grid search and cross-validation. With asynchronous updates, the learning rate was varied over {0.001, 0.003, 0.005, 0.007, 0.01}, with 0.005 giving the quickest convergence and the most stable model. Local epochs were examined over {2, 4, 5, 7, 10}; a value of 5 reduced communication overhead while keeping model divergence across edge clients acceptable. Batch size was chosen from {16, 32, 64}; on low-resource edge devices, 32 balanced computational load and gradient stability. Aggregation frequency was examined at every two, three, or five local updates; aggregating every three updates decreased communication latency without a corresponding decline in global model accuracy. To avoid overfitting during local updates, the weight decay was tuned to 1e−4 and the momentum coefficient was fixed at 0.9.
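The search space described above can be written down compactly. The following is a minimal sketch, assuming a hypothetical `evaluate` callback that returns a cross-validated score for one configuration (higher is better). The key names, the callback, and the choice to hold weight decay and momentum constant are illustrative assumptions, not the authors' implementation.

```python
from itertools import product
from typing import Callable, Dict, Iterator, Tuple

# Candidate values reported in the text; the key names are illustrative.
SEARCH_SPACE = {
    "learning_rate": [0.001, 0.003, 0.005, 0.007, 0.01],
    "local_epochs": [2, 4, 5, 7, 10],
    "batch_size": [16, 32, 64],
    "aggregation_every": [2, 3, 5],  # local updates between aggregations
}

# Final values reported in the text; held constant in this sketch.
FIXED = {"weight_decay": 1e-4, "momentum": 0.9}


def iter_configs(space: Dict[str, list]) -> Iterator[Dict[str, float]]:
    """Yield every combination of the candidate values, plus the fixed ones."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        cfg = dict(zip(keys, values))
        cfg.update(FIXED)
        yield cfg


def grid_search(
    evaluate: Callable[[Dict[str, float]], float],
) -> Tuple[Dict[str, float], float]:
    """Return the configuration with the highest score from `evaluate`."""
    best_cfg, best_score = None, float("-inf")
    for cfg in iter_configs(SEARCH_SPACE):
        score = evaluate(cfg)  # hypothetical cross-validated score
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score
```

An exhaustive grid over these four parameters visits 5 × 5 × 3 × 3 = 225 configurations, which is why an iterative (coarse-to-fine) search is attractive on resource-limited edge clients.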

Both the existing and the newly added edge computing devices help reduce insufficiency. The edge devices are loaded with new devices to complete the resource allocation process. Preference is given to insufficient resource allocation to improve network resilience, and the edge device can switch between allocation and response (Fig. 4). Insufficiency is identified to prevent resource backlogs. The process of finding the insufficiency of the resource allocation using the learning algorithm is expressed by the following equations:

where \({f}^{\varphi }\left(\sigma \right)\) denotes the calculation of the insufficiency of the resources, \({E}_{\gamma }\) denotes the estimation of the existing edge computing devices, and \((i,j)\) denotes the calculation of the newly added edge device to prevent backlogs. The output for insufficient resources is then used to improve network flexibility. Preference is given to insufficient resource allocation, and the edge device switches between allocation and response. Therefore, the edge connectivity and its interaction with the IoT platform change periodically according to the recommendation factor. The user request drives resource allocation and the reduction of resource insufficiency, thus increasing the flexibility of the network.

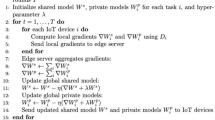

Algorithm 1: MRA using DFL.

When processing user requests, Algorithm 1 distributes resources using DFL. It directs requests through the IoT to edge devices, which use DFL to find accessible resources. Insufficient resources are addressed by adding more edge devices for flexibility. DFL organizes resources by type, raises the priority of low-priority ones, increases the connection between users and resources, and decreases communication backlogs. In addition to responding to user requests, the algorithm enhances service distribution.
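The control flow of one allocation round, as described in the text, can be sketched as follows. This is only an illustration under stated assumptions: the `EdgeDevice` and `Request` types, the `allocate` function, and its parameters are hypothetical, and the DFL recommendation is replaced by a simple boolean flag marking requests that were under-served in an earlier round. It does not reproduce Algorithm 1 itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class EdgeDevice:
    name: str
    free: int  # resource units this device can still serve


@dataclass
class Request:
    user: str
    demand: int
    previously_insufficient: bool = False  # stand-in for the DFL recommendation


def allocate(
    requests: List[Request],
    devices: List[EdgeDevice],
    new_capacity: int,
    max_new: int,
) -> Tuple[Dict[str, str], List[Request]]:
    """Run one allocation round; return (user -> device name, backlog)."""
    responses: Dict[str, str] = {}
    backlog: List[Request] = []
    added = 0
    # Requests flagged as insufficient earlier are served first
    # (False sorts before True, and sorted() is stable).
    ordered = sorted(requests, key=lambda r: not r.previously_insufficient)
    for req in ordered:
        device = next((d for d in devices if d.free >= req.demand), None)
        # No existing device can serve the request: add a new edge device,
        # but only if it would actually be large enough.
        if device is None and added < max_new and new_capacity >= req.demand:
            device = EdgeDevice(f"new-{added}", new_capacity)
            devices.append(device)
            added += 1
        if device is not None:
            device.free -= req.demand
            responses[req.user] = device.name
        else:
            req.previously_insufficient = True  # prioritized next round
            backlog.append(req)
    return responses, backlog
```

Requests left in the backlog carry the flag into the next round, which mirrors the preference for insufficient allocations described above.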

Resource insufficiency arises from a deficiency of resources during allocation. Resource insufficiency and backlogs are identified at the edge of the connecting network, and the learning algorithm maximizes connectivity between the user and the resources. The allocation is mutable based on demand and learning recommendations. The learning recommendation is classified into available and insufficient resources to prevent backlogs. Flexibility can be improved by adding new edge devices to avoid resource insufficiency, and the response is returned through the edge devices with improved services to the user. The recommendation carries more weight in dynamic scenarios facing backlogs, where consecutive resource allocations are preference-dependent under the learning paradigm. In this process, preference is given to insufficient resource allocation to improve network flexibility, and the edge device can switch between allocation and response. The process of improving network flexibility by reducing resource insufficiency during allocation is expressed by the following equations:

where \(T\) denotes the calculated output for the insufficient resources, \(C\) denotes the improved flexibility, and \(W\) denotes the service provided to the user. The response is sent to the user after the required process is completed. This process improves flexibility, reduces backlogs, and allocates resources to users according to their demands. The process of sending the response to the user after the entire process is expressed by the following equation:

where \({M}_{i}^{KH}\) denotes the response sent to the user after the resource allocation process, according to the demand, by the edge computing devices through the IoT. The flexible resource allocation process after DFL is presented in Fig. 5.

This method helps reduce backlogs and increase service distribution to the user. It also helps maximize the connectivity between the user and the resources, and the learning algorithm decreases the resource allocation time. MRA using DFL is introduced to address resource allocation insufficiency (Fig. 5). Resource insufficiency and backlogs are identified at the edge of the connecting network, and this identification helps to allocate edge devices for connecting resources and users.

4 Experimental result and analysis

The learning recommendation is classified into available and insufficient resources to prevent backlogs. In this process, preference is given to insufficient resource allocation to improve network flexibility, and the edge device can switch between allocation and response. The edge connectivity and its interaction with the IoT platform therefore change periodically according to the recommendation factor. As a result, this method improves resource allocation, connectivity, and services, and reduces backlogs and allocation time.

This research addresses the optimization of dynamic resource allocation in a decentralized federated learning environment to handle computational imbalance and communication congestion in large-scale IoT edge networks. With real-time task demands and device statuses as inputs, the goal is an adaptive framework that reallocates heterogeneous edge resources (e.g., CPU cycles, memory, bandwidth, and energy) while maintaining federated model convergence and learning efficiency. Under dynamic connectivity constraints, the problem is defined over a multi-tier IoT edge architecture in which each edge node hosts multiple IoT devices that generate non-IID data. The proposed approach targets medium-to-large-scale heterogeneous IoT edge networks with 50–200 edge nodes, where each node serves 10–50 IoT devices with non-IID data distributions. The network is assumed to be a hierarchical multi-tier structure in which edge nodes mediate between IoT devices and cloud centers, with little inter-node coordination. The communication model assumes limited computational resources at the edge (e.g., 1.5–3 GHz CPUs with up to 4 GB RAM per node) and unpredictable uplink bandwidths ranging from 5 to 20 Mbps.
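For readers who want to reproduce a comparable setting, the stated ranges can be captured in a small scenario generator. This is a sketch under assumptions: the `EdgeNode` type and `sample_network` function are hypothetical, values are drawn uniformly (the text does not state a distribution), and RAM is set to the stated upper bound of 4 GB for every node.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EdgeNode:
    node_id: int
    num_devices: int    # IoT devices served by this node (10-50)
    cpu_ghz: float      # CPU clock (1.5-3 GHz)
    ram_gb: float       # assumed fixed at the stated upper bound
    uplink_mbps: float  # uplink bandwidth (5-20 Mbps)


def sample_network(num_nodes: int, seed: int = 0) -> List[EdgeNode]:
    """Sample an edge network within the ranges stated in the text."""
    if not 50 <= num_nodes <= 200:
        raise ValueError("the text targets networks of 50-200 edge nodes")
    rng = random.Random(seed)  # seeded for repeatable scenarios
    return [
        EdgeNode(
            node_id=i,
            num_devices=rng.randint(10, 50),
            cpu_ghz=rng.uniform(1.5, 3.0),
            ram_gb=4.0,
            uplink_mbps=rng.uniform(5.0, 20.0),
        )
        for i in range(num_nodes)
    ]
```

Fixing the seed keeps repeated runs comparable when different allocation strategies are evaluated on the same topology.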

4.1 Self-analysis

The self-analysis for the considered metrics is presented first in this sub-section. The analysis of \(\theta\) and \({\varepsilon }_{\sigma }f\left(\theta \right) \forall \eta\) is presented in Fig. 6.

As \(S\) increases, the chance for new augmentation is high, and therefore \(\theta\) is high. Considering the availability for \(X\), \({V}_{i}\) is required to prevent new backlogs. Depending on \({\varepsilon }_{{\sigma }_{1}}\) to \({\varepsilon }_{{\sigma }_{X}}\), new allocations are performed. Apart from these factors, the \(\phi =0\) and \(\phi =1\) cases are verified because \(K\in \left[S+\left(a,b\right)\right]\). Therefore, \({\varepsilon }_{\sigma }f\left(\theta \right)\) is slow compared to \(\phi =0\), wherein the existing devices are used for allocation (Fig. 6). From the maximization of \({\varepsilon }_{\sigma }f\left(\theta \right)\), its impact over \(X \forall K\) is then validated. This validation ensures that \(\left({\sigma }_{1}\text{ to }{\sigma }_{X}\right)\) is mapped precisely \(\forall\) addressing \({S}_{x}\). The validation is illustrated in Fig. 7.

\(X(\%)\) increases as \({\varepsilon }_{\sigma }f\left(\theta \right)\) is strengthened using \((a,b)\) in the first allocations and \(S\) in the \({S}_{x}\) identified instances. Based on the available \(S\), new \({\mu }_{\alpha }^{-k+1}\) is defined for maximizing \(C\). Therefore, \(X\) is improved in all instances using learning recommendations as well. In the consecutive validations, \({S}_{x}\) is reduced by \(\eta\) (discovery) and identification of \(N\). Therefore, \(O\) and new allocations are classified using \(\sigma\). Based on \({\mu }^{-Q}=0\) case verification, new \(T\) is allocated. \(N\) in the previous \(T\) is responded to in the new level for improving \({M}_{i}^{KH} \forall\) users (Fig. 7).

4.2 Comparative analysis

The following metrics are used for comparison in this section: connectivity, backlogs, resource allocation, resource availability, and allocation time. For the comparison, the number of edge devices varies from 10 to 120 and the number of resources varies from 1 to 11. The existing MRAM [24], DSARA [27], and SBG-DRA [16] methods are considered. These three MEC-based models offer different optimization approaches that account for different edge computing constraints. DSARA combines adaptive channel-state-aware allocation with real-time task offloading. MRAM uses multi-objective optimization to minimize latency and energy consumption across edge nodes, and SBG-DRA models resource negotiation as a leader–follower game between user nodes and edge infrastructure. Without these models in the comparison, allocation dynamics would not be accounted for, making it hard to measure how much DFL-MRA improves convergence speed, model accuracy, energy efficiency, and communication overhead under decentralized federated learning.

4.2.1 Resource allocation

In this approach, the use of DFL results in a high level of resource allocation. Depending on the user demand and the learning recommendation, the allocations carried out by the DFL learning algorithm are mutable. DFL determines whether the user is connected to the resources used in the subsequent procedure. Resource insufficiency and backlogs are recognized at the edge of the network linking these resources, and the procedure identifies the resources that cannot be allocated and the deficit separately. This identification assists in allocating edge devices to connect users and resources. To avoid backlogs, the learning recommendation is divided into two categories: available resources and insufficient resources. The recommendation is particularly relevant for dynamic situations facing backlogs; the learning paradigm determines the sequential allocation of resources, which is preference-dependent. As a result, this approach is believed to improve resource allocation (refer to Fig. 8).

4.2.2 Resource availability

Using MRA and DFL, this approach adjusts the availability of the resources. The learning algorithm can strengthen the connection between the user and the resources. The shortfall and the resources that cannot be allocated can then be determined independently, which makes it simpler to deploy and run various features on edge servers. This identification assists in allocating edge devices to connect users and resources. To avoid backlogs, the learning recommendation is divided into two categories: available resources and insufficient resources. Within the resource allocation method, reallocation is accomplished by adding more edge devices. The user sends a request to the edge devices over the IoT to verify the availability of the resources, and the edge device runs the DFL algorithm to carry out the resource allocation so that it is aware of the availability and insufficiency rates. Primarily, DFL on the user side is used to carry out the allocation of critical data resources (refer to Fig. 9).

4.2.3 Connectivity

The identification assists in allocating edge devices to connect users and resources. If there is no connection between the user and the resources, additional edge computing devices are introduced into the resource allocation process to increase connectivity significantly. Because resources are distributed according to user requests, the edge devices are subject to heavy load. Resource insufficiency and backlogs are recognized at the edge of the network linking these resources. DFL determines the level of user demand and the level of connection between the user and the resource. Through the IoT, the edge of the connected network is used to determine whether the resources available in the devices are insufficient to meet user requirements. The learning algorithm can strengthen, and helps maximize, the connection between the user and the resources. In light of this, this resource allocation approach is believed to improve connectivity, services, and resource allocation (refer to Fig. 10).

4.2.4 Backlogs

Using the DFL algorithm reduces the number of backlogs that the user experiences during resource allocation. If the resources are found to be inadequate, the edge devices are reassigned among the existing edge computing devices for resource allocation. A lack of resource allocations results in backlogs, which means that the user does not get a response or service. If insufficient resources are available while allocating resources to the user's requirements, the response is delayed or times out. To avoid backlogs, the learning recommendation is divided into two categories: available resources and insufficient resources. Together with the newly added edge computing devices, the existing devices also help lower the inadequacy. During resource allocation, the edge devices are flooded with new devices, which can exhaust them. Figure 11 illustrates the deficiency detected to prevent resource backlogs.

4.2.5 Allocation time

Using MRA together with the DFL algorithm reduces the time required for resource allocation. DFL is applied to tackle inadequate resource distribution. Insufficient resources and backlogs are revealed at the edge of the connecting network, and their identification assists in allocating edge devices to link users and resources. The edge device can switch between allocation and response throughout this process, and preference is given to insufficient resource allocation to improve the network's flexibility. To ensure that resource allocation succeeds, the DFL algorithm may identify the resources for the user and load the edge devices to their maximum capacity. To enhance the network's adaptability, DFL identifies the resources available to users by determining whether resources are available or insufficient. This procedure also assists in determining the connection level between the users and the available resources. Using DFL and examining the backlogs, the required resources can be allotted, as shown in Fig. 12. Tables 1 and 2 summarize the comparative analysis and the results.

The suggested DFL-MRA technique's computational complexity involves local training and dynamic resource scheduling. Computational time grows in direct correlation with the training sample size and parameter dimensions as each edge node in the federated learning phase executes numerous rounds of local model updates based on the data volume and model size. The processing cost is significantly increased by aggregating models across nodes, especially as the number of nodes involved grows. As the number of requests and types of resources grows, the computational demand for the mutable allocation mechanism, which involves repeatedly evaluating and ranking resource demands, increases. The joint execution of learning and allocation processes introduces compounded overhead, especially under high-frequency resource reconfiguration. While the system remains feasible for medium-scale deployments, efficiency degradation becomes noticeable in large-scale environments with high device density or frequent task arrivals.
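The scaling behaviour described above can be made concrete with a rough per-round cost model. The symbols are assumptions introduced here for illustration and do not appear in the paper: \(N\) participating edge nodes, \(E\) local epochs, \({n}_{k}\) training samples at node \(k\), \(d\) model parameters, and \(R\) pending resource requests. The \(R\log R\) term assumes that ranking is done by comparison sorting.

\[
{C}_{\text{local}}^{(k)} = O\left(E\,{n}_{k}\,d\right), \qquad
{C}_{\text{agg}} = O\left(N\,d\right), \qquad
{C}_{\text{alloc}} = O\left(R\log R\right)
\]

\[
{C}_{\text{round}} = \max_{k}\,{C}_{\text{local}}^{(k)} + {C}_{\text{agg}} + {C}_{\text{alloc}}
\]

Under these assumptions, local training runs in parallel across nodes, so the slowest node dominates the first term, while the aggregation and allocation terms grow with the number of nodes and requests, consistent with the degradation noted for dense, large-scale deployments.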

Distributed federated learning (DFL) with mutable resource allocation (MRA) aims to decrease computational overhead at edge nodes while keeping accuracy by integrating lightweight model compression methods such as quantization and pruning. This approach aims to balance system performance and complexity. MRA dynamically reallocates processor, memory, and bandwidth resources to provide adaptive control over system load according to task priorities and real-time node statuses. By executing intermediate model fusion at cluster levels before global synchronization, hierarchical aggregation procedures in DFL decrease communication latency. Optimized resource profiles and gradient contribution scores are given priority by client selection algorithms, which improve convergence efficiency and decrease computing cycles that are not essential.
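The hierarchical aggregation step mentioned above can be illustrated with a small sketch. It assumes sample-count-weighted averaging in the style of federated averaging, which the text does not specify; the `weighted_average` and `hierarchical_aggregate` names and the plain-list model representation are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# One update: (flattened model weights, number of local training samples).
Update = Tuple[List[float], int]


def weighted_average(updates: Sequence[Update]) -> Update:
    """Average weight vectors, weighting each by its sample count."""
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("need at least one update with a positive sample count")
    dim = len(updates[0][0])
    avg = [sum(w[i] * n for w, n in updates) / total for i in range(dim)]
    return avg, total


def hierarchical_aggregate(clusters: Dict[str, Sequence[Update]]) -> List[float]:
    """Fuse updates inside each cluster first, then fuse the cluster models."""
    # Intermediate fusion: one (model, sample count) pair per non-empty cluster.
    cluster_models = [weighted_average(u) for u in clusters.values() if u]
    # Global synchronization over the much smaller set of cluster models.
    global_weights, _ = weighted_average(cluster_models)
    return global_weights
```

Because each cluster model carries its total sample count, the two-level average equals the flat weighted average over all clients, while only one model per cluster has to travel to the global aggregator.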

5 Conclusion and future work

This article presents a mutable resource allocation technique that utilizes DFL. Its purpose is to enhance the availability and service-based allocations of edge-IoT networks. The edge devices first provide smooth distributions by verifying resource availability and allocation. The verification exploits the connectivity of the edge devices over various time intervals and response instances. Resource deficit is assessed if the resource discovery process or the triggering edge device connections are unavailable. DFL uses this estimate to suppress the issue in advance. Distributed edge servers monitor device adaptability and user demand to determine whether to provide resources more often or add features to existing devices. The procedure is iterated until the connection between the edge devices and the resources is maximized. The mutable allocations are performed on instances based on the most recent connectivity factor, using both current and new edge devices. Consequently, the proposed approach decreases allocation time independent of user demands and edge devices. It improves connectivity by 9.49%, resource allocation by 7.41%, and availability by 7.41%, and reduces backlogs and allocation time by 11.42% and 11.75%, respectively. Future work will explore the scalability and adaptability of the DFL technique in large-scale edge-IoT networks, integrating advanced machine learning algorithms for dynamic resource allocation.

Availability of data and materials

The data that support the findings of this study are included within the manuscript.

Abbreviations

- IoT: Internet of Things
- DRL: Deep reinforcement learning
- D3CES: Dynamic cloud and edge system
- QoS: Quality of service
- SLA: Service level agreement
- DFL: Distributed federated learning
- MRA: Mutable resource allocation
- SBG-DRA: Stackelberg game-based dynamic resource allocation
- MEC: Mobile edge computing
- CPS: Cyber-physical systems
- MEC: Multi-access edge computing
- MRAM: Multi-objective resource allocation model
- DDL-CORA: Distributed deep learning-based computation offloading and resource allocation

References

W. Bai, Y. Wang, Jointly optimize partial computation offloading and resource allocation in cloud-fog cooperative networks. Electronics 12(15), 3224 (2023)

X. Gao, R. Liu, A. Kaushik, H. Zhang, Dynamic resource allocation for virtual network function placement in satellite edge clouds. IEEE Trans. Netw. Sci. and Eng. 9, 2252–2265 (2022)

K. Li, J. Zhao, J. Hu, Y. Chen, Dynamic energy efficient task offloading and resource allocation for noma-enabled IoT in smart buildings and environment. Build. Environ. 226, 109513 (2022)

X. Shen, L. Wang, P. Zhang, X. Xie, Y. Chen, S. Lu, Computing resource allocation strategy based on cloud-edge cluster collaboration in internet of vehicles. IEEE Access 12, 10790–10803 (2024)

J. Lin, L. Huang, H. Zhang, X. Yang, P. Zhao, A novel lyapunov based dynamic resource allocation for UAVs-assisted edge computing. Comput. Netw. 205, 108710 (2022)

K. Lin, Y. Li, Q. Zhang, G. Fortino, AI-driven collaborative resource allocation for task execution in 6G-enabled massive IoT. IEEE Internet Things J. 8(7), 5264–5273 (2021)

M. Tay, A. Senturk, A research on resource allocation algorithms in content of edge, fog and cloud. Mater. Today: Proceed. 81, 26–34 (2022)

L. Zhang, B. Cao, G. Feng, Opportunistic admission and resource allocation for slicing enhanced IoT networks. Digit. Commun. Netw. 9, 1465–1476 (2022)

W. Wu, S. Hu, D. Lin, G. Wu, Reliable resource allocation with RF fingerprinting authentication in secure IoT networks. Sci. China Inf. Sci. 65(7), 1–16 (2022)

X. Liu, Towards blockchain-based resource allocation models for cloud-edge computing in IoT applications. Wireless Pers. Commun. 1–19 (2021)

M. Anjum, S. Shahab, T. Whangbo, S. Ahmad, Preventing overloaded dissemination in healthcare applications using NonDelay tolerant dissemination technique. Heliyon 9(8) (2023)

E. Tanghatari, M. Kamal, A. Afzali-Kusha, M. Pedram, Distributing DNN training over IoT edge devices based on transfer learning. Neurocomputing 467, 56–65 (2022)

X. Zhang, Y. Wang, DeepMECagent: multi-agent computing resource allocation for UAV-assisted mobile edge computing in distributed IoT system. Appl. Intell. 53, 1–12 (2022)

N.E. Nwogbaga, R. Latip, L.S. Affendey, A.R.A. Rahiman, Investigation into the effect of data reduction in offloadable task for distributed IoT-fog-cloud computing. J. Cloud Comput. 10(1), 1–12 (2021)

U.S. Begum, Federated and multi-modal learning algorithms for healthcare and cross-domain analytics. PatternIQ Min. 1(4), 38–51 (2024). https://doi.org/10.70023/sahd/241104

J. Ahmed, M.A. Razzaque, M.M. Rahman, S.A. Alqahtani, M.M. Hassan, A stackelberg game-based dynamic resource allocation in edge federated 5G network. IEEE Access 10, 10460–10471 (2022)

H. Gao, X. Wang, W. Wei, A. Al-Dulaimi, Y. Xu, Com-DDPG: task offloading based on multi-agent reinforcement learning for information-communication-enhanced mobile edge computing in the internet of vehicles. IEEE Trans. Vehicular Technol. 73, 348–361 (2023)

J. Vergara, J. Botero, L. Fletscher, A comprehensive survey on resource allocation strategies in fog/cloud environments. Sensors 23(9), 4413 (2023)

W. Tushar, C. Yuen, T.K. Saha, S. Nizami, M.R. Alam, D.B. Smith, H.V. Poor, A survey of cyber-physical systems from a game-theoretic perspective. IEEE Access 11, 9799–9834 (2023)

S. Bolettieri, R. Bruno, E. Mingozzi, Application-aware resource allocation and data management for MEC-assisted IoT service providers. J. Netw. Comput. Appl. 181, 103020 (2021)

T. Du, C. Li, Y. Luo, Latency-aware computation offloading and DQN-based resource allocation approaches in SDN-enabled MEC. Ad Hoc Netw. 135, 102950 (2022)

Y. Xie, Y. Kong, L. Huang, S. Wang, S. Xu, X. Wang, J. Ren, Resource allocation for network slicing in dynamic multi-tenant networks: a deep reinforcement learning approach. Comput. Commun. 195, 476–487 (2022)

M. Nematollahi, A. Ghaffari, A. Mirzaei, Task offloading in Internet of Things based on the improved multi-objective aquila optimizer. Signal, Image and Video Process. 18, 1–8 (2023)

Q. Liu, R. Mo, X. Xu, X. Ma, Multi-objective resource allocation in mobile edge computing using PAES for Internet of Things. Wireless Netw. 30, 1–13 (2020)

Z. Xiang, Y. Zheng, D. Wang, M. He, C. Zhang, Z. Zheng, Robust and Cost-effective Resource Allocation for Complex IoT Applications in Edge-Cloud Collaboration. Mobile Netw. and Appl. 27, 1–14 (2022)

X. Chen, G. Liu, Energy-efficient task offloading and resource allocation via deep reinforcement learning for augmented reality in mobile edge networks. IEEE Internet Things J. 8(13), 10843–10856 (2021)

J. Feng, L. Liu, Q. Pei, F. Hou, T. Yang, J. Wu, Service characteristics-oriented joint optimization of radio and computing resource allocation in mobile-edge computing. IEEE Internet Things J. 8(11), 9407–9421 (2021)

Z. Wang, T. Lv, Z. Chang, Computation offloading and resource allocation based on distributed deep learning and software defined mobile edge computing. Comput. Netw. 205, 108732 (2022)

X. Huang, W. Zhang, J. Yang, L. Yang, C.K. Yeo, Market-based dynamic resource allocation in mobile edge computing systems with multi-server and multi-user. Comput. Commun. 165, 43–52 (2021)

H. Wang, P. Xiao, X. Li, Channel parameter estimation of mmWave MIMO system in urban traffic scene: a training channel-based method. IEEE Trans. Intell. Transp. Syst. 25(1), 754–762 (2022)

X. Li, J. Zhao, G. Chen, W. Hao, D.B. Da Costa, A. Nallanathan, C. Yuen, STAR-RIS assisted covert wireless communications with randomly distributed blockages. IEEE Trans. Wireless Commun. (2025). https://doi.org/10.1109/TWC.2025.3543421

L. Zhi, N. Hehao, H. Yuanzhi, A. Kang, Z. Xudong, C. Zheng, X. Pei, Self-powered absorptive reconfigurable intelligent surfaces for securing satellite-terrestrial integrated networks. Chin. Commun. 21(9), 276–291 (2024)

Z. Lin, H. Niu, K. An, Y. Wang, G. Zheng, S. Chatzinotas, Y. Hu, Refracting RIS-aided hybrid satellite-terrestrial relay networks: Joint beamforming design and optimization. IEEE Trans. Aerosp. Electron. Syst. 58(4), 3717–3724 (2022)

Z. Lin, M. Lin, B. Champagne, W.P. Zhu, N. Al-Dhahir, Secrecy-energy efficient hybrid beamforming for satellite-terrestrial integrated networks. IEEE Trans. Commun. 69(9), 6345–6360 (2021)

Acknowledgements

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through Large Research Project under grant number RGP2/379/46. This research was supported by the Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R259), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding

This study is supported via funding from Prince Sattam bin Abdulaziz University project number (PSAU/2025/R/1446). This work was supported by the Researchers Supporting Project Number (UM-DSR-IG-2023-07) Almaarefa University, Riyadh, Saudi Arabia.

Author information

Contributions

Asma Aldrees helped in methodology, validation, formal analysis, resources, writing—review & editing, visualization, and funding acquisition. Ashit Kumar Dutta helped in methodology, resources, writing—review & editing, visualization, and funding acquisition. Ahmed Emara helped in conceptualization, methodology, resources, writing—original draft, and writing—review & editing. Sana Shahab helped in conceptualization, methodology, software, data curation, writing—original draft, writing—review & editing, visualization, and funding acquisition. Zaffar Ahmed Shaikh helped in methodology, validation, formal analysis, resources, data curation, and writing—review & editing. Yousef Ibrahim Daradkeh helped in methodology, validation, formal analysis, resources, data curation, and funding acquisition. Mohd Anjum helped in conceptualization, methodology, software, writing—original draft, and writing—review & editing.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no conflicts of interest and have no financial interest to report.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aldrees, A., Dutta, A.K., Emara, A. et al. Enhancing dynamic resource management in decentralized federated learning for collaborative edge Internet of Things. J Wireless Com Network 2025, 32 (2025). https://doi.org/10.1186/s13638-025-02459-8

DOI: https://doi.org/10.1186/s13638-025-02459-8