Abstract

Compressive data gathering (CDG) is an effective method for reducing the amount of data transmission, thereby decreasing energy expenditure in wireless sensor networks (WSNs). Integrating sleep scheduling with CDG can further improve energy efficiency. Most existing sleep scheduling methods for CDG are formulated as centralized optimization problems, which introduce many extra control message exchanges. Meanwhile, the few distributed methods usually adopt stochastic decisions that cannot adapt to variance in the residual energy of nodes, so some nodes are prone to running out of energy prematurely. In this paper, a reinforcement learning-based sleep scheduling algorithm for CDG (RLSSA-CDG) is proposed. Active node selection is modeled as a finite Markov decision process. The model-free Q learning algorithm is used to search for optimal decision strategies. The residual energy of nodes and sampling uniformity are incorporated into the reward function of the Q learning algorithm to balance energy consumption and to support accurate data reconstruction. It is a distributed algorithm that avoids large amounts of control message exchanges. Each node takes part in one step of the decision process; thus, the computation overhead for sensor nodes is affordable. Simulation experiments are carried out on the MATLAB platform to validate the effectiveness of the proposed RLSSA-CDG against the distributed random sleep scheduling algorithm for CDG (DSSA-CDG) and the original sparse-CDG algorithm without sleep scheduling. The simulation results indicate that the proposed RLSSA-CDG outperforms the two baseline algorithms in terms of energy consumption, network lifetime, and data recovery accuracy. The proposed RLSSA-CDG reduces energy consumption by 4.64% and 42.42% compared to the DSSA-CDG and the original sparse-CDG, respectively, prolongs life span by 57.3%, and improves data recovery accuracy by 84.7% compared to the DSSA-CDG.


1 Introduction

Wireless sensor networks (WSNs) consist of a large number of tiny sensor nodes that collect physical information from the real world so that Internet of things (IoT) applications can make intelligent decisions. Sensor nodes in WSNs collect data and transmit it to the sink through wireless links. They usually have limited energy, computation, and storage resources. Due to the expansion of IoT applications [1], the scale of WSNs has grown greatly. This brings the challenge of handling a large amount of data transmission in resource-limited WSNs. Compressive data gathering (CDG) is an effective method to meet this challenge [2,3,4,5,6,7]. CDG applies compressive sensing (CS) to data gathering in WSNs [8]. Unlike traditional compression algorithms, CDG compresses sensing data while sampling and greatly cuts down data transmissions. Meanwhile, the computational complexity of CDG is low, and prior and global information is not required. It is therefore very suitable for resource-limited WSNs.

Sparse-CDG is one of the significant CDG methods. In sparse-CDG, only a subset of nodes is selected to sense data and constitute the CS measurements used for data recovery. From the aspect of energy efficiency, the nodes that are not chosen to participate in sparse CS sampling can switch to sleep mode to conserve energy. Hence, introducing sleep scheduling into the CDG scheme can improve energy efficiency, which helps prolong the life span of WSNs. References [9,10,11,12,13,14] proposed several different sleep scheduling methods for CDG. References [9,10,11] adopted centralized optimization and control methods that require many additional transmissions of control information. References [13, 14] randomly scheduled a portion of nodes into active or asleep states. These stochastic methods cannot perceive fluctuations in the residual energy of nodes, which may result in uneven energy expenditure among nodes and a shortened life span of WSNs. Thus, balancing the energy expenditure across nodes during sleep scheduling for CDG can prolong the lifetime of WSNs.

Reinforcement learning (RL) is a machine learning approach that imitates the human behavior of learning from interaction with the surrounding environment. An RL agent observes the environment, takes an action, and receives a reward for this action. In this way, the learning agent can adapt to variations in its surroundings. Besides, RL algorithms are very flexible: the complexity of an RL algorithm can be tailored to a concrete problem. Hence, RL is a suitable intelligent algorithm for resource-limited WSNs. References [15,16,17,18,19] applied various RL-based algorithms to tasks such as restoring coverage holes and planning routing paths in WSNs.

In order to balance energy expenditure among nodes and thereby prolong the life span of WSNs, an RL-based sleep scheduling algorithm for CDG (RLSSA-CDG) is proposed in this paper. The sleep/active state transition of nodes is treated as a finite Markov decision process (MDP). This MDP problem can be solved by the model-free Q learning algorithm. The RLSSA-CDG is a distributed sleep scheduling approach. All the nodes cooperatively maintain a common Q table, and each node holds one row of this Q table. The main contributions of this paper are summarized as follows:

1. A distributed RL-based sleep scheduling approach is integrated into the sparse-CDG scheme to improve the energy efficiency and longevity of WSNs.

2. The proposed algorithm perceives variations of residual energy among nodes and then performs an adaptive node scheduling strategy. The residual energy of nodes is consumed evenly, which prevents a fraction of nodes from prematurely exhausting their energy and thereby shortening the life span of WSNs.

3. Sampling uniformity is incorporated into the reward function of the RLSSA-CDG, which aims to sample sensing data evenly and reconstruct the original data accurately.

4. It is a distributed method that is realizable in resource-limited WSNs. Each node participates in one step of the decision process, so the computation overhead is affordable for sensor nodes.

The rest of this paper is organized as follows. Section 2 gives a brief introduction to related works. Section 3 describes the system model of the proposed RLSSA-CDG. Detailed explanations of the algorithm are presented in Section 4. The results of simulation experiments for performance evaluation are shown in Section 5. Finally, Section 6 summarizes this paper. For ease of reading, Table 1 lists notations used in this paper.

2 Related works

In this section, a review of the research related to our work is presented. Sleep scheduling is widely applied because the dense deployment of nodes in WSNs causes data redundancy. It aims to maintain the coverage and connectivity of WSNs with the smallest number of active nodes. It usually cooperates with other technologies to improve energy efficiency.

Mhatre et al. [20] proposed a sleep scheduling algorithm for opportunistic routing in one-dimensional queue networks. Sleep intervals of nodes were computed based on flow rate and residual energy. Mukherjee et al. [21] used sleep scheduling to compensate for uneven energy harvesting intervals in hybrid solar energy harvesting industrial WSNs. Several non-harvesting nodes were active in intervals with less energy harvesting and went to sleep in intervals with more energy harvesting. Reference [22] combined sleep scheduling with mobile wireless charging vehicles in rechargeable WSNs. Low-energy sensor nodes that could not stay alive until they were charged were scheduled to sleep. Shagari et al. [23] aimed to save the energy spent on idle listening in sleep scheduling. Nodes were grouped into pairs, and the two nodes of a pair alternated between active and sleep modes based on their remaining energy and traffic rate, which avoided idle listening. Lin et al. [24] jointly considered deployment and sleep scheduling. They formulated a deployment problem with optimal active cover node sets. References [25, 26] proposed sleep scheduling algorithms based on redundant data. Reference [25] converted correlated nodes into a graph and adopted a coloring-map algorithm to select active nodes. In [26], if the difference between successive sensing data of a node was zero or less than a threshold, the node went to sleep. References [27, 28], respectively, adopted a particle swarm optimization algorithm, an adaptive learning automata algorithm, and a deep reinforcement learning algorithm to choose active/sleep nodes. All these previous studies confirmed that various sleep scheduling methods perform well in improving the energy efficiency of WSNs. However, the above works neglected the problem caused by high-dimensional data transmission. Sleep scheduling combined with data compression can further cut down the energy consumption of WSNs.

CDG has been shown to be an effective method for data dimension reduction in WSNs. Jain et al. [29] combined sparse-CDG with an energy harvesting technique. By virtue of sparse-CDG, very few nodes were required to sense and transmit data. The rest of the nodes collected energy from their surroundings. In [30, 31], meta-heuristic algorithms were introduced to CDG to search for optimal solutions. In [30], a multi-objective genetic algorithm was used to determine the optimal number of CS measurements and the measurement matrix. Aziz et al. [31] used a gray wolf optimization algorithm to search for the sensing matrix with the minimal mean square error of the recovered data. Reference [32] first grouped sensor nodes into clusters according to data correlation, which reduced the required number of CS measurements in CDG. Then, an unmanned aerial vehicle was used to aid data gathering in order to conserve the energy of cluster heads. In [33], a seed estimation algorithm produced an adaptive dynamic random seed from which the random measurement matrix with minimal reconstruction error was generated. In [34], a deep learning network was trained to obtain a learned measurement matrix and a high-performance reconstructor. From these published works, we notice that integrating CDG with other techniques represents the research trend of CDG, and that CDG integrated with sleep scheduling is well suited to improving the energy efficiency of WSNs.

As mentioned before, references [9,10,11,12,13,14] proposed various sleep scheduling strategies for CDG. Chen et al. formulated the selection of active nodes as two centralized optimization problems, which aimed at minimizing the number of required active nodes in [9] and the sampling cost in [10]. Wu et al. [11] also used centralized sleep scheduling in which active nodes were selected by the gateway according to the compression ratio. However, a considerable number of additional control message transmissions were inevitable in these centralized approaches. Manchanda et al. [12] let a pair of adjacent nodes be active alternately. One node did not wake up until the other one ran out of energy. References [13, 14] used distributed and stochastic sleep scheduling. Although these distributed methods avoided frequent control message exchanges, nodes were activated randomly based on a certain probability that was independent of the residual energy of nodes. Thus, the load balance of energy expenditure was not assured.

References [35, 36] and the previously mentioned [17,18,19] adopted RL-based sleep scheduling algorithms in WSNs. With RL algorithms, nodes observed their surroundings and then autonomously decided their active/sleep states. This made nodes intelligent and adaptive to variations in WSNs. Although these existing works did not consider the CDG scheme, they provided an RL-based solution for this article. Thus, the proposed distributed RLSSA-CDG is designed on the RL framework so that it can perceive the residual energy of nodes in order to balance energy expenditure, thereby prolonging the life span of WSNs.

3 System models and problem formulation

In this section, the wireless sensor network model, the sparse-CDG model, and the energy consumption model are introduced in turn. Then, the problem is formulated.

3.1 Wireless sensor network model

A two-dimensional wireless sensor network (WSN) is adopted as the network model in this paper, as shown in Fig. 1. N static sensor nodes are randomly and uniformly deployed in a two-dimensional monitoring area Z. Nodes are powered by batteries and are not rechargeable. These nodes continuously monitor physical phenomena (such as temperature, humidity, and pressure) in the area Z and periodically report their sensing data to the sink through multi-hop routing paths. The sink is located at the center of Z and is equipped with unrestricted storage and computation resources.

We denote SN as the set of all sensor nodes, i.e., \(SN = \{ sn_{1} ,sn_{2} , \ldots ,sn_{N} \}\). All nodes \(sn_{i}\) in SN are homogeneous. The initial energy of \(sn_{i}\) is \(E_{0}\) and its communication radius is R. Nodes located within the range R of \(sn_{i}\) are defined as its neighbors. \(Nbr_{i} = \{ sn_{i1} ,sn_{i2} , \ldots ,sn_{iw} \}\) represents the set of all neighbors of \(sn_{i}\). Sensing data is denoted as the data vector \({\mathbf{X}} = \{ x_{1} ,x_{2} , \ldots ,x_{N} \}\), \({\mathbf{X}} \in {\mathbf{R}}^{{N \times {1}}}\), where \(x_{i}\) is the sensing data of \(sn_{i}\).
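The neighbor sets defined above can be computed from node coordinates. The following is a minimal Python sketch; the function name `neighbor_sets` and the three example positions are hypothetical and only illustrate the definition of \(Nbr_{i}\).

```python
import numpy as np

def neighbor_sets(positions, radius):
    """Return, for every node i, the indices of the nodes within `radius` of it."""
    positions = np.asarray(positions, dtype=float)
    n = len(positions)
    # Pairwise Euclidean distances between all nodes (n x n matrix).
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    neighbors = []
    for i in range(n):
        # A node is not counted as its own neighbor.
        mask = (dist[i] <= radius) & (np.arange(n) != i)
        neighbors.append(np.flatnonzero(mask).tolist())
    return neighbors

# Three nodes on a line; only the first two are within 140 m of each other.
nbr = neighbor_sets([(0, 0), (100, 0), (300, 0)], radius=140)
print(nbr)
```

The third node ends up with an empty neighbor set, which is the situation a deployment has to avoid if every active node must be able to wake up a neighbor.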

The sparse-CDG scheme in [7] combined with RL-based sleep scheduling is employed in the WSN. A subset of nodes is active and responsible for gathering sensing data, and the remaining nodes are asleep. The process of data gathering is divided into two phases, that is, the sampling phase (the blue path in Fig. 1) and the forwarding phase (the green path in Fig. 1). The whole WSN acts as an RL agent. The Q learning algorithm selects the active nodes for data gathering based on two factors, remaining energy and number of active times, with the aim of even energy consumption and accurate data reconstruction.

3.2 Sparse-CDG model

According to the CS theory [8], a sparse or compressible signal (data vector) can be reconstructed from far fewer samples than the number required by the traditional Nyquist sampling theorem. A data vector is sparse if it has only K nonzero elements. A data vector is compressible if its transform in some domain is sparse; the sparsity of such a vector is then defined as K. Due to spatial and temporal correlation, a vector X of sensing data in WSNs is generally compressible.

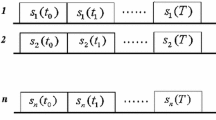

This compressible N-dimensional data vector X can be compressed to an M-dimensional vector y, as depicted in Eq. (1),

\[{\mathbf{y}} = {{\varvec{\Phi}}}{\mathbf{X}} \tag{1}\]

where \(y_{i} = \sum\nolimits_{j = 1}^{N} {\varphi_{ij} x_{j} }\) is termed a CS measurement, \({{\varvec{\Phi}}}\) is called the measurement matrix or projection matrix, and \(M \ll N\). A row in \({{\varvec{\Phi}}}\) corresponds to one collection of a CS measurement. A column in \({{\varvec{\Phi}}}\) corresponds to the M samplings of a node.

If the measurement matrix \({{\varvec{\Phi}}}\) meets the restricted isometry property condition, the original data X can be exactly reconstructed by M measurements in y. The independently and identically distributed Gaussian random measurement matrix and Bernoulli random matrix are universal CS measurement matrices. Besides, the measurement matrix \({{\varvec{\Phi}}}\) can be a sparse random matrix [37] as in Eq. (2),

where s is a constant that controls the probability. Each element \(\varphi_{ij}\) in \({{\varvec{\Phi}}}\) is nonzero with probability \(\frac{1}{s}\). If \(\varphi_{ij} = 0\), the corresponding node is not sampled in that measurement. Sparse-CDG adopts a sparse random matrix as its measurement matrix and samples only part of the nodes to form CS measurements.
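As an illustration of the sparse measurement matrix described above, the following Python sketch draws a matrix whose entries are nonzero with probability 1/s and uses it to compress a data vector. It assumes that nonzero entries take the values \(\pm \sqrt{s}\) with equal probability, which is a common choice for sparse random projections; the exact values in Eq. (2) may differ. The function name and the sizes M = 64 and s = 8 are hypothetical.

```python
import numpy as np

def sparse_random_matrix(m, n, s, rng):
    """Draw an m x n matrix whose entries are nonzero with probability 1/s.

    Assumption: nonzero entries are +sqrt(s) or -sqrt(s) with equal probability.
    """
    u = rng.random((m, n))
    phi = np.zeros((m, n))
    phi[u < 1.0 / (2 * s)] = np.sqrt(s)
    phi[(u >= 1.0 / (2 * s)) & (u < 1.0 / s)] = -np.sqrt(s)
    return phi

rng = np.random.default_rng(0)
N, M, s = 512, 64, 8            # M and s are illustrative values
phi = sparse_random_matrix(M, N, s, rng)
x = rng.standard_normal(N)      # stand-in for the sensing data vector X
y = phi @ x                     # M compressive measurements, y = Phi X
density = np.count_nonzero(phi) / phi.size
print(y.shape, round(density, 3))
```

With s = 8, roughly one node in eight contributes to each measurement, so the remaining nodes are candidates for sleep mode during that measurement.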

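Data reconstruction can be implemented by solving the convex optimization problem in Eq. (3),

\[\mathop {\min }\limits_{{{\varvec{\uptheta}}}} \left\| {{\varvec{\uptheta}}} \right\|_{1} \quad {\text{s.t.}}\quad {\mathbf{y}} = {{\varvec{\Phi}}}{\mathbf{X}} = {{\varvec{\Phi}}}{{\varvec{\Psi}}}{{\varvec{\uptheta}}} \tag{3}\]

where \({{\varvec{\uptheta}}}\) is a sparse vector which is the transform of the compressible data vector X under an orthogonal sparse basis \({{\varvec{\Psi}}}\), i.e., \({\mathbf{X}} = {{\varvec{\Psi}}}{{\varvec{\uptheta}}}\). If X is itself a sparse vector, then \({{\varvec{\Psi}}}\) is the identity matrix.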
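To make the recovery step concrete, the sketch below reconstructs a sparse vector from compressive measurements. Note that it swaps in orthogonal matching pursuit (OMP), a greedy solver, in place of the convex program, because OMP needs only NumPy. The Gaussian matrix `A` stands in for the product \({{\varvec{\Phi}}}{{\varvec{\Psi}}}\), and all names and sizes are hypothetical.

```python
import numpy as np

def omp(A, y, k):
    """Greedy recovery of a k-sparse theta from y = A @ theta (OMP)."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(k):
        # Pick the column most correlated with the current residual.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j not in support:
            support.append(j)
        # Least-squares fit of y on the columns selected so far.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    theta = np.zeros(A.shape[1])
    theta[support] = coef
    return theta

rng = np.random.default_rng(1)
N, M, K = 128, 64, 3                            # illustrative sizes
A = rng.standard_normal((M, N)) / np.sqrt(M)    # stands in for Phi @ Psi
theta_true = np.zeros(N)
theta_true[[5, 20, 41]] = [1.5, -2.0, 0.8]
y = A @ theta_true                              # noiseless measurements
theta_hat = omp(A, y, K)
print(np.max(np.abs(theta_hat - theta_true)))
```

A convex solver would minimize the \(\ell_{1}\) norm instead; OMP is used here only because it keeps the example short and dependency-free.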

3.3 Energy consumption model

A sensor node is typically composed of a sensing module, a data processing module, and a wireless communication module. The wireless communication module expends most of the energy, and the data processing module takes second place. In a sleep scheduling algorithm, active nodes handle transmitting and receiving data. Sleeping nodes consume very little energy, which is usually negligible. The classical energy consumption model of a sensor node in reference [38] is adopted in this paper. The formulae for energy consumption are shown in Eqs. (4)–(6).

\[E_{Tx} (l,d) = E_{Tx - elec} (l) + E_{Tx - amp} (l,d) = \left\{ {\begin{array}{*{20}l} {lE_{elec} + l\varepsilon_{{{\text{fs}}}} d^{2} ,} & {d < d_{0} } \\ {lE_{elec} + l\varepsilon_{{{\text{mp}}}} d^{4} ,} & {d \ge d_{0} } \\ \end{array} } \right. \tag{4}\]

\[E_{Rx} (l) = E_{Rx - elec} (l) = lE_{elec} \tag{5}\]

\[d_{0} = \sqrt {\varepsilon_{{{\text{fs}}}} /\varepsilon_{{{\text{mp}}}} } \tag{6}\]

where \(E_{Tx}\) and \(E_{Rx}\) represent the energy expenditures of transmitting and receiving l bits of data. \(E_{Tx}\) comprises the energy dissipated in the processing circuits (\(E_{Tx - elec}\)) and in the transmit amplifier (\(E_{Tx - amp}\)), while \(E_{Rx}\) mainly considers the energy consumed in the processing circuits (\(E_{Rx - elec}\)). Processing circuits consume \(E_{elec} = 50\;{\text{nJ/bit}}\) when transmitting or receiving 1 bit of data. The Euclidean distance between a transmitter and a receiver is represented as d, and \(d_{0}\) is the reference distance in free space, which is calculated by Eq. (6). When \(d < d_{0}\), the free-space channel model \((\varepsilon_{{{\text{fs}}}} = 10\;{\text{pJ/bit/m}}^{{2}} )\) is adopted; otherwise, the multi-path fading channel model \((\varepsilon_{{{\text{mp}}}} = 0.0013\;{\text{pJ/bit/m}}^{4} )\) is used.
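The energy model can be checked numerically with the parameter values quoted above. The following Python sketch is only an illustration of the first-order radio model with those constants; the function names `e_tx` and `e_rx` are hypothetical, and the 500-bit packet length is the one used later in the simulations.

```python
import math

E_ELEC = 50e-9        # J/bit, circuit energy per bit
EPS_FS = 10e-12       # J/bit/m^2, free-space amplifier coefficient
EPS_MP = 0.0013e-12   # J/bit/m^4, multi-path amplifier coefficient
D0 = math.sqrt(EPS_FS / EPS_MP)   # reference distance, about 87.7 m

def e_tx(l_bits, d):
    """Energy (J) to transmit l_bits over a distance of d metres."""
    if d < D0:
        return l_bits * E_ELEC + l_bits * EPS_FS * d ** 2
    return l_bits * E_ELEC + l_bits * EPS_MP * d ** 4

def e_rx(l_bits):
    """Energy (J) to receive l_bits."""
    return l_bits * E_ELEC

print(f"d0 = {D0:.1f} m")
print(f"E_tx(500 bits, 50 m) = {e_tx(500, 50):.3e} J")
print(f"E_rx(500 bits) = {e_rx(500):.3e} J")
```

Because the amplifier term grows with \(d^{4}\) beyond \(d_{0}\), a hop near the 140 m communication radius costs several times more than a 50 m hop, which is one reason the choice of the next active neighbor affects how evenly energy is drained.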

3.4 Problem formulation

3.4.1 Definition of FND

The lifetime of a WSN is its continuous working duration. When a node depletes its energy, we say the node dies. For data integrity, the time at which the first node dies (FND) is usually taken as the measure of WSN lifetime. FND is defined as the number of working rounds accomplished by a WSN before the first node dies.

3.4.2 Definition of MSE

Data recovery error is evaluated by mean square error (MSE) in Eq. (7), where \({\hat{\mathbf{X}}}\) is a recovery dataset.
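A recovery error of this kind can be computed as in the short Python sketch below. It assumes the plain mean of squared element-wise errors; the paper's Eq. (7) may use a different normalization, so the function `mse` is an assumption rather than the authors' exact metric.

```python
import numpy as np

def mse(x, x_hat):
    """Mean of squared element-wise errors (assumed form of the metric)."""
    x = np.asarray(x, dtype=float)
    x_hat = np.asarray(x_hat, dtype=float)
    return float(np.mean((x - x_hat) ** 2))

# One of three readings is off by 0.3, so the error is 0.09 / 3.
err = mse([1.0, 2.0, 3.0], [1.0, 2.0, 3.3])
print(err)
```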

3.4.3 Problem formulation

In this article, we model the WSN as an RL agent that successively wakes up sleeping nodes to complete compressive data gathering. The objective is to prolong the FND of the WSN while keeping the MSE at an acceptable level, by balancing energy expenditure and achieving sampling uniformity among nodes in the proposed sleep scheduling strategy. In the RL algorithm, this objective is pursued through the reward scheme, since the goal of the RL agent is to maximize its received rewards. Thus, the objective function of this article is formulated in Eq. (8) as follows:

where \(\Pi\) is the sleep scheduling strategy, \(a_{k}\) represents an action of the RL agent, and \(R_{k + 1}\) is the reward for \(a_{k}\).

4 Algorithm description

Specific descriptions of the proposed RLSSA-CDG algorithm are presented in this section. It is a sleep scheduling strategy for sparse-CDG whose purpose is to improve the energy efficiency of WSNs. It is based on the RL framework and is aware of the residual energy of nodes, which allows it to balance energy consumption and thus prolong the lifetime of WSNs.

The RLSSA-CDG operates in rounds. Each round is divided into three phases, i.e., the initialization phase, the sampling phase, and the forwarding phase, as shown in Fig. 2. The initialization phase randomly generates M initial active nodes to start CS measurements. The sampling phase executes sparse-CDG by successively waking up active nodes. The forwarding phase transmits the collected CS measurements to the sink through shortest routing paths.

4.1 Sleep scheduling mechanism

As mentioned in Section 3, sparse-CDG samples a subset of nodes to collect CS measurements. The unsampled nodes can switch to sleep mode to conserve energy. This is the foundation of the proposed algorithm. Besides, coverage and connectivity of the network by active nodes are the chief premise of a sleep scheduling mechanism.

Compared to traditional sleep scheduling, the coverage problem in the proposed algorithm becomes the requirement of accurate data recovery in sparse-CDG. It is proven in [7] that \(M = O(K\log (N/K))\) independently sampled measurements, each built from \(t = O(N/K)\) sampled nodes, are sufficient to reconstruct the original K-sparse data vector. That is, M independent and even samplings of the network, with t sampled nodes each time, meet the requirement of accurate data recovery in sparse-CDG.

The connectivity of the network is assured by successively waking up neighbor nodes in the proposed algorithm. The proposed sleep scheduling mechanism works as follows. In the initialization phase, each node generates a random number λ uniformly distributed between 0 and 1. If λ is less than the threshold M/N, the node is active. In other words, there are around M initial nodes to start M CS measurements in the WSN. The remaining nodes go into sleep mode. In the sampling phase, each initial node senses data, wakes up one of its neighbors in \(Nbr_{i}\) based on the local Q table, and then transmits its sensing data to the newly active node. Thereafter, the initial node returns to sleep mode and the newly active neighbor repeats the above process until the required number t of samples is collected. In the forwarding phase, the last node of each sampling sequence is responsible for sending the collected CS measurement to the sink through the shortest routing path. Then, the sink reconstructs the data of the whole WSN based on the received CS measurements.
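The initialization phase described above amounts to one independent threshold test per node. A minimal Python sketch follows; the function name `initial_active_nodes` and the value M = 64 are hypothetical.

```python
import random

def initial_active_nodes(n_nodes, m_measurements, rng):
    """Each node draws lambda ~ U(0, 1) and stays active if lambda < M/N."""
    threshold = m_measurements / n_nodes
    return [i for i in range(n_nodes) if rng.random() < threshold]

rng = random.Random(42)
active = initial_active_nodes(512, 64, rng)   # M = 64 is illustrative
print(len(active), "initial active nodes out of 512")
```

Because every node decides on its own, no control messages are needed, but the number of initial nodes is only M on average and varies from round to round.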

The process of successively selecting active nodes can be modeled as a finite MDP, so it can be treated as an RL task. The optimal policy for active node selection can be found by a Q learning algorithm. Q learning is one of the temporal-difference learning methods and can learn directly from raw experience without a model of the environment’s dynamics. A detailed description of the proposed Q learning algorithm is presented in the next part.

4.2 Q learning-based active nodes selection method

(1) Reinforcement learning: RL is one class of machine learning algorithms. It imitates human behavior and learns from interaction with the surrounding environment. A learning agent attempts to discover correct actions through continual trial rather than being told what to do. The process of interaction between an agent and the environment can be modeled as a finite MDP, as shown in Fig. 3. At time step k, the agent observes the environment and obtains the current state \(s_{k}\). Then, it takes an action \(a_{k}\) based on its observations. The action \(a_{k}\) changes the environment into a new state \(s_{k + 1}\), and the agent receives a reward \(R_{k + 1}\) from the environment.

The agent’s objective is to maximize the total reward over the long run. Thus, finding the optimal policy that maximizes the long-run reward is the main task of an RL algorithm. The expected reward of an action is defined as its value. In an MDP, we use the state–action value function Q(s,a) to evaluate actions. Q(s,a) represents the value of action a in state s. Thus, an optimal policy \(\pi\) can be defined as the policy that results in the maximum value, as in Eq. (9).

\[\pi (s) = \mathop {\arg \max }\limits_{a \in A} Q(s,a) \tag{9}\]

For a known environment model, the best policy can be calculated by solving the Bellman optimality equation. However, environments in WSNs are usually unknown. Thus, we adopt the model-free Q learning algorithm in the RLSSA-CDG. The Q learning algorithm learns directly from raw experience and updates the Q value function based in part on previous estimates, without waiting for a final outcome. The iteration equation of Q(s,a) in Q learning is shown in Eq. (10).

\[Q(s_{k} ,a_{k} ) \leftarrow Q(s_{k} ,a_{k} ) + \alpha \left[ {r_{k + 1} + \gamma \mathop {\max }\limits_{{a_{k + 1} \in A}} Q(s_{k + 1} ,a_{k + 1} ) - Q(s_{k} ,a_{k} )} \right] \tag{10}\]

where \(s_{k}\) and \(s_{k + 1}\) represent the states at time k and k + 1; A is the set of all actions; \(a_{k}\), \(a_{k + 1} \in A\) represent the actions at time k and k + 1; \(r_{k + 1}\) is the instant reward of the action \(a_{k}\); \(\alpha\) is the learning rate used to control the convergence speed; and \(\gamma \in [0,1]\) is the discount rate that balances instant rewards and long-run rewards.
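The temporal-difference update described above can be sketched in a few lines of Python. Here each node's row of the Q table is a dictionary from neighbor ID to Q value. The function name `q_update`, the values of `alpha` and `gamma`, and the idea that the woken neighbor reports the maximum of its own row along with the reward are all assumptions made for illustration.

```python
def q_update(q_row, action, reward, next_q_row, alpha=0.5, gamma=0.9):
    """Apply one Q learning update to the row held by the current node.

    q_row and next_q_row map neighbor IDs to Q values.
    """
    # Best value reachable from the next state (0 if that row is empty).
    best_next = max(next_q_row.values()) if next_q_row else 0.0
    td_target = reward + gamma * best_next
    q_row[action] += alpha * (td_target - q_row[action])
    return q_row[action]

row_i = {7: 0.0, 9: 0.0}     # node i's row: one entry per neighbor
row_7 = {3: 2.0, 11: 1.0}    # row held by the newly woken neighbor 7
new_q = q_update(row_i, action=7, reward=1.0, next_q_row=row_7)
print(new_q)
```

Only the entry of the chosen neighbor changes, so each node touches a single value of its own row per decision step.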

If states of a Q learning problem are discrete, a Q table is usually adopted to record updates of Q value in Eq. (10). In other words, the Q table is the brain of an agent. In this work, the states are discrete and finite (see next part). A common Q table is established and maintained by the whole WSN, and each node holds one row of this Q table.

(2) Parameters design: The proposed RLSSA-CDG aims to select proper active nodes on the premise of load balance of energy consumption and accurate data recovery. The aim is achieved by designing reasonable parameters of the Q learning algorithm. The parameters of the Q learning algorithm consist of state vector S, action set A, and reward function R, which are described as follows:

(i) The state vector S.

The state vector S is the set of all states. A current active node \(sn_{i}\) is defined as the current state \(s_{k}\). In the current state \(s_{k}\), the agent takes the action of waking up one neighbor of the node \(sn_{i}\). Then, this newly active node becomes the next state \(s_{k + 1}\). Since every node can become active, the state vector S is defined as the set of all nodes, as in Eq. (11).

\[S = SN = \{ sn_{1} ,sn_{2} , \ldots ,sn_{N} \} \tag{11}\]

(ii) The action set A.

The agent selects an action \(a_{k}\) from the action set A, and the current state \(s_{k}\) transitions to the next state \(s_{k + 1}\). Considering the connectivity of the WSN, the current active node needs to wake up one of its neighbors. In other words, the agent selects one neighbor of the current active node (the current state) as the newly active node (the next state). So, the action set A is designed as the neighbor set \(Nbr_{i}\) of the current active node \(sn_{i}\), as in Eq. (12).

\[A = Nbr_{i} = \{ sn_{i1} ,sn_{i2} , \ldots ,sn_{iw} \} \tag{12}\]

where \(sn_{iw}\) denotes the wth neighbor of the ith node, i.e., \(sn_{i}\) has w neighbors.

(iii) The reward function R.

The RL agent works as expected only with a well-designed reward scheme. In this work, we expect nodes to consume energy equally for load balance and to be sampled evenly for accurate data reconstruction. Thus, two factors, i.e., the residual energy \(E_{R}\) of a node and its active times \(T_{A}\) in a round, are incorporated into the reward function R. R is defined as in Eqs. (13)–(15).

where \(r_{e}\) is the reward for residual energy \(E_{R}\) and \(r_{a}\) is the reward for active times \(T_{A}\) in a round. \(r_{e}\) is directly proportional to the residual energy \(E_{R}\): the less residual energy a node has, the smaller \(r_{e}\) is. \(r_{a}\) is inversely proportional to the active times \(T_{A}\) in a round. If \(T_{A} = 0\), the node has not been sampled yet, so it receives the maximum reward \(r_{a}\) and is likely to be sampled. \(\beta \in [0,1]\) is a constant coefficient used to balance the two reward factors. This is a simple, linear reward function, so its computation cost is bearable for the WSN.
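A reward with the properties described above can be sketched as follows. The specific forms \(r_{e} = E_{R} /E_{0}\) and \(r_{a} = 1/(1 + T_{A} )\), the weighting \(\beta r_{e} + (1 - \beta )r_{a}\), and the default \(\beta = 0.5\) are assumptions chosen to match the verbal description; the paper's Eqs. (13)–(15) may differ.

```python
def reward(residual_energy, initial_energy, active_times, beta=0.5):
    """Assumed reward: beta * r_e + (1 - beta) * r_a."""
    r_e = residual_energy / initial_energy   # grows with residual energy
    r_a = 1.0 / (1.0 + active_times)         # largest when T_A = 0
    return beta * r_e + (1.0 - beta) * r_a

# A fresh, never-sampled node versus a half-drained node sampled once.
print(reward(1.0, 1.0, 0), reward(0.5, 1.0, 1))
```

Under these assumptions, a node that is both low on energy and already sampled in the current round yields the smallest reward, which steers the agent away from waking it again.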

(iv) The action selection strategy.

The \(\varepsilon\)-greedy rule is adopted as the action selection strategy in this work. The agent selects the action with the maximal Q value (Eq. (9)) with probability \(1 - \varepsilon\). In order to avoid being trapped in a locally optimal solution, the agent explores with probability \(\varepsilon\) by choosing an action from A at random.
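The selection rule above can be sketched as a small Python function operating on a node's own row of the Q table. The function name `epsilon_greedy` and the random tie-breaking among equally good neighbors are assumptions made for illustration.

```python
import random

def epsilon_greedy(q_row, epsilon, rng):
    """Pick a neighbor: explore with probability epsilon, else exploit."""
    actions = list(q_row)
    if rng.random() < epsilon:
        return rng.choice(actions)          # explore: any neighbor
    best = max(q_row.values())
    # Break ties at random so an all-zero row does not always pick the same neighbor.
    return rng.choice([a for a in actions if q_row[a] == best])

rng = random.Random(0)
row = {1: 0.2, 2: 0.9, 3: 0.1}              # neighbor ID -> Q value
greedy_choice = epsilon_greedy(row, epsilon=0.0, rng=rng)
random_choice = epsilon_greedy(row, epsilon=1.0, rng=rng)
print(greedy_choice, random_choice)
```

With an all-zero row, as in the first round, the exploit branch also reduces to a uniform random choice among neighbors.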

(3) The procedure of RLSSA-CDG: The complete procedure of RLSSA-CDG is summarized in this part. The whole WSN is a learning agent, and nodes successively perform learning tasks in a distributed manner. The completion of collecting one CS measurement is an episode. The agent is required to finish a series of episodic tasks and thereby accumulates experience of correct actions in the form of the updated Q table.

Table 2 gives the pseudocode of the proposed algorithm. Each node \(sn_{i}\) creates an initial row \(Q_{i}\) of the Q table and the corresponding action set \(A_{i}\). The algorithm operates in rounds, and the maximum iteration number is denoted as \(r_{max}\). The beginning of a round is triggered by the START message of the sink. There are three phases in a round, i.e., the initialization phase (lines 4–10), the sampling phase (lines 14–25), and the forwarding phase (line 26). M active nodes are randomly generated to start CS measurements in the initialization phase. Then, the remaining nodes switch to sleep mode. In the sampling phase, these M initial nodes begin to execute the learning algorithm. In the first round, the agent has no experience and the Q table is all zeros, so the agent randomly selects an action from \(A_{i}\). In the remaining rounds, the agent chooses the action with the maximal Q value with probability \(1 - \varepsilon\) and takes a random action with probability \(\varepsilon\). The M initial nodes can perform learning episodes either in parallel or serially. A learning episode is described as follows. An initial active node \(sn_{i}\) wakes up the selected neighbor \(sn_{ij}\) and transmits its sensing data \(x_{i}\) to this newly active node. \(sn_{ij}\) receives \(x_{i}\) and adds it to its own data \(x_{j}\). The reward \(R_{k + 1}\) is calculated by \(sn_{ij}\) in the state \(s_{k + 1}\) and then sent back to \(sn_{i}\). \(sn_{i}\) updates its row of the Q table and then goes to sleep. The newly active node \(sn_{ij}\) repeats the above process. The episode lasts until t nodes have been activated. The IDs of the successively active nodes in a learning episode are recorded in a sequence path, which is used to generate the CS measurement matrix for data reconstruction. In the forwarding phase, the last active node of each episode sends the collected data (i.e., a CS measurement) to the sink by the shortest path routing strategy [39]. After receiving all measurements, the sink generates the measurement matrix based on the vector path and recovers the original data (line 28). Then, the sink broadcasts a START message for the next round.
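The way the recorded paths determine the measurement matrix can be illustrated with a short Python sketch. Since each node on a path adds its own reading to the running sum, every visited node contributes a coefficient of 1 to that measurement. The function name `measurement_matrix` is hypothetical, and counting a node twice if it appears twice on a path is an assumption.

```python
import numpy as np

def measurement_matrix(paths, n_nodes):
    """Build Phi with one row per episode from the recorded node-ID paths."""
    phi = np.zeros((len(paths), n_nodes))
    for row, path in enumerate(paths):
        for node_id in path:
            phi[row, node_id] += 1.0   # each visit adds that node's reading once
    return phi

paths = [[0, 2, 3], [4, 1, 0]]         # two episodes with t = 3 nodes each
phi = measurement_matrix(paths, n_nodes=5)
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = phi @ x                            # what the last node of each path holds
print(y)
```

The sink only needs the node IDs on each path, not the readings themselves, to rebuild the same matrix before solving the recovery problem.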

5 Performance evaluations

In this section, simulation experiments are implemented to evaluate the performance of the proposed RLSSA-CDG algorithm. We use MATLAB as the simulation platform. The simulation parameters are set as follows. A total of 512 sensor nodes are randomly and evenly distributed in a 2000 m × 1000 m monitoring area Z. The sink is located at the center of this area Z. The communication radius of nodes is set as 140 m. The length of a sensing data packet is set as 500 bits. Other specific parameters of the energy consumption model and the Q learning algorithm in the simulation are listed in Table 3.

We compare the proposed RLSSA-CDG with the distributed sleep scheduling algorithm for CDG (DSSA-CDG) [14] and the original sparse-CDG without sleep scheduling [7]. The DSSA-CDG is similar to the proposed method. The difference is that nodes in the DSSA-CDG are randomly activated according to a predetermined probability threshold, and data sampling and forwarding are based on random walk. The original sparse-CDG does not use sleep scheduling. The simulation programs are run on MATLAB R2018 on a PC with the 64-bit Microsoft Windows 10 operating system, 16 GB RAM, and a 3 GHz Intel Core i5-8500 CPU. To reduce the effect of random errors, each experiment is performed ten times and the average result is reported.

5.1 Energy consumption

Energy efficiency is a main objective of various technologies in WSNs. Because some nodes are in energy-saving sleep mode, sleep scheduling is expected to reduce the energy expenditure of WSNs. The simulation result in Fig. 4 confirms this expectation. Both the proposed RLSSA-CDG and the DSSA-CDG are CDG methods integrated with sleep scheduling, and their energy expenditures are distinctly lower than that of the original sparse-CDG without sleep scheduling in Fig. 4. Furthermore, the proposed RLSSA-CDG slightly outperforms the DSSA-CDG in later rounds. The advantage of the RL algorithm appears at about the 140th round, which indicates that the RL agent needs time to accumulate experience of correct actions. In the last round of the simulation, the proposed RLSSA-CDG reduces the accumulated energy consumption by 4.64% and 42.42% compared to the DSSA-CDG and the original sparse-CDG, respectively.

5.2 Number of alive nodes

Nevertheless, minimum energy consumption does not mean maximum longevity of WSNs. As mentioned above, FND is usually taken as the lifetime of WSNs. Thus, the lifetime of WSNs is determined by the number of alive nodes. Load balance of energy consumption among nodes, which prevents some nodes from prematurely exhausting their energy, is an important factor affecting FND, and it is a main consideration of the proposed RLSSA-CDG. Figure 5 gives the simulation result of the number of alive nodes in the proposed RLSSA-CDG, the DSSA-CDG, and the original sparse-CDG. The result confirms the effectiveness of the RLSSA-CDG in prolonging the life span of WSNs. The FND values of the three algorithms are \(FND_{RLSSA} = 442\), \(FND_{DSSA} = 281\), and \(FND_{sparse - CDG} = 51\). The lifetime of the WSN in the proposed RLSSA-CDG is prolonged by 57.3% compared to the DSSA-CDG. Besides, as Fig. 5 shows, nodes in the RLSSA-CDG die much more slowly than in the other two algorithms.
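As a quick check of the lifetime figure, the relative FND gain over the DSSA-CDG follows directly from the two reported values:

\[\frac{{FND_{RLSSA} - FND_{DSSA} }}{{FND_{DSSA} }} = \frac{442 - 281}{{281}} = \frac{161}{{281}} \approx 0.573\]

which corresponds to the stated 57.3% improvement.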

5.3 Data recovery accuracy

After receiving CS measurements, the sink reconstructs the original data X. We generate a compressible (sparse in the frequency domain) synthetic data set as the sensing data set X for the experiments. The CVX toolbox for MATLAB is used to solve the data recovery problem in Eq. (3). If the MSE of the recovered data is no more than 10^-2, data reconstruction is considered successful.
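The success criterion can be written as a small check. The sketch below assumes the plain, unnormalized mean squared error between the original and recovered vectors; the exact MSE definition used in the experiments is not restated here, and the function names are hypothetical.

```python
def mean_squared_error(original, recovered):
    """Plain (unnormalized) MSE between two equally long sequences."""
    if len(original) != len(recovered):
        raise ValueError("sequences must have the same length")
    squared = [(x - y) ** 2 for x, y in zip(original, recovered)]
    return sum(squared) / len(original)


def is_successful(original, recovered, threshold=1e-2):
    """Reconstruction counts as successful when the MSE is at most threshold."""
    return mean_squared_error(original, recovered) <= threshold
```

For example, `is_successful([0.0, 0.0], [0.05, 0.05])` evaluates an MSE of 0.0025 and accepts the reconstruction, while an error of 1.0 per entry is rejected.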

Figure 6 depicts the accumulated MSE of the three algorithms over 100 rounds. The accumulated MSE of the RLSSA-CDG is slightly lower than that of the other two algorithms. The average MSE values are MSE_RLSSA = 0.002, MSE_DSSA = 0.0128, and MSE_sparse-CDG = 0.0132. These values indicate that the three algorithms achieve acceptable data recovery accuracy. In particular, the RLSSA-CDG improves the average data recovery accuracy by 84.7% compared to the DSSA-CDG.
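One way to read the reported accuracy gain, assuming it is the relative reduction in average MSE with respect to the DSSA-CDG (this formula is an assumption and is not stated in the text), is the following calculation with the rounded averages:

```latex
\frac{\mathrm{MSE}_{\mathrm{DSSA}} - \mathrm{MSE}_{\mathrm{RLSSA}}}{\mathrm{MSE}_{\mathrm{DSSA}}}
  = \frac{0.0128 - 0.002}{0.0128}
  = \frac{0.0108}{0.0128}
  \approx 0.844
```

The rounded averages give about 84.4%, close to the reported 84.7%; the small gap would be consistent with the averages having been rounded for display.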

6 Conclusions

In this article, a reinforcement learning-based sleep scheduling algorithm (RLSSA) for CDG is proposed. Sleep scheduling integrated with CDG can further improve the energy efficiency and longevity of WSNs. The WSN acts as an RL agent that perceives the residual energy of nodes and the number of times each node has been active in a round while scheduling active nodes. As a result, nodes consume energy evenly, which improves the load balance of energy expenditure and prolongs the life span of the WSN. In addition, nodes are sampled evenly, which supports accurate data reconstruction. The algorithm is RL-based and distributed, which makes it practical for resource-limited WSNs. The selection of active nodes is modeled as an MDP, and the Q learning algorithm is used to search for the optimal decision strategy. The proposed algorithm is executed collaboratively by all the nodes in the WSN. Each node participates in only one step of the decision process, so the computation overhead is acceptable for the nodes.
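The Q learning step summarized above can be sketched in Python as follows. This is a generic tabular Q-learning update, not the authors' implementation: the state and action encoding, the learning rate `alpha`, the discount factor `gamma`, and the form and weights of `node_reward` are all assumptions chosen for illustration.

```python
def node_reward(residual_energy, initial_energy, active_times,
                w_energy=0.5, w_uniform=0.5):
    """Hypothetical reward: larger for nodes with more residual energy
    and for nodes that have been activated fewer times in the round."""
    energy_term = residual_energy / initial_energy
    uniformity_term = 1.0 / (1 + active_times)
    return w_energy * energy_term + w_uniform * uniformity_term


def q_update(q_table, state, action, reward, next_state, next_actions,
             alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q value.

    q_table maps (state, action) pairs to values; missing entries count as 0.
    """
    best_next = max((q_table.get((next_state, a), 0.0) for a in next_actions),
                    default=0.0)
    old_value = q_table.get((state, action), 0.0)
    new_value = old_value + alpha * (reward + gamma * best_next - old_value)
    q_table[(state, action)] = new_value
    return new_value
```

In a distributed setting, each node would only need the Q values of its own candidate successors to perform such an update, which is in line with the statement that each node takes part in a single step of the decision process.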

The simulation experiments validate the effectiveness of the proposed algorithm. The proposed RLSSA-CDG reduces energy consumption by 4.64% and 42.42% compared to the DSSA-CDG and the original sparse-CDG, respectively, and, compared to the DSSA-CDG, prolongs the life span by 57.3% and improves data recovery accuracy by 84.7%. In future work, we will extend the learning algorithm to the data forwarding phase, which currently uses the shortest path routing strategy, so that the whole data gathering process becomes learning-based.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Abbreviations

- CDG: Compressive data gathering

- WSNs: Wireless sensor networks

- IoT: Internet of things

- CS: Compressive sensing

- RL: Reinforcement learning

- MDP: Markov decision process

References

Statista, Internet of Things (IoT) and Non-IoT Active Device Connections Worldwide From 2010 to 2025 (2021). http://www.statista.com/statistics/1101442/iot-number-of-connected-devices-worldwide/

C. Luo, F. Wu, J. Sun, W.C. Chen, Compressive data gathering for large-scale wireless sensor network, in Fifth ACM International Conference on Mobile Computing and Networking (MOBICOM), Beijing, China (2009), pp. 145–156

C. Luo, F. Wu, J. Sun, W.C. Chen, Efficient measurement generation and pervasive sparsity for compressive data gathering. IEEE Trans. Wirel. Commun. 9(12), 3728–3738 (2010)

J. Luo, L. Xiang, Does compressed sensing improve the throughput of wireless sensor networks?, in 2010 IEEE International Conference on Communications, Cape Town, South Africa (2010)

D. Ebrahimi, C. Assi, Optimal and efficient algorithms for projection-based compressive data gathering. IEEE Commun. Lett. 13(8), 1572–1575 (2013)

D. Ebrahimi, C. Assi, Compressive data gathering using random projection for energy efficient wireless sensor networks. Ad Hoc Netw. 16, 105–119 (2014)

H.F. Zheng, F. Yang, X.H. Tian, X.Y. Gan, X.B. Wang, Data gathering with compressive sensing in wireless sensor networks: a random walk based approach. IEEE Trans. Parallel Distrib. Syst. 26(1), 35–44 (2015)

E.J. Candès, M.B. Wakin, An introduction to compressive sampling. IEEE Signal Process. Mag. 25(2), 21–30 (2008)

W. Chen, I.J. Wassell, Optimized node selection for compressive sleeping wireless sensor networks. IEEE Trans. Veh. Technol. 65(2), 827–836 (2016)

W. Chen, I.J. Wassell, Cost-aware activity scheduling for compressive sleeping wireless sensor networks. IEEE Trans. Signal Process. 64(9), 2314–2323 (2016)

Y. Wu, Y. He, L. Shi, Energy-saving measurement in LoRaWAN-based wireless sensor networks by using compressed sensing. IEEE Access 8, 49477–49486 (2020)

R. Manchanda, K. Sharma, A novel framework for energy-efficient compressive data gathering in heterogeneous wireless sensor network. Int. J. Commun. Syst. 34(3), 4677 (2020)

Q. Ling, Z. Tian, Decentralized sparse signal recovery for compressive sleeping wireless sensor networks. IEEE Trans. Signal Process. 58(7), 3816–3827 (2010)

S. Mehrjoo, F. Khunjush, A. Ghaedi, Fully distributed sleeping compressive data gathering in wireless sensor networks. IET Commun. 14(5), 830–837 (2020)

N. Chauhan, P. Rawat, S. Chauhan, Reinforcement learning-based technique to restore coverage holes with minimal coverage overlap in wireless sensor networks. Arab. J. Sci. Eng. 47, 10847–10863 (2022)

A.F.E. Abadi, S.A. Asghari, M.B. Marvasti, G. Abaei, M. Nabavi, Y. Savaria, RLBEEP: Reinforcement-learning-based energy efficient control and routing protocol for wireless sensor networks. IEEE Access 10, 44123–44135 (2022)

H. Chen, X. Li, F. Zhao, A reinforcement learning-based sleep scheduling algorithm for desired area coverage in solar-powered wireless sensor networks. IEEE Sens. J. 16(8), 2763–2774 (2016)

P.S. Banerjee, S.N. Mandal, D. De, B. Maiti, RL-Sleep: temperature adaptive sleep scheduling using reinforcement learning for sustainable connectivity in wireless sensor networks. Sustain. Comput. Inform. Syst. 26, 1–18 (2020)

D. Ye, M. Zhang, A self-adaptive sleep/wake-up scheduling approach for wireless sensor networks. IEEE Trans. Cybern. 48(3), 979–992 (2018)

K.P. Mhatre, U.P. Khot, Energy efficient opportunistic routing with sleep scheduling in wireless sensor networks. Wirel. Pers. Commun. 112(2), 1243–1263 (2020)

M. Mukherjee, L. Shu, R.V. Prasad, D. Wang, G.P. Hancke, Sleep scheduling for unbalanced energy harvesting in industrial wireless sensor networks. IEEE Commun. Mag. 8, 197817–197827 (2019)

M. Tian, W. Jiao, G. Shen, The charging strategy combining with the node sleep mechanism in the wireless rechargeable sensor network. IEEE Access 8, 197817–197827 (2020)

N.M. Shagari, M.Y.I. Idris, R.B. Salleh, I. Ahmedy, G. Murtaza, H.A. Shehadeh, Heterogeneous energy and traffic aware sleep-awake cluster-based routing protocol for wireless sensor network. IEEE Access 8, 12232–12252 (2020)

C. Lin, Y. Peng, L. Chang, Z. Chen, Joint deployment and sleep scheduling of the internet of things. Wirel. Netw. 28(6), 2471–2483 (2022)

M. Ibrahim, H. Harb, A. Nasser, A. Mansour, C. Osswald, Aggregation-scheduling based mechanism for energy-efficient multivariate sensor networks. IEEE Sensors J. 22(16), 16662–16672 (2022)

H. Jaward, R. Nordin, A. Gharghan, A. Jawad, M. Ismail, M. Abu-AlShaeer, Power reduction with sleep/wake on redundant data (SWORD) in a wireless sensor network for energy-efficient precision agriculture. Sensors 18(10), 3450 (2018)

P. Rawat, S. Chauhan, Particle swarm optimization based sleep scheduling and clustering protocol in wireless sensor network. Peer-to-Peer Netw. Appl. 15(3), 1417–1436 (2022)

J. Akram, H.S. Munawar, A.Z. Kouzani, M.A.P. Mahmud, Using adaptive sensors for optimized target coverage in wireless sensor networks. Sensors 22(1083), 1–23 (2022)

N. Jain, V.A. Bohara, A. Gupta, iDEG: integrated data and energy gathering framework for practical wireless sensor networks using compressive sensing. IEEE Sens. J. 19(3), 1040–1051 (2019)

M.A. Mazaideh, J. Levendovszky, A multi-hop routing algorithm for WSNs based on compressive sensing and multiple objective genetic algorithm. J. Commun. Netw. 23(2), 138–147 (2021)

A. Aziz, W. Osamy, A.M. Khedr, A.A. El-Sawy, K. Singh, Grey wolf based compressive sensing scheme for data gathering in IoT based heterogeneous WSNs. Wirel. Netw. 26(5), 3395–3418 (2020)

C. Lin, G.J. Han, X.Y. Qi, J.X. Du, T.T. Xu, M.M. Garcla, Energy-optimal data collection for unmanned aerial vehicle-aided industrial wireless sensor network-based agricultural monitoring system: a clustering compressed sampling approach. IEEE Trans. Ind. Inf. 17(6), 4411–4420 (2021)

A. Aziz, K. Singh, W. Osamy, A.M. Khedr, An efficient compressive sensing routing scheme for Internet of things based wireless sensor networks. Wirel. Pers. Commun. 114(3), 1905–1925 (2020)

M.Q. Zhang, H.X. Zhang, D.F. Yuan, M.G. Zhang, Learning-based sparse data reconstruction for compressed data aggregation in IoT networks. IEEE Internet Things J. 8(14), 11732–11742 (2021)

R. Sinde, F. Begum, K. Njay, S. Kaijage, Refining network lifetime of wireless sensor network using energy-efficient clustering and DRL-based sleep scheduling. Sensors 20(5), 1540 (2020)

Z. Guo, H. Chen, A reinforcement learning-based sleep scheduling algorithm for cooperative computing in event-driven wireless sensor networks. Ad Hoc Netw. 130, 102837 (2022)

W. Wang, M. Garofalakis, K. Ramchandran, Distributed sparse random projections for refinable approximation, in 6th International Symposium on Information Processing Sensor Networks (2007), pp. 331–339

W. Heinzelman, A. Chandrakasan, H. Balakrishnan, An application-specific protocol architecture for wireless microsensor networks. IEEE Trans. Wireless Commun. 1(4), 660–670 (2002)

M. Shan, G. Chen, D. Luo, X. Zhu, X. Wu, Building maximum lifetime shortest path data aggregation trees in wireless sensor networks. ACM Trans. Sensor Netw. 11(1), 2629662 (2014)

Acknowledgements

The authors would like to thank all anonymous reviewers for their invaluable comments.

Funding

This research was supported by the National Natural Science Foundation of China (62061009).

Contributions

XW and HC conceived the original idea and completed the theoretical analysis. SL improved the system model and algorithm of the article. All authors provided useful discussions and reviewed the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, X., Chen, H. & Li, S. A reinforcement learning-based sleep scheduling algorithm for compressive data gathering in wireless sensor networks. J Wireless Com Network 2023, 28 (2023). https://doi.org/10.1186/s13638-023-02237-4
