Abstract

Heterogeneous image fusion combines images captured by different sensors into a single image that presents more information than any of the original images. This paper studies a compressive sensing image fusion algorithm that applies shearlet and wavelet transforms to represent the images sparsely. Compressively sampling the coefficients of the original images reduces the computational complexity of the fusion process and improves fusion efficiency. We focus on image fusion rules in the compressive domain: coefficients of different frequencies are compressed at different sampling rates and fused according to different fusion rules. As a result, good fusion results can be obtained at a low sampling rate.

1 Introduction

Heterogeneous sensor networks consist of multimodal sensors [1, 2] such as radar, optical, infrared, and acoustic sensors. Infrared and visible image fusion in heterogeneous sensor networks is an active research topic in image processing [3–6]. Infrared and visible band imaging have complementary advantages, and the fused image contains information from both bands, which is widely used in visual monitoring, industrial testing, and many other fields [7, 8]. With the rapid improvement of image sensor production, high-resolution cameras can capture images very clearly, but transmitting and storing such a large amount of image data is a real challenge. This paper therefore proposes an image fusion algorithm in the compressive sensing domain [9, 10], which reduces the amount of data to be stored and transmitted and lowers the computational complexity, so that the overall data processing efficiency of the system improves.

2 Image sparse representation

Commonly used transforms for image sparse representation include the wavelet, curvelet, and contourlet transforms [11–13]. The shearlet transform, developed in recent years, has advantages over the wavelet transform for sparse representation of high-dimensional data because it expresses geometric singularities much better [14, 15]. We therefore apply the shearlet transform and the wavelet transform successively for image sparse representation. This combines the advantages of two multi-resolution analysis tools [16, 17] and further increases the sparsity of the signals, so less data is needed to complete the image fusion efficiently.

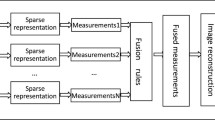

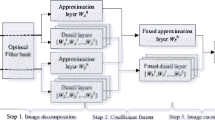

The shearlet transform decomposes the original images into high-frequency coefficients and low-frequency coefficients. Each set of coefficients is then processed separately by the wavelet transform, which yields four different frequency components (the image sparse representation model is shown in Fig. 1). The sparsity of these components differs considerably, so the coefficients are sampled at different sampling rates according to the sparsity of each frequency component.

The sparsity of the low-frequency coefficients is relatively low, so there is little benefit in compressing the wavelet low-frequency coefficients of the shearlet low-frequency components (LL). The LL components are therefore not compressively sampled but fused directly. The wavelet low-frequency coefficients of the shearlet high-frequency components (LH) and the wavelet high-frequency coefficients of the shearlet low-frequency components (HL) are sparser than the LL components, so they can be compressively sampled and fused in the compressive sensing domain. The highest frequency components are the wavelet high-frequency coefficients of the shearlet high-frequency components (HH). They are the sparsest, so they can be compressively sampled at a lower sampling rate than the HL and LH components.
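The per-component sampling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the random Gaussian measurement matrix, the column-wise measurement `Y = Phi @ X`, and the names `MEASUREMENTS`, `make_matrices`, and `sample_components` are all hypothetical, since the text does not specify the measurement operator. The measurement counts (200 for LH and HL, 100 for HH) mirror the mixed-rate setting used in the experiments of Section 4.

```python
import numpy as np

# Measurements kept per 512-sample column; None means "do not compress".
# Assumed values that mirror the mixed-rate experiment (200 for LH/HL, 100 for HH).
MEASUREMENTS = {"LL": None, "LH": 200, "HL": 200, "HH": 100}


def make_matrices(n, rng):
    """Build one random Gaussian m x n measurement matrix per compressed component."""
    return {
        name: rng.standard_normal((m, n)) / np.sqrt(m)
        for name, m in MEASUREMENTS.items()
        if m is not None
    }


def sample_components(components, matrices):
    """Compress each coefficient matrix column-wise (Y = Phi @ X); LL passes through."""
    return {
        name: matrices[name] @ x if name in matrices else x
        for name, x in components.items()
    }
```

In this sketch the same matrices would be reused for the infrared and the visible image, so that the measurements of corresponding components are comparable when they are fused.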

3 Image fusion rules

The image fusion rule determines the retention degree of each original image in the fused image. In this paper, the fusion rules are designed for the components of different frequency coefficients after compression.

The low-frequency coefficients (LL components) represent the approximation of the original image, and their fusion rule largely determines the brightness and contrast of the fused image. Infrared and visible cameras produce quite different images because their imaging principles differ. In environments favorable to visible light imaging, the visible image carries a large amount of information and rich texture, whereas in dark or foggy environments the infrared image has the advantage of being clear and stable and of indicating thermal information. For these low-frequency coefficients, a weighted fusion rule based on local spatial frequency is suitable: the image with richer local spatial information receives the larger weight in the fusion process. Formula (1) shows the definition of local spatial frequency.

In the above formula, RF(x, y) represents the local spatial frequency of the pixel (x, y) in the row direction, computed within a window of dimension W × W; CF(x, y) represents the local spatial frequency in the column direction; and SF(x, y) is the local spatial frequency of the point (x, y).
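A rough sketch of the spatial-frequency-weighted LL fusion is given below. It assumes the commonly used definition, in which RF and CF are the root mean square of first differences along rows and columns inside the W × W window and SF = sqrt(RF² + CF²). The normalization, the edge padding, the weight formula `SF_A / (SF_A + SF_B)`, and the function names are assumptions and may differ from the paper's Formula (1).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_mean(a, w):
    """Mean of `a` over the w x w window centred on each pixel (w odd, edge-padded)."""
    pad = w // 2
    padded = np.pad(a, pad, mode="edge")
    return sliding_window_view(padded, (w, w)).mean(axis=(-2, -1))


def local_spatial_frequency(img, w=3):
    """SF(x, y) = sqrt(RF^2 + CF^2), with RF and CF taken over a w x w window."""
    img = np.asarray(img, dtype=float)
    d_row = np.zeros_like(img)  # squared differences between horizontal neighbours
    d_col = np.zeros_like(img)  # squared differences between vertical neighbours
    d_row[:, 1:] = (img[:, 1:] - img[:, :-1]) ** 2
    d_col[1:, :] = (img[1:, :] - img[:-1, :]) ** 2
    return np.sqrt(local_mean(d_row, w) + local_mean(d_col, w))


def fuse_ll(ll_a, ll_b, w=3, eps=1e-12):
    """Weighted average of two LL components; the weight follows local SF (assumed form)."""
    ll_a = np.asarray(ll_a, dtype=float)
    ll_b = np.asarray(ll_b, dtype=float)
    sf_a = local_spatial_frequency(ll_a, w)
    sf_b = local_spatial_frequency(ll_b, w)
    weight_a = (sf_a + eps) / (sf_a + sf_b + 2 * eps)  # 0.5 where both are flat
    return weight_a * ll_a + (1.0 - weight_a) * ll_b
```

With this weighting, a flat region in one image contributes almost nothing wherever the other image has local texture, and two equally flat regions are simply averaged.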

The LH and HL image components correspond to the boundary of the smooth part of the image. In order to highlight the feature of the target region and ensure that the local region with large energy can be clearly reflected in the fused image, the fusion rule will be based on the regional energy feature. The regional energy feature is defined as follows:

The fusion rule for the LH and HL components favors retaining the coefficients with large regional energy, so it is defined as follows:
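As a hedged illustration only, the sketch below assumes the usual windowed sum-of-squares form of regional energy and a simple choose-the-larger-energy selection. The paper's own definitions may include window weights or thresholds, and in the proposed method the rule is applied to compressive measurements rather than to raw coefficients. The function names and the default window size are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def regional_energy(coeffs, w=3):
    """Sum of squared coefficients in the w x w window around each position (w odd)."""
    pad = w // 2
    squared = np.asarray(coeffs, dtype=float) ** 2
    padded = np.pad(squared, pad, mode="constant")  # zero energy outside the array
    return sliding_window_view(padded, (w, w)).sum(axis=(-2, -1))


def fuse_by_regional_energy(c_a, c_b, w=3):
    """Keep, at each position, the coefficient from the source with larger regional energy."""
    c_a = np.asarray(c_a, dtype=float)
    c_b = np.asarray(c_b, dtype=float)
    return np.where(regional_energy(c_a, w) >= regional_energy(c_b, w), c_a, c_b)
```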

The HH components are the highest frequency components after decomposition and correspond to the detail textures of the image. They are therefore fused with the absolute maximum rule, so that the fused image keeps rich texture information.
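The absolute maximum rule can be written in a few lines. The sketch below is illustrative and uses a hypothetical function name; ties are resolved in favor of the first input, which is an arbitrary choice.

```python
import numpy as np


def fuse_abs_max(c_a, c_b):
    """At each position keep the coefficient with the larger absolute value."""
    c_a = np.asarray(c_a)
    c_b = np.asarray(c_b)
    return np.where(np.abs(c_a) >= np.abs(c_b), c_a, c_b)
```

For example, fusing `[1, -4, 2]` with `[-3, 2, 2]` keeps `-3`, `-4`, and `2`.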

4 Experiment and data analysis

To verify the fusion performance of our algorithm, two sets of infrared and visible images are used in experiments at different compressive sampling rates. One set shows a cup photographed close up indoors, and the other shows an outdoor scene photographed in a yard. Each set consists of strictly registered infrared and visible images, and all images are 512 × 512 pixels (shown in Fig. 2).

Figures 3 and 4 each consist of six panels. Fig. 3a and Fig. 4a show the fusion of the two original images after shearlet and wavelet transforms without compressive sampling, with the coefficients of every scale fused by the absolute maximum rule. Fig. 3b and Fig. 4b show the results of the fusion rules proposed in this paper, again without compressive sampling. The remaining panels apply compressive sensing to all coefficients except the LL components. In Fig. 3c and Fig. 4c, the coefficients of each layer are compressed to 300 pixels in each row and each column, a coefficient sampling rate of 58.6%. In Fig. 3d and Fig. 4d, they are compressed to 200 pixels (39.0%), and in Fig. 3e and Fig. 4e to 100 pixels (19.5%). Fig. 3f and Fig. 4f use different sampling rates for the HL, LH, and HH components: 39.0% for HL and LH, as in Fig. 3d and Fig. 4d, and 19.5% for HH, as in Fig. 3e and Fig. 4e.
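The quoted sampling rates correspond to the ratio of retained samples to the original 512 samples along one dimension, which the short check below reproduces. The function name `sampling_rate` is illustrative. Note that 200/512 is 39.06%, which the text reports as 39.0%.

```python
N = 512  # side length of the test images, as stated above


def sampling_rate(m, n=N):
    """Ratio of retained samples to original samples along one dimension."""
    return m / n


for m in (300, 200, 100):
    print(f"{m}/{N} = {sampling_rate(m):.4f}")
# 300/512 = 0.5859
# 200/512 = 0.3906
# 100/512 = 0.1953
```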

Fig. 3 Fusion results for the cup images. (a) Absolute maximum fusion rule, sampling rate = 100%; (b) proposed fusion rule, sampling rate = 100%; (c) proposed fusion rule, sampling rate = 58.6%; (d) proposed fusion rule, sampling rate = 39.0%; (e) proposed fusion rule, sampling rate = 19.5%; (f) proposed fusion rule, different sampling rates for different components

Fig. 4 Fusion results for the yard images. (a) Absolute maximum fusion rule, sampling rate = 100%; (b) proposed fusion rule, sampling rate = 100%; (c) proposed fusion rule, sampling rate = 58.6%; (d) proposed fusion rule, sampling rate = 39.0%; (e) proposed fusion rule, sampling rate = 19.5%; (f) proposed fusion rule, different sampling rates for different components

Judged subjectively by the human eye, the fusion rule proposed in this paper exploits the advantages of both imaging bands: it retains the rich texture information of the visible light images as well as the thermal information of the infrared images, and the image brightness is uniform.

As the sampling rate decreases, the quality of the fused image declines, mainly through blurring of the texture detail. In particular, Fig. 3e and Fig. 4e are much less clear than Fig. 3c, d and Fig. 4c, d. However, Fig. 3f and Fig. 4f differ little from Fig. 3d and Fig. 4d, because in Fig. 3f and Fig. 4f the sampling rate differs between frequency components.

The Piella method is an established method for evaluating fused images. It uses three indices, Q, Q_W, and Q_E, to evaluate image fusion performance [18]: Q is the fusion quality index, Q_W is the weighted fusion quality index, and Q_E is the edge-dependent fusion quality index. Each index lies in [−1, 1], and the closer it is to 1, the better the fusion result. We use the Piella parameters to evaluate the performance of our algorithm. The results are shown in Table 1.
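For orientation, the sketch below computes a simplified version of the fusion quality index Q. It builds on the Wang-Bovik universal image quality index and weights each window by the relative local variance of the two source images. The use of non-overlapping blocks, the block size, the handling of flat blocks, and the function names are assumptions of this sketch; Q_W and Q_E are not covered, and the values it produces are not comparable with those in Table 1.

```python
import numpy as np


def q0(x, y):
    """Wang-Bovik universal image quality index between two equally sized patches."""
    x = np.asarray(x, dtype=float).ravel()
    y = np.asarray(y, dtype=float).ravel()
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    denom = (x.var() + y.var()) * (mx ** 2 + my ** 2)
    if denom == 0.0:
        # Degenerate patch (flat or zero mean): convention chosen for this sketch.
        return 1.0 if np.array_equal(x, y) else 0.0
    return 4.0 * cov * mx * my / denom


def fusion_quality(a, b, f, block=8):
    """Simplified Q(a, b, f): variance-weighted mean of Q0 over non-overlapping blocks."""
    a, b, f = (np.asarray(im, dtype=float) for im in (a, b, f))
    scores = []
    for r in range(0, a.shape[0] - block + 1, block):
        for c in range(0, a.shape[1] - block + 1, block):
            win = (slice(r, r + block), slice(c, c + block))
            s_a, s_b = a[win].var(), b[win].var()  # local saliency of each source
            lam = 0.5 if s_a + s_b == 0.0 else s_a / (s_a + s_b)
            scores.append(lam * q0(a[win], f[win]) + (1.0 - lam) * q0(b[win], f[win]))
    return float(np.mean(scores))
```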

The Piella parameters indicate that the proposed fusion method achieves good fusion results, especially in the edge processing of the fused images. As the compressive sampling rate decreases, the fusion quality drops significantly, which is consistent with subjective visual assessment. The evaluation parameters of Fig. 3e and Fig. 4e differ considerably from those of Fig. 3d and Fig. 4d, because the sampling rate in Fig. 3e and Fig. 4e is quite low. Fig. 3f and Fig. 4f use different sampling rates for different frequency components, and their evaluation results are very close to those of Fig. 3d and Fig. 4d. Using different sampling rates and fusion rules for different frequency components therefore offers a good balance between fusion quality and image compression ratio.

5 Conclusions

An image fusion algorithm in the compressive sensing domain is proposed for heterogeneous sensor networks. The shearlet transform and the wavelet transform are applied successively to represent the infrared and visible images sparsely, which clearly increases the signal sparsity. Experiments show that the proposed algorithm achieves a good balance between fusion quality and the amount of data to be processed, because different sampling rates and fusion rules are applied to the different frequency components of the coefficients.

References

Q Liang, X Cheng, D Chen, Opportunistic sensing in wireless sensor networks: theory and applications. IEEE Trans Comput 63(8), 2002–2010 (2014)

Q Liang, Situation understanding based on heterogeneous sensor networks and human-inspired favor weak fuzzy logic system. IEEE Syst. J. 5(2), 156–163 (2011)

J Liang, W Zhang, L Ren, H Ju, E Qu, Polarimetric dehazing method for visibility improvement based on visible and infrared image fusion. Appl Optics 55(29), 8221–8226 (2016)

Z Liu, Y Feng, Y Zhang, X Li, A fusion algorithm for infrared and visible images based on RDU-PCNN and ICA-bases in NSST domain. Infrared Phys. Technol. 79, 183–190 (2016)

A Wronkowicz, Automatic fusion of visible and infrared images taken from different perspectives for diagnostics of power lines. Quant. Infrared Thermogr. J. 13(2), 155–169 (2016)

D Bavirisetti, R Dhuli, Fusion of infrared and visible sensor images based on anisotropic diffusion and Karhunen-Loeve transform. IEEE Sensors J. 16(1), 203–209 (2016)

I Pavlidis, V Morellas, N Papanikolopoulos, A vehicle occupant counting system based on near-infrared phenomenology and fuzzy neural classification. IEEE Trans. Intell. Transp. Syst. 1(2), 72–85 (2000)

G Batchuluun, Y Kim, J Kim, H Hong, R Kang, Robust behavior recognition in intelligent surveillance environments. Sensors 16(7), 1010 (2016)

X Li, S Qin, Efficient fusion for infrared and visible images based on compressive sensing principle. IET Image Process 5(2), 141–147 (2011)

T Wan, Z Qin, An application of compressive sensing for image fusion. Int. J. Comput. Math. 88(18), 3915–3930 (2011)

R Soulard, P Carré, Elliptical monogenic wavelets for the analysis and processing of color images. IEEE Trans. Signal Process. 64(6), 1535–1549 (2016)

N Eslahi, A Aghagolzadeh, Compressive sensing image restoration using adaptive curvelet thresholding and nonlocal sparse regularization. IEEE Trans Image Process 25(7), 3126–3140 (2016)

MN Do, M Vetterli, The contourlet transform: an efficient directional multiresolution image representation. IEEE Trans Image Process 14(12), 2091–2106 (2005)

W Lim, The discrete shearlet transform: a new directional transform and compactly supported shearlet frames. IEEE Trans Image Process 19(5), 1166–1180 (2010)

G Kutyniok, J Lemvig, W Lim, Shearlets and optimally sparse approximations. Shearlets, 145–197 (2011)

Y Tong, M Zhao, Z Wei, L Liu, Compressive sensing image-fusion algorithm in wireless sensor networks based on blended basis functions. EURASIP J. Wirel. Commun. Netw. 2014(1), 1–6 (2014)

L Ding, X Zhao, Shearlet-wavelet regularized semismooth newton iteration for image restoration. Math Probl Eng 2015(5), 1–12 (2015)

G Piella, H Heijmans, A new quality metric for image fusion. Image Processing 3, 173–176 (2003)

Acknowledgements

The authors would like to thank Tianjin Key Laboratory of Wireless Mobile Communications and Power Transmission for their support.

Funding

The authors would like to thank Tianjin Edge Technology and Applied Basic Research Project (14JCYBJC15800), Tianjin Normal University Doctoral Foundation (52XB1603), and Tianjin Normal University Application Development Foundation (52XK1601) in China for the funding.

Availability of data and materials

The datasets supporting the conclusions of this article are included within the article (and its additional file(s)).

Authors’ contributions

YT and JC conceived and designed the study. Both authors read and approved the manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable

Ethics approval and consent to participate

Not applicable

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Tong, Y., Chen, J. Compressive sensing image fusion in heterogeneous sensor networks based on shearlet and wavelet transform. J Wireless Com Network 2017, 52 (2017). https://doi.org/10.1186/s13638-017-0837-z
