Abstract

In this paper, we apply a latent class model (LCM) to the task of speaker diarization. LCM is similar to Patrick Kenny’s variational Bayes (VB) method in that it uses soft information and avoids premature hard decisions in its iterations. In contrast to the VB method, which is based on a generative model, LCM provides a framework that accommodates both generative and discriminative models. In this work, the discriminative property is realized through the use of i-vectors (Ivec), probabilistic linear discriminant analysis (PLDA), and a support vector machine (SVM). Systems denoted as LCM-Ivec-PLDA, LCM-Ivec-SVM, and LCM-Ivec-Hybrid are introduced. In addition, three further improvements are applied to enhance performance: (1) adding neighbor windows to extract more speaker information for each short segment; (2) using a hidden Markov model to avoid frequent speaker change points; and (3) using agglomerative hierarchical clustering for initialization and to provide hard and soft priors, in order to overcome the sensitivity to initialization. Experiments on the National Institute of Standards and Technology Rich Transcription 2009 speaker diarization database, under the single distant microphone condition, show that the proposed methods achieve substantial relative reductions in diarization error rate (DER) compared with mainstream systems. Compared to the VB method, the relative improvements of the LCM-Ivec-PLDA, LCM-Ivec-SVM, and LCM-Ivec-Hybrid systems are 23.5%, 27.1%, and 43.0%, respectively. Experiments on our collected database, CALLHOME97, CALLHOME00, and the SRE08 short2-summed trial condition also show that the proposed LCM-Ivec-Hybrid system has the best overall performance.


1 Introduction

The speaker diarization task addresses the problem of “who spoke when” in an audio stream by splitting the audio into homogeneous regions labeled with speaker identities [1]. It has wide applications in automatic audio indexing, document retrieval, and speaker-dependent automatic speech recognition.

In the field of speaker diarization, variational Bayes (VB), proposed by Patrick Kenny [2–5], and the VB-hidden Markov model (HMM), introduced by Mireia Diez [6], have become the state-of-the-art approaches. These systems have two characteristics. First, unlike mainstream approaches (i.e., segmentation and clustering approaches, discussed in the following section), they use fixed-length segmentation instead of speaker change point detection, dividing an audio recording into short, uniform segments. These segments are short enough that each can be regarded as containing only one speaker. This type of segmentation shifts the difficulty to the clustering stage and requires a better clustering algorithm that takes temporal correlation into account. Second, the VB approach uses soft clustering, which avoids premature hard decisions. Despite its accuracy, the approach still has some deficiencies. VB is a single-objective method: its goal is to increase the overall likelihood of a generative model, not to discriminate between speakers. Furthermore, because the segments are very short, the estimated probability of an individual segment given a particular speaker is inaccurate, which may degrade system performance. In addition, some researchers have noted that the VB system is very sensitive to its initialization [7]. For example, if one speaker dominates the recording, a random prior tends to assign the segments evenly to each speaker, leading to a poor result.

In this paper, to address the drawbacks of VB, we apply a latent class model (LCM) to speaker diarization. LCM was originally introduced by Lazarsfeld and Henry [8] and is usually used to formulate latent attitudinal variables from dichotomous survey items [9, 10]. This model allows us to compute \(p(\mathcal {X}_{m}, \mathcal {Y}_{s}, i_{{ms}})\), the likelihood that both the segment representation \(\mathcal {X}_{m}\) and the estimated class representation \(\mathcal {Y}_{s}\) come from the same speaker, in a more flexible and discriminative way. We introduce probabilistic linear discriminant analysis (PLDA) and a support vector machine (SVM) into this computation and propose the LCM-Ivec-PLDA, LCM-Ivec-SVM, and LCM-Ivec-Hybrid systems. Furthermore, to address the problem caused by the shortness of each segment, we exploit the temporal continuity of speakers and take the neighbors of \(\mathcal {X}_{m}\) into account at the data and score levels to improve the accuracy of \(p(\mathcal {X}_{m},\mathcal {Y}_{s})\). A hidden Markov model (HMM) is applied to smooth out frequent speaker changes. When the speakers are imbalanced, we use agglomerative hierarchical clustering (AHC) [11] to reduce the system's sensitivity to initialization.

The parameter selection experiments are mainly carried out on the NIST RT09 SPKD database [12] and our collected speaker-imbalanced database. In practice, the number of speakers in a meeting or telephone call is relatively easy to obtain, so we assume that this number is known in advance. RT09 has two evaluation conditions: single distant microphone (SDM), where only one microphone channel is involved, and multiple distant microphone (MDM), where multiple microphone channels are involved. In this paper, we mainly consider the speaker diarization task under the SDM condition. We also conduct performance comparison experiments on RT09, CALLHOME97 [13], CALLHOME00 (a subtask of NIST SRE00), and the SRE08 short2-summed trial condition. Experimental results show that the proposed methods perform better than the mainstream systems.

The remainder of this paper is organized as follows. Section 2 describes mainstream approaches and algorithms. Section 3 introduces the latent class model (LCM), and Section 4 implements the LCM-Ivec-PLDA, LCM-Ivec-SVM, and LCM-Ivec-Hybrid systems. Further improvements are presented in Section 5. Section 6 discusses the differences between our proposed methods and related work. Experiments are carried out and the results are analyzed in Section 7. Conclusions are drawn in Section 8.

2 Mainstream approaches and algorithms

Speaker diarization is defined as the task of labeling speech with the corresponding speaker. The most common approach consists of speaker segmentation and clustering [1, 14].

The mainstream approach to speaker segmentation finds speaker change points based on a similarity metric. Such metrics include the Bayesian information criterion (BIC) [15], Kullback-Leibler divergence [16], the generalized likelihood ratio (GLR) [17], and i-vector/PLDA [18]. More recently, metrics based on deep neural networks (DNN) [19, 20], convolutional neural networks (CNN) [21, 22], and recurrent neural networks (RNN) [23, 24] have also been proposed. However, DNN-related methods need a large amount of labeled data and may lack robustness when working in different acoustic environments.

In speaker clustering, segments belonging to the same speaker are grouped into a cluster. The problem of measuring segment similarity is the same as in speaker segmentation, and the metrics described above can also be used for clustering. Clustering strategies based on hard decisions include agglomerative hierarchical clustering (AHC) [11] and divisive hierarchical clustering (DHC) [25]. A soft-decision strategy is variational Bayes (VB) [5], which is combined with eigenvoice modeling [2]. To take temporal dependency into account, HMM [6] and hidden distortion models (HDM) [26, 27] have been successfully applied to speaker diarization. There are also some DNN-based clustering strategies. In [28], a clustering algorithm is introduced that trains a speaker separation DNN and adapts its last layer to specific segments. Another paper [29] introduces a DNN-HMM-based clustering method that estimates the emission probabilities with a discriminative model rather than a generative one (i.e., it replaces GMMs with DNNs), achieving better performance.

Some diarization systems based on i-vectors, VB, or DNNs are trained in advance, rely on knowledge of the application scenario, and require a large amount of matched training data. They perform well under fixed conditions. Other diarization systems, such as those based on BIC, HMM, or HDM, require little prior training. They are condition independent and more robust to changes in conditions, so they perform better when conditions such as channels, noise, or languages vary frequently.

2.1 Bottom-up approach

The bottom-up approach, often referred to as agglomerative hierarchical clustering (AHC), is the most popular one in speaker diarization [11]. This approach treats each segment, delimited by speaker change points, as an individual cluster and merges a pair of clusters into a new one based on a nearest-neighbor criterion. The merging process is repeated until a stopping criterion is satisfied. Merging requires a similarity function. When clusters are represented by a single Gaussian or a Gaussian mixture model (GMM), the Bayesian information criterion (BIC) [30–32] is often adopted. When clusters are represented by i-vectors, cosine distance [33] or probabilistic linear discriminant analysis (PLDA) [34–37] is usually used. The stopping criterion can be based either on a threshold or on a pre-assumed number of speakers [38, 39].
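As an illustration of the bottom-up procedure, the sketch below clusters segment i-vectors with average-linkage AHC. It assumes cosine similarity as the similarity function and a known number of speakers as the stopping criterion (one of the two options mentioned above); the function name `ahc_cosine` is hypothetical, and the quadratic pair search is written for clarity rather than speed.

```python
import numpy as np


def ahc_cosine(vectors, num_speakers):
    """Average-linkage AHC on cosine similarity, stopping at a known number of speakers.

    vectors: (M, R) array of segment i-vectors. Returns one integer label per segment.
    """
    # Length-normalize so that dot products are cosine similarities.
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    clusters = [[i] for i in range(len(unit))]  # each segment starts as its own cluster
    while len(clusters) > num_speakers:
        best_sim, best_pair = -np.inf, (0, 1)
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Average pairwise cosine similarity between clusters a and b.
                sim = float(np.mean(unit[clusters[a]] @ unit[clusters[b]].T))
                if sim > best_sim:
                    best_sim, best_pair = sim, (a, b)
        a, b = best_pair
        # Merge the most similar pair; b > a, so popping b leaves index a valid.
        clusters[a].extend(clusters.pop(b))
    labels = np.empty(len(unit), dtype=int)
    for label, members in enumerate(clusters):
        labels[members] = label
    return labels
```

Swapping the cosine similarity for a PLDA log-likelihood ratio would only change the line that computes `sim`; the merge loop and the stopping rule stay the same.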

The bottom-up approach is more sensitive than the top-down approach to nuisance variations such as speech channel, speech content, or noise [40]. A similarity function that is robust to these nuisance variations is therefore crucial to this approach.

2.2 Top-down approach

The top-down approach is usually referred to as divisive hierarchical clustering (DHC) [25]. In contrast with the bottom-up approach, the top-down approach starts by treating all segments as unlabeled. Based on a selection criterion, some segments are chosen from the unlabeled segments, attributed to a new cluster, and labeled. This selection procedure is repeated until no unlabeled segments are left or until a stopping criterion, similar to those employed in the bottom-up approach, is reached. The top-down approach has been reported to perform worse on the NIST RT database [25] and has thus received less attention. However, a thorough comparative study of the two approaches [40] demonstrates that they have similar performance.

The top-down approach is characterized by high computational efficiency but is less discriminative than the bottom-up approach. In addition, it is less sensitive to nuisance variation and can be improved through cluster purification [25].

Both approaches share a common pitfall: they make premature hard decisions, which may cause error propagation. Although these errors can be corrected by Viterbi resegmentation in subsequent iterations [40, 41], a soft decision is still more desirable.

2.3 Hidden distortion model

Unlike AHC or DHC, HMM takes temporal dependencies between samples into account. The hidden distortion model (HDM) [26, 27] can be seen as a generalization of HMM that overcomes some of its limitations. HMM is based on the probabilistic paradigm, whereas HDM is based on distortion theory. In HMM, there is no regularization option for adjusting the transition probabilities. In HDM, regularization of the transition cost matrix, which replaces the transition probability matrix, is a natural part of the model. Neither HMM nor HDM suffers from error propagation: both perform resegmentation via a Viterbi or forward-backward algorithm, and each iteration may fix errors from previous ones.

2.4 Variational Bayes

Variational Bayes (VB) is a soft speaker clustering method introduced for the speaker diarization task [2, 5, 6]. Suppose a recording is uniformly divided into fixed-length segments \(\mathcal {X}= \{\mathcal {X}_{1},\cdots,\mathcal {X}_{m},\cdots,\mathcal {X}_{M}\}\), where the subscript m is the time index, 1≤m≤M, and M is the number of segments. Let \(\mathcal {Y}=\{\mathcal {Y}_{1}, \cdots, \mathcal {Y}_{s}, \cdots, \mathcal {Y}_{S}\}\) be the speaker representations, where s is the speaker index, 1≤s≤S, and S is the number of speakers. Let \(I=\{i_{ms}\}\), where \(i_{ms}\) indicates whether segment m belongs to speaker s. In speaker diarization, \(\mathcal {X}\) is the observed data, and \(\mathcal {Y}\) and I are hidden variables. The goal is to find \(\mathcal {Y}\) and I that maximize \(\log q(\mathcal {X})\). Using the Kullback-Leibler divergence, the lower bound of the log likelihood \(\log q(\mathcal {X})\) can be expressed as

$$ \log q(\mathcal{X}) \geq \sum\limits_{I} \int q(\mathcal{Y},I) \log \frac{q(\mathcal{X},\mathcal{Y},I)}{q(\mathcal{Y},I)} \, d\mathcal{Y} $$(1)

The equality holds if and only if \(q(\mathcal {Y},I) = q(\mathcal {Y},I|\mathcal {X})\). VB assumes the factorization \(q(\mathcal {Y},I) = q(\mathcal {Y}) q(I)\) to approximate the true posterior \(q(\mathcal {Y},I|\mathcal {X})\) [2]. Then, \(q(\mathcal {Y})\) and \(q(I)\) are iteratively refined to increase the lower bound of \(\log q(\mathcal {X})\). The final diarization labels are assigned according to the segment posteriors [2]. The implementation of the VB approach is shown in Algorithm 1. Compared with the bottom-up and top-down approaches, the VB approach uses a soft decision strategy and avoids premature hard decisions.

3 Latent class model

Suppose a sequence \(\mathcal {X}\) is divided into M segments, where \(\mathcal {X}_{m}\) is the representation of segment m, 1≤m≤M, and \(\mathcal {Y}_{s}\) is the representation of latent class s, 1≤s≤S. Each segment belongs to one of S independent latent classes. This relationship is denoted by the latent class indicator matrix \(I=\{i_{ms}\}\), where \(i_{ms}=1\) if segment m belongs to class s and \(i_{ms}=0\) otherwise.

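Our objective is to maximize the log-likelihood function under the constraint that there are S classes:

$$ \mathop{\arg\max}\limits_{Q,\mathcal{Y}} \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(\mathcal{X}_{m}, \mathcal{Y}_{s}, i_{{ms}}) $$(2)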

where \(Q=\{q_{ms}\}\) and \(q_{ms}\) is a posterior probability that will be explained later. Intuitively, if \(p(\mathcal {X}_{m}, \mathcal {Y}_{s}, i_{{ms}}) > p(\mathcal {X}_{m}, \mathcal {Y}_{s'}, i_{ms'})\) for all \(s' \neq s\), \(1 \leq s, s' \leq S\), we conclude that segment m belongs to class s. The above objective is intractable because \(\mathcal {Y}\) and I are unknown. We solve it with an iterative algorithm by introducing Q, as follows:

Step 1: The objective function is factorized as

$$ \begin{aligned} \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(\mathcal{X}_{m}, \mathcal{Y}_{s}, i_{{ms}}) & = \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(\mathcal{X}_{m}, \mathcal{Y}_{s}) p(i_{{ms}}|\mathcal{X}_{m}, \mathcal{Y}_{s}) \\ & = \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(\mathcal{X}_{m}, \mathcal{Y}_{s}) q_{{ms}} \end{aligned} $$(3)

In this step, \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is assumed to be known, and we use \(q_{ms}\) to denote \(p(i_{{ms}}|\mathcal {X}_{m}, \mathcal {Y}_{s})\) for simplicity. Note that \(q_{ms}\geq 0\) and \({\sum \nolimits }_{s=1}^{S} q_{{ms}} = 1\). Equation (3) is optimized using Jensen’s inequality and the Lagrange multiplier method. The updated \(q_{{ms}}^{(u)}\) is

$$ q_{{ms}}^{(u)} =\frac { q_{{ms}} p(\mathcal{X}_{m}, \mathcal{Y}_{s})} { {\sum\nolimits}_{s'=1}^{S} q_{ms'} p(\mathcal{X}_{m}, \mathcal{Y}_{s'})} $$(4)

In other words, step 1 updates \(q_{ms}\) given that \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is known.

Step 2: The objective function is factorized as

$$ \begin{aligned} \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(\mathcal{X}_{m}, \mathcal{Y}_{s}, i_{{ms}}) & = \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(i_{{ms}}) p(\mathcal{X}_{m}, \mathcal{Y}_{s}|i_{{ms}}) \\ & \approx \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} q_{{ms}} p(\mathcal{Y}_{s}) p(\mathcal{X}_{m} |\mathcal{Y}_{s}, i_{{ms}}) \end{aligned} $$(5)

Two approximations are used in this step. First, the posterior probability \(q_{ms}\) from step 1 is used as the prior probability \(p(i_{ms})\) in this step. Second, we assume \(p(\mathcal {Y}_{s}|i_{{ms}}) = p(\mathcal {Y}_{s})\). In our understanding, \(\mathcal {Y}_{s}\) is the speaker representation and \(i_{ms}\) is the indicator between segment and speaker; since \(\mathcal {X}_{m}\) is not referenced, \(\mathcal {Y}_{s}\) and \(i_{ms}\) are assumed to be independent of each other. A similar explanation is given in Kenny’s work; see (10) in [2]. This factorization places \(\mathcal {Y}_{s}\) in the position of a parameter, which provides a way to optimize it. Step 2 thus estimates \(\mathcal {Y}_{s}\), given that \(p(i_{ms})\) is known.

Step 3: The objective function is factorized as

$$ \begin{aligned} \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(\mathcal{X}_{m}, \mathcal{Y}_{s}, i_{{ms}}) & = \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} p(i_{{ms}}) p(\mathcal{X}_{m}, \mathcal{Y}_{s}|i_{{ms}}) \\ & \approx \sum\limits_{m=1}^{M} \log \sum\limits_{s=1}^{S} q_{{ms}} p(\mathcal{X}_{m}) p(\mathcal{Y}_{s} |\mathcal{X}_{m}, i_{{ms}}) \end{aligned} $$(6)

Two approximations are also used in this step. First, the posterior probability \(q_{ms}\) from step 1 is used as the prior probability \(p(i_{ms})\) in this step. Second, we assume \(p(\mathcal {X}_{m}|i_{{ms}}) = p(\mathcal {X}_{m})\). In our understanding, \(\mathcal {X}_{m}\) is the segment representation and \(i_{ms}\) is the indicator between segment m and speaker s; since \(\mathcal {Y}_{s}\) is not referenced, \(\mathcal {X}_{m}\) and \(i_{ms}\) are assumed to be independent of each other. Step 3 computes \(p(\mathcal {X}_{m}, \mathcal {Y}_{s} |i_{{ms}})\), given that \(p(i_{ms})\) and \(\mathcal {Y}_{s}\) are known. We approximate \(p(\mathcal {X}_{m}, \mathcal {Y}_{s}|i_{{ms}})\) through the posterior probability \(p(\mathcal {Y}_{s} |\mathcal {X}_{m}, i_{{ms}})\) rather than \(p(\mathcal {X}_{m} |\mathcal {Y}_{s}, i_{{ms}})\) so that the factorization can exploit the constraint that there are S speakers. In the next loop, \(p(\mathcal {X}_{m}, \mathcal {Y}_{s}|i_{{ms}})\) is used as the approximation of \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\), and the procedure returns to step 1; see Fig. 1.
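The three steps can be illustrated with a toy loop on raw vectors. This is not the i-vector system described later: as stand-in assumptions, \(\mathcal{Y}_s\) is the \(q\)-weighted mean of the segments (step 2), and \(p(\mathcal{Y}_s|\mathcal{X}_m, i_{ms})\) is a softmax over the S classes of the negative squared distance scaled by a hypothetical factor `kappa` (step 3). Step 1 applies Eq. (4), and the step-3 output is reused as \(p(\mathcal{X}_m,\mathcal{Y}_s)\) in the next loop. The function name `lcm_toy` is hypothetical.

```python
import numpy as np


def lcm_toy(x, num_classes, kappa=1.0, n_iter=10, seed=0):
    """Toy LCM iteration; x is an (M, D) array of segment representations."""
    rng = np.random.default_rng(seed)
    num_segments = x.shape[0]
    q = rng.dirichlet(np.ones(num_classes), size=num_segments)  # random soft prior
    p = np.full((num_segments, num_classes), 1.0 / num_classes)  # uninformative p(X_m, Y_s)
    for _ in range(n_iter):
        # Step 1, Eq. (4): q_ms <- q_ms p(X_m, Y_s), normalized over s.
        q = q * p
        q /= q.sum(axis=1, keepdims=True)
        # Step 2 (stand-in estimator): class representation = q-weighted mean.
        y = (q.T @ x) / q.sum(axis=0)[:, None]
        # Step 3 (stand-in scorer): softmax over the S classes of -kappa * ||x_m - y_s||^2.
        d2 = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=2)
        logits = -kappa * d2
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)  # reused as p(X_m, Y_s) in the next loop
    q = q * p  # final step-1 update with the last scores
    return q / q.sum(axis=1, keepdims=True)
```

The final binary decision is `q.argmax(axis=1)`. Replacing the two stand-ins with i-vector estimation and PLDA or SVM scoring gives the structure of the systems in the next section.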

After a few iterations, \(q_{ms}\) is used to make the final binary decision. We have several comments on the above iterations:

- Although the form of the objective function (\(\arg\max_{Q,\mathcal {Y}} \log p(\mathcal {X}, \mathcal {Y}, I)\)) is the same in all three steps, the prior setting, the factorized objective function, and the variables to be optimized differ; see Table 1 and Fig. 1. This will be further verified in the next section.

- The connections between step 1 and steps 2 and 3 are \(p(i_{ms})\) and \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\); see the upper left text box in Fig. 1. We use the posterior probabilities (\(p(i_{{ms}}|\mathcal {X}_{m}, \mathcal {Y}_{s})\) and \(p(\mathcal {X}_{m}, \mathcal {Y}_{s}|i_{{ms}})\)) from the previous step or loop as the prior probabilities (\(p(i_{ms})\) and \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\)) in the current step or loop.

- The main difference between step 2 and step 3 is whether \(\mathcal {Y}_{s}\) is known; see the lower left text box in Fig. 1. The goal of step 2 is to estimate the speaker representation more accurately, while the goal of step 3 is to compute \(p(\mathcal {X}_{m},\mathcal {Y}_{s}|i_{{ms}})\) more accurately. The explicit functions in step 2 and step 3 can differ as long as \(\mathcal {Y}_{s}\) is the same.

- Is a unified objective function necessary? No. A unified objective function is, of course, more rigorous in theory, e.g., VB [2]. In fact, the above model can be used to explain VB in [2]: Eqs. (15), (19), and (14) in [2] correspond to steps 1, 2, and 3, respectively. However, because the prior setting differs in each step, as stated in Table 1, we can take advantage of it to make better estimations or computations. For example, compared with VB, we have two additional ways to improve \(p(\mathcal {Y}_{s}, \mathcal {X}_{m}| i_{{ms}})\) in step 3. First, Eq. (14) in [2] is eigenvoice scoring given that \(\mathcal {X}_{m}\) and \(\mathcal {Y}_{s}\) are known, which can be replaced by a more effective scoring method, e.g., PLDA. Second, the S-class constraint turns the open-set problem into a closed-set problem.

- Does the loop converge? Convergence is not guaranteed. Since the estimation of \(\mathcal {Y}_{s}\) and the computation of \(p(\mathcal {X}_{m},\mathcal {Y}_{s}|i_{{ms}})\) are design choices, the loop may fail to converge for a poor implementation. However, if \( p^{u}(\mathcal {X}_{m},\mathcal {Y}^{u}_{s^{*}}|i_{ms^{*}}=1) > p(\mathcal {X}_{m},\mathcal {Y}_{s^{*}}|i_{ms^{*}}=1)\) is satisfied (a monotonic increase with an upper bound), the loop will converge to a local or global optimum. The star denotes the ground truth. \(\mathcal {Y}\) with superscript u denotes the \(\mathcal {Y}\) updated in step 2, and p with superscript u denotes another (or updated) similarity function in step 3. This also implies two ways to optimize the objective function: one is to use a better \(\mathcal {Y}\) (e.g., the updated \(\mathcal {Y}\) from step 2), and the other is to choose a more effective similarity function.

- Do the converged results conform to the diarization task? The Kullback-Leibler divergence between Q and I is \(D_{\text {KL}}(I\|Q) = - {\sum \nolimits }_{m=1}^{M} {\sum \nolimits }_{s=1}^{S} i_{ms} \log q_{{ms}} = - {\sum \nolimits }_{m=1}^{M} \log q_{ms^{*}}\), where \(i_{ms^{*}}=1\) is the ground truth. Minimizing this KL divergence is equivalent to maximizing \({\sum \nolimits }_{m=1}^{M} \log q_{ms^{*}}\). According to (4), \(q_{ms}\) depends on \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\). Hence, if \(p(\mathcal {X}_{m}, \mathcal {Y}_{s^{*}}) > p(\mathcal {X}_{m}, \mathcal {Y}_{s'})\) for all \(s' \neq s^{*}\), the converged results will satisfy the diarization task.

- In addition to the explicit unknowns Q and \(\mathcal {Y}\), the unknown factors also include implicit functions, e.g., \(p(\mathcal {X}_{m},\mathcal {Y}_{s}| i_{{ms}})\) in steps 2 and 3. These implicit functions are statistical models selected by the designer in the implementation. We emphasize that we can optimize the parameters of an already selected function, and we can also optimize by choosing a more effective function based on the known setting, e.g., moving from eigenvoice to PLDA or SVM scoring.

4 Implementation

If we regard speakers as latent classes, LCM is a natural solution to the speaker diarization task. The implementation needs to specify three further things: the segment representation \(\mathcal {X}_{m}\), the class representation \(\mathcal {Y}_{s}\), and the computation of \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\). Depending on the design considerations, these can incorporate different algorithms. Taking VB, LCM-Ivec-PLDA, and LCM-Ivec-SVM as examples:

1. In VB, \(\mathcal {X}_{m}\) is an acoustic feature, \(\mathcal {Y}_{s}\) is specified as a speaker i-vector, and \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is the eigenvoice scoring (Eq. (14) in [2]).

2. In LCM-Ivec-PLDA, \(\mathcal {X}_{m}\) is specified as a segment i-vector, \(\mathcal {Y}_{s}\) is specified as a speaker i-vector, and \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is calculated by PLDA.

3. In LCM-Ivec-SVM, \(\mathcal {X}_{m}\) is specified as a segment i-vector, \(\mathcal {Y}_{s}\) is specified as an SVM model trained on speaker i-vectors, and \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is calculated by the SVM.

In fact, computing \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) can be regarded as a short-utterance speaker verification task, which can benefit from the large body of previous work on speaker verification.

The implementation of the proposed LCM-Ivec-PLDA speaker diarization system is shown in Fig. 2. Unlike in Section 3, where \(\mathcal {X}\) and \(\mathcal {Y}\) are abstract representations of segment m and speaker s, in this section they are given explicit expressions. To avoid confusion, we use x, X, and w to denote an acoustic feature vector, an acoustic feature matrix, and an i-vector, respectively. After front-end processing, the acoustic features X of a whole recording are evenly divided into M segments, \(X=\{\mathrm {x}_{1},\cdots,\mathrm {x}_{M}\}\). Based on this notation, the iterative procedure of LCM-Ivec-PLDA is as follows (Fig. 2):

1. The segment i-vector \(\mathrm {w}_{m}\) is extracted from \(\mathrm {x}_{m}\) and its neighbors, as further explained in Section 5.

2. The speaker i-vector \(\mathrm {w}_{s}\) is estimated based on \(Q=\{q_{ms}\}\) and \(X=\{\mathrm {x}_{m}\}\).

3. \(p(\mathcal {X}_{m}, \mathcal {Y}_{s}) = p(\mathrm {w}_{m}, \mathrm {w}_{s})\) is computed through PLDA scoring.

4. \(q_{ms}\) is updated using \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\).

The above process is repeated until the stopping criterion is met. Step 1 is a standard i-vector extraction procedure [42], and step 4 is realized by (4), so the following subsections focus on steps 2 and 3.

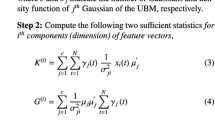

4.1 Estimate speaker i-vector \(\mathrm {w}_{s}\)

If T denotes the total variability space, our objective function [2] is as follows

where C is the number of Gaussian mixture components, \(\mathcal {N}\) denotes a Gaussian distribution, and \(\omega_{c}\), \(\mu_{\mathrm{ubm},c}\), and \(\Sigma_{\mathrm{ubm},c}\) are the weight, mean vector, and covariance matrix of the cth UBM component, respectively. \(I_{R}\) is the R×R identity matrix. In contrast to speaker recognition, in which the whole recording is assumed to come from one speaker, in speaker diarization segment m belongs to speaker s with probability \(q_{ms}\). Applying Jensen’s inequality [43] again, we obtain the following lower bound

where

The above objective function is a quadratic optimization problem with the optimal solution

where \(N_{s}\) and \(F_{s}\) are concatenations of \(N_{sc}\) and \(F_{sc}\) over the C components, respectively, and Σ is a block-diagonal matrix whose diagonal blocks are \(\Sigma_{\mathrm{ubm},c}\). \(N_{sc}\) and \(F_{sc}\) are defined as follows

In the above estimation, T and Σ are assumed to be known. These can be estimated on a large auxiliary database in a traditional i-vector manner.
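Because the equations for this estimate are not reproduced here, the following sketch assumes the standard i-vector posterior mean, \(\mathrm{w}_s = (I_R + T^{\top}\Sigma^{-1}N_sT)^{-1}T^{\top}\Sigma^{-1}F_s\), with Baum-Welch statistics weighted by \(q_{ms}\), i.e. \(N_{sc}=\sum_m q_{ms}\sum_t\gamma_{mtc}\) and \(F_{sc}=\sum_m q_{ms}\sum_t\gamma_{mtc}(\mathrm{x}_{mt}-\mu_{\mathrm{ubm},c})\). The frame posteriors \(\gamma_{mtc}\), the diagonal UBM covariances, and the function name `speaker_ivector` are assumptions for illustration.

```python
import numpy as np


def speaker_ivector(gamma, feats, q_s, T, ubm_means, ubm_vars):
    """Posterior-mean speaker i-vector from q-weighted Baum-Welch statistics.

    gamma:     list of (n_m, C) UBM component posteriors, one array per segment m
    feats:     list of (n_m, D) acoustic features x_m, one array per segment m
    q_s:       (M,) soft assignments q_ms of each segment to speaker s
    T:         (C*D, R) total variability matrix
    ubm_means: (C, D) UBM means; ubm_vars: (C, D) diagonal UBM covariances
    """
    C, D = ubm_means.shape
    R = T.shape[1]
    N = np.zeros(C)       # zeroth-order statistics N_sc
    F = np.zeros((C, D))  # centred first-order statistics F_sc
    for g, x, q in zip(gamma, feats, q_s):
        occ = g.sum(axis=0)                            # per-component occupancy of segment m
        N += q * occ
        F += q * (g.T @ x - occ[:, None] * ubm_means)
    inv_var = (1.0 / ubm_vars).reshape(-1)   # diagonal of Sigma^{-1}, component-major order
    n_diag = np.repeat(N, D)                 # diagonal of N_s (N_sc repeated D times)
    precision = np.eye(R) + T.T @ (T * (n_diag * inv_var)[:, None])
    return np.linalg.solve(precision, T.T @ (inv_var * F.reshape(-1)))
```

With all \(q_{ms}=1\) this reduces to ordinary i-vector extraction over the whole recording; soft weights let each segment contribute to every speaker in proportion to \(q_{ms}\).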

4.2 Compute \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\)

To compute \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\), we first extract the segment i-vector \(\mathrm {w}_{m}\) from \(\mathrm {x}_{m}\) and its neighbors and then evaluate the probability that \(\mathrm {w}_{m}\) and \(\mathrm {w}_{s}\) come from the same speaker. We take advantage of PLDA and SVM to improve system performance and propose the LCM-Ivec-PLDA, LCM-Ivec-SVM, and LCM-Ivec-Hybrid systems.

4.2.1 PLDA

Since both the segment i-vector \(\mathrm {w}_{m}\) and the speaker i-vector \(\mathrm {w}_{s}\) are known at this stage, the task reduces to short-utterance speaker verification. We adopt a simplified PLDA [44] to model the distribution of i-vectors as follows:

$$ \mathrm{w} = \mu_{I} + \Phi y + \varepsilon $$

where \(\mu_{I}\) is the global mean of all preprocessed i-vectors, Φ is the speaker subspace, y is a latent speaker factor with a standard normal distribution, and the residual term \(\varepsilon \sim \mathcal {N} \left (0, \Sigma _{\varepsilon } \right)\) has a full covariance matrix \(\Sigma_{\varepsilon}\). We adopt a two-covariance model, and the PLDA scoring [45, 46] is

and the posterior probability with S speaker constraint is

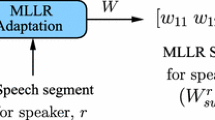

where κ is a scale factor set experimentally (κ=1 in the PLDA setting); it plays a role similar to that of κ in (1) of [47]. Since \(p(\mathcal {X}_{m})\) is the same for all S speakers and \(p(\mathcal {Y}_{s},\mathcal {X}_{m}| i_{{ms}}) = p(\mathcal {X}_{m}) p(\mathcal {Y}_{s}|\mathcal {X}_{m}, i_{{ms}}) \), \(p(\mathcal {X}_{m})\) cancels in the subsequent computation. The flow chart of LCM-Ivec-PLDA is shown in Fig. 3, excluding the flow path labeled SVM.
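The scoring and posterior equations are not reproduced here; as a hedged illustration, the sketch below computes a standard two-covariance PLDA log-likelihood ratio for the model \(\mathrm{w} = \mu_{I} + \Phi y + \varepsilon\), with between-speaker covariance \(\Phi\Phi^{\top}\) and within-speaker covariance \(\Sigma_\varepsilon\). It then assumes that the S-speaker posterior is a softmax of κ-scaled scores over the speakers. Both the softmax form and the function names (`plda_llr`, `speaker_posterior`) are assumptions, not necessarily the paper's exact formulas.

```python
import numpy as np


def gauss_logpdf(x, mean, cov):
    """Log density of a multivariate Gaussian (avoids a SciPy dependency)."""
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (diff @ np.linalg.solve(cov, diff) + logdet + len(x) * np.log(2 * np.pi))


def plda_llr(w1, w2, mu, phi, sigma_eps):
    """Two-covariance PLDA log-likelihood ratio: same speaker vs. different speakers."""
    between = phi @ phi.T         # between-speaker covariance
    total = between + sigma_eps   # marginal covariance of a single i-vector
    same = np.block([[total, between], [between, total]])  # joint covariance if same speaker
    joint = np.concatenate([w1, w2])
    return (gauss_logpdf(joint, np.concatenate([mu, mu]), same)
            - gauss_logpdf(w1, mu, total) - gauss_logpdf(w2, mu, total))


def speaker_posterior(scores, kappa=1.0):
    """Softmax over the S speakers of kappa-scaled scores; one row per segment."""
    z = kappa * np.asarray(scores, dtype=float)
    z -= z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)
```

Applying `speaker_posterior` with κ=1 to an (M, S) matrix of `plda_llr(w_m, w_s, ...)` scores yields a row-stochastic matrix that can play the role of \(p(\mathcal{Y}_s|\mathcal{X}_m, i_{ms})\); a larger κ sharpens the distribution.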

4.2.2 SVM

Another discriminative option is a support vector machine (SVM). After estimating \(\mathrm {w}_{s}\), we train an SVM model for each speaker. When training the linear-kernel SVM model \((\eta_{s}, b_{s})\) for speaker s, \(\mathrm {w}_{s}\) is treated as the positive class and the i-vectors of the other speakers, \(\mathrm {w}_{s'}\) (s′≠s), are treated as the negative class. \(\eta_{s}\) and \(b_{s}\) are the weight and bias of the linear SVM, with its support vectors compressed into a single weight vector.

The SVM scoring is

and the posterior probability with S speaker constraint is

where κ is also a scale factor (κ=10 in the SVM setting). Since \(p(\mathcal {X}_{m})\) is the same for all S speakers and \(p(\mathcal {Y}_{s},\mathcal {X}_{m}| i_{{ms}}) = p(\mathcal {X}_{m}) p(\mathcal {Y}_{s}|\mathcal {X}_{m}, i_{{ms}}) \), \(p(\mathcal {X}_{m})\) cancels in the subsequent computation. The flow chart of LCM-Ivec-SVM is shown in Fig. 3, excluding the flow path labeled PLDA.
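A minimal sketch of the per-speaker SVM training described above, assuming a primal linear SVM with hinge loss trained by plain full-batch subgradient descent. That solver is chosen only to keep the example dependency-free; a library solver would normally be used. The regularization weight `c`, learning rate `lr`, epoch count, and both function names are hypothetical. The returned matrix holds the linear scores \(\eta_s^{\top}\mathrm{w}_m + b_s\); converting them into the S-speaker posterior is not shown.

```python
import numpy as np


def train_linear_svm(x, y, c=10.0, lr=0.01, epochs=2000):
    """Primal linear SVM (hinge loss + L2) by full-batch subgradient descent.

    x: (n, d) samples; y: (n,) labels in {+1, -1}. Returns (eta, b).
    """
    eta = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        active = y * (x @ eta + b) < 1.0  # samples inside or violating the margin
        grad_eta = eta - c * (y[active][:, None] * x[active]).sum(axis=0)
        grad_b = -c * y[active].sum()
        eta = eta - lr * grad_eta
        b = b - lr * grad_b
    return eta, b


def svm_scores(segment_ivecs, speaker_ivecs, **kwargs):
    """One SVM per speaker: w_s is positive, the other speaker i-vectors are negative."""
    num_speakers = speaker_ivecs.shape[0]
    scores = np.empty((segment_ivecs.shape[0], num_speakers))
    for s in range(num_speakers):
        labels = -np.ones(num_speakers)
        labels[s] = 1.0
        eta, b = train_linear_svm(speaker_ivecs, labels, **kwargs)
        scores[:, s] = segment_ivecs @ eta + b  # linear score eta_s^T w_m + b_s
    return scores
```

Because each model is linear, \(\eta_s\) is a single vector regardless of how many support vectors the solver uses, which is what makes scoring every segment a single matrix product.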

4.2.3 Hybrid

The calculation of \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is not explicitly specified in the LCM algorithm, much like the kernel function in an SVM. For an SVM, as long as the kernel matrix satisfies the Mercer condition [48], different kernel choices may make the algorithm more discriminative and better at generalization, and multiple kernel learning can combine several kernels to boost performance [49]. Similarly, in the LCM algorithm, any probability \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) that grows larger the more likely \(\mathcal {X}_{m}\) and \(\mathcal {Y}_{s}\) are to come from the same class s can be adopted, which lets LCM embrace more algorithms, e.g., the abovementioned PLDA and SVM. We combine PLDA with SVM by iterating between them; see Fig. 3. This iteration takes advantage of both PLDA and SVM and is expected to achieve better performance. This hybrid iterative system is denoted as the LCM-Ivec-Hybrid system.

5 Further improvements

5.1 Neighbor window

In fixed-length segmentation, each segment is usually very short to ensure speaker homogeneity. However, this shortness leads to inaccuracy when extracting segment i-vectors and calculating \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\). Intuitively, if speaker s appears at time m, the same speaker is likely to appear in the vicinity of time m, so neighboring segments can be used to improve the accuracy of \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\). We propose two methods of incorporating neighboring segment information. At the data level, we extract a long-term segmental i-vector \(\mathcal {X}_{m}\) that uses the neighbor information. At the score level, we build a homogeneous Poisson point process model to calculate \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\).

5.1.1 Data level window

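At the data level, we extract \(\mathrm {w}_{m}\) using \(\mathrm {x}_{m}\) and its neighboring data. Let

$$ X_{m} = ({\mathrm{x}}_{m - \Delta M_{d}}, \cdots, {\mathrm{x}}_{m}, \cdots, {\mathrm{x}}_{m + \Delta M_{d}}) $$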

where \(\Delta M_{d} > 0\) is the data-level half window length. We use \(X_{m}\) instead of \(\mathrm {x}_{m}\) to extract the i-vector \(\mathrm {w}_{m}\) representing segment m, as shown in the lower part of Fig. 4. Since \(X_{m}\) is long enough to yield more robust estimates, system performance can be improved. Note that \(X_{m}\) may contain more than one speaker; this is acceptable because the extracted \(\mathrm {w}_{m}\) represents only time m, not the whole span \((m-\Delta M_{d},\cdots,m+\Delta M_{d})\). From another perspective, the data-level window can be seen as a highly overlapping sliding window that increases the segmentation resolution.
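The data-level window amounts to concatenating the frames of neighboring segments before i-vector extraction. The sketch below builds \(X_m\) from per-segment feature matrices. Clipping the window at the start and end of the recording is an assumption, since boundary handling is not specified here, and `data_window` is a hypothetical name.

```python
import numpy as np


def data_window(features, m, half_width):
    """Stack frames of segments m - half_width .. m + half_width into X_m.

    features: list of (n_frames, D) arrays, one per fixed-length segment.
    half_width: the data-level half window length (Delta M_d).
    The window is clipped at the recording edges (an assumption of this sketch).
    """
    lo = max(0, m - half_width)
    hi = min(len(features) - 1, m + half_width)
    return np.vstack(features[lo:hi + 1])
```

The i-vector extracted from the stacked frames is then attributed to segment m only, which is why consecutive windows can overlap heavily.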

5.1.2 Score level window

At the score level, we update \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) with neighboring scores. Given that the mth segment belongs to speaker s, we consider the probability that the (m+Δm)th segment does not belong to speaker s. If we define the appearance of a speaker change point as an event, this process can be approximated as a homogeneous Poisson point process [50]. Under this assumption, the probability that the speech from m to m+Δm belongs to the same speaker equals the probability that no speaker change point appears between m and m+Δm, which can be expressed as

$$ p(\text{no change point between } m \text{ and } m+\Delta m) = e^{-\lambda |\Delta m|} $$

where λ is the rate parameter, representing the average number of speaker change points per unit time. We account for the contribution of \(p(\mathcal {X}_{m + \Delta m}, \mathcal {Y}_{s})\) to \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) by updating \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) as follows:

where \(\Delta M_{s} > 0\) is the score-level half window length. Note that \(\Delta M_{d}\), \(\Delta M_{s}\), and λ are experimental parameters that are examined in the next section. Since \(\mathrm {w}_{m}\) is extracted from \(X_{m} = ({\mathrm {x}}_{m - \Delta M_{d}}, \cdots, {\mathrm {x}}_{m + \Delta M_{d}})\), the updated \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) actually depends on \(({\mathrm {x}}_{m - \Delta M_{s} - \Delta M_{d}}, \cdots, {\mathrm {x}}_{m + \Delta M_{s} + \Delta M_{d}})\), as shown in Fig. 4. The full process of incorporating the two neighbor windows is shown in Fig. 5.
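Since the update formula itself is not reproduced here, the sketch below uses a hypothetical form: each neighbor score \(p(\mathcal{X}_{m+\Delta m}, \mathcal{Y}_s)\) within \(|\Delta m|\le\Delta M_s\) is weighted by the Poisson no-change probability \(e^{-\lambda|\Delta m|}\), and the weights are normalized so that windows truncated at the recording edges are not penalized. Treat it as an illustration of the idea, not as the exact update used in the experiments; `score_window` is a hypothetical name.

```python
import numpy as np


def score_window(p, half_width, lam):
    """Smooth p(X_m, Y_s) over neighbors with Poisson weights (hypothetical form).

    p: (M, S) scores; half_width: Delta M_s; lam: change-point rate per segment.
    """
    num_segments = p.shape[0]
    out = np.zeros_like(p, dtype=float)
    for m in range(num_segments):
        lo = max(0, m - half_width)
        hi = min(num_segments - 1, m + half_width)
        dm = np.arange(lo, hi + 1) - m
        weights = np.exp(-lam * np.abs(dm))  # P(no change point between m and m + dm)
        out[m] = (weights[:, None] * p[lo:hi + 1]).sum(axis=0) / weights.sum()
    return out
```

A larger λ (more frequent speaker changes) shrinks the neighbor weights, so the update falls back toward the segment's own score.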

5.2 HMM smoothing

After several iterations, speaker diarization results can be obtained from qms. However, because sequence information is not considered in the LCM system, there may be many speaker change points within a short duration. To address this frequent speaker change problem, a hidden Markov model (HMM) is applied to smooth the speaker change points. The initial probability of the HMM is \(\pi _{s} = p(\mathcal {Y}_{s})\). The self-loop transition probability is aii, and the other transition probabilities are \(a_{{ij}} = \frac {1-a_{{ii}}}{S-1}, i \neq j\). Since a speaker is much more likely to continue speaking than to hand over to a new speaker, the self-loop probability is set to 0.98 in our work. The emission probability is calculated from PLDA (13) or SVM (16). With these HMM parameters, qms can be smoothed using the forward-backward algorithm.
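The smoothing step can be sketched with a scaled forward-backward pass. The sketch assumes the emission values `emissions[m][s]` are positive likelihoods (for example, PLDA or SVM scores already mapped to the probability domain, a mapping not shown here) and uses the transition structure stated above: initial probabilities π_s, self-loop a_ii = 0.98, and a_ij = (1 − a_ii)/(S − 1). Function names are hypothetical.

```python
from typing import List, Sequence


def transition_matrix(num_speakers: int, self_loop: float = 0.98) -> List[List[float]]:
    """a_ii = self_loop and a_ij = (1 - a_ii) / (S - 1) for i != j."""
    if num_speakers == 1:
        return [[1.0]]
    off = (1.0 - self_loop) / (num_speakers - 1)
    return [[self_loop if i == j else off for j in range(num_speakers)]
            for i in range(num_speakers)]


def forward_backward(prior: Sequence[float], emissions: Sequence[Sequence[float]],
                     self_loop: float = 0.98) -> List[List[float]]:
    """Return smoothed posteriors q[m][s] = p(speaker s at segment m | all segments)."""
    num_speakers, num_segments = len(prior), len(emissions)
    a = transition_matrix(num_speakers, self_loop)

    # Forward pass, normalized at every step to avoid numerical underflow.
    alpha, scale = [], []
    for m in range(num_segments):
        if m == 0:
            row = [prior[s] * emissions[0][s] for s in range(num_speakers)]
        else:
            row = [sum(alpha[-1][i] * a[i][s] for i in range(num_speakers)) * emissions[m][s]
                   for s in range(num_speakers)]
        c = sum(row)
        alpha.append([v / c for v in row])
        scale.append(c)

    # Backward pass, scaled with the same per-step constants.
    beta = [[1.0] * num_speakers for _ in range(num_segments)]
    for m in range(num_segments - 2, -1, -1):
        beta[m] = [sum(a[s][j] * emissions[m + 1][j] * beta[m + 1][j] for j in range(num_speakers))
                   / scale[m + 1] for s in range(num_speakers)]

    # Posterior is proportional to alpha * beta; renormalize each segment.
    posteriors = []
    for m in range(num_segments):
        g = [alpha[m][s] * beta[m][s] for s in range(num_speakers)]
        z = sum(g)
        posteriors.append([v / z for v in g])
    return posteriors


# Usage: an isolated one-segment flip toward speaker 1 is smoothed away.
emissions = [[0.9, 0.1]] * 2 + [[0.4, 0.6]] + [[0.9, 0.1]] * 2
smoothed = forward_backward([0.5, 0.5], emissions)
```

Because switching speakers costs two low-probability transitions (0.02 each), the middle segment is reassigned to speaker 0 even though its own emission favors speaker 1. The same mechanism explains the oversmoothing reported for the eleven-speaker recording in Section 7.2.3.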

5.3 AHC initialization

Although random initialization works well in most cases, LCM and VB systems tend to assign segments evenly to each speaker when a single speaker dominates the whole conversation, leading to poor results. According to the comparative study [40], the bottom-up approach captures comparatively purer models. Therefore, we recommend an informative AHC initialization method, similar to that of our previous paper [51]. After PLDA is used to compute the log-likelihood ratio between pairs of segment i-vectors [34, 35], AHC is applied to perform clustering. From the AHC results, two prior calculation methods, a hard prior and a soft prior, are proposed [51].
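As an illustration of the clustering step, the sketch below runs a plain average-linkage AHC on a precomputed pairwise score matrix (for example, PLDA log-likelihood ratios between segment i-vectors) and stops when a known number of speakers remains, as in the TL experiments of Section 7.3. Average linkage and this stopping rule are our assumptions; the text does not state which linkage is used. The function name is hypothetical, and its output labels are what the hard and soft priors of Sections 5.3.1 and 5.3.2 are computed from.

```python
from typing import List, Sequence


def average_linkage_ahc(similarity: Sequence[Sequence[float]], num_clusters: int) -> List[int]:
    """Greedy bottom-up clustering on a pairwise similarity matrix.

    similarity[i][j] is a higher-is-more-similar score between segments i and j
    (e.g. a PLDA log-likelihood ratio). Stops when num_clusters clusters remain.
    """
    if num_clusters < 1:
        raise ValueError("num_clusters must be at least 1")
    clusters = [[i] for i in range(len(similarity))]

    def linkage(a: List[int], b: List[int]) -> float:
        # Average pairwise score between the members of two clusters.
        return sum(similarity[i][j] for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > num_clusters:
        best_score, best_x, best_y = None, 0, 1
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                score = linkage(clusters[x], clusters[y])
                if best_score is None or score > best_score:
                    best_score, best_x, best_y = score, x, y
        # Merge the most similar pair; best_y > best_x, so deleting best_y is safe.
        clusters[best_x] = clusters[best_x] + clusters[best_y]
        del clusters[best_y]

    labels = [0] * len(similarity)
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels
```

The merges are hard decisions, which is why the text uses the AHC output only as a prior for the subsequent soft LCM or VB iterations rather than as the final answer.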

5.3.1 Hard prior

According to the AHC clustering results, if segment m is classified to speaker s, we assign qms a relatively large value q. The hard prior is as follows:

where \(\mathcal {I} (\cdot)\) is the indicator function, and \(\mathcal {I} \left ({\mathcal {X}}_{m} \in s \right)\) indicates that segment m is classified to speaker s.
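A minimal sketch of the hard prior, assuming AHC labels are given as integers 0..S−1. Spreading the remaining mass (1 − q)/(S − 1) evenly over the other speakers mirrors the rule stated for the soft prior in Section 5.3.2 and is an assumption here, since the exact form of the hard prior is not reproduced in this text. The default q = 0.7 matches the TL setting in Section 7.3; the function name is hypothetical.

```python
from typing import List, Sequence


def hard_prior(labels: Sequence[int], num_speakers: int, q: float = 0.7) -> List[List[float]]:
    """q_ms = q if AHC put segment m in cluster s, else (1 - q) / (S - 1) (assumed)."""
    if num_speakers < 2:
        raise ValueError("the hard prior needs at least two speakers")
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    off = (1.0 - q) / (num_speakers - 1)
    return [[q if s == label else off for s in range(num_speakers)] for label in labels]


# Usage: three segments, AHC put the first in cluster 0 and the others in cluster 1.
prior = hard_prior([0, 1, 1], num_speakers=2)
```

Every segment assigned to the same cluster receives the same prior, regardless of how confidently AHC placed it; the soft prior addresses exactly this limitation.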

5.3.2 Soft prior

For the soft prior, we first calculate the center of each estimated speaker s:

The distance between wm and \({\mathrm {\mu }}_{\mathrm {w}_{s}}\) is \(d_{{ms}}=\lVert {\mathrm {w}}_{m} - {\mathrm {\mu }}_{\mathrm {w}_{s}} \rVert _{2}\). According to the AHC clustering results, if segment m is classified to speaker s, the prior probability for speaker s at time m is

where \(d_{\max,s} = \max_{m \in s} d_{ms}\) and k is a constant. This soft prior probability varies from 0.5 to 1, ensuring that the closer wm is to \({\mathrm {\mu }}_{\mathrm {w}_{s}}\), the larger \(q_{ms}\) will be. For the other speakers at time m, the prior probability is (1−qms)/(S−1).
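The soft prior can be sketched as follows. Two parts are assumptions: the speaker center μ_{w_s} is taken as the mean i-vector of the AHC cluster, and, because the exact mapping of Eq. (22) is not reproduced here, a hypothetical logistic mapping q = 1/(1 + exp(k(d/d_max − 1))) stands in for it. That stand-in equals 0.5 at d = d_max and approaches 1 near the center for k = 10, matching the stated 0.5 to 1 range, but it is not the paper's formula. Every AHC cluster is assumed to be non-empty; the function name is hypothetical.

```python
import math
from typing import List, Sequence


def soft_prior(ivectors: Sequence[Sequence[float]], labels: Sequence[int],
               num_speakers: int, k: float = 10.0) -> List[List[float]]:
    """Soft prior from AHC labels; assumes every cluster 0..S-1 is non-empty."""
    if num_speakers < 2:
        raise ValueError("the soft prior needs at least two speakers")
    dim = len(ivectors[0])

    # Speaker centers mu_{w_s}: mean i-vector of each AHC cluster (assumption).
    centers = []
    for s in range(num_speakers):
        members = [w for w, lab in zip(ivectors, labels) if lab == s]
        centers.append([sum(w[i] for w in members) / len(members) for i in range(dim)])

    # d_ms: Euclidean distance of each segment to the center of its own cluster.
    dist = [math.dist(w, centers[lab]) for w, lab in zip(ivectors, labels)]
    d_max = [max((d for d, lab in zip(dist, labels) if lab == s), default=0.0)
             for s in range(num_speakers)]

    prior = []
    for d, lab in zip(dist, labels):
        ratio = d / d_max[lab] if d_max[lab] > 0 else 0.0
        # Hypothetical mapping: 0.5 at d = d_max, close to 1 near the center.
        q = 1.0 / (1.0 + math.exp(k * (ratio - 1.0)))
        off = (1.0 - q) / (num_speakers - 1)  # other speakers, as stated in the text
        prior.append([q if s == lab else off for s in range(num_speakers)])
    return prior
```

The farthest member of each cluster always receives 0.5, so segments that AHC placed at the edge of a cluster leave the subsequent LCM or VB iterations the most room to reassign them.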

6 Related work and discussion

6.1 Core problem of speaker diarization

Unlike some mainstream approaches, we take a different view of the basic formulation of speaker diarization. Paper [40] formulates the speaker diarization task as solving the following objective function:

where X is the observed data, and S and G are the speaker sequence and the segmentation, respectively. In our work, we formulate the speaker diarization problem as follows:

where \(\mathcal {X}\) is the observed data, and \(\mathcal {Y}\) and Q are the hidden speaker representation and the latent class probability matrix, respectively. Both objective functions can address speaker diarization. However, objective function (23) involves segmentation, which introduces a premature hard decision that may degrade system performance. Objective function (24), on the other hand, has difficulty handling overlapping speech and depends on an accurate estimate of the number of speakers.

6.2 Compared with VB

In VB, \(\mathcal {Y}_{s} \) is a speaker i-vector and \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is computed by eigenvoice scoring (Eq. (14) in [2]), a generative model. In our paper, we replace eigenvoice scoring with PLDA or SVM scoring to compute \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\), which benefits from the discriminability of PLDA or SVM. Both VB and LCM-Ivec-PLDA/SVM are iterative processes with two important steps. Step 1: estimate Q based on \(\mathcal {X}\) and \(\mathcal {Y}\). Step 2: estimate \(\mathcal {Y}\) based on \(\mathcal {X}\) and Q.

The two algorithms are almost the same in the second step. In step 1, however, the calculation of Q is more accurate owing to PLDA or SVM. In recent speaker recognition evaluations (e.g., NIST SREs), Ivec-PLDA has performed better than the eigenvoice model (or joint factor analysis, JFA) [3]. The SVM is well suited to classification tasks with small samples. This is why we introduce these two methods into LCM. Compared with VB, the main benefit of LCM-Ivec-PLDA/SVM is that it takes advantage of PLDA or SVM to improve the accuracy of \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\). In addition, \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) is enhanced by its neighbors at both the data and score levels.
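The two-step iteration shared by VB and LCM can be illustrated with a toy loop. This sketch is not the paper's scorer: a scaled cosine similarity, exponentiated into a positive score, stands in for the PLDA or SVM computation of p(X_m, Y_s); Y_s is re-estimated as the q-weighted mean i-vector; and the class priors p(Y_s) are the average responsibilities. All three choices, the `scale` parameter, and the function names are assumptions for illustration. Because the loop starts from an initial Q (random or AHC-based), Step 2 runs first within each pass.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / max(na * nb, 1e-12)


def lcm_iterate(ivectors: Sequence[Sequence[float]], q: List[List[float]],
                num_iters: int = 10, scale: float = 5.0) -> List[List[float]]:
    """Alternate Step 2 (estimate Y from X, Q) and Step 1 (estimate Q from X, Y).

    Assumes every entry of the initial q is positive, so no speaker loses all mass.
    """
    num_segments, num_speakers = len(ivectors), len(q[0])
    dim = len(ivectors[0])
    for _ in range(num_iters):
        # Step 2: estimate Y from X and Q (soft-weighted speaker means) and priors.
        speakers, priors = [], []
        for s in range(num_speakers):
            mass = sum(q[m][s] for m in range(num_segments))
            speakers.append([sum(q[m][s] * ivectors[m][i] for m in range(num_segments)) / mass
                             for i in range(dim)])
            priors.append(mass / num_segments)
        # Step 1: estimate Q from X and Y; q_ms is proportional to p(Y_s) p(X_m, Y_s).
        new_q = []
        for m in range(num_segments):
            scores = [priors[s] * math.exp(scale * cosine(ivectors[m], speakers[s]))
                      for s in range(num_speakers)]
            total = sum(scores)
            new_q.append([v / total for v in scores])
        q = new_q
    return q


# Usage: two speaker directions and a weakly informative initial Q.
iv = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
q = lcm_iterate(iv, [[0.6, 0.4], [0.6, 0.4], [0.4, 0.6], [0.4, 0.6]])
```

No segment is ever hard-assigned inside the loop; in LCM-Ivec-PLDA/SVM only the scoring line in Step 1 would change, which is the sense in which the framework admits different models.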

6.3 Compared with Ivec-PLDA-AHC

PLDA has many applications in speaker diarization. Similar to the GMM-BIC-AHC method, the Ivec-PLDA-AHC method has become popular in many research works. This use of i-vectors and PLDA follows the segmentation-and-clustering paradigm. The role of PLDA is to evaluate the similarity of clusters divided by speaker change points, as done in [18, 34–37]. Based on the PLDA similarity matrix, AHC is applied to the clustering task. Although performance improves, this approach still suffers from the premature hard decision problem.

6.4 Compared with PLDA-VB

In paper [7], PLDA is combined with VB, which is similar to our approach. We believe that the probabilistic iterative framework, as embodied in LCM, and not merely the introduction of PLDA, is the key to solving the speaker diarization problem. Our subsequent experiments also show that using SVM can achieve similar performance. The hybrid iteration inspired by LCM can improve performance further. In addition, we also study the use of neighbor information, HMM smoothing, and initialization methods.

7 Experiments

Experiments have been conducted on five databases to examine the performance of LCM: NIST RT09 SPKD SDM (RT09), our own speaker-imbalanced TL (TL), LDC CALLHOME97 American English speech (CALLHOME97) [13], the NIST SRE00 subset of the multilingual CALLHOME (CALLHOME00), and NIST SRE08 short2-summed (SRE08). Following the RT09 evaluation plan [12], speaker error (SE) and diarization error rate (DER) are adopted as metrics for the RT09, TL, CALLHOME97, and CALLHOME00 databases. Equal error rate (EER) and minimum detection cost function (MDCF08) are adopted as auxiliary metrics for the SRE08 database.
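For reference, the standard NIST decomposition of DER and the relative improvement used when comparing systems (for example, LCM against VB) can be written as follows. Here T_miss, T_FA, T_SE, and T_total are our notation for the scored durations of missed speech, false-alarm speech, speaker error, and total reference speech.

```latex
\[
\mathrm{DER} = \frac{T_{\mathrm{miss}} + T_{\mathrm{FA}} + T_{\mathrm{SE}}}{T_{\mathrm{total}}}
            = \mathrm{Miss} + \mathrm{FA} + \mathrm{SE},
\qquad
\text{relative improvement} =
\frac{\mathrm{DER}_{\mathrm{VB}} - \mathrm{DER}_{\mathrm{LCM}}}{\mathrm{DER}_{\mathrm{VB}}} \times 100\%.
\]
```

For a single pair of DERs, a 43.0% relative improvement therefore means that the LCM DER is 57.0% of the VB DER.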

7.1 Common configuration

Perceptual linear predictive (PLP) features with 19 dimensions are extracted from the audio recordings using a 25-ms Hamming window and a 10-ms stride. The 19 PLP coefficients and log-energy constitute a 20-dimensional base feature. This base feature and its first derivatives are concatenated to form our 40-dimensional acoustic feature vector. VAD is implemented using the frame log-energy and subband spectral entropy. The UBM is composed of 512 diagonal Gaussian components. The rank of the total variability matrix T is 300. For PLDA, the rank of the subspace matrix is 150. For segment neighbors, ΔMd, ΔMs, and λ are set to 40, 40, and 0.05, respectively.

7.2 Experiment results with RT09

The NIST RT09 SPKD database has seven English meeting recordings totaling about 3 h. The BeamformIt toolkit [52] and the Qualcomm-ICSI-OGI front end [53] are adopted for acoustic beamforming and speech enhancement. We use Switchboard-P1, RT05, and RT06 to train the UBM, T, and PLDA parameters. Three sets of experiments were conducted on the RT09 database to verify the performance of the proposed LCM systems, the neighbor windows, and HMM smoothing, respectively.

7.2.1 Comparison among different methods

In the first set of experiments, we study the performance of different systems on the RT09 database. Table 2 lists the missed speech (Miss) rate and false alarm (FA) rate of the LCM-Ivec-Hybrid system. The miss rate of the fifth recording reaches 20.0%. This recording contains much overlapping speech, which our proposed approach does not handle well.

Results of the GMM-BIC-AHC, VB, and LCM-Ivec-PLDA/SVM/Hybrid systems are listed in Table 3. The LCM systems perform better than the BIC system, which can be ascribed to the use of qms for soft decisions instead of hard decisions. LCM also outperforms the VB system, demonstrating that introducing a discriminative model is very effective. VB performs iterative optimization based on a generative model. In contrast, LCM computes \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) with a discriminative model, which is in line with the basic requirements of the speaker diarization task and contributes to its performance improvement. Compared with the classical VB system, LCM-Ivec-PLDA, LCM-Ivec-SVM, and LCM-Ivec-Hybrid achieve average relative DER improvements of 23.5%, 27.1%, and 43.0%, respectively, on the NIST RT09 database. For recordings that already have good DERs with PLDA or SVM, the improvement of the hybrid system is relatively small; for those with poorer DERs, the improvement is prominent. We infer that the hybrid system may help escape a local optimum reached by a single algorithm.

We also compare our system with other work in the literature. Table 4 lists the average performance of different methods on the RT09 database. All of these systems except [54] operate under the SDM condition. The Miss + FA of our method is relatively high, which we ascribe to VAD errors and overlapping speech. Our method achieves the lowest SE and DER.

7.2.2 Effect of different neighbor window

In the second set of experiments, we study the influence of different neighbor windows at both the data level and the score level. For the data-level window, Fig. 6 shows how the DER of LCM-Ivec-Hybrid on the recording 'EDI_20071128-1500' varies with ΔMd. When ΔMd=0, i.e., no data-level window is added, diarization performance is poor. As ΔMd increases, DER first decreases and then increases slightly. This is because a larger ΔMd lets us extract more speaker information, but if it grows too large, the window begins to mix in other speakers' information.

At the score level, the variation of DER with ΔMs and λ is shown in Fig. 7. When λ approaches 0, the value of (18) approaches 1 and the Poisson window degrades to a rectangular window; in this case, DER again first decreases and then increases with ΔMs. As λ grows, the window becomes sharper, so DER is less sensitive to a large ΔMs.

Table 5 shows the results of the LCM system with and without neighbor windows on RT09. All these systems are randomly initialized. From left to right, the performance of each system improves gradually. This demonstrates that taking segment neighbors into account improves the robustness and accuracy of \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\) in both the LCM-Ivec-PLDA and LCM-Ivec-SVM systems, thus enhancing system performance.

7.2.3 Effect of HMM smoothing

Table 6 lists the third set of results, from the LCM-Ivec-PLDA system with and without HMM smoothing. For the first six recordings, the SE and DER with HMM smoothing are better than without it, which can be ascribed to HMM smoothing making speaker changes less frequent. For the seventh recording, HMM smoothing does not improve performance. This recording has eleven speakers, and speaker changes are much more frequent than in the first six. We suspect that the HMM oversmooths the speaker change points, i.e., the self-loop probability is too large for this case. In most cases, HMM smoothing with proper parameters has a positive effect.

7.3 Experiment results with TL

The AHC initialization aims to solve the problem of speaker imbalance. When one speaker dominates the whole conversation (> 80% of the speech), VB and LCM are sensitive to initialization, and random initialization results in poor performance. If the conversation is not speaker-imbalanced, the initialization method has little influence on performance. All experiments outside this section use random initialization.

The AHC initialization experiment is carried out on our collected recordings, TL. The training part of TL contains 57 speakers (30 female and 27 male), with a total duration of about 94 h. All recordings are natural Mandarin conversations recorded in a quiet office. The evaluation part of TL has three recordings (TL 7–9), also recorded in a quiet office, but in each one a single speaker dominates the whole conversation (> 80% of the speech). Each recording has two speakers and lasts about 20 min. In AHC initialization, q is set to 0.7 for the hard prior and k to 10 for the soft prior unless stated otherwise. Table 7 lists the SE and DER after AHC initialization, before VB or LCM diarization is applied. The number of speakers is assumed to be known in advance.

Figure 8 shows how the DER of 'TL 7' varies with k of the soft prior (22). Based on this trend, we choose k=10 in our experiments. From Table 8, random initialization gives poor results for both the VB and LCM-Ivec-PLDA systems in this case. The proposed AHC hard and soft priors improve performance significantly. The soft prior, which gives each segment an individual prior according to its distance to the estimated speaker center, is more robust than the hard prior. With AHC initialization, the LCM-Ivec-PLDA and VB systems both improve significantly over their randomly initialized counterparts. LCM-Ivec-PLDA with the hard/soft prior also surpasses VB with the hard/soft prior, with a relative improvement of 14.3%/14.2%. Tables 7 and 8 demonstrate that, although AHC initialization alone achieves reasonable results, applying VB or LCM afterward improves performance further.

Fig. 8 DER as a function of k in the soft prior (22)

7.4 Experiment results with CALLHOME97

The LDC CALLHOME97 American English speech database (CALLHOME97) consists of 120 conversations. Each conversation is about 30 min long and includes about 10 min of transcription. Only the transcribed parts are used. There are 109, 9, and 2 conversations containing 2, 3, and 4 speakers, respectively. Following [55] and [56], we examine the conversations with 2 speakers. We use Switchboard P1-3/Cell and SRE04-06 to train the UBM, T, and PLDA parameters.

The scatter chart in Fig. 9 plots VB-DER (blue diamond), VB-SE (orange square), LCM-Ivec-Hybrid-DER (LCM-DER, grey triangle), and LCM-Ivec-Hybrid-SE (LCM-SE, yellow cross) in ascending order of VB-DER. Overall, LCM-DER and LCM-SE are lower than VB-DER and VB-SE; see also Table 9.

We observe an interesting phenomenon. In the low DER region (< 6%), VB and LCM perform similarly. In the middle-to-high DER region (> 6%), LCM is not better than VB for every conversation, but it improves significantly on a considerable number of conversations; see the distribution of blue diamonds and grey triangles in Fig. 9. The same pattern is also reflected in Table 3. We believe that VB is trapped in a local optimum for these conversations, whereas LCM avoids this situation by combining different methods. In addition, the standard deviations of DER and SE are smaller for LCM (Table 9), indicating that the LCM system is more stable.

Table 9 compares the results. Compared with the VB system, the LCM-Ivec-Hybrid system achieves relative improvements of 26.6% and 17.3% in SE and DER, respectively. Compared with the other listed methods, LCM-Ivec-Hybrid also performs best on the CALLHOME97 database. Diarization systems based on i-vector, VB, or LCM are trained in advance and perform well in fixed conditions, whereas HDM-based systems require little prior training and can perform better when test conditions vary frequently.

7.5 Experiment results with CALLHOME00

CALLHOME00, a subtask of NIST SRE00, is a multilingual telephone database of 500 recordings. Each recording is about 2–5 min long and contains 2–7 speakers. We use oracle speech activity marks and speaker numbers. As in [34, 38, 57–59], overlapping speech errors are not counted, so DER is identical to SE in this section. We use Switchboard P1-3/Cell and SRE04-06 to train the UBM, T, and PLDA parameters.

From Table 10, our proposed methods appear to perform best. However, the comparison is not fair to [34, 57–59]: papers [57, 59] do not use oracle VAD, and papers [34, 57, 58] do not use the oracle speaker number, and both factors strongly influence system performance. These results should therefore serve only as an auxiliary reference. Paper [38] uses the same setting as our work, and the proposed LCM-Ivec-Hybrid is slightly better. Based on the results of the above three sections, we conjecture that our proposed system is better suited to long recordings, because \(\mathcal {Y}_{s}\) can be estimated more accurately from long speech.

7.6 Experiment results with SRE08

The NIST SRE08 short2-summed channel telephone data consist of 1788 models and 2215 test segments. Each segment is about 5 min long (about 200 h in total). There is no official speaker diarization key for the summed data, so neither DER nor SE can be adopted in this set of experiments. Paper [2] reports that "We see that there is some correlation between EER and DER, but this is relatively weak." We therefore measure the effect of diarization indirectly through EER and MDCF08. On the one hand, we use the NIST official trials (short2-summed, short2-summed-eng); on the other hand, following [60], we build extended trials (ext-short2-summed, ext-short2-summed-eng).
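EER and MDCF08 are computed from the target and non-target trial scores of the verification system. The sketch below sweeps every observed score as a threshold, takes the EER at the threshold where the miss and false-alarm rates are closest (a coarse approximation; scoring toolkits usually interpolate along the DET curve), and uses the SRE08 cost parameters C_miss = 10, C_FA = 1, P_target = 0.01 with the usual normalization by the best trivial system. Function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def rates_at(threshold: float, target: Sequence[float],
             nontarget: Sequence[float]) -> Tuple[float, float]:
    """Miss and false-alarm rates when accepting every score >= threshold."""
    p_miss = sum(t < threshold for t in target) / len(target)
    p_fa = sum(n >= threshold for n in nontarget) / len(nontarget)
    return p_miss, p_fa


def eer_and_min_dcf(target: Sequence[float], nontarget: Sequence[float],
                    c_miss: float = 10.0, c_fa: float = 1.0,
                    p_target: float = 0.01) -> Tuple[float, float]:
    """Approximate EER and normalized minimum DCF over all observed thresholds."""
    thresholds = sorted(set(target) | set(nontarget)) + [math.inf]
    best_gap, eer, min_dcf = math.inf, 1.0, math.inf
    for th in thresholds:
        p_miss, p_fa = rates_at(th, target, nontarget)
        if abs(p_miss - p_fa) < best_gap:
            best_gap, eer = abs(p_miss - p_fa), (p_miss + p_fa) / 2
        dcf = c_miss * p_miss * p_target + c_fa * p_fa * (1 - p_target)
        min_dcf = min(min_dcf, dcf)
    # Normalize by the cost of always accepting or always rejecting, whichever is lower.
    norm = min(c_miss * p_target, c_fa * (1 - p_target))
    return eer, min_dcf / norm
```

Because better diarization of the summed channel yields cleaner target-speaker i-vectors, it should show up as lower values of both numbers, which is the indirect measurement used in this section.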

We use Switchboard P1-3/Cell and SRE04-06 to train the UBM, T, and PLDA parameters. Here, our speaker verification system is a conventional GMM-Ivec-PLDA system. The 39-dimensional PLP feature consists of 13 static coefficients plus their Δ and ΔΔ. The gender-independent diagonal GMM has 2048 components. The rank of the total variability matrix T is 600. For PLDA, the rank of the subspace matrix is 150 [44].

First, we report results on the NIST SRE08 core tasks, i.e., the short2-short3-telephone (short2-short3) and short2-short3-telephone-English (short2-short3-eng) trials, to verify the performance of the above speaker verification system; see Table 11. Compared with the classical paper [42], our results are in the expected range. We then present results of the same speaker verification system on the NIST SRE08 short2-summed condition. Without front-end diarization, the EER and MDCF08 are as high as 16.94% and 0.686. Both VB + windows and LCM-Ivec-Hybrid diarization significantly improve system performance. Comparing cases 5, 9, 14, and 17 with cases 6, 10, 15, and 18 in Table 11, we conclude that the improvement of LCM over VB is mainly due to the better diarization of LCM.

To our knowledge, few papers report EER and MDCF08 on the short2-summed condition. Table 11 lists the state-of-the-art diarization-verification systems developed by LPT [61, 62] in 2008. Paper [2] also presents the related EER in its Fig. 4. Compared with these, our system performs better, partly owing to advances in speaker verification and partly owing to the effectiveness of our proposed methods.

Paper [60] gives results on the extended trials, which we find more convincing. On the ext-short2-summed trials, although our EER (4.99%) is worse than theirs (4.39%), our MDCF08 (0.201) is better than theirs (0.209). Moreover, [60] reports a fusion system, whereas ours is a single system.

8 Conclusion

In this paper, we have applied a latent class model (LCM) to the task of speaker diarization. LCM provides a framework that allows multiple models to compute the probability \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\). Based on this framework, the LCM-Ivec-PLDA, LCM-Ivec-SVM, and LCM-Ivec-Hybrid systems are introduced. These approaches significantly outperform traditional systems.

There are five main reasons for this improvement. (1) Introducing a latent class model to speaker diarization and using discriminative models in the computation of \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\), which enhances the system’s ability to distinguish speakers. (2) Incorporating temporal context through neighbor windows, which increases the speaker information extracted from each short segment. This is applied both at the data level, taking xm and its neighbors to constitute Xm when extracting wm, and at the score level, considering the contribution of neighbors when calculating \(p(\mathcal {X}_{m}, \mathcal {Y}_{s})\). (3) Performing HMM smoothing, which takes the audio sequence information into consideration. (4) AHC initialization, which is crucial when the conversation is dominated by a single speaker. (5) The hybrid scheme, which can keep the algorithm from falling into a local optimum in some cases.

Finally, our proposed system achieves the best overall performance on the NIST RT09, CALLHOME97, CALLHOME00, and SRE08 short2-summed databases.

Availability of data and materials

The CALLHOME00, NIST RT05/RT06/RT09, and NIST SRE08 databases were released by NIST during the evaluations. These data and LDC CALLHOME97 can also be obtained from LDC (https://www.ldc.upenn.edu/). For the TL database, please contact the author for data requests (heliang@mail.tsinghua.edu.cn).

Notes

Note that an equal prior is assumed in Eq. (15) of [2].

References

X. A. Miro, S. Bozonnet, N. Evans, C. Fredouille, G. Friedland, O. Vinyals, Speaker diarization: a review of recent research. IEEE Trans. Audio Speech Lang. Process. 20(2), 356–370 (2012). https://doi.org/10.1109/TASL.2011.2125954.

P. Kenny, D. Reynolds, F. Castaldo, Diarization of telephone conversations using factor analysis. IEEE J. Sel. Top. Signal Proc. 4(6), 1059–1070 (2010). https://doi.org/10.1109/JSTSP.2010.2081790.

P. Kenny, Bayesian analysis of speaker diarization with eigenvoice priors. Technical report, CRIM (2008).

D. Reynolds, P. Kenny, F. Castaldo, in Proceedings of Interspeech 2009. A study of new approaches to speaker diarization (ISCA, Brighton, 2009), pp. 1047–1050.

F. Valente, Variational Bayesian methods for audio indexing. PhD thesis, Eurecom, Sophia-Antipolis, France (2005). http://dx.doi.org/10.5075/epfl-thesis-2092. http://www.eurecom.fr/publication/1739.

M. Diez, L. Burget, P. Matejka, in Odyssey The Speaker and Language Recognition Workshop. Speaker diarization based on Bayesian HMM with eigenvoice priors (ISCA, Les Sables d’Olonne, 2018), pp. 147–154.

A. E. Bulut, H. Demir, Y. Z. Isik, H. Erdogan, in 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). PLDA-based diarization of telephone conversations (IEEE, Brisbane, 2015), pp. 4809–4813. https://doi.org/10.1109/ICASSP.2015.7178884.

P. F. Lazarsfeld, N. W. Henry, Latent Structure Analysis (Houghton Mifflin, Boston, 1968).

J. Magidson, J. K. Vermunt, Latent class models for clustering: a comparison with k-means. Can. J. Mark. Res. 20, 37–44 (2002).

L. M. Collins, S. T. Lanza, Latent Class and Latent Transition Analysis with Applications in the Social, Behavioral, and Health Sciences, 1st edn. (Wiley, New York, 2009).

K. J. Han, S. S. Narayanan, in Proceedings of Interspeech 2007. A robust stopping criterion for agglomerative hierarchical clustering in a speaker diarization system (ISCA, Antwerp, 2007), pp. 1853–1856.

NIST, The 2009 (RT-09) Rich Transcription Meeting Recognition Evaluation Plan (2009). Available: https://www.nist.gov/itl/iad/mig/rich-transcription-evaluation.

Linguistic Data Consortium, LDC97S42, Catalog (1997). Available: http://www.ldc.upenn.edu/Catalog/catalogEntry.jsp?catalogIdDLDC97S42. Accessed 29 Aug 2018.

M. Zelenák, H. Schulz, J. Hernando, Speaker diarization of broadcast news in Albayzin 2010 evaluation campaign. EURASIP J. Audio Speech Music Process. 2012(1), 19 (2012). https://doi.org/10.1186/1687-4722-2012-19.

C. Wooters, M. Huijbregts, The ICSI RT07s Speaker Diarization System. (R. Stiefelhagen, R. Bowers, J. Fiscus, eds.) (Springer, Berlin, Heidelberg, 2008).

V. Gupta, G. Boulianne, P. Kenny, P. Ouellet, P. Dumouchel, in 2008 IEEE International Conference on Acoustics, Speech and Signal Processing. Speaker diarization of French broadcast news (2008), pp. 4365–4368. https://doi.org/10.1109/ICASSP.2008.4518622.

S. Meignier, T. Merlin, in CMU SPUD Workshop. LIUM SpkDiarization: an open source toolkit for diarization (HAL, Dallas, 2010).

B. Desplanques, K. Demuynck, J. -P. Martens, in Proceedings of Interspeech 2015. Factor analysis for speaker segmentation and improved speaker diarization (ISCA, Dresden, 2015), pp. 3081–3085.

S. Jothilakshmi, V. Ramalingam, S. Palanivel, Speaker diarization using auto associative neural networks. Eng. Appl. Artif. Intell. 22(4), 667–675 (2009). https://doi.org/10.1016/j.engappai.2009.01.012.

V. Gupta, in 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Speaker change point detection using deep neural nets (IEEE, Queensland, 2015), pp. 4420–4424. https://doi.org/10.1109/ICASSP.2015.7178806.

M. Hruz, Z. Zajic, in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Convolutional neural network for speaker change detection in telephone speaker diarization system (IEEE, New Orleans, 2017), pp. 4945–4949.

Z. Zajic, M. Hruz, L. Muller, in Proceedings of Interspeech 2017. Speaker diarization using convolutional neural network for statistics accumulation refinement (ISCA, Stockholm, 2017), pp. 3562–3566.

H. Bredin, in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). TristouNet: triplet loss for speaker turn embedding (IEEE, New Orleans, 2017), pp. 5430–5434.

R. Yin, H. Bredin, C. Barras, in Proceedings of Interspeech 2017. Speaker change detection in broadcast TV using bidirectional long short-term memory networks (ISCA, Stockholm, 2017), pp. 3827–3831.

S. Bozonnet, N. W. D. Evans, C. Fredouille, in 2010 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). The LIA-EURECOM RT’09 speaker diarization system: enhancements in speaker modelling and cluster purification (IEEE, Dallas, 2010), pp. 4958–4961. https://doi.org/10.1109/ICASSP.2010.5495088.

I. Lapidot, J. -F. Bonastre, in Odyssey The Speaker and Language Recognition Workshop. On the importance of efficient transition modeling for speaker diarization (ISCA, Singapore, 2012), pp. 138–145.

I. Lapidot, J. -F. Bonastre, in Proceedings of Interspeech 2016. On the importance of efficient transition modeling for speaker diarization (ISCA, San Francisco, 2016), pp. 2190–2193.

R. Milner, T. Hain, in Proceedings of Interspeech 2016. DNN-based speaker clustering for speaker diarisation (ISCA, San Francisco, 2016), pp. 2185–2189.

M. Najafian, J. H. L. Hansen, in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Environment aware speaker diarization for moving targets using parallel DNN-based recognizers (IEEE, New Orleans, 2017), pp. 5450–5454. https://doi.org/10.1109/ICASSP.2017.7953198.

X. Zhu, C. Barras, S. Meignier, J. -L. Gauvain, in Proceedings of Interspeech 2005. Combining speaker identification and BIC for speaker diarization (ISCA, Lisbon, 2005), pp. 2441–2444.

T. L. Nwe, H. Sun, B. Ma, H. Li, in Proceedings of Interspeech 2010. Speaker diarization in meeting audio for single distant microphone (ISCA, Makuhari, 2010), pp. 1505–1508.

G. Friedland, A. Janin, D. Imseng, X. Anguera, L. Gottlieb, M. Huijbregts, M. T. Knox, O. Vinyals, The ICSI RT-09 speaker diarization system. IEEE Trans. Audio Speech Lang. Process. 20(2), 371–381 (2012). https://doi.org/10.1109/TASL.2011.2158419.

S. Shum, N. Dehak, E. Chuangsuwanich, D. Reynolds, J. Glass, in Proceedings of Interspeech 2011. Exploiting intra-conversation variability for speaker diarization (ISCA, Florence, 2011), pp. 945–948.

G. Sell, D. Garcia-Romero, in 2014 IEEE Spoken Language Technology Workshop (SLT). Speaker diarization with PLDA i-vector scoring and unsupervised calibration (IEEE, 2014), pp. 413–417. https://doi.org/10.1109/SLT.2014.7078610.

G. Le Lan, D. Charlet, A. Larcher, S. Meignier, in Proceedings of Interspeech 2016. Iterative PLDA adaptation for speaker diarization (ISCA, San Francisco, 2016), pp. 2175–2179.

A. Sholokhov, T. Pekhovsky, O. Kudashev, A. Shulipa, T. Kinnunen, in 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Bayesian analysis of similarity matrices for speaker diarization (IEEE, Florence, 2014), pp. 106–110.

W. Zhu, J. Pelecanos, in 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Online speaker diarization using adapted i-vector transforms (IEEE, Shanghai, 2016), pp. 5045–5049. https://doi.org/10.1109/ICASSP.2016.7472638.

S. H. Shum, N. Dehak, R. Dehak, J. R. Glass, Unsupervised methods for speaker diarization: an integrated and iterative approach. IEEE Trans. Audio Speech Lang. Process. 21(10), 2015–2028 (2013). https://doi.org/10.1109/TASL.2013.2264673.

G. Sell, A. McCree, D. Garcia-Romero, in Proceedings of Interspeech 2016. Priors for speaker counting and diarization with AHC (ISCA, San Francisco, 2016), pp. 2194–2198.

N. Evans, S. Bozonnet, D. Wang, C. Fredouille, R. Troncy, A comparative study of bottom-up and top-down approaches to speaker diarization. IEEE/ACM Trans. Audio, Speech, Lang. Process. 20(2), 382–392 (2012).

T. L. Nwe, H. Sun, B. Ma, H. Li, Speaker clustering and cluster purification methods for RT07 and RT09 evaluation meeting data. IEEE Trans. Audio Speech Lang. Process. 20(2), 461–473 (2012). https://doi.org/10.1109/TASL.2011.2159203.

N. Dehak, P. J. Kenny, R. Dehak, P. Dumouchel, P. Ouellet, Front-end factor analysis for speaker verification. IEEE Trans. Audio Speech Lang. Process. 19(4), 788–798 (2011). https://doi.org/10.1109/TASL.2010.2064307.

I. S. Gradshteyn, I. M. Ryzhik, Tables of Integrals, Series, and Products, 7th ed. (Academic Press, San Diego, 2000).

D. Garcia-Romero, C. Espy-Wilson, in Proceedings of Interspeech 2011. Analysis of i-vector length normalization in speaker recognition systems (ISCA, Florence, 2011), pp. 249–252.

N. Brümmer, E. D. Villiers, in Odyssey The Speaker and Language Recognition Workshop. The speaker partitioning problem (ISCA, Brno, 2010), pp. 194–201.

S. J. D. Prince, J. H. Elder, in 2007 IEEE 11th International Conference on Computer Vision. Probabilistic linear discriminant analysis for inferences about identity (IEEE, Rio de Janeiro, 2007), pp. 1–8. https://doi.org/10.1109/ICCV.2007.4409052.

D. Povey, D. Kanevsky, B. Kingsbury, B. Ramabhadran, G. Saon, K. Visweswariah, in IEEE International Conference on Acoustics, Speech and Signal Processing. Boosted MMI for model and feature-space discriminative training (IEEE, Las Vegas, 2008), pp. 4057–4060.

J. Mercer, XVI. Functions of positive and negative type, and their connection with the theory of integral equations. Philos. Trans. R. Soc. Lond. A Math. Phys. Eng. Sci. 209(441-458), 415–446 (1909). https://doi.org/10.1098/rsta.1909.0016. http://rsta.royalsocietypublishing.org/content/209/441-458/415.full.pdf.

F. R. Bach, G. R. G. Lanckriet, M. I. Jordan, in Proceedings of the Twenty-First International Conference on Machine Learning (ICML ’04). Multiple kernel learning, conic duality, and the SMO algorithm (ACM, New York, 2004), p. 6. https://doi.org/10.1145/1015330.1015424.

D. L. Snyder, M. I. Miller, Random Point Processes in Time and Space (Springer, New York, 1991). https://doi.org/10.1007/978-1-4612-3166-0.

L. He, X. Chen, C. Xu, Tianyu, J. Liu, in IEEE Workshop on Signal Processing Systems (SIPS). Ivec-PLDA-AHC priors for VB-HMM speaker diarization system (IEEE, Lorient, 2017).

X. Anguera, C. Wooters, J. Hernando, Acoustic beamforming for speaker diarization of meetings. IEEE Trans. Audio Speech Lang. Process.15(7), 2011–2022 (2007). https://doi.org/10.1109/TASL.2007.902460.

A. Adami, L. Burget, S. Dupont, H. Garudadri, F. Grezl, H. Hermansky, P. Jain, S. Kajarekar, N. Morgan, S. Sivadas, in Proc. ICSLP. Qualcomm-ICSI-OGI features for ASR (ISCA, Denver, 2002), pp. 4–7.

S. H. Yella, H. Bourlard, in 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Information bottleneck based speaker diarization of meetings using non-speech as side information (IEEE, Florence, 2014), pp. 96–100. https://doi.org/10.1109/ICASSP.2017.7953097.

I. Lapidot, A. Shoa, T. Furmanov, L. Aminov, A. Moyal, J.-F. Bonastre, Generalized Viterbi-based models for time-series segmentation and clustering applied to speaker diarization. Comput. Speech Lang. 45, 1–20 (2017).

Z. Zajic, M. Kunesova, V. Radova, Investigation of segmentation in i-vector based speaker diarization of telephone speech. Springer Int. Publ. 45, 411–418 (2016).

F. Castaldo, D. Colibro, E. Dalmasso, P. Laface, C. Vair, in IEEE International Conference on Acoustics, Speech and Signal Processing. Stream-based speaker segmentation using speaker factors and eigenvoices (IEEE, Las Vegas, 2008), pp. 4133–4136.

M. Senoussaoui, P. Kenny, T. Stafylakis, P. Dumouchel, A study of the cosine distance-based mean shift for telephone speech diarization. IEEE/ACM Trans. Audio Speech Lang. Process. 22(1), 217–227 (2013).

D. Povey, A. Ghoshal, G. Boulianne, L. Burget, O. Glembek, N. Goel, M. Hannemann, P. Motlicek, Y. Qian, P. Schwarz, J. Silovsky, G. Stemmer, K. Vesely, in IEEE 2011 Workshop on Automatic Speech Recognition and Understanding. The Kaldi speech recognition toolkit (IEEE, Waikoloa, 2011). IEEE Catalog No.: CFP11SRW-USB.

C. Vaquero, A. Ortega, A. Miguel, E. Lleida, Quality assessment for speaker diarization and its application in speaker characterization. IEEE Trans. Audio Speech Lang. Process. 21(4), 816–827 (2013).

E. Dalmasso, F. Castaldo, P. Laface, D. Colibro, C. Vair, in IEEE International Conference on Acoustics, Speech and Signal Processing. Loquendo - Politecnico di Torino’s 2008 NIST speaker recognition evaluation system (IEEE, Taipei, 2009), pp. 4213–4216.

F. Castaldo, D. Colibro, C. Vair, S. Cumani, P. Laface, in IEEE International Conference on Acoustics, Speech and Signal Processing. Loquendo - Politecnico di Torino’s 2010 NIST speaker recognition evaluation system (IEEE, Prague, 2011), pp. 4213–4216.

Acknowledgements

We would like to thank the editor and anonymous reviewers for their careful work and thoughtful suggestions that have helped improve this paper substantially.

Funding

The work was supported by the National Natural Science Foundation of China under Grant No. 61403224.

Author information


Contributions

The contributions of each author are as follows: LH proposed the LCM methods, score-level windowing, and the AHC initialization for speaker diarization. He implemented these systems in C++ (Aurora3 Project) and wrote the paper. XC conducted an extensive literature review, wrote the section on mainstream approaches and algorithms, and studied data-level windowing and HMM smoothing. CX ran the experiments on the databases using the code provided by LH, and recorded and analyzed the results. YL provided the comparative systems. JL gave advice. MTJ checked the whole article and polished the English.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

He, L., Chen, X., Xu, C. et al. Latent class model with application to speaker diarization. J AUDIO SPEECH MUSIC PROC. 2019, 12 (2019). https://doi.org/10.1186/s13636-019-0154-z


DOI: https://doi.org/10.1186/s13636-019-0154-z