Abstract

Target detection in hyperspectral images uses spatial and spectral information jointly to locate and identify targets. Methods for hyperspectral target detection fall into two main groups: supervised and unsupervised. Supervised methods assume that the target spectrum is known and identify the target from the spectral differences between it and the surrounding background. In ideal situations, supervised target detection algorithms perform better than unsupervised ones. However, current supervised algorithms have two main problems: they are affected by uncertainty in the ground object spectrum, and they generalize poorly. A hyperspectral target detection framework based on 3D–2D CNN and transfer learning is proposed to address these problems. The method first extracts multi-scale spectral information and then preprocesses the hyperspectral images using multiple spectral similarity measures. This preprocessing not only extracts spectral features in advance but also reduces the influence of complex environments to a certain extent. The preprocessed feature maps are used as input to the 3D–2D CNN, which learns deep features of the target, and a softmax layer outputs the detection results. The framework draws on the ideas of ensemble learning and transfer learning: it addresses spectral uncertainty with the combined similarity measures and the deep feature extraction network, and it addresses the poor robustness of traditional algorithms through model migration and parameter sharing. In experiments on both publicly available remote sensing hyperspectral images and measured land-based hyperspectral images, the area under the ROC curve of the proposed method exceeds 0.99.
The effectiveness and stability of the proposed method have been demonstrated through experiments. The method provides a feasible approach for the future development and application of specific target detection technology in hyperspectral images under different backgrounds.

Highlights

- An application-oriented land-based hyperspectral target detection framework based on 3D–2D CNN and transfer learning is proposed.

- Integrating multiple spectral similarity evaluation indicators to extract spectral features.

- Extracting spatial and spectral information from hyperspectral images using a 3D–2D network model.


1 Introduction

Hyperspectral imaging technology breaks through the limitations of two-dimensional imaging and acquires fine spectral information of a target together with its spatial image information [1,2,3]. Compared with traditional imaging methods, the advantage of hyperspectral images lies in their ability to accurately capture diagnostic spectral features of the target, which allows them to perform well in tasks such as pixel-level classification [4,5,6], scene classification [7], and object detection [8, 9]. Hyperspectral imaging technology is therefore applied in both civilian and military fields, such as medicine [10, 11], agriculture [12, 13], environmental monitoring [14], and camouflage target detection [15]. Because of limitations in imaging capability, traditional hyperspectral target detection focuses more on quantitative analysis of spectral information [16]. The orthogonal subspace projection (OSP) algorithm [17] projects the original image onto an orthogonal subspace, effectively suppressing background information. Harsanyi proposed the constrained energy minimization (CEM) algorithm [18], which designs a linear filter that suppresses the background in the image while passing the targets of interest. On this basis, detection algorithms based on the generalized likelihood ratio test (GLRT), the adaptive matched filter (AMF), and the adaptive cosine estimator (ACE) emerged [19,20,21]. Kernel-based detection algorithms combine the idea of kernel functions from machine learning with hyperspectral detection algorithms, making better use of the hidden nonlinear features in hyperspectral data. Many effective methods of this kind have been developed, such as KMF, KMSD, KASD, and KCEM [22,23,24,25,26]. However, their application is limited because there is no specific rule for selecting the kernel function.

In recent years, with the development of statistical pattern recognition and deep learning under big data, new data-driven target detection algorithms have begun to emerge. Deep learning-based methods can extract deep-level features of targets and play an important role in many stages of hyperspectral image processing, such as noise processing [27], mixed pixel decomposition [28], and classification [29]. Although deep learning-based hyperspectral target detection techniques have made breakthroughs, in most studies the samples used to train the neural network are drawn from the same hyperspectral image as the test data. In theory, the target spectral data used in supervised target detection should come from a spectral library rather than from the hyperspectral image itself. In our view, this practice therefore sidesteps the issue of spectral uncertainty to some extent and is not conducive to the application of hyperspectral target detection technology. Some researchers have recognized this problem and used transfer learning to address it [30, 31]. Overall, most existing research has focused on improving the model structure of deep neural networks, whereas this paper focuses more on the application of hyperspectral target detection technology.

A multi-scale spectral feature extraction method is adopted in the preprocessing to reduce the influence of spectral uncertainty on target detection. The 3D–2D CNN can extract comprehensive and effective information during feature extraction. In addition, to handle the complex and variable backgrounds encountered in practical applications, the proposed method uses multiple spectral similarity measures in place of a general dimension reduction method. This converts simple background features into the degree of similarity between the background and the target, reducing the impact of complex backgrounds on target detection. The proposed method offers a reference for the practical application of hyperspectral target detection and lays a foundation for the development of real-time hyperspectral target detection technology.

2 Method and principle

2.1 Hyperspectral images under land-based imaging conditions

Land-based hyperspectral imaging systems are hyperspectral imaging systems mounted on ground or near-ground imaging platforms. Land-based hyperspectral imaging differs greatly from traditional remote sensing imaging in terms of imaging platform, imaging environment, and target spectral characteristics. Li et al. analyzed the factors affecting spectral reflectance under land-based imaging conditions and used the control variable method to study the individual effects of solar zenith angle, detection zenith angle, and relative azimuthal angle on the spectral reflectance of features [32]. Figure 1 shows hyperspectral images acquired under the two imaging conditions, which illustrate their characteristics more intuitively.

Fig. 1 Remote sensing hyperspectral images and land-based hyperspectral images after PCA. a–c First, second, and third principal component images of the remote sensing hyperspectral image; d–f first, second, and third principal component images of the land-based hyperspectral image

In fact, the principle of hyperspectral imaging is the same on any imaging platform. The reflectivity of ground objects changes constantly with the external environment, so the spectrum of ground objects is uncertain. This spectral uncertainty makes it difficult to identify targets by a unique spectral curve, which poses great difficulties for accurate target detection and recognition. Compared to remote sensing images, the imaging process of land-based hyperspectral images is relatively simple, and the inherent laws of spectral feature changes are easier to analyze. The bidirectional reflection distribution function (BRDF) model is often used to analyze the variation pattern of ground reflectance [33]. In terms of real-time requirements, remote sensing hyperspectral imaging involves a relatively long chain of acquisition, storage, correction, data processing, and long-distance transmission, resulting in poor real-time performance. Hyperspectral imaging under land-based conditions mainly uses near-ground platforms and does not require complex processes such as atmospheric correction, and its applications place high demands on real-time performance.

2.2 Spectral similarity evaluation indicators and stacking learning

Generally speaking, spectral similarity measurement methods are divided into distance-based, projection-based, information-measure-based, and statistical-property-based methods. Common spectral similarity measures include the spectral angle, Euclidean distance, and correlation coefficient. These measures are simple to compute and have low computational complexity, but each evaluates only a single aspect of similarity and ignores the fact that different bands do not contribute equally to similarity, so their practicality on their own is very limited. Formulas (1)–(3) are the spectral angle similarity (SAM), the normalized Euclidean distance (NED), and the spectral correlation coefficient (CC), respectively [34, 35]. The spectral reflectance vectors of two different targets are denoted by X and Y, respectively.

In Formula (1), the generalized angle between X and Y is represented by \(\theta\), and n is the number of spectral bands. The smaller \(\theta\) is, the more similar the shapes of the two spectral curves.

In Formula (2), the distance between X and Y is represented by \(S\). The smaller \(S\) is, the more similar the two spectral curves.

In Formula (3), \(r\) is the correlation coefficient of X and Y. The larger \(r\) is, the stronger the correlation between X and Y.
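
For reference, the three measures are commonly written as follows, with \(X = (x_1, \ldots, x_n)\), \(Y = (y_1, \ldots, y_n)\), and \(\bar{x}\), \(\bar{y}\) the band means. These are the standard textbook forms and are given only as a sketch; in particular, the root-mean-square normalization shown for \(S\) is an assumption and may differ from the normalization used in Formula (2).

\[
\theta = \arccos\left( \frac{\sum_{i=1}^{n} x_i y_i}{\sqrt{\sum_{i=1}^{n} x_i^{2}}\,\sqrt{\sum_{i=1}^{n} y_i^{2}}} \right)
\]

\[
S = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( x_i - y_i \right)^{2}}
\]

\[
r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^{2}}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^{2}}}
\]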

The different similarity evaluation indicators consider various factors such as the difference in amplitude value, angle value, shape of spectral curves, and changes in internal information of spectral vectors. Compared to using only individual similarity metrics, the method of combining multiple metrics has better performance and can demonstrate stronger discrimination.
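
The point that different measures respond to different aspects of a spectrum can be illustrated with a small NumPy sketch. The function names, the 5-band spectra, and the root-mean-square form of the normalized distance are all assumptions made for illustration; they are not taken from the original implementation.

```python
import numpy as np

def spectral_angle(x, y):
    """Spectral angle in radians; smaller means more similar in shape."""
    cos = np.dot(x, y) / (np.linalg.norm(x) * np.linalg.norm(y))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

def normalized_euclidean(x, y):
    """Root-mean-square distance; the 1/n normalization is an assumption."""
    return float(np.sqrt(np.mean((x - y) ** 2)))

def correlation_coefficient(x, y):
    """Pearson correlation coefficient; larger means more similar."""
    xc, yc = x - x.mean(), y - y.mean()
    return float(np.dot(xc, yc) / (np.linalg.norm(xc) * np.linalg.norm(yc)))

def similarity_features(x, y):
    """Stack the three measures into one feature vector."""
    return np.array([
        spectral_angle(x, y),
        normalized_euclidean(x, y),
        correlation_coefficient(x, y),
    ])

# Hypothetical 5-band spectra: the same material under brighter illumination.
target = np.array([0.10, 0.20, 0.40, 0.30, 0.20])
brighter = 2.0 * target

features = similarity_features(target, brighter)
print(features)
```

In this example the spectral angle is essentially zero and the correlation coefficient is 1, because both ignore a uniform scaling of the spectrum, while the distance is clearly nonzero because it responds to amplitude. Combining the measures keeps both kinds of evidence.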

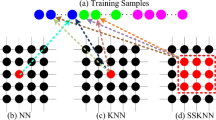

Stacking learning refers to integrating several different weak learners and training a metamodel to combine the outputs of these weak models as the final prediction result [36]. Figure 2 is the basic flowchart of stacking learning. This article draws inspiration from the core idea of stacking learning, uses different similarity evaluation indicators to obtain the feature maps to be input, and then uses the 3D–2D model as a metamodel to comprehensively utilize spatial spectral information.

2.3 3D convolution and multi-channel 2D convolution

3D convolution may appear to be the same as multi-channel 2D convolution, but there is a fundamental difference between the two. Figure 3 shows the principle of 3D convolution, in which the channel depth of the input layer must be greater than the depth of the convolutional kernel. The 3D filter moves in all three directions (height, width, and channel), and a multiply-and-accumulate operation produces one value at each position. Because the filter slides through a 3D space, the output values are also arranged in 3D, so the output is itself 3D data. 3D convolution can extract multidimensional features from data simultaneously and has been widely used in many fields [37,38,39]. For hyperspectral image processing, 3D convolution can extract the spatial and spectral information of targets at the same time, effectively improving the efficiency of classification and detection [40].

In multi-channel 2D convolution, the number of channels of the convolutional kernel is the same as the number of channels of the input, and the per-channel results are collapsed by summation. As shown in Fig. 4, the kernel of multi-channel 2D convolution can move only in the height and width directions of the input. Therefore, the main function of 2D convolution is to extract spatial information.
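
The difference in output shape between the two operations can be checked with a naive NumPy sketch. The functions below are illustrative, unoptimized loops written for this purpose (no padding, stride 1), and the cube and kernel sizes are hypothetical.

```python
import numpy as np

def conv3d_valid(volume, kernel):
    """Naive 3D convolution (cross-correlation, as in CNNs), no padding.
    volume: (D, H, W) with D the channel/spectral depth; kernel: (d, h, w)."""
    D, H, W = volume.shape
    d, h, w = kernel.shape
    out = np.zeros((D - d + 1, H - h + 1, W - w + 1))
    for k in range(out.shape[0]):          # slide along the channel axis
        for i in range(out.shape[1]):      # slide along height
            for j in range(out.shape[2]):  # slide along width
                out[k, i, j] = np.sum(volume[k:k + d, i:i + h, j:j + w] * kernel)
    return out

def conv2d_multichannel_valid(volume, kernel):
    """Naive multi-channel 2D convolution: the kernel spans all channels
    and the per-channel products are summed into a single 2D map."""
    D, H, W = volume.shape
    d, h, w = kernel.shape
    assert d == D, "kernel depth must equal the number of input channels"
    out = np.zeros((H - h + 1, W - w + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(volume[:, i:i + h, j:j + w] * kernel)
    return out

rng = np.random.default_rng(0)
cube = rng.random((30, 9, 9))                  # hypothetical 30-channel 9 x 9 patch
out3d = conv3d_valid(cube, rng.random((7, 3, 3)))
out2d = conv2d_multichannel_valid(cube, rng.random((30, 3, 3)))
print(out3d.shape)  # (24, 7, 7): the channel axis is preserved
print(out2d.shape)  # (7, 7): the channel axis is summed away
```

When the 3D kernel is as deep as the input, it has only one position along the channel axis, and the 3D result reduces to the multi-channel 2D result.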

2.4 Proposed method

As shown in Fig. 5, this article proposes an application-oriented hyperspectral target detection framework aimed at detecting specific targets at different times and against different backgrounds. The method consists of a training stage and a detection stage. The training stage can be roughly divided into three steps: acquisition of hyperspectral data, image preprocessing, and model parameter training.

Acquisition of hyperspectral data: Currently, obtaining land-based hyperspectral images mainly relies on field tripod imaging spectrometers or unmanned aerial vehicle imaging spectrometers. During the training phase, the obtained hyperspectral images should have both diversity and representativeness. In practical applications, the real-time nature of hyperspectral data acquisition should be emphasized.

Image preprocessing: The preprocessing of hyperspectral images refers to the process from the original hyperspectral images to the primary feature maps, which mainly includes two steps: multi-scale spectral feature extraction and spectral similarity calculation. Multi-scale spectral feature extraction can fully utilize spectral information from various bands and is widely used in hyperspectral image processing tasks [41, 42]. When extracting multi-scale spectral features, similarity calculations are performed using spectral vectors of different scales and steps to obtain feature maps.
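
A minimal sketch of this preprocessing idea is given below, using only the spectral angle for brevity. The window widths and steps, the choice of one feature map per spectral window, and the function names are assumptions made for illustration; the text does not specify how the scales are chosen or combined.

```python
import numpy as np

def spectral_angle_map(cube, target):
    """Per-pixel spectral angle between a cube (H, W, B) and a target spectrum (B,)."""
    dots = cube @ target
    norms = np.linalg.norm(cube, axis=2) * np.linalg.norm(target)
    cos = np.clip(dots / np.maximum(norms, 1e-12), -1.0, 1.0)
    return np.arccos(cos)

def multiscale_maps(cube, target, scales):
    """Compute one similarity map per spectral window.
    scales is a list of (width, step) pairs along the band axis."""
    n_bands = cube.shape[2]
    maps = []
    for width, step in scales:
        for start in range(0, n_bands - width + 1, step):
            window = slice(start, start + width)
            maps.append(spectral_angle_map(cube[:, :, window], target[window]))
    return np.stack(maps, axis=2)

rng = np.random.default_rng(0)
cube = rng.random((20, 20, 64))     # hypothetical image: 20 x 20 pixels, 64 bands
target = cube[5, 5].copy()          # hypothetical prior target spectrum

features = multiscale_maps(cube, target, scales=[(64, 64), (32, 32), (16, 16)])
print(features.shape)  # (20, 20, 7): 1 + 2 + 4 windows
```

Repeating the same procedure with the other similarity measures and concatenating the results along the last axis would give the stacked feature maps that serve as network input.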

Model parameter training: The feature extraction network used in this article draws inspiration from HybridSN [43]. The hyperspectral image data cube is divided into small overlapping 3D patches, and the truth label of each patch is determined by the label of its center pixel. The specific network structure is shown in Table 1.
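
The patch construction can be sketched as follows. The zero padding at the image border, the patch size, and the function name are assumptions for illustration; only the rule that each patch takes the label of its center pixel comes from the text.

```python
import numpy as np

def extract_patches(features, labels, patch_size):
    """Cut one overlapping patch per pixel from features (H, W, C).
    Each patch is labelled with the label of its center pixel.
    patch_size must be odd; borders are zero-padded (an assumption)."""
    margin = patch_size // 2
    padded = np.pad(features, ((margin, margin), (margin, margin), (0, 0)), mode="constant")
    H, W, C = features.shape
    patches = np.empty((H * W, patch_size, patch_size, C), dtype=features.dtype)
    patch_labels = np.empty(H * W, dtype=labels.dtype)
    idx = 0
    for r in range(H):
        for c in range(W):
            # In padded coordinates the window starting at (r, c) is centered on pixel (r, c).
            patches[idx] = padded[r:r + patch_size, c:c + patch_size]
            patch_labels[idx] = labels[r, c]
            idx += 1
    return patches, patch_labels

# Hypothetical 6 x 6 feature cube with 3 feature maps and a single target pixel.
features = np.arange(6 * 6 * 3, dtype=float).reshape(6, 6, 3)
labels = np.zeros((6, 6), dtype=int)
labels[2, 3] = 1

patches, y = extract_patches(features, labels, patch_size=5)
print(patches.shape, y.sum())  # (36, 5, 5, 3) 1
```

Each patch would then be passed to the network as one training sample, with an extra singleton axis added if the 3D convolution layer expects one.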

The trained model is used in the detection phase to obtain the detection results. To cope with the spectral uncertainty that the target exhibits under different conditions, the proposed framework employs a combined spectral similarity measure. To make full use of the spectral information, multi-scale spectral feature extraction is performed during preprocessing. The 3D–2D CNN model extracts deep information from the spatial and spectral dimensions of the target together, which further enhances the stability and detection performance of the framework.

2.5 Transfer learning and application system design

Transfer learning improves learning on a new task by transferring knowledge from related tasks that have already been learned. The proposed preprocessing method is used to extract target information, and the trained model is applied to subsequent target detection tasks. This part designs the application system from both the software and hardware aspects, as shown in Fig. 6. In terms of software, a practical solution was proposed to overcome the problems encountered, and the trained model was then encapsulated in hardware. In terms of hardware, the aims are to improve image processing speed and to provide real-time imaging and display. Classical hyperspectral target detection algorithms focus on designing better filters to extract targets, whereas the system designed here emphasizes convenience and real-time performance in application, which makes it more practical.

3 Experiments

Both a publicly available hyperspectral image dataset and a measured hyperspectral image dataset were used in the experiments. During preprocessing, each similarity evaluation method uses multi-scale spectral information to obtain 10 feature maps. The preprocessing process is shown in Fig. 7. The experiments first verified the effectiveness of the proposed method and then analyzed the stability of different target detection algorithms under spectral uncertainty. Finally, the test times of the different algorithms were compared and the results were discussed.

3.1 Experimental data

The public data used in the experiment were two subregion images from the San Diego dataset, which is widely used in target detection tasks. The image has a total of 400 × 400 pixels, each with 224 bands of information, with a spectral coverage ranging from 0.4 to 1.8 μm and a spatial resolution of 3.5 m. In the experiment, the 100 × 100 pixels in the upper left corner were used as the training set, represented by "data1," and the middle 200 × 200 pixels were used as the detection image, represented by "data2," as shown in Fig. 8. The corresponding target spectral averages are represented by "target1" and "target2." The aircraft targets contained in the two subimages are considered to be the same type of target under the influence of spectral uncertainty.

The measured data were captured in an area of Shijiazhuang City, Hebei Province, China, with targets of the same material as the aircraft model, as shown in Fig. 9. The two sets of data are represented by "data3" and "data4," respectively, and the corresponding target spectral averages are represented by "target3" and "target4." "data3" was captured on March 10, 2023, with an image size of 400 × 170 pixels. "data4" was captured on March 16, 2023, with an image size of 500 × 300 pixels.

3.2 Experiments on publicly available datasets

In the experiment on the public dataset, "data1" is used as training data and "data2" as the data to be detected. First, "target1" is used to compute the three spectral similarity measures over "data1," which capture different aspects of the target. Preliminary pre-matching detection results are obtained from the similarity between the spectral vector at each pixel of "data1" and the target spectral vector. As shown in Fig. 10, each row shows the results of one similarity measure at different scales. The preprocessed data of size 100 × 100 × 30 are used as the training input of the neural network model, with the corresponding labels as the output.

The advantage of the proposed method is that the spectrum of the target in the image under test does not need to be known in advance; instead, the idea of transfer learning is used to detect targets. Therefore, when detecting targets in the public dataset "data2," "target1" is used as prior information for preprocessing, as shown in Fig. 11.

The processing above yields the inputs for training and detection. Throughout the entire detection process, the proposed method did not use "target2," but instead migrated "target1" to the detection task. When "target1" is used to identify the target in "data2," the images obtained by the different algorithms are shown in Fig. 12. Figure 13 shows the ROC curves of the different algorithms, their AUC values are shown in Table 2, and their test times are shown in Table 3.

Even when "target1" is used to detect "data2," all the target detection methods are effective to some degree; in particular, the proposed method reaches an AUC value of 0.99 or above. The GLRT and SACE methods perform poorly, indicating that these two algorithms cope poorly with spectral uncertainty in target detection. The OSP and KCEM methods retain a certain degree of stability in the detection task. In terms of testing time, the OSP method is the fastest, while the proposed method takes longer.
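
For readers who want to reproduce this kind of comparison, the AUC of a detection map can be computed from per-pixel scores and ground-truth labels. The sketch below uses the rank-sum (Mann-Whitney) identity with plain NumPy; the function name and the toy scores are illustrative and are not the evaluation code or data used in the experiments.

```python
import numpy as np

def roc_auc(scores, labels):
    """Area under the ROC curve via the rank-sum identity.
    scores: detector outputs (higher means more target-like);
    labels: 1 for target pixels, 0 for background. Tied scores get average ranks."""
    scores = np.asarray(scores, dtype=float).ravel()
    labels = np.asarray(labels).ravel().astype(bool)
    order = np.argsort(scores, kind="mergesort")
    sorted_scores = scores[order]
    ranks = np.empty(len(scores), dtype=float)
    i = 0
    while i < len(scores):
        j = i
        while j + 1 < len(scores) and sorted_scores[j + 1] == sorted_scores[i]:
            j += 1
        ranks[order[i:j + 1]] = 0.5 * (i + j) + 1.0   # average 1-based rank of the tie group
        i = j + 1
    n_pos = int(labels.sum())
    n_neg = len(labels) - n_pos
    u = ranks[labels].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))

# Toy example: three target pixels and three background pixels.
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]
auc = roc_auc(scores, labels)
print(round(auc, 4))  # 0.8889, i.e. 8 of the 9 target/background pairs are ranked correctly
```

The value equals the probability that a randomly chosen target pixel scores higher than a randomly chosen background pixel, so it summarizes the ROC curve without fixing a threshold. It does not by itself reflect the false alarm rate at any particular operating point.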

3.3 Experiments on measured datasets

In the experiment on measured land-based hyperspectral image data, "data3" is used as training data and "data4" as the data to be detected. The preprocessing steps are the same as those for the public dataset and are not repeated here. When "target3" is used to recognize targets in "data4," the results obtained by the different algorithms are shown in Fig. 14. The ROC curves of the different algorithms are shown in Fig. 15, their AUC values are shown in Table 4, and their test times are shown in Table 5.

From the experimental results of measured hyperspectral data, it can be seen that the proposed method can still maintain a high AUC value and has good detection performance. From the perspective of AUC values alone, GLRT, SACE, and KCEM methods still have certain effectiveness in target detection of land-based hyperspectral images, but they are very limited. The detection performance of OSP method is the worst, indicating that this algorithm has poor ability to cope with spectral uncertainty in the process of target detection in land-based hyperspectral images.

3.4 Analysis of stability of different methods

This section examines the detection capability of the various target detection algorithms on remote sensing hyperspectral images and land-based hyperspectral images and analyzes the stability of the different algorithms in dealing with spectral uncertainty. Figure 16 shows the detection result images in various scenarios. Figure 17 shows the AUC values of the various detection methods.

In terms of detection capability, the OSP algorithm performs well on public remote sensing hyperspectral images and copes well with spectral uncertainty there. However, the OSP algorithm is clearly not suitable for target detection in land-based hyperspectral images. Among the comparison methods, the overall performance of the SACE and GLRT methods is not as good as that of the KCEM method. This may be due to the nonlinear features introduced in the KCEM method, which improve detection performance and stability. The proposed method achieves AUC values above 0.99 on the different hyperspectral images, combining high accuracy with stability.

3.5 Experimental results and discussion

The above experiment can be divided into two parts: one is based on publicly available hyperspectral datasets, and the other is based on measured hyperspectral datasets. In both parts of the experiment, the idea of target spectral migration was adopted. Compared to other hyperspectral target detection algorithms, the proposed method exhibits outstanding detection performance and stability. In summary, the experimental results can validate the following viewpoints.

1. There are significant differences in image processing between land-based hyperspectral images and remote sensing hyperspectral images. The two types of image exhibit their own characteristics in both the spatial and spectral dimensions. Early target detection algorithms were all aimed at remote sensing hyperspectral images, and the experiments show that these algorithms are not entirely applicable to target detection in land-based hyperspectral images. For example, the OSP algorithm achieves good results on remote sensing hyperspectral images, but its detection performance is poor on land-based hyperspectral images.

2. The proposed framework is largely unaffected by spectral uncertainty, and its detection results are more stable than those of the other supervised target detection algorithms. The proposed method uses the idea of ensemble learning and deep convolutional neural networks to effectively extract invariant features in the target spectrum. It shows outstanding detection performance and robustness on both remote sensing hyperspectral images and land-based hyperspectral images.

3. Many urgent problems remain to be solved in current methods for target detection in land-based hyperspectral images. Compared to hyperspectral remote sensing images, land-based hyperspectral images capture more detailed spatial structure information of targets, but they also contain richer and more complex information. For the measured hyperspectral data, although the AUC values are all very high, the detection images show that the false alarm rate of this framework is still high. On the one hand, this is due to the relatively simple composition of the training samples; on the other hand, it is determined by the characteristics of land-based hyperspectral images themselves, such as the influence of target shadows. In addition, the testing times show that the real-time performance of the proposed framework could be improved.

4 Conclusion

An application-oriented land-based hyperspectral target detection framework based on 3D–2D CNN and transfer learning was proposed in this study, and the detection performance and robustness of the method were demonstrated through experiments. The framework design adopts ensemble learning, transfer learning, multi-scale spectral feature extraction, a 3D–2D CNN model, and other strategies. The experiments confirm that the proposed target detection algorithm is robust across different types of hyperspectral images. Several areas remain for further development. Firstly, based on the analysis of the spectral characteristics of land-based hyperspectral images, more invariant spectral features should be sought to address spectral uncertainty. Secondly, the selection of training samples should balance quantity and representativeness. Thirdly, there is still considerable room for improvement in the real-time performance of the proposed method, and the feature extraction network needs to be improved to increase detection speed and find the best balance between detection speed and effectiveness. Fourthly, multiple evaluation indicators should be used to evaluate the effectiveness of target detection. Real-time imaging devices have now become a reality, and the proposed method provides a feasible solution for the future application of target detection in land-based hyperspectral images.

Availability of data and materials

The measured data belongs to the Army Engineering University and is not suitable for publication due to copyright protection. The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

M.J. Khan, H.S. Khan, A. Yousaf, K. Khurshid, A. Abbas, Modern trends in hyperspectral image analysis: a review. IEEE Access 6, 14118–14129 (2018). https://doi.org/10.1109/ACCESS.2018.2812999

S. Hussain, B. Lall, Depth separable-CNN for improved spectral super-resolution. IEEE Access 11, 23063–23072 (2023)

D. Lupu, I. Necoara, J.L. Garrett, T.A. Johansen, Stochastic higher-order independent component analysis for hyperspectral dimensionality reduction. IEEE Trans. Comput. Imaging 8, 1184–1194 (2022)

S. Li, W. Song, L. Fang, Y. Chen, P. Ghamisi, J.A. Benediktsson, Deep learning for hyperspectral image classification: an overview. IEEE Trans. Geosci. Remote Sens. 57(9), 6690–6709 (2019). https://doi.org/10.1109/TGRS.2019.2907932

X. He, Y. Chen, L. Huang, Bayesian deep learning for hyperspectral image classification with low uncertainty. IEEE Trans. Geosci. Remote Sens. 61, 1–16 (2023)

L. Yang, H. Su, C. Zhong, Z. Meng, H. Luo, X. Li, Y.Y. Tang, Y. Lu, Hyperspectral image classification using wavelet transform-based smooth ordering. Int. J. Wavelets Multiresolut. Inf. Process 17(6), 1950050 (2019)

C. Zhong, J. Zhang, S. Wu, Y. Zhang, Cross-scene deep transfer learning with spectral feature adaptation for hyperspectral image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 13, 2861–2873 (2020). https://doi.org/10.1109/JSTARS.2020.2999386

X. Shang et al., Target-constrained interference-minimized band selection for hyperspectral target detection. IEEE Trans. Geosci. Remote Sens. 59(7), 6044–6064 (2021). https://doi.org/10.1109/TGRS.2020.3010826

H. Gao, Y. Zhang, Z. Chen, S. Xu, D. Hong, B. Zhang, A multidepth and multibranch network for hyperspectral target detection based on band selection. IEEE Trans. Geosci. Remote Sens. 61, 1–18 (2023)

R. Pike, G. Lu, D. Wang, Z.G. Chen, B. Fei, A minimum spanning forest-based method for noninvasive cancer detection with hyperspectral imaging. IEEE Trans. Biomed. Eng. 63(3), 653–663 (2016). https://doi.org/10.1109/TBME.2015.2468578

Q. Hao et al., Fusing multiple deep models for in vivo human brain hyperspectral image classification to identify glioblastoma tumor. IEEE Trans. Instrum. Meas. 70, 1–14 (2021). https://doi.org/10.1109/TIM.2021.3117634

D. Min, J. Zhao, G. Bodner, M. Ali, F. Li, X. Zhang, B. Rewald, Early decay detection in fruit by hyperspectral imaging–principles and application potential. Food Control (2023). https://doi.org/10.1016/j.foodcont.2023.109830

J. Wieme, K. Mollazade, I. Malounas, M. Zude-Sasse, M. Zhao, A. Gowen, D. Argyropoulos, S. Fountas, J. Van Beek, Application of hyperspectral imaging systems and artificial intelligence for quality assessment of fruit, vegetables and mushrooms: a review. Biosyst. Eng. 222, 156–176 (2022). https://doi.org/10.1016/j.biosystemseng.2022.07.013

S. Fadnavis, A. Sagalgile, S. Sonbawne et al., Comparison of ozonesonde measurements in the upper troposphere and lower Stratosphere in Northern India with reanalysis and chemistry-climate-model data. Sci. Rep. 13, 7133 (2023). https://doi.org/10.1038/s41598-023-34330-5

J. Zhao, B. Zhou, G. Wang, J. Liu, J. Ying, Camouflage target recognition based on dimension reduction analysis of hyperspectral image regions. Photonics 9, 640 (2022). https://doi.org/10.3390/photonics9090640

Y. Zhang, B. Du, Y. Zhang, L. Zhang, Spatially adaptive sparse representation for target detection in hyperspectral images. IEEE Geosci. Remote Sens. Lett. 14(11), 1923–1927 (2017). https://doi.org/10.1109/LGRS.2017.2732454

T. Tu, C. Chen, C. Chang, A noise subspace projection approach to target signature detection and extraction in an unknown background for hyperspectral images. IEEE Trans. Geosci. Remote Sens. 36(1), 171–181 (1998)

W.H. Farrand, J.C. Harsanyi, Mapping the distribution of mine tailings in the Coeur d’Alene river valley, Idaho, through the use of a constrained energy minimization technique. Remote Sens. Environ. 59, 64–76 (1997). https://doi.org/10.1016/s0034-4257(96)00080-6

S.M. Kay, Fundamentals of Statistical Signal Processing. Detection Theory, vol. 2 (Prentice-Hall, Englewood Cliffs, 1998)

D.G. Manolakis, G.A. Shaw, N. Keshava, Comparative analysis of hyperspectral adaptive matched filter detectors. Proc. SPIE 4049, 2–17 (2000)

D. Manolakis, D. Marden, G.A. Shaw, Hyperspectral image processing for automatic target detection applications. Lincoln Lab. J. 14(1), 79–116 (2003)

J. Du, Z. Li, A hyperspectral target detection framework with subtraction pixel pair features. IEEE Access 6, 45562–45577 (2018)

L. Zhang, Advance and future challenges in hyperspectral target detection. Geomat. Inf. Sci. Wuhan Univ. 39(12), 1387–1394 (2014)

H. Kwon, N.M. Nasrabadi, Kernel spectral matched filter for hyperspectral imagery. Int. J. Comput. Vis. 71(2), 127–141 (2007)

H. Kwon, N.M. Nasrabadi, Kernel matched subspace detectors for hyperspectral target detection. IEEE Trans. Pattern Anal. Mach. Intell. 28(2), 178–194 (2006)

H. Kwon, N.M. Nasrabadi, Kernel adaptive subspace detector for hyperspectral imagery. IEEE Geosci. Remote Sens. Lett. 3(2), 271–275 (2006)

Z.-S. Luo, X.-L. Zhao, T.-X. Jiang, Y.-B. Zheng, Y. Chang, Hyperspectral mixed noise removal via spatial-spectral constrained unsupervised deep image prior. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 14, 9435–9449 (2021). https://doi.org/10.1109/JSTARS.2021.3111404

V.S. Deshpande, J.S. Bhatt, A practical approach for hyperspectral unmixing using deep learning. IEEE Geosci. Remote Sens. Lett. 19, 1–5 (2022). https://doi.org/10.1109/LGRS.2021.3127075

Y. Ma, Z. Liu, C.L.P. Chen, Multiscale random convolution broad learning system for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 19, 1–5 (2022). https://doi.org/10.1109/LGRS.2021.3060876

Z.A. Lone, A.R. Pais, Object detection in hyperspectral images. Digit. Signal Process. 131, 103752 (2022). https://doi.org/10.1016/j.dsp.2022.103752

Z. Li, J. Li, P. Zhang, L. Zheng, Y. Shen, Q. Li, X. Li, T. Li, A transfer-based framework for underwater target detection from hyperspectral imagery. Remote Sens. 15, 1023 (2023). https://doi.org/10.3390/rs15041023

B. Zhou, B. Li, X. He, H. Liu, F. Wang, Analysis of typical ground objects and camouflage spectral influence factors under land-based conditions. Spectrosc. Spectr. Anal. 41(9), 2956–2961 (2021)

W. Zhu, D. You, J. Wen, Y. Tang, B. Gong, Y. Han, Evaluation of linear kernel-driven BRDF models over snow-free rugged terrain. Remote Sens. 15, 786 (2023). https://doi.org/10.3390/rs15030786

D. Manolakis, G. Shaw, Detection algorithms for hyperspectral imaging applications. IEEE Signal Process. Mag. 19, 29–43 (2002). https://doi.org/10.1109/79.974724

C. Jiang, J. Zhao, Y. Ding, G. Li, Vis–NIR spectroscopy combined with GAN data augmentation for predicting soil nutrients in degraded alpine meadows on the Qinghai-Tibet Plateau. Sensors 23, 3686 (2023). https://doi.org/10.3390/s23073686

C. Tao, H. Pan, Y. Li, Z. Zou, Unsupervised spectral-spatial feature learning with stacked sparse autoencoder for hyperspectral imagery classification. IEEE Geosci. Remote Sens. Lett. 12(12), 2438–2442 (2015). https://doi.org/10.1109/LGRS.2015.2482520

G. Zhu, L. Zhang, P. Shen, J. Song, S.A.A. Shah, M. Bennamoun, Continuous gesture segmentation and recognition using 3DCNN and convolutional LSTM. IEEE Trans. Multimed. 21(4), 1011–1021 (2019). https://doi.org/10.1109/TMM.2018.2869278

Y. Liu, T. Zhang, Z. Li, 3DCNN-based real-time driver fatigue behavior detection in urban rail transit. IEEE Access 7, 144648–144662 (2019). https://doi.org/10.1109/ACCESS.2019.2945136

Y. Jing, J. Hao, P. Li, Learning spatiotemporal features of CSI for indoor localization with dual-stream 3D convolutional neural networks. IEEE Access 7, 147571–147585 (2019). https://doi.org/10.1109/ACCESS.2019.2946870

Y. Li, H. Zhang, Q. Shen, Spectral-spatial classification of hyperspectral imagery with 3D convolutional neural network. Remote Sens. 9(1), 67 (2017). https://doi.org/10.3390/rs9010067

D. Zeng, S. Zhang, F. Chen, Y. Wang, Multi-scale CNN based garbage detection of airborne hyperspectral data. IEEE Access 7, 104514–104527 (2019). https://doi.org/10.1109/ACCESS.2019.2932117

X. Zheng, Y.Y. Tang, J. Zhou, A framework of adaptive multiscale wavelet decomposition for signals on undirected graphs. IEEE Trans. Signal Process. 67(7), 1696–1711 (2019). https://doi.org/10.1109/TSP.2019.2896246

S.K. Roy, G. Krishna, S.R. Dubey, B.B. Chaudhuri, HybridSN: exploring 3-D–2-D CNN feature hierarchy for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 17(2), 277–281 (2020). https://doi.org/10.1109/LGRS.2019.2918719

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

JZ was responsible for conceptualization, data curation, and writing the original draft. BZ contributed to conceptualization, methodology, and software. GW and JL contributed to visualization, investigation, and supervision. JY, JL, and BZ provided resources and supervision.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, J., Wang, G., Zhou, B. et al. Exploring an application-oriented land-based hyperspectral target detection framework based on 3D–2D CNN and transfer learning. EURASIP J. Adv. Signal Process. 2024, 37 (2024). https://doi.org/10.1186/s13634-024-01136-0

DOI: https://doi.org/10.1186/s13634-024-01136-0