Abstract

This paper studies the estimation problem for multisensor networked systems with mixed uncertainties, which include colored noises, the same multiplicative noises in the system parameter matrices, uncertain noise variances, as well as one-step random delay (OSRD) and packet dropouts (PDs). The study uses the centralized fusion (CF) algorithm to combine all the information received from each sensor, which improves the accuracy of the estimation. By using the augmentation method, the de-randomization method, and the fictitious noise technique, the original uncertain system is transformed into an augmented model with only uncertain noise variances. Then, for all uncertainties within the allowable range, the robust CF steady-state Kalman estimators (predictor, filter, and smoother) are presented based on the worst-case CF system, in light of the minimax robust estimation principle. To demonstrate the robustness of the proposed CF estimators, the non-negative definite matrix decomposition method and the Lyapunov equation approach are employed. It is proved that the robust accuracy of the CF estimator is higher than that of each local estimator. Finally, a simulation example applied to the uninterruptible power system (UPS) with colored noises and multiple uncertainties illustrates the effectiveness of the proposed CF robust estimation algorithm.

1 Introduction

1.1 Background

Multisensor information fusion technology uses a computer to automatically analyze and synthesize the data from each sensor under certain criteria, so as to complete the required decision-making and estimation [1]. In recent years, multisensor information fusion estimation has received considerable attention because its accuracy and stability are significantly better than those of a single-sensor system. According to whether raw data are directly utilized by the system, the two most common information fusion techniques are distributed fusion and CF [2].

The Kalman filtering method is a powerful tool in the estimation field because it is convenient to implement on a computer. It is an algorithm that uses the linear system state equation to estimate the state from the system input and output observation data. Since the observation data are corrupted by noise and disturbances in the system, the optimal estimation can also be regarded as a filtering process. For the conventional Kalman filtering approach to work, the model parameters and noise variances of the system must be known precisely [3]. However, this condition often fails in engineering applications because uncertainties such as stochastic parameters, uncertain perturbations, and unmodeled dynamics appear in the system. One of the best-known approaches to dealing with these uncertainties is the robust Kalman filter [4], which is adopted here for its reliability and validity. The key characteristic of a robust filter is that its actual filtering error variances are guaranteed to have a minimal upper bound for all admissible uncertainties.

State-dependent and noise-dependent multiplicative noises are the most common means of describing stochastic parameter uncertainties [5,6,7]. Some previous studies rely on the assumption that the state-dependent and noise-dependent multiplicative noises in the system model are completely different. The current study considers the same multiplicative noises in the system parameter matrices, which allows this unsettled case to be addressed.

Additionally, it is usually assumed that the noise in uncertain systems is white noise. However, in engineering practice, the system is often disturbed by colored noise. Colored noise is also called self-correlated noise, that is, the value of the noise at each time is not independent of, but correlated with, its values at earlier times [8, 9]. There are generally two methods for dealing with the state estimation problem with colored noise: one is to transform the system into a new form with uncorrelated noise and then obtain the estimator by applying the filter algorithm; the other is to directly construct a general estimation algorithm under the colored noise. The uncertainties of noise variances can be described as deterministic uncertainties. We can assume that the actual uncertain noise variances have known conservative upper bounds, because the noise variance matrices are positive semi-definite [10, 11].

At present, filtering for mixed uncertain networked systems with colored noises is an active research field. In past years, many researchers have studied systems with colored noises in the observation equation or state equation, but few have focused on the case where colored noises and uncertain noise variances exist simultaneously.

Compared with the traditional point-to-point control mode, the networked system reduces the system wiring, saves system design cost, and enhances system maintainability, interactivity, and fault diagnosis ability [12,13,14]. It has been applied in many fields, and networked control has become one of the core topics in the international control field. However, due to the limited bandwidth and energy in the communication process, random uncertainties such as random sensor delays, PDs, and missing observations inevitably arise [15,16,17,18]. Using Bernoulli random variables with values of 0 or 1 is one of the common methods of describing the uncertainty in networked systems [19,20,21].

1.2 Related work

Over the past years, a great deal of research into robust or optimal state estimation has focused on the multisensor networked systems with mixed uncertainties [8, 9, 22,23,24,25,26,27,28,29].

For uncertain multi-rate sampled-data systems with norm-bounded uncertain parameters, stochastic nonlinearities, and colored observation noises, a new fusion estimation scheme was proposed in [8] with the help of the covariance intersection method, and its consistency was shown. However, [8] does not deal with multiplicative noises or networked random uncertainties. For uncertain networked systems with state-dependent multiplicative noises, time-correlated additive noises, and PDs, [9] designed optimal linear recursive full-order state estimators on the basis of the linear minimum variance (LMV) criterion. However, [9] does not address random sensor delays or noise-dependent multiplicative noises. Using the neighboring information from each sensor, [22] proposed distributed filters for multisensor systems with fading observations and time-correlated observation noises. However, random sensor delays and multiplicative noises are not considered in [22]. By utilizing the Lévy–Ito theorem, [23] designed a modified recursive Tobit Kalman filter for discrete time-varying systems with non-Gaussian Lévy and time-correlated additive observation noises. However, [23] does not study multiplicative noises or networked random uncertainties. For linear discrete time-varying stochastic systems with multiple PDs and colored observation noises, optimal estimators (filter, predictor, and smoother) were developed in [24] from the estimated observation values via an innovation analysis approach. However, [24] does not consider random sensor delays or multiplicative noises in the system models. In the sense of minimum mean-square error, [25] established recursive state estimation algorithms for systems with OSRD, PDs, and time-correlated multiplicative noises. However, [25] does not consider state-dependent and noise-dependent multiplicative noises in the system models.

Based on the transformed observations, [26] introduced recursive distributed and CF estimation algorithms for multisensor systems with time-correlated observation noises in both the sensor outputs and the transmission connections. However, [26] does not take noise-dependent multiplicative noises or random sensor delays into account. For systems with multiplicative and time-correlated additive observation noises, a convergence condition for the optimal linear estimator is obtained in [27]. However, [27] does not take noise-dependent multiplicative noises or networked random uncertainties into account. For multisensor systems with random parameter matrices, colored observation noises, uncertain observations, random sensor delays, and PDs, the optimal linear CF estimators are obtained in [28] via an innovation approach. However, in [28], the uncertainties in the system model do not include noise-dependent multiplicative noises.

According to the linear minimum mean square error criterion, [29] proposed an optimal state estimator for discrete-time linear systems with multiplicative observation noises and time-correlated additive observation noise. However, [29] does not address multiplicative noises in the state matrix, noise-dependent multiplicative noises, or networked random uncertainties.

Additionally, the studies in [8, 9, 22,23,24,25,26,27,28,29] all assume that the noise variances are exactly known. In many cases, however, this assumption is not valid.

1.3 Innovation

The main innovations of this paper are as follows:

1. The paper gives an innovative and comprehensive multisensor networked system model, which simultaneously considers colored noises, multiplicative noises, OSRD, PDs, and uncertain noise variances. Previous studies generally assumed that the noise in the systems was white, and few studies focused on the robust estimation problem with colored noises.

2. By using the augmentation method, the de-randomization method, and the fictitious noise technique, as well as defining some perturbation direction matrices, the original system with colored noises and multiple uncertainties is transformed into an augmented CF system with only uncertain noise variances. In light of the minimax robust estimation principle, the robust CF steady-state Kalman estimators are proposed.

3. By employing a mixed approach, which consists of the non-negative definite matrix decomposition method and the Lyapunov equation approach, the robustness of the CF estimators for all allowable uncertainties is proved.

4. A simulation example applied to the UPS with colored noises and mixed uncertainties is given, which verifies the effectiveness and applicability of the proposed method.

Nomenclature

| Symbol | Meaning |
|---|---|
| \(R^{n}\) | n-dimensional Euclidean space |
| diag(·) | Diagonal matrix |
| \(\Lambda^{\text T}\) | Transpose of matrix Λ |
| Prob(·) | Occurrence probability of event “·” |
| \(\text{tr}(\Lambda)\) | Trace of matrix Λ |
| E[·] | Mathematical expectation operator |
| \(R^{n \times n}\) | Set of n × n real matrices |
| \(\otimes\) | Kronecker product |
| \(\Lambda^{-1}\) | Inverse of matrix Λ |
| \(I_{n}\) | n × n identity matrix |
| ρ(Λ) | Spectral radius of matrix Λ |
| 0 | Zero matrix with suitable dimension |

2 Problem statement

The system model under study is as follows:

where \(x(t) \in R^{n}\) is the state to be estimated, \(z_{i} (t) \in R^{{m_{i} }}\) is the observation of ith sensor, \(y_{i} (t) \in R^{{m_{i} }}\) is the observation received by the estimator in network, \(w \left( t \right) \in R^r\) is the colored noise, \(g_{i} (t) \in R^{{m_{i} }} ,i = 1, \ldots ,L\) , and \(\eta (t) \in R^{r}\) are the additive white noises, \(\xi_{k} (t) \in R^{1} ,k = 1, \ldots ,q\) are the multiplicative noises. \(\Phi \in R^{n \times n} ,\Phi_{k} \in R^{n \times n} ,\Gamma \in R^{n \times r} ,\Gamma_{k} \in R^{n \times r} ,H_{i} \in R^{{m_{i} \times n}} ,H_{ik} \in R^{{m_{i} \times n}} ,C_{i} \in R^{{m_{i} \times r}} ,C_{ik} \in R^{{m_{i} \times r}}\) and \(D \in R^{r \times r}\) are known constant matrices with suitable dimensions, q is the number of multiplicative noises, L is the number of sensors.
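To make the notion of colored noise concrete, the following minimal sketch assumes a scalar first-order autoregression \(w(t + 1) = Dw(t) + \eta (t)\) driven by white noise. This form is an assumption made for illustration; it is consistent with \(D \in R^{r \times r}\) and \(\eta (t) \in R^{r}\) above with r = 1. The values D = 0.405 and white-noise variance 0.3 are borrowed from the simulation section, and the function name `ar1_autocovariance` is hypothetical.

```python
import numpy as np

def ar1_autocovariance(D, var_eta, max_lag):
    """Stationary autocovariance of w(t+1) = D*w(t) + eta(t) for lags 0..max_lag.

    Assumes a scalar model with |D| < 1 and white eta of variance var_eta.
    """
    if abs(D) >= 1:
        raise ValueError("stationarity requires |D| < 1")
    var_w = var_eta / (1.0 - D**2)          # steady-state variance of w(t)
    return np.array([var_w * D**k for k in range(max_lag + 1)])

# Nonzero values at lags >= 1 are what distinguishes colored from white noise.
acov = ar1_autocovariance(D=0.405, var_eta=0.3, max_lag=3)
```

Under this assumption the correlation between samples k steps apart decays as \(D^{k}\), so a white noise is recovered only when D = 0.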

Hypothesis 1

The probabilities of the mutually uncorrelated scalar Bernoulli white noises \(\zeta_{i} (t) \in R^{1} ,i = 1, \ldots ,L\) are

where \(\varsigma_{i} ,i = 1, \ldots ,L\) are known and \(0 \le \varsigma_{i} \le 1\), and \(\zeta_{i} (t)\) are uncorrelated with other stochastic signals.

The following results can be obtained from Hypothesis 1:

Equation (4) describes the uncertainties in the networked system, including OSRD and PDs. If \(\zeta_{i} (t) = 1\), then \(y_{i} (t) = z_{i} (t)\) (no OSRD or PDs); if \(\zeta_{i} (t) = 0\) and \(\zeta_{i} (t - 1) = 0\), then \(y_{i} (t) = z_{i} (t - 1)\) (OSRD); if \(\zeta_{i} (t) = 0\) and \(\zeta_{i} (t - 1) = 1\), then \(y_{i} (t) = 0\) (PDs).
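The three cases can be checked with a short sketch. It assumes the received observation has the form \(y_{i} (t) = \zeta_{i} (t)z_{i} (t) + (1 - \zeta_{i} (t))(1 - \zeta_{i} (t - 1))z_{i} (t - 1)\), which is one expression that reproduces all three cases; the function name `received_observations` and the handling of the initial sample are illustrative assumptions.

```python
import numpy as np

def received_observations(z, zeta):
    """Apply the delay/dropout rule to a scalar observation sequence.

    y(t) = zeta(t)*z(t) + (1 - zeta(t))*(1 - zeta(t-1))*z(t-1) for t >= 1.
    """
    z = np.asarray(z, dtype=float)
    zeta = np.asarray(zeta, dtype=int)
    y = np.zeros_like(z)
    y[0] = zeta[0] * z[0]  # assumption: no earlier sample is available at t = 0
    for t in range(1, len(z)):
        y[t] = zeta[t] * z[t] + (1 - zeta[t]) * (1 - zeta[t - 1]) * z[t - 1]
    return y

z = [1.0, 2.0, 3.0, 4.0, 5.0]
zeta = [1, 0, 0, 1, 0]
y = received_observations(z, zeta)
# t=0: on time; t=1: zeta(1)=0, zeta(0)=1 -> dropout
# t=2: zeta(2)=0, zeta(1)=0 -> delayed sample z(1); t=3: on time
# t=4: zeta(4)=0, zeta(3)=1 -> dropout
```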

Hypothesis 2

\(\eta (t), g_{i} (t),i = 1, \ldots ,L\) and \(\xi_{k} (t),k = 1, \ldots ,q\) are mutually uncorrelated white noises with zero means. \(\text E\left[ {\eta (t)\eta^{\text T} (u)} \right] = \overline{R}_{\eta } \delta_{tu} ,\text E\left[ {g_{i} (t)g_{j}^{\text T} (u)} \right] = \overline{R}_{{g_{i} }} \delta_{ij} \delta_{tu}\) and \(\text E\left[ {\xi_{k} (t)\xi_{h}^{\text T} (u)} \right] = \overline{\sigma }_{{\xi_{k} }}^{2} \delta_{kh} \delta_{tu}\) are, respectively, their covariances, where the unknown uncertain actual (true) variances are, respectively, \(\overline{R}_{\eta } ,\overline{R}_{{g_{i} }}\) and \(\overline{\sigma }_{{\xi_{k} }}^{2}\). The Kronecker delta function δkj is defined as \(\delta_{kk} = 1,\delta_{kj} = 0(k \ne j)\).

Hypothesis 3

x(0) is uncorrelated with \(\eta (t),g_{i} (t),\xi_{k} (t)\), and \(\zeta_{i} (t)\), and \(\text E\left[ {x(0)} \right] = \mu_{0} ,\text E\left[ {(x(0) - {{{\mu}}}_{0} )(x(0) - {{{\mu}}}_{0} )^{{\text{T}}} } \right] = \overline{P}_{0}\).

Hypothesis 4

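\(\overline{R}_{\eta } ,\overline{R}_{{g_{i} }} ,\overline{\sigma }_{{\xi_{k} }}^{2}\) and \(\overline{P}_{0}\) have, respectively, known conservative upper bounds \(R_{\eta } ,R_{{g_{i} }} ,\sigma_{{\xi_{k} }}^{2}\), and \(P_{0}\), that is,

\(\overline{R}_{\eta } \le R_{\eta } ,\quad \overline{R}_{{g_{i} }} \le R_{{g_{i} }} ,\quad \overline{\sigma }_{{\xi_{k} }}^{2} \le \sigma_{{\xi_{k} }}^{2} ,\quad \overline{P}_{0} \le P_{0} \quad (6)\)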

If the noise variances in system (1)–(4) take the values \(R_{\eta } ,R_{{g_{i} }} ,\sigma_{{\xi_{k} }}^{2}\), and \(P_{0}\), then the system (1)–(4) is called the “worst-case” system. The minimax robust estimation principle means designing the minimum variance estimator for the “worst-case” system. The purpose of this paper is to introduce robust estimators for the state x(t) via the minimax robust estimation principle.

The meaning of robustness is that there are the minimal upper bounds Pc(N) for the actual CF steady-state estimation error variances \(\overline{P}_{c} (N)\), i.e., \(\overline{P}_{c} (N) \le P_{c} (N)\).

3 Methods

3.1 Augmented CF system

To begin this process, a new vector \(\delta_{i} (t)\) is introduced, which is defined as follows:

combining (2) and (7), we get that

meanwhile, combining (2), (4), and (7), the local observations yi(t) given by (4) can be converted into the following form:

The corresponding CF observations can be obtained by, respectively, combining the local observations given by (8) and (9)

where

Combining (1), (3), (10), and (11), the following augmented CF system can be obtained

where

By means of the de-randomization method, the system (13) and (14) with random parameter matrices can be transformed into the following system with constant parameter matrices and multiplicative noises

where

Note that the matrices \(N_{i} \in R^{m \times m}\) are given by \(N_{1} = {\text{diag}}\left( {I_{{m_{1} }} \quad (0)_{{m_{2} \times m_{2} }} \quad \ldots \quad (0)_{{m_{L} \times m_{L} }} } \right)\), \(N_{2} = {\text{diag}}\left( {(0)_{{m_{1} \times m_{1} }} \quad I_{{m_{2} }} \quad (0)_{{m_{3} \times m_{3} }} \quad \ldots \quad (0)_{{m_{L} \times m_{L} }} } \right)\), and so on. In addition, utilizing (5), the statistical properties of \(\zeta_{iz} (t)\) are as follows:

Lemma 1

[10] Let Ri be the \(m_{i} \times m_{i}\) positive semi-definite matrix, i.e., \(R_{i} \ge 0\), then the block-diagonal matrix \(R_{\delta } = {\text{diag}}(R_{1} , \ldots ,R_{L} )\) is also positive semi-definite, i.e., \(R_{\delta } = {\text{diag}}(R_{1} , \ldots ,R_{L} ) \ge 0\).
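Lemma 1 can be illustrated numerically. The sketch below is only an illustration under stated assumptions: it uses the scalar actual and conservative sensor-noise variances chosen later in the simulation section, and the helper names `block_diag` and `is_psd` are hypothetical.

```python
import numpy as np

def block_diag(*blocks):
    """Place square blocks along the diagonal of an otherwise zero matrix."""
    blocks = [np.atleast_2d(np.asarray(b, dtype=float)) for b in blocks]
    n = sum(b.shape[0] for b in blocks)
    out = np.zeros((n, n))
    k = 0
    for b in blocks:
        m = b.shape[0]
        out[k:k + m, k:k + m] = b
        k += m
    return out

def is_psd(M, tol=1e-12):
    """True if the symmetrized matrix has no eigenvalue below -tol."""
    return bool(np.all(np.linalg.eigvalsh((M + M.T) / 2) >= -tol))

# Actual and conservative variances of g_1, g_2, g_3 from the simulation section.
R_g_actual = block_diag(0.16, 0.13, 0.5)
R_g_conservative = block_diag(0.2, 0.25, 0.8)

# Each diagonal block of the difference is non-negative, so the whole
# block-diagonal difference is positive semi-definite.
gap = R_g_conservative - R_g_actual
```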

From (12), the actual variances \(\overline{R}_{g}^{(c)} = {\text{diag}}\left( {\overline{R}_{{g_{1} }} , \ldots ,\overline{R}_{{g_{L} }} } \right)\) and conservative variances \(R_{g}^{(c)} = {\text{diag}}\left( {R_{{g_{1} }} , \ldots ,R_{{g_{L} }} } \right)\) of \(g^{(c)} (t)\) can be obtained. According to Lemma 1, subtracting the actual variances \(\overline{R}_{g}^{(c)}\) from the conservative variances \(R_{g}^{(c)}\) yields the following inequality:

From (15), for the white noise \(w_{a} (t)\), we get its actual variances \(\overline{Q}_{a} = {\text{diag}}\left( {\overline{R}_{\eta } ,\overline{R}_{g}^{(c)} } \right)\) and conservative variances \(Q_{a} = {\text{diag}}\left( {R_{\eta } ,R_{g}^{(c)} } \right)\). Similarly, based on Lemma 1, subtracting \(\overline{Q}_{a}\) from \(Q_{a}\) and utilizing (6) and (19), the following relationship can be obtained:

3.2 Actual and conservative state second order non-central moments

On the basis of the form of xa(t) from (16), the actual second order non-central moments \(\overline{X}_{a} (t)\) and conservative value Xa(t) can be calculated

\(\overline{X}_{a} (0) = {\text{diag}}\left( {\begin{array}{*{20}c} {\overline{X}(0)} & {\left( 0 \right)_{r \times r} } & {\left( 0 \right)_{m \times m} } \\ \end{array} } \right),\overline{X}(0) = \overline{P}_{0} + \mu_{0} \mu_{0}^{{\text{T}}}\), and \(X_{a} (0) = {\text{diag}}\left( {\begin{array}{*{20}c} {X(0)} & {\left( 0 \right)_{r \times r} } & {\left( 0 \right)_{m \times m} } \\ \end{array} } \right),X(0) = P_{0} + \mu_{0} \mu_{0}^{{\text{T}}}\) are, respectively, the initial values of \(\overline{X}_{a} (t)\) and Xa(t).

Lemma 2

Under the conditions of Hypothesis 4, the relationship between actual and conservative state second order non-central moments of the state xa(t) can be obtained.

Proof

The proof is completely similar to that of Lemma 5 in [6] and is omitted here. □

Lemma 3

Under the conditions of Hypotheses 1–4, if \(\rho (\overline{\Phi }) < 1,\overline{\Phi } = \Phi_{a}^{m} \otimes \Phi_{a}^{m} + \sum\limits_{k = 1}^{q} {\overline{\sigma }_{{\xi_{k} }}^{2} } \Phi_{a}^{\xi k} \otimes \Phi_{a}^{\xi k} + \sum\limits_{i = 1}^{L} {\sigma_{{\zeta_{iz} }}^{2} } \Phi_{a}^{\zeta i} \otimes \Phi_{a}^{\zeta i} + \sum\limits_{i = 1}^{L} {\sigma_{{\zeta_{iz} }}^{2} } \sum\limits_{k = 1}^{q} {\overline{\sigma }_{{\xi_{k} }}^{2} } \Phi_{a}^{ki} \otimes \Phi_{a}^{ki}\), then we have the following convergences.

Proof

If \(\rho (\overline{\Phi }) < 1\), Lemma 3 can be proved similarly to the proofs in [30, 31], utilizing the results in [32, 33]. This completes the proof. □
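The role of the spectral radius condition can be seen in a reduced setting. The sketch below assumes a simplified model \(x(t + 1) = (\Phi + \xi (t)\Phi_{1} )x(t) + w(t)\) with a single multiplicative noise, whose second-order moment obeys \(X(t + 1) = \Phi X(t)\Phi^{\text T} + \sigma^{2} \Phi_{1} X(t)\Phi_{1}^{\text T} + Q\). All matrices are hypothetical and are not the augmented matrices of this paper.

```python
import numpy as np

Phi = np.array([[0.8, 0.1], [0.0, 0.5]])    # hypothetical nominal matrix
Phi1 = np.array([[0.2, 0.0], [0.1, 0.3]])   # hypothetical perturbation direction
sigma2 = 0.03                                # multiplicative-noise variance
Q = 0.3 * np.eye(2)                          # hypothetical additive-noise variance

# Kronecker-form operator whose spectral radius governs convergence.
Phi_bar = np.kron(Phi, Phi) + sigma2 * np.kron(Phi1, Phi1)
rho = max(abs(np.linalg.eigvals(Phi_bar)))

# Iterate the second-moment recursion from a zero initial value.
X = np.zeros((2, 2))
for _ in range(500):
    X = Phi @ X @ Phi.T + sigma2 * Phi1 @ X @ Phi1.T + Q

# Closed-form limit: vec(X) = (I - Phi_bar)^{-1} vec(Q).
X_closed = np.linalg.solve(np.eye(4) - Phi_bar, Q.reshape(-1)).reshape(2, 2)
```

When `rho` is below one the iterated moment and the closed-form limit agree, which is the kind of convergence the lemma asserts for the augmented state.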

Combining (23) and (24), we have that

3.3 Fictitious process and observation noises

A noise \(w_{f} (t)\) is introduced as the fictitious process noise, which compensates for the multiplicative noise terms in (16)

where the wf(t) is white noise with zero mean.
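The idea of a fictitious noise can be sketched on a reduced model. Assume, for illustration only, \(x(t + 1) = (\Phi + \xi (t)\Phi_{1} )x(t) + w(t)\); lumping the random term into \(w_{f} (t) = \xi (t)\Phi_{1} x(t) + w(t)\) gives a constant-parameter model with steady-state variance \(Q_{f} = \sigma^{2} \Phi_{1} X\Phi_{1}^{\text T} + Q\), where X is the steady-state second-order moment of the state. The matrices and function names below are hypothetical.

```python
import numpy as np

Phi = np.array([[0.8, 0.1], [0.0, 0.5]])    # hypothetical nominal matrix
Phi1 = np.array([[0.2, 0.0], [0.1, 0.3]])   # hypothetical perturbation direction

def steady_second_moment(sigma2, Q):
    """Solve X = Phi X Phi^T + sigma2 * Phi1 X Phi1^T + Q by vectorization."""
    Phi_bar = np.kron(Phi, Phi) + sigma2 * np.kron(Phi1, Phi1)
    if max(abs(np.linalg.eigvals(Phi_bar))) >= 1:
        raise ValueError("second moment does not converge")
    return np.linalg.solve(np.eye(4) - Phi_bar, Q.reshape(-1)).reshape(2, 2)

def fictitious_variance(sigma2, Q):
    """Steady-state variance of w_f(t) = xi(t) * Phi1 * x(t) + w(t)."""
    X = steady_second_moment(sigma2, Q)
    return sigma2 * Phi1 @ X @ Phi1.T + Q

# Actual variances are bounded by the conservative ones, as in Hypothesis 4.
Qf_actual = fictitious_variance(0.02, 0.2 * np.eye(2))
Qf_conservative = fictitious_variance(0.03, 0.3 * np.eye(2))
dQ = Qf_conservative - Qf_actual
min_eig = np.linalg.eigvalsh((dQ + dQ.T) / 2).min()
```

In this reduced setting the conservative fictitious variance dominates the actual one, so `min_eig` is non-negative.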

Thus, (16) will be rewritten in the following form:

From (28), the actual and conservative steady-state variances of fictitious process noise wf(t) are, respectively, calculated by

Define \(\Delta Q_{f} = Q_{f} - \overline{Q}_{f} ,\Delta X_{a} = X_{a} - \overline{X}_{a} ,\Delta \sigma_{{\xi_{k} }}^{2} = \sigma_{{\xi_{k} }}^{2} - \overline{\sigma }_{{\xi_{k} }}^{2}\), then subtracting (30) from (31) yields

by analyzing (32) through utilizing (20) and (27), we can get that \({{{\Delta}}}Q_{f} \ge 0\), i.e.,

Similarly, the noise vf(t) is introduced as the fictitious observation noise in (17)

and the vf(t) is white noise with zero mean.

Therefore, (17) will be rewritten in the following form:

The actual and conservative steady-state variances of vf(t) are, respectively,

Define \(\Delta R_{f} = R_{f} - \overline{R}_{f}\), subtracting (36) from (37) yields

utilizing (20) and (27), it is easy to prove that

Next, the correlation matrices of the two fictitious noises introduced above are calculated, and their actual and conservative values are as follows

Define \(\Delta S_{f} = S_{f} - \overline{S}_{f}\), subtracting (40) from (41) yields

Hypothesis 5

Assume that the pair \(\left( {\Phi_{a}^{m} ,H_{a}^{m} } \right)\) is completely detectable, and the pair \((\Phi_{m} ,\Upsilon )\) is completely stabilizable, where \(\Phi_{m} = \Phi_{a}^{m} - S_{m} H_{a}^{m} ,S_{m} = S_{f} R_{f}^{ - 1} ,\Upsilon \Upsilon^{\text T} = Q_{f} - S_{f} R_{f}^{ - 1} S_{f}^{\text T}\).

4 Results

4.1 Robust CF steady-state Kalman predictor

The CF system (29) and (35) with known conservative noise statistics \(Q_{f}\), \(R_{f}\), and \(S_{f}\) is called the worst-case conservative system. Under the conditions of Hypotheses 1–5, applying the standard Kalman filtering algorithm [3] to the worst-case conservative system yields the steady-state one-step Kalman predictor

with the initial value \(\hat{x}_{a} (0| - 1) = \left[ {\begin{array}{*{20}c} {\mu_{0}^{\text T} } & {\left( {(0)_{r \times 1} } \right)^{\text T} } & {\left( {(0)_{m \times 1} } \right)^{\text T} } \\ \end{array} } \right]^{\text T}\), and \(\Psi_{ap}\) is stable.

The conservative steady-state prediction error variance Pa(− 1) satisfies the following steady-state Riccati equation
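As a rough illustration of how such a steady-state solution can be computed, the sketch below iterates the standard Riccati recursion for a one-step predictor with correlated process and observation noises. The system matrices are hypothetical, not the augmented matrices of this paper, and the function name `steady_state_predictor` is an assumption.

```python
import numpy as np

def steady_state_predictor(Phi, H, Q, R, S, iters=2000):
    """Iterate the Riccati recursion with noise cross-covariance S = E[w v^T].

    Returns the prediction error variance P, the predictor gain K,
    and the closed-loop matrix Psi = Phi - K H.
    """
    P = np.eye(Phi.shape[0])
    for _ in range(iters):
        innov = H @ P @ H.T + R                      # innovation variance
        K = (Phi @ P @ H.T + S) @ np.linalg.inv(innov)
        P = Phi @ P @ Phi.T + Q - K @ innov @ K.T
    innov = H @ P @ H.T + R
    K = (Phi @ P @ H.T + S) @ np.linalg.inv(innov)   # gain at the fixed point
    return P, K, Phi - K @ H

Phi = np.array([[0.9, 0.1], [0.0, 0.7]])   # hypothetical state matrix
H = np.array([[1.0, 0.0]])                  # hypothetical observation matrix
Q = 0.3 * np.eye(2)
R = np.array([[0.2]])
S = np.array([[0.05], [0.0]])

P, K, Psi = steady_state_predictor(Phi, H, Q, R, S)
rho_Psi = max(abs(np.linalg.eigvals(Psi)))

# The same P also satisfies a Lyapunov equation driven by the joint noise variance.
Lam = np.block([[Q, S], [S.T, R]])
G = np.hstack([np.eye(2), -K])
residual = P - (Psi @ P @ Psi.T + G @ Lam @ G.T)
```

The Lyapunov form checked by `residual` is the one that makes a comparison between actual and conservative variances possible, since only the noise term changes between the two.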

Remark 1

The local observations \(y_{i} (t)\) produced by the “worst-case” system (1)–(4) are called conservative local observations and are unavailable (unknown). Thus, the conservative CF observations \(y^{(c)} (t)\), composed of the conservative local observations \(y_{i} (t)\), are also unavailable. The observations \(y_{i} (t)\) generated from the actual system (1)–(4) with the actual variances \(\overline{R}_{\eta } ,\overline{R}_{{g_{i} }} ,\overline{\sigma }_{{\xi_{k} }}^{2}\), and \(\overline{P}_{0}\) are called actual observations and are available (known). Furthermore, the actual CF observations \(y^{(c)} (t)\), composed of the actual local observations \(y_{i} (t)\), are also available. Replacing the conservative CF observations \(y^{(c)} (t)\) in (43) by the actual CF observations \(y^{(c)} (t)\) gives the actual CF Kalman predictor.

The steady-state prediction error is \(\tilde{x}_{a} (t + 1|t) = x_{a} (t + 1) - \hat{x}_{a} (t + 1|t)\). Subtracting (43) from (29) yields

where

the actual and conservative steady-state variances of augmented noises \(\lambda_{f} (t)\) are, respectively, calculated by

Furthermore, the actual and conservative CF steady-state prediction error variances satisfy the following Lyapunov equations, respectively,

Lemma 4

[10] If \(\Theta \in R^{m \times m}\) is the positive semi-definite matrix, i.e., \(\Theta \ge 0\), and \(\Theta_{\delta } = \left( {\Theta_{ij} } \right) \in R^{mL \times mL} ,\Theta_{ij} = \Theta ,i,j = 1,2, \ldots ,L\), then \(\Theta_{\delta } \ge 0\).

Lemma 5

Under the conditions of Hypothesis 4, we have

\(\overline{\Lambda }_{f} \le \Lambda_{f} \quad (52)\)

Proof

Define \(\Delta \Lambda_{f} = \Lambda_{f} - \overline{\Lambda }_{f}\), utilizing (32), (38), and (42), we have that

Partition \(\Delta \Lambda_{f}\) into \(\Delta \Lambda_{f} = \Delta \Lambda_{f}^{(1)} + \Delta \Lambda_{f}^{(2)} + \cdots + \Delta \Lambda_{f}^{(7)}\), with the definitions

Noting that \(\Delta \Lambda_{f}^{(1)} ,\Delta \Lambda_{f}^{(2)} ,\Delta \Lambda_{f}^{(3)} ,\Delta \Lambda_{f}^{(4)}\), and \(\Delta \Lambda_{f}^{(5)}\) can equivalently be expressed in the following forms

where

the application of (27) and Lemma 4 yields \(\Delta X_{f} \ge 0\), which yields \(\Delta \Lambda_{f}^{(1)} \ge 0\), \(\Delta \Lambda_{f}^{(3)} \ge 0\), \(\Delta \Lambda_{f}^{(4)} \ge 0\). According to the positive semi-definiteness of variance matrix, and applying Lemma 4 yields \(X_{f} \ge 0\), which yields \(\Delta \Lambda_{f}^{(2)} \ge 0\) and \(\Delta \Lambda_{f}^{(5)} \ge 0\).

Additionally, \(\Delta \Lambda_{f}^{(6)}\) and \(\Delta \Lambda_{f}^{(7)}\) can be expressed as

where

the application of (20) and Lemma 4 yields \(\Delta Q_{g} \ge 0\), which yields \(\Delta \Lambda_{f}^{(6)} \ge 0\) and \(\Delta \Lambda_{f}^{(7)} \ge 0\).

In conclusion, we obtain \(\Delta \Lambda_{f} = \Delta \Lambda_{f}^{(1)} + \Delta \Lambda_{f}^{(2)} + \cdots + \Delta \Lambda_{f}^{(7)} \ge 0\), i.e., (52) holds. This completes the proof. □

Lemma 6

[34] Consider the following Lyapunov equation.

where U, C and V are the n × n matrices, V is a symmetric matrix, C is a stable matrix (i.e., all its eigenvalues are inside the unit circle). If V ≥ 0, then U is symmetric and unique, and U ≥ 0.
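A small numerical check of this lemma is sketched below. It assumes the Lyapunov equation has the standard discrete-time form \(U = CUC^{\text T} + V\) and solves it by vectorization with the Kronecker product; the matrices and the function name `solve_discrete_lyapunov` are hypothetical.

```python
import numpy as np

def solve_discrete_lyapunov(C, V):
    """Solve U = C U C^T + V via vec(U) = (I - C kron C)^{-1} vec(V)."""
    n = C.shape[0]
    if max(abs(np.linalg.eigvals(C))) >= 1:
        raise ValueError("C must have all eigenvalues inside the unit circle")
    vec_u = np.linalg.solve(np.eye(n * n) - np.kron(C, C), V.reshape(-1))
    return vec_u.reshape(n, n)

C = np.array([[0.5, 0.2], [0.0, 0.6]])   # stable: eigenvalues 0.5 and 0.6
V = np.array([[1.0, 0.5], [0.5, 1.0]])   # symmetric, eigenvalues 0.5 and 1.5
U = solve_discrete_lyapunov(C, V)
U_min_eig = np.linalg.eigvalsh((U + U.T) / 2).min()
```

Because \(U = \sum\nolimits_{k \ge 0} {C^{k} V(C^{\text T} )^{k} }\) when C is stable, a positive semi-definite V can only produce a positive semi-definite U, which is what `U_min_eig` confirms here.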

Theorem 1

For the time-invariant augmented CF system (29) and (35), on the basis of Hypotheses 1–5, the actual CF steady-state Kalman predictor given by (43) is robust, i.e., for all admissible uncertainties, we have

\(\overline{P}_{a} ( - 1) \le P_{a} ( - 1), \quad (53)\)

and \(P_{a} ( - 1)\) is the minimal upper bound of \(\overline{P}_{a} ( - 1)\).

Proof

Letting \(\Delta P_{a}^{{}} ( - 1) = P_{a}^{{}} ( - 1) - \overline{P}_{a}^{{}} ( - 1)\), from (50) and (51) one has.

Using (52) yields \(\Delta_{f} \ge 0\). Noting that \(\Psi_{ap}\) is stable, accordingly, using Lemma 6 yields \(\Delta P_{a} ( - 1) \ge 0\), i.e., (53) holds. Taking \(\overline{R}_{\eta } = R_{\eta } ,\overline{R}_{{g_{i} }} = R_{{g_{i} }} ,\overline{\sigma }_{{\xi_{k} }}^{2} = \sigma_{{\xi_{k} }}^{2}\), and \(\overline{P}_{0} = P_{0}\), then the Hypothesis 4 still holds. From \(\overline{R}_{{g_{i} }} = R_{{g_{i} }}\) , one has \(\overline{R}_{g}^{(c)} = R_{g}^{(c)}\), further, we have that \(\overline{Q}_{a} = Q_{a}\). From \(\overline{X}(0) = \overline{P}_{0} + \mu_{0} \mu_{0}^{\text T}\) and \(X(0) = P_{0} + \mu_{0} \mu_{0}^{\text T}\) , we get that \(\overline{X}(0) = X(0)\), furthermore, we have that \(\overline{X}_{a} (0) = X_{a} (0)\). By way of recurrence, it is easy to prove that \(X_{a} (t) = \overline{X}_{a} (t)\). From Lemma 3, we have that \(X_{a} = \overline{X}_{a}\). Comparing (30) and (31) yields \(Q_{f} = \overline{Q}_{f}\), comparing (36) and (37) yields \(R_{f} = \overline{R}_{f}\), comparing (40) and (41) yields \(S_{f} = \overline{S}_{f}\). Accordingly, from (49) we obtain that \(\Lambda_{f} = \overline{\Lambda }_{f}\), further, we have that \(\Delta_{f} = 0\). Applying Lemma 6 yields \(\Delta P_{a} ( - 1) = 0\), that is \(\overline{P}_{a}^{{}} ( - 1) = P_{a}^{{}} ( - 1)\). If \(P_{a}^{*}\) is an arbitrary other upper bound of \(\overline{P}_{a}^{{}} ( - 1)\), then \(P_{a}^{{}} ( - 1) = \overline{P}_{a}^{{}} ( - 1) \le P_{a}^{*}\), this means that Pa(− 1) is the minimal upper bound of \(\overline{P}_{a}^{{}} ( - 1)\). The proof is completed. □

The actual CF steady-state Kalman predictor given by (43) is called robust CF steady-state Kalman predictor. The relation given by (53) is called its robustness.
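The robustness property can be seen in a scalar toy problem. The sketch below assumes \(x(t + 1) = ax(t) + w(t)\), \(y(t) = x(t) + v(t)\) with uncorrelated noises; the gain is designed with the conservative variances, and the actual error variance then follows from a scalar Lyapunov equation with the actual variances. The value a = 0.9 and the function names are hypothetical; the variance pairs (0.2, 0.3) and (0.16, 0.2) are taken from the simulation section.

```python
def design_predictor(a, Q, R, iters=2000):
    """Scalar steady-state Riccati iteration; returns (P, K, psi)."""
    P = 1.0
    for _ in range(iters):
        K = a * P / (P + R)
        P = a * a * P + Q - K * K * (P + R)
    K = a * P / (P + R)
    return P, K, a - K

def error_variance(psi, K, Q, R):
    """Solve the scalar Lyapunov equation P = psi^2 * P + Q + K^2 * R."""
    return (Q + K * K * R) / (1.0 - psi * psi)

a = 0.9
Q_cons, R_cons = 0.3, 0.2      # conservative upper bounds
Q_act, R_act = 0.2, 0.16       # actual variances, below the bounds

P_cons, K, psi = design_predictor(a, Q_cons, R_cons)
P_act = error_variance(psi, K, Q_act, R_act)      # actual error variance
P_worst = error_variance(psi, K, Q_cons, R_cons)  # actual = conservative case
```

The bound is attained when the actual variances equal the conservative ones, which is why no smaller upper bound exists.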

4.2 Robust CF steady-state Kalman filter and smoother

For the worst-case time-invariant CF system (29) and (35) with conservative noise statistics Qf, Rf, and Sf, based on the actual CF steady-state Kalman one-step predictor \(\hat{x}_{a}^{{}} (t|t - 1)\), the actual CF steady-state Kalman filter (N = 0) and smoother (N > 0) \(\hat{x}_{a}^{{}} (t|t + N)\) are given as [35]

Similar to the derivation in [35], the steady-state filtering and smoothing errors \(\tilde{x}_{a}^{{}} (t|t + N) = x_{a} (t) - \hat{x}_{a}^{{}} (t|t + N)\) are given as

where \(\lambda_{f} \left( {t + p} \right)\) is defined by (48), and

Utilizing (56) yields that the actual and conservative steady-state estimation error variances are, respectively, computed by

Theorem 2

Under the conditions of Hypotheses 1–5, the actual CF steady-state Kalman filter and smoother given by (54) are robust, i.e.,

\(\overline{P}_{a} (N) \le P_{a} (N),\quad N \ge 0, \quad (59)\)

and \(P_{a} (N)\) is the minimal upper bound of \(\overline{P}_{a} (N)\).

Proof

Letting \(\Delta P_{a}^{{}} (N) = P_{a}^{{}} (N) - \overline{P}_{a}^{{}} (N)\), from (57) and (58) one has.

utilizing (52) and (53) yields \(\Delta P_{a}^{{}} (N) \ge 0\), i.e., (59) holds. In a similar way to the proof of Theorem 1, we can prove that Pa(N) is the minimal upper bound of \(\overline{P}_{a}^{{}} (N)\), the details are omitted. The proof is completed. □

Corollary 1

From the definition \(x_{a} (t) = \left[ {\begin{array}{*{20}c} {x^{\text T} (t)} & {w^{\text T} (t)} & {\delta^{(c)\text T} (t - 1)} \\ \end{array} } \right]^{\text T}\), the robust CF steady-state Kalman estimator of the original system (1)–(4) can be obtained as \(\hat{x}_{c} (t|t + N) = \left[ {\begin{array}{*{20}c} {I_{n} } & {(0)_{n \times r} } & {(0)_{n \times m} } \\ \end{array} } \right]\hat{x}_{a}^{{}} (t|t + N),N = - 1,N \ge 0\), and their actual and conservative CF steady-state estimation error variances are, respectively, given as.

The robust CF steady-state Kalman estimators \(\hat{x}_{c} (t|t + N)\) are robust, i.e.,

\(\overline{P}_{c} (N) \le P_{c} (N),\quad N = - 1,N \ge 0, \quad (62)\)

and \(P_{c} (N)\) is the minimal upper bound of \(\overline{P}_{c} (N)\).

Corollary 2

By a derivation completely similar to (7)–(62), we easily obtain the robust local steady-state Kalman estimators \(\hat{x}_{i} (t|t + N),N = - 1,N \ge 0,i = 1, \ldots ,L\), of the original system (1)–(4), and their actual estimation error variances \(\overline{P}_{i} (N)\) have the corresponding minimal upper bounds \(P_{i} (N)\), i.e.,

\(\overline{P}_{i} (N) \le P_{i} (N),\quad N = - 1,N \ge 0,\quad i = 1, \ldots ,L \quad (63)\)

Remark 2

Applying the projection theory, it can be proved that

Taking the trace of (62)–(65) yields the accuracy relations in the form of matrix trace inequalities:

Remark 3

In Remark 2, \({\text{tr}}\overline{P}_{c} (N)\) and \({\text{tr}}\overline{P}_{i} (N)\) are defined as the actual accuracies of the corresponding robust Kalman estimators, while trPc(N) and trPi(N) are defined as their robust accuracies (or global accuracies). The smaller trace means the higher accuracy. The robust accuracy of CF is higher than that of each local estimator.
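The accuracy relation between CF and local estimators can be illustrated with a generic two-sensor example. The sketch below compares steady-state prediction error variances obtained from each sensor alone and from the stacked (centralized) observation, assuming uncorrelated white noises; all matrices are hypothetical and `riccati_limit` is an illustrative name.

```python
import numpy as np

def riccati_limit(Phi, H, Q, R, iters=2000):
    """Steady-state one-step prediction error variance (uncorrelated noises)."""
    P = np.eye(Phi.shape[0])
    for _ in range(iters):
        innov = H @ P @ H.T + R
        K = Phi @ P @ H.T @ np.linalg.inv(innov)
        P = Phi @ P @ Phi.T + Q - K @ innov @ K.T
    return P

Phi = np.array([[0.9, 0.1], [0.0, 0.7]])   # hypothetical state matrix
Q = 0.3 * np.eye(2)
H1, R1 = np.array([[1.0, 0.0]]), np.array([[0.2]])    # hypothetical sensor 1
H2, R2 = np.array([[0.0, 1.0]]), np.array([[0.25]])   # hypothetical sensor 2

P1 = riccati_limit(Phi, H1, Q, R1)         # local estimator, sensor 1
P2 = riccati_limit(Phi, H2, Q, R2)         # local estimator, sensor 2

# Centralized fusion stacks the observations of both sensors.
Hc = np.vstack([H1, H2])
Rc = np.diag([0.2, 0.25])
Pc = riccati_limit(Phi, Hc, Q, Rc)

traces = {"sensor 1": np.trace(P1), "sensor 2": np.trace(P2), "CF": np.trace(Pc)}
```

A smaller trace means a higher accuracy, so the CF entry of `traces` should not exceed either local entry.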

5 Discussion

This section verifies the effectiveness of the estimators derived in Sect. 4. The simulation is carried out in MATLAB R2018a on the uninterruptible power system (UPS) of [30]. For a 1 kVA UPS with a sample time of 10 ms at the half-load operating point, the system with colored noises and multiple uncertainties is given as follows:

In the simulation experiment, we take \(\overline{R}_{\eta } = 0.2,R_{\eta } = 0.3,\overline{R}_{{g_{1} }} = 0.16, R_{{g_{1} }} = 0.2,\overline{R}_{{g_{2} }} = 0.13,R_{{g_{2} }} = 0.25,\overline{R}_{{g_{3} }} = 0.5,R_{{g_{3} }} = 0.8,\overline{\sigma}_{\xi_1}^{2}=0.02,\sigma_{\xi_1}^{2}=0.03,\overline{\sigma}_{\xi_2}^{2}=0.03,\sigma_{\xi_2}^{2}=0.04\), \(\varsigma_{1} = 0.9,\varsigma_{2} = 0.7,\varsigma_{3} = 0.8\), and D = 0.405. The main simulation results follow.

Table 1 presents the comparison of the actual and robust accuracies of the local and CF robust steady-state estimators. Comparing the values in the table confirms the accuracy relations given by (66) and (67), as expected.

The tracking results of \(\hat{x}_{i}^{(3)} (t|t + 2),i = 1,2,3\) and \(\hat{x}_{c}^{(3)} (t|t + 2)\) are, respectively, shown in Fig. 1. In order to show the best results, we select the third component of state x(t). Apparently, compared with the local smoothers, the CF smoother has better tracking performance.

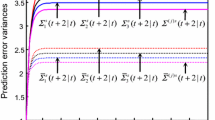

To illustrate the robustness of the CF steady-state one-step smoother \(\hat{x}_{c} (t|t + 1)\), we take any three groups of different actual noise variances, indexed by h = 1, 2, 3, satisfying (6), such that

We easily get the corresponding three robust CF smoothers \(\hat{x}_{c}^{(h)} (t|t + 1),h = 1,2,3\), as well as the steady-state one-step smoothing error variances \(\overline{P}_{c}^{(h)} (1)\) and Pc(1). The corresponding three actual smoothing error curves of the third component of \(\hat{x}_{c}^{(h)} (t|t + 1),h = 1,2,3\) and their 3-standard deviation bounds are shown in Fig. 2, where the actual standard deviation \(\overline{\sigma }_{c}^{(h)(3)} (1)\) are computed via the actual CF smoothing error variances \(\overline{P}_{c}^{(h)} (1)\) given by (60), whose (3, 3) diagonal element is \(\left( {\overline{\sigma }_{c}^{(h)(3)} (1)} \right)^{2}\), and the robust standard deviation \(\sigma_{c}^{(3)} (1)\) is computed via conservative CF smoothing error variances Pc(1) given by (61), whose (3, 3) diagonal element is \(\left( {\sigma_{c}^{(3)} (1)} \right)^{2}\). We see from Fig. 2 that for each error curve, over 99 percent of CF smoothing error values lie between \(- 3\overline{\sigma }_{c}^{(h)(3)} (1)\) and \(+ 3\overline{\sigma }_{c}^{(h)(3)} (1)\), and also lie between \(- 3\sigma_{c}^{(3)} (1)\) and \(+ 3\sigma_{c}^{(3)} (1)\), this verifies the robustness of the third component of \(\hat{x}_{c} (t|t + 1)\), and the correctness of the actual standard deviations \(\overline{\sigma }_{c}^{(h)(3)} (1)\).

Figure 3 shows the influence of the multiplicative noises \(\xi_k(t),k=1,2\) on the robust accuracy of \(\hat{x}_{c} (t|t)\), i.e., how \(\mathrm{tr}P_{c} (0)\) changes with respect to \(\sigma_{\xi_1}^{2}\) and \(\sigma_{\xi_2}^{2}\). As the variances \(\sigma_{\xi_1}^{2}\) and \(\sigma_{\xi_2}^{2}\) increase, the value of \(\mathrm{tr}P_{c} (0)\) increases, i.e., the robust accuracy of \(\hat{x}_{c} (t|t)\) decreases.
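The trend in Fig. 3 can be reproduced qualitatively with a simplified sketch of the fictitious noise idea. It assumes a hypothetical scalar model \(x(t+1) = (\varphi + \xi_1(t)\varphi_1 + \xi_2(t)\varphi_2)x(t) + w(t)\), \(y(t) = x(t) + v(t)\), in which the two multiplicative noises are mutually uncorrelated, zero-mean, white, and enter the state equation only. All numerical values are made up for illustration. The multiplicative terms are absorbed into a fictitious white process noise whose variance depends on the steady-state second moment of the state, and a standard Riccati iteration then gives the filtering error variance.

```python
def robust_filter_variance(s1, s2, phi=0.8, phi1=0.5, phi2=0.4,
                           q=1.0, r=1.0, iters=500):
    """Steady-state filtering error variance for a scalar toy model.

    Assumed model: x(t+1) = (phi + xi1*phi1 + xi2*phi2)*x(t) + w(t),
                   y(t)   = x(t) + v(t),
    where xi1, xi2 are zero-mean white noises with variances s1, s2,
    uncorrelated with each other and with w, v. Here q and r play the
    role of conservative noise variance bounds.
    """
    spread = s1 * phi1 ** 2 + s2 * phi2 ** 2
    if phi ** 2 + spread >= 1.0:
        raise ValueError("state second moment does not converge")
    # Steady-state second moment X of x(t): X = (phi^2 + spread)*X + q.
    x_moment = q / (1.0 - phi ** 2 - spread)
    # Fictitious white noise w_a = (xi1*phi1 + xi2*phi2)*x + w.
    q_a = spread * x_moment + q
    p = q_a  # positive starting value for the Riccati iteration
    for _ in range(iters):
        sigma = phi ** 2 * p + q_a         # one-step prediction variance
        p = 1.0 / (1.0 / sigma + 1.0 / r)  # measurement update
    return p


grid = [0.0, 0.1, 0.2, 0.3]  # candidate multiplicative noise variances
table = [[robust_filter_variance(s1, s2) for s2 in grid] for s1 in grid]
for s1, row in zip(grid, table):
    print(s1, [round(p, 4) for p in row])
```

Each row and each column of the printed grid is increasing, so in this toy model a larger multiplicative noise variance also means a lower robust accuracy.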

6 Conclusions

This study developed robust CF steady-state Kalman estimators (predictor, filter, and smoother) for multisensor networked systems with colored noises and multiple uncertainties. The OSRD and PDs are described by a Bernoulli distributed random variable with known probability, and the random parameter uncertainties are described by multiplicative noises. The original system model has been converted into a CF system with only uncertain noise variances by using the augmentation approach, the de-randomization approach, and the fictitious noise technique. The process and observation noises in the CF system are the same, which avoids solving for the correlation matrix between them. Based on the minimax robust estimation principle, the robust estimators have been proposed. Their robustness has been proved by using the non-negative definite matrix decomposition approach and the Lyapunov equation approach. The results indicate that the robust accuracy of the CF estimator is higher than that of each local estimator. Finally, a simulation example applied to a UPS with mixed uncertainties has been presented, which demonstrates the applicability and correctness of the proposed estimators.

Availability of data and materials

Data sharing does not apply to this article because no data set was generated or analyzed during the current research period.

Abbreviations

- CF: Centralized fusion
- OSRD: One-step random delay
- PDs: Packet dropouts
- UPS: Uninterruptible power system

Acknowledgements

This work is supported by the Heilongjiang Provincial Natural Science Foundation of China under Grant No. LH2019F035, by the National Natural Science Foundation of China under Grant NSFC-61803148, by the Scientific Research Fund of Zhejiang Provincial Education Department under Grant Y202147323, by the Zhejiang Gongshang University General Project of Graduate Research and Innovation Fund in 2021, and by Zhejiang Gongshang University, Zhejiang Provincial Key Laboratory of New Network Standards and Technologies (No. 2013E10012).

Author information

Contributions

SL contributed to editing, experiments, and data analysis; WL contributed to the theoretical model and part of the theoretical derivation; GT contributed to part of the theoretical derivation and the proofs. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This article is ethical, and this research has been agreed.

Consent for publication

The picture materials quoted in this article have no copyright requirements, and the source has been indicated.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, S., Liu, W. & Tao, G. Centralized fusion robust filtering for networked uncertain systems with colored noises, one-step random delay, and packet dropouts. EURASIP J. Adv. Signal Process. 2022, 24 (2022). https://doi.org/10.1186/s13634-022-00857-4
