Abstract

An adaptive combination constrained proportionate normalized maximum correntropy criterion (ACC-PNMCC) algorithm is proposed for sparse multi-path channel estimation in mixed Gaussian noise environments. The ACC-PNMCC algorithm is obtained by incorporating an adaptive combination function into the cost function of the proportionate normalized maximum correntropy criterion (PNMCC) algorithm. This creates a new penalty on the filter coefficients that is selected according to a devised threshold and draws on proportionate-type adaptive filtering techniques and the compressive sensing (CS) concept. The derivation of the proposed ACC-PNMCC algorithm is presented mathematically, and various simulation experiments are carried out to investigate its performance. The experimental results show that the ACC-PNMCC algorithm outperforms the PNMCC and sparse PNMCC algorithms for sparse multi-path channel estimation applications.

1 Introduction

In recent years, wireless communication has been the fastest growing and most widely used technology in the field of information communication. In wireless channels, radio wave propagation produces a direct wave and a ground-reflected wave, which are the most common components in the propagation process. In addition, scattered waves are caused by various obstacles in the propagation paths [1, 2]. These phenomena result in the multi-path effect [2–5], which occurs frequently and can be severe. This effect may cause signal delays and reduce communication quality. Furthermore, measurements show that broadband channels can be described as sparse multi-path channels, which are very common in wireless communication [6, 7]. The general characteristic of these sparse multi-path channels is that most of the channel impulse response (CIR) coefficients are equal to zero. The very few nonzero coefficients are termed active coefficients [2, 7, 8]. Adaptive filters (AF) have been widely applied to the estimation of sparse channels to improve the quality of wireless communications [9–12]. The most traditional AF algorithm is the least mean square (LMS) algorithm, which was derived to minimize the mean square estimation error [9, 13]. It is widely employed in real-world applications due to its simplicity. However, LMS performance degrades in low signal-to-noise ratio (SNR) situations and in sparse channel estimation. Motivated by compressive sensing principles [14], a series of sparse LMS algorithms have recently been proposed by incorporating l0-norm, l1-norm, or lp-norm penalties into the LMS cost function [14–21]. These algorithms have been shown to outperform LMS in both steady-state error (SSE) and convergence speed for sparse multi-path channel estimation. Nevertheless, the performance of such algorithms tends to degrade in non-Gaussian white noise environments (NGWNE), which are frequently encountered in real wireless communication systems [22–24].

Some recent efforts have also been directed at improving the performance of adaptive filters in the presence of non-Gaussian and impulsive disturbances. One such effort was to replace the mean square error (MSE) with information-theoretic, entropy-related cost functions [25–28]. At present, most entropy-based AF algorithms have been derived using the maximum correntropy criterion (MCC) and the minimum error entropy (MEE) criterion [25–27, 29–31]. Though MEE-based algorithms may lead to good estimation performance, they require a prohibitive computational effort for real-time applications. MCC-based algorithms are less complex and have been successfully applied to channel estimation [26]. The MCC algorithm employs the correntropy as a local similarity measure, which tends to increase the robustness of the algorithm to outliers. It has been applied to channel estimation under NGWNE [26, 32, 33]. More recently, a generalized correntropy measure has been proposed which replaces the Gaussian kernel of the MCC with a generalized Gaussian kernel. The generalized MCC (GMCC) algorithm has been shown to outperform MCC in certain situations at the cost of an increase in computational complexity [34]. A normalized version of the MCC algorithm (NMCC) has also been proposed [35, 36] to make the convergence control less dependent on the input power.

To address the issue of sparse channel estimation in non-Gaussian noise, sparse MCC algorithms have been proposed by incorporating different norm penalties into the MCC cost function. Similar to the zero attracting LMS (ZA-LMS) algorithm [16], in [33], an l1-norm penalty was introduced into the MCC cost function as a zero attractor. The resulting algorithm was called zero-attracting MCC (ZA-MCC) algorithm. Then, a reweighting controlling factor ξ was incorporated into the ZA-MCC algorithm, yielding the reweighting ZA-MCC (RZA-MCC) algorithm [33]. The ZA-MCC and RZA-MCC were shown to improve the steady-state and convergence performances of MCC in estimating multi-path channels. Different zero attractors were added to MCC in [33, 37, 38] using an l0-norm and a correntropy-induced metric (CIM).

One drawback of the ZA-MCC algorithms is that they uniformly attract all the channel coefficients to zero, which may result in a rather large estimation error for less sparse channels. Also, the selection of a good learning rate is not simple for these algorithms. Zero-attracting NMCC (ZA-NMCC) and reweighting ZA-NMCC (RZA-NMCC) algorithms have also been developed in [39]. Moreover, a soft parameter function has been introduced into the NMCC algorithm to attenuate the uniform attraction of coefficients to zero. The resulting algorithm is called SPF-NMCC [40]. The SPF-NMCC algorithm improved the steady-state performance of NMCC, but tends to converge slowly due to the large discrepancy between coefficient values in sparse channels. To remedy this drawback, proportionate-type [41, 42] NMCC algorithms were proposed. The proportionate NMCC (PNMCC) algorithm was developed in [43, 44] and led to faster convergence than the MCC and NMCC algorithms, since the filter coefficients are updated in proportion to the magnitude of the estimates of the channel coefficients. Unfortunately, the convergence of the PNMCC algorithm tends to slow down after an initial convergence period [43, 44], a known effect of the proportionate type of adaptation.

In this paper, we propose a new adaptive algorithm that combines the properties of the l0-norm, the l1-norm, and the proportionate adaptation. This is done by integrating an adaptive combination function (ACF) into the PNMCC cost function to create a new adaptive zero attractor. The resulting algorithm is called the adaptive combination constrained proportionate normalized maximum correntropy criterion (ACC-PNMCC) algorithm. The performance of the proposed ACC-PNMCC algorithm is investigated through several different simulation experiments, and it is compared to the MCC, NMCC, PNMCC, and sparse PNMCC algorithms in terms of the SSE and convergence speed. The simulation results illustrate the potential of the ACC-PNMCC algorithm to provide superior performance for sparse multi-path channel estimation under non-Gaussian and impulsive noise environments.

The paper is organized as follows. The NMCC and PNMCC algorithms are briefly reviewed in Section 2.1. In Section 2.2, we derive the proposed ACC-PNMCC algorithm in the context of sparse multi-path channel estimation. Simulation experiments are presented in Section 3 to illustrate the algorithm performance, and we finally summarize our work in Section 4.

2 Channel estimation algorithms

2.1 The NMCC and PNMCC algorithms

Consider the estimation of an unknown channel with impulse response given by \(\textbf{g}_{0} = {\left[g_{1}, g_{2}, g_{3}, \cdots, g_{M}\right]^{T}}\). The M-dimensional input vector is \(\textbf{x}(n) = {\left[x(n), x(n-1), x(n-2), \cdots, x(n-M+1)\right]^{T}}\), and the observed channel output is \(d(n) = \textbf{x}^{T}(n)\textbf{g}_{0} + r(n)\), where r(n) is the additive noise. Denoting by \(\hat{\mathbf{g}}(n)\) the channel response estimate, the estimation error is given by \(e(n) = d(n) - \textbf{x}^{T}(n)\hat{\mathbf{g}}(n)\).

It has been shown in [36] that the NMCC weight update equation is the solution of the following problem:

where ∥·∥2 represents the Euclidean norm of a vector, \(\hat e\left (n \right) = d\left (n \right) - {\textbf {x}^{{T}}}\left (n \right)\hat {\mathbf {g}}\left ({n + 1} \right)\), the parameter σ>0 is the bandwidth of the Gaussian kernel used to evaluate the instantaneous correntropy between the observed signal and the estimator output [26], and αNMCC>0 is a design parameter that affects the quality of estimation.

Solving (1) using the Lagrange multiplier technique [36] leads to the NMCC weight update equation

where αNMCC is the NMCC step size. If \(\alpha_{\textrm{NMCC}} = \beta\left\|\textbf{x}(n)\right\|^{2}\), the update (2) becomes the MCC algorithm update [26].
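As a rough illustration of the NMCC recursion discussed above, the following sketch assumes the usual normalized form, in which the a priori error is weighted by the Gaussian kernel and the correction is divided by the input power. The function name `nmcc_update`, the default values of `alpha` and `sigma`, and the small constant `eps` in the denominator are assumptions made for this example and are not taken from [36].

```python
import numpy as np

def nmcc_update(g_hat, x, d, alpha=0.4, sigma=1.0, eps=1e-8):
    """One NMCC-style iteration (illustrative sketch, assumed form)."""
    e = d - x @ g_hat                             # a priori error e(n)
    kernel = np.exp(-e**2 / (2.0 * sigma**2))     # Gaussian kernel weight
    # Normalized correction; eps is an assumed guard against a zero input vector.
    g_new = g_hat + alpha * kernel * e * x / (x @ x + eps)
    return g_new, e

# Single step from a zero initial estimate.
g1, e1 = nmcc_update(np.zeros(2), np.array([1.0, 0.0]), 1.0, eps=0.0)
```

A large error makes the kernel weight small, so an impulsive noise sample changes the estimate very little. Choosing `alpha` proportional to `x @ x` cancels the normalization, which is the relationship to the MCC update noted above.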

One way to modify the NMCC algorithm to improve adaptation performance for sparse channels is to adjust each adaptive weight using a different step size, which can be done through a diagonal step size matrix

The proportionate NMCC (PNMCC) algorithm assigns the step sizes mi such that

where γi(n), i=1,…,M are given by

with κ and η positive constants with typical values of κ=5/M and η=0.01 [42–45]. Parameter κ is used to prevent the coefficients from stalling when they are much smaller than the largest one, while η avoids the stalling of all coefficients when \(\hat {\mathbf {g}}\left (n \right) = {\textbf {0}_{M \times 1}}\) at initialization. The weight update equation for the PNMCC algorithm is

where θ is a regularization parameter, and αPNMCC is the PNMCC step size. Although the PNMCC algorithm improves the performance of the NMCC, its convergence rate tends to slow down after an initial period of fast convergence, a well-known issue affecting the conventional (MSE-based) PNLMS algorithm [21]. This happens because of the dominant effect of the inactive taps, whose step sizes tend to be excessively reduced as their weight estimates get closer to zero, which reduces the convergence speed.
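The proportionate step size assignment and the PNMCC recursion described above can be sketched as follows. This is a minimal example under stated assumptions: the gains follow the PNLMS-style rule with κ and η, they are normalized by their sum, and the update uses the diagonal gain matrix G(n) in both the numerator and the denominator. The function names and the default values of `sigma` and `theta` are hypothetical.

```python
import numpy as np

def proportionate_gains(g_hat, kappa, eta=0.01):
    """Diagonal of G(n): PNLMS-style gains (assumed sum normalization)."""
    abs_g = np.abs(g_hat)
    # kappa keeps small taps from stalling; eta handles the all-zero start.
    floor = kappa * max(eta, abs_g.max())
    gamma = np.maximum(floor, abs_g)
    return gamma / gamma.sum()

def pnmcc_update(g_hat, x, d, alpha=0.3, sigma=1.0, theta=1e-3, kappa=None):
    """One PNMCC-style iteration (illustrative sketch)."""
    kappa = 5.0 / g_hat.size if kappa is None else kappa
    m = proportionate_gains(g_hat, kappa)
    e = d - x @ g_hat                          # a priori error
    gx = m * x                                 # G(n) x(n) for diagonal G(n)
    kernel = np.exp(-e**2 / (2.0 * sigma**2))  # correntropy weight
    return g_hat + alpha * kernel * e * gx / (x @ gx + theta), e

m0 = proportionate_gains(np.zeros(16), kappa=5 / 16)              # uniform at start
m1 = proportionate_gains(np.r_[1.0, np.zeros(15)], kappa=5 / 16)  # favors tap 0
g_new, e = pnmcc_update(np.zeros(16), np.r_[1.0, np.zeros(15)], 1.0)
```

In `m1`, the inactive taps receive the floor value κ times the largest magnitude, so they adapt more slowly than the active tap, which is the behavior behind the late-stage slowdown described above.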

Among the several different strategies proposed to increase the PNLMS convergence rate after the initial convergence period, the l1 norm penalty recently proposed in [21] has led to very interesting results. In [21], the l1 norm of the weight vector pre-multiplied by the inverse of the step size matrix has been used. As a result, the l1 penalty is governed primarily by the inactive taps. The constrained optimization problem solved in [21] to yield the ZA-PNLMS algorithm is

One problem of enforcing sparsity through an l1 norm constraint is that the resulting algorithm may lose performance when identifying responses with different levels of sparsity. One approach recently proposed in [46] to mitigate this problem for the LMS algorithm is to employ a so-called non-uniform norm constraint, inspired by the p-norm frequently used in compressive sensing. The sparsity of the weight vector is enforced through an adaptive combination function (ACF) that is a mixture of the l0-norm and the l1-norm and whose general form is

where 0≤q≤1. We can see that the definition of the ACF is different from the classic Euclidean norm [47]. When q→0, it becomes

which approximates the l0-norm, counting the number of non-zero coefficients in the sparse multi-path channel. Besides, when q=1, the ACF reduces to the l1-norm, which is described as \(\left\|\hat{\mathbf{g}}(n)\right\|_{1} = \sum_{i=1}^{M}\left|\hat{g}_{i}(n)\right|\).
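The two limiting cases of the ACF can be checked numerically. The sketch below assumes that the ACF has the form of a sum of the q-th powers of the coefficient magnitudes, which is consistent with the limits described above but is not stated explicitly here. The function name `acf` and the example vector are hypothetical.

```python
import numpy as np

def acf(g, q):
    """Sum of |g_i|**q for 0 < q <= 1 (assumed ACF form)."""
    return float(np.sum(np.abs(g) ** q))

g = np.array([0.0, 0.8, 0.0, -0.05, 0.0])   # two non-zero taps
near_l0 = acf(g, 1e-6)   # approaches the number of non-zero taps as q -> 0
l1 = acf(g, 1.0)         # equals the l1-norm of g
```

For a very small q, every non-zero coefficient contributes almost exactly one regardless of its size, while zero coefficients contribute nothing. This is why the penalty behaves like a counting measure in that limit.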

2.2 The proposed ACC-PNMCC algorithm

To address the problems of estimating a sparse response with possibly different levels of sparsity, obtaining a good convergence rate from initialization to steady-state, and providing robustness to impulsive noise, we propose to integrate an adaptive combination function (ACF) into the PNMCC’s cost function. This approach leads to the design of a new correntropy-based zero attractor algorithm that combines the techniques of [21, 46–50]. The proposed ACC-PNMCC algorithm is derived in the following.

Integrating the ACF into the cost function of the PNMCC algorithm with the weight scaling as in [21], we propose the following optimization problem:

where γACC is a trade-off parameter between the steady-state performance and the ACF penalty. Using Lagrange multipliers, the cost function associated with this optimization problem is

where λACC is the Lagrange multiplier.

As a first step to minimize (11) with respect to \({\hat {\mathbf {g}}\left ({n + 1} \right)}\), we impose

which yields the recursion

Now, imposing the equality constraint

and solving for λACC yields

Substituting (15) in (13) and rearranging the terms yields

In (16), we observe that the elements of the matrix \({ - \frac {{\textbf {x}(n){\textbf {x}^{T}}(n)\textbf {G}(n)}}{{{\textbf {x}^{T}}(n)\textbf {G}(n)\textbf {x}(n)}}}\) tend to be very small for reasonably large values of M. Hence, we simplify the algorithm recursion using the approximation \(\textbf {I}{ - \frac {{\textbf {x}(n){\textbf {x}^{T}}(n)\textbf {G}(n)}}{{{\textbf {x}^{T}}(n)\textbf {G}(n)\textbf {x}(n)}}}\approx \textbf {I}\). The same approximation has been successfully used in [21]. Then, (16) becomes

Evaluation of the gradient vector in (17) yields

where ⊙ denotes the Hadamard product, and

As (18) is a function of \(\hat {\mathbf {g}}(n+1)\), its substitution in (17) would not yield a recursive update for \(\hat {\mathbf {g}}(n)\). To obtain an implementable recursive update, we replace \(\hat {\mathbf {g}}(n+1)\) in (18) with \(\hat {\mathbf {g}}(n)\). This approximation tends to be reasonable for practical step sizes. The resulting weight update equation is then

Though recursion (19) should fulfill the objective of minimizing (11) at convergence, it is still too complex for real-time implementations due to the last term on the r.h.s., which is responsible for the zero-attracting property of the algorithm. Since we propose to use either an l0-norm or an l1-norm, q must assume a value equal to zero or one, respectively. For q=0, the last term in (19) is equal to zero. For q=1, it is equal to \(-\gamma _{\textrm {ACC}}\, {\boldsymbol {\psi }}(n) \odot \text {sgn}\left (\hat {\mathbf {g}}(n)\right)\), which is a vector whose ith element is given by \(-\gamma _{\textrm {ACC}}\,\text {sgn}\left (\hat {g}_{i}(n)\right)\).

A final decision to be made is how to choose the value of q. According to the definitions (8) and (9), it should affect the norm of the whole vector \(\hat {\mathbf {g}}(n)\). However, there is no clear measure to set the value of q to obtain good performance in practical applications. We propose instead to determine a different value of q for each element of \(\hat {\mathbf {g}}(n)\), based on a threshold that depends on the recent behavior of each weight estimate:

Similar solutions have been employed in [47, 49, 50] to regularize different model misfit cost functions.

Given (20), and denoting by qi the value of q applied to \({{\hat {g}}_{i}}(n)\), we set qi=0 if \({{\hat {g}}_{i}}(n) > {h_{i}}(n)\), and qi=1 if \({{\hat {g}}_{i}}(n) < {h_{i}}(n)\). Hence, those coefficients for which \({{\hat {g}}_{i}}(n) > {h_{i}}(n)\) will contribute to this term as if the l0-norm had been used. Conversely, those coefficients for which \({{\hat {g}}_{i}}(n) < {h_{i}}(n)\) will contribute to this term as if the l1-norm had been used.

We implement this strategy by defining a diagonal matrix

with elements

so that the last r.h.s. term of (19) becomes \( - {\gamma _{{\textrm {ACC}}}}\,\textbf {F}\,{\text {sgn}} \left ({\hat {\mathbf {g}}(n)} \right)\).
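The per-coefficient selection can be sketched as a 0/1 mask that plays the role of the diagonal of F. In this example the threshold vector `h` is supplied by the caller, because the threshold rule itself is not reproduced here. The mean absolute coefficient used below is only a hypothetical stand-in, and comparing magnitudes rather than signed values is likewise an assumption.

```python
import numpy as np

def selector_diagonal(g_hat, h):
    """Diagonal of F: 1 where |g_i| < h_i (l1-type attraction), else 0.

    Coefficients exactly at the threshold are treated as large (assumption).
    """
    return (np.abs(g_hat) < h).astype(float)

g_hat = np.array([0.9, 0.01, -0.02, 0.0])
h = np.full(g_hat.size, np.mean(np.abs(g_hat)))   # hypothetical threshold
f = selector_diagonal(g_hat, h)                   # [0, 1, 1, 1]

gamma_acc = 5e-4
attractor = -gamma_acc * f * np.sign(g_hat)       # zero-attracting term
```

Only the small taps are pushed toward zero. The large tap, which is handled as in the q=0 case, receives no attraction, and a tap that is already exactly zero is left unchanged because its sign is zero.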

Finally, it has been verified [50] that the zero-attracting ability of \( - {\gamma _{\textrm {ACC}}}\textbf {F}{\text {sgn}} \left ({\hat {\mathbf {g}}(n)} \right)\) can be improved by a reweighting factor used in the RZA-MCC algorithm. By doing that, the updating equation of the proposed ACC-PNMCC algorithm becomes

where θ>0 is a very small positive constant, ξ is the reweighting factor, and αACC denotes the step size.

The last r.h.s. term in (23) is the newly designed zero attractor. Each coefficient is treated as if a different norm penalty had been applied to the cost function. This is done by comparing each channel coefficient estimate to the designed threshold. In the following, we will show that the proposed zero attractor speeds up the convergence of the small coefficients after the initial convergence, so that the proposed ACC-PNMCC algorithm outperforms the PNMCC algorithm. In addition, a better sparse multi-path channel estimation performance can be obtained by properly selecting the value of the reweighting factor ξ.
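Putting the pieces together, one ACC-PNMCC iteration might look like the sketch below. It assumes that the data term is the PNMCC correction with regularization θ and that the reweighting follows the RZA form, in which each sign term is divided by one plus ξ times the coefficient magnitude. The proportionate gains `m` and the selector `f` are passed in as vectors. The function name and the default values of `sigma` and `theta` are hypothetical, whereas `alpha`, `gamma`, and `xi` default to the values used in the experiments.

```python
import numpy as np

def acc_pnmcc_update(g_hat, x, d, m, f, alpha=0.27, gamma=5e-4,
                     xi=5.0, sigma=1.0, theta=1e-3):
    """One ACC-PNMCC-style iteration (illustrative sketch, assumed form).

    m: diagonal of the proportionate gain matrix G(n)
    f: diagonal of the selector matrix F (1 = small tap, 0 = large tap)
    """
    e = d - x @ g_hat                              # a priori error
    gx = m * x                                     # G(n) x(n)
    kernel = np.exp(-e**2 / (2.0 * sigma**2))      # correntropy weight
    data_term = alpha * kernel * e * gx / (x @ gx + theta)
    # Reweighted zero attractor, active only where f is 1.
    attractor = gamma * f * np.sign(g_hat) / (1.0 + xi * np.abs(g_hat))
    return g_hat + data_term - attractor, e

# With a zero input vector only the zero attractor acts on the estimate.
g_new, e = acc_pnmcc_update(np.array([0.5, 0.01]), np.zeros(2), 0.0,
                            m=np.array([0.5, 0.5]), f=np.array([0.0, 1.0]))
```

In this toy call the large tap stays at 0.5, while the small tap shrinks by γ/(1+ξ·0.01), which shows how the attractor targets only the coefficients below the threshold.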

3 Results and discussions

In this section, several different simulation experiments are carried out to investigate the performance of the proposed ACC-PNMCC algorithm. The input signal x(n) is a random signal, while the noise signal r(n) is a mixed Gaussian signal with distribution [33, 37, 40] \(\left(1-\chi\right)N\left(\mu_{1},\nu_{1}^{2}\right)+\chi N\left(\mu_{2},\nu_{2}^{2}\right)\),

where \( N\left ({{\mu _{i}},\nu _{i}^{2}} \right)\left ({i = 1,2} \right)\) denotes a Gaussian distribution with mean μi and variance \({\nu _{i}^{2}}\). The mixing parameter χ is used to balance the two Gaussian noises. In our simulation experiments, these parameters are set to be \(\left ({{\mu _{1}},{\mu _{2}},\nu _{1}^{2},\nu _{2}^{2},\chi } \right) = \left ({0,0,0.05,20,0.05} \right)\).
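A sampler for this noise model can be sketched as follows, assuming that the mixed Gaussian model is a two-component mixture in which each sample is drawn from the second (high-variance) component with probability χ and from the first component otherwise. The function name and its interface are hypothetical, and the defaults are the parameter values listed above.

```python
import numpy as np

def mixed_gaussian_noise(n, mu1=0.0, mu2=0.0, var1=0.05, var2=20.0,
                         chi=0.05, rng=None):
    """Draw n samples from an assumed two-component Gaussian mixture."""
    rng = np.random.default_rng() if rng is None else rng
    impulsive = rng.random(n) < chi                  # component selector
    background = rng.normal(mu1, np.sqrt(var1), n)   # low-variance part
    outliers = rng.normal(mu2, np.sqrt(var2), n)     # high-variance part
    return np.where(impulsive, outliers, background)

r = mixed_gaussian_noise(200_000, rng=np.random.default_rng(0))
```

With these values the overall noise variance is (1−χ)·0.05 + χ·20 = 1.0475, so the rare high-variance samples dominate the noise power even though they occur only about 5% of the time.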

The performance of the proposed ACC-PNMCC algorithm is evaluated through the steady-state mean square deviation (MSD), which is defined as \(\text{MSD}(n) = E\left\{\left\|\textbf{g}_{0}-\hat{\mathbf{g}}(n)\right\|_{2}^{2}\right\}\).
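In simulation, the expectation in the MSD is typically replaced by an average over independent runs. The sketch below assumes the usual definition of the MSD as the expected squared Euclidean distance between the true channel and its estimate. The function name `msd` and the array layout are hypothetical.

```python
import numpy as np

def msd(g0, g_hat_runs):
    """Average squared deviation over runs.

    g0:          true channel, shape (M,)
    g_hat_runs:  estimates at one iteration, shape (runs, M)
    """
    dev = np.sum((g_hat_runs - g0) ** 2, axis=1)   # squared error per run
    return float(np.mean(dev))

g0 = np.array([1.0, 0.0])
runs = np.array([[1.0, 0.1],
                 [0.9, 0.0]])
value = msd(g0, runs)
value_db = 10.0 * np.log10(value)   # dB scale, commonly used for plotting
```

Evaluating this quantity at every iteration gives a learning curve, and averaging it over the final iterations gives a steady-state figure.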

From the updating Eq. (23), we notice that several key parameters may affect the ACC-PNMCC performance and thus must be properly selected. To better select these parameters, we experimentally analyzed their effect on the MSD performance of the ACC-PNMCC algorithm. Herein, the parameters considered are αACC, γACC, and ξ. Each parameter is tuned to obtain a small MSD: in each experiment, only one parameter is varied while the other parameters are held at their selected values. In the experiments designed for this study, the number of nonzero coefficients is 1 and the total length of the unknown channel is set to M=16. Firstly, γACC is studied under different SNRs [51]. In this simulation, we have set αACC=0.27 and ξ=5. The simulation results are shown in Fig. 1. It is observed that the MSD decreases for all values of γACC as the SNR increases from 1 to 40 dB. The MSD decreases with different slopes for different values of γACC, which means that the effect of γACC depends on the SNR. As can be verified from Fig. 1, \(\gamma_{\textrm{ACC}} = 5\times 10^{-4}\) yields the smallest MSD for an SNR of 30 dB. Hence, we study the effect of the step size αACC on the MSD performance for SNR = 30 dB and \(\gamma_{\textrm{ACC}} = 5\times 10^{-4}\). The corresponding MSD is shown in Fig. 2. We can verify that the MSD gradually decreases as the step size increases from \(1\times 10^{-3}\) to \(5\times 10^{-2}\), while the MSD increases for step sizes greater than \(5\times 10^{-2}\). This indicates the importance of a proper choice of the step size. The effect of the reweighting factor ξ on the zero attractor term is studied for a simple case. The results are shown in Fig. 3. From Fig. 3, we note that the reweighted zero attractor mainly attracts to zero the coefficients that are smaller than the defined threshold, while the zero attractor term becomes zero for the coefficients with values greater than the threshold. In addition, the shrinkage ability of the reweighting factor decreases with ξ ranging from 0 to 25. Thus, the reweighting factor in the proposed ACC-PNMCC algorithm exerts strong zero attraction on the relatively small coefficients to improve their convergence after the initial transient.

Based on the above parameter selections, the MSD performance of the proposed ACC-PNMCC algorithm was verified for different sparsity levels at SNR = 30 dB. For comparison purposes, the conventional MCC, NMCC, PNMCC, and sparse PNMCC algorithms were also considered in the simulations. The sparse PNMCC (ZA-PNMCC and RZA-PNMCC) algorithms were obtained by using the l1-norm and are briefly described in the Appendix. In this experiment, K denotes the number of nonzero coefficients in the sparse multi-path channel. Firstly, there is only one nonzero channel coefficient (K=1), which is randomly located within the unknown sparse multi-path channel. To obtain the same initial convergence speed for all the compared algorithms, their parameters were set as follows: αMCC=0.03, αNMCC=0.4, αPNMCC=αZA=αRZA=0.3, \(\gamma_{\textrm{ZA}} = 5\times 10^{-5}\), \(\gamma_{\textrm{RZA}} = 1\times 10^{-4}\), αACC=0.27, \(\gamma_{\textrm{ACC}} = 5\times 10^{-4}\), and ξ=5. Here, αMCC, αZA, and αRZA are the step sizes of the MCC, ZA-PNMCC, and RZA-PNMCC algorithms, respectively, and γZA and γRZA are the trade-off parameters of the ZA-PNMCC and RZA-PNMCC algorithms, respectively. The MSD performance of the proposed ACC-PNMCC algorithm for K=1 is given in Fig. 4. It is observed that ACC-PNMCC achieves the lowest steady-state MSD when all the compared algorithms have the same initial convergence speed. The corresponding steady-state MSDs for K=2, 4, and 8 are shown in Figs. 5, 6, and 7. We note that the steady-state MSDs of the ZA-PNMCC, RZA-PNMCC, and ACC-PNMCC algorithms increase as the number of nonzero coefficients grows from K=1 to K=8. However, the MSD of the ACC-PNMCC algorithm is still lower than those of the other algorithms. In addition, the MSDs of the compared algorithms as a function of the sparsity level K/M are shown in Fig. 8 to illustrate the effect of the sparsity level more intuitively. It is found that the MSDs of ZA-PNMCC, RZA-PNMCC, and ACC-PNMCC gradually increase as the sparsity level K/M increases from 0.0625 to 0.5, which is consistent with the results in Figs. 4, 5, 6, and 7. The MSDs of the MCC, NMCC, and PNMCC algorithms remain almost invariant, as they do not have any zero attractor term that is sensitive to the sparsity level K/M. However, one should note that the MSDs of ZA-PNMCC and ACC-PNMCC are almost equal to \(1\times 10^{-4}\) when K/M=0. This is because the ACC-PNMCC algorithm uses the l1-norm when K/M=0, like the ZA-PNMCC algorithm. When K/M is greater than 0.0625, the ACC-PNMCC algorithm is always better than the other algorithms. Thus, we can say that the ACC-PNMCC algorithm is suitable for sparse multi-path channel estimation in practical applications.

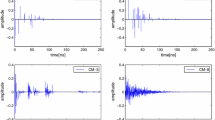

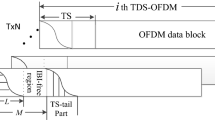

The third experiment considered the application of ACC-PNMCC to echo cancelation. A typical echo path of a 256-tap channel with 16 non-zero coefficients, which is also known as a block-sparse path [52], is shown in Fig. 9. The data-reusing, sign, and memory schemes considered in [52] have high complexity, and the sparsity is not given by a formula there. Herein, the sparsity of the echo channel is measured by \({\vartheta _{12}} = \frac{M}{M - \sqrt{M}}\left(1 - \frac{\left\| \textbf{g} \right\|_{1}}{\sqrt{M}\left\| \textbf{g} \right\|_{2}}\right)\) [40, 45, 49]. In this experiment, we use \(\vartheta_{12} = 0.8222\) for the first 8000 iterations and \(\vartheta_{12} = 0.7362\) thereafter. Other simulation parameters are αMCC=0.0055, αNMCC=1.3, αPNMCC=αZA=αRZA=0.9, \(\gamma_{\textrm{ZA}} = 1\times 10^{-6}\), \(\gamma_{\textrm{RZA}} = 1.5\times 10^{-6}\), αACC=0.8, \(\gamma_{\textrm{ACC}} = 5\times 10^{-6}\), and ξ=5. The corresponding steady-state MSDs are shown in Fig. 9. It can be seen that ACC-PNMCC outperforms the other algorithms in terms of both steady-state MSD and convergence speed. Although the sparsity changes from 0.8222 to 0.7362, the performance of the proposed ACC-PNMCC algorithm remains superior to that of the other algorithms, indicating the effectiveness of the ACC-PNMCC algorithm for echo cancelation.
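The sparsity measure used in this experiment is straightforward to evaluate numerically. The sketch below implements the expression given above. The function name `sparsity_measure` and the two test vectors are hypothetical and only serve to show the range of the measure.

```python
import numpy as np

def sparsity_measure(g):
    """Sparsity measure based on the ratio of the l1- and l2-norms of g.

    Undefined for an all-zero vector (division by zero) and for M = 1.
    """
    M = g.size
    l1 = np.sum(np.abs(g))
    l2 = np.sqrt(np.sum(g ** 2))
    return M / (M - np.sqrt(M)) * (1.0 - l1 / (np.sqrt(M) * l2))

one_active = np.zeros(16)
one_active[3] = 0.7                 # a single active tap
dense = np.ones(16)                 # all taps equally active

s_sparse = sparsity_measure(one_active)   # maximally sparse case
s_dense = sparsity_measure(dense)         # fully dense case
```

The measure is 1 for a channel with a single active tap and 0 for a channel whose taps all have the same magnitude, so the values 0.8222 and 0.7362 quoted above both describe fairly sparse echo paths.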

Finally, the computational complexity of the proposed ACC-PNMCC algorithm is presented in Table 1 in comparison with the corresponding algorithms. Herein, the numerical complexities of the algorithms include multiplications, additions, exponentiations, divisions, and comparisons. From Table 1, it can be seen that the computational complexity of the developed ACC-PNMCC algorithm is slightly higher than that of the ZA-PNMCC and RZA-PNMCC algorithms, which is due to the calculation of the ACF. However, the proposed ACC-PNMCC algorithm increases the convergence speed and reduces the MSD.

Based on the above experiment analysis, we infer that the proposed ACC-PNMCC algorithm has a superior steady-state MSD performance and convergence speed for sparse multi-path channel estimation applications. This is because ACC-PNMCC utilizes the l0-norm penalty for the channel coefficients which are larger than our designed threshold, while it exerts the l1-norm penalty on the channel coefficients that are smaller than the designed threshold to attract relatively small coefficients to zero to improve the convergence. Thus, the proposed ACC-PNMCC algorithm performed better than the other algorithms for sparse channel estimation.

4 Conclusions

In this paper, an ACC-PNMCC algorithm has been proposed for sparse multi-path channel estimation. The proposed ACC-PNMCC algorithm exploits inherent sparsity features of sparse multi-path channels by utilizing the designed zero attractor. The performance of the algorithm was investigated and compared with the performances of the MCC, NMCC, PNMCC, and sparse PNMCC algorithms for sparse multi-path channel estimation. Experimental results illustrated that the proposed ACC-PNMCC algorithm is superior to the competing algorithms in terms of both the steady-state MSD and convergence speed.

5 Appendix

Similar to the ZA-MCC and ZA-LMS algorithms [16, 33, 37, 38], the l1-norm is introduced into the cost function of the PNMCC algorithm to develop the zero-attracting PNMCC (ZA-PNMCC) algorithm. The cost function of the ZA-PNMCC algorithm is

where λZA is the Lagrange multiplier. By using the Lagrange multiplier method (LMM), the updating equation of the ZA-PNMCC algorithm can be written as

where αZA and γZA are the step size and trade-off parameter of the ZA-PNMCC algorithm, respectively. Similarly to the RZA-MCC and RZA-LMS algorithms [16, 33, 37, 38], a reweighting factor is introduced into the ZA-PNMCC algorithm to develop the reweighting ZA-PNMCC (RZA-PNMCC) algorithm. The corresponding updating equation is

where ξ1 is the reweighting factor, αRZA denotes the step size, and γRZA represents the trade-off parameter of the RZA-PNMCC algorithm.

Abbreviations

- ACC-PNMCC: Adaptive combination constrained proportionate normalized maximum correntropy criterion
- ACF: Adaptive combination function
- AF: Adaptive filter
- CIM: Correntropy induced metric
- CIR: Channel impulse response
- CS: Compressive sensing
- GMCC: Generalized maximum correntropy criterion
- LMS: Least mean square
- MCC: Maximum correntropy criterion
- MEE: Minimum error entropy
- MSD: Mean square deviation
- MSE: Mean square error
- NGWNE: Non-Gaussian white noise environments
- PNMCC: Proportionate normalized maximum correntropy criterion
- RZA-MCC: Reweighting zero attracting MCC
- SNR: Signal-to-noise ratio
- SSE: Steady-state error
- ZA-LMS: Zero-attracting LMS
- ZA-MCC: Zero-attracting MCC
- ZA-NMCC: Zero-attracting NMCC

References

C. C. Chong, F. Watanabe, H. Inamura, in IEEE Ninth International Symposium on Spread Spectrum Techniques and Applications. Potential of UWB technology for the next generation wireless communications (IEEEBrazil, 2006), pp. 422–429.

S. F. Cotter, B. D. Rao, Sparse channel estimation via matching pursuit with application to equalization. IEEE Trans. Commun.50:, 374–377 (2002).

C. Li, Design and implementation of the software of multi-path effect analysis on radar signal. Electron. Meas. Technol.100:, 4361–4368 (2006).

Y. Li, M. Hamamura, Zero-attracting variable-step-size least mean square algorithms for adaptive sparse channel estimation. Int. J. Adapt. Control Signal Process. 29:, 1189–1206 (2015).

Y. Li, Y. Wang, T. Jiang, Sparse-aware set-membership NLMS algorithms and their application for sparse channel estimation and echo cancelation. AEU Int. J. Electron. Commun.70:, 895–902 (2016).

S. K. Sengijpta, Fundamentals of statistical signal processing: estimation theory. Technometrics.2(4), 728–728 (1995).

T. L. Marzetta, B. M. Hochwald, Fast transfer of channel state information in wireless systems. IEEE Trans. Signal Process.54(4), 1268–1278 (2006).

H. J. Trussell, D. M. Rouse, in Signal Processing Conference. Reducing non-zero coefficients in FIR filter design using POCS (IEEETurkey, 2005), pp. 1–4.

B. Widrow, S. D. Stearns, Adaptive signal processing (Prentice Hall, New Jersey, 1985).

Y. Wang, Y. Li, R. Yang, Sparse adaptive channel estimation based on mixed controlled l 2 and l p-norm error criterion. J. Frankl. Inst.354(15), 7215–7239 (2017).

G. Gui, L. Xu, W. Ma, et al, in IEEE International Conference on Digital Signal Processing. Robust adaptive sparse channel estimation in the presence of impulsive noises (IEEESingapore, 2015), pp. 628–632.

Y. Li, Y. Wang, T. Jiang, Sparse least mean mixed-norm adaptive filtering algorithms for sparse channel estimation application. Int. J. Commun. Syst.30(8), 1–14 (2017).

S. S. Haykin, B. Widrow, Least-mean-square adaptive filters (Wiley, USA, 2003).

D. L. Donoho, Compressed sensing. IEEE Trans. Inf. Theory.52(4), 1289–1306 (2009).

N. Kalouptsidis, G. Mileounis, B. Babadi, V. Tarkh, Adaptive algorithms for sparse system identification. Signal Process.91(8), 1910–1919 (2011).

Y. Chen, Y. Gu, A. O. Hero, in Proc. IEEE International Conference on Acoustic Speech and Signal Processing, Taipei, Taiwan. Sparse LMS for system identification (IEEETaiwan, 2009), pp. 3125–3128.

Y. Gu, J. Jin, S. Mei, L0 norm constraint LMS algorithms for sparse system identification. IEEE Signal Process. Lett.16(9), 774–777 (2009).

G. Gui, W. Peng, F. Adachi, in Wireless Communications and Networking Conference. Improved adaptive sparse channel estimation based on the least mean square algorithm (IEEEChina, 2013), pp. 3105–3109.

O. Taheri, S. A. Vorobyov, in IEEE International Conference on Acoustic, Speech and Signal Processing, (ICASSP). Sparse channel estimation with l p-norm and reweighted l 1-norm penalized least mean squares (IEEECzech Republic, 2011), pp. 2864–2867.

Y. Li, Y. Wang, T. Jiang, in 7th International Conference on Wireless Communications and Sinal Processing, (WCSP). Sparse channel estimation with l p-norm and reweighted l 1-norm penalized least mean squares (IEEEChina, 2015).

R. L. Das, M. Chakraborty, Improving the performance of the PNLMS algorithm using l 1 norm regularization. IEEE/ACM Trans. Audio Speech Lang. Process.24(7), 1280–1290 (2016).

B. Weng, K. E. Barner, Nonlinear system identification in impulsive environments. IEEE Trans. Signal Process.53(7), 2588–2594 (2005).

P. L. Brockett, M. Hinich, G. R. Wilson, Nonlinear and non-gaussian ocean noise. J. Acoust. Soc. Am.82(4), 1386–1394 (1987).

J. Zhang, Z. Zheng, Y. Zhang, J. Xi, X. Zhao, G. Gui, 3D MIMO for 5G NR: several observations from 32 to massive 256 antennas based on channel measurement. IEEE Commun. Mag.56(3), 62–70 (2018).

W. Liu, P. Pokharel, J. C. Principe, Correntropy: properties and applications in non-gaussian signal processing. IEEE Trans. Signal Process.55(11), 5286–5298 (2007).

A. Singh, J. C. Principe, in Proceedings of International Joint Conference on Neural Networks. Using correntropy as cost function in adaptive filters (IEEEAtlanta, 2009), pp. 2950–2955.

D. Erdogmus, J. Principe, Generalized information potential criterion for adaptive system training. IEEE Trans. Neural Netw.13(5), 1035–1044 (2002).

L. Y. Deng, The cross-entropy method: a unified approach to combinatorial optimization, monte-carlo simulation and machine learning. Technometrics. 48(1), 147–148 (2004).

B. Chen, Y. Zhu, J. Hu, Mean-square convergence analysis of ADALINE training with minimum error entropy criterion. IEEE Trans. Neural Netw. 21, 1168–1179 (2010).

B. Chen, J. C. Principe, Some further results on the minimum error entropy estimation. Entropy. 14, 966–977 (2012).

B. Chen, J. C. Principe, On the smoothed minimum error entropy criterion. Entropy. 14, 2311–2323 (2012).

B. Chen, J. Wang, H. Zhao, N. Zheng, J. C. Principe, Convergence of a fixed-point algorithm under maximum correntropy criterion. IEEE Signal Process. Lett. 22, 1723–1727 (2015).

W. Ma, H. Qu, G. Gui, L. Xu, J. Zhao, B. Chen, Maximum correntropy criterion based sparse adaptive filtering algorithms for robust channel estimation under non-gaussian environments. J. Frankl. Inst. 352(7), 2708–2727 (2015).

B. Chen, L. Xing, H. Zhao, N. Zheng, J. C. Principe, Generalized correntropy for robust adaptive filtering. IEEE Trans. Signal Process. 64(13), 3376–3387 (2016).

N. Bershad, Analysis of the normalized LMS algorithm with Gaussian inputs. IEEE Trans. Acoust. Speech Signal Proc. 34(4), 793–806 (1986).

D. B. Haddad, M. R. Petraglia, A. Petraglia, in Proc. of the 24th European Signal Processing Conference (EUSIPCO’16). A unified approach for sparsity-aware and maximum correntropy adaptive filters (EURASIP, Hungary, 2016), pp. 170–174.

Y. Wang, Y. Li, F. Albu, R. Yang, Group constrained maximum correntropy criterion algorithms for estimating sparse mix-noised channels. Entropy. 19(8), 1–18 (2017).

Y. Li, Z. Jin, Y. Wang, R. Yang, A robust sparse adaptive filtering algorithm with a correntropy induced metric constraint for broadband multi-path channel estimation. Entropy. 18(10), 1–14 (2016).

Y. Li, Z. Jin, Y. Wang, R. Yang, in Progress In Electromagnetics Research Symposium. Sparse normalized maximum correntropy criterion algorithm with l1-norm penalties for channel estimation (The Electromagnetics Academy, St Petersburg, 2017).

Y. Li, Y. Wang, R. Yang, F. Albu, A soft parameter function penalized normalized maximum correntropy criterion algorithm for sparse system identification. Entropy. 19(1), 1–16 (2017).

H. Deng, M. Doroslovacki, Proportionate adaptive algorithms for network echo cancellation. IEEE Trans. Signal Process. 54(5), 1794–1803 (2006).

R. L. Das, M. Chakraborty, On convergence of proportionate-type normalized least mean square algorithms. IEEE Trans. Circ. Syst. II Express Briefs. 62(5), 491–495 (2015).

W. Ma, D. Zheng, Z. Zhang, et al., Robust proportionate adaptive filter based on maximum correntropy criterion for sparse system identification in impulsive noise environments. SIViP. 12(1), 117–124 (2018).

Z. Wu, S. Peng, B. Chen, H. Zhao, J. C. Principe, Proportionate minimum error entropy algorithm for sparse system identification. Entropy. 17(9), 5995–6006 (2015).

Y. Wang, Y. Li, R. Yang, in The 25th European Signal Processing Conference (EUSIPCO). A sparsity-aware proportionate normalized maximum correntropy criterion algorithm for sparse system identification in non-gaussian environment (EURASIP, Greece, 2017).

F. Y. Wu, F. Tong, Non-uniform norm constraint LMS algorithm for sparse system identification. IEEE Commun. Lett. 17(2), 385–388 (2013).

Y. Li, Y. Wang, T. Jiang, Norm-adaption penalized least mean square/fourth algorithm for sparse channel estimation. Signal Proc. 128, 243–251 (2016).

H. Everett, Generalized Lagrange multiplier method for solving problems of optimum allocation of resources. Oper. Res. 11(3), 399–417 (1963).

Y. Wang, Y. Li, Sparse multipath channel estimation using norm combination constrained set-membership NLMS algorithms. Wirel. Commun. Mob. Comput. 2017, 1–10 (2017).

M. L. Aliyu, M. A. Alkassim, M. S. Salman, A p-norm variable step-size LMS algorithm for sparse system identification. Sig. Image Video Process. 9(7), 1559–1565 (2015).

W. Jie, Y. Jie, X. Jian, S. Hikmet, G. Gui, Sparse hybrid adaptive filtering algorithm to estimate channels in various SNR environments. IET Commun. (2017). https://doi.org/10.1049/iet-com.2017.1276.

J. Liu, S. L. Grant, Block sparse memory improved proportionate affine projection sign algorithm. Electron. Lett. 51(24), 2001–2003 (2015).

Acknowledgements

We would like to express our great appreciation to Prof. Felix Albu for his valuable suggestions on computing the numerical complexity of the proposed ACC-PNMCC algorithm in this research work.

Funding

This work was supported by the PhD Student Research and Innovation Fund of the Fundamental Research Funds for the Central Universities (HEUGIP201707), the National Key Research and Development Program of China (2016YFE0111100), the National Science Foundation of China (61571149), the Key Research and Development Program of Heilongjiang (GX17A016), the Science and Technology Innovative Talents Foundation of Harbin (2016RAXXJ044), the Opening Fund of Acoustics Science and Technology Laboratory (grant no. SSKF2016001), and the China Postdoctoral Science Foundation (2017M620918).

Availability of data and materials

Please contact authors for data requests.

Author information

Contributions

YW devised the code, carried out the simulation experiments, and wrote the draft of this paper. YL helped to check the code and simulations and put forward the idea of the ACC-PNMCC algorithm. JCMB and XH helped to check the derivation of the formulas and improved the English of this paper. All the authors contributed to writing the paper, and they have read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study does not involve human participants, human data, or human tissue.

Consent for publication

The manuscript does not contain any individual person’s data.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, Y., Li, Y., M. Bermudez, J.C. et al. An adaptive combination constrained proportionate normalized maximum correntropy criterion algorithm for sparse channel estimations. EURASIP J. Adv. Signal Process. 2018, 58 (2018). https://doi.org/10.1186/s13634-018-0581-5

DOI: https://doi.org/10.1186/s13634-018-0581-5