Abstract

In this paper, a novel class-specific kernel linear regression classification is proposed for face recognition under very low-resolution and severe illumination variation conditions. Since the low-resolution problem coupled with illumination variations produces an ill-posed data distribution, the nonlinear projection rendered by a kernel function can enhance the modeling capability of linear regression for such data. The kernel trick avoids the need for explicit knowledge of the nonlinear mapping function. To reduce nonlinear redundancy, a low-rank-r approximation is proposed to make the kernel projection feasible for classification. By combining the proposed class-specific kernel projection with linear regression classification, the class label is determined by the minimum projection error. Experiments on 8 × 8 and 8 × 6 images down-sampled from the extended Yale B, FERET, and AR facial databases show that the proposed algorithm outperforms state-of-the-art methods under severe illumination variation and very low-resolution conditions.


1 Introduction

Numerous face recognition methods have been proposed in recent years [1]. In realistic situations such as video surveillance, face recognition faces many challenges, especially the low-resolution (LR) problem caused by cameras located far from the subjects. Moreover, the LR problem may be coupled with other effects such as illumination variations. It is therefore desirable to devise a face recognition method that handles both very low resolution and illumination variations.

In the literature, numerous subspace projection methods have been proposed for face recognition. Principal component analysis (PCA) [2–6] and independent component analysis (ICA) [7–9] have been reported to be robust under noisy conditions. Linear discriminant analysis (LDA) [3–6] yields better results than PCA under clean conditions and lighting changes. Moreover, kernel variants such as kernel PCA (KPCA) [10–12] and kernel LDA (KLDA) [12–14] achieve better performance by nonlinearly mapping the data from the original space to a very high-dimensional feature space, the reproducing kernel Hilbert space (RKHS). Through this nonlinear mapping, KPCA and KLDA can exploit higher-order statistics, whereas PCA and LDA use only first- and second-order statistics. These kernel methods are therefore better suited to the highly nonlinear data distributions that arise under low-resolution and illumination variation conditions. In addition, geometrically motivated approaches such as locality preserving projection (LPP) [15] and neighborhood preserving embedding (NPE) [16] have been shown to be effective for face recognition.

Recently, sparse representation classification (SRC) [17, 18] and linear regression classification (LRC) [19] have been proposed for face recognition. Although SRC-based approaches perform very well in many situations, they require more execution time than LRC-based approaches. Pursuing both accuracy and speed, we take the LRC as the basis for further investigation. The LRC relies on the observation that face images of a specific class lie on a class-specific linear subspace [3, 20]. The regression coefficients are estimated by the least-squares method, and the decision is made by the minimum reconstruction error. Reported experiments have shown that down-sampled low-resolution face images can be used directly for classification. However, as also reported, the LRC cannot withstand severe illumination variations. A robust linear regression classification algorithm (RLRC) [21] has been introduced to address robust face recognition, but it does not address the low-resolution problem.

The performance of existing methods such as PCA, LDA, LPP, and NPE decreases under low-resolution conditions because of the loss of high-frequency information [22]. Boom et al. [23] showed that face images below 32 × 32 pixels seriously degraded the performance of PCA and LDA. In [24], face images of 20 × 20 and 10 × 10 pixels dramatically deteriorated recognition performance compared with 40 × 40 pixel images in video-based face recognition systems. In [25], face resolutions below 36 × 48 pixels reduced expression recognition performance.

To overcome the low-resolution face recognition problem, several works use super-resolution (SR) methods [26–30]. One approach learns the relationship between LR face images and their corresponding high-resolution (HR) face images [29]. Another uses canonical correlation analysis to compute coherent features between LR face images and their HR counterparts [30]. Both approaches require pairs of LR and HR images.

1.1 Problem statements

In realistic situations, such as identifying criminals, only LR face images may be available in the training set, and some training individuals may differ from those in the gallery. In other words, the HR face images of specific persons are not available for modeling the relationship and computing the similarity required by SR approaches [26–30]. How to perform LR face recognition directly, without HR information, is therefore a critical and practical topic.

Several approaches [3, 31–35] have been proposed to address illumination variations. For instance, preprocessing methods such as histogram equalization, gamma correction, and the logarithm transform are widely used for illumination normalization. Other well-known illumination-invariant methods include gradient operations, Gabor filters, and LDA-based approaches. However, these methods tend to fail under LR conditions, because the high-frequency details that carry important features for face recognition are lost.

1.2 Contributions

We propose a novel face recognition algorithm that overcomes the limitation of the LRC [19] by embedding the kernel method into linear regression. The key idea is to apply a nonlinear mapping function that maps the original space into a higher-dimensional feature space in which linear regression works better. To make the proposed kernel projection feasible, a constrained low-rank approximation [36–38] is proposed to obtain a low-rank-r singular value approximation. Low-rank approximation is a rank-reduction method that minimizes the difference between a given matrix and its approximation. Simulations carried out on the extended Yale B, FERET, and AR facial databases show that the proposed kernel linear regression classification (KLRC) achieves good performance for LR face recognition under varying lighting without any preprocessing. At the same time, the proposed algorithm can reconstruct very low-resolution face images under illumination variations with high quality, as measured by quality assessments.

1.3 Paper outline

The rest of this paper is organized as follows. Section 2 reviews the LRC approach and presents the motivations. Section 3 formulates the proposed KLRC method with a constrained low-rank approximation algorithm. Section 4 shows the comparisons with the related work. Section 5 gives experimental results. Finally, we draw conclusions in Section 6.

2 Background and motivations

2.1 Linear regression classification (LRC)

Assume that we have N subjects, with \( p_i \) training images from the ith class, i = 1, 2, …, N. Each gray-scale training image has a × b pixels and is represented as \( {\boldsymbol{v}}_{i,j}\in {\Re}^{a\times b} \), i = 1, 2, …, N and j = 1, 2, …, \( p_i \). Each training image is then transformed into a column vector \( {\boldsymbol{w}}_{i,j}\in {\Re}^{q\times 1} \), where q = a × b. To apply linear regression to estimate the class-specific model, we stack the column vectors \( {\boldsymbol{w}}_{i,j} \) according to class membership. Hence, for the ith class, we have

\[ {\boldsymbol{W}}_i=\left[{\boldsymbol{w}}_{i,1},{\boldsymbol{w}}_{i,2},\dots,{\boldsymbol{w}}_{i,p_i}\right]\in {\Re}^{q\times p_i},\quad i=1,2,\dots,N \tag{1} \]

where each vector \( {\boldsymbol{w}}_{i,j} \) is a column of \( {\boldsymbol{W}}_i \). Thus, in the training phase, the ith class is represented by the vector space \( {\boldsymbol{W}}_i \), which is called the regressor for that subject.
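As a minimal sketch of the data layout just described (not the authors' code), the following Python/NumPy snippet flattens each a × b image into a q × 1 column and stacks the \( p_i \) columns of one class into \( {\boldsymbol{W}}_i \). The function name `build_class_regressor` and the random stand-in images are hypothetical, and the row-major flattening order is an assumption, since the text does not specify one.

```python
import numpy as np

def build_class_regressor(images):
    """Stack the p_i training images (each a x b) of one class as columns of W_i (q x p_i, q = a*b)."""
    # Row-major flattening; any fixed pixel ordering works as long as probes use the same one.
    return np.stack([np.asarray(img, dtype=float).reshape(-1) for img in images], axis=1)

rng = np.random.default_rng(0)
class_images = [rng.random((8, 8)) for _ in range(7)]   # 7 stand-in 8 x 8 images of one subject
W_i = build_class_regressor(class_images)
print(W_i.shape)  # (64, 7)
```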

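If a test image vector \( \boldsymbol{y}\in {\Re}^{q\times 1} \) belongs to the ith class, it can be represented as a linear combination of the training images from the ith class:

\[ \boldsymbol{y}={\boldsymbol{W}}_i{\boldsymbol{\beta}}_i+\boldsymbol{e} \tag{2} \]

where \( {\boldsymbol{\beta}}_i\in {\Re}^{p_i\times 1} \) is the vector of regression parameters and \( \boldsymbol{e} \) is an error vector whose elements are independent random variables with zero mean and variance σ². The goal of the regression is to find \( {\tilde{\boldsymbol{\beta}}}_i \) that minimizes the residual error:

\[ {\tilde{\boldsymbol{\beta}}}_i=\arg\min_{{\boldsymbol{\beta}}_i}{\left\Vert \boldsymbol{y}-{\boldsymbol{W}}_i{\boldsymbol{\beta}}_i\right\Vert}_2^2 \tag{3} \]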

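The regression coefficients can be obtained by least-squares estimation and written in matrix form as

\[ {\tilde{\boldsymbol{\beta}}}_i={\left({\boldsymbol{W}}_i^T{\boldsymbol{W}}_i\right)}^{-1}{\boldsymbol{W}}_i^T\boldsymbol{y} \tag{4} \]

The vector of estimated parameters, \( {\tilde{\boldsymbol{\beta}}}_i \), and the predictor, \( {\boldsymbol{W}}_i \), are used to predict the response vector \( {\tilde{\boldsymbol{y}}}_i \) for the ith class:

\[ {\tilde{\boldsymbol{y}}}_i={\boldsymbol{W}}_i{\tilde{\boldsymbol{\beta}}}_i \tag{5} \]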

By substituting (4) for \( {\tilde{\boldsymbol{\beta}}}_i \) in (5), the optimal prediction in the least-squares sense becomes

\[ {\tilde{\boldsymbol{y}}}_i={\boldsymbol{W}}_i{\left({\boldsymbol{W}}_i^T{\boldsymbol{W}}_i\right)}^{-1}{\boldsymbol{W}}_i^T\boldsymbol{y} \tag{6} \]

This can be viewed as a class-specific projection [39]:

\[ {\tilde{\boldsymbol{y}}}_i={\boldsymbol{H}}_i\boldsymbol{y} \tag{7} \]

where \( {\tilde{\boldsymbol{y}}}_i \) is the projection of \( \boldsymbol{y} \) onto the subspace of the ith class by the projection matrix \( {\boldsymbol{H}}_i={\boldsymbol{W}}_i{\left({\boldsymbol{W}}_i^T{\boldsymbol{W}}_i\right)}^{-1}{\boldsymbol{W}}_i^T \). Note that this projection matrix (the hat matrix) is symmetric and idempotent.
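The least-squares prediction and the hat matrix can be checked numerically. The sketch below assumes synthetic Gaussian regressors rather than face data; it verifies that \( {\boldsymbol{H}}_i \) is symmetric and idempotent and applies the minimum-reconstruction-error rule described in the next paragraph. Function names such as `lrc_classify` are illustrative, not from the paper.

```python
import numpy as np

def lrc_projection(W):
    """Hat matrix H = W (W^T W)^{-1} W^T for a full-column-rank regressor W."""
    # Solve the normal equations instead of forming an explicit inverse.
    return W @ np.linalg.solve(W.T @ W, W.T)

def lrc_classify(regressors, y):
    """Return the class whose least-squares prediction of y has the smallest error."""
    errors = []
    for W in regressors:
        beta = np.linalg.lstsq(W, y, rcond=None)[0]   # least-squares estimate, as in (4)
        y_hat = W @ beta                               # predicted response, as in (5)
        errors.append(np.linalg.norm(y - y_hat))
    return int(np.argmin(errors)), errors

rng = np.random.default_rng(1)
q, p = 64, 7
regressors = [rng.standard_normal((q, p)) for _ in range(3)]   # three synthetic classes
H = lrc_projection(regressors[0])
print(np.allclose(H, H.T), np.allclose(H @ H, H))  # True True

# A probe drawn from the span of class 2, plus small noise.
y = regressors[2] @ rng.standard_normal(p) + 0.01 * rng.standard_normal(q)
label, errs = lrc_classify(regressors, y)
print(label)  # 2
```

`lstsq` is used for the estimate because it is numerically safer than inverting \( {\boldsymbol{W}}_i^T{\boldsymbol{W}}_i \); for a full-column-rank regressor it returns the same solution as (4).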

The LRC is based on the minimum reconstruction error: if the original vector belongs to the subspace of class i, the predicted response vector \( {\tilde{\boldsymbol{y}}}_i \) will be the closest to the original vector. The identity i* is therefore determined by the Euclidean distance between the predicted response vectors and the original vector:

\[ i^{*}=\arg\min_{i}{\left\Vert \boldsymbol{y}-{\tilde{\boldsymbol{y}}}_i\right\Vert}_2 \tag{8} \]

2.2 Motivations

The LRC was developed on the premise that samples from a specific person lie on a class-specific linear subspace, and it has been shown to perform well on low-resolution face images but not under severe illumination variations. This is because illumination variations make the data distribution more complicated, so face images captured under variable lighting conditions may render linear subspace approaches inappropriate. In other words, linear subspace methods fail when the Lambertian assumption underlying their treatment of illumination is violated. In particular, when the low-resolution problem is coupled with illumination variations, linear subspace methods such as PCA and LDA, as well as the LRC, cannot counteract the problem. In this paper, a kernel linear regression classification (KLRC) with a constrained low-rank approximation is proposed for low-resolution face recognition under illumination variations. Through a nonlinear mapping function, the KLRC performs the LRC in a higher-dimensional feature space and achieves good results.

3 KLRC

Assume that the original input space can always be mapped to some higher-dimensional feature space in which the data set is distributed linearly. As shown in Fig. 1, in the left panel the data are difficult to fit with a regression line because of their nonlinear distribution, whereas in the right panel, after a mapping function from \( {\Re}^2 \) to \( {\Re}^3 \), the data are distributed linearly and are easy to fit with a regression plane. It can therefore be expected that a nonlinear mapping prior to linear regression could overcome the limitation of the LRC under severe illumination variations. To formulate a general equation and solve the problem systematically, we now introduce the kernel linear regression classification (KLRC) method. The KLRC is based on the premise that, after a nonlinear mapping, samples from a specific class lie on a linear subspace. The key is to apply a nonlinear mapping function to the input space and then perform the LRC in the higher-dimensional feature space. Because the dimension of the resulting feature space can be very large, explicit knowledge of the nonlinear mapping function is computationally avoided by using the kernel trick [40].
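To make the idea behind Fig. 1 concrete, here is a hypothetical toy analog (the figure's actual data are not available): points on the unit circle cannot be fit by a line in \( {\Re}^2 \), but under the degree-2 map φ(x) = (x₁², √2·x₁x₂, x₂²) they satisfy φ₁ + φ₃ = 1 and therefore lie exactly on a plane in \( {\Re}^3 \). Both the map and the data are assumptions chosen for illustration.

```python
import numpy as np

def phi(X):
    """Degree-2 feature map R^2 -> R^3 applied row-wise: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = X[:, 0], X[:, 1]
    return np.column_stack([x1 ** 2, np.sqrt(2.0) * x1 * x2, x2 ** 2])

def fit_residual(features, target):
    """Least-squares fit target ~ [features, 1] @ coef; return the RMS residual."""
    A = np.column_stack([features, np.ones(len(target))])
    coef = np.linalg.lstsq(A, target, rcond=None)[0]
    return np.sqrt(np.mean((A @ coef - target) ** 2))

theta = np.linspace(0.0, 2.0 * np.pi, 50, endpoint=False)
X = np.column_stack([np.cos(theta), np.sin(theta)])    # points on the unit circle

res_line = fit_residual(X[:, [0]], X[:, 1])             # line in R^2: poor fit
F = phi(X)
res_plane = fit_residual(F[:, :2], F[:, 2])             # plane in R^3: exact fit
print(res_line > 0.5, res_plane < 1e-10)  # True True
```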

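For the following derivation, define

\[ {\boldsymbol{Z}}_i=\begin{bmatrix}{\boldsymbol{z}}_{i,1}\\ \vdots \\ {\boldsymbol{z}}_{i,q}\end{bmatrix}\in {\Re}^{q\times p_i} \tag{9} \]

where each vector \( {\boldsymbol{z}}_{i,j} \) is a row vector of \( {\boldsymbol{Z}}_i \). Specifically, each row vector of \( {\boldsymbol{Z}}_i \) is mapped from the original space \( {\Re}^{p_i} \) to a high-dimensional space \( {\Re}^f \) by a nonlinear mapping function \( \boldsymbol{\varPhi}:{\Re}^{p_i}\to {\Re}^f \), \( f>p_i \), so that \( {\Re}^f \) is the space spanned by \( \boldsymbol{\varPhi}\left({\boldsymbol{z}}_{i,j}\right) \). The mapped row vectors are then used for linear regression as

\[ \boldsymbol{y}=\boldsymbol{\varPhi}\left({\boldsymbol{Z}}_i\right){\boldsymbol{\beta}}_i+\boldsymbol{e},\qquad \boldsymbol{\varPhi}\left({\boldsymbol{Z}}_i\right)=\begin{bmatrix}\boldsymbol{\varPhi}\left({\boldsymbol{z}}_{i,1}\right)\\ \vdots \\ \boldsymbol{\varPhi}\left({\boldsymbol{z}}_{i,q}\right)\end{bmatrix}\in {\Re}^{q\times f} \tag{10} \]

where \( {\boldsymbol{\beta}}_i\in {\Re}^{f\times 1} \).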

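Because of the increase in dimensionality, the mapping \( \boldsymbol{\varPhi} \) is handled implicitly through a kernel function satisfying Mercer's theorem. Furthermore, by using the dual representation \( {\boldsymbol{\beta}}_i=\boldsymbol{\varPhi}{\left({\boldsymbol{Z}}_i\right)}^T{\boldsymbol{\alpha}}_i \) with \( {\boldsymbol{\alpha}}_i\in {\Re}^{q\times 1} \), the linear regression in (10) becomes

\[ \boldsymbol{y}=\boldsymbol{\varPhi}\left({\boldsymbol{Z}}_i\right)\boldsymbol{\varPhi}{\left({\boldsymbol{Z}}_i\right)}^T{\boldsymbol{\alpha}}_i+\boldsymbol{e}={\boldsymbol{K}}_i{\boldsymbol{\alpha}}_i+\boldsymbol{e},\qquad {\left[{\boldsymbol{K}}_i\right]}_{j,k}=k\left({\boldsymbol{z}}_{i,j},{\boldsymbol{z}}_{i,k}\right) \tag{11} \]

where the kernel matrix \( {\boldsymbol{K}}_i \) is positive semi-definite. Typical kernel functions satisfying Mercer's theorem include the polynomial kernel and the Gaussian kernel.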
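The kernel trick and the dual representation can be verified with the same degree-2 map. In this sketch (toy row vectors in \( {\Re}^2 \) instead of \( {\Re}^{p_i} \)), the Gram matrix of explicit features equals the polynomial kernel (z·z′)², \( \boldsymbol{\varPhi}(\boldsymbol{Z})\boldsymbol{\beta} \) with \( \boldsymbol{\beta}=\boldsymbol{\varPhi}(\boldsymbol{Z})^T\boldsymbol{\alpha} \) equals \( \boldsymbol{K}\boldsymbol{\alpha} \), and both polynomial and Gaussian kernel matrices have no negative eigenvalues beyond round-off. The kernel parameters (degree 2, zero offset, σ = 0.8) are illustrative choices, not values from the paper.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map R^2 -> R^3."""
    return np.array([x[0] ** 2, np.sqrt(2.0) * x[0] * x[1], x[1] ** 2])

def poly_kernel(A, B, degree=2, c=0.0):
    """Polynomial kernel (a . b + c)^degree between the rows of A and B."""
    return (A @ B.T + c) ** degree

def gaussian_kernel(A, B, sigma=1.0):
    """Gaussian kernel exp(-||a - b||^2 / (2 sigma^2)) between the rows of A and B."""
    sq = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))

rng = np.random.default_rng(2)
Z = rng.standard_normal((6, 2))            # six toy row vectors z_j in R^2

# Kernel trick: inner products of explicit features equal the kernel values.
Phi = np.stack([phi(z) for z in Z])        # Phi(Z), shape (6, 3)
K = poly_kernel(Z, Z)                      # shape (6, 6), no explicit features needed
print(np.allclose(Phi @ Phi.T, K))         # True

# Dual representation: with beta = Phi^T alpha, Phi beta = K alpha.
alpha = rng.standard_normal(6)
beta = Phi.T @ alpha
print(np.allclose(Phi @ beta, K @ alpha))  # True

# Mercer kernels give positive semi-definite matrices (up to round-off).
for name, Kmat in (("polynomial", K), ("gaussian", gaussian_kernel(Z, Z, sigma=0.8))):
    print(name, np.linalg.eigvalsh(Kmat).min() > -1e-9)   # True for both
```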

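We first perform singular value decomposition (SVD) on the kernel matrix \( {\boldsymbol{K}}_i \):

\[ {\boldsymbol{K}}_i=\boldsymbol{U}\boldsymbol{S}{\boldsymbol{V}}^T \tag{12} \]

where \( \boldsymbol{U} \) and \( \boldsymbol{V} \) are the orthogonal matrices of left and right singular vectors, and \( \boldsymbol{S}=\mathrm{diag}\left\{{\lambda}_1,{\lambda}_2,\dots,{\lambda}_g\right\} \) is a diagonal matrix whose singular values are sorted in descending order, \( {\lambda}_1\ge \dots \ge {\lambda}_k\ge \dots \ge {\lambda}_g\ge 0 \). To achieve a robust estimation, we propose a constrained rank-r approximation of \( {\boldsymbol{K}}_i \) defined as

\[ {\boldsymbol{K}}_i^r=\boldsymbol{U}{\boldsymbol{S}}_r{\boldsymbol{V}}^T \tag{13} \]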

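where

\[ {\boldsymbol{S}}_r=\mathrm{diag}\left\{{\lambda}_1,\dots,{\lambda}_r,0,\dots,0\right\} \tag{14} \]

is obtained by discarding the (g − r) smallest SVD components. The number of principal SVD components, r, is determined by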

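where μ is a selected control factor, \( {\lambda}_g \) is the smallest singular value, and \( {\lambda}_{\mathrm{median}} \), the median of all singular values, is expressed as

\[ {\lambda}_{\mathrm{median}}=\begin{cases}{\lambda}_{(g+1)/2}, & g\ \text{odd}\\ \frac{1}{2}\left({\lambda}_{g/2}+{\lambda}_{g/2+1}\right), & g\ \text{even}\end{cases} \tag{16} \]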

After constructing the constrained low-rank approximation in (13), we obtain a kernel linear regression model for the ith class as

\[ \boldsymbol{y}={\boldsymbol{K}}_i^r{\boldsymbol{\alpha}}_i+\boldsymbol{e} \tag{17} \]

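The kernel linear regression then aims to minimize the residual error:

\[ {\tilde{\boldsymbol{\alpha}}}_i=\arg\min_{{\boldsymbol{\alpha}}_i}{\left\Vert \boldsymbol{y}-{\boldsymbol{K}}_i^r{\boldsymbol{\alpha}}_i\right\Vert}_2^2 \tag{18} \]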

This problem can also be solved by least-squares estimation, since it has the same form as (2). Because \( {\boldsymbol{K}}_i^r \) is rank deficient after the low-rank approximation, we use its pseudo-inverse to obtain the least-squares solution

\[ {\tilde{\boldsymbol{\alpha}}}_i={\left({\boldsymbol{K}}_i^r\right)}^{-}\boldsymbol{y} \tag{19} \]

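where the pseudo-inverse of \( {\boldsymbol{K}}_i^r \) is

\[ {\left({\boldsymbol{K}}_i^r\right)}^{-}=\boldsymbol{V}{\boldsymbol{S}}_r^{-}{\boldsymbol{U}}^T \tag{20} \]

with

\[ {\boldsymbol{S}}_r^{-}=\mathrm{diag}\left\{\frac{1}{\lambda_1},\dots,\frac{1}{\lambda_r},0,\dots,0\right\} \tag{21} \]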

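Since \( {\boldsymbol{K}}_i^r{\left({\boldsymbol{K}}_i^r\right)}^{-}\ne \boldsymbol{I} \), the minimum reconstruction error between the original vector and the projected vector can be used to determine the classification result. If a full-rank \( {\boldsymbol{K}}_i \) were inverted directly instead, \( {\boldsymbol{K}}_i{\boldsymbol{K}}_i^{-1}=\boldsymbol{I} \) would map every test vector onto itself for every class, so all reconstruction errors would be zero and carry no class information.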
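A minimal NumPy sketch of the rank-r approximation and its pseudo-inverse, assuming a toy Gaussian kernel matrix: it shows that a full-rank \( \boldsymbol{K} \) gives \( \boldsymbol{K}\boldsymbol{K}^{-1}=\boldsymbol{I} \), whereas \( {\boldsymbol{K}}^r{\left({\boldsymbol{K}}^r\right)}^{-} \) is an idempotent matrix of rank r and not the identity. The value r = 5 is set by hand; the paper's rule for choosing r (based on μ, \( {\lambda}_g \), and \( {\lambda}_{\mathrm{median}} \)) is not reproduced here.

```python
import numpy as np

def rank_r_approx(K, r):
    """Constrained rank-r approximation K^r = U S_r V^T via truncated SVD."""
    U, s, Vt = np.linalg.svd(K)               # singular values s in descending order
    s_r = np.where(np.arange(s.size) < r, s, 0.0)
    return U @ np.diag(s_r) @ Vt, U, s, Vt

def truncated_pinv(U, s, Vt, r):
    """(K^r)^- = V S_r^- U^T with S_r^- = diag(1/s_1, ..., 1/s_r, 0, ..., 0)."""
    s_inv = np.zeros_like(s)
    s_inv[:r] = 1.0 / s[:r]
    return Vt.T @ np.diag(s_inv) @ U.T

rng = np.random.default_rng(3)
Z = rng.standard_normal((10, 4))               # 10 toy row vectors in R^4
sq_dist = np.sum((Z[:, None, :] - Z[None, :, :]) ** 2, axis=2)
K = np.exp(-sq_dist / 2.0)                     # Gaussian kernel matrix (full rank here)

r = 5                                          # hypothetical, hand-picked rank
K_r, U, s, Vt = rank_r_approx(K, r)
K_r_pinv = truncated_pinv(U, s, Vt, r)

P = K_r @ K_r_pinv
print(np.allclose(K @ np.linalg.inv(K), np.eye(10)))            # True: full rank gives I
print(np.allclose(P, np.eye(10)))                               # False: K^r (K^r)^- != I
print(np.allclose(P, P @ P), int(round(float(np.trace(P)))))    # True 5
```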

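In the classification phase, the response vector \( {\tilde{\boldsymbol{y}}}_i \) for the ith class is predicted by

\[ {\tilde{\boldsymbol{y}}}_i={\boldsymbol{K}}_i^r{\tilde{\boldsymbol{\alpha}}}_i \tag{22} \]

By substituting (19) into (22), we obtain

\[ {\tilde{\boldsymbol{y}}}_i={\boldsymbol{K}}_i^r{\left({\boldsymbol{K}}_i^r\right)}^{-}\boldsymbol{y} \tag{23} \]

and hence the class-specific kernel projection

\[ {\tilde{\boldsymbol{y}}}_i={\boldsymbol{P}}_i\boldsymbol{y},\qquad {\boldsymbol{P}}_i={\boldsymbol{K}}_i^r{\left({\boldsymbol{K}}_i^r\right)}^{-} \tag{24} \]

where \( {\tilde{\boldsymbol{y}}}_i \) is the projection of \( \boldsymbol{y} \) onto the kernel subspace of the ith class by the class-specific kernel projection matrix \( {\boldsymbol{P}}_i \). Substituting (13) and (20) gives \( {\boldsymbol{P}}_i=\boldsymbol{U}\,\mathrm{diag}\left\{1,\dots,1,0,\dots,0\right\}{\boldsymbol{U}}^T \), the orthogonal projector onto the first r left singular vectors of \( {\boldsymbol{K}}_i \). Note that \( {\boldsymbol{K}}_i^r{\left({\boldsymbol{K}}_i^r\right)}^{-}\ne \boldsymbol{I} \) is necessary for the KLRC computation.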
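Putting the pieces together, the following is a hedged sketch of the KLRC, not the authors' implementation. It assumes \( {\boldsymbol{Z}}_i={\boldsymbol{W}}_i \), i.e., the kernel is evaluated between the q pixel-wise rows of each class regressor so that \( {\boldsymbol{K}}_i \) is q × q, and it computes \( {\boldsymbol{P}}_i \) as \( {\boldsymbol{U}}_r{\boldsymbol{U}}_r^T \), which equals \( {\boldsymbol{K}}_i^r{\left({\boldsymbol{K}}_i^r\right)}^{-} \) whenever \( {\lambda}_r>0 \). It then applies the minimum reconstruction-error rule stated next. With a linear kernel and r = \( p_i \), the projection reduces to the LRC hat matrix, which serves as a sanity check. The Gaussian bandwidth and r used at the end are hypothetical.

```python
import numpy as np

def linear_kernel(A, B):
    return A @ B.T

def gaussian_kernel(A, B, sigma=1.0):
    sq = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))

def klrc_projection(W, kernel, r):
    """P = K^r (K^r)^- = U_r U_r^T for the class kernel matrix K.

    Assumption (hypothetical): Z_i = W_i, so K[j, k] = kernel(row j of W, row k of W)
    and K is q x q, matching the q x 1 probe y.
    """
    K = kernel(W, W)
    U = np.linalg.svd(K)[0]        # left singular vectors, ordered by singular value
    U_r = U[:, :r]
    return U_r @ U_r.T

def klrc_classify(regressors, y, kernel, r):
    """Minimum reconstruction error between y and its class-specific kernel projections."""
    errors = [np.linalg.norm(y - klrc_projection(W, kernel, r) @ y) for W in regressors]
    return int(np.argmin(errors)), errors

rng = np.random.default_rng(1)
q, p = 64, 7
regressors = [rng.standard_normal((q, p)) for _ in range(3)]       # three synthetic classes
y = regressors[2] @ rng.standard_normal(p) + 0.01 * rng.standard_normal(q)

# Sanity check: a linear kernel with r = p_i reproduces the LRC hat matrix.
W = regressors[0]
H = W @ np.linalg.solve(W.T @ W, W.T)
print(np.allclose(klrc_projection(W, linear_kernel, p), H))   # True
print(klrc_classify(regressors, y, linear_kernel, p)[0])      # 2

# Gaussian kernel: sigma = 3.0 and r = 10 are hypothetical settings, not the paper's.
label_g, errors_g = klrc_classify(regressors, y, lambda A, B: gaussian_kernel(A, B, 3.0), r=10)
print(label_g, np.round(errors_g, 3))
```

Computing the projector from the left singular vectors avoids forming the pseudo-inverse explicitly; the two routes agree algebraically when the r retained singular values are nonzero.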

The KLRC is likewise based on the minimum reconstruction error. In the recognition phase, the identity i* is determined by the Euclidean distance between the predicted response vectors and the original vector:

\[ i^{*}=\arg\min_{i}{\left\Vert \boldsymbol{y}-{\tilde{\boldsymbol{y}}}_i\right\Vert}_2 \tag{25} \]

4 Comparison with the related works

4.1 Analysis of the regression parameter

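To simplify the analysis, we assume that \( {\boldsymbol{W}}_i \) is a square matrix with SVD \( {\boldsymbol{W}}_i={\boldsymbol{U}}_i{\boldsymbol{D}}_i{\boldsymbol{V}}_i^T \). Because \( {\boldsymbol{W}}_i \) is square, \( {\boldsymbol{U}}_i \) and \( {\boldsymbol{V}}_i \) are orthogonal: \( {\boldsymbol{U}}_i^T{\boldsymbol{U}}_i={\boldsymbol{U}}_i{\boldsymbol{U}}_i^T=\boldsymbol{I} \) (so \( {\boldsymbol{U}}_i^T={\boldsymbol{U}}_i^{-1} \)) and \( {\boldsymbol{V}}_i{\boldsymbol{V}}_i^T={\boldsymbol{V}}_i^T{\boldsymbol{V}}_i=\boldsymbol{I} \). In addition, the linear kernel \( k\left({\boldsymbol{z}}_{i,j},{\boldsymbol{z}}_{i,k}\right)=\left\langle {\boldsymbol{z}}_{i,j},{\boldsymbol{z}}_{i,k}\right\rangle ={\boldsymbol{z}}_{i,j}{\boldsymbol{z}}_{i,k}^T \) is used in the KLRC for the theoretical analysis below.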

4.1.1 LRC

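The goal of the LRC is to find \( {\tilde{\boldsymbol{\beta}}}_i \) that minimizes the residual errors. Statistically, the linear regression estimator is unbiased, and the variance of the regression parameter vector \( {\tilde{\boldsymbol{\beta}}}_i \) is

\[ \mathrm{Var}\left({\tilde{\boldsymbol{\beta}}}_i\right)={\sigma}^2{\left({\boldsymbol{W}}_i^T{\boldsymbol{W}}_i\right)}^{-1}={\sigma}^2\sum_{j=1}^{J}\frac{{\boldsymbol{v}}_{ij}{\boldsymbol{v}}_{ij}^T}{d_{ij}^2} \tag{26} \]

where \( {\boldsymbol{W}}_i={\boldsymbol{U}}_i{\boldsymbol{D}}_i{\boldsymbol{V}}_i^T \) by SVD, \( {\boldsymbol{v}}_{ij} \) is the jth column of \( {\boldsymbol{V}}_i \) (the jth right singular vector), \( d_{ij} \) is the corresponding singular value, and J is the number of singular values of \( {\boldsymbol{W}}_i \).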
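The variance formula (26) is the standard ordinary least-squares result. A quick Monte Carlo sanity check, using an illustrative, well-conditioned square \( \boldsymbol{W} \) built from chosen singular values (an assumption, not data from the paper), compares \( {\sigma}^2{\left({\boldsymbol{W}}^T\boldsymbol{W}\right)}^{-1} \), its singular-vector expansion, and the sample mean and covariance of repeated least-squares estimates.

```python
import numpy as np

rng = np.random.default_rng(4)
p, sigma = 5, 0.5
# Square, well-conditioned regressor built from a chosen SVD (illustrative values).
Q1, _ = np.linalg.qr(rng.standard_normal((p, p)))
Q2, _ = np.linalg.qr(rng.standard_normal((p, p)))
d = np.array([3.0, 2.0, 1.5, 1.0, 0.5])
W = Q1 @ np.diag(d) @ Q2.T
beta_true = rng.standard_normal(p)

# Closed form (26): sigma^2 (W^T W)^{-1} = sigma^2 * sum_j v_j v_j^T / d_j^2,
# where v_j are the right singular vectors (rows of Vt) and d_j the singular values.
_, d_svd, Vt = np.linalg.svd(W)
var_closed = sigma ** 2 * sum(np.outer(Vt[j], Vt[j]) / d_svd[j] ** 2 for j in range(p))
print(np.allclose(var_closed, sigma ** 2 * np.linalg.inv(W.T @ W)))  # True

# Monte Carlo: draw fresh noise e many times and re-estimate beta by least squares.
trials = 20000
Y = W @ beta_true + sigma * rng.standard_normal((trials, p))   # each row is one y
B = np.linalg.solve(W, Y.T).T     # W is square and invertible, so LS is an exact solve
print(np.allclose(B.mean(axis=0), beta_true, atol=0.05))             # sample mean near beta_true
print(np.allclose(np.cov(B, rowvar=False), var_closed, atol=0.05))   # sample covariance near (26)
```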

4.1.2 KLRC

The goal of the KLRC is to find \( {\tilde{\boldsymbol{\alpha}}}_i \) that minimizes the residual errors. Statistically, the kernel linear regression estimator is also unbiased, since the model in (17) has the same form as (2). On the other hand, the variance of the regression parameter vector in the kernel linear regression model is

\[ \mathrm{Var}\left({\tilde{\boldsymbol{\alpha}}}_i\right)={\sigma}^2\sum_{j=1}^{r}\frac{{\boldsymbol{v}}_{ij}{\boldsymbol{v}}_{ij}^T}{d_{ij}^2} \tag{27} \]

where r < J, \( {\boldsymbol{W}}_i^r={\boldsymbol{U}}_i^r{\boldsymbol{D}}_i^r{\left({\boldsymbol{V}}_i^r\right)}^T \) is the rank-r truncated SVD, and \( d_{ij} \) is the jth singular value corresponding to the jth singular vector. Comparing (26) with (27): every term \( {\boldsymbol{v}}_{ij}{\boldsymbol{v}}_{ij}^T/d_{ij}^2 \) is positive semi-definite, and (27) omits the J − r terms associated with the smallest singular values, which contribute the largest amounts \( {\sigma}^2/d_{ij}^2 \). Hence the variance of the regression parameter vector in the KLRC is smaller than that in the LRC, and the KLRC can be expected to provide more reliable regression parameters for classification.
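The effect of truncation on the variance can be illustrated numerically. This sketch assumes that (27) keeps only the first r terms of the sum in (26) and uses made-up singular values that include two small ones; it shows that the discarded terms dominate the trace and that the difference between the two covariance matrices is positive semi-definite. The comparison concerns variance only.

```python
import numpy as np

rng = np.random.default_rng(5)
J, r, sigma = 6, 4, 1.0
V, _ = np.linalg.qr(rng.standard_normal((J, J)))   # orthonormal columns v_1..v_J
d = np.array([4.0, 3.0, 2.0, 1.0, 0.2, 0.05])      # illustrative singular values, descending

def variance(num_terms):
    """sigma^2 * sum_{j=1}^{num_terms} v_j v_j^T / d_j^2."""
    return sigma ** 2 * sum(np.outer(V[:, j], V[:, j]) / d[j] ** 2 for j in range(num_terms))

var_lrc = variance(J)    # all J terms, as in (26)
var_klrc = variance(r)   # first r terms only, the assumed form of (27)

print(round(float(np.trace(var_lrc)), 2), round(float(np.trace(var_klrc)), 2))  # 426.42 1.42
# The difference is the sum of the discarded terms, hence positive semi-definite.
print(np.linalg.eigvalsh(var_lrc - var_klrc).min() >= -1e-9)  # True
```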

5 Experimental results

For verification, we evaluate the proposed algorithms on facial images down-sampled from the extended Yale B (EYB) [41], AR [42], and FERET [43] face databases, focusing on low-resolution problems coupled with illumination variations. All experimental results in this section report the top-1 recognition accuracy (%).

The experiments are designed to evaluate the effectiveness of the proposed method in coping with unseen lighting changes under the LR condition. We compare the proposed methods, KLRC-p and KLRC-g, with PCA+Euclidean, PCA+Mahalanobis [44], KPCA-p, KPCA-g, LDA+Euclidean, LDA+Mahalanobis, KLDA-p, KLDA-g, LRC, RLRC, SRC, LPP, NPE, improved principal component regression (IPCR) [45], unitary regression classification (URC) [47], linear discriminant regression classification (LDRC) [46], and local binary patterns (LBP) [48], where p and g denote the polynomial kernel and the Gaussian kernel, respectively. The PCA-based and LDA-based approaches retain 85 % of the dimensionality. Note that, as stated in Section 1.1, this paper assumes that the HR counterparts of the LR face images are not available; hence, existing face recognition systems based on SR methods are not applicable to this problem.

5.1 Experiments on EYB

The EYB contains images of 38 subjects under 9 poses and 64 illuminations per pose. The frontal face images of all subjects under the 64 illuminations are used for evaluation. The EYB is divided into five subsets according to the angle of the light source direction, giving a total of 2432 images: 266 (7 images per person), 456 (12 images per person), 456 (12 images per person), 532 (14 images per person), and 722 (19 images per person) images in subsets 1 to 5, respectively. All images are cropped, low-pass filtered, and resized to low-resolution images of 8 × 8 pixels, as shown in Fig. 2. Subset 1 is used for training, and the remaining subsets (subsets 2 to 5) are used for testing.
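The "cropped, low-pass filtered, and resized" step can be sketched with block averaging, which acts as a box low-pass filter followed by decimation. The paper does not specify its filter; the box filter, the function name `block_average_downsample`, and the 192 × 168 input size (a common crop size for cropped EYB images) are assumptions for illustration.

```python
import numpy as np

def block_average_downsample(img, out_h, out_w):
    """Box low-pass filter plus decimation: average non-overlapping blocks.

    Assumes the input size is an integer multiple of the output size.
    """
    h, w = img.shape
    fh, fw = h // out_h, w // out_w
    if fh * out_h != h or fw * out_w != w:
        raise ValueError("input size must be a multiple of the output size")
    return img.reshape(out_h, fh, out_w, fw).mean(axis=(1, 3))

rng = np.random.default_rng(6)
face = rng.random((192, 168))                  # stand-in for one cropped face image
lr = block_average_downsample(face, 8, 8)      # 24 x 21 pixel blocks -> 8 x 8 image
print(lr.shape)  # (8, 8)
```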

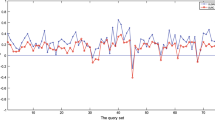

First, we investigate performance under different image resolutions. Figure 3 shows the average recognition rate over the test subsets. The results reveal that the proposed KLRC-p and KLRC-g consistently outperform the other methods. Low-resolution face images of 8 × 8 pixels degrade performance significantly; nonetheless, the proposed KLRC algorithms still perform well under this very low-resolution condition. The results also show that the performance of the PCA-based, LDA-based, and other subspace projection methods drops drastically for resolutions below 16 × 16 pixels. Interestingly, KLRC-p and KLRC-g at 8 × 8 pixels significantly outperform the PCA-based and LDA-based approaches at 32 × 32 pixels and achieve recognition rates comparable to those of the LRC-based approaches at 32 × 32 pixels. LBP [48] is a local feature method that copes effectively with illumination variations at 32 × 32 pixels; however, it does not perform well at very low resolution, since the facial information is insufficient for local feature extraction. We attribute the success of the KLRC to performing linear regression in the higher-dimensional feature space.

Next, we focus on the low-resolution images. As shown in Table 1, KLRC-p and KLRC-g outperform LRC, RLRC, IPCR, URC, LDRC, PCA+Euclidean, PCA+Mahalanobis, kernel-based PCA, LDA+Euclidean, LDA+Mahalanobis, kernel-based LDA, SRC, LPP, NPE, and LBP for low-resolution face recognition under illumination variations. The PCA-based methods perform worst. Although it is widely accepted that discriminant-based approaches are more robust to lighting variations than PCA-based approaches [3], in our experiments the discriminant-based approaches still cannot withstand the low-resolution problem coupled with illumination variations. This is because low-resolution images lack the high-frequency components that carry the discriminative information needed for discriminant analysis. Huang et al. [45] have shown that regression-based methods can outperform discriminant-based approaches for face recognition under illumination variations. The proposed KLRC-p and KLRC-g achieve significant improvements. In addition, although RLRC performs better than LRC, it does not achieve satisfactory performance because it performs regression in the original linear space.

5.2 Experiments on AR

For further verification, we conducted experiments on the AR face database. The AR database, built by Martinez and Benavente, contains a total of 3510 mug shots of 135 subjects (76 males and 59 females) with different facial expressions, lighting changes, and partial occlusions. Each subject has 26 images taken in two sessions. The first session, containing 13 images, includes neutral expression, smile, anger, screaming, different lighting changes, and two realistic partial occlusions with lighting changes. The second session repeats the first session in the same way 2 weeks later.

To evaluate the effectiveness of the proposed approach in coping with variable illumination, only the face images with illumination variations were considered. All color face images in the AR database are converted to gray levels, cropped, and down-sampled to 8 × 6 pixels. Note that no face alignment is applied to the cropped face images. As shown in Fig. 4, 120 subjects with 8 face images each under illumination variations (no lighting, left lighting, right lighting, and full lighting) are chosen for evaluation. Training is conducted on the images with no lighting, and the remaining lighting conditions (left, right, and full lighting) are used for testing.

The experimental results in Table 2 show that KLRC-p and KLRC-g attain higher recognition rates than LRC, RLRC, IPCR, URC, LDRC, PCA+Euclidean, PCA+Mahalanobis, kernel-based PCA, LDA+Euclidean, LDA+Mahalanobis, kernel-based LDA, LPP, NPE, and LBP for low-resolution face recognition under illumination variations. We also observe that SRC performs as well as the proposed KLRC, especially in less ill-posed situations. However, SRC-based approaches generally require more execution time than LRC-based approaches [49]. The discriminant-based approaches, as well as LRC, RLRC, IPCR, URC, and LDRC, do not work well for low-resolution face recognition under illumination variations.

5.3 Experiments on FERET

We further conduct experiments on the FERET face database to evaluate performance under illumination and expression variations, since varying facial expressions are unavoidable in face recognition. The FERET subset used here includes 250 people with four frontal-view images per subject. These 1000 face images with illumination and expression variations are resized to 8 × 6 pixels. Two images of each person are randomly selected for training, and the other two are used for testing. The experimental results in Table 3 show that KLRC-p and KLRC-g achieve higher recognition rates than LRC, RLRC, IPCR, URC, LDRC, PCA+Euclidean, PCA+Mahalanobis, kernel-based PCA, LDA+Euclidean, LDA+Mahalanobis, kernel-based LDA, SRC, LPP, NPE, and LBP. The results indicate that KLRC-p and KLRC-g work well for low-resolution problems with illumination and expression variations. This is reasonable because LR face images lose facial expression information [25]. On the other hand, the lighting variations in FERET are slight, so the improvement is limited.

6 Conclusions

In this paper, statistical analyses and experimental results verified that the proposed class-specific kernel linear regression classification performs best for low-resolution face recognition under illumination variations. With the kernel trick, the nonlinear, dimension-increasing mapping function enhances the modeling capability under low resolution and illumination variations. Furthermore, the constrained low-rank approximation was proposed to perform the low-rank approximation automatically, making the kernel projection feasible for classification. Comparisons with state-of-the-art methods show that the proposed KLRC-p and KLRC-g are competitive; in particular, we have demonstrated that they perform better than PCA+Euclidean, PCA+Mahalanobis, KPCA-p, KPCA-g, LDA+Euclidean, LDA+Mahalanobis, KLDA-p, KLDA-g, LRC, RLRC, SRC, LPP, NPE, IPCR, URC, LDRC, and LBP for low-resolution face recognition under variable lighting. In summary, KLRC-p and KLRC-g dramatically improve the robustness of the LRC for very low-resolution face recognition under severe illumination variations.

References

W Zhao, R Chellappa, A Rosenfeld, PJ Phillips, Face recognition: a literature survey. ACM Comput Surv 35(4), 399–458 (2003)

M Turk, A Pentland, Eigenfaces for recognition. J Cogn Neurosci 3(1), 71–86 (1991)

P Belhumeur, J Hespanha, D Kriegman, Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans on Pattern Analysis and Machine Intelligence 19(7), 711–720 (1997)

AM Martinez, AC Kak, PCA versus LDA. IEEE Trans on Pattern Analysis and Machine Intelligence 23(2), 228–233 (2001)

X Jiang, Linear subspace learning-based dimensionality reduction. IEEE Signal Process Mag 28(2), 16–26 (2011)

X Jiang, Asymmetric principal component and discriminant analyses for pattern classification. IEEE Trans on Pattern Analysis and Machine Intelligence 31(5), 931–937 (2009)

PC Yuen, JH Lai, Face representation using independent component analysis. Pattern Recogn 35(6), 1247–1257 (2002)

MS Bartlett, JR Movellan, TJ Sejnowski, Face recognition by independent component analysis. IEEE Trans on Neural Networks 13(6), 1450–1464 (2002)

J Yang, D Zhang, J Yang, Constructing PCA baseline algorithms to reevaluate ICA-based face-recognition performance. IEEE Trans on Systems, Man, and Cybernetics, Part B 37(4), 1015–1021 (2007)

B Moghaddam, Principal manifolds and probabilistic subspaces for visual recognition. IEEE Trans on Pattern Analysis and Machine Intelligence 24(6), 780–788 (2002)

B Scholkopf, A Smola, K-R Muller, Nonlinear component analysis as a kernel eigenvalue problem. Neural Comput 10, 1299–1319 (1998)

MH Yang, Kernel eigenfaces vs. kernel fisherfaces: face recognition using kernel methods, in Proc. Fifth IEEE Int'l Conf. on Automatic Face and Gesture Recognition, pp. 215–220 (2002)

G Baudat, F Anouar, Generalized discriminant analysis using a kernel approach. Neural Comput 12, 2385–2404 (2000)

S Mika, G Ratsch, J Weston, B Scholkopf, KR Muller, Fisher discriminant analysis with kernels, in Neural Networks for Signal Processing IX 1(1), pp. 41–48 (1999)

X He, S Yan, Y Hu, P Niyogi, HJ Zhang, Face recognition using Laplacianfaces. IEEE Trans on Pattern Analysis and Machine Intelligence 27(3), 328–340 (2005)

X He, D Cai, S Yan, H Zhang, Neighborhood preserving embedding. Proc ICCV’05 2, 1208–1213 (2005)

J Wright, AY Yang, A Ganesh, SS Sastry, Y Ma, Robust face recognition via sparse representation. IEEE Trans on Pattern Analysis and Machine Intelligence 31(2), 210–227 (2009)

X Jiang, J Lai, Sparse and dense hybrid representation via dictionary decomposition for face recognition. IEEE Trans on Pattern Analysis and Machine Intelligence 37(5), 1067–1079 (2015)

I Naseem, R Togneri, M Bennamoun, Linear regression for face recognition. IEEE Trans on Pattern Analysis and Machine Intelligence 32(11), 2106–2112 (2010)

R Basri, D Jacobs, Lambertian reflection and linear subspaces. IEEE Trans on Pattern Analysis and Machine Intelligence 25(3), 218–233 (2003)

I Naseem, R Togneri, M Bennamoun, Robust regression for face recognition. Pattern Recogn 45(1), 104–118 (2012)

Z Wang, Z Miao, Scale-robust feature extraction for face recognition, in 17th European Signal Processing Conference (EUSIPCO), pp. 1082–1086 (2009)

BJ Boom, GM Beumer, LJ Spreeuwers, RNJ Veldhuis, The effect of image resolution on the performance of a face recognition system, in Proc. IEEE Int. Conf. CARV, pp. 1–6 (2006)

A Hadid, M Pietikainen, From still image to video-based face recognition: an experimental analysis, in Proc. IEEE Int. Conf. AFGR, pp. 813–818 (2004)

L Tian, Evaluation of face resolution for expression analysis, in Proc. IEEE Int. Conf. CVPR, p. 82 (2004)

B Gunturk, A Batur, Y Altunbasak, MH Hayes III, R Mersereau, Eigenface-domain super-resolution for face recognition. IEEE Trans Image Process 12(5), 597–606 (2003)

SW Lee, J Park, SW Lee, Low-resolution face recognition based on support vector data description. Pattern Recogn 39(9), 1809–1812 (2006)

PH Hennings-Yeomans, S Baker, B Vijaya Kumar, Simultaneous super-resolution and feature extraction for recognition of low-resolution faces, in IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8 (2008)

WWW Zou, PC Yuen, Very low-resolution face recognition problem. IEEE Trans on Image Processing 21(1), 327–340 (2011)

H Huang, H He, Super-resolution method for face recognition using nonlinear mappings on coherent features. IEEE Trans on Neural Networks 22(1), 121–130 (2011)

S Shan, W Gao, B Cao, D Zhao, Illumination normalization for robust face recognition against varying lighting conditions, in Proc. IEEE Workshop on AMFG, pp. 157–164 (2003)

W Chen, JE Meng, S Wu, Illumination compensation and normalization for robust face recognition using discrete cosine transform in logarithm domain. IEEE Trans on Systems, Man, and Cybernetics, Part B: Cybernetics 36(2) (2006)

TP Zhang, YY Tang, B Fang, ZW Shang, XY Liu, Face recognition under varying illumination using gradientfaces. IEEE Trans Image Process 18(11), 2599–2606 (2009)

X Tan, B Triggs, Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 19(6), 1635–1650 (2010)

B Wang, W Li, W Yang, Q Liao, Illumination normalization based on Weber’s law with application to face recognition. IEEE Signal Processing Letters 18, 462–465 (2011)

J Ye, Generalized low rank approximations of matrices. Mach Learn 61(1–3), 167–191 (2005)

KL Clarkson, DP Woodruff, Low rank approximation and regression in input sparsity time, in Proceedings of the Forty-Fifth Annual ACM Symposium on Theory of Computing, pp. 81–90 (2013)

D Woodruff, Low rank approximation lower bounds in row-update streams, in Advances in Neural Information Processing Systems, pp. 1781–1789 (2014)

DC Hoaglin, RE Welsch, The hat matrix in regression and ANOVA. Am Stat 32(1), 17–22 (1978)

KR Muller, S Mika, G Ratsch, K Tsuda, B Scholkopf, An introduction to kernel-based learning algorithms. IEEE Trans on Neural Networks 12(2), 181–201 (2001)

AS Georghiades, PN Belhumeur, DW Jacobs, From few to many: illumination cone models for face recognition under variable lighting and pose. IEEE Trans on Pattern Analysis and Machine Intelligence 23(6), 630–660 (2001)

A Martinez, R Benavente, The AR face database. CVC Technical Report 24 (1998)

PJ Phillips, H Moon, SA Rizvi, PJ Rauss, The FERET evaluation methodology for face-recognition algorithms. IEEE Trans on Pattern Analysis and Machine Intelligence 22(10), 1090–1104 (2000)

V Perlibakas, Distance measures for PCA-based face recognition. Pattern Recogn Lett 25(6), 711–724 (2004)

SM Huang, JF Yang, Improved principal component regression for face recognition under illumination variations. IEEE Signal Processing Letters 19(4), 179–182 (2012)

SM Huang, JF Yang, Linear discriminant regression classification for face recognition. IEEE Signal Processing Letters 20(1), 91–94 (2013)

SM Huang, JF Yang, Unitary regression classification with total minimum projection error for face recognition. IEEE Signal Processing Letters 20(5), 443–446 (2013)

T Ahonen, A Hadid, M Pietikäinen, Face recognition with local binary patterns, in Computer vision-eccv (Springer, Berlin Heidelberg, 2004), pp. 469–481

J Qian, J Yang, X Yong, General regression and representation model for classification. PLoS One 9(12) (2014)

Acknowledgements

This work was supported in part by the National Science Council of Taiwan under Grant NSC 103-2221-E-006-109-MY3.


Additional information

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chou, YT., Huang, SM. & Yang, JF. Class-specific kernel linear regression classification for face recognition under low-resolution and illumination variation conditions. EURASIP J. Adv. Signal Process. 2016, 28 (2016). https://doi.org/10.1186/s13634-016-0328-0


DOI: https://doi.org/10.1186/s13634-016-0328-0