Abstract

The applicability domain of machine learning models trained on structural fingerprints for the prediction of biological endpoints is often limited by the lack of diversity of chemical space of the training data. In this work, we developed similarity-based merger models which combined the outputs of individual models trained on cell morphology (based on Cell Painting) and chemical structure (based on chemical fingerprints) and the structural and morphological similarities of the compounds in the test dataset to compounds in the training dataset. We applied these similarity-based merger models using logistic regression models on the predictions and similarities as features and predicted assay hit calls of 177 assays from ChEMBL, PubChem and the Broad Institute (where the required Cell Painting annotations were available). We found that the similarity-based merger models outperformed other models with an additional 20% assays (79 out of 177 assays) with an AUC > 0.70 compared with 65 out of 177 assays using structural models and 50 out of 177 assays using Cell Painting models. Our results demonstrated that similarity-based merger models combining structure and cell morphology models can more accurately predict a wide range of biological assay outcomes and further expanded the applicability domain by better extrapolating to new structural and morphology spaces.

Graphical Abstract

Similar content being viewed by others

Introduction

The prediction of bioactivity, mechanism of action (MOA) [1], safety and toxicity [2] of compounds using only chemical structure is challenging given that such models are limited by the diversity in the chemical space of the training data [3]. The chemical space of this data on which the model is trained is used to define the applicability domain of the model [4]. Among the various ways to calculate a model’s applicability domain, Tanimoto similarity for chemical structure is commonly used as a benchmark similarity measure for compounds. Tanimoto distance-based Boolean applicability has been previously used to improve the performance of classification models [5]. Expanding the applicability domain of structural models will improve the reliability of a model to predict endpoints for new compounds. One way to achieve this would be to incorporate hypothesis-free high-throughput data, such as cell morphology [6], bioactivity data [7] or predicted bioactivities [8, 9] in addition to structural models [10]. This then has the potential to improve predictions for compounds structurally distant from the training data. This is because compounds having similar biological activity may not always have a similar structure; however, they may show similarities in the biological response space [11]. Recently, using Chemical Checker signatures derived from processed, harmonized and integrated bioactivity data, researchers demonstrated that similarity extends well beyond chemical properties into biological activity throughout the drug discovery pipeline (from in vitro experiments to clinical trials) [12]. Hence the use of biological data could significantly help predictive models that have often been trained solely on chemical structure [10].

In recent years, relatively standardized hypothesis-free cell morphology data can now be obtained from the Cell Painting assay [13]. Cell Painting is a cell-based assay that, after a given chemical or genetic perturbation, uses six fluorescent dyes to capture a snapshot of the cellular morphological changes induced by the aforementioned perturbation. The six fluorescent dyes allow for the visualization of eight cellular organelles, which are imaged in five-channel microscopic images. The microscopic images are typically further processed using image analysis software, such as Cell Profiler [14], which results in a set of morphological numerical features aggregated to the treatment level. These numerical features representing morphological properties such as shape, size, area, intensity, granularity, and correlation, among many others, are considered versatile biological descriptors of a system [6]. Previous studies have shown Cell Painting data to be predictive of a wide range of bioactivity and drug safety-related endpoints such as the mechanism of action [15], cytotoxicity [16], microtubule-binding activity [17], and mitochondrial toxicity [18]. Recently, it has also been used to identify phenotypic signatures of PROteolysis TArgeting Chimeras (PROTACs) [19] as well as to determine the impact of lung cancer variants [20]. Thus, Cell Painting data can be expected to contain a signal about the biological activity of the compound perturbation, [6] and in this work, we explored how best to combine Cell Painting and chemical structural models for the prediction of a wide range of biological assay outcomes.

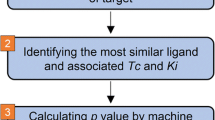

From the modeling perspective, several ensemble modeling techniques have been proposed to combine predictions from individual models [21]. One way to achieve this is an ensembling method shown in Fig. 1a, referred to as a soft-voting ensemble in this work. This method computes the mean of predicted probabilities from individual models and thus provides equal weight to individual model predictions. However, soft-voting ensemble models when combining two individual models give equal importance to each model [21]. This implies that if a model predicts a higher probability for a compound to be active and another model predicts the same compound to be inactive but with a lower probability, the first model prediction is considered final without considering the individual model’s reliability. As shown in Fig. 1b, another way to combine predictions from different models is via model stacking where the predictions of the individual models are used as features to build a second-level model (referred to as a hierarchical model in this work). Hierarchical models have previously been used by integrating classification and regression tasks in predicting acute oral systematic toxicity in rats [22]. The applicability range of predictions can be estimated by (i) the Random Forest predicted class estimates [23] (referred to as predicted probabilities in this study) and (ii) using the similarity of the test compound to training compounds (which in turn approximates the reliability of the prediction) [24]. Hence the hypothesis of the current work is that using the similarity of the test compound to training compounds in individual feature spaces and the predicted probabilities of the individual models built on those feature spaces, we can improve overall model performance.

Schematic Representation of workflow in this study to build (a) soft-voting ensemble models that compute the mean of predicted probabilities from individual models, (b) hierarchical models where the predictions of the individual models are used as features to build a second-level model, and (c) the similarity-based merger model. The similarity-based merger model combined predicted probabilities from individual models and the morphology and structural similarity of compounds to active compounds in training data

The various ways of fusing structural models with models trained on cell morphology were recently exploited by Moshkov et al. [27] who used chemical structures and cell morphology data (from the Cell Painting assay) to predict the compound activity of 270 anonymised bioactivity assays from academic screenings in the Broad Institute. They used a late data fusion (by using a majority rule on the prediction scores similar to soft-voting ensembles) to merge predictions for individual models. The late data fusion models were able to predict 31 out of 270 assays with AUC > 0.9, compared with 16 out of 270 assays for models using only structural features. This showed that fusing models built on two different feature spaces that provide complementary information was able to improve the prediction of bioactivity endpoints. Previous work has also shown that combinations of descriptors can significantly improve prediction for MOA classification [15, 25, 26] (using gene expression and cell morphology data), cytotoxicity [16], mitochondria toxicity [18] and anonymised assay activity [27] (using chemical, gene expression, cell morphology and predicted bioactivity data), prediction of sigma 1 (σ1) receptor antagonist [28] (using cell morphology data and thermal proteome profiling), and even organism-level toxicity [29] (using chemical, protein target and cytotoxicity qHTS data). Thus, the combination of models built from complementary feature spaces can expand a model’s applicability domain by allowing predictions in new structural space [30].

In this work, we explored merging predictions of assay hit calls from chemical structural models with predictions from another model using Cell Painting data for 88 assays from public datasets from PubChem and ChEMBL (henceforth referred to as public dataset, assay descriptions released as Additional file 1) and 89 anonymised assays from the Broad Institute [27] (henceforth referred to as Broad Institute dataset, assay descriptions released as Additional file 2). Cell Painting data, in general, may be assumed to be only highly predictive of the cell-based assay. However, in this study, we did not specifically select assays where this relation was obvious, as that would make our comparisons significantly favour the Cell Painting assay. In this work, we simply compare the two feature spaces, and for this, we use a wide range of assays (as mentioned above) while also later interpreting which feature spaces work better for which particular assays. That being noted, the Cell Painting assay is being constantly investigated for signals in not just in vitro assays but also in vivo effects; recent studies have established a signal for lung cancer [20] and drug polypharmacology [31].

From the modelling perspective, as shown in Fig. 1c, we merged predictions using a logistic regression model that not only takes the predicted probabilities from individual models but also the test compound’s similarity to the active compounds in the training data in different feature spaces (as shown in Additional file 5: Fig S1). That is, the models are also provided with the knowledge of how morphologically/structurally similar the test compound is to other active compounds in the training set. Here we emphasise using similarity-based merger models to improve the applicability domain of individual models (predicting compounds that are distant to training data in respective feature spaces) and the ability to predict a wider range of assays with the combined knowledge from the chemical structure and biological descriptors from Cell Painting assay.

Results and discussions

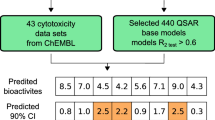

The 177 assays used in this study are a combination of the public dataset and anonymised assays from a Broad Institute dataset where required Cell Painting annotations were available (see Methods section for details). The public dataset comprising 88 assays (with at least 100 compounds) was collected from Hofmarcher et al. [40] and Vollmers et al. [42] (see Additional file 1 for assay descriptions) for which Cell Painting annotations were available from the Cell Painting assay [48]. The Broad Institute dataset comprises 89 assays (as shown in Additional file 2 for assay descriptions). We trained individual Cell Painting and structural models for all 177 assays. We used two baseline models for comparison, a soft-voting ensemble and a hierarchical model. Finally, we compared the results from the individual models and baseline ensemble models to the similarity-based merger models.

Similarity-based merger models outperform other baseline models

As shown in Fig. 2, we found that similarity-based merger models performed with significantly improved AUC-ROC (mean AUC 0.66 using similarity-based merger models,) compared with Cell Painting models (mean AUC 0.62 using, p-value from paired t-test of 5.6 × 10–4) and structural models (mean AUC 0.64, p-value from paired t-test of 7.3 × 10–3) for 171 out of the 177 assays (all models for the remaining 6 assays have AUC < 0.50, hence any improvement is insignificant as the models’ performance remains worse than random). Additional file 5: Fig S2 shows that similarity-based merger models significantly improved Balanced Accuracy and F1 scores compared with individual models. Overall, similarity-based merger models outperform other models in predicting bioactivity endpoints.

Distribution of AUC of all models, Cell Painting, Morgan Fingerprints, baseline models of a soft-voting ensemble, hierarchical model, and the similarity-based merger model, over 171 assays (out of 177 assays). An assay was considered for a paired significance test only if the balanced accuracy was > 0.50 and the F1 score was > 0.0 for at least one of the models

As shown in Fig. 3, 79 out of 177 assays achieved AUC > 0.70 with the similarity-based merger model, followed by hierarchical models for 55 out of 177 assays. Structural models achieved AUC > 0.70 in 65 out of 177 assays while for the Cell Painting models, this was the case in 50 out of 177 assays. Further 25 assays out of 177 were predicted with AUC > 0.70 with all methods while only 12 out of 177 assays did not achieve AUC > 0.70 with similarity-based merger models but did with the other models. When considering balanced accuracy, 51 out of 177 assays achieved a balanced accuracy > 0.70 with similarity-based merger models compared with 44 out of 177 assays for soft-voting ensemble models, as shown in Additional file 5: Fig S3.

(a) Number of assays predicted with an AUC above a given threshold. (b) Distribution of assays with AUC > 0.70 common and unique to all models, Cell Painting, Morgan Fingerprint, baseline models of a soft-voting ensemble, hierarchical model, and the similarity-based merger model, over all of the 177 assays used in this study

Comparing performance for the Cell Painting and structural models by AUC individually (Additional file 5: Fig S4) we observed that structural models and Cell Painting models were complementary in their predictive performance; while 96 out of 177 assays achieve a higher AUC with structural information alone, 81 out of 177 assays achieve a higher AUC using morphology alone as shown in Additional file 5: Fig S4a. Hierarchical models outperform soft-voting ensembles for 106 out of 177 assays as shown in Additional file 5: Fig S4b. Finally, the similarity-based merger model achieved a higher AUC score for 124 out of 177 assays compared with 52 out of 177 with hierarchical models and 132 out of 177 assays compared with 45 out of 177 with soft-voting ensembles as shown in Additional file 5: Fig S3c, d. This shows that the similarity-based merger model was able to leverage information from both Cell Painting and structural models to achieve better predictions in assays where no individual models were found to be predictive thus indicating a synergistic effect.

We next looked at the performance at the individual assay level (as shown in Additional file 3) as indicated by the AUC scores. We looked at 162 out of 177 assays where either the similarity-based merger model or the soft-voting ensemble performed better than a random classifier (\(\rm{AUC=0.50}\)) We observed that for 127 out of 177 assays (individual changes in a performance recorded in Additional file 5: Fig S5), the similarity-based merger models improved performance compared with the soft-voting ensemble (with the largest improvement recorded at 65.1%) and a decrease in performance was recorded in 35 out of 177 assays (largest decrease recorded at − 58.0% in performance). Further comparisons of AUC performance in Additional file 5: Fig S6 show that similarity-based merger models improved AUC compared with both structural models and Cell Painting models. This improvement in AUC was independent of the total number of compounds (or the ratio of actives to inactive compounds) in the assays as shown in Additional file 5: Fig S7. Thus, we conclude that the similarity-based merger model outperformed individual models by combining the rich information contained in cell morphology and structure-based models more efficiently than baseline models.

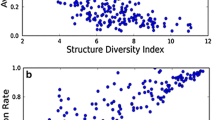

Similarity-based merger models expand the applicability domain compared with individual models

We next determined how individual and similarity-based merger model predictions differ with compounds that were structurally or morphologically similar/dissimilar to active compounds in the training set. We looked at predictions for each compound from the Cell Painting and structural models over the 177 assays and grouped them based on their morphological and structural similarity to active compounds in the training set respectively. We observed, as shown in Additional file 5: Fig S8, that similarity-based merger models correctly classified a higher proportion of test compounds which were less similar morphologically to active compounds in the training data. Further, as the structural similarity of test compounds with respect to active compounds in the training set increased, the structural models correctly classified a higher proportion of compounds while similarity-based merger models correctly classified test compounds with both low and high structural similarity. For example, out of 360 compounds with a low structural similarity between 0.20 and 0.30, models using chemical structure correctly classified 56.2% of compounds while similarity-based merger models correctly classified a much greater 63.6% of compounds. Moreover, similarity-based merger models were as effective as the models using chemical structure in classifying compounds with a higher structural similarity to training data (that is, there was no apparent worsening of performance). Out of 1525 such compounds in this study, with a higher structural similarity between 0.90 and 1.00, models using chemical structure correctly classified 75.5% of compounds while the similarity-based merger models that correctly classified 75.2% of compounds. Overall, our findings show that similarity-based merger models have a wider applicability domain, as they are able to correctly predict a larger proportion of compounds across a broader range of structural and morphological similarities to the training set.

For clarity of the reader, this is further illustrated in Additional file 5: Fig S9 as in the case of a particular assay, namely 240_714 from the Broad Institute, a fluorescence-based biochemical assay. Here, the structural model correctly predicted toxic compound activity when they were structurally similar to the training set. The Cell Painting model performed better over a wide range of structural similarities but was often limited when morphological similarity was low. The similarity-based merger models learned and adapted across individual models from local regions in this structural versus morphological similarity space in a manner best suited to compounds in that region to correctly classify a wider range of active compounds with lowered structural and morphological similarities to the training set.

Comparison of performance at gene ontology enrichment level

We next analysed the assays (and associated biological processes) where the Cell Painting model, the structural model, and the similarity-based merger model were most predictive and therefore if there was complementary information present in both feature spaces. Results presented here are from the PubChem dataset comprising 88 assays as the Broad Institute dataset is not annotated with complete biological metadata, which renders some of the more detailed analysis downstream not viable.

Figure 4a shows a protein–protein network (annotated by genes) from the STRING database labelled by the model performance where the respective individual model was better predictive (or otherwise equally predictive, which includes cases where different models are better predictive over multiple assays related to the same protein target). We found meaningful models (AUC > 0.50) were achieved for 27 out of 34 gene annotations when using the Cell Painting and for 25 out of 34 gene annotations using the structural model. Of these, the Cell Painting models were better predictive for 25 out of 32 gene annotations (mean AUC = 0.65) compared with the structural models which were better predictive for 23 out of 32 gene annotations (mean AUC = 0.56). We next compared the hierarchical model to the similarity-based merger model for 35 gene annotations where either model achieved AUC > 0.50. The hierarchical model performed with higher AUC (mean AUC = 0.57) for only 4 out of 34 gene annotations compared with the similarity-based merger model which was better predictive for 23 out of 34 gene annotations (mean AUC = 0.60). Thus, we observed that similarity-based merger models performed better over a range of assays (over 23 out of 34 gene annotations) capturing a wide range of biological pathways.

(a) STRING gene–gene interaction networks for 34 Genes annotations associated with 37 assays in the public dataset labelled by the model which was better predictive compared with the other models and a random classifier with an AUC > 0.50 (b) Molecular and functional pathway terms related to the 37 assays using the Cytoscape [45] v3.9.1 plugin ClueGO [46] labelled by percentage of gene annotations where an AUC > 0.70 was achieved by the Cell Painting, structural and similarity-based merger models

Cell Painting models performed better than structural models for assays associated with 6 gene annotations: ATAD5, FEN1, GMNN, POLI and STK33 (with an average AUC = 0.64 for Cell Painting models compared with AUC = 0.48 for structural models). These gene annotations were associated with molecular functions of ‘GO:0033260 Nuclear DNA replication’ and ‘GO:0006260 DNA replication’ which are processes resulting in morphological changes, which were captured by Cell Painting. Further, none of ATAD5, FEN1, GMNN, POLI, and STK33 was among the most abundant proteins present in U2OS cells [32]. Among gene annotations associated with the assays better predicted by structural models are TSHR, TAAR1, HCRTR1 and CHRM1 (with an average AUC = 0.70 for structural models compared with an AUC = 0.63 for Cell Painting models). These gene annotations are associated with the KEGG pathway of ‘neuroactive ligand-receptor interaction’ and the Reactome pathway of ‘amine ligand-binding receptors’ which were captured better by chemical structure. Hence, we see that Cell Painting models perform better on assays capturing morphological changes in cells or cellular compartments such as the nucleus, while structural models work better for assays associated with ligand-receptor activity. In addition, the KEGG term ‘amine ligand-binding receptors’ is defined on the chemical ligand level explicitly, making the classification of compounds falling into this category from the structural side easier. The similarity-based merger models hence combined the power of both spaces and were predictive for assays affecting morphological changes (average AUC = 0.58 for the similarity-based merger model) as well as related to the ligand-receptor binding activity (average AUC = 0.78 for similarity-based merger model).

This is further illustrated in Fig. 4b which shows enriched molecular and functional pathway terms from ClueGO [46] for the 34 gene annotations available. Both Cell Painting models and structure-based models were limited to predicting with AUC > 0.70 only 33% of gene annotations associated with only two pathways, namely, transcription coregulator binding and positive regulation of blood vessel endothelial cell migration pathways. On the other hand, similarity-based merger models predicted 25–67% gene annotations associated with multiple pathways with an AUC > 0.70. These pathways included transcription coregulator binding, positive regulation of blood vessel endothelial cell migration pathways, positive regulation of smooth muscle cell proliferation and G protein-coupled receptor signalling pathways among others. Hence this underlines the utility of similarity-merger models across a range of biological endpoints.

Comparison of performance by readout and assay type

Results presented here are from the Broad Institute dataset comprising 89 assays (as shown in Additional file 3) which were released with only information about assay type and readout type (for details see Additional file 2 and Additional file 5: Figure S10); we analysed the Cell Painting, structural and similarity-based merger model as a function of those.

As shown in Fig. 5, Cell Painting models perform significantly better with a relative 8.8% increase in AUC with assays measuring luminescence (mean AUC = 0.72) compared with assays measuring fluorescence (mean AUC = 0.66) while structural and similarity-based merger model show no significant differences in performances. The better predictions in the case of luminescence-based assays, which are readouts specifically designed to answer a biological question, and can be related to the use of a reporter cell line and a reagent that based on the ATP content of the cell, is converted to a luciferase substrate which leads to a cleaner datapoint [33]. On the other hand, Cell Painting is an unbiased high-content imaging assay that takes into consideration the inherent heterogeneity in cell cultures where we visualise cells (often even measuring at a single cell level), contrary to a luminescence assay where one measures the average signal of a cell population. Further Cell Painting models performed significantly better with a relative 18.1% increase in AUC for cell-based assays (mean AUC = 0.72) compared with biochemical assays (mean AUC = 0.61). This might be due to also the Cell Painting assay being a cellular assay, hence also implicitly including factors such as membrane permeability in measurements. Further, most similarity-based merger models outperform baseline models over assay and readout types as shown in Additional file 5: Figs S11, S12. Overall, Cell Painting models can hence be considered to provide complementary information to chemical structure regarding cell-based assays, which was particularly beneficial for the significant improvement in the performance of similarity-based merger models.

AUC performance of models using Cell Painting, structural models, and similarity-based merger model for 89 assays in the Broad Institute dataset based on readout type (fluorescence and luminescence) or the assay type (cell-based and biochemical). Further details are shown in Additional file 5: Figs S10, S11

Limitations of this work and future studies

One limitation of the study design is having to balance unequal data classes by under-sampling. Here, the data was therefore initially under-sampled to a 1:3 ratio of majority to minority class to build a similarity-based merger model, which leads to some loss of experimental data. Further, after splitting the dataset into training and test datasets, the training data needs to contain enough samples spread across the structural versus morphological similarity map for the models to work well. In this study, we did not use a scaffold-based split because it would overly disadvantage structural models and make prediction easier for models using Cell Painting data, which is not the aim of this work. The use of Cell Painting data to increase the applicability domain when the test data is distant from the training data has previously been explored [16, 18]. In this study, however, we used the random splitting approach which aims to give each model (using chemical structure or morphological features) an equal chance to contribute to the final prediction, rather than favouring one type of data over the others. In this way, we could reasonably test the similarity-based merger models. Finally, the current study design is also affected by methodological limitations such as feature selection required for Random Forest methods which affect the interpretation of features and biological endpoints [34, 35]. In the current study design, the explicit definition of similarity of a compound in chemical and morphological space, which although used here for better interpretability, could have been possible via different ways of learning the data directly, for example using Bayesian inference [36] or Graph Convolutional Neural Networks (GCNNs). While GCNNs have shown promise in the field of drug discovery, one of the main challenges in the context of our work is the limited size of the training datasets and the difficulty in interpreting similarity based on learned representations [37]. Additionally, GCNNs can be computationally intensive and may require significant resources to train and optimize multiple times in repeated nested cross-validation setting for 170 + tasks as needed in this study. While Random Forests are not the ultimate choice for this task, they remain widely used as a baseline in the field of chemoinformatics for their robustness.

From the side of feature spaces, Cell Painting data is derived from U2OS cell-based assays which are usually different from the cell lines used in measuring the activity endpoint. However previous work has shown that Cell Painting data is similar across different cell lines and the versatile information present was universal, that is, the genetic background of the reporter cell line does not affect the AUC values for MOA prediction [38]. Thus Cell Painting data can be used to model different assays with different cell lines. The utilization of Cell Painting data from the U2OS cell line may present significant limitations for assessing certain protein targets, especially those that are not sufficiently expressed in U2OS [32]. Although this issue did not arise in our study due to our focus on a limited subset of 34 relevant genes and proteins for drug discovery, it is possible that the use of the U2OS cell line may still impose some constraints for other protein targets. We believe that further exploration and validation of our approach using different cell lines and bioassays would be an interesting and such studies will also benefit from larger datasets, such as the JUMP-CP consortium [39].

Conclusions

Predictive models that use chemical structures as features are often limited in their applicability domain to compounds which are structurally similar to the training data. To the best of our knowledge, this is the first paper which uses both similarity and predictions from chemical structural and cell morphology feature spaces to predict assay activity. Our results should have clear implications for similarity-based merger models (that are shown to be comparatively better than baseline soft-voting ensembles and hierarchical models) and can be used to predict bioactivity over a wide range of small compounds. In this work, we developed similarity-based merger models to combine two models built on complementary feature spaces of Cell Painting and chemical structure and predicted assay hit calls from 177 assays (88 assays from the public dataset and 89 assays from a dataset released by the Broad Institute) for which Cell Painting data were available.

We found that Cell Painting and chemical structure contain complementary information and can predict assays associated with different biological pathways, assay types, and readout types. Cell Painting models achieved higher AUC better for cell-based assays and assays related to biological pathways such as DNA replication. Structural models achieved a higher AUC for biochemical and ligand-receptor interaction assays. The similarity-based merger models, combining information from the two feature spaces, achieved a higher AUC for cell-based (mean AUC = 0.77) and biochemical assays (mean AUC = 0.70) as well as assays related to both biological pathways (mean AUC = 0.58) and ligand-receptor based pathways (mean AUC = 0.74). Further, the similarity-based merger models outperformed all other models with an additional 20% assays with AUC > 0.70 (79 out of 177 assays compared with 65 out of 177 assays using structural models). We also showed that the similarity-based merger models correctly predicted a larger proportion of compounds which are comparatively less structurally and morphologically similar to the training data compared with the individual models, thus being able to improve the applicability domain of the models.

In conclusion, the similarity-based merger models greatly improved the prediction of assay outcomes by combining high predictivity of fingerprints in areas of structural space close to the training set with better generalizability of cell morphology descriptors at greater distances to the training set. On the practical side, Cell Painting assay is a single screen-based hypothesis-free assay that is inexpensive compared with dedicated assays. Being able to use such an assay for bioactivity prediction will greatly improve the cost-effectiveness of such assays. Similarity-based merger models used in this study can hence improve the performance of predictive models, particularly in areas of novel structural space thus contributing to overcoming the limitation of chemical space in drug discovery projects.

Methods

Bioactivity datasets

We retrieved drug bioactivity data as binary assay hit calls for 202 assays and 10,570 compounds from Hofmarcher et al. [40] who searched ChEMBL [41] for assays for which cell morphology annotations from the Cell Painting assay were available as shown in Additional file 5: Fig S13. We further added binary assay hit calls from another 30 assays not included in the source above from Vollmers et al. [42] who searched PubChem [43] assays for overlap with Cell Painting annotations. Additionally, we used 270 anonymised assays (with binary endpoints) from the Broad Institute [27] as shown in Additional file 5: Fig S13b. This dataset, although not annotated in with biological metadata, comprises assay screenings performed over 10 years at the Broad Institute and is representative of their academic screenings.

Gene ontology enrichment of bioactivity assays

From the public dataset of 88 assays used in this study where detailed assay data was available, 37 out of 88 assays where experiments used human-derived cell lines were annotated to 34 protein targets. Next, we determined using the STRING database [44], we annotated all 34 protein targets with the associated gene set and further obtained a set of Gene Ontology terms associated with the protein target. We used Cytoscape [45] v3.9.1 plugin ClueGO [46] to condense the protein target set by grouping them into functional groups to obtain the associated significance (using the baseline ClueGO p-value ≤ 0.05) molecular and functional pathway terms. In this manner, we associated individual assays with molecular and functional pathways for further evaluation of model performances.

Cell painting data

The Cell Painting assay used in this study, from the Broad Institute, contains a set of circa 1700 cellular morphological profiles for more than 30,000 small molecule perturbations [47, 48]. The morphological profiles in this dataset are composed of a wide range of feature measurements (share, area, size, correlation, texture etc. as shown in a demonstrative table in Additional file 5: Fig S13a). While preparing this dataset, the Broad Institute normalized morphological features to compensate for variations across plates and further excluded features having a zero median absolute deviation (MAD) for all reference cells in any plate [13]. Following the procedure from Lapins et al., we subtracted the average feature value of the neutral DMSO control from the particular compound perturbation average feature value on a plate-by-plate basis [15]. For each compound and drug combination, we calculated a median feature value. Where the same compound was replicated for different doses, we used the median feature value across all doses that were within one standard deviation of the mean dose. Finally, after SMILES standardisation and removing duplicate compounds using standard InChI calculated using RDKit [50], we obtained 1783 median Cell Painting features for 30,404 unique compounds (available on Zenodo at https://doi.org/10.5281/zenodo.7589312).

Overlap of datasets

For both the public and Broad dataset, as shown in Additional file 5: Fig S13b (step 1) we used MolVS [49] standardizer based on RDKit [50] to standardize and canonicalize SMILES for each compound which encompassed sanitization, normalisation, greatest fragment chooser, charge neutralisation, and tautomer enumeration described in the MolVS documentation [49]. We further removed duplicate compounds using standardised InChI calculated using RDKit [50].

Next, for the public dataset, we obtained the overlap with the Cell Painting dataset using standardised InChI as shown in Additional file 5: Fig S13b (step 2). From this, we removed assays which contained less than 100 compounds for the minority class with Cell Painting datasets (which were difficult to model due to limited data) as shown in Additional file 5: Fig S13b (step 3). For each assay, as shown in Additional file 5: Fig S13b (step 4) the majority class (most often the negative class) was randomly resampled to maintain a minimum 3:1 ratio with the minority class to ensure that models are fairly balanced. Finally, we obtained the public assay data for a sparse matrix of 88 assays and 9876 unique compounds (see Additional file 1 for assay descriptions). Similarly, for the Broad dataset, out of 270 assays provided, as shown in Additional file 5: Fig S13b, we removed assays that contained less than 100 compounds and randomly resampled to maintain a minimum 3:1 ratio with the minority class, resulting in a Broad Institute dataset as a sparse matrix of 15,272 unique compounds over 89 assays (see Additional file 2 for assay descriptions). Additional file 5: Fig S14 shows the distribution of the total number of compounds for 177 assays used in this study. Both datasets are publicly available on Zenodo at https://doi.org/10.5281/zenodo.7589312).

Structural data

We generated Morgan Fingerprints of radius 2 and 2048 bits using RDKit [50] used as binary chemical fingerprints in this study (as shown in a demonstrative table in Additional file 5: Fig S13a).

Feature selection

Firstly, we performed feature selection to obtain morphological features for each compound. From 1783 Cell Painting features, we removed 55 blocklist features that were known to be noise from Way et al. [51] For the compounds in the public assays, we further removed 1,012 features which had a very low variance below a 0.005 threshold using the scikit-learn [52] variance threshold module. Next, like the feature section implemented in pycytominer [53], we obtained the list of features such that no two features correlate greater than a 0.9 Pearson correlation threshold. For this, we calculated all pairwise correlations between features. To decide which features to drop, we first determined for each feature, the absolute sum of correlation across all features. We then dropped features with the highest absolute correlation (to all features), thus retaining the subset of feature combinations that pass the correlation threshold. In this way, we removed the 488 features with the highest pairwise correlations. Finally, we removed another 44 features if their minimum or maximum absolute value was greater than 15 (using the default threshold in pycytominer [53]). Hence, we obtained 184 Cell Painting features for 9876 unique compounds for the dataset comprising public assays. Analogously, for the Broad Institute dataset, we obtained 191 Cell Painting features for 15,272 unique compounds (both datasets are available on Zenodo at https://doi.org/10.5281/zenodo.7589312).

Next, we performed feature selection for the structural features of Morgan fingerprints. For the public assays, we removed 1891 bits that did not pass a near-zero variance (0.05) threshold since they were considered to have less predictive power. Finally, we obtained Morgan fingerprints of 157 bits for 9876 unique compounds. Analogously, for the Broad Institute dataset, we obtained Morgan fingerprints of 277 bits for 15,272 unique compounds (both datasets are available on Zenodo at https://doi.org/10.5281/zenodo.7589312).

Chemical and morphological similarity

We next defined the structural similarity score of a compound as the mean Tanimoto similarity of the 5 most similar active compounds. The morphological similarity score of a compound was calculated as the median Pearson correlation of the 5 most positively correlated active compounds.

Model training

For each assay, the data was split into training data (80%) and held out test data (20%) using a stratified splitting based on the assay hit call. First, on the training data, we performed fivefold nested cross-validation keeping aside one of these folds as a test-fold, on the rest of the 4 folds. We trained separate models, as shown in Fig. 1c step (1) and step (2), using Morgan fingerprints (157 bits for the public dataset; 277 bits for the Broad Institute dataset) and Cell Painting data (184 features for the public dataset, 191 features for the Broad Institute dataset) respectively for each assay. In this inner fold of the nested-cross validation, we trained separately, Random Forest models on the rest of the 4 folds with Cell Painting and Morgan fingerprints. These models were hyperparameter optimised (including class weight balancing with parameter spaces as shown in Additional file 4) using cross-validation with shuffled fivefold stratified splitting. For hyperparameter optimisation, we used a randomized search on hyperparameters as implemented in scikit-learn 1.0.1 [52]. This optimisation method iteratively increases resources to select the best candidates, using the most resources on the candidates that are better at prediction [54]. The hyperparameter optimised model was used to predict the test fold. To account for threshold balancing of Random Forest predicted probabilities (which is common in an imbalanced prediction problem), we calculated on the 4 folds, the Youden’s J statistic [55] (J = True Positive Rate—False Positive Rate) to detect an optimal threshold. The threshold for the highest J statistic value was used such that the model would no longer be biased towards one class and give equal weights to sensitivity and specificity without favouring one of them. This optimal threshold was then used for the test-fold predictions, and this was repeated 5 times in total for both models using Morgan fingerprints and Cell Painting features until predictions were obtained for the entire training data in the nested cross-validation manner. As the optimal thresholds for each fold were different, the predicted probability values were scaled using a min–max scaling such that this optimal threshold was adjusted back to 0.50 on the new scale. Further for each test-fold in the cross-validation, as shown in Fig. 1c step (3) and step (4), we also calculated the chemical and morphological similarity (as described above in the “Chemical and Morphological Similarity” section) for each compound in this test-fold with respect to the active compounds in the remaining of the 4 folds. This was also repeated 5 times in total until chemical and morphological similarity scores were obtained for the entire training data.

Finally, on the entire training data, two Random Forest models were trained with Cell Painting and Morgan fingerprints with hyperparameter-optimised (in the same way as above using fivefold cross-validation). This was used to predict the held-out data, as shown in Fig. 1c step (5) (with threshold balancing performed from cross-validated predicted probabilities of the training data). We calculated the chemical and morphological similarity of each compound in the held-out data compared with all active compounds in the training data and these were recorded as the chemical and morphological similarity scores respectively of the particular compound in the held-out dataset as shown in Fig. 1c step (6). The predicted probability values were again adjusted using a min–max scaling such that this optimal threshold was 0.50 on the new scale.

Similarity-based merger model

The similarity-based merger models presented here combined individual scaled predicted probabilities from individual models trained on Cell Painting and Structural data and the morphological and structural similarity of the compounds with respect to active compounds in the training data. In particular, for each assay, we evaluated the similarity-based merger model on the held-out data using information from the training data only to avoid any data or model leakage. We trained a Logistic Regression model (with baseline parameters of L2 penalty, an inverse of regularization strength of 1 and balanced class weights) on the training data which uses the Cell Painting and Morgan fingerprints models’ individual scaled predicted probabilities and the structural and morphological similarity scores (with respect to other folds in the training data itself) as features and the endpoint as the assay hit call of the compound, as shown in Fig. 1c step (7). Finally, this logistic equation was used to predict the assay hit call of the held-out compounds (which we henceforth call the similarity-based merger model prediction) and an associated predicted probability (which we henceforth call similarity-based merger model predicted probability), as shown in Fig. 1c step (8). There is no leak of any held-out data assay hit call information but only its structural similarity and morphological similarity to the active compounds in the training data, which can be easily calculated for any compound with a known structure.

Baseline models

For baseline models, we used two models, namely a soft-voting ensemble [21] and a hierarchical model [22]. The soft-voting ensemble, as shown in Fig. 1a, combines predictions from both the Cell Painting and Morgan fingerprints models using a majority rule on the predicted probabilities. In particular, for each compound, we averaged the re-scaled predicted probabilities of two individual models, thus in effect creating an ensemble with soft-voting. We applied a threshold of 0.50 (since predicted probabilities from individual models were also scaled to the optimal threshold of 0.50 as described above) to obtain the corresponding soft-voting ensemble prediction.

For the hierarchical model, as shown in Fig. 1b, we fit a baseline Random Forest classifier (hyperparameter optimised for estimators [100, 300, 400, 500] and class weight balancing using stratified splits and fivefold cross validations as implemented in scikit-learn [52]) on the scaled predicted probabilities for the entire training data from both individual the Cell Painting and Morgan fingerprints models (obtained from the nested-cross validation). We used this hierarchical model to predict the activity of the held-out test set compounds which gave us the predicted assay hit call (and a corresponding model predicted probability) which we henceforth call the hierarchical model prediction (and a corresponding hierarchical model predicted probability).

Model evaluation

We evaluated all models (both individual models, soft-voting ensemble, hierarchical and similarity-based merger model) based on precision, sensitivity, F1 scores of the minority class, specificity, balanced accuracy, Matthew’s Correlation Coefficient (MCC) and Area Under Curve- Receiver Operating Characteristic (AUC) scores.

Statistics and reproducibility

A detailed description of each analysis’ steps and statistics is contained in the methods section of the paper. Statistical methods were implemented using the pandas Python package [56]. Machine learning models, hyperparameter optimisation and evaluation metrics were implemented using scikit-learn [52], a Python-based package. We have released the datasets used in this study which are publicly available at Zenodo (https://doi.org/10.5281/zenodo.7589312). We released the Python code for the models which are publicly available on GitHub (https://github.com/srijitseal/Merging_Bioactivity_Predictions_CellPainting_Chemical_Structure_Similarity).

Availability of data and materials

We have released the datasets used in this study which are publicly available at Zenodo at https://doi.org/10.5281/zenodo.7589312. We released the Python code for the models which are publicly available on GitHub at https://github.com/srijitseal/Merging_Bioactivity_Predictions_CellPainting_Chemical_Structure_Similarity. The protein–protein network (annotated by genes) from the STRING can be accessed at https://version-11-5.string-db.org/cgi/network?networkId=bpH0WmWa1eZm.

References

Trapotsi M-A, Hosseini-Gerami L, Bender A (2022) Computational analyses of mechanism of action (MoA): data, methods and integration. RSC Chem Biol. https://doi.org/10.1039/D1CB00069A

Sazonovas A, Japertas P, Didziapetris R (2010) Estimation of reliability of predictions and model applicability domain evaluation in the analysis of acute toxicity (LD50). SAR QSAR Environ Res 21:127–148. https://doi.org/10.1080/10629360903568671

Kar S, Roy K, Leszczynski J (2018) Applicability domain: a step toward confident predictions and decidability for QSAR modeling. Methods Mol Biol 1800:141–169. https://doi.org/10.1007/978-1-4939-7899-1_6

Dimitrov S, Dimitrova G, Pavlov T et al (2005) A stepwise approach for defining the applicability domain of SAR and QSAR models. J Chem Inf Model 45:839–849. https://doi.org/10.1021/ci0500381

Bajusz D, Rácz A, Héberger K (2015) Why is tanimoto index an appropriate choice for fingerprint-based similarity calculations? J Cheminform 7:1–13. https://doi.org/10.1186/s13321-015-0069-3

Chandrasekaran SN, Ceulemans H, Boyd JD, Carpenter AE (2021) Image-based profiling for drug discovery: due for a machine-learning upgrade? Nat Rev Drug Discov 20:145–159. https://doi.org/10.1038/s41573-020-00117-w

Kauvar LM, Higgins DL, Villar HO et al (1995) Predicting ligand binding to proteins by affinity fingerprinting. Chem Biol 2:107–118. https://doi.org/10.1016/1074-5521(95)90283-X

Norinder U, Spjuth O, Svensson F (2020) Using predicted bioactivity profiles to improve predictive modeling. J Chem Inf Model 60:2830–2837. https://doi.org/10.1021/acs.jcim.0c00250

Bender A, Jenkins JL, Glick M et al (2006) “Bayes affinity fingerprints” Improve retrieval rates in virtual screening and define orthogonal bioactivity space: when are multitarget drugs a feasible concept? J Chem Inf Model 46:2445–2456. https://doi.org/10.1021/ci600197y

Liu A, Seal S, Yang H, Bender A (2023) Using chemical and biological data to predict drug toxicity. SLAS Discov. https://doi.org/10.1016/J.SLASD.2022.12.003

Petrone PM, Simms B, Nigsch F et al (2012) Rethinking molecular similarity: comparing compounds on the basis of biological activity. ACS Chem Biol 7:1399–1409. https://doi.org/10.1021/cb3001028

Duran-Frigola M, Pauls E, Guitart-Pla O et al (2020) Extending the small-molecule similarity principle to all levels of biology with the chemical checker. Nat Biotechnol 38:1087–1096. https://doi.org/10.1038/s41587-020-0502-7

Bray MA, Singh S, Han H et al (2016) Cell Painting, a high-content image-based assay for morphological profiling using multiplexed fluorescent dyes. Nat Protoc 11:1757–1774. https://doi.org/10.1038/nprot.2016.105

McQuin C, Goodman A, Chernyshev V et al (2018) Cell profiler 30: next-generation image processing for biology. PLoS Biol 16:e2005970. https://doi.org/10.1371/journal.pbio.2005970

Lapins M, Spjuth O (2019) Evaluation of Gene Expression and Phenotypic Profiling Data as Quantitative Descriptors for Predicting Drug Targets and Mechanisms of Action. bioRxiv 580654

Seal S, Yang H, Vollmers L, Bender A (2021) Comparison of cellular morphological descriptors and molecular fingerprints for the prediction of cytotoxicity- and proliferation-related assays. Chem Res Toxicol 34:422–437. https://doi.org/10.1021/acs.chemrestox.0c00303

Akbarzadeh M, Deipenwisch I, Schoelermann B et al (2022) Morphological profiling by means of the cell painting assay enables identification of tubulin-targeting compounds. Cell Chem Biol 29:1053-1064.e3. https://doi.org/10.1016/j.chembiol.2021.12.009

Seal S, Carreras-Puigvert J, Trapotsi MA et al (2022) Integrating cell morphology with gene expression and chemical structure to aid mitochondrial toxicity detection. Commun Biol 5:858. https://doi.org/10.1038/s42003-022-03763-5

Trapotsi MA, Mouchet E, Williams G et al (2022) Cell morphological profiling enables high-throughput screening for PROteolysis TArgeting chimera (PROTAC) phenotypic signature. ACS Chem Biol 17:1733–1744. https://doi.org/10.1021/acschembio.2c00076

Caicedo JC, Arevalo J, Piccioni F et al (2022) Cell painting predicts impact of lung cancer variants. Mol Biol Cell 33:49. https://doi.org/10.1091/mbc.E21-11-0538

Dietterich TG (2000) Ensemble methods in machine learning lect Notes Comput Sci (including subser lect notes artif intell lect notes bioinformatics). Springer, Berlin. https://doi.org/10.1007/3-540-45014-9_1

Li X, Kleinstreuer NC, Fourches D (2020) Hierarchical quantitative structure-activity relationship modeling approach for integrating binary, multiclass, and regression models of acute oral systemic toxicity. Chem Res Toxicol 33:353–366. https://doi.org/10.1021/acs.chemrestox.9b00259

Klingspohn W, Mathea M, Ter Laak A et al (2017) Efficiency of different measures for defining the applicability domain of classification models. J Cheminform 9:1–17. https://doi.org/10.1186/s13321-017-0230-2

Sheridan RP, Feuston BP, Maiorov VN, Kearsley SK (2004) Similarity to molecules in the training set is a good discriminator for prediction accuracy in QSAR. J Chem Inf Comput Sci 44:1912–1928. https://doi.org/10.1021/ci049782w

Way GP, Natoli T, Adeboye A, Litichevskiy L et al (2022) Morphology and gene expression profiling provide complementary information for mapping cell state. Cell Syst 13(11):911-923.e9. https://doi.org/10.1016/j.cels.2022.10.001

Haghighi M, Caicedo JC, Cimini B et al (2022) High-dimensional gene expression and morphology profiles of cells across 28,000 genetic and chemical perturbations. Nat Methods 19(12):1550–1557. https://doi.org/10.1038/s41592-022-01667-0

Moshkov N, Becker T, Yang K et al (2023) Predicting compound activity from phenotypic profiles and chemical structures. Nat Commun 14(1):1–11. https://doi.org/10.1038/s41467-023-37570-1

Wilke J, Kawamura T, Xu H et al (2021) Discovery of a σ1 receptor antagonist by combination of unbiased cell painting and thermal proteome profiling. Cell Chem Biol 28:848-854.e5. https://doi.org/10.1016/j.chembiol.2021.01.009

Allen CHG, Koutsoukas A, Cortés-Ciriano I et al (2016) Improving the prediction of organism-level toxicity through integration of chemical, protein target and cytotoxicity qHTS data. Toxicol Res 5:883–894. https://doi.org/10.1039/c5tx00406c

Liu R, Wallqvist A (2014) Merging applicability domains for in silico assessment of chemical mutagenicity. J Chem Inf Model 54:793–800. https://doi.org/10.1021/ci500016v

Chow YL, Singh S, Carpenter AE, Way GP (2022) Predicting drug polypharmacology from cell morphology readouts using variational autoencoder latent space arithmetic. PLoS Comput Biol 18:e1009888. https://doi.org/10.1371/journal.pcbi.1009888

Niforou K, Anagnostopoulos A, Vougas K et al (2008) The proteome profile of the human osteosarcoma U2OS cell line. Cancer Genom Proteom 5:63–78

Fan F, Wood KV (2007) Bioluminescent assays for high-throughput screening. Assay Drug Dev Technol 5:127–136. https://doi.org/10.1089/adt.2006.053

Medina-Franco JL, Martinez-Mayorga K, Fernández-de Gortari E et al (2021) Rationality over fashion and hype in drug design. F1000Res. https://doi.org/10.1268/f1000research.52676.1

Bender A, Cortes-Ciriano I (2021) Artificial intelligence in drug discovery: what is realistic, what are illusions? part 2: a discussion of chemical and biological data. Drug Discov Today 26:1040–1052. https://doi.org/10.1016/j.drudis.2020.11.037

van de Schoot R, Depaoli S, King R et al (2021) Bayesian statistics and modelling. Nat Rev Methods Prim 1:1–26. https://doi.org/10.1038/s43586-020-00001-2

Korolev V, Mitrofanov A, Korotcov A, Tkachenko V (2020) Graph convolutional neural networks as ‘general-purpose’ property predictors: the universality and limits of applicability. J Chem Inf Model 60:22–28

Cox MJ, Jaensch S, Van de Waeter J et al (2020) Tales of 1008 small molecules: phenomic profiling through live-cell imaging in a panel of reporter cell lines. Sci Rep 10:1–14. https://doi.org/10.1038/s41598-020-69354-8

JUMP-Cell Painting Consortium. https://jump-cellpainting.broadinstitute.org/. Accessed 2 May 2022

Hofmarcher M, Rumetshofer E, Clevert DA et al (2019) Accurate prediction of biological assays with high-throughput microscopy images and convolutional networks. J Chem Inf Model 59:1163–1171. https://doi.org/10.1021/acs.jcim.8b00670

Mendez D, Gaulton A, Bento AP, Chambers J, De Veij M, Felix E et al (2019) ChEMBL: towards direct deposition of bioassay data. Nucleic Acids Res 47(D1):D930–D940

Luis V (2021) Prediction of Cytotoxicity Related PubChem Assays Using High-Content-Imaging Descriptors derived from Cell-Painting [Unpublished master's thesis], TU Darmstadt.

PubChem. https://pubchem.ncbi.nlm.nih.gov/. Accessed 4 Jun 2022

Szklarczyk D, Gable AL, Lyon D et al (2019) STRING v11: protein-protein association networks with increased coverage, supporting functional discovery in genome-wide experimental datasets. Nucleic Acids Res 47:D607–D613. https://doi.org/10.1093/nar/gky1131

Shannon P, Markiel A, Ozier O et al (2003) Cytoscape: a software Environment for integrated models of biomolecular interaction networks. Genome Res 13:2498–2504. https://doi.org/10.1101/gr.1239303

Bindea G, Mlecnik B, Hackl H et al (2009) ClueGO: a cytoscape plug-in to decipher functionally grouped gene ontology and pathway annotation networks. Bioinformatics 25:1091–1093. https://doi.org/10.1093/bioinformatics/btp101

Bray MA, Gustafsdottir SM, Rohban MH et al (2017) A dataset of images and morphological profiles of 30 000 small-molecule treatments using the Cell Painting assay. Gigascience 6:1–5

GigaDB Dataset - DOI https://doi.org/10.5524/100351 - Supporting data for "A dataset of images and morphological profiles of 30,000 small-molecule treatments using the Cell Painting assay. http://gigadb.org/dataset/100351. Accessed 5 Oct 2022

Swain M (2019) MolVS: Molecule Validation and Standardization. In: MolVS. https://molvs.readthedocs.io/en/latest/. Accessed 15 Apr 2021

Landrum G (2006) RDKit: Open-source Cheminformatics. In: http://www.rdkit.org. Accessed 2 Mar 2022

Blocklist Features - Cell Profiler. https://figshare.com/articles/dataset/Blacklist_Features_-_Cell_Profiler/10255811. Accessed 11 Apr 2021

Pedregosa Fabianpedregosa F, Michel V, Grisel Oliviergrisel O et al (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Cytomining/Pycytominer: Cytominer Python Package. https://github.com/cytomining/pycytominer. Accessed 4 Jun 2022

Bergstra J, Bengio Y (2012) Random search for hyper-parameter optimization. J Mach Learn Res 13:281–305

Fluss R, Faraggi D, Reiser B (2005) Estimation of the youden index and its associated cutoff point. Biometrical J 47:458–472. https://doi.org/10.1002/bimj.200410135

API reference — pandas 1.3.1 documentation. https://pandas.pydata.org/pandas-docs/stable/reference/index.html. Accessed 29 Jul 2021

Acknowledgements

The authors are grateful for guidance from Anne Carpenter (Broad Institute of MIT and Harvard) and Juan Caicedo (Broad Institute of MIT and Harvard) which helped improve an earlier version of the manuscript. S Seal would like to acknowledge Steffen Jaensch (Steffen Jaensch) for a discussion which helped correct an important mistake in the earlier version of the manuscript causing an information leak. S Seal would like to thank Chaitanya K. Joshi (University of Cambridge) and Alicia Curth (University of Cambridge) for useful discussions about this work. This work was performed using resources provided by the Cambridge Service for Data-Driven Discovery (CSD3) operated by the University of Cambridge Research Computing Service (www.csd3.cam.ac.uk), provided by Dell EMC and Intel using Tier-2 funding from the Engineering and Physical Sciences Research Council (capital grant EP/P020259/1), and DiRAC funding from the Science and Technology Facilities Council (www.dirac.ac.uk). This work was also performed using the Nest compute server (https://www.ch.cam.ac.uk/computing/nest-compute-server) provided by the Yusuf Hamied Department of Chemistry. The TOC Fig. 1, Additional file 5: Fig. S1, S13 were partly generated from https://bioicons.com/ which uses Servier Medical Art, provided by Servier, licensed under a Creative Commons Attribution 3.0 unported license.

Funding

Open access funding provided by Uppsala University. This project received funding from the Swedish Research Council (Grants 2020-03731 and 2020-01865), and FORMAS (Grant 2018-00924). S.S. acknowledges the Cambridge Commonwealth, European and International Trust, Boak Student Support Fund (Clare Hall), Jawaharlal Nehru Memorial Fund, Allen, Meek and Read Fund, and Trinity Henry Barlow (Trinity College) for providing funding for this study. S.S. acknowledges support with funding from the Cambridge Centre for Data-Driven Discovery and Accelerate Programme for Scientific Discovery under the project title “Theoretical, Scientific, and Philosophical Perspectives on Biological Understanding in the Age of Artificial Intelligence”, made possible by a donation from Schmidt Futures.

Author information

Authors and Affiliations

Contributions

SS designed and performed exploratory data analysis, and implemented, and trained the models. SS, HY and SS contributed to the design of the models. SS, MAT and HY contributed to analysing the results of the models. SS, JCP and OS analysed the biological interpretation of performance. SS wrote the manuscript with extensive discussions with OS and AB who supervised the project. All authors (SS, HY, MAT, SS, JCP, OS and AB) read and approved the final manuscript.

Corresponding authors

Ethics declarations

Ethical approval and consent to participate

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1:

Assay descriptions of the public dataset comprising 88 assays.

Additional file 2:

Assay types and readout types of Broad Institute dataset comprising 89 assays.

Additional file 3:

Performance of Cell Painting, structural models, soft-voting ensembles, hierarchical models, and the similarity-based merger models over all 177 assays used in this study.

Additional file 4:

Hyperparameters considered for optimising Random Forests.

Additional file 5: Figure S1.

Features used in the similarity-based merger models: a logistic regression model that takes the predicted probabilities from individual models and the test compound’s similarity to the active compounds in the training data in both feature spaces, structural and morphological. Figure S2. Distribution (a) Balanced Accuracy for 171 assays (out of 177 assays) and (b) F1 scores for 177 assays for all models, namely, Cell Painting, structural models, baseline models of soft-voting ensembles, hierarchical models, and the similarity-based merger models. An assay was considered for a paired significance test only if the the balanced accuracy>0.50 and F1 score>0.0 for at least one of the models. Figure S3. (a) Number of assays that were predicted with a Balanced Accuracy above a given threshold. (b) Distribution of assays with Balanced Accuracy > 0.70 common and unique to all models, Cell Painting, Morgan Fingerprints, baseline models of soft-voting ensemble, hierarchical model, and the similarity-based merger models, over 177 assays used in this study. Figure S4. Distribution of AUC Scores for 177 assays used in this study for (a) Cell Painting and Structural Models, (b) Soft-Voting Ensemble and Hierarchical Model, (c) Similarity-based merger model and Hierarchical Model, and (d) Similarity-based merger model and Soft-Voting Ensemble. Any assay above or below the diagonal (\(x=y\)) line performs better than the other model. Figure S5. Relative improvement (green) or deterioration (red) in performance on using similarity-based merger models compared to soft-voting ensemble methods over the public dataset comprising 162 assays out of 177 assays where either model performed better than a random classifier (AUC = 0.50). Figure S6. Relative improvement (green) or deterioration (red) in performance on (a) using similarity-based merger models compared to Cell Painting models over the public dataset comprising 163 assays out of 177 assays where either model performed better than a random classifier (AUC = 0.50) , and (b) using similarity-based merger models compared to structural models over the public dataset comprising 159 assays out of 177 assays where either model performed better than a random classifier (AUC = 0.50). Figure S7. Distribution of AUC scores achieved by individual models for all 177 assays used in this study in relation to (a) the total number of compounds, and (b) the ratio of the number of active compounds to the number of inactive compounds. Figure S8. Distribution of True and False Predictions on all compounds in the held-out test for the public dataset over all 177 assays from (a) Cell Painting model compared to the similarity in image space of the respective training set, (b) Structural model compared to the similarity structural space of the respective training set, and the similarity-based merger model compared to (c) the similarity in image space, and (d) the similarity structural space of the respective training set. Figure S9. Kernel density estimate (KDE) plot visualising the distribution of True Positives using a continuous probability density curve. The plot shows true positives in the held-out test of the assay 240_714 from the Broad Institute (a fluorescence based biochemical assay) from the individual Cell Painting model, structural model, soft-voting ensemble, hierarchical model, and the similarity-based merger model compared in the space of the similarity in image space and the similarity structural space to the training set used. Figure S10. Overview of the assay type and readout types of Broad Institute dataset comprising 89 assays. Figure S11. AUC performance of models using Cell Painting, Morgan Fingerprints, baseline models of soft-voting ensemble, hierarchical model, and the similarity-based merger models for 89 assays in the Broad Institute dataset based on readout type. Figure S12. AUC performance of models using Cell Painting, Morgan Fingerprints, baseline models of soft-voting ensemble, hierarchical model, and the similarity-based merger models for 89 assays in the Broad Institute dataset based on assay type. Figure S13. Datasets used in this study: (a) Demonstrative table of data values from bioactivity datasets (binary assay hitcalls), Cell Painting (continuous numerical values) and Morgan fingerprints (binary bit fingerprints) used in this study. (b) Workflow in pre-processing of both bioactivity datasets used in this study, the public dataset comprising 88 assays, and the Broad Institute dataset comprising 89 assays. Figure S14. Distribution of number of compounds in each of the 177 assays after under sampling as used in this study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Seal, S., Yang, H., Trapotsi, MA. et al. Merging bioactivity predictions from cell morphology and chemical fingerprint models using similarity to training data. J Cheminform 15, 56 (2023). https://doi.org/10.1186/s13321-023-00723-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13321-023-00723-x