Abstract

Introduction

There has been a recent explosion of research into the field of artificial intelligence as applied to clinical radiology with the advent of highly accurate computer vision technology. These studies, however, vary significantly in design and quality. While recent guidelines have been established to advise on ethics, data management and the potential directions of future research, systematic reviews of the entire field are lacking. We aim to investigate the use of artificial intelligence as applied to radiology, to identify the clinical questions being asked, which methodological approaches are applied to these questions and trends in use over time.

Methods and analysis

We will follow the Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) guidelines and the Cochrane Collaboration Handbook. We will perform a literature search through MEDLINE (PubMed) and EMBASE, a detailed data extraction of trial characteristics and a narrative synthesis of the data. There will be no language restrictions. We will take a task-centred approach rather than focusing on modality or clinical subspecialty. Sub-group analysis will be performed by segmentation tasks, identification tasks, classification tasks and regression/prediction tasks, with a separate sub-analysis for paediatric patients.

Ethics and dissemination

Ethical approval will not be required for this study, as data will be obtained from publicly available clinical trials. We will disseminate our results in a peer-reviewed publication.

Registration number PROSPERO: CRD42020154790

Key Points

- This study presents a comprehensive methodology for a systematic review of the current state of Radiology Artificial Intelligence.
- Detailed characteristics of studies will be collected and analysed, including nature of task, disease, modality, subspecialty and data processing.
- Subgroup analysis will be performed to highlight differences in design characteristics between task, subspecialty and modality, and to identify trends in algorithm use over time.

Introduction

Background

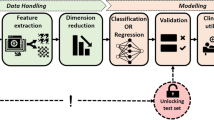

There have been huge advancements in computer vision following the success of Deep Convolutional Neural Networks (CNN) at the 2012 ImageNet challenge [1]. Deep learning is a subset of machine learning, which itself is a subset of artificial intelligence (AI), the broader field of how computers mimic human behaviour. The senior author of that seminal AlexNet paper, Geoffrey Hinton, advised in 2016 that we should stop training radiologists, as it was obvious that within 5 years deep learning (DL) would have surpassed them. While there have been major leaps forward in DL-powered computer vision as it applies to radiology, the progress in performance has not yet materialised as he predicted. Rather, specific “narrow” applications have proven successful, and generalised superhuman performance remains elusive. Problems such as generalisability, stability and implementation, which are crucial in the medical field, have seen the clinical application of AI in healthcare lag behind other industries [2]. While recent guidelines have been established to advise on ethics, data management and the potential directions of future research [3,4,5], systematic reviews of the entire field are lacking. Our systematic review aims to look at the radiology AI literature from a task-specific point of view. Many of the roles of the clinical radiologist can be decomposed into tasks commonly faced by computer engineers in related computer vision fields, such as segmentation, identification, classification and prediction [6].

Objectives

This systematic review will aim to (1) assess the different methods and algorithms used to tackle these tasks, (2) examine potential bias in methodology, (3) consider the quality of data management in the literature and (4) outline trends in all of the above.

Methods and analysis

This systematic review has been registered with PROSPERO (registration number: CRD42020154790). We will report this systematic review according to the Preferred Reporting Items for Systematic Review and Meta-analysis (PRISMA) guidelines and have completed the PRISMA-P checklist for this protocol (Table 1).

Inclusion/exclusion criteria for the selection of studies

Type of study design, participants

Two separate reviews are proposed: a primary review covering all of the literature and a secondary review of the paediatric literature only.

The comprehensive review will include all clinical radiological (not laboratory or phantom-based) deep learning papers that aim to complete a segmentation, identification, classification or prediction task using computer vision techniques. Human, hospital-based studies that use computer vision techniques to aid in the care of patients through radiological diagnosis or intervention will be included. The paediatric review will include all machine learning and deep learning tasks as applied to paediatric clinical radiology.

Inclusion criteria

We will include clinical radiological papers that use DL computer vision techniques to complete a segmentation, identification, classification or prediction task based on radiographic, computed tomography (CT), magnetic resonance (MR), ultrasound (US) or nuclear medicine/molecular or hybrid imaging techniques. Where the comparison group is a combined Human–AI performance, this will be specifically recorded.

Exclusion criteria

Functional MRI (fMRI) papers are not included as the techniques used in the computer analysis of fMRI data are quite separate from the computer vision-based tasks that are the subject of the review. To ensure focus on computer vision-based tasks and adequately assess these techniques, "radiomics" papers or those that focus on texture analysis or the identification of imaging biomarkers will be excluded from the primary review. Connectomics papers, quality assessment and decision support papers are not included. Image processing or registration papers are excluded. Image quality papers are excluded from the primary review. Papers solely for use in radiation therapy are also excluded. Non-human or phantom studies are excluded.

Type of intervention

We will not place a restriction on the intervention type and will include trials that study the clinical application of AI to radiology as outlined above.

Search method for the identification of trials

Electronic search

We will perform electronic searches on MEDLINE (PubMed) and EMBASE from 2015 until 31 December 2019. Zotero will be used as our reference manager, and the Revtools package on R will be used to eliminate duplicate records. The search will be conducted in English. The search terms used are reported in Table 2. The artificial intelligence and radiology terms were combined with the AND operator, with the paediatric terms added using a further AND operator for the paediatric sub-section. Search terms were agreed by consensus between the two co-principal investigators, who have backgrounds in radiology and computer science respectively.
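The search logic described above can be illustrated with a minimal Python sketch. The term lists are hypothetical placeholders (the actual terms are those reported in Table 2), and the title-based `deduplicate` function is only a simplified stand-in for the Revtools step, not a reimplementation of it.

```python
import re

# Hypothetical term groups: placeholders only, not the terms from Table 2.
AI_TERMS = ["artificial intelligence", "deep learning", "machine learning"]
RADIOLOGY_TERMS = ["radiology", "computed tomography", "magnetic resonance imaging"]
PAEDIATRIC_TERMS = ["paediatric", "pediatric", "child"]


def or_group(terms):
    """Join synonyms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups):
    """Combine term groups with the AND operator."""
    return " AND ".join(or_group(g) for g in groups)


def normalise_title(title):
    """Lower-case a title and collapse punctuation and whitespace."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first record seen for each normalised title."""
    seen = set()
    unique = []
    for record in records:
        key = normalise_title(record["title"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Primary review: AI terms AND radiology terms.
primary_query = build_query(AI_TERMS, RADIOLOGY_TERMS)
# Paediatric sub-section: the same query with the paediatric terms ANDed on.
paediatric_query = build_query(AI_TERMS, RADIOLOGY_TERMS, PAEDIATRIC_TERMS)
```

Exact-title matching will miss duplicates whose titles differ between databases, so a dedicated tool remains preferable in practice.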

Selection and analysis of trials

We will review the titles and abstracts of studies to identify clinical radiological artificial intelligence studies for inclusion or exclusion. Studies with insufficient information to determine the use of AI computer vision methods will also be included for full-text review. We will then perform a full-text review to confirm studies that will be included in the final systematic review. This process will be summarised in a PRISMA flowchart. Abstract, title and full-text review will be performed by B.K. and S.B. Disagreements will be resolved by consensus or by a third reviewer (R.K.), if necessary.

Before full data extraction, all reviewers will complete the same 5% subsample and review their answers to ensure that inter-reviewer agreement is > 90%. Data extraction will be undertaken by three radiologists, two of whom are nationally certified and have a research interest in artificial intelligence (S.C. and G.H.). The third is a radiology resident in training with 4 years of experience who is a PhD candidate in radiology artificial intelligence.
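As an illustration of the pilot check, the sketch below computes simple percent agreement for every pair of reviewers and compares the lowest value with the 90% threshold. The protocol does not specify the agreement statistic, so treating "agreement" as pairwise percent agreement is an assumption, and the reviewer keys and answers are hypothetical.

```python
from itertools import combinations


def percent_agreement(answers_a, answers_b):
    """Fraction of questions on which two reviewers gave the same answer."""
    if len(answers_a) != len(answers_b) or not answers_a:
        raise ValueError("answer lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(answers_a, answers_b))
    return matches / len(answers_a)


def minimum_pairwise_agreement(answers_by_reviewer):
    """Lowest percent agreement across all pairs of reviewers."""
    return min(
        percent_agreement(answers_by_reviewer[r1], answers_by_reviewer[r2])
        for r1, r2 in combinations(sorted(answers_by_reviewer), 2)
    )


def pilot_passes(answers_by_reviewer, threshold=0.90):
    """True when every reviewer pair agrees on more than `threshold` of answers."""
    return minimum_pairwise_agreement(answers_by_reviewer) > threshold


# Hypothetical pilot: 20 answers per reviewer on the shared subsample.
pilot = {
    "reviewer_1": ["classification"] * 20,
    "reviewer_2": ["segmentation"] + ["classification"] * 19,
    "reviewer_3": ["classification"] * 20,
}
```

A chance-corrected statistic such as Cohen's kappa could be substituted if the answer categories are heavily imbalanced.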

Three reviewers will extract the following information in parallel and record in a custom database:

-

1.

Country of origin (Paediatric Review only)

-

2.

Radiology subspecialty

-

3.

Retro/prospective

-

4.

Supervised/unsupervised

-

5.

Number of participants

-

6.

Problem to be solved—i.e. segmentation, identification, classification, prediction.

-

7.

Target Condition and body region

-

8.

Reference Standard—Histology, rad report, surgery

-

9.

Method for assessment of standard

-

10.

Type of internal validation

-

11.

External validation

-

12.

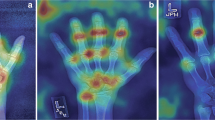

Indicator method for predictor measurement, exclusion of poor-quality imaging Heatmap provided? Other explicability?

-

13.

Algorithm—Architecture Transfer learning applied Ensemble architecture used

-

14.

Data source—Number of images for training/tuning, Source of data, Data range, Open-access data

-

15.

Was manual segmentation used?

Information will be extracted using a closed question format with an “add option” function if required. This is intended to maintain consistency while being flexible enough to account for the heterogeneity in the data. Please see Additional file 1. The full questionnaire will be made open access once the review is complete.
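One way to picture the closed question format with an "add option" function is the sketch below. The class, field names and the added option are hypothetical illustrations and do not describe the actual custom database or the questionnaire in Additional file 1.

```python
from dataclasses import dataclass, field


@dataclass
class ClosedQuestion:
    """A closed question whose option list can grow via "add option"."""

    prompt: str
    options: list = field(default_factory=list)

    def add_option(self, option):
        """Register a new option once; repeated additions are ignored."""
        if option not in self.options:
            self.options.append(option)

    def answer(self, choice):
        """Return the choice if it is a known option, otherwise raise."""
        if choice not in self.options:
            raise ValueError(f"{choice!r} is not an option for {self.prompt!r}")
        return choice


task = ClosedQuestion(
    "Problem to be solved",
    ["segmentation", "identification", "classification", "prediction"],
)
reference_standard = ClosedQuestion(
    "Reference standard",
    ["histology", "radiology report", "surgery"],
)

# A reviewer meets a study whose reference standard is not yet listed
# (hypothetical example), so the option is added before answering.
reference_standard.add_option("clinical follow-up")

record = {
    "task": task.answer("segmentation"),
    "reference_standard": reference_standard.answer("clinical follow-up"),
}
```

Rejecting unlisted answers keeps the extracted data consistent across reviewers, while `add_option` leaves room for the heterogeneity expected in the literature.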

Assessment of the quality of the studies: risk of bias

Due to the study design, there will be a high degree of heterogeneity within the study. This has been acknowledged in the literature to date [7]. We will, however, use basic surrogates of risk of bias including inclusion and exclusion criteria, internal or external validation and performance indication to estimate bias.

Data synthesis

We will not perform a meta-analysis as part of this systematic review. A narrative synthesis of the data will be performed.

Analysis by subgroups

We will report overall outcomes and outcomes by task, i.e. segmentation, identification, classification and prediction tasks. Descriptive statistics will be used to illustrate trends in the data.
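The kind of descriptive summary intended here could look like the following sketch, which counts studies by task and by publication year. The records are invented purely to show the shape of the output and are not results of the review.

```python
from collections import Counter, defaultdict

# Hypothetical extracted records, used only to demonstrate the tabulation.
studies = [
    {"year": 2017, "task": "classification"},
    {"year": 2018, "task": "segmentation"},
    {"year": 2018, "task": "classification"},
    {"year": 2019, "task": "classification"},
    {"year": 2019, "task": "segmentation"},
    {"year": 2019, "task": "prediction"},
]


def counts_by_task(records):
    """Number of studies addressing each task."""
    return Counter(r["task"] for r in records)


def counts_by_year_and_task(records):
    """Per-year task counts, with years in ascending order."""
    table = defaultdict(Counter)
    for r in records:
        table[r["year"]][r["task"]] += 1
    return {year: dict(table[year]) for year in sorted(table)}


def task_share(records):
    """Proportion of all studies that address each task."""
    counts = counts_by_task(records)
    total = sum(counts.values())
    return {task: n / total for task, n in counts.items()}
```

Reading the per-year table row by row gives the trend in task popularity over the search period.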

Study status

This systematic review will start in July 2020. We hope to have our first results in late 2020.

Patient and public involvement

Our research group has engaged with a specific patient group, MS Ireland, to discuss their ideas, concerns and expectations around the clinical application of AI to radiology, and these discussions continue to inform our research decisions.

Ethics and dissemination

Ethical approval was not required for this study. We will publish the results of this systematic review in a peer-reviewed journal.

Discussion

The volume of medical imaging investigations has greatly increased over recent years [8]. The number of clinicians trained in the expert interpretation of these investigations, however, has failed to keep pace with demand [9]. AI has been suggested as one possible solution to this supply/demand issue [8]. A huge volume of research has been published in a short time. Furthermore, the number of reviewers with expertise in both radiology and AI is limited. Standards for publication have only recently been developed [10]. This creates the potential for papers of varying quality to be published, which could negatively affect patient care. Furthermore, many of the papers focus on a small range of pathology and tasks, which opens the possibility of unnecessary duplication of work.

We anticipate that there will be rapid growth in the number of included papers year-on-year. We also expect that papers will be concentrated in a narrow range of topics. We aim to identify which algorithms are the most popular for particular tasks and also to investigate the presence of unique or custom models compared to off-the-shelf models. The issue of hyperparameter optimisation (whether automated or handcrafted) will also be examined. Statistical analysis will also be a feature of the review with a focus on sample size calculation and performance metrics [11].

We hope the systematic nature of this review will identify smaller papers with proper methods that may have been overlooked, highlight papers where some methods may have been suboptimal and provide an evidence base for a methodological design framework.

This review will have potential limitations, including publication and reporting bias. We will not be able to include studies with unpublished data, and we may misclassify studies that do not have clear reporting of adaptive designs in their methodology. Furthermore, the heterogeneity of the included studies will not allow for meaningful meta-analysis of results. The expected high number of included articles (in the range of 1000 articles over the 5 years 2015–2019) will only allow for a high-level overview of certain themes.

Finally, we hope to raise awareness among the radiology community of the questions being asked, and the methods being used to answer them, within the radiology AI literature, and to give an overview of techniques for those with an engineering or computer science background looking to contribute to the field.

Abbreviations

- AI: Artificial intelligence
- CNN: Convolutional neural networks
- CT: Computed tomography
- DL: Deep learning
- fMRI: Functional MRI
- MR: Magnetic resonance
- MS: Multiple sclerosis
- PRISMA: Preferred reporting items for systematic review and meta-analysis
- US: Ultrasound

References

1. Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems, pp 1097–1105. http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

2. Recht MP, Dewey M, Dreyer K et al (2020) Integrating artificial intelligence into the clinical practice of radiology: challenges and recommendations. Eur Radiol 17:1–9

3. Langlotz CP, Allen B, Erickson BJ et al (2019) A roadmap for foundational research on artificial intelligence in medical imaging: from the 2018 NIH/RSNA/ACR/The Academy Workshop. Radiology 291(3):781–791

4. Willemink MJ, Koszek WA, Hardell C et al (2020) Preparing medical imaging data for machine learning. Radiology 295(1):4–15

5. Larson DB, Magnus DC, Lungren MP, Shah NH, Langlotz CP (2020) Ethics of using and sharing clinical imaging data for artificial intelligence: a proposed framework. Radiology 24:192536

6. Ranschaert ER, Morozov S, Algra PR (eds) (2019) Artificial intelligence in medical imaging: opportunities, applications and risks. Springer, Berlin

7. Liu X, Faes L, Kale AU et al (2019) A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Dig Health 1(6):e271–e297

8. De Fauw J, Ledsam JR, Romera-Paredes B et al (2018) Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med 24(9):1342–1350

9. Rimmer A (2017) Radiologist shortage leaves patient care at risk, warns royal college. BMJ 11:j4683

10. Mongan J, Moy L, Kahn CE Jr (2020) Checklist for artificial intelligence in medical imaging (CLAIM): a guide for authors and reviewers. Radiology Artificial Intelligence. https://doi.org/10.1148/ryai.2020200029

11. Di Leo G, Sardanelli F (2020) Statistical significance: p value, 0.05 threshold, and applications to radiomics—reasons for a conservative approach. Eur Radiol Exp 4(1):1–8

Funding

This work was performed within the Irish Clinical Academic Training (ICAT) Programme, supported by the Wellcome Trust and the Health Research Board (Grant No. 203930/B/16/Z), the Health Service Executive National Doctors Training and Planning and the Health and Social Care, Research and Development Division, Northern Ireland and the Faculty of Radiologists, Royal College of Surgeons in Ireland.

Author information

Contributions

BK, KY, CJ, AL and RK designed the study. All authors reviewed and approved the final version of the protocol. All authors read and approved the final manuscript.

Ethics declarations

Consent for publication

Not required.

Competing interests

None declared.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1. Standard Data Extraction Questions.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kelly, B., Judge, C., Bollard, S.M. et al. Radiology artificial intelligence, a systematic evaluation of methods (RAISE): a systematic review protocol. Insights Imaging 11, 133 (2020). https://doi.org/10.1186/s13244-020-00929-9
