Abstract

Objective

To compare the feasibility of, participant preference for, and satisfaction with an interactive voice response (IVR) system versus a customized smartphone application (StudyBuddy) for capturing gout flares.

Methods

In this 24-week prospective, randomized, crossover, open-label pilot study, 44 gout patients were randomized to IVR vs. StudyBuddy and were crossed over to the other technology after 12 weeks. Flares were reported via weekly (and later daily) scheduled StudyBuddy or IVR queries. Feasibility was ascertained via response rate to scheduled queries. At 12 and 24 weeks, participants completed preference/satisfaction surveys. Preference and satisfaction were assessed using dichotomous or ordinal questions. Sensitivity was assessed by the frequency of flare reporting with each approach.

Results

Thirty-eight of 44 participants completed the study. Among completers, feasibility was similar for IVR (81%) and StudyBuddy (80%). Conversely, most (74%) preferred StudyBuddy. Measures of satisfaction (ease of use, preference over in-person clinic visits, and willingness for future use) were similar between the IVR and StudyBuddy; however, more participants deemed the StudyBuddy as convenient (95% vs. 73%, P = 0.01) and less disruptive (97% vs. 82%, P = 0.03). Although the per patient number of weeks in flare was not significantly different (mean 3.4 vs. 2.6 weeks/patient, P = 0.15), the StudyBuddy captured more of the total flare weeks (35%) than IVR (27%, P = 0.02).

Conclusion

A smartphone application and IVR demonstrated similar feasibility but overall sensitivity to capture gout flares and participant preference were greater for the smartphone application. Participant preference for the smartphone application appeared to relate to perceptions of greater convenience and lower disruption.

Trial registration

ClinicalTrials.gov, NCT02855437. Registered 4 August 2016.

Background

Flare prevention represents a major tenet of effective gout management. Gout flares are the key patient-reported outcome measure in randomized controlled clinical trials (RCTs) in gout [1,2,3,4,5]. However, multiple RCTs of urate-lowering therapies (ULT) have failed to detect differences in gout flares between treatment arms [6, 7]. This evidence gap around a clear flare reduction in clinical trials is one of the reasons [8] that the 2016 American College of Physicians (ACP) [9] clinical guidelines for gout management failed to endorse the “treat-to-target” approach (lowering and maintaining serum urate concentrations below 5 to 6 mg/dl) recommended by the American College of Rheumatology (ACR) [10] and the European League Against Rheumatism (EULAR) [11].

However, capturing gout flares in RCTs poses a substantial methodologic challenge, especially since these events usually occur between, rather than during, study visits. An additional limitation of using gout flares as an outcome in RCTs is the absence of a standardized method of flare ascertainment. Current methods used to capture gout flares, such as the use of patient diaries [12], patient self-report [1], or retrospectively during scheduled physician visits [6], have many practical limitations given their resource intensive nature and the limited veracity of the resultant data collected.

With the dramatic increase in the use of cell phones and other portable devices, mobile technology is more frequently being leveraged in healthcare management and has increasingly been deployed for remote data collection in the clinical trial and practice settings [13]. For example, smartphone applications have been used to track data and monitor diseases such as rheumatoid arthritis, psoriatic arthritis, and diabetes [14, 15] or symptoms such as pain [16, 17]. These applications have demonstrated success in patient engagement and data collection. An alternative and less technologically intensive method to capture patient-reported outcomes remotely is an automated phone survey using an interactive voice response (IVR) system [18]. The automated IVR telephone system delivers prerecorded surveys that participants answer through verbal or touch screen response. IVR surveys have been used for clinical studies, disease management, medication adherence monitoring, and health education [19, 20].

Given concerns about the absence of reliable gout flare capture in RCTs, the purpose of our project was to develop and test two novel methods to promptly and efficiently detect gout flares for use in future clinical trials. In this study, we compared IVR and a custom smartphone application (StudyBuddy) for capturing gout flares. We examined the feasibility, patient preference, satisfaction, and sensitivity for detecting flare using the two methods.

Methods

Study population and design

We conducted a 24-week, prospective, randomized, crossover, open-label pilot study comparing participant preference for, and the feasibility of, two methods of capturing patient-reported outcomes and data: an interactive voice response (IVR) telephone questionnaire/flare reporting system and a mobile smartphone application developed by investigators at the University of Alabama at Birmingham (UAB; Birmingham, AL), originally named GoutPRO and now known as StudyBuddy (Fig. 1). The trial was approved by the Institutional Review Boards at the two academic medical centers conducting this study. Patients were enrolled at UAB and at the University of Nebraska Medical Center (UNMC, Omaha, NE) rheumatology clinics from September 2016 to March 2018. Patient eligibility criteria included age 18 years and older (19 years or older in Nebraska), physician-diagnosed gout, and hyperuricemia with serum urate level ≥ 6.8 mg/dl within 3 months of screening in the absence of interval changes in ULT dosing. Other inclusion criteria included the self-report of ≥ 2 flares in the previous 6 months and current use of an Apple™ or Android™ smartphone with the ability to download the StudyBuddy smartphone application.

Interventions and data collection

Eligible participants providing consent were randomized to either IVR followed by StudyBuddy or StudyBuddy followed by IVR using permuted block randomization to achieve balance between crossover arms in a 1:1 allocation. At the initial visit, depending on randomization assignment, participants either installed and tested the StudyBuddy application on their smartphones or received an IVR call.
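As an illustration only, permuted block randomization to the two crossover sequences can be sketched as follows. The block size of 4, the seed, and the function and label names are assumptions made for this example; the actual block size and allocation software used in the study are not reported here.

```python
import random


def permuted_block_sequence(n_participants, block_size=4, seed=2016):
    """Assign crossover sequences in shuffled blocks, balanced 1:1 within each block.

    block_size and seed are illustrative assumptions, not study parameters.
    """
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for a 1:1 allocation")
    rng = random.Random(seed)  # local generator so the schedule is reproducible
    arms = ["IVR->StudyBuddy", "StudyBuddy->IVR"]
    assignments = []
    while len(assignments) < n_participants:
        block = arms * (block_size // 2)  # equal numbers of each sequence
        rng.shuffle(block)                # random order within the block
        assignments.extend(block)
    return assignments[:n_participants]


schedule = permuted_block_sequence(44)
```

Because 44 is a multiple of the assumed block size, every block is complete and the two sequences receive equal numbers of participants; with an incomplete final block, the imbalance is at most half a block.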

During each 12-week intervention period, participants completed the same gout flare survey questions via weekly scheduled StudyBuddy interactions or programmed IVR calls at the time of their choice. A subgroup of 15 participants in the IVR arm was randomly assigned to receive daily programmed IVR calls. The weekly IVR calls were to be repeated within 1, 2, 24, and 48 h of the original call (in cases of no response), while the daily IVR calls were repeated after 1 and 2 h as necessary. Flare assessment was based on validated gout flare survey questions consistent with the published gout flare self-report definition of Gaffo and colleagues [21]. This definition has 85% sensitivity and 95% specificity in confirming the presence of a gout flare. It requires fulfillment of at least 3 of 4 criteria (patient-defined gout flare, pain at rest score of > 3 on a 0–10-point numerical rating scale, presence of ≥ 1 swollen joint, and presence of ≥ 1 warm joint). Our survey questions included (1) whether the recent flare was similar to past flares, (2) the number of swollen/warm joints, (3) pain at rest during the flare (assessed using a 0–10 numerical rating scale), and (4) additional questions specific to each flare including peak pain levels, location of pain (i.e., which joints), timing of the flare, and its duration. After the first 12 weeks (phase 1), study participants were crossed over to receive the alternative mode of gout flare assessment and continued in the study for an additional 12 weeks (phase 2). A washout period between methods was not utilized since no protracted risk of behavioral change was anticipated. At 12 weeks (crossover) and 24 weeks (end of study), participants completed satisfaction surveys, as well as a questionnaire about their preferred mode of reporting gout flares.
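The 3-of-4 rule in the flare definition above can be expressed as a short function. This is a minimal sketch: the function and parameter names are hypothetical, and it does not reproduce how StudyBuddy or the IVR system scored responses.

```python
def meets_flare_definition(patient_defined_flare, pain_at_rest,
                           swollen_joints, warm_joints):
    """Return True when at least 3 of the 4 self-report criteria are fulfilled.

    Criteria as stated in the text: patient-defined flare, pain at rest > 3
    on a 0-10 numerical rating scale, >= 1 swollen joint, >= 1 warm joint.
    """
    criteria = [
        bool(patient_defined_flare),
        pain_at_rest > 3,
        swollen_joints >= 1,
        warm_joints >= 1,
    ]
    return sum(criteria) >= 3


# Hypothetical response: self-reported flare, rest pain 5, one swollen joint,
# no warm joint, so three criteria are met.
example_is_flare = meets_flare_definition(True, 5, 1, 0)
```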

Outcomes

Primary outcomes

We compared IVR and the customized StudyBuddy smartphone application in this pilot study using three primary domains of interest: (1) feasibility, (2) preference, and (3) satisfaction. Feasibility was ascertained via proportion of responses to the scheduled queries with each approach during the study period. Preference was calculated as the proportion of total study population preferring IVR or StudyBuddy. Satisfaction was calculated using six separate self-reported measures extracted from the customizable Health Information Technology (IT) Usability Evaluation Scale (Health-ITUES) at 12 and 24 weeks. Measures of satisfaction included the participant ratings of (1) ease of use, (2) disruption to daily activities, (3) ability to respond to alerts (or convenience of alerts), (4) preference of either device to a clinic visit, (5) satisfaction with the contact frequency, and (6) willingness for future use (acceptability) [22]. The feasibility and satisfaction measures were also tested in the subgroup with daily IVR calls to test for differences compared to the weekly group.

Secondary outcomes

We also considered the relative frequency of gout flare reporting as a component of the sensitivity of each method to capture relevant flare events. In doing so, we made the assumption that since we randomized patients to the sequence of 12-week time periods (i.e., app vs. IVR for 12 weeks, then crossover), the frequency of flares should be similar in both time periods, and thus any difference in the reported flare rates was a consequence of greater sensitivity of the technology to capture flare. We compared the frequency of flare reporting with each approach. We assessed flares using the aforementioned questionnaire and reported as the number of weekly sampling periods per participant characterized by flare in addition to the total proportion of weeks for all individuals characterized by a flare. In addition, at the end of the 24-week study period, an open-ended qualitative questionnaire about common causes for not responding to alerts was collected from participants via a structured telephone interview with questions focused on smartphone application issues, connection issues, and time convenience.
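The two flare-frequency summaries described above (flare weeks per participant and the pooled proportion of weeks with a flare) could be computed along the following lines. This is a sketch on made-up data; the data layout is hypothetical, and using only answered weeks as the denominator of the pooled proportion is an assumption of this example, since the handling of unanswered weeks is not specified in the text.

```python
def summarize_flare_weeks(weekly_reports):
    """Summarize weekly flare reports for one technology.

    weekly_reports maps a participant ID to a list with one entry per week:
    True (flare reported), False (no flare), or None (no response).
    Assumption: unanswered weeks are excluded from the pooled denominator.
    """
    per_participant = {}
    flare_weeks = 0
    answered_weeks = 0
    for participant_id, weeks in weekly_reports.items():
        answered = [week for week in weeks if week is not None]
        per_participant[participant_id] = sum(answered)  # True counts as 1
        flare_weeks += sum(answered)
        answered_weeks += len(answered)
    pooled = flare_weeks / answered_weeks if answered_weeks else float("nan")
    return per_participant, pooled


# Made-up reports for two hypothetical participants over four weeks.
demo_reports = {
    "P01": [True, False, None, True],
    "P02": [False, False, True, None],
}
demo_per_participant, demo_pooled = summarize_flare_weeks(demo_reports)
```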

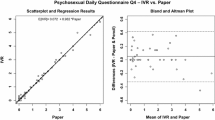

Statistical analysis

We summarized baseline characteristics of study participants as means with standard deviation (SD) for continuous and ordinal measures and number and percentage for categorical variables. All statistical analyses followed standard methods for a 2-by-2 crossover design [23]. Preference and satisfaction surveys used dichotomous questions, except the ease-of-use question which utilized a Likert scale (range 0 to 10; very easy to very difficult) and was analyzed as an ordinal variable. Differences between the StudyBuddy and IVR approach were evaluated using McNemar’s test for dichotomous variables, paired t test for continuous variables, and nonparametric Wilcoxon signed rank test for ordinal variables. Qualitative questionnaire data for lack of response were summarized as percentages. Carryover effects were examined using the standard two-sample t test.
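For readers unfamiliar with McNemar's test, the paired comparison of a dichotomous item depends only on the discordant pairs, that is, participants who answered "yes" for one technology and "no" for the other. The sketch below uses the exact (binomial) form of the test in Python for illustration; the study analyses were run in SAS, whether the exact or asymptotic form was used is not stated, and the counts shown are hypothetical rather than study data.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p value from the discordant pair counts.

    b: pairs answering yes for method A only; c: pairs answering yes for
    method B only. Under the null hypothesis, b follows Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of a difference
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts, for illustration only.
p_value = mcnemar_exact(9, 1)
```

Concordant pairs (yes/yes and no/no) do not enter the calculation, which is why only two counts are needed.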

We conducted sensitivity analyses with multiple imputations to examine the robustness of the reported results and the effects of missing data for both the as-treated (n = 38) and intent-to-treat populations (n = 44). We considered missing data to be missing at random. We also performed a complete case analysis with case-wise deletion. A two-sided alpha of 0.05 was used to determine significance. Since the study was a pilot study to demonstrate feasibility of recruiting and training gout patients to utilize StudyBuddy and IVR, a priori sample size calculations were not performed. All analyses were performed with SAS (version 9.4, SAS, Cary, NC).

Results

Of 48 participants screened, 44 were enrolled; of the 4 not enrolled, 1 had no smartphone, 1 had < 2 flares within 6 months, and 2 decided against participation. By the end of the study, 38 had completed both study arms, 1 had withdrawn immediately post-randomization, 3 (1 IVR, 2 StudyBuddy) had been lost to follow-up during phase 1 [baseline to week 12], and 2 (1 IVR, 1 StudyBuddy) had been lost to follow-up during phase 2 [week 13 through week 24]. The participants lost to follow-up did not complete the surveys and were unresponsive to follow-up calls. There were no significant differences observed in age, sex, or race between the 38 completers and the 6 individuals failing to complete the study. Participants were predominantly middle-aged men. Nearly half of the participants reported 2–3 gout flares within 6 months prior to enrollment, with the remainder reporting four or more flares, and nearly all were on ULT at enrollment (Table 1).

Primary outcomes

The two methods of flare capture demonstrated similar feasibility with participants responding to 81% of IVR queries and 80% of StudyBuddy queries (P = 0.94) (Table 2). At study completion, 28 (74%) preferred StudyBuddy delivered via smartphone, only 3 (8%) preferred IVR, and 7 (18%) had no preference. In assessments of satisfaction, the StudyBuddy was rated by participants as being numerically superior to IVR in terms of ease of use (0.58 ± 1.16 vs. 1.63 ± 2.5) [where 0 is very easy to use], although this difference did not achieve statistical significance (P = 0.79). Participants also reported the StudyBuddy to be more convenient, with 95% of participants reporting being able to answer questions when alerted, compared to 73% with IVR (P = 0.01). Moreover, participants found StudyBuddy to be disruptive < 3% of the time compared to 18% with IVR (P = 0.03). More than 80% of the participants preferred using either of the remote devices for reporting gout flare over in-person physician appointments. There was also no difference in the willingness for future use in both arms (Table 3). When we imputed missing data for measures of feasibility and satisfaction in both the as-treated and intent-to-treat populations, we found no significant difference in results. The complete case analysis with case-wise deletion also led to no changes in our overall results (data not shown).

Data on more frequent daily ascertainment involving more than a third of the participants in the IVR arm had little impact on measures of satisfaction, with the exception that participants were more likely to respond to weekly (87.0%) alerts compared to daily alerts (70.2%) (P < 0.05).

Secondary outcomes

The proportion of total weeks of observation with flares reported differed significantly per arm, with StudyBuddy weeks capturing more flares (35%) compared to IVR weeks (27%; P = 0.02). Likewise, the mean (± SD) number of weeks per participant characterized by a gout flare was numerically higher with StudyBuddy than IVR (3.4 ± 3.3 vs. 2.6 ± 2.5), although this difference did not achieve statistical significance (P = 0.15) (Table 2).

The reasons offered for not responding to the surveys in a timely fashion were mostly secondary to “inconvenience” associated with the timing of an alert, reported in 15 of 37 responses (40.5%). Other reasons noted included application malfunction (10.8%), smartphone running out of data/phone dysfunction (10.8%), or poor internet connection or reception. No carryover effects during phase 2 were identified.

Discussion

We found that both an IVR and customized smartphone application (StudyBuddy) were equally feasible when used in reporting gout flares. The StudyBuddy smartphone application was preferred by more patients and appeared to capture more total flares than the IVR. Participants also found the StudyBuddy smartphone application to be more convenient than IVR. Over 80% of participants in both arms reported that neither flare-reporting technology was disruptive. However, a small but significantly greater number of participants found the smartphone application less disruptive than IVR. Overall, both methods were reported to be easy to use and both were strongly preferred over an in-person clinic visit for gout flare reporting, and rendered similar willingness for future use in studies.

The feasibility of our smartphone application and IVR was generally consistent with that reported in prior studies that leveraged these technologies for pain monitoring, nutritional assessment, and medication adherence [24,25,26]. For instance, a prior study of patients with sickle cell disease reported a 75% compliance rate with daily queries with the use of a customized smartphone application [24]. Similarly, a feasibility study of a smartphone application for sleep apnea patients [27] demonstrated good feasibility, with > 60% of participants completing the daily questionnaire > 65% of the study time and a 94% satisfaction rate. Another small 3-month feasibility study in rheumatoid arthritis [28] measured the self-assessment of daily disease activity using a smartphone application. The vast majority of these participants (~ 88%) had no issues reporting their daily symptoms, and all were confident sharing their information. Nevertheless, challenges with reporting adherence and attrition have also been noted with smartphone applications [29, 30], a phenomenon that we saw in our study and that would be anticipated in longer studies. As with smartphone applications, the feasibility of IVR for collecting daily queries of patient-reported outcomes has been reported in different diseases. In a randomized clinical trial of a pain medication, 85% of the daily surveys were successfully completed by participants [31]. Another feasibility study in prostate cancer survivors demonstrated an 87% response to IVR queries regarding quality of life [32].

IVR is a well-tested approach to communicate instructions, collect health information, send reminders, and complete surveys to improve healthcare services [19]. Despite being slightly less favorably received, when we compared it to StudyBuddy in our study, it was still judged as being similarly acceptable for future use and far more preferred than an in-person clinic visit. This parallels findings from previous studies examining different patient populations where IVR use was also found to be preferable [33, 34] and acceptable for future use [35, 36]. The fact that > 80% of our participants reported the IVR was not disruptive and > 70% reported it as convenient may have been associated with pre-scheduled call times established according to the participants’ preference. To our knowledge, IVR has not previously been used to capture patient-reported outcomes, including gout flare, in arthritis studies. This technology may be of particular benefit among gout patients lacking access to or less comfortable with the use of smartphones or other more advanced technologies. The IVR approach has other advantages including ease of use, real-time data collection, cost effectiveness, and independence of patient literacy. However, it still requires adequate telephone access.

The higher participant preference for the StudyBuddy was consistent with the higher ratings it received for perceived convenience and its lower rate of disruption. Similar to other studies in pain [37] and sickle cell disease [24] that utilized a similar approach, our study also showed that patients found the smartphone application to be acceptable with excellent response rates, underscoring its potential feasibility in clinical trials as well as in day-to-day practice. Similar findings were also seen in a juvenile idiopathic arthritis study, where a different smartphone application was used to remotely promote self-management with good acceptability by pediatric patients and parents alike [38]. The smartphone application shares similar properties with the IVR. However, it provides more flexibility in collecting data, with the potential for real-time feedback. The smartphone technology also offers the ability to assess certain physiological parameters such as step counts and sleep. As a drawback noted above, smartphone application use requires more fluency with computer technology. Other challenges of the smartphone technology include privacy concerns with data transmission [39]. The younger age of our cohort might partially explain the slight preference for the StudyBuddy. As a further general limitation, both the smartphone and the IVR lack direct person-to-person communication [40]. Although smartphone applications have been previously developed to promote self-efficacy in gout [13], we are aware of no other study that systematically examined this technology as a means of remotely capturing gout flare in patients.

The difference observed in the total proportion of weekly queries characterized by flare suggests that the customized smartphone application used in our study yielded a higher sensitivity for flare capture than IVR. Whether this difference relates to the greater convenience and flexibility in responding to alerts with this application is unknown, although our results suggest this to be one possible explanation. This trend was also observed in per patient flare reporting, where use of the StudyBuddy also yielded more flare reports, even though this difference did not achieve significance likely owing to the limited sample size and high variability observed with these events. Although our results suggest that the StudyBuddy provides a more sensitive means of flare capture than IVR, larger studies will be needed to validate this finding.

To our knowledge, this is among the first studies to use smartphones to capture self-reported gout flares. Despite its strengths, particularly in terms of crossover design and innovation, there are limitations to the study. Designed and implemented as a pilot proof-of-concept study, the sample size was small and this limited power to detect differences for some of the outcomes examined, as expected in the design phase. During the conduct of this study, we encountered technical issues with approximately 1 in every 5 participants reporting at least sporadic issues related to poor internet network connectivity or application malfunction. Though infrequent and highly informative for future study planning, these technical issues could serve as a source of study response bias.

Conclusion

In summary, different technologies may offer variable benefits for gout flare assessment in future clinical trials since both forms of technology were equally feasible and reasonably well accepted. Our smartphone application, StudyBuddy, appeared to provide greater sensitivity, and our study participants found it to be more convenient and “user friendly” for gout flare reporting. While our pilot demonstrated the utility of using these technology tools to record self-reported gout outcomes, future studies examining patient preferences and satisfaction will be required in different populations to demonstrate both generalizability and reproducibility of our results as well as to better define the role of such technologies in future gout management trials.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available due to complexity of data coding and data transformations needed for analyses but are available from the corresponding author on reasonable request.

Abbreviations

- ACP: American College of Physicians

- ACR: American College of Rheumatology

- EULAR: European League Against Rheumatism

- IVR: Interactive voice response

- NSAID: Non-steroidal anti-inflammatory

- RCTs: Randomized controlled trials

- StudyBuddy: Smartphone mobile application

- ULT: Urate-lowering therapies

References

Schumacher HR Jr, Becker MA, Wortmann RL, MacDonald PA, Hunt B, Streit J, et al. Effects of febuxostat versus allopurinol and placebo in reducing serum urate in subjects with hyperuricemia and gout: a 28-week, phase III, randomized, double-blind, parallel-group trial. Arthritis Rheum. 2008;59:1540–8.

So A, De Meulemeester M, Pikhlak A, Yücel AE, Richard D, Murphy V, et al. Canakinumab for the treatment of acute flares in difficult-to-treat gouty arthritis: results of a multicenter, phase II, dose-ranging study. Arthritis Rheum. 2010;62:3064–76.

Timilsina S, Brittan K, O'Dell J, Brophy M, Davis-Karim A, Henrie A, et al. Design and rationale for the veterans affairs “cooperative study program 594 comparative effectiveness in gout: allopurinol vs. febuxostat” trial. Contemp Clin Trials. 2018;68:102–8.

Schumacher HR Jr, Sundy JS, Terkeltaub R, Knapp HR, Mellis SJ, Stahl N, et al. Rilonacept (Interleukin-1 trap) in the prevention of acute gout flares during initiation of urate-lowering therapy: results of a phase II randomized, double-blind, placebo-controlled trial. Arthritis Rheum. 2012;64:876–84.

Saag K, Fitz-Patrick D, Kopicko J, Fung M, Bhakta N, Adler S, et al. FRI0320 Lesinurad, a selective uric acid reabsorption inhibitor, in combination with allopurinol: results from a phase III study in gout patients having an inadequate response to standard of care (CLEAR 1). Ann Rheum Dis. 2015;74:540.

Becker MA, Schumacher HR, Espinoza LR, Wells AF, MacDonald P, Lloyd E, et al. The urate-lowering efficacy and safety of febuxostat in the treatment of the hyperuricemia of gout: the CONFIRMS trial. Arthritis Res Ther. 2010;12:R63.

Becker MA, Schumacher HR Jr, Wortmann RL, MacDonald PA, Eustace D, Palo WA, et al. Febuxostat compared with allopurinol in patients with hyperuricemia and gout. N Engl J Med. 2005;353:2450–61.

Dalbeth N, Bardin T, Doherty M, Lioté F, Richette P, Saag KG, et al. Discordant American College of Physicians and international rheumatology guidelines for gout management: consensus statement of the gout, hyperuricemia and crystal-associated disease network (G-CAN). Nat Rev Rheumatol. 2017;13:561–8.

Qaseem A, Harris RP, Forciea MA. Management of acute and recurrent gout: a clinical practice guideline from the American College of Physicians. Ann Intern Med. 2017;166:58–68.

Khanna D, Fitzgerald JD, Khanna PP, Bae S, Singh MK, Neogi T, et al. 2012 American College of Rheumatology guidelines for management of gout. Part 1: systematic nonpharmacologic and pharmacologic therapeutic approaches to hyperuricemia. Arthritis Care Res. 2012;64:1431–46.

Richette P, Doherty M, Pascual E, Barskova V, Becce F, Castaneda-Sanabria J, et al. 2016 updated EULAR evidence-based recommendations for the management of gout. Ann Rheum Dis. 2017;76:29–42.

Schlesinger N, Mysler E, Lin H-Y, De Meulemeester M, Rovensky J, Arulmani U, et al. Canakinumab reduces the risk of acute gouty arthritis flares during initiation of allopurinol treatment: results of a double-blind, randomised study. Ann Rheum Dis. 2011;70:1264–71.

Nguyen AD, Baysari MT, Kannangara DR, Tariq A, Lau AY, Westbrook JI, et al. Mobile applications to enhance self-management of gout. Int J Med Inform. 2016;94:67–74.

Boulos MN, Wheeler S, Tavares C, Jones R. How smartphones are changing the face of mobile and participatory healthcare: an overview, with example from eCAALYX. Biomed Eng Online. 2011;10:24.

Lunde P, Nilsson BB, Bergland A, Kværner KJ, Bye A. The effectiveness of smartphone apps for lifestyle improvement in noncommunicable diseases: systematic review and meta-analyses. J Med Internet Res. 2018;20:e162.

Jibb LA, Stevens BJ, Nathan PC, Seto E, Cafazzo JA, Johnston DL, et al. Implementation and preliminary effectiveness of a real-time pain management smartphone app for adolescents with cancer: a multicenter pilot clinical study. Pediatr Blood Cancer. 2017;64:e26554.

Nowell WB, Thai M, Wiedmeyer C, Gavigan K, Venkatachalam S, Ginsberg S, et al. Digital interventions to build a patient registry for rheumatology research. Rheum Dis Clin North Am. 2019;45:173–86.

Bennett AV, Keenoy K, Shouery M, Basch E, Temple LK. Evaluation of mode equivalence of the MSKCC bowel function instrument, LASA quality of life, and subjective significance questionnaire items administered by web, interactive voice response system (IVRS), and paper. Qual Life Res. 2016;25:1123–30.

Lee H, Friedman ME, Cukor P, Ahern D. Interactive voice response system (IVRS) in health care services. Nurs Outlook. 2003;51:277–83.

Daftary A, Hirsch-Moverman Y, Kassie GM, Melaku Z, Gadisa T, Saito S, et al. A qualitative evaluation of the acceptability of an interactive voice response system to enhance adherence to isoniazid preventive therapy among people living with HIV in Ethiopia. AIDS Behav. 2017;21:3057–67.

Gaffo AL, Dalbeth N, Saag KG, Singh JA, Rahn EJ, Mudano AS, et al. Brief report: validation of a definition of flare in patients with established gout. Arthritis Rheumatol. 2018;70:462–7.

Yen PY, Wantland D, Bakken S. Development of a customizable health IT usability evaluation scale. AMIA Annu Symp Proc. 2010;2010:917–21.

Senn SS. Cross-over trials in clinical research. West Sussex: John Wiley & Sons Ltd; 2002.

Jonassaint CR, Shah N, Jonassaint J, De Castro L. Usability and feasibility of an mHealth intervention for monitoring and managing pain symptoms in sickle cell disease: the Sickle Cell Disease Mobile application to Record Symptoms via Technology (SMART). Hemoglobin. 2015;39:162–8.

Ambrosini GL, Hurworth M, Giglia R, Trapp G, Strauss P. Feasibility of a commercial smartphone application for dietary assessment in epidemiological research and comparison with 24-h dietary recalls. Nutr J. 2018;17:5.

Schroder KE, Johnson CJ. Interactive voice response technology to measure HIV-related behavior. Curr HIV/AIDS Rep. 2009;6:210–6.

Isetta V, Torres M, González K, Ruiz C, Dalmases M, Embid C, et al. A new mHealth application to support treatment of sleep apnoea patients. J Telemed Telecare. 2017;23:14–8.

Nishiguchi S, Ito H, Yamada M, Yoshitomi H, Furu M, Ito M, et al. Self-assessment of rheumatoid arthritis disease activity using a smartphone application. Methods Inf Med. 2016;55:65–9.

Anguera JA, Jordan JT, Castaneda D, Gazzaley A, Areán PA. Conducting a fully mobile and randomised clinical trial for depression: access, engagement and expense. BMJ Innov. 2016;2:14–21.

Crouthamel M, Quattrocchi E, Watts S, Wang S, Berry P, Garcia-Gancedo L, et al. Using a ResearchKit smartphone app to collect rheumatoid arthritis symptoms from real-world participants: feasibility study. JMIR mHealth uHealth. 2018;6:e177.

Heapy A, Sellinger J, Higgins D, Chatkoff D, Bennett TC, Kerns RD. Using interactive voice response to measure pain and quality of life. Pain Med. 2007;8:S145–54.

Skolarus TA, Holmes-Rovner M, Hawley ST, Dunn RL, Barr KL, Willard NR, et al. Monitoring quality of life among prostate cancer survivors: the feasibility of automated telephone assessment. Urology. 2012;80:1021–6.

Kassavou A, Sutton S. Reasons for non-adherence to cardiometabolic medications, and acceptability of an interactive voice response intervention in patients with hypertension and type 2 diabetes in primary care: a qualitative study. BMJ Open. 2017;7:e015597.

Heisler M, Halasyamani L, Resnicow K, Neaton M, Shanahan J, Brown S, et al. “I am not alone”: the feasibility and acceptability of interactive voice response-facilitated telephone peer support among older adults with heart failure. Congest Heart Fail. 2007;13:149–57.

Oake N, van Walraven C, Rodger MA, Forster AJ. Effect of an interactive voice response system on oral anticoagulant management. CMAJ. 2009;180:927–33.

Hahn DL, Plane MB. Feasibility of a practical clinical trial for asthma conducted in primary care. J Am Board Family Pract. 2004;17:190–5.

Lalloo C, Jibb LA, Rivera J, Agarwal A, Stinson JN. “There’s a pain app for that”: review of patient-targeted smartphone applications for pain management. Clin J Pain. 2015;31:557–63.

Cai RA, Beste D, Chaplin H, Varakliotis S, Suffield L, Josephs F, et al. Developing and evaluating JIApp: acceptability and usability of a smartphone app system to improve self-management in young people with juvenile idiopathic arthritis. JMIR mHealth uHealth. 2017;5:e121.

Dorsey ER, Yvonne Chan YF, McConnell MV, Shaw SY, Trister AD, Friend SH. The use of smartphones for health research. Acad Med. 2017;92:157–60.

Abu-Hasaballah K, James A, Aseltine RH Jr. Lessons and pitfalls of interactive voice response in medical research. Contemp Clin Trials. 2007;28:593–602.

Acknowledgements

The authors acknowledge the UAB Training Program in Rheumatic and Musculoskeletal Diseases Research, a Ruth L. Kirschstein National Research Service Award (Kirschstein-NRSA) institutional training grant awarded to UAB by the National Institute of Arthritis and Musculoskeletal and Skin Diseases (NIAMS) of the National Institutes of Health (NIH, T32 AR069516). Dr. Mikuls receives support from the National Institute of General Medical Sciences (U54GM115458).

Funding

Ironwood Pharmaceuticals funded the study but had no input into the design of the study, content of the manuscript, or the decision to publish.

Author information

Contributions

NE contributed to the study conception and design, data analysis and interpretation, and preparation of the manuscript. BWC contributed to the study conception and design. JF contributed to the acquisition and interpretation of data. AM contributed to the analysis and interpretation of data. JM contributed to the acquisition of data. DB contributed to the acquisition of data. SY contributed to the acquisition and analysis of data. DR analyzed and interpreted the data. LC contributed to the study conception and design. CF contributed to the acquisition of data. JRC contributed to the study conception and design and to data acquisition and interpretation. TRM contributed to the study conception and design, interpretation of data, and manuscript preparation. KS contributed to the study conception and design and made substantial contributions to the analysis and interpretation of data and to manuscript preparation. All authors critically revised the manuscript, and all authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The trial was approved by the Institutional Review Boards at the two academic medical centers conducting this study: the University of Alabama at Birmingham (UAB) and the University of Nebraska Medical Center (UNMC, Omaha, NE).

Consent for publication

Not applicable.

Competing interests

T. Mikuls has received research support from Ironwood/AstraZeneca and Horizon. K. Saag has received research support from Ironwood, Horizon, Takeda, and Sobi and is a consultant for Horizon, Sobi, Shanton, and Takeda. The other authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Elmagboul, N., Coburn, B.W., Foster, J. et al. Comparison of an interactive voice response system and smartphone application in the identification of gout flares. Arthritis Res Ther 21, 160 (2019). https://doi.org/10.1186/s13075-019-1944-5
