Abstract

Introduction

We evaluated an intervention designed to teach 10- to 12-year-old primary school children to assess claims about the effects of treatments (any action intended to maintain or improve health). We report outcomes measured 1 year after the intervention.

Methods

In this cluster-randomised trial, we included primary schools in the central region of Uganda that taught year 5 children (aged 10 to 12 years). We randomly allocated a representative sample of eligible schools to either an intervention or control group. Intervention schools received the Informed Health Choices primary school resources (textbooks, exercise books and a teachers’ guide). The primary outcomes, measured at the end of the school term and again after 1 year, were the mean score on a test with two multiple-choice questions for each of the 12 concepts and the proportion of children with passing scores.

Results

We assessed 2960 schools for eligibility; 2029 were eligible, and a random sample of 170 were invited to recruitment meetings. After recruitment meetings, 120 eligible schools consented and were randomly assigned to either the intervention group (n = 60 schools; 76 teachers and 6383 children) or the control group (n = 60 schools; 67 teachers and 4430 children). After 1 year, the mean score in the multiple-choice test for the intervention schools was 68.7% compared with 53.0% for the control schools (adjusted mean difference 16.7%; 95% CI, 13.9 to 19.5; P < 0.00001). In the intervention schools, 3160 (80.1%) of 3943 children who completed the test after 1 year achieved a predetermined passing score (≥ 13 of 24 correct answers) compared with 1464 (51.5%) of 2844 children in the control schools (adjusted difference, 39.5%; 95% CI, 29.9 to 47.5).

Conclusion

Use of the learning resources led to a large improvement in the ability of children to assess claims, which was sustained for at least 1 year.

Trial registration

Pan African Clinical Trial Registry (www.pactr.org), PACTR201606001679337. Registered on 13 June 2016.

Background

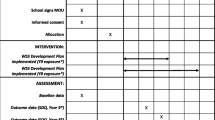

We identified Informed Health Choices (IHC) key concepts that people need to understand and apply when assessing claims about treatments [1, 2]. Together with teachers in Uganda, we determined which of those concepts were relevant for primary school children [3]. We then prototyped, user-tested and piloted learning resources to teach 12 key concepts (Table 1) to children [5], and we developed and validated a test to measure their ability to apply those concepts [6,7,8,9,10].

The resulting learning resources, which were printed in English, included a textbook, a teachers’ guide, an exercise book, a poster, and cards for an activity. The textbook [11] consists of a story in a comic book format (Fig. 1), instructions for classroom activities, exercises, a checklist summarising the concepts in the book, and a glossary of keywords with definitions in English and translations to Luganda and Swahili. In addition to the textbooks, we provided intervention schools with a guide [4] for each teacher, an exercise book for each child, a poster of the checklist for the classroom, and activity cards for the seventh lesson [12]. The contents of the book and the teachers’ guide are shown in Table 2. While most teachers considered the IHC content to be new, many found the design of the IHC lessons to be compatible with their teaching styles, particularly the use of multiple examples in the teachers’ guide [13]. We did not intervene in the control schools.

We conducted a cluster-randomised trial to evaluate the effects of using the learning resources [14, 15]. The intervention included a 2-day introductory workshop for the teachers, as well as providing them with the learning resources. The trial showed that the intervention resulted in a large improvement in the ability of children to assess claims about the effects of treatments, measured at the end of the term during which the intervention was delivered [14]. In this paper, we report on outcomes measured 1 year after the intervention. We report a process evaluation in a separate paper [13].

Methods

Details regarding the study methods can be found in the trial protocol [15] and report of the initial results [14]. They are briefly summarised here.

Participants

Between April 11, 2016, and June 8, 2016, we randomly selected 170 of 2029 eligible schools in central Uganda and recruited 120 of those schools (Fig. 2). We randomly sampled schools proportionately from lists of randomly selected districts, stratifying by school ownership (private or public) and location (urban, semi-urban and rural). We excluded international schools, special needs schools for children with visual and audio impairments, schools that had participated in user testing and piloting of the resources, infant and nursery schools and adult education schools. We included all year 5 children in the eligible schools.

Random allocation and blinding

We randomly allocated schools to the intervention or control group using a computer-generated sequence. We used stratified randomisation to help ensure equal distribution of schools for school ownership (public or private) and geographical location (urban, semi-urban or rural). Research assistants labelled opaque envelopes with the unique codes, inserted cards with the study group allocated to each code in the envelopes, and sealed them. After obtaining consent from 120 schools, 2 research assistants selected each school from a list of the schools; identified the appropriate randomisation list to be used for that school, based on its geographical location and ownership; and assigned the next available code from that list.
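As an illustration only, the stratified allocation described above can be sketched as follows. The function names, the seed, the number of codes per stratum, and the choice to balance the two arms within each stratum list are all assumptions made for this sketch; the trial protocol [15] describes the procedure that was actually used.

```python
import random

# Stratification variables named in the text.
OWNERSHIP = ("public", "private")
LOCATION = ("urban", "semi-urban", "rural")


def build_allocation_lists(codes_per_stratum, seed):
    """Return one shuffled allocation list per stratum (hypothetical helper).

    Assumption of this sketch: each list holds equal numbers of
    intervention and control allocations, so codes_per_stratum must be even.
    """
    if codes_per_stratum % 2:
        raise ValueError("codes_per_stratum must be even")
    rng = random.Random(seed)  # seeded so the sequence can be regenerated
    lists = {}
    for ownership in OWNERSHIP:
        for location in LOCATION:
            arms = ["intervention", "control"] * (codes_per_stratum // 2)
            rng.shuffle(arms)
            lists[(ownership, location)] = arms
    return lists


def assign_next(lists, ownership, location):
    """Assign the next unused allocation from the list for the school's stratum."""
    return lists[(ownership, location)].pop(0)
```

A school would then be allocated with a call such as `assign_next(lists, "public", "rural")`, which mirrors the step of taking the next available code from the appropriate list.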

We informed the participating head teachers and year 5 teachers about the objectives of the study [15]. After randomisation, they knew whether they were in the intervention or control arm. The consent form stated that the outcome measure consisted of ‘multiple-choice questions that assess an individual’s ability to apply concepts that people must be able to understand and apply to assess treatment claims and to make informed healthcare choices.’ We did not show them the test until the end of the school term. Children in both arms of the trial were informed of the purpose of the test when their teachers asked them to complete it at the end of the term and again after 1 year.

Interventions

We designed the learning resources to be used over 9 weeks, with one double-period (80 min) per week, during a single school term, and 1 h to complete the test at the end of the term and again after 1 year. There was an expectation on the part of the head teachers and teachers that any content displaced by the lessons would be compensated, so that time was not taken away from other lessons. Each school decided how to do this, with some schools using the library lessons while boarding schools preferred to teach in the evenings and on weekends [13]. The intervention was delivered between June and August 2016.

We invited all participating teachers in the intervention group to attend an introductory workshop. At the workshop, we informed them about the study objectives and procedures, including the general nature of the outcome measure; went through all nine lessons outlined in the primary school resources; and addressed any questions or concerns that arose.

We invited year 5 teachers in the control schools to a 2-h introductory meeting in each district. At these meetings, we informed them about the study procedures, including the general nature of the test that we would be using as the outcome measure. We told them that they would receive the primary school resources at the end of the study. We did not introduce them to the resources.

Outcomes

The primary outcomes, measured using the same test taken at the end of the term when the intervention was delivered, were as follows:

1. The mean test score (percentage of correct answers) on the same test 1 year later
2. The proportion of children with a passing score

Secondary outcomes were as follows:

1. The proportion of children with a score indicating mastery of the concepts
2. For each concept, the proportion of children who answered both questions correctly
3. The children’s intended behaviours and self-efficacy
4. Self-reported behaviours
5. Mean scores, passing scores and mastery scores for the teachers, who took the same test as the children at the end of the intervention term and again 1 year later

Most teachers completed the test at the same time as the children. We contacted teachers who were not available on the day of the exam to arrange completion of the questionnaire on another day. The children and the teachers were aware that missing answers would be scored as wrong.

The test included 24 multiple-choice questions (2 for each concept) (Additional file 1) [9]. The questions had between two and four response options, with an overall probability of answering 39% of the questions correctly by chance alone. Two additional multiple-choice questions were included because the test used in this trial was also used in a linked randomised trial evaluating a podcast given to the parents of some of the children [16]. These two extra questions were not included in the primary analyses.
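The probability of answering correctly by chance depends on the number of response options for each question. A minimal sketch of that calculation is shown below, assuming that a child guesses uniformly at random among the options; the option counts in the usage note are hypothetical and are not the distribution used in the actual test.

```python
def chance_score(options_per_question):
    """Expected proportion of correct answers under uniform random guessing.

    options_per_question: the number of response options for each question.
    """
    if not options_per_question:
        raise ValueError("at least one question is required")
    # Each question contributes a 1/k chance of a correct guess.
    return sum(1 / k for k in options_per_question) / len(options_per_question)
```

For example, a hypothetical two-question test with one two-option and one four-option question gives `chance_score([2, 4])`, that is, (1/2 + 1/4) / 2 = 0.375.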

The test also included questions that assessed intended behaviours, self-efficacy, attitudes and reading skills (Additional file 1). For questions about intended behaviours and self-efficacy, we dichotomised the responses (e.g., very unlikely or unlikely versus very likely or likely) in the analysis, and we reported the proportions of children for each of the four responses. We used the answers to the reading skills questions as a covariate in exploratory analyses. In the test taken after 1 year, we also collected data on self-reported behaviours (Table 3). We made the comparisons shown in Additional file 2: Table S1 with the corresponding hypotheses. These were not specified in the original protocol for the study but were planned prior to collecting the 1-year follow-up data.

Children were counted as ‘passing’ or ‘failing’ depending on whether they met a pre-specified passing score (a minimum of 13 of 24 questions answered correctly) [6]. We used a second cut-off for a score that indicated mastery of the 12 concepts (a minimum of 20 of 24 questions answered correctly).
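The two cut-offs can be expressed as a small classification rule. The sketch below uses the thresholds stated above (13 and 20 of 24); the function and constant names are ours and are for illustration only.

```python
N_QUESTIONS = 24   # two questions for each of the 12 concepts
PASS_MARK = 13     # pre-specified passing score
MASTERY_MARK = 20  # second cut-off indicating mastery


def classify(n_correct):
    """Return the percentage score and the pass/mastery status for one test."""
    if not 0 <= n_correct <= N_QUESTIONS:
        raise ValueError("n_correct must be between 0 and 24")
    return {
        "percent": 100 * n_correct / N_QUESTIONS,
        "passed": n_correct >= PASS_MARK,
        "mastery": n_correct >= MASTERY_MARK,
    }
```

Because missing answers were scored as wrong, `n_correct` here counts only the questions that were answered correctly.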

We also report attendance and scores on national examinations for the intervention term and for the following term. These comparisons were originally planned as part of the process evaluation [13]. We asked participating schools to provide us with school attendance records and summary score sheets containing all pupils’ end-of-intervention term examination scores. The summary score sheet (Table 4) contains percentage scores for each end-of-intervention term examination and a total score across subjects (Additional file 2: Table S2). The children receive marks for English, mathematics, social studies, and science. We measured the mean difference between the intervention and control groups for each subject and for their total score (out of 100). We hypothesised higher scores in the intervention schools for English (because of the time spent reading and learning new words in English during the IHC lessons), science (based on results of randomised trials of other interventions to teach critical thinking [17,18,19], and stimulation of interest in science), and average scores (due to expected higher scores in English and science).

We asked teachers to record unexpected adverse events and problems that might pose risks to the children or others and to report these to the investigators or to the Institutional Review Board at Makerere University College of Health Sciences. Teachers in the intervention arm of the trial were given instructions for recording adverse events and problems in journals that they were asked to keep [13].

Statistical analysis

We calculated the required sample size using the University of Aberdeen Health Services Research Unit’s Cluster Sample Size Calculator, applying the following assumptions: 70 children per cluster; an intraclass correlation coefficient (ICC) of 0.5, based on ICCs from a meta-analysis of randomised trials of school interventions and an international comparison of ICCs for educational achievement outcomes [20, 21]; 0% as the proportion of children expected to achieve a passing score without our intervention, based on findings from pilot testing; 10% as the smallest difference we wanted to be able to detect; an alpha of 0.05; and a power of 90%. On the basis of these assumptions, we estimated that we would need a minimum of 55 schools in each arm to detect a difference of 10% in the proportion of children with a passing score [14].
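To show why the assumed ICC matters so much, the sketch below computes the standard design effect for equal-sized clusters, 1 + (m − 1) × ICC. This is a textbook formula offered as an illustration; we do not know the exact method implemented in the calculator, so the sketch is not expected to reproduce the figure of 55 schools per arm.

```python
def design_effect(cluster_size, icc):
    """Variance inflation due to clustering, assuming equal cluster sizes."""
    return 1 + (cluster_size - 1) * icc


def effective_sample_size(n_clusters, cluster_size, icc):
    """Number of independent observations that the clustered sample is worth."""
    return n_clusters * cluster_size / design_effect(cluster_size, icc)


# With the assumptions stated in the text (70 children per cluster, ICC 0.5):
deff = design_effect(70, 0.5)                 # 1 + 69 * 0.5 = 35.5
n_eff = effective_sample_size(55, 70, 0.5)    # 3850 / 35.5, about 108 per arm
```

Under these assumptions, each arm of 55 schools with 70 children carries roughly the information of 108 independently sampled children.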

For the primary and secondary outcomes, we used mixed models with a random effects term for the clusters and the stratification variables modelled as fixed effects, using logistic regression for dichotomous outcomes and linear regression for continuous outcomes. The statistical analyses were performed with R version 3.3.2 software (R Core Team, Vienna, Austria). We used a penalized-maximum likelihood logistic regression (R package ‘logistf’) for the secondary outcome of passing scores for teachers because of rare events (only one teacher in the intervention group did not have a passing score). We converted odds ratios from logistic regression analyses to adjusted differences using the intervention group percentage as the reference. All the children and teachers who completed the test were included in the analyses.
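One way to convert an odds ratio to a difference in proportions, taking the intervention group percentage as the reference, is sketched below. This reflects our reading of the description above and is an assumption; the function name and the example values are hypothetical.

```python
def adjusted_difference(odds_ratio, intervention_proportion):
    """Convert an odds ratio (intervention vs control) to a risk difference.

    The observed intervention proportion is taken as the reference, and the
    control proportion implied by the odds ratio is subtracted from it.
    """
    p_int = intervention_proportion
    if not 0 < p_int < 1 or odds_ratio <= 0:
        raise ValueError("need 0 < proportion < 1 and odds_ratio > 0")
    odds_int = p_int / (1 - p_int)
    odds_ctrl = odds_int / odds_ratio          # odds implied for the control group
    p_ctrl = odds_ctrl / (1 + odds_ctrl)
    return p_int - p_ctrl
```

For instance, an odds ratio of 4 with 80% of the intervention group passing implies control odds of 1 (50%), so `adjusted_difference(4, 0.8)` is approximately 0.30.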

For questions about intended behaviours and self-efficacy, we dichotomised the responses in the analysis and reported the proportions of children for each of the four response options. For comparisons of how frequently participants in both groups reported hearing treatment claims, we analysed the data as ordinal data using mixed ordinal logistic regression, and we dichotomised the responses.

User testing of the questions about self-reported behaviours by 40 children who did not participate in the trial suggested that the questions are understood by children in Uganda. In addition, we used open-ended questions to ensure that the children understood these questions correctly: ‘Please write down the treatment claim that you last heard. What did they say the treatment would change or not change about someone’s health?’ (Table 5). We coded answers to these questions as ‘correct’ or ‘incorrect’, and we excluded from the comparisons in Table 6 all participants who did not correctly identify the type of treatment (Additional file 2: Table S3) or who did not report a treatment claim. For attendance, we compared rates in the intervention and control groups. For marks, we compared mean exam scores (Additional file 2: Table S5), the proportions of children with passing scores (≥ 35), and the proportions of children with distinction scores (≥ 70).

To explore the risk of bias due to attrition, which was larger in the control schools than in the intervention schools, we conducted two sensitivity analyses. First, we conducted an analysis using inverse probability weighting. In this, the children in each school were given a weight equal to the inverse of the proportion of children in the school who had completed the test. Second, using the Lee bounds approach [22], we calculated upper and lower bounds for the mean difference in test scores. The bounds are constructed by trimming the group with less attrition at the upper and lower tails of the outcome (test score) distribution, respectively. In this analysis, the sample was trimmed in the intervention schools so that the proportion of children included in the analysis was equal for both groups. We did not adjust for covariates in the sensitivity analysis.
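The two sensitivity analyses can be illustrated with a simplified, unadjusted sketch. The function names and the `keep_fraction` parameter are hypothetical, and the sketch ignores clustering; it shows only the weighting rule and the trimming idea, not the analysis code used in the trial.

```python
from statistics import mean


def ipw_weights(enrolled, completed):
    """Weight per school: the inverse of the proportion who completed the test.

    enrolled, completed: dicts mapping a school identifier to a count.
    """
    return {school: enrolled[school] / completed[school] for school in enrolled}


def lee_bounds(less_attrition_scores, more_attrition_scores, keep_fraction):
    """Lower and upper bounds for the mean difference after trimming.

    keep_fraction: share of the less-attrition group to keep so that the
    proportion analysed is equal in both groups (supplied by the caller).
    """
    ordered = sorted(less_attrition_scores)
    n_keep = round(len(ordered) * keep_fraction)
    if n_keep < 1:
        raise ValueError("keep_fraction leaves no observations")
    comparison_mean = mean(more_attrition_scores)
    # Worst case: drop the highest scores from the less-attrition group.
    lower = mean(ordered[:n_keep]) - comparison_mean
    # Best case: drop the lowest scores instead.
    upper = mean(ordered[len(ordered) - n_keep:]) - comparison_mean
    return lower, upper
```

In this trial, the intervention schools would play the role of the less-attrition group, because more of their children completed the test.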

We conducted two additional sensitivity analyses to explore why the effects for the primary outcomes were smaller after 1 year than they were at the end of the intervention term. First, we calculated the differences between effects (adjusted mean differences and odds ratios) between the first and second tests based on mixed models with a random effects term for the clusters (schools) and individuals (who are used twice in these analyses), and the stratification variables modelled as fixed effects, using linear regression for the mean scores and logistic regression for the proportions with a passing score. Second, we estimated the effects at the end of the intervention term, excluding children who did not take the second test, using the same model as described above.

We explored whether there were differences in the effect of the intervention for children with advanced reading skills (all four literacy questions answered correctly) versus basic reading skills (both basic literacy questions correct and one or two of the advanced literacy questions wrong) versus lacking basic reading skills (one or both basic literacy questions wrong). In order to put the effect of the intervention in the context of effect sizes reported for other interventions to improve critical thinking or learning in primary schools [23], we calculated the adjusted standardised mean difference (Hedges’ g) and its 95% confidence interval using formulae described by White and Thomas [24].
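For readers unfamiliar with Hedges’ g, the sketch below shows the conventional calculation for two independent groups, with the usual small-sample correction. It is not the cluster-adjusted calculation of White and Thomas [24] that we used; it ignores clustering and covariate adjustment and is included only to show what the statistic measures.

```python
from math import sqrt


def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Standardised mean difference with small-sample bias correction.

    Assumes two independent groups; clustering is NOT accounted for.
    """
    df = n_1 + n_2 - 2
    pooled_sd = sqrt(((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / df)
    correction = 1 - 3 / (4 * df - 1)  # approximate correction factor J
    return correction * (mean_1 - mean_2) / pooled_sd
```

With large samples, the correction factor is close to 1 and g approaches the simple difference in means divided by the pooled standard deviation.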

Parents of 675 children in either the intervention or control group were recruited to participate in a parallel trial [16]. That trial evaluated the effects of a podcast designed to teach the parents of primary school children nine IHC key concepts, eight of which were included in the primary school resources. We conducted a second subgroup analysis to explore whether having a parent who listened to the podcast improved the scores of the children and whether there was an interaction between the effect of the podcast and the primary school resources. Because the parents allocated to listen to the podcast did not do so until after the children had completed the tests the first time, we only conducted this analysis for the 1-year follow-up study. We used statistical models as described above for this analysis; the main effects of the podcast were also included in these analyses.

Results

All 120 schools that were randomised provided data for the primary outcome measures and were included in the primary analyses. Most of the schools in both groups were urban or semi-urban (Table 7). There were more public schools in the control group (55% versus 50%). For the 1-year follow-up, there were fewer teachers who taught science as their main subject. Teachers in Ugandan primary schools frequently move and switch the major subject that they teach due to changes in staffing. Therefore, changes in the main subject taught by teachers are not unusual. There were otherwise only minor differences in the characteristics of the participants between the end of the intervention term and the 1-year follow-up, and between the intervention and control groups.

Six intervention schools had more than one year 5 class (with a different teacher for each class). This resulted in nine more teachers receiving training and being included in the intervention schools. No teachers were added in the control schools, because the teachers in the control schools did not receive training. For the 1-year follow-up, 78 (92%) of the teachers in the intervention schools and 59 (88%) of the teachers in the control schools completed the same test that the children took at the end of the term.

Altogether, 6787 children completed the 1-year follow-up test (Table 7). As was the case with the test taken at the end of the intervention term, more children completed the follow-up test in the intervention schools (62%) than in the control schools (45%). We think this is because teachers who taught the lessons were more motivated to arrange for the children whom they had taught to take the test. The proportion of girls (55%) and the median age of children (12 years; 25th to 75th percentile, 10 to 14) in the two groups were the same. Most of the children answered all the questions. The proportion of missing values (unanswered questions) for each question was between 0.25% and 3.38%, and the number of missing values was similar between the intervention and control schools (Additional file 2: Table S4).

Only 64 schools provided data on the secondary outcome of school attendance. Ninety-three schools provided data on examination scores for the intervention term, and 99 provided data for the following term (Additional file 2: Table S5).

Primary outcomes and sensitivity analyses

The average score for children in the intervention schools was 68.7% compared with 53.0% in the control schools (Table 8). The adjusted mean difference (based on the regression analysis) was 16.7% (95% CI, 13.9% to 19.5%; P < 0.00001) higher in the intervention than in the control group. The distribution of test scores is shown in Additional file 3. In the intervention schools, 80.1% of the children had a passing score (≥ 13 of 24 correct answers) compared with 51.5% in the control schools (Table 8). The adjusted difference (based on the odds ratio from the logistic regression analysis) was 39.5% more children who passed (95% CI, 29.9% to 47.5%) in the intervention group than in the control group. Although the average score and the proportion of children with a passing score in the intervention group were higher after 1 year than at the end of the intervention term, the differences between the intervention and control schools were smaller, because the scores increased more in the control schools than in the intervention schools between the first and second tests.

We conducted two sensitivity analyses to investigate possible explanations for the smaller effect estimates after 1 year. To explore whether the apparent differences might have occurred by chance alone, we calculated the probability of a difference as large as or larger than what we observed having occurred by chance (Additional file 2: Table S18). It is highly unlikely that the differences in the effect estimates would have occurred by chance (P < 0.00001). To explore whether the differences might reflect bias resulting from differential loss to follow-up, we calculated the effects at the end of the intervention term, excluding children who did not take the second test (Additional file 2: Table S19). The effect estimates are similar. We consider other possible explanations in the ‘Discussion’ section below.

We conducted two sensitivity analyses to assess the potential risk of bias from attrition (i.e., children who did not take the test) (Table 9). There was very little difference between the results of analysis using inverse probability weighting and the primary analysis (Additional file 2: Table S6), suggesting that the results are robust. In the second analysis, we calculated Lee bounds for the mean difference in test scores. This resulted in lower (worst case) and upper (best case) mean differences of 6.4% and 26.6%, respectively (95% CI, 6.6% to 26.5%). This indicates that even with the worst-case scenario, the average test score in the intervention schools was still 6.4% higher than in the control schools. Moreover, the worst-case scenario, which removed 17% of the children with the highest test scores from the intervention group, is unlikely. This is equivalent to assuming that the children in the control schools who did not take the test would have had scores that corresponded to the top 17% of the children in the intervention schools, had they taken the test (Additional file 2: Table S7). It is more likely that the children who were lost to follow-up and did not take the test would have done worse rather than better than the children who did take the test.

Secondary outcomes

The proportion of children with a score indicating mastery of the concepts

In the intervention schools, 28.9% of the children had a score indicating mastery of the 12 key concepts (≥ 20 of 24 correct answers) compared with 4.9% of the children in the control schools (Table 8). The adjusted difference was 25.0% more children in the intervention schools who mastered the concepts (95% CI, 23.2% to 26.5%). This is a larger difference than there was at the end of the term during which the intervention had been delivered (18.0%). The proportion of children with a score indicating mastery increased from 18.6% to 28.9% in the intervention group between the first and second tests, compared with an increase from 0.9% to 4.9% in the control group.

For each concept, the proportion of children who answered both questions correctly

For each concept, the proportion of children who answered both questions correctly was higher in the intervention schools than in the control schools, including for the concept that was not covered in the primary school resources (P < 0.0001 for all 13 concepts after a Bonferroni correction for multiple comparisons) (Table 10).
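The Bonferroni correction mentioned above multiplies each P value by the number of comparisons (here 13) and caps the result at 1. A minimal sketch follows; the function name and the example P values in the usage note are hypothetical.

```python
def bonferroni(p_values):
    """Return Bonferroni-adjusted P values, capped at 1.0."""
    n_comparisons = len(p_values)
    return [min(1.0, p * n_comparisons) for p in p_values]
```

For example, with 13 comparisons an unadjusted P value of 0.000001 becomes 0.000013 after correction, which remains below 0.0001.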

Children’s intended behaviours and self-efficacy

Compared with children in the control schools, children in the intervention schools were more likely to respond that they would find out the basis for a claim (adjusted difference, 8.1%; 95% CI, 3.7% to 12.6%) and to participate in a research study if asked (adjusted difference, 7.7%; 95% CI, 2.0% to 13.5%) (Additional file 2: Table S8). These findings are similar to those we found 1 year earlier. However, there was little if any difference in how likely they were to find out if a claim was based on research (adjusted difference, 2.6%; 95% CI, − 1.9% to 7.2%). This contrasts with what we found 1 year earlier (10.8%; 95% CI, 6.3% to 15.1%).

Self-reported behaviours

Similar to what we found 1 year earlier, children in the intervention schools were more likely to consider it easy to assess whether a claim is based on research than children in the control schools (adjusted difference, 14.8%; 95% CI, 8.9% to 20.5%) (Table 11). They were also more likely to consider it easy to find information about treatments based on research (adjusted difference, 7.2%; 95% CI, 2.6% to 11.5%) (Table 12), whereas 1 year earlier, we had detected little if any difference (Additional file 2: Table S9). We detected little if any difference in how easy children thought it was to assess how sure they could be about the results of research or to assess how relevant research findings are to them. One year earlier, compared with children in the control group, the children in the intervention group were less likely to consider it easy to assess how sure they could be about the results of research.

The children in the intervention schools were more likely to report hearing one or more treatment claims daily or weekly (Table 13) than were children in the control schools (adjusted difference, 7.0%; 95% CI, 0.5% to 12.9%) (Additional file 2: Table S10). The children in the intervention schools were less likely to be very sure or not to know whether a claim could be trusted (Table 14) (adjusted difference, − 15%; 95% CI, − 9.9% to − 19.7%) and more likely to assess the trustworthiness of a claim consistently with what they identified as the basis of the claim (adjusted difference, 7.6%; 95% CI, 3.5% to 11.1%) (Additional file 2: Table S11). However, there were only slight differences in how likely children in the intervention schools were to think about the basis of the last claim that they heard (Table 15) (adjusted difference, 4.1%; 95% CI, − 1.2% to 9.6%) (Additional file 2: Table S12 and S13), as well as in their assessments of the advantages and disadvantages of the most recent treatment they had used (Table 16) (Additional file 2: Table S14). The difference in attendance or examination scores was also small (Additional file 2: Table S5). As reported previously [14], none of the teachers or research assistants who observed the lessons reported any adverse events.

Mean, passing and mastery scores for teachers

After 1 year, most teachers in both the intervention and control groups (98.7% and 85.9%, respectively) had passing scores (adjusted difference, 8.6%; 95% CI, 1% to 55.5%) (Table 8). The teachers in the intervention group were much more likely to have a score indicating mastery of the concepts (67.9% versus 21.9%; adjusted difference, 46.3%; 95% CI, 31.5% to 56.6%). These results are similar to those we found at the end of the intervention term.

Subgroup analyses

As was the case at the end of the intervention term, the intervention still had positive effects 1 year later, regardless of reading skills (Table 17), but with larger effects for children with better reading skills (Additional file 2: Table S15). Compared with the control schools (Table 18), reading skills were better in the intervention schools at the end of the intervention term and after 1 year (Additional file 2: Table S16). They had improved by about the same amount in both the intervention and control schools after 1 year. We did not detect an interaction between having a parent who listened to the podcast and the primary school intervention (Table 19) (adjusted difference for the interaction, 3.8%; 95% CI, − 3.9% to 11.4%) (Additional file 2: Table S17).

Discussion

The large effect that the Informed Health Choices intervention had on the ability of primary school children in Uganda to assess claims about treatment effects was sustained after 1 year. The mean score and the proportions of children with passing and mastery scores increased in the intervention schools (Table 8). However, because the scores in the control schools increased more than the scores in the intervention schools, the differences between the intervention and control schools for the mean score and the proportion of children with a passing score were smaller, albeit still large. On the other hand, the difference in the proportion of children with a mastery score increased.

We considered five possible explanations for these findings, none of which seem likely. First, the apparent differences in the effect estimates between the first and second measurements are unlikely to have occurred by chance alone (Additional file 2: Table S18). Second, bias resulting from differential loss to follow-up is also unlikely to explain the differences (Additional file 2: Table S19). A third possible explanation is that there was a learning effect from taking the test the first time, which was greater in the control schools than in the intervention schools. It is possible that the learning effect of taking the test alone would be greater than the added learning effect of taking the test after having been exposed to the IHC lessons. ‘Testing effects’ (gains in learning that occur when students take a practice test) are well documented [25, 26]. They occur with and without feedback [26] and for higher-level thinking (‘application’ in Bloom’s taxonomy) as well as for recall of basic facts [25]. However, most studies investigating testing effects have been conducted over a much shorter time frame [26], and we are not aware of any studies that have documented a difference in testing effects between students who studied before taking a practice test and others who did not study. A fourth possible explanation is that children learn to think critically about treatment claims naturally as they grow older or through the existing curriculum, and the control schools were catching up with the intervention schools because of this. However, as documented in our process evaluation, the content of the lessons was new for all of the teachers and not something that they had previously taught. Furthermore, we did not deliver the learning resources to the control schools until after the follow-up data had been collected. Fifth, it also seems unlikely that the improvement was due to an improvement in reading skills in the control schools, because the change in reading skills was similar in the intervention and control schools.

The effects that we found for the children for each IHC key concept, as well as the effects that we found for the teachers, were similar to those we found at the end of the intervention term. Overall, these findings support the conclusion that the effects of the intervention were sustained, even though we are unable to explain why the children’s scores increased more in the control schools than in the intervention schools.

Other findings provide modest support for the conclusion that the children in the intervention schools were more likely to use what they had learned. The children in the intervention schools remained more likely than those in control schools to find out the basis for a treatment claim, more confident in their ability to assess whether a treatment claim is based on research, and more likely to participate in a research study if asked. They also appeared to be somewhat more aware of treatment claims, more sceptical of treatment claims, and more likely to assess the trustworthiness of treatment claims. However, all of these differences were smaller than the difference for the primary outcome measures. Moreover, at the end of the intervention term, children in the intervention schools were more likely than children in the control schools to say they would find out if a treatment claim was based on research, but after 1 year there was little difference.

The data we were able to collect for attendance and national examinations were incomplete, but based on those data, there was little difference between children in the intervention and control schools (Table 20). This contrasts with findings of studies in the United Kingdom, which have shown beneficial effects of critical thinking or meta-cognition interventions on academic achievement [17,18,19]. Possible explanations for this include the limitations of the data we were able to collect for these outcomes and differences between the interventions and the contexts in which they were delivered.

The main limitations of our follow-up study are similar to those discussed in our report of effects found immediately after the intervention [14]. First, we cannot rule out some degree of bias due to attrition. However, sensitivity analyses suggest that the effect estimates are robust. Second, we used an outcome measure that we developed ourselves. Studies that use outcome measures developed by the study authors may be more likely to find larger effects than studies that use established measures of critical thinking [23]. We developed the outcome measure because there was no pre-existing outcome measure suitable for our study [8]. Although we have demonstrated the validity and reliability of the outcome measure [6, 7, 9, 10], one should be cautious about comparing our results with the effects of other critical thinking interventions. Moreover, we are unaware of any other directly comparable studies [20, 23, 27,28,29,30]. Other interventions in primary schools have been found to improve critical thinking [23], but these studies have been conducted in high-income countries, few have measured outcomes after 1 year, and neither the interventions nor the outcome measures are directly comparable [27, 29].

It remains uncertain how transferable the findings of this study are to other countries. However, pilot testing in Kenya, Norway and Rwanda suggests that it may be possible to use the IHC primary school resources without substantial modifications. They have already been translated into Kiswahili, Kinyarwanda, Spanish, French and Farsi. There are plans or expressions of interest to translate them into other languages, including Chinese, German and Italian. Pilot studies have been completed or planned in several other countries, including Ireland and South Africa. The resources are open access, and we have prepared a guide for translating, contextualising and testing them [31].

However, we believe that a one-off intervention is unlikely to have large long-term effects on decision-making, health behaviours or health. Rather, we view this as the first step in developing a set of interventions for a spiral curriculum [32, 33]. Using this approach, some of the IHC key concepts would be introduced, as we did in this study. Then those concepts would be reinforced in subsequent cycles, and other, more complex concepts would be introduced.

Conclusions

It is possible to teach young children in a low-income country to think critically about the trustworthiness of claims about the benefits and harms of treatments, and children retain what they have learned for at least 1 year. In this study, we were also able to document modest effects on self-reported behaviours; we relied on self-report because young children seldom make actual health choices independently. We believe it is highly desirable to begin teaching the IHC key concepts at a young age, and we have shown that this is possible.

Availability of data and materials

The data files for the 1-year follow-up are available from the Norwegian Centre for Research Data (http://www.nsd.uib.no/nsd/english/index.html).

Abbreviations

- IHC: Informed Health Choices Project

References

1. Chalmers I, Oxman AD, Austvoll-Dahlgren A, Ryan-Vig S, Pannell S, Sewankambo N, et al. Key Concepts for Informed Health Choices: a framework for helping people learn how to assess treatment claims and make informed choices. BMJ Evid Based Med. 2018;23(1):29–33.

2. Austvoll-Dahlgren A, Oxman AD, Chalmers I, Nsangi A, Glenton C, Lewin S, et al. Key concepts that people need to understand to assess claims about treatment effects. J Evid Based Med. 2015;8:112–25.

3. Nsangi A, Semakula D, Oxman AD, Sewankambo NK. Teaching children in low income countries to assess claims about treatment effects: a prioritisation of key concepts. J Evid Based Med. 2015;8(4):173–80.

4. Informed Health Choices Group. Teachers’ guide for the Health Choices Book: learning to think carefully about treatments. A health science book for primary school children. Oslo: Norwegian Institute of Public Health; 2016. https://www.informedhealthchoices.org/wp-content/uploads/2016/08/IHC-V3-Teachers-guide_with-cover_Nov2016_lowres.pdf. Accessed 11 Feb 2019.

5. Nsangi A, Semakula D, Rosenbaum S, Oxman AD, Oxman M, Morelli A, et al. Development of the Informed Health Choices resources in four countries to teach primary school children to assess claims about treatment effects. 2019. In press. https://www.informedhealthchoices.org/wp-content/uploads/2016/08/IHC-primary-school-resources-development_IHC-Working-paper-2017.pdf

6. Davies A, Gerrity M, Nordheim L, Okebukola PO, Opiyo N, Sharples J, et al. Measuring ability to assess claims about treatment effects: establishment of a standard for passing and mastery. In: IHC Working Paper. Oslo: Norwegian Institute of Public Health; 2017. http://www.informedhealthchoices.org/wp-content/uploads/2016/08/Claim-cut-off-IHC-Working-Paper-2017-01-09.pdf. Accessed 11 Feb 2019.

7. Semakula D, Nsangi A, Guttersrud Ø, Oxman AD, Sewankambo NK, Austvoll-Dahlgren A. Measuring ability to assess claims about treatment effects in English and Luganda: evaluation of multiple-choice questions from the “Claim Evaluation Tools” database using Rasch modelling. In: Informed Health Choices Working Paper, 2017. Oslo: Norwegian Institute of Public Health; 2017. https://www.informedhealthchoices.org/wp-content/uploads/2016/08/Claim-2nd-Rasch-analysis-in-Uganda-2017-03-17.pdf. Accessed 11 Feb 2019.

8. Austvoll-Dahlgren A, Nsangi A, Semakula D. Interventions and assessment tools addressing key concepts people need to know to appraise claims about treatment effects: a systematic mapping review. Syst Rev. 2016;5:215.

9. Austvoll-Dahlgren A, Semakula D, Nsangi A, Oxman AD, Chalmers I, Rosenbaum S, et al. Measuring ability to assess claims about treatment effects: the development of the ‘Claim Evaluation Tools’. BMJ Open. 2017;7(5):e013184.

10. Austvoll-Dahlgren A, Guttersrud Ø, Semakula D, Nsangi A, Oxman AD, et al. Measuring ability to assess claims about treatment effects: a latent trait analysis of items from the ‘Claim Evaluation Tools’ database using Rasch modelling. BMJ Open. 2017;7:e013185.

11. Informed Health Choices Group. The Health Choices Book: Learning to think carefully about treatments. A health science book for primary school children. Oslo: Norwegian Institute of Public Health; 2016.

12. Primary School Resources. Informed Health Choices. https://www.informedhealthchoices.org/primary-school-resources/. Accessed 11 Feb 2019.

13. Nsangi A, Semakula D, Glenton C, Lewin S, Oxman AD, Oxman M, et al. Informed Health Choices intervention to teach primary school children in low-income countries to assess claims about treatment effects: process evaluation. BMJ Open. 2019;9(9):e030787.

14. Nsangi A, Semakula D, Oxman AD, Austvoll-Dahlgren A, Oxman M, Rosenbaum S, et al. Effects of the Informed Health Choices primary school intervention on the ability of children in Uganda to assess the reliability of claims about treatment effects: a cluster-randomised controlled trial. Lancet. 2017;390(10092):374–88.

15. Nsangi A, Semakula D, Oxman AD, Oxman M, Rosenbaum S, Austvoll-Dahlgren A, et al. Does the use of the Informed Healthcare Choices (IHC) primary school resources improve the ability of grade-5 children in Uganda to assess the trustworthiness of claims about the effects of treatments: protocol for a cluster-randomised trial. Trials. 2017;18:223.

16. Semakula D, Nsangi A, Oxman AD, Oxman M, Austvoll-Dahlgren A, Rosenbaum SE, et al. Effects of the Informed Health Choices podcast on the ability of parents of primary school children in Uganda to assess claims about treatment effects: a randomised controlled trial. Lancet. 2017;390(10092):389–98.

17. Education Endowment Foundation. Meta-cognition and self-regulation. The Sutton Trust Education Endowment Foundation Teaching and Learning Toolkit. London: Education Endowment Foundation; 2018. https://educationendowmentfoundation.org.uk/evidence-summaries/teaching-learning-toolkit/meta-cognition-and-self-regulation/. Accessed 11 Feb 2019.

18. Hanley P, Slavin R, Elliot L. Thinking, doing, talking science: evaluation report and executive summary. London: Education Endowment Foundation; 2015. https://v1.educationendowmentfoundation.org.uk/uploads/pdf/Oxford_Science.pdf. Accessed 11 Feb 2019.

19. Gorard S, Siddiqui N, See BH. Philosophy for children: evaluation report and executive summary. London: Education Endowment Foundation; 2015. https://educationendowmentfoundation.org.uk/public/files/Support/Campaigns/Evaluation_Reports/EEF_Project_Report_PhilosophyForChildren.pdf. Accessed 11 Feb 2019.

20. McEwan PJ. Improving learning in primary schools of developing countries: a meta analysis of randomised experiments. Rev Educ Res. 2015;85:385–94.

21. Zopluoglu C. A cross-national comparison of intra-class correlation coefficient in educational achievement outcomes. J Meas Eval Educ Psychol. 2012;3:242–78.

22. Lee DS. Training, wages, and sample selection: estimating sharp bounds on treatment effects. Rev Econ Stud. 2009;76:1071–102.

23. Abrami PC, Bernard RM, Borokhovski E, Waddington DI, Wade CA, Persson T. Strategies for teaching students to think critically: a meta analysis. Rev Educ Res. 2015;85:275–314.

24. White IR, Thomas J. Standardised mean differences in individually-randomised and cluster randomised trials, with applications to meta-analysis. Clin Trials. 2005;2:141–51.

25. Marsh EJ, Roediger HL, Bjork RA, Bjork EL. The memorial consequences of multiple-choice testing. Psychon Bull Rev. 2007;14:194–9.

26. Adesope OO, Trevisan DA, Sundararajan N. Rethinking the use of tests: a meta-analysis of practice testing. Rev Educ Res. 2017;87:659–701.

27. Nordheim L, Gundersen MW, Espehaug B, Guttersrud O, Flottorp S. Effects of school based educational interventions for enhancing adolescents’ abilities in critical appraisal of health claims: a systematic review. PLoS One. 2016;11:e0161485.

28. Castle JC, Chalmers I, Atkinson P, et al. Establishing a library of resources to help people understand key concepts in assessing treatment claims: the “Critical thinking and Appraisal Resource Library” (CARL). PLoS One. 2017;12:e0178666.

29. Cusack L, Del Mar CB, Chalmers I, Gibson E, Hoffmann TC. Education interventions to improve people’s understanding of key concepts in assessing the effects of health interventions: a systematic review. Syst Rev. 2018;7:68.

30. Snilstveit B, Stevenson J, Phillips D, et al. Interventions for improving learning outcomes and access to education in low- and middle-income countries: a systematic review. London: International Initiative for Impact Evaluation; 2015. http://www.3ieimpact.org/en/evidence/systematic-reviews/details/259/. Accessed 11 Feb 2019.

31. Informed Health Choices Group. Guide for piloting the Informed Health Choices (IHC) school resources. In: Informed Health Choices Working Paper. Oslo: Norwegian Institute of Public Health; 2017. http://www.informedhealthchoices.org/wp-content/uploads/2016/08/GUIDE-for-piloting-IHC-primary-school-resources.pdf. Accessed 11 Feb 2019.

32. Harden RM, Stamper N. What is a spiral curriculum? Med Teach. 1999;21:141–3.

33. Bruner JS. The process of education. Cambridge: Harvard University Press; 1960.

34. Kopelman LM. Minimal risk as an international ethical standard in research. J Med Philos. 2004;29:351–78.

35. Weijer C, Grimshaw JM, Taljaard M, et al. Ethical issues posed by cluster randomized trials in health research. Trials. 2011;12:100.

Acknowledgements

We are grateful to the Global Health and Vaccination Research (GLOBVAC) programme of the Research Council of Norway for support of this research and to the UK National Institute for Health Research for supporting Iain Chalmers and the James Lind Initiative. This work was also partially supported by a Career Development Award from the DELTAS (Developing Excellence in Leadership, Training and Science) Africa Initiative (grant DEL-15-011 to THRiVE-2). The DELTAS Africa Initiative is an independent funding scheme of the African Academy of Sciences (AAS)’s Alliance for Accelerating Excellence in Science in Africa (AESA) and supported by the New Partnership for Africa’s Development Planning and Coordinating Agency (NEPAD Agency) with funding from the Wellcome Trust (grant 107742/Z/15/Z) and the UK government. The views expressed in this publication are those of the author(s) and not necessarily those of AAS, NEPAD Agency, Wellcome Trust or the UK government. Alun Davies, Lena Nordheim, Peter O. Okebukola, Newton Opiyo, Jonathan Sharples, Helen Wilson and Charles Shey Wiysonge determined the cut-off scores for passing and mastery. Miriam Grønli was responsible for the textbook colouring, and Nora Rosenbaum assisted. Aisha Hashi, Sara Jaber, Rida Shah and Katie Tveiten helped test prototypes. Michael Mugisha, Anne-Marie Uwitonze and Jenny Moberg helped with piloting and user-testing an earlier version of the learning resources. We thank Dr. Daniel Nkaada at the Ugandan Ministry of Education for technical guidance; Sarah Natunga at the National Curriculum Development Centre in Uganda for reviewing the materials; Martin Mutyaba, Esther Nakyejwe, Margaret Nabatanzi, Hilda Mwebaza, Peter Lukwata, Rita Tukahirwa, David Ssimbwa, Adonia Sebulime, Enock Steven Ddamulira and Solomon Segawa for their help with data management; and all the research assistants who helped with data collection and entry. We also thank the Informed Health Choices advisory group. 
We are especially grateful to the many teachers and children in Uganda, Kenya, Rwanda and Norway who helped with the development of the Informed Health Choices primary school resources and to all the children, teachers and head teachers at the schools who participated in this trial.

Funding

This trial was funded by the Research Council of Norway (project number 220603/H10). The funder had no role in the study design, data collection, data analysis, data interpretation or writing of the report. The principal investigator had full access to all the data in the study and had final responsibility for the decision to submit the manuscript for publication.

Author information

Contributions

AN and DS are the principal investigators. They drafted the protocol with help from the other investigators and were responsible for the day-to-day management of the trial. NKS and ADO had primary responsibility for overseeing the trial. MO and SR had primary responsibility for developing the primary school resources. AM shared primary responsibility for developing the teachers’ guide. All the investigators other than KYD contributed to the development of the resources and to the protocol. AAD had primary responsibility for developing and validating the outcome measure. AN and DS had primary responsibility for data collection. KYD did the statistical analysis. The Norwegian Institute of Public Health, recipient of the grant from the Research Council of Norway, is the coordinating centre for the Informed Health Choices project. ADO, SR, AAD and IC are principal members of the coordinating group for the trial and, together with NKS and the principal investigators, acted as the steering committee for the trial. They were responsible for final decisions about the protocol and reporting of the results. All the investigators, including CG, SL, MK and AF, reviewed the manuscript, provided input, and agreed on the final version for publication. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval was obtained from the institutional review board at Makerere University College of Health Sciences School of Medicine (reference number 2013-105) and the Uganda National Council for Science and Technology (reference number SS3328) at the beginning of the study, and renewal of approval was sought for the follow-up study. Informed consent for all grade 5 classes to participate in the trial was obtained from school heads (the head teacher or school director) and grade 5 teachers. We provided the head teacher of each school with information about the study and obtained written consent from them on behalf of their school to participate in the first trial (at the end of the intervention term) and the second trial (1-year follow-up). In addition, we obtained written consent from the primary 5 (year 5 of primary school) teachers identified by the head teachers. Informed consent was not required from the children or their parents. We did not obtain assent from individual primary 5 children or consent from their parents, because the intervention posed minimal risk and no more risk than other teaching materials [34], almost none of which have been evaluated [20, 30]. Informed consent by individual children or their parents, in effect, would be meaningless once the decision to participate was taken by the head teacher and the teachers, who have the responsibility and authority to make decisions about lesson plans and the administration of tests [35]. Individual children and their parents had the same right to refuse participation as they do for any other lesson or test in primary schools.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

The claim evaluation tools.

Additional file 2: Table S1.

Comparisons related to self-reported behaviours in the 1-year follow-up. Table S2. Ranges of marks and points awarded for each subject. Table S3. Exclusion criteria for self-reported behaviours. Table S4. Number of missing values for each question. Table S5. Attendance and national examinations. Table S6. Sensitivity analyses – 1-year follow-up. Table S7. Attrition, differences in test scores across strata of schools. Table S8. Intended behaviours – 1-year follow-up. Table S9. Self-efficacy. Table S10. Self-reported behaviour – awareness of treatment claims. Table S11. Self-reported behaviour – assessment of trustworthiness of treatment claims. Table S12. Consistent (correct) answers regarding certainty about treatment claims. Table S13. Self-reported behaviour – assessment of the basis of treatment claims. Table S14. Self-reported behaviour – assessment of advantages and disadvantages of treatments. Table S15. Subgroup analysis – reading skills. Table S16. Differences in reading skills. Table S17. Subgroup analysis – parent who listened to the podcast. Table S18. Exploratory analyses – P values for differences between first (end of intervention term) and second (1-year follow-up) effects. Table S19. Exploratory analyses excluding children who did not take the test both times.

Additional file 3.

Distribution of scores and curves.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Nsangi, A., Semakula, D., Oxman, A.D. et al. Effects of the Informed Health Choices primary school intervention on the ability of children in Uganda to assess the reliability of claims about treatment effects, 1-year follow-up: a cluster-randomised trial. Trials 21, 27 (2020). https://doi.org/10.1186/s13063-019-3960-9


DOI: https://doi.org/10.1186/s13063-019-3960-9