Abstract

Background

The CONSORT (Consolidated Standards of Reporting Trials) Statement was developed to help biomedical researchers report randomised controlled trials (RCTs) transparently. We have developed an extension to the CONSORT 2010 Statement for social and psychological interventions (CONSORT-SPI 2018) to help behavioural and social scientists report these studies transparently.

Methods

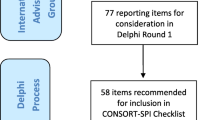

Following a systematic review of existing reporting guidelines, we conducted an online Delphi process to prioritise the list of potential items for the CONSORT-SPI 2018 checklist identified from the systematic review. Of 384 international participants, 321 (84%) participated in both rating rounds. We then held a consensus meeting of 31 scientists, journal editors, and research funders (March 2014) to finalise the content of the CONSORT-SPI 2018 checklist and flow diagram.

Results

CONSORT-SPI 2018 extends 9 items (14 including sub-items) from the CONSORT 2010 checklist, adds a new item (with 3 sub-items) related to stakeholder involvement in trials, and modifies the CONSORT 2010 flow diagram. This Explanation and Elaboration (E&E) document is a user manual to enhance understanding of CONSORT-SPI 2018. It discusses the meaning and rationale for each checklist item and provides examples of complete and transparent reporting.

Conclusions

The CONSORT-SPI 2018 Extension, this E&E document, and the CONSORT website (www.consort-statement.org) are helpful resources for improving the reporting of social and psychological intervention RCTs.

Background

CONSORT-SPI 2018 explanation and elaboration

The CONSORT (Consolidated Standards of Reporting Trials) Statement was developed to help authors report randomised controlled trials (RCTs) [1]. It has improved the quality of reports in medicine [2,3,4,5], and has been officially endorsed by over 600 journals and prominent editorial groups [6]. A smaller number of journals have implemented CONSORT—particularly its extension statements—as a requirement for the manuscript submission, peer-review, and editorial decision-making process [6, 7]. There are extensions of the CONSORT Statement (http://www.consort-statement.org/extensions) for specific trial designs [8,9,10,11], types of data (e.g. patient-reported outcomes, harms, and information in abstracts) [12,13,14], and interventions [15,16,17].

Several reviews have shown that RCTs of social and psychological interventions are often not reported with sufficient accuracy, comprehensiveness, and transparency to replicate these studies, assess their quality, and understand for whom and under what circumstances the evaluated intervention should be delivered [18,19,20,21,22]. Moreover, behavioural and social scientists may be prevented from reproducing or synthesising previous studies because trial protocols, outcome data, and the materials required to implement social and psychological interventions are often not shared [23,24,25,26,27,28]. These inefficiencies contribute to suboptimal dissemination of effective interventions [29, 30], overestimation of intervention efficacy [31], and research waste [29]. Transparent and detailed reporting of social and psychological intervention RCTs is needed to minimise reporting biases and to maximise the credibility and utility of this research evidence [32, 33].

We developed an extension of the CONSORT 2010 Statement for Social and Psychological Interventions (CONSORT-SPI 2018) [34]. To delineate the scope of CONSORT-SPI 2018, we defined interventions by their mechanisms of action [35, 36]. We define social and psychological interventions as actions intended to modify processes and systems that are social and psychological in nature (such as cognitions, emotions, behaviours, norms, relationships, and environments) and are hypothesised to influence outcomes of interest [37, 38]. Social and psychological interventions may be offered to individuals who request them or as a result of policy, may operate at different levels (e.g., individual, group, place), and are usually “complex” [19]. CONSORT-SPI 2018 is designed primarily for reports of RCTs, though some parts of this guidance may be useful for researchers conducting other types of clinical trials [39] or who are interested in developing and evaluating complex interventions [40]. In addition, although terms in this report are most appropriate for parallel-group trials, the guidance is designed to apply to other designs (e.g. stepped wedge and N of 1).

Methods

We previously reported the methods used to develop CONSORT-SPI 2018 [41]. In summary, we first conducted systematic reviews of existing guidance and quality of trial reporting [18]. Second, we conducted an international online Delphi process between September 2013 and February 2014 to prioritise the list of potential items for the CONSORT-SPI 2018 checklist and flow diagram that were identified in the systematic review. Survey items can be accessed at the project’s ReShare site: 10.5255/UKDA-SN-851981. Third, we held a consensus meeting to finalise the content of the checklist and flow diagram. The meeting was held in March 2014 and comprised 31 scientists, journal editors, and research funders. A writing group drafted CONSORT-SPI 2018, and consensus group participants provided feedback and agreed to the final manuscript for the CONSORT-SPI 2018 Extension Statement [42], and this Explanation and Elaboration (E&E) document (Additional file 1: Table S1).

The CONSORT-SPI 2018 checklist extends 9 of the 25 items (incorporating 14 sub-items) found in CONSORT 2010 (Table 1; new items are in the ‘CONSORT-SPI 2018’ column) and includes a modified flow diagram. Participants also voted to add a new item about stakeholder involvement, and they recommended modifications to existing CONSORT 2010 checklist items (Table 2). This E&E document briefly summarises the content from the CONSORT 2010 E&E document for each CONSORT 2010 item [43], tailored to a behavioural and social science audience, and it provides an explanation and elaboration for the new items in CONSORT-SPI 2018. Specifically, for each item from CONSORT 2010 and CONSORT-SPI 2018, this E&E provides the rationale for the checklist item and examples of reporting for a behavioural and social science audience (Additional file 2: Table S2). Throughout this article, we use the term ‘participants’ to mean ‘participating units’ targeted by interventions, which might be individuals, groups, or places (i.e. settings or locations) [44]. While we include a brief statement about each item in the CONSORT 2010 checklist, readers can find additional information about these items on the CONSORT website (www.consort-statement.org) and the CONSORT 2010 E&E [43].

Results and Discussion

Explanation and elaboration of the CONSORT-SPI

Title and abstract

Item 1a: identification as a randomised trial in the title

Placing the word ‘randomised’ in the title increases the likelihood that an article will be indexed correctly in bibliographic databases and retrieved in electronic searches [45]. Authors should consider providing information in the title to assist interested readers, such as the name of the intervention and the problem that the trial addresses [46]. We advise authors to avoid uninformative titles (e.g. catchy phrases or allusions) because they reduce space that could be used to help readers identify relevant manuscripts [45, 46].

Item 1b: structured summary of trial design, methods, results, and conclusions (for specific guidance, see CONSORT for Abstracts) [13, 47]

Abstracts are the most widely read section of manuscripts [46], and they are used for indexing reports in electronic databases [45]. Authors should follow the CONSORT Extension for Abstracts, which provides detailed advice for structured journal article abstracts and for conference abstracts. We have tailored the CONSORT Extension for Abstracts for social and psychological intervention trials with relevant items for the objective, trial design, participants, and interventions from the CONSORT-SPI 2018 checklist (Table 3 and Additional file 3) [47].

Introduction

Item 2a: scientific background and explanation of rationale

A structured introduction should describe the rationale for the trial and how the trial contributes to what is known [48]. In particular, the introduction should describe the targeted problem or issue [49] and what is already known about the intervention, ideally by referencing systematic reviews [46].

Item 2b: specific objectives or hypotheses

The objectives summarise the research questions, including any hypotheses about the expected magnitude and direction of intervention effects [48, 50]. For social and psychological interventions that have multiple units of intervention and multiple outcome assessments (e.g. individuals, groups, places), authors should specify to whom or to what each objective and hypothesis applies.

CONSORT-SPI 2018 item 2b: if pre-specified, how the intervention was hypothesised to work

Describing how the interventions in all groups (i.e. all experimental and comparator groups) were expected to affect outcomes provides important information about the theory underlying the interventions [46]. For each intervention evaluated, authors should describe the 'mechanism of action' [51], also known as the 'theory of change' [52], 'programme theory' [53], or 'causal pathway' [54]. Authors should state how interventions were thought to affect outcomes prior to the trial, and whether the hypothesised mechanisms of action were specified a priori, ideally with reference to the trial registration and protocol [55]. Specifically, authors should report: how the components of each intervention were expected to influence modifiable psychological and social processes, how influencing these processes was thought to affect the outcomes of interest, the role of context, facilitators of and barriers to intervention implementation, and potential adverse events or unintended consequences [48, 56]. Graphical depictions—such as a logic model or analytic framework—may be useful [19].

Methods: trial design

Item 3a: description of trial design (such as parallel, factorial) including allocation ratio

Unambiguous details about trial design help readers assess the suitability of trial methods for addressing trial objectives [46, 57], and clear and transparent reporting of all design features of a trial facilitates reproducibility and replication [58]. Authors should explain their choice of design (especially if it is not an individually randomised, two-group parallel trial) [9]; state the allocation ratio and its rationale; and indicate whether the trial was designed to assess the superiority, equivalence, or noninferiority of the interventions [10, 59, 60].

CONSORT-SPI 2018 item 3a: if the unit of random assignment is not the individual, please refer to CONSORT for Cluster Randomised Trials

Randomising at the cluster level (e.g. schools) has important implications for trial design, analysis, inference, and reporting. For cluster randomised trials, authors should follow the CONSORT Extension to Cluster Randomised Trials. Because many social and psychological interventions are cluster randomised, we provide the extended items from this checklist in Tables 4 and 5 [8]. Authors should also report the unit of randomisation, which might be social units (e.g. families), organisations (e.g. schools, prisons), or places (e.g. neighbourhoods), and specify the unit of each analysis, especially when the unit of analysis is not the unit of randomisation (e.g. randomising at the cluster level and analysing outcomes assessed at the individual level).

Item 3b: important changes to methods after trial commencement (such as eligibility criteria), with reasons

Deviations from planned trial methods are common, and not necessarily associated with flawed or biased research. Changes from the planned methods are important for understanding and interpreting trial results. A trial report should refer to a trial registration (Item 23) [61,62,63] and protocol (Item 24) developed in advance of assigning the first participant [55], and to a pre-specified statistical analysis plan [64]. The report should summarise all amendments to the protocol and statistical analysis plan, when they were made, and the rationale for each amendment. Because selective outcome reporting is pervasive [65], authors should state any changes to the outcome definitions during the trial.

Methods: participants

Item 4a: eligibility criteria for participants

Eligibility criteria should describe how participants (i.e. individuals, groups, or places) were recruited. Readers need this information to understand who could have entered the trial and the generalisability of findings. Authors should describe all inclusion and exclusion criteria used to determine eligibility, as well as the methods used to screen and assess participants to determine their eligibility [46, 48].

CONSORT-SPI 2018 item 4a: when applicable, eligibility criteria for settings and those delivering the interventions

In addition to the eligibility criteria that apply to individuals, social and psychological intervention trials often have eligibility criteria for the settings where participants will be recruited and interventions delivered, as well as intervention providers [44]. Authors should describe these criteria to help readers compare the trial context with other contexts in which interventions might be used [48, 66, 67].

Item 4b: settings and locations of intervention delivery and where the data were collected

Information about settings and locations of intervention delivery and data collection is essential for understanding trial context. Important details might include the geographic location, day and time of trial activities, space required, and features of the inner setting (e.g. implementing organisation) and outer setting (e.g. external context and environment) that might influence implementation [68]. Authors should refer to the mechanism of action when deciding what information about setting and location to report.

Methods: interventions

Item 5: the interventions for each group with sufficient details to allow replication, including how and when they were actually administered

Complete and transparent information about the content and delivery of all interventions in all groups (experimental and comparator) [44] is vital for understanding, replicating, and synthesising intervention effects [54, 69]. Essential information includes: naming the interventions, what was actually delivered (e.g. materials and procedures), who provided the interventions, how, where, when, and how much [70]. Details about providers should include their professional qualifications and education, expertise or competence with the interventions or area in general, and training and supervision for delivering the interventions [71]. Tables or diagrams showing the sequence of intervention activities, such as the participant timeline recommended in the Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT) 2013 Statement [55], are often useful [72]. Authors should avoid the sole use of labels such as ‘treatment as usual’ or ‘standard care’ because they are not uniform across time and place [48].

CONSORT-SPI 2018 item 5a: extent to which interventions were actually delivered by providers and taken up by participants as planned

Frequently, interventions are not implemented as planned. Authors should describe the actual delivery by providers and uptake by participants of interventions for all groups, including methods used to ensure or assess whether the interventions were delivered by providers and taken up by participants as intended [69]. Quantitative or qualitative process evaluations [51] may be used to assess what providers actually did (e.g. recording and coding sessions), the amount of an intervention that participants received (e.g. recording the number of sessions attended), and contamination across intervention groups [73, 74]. Authors should distinguish planned systematic adaptations (e.g. tailoring) from modifications that were not anticipated in the trial protocol. When this information cannot be included in a single manuscript, authors should use online supplements, additional reports, and data repositories to provide this information.

CONSORT-SPI 2018 item 5b: where other informational materials about delivering the interventions can be accessed

Authors should indicate where readers can find sufficient information to replicate the interventions, such as intervention protocols [75], training manuals [48], or other materials (e.g. worksheets and websites) [54]. For example, new online platforms such as the Open Science Framework allow researchers to share some or all of their study materials freely (https://osf.io).

CONSORT-SPI 2018 item 5c: when applicable, how intervention providers were assigned to each group

Some trials assign specific providers to different conditions to prevent expertise and allegiance from confounding the results. Authors should report whether the same people delivered the experimental and comparator interventions, whether providers were nested within intervention groups, and the number of participants assigned to each provider.

Methods: outcomes

Item 6a: completely define pre-specified outcomes, including how and when they were assessed

All outcomes should be defined in sufficient detail for others to reproduce the results using the trial data [50, 76]. An outcome definition includes: (1) the domain (e.g. depression), (2) the measure (e.g. the Beck Depression Inventory II Cognitive subscale), (3) the specific metric (e.g. a value at a time point, a change from baseline), (4) the method of aggregation (e.g. mean, proportion), and (5) the time point (e.g. 3 months post-intervention) [50]. In addition, authors should report the methods and persons used to collect outcome data, properties of measures or references to previous reports with this information, methods used to enhance measurement quality (e.g. training of outcome assessors), and any differences in outcome assessment between trial groups [46]. Authors also should indicate where readers can access materials used to measure outcomes [24]. When a trial includes a measure (e.g. a questionnaire) that is not available publicly, authors should provide a copy (e.g. through an online repository or as an online supplement).
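As an illustration of the five elements listed above, the following minimal Python sketch records an outcome definition in a structured form and checks that no element has been left blank. The class name `OutcomeDefinition`, its field names, and the example metric and aggregation are hypothetical choices made for this illustration; they are not part of the CONSORT-SPI 2018 checklist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeDefinition:
    """The five elements of a completely defined outcome (hypothetical structure)."""

    domain: str       # e.g. depression
    measure: str      # e.g. a named instrument or subscale
    metric: str       # e.g. value at a time point, change from baseline
    aggregation: str  # e.g. mean, proportion
    time_point: str   # e.g. 3 months post-intervention

    def missing_elements(self) -> list[str]:
        """Return the names of any elements that were left blank."""
        return [name for name, value in vars(self).items() if not value.strip()]


# Example values follow the illustrations given in the text for Item 6a.
primary = OutcomeDefinition(
    domain="depression",
    measure="Beck Depression Inventory II Cognitive subscale",
    metric="change from baseline",
    aggregation="mean",
    time_point="3 months post-intervention",
)

# An empty list means all five elements have been stated.
print(primary.missing_elements())
```

A structure of this kind could be filled in once per outcome when drafting the registration, protocol, and report, which may make later discrepancies between them easier to spot.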

Item 6b: any changes to trial outcomes after the trial commenced, with reasons

All outcomes assessed should be reported. If the reported outcomes differ from those in the trial registration (Item 23) or protocol (Item 24), authors should state which outcomes were added and which were removed. To allow readers to assess the risk of bias from outcome switching, authors should also identify any changes to level of importance (e.g. primary or secondary) [77]. Authors should provide the rationale for any changes made and state whether these were done before or after collecting the data.

Methods: sample size

Item 7a: how sample size was determined

Authors should indicate the intended sample size for the trial and how it was determined, including whether the sample size was determined a priori using a sample size calculation or due to practical constraints. If an a priori sample size calculation was conducted, authors should report the effect estimate used for the sample size calculation and why it was chosen (e.g. the smallest effect size of interest, from a meta-analysis of previous trials). If an a priori sample size calculation was not performed, authors should not present a post hoc calculation, but rather the genuine reason for the sample size (e.g. limitations in time or funding) and the actual power to detect an effect for each result (Item 17).
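To illustrate what an a priori calculation involves, the sketch below computes the number of participants per group for a two-sided comparison of two means, using the widely used normal approximation n = 2(z_{1-α/2} + z_{power})² / d², where d is the standardised mean difference. It assumes equal allocation, individual randomisation, and no attrition; the function name `n_per_group` and the default values for alpha and power are assumptions made for this example, and a real trial would usually need a calculation matched to its design (e.g. clustering, unequal allocation).

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants per group for a two-sided, two-group comparison of means.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 / d^2,
    where d is the standardised mean difference. Assumes equal allocation,
    individual randomisation, and no attrition.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


# A standardised difference of 0.5 with alpha = 0.05 and 80% power.
print(n_per_group(0.5))  # 63 per group under this approximation
```

Reporting the inputs to such a calculation (effect estimate and its source, alpha, power, and any allowance for attrition) lets readers reproduce the intended sample size.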

Item 7b: when applicable, an explanation of any interim analyses and stopping guidelines

Multiple statistical analyses can lead to false-positive results, especially when using stopping guidelines based on statistical significance. Any interim analyses should be described, including which analyses were conducted (i.e. the outcomes and methods of analysis), when they were conducted, and why (particularly whether they were pre-specified [78]). Authors should also describe the reasons for stopping the trial, including any procedures used to determine whether the trial would be stopped early (e.g. regular meetings of a data safety monitoring board) [79].

Methods: randomisation—sequence generation

Item 8a: method used to generate the random allocation sequence

In a randomised trial, participants are assigned to groups by chance using processes designed to be unpredictable. Authors should describe the method used to generate the allocation sequence (e.g. a computer-generated random number sequence), so that readers may assess whether the process was truly random. Authors should not use the term ‘random’ to describe sequences that are deterministic (e.g. alternation, order of recruitment, date of birth).
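As a minimal sketch of a computer-generated sequence, the Python example below assigns each participant independently, with equal probability, to one of the trial arms. The function name `simple_randomisation`, the arm labels, and the seed value are hypothetical; Python's default generator is used here only for illustration, and trials may prefer dedicated randomisation software or services.

```python
import random


def simple_randomisation(n_participants: int, arms: tuple[str, ...], seed: int) -> list[str]:
    """Assign each participant independently and with equal probability to an arm."""
    rng = random.Random(seed)  # recording the seed makes the sequence reproducible
    return [rng.choice(arms) for _ in range(n_participants)]


sequence = simple_randomisation(10, ("intervention", "comparator"), seed=2018)
print(sequence)
```

In contrast, a rule such as alternation or allocation by date of birth is fully predictable from information available at enrolment, which is why such sequences should not be described as random.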

Item 8b: type of randomisation; details of any restriction (such as blocking and block size)

Some trials restrict randomisation to balance groups in size or important characteristics. Blocking restricts randomisation by grouping participants into 'blocks' and by assigning participants using a random sequence within each block [46]. When blocking is used, authors should describe how the blocks were generated, the size of the blocks, whether and how block size varied, and whether trial staff became aware of the block size. Stratification restricts randomisation by creating multiple random allocation sequences based on site or characteristics thought to modify intervention effects [46]. When stratification is used, authors should report why it was used and describe the variables used for stratification, including cut-off values for categories within each stratum. When minimisation is used, authors should report the variables used for minimisation and include the statistical code. When there are no restrictions on randomisation, authors should state that they used ‘simple randomisation’ [43].
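The following sketch illustrates blocking with a fixed block size: within each block, every arm appears equally often and the order is shuffled at random. The function name `blocked_randomisation`, the arm labels, the block size, and the seed are assumptions for this example; it does not cover varying block sizes, stratification, or minimisation.

```python
import random


def blocked_randomisation(
    n_blocks: int, block_size: int, arms: tuple[str, ...], seed: int
) -> list[str]:
    """Permuted-block randomisation with a fixed block size and equal allocation."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # recording the seed makes the sequence reproducible
    sequence: list[str] = []
    for _ in range(n_blocks):
        # Each block contains every arm the same number of times, in random order.
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)
        sequence.extend(block)
    return sequence


allocation = blocked_randomisation(
    n_blocks=3, block_size=4, arms=("intervention", "comparator"), seed=2018
)
print(allocation)
```

Because group sizes are balanced at the end of every block, staff who know a fixed block size may be able to predict the last assignments in a block, which is one reason the text asks authors to report the block size and whether it varied.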

Methods: randomisation—allocation concealment mechanism

Item 9: mechanism used to implement the random allocation sequence, describing any steps taken to conceal the sequence until interventions were assigned

In addition to generating a truly random sequence (Item 8a), researchers should conceal the sequence to prevent foreknowledge of the intervention assignment by persons enrolling and assigning participants. Otherwise, recruitment and allocation could be affected by knowledge of the next assignment. Authors should report whether and how allocation was concealed [80, 81]. When allocation was concealed, authors should describe the mechanism and how this mechanism was monitored to avoid tampering or subversion (e.g. centralised or 'third-party' assignment, automated assignment system, sequentially numbered identical containers, sealed opaque envelopes). While masking (blinding) is not always possible, allocation concealment is always possible.

Methods: randomisation—implementation

Item 10: who generated the random allocation sequence, who enrolled participants, and who assigned participants to interventions

In many individually randomised trials, staff who generate and conceal the random sequence are different from the staff involved in implementing the sequence. This can prevent tampering or subversion [48]. Other procedures may be used to ensure true randomisation in trials in which participants (e.g. groups, places) are recruited and then randomised at the same time. Authors should indicate who carried out each procedure (i.e. generating the random sequence, enrolling participants, and assigning participants to interventions) and the methods used to protect the sequence.

Methods: awareness of assignment

Item 11a: who was aware after assignment to interventions (for example, participants, providers, those assessing outcomes), and how any masking was done

Masking (blinding) refers to withholding information about assigned interventions post-randomisation from those involved in the trial [46]. Masking can reduce threats to internal validity arising from an awareness of the intervention assignment by those who could be influenced by this knowledge. Authors should state whether and how (a) participants, (b) providers, (c) data collectors, and (d) data analysts were kept unaware of intervention assignment. If masking was not done (e.g. because it was not possible), authors should describe the methods, if any, used to assess performance and expectancy biases (e.g. masking trial hypotheses, measuring participant expectations) [82]. Although masking of providers and participants is often not possible, masking outcome assessors is usually possible, even for outcomes assessed through interviews or observations. If this was examined, authors should report the extent to which outcome assessors remained masked to participants’ intervention status.

Item 11b: if relevant, description of the similarity of interventions

Particularly because masking providers and participants is impossible in many social and psychological intervention trials, authors should describe any differences between interventions delivered to each group that could lead to differences in the performance and expectations of providers and participants. Important details include differences in intervention components and acceptability, co-interventions (or adjunctive interventions) that might be available to some groups and not others, and contextual differences between groups (e.g. differences in place of delivery).

Methods: analytical methods

Item 12a: statistical methods used to compare group outcomes

Complete statistical reporting allows the reader to understand the results and to reproduce analyses [48]. For each outcome, authors should describe the methods of analysis, including transformations and adjustment for covariates, and whether the methods of analysis were chosen a priori or decided after data were collected. In the United States, trials funded by the National Institutes of Health must deposit a statistical analysis plan on www.ClinicalTrials.gov with their results [62, 63]. Authors with other funding sources should ascertain whether there are similar requirements. For cluster randomised trials, authors should state whether the unit analysed differs from the unit of assignment, and if applicable, the analytical methods used to account for differences between the unit of assignment, level of intervention, and the unit of analysis [8, 44, 46]. Authors should also note any procedures and rationale for any transformations to the data [46]. To facilitate full reproducibility, authors should report software used to run analyses and provide the exact statistical code [24].

Extended CONSORT-SPI item 12a: how missing data were handled, with details of any imputation method

Missing data are common in trials of social and psychological interventions for many reasons, such as participant discontinuation, missed visits, and participant failure to complete all items or measures (even for participants who have not discontinued the trial) [46]. Authors should report the amount of missing data, evidence regarding the reasons for missingness, and assumptions underlying judgements about missingness (e.g. missing at random) [83]. For each outcome, authors should describe the analysis population (i.e. participants who were eligible to be included in the analysis) and the methods for handling missing data, including procedures to account for missing participants (i.e. participants who withdrew from the trial, did not complete an assessment, or otherwise did not provide data) and procedures to account for missing data items (i.e. questions that were not completed on a questionnaire) [76]. Imputation methods, which aim to estimate missing data based on other data in the dataset, can influence trial results [84]. When imputation is used, authors should describe the variables used for imputation, the number of imputations performed, the software procedures for executing the imputations, and the results of any sensitivity analyses conducted to test assumptions about missing data [84]. For example, it is often helpful to report results without imputation to help readers evaluate the consequences of imputing data.
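As a simple illustration of reporting the amount of missing data, the sketch below counts missing values for one outcome in each trial group. The record layout, the variable name `score_3m`, and the values are invented for this example and are not trial data; `None` is assumed to mark a missing value.

```python
from collections import defaultdict


def missingness_by_group(records: list[dict], outcome: str) -> dict[str, tuple[int, int]]:
    """Return {group: (n_missing, n_total)} for one outcome; None marks a missing value."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    for record in records:
        tally = counts[record["group"]]
        tally[1] += 1  # every randomised participant counts towards the total
        if record.get(outcome) is None:
            tally[0] += 1  # outcome absent or recorded as missing
    return {group: (tally[0], tally[1]) for group, tally in counts.items()}


records = [  # illustrative values only
    {"group": "intervention", "score_3m": 12},
    {"group": "intervention", "score_3m": None},
    {"group": "comparator", "score_3m": 15},
    {"group": "comparator", "score_3m": 9},
]

for group, (missing, total) in missingness_by_group(records, "score_3m").items():
    print(f"{group}: {missing}/{total} missing ({100 * missing / total:.0f}%)")
```

Counts of this kind describe only how much is missing; the reasons for missingness, the assumptions made, and any imputation procedure still need to be reported as described above.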

Item 12b: methods for additional analyses, such as subgroup analyses, adjusted analyses, and process evaluations

In addition to analysing impacts on primary and secondary outcomes, trials often include additional analyses, such as subgroup analyses and mediation analyses to investigate processes of change [51, 85]. All analyses should be reported at the same level of detail. Authors should indicate which subgroup analyses were specified a priori in the trial registration or protocol (Items 23 and 24) and how subgroups were constructed, and they should distinguish confirmatory analyses from exploratory analyses. For adjusted analyses, authors should report the statistical procedures and covariates used and the rationale for these.

Additionally, qualitative analyses may be used to investigate processes of change, implementation processes, contextual influences, and unanticipated outcomes [51]. Authors should indicate whether such analyses were undertaken or are planned (and where they are or will be reported if so). Authors should report methods and results of qualitative analyses according to reporting standards for primary qualitative research [86].

Results: participant flow

Item 13a: for each group, the numbers randomly assigned, receiving intended treatment, and analysed for the outcomes

CONSORT-SPI item 13a: where possible, the number approached, screened, and eligible prior to random assignment, with reasons for non-enrolment

Attrition after randomisation can affect internal validity (i.e. by introducing selection bias), and attrition before or after randomisation can affect generalisability [46]. Authors should report available information about the total number of participants at each stage of the trial, with reasons for non-enrolment (i.e. before randomisation) or discontinuation (i.e. after randomisation). Key stages typically include: approaching participants, screening for potential eligibility, assessment to confirm eligibility, random assignment, intervention receipt, and outcome assessment. As there may be delays between each stage (e.g. between randomisation and initiation of the intervention) [87], authors should include a flow diagram to describe trial attrition in relation to each of these key stages (Fig. 1; Additional file 4).

Item 13b: for each group, losses and exclusions after randomisation, together with reasons

Authors should report participant attrition and data exclusion by the research team for each randomised group at each follow-up point [48]. Authors should distinguish between the number of participants who deviated from the intervention protocol but continued to receive an intervention, discontinued an intervention but continued to provide outcome data, discontinued the trial altogether, and were excluded by the investigators. Authors should provide reasons for each loss (e.g. lost contact, died) and exclusion (e.g. excluded by the investigators because of poor adherence to intervention protocol), and indicate the number of persons who discontinued for unknown reasons.

Results: recruitment

Item 14a: dates defining the periods of recruitment and follow-up

The dates of a trial and its activities provide readers some information about the historical context of the trial [48]. The SPIRIT 2013 Statement includes a table that authors can use to provide a complete schedule of trial activities, including recruitment practices, pre-randomisation assessments, periods of intervention delivery, a schedule of post-randomisation assessments, and when the trial was stopped [55]. In the description, authors should define baseline assessment and follow-up times relative to randomisation. For example, by itself, ‘4-week follow-up’ is unclear and could mean different things if meant after randomisation or after the end of an intervention.

Item 14b: why the trial ended or was stopped

Authors should state why the trial was stopped. Trials might be stopped for reasons decided a priori (e.g. sample size reached and predetermined follow-up period completed) or in response to the results. For trials stopped early in response to interim analyses (Item 7b), authors should state the reason for stopping (e.g. for safety or futility) and whether the stopping rule was decided a priori. If applicable, authors should describe other reasons for stopping, such as implementation challenges (e.g. could not recruit enough participants) or extrinsic factors (e.g. a natural disaster). Authors should indicate whether there are plans to continue collecting outcome data (e.g. long-term follow-up).

Results: baseline data

Item 15: a table showing baseline characteristics for each group

CONSORT-SPI item 15: include socioeconomic variables where applicable

Authors should provide a table summarising all data collected at baseline, with descriptive statistics for each randomised group. This table should include all important characteristics measured at baseline, including pre-intervention data on trial outcomes, and potential prognostic variables. Authors should pay particular attention to topic-specific information related to socioeconomic and other inequalities [88,89,90]. For continuous variables, authors should report the average value and its variability (e.g. mean and standard deviation). For categorical variables, authors should report the numerator and denominator for each category. Authors should not use standard errors and confidence intervals for baseline data because these are inferential (rather than descriptive): inferential statistics assess the probability that observed differences occurred by chance, and all baseline differences in randomised trials occur by chance [91].
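A minimal sketch of the descriptive statistics recommended for a baseline table is given below. It assumes a hypothetical data layout with one continuous variable (`age`) and one categorical socioeconomic variable (`employed`); the values are invented and the variable names are not prescribed by CONSORT-SPI 2018.

```python
from statistics import mean, stdev

# Hypothetical baseline data for each randomised group
baseline = {
    "intervention": {"age": [34, 41, 29, 38], "employed": [True, False, True, True]},
    "comparator": {"age": [36, 30, 44, 33], "employed": [False, False, True, True]},
}


def describe(group):
    """Return descriptive (not inferential) statistics for one group."""
    ages = group["age"]
    employed = group["employed"]
    return {
        "n": len(ages),
        "age_mean": mean(ages),
        "age_sd": stdev(ages),  # sample standard deviation, not standard error
        "employed": f"{sum(employed)}/{len(employed)}",  # numerator/denominator
    }


table = {name: describe(group) for name, group in baseline.items()}
for name, row in table.items():
    print(name, row)
```

The sketch deliberately reports no p values, standard errors, or confidence intervals for baseline comparisons.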

Results: numbers analysed

Item 16: for each group, number included in each analysis and whether the analysis was by original assigned groups

While a flow diagram is helpful for indicating the number of participants at each trial stage, the number of participants included in each analysis often differs across outcomes and analyses [44]. Authors should report the number of participants per intervention group for each analysis, so readers can interpret the results and perform secondary analyses of the data. For each outcome, authors should also identify the analysis population and the method used for handling missing data (Item 12a) [76].

Results: outcomes and estimation

Item 17a: for each outcome, results for each group, and the estimated effect size and its precision (such as 95% confidence interval)

For each outcome in a trial, authors should report summary results for all analyses, including results for each trial group and the contrast between groups, the estimated magnitude of the difference (effect size), the precision or uncertainty of the estimate (e.g. 95% confidence interval or CI), and the number of people included in the analysis in each group. The p value does not describe the precision of an effect estimate, and authors should report precision even if the difference between groups is not statistically significant [46]. For categorical outcomes, summary results for each analysis should include the number of participants with the event of interest. The effect size can be expressed as the risk ratio, odds ratio, or risk difference and its precision (e.g. 95% CI). For continuous outcomes, summary results for each analysis should include the average value and its variance (e.g. mean and standard error). The effect size is usually expressed as the mean difference and its precision (e.g. 95% CI). Summary results are often more clearly presented in a table rather than narratively in text.
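For a continuous outcome, the mean difference and its 95% CI might be computed as in the following Python sketch. It assumes two independent groups and a large-sample normal approximation; a small trial would more appropriately use a t distribution or the trial's pre-specified model. The outcome scores are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_difference_ci(group_a, group_b, level=0.95):
    """Mean difference (a minus b) with a normal-approximation confidence interval."""
    diff = mean(group_a) - mean(group_b)
    # Standard error of the difference between two independent means
    se = sqrt(stdev(group_a) ** 2 / len(group_a) + stdev(group_b) ** 2 / len(group_b))
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return diff, diff - z * se, diff + z * se


# Hypothetical outcome scores at follow-up
intervention = [12, 15, 11, 14, 13]
comparator = [10, 9, 12, 11, 8]

diff, lower, upper = mean_difference_ci(intervention, comparator)
print(f"n = {len(intervention)} vs {len(comparator)}")
print(f"mean difference = {diff:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Printing the number analysed in each group alongside the estimate mirrors the recommendation to report both.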

CONSORT-SPI item 17a: indicate availability of trial data

As part of the growing open-science movement, triallists are increasingly expected to maintain their datasets, linked via trial registrations and posted in trusted online repositories (see http://www.bitss.org/resource-tag/data-repository/), to facilitate reproducibility of reported analyses and future secondary data analyses. Data sharing is also associated with higher citation rates [92]. Authors should indicate whether trial datasets are available and how to obtain them, including any metadata and analytic code needed to replicate the reported analyses [24, 93]. Any legal or ethical restrictions on making the trial data available should be described [93].

Item 17b: for binary outcomes, presentation of both absolute and relative effect sizes is recommended

By themselves, neither relative measures nor absolute measures provide comprehensive information about intervention effects. Authors should report relative effect sizes (e.g. risk ratios) to express the strength of effects and absolute effect sizes (e.g. risk differences) to indicate actual differences in events between interventions [94].
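The following Python sketch illustrates why both measures are informative. It computes a risk ratio and a risk difference with Wald-type 95% CIs for two hypothetical trials, assuming non-zero event counts in both groups; the counts are invented for illustration.

```python
from math import exp, log, sqrt


def binary_effects(events_a, n_a, events_b, n_b, z=1.96):
    """Risk difference and risk ratio (a versus b), each as (estimate, lower, upper)."""
    risk_a = events_a / n_a
    risk_b = events_b / n_b

    # Absolute effect: risk difference with a Wald interval
    rd = risk_a - risk_b
    se_rd = sqrt(risk_a * (1 - risk_a) / n_a + risk_b * (1 - risk_b) / n_b)

    # Relative effect: risk ratio with an interval computed on the log scale
    rr = risk_a / risk_b
    se_log_rr = sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)

    return {
        "risk_difference": (rd, rd - z * se_rd, rd + z * se_rd),
        "risk_ratio": (rr, exp(log(rr) - z * se_log_rr), exp(log(rr) + z * se_log_rr)),
    }


common = binary_effects(30, 100, 20, 100)   # hypothetical common outcome
rare = binary_effects(3, 1000, 2, 1000)     # hypothetical rare outcome

for label, result in (("common", common), ("rare", rare)):
    rr, rd = result["risk_ratio"], result["risk_difference"]
    print(f"{label}: RR {rr[0]:.2f} ({rr[1]:.2f} to {rr[2]:.2f}); "
          f"RD {rd[0]:.3f} ({rd[1]:.3f} to {rd[2]:.3f})")
```

Both hypothetical trials have a risk ratio of 1.5, yet the risk difference is 10 events per 100 participants in one and 1 event per 1000 participants in the other.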

Results: ancillary analyses

Item 18: results of any other analyses performed, including subgroup analyses, adjusted analyses, and process evaluations, distinguishing pre-specified from exploratory

Authors should report the results for each additional analysis described in the methods (Item 12b), indicating the number of analyses performed for each outcome, which analyses were pre-specified, and which analyses were not pre-specified. When evaluating effects for subgroups, authors should report interaction effects or other appropriate tests for heterogeneity between groups, including the estimated difference in the intervention effect between each subgroup with confidence intervals. Comparing tests for the significance of change within subgroups is not an appropriate basis for evaluating differences between subgroups. If reporting adjusted analyses, authors should provide unadjusted results as well. Authors reporting any results from qualitative data analyses should follow reporting standards for qualitative research [86], though adequately reporting these findings will likely require more than one journal article [51].
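One common way to estimate the difference in intervention effect between two subgroups is sketched below in Python. It assumes the subgroups are independent, that each subgroup effect estimate is approximately normally distributed, and that the effect estimates and standard errors are hypothetical values on the same scale.

```python
from math import sqrt
from statistics import NormalDist


def subgroup_interaction(effect_1, se_1, effect_2, se_2, z_crit=1.96):
    """Difference between two subgroup effects with a 95% CI and two-sided p value."""
    diff = effect_1 - effect_2
    se = sqrt(se_1 ** 2 + se_2 ** 2)  # standard error of the difference
    z = diff / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, (diff - z_crit * se, diff + z_crit * se), p


# Hypothetical subgroup estimates: the effect is "significant" within subgroup 1
# (4.0 / 1.5 is about 2.67) but not within subgroup 2 (2.0 / 1.5 is about 1.33)
diff, ci, p = subgroup_interaction(4.0, 1.5, 2.0, 1.5)
print(f"difference = {diff:.2f} (95% CI {ci[0]:.2f} to {ci[1]:.2f}), p = {p:.2f}")
```

In this hypothetical case the interval for the difference between subgroups includes zero, even though the within-subgroup tests point in different directions, which illustrates why comparing within-subgroup significance is not an appropriate basis for claiming a subgroup difference.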

Results: harms

Item 19: all important harms or unintended effects in each group (for specific guidance, see CONSORT for Harms) [14]

Social and psychological interventions have the potential to produce unintended effects, both harmful and beneficial [95]. These may be identified in the protocol and relate to the theory of how the interventions are hypothesised to work (Item 2b) [56], or they may be unexpected events that were not pre-specified for assessment. Harms may include indirect effects such as increased inequalities at the level of groups or places that result from the intervention [89]. When reporting quantitative data on unintended effects, authors should indicate how they were defined and measured, and the frequency of each event per trial group. Authors should report all results from qualitative investigations that identify possible unintended effects because this information may help readers make informed decisions about using interventions in future research and practice.

Discussion: limitations

Item 20: trial limitations, addressing sources of potential bias, imprecision, and, if relevant, multiplicity of analyses

Authors should provide a balanced discussion of the strengths and limitations of the trial and its results. Authors should consider issues related to risks of bias, precision of effect estimates, the use of multiple outcomes and analyses, and whether the intervention was delivered and taken up as planned.

Discussion: generalisability

Item 21: generalisability (external validity, applicability) of the trial findings

Authors should address generalisability, or the extent to which the authors believe that trial results can be expected in other situations [66]. Authors should explain how statements about generalisability relate to the trial design and execution. Key factors to consider discussing include: recruitment practices, eligibility criteria, sample characteristics, facilitators and barriers to intervention implementation, the choice of comparator, what outcomes were assessed and how, length of follow-up, and setting characteristics [46, 66].

Discussion: interpretation

Item 22: interpretation consistent with results, balancing benefits and harms, and considering other relevant evidence

Authors should provide a brief interpretation of findings in light of the trial’s objectives or hypotheses [46]. Authors may wish to discuss plausible alternative explanations for results other than differences in effects between interventions [44]. Authors should contextualise results and identify the additional knowledge gained by discussing how the trial adds to the results of other relevant literature [96], including references to previous trials and systematic reviews. If theory was used to inform intervention development or evaluation (Item 2b), authors should discuss how the results of the trial compare with previous theories about how the interventions would work [97, 98]. Authors should consider describing the practical significance of findings; the potential implications of findings to theory, practice and policy; and specific areas of future research to address gaps in current knowledge [49]. Authors should avoid distorted presentation or 'spin' when discussing trial findings [99, 100].

Important information: registration

Item 23: registration number and name of trial registry

Trial registration is the posting of a minimum information set in a public database, including: eligibility criteria, all outcomes, intervention protocols, and planned analyses [101]. Trial registration aids systematic reviews and meta-analyses, and responds to decades-long calls to prevent reporting biases [102,103,104]. Trial registration is now required for all trials published by journals that endorse the International Committee of Medical Journal Editors guidelines and for all trials funded by the National Institutes of Health in the United States as well as the Medical Research Council and National Institute for Health Research in the UK [62, 63, 105, 106].

Trials should be registered prospectively, before beginning enrolment, normally in a publicly accessible website managed by a registry conforming to established standards [101, 105]. Authors should report the name of the trial registry, the unique identification number for the trial provided by that registry, and the stage at which the trial was registered. If authors did not register their trial, they should report this and the reason for not registering. Registries used in clinical medicine (e.g. www.ClinicalTrials.gov) are suitable for social and psychological intervention trials with health outcomes [107, 108], and several registries exist specifically for social and psychological interventions (http://www.bitss.org/resource-tag/registry/).

Important information: protocol

Item 24: where the full trial protocol can be accessed, if available

Details about trial design should be described in a publicly accessible protocol (e.g. published manuscript, report in a repository) that includes a record of all amendments made after the trial began. Authors should report where the trial protocol can be accessed. Guidance on developing and reporting protocols has recently been published [55]. Authors of social and psychological intervention trials who face difficulty finding a journal that publishes trial protocols could search for journals supporting the Registered Reports format (https://cos.io/rr/) or upload their trial protocols to relevant preprint servers such as PsyArXiv (https://psyarxiv.com/) and SocArXiv (https://osf.io/preprints/socarxiv).

Important information: funding

Item 25a: sources of funding and other support, role of funders

Information about trial funding and support is important in helping readers to identify potential conflicts of interest. Authors should identify and describe all sources of monetary or material support for the trial, including salary support for trial investigators and resources provided or donated for any phase of the trial (e.g. space, intervention materials, assessment tools). Authors should report the name of the persons or entities supported, the name of the funder, and the award number. They should also specifically state if these sources had any role in the design, conduct, analysis, and reporting of the trial, and the nature of any involvement or influence. If funders had no involvement or influence, authors should specifically report this.

CONSORT-SPI item 25b: declaration of any other potential interests

In addition to financial interests, it is important that authors declare any other potential interests that may be perceived to influence the design, conduct, analysis, or reporting of the trial following established criteria [109]. Examples include allegiance to or professional training in evaluated interventions. Authors should err on the side of caution in declaring potential interests. If authors do not have any financial, professional, personal, or other potential interests to declare, they should declare this explicitly.

Important information: stakeholder involvement

CONSORT-SPI new item – Item 26a: any involvement of the intervention developer in the design, conduct, analysis, or reporting of the trial

Intervention developers are often authors of trial reports. Because involvement of intervention developers in trials may be associated with effect sizes [110, 111], authors should report whether intervention developers were involved in designing the trial, delivering the intervention, assessing the outcomes, or interpreting the data. Authors should also disclose close collaborations with the intervention developers (e.g. being a former student of the developer, serving on an advisory or consultancy board related to the intervention), and any legal or intellectual rights related to the interventions, especially if these could lead to future financial interests.

CONSORT-SPI new item – Item 26b: other stakeholder involvement in trial design, conduct, or analyses

Researchers are increasingly called to consult or collaborate with those who have a direct interest in the results of trials, such as providers, clients, and payers [112]. Stakeholders may be involved in designing trials (e.g. choosing outcomes) [76], delivering interventions, or interpreting trial results. Stakeholder involvement may help to better ensure the acceptability, implementability, and sustainability of interventions as they move from research to real-world settings [113]. When applicable, authors should describe which stakeholders were involved, how they were recruited, and how they were involved in various stages of the trial [114]. Authors may find reporting standards on public involvement in research useful [115].

CONSORT-SPI new item – Item 26c: incentives offered as part of the trial

Incentives offered to participants, providers, organisations, and others involved in a trial can influence recruitment, engagement with the interventions, and quality of intervention delivery [116]. Incentives include monetary compensation, gifts (e.g. meals, transportation, access to services), academic credit, and coercion (e.g. prison diversion) [48]. When incentives are used, authors should make clear at what trial stage and for what purpose incentives are offered, and what these incentives entail. Authors also should state whether incentives differ by trial group, such as compensation for participants receiving the experimental rather than the comparator interventions.

Conclusions

The results of RCTs are of optimal use when authors report their methods and results accurately, completely, and transparently. The CONSORT-SPI 2018 checklist can help researchers to design and report future trials, and provide guidance to peer reviewers and editors for evaluating manuscripts, to funders in setting reporting criteria for grant applications, and to educators in teaching trial methods. Each item should be addressed before or within the main trial paper (e.g. in the text, as an online supplement, or by reference to a previous report). The level of detail required for some checklist items will depend on the nature of the intervention being evaluated [70], the trial phase [117], and whether the trial involves an evaluation of process or implementation [74].

CONSORT-SPI 2018, like all CONSORT guidance, is an evolving document with a continuous and iterative process of assessment and refinement over time. The authors welcome feedback about the checklist and this E&E document, particularly as new evidence in this area and greater experience with this guidance develop. For instance, interested readers can provide feedback on whether some of the new items in CONSORT-SPI 2018 that are applicable to other types of trials (e.g. handling missing data and availability of trial data) should be incorporated into the next update of the main CONSORT Statement. We also encourage journals in the behavioural and social sciences to join the hundreds of medical journals that endorse CONSORT guidelines, and to inform us of such endorsement. The ultimate benefit of this collective effort should be better practices leading to better health and quality of life.

Abbreviations

- CI: Confidence interval
- CONSORT: Consolidated Standards of Reporting Trials
- CONSORT-SPI: Consolidated Standards of Reporting Trials for Social and Psychological Interventions
- E&E: Explanation and Elaboration
- RCT: Randomised controlled trial
- SPIRIT: Standard Protocol Items: Recommendations for Interventional Trials
- UK: United Kingdom
- US: United States

References

Begg C, Cho M, Eastwood S, Horton R, Moher D, Olkin I. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996;276:637–9.

Moher D, Jones A, Lepage L, for the CONSORT Group. Use of the CONSORT statement and quality of reports of randomized trials: A comparative before-and-after evaluation. JAMA. 2001;285:1992–5.

Plint AC, Moher D, Morrison A, et al. Does the CONSORT checklist improve the quality of reports of randomised controlled trials? A systematic review. Med J Aust. 2006;185:263–7.

Turner L, Shamseer L, Altman DG, et al. Consolidated standards of reporting trials (CONSORT) and the completeness of reporting of randomised controlled trials (RCTs) published in medical journals. Cochrane Database Syst Rev. 2012;11:MR000030.

Devereaux PJ, Manns BJ, Ghali WA, Quan H, Guyatt GH. The reporting of methodological factors in randomized controlled trials and the association with a journal policy to promote adherence to the consolidated standards of reporting trials (CONSORT) checklist. Control Clin Trials. 2002;23:380–8.

Shamseer L, Hopewell S, Altman DG, Moher D, Schulz KF. Update on the endorsement of CONSORT by high impact factor journals: a survey of journal ‘instructions to authors’ in 2014. Trials. 2016;17(1):301.

Hopewell S, Altman DG, Moher D, Schulz KF. Endorsement of the CONSORT statement by high impact factor medical journals: a survey of journal editors and journal ‘Instructions to Authors’. Trials. 2008;9:20.

Campbell MK, Piaggio G, Elbourne DR, Altman DG. Consort 2010 statement: extension to cluster randomised trials. BMJ. 2012;345:e5661.

Piaggio G, Elbourne DR, Pocock SJ, Evans SJW, Altman DG, for the CONSORT Group. Reporting of noninferiority and equivalence randomized trials: extension of the CONSORT 2010 statement. JAMA. 2012;308(24):2594–604.

Zwarenstein M, Treweek S, Gagnier JJ, et al. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. BMJ. 2008;337:a2390.

Vohra S, Shamseer L, Sampson M, et al. CONSORT extension for reporting N-of-1 trials (CENT) 2015 statement. BMJ. 2015;350:h1738.

Calvert M, Blazeby J, Altman DG, et al. Reporting of patient-reported outcomes in randomized trials: the CONSORT PRO extension. JAMA. 2013;309(8):814–22.

Hopewell S, Clarke M, Moher D, et al. CONSORT for reporting randomised trials in journal and conference abstracts. Lancet. 2008;371:281–3.

Ioannidis JP, Evans SJ, Gotzsche PC, et al. Better reporting of harms in randomized trials: an extension of the CONSORT statement. Ann Intern Med. 2004;141:781–8.

Boutron I, Moher D, Altman DG, Schulz K, Ravaud P, for the CONSORT group. Methods and processes of the CONSORT group: example of an extension for trials assessing nonpharmacologic treatments. Ann Intern Med. 2008;148(4):W60–6.

Gagnier JJ, Boon H, Rochon P, Moher D, Barnes J, Bombardier C. Reporting randomized, controlled trials of herbal interventions: an elaborated CONSORT statement. Ann Intern Med. 2006;144:364–7.

MacPherson H, Altman DG, Hammerschlag R, et al. Revised STandards for reporting interventions in clinical trials of acupuncture (STRICTA): extending the CONSORT statement. PLoS Med. 2010;7(6):e1000261.

Grant SP, Mayo-Wilson E, Melendez-Torres GJ, Montgomery P. Reporting quality of social and psychological intervention trials: a systematic review of reporting guidelines and trial publications. PLoS One. 2013;8(5):e65442.

Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008;337:a1655.

Michie S, Fixsen D, Grimshaw J, Eccles M. Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implement Sci. 2009;4:40.

Mayo-Wilson E. Reporting implementation in randomized trials: proposed additions to the consolidated standards of reporting trials statement. Am J Public Health. 2007;97(4):630–3.

Glasziou P, Meats E, Heneghan C, Shepperd S. What is missing from descriptions of treatment in trials and reviews? BMJ. 2008;336(7659):1472–4.

Open Science Collaboration. Estimating the reproducibility of psychological science. Science. 2015;349(6251):aac4716.

Nosek BA, Alter G, Banks GC, et al. Promoting an open research culture. Science. 2015;348:1422–5.

McNutt M. Reproducibility. Science. 2014;343(6168):229.

Open Science Collaboration. An open, large-scale, collaborative effort to estimate the reproducibility of psychological science. Perspect Psychol Sci. 2012;7(6):657–60.

Tajika A, Ogawa Y, Takeshima N, Hayasaka Y, Furukawa TA. Replication and contradiction of highly cited research papers in psychiatry: 10-year follow-up. Br J Psychiatry. 2015; Available online July 2015: https://doi.org/10.1192/bjp.bp.113.143701.

Michie S, Wood CE, Johnston M, Abraham C, Francis JJ, Hardeman W. Behaviour change techniques: the development and evaluation of a taxonomic method for reporting and describing behaviour change interventions (a suite of five studies involving consensus methods, randomised controlled trials and analysis of qualitative data). Health Technol Assess. 2015;19(99):1–187.

Glasziou P, Altman DG, Bossuyt P, et al. Reducing waste from incomplete or unusable reports of biomedical research. Lancet. 2014;383(9913):267–76.

Moher D, Glasziou P, Chalmers I, et al. Increasing value and reducing waste in biomedical research: Who’s listening? Lancet. 2015; Available online 27 September 2015: https://doi.org/10.1016/S0140-6736(15)00307-4.

Driessen E, Hollon SD, Bockting CLH, Cuijpers P, Turner EH. Does publication bias inflate the apparent efficacy of psychological treatment for major depressive disorder? A systematic review and meta-analysis of US National Institutes of Health-funded trials. PLoS One. 2015;10(9):e0137864.

Ioannidis JP, Munafo MR, Fusar-Poli P, Nosek BA, David SP. Publication and other reporting biases in cognitive sciences: detection, prevalence, and prevention. Trends Cogn Sci. 2014;18(5):235–41.

Cybulski L, Mayo-Wilson E, Grant S. Improving transparency and reproducibility through registration: the status of intervention trials published in clinical psychology journals. J Consult Clin Psychol. 2016;84(9):753.

Mayo-Wilson E, Grant S, Hopewell S, Macdonald G, Moher D, Montgomery P. Developing a reporting guideline for social and psychological intervention trials. Trials. 2013;14(1):242.

Fraser MW, Galinsky MJ, Richman JM, Day SH. Intervention research: developing social programs. New York: Oxford University Press; 2009.

Medical Research Council. Developing and evaluating complex interventions: New guidance. London: Medical Research Council; 2008. www.mrc.ac.uk/documents/pdf/complex-interventions-guidance.

Grant S. Development of a CONSORT extension for social and psychological interventions. Oxford: Social Policy & Intervention, University of Oxford; 2014.

Institute of Medicine. Psychosocial interventions for mental and substance use disorders: a framework for establishing evidence-based standards. Washington, DC: The National Academies Press; 2015.

Riley W. New NIH Clinical Trials Policies: Implications for Behavioral and Social Science Researchers. 2016; https://obssr.od.nih.gov/new-nih-clinical-trials-policies-implications-for-behavioral-and-social-science-researchers/.

Möhler R, Köpke S, Meyer G. Criteria for reporting the development and evaluation of complex interventions in healthcare: revised guideline (CReDECI 2). Trials. 2015;16:204.

Montgomery P, Grant S, Hopewell S, et al. Protocol for CONSORT-SPI: an extension for social and psychological interventions. Implement Sci. 2013;8(1):99.

Montgomery P, Grant S, Mayo-Wilson E, et al. Reporting randomised trials of social and psychological interventions: The CONSORT-SPI 2017 Extension. TRLS-D-18-00052.

Moher D, Hopewell S, Schulz KF, et al. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869.

Des Jarlais DC, Lyles C, Crepaz N, the TREND Group. Improving the reporting quality of nonrandomized evaluations of behavioral and public health interventions: the TREND statement. Am J Public Health. 2004;94(3):361–6.

Petticrew M, Roberts H. Systematic reviews in the social sciences: a practical guide. Oxford: Blackwell Publishing Ltd; 2006.

Cooper H. Reporting research in psychology: how to meet journal article reporting standards. Washington, DC: APA; 2011.

Hopewell S, Clarke M, Moher D, et al. CONSORT for reporting randomized controlled trials in journal and conference abstracts: explanation and elaboration. PLoS Med. 2008;5(1):e20.

Davidson KW, Goldstein M, Kaplan RM, et al. Evidence-based behavioral medicine: what is it and how do we achieve it? Ann Behav Med. 2003;26(3):161–71.

Moberg-Mogren E, Nelson DL. Evaluating the quality of reporting occupational therapy randomized controlled trials by expanding the CONSORT criteria. Am J Occup Ther. 2006;60:226–35.

Zarin DA, Tse T, Williams RJ, Califf RM, Ide NC. The ClinicalTrials.gov results database—update and key issues. N Engl J Med. 2011;364(9):852–60.

Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

Breuer E, Lee L, De Silva M, Lund C. Using theory of change to design and evaluate public health interventions: a systematic review. Implement Sci. 2016;11(1):63.

Davidoff F, Dixon-Woods M, Leviton L, Michie S. Demystifying theory and its use in improvement. BMJ Qual Saf. 2015;24(3):228–38.

Abraham C, Michie S. A taxonomy of behavior change techniques used in interventions. Health Psychol. 2007;27:379–87.

Chan AW, Tetzlaff JM, Altman DG, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med. 2013;158(3):200–7.

Bonell C, Jamal F, Melendez-Torres GJ, Cummins S. ‘Dark logic’: theorising the harmful consequences of public health interventions. J Epidemiol Community Health. 2015;69(1):95–8.

American Educational Research Association. Standards for reporting on empirical social science research in AERA publications. Educ Res. 2006;35(6):33–40.

Goodman SN, Fanelli D, Ioannidis JPA. What does research reproducibility mean? Sci Transl Med. 2016;8(341):341.

Piaggio G, Elbourne DR, Altman DG, Pocock SJ, Evans SJW, for the CONSORT Group. Reporting of noninferiority and equivalence randomized trials: an extension of the CONSORT statement. JAMA. 2006;294(10):1152–60.

Shamseer L, Sampson M, Bukutu C, et al. CONSORT extension for N-of-1 trials (CENT) guidelines. BMC Complement Altern Med. 2012;12(Suppl 1):P140.

Zarin DA, Tse T, Williams RJ, Carr S. Trial reporting in ClinicalTrials.gov—the final rule. N Engl J Med. 2016;375(20):1998–2004.

Hudson KL, Lauer MS, Collins FS. Toward a new era of trust and transparency in clinical trials. JAMA. 2016;316(13):1353–4.

National Institutes of Health. NIH policy on dissemination of NIH-funded clinical trial information. Fed Regist. 2016;81:183.

Simmons JP, Nelson LD, Simonsohn U. False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychol Sci. 2011;22(11):1359–66.

Scott A, Rucklidge JJ, Mulder RT. Is mandatory prospective trial registration working to prevent publication of unregistered trials and selective outcome reporting? An observational study of five psychiatry journals that mandate prospective clinical trial registration. PLoS One. 2015;10(8):e0133718.

Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generalized causal inference. Belmont: Wadsworth; 2002.

Wells M, Williams B, Treweek S, Coyle J, Taylor J. Intervention description is not enough: evidence from an in-depth multiple case study on the untold role and impact of context in randomised controlled trials of seven complex interventions. Trials. 2012;13(1):95.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50.

Montgomery P, Underhill K, Gardner F, Operario D, Mayo-Wilson E. The Oxford implementation index: a new tool for incorporating implementation data into systematic reviews and meta-analyses. J Clin Epidemiol. 2013;66(8):874–82.

Hoffmann TC, Glasziou P, Boutron I, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

Appelbaum M, Cooper H, Kline RB, Mayo-Wilson E, Nezu AM, Rao SM. Journal article reporting standards for quantitative research in psychology: the APA publications and communications board task force report. Am Psychol. 2018;73(1):3.

Perera R, Heneghan C, Yudkin PA. Graphical method for depicting randomised trials of complex interventions. BMJ. 2007;334:127–9.

Torgerson DJ. Contamination in trials: is cluster randomisation the answer? BMJ. 2001;322:355–7.

Moore G, Audrey S, Barker M, et al. Process evaluation in complex public health intervention studies: the need for guidance. J Epidemiol Community Health. 2013;68(2):101–2.

West R. Providing full manuals and intervention descriptions: addiction policy. Addiction. 2008;103:1411.

Mayo-Wilson E, Fusco N, Li T, et al. Multiple outcomes and analyses in clinical trials create challenges for interpretation and research synthesis. J Clin Epidemiol. 2017;86:39–50.

Altman DG, Moher D, Schulz KF. Harms of outcome switching in reports of randomised trials: CONSORT perspective. BMJ. 2017;356:j396.

Chalder T, Deary V, Husain K, Walwyn R. Family-focused cognitive behaviour therapy versus psycho-education for chronic fatigue syndrome in 11- to 18-year-olds: a randomized controlled treatment trial. Psychol Med. 2010;40:1269–79.

Slutsky AS. Data safety and monitoring boards. N Engl J Med. 2004;350(11):1143–7.

Schulz KF, Grimes DA. Allocation concealment in randomised trials: defending against deciphering. Lancet. 2002;359(9306):614–8.

Cuijpers P, Cristea IA. How to prove that your therapy is effective, even when it is not: a guideline. Epidemiol Psychiatr Sci. 2016;25(5):428–35.

Wilson DB. Comment on ‘developing a reporting guideline for social and psychological intervention trials’. J Exp Criminol. 2013;9:375–7.

Little RJ, Rubin DB. Statistical analysis with missing data. 2nd ed. Hoboken: Wiley; 2002.

Li T, Hutfless S, Scharfstein DO, et al. Standards should be applied in the prevention and handling of missing data for patient-centered outcomes research: a systematic review and expert consensus. J Clin Epidemiol. 2014;67(1):15–32.

Gardner F, Hutchings J, Bywater T, Whitaker C. Who benefits and how does it work? Moderators and mediators of outcome in an effectiveness trial of a parenting intervention. J Clin Child Adolesc Psychol. 2010;39(4):568–80.

Tong A, Flemming K, McInnes E, Oliver S, Craig J. Enhancing transparency in reporting the synthesis of qualitative research: ENTREQ. BMC Med Res Methodol. 2012;12(1):181.

Boutron I, Altman D, Moher D, Schulz KF, Ravaud P. CONSORT statement for randomized trials of nonpharmacologic treatments: a 2017 update and a CONSORT extension for nonpharmacologic trial abstracts. Ann Intern Med. 2017;167(1):40–7.

Welch V, Jull J, Petkovic J, et al. Protocol for the development of a CONSORT-equity guideline to improve reporting of health equity in randomized trials. Implement Sci. 2015;10(1):146.

Welch V, Petticrew M, Tugwell P, et al. PRISMA-equity 2012 extension: reporting guidelines for systematic reviews with a focus on health equity. PLoS Med. 2012;9(10):e1001333.

O'Neill J, Tabish H, Welch V, et al. Applying an equity lens to interventions: using PROGRESS ensures consideration of socially stratifying factors to illuminate inequities in health. J Clin Epidemiol. 2014;67(1):56–64.

Knol MJ, Groenwold R, Grobbee DE. P-values in baseline tables of randomised controlled trials are inappropriate but still common in high impact journals. Eur J Prev Cardiol. 2012;19:231–2.

Piwowar HA, Day RS, Fridsma DB. Sharing detailed research data is associated with increased citation rate. PLoS One. 2007;2:e308.

Taichman DB, Sahni P, Pinborg A, et al. Data sharing statements for clinical trials: a requirement of the International Committee of Medical Journal Editors. JAMA. 2017;317(24):2491–2.

Lipsey MW, Wilson DB. Practical meta-analysis. Thousand Oaks: Sage; 2001.

McCord J. Cures that harm: unanticipated outcomes of crime prevention programs. Ann Am Acad Polit Soc Sci. 2003;587:16–30.

Chalmers I. Trying to do more good than harm in policy and practice: the role of rigorous, transparent, up-to-date evaluations. Ann Am Acad Polit Soc Sci. 2003;589(22):22–40.

Fletcher A, Jamal F, Moore G, Evans RE, Murphy S, Bonell C. Realist complex intervention science: applying realist principles across all phases of the Medical Research Council framework for developing and evaluating complex interventions. Evaluation. 2016;22(3):286–303.

Moore GF, Evans RE. What theory, for whom and in which context? Reflections on the application of theory in the development and evaluation of complex population health interventions. SSM-Popul Health. 2017;3:132–5.

Boutron I, Dutton S, Ravaud P, Altman DG. Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA. 2010;303(20):2058–64.

Chiu K, Grundy Q, Bero L. ‘Spin’ in published biomedical literature: a methodological systematic review. PLoS Biol. 2017;15(9):e2002173.

World Health Organization. WHO Trial Registration Data Set (Version 1.2.1). 2017. http://www.who.int/ictrp/network/trds/en/. Accessed 26 Feb 2017.

Meinert CL. Toward prospective registration of clinical trials. Control Clin Trials. 1988;9(1):1–5.

Simes R. Publication bias: the case for an international registry of clinical trials. J Clin Oncol. 1986;4(10):1529–41.

Dickersin K. Report from the panel on the case for registers of clinical trials at the eighth annual meeting of the Society for Clinical Trials. Control Clin Trials. 1988;9(1):76–81.

De Angelis C, Drazen JM, Frizelle FA, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med. 2004;351(12):1250–1.

International Clinical Trials Registry Platform (ICTRP). Joint statement on public disclosure of results from clinical trials. 2017.

Harrison BA, Mayo-Wilson E. Trial registration: understanding and preventing reporting bias in social work research. Res Soc Work Pract. 2014;24(3):372–6.

Cybulski L, Mayo-Wilson E, Grant S. Improving transparency and reproducibility through registration: the status of intervention trials published in clinical psychology journals. J Consult Clin Psychol. 2016;84(9):753–67.

Huth EJ. Guidelines on authorship of medical papers. Ann Intern Med. 1986;104(2):269–74.

Petrosino A, Soydan H. The impact of program developers as evaluators on criminal recidivism: results from meta-analyses of experimental and quasi-experimental research. J Exp Criminol. 2005;1(4):435–50.

Eisner M. No effects in independent prevention trials: can we reject the cynical view? J Exp Criminol. 2009;5(2):163–83.

Concannon TW, Meissner P, Grunbaum JA, et al. A new taxonomy for stakeholder engagement in patient-centered outcomes research. J Gen Intern Med. 2012;27(8):985–91.

Keown K, Van Eerd D, Irvin E. Stakeholder engagement opportunities in systematic reviews: knowledge transfer for policy and practice. J Contin Educ Health Prof. 2008;28(2):67–72.

Concannon TW, Fuster M, Saunders T, et al. A systematic review of stakeholder engagement in comparative effectiveness and patient-centered outcomes research. J Gen Intern Med. 2014;29(12):1692–701.

Staniszewska S, Brett J, Simera I, et al. GRIPP2 reporting checklists: tools to improve reporting of patient and public involvement in research. BMJ. 2017;358:j3453.

Bower P, Brueton V, Gamble C, et al. Interventions to improve recruitment and retention in clinical trials: a survey and workshop to assess current practice and future priorities. Trials. 2014;15(1):399.

Gottfredson D, Cook T, Gardner F, et al. Standards of evidence for efficacy, effectiveness, and scale-up research in prevention science: next generation. Prev Sci. 2015;16(7):893–926.

Acknowledgements

We thank all of the stakeholders who have provided their input on the project.

CONSORT-SPI Group: J. Lawrence Aber (Willner Family Professor of Psychology and Public Policy and University Professor, Steinhardt School of Culture, Education, and Human Development, New York University), Doug Altman (Professor of Statistics in Medicine and Director of the UK EQUATOR Centre, Centre for Statistics in Medicine, University of Oxford), Kamaldeep Bhui (Professor of Cultural Psychiatry & Epidemiology, Wolfson Institute of Preventive Medicine, Queen Mary University of London), Andrew Booth (Reader in Evidence Based Information Practice and Director of Information, Information Resources, University of Sheffield), David Clark (Professor and Chair of Experimental Psychology, Experimental Psychology, University of Oxford), Peter Craig (Senior Research Fellow, MRC/CSO Social and Public Health Sciences Unit, Institute of Health & Wellbeing, University of Glasgow), Manuel Eisner (Wolfson Professor of Criminology, Institute of Criminology, University of Cambridge), Mark W. Fraser (John A. Tate Distinguished Professor for Children in Need, School of Social Work, The University of North Carolina at Chapel Hill), Frances Gardner (Professor of Child and Family Psychology, Department of Social Policy and Intervention, University of Oxford), Sean Grant (Behavioural & Social Scientist, Behavioural & Policy Sciences, RAND Corporation), Larry Hedges (Board of Trustees Professor of Statistics and Education and Social Policy, Institute for Policy Research, Northwestern University), Steve Hollon (Gertrude Conaway Vanderbilt Professor of Psychology, Psychological Sciences, Vanderbilt University), Sally Hopewell (Associate Professor, Oxford Clinical Trials Research Unit, University of Oxford), Robert Kaplan (Professor Emeritus, Department of Health Policy and Management, Fielding School of Public Health, University of California, Los Angeles), Peter Kaufmann (Leader, Behavioural Medicine and Prevention Research Group, National Heart, Lung, & Blood Institute, National Institutes of Health), Spyros Konstantopoulos (Professor, Department of Counselling, Educational Psychology, and Special Education, Michigan State University), Geraldine Macdonald (Professor of Social Work, School for Policy Studies, University of Bristol), Evan Mayo-Wilson (Assistant Scientist, Division of Clinical Trials and Evidence Synthesis, Department of Epidemiology, Johns Hopkins University), Kenneth McLeroy (Regents & Distinguished Professor, Health Promotion and Community Health Sciences, Texas A&M University), Susan Michie (Professor of Health Psychology and Director of the Centre for Behaviour Change, University College London), Brian Mittman (Research Scientist, Department of Research and Evaluation, Division of Health Services Research and Implementation Science, Kaiser Permanente), David Moher (Senior Scientist, Clinical Epidemiology Program, Ottawa Hospital Research Institute), Paul Montgomery (Professor of Social Intervention, Department of Social Work and Social Care, University of Birmingham), Arthur Nezu (Distinguished University Professor of Psychology, Department of Psychology, Drexel University), Lawrence Sherman (Director of the Jerry Lee Centre for Experimental Criminology and Chair of the Cambridge Police Executive Programme, the Institute of Criminology, University of Cambridge), Edmund Sonuga-Barke (Professor of Developmental Psychology, Psychiatry, & Neuroscience, Institute of Psychiatry, King's College London), James Thomas (Professor of Social Research and Policy, UCL Institute of Education, University College London), Gary VandenBos (Executive Director, Office of Publications and Databases, American Psychological Association), Elizabeth Waters (Jack Brockhoff Chair of Child Public Health, McCaughey VicHealth Centre for Community Wellbeing, Melbourne School of Population & Global Health, University of Melbourne), Robert West (Professor of Health Psychology and Director of Tobacco Studies, Health Behaviour Research Centre, Department of Epidemiology and Public Health), and Joanne Yaffe (Professor, College of Social Work, University of Utah).

Funding

This project is funded by the UK Economic and Social Research Council (ES/K00087X/1).

Availability of data and materials

Methodological protocols, data collection materials, and data from the Delphi process and consensus meeting can be requested from ReShare, the UK Data Service’s online data repository (doi: https://doi.org/10.5255/UKDA-SN-851981).

Author information

Contributions

SG, EMW, and PM conceived of the idea for the project and contributed equally to this manuscript. SG, EMW, PM, GM, SM, SH, and DM led the project and wrote the first draft of the manuscript. All authors have contributed to and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval was obtained from the Departmental Research Ethics Committee of the Department of Social Policy and Intervention, University of Oxford (reference 2011-12_83). Informed consent was obtained from all participants.

Consent for publication

Not applicable.

Competing interests

SG’s spouse is a salaried employee of Eli Lilly and Company and owns stock. SG has accompanied his spouse on company-sponsored travel. SH and DM are members of the CONSORT Group. All other authors declare no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Table S1. The CONSORT-SPI group. (DOCX 68 kb)

Additional file 2:

Table S2. Examples of information to include when reporting randomised trials of social and psychological interventions. (DOCX 132 kb)

Additional file 3:

Table S3. Examples of abstracts adherent to the CONSORT-SPI Extension for Abstracts. (DOCX 73 kb)

Additional file 4:

Figure S1. Example of a participant flow diagram. (DOCX 111 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Grant, S., Mayo-Wilson, E., Montgomery, P. et al. CONSORT-SPI 2018 Explanation and Elaboration: guidance for reporting social and psychological intervention trials. Trials 19, 406 (2018). https://doi.org/10.1186/s13063-018-2735-z

DOI: https://doi.org/10.1186/s13063-018-2735-z