Abstract

Recent advances in single-cell biotechnologies have resulted in high-dimensional datasets with increased complexity, making feature selection an essential technique for single-cell data analysis. Here, we revisit feature selection techniques and summarise recent developments. We review their application to a range of single-cell data types generated from traditional cytometry and imaging technologies and the latest array of single-cell omics technologies. We highlight some of the challenges and future directions and finally consider their scalability and make general recommendations on each type of feature selection method. We hope this review stimulates future research and application of feature selection in the single-cell era.

Similar content being viewed by others

Introduction

High-throughput biotechnologies are at the centre of modern molecular biology, where typically a sheer number of biomolecules are measured in cells and tissues. While significantly higher coverage of molecules is achieved by high-throughput biotechnologies compared to traditional biochemical assays, the variation in sample quality, reagents and workflow introduces profound technical variation in the data. The high dimensionality, redundancy and noise commonly found in these large-scale molecular datasets create significant challenges in their analysis and can lead to a reduction in model generalisability and reliability. Feature selection, a class of computational techniques for data analytics and machine learning, is at the forefront in dealing with these challenges and has been an essential driving force in a wide range of bioinformatics applications [1].

Until recently, the global molecular signatures generated from most high-throughput biotechnologies have been the average profiles of mixed populations of cells from tissues, organs or patients, and feature selection techniques have been predominately applied to such ‘bulk’ data. However, the recent development of technologies that enables the profiling of various molecules (e.g. DNA, RNA, protein) in individual cells at the omics scale has revolutionised our ability to study various molecular programs and cellular processes at the single-cell resolution [2]. The accumulation of large-scale and high-dimensional single-cell data has seen renewed interests in developing and the need for applying feature selection techniques to such data given their increased scale and complexity compared to their bulk counterparts.

To foster research in feature selection in the new era of single-cell sciences, we set out to revisit the feature selection literature, summarise its advancement in the last decade and recent development in the field of deep learning and review its current applications in various single-cell data types. We then discuss some key challenges and opportunities that we hope would inspire future research and development in this fast-growing interdisciplinary field. Finally, we consider the scalability and applicability of each type of feature selection methods and make general recommendations to their usage.

Basics of feature selection techniques

Feature selection refers to a class of computational methods where the aim is to select a subset of useful features from the original feature set in a dataset. When dealing with high-dimensional data, feature selection is an effective strategy to reduce the feature dimension and redundancy and can alleviate issues such as model overfitting in downstream analysis. Different from dimension reduction methods (e.g. principal component analysis) where features in a dataset are combined and/or transformed to derive a lower feature dimension, feature selection methods do not alter the original features in the dataset but only identify and select features that satisfy certain pre-defined criteria or optimise certain computational procedures [3]. The application of feature selection in bioinformatics is widespread [1]. Some of the most popular research directions include selecting genes that can discriminate complex diseases such as cancers from microarray data [4, 5], selecting protein markers that can be used for disease diagnosis and prognostic prediction from mass spectrometry-based proteomics data [6], identifying single nucleotide polymorphisms (SNPs) and their interactions that are associated with specific phenotypes or diseases in genome-wide association studies (GWAS) [7], selecting epigenetic features that mark cancer subtypes [8] and selecting DNA structural properties for predicting genomic regulatory elements [9]. Traditionally, feature selection techniques fall into one of the three categories including filters, wrappers and embedded methods (Fig. 1). In this section, we revisit the key properties and defining characteristics of the three categories of feature selection methods. Please refer to [10] for a comprehensive survey of feature selection methods.

Filter methods typically rank the features based on certain criteria that may facilitate other subsequent analyses (e.g. discriminating samples) and select those that pass a threshold judged by the filtering criteria (Fig. 1A). In bioinformatics applications, commonly used criteria are univariate methods such as t statistics, on which most ‘differential expression’ (DE) methods for biological data analysis are built [11], and multivariate methods that take into account relationships among features [12]. The main advantages of filter methods lie in their simplicity, requiring less computational resources in general and ease of applications in practice [13]. However, filter methods typically select features independent from the induction algorithms (e.g. classification algorithms) that are applied for downstream analyses, and therefore, the selected features may not be optimal with respect to the induction algorithms in the subsequent applications.

In comparison, wrappers utilise the performance of the induction algorithms to guide the feature selection process and therefore may lead to features that are more conducive to the induction algorithm used for optimisation in downstream analyses [14] (Fig. 1B). A key aspect of wrapper methods is the design of the feature optimisation algorithms that maximise the performance of the induction algorithms. Since the feature dimensions are typically very high in bioinformatics applications, exhaustive search is often impractical. To this end, various greedy algorithms, such as forward and backward selection [15], and nature-inspired algorithms, such as the genetic algorithm (GA) [16] and the particles swarm optimisation (PSO) [17], were employed to speed up the optimisation and feature selection processes. Nevertheless, since the induction algorithms are included to iteratively evaluate feature subsets, wrappers are typically computationally intensive compared to filter methods.

While filters and wrappers separate feature selection from downstream analysis, embedded methods typically perform feature selection as part of the induction algorithm itself [18] (Fig. 1C). Akin to wrappers, embedded methods optimise selected features with respect to an induction model and therefore may lead to more suited features for the induction algorithm in subsequent tasks such as sample classification. Since the embedded methods perform feature selection and induction simultaneously, it is also generally more computationally efficient than wrapper methods albeit less so when compared to filter methods [19]. Nevertheless, as feature selection is part of the induction algorithm in embedded methods, they are often specific to the algorithmic design and less generic compared to filters and wrappers. Popular choices of embedded methods in bioinformatics applications include tree-based methods [20, 21] and shrinkage-based methods such as LASSO [22].

Advance of feature selection in the past decade

Besides the astonishing increase in the number of feature selection techniques in the last decade, we have also seen a few notable trends in their development. Here, we summarise three aspects that have shown proliferating research in various fields and applications, including bioinformatics.

First, a variety of approaches have been proposed for ensemble feature selection, including those for filters [23, 24], wrappers [25] and embedded methods such as tree-based ensembles [26]. Ensemble learning is a well-established approach where instead of building a single model, multiple ‘base’ models are combined to perform tasks [27]. Supervised ensemble classification models are popular among bioinformatics applications [28] and have recently seen their increasing integration with deep learning models [29]. Similar to their counterpart in supervised learning, ensemble feature selection methods, typically, rely on either perturbation to the dataset or hyperparameters of the feature selection algorithms for creating ‘base selectors’ from which the ensemble could be derived [30]. Examples include using different subsets of samples for creating multiple filters or using different learning parameters in an induction algorithm of a wrapper method. Key attributes of ensemble feature selection methods are that they generally achieve better generalisability in sample classification [31] and higher reproducibility in feature selection [32, 33]. Although these improvements in performance typically come with a cost on computational efficiency, ensemble feature selection methods are increasingly popular given the increasing computational capacity in the last decade and the parallelisation in some of their implementations [34,35,36].

Second, various hybrid methods have been proposed to combine filters, wrappers and embedded methods [37]. While these methods closely resemble ensemble approaches, they do not rely on data or model perturbations but instead use heterogeneous feature selection algorithms for creating a consensus [38]. Typically, these include combining different filter algorithms or different types of feature selection algorithms (e.g. stepwise combination of filter and wrapper). Generally, hybrid methods are motivated by the aim of taking advantage of the strengths of individual methods while alleviating or avoiding their weaknesses [39]. For example, in bioinformatics applications, several methods combine filters with wrappers in that filters are first applied to reduce the number of features from high dimension to a moderate number so that wrappers can be employed more efficiently for generating the final set of features [40, 41]. As another example, genes selected by various feature selection methods are used for training a set of support vector machines (SVMs) for achieving better classification accuracy using microarray data [42]. While many hybrid feature selection algorithms are intuitive and numerous studies have reported favourable results compared to their individual components, a fundamental issue of these methods is their ad hoc nature, complicating the formal analysis of their underlying properties, such as theoretical algorithmic complexity and scalability.

Third, a recent evolution in feature selection has been its development and implementation using deep learning models. These include models based on perturbation [43, 44], such as randomly excluding features to test their impact on the neural network output, and gradient propagation, where the gradient from the trained neural network is backpropagated to determine the importance of the input feature [45, 46]. These deep learning feature selection models share a common concept of ‘saliency’ which was initially designed for interpreting black-box deep neural networks by highlighting input features that are relevant for the prediction of the model [47]. Some examples in bioinformatics applications include a deep feature selection model that uses a neural network with a weighted layer to select key input features for the identification and understanding regulatory events [48]; and a generative adversarial network approach for identifying genes that are associated with major depressive disorders using gradient-based methods [49]. While feature selection methods that are based on deep learning generally require significantly more computational resources (e.g. memory) and may be slower than traditional methods (especially when compared to filter methods), their capabilities for identifying complex relationships (e.g. non-linearity, interaction) among features have attracted tremendous attention in recent years.

Feature selection in the single-cell era

Until recently, the global molecular signatures generated from most biotechnologies are the average profiles from mixed populations of cells, masking the heterogeneity of cell and tissue types, a foundational characteristic of multicellular organisms [50]. Breakthroughs in global profiling techniques at the single-cell resolution, such as single-cell RNA-sequencing (scRNA-seq), single-cell Assay for Transposase Accessible Chromatin using sequencing (scATAC-seq) [51] and cellular indexing of transcriptomes and epitopes by sequencing (CITE-seq) [52], have reshaped many of our long-held views on multicellular biological systems. These advances of single-cell technologies create unprecedented opportunities for studying complex biological systems at resolutions that were previously unattainable and have led to renewed interests in feature selection for analysing such data. Below we review some of the latest developments and applications of feature selection across various domains in the single-cell field. Table 1 summarises the methods and their applications with additional details included in Additional file 1: Table S1.

Feature selection in single-cell transcriptomics

By far, the most widely applied single-cell omics technologies are single-cell transcriptomics [53] made popular by an array of scRNA-seq protocols [54]. Given the availability of a huge amount of scRNA-seq data and the large number of genes profiled in these datasets, a similar characteristic of their bulk counterparts, most of recent feature selection applications in single-cell transcriptomics have been concentrated on gene selection from scRNA-seq data for various upstream pre-processing and downstream data analyses.

Among these, some of the most popular methods are univariate filters designed for identifying differential distributed genes, including t statistics or ANOVA based DE methods [55, 56] and other statistical approaches such as differential variability (DV) [57] and differential proportion (DP) [58]. While differential distribution-based methods can often identify genes that are highly discriminative for downstream analysis, they require labels such as cell types to be pre-defined, limiting their applicability when such information is not available. A less restrictive and widely used alternative approach is to filter for highly variable genes (HVGs), which is implemented in various methods including the popular Seurat package [59]. Other methods that do not require label information include SCMarker which relies on testing the number of modalities of each gene through its expression profile [60], M3Drop which models the relationship between mean expression and dropout rate [61], and OGFSC, a variant of HVGs, based on modelling coefficient of variance of genes across cells [62]. Many scRNA-seq clustering algorithms also implement HVGs and its variants for gene filtering to improve the clustering of cells [63]. Besides the above univariate filters, recent research has also explored multivariate approaches. Examples include COMET which relies on a modified hypergeometric test for filtering gene pairs [64] and a multinomial method for gene filtering using the deviance statistic [65].

While filters are the most common options for pre-processing and feature selection from single-cell transcriptomics data, the application of wrapper methods is gaining much attention with a range of approaches built and extends on classic methods with the primary goal of facilitating downstream analyses such as cell type classification. Some examples include the application of classic methods such as greedy-based optimisation of entropy [66], nature-inspired optimisation such as using GA [67, 68], and their hybrid with filters [69,70,71] or embedded methods [72]. More advanced methods include active learning-based feature selection using SVM as a wrapper [73] and optimisation based on data projection [74]. The impact of optimal feature selection using wrapper methods on improving cell type classification is well demonstrated through these studies.

Due to the simplicity in their application, the popularity of embedded methods is growing quickly in the last few years especially in studies that treat feature selection as a key goal in their analyses. These include the discovery of the minimum marker gene combinations using tree-based models [75], discriminative learning of DE genes using logistic regression models [76], regulatory gene signature identification using LASSO [77] and marker gene selection based on compressed sensing optimisation [78].

Lastly, several studies have compared the effect of various feature selection methods on the clustering of cell types [63] and investigated factors that affect feature selection in cell lineage analysis [79]. Together, these studies demonstrate the utility and flexibility of feature selection techniques in a wide range of tasks in single-cell transcriptomic data analyses.

Feature selection in single-cell epigenomics

Besides single-cell transcriptomic profiling, another fast-maturing single-cell omics technology is single-cell epigenomics profiling using scATAC-seq [51]. In particular, scATAC-seq measures genome-wide chromatin accessibility and therefore can provide a clue regarding the activity of epigenomic regulatory elements and their transcription factor binding motifs in single cells. Such data can offer additional information that is not accessible to scRNA-seq technologies and hence can complement and significantly enrich scRNA-seq data for characterising cell identity and gene regulatory networks (GRNs) in single cells [80]. Although most application of feature selection has been on investigating single-cell transcriptomes, recent studies have broadened the view to single-cell epigenomics primarily through their application in scATAC-seq data analysis. These analyses enable us to expand the gene expression analysis to also include regulatory elements such as enhancers and silencers in understanding molecular and cellular processes.

Feature selection methods could be directly applied to scATAC-seq data for identifying differential accessible chromatin regions or one can summarise scATAC-seq data to the gene level using tools such as those reviewed in [81] and then feature selection be performed for selecting ‘differentially accessible genes’ (DAGs) using such summarised data. For instance, Scasat, a tool for classifying cells using scATAC-seq data, implements both information gain and Fisher’s exact test for filtering and selecting differential accessible chromatin regions [82]. Similarly, scATAC-pro, a pipeline for scATAC-seq analysis at the chromatin level, employs Wilcoxon test as the default for filtering differential accessible chromatin regions, while also implements embedded methods such as logistic regression and negative binomial regression-based models as alternative options [83]. Another example is SnapATAC [84] which performs differential accessible chromatin analysis using the DE method implemented in edgeR [85]. In contrast, Kawaguchi et al. [86] summarised scATAC-seq data to the gene level using SCANPY [87] and performed embedded feature selection using either logistic LASSO or random forests to identify DAGs [86]. Muto et al. [88] performed filter-based differential analysis on both chromatin and gene levels based on Cicero estimated gene activity scores [89]. Finally, DUBStepR [71], a hybrid approach that combines a correlation-based filter and a regression-based wrapper for gene selection from scRNA-seq data, can also be applied to scATAC-seq data. Collectively, these methods and tools demonstrate the utility and impact of feature selection on scATAC data for cell-type identification, motif analysis, regulatory element and gene interaction detection among other applications.

Feature selection for single-cell surface proteins

Owing to the recent advancement in flow cytometry and related technologies such as mass cytometry [90, 91], and single-cell multimodal sequencing technologies such as CITE-seq [52], surface proteins of the cells have now also become increasingly accessible at the single-cell resolution.

A key application of feature selection methods to flow and mass cytometry data has been for finding optimal protein markers for cell gating [92]. A representative example is GateFinder which implements a random forest-based feature selection procedure for optimising stepwise gating strategies on each given dataset [93]. Besides automated gating, several studies have also explored the use of feature selection for improving model performance on sample classification. For example, in their study, Hassan et al. [94] demonstrated the utility of shrinkage-based embedded models for classifying cancer samples. Another application of feature selection techniques was recently demonstrated by Tanhaemami et al. [95] for discovering signatures from label-free single cells. In particular, the authors employed a GA for feature selection and verified its utility in predicting lipid contents in algal cells under different conditions. Together, these studies illustrate the wide applicability of feature selection methods in a wide range of challenges in flow and mass cytometry data analysis.

Recent advancement in single-cell multimodal sequencing technologies such as CITE-seq and other related techniques such as RNA expression and protein sequencing (REAP-seq) [96] has enabled the profiling of both surface proteins and gene expressions at the single-cell level. While still at its infancy, feature selection techniques have already found their use in such data. One example is the application of a random forest-based approach for selecting marker proteins that can distinguish closely related cell types profiled using CITE-seq from PBMCs isolated from the blood of healthy human donors [97]. Another example is the use of a greedy forward feature selection wrapper that maximises a logistic regression model for identifying surface protein markers for each cell type from a given CITE-seq dataset [98].

Feature selection in single-cell imaging data

Other widely accessible data at the single-cell resolution are imaging-related data types such as those generated by image cytometry [99] and various single-cell imaging techniques [100]. Although the application of feature selection methods in this domain is very diverse, the following examples provide a snapshot of different types of feature selection techniques used for single-cell imaging data analysis.

To classify cell states using imaging flow cytometry data, Pischel et al. [101] employed a set of filters, including mutual information maximisation, maximum relevance minimum redundancy and Fisher score, for feature selection and demonstrated their utility on apoptosis detection. To predict cell cycle phases, Hennig et al. [102] implemented two embedded feature selection techniques, gradient boosting and random forest, for selecting the most predictive features from image cytometry data. These implementations are included in the CellProfiler, open-source software for imaging flow cytometry data analysis. To improve data interpretability of single-cell imaging data, Peralta and Saeys [103] proposed a clustering-based method for selecting representative features from each cluster and thus significantly reducing data dimensionality. To classify cell phenotypes, Doan et al. [104] implemented supervised and weakly supervised deep learning models in a framework called Deepometry for feature selection from imaging cytometry data. To classify cells according to their response to insulin stimulation, Norris et al. [105] used a random forest approach for ranking the informativeness of various temporal features extracted from time-course live-cell imaging data. Finally, to select spatially variable genes from imaging data generated by multiplexed single-molecule fluorescence in situ hybridization (smFISH), Svensson et al. [106] introduced a model based on the Gaussian process regression that decomposes expression and spatial information for gene selection.

Upcoming domains and future opportunities

The works reviewed above covers some of the most popular single-cell data types. Nevertheless, the technological advances in the single-cell field are extending our capability at a breakneck speed, enabling many other data modalities [107] as well as the spatial locations [108] of individual cells to be captured in high-throughput. For instance, recent development in single-cell DNA-sequencing provides the opportunity to analyse SNPs and copy-number variations (CNVs) in individual cells from cancer and normal tissues [109, 110], and single-cell proteomics seems now on the horizon [111, 112], holding great promises to further transform the single-cell field. Given the high feature-dimensionality of such data (e.g. numbers of SNPs, proteins and spatial locations), we anticipate feature selection techniques to be readily adopted for these single-cell data types when they become more available.

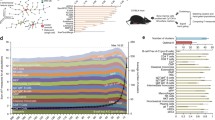

Another fast-growing capability in the single-cell field is increasingly towards multimodality. CITE-seq and REAP-seq are examples where both the gene expression and the surface proteins are measured in each individual cell. Nevertheless, many more recent techniques now also enable other combinations of modalities to be profiled at the single-cell level (Fig. 2). Some examples include ASAP-seq for profiling gene expression, chromatin accessibility and protein levels [113]; scMT-seq for profiling gene expression and DNA methylation [114] and its extension, scNMT-seq, for gene expression, chromatin accessibility and DNA methylation [115]; SHARE-seq and SNARE-seq for gene expression and chromatin accessibility [116, 117]; scTrio-seq for CNVs, DNA methylation and gene expression [118]; and G&T-seq for genomic DNA and gene expression [119]. Given the complexity in the data structure in these single-cell multimodal data, feature selection methods that can facilitate integrative analysis of multiple data modalities are in great need. While some preliminary works have emerged recently [120], research on integrative feature selection is still at its infancy and requires significant innovation in their design and implementation.

On the design of feature selection techniques in the single-cell field, most current studies directly use one of the three main types of methods (i.e. filters, wrappers and embedded methods). While we found a small number of them employed hybrid approaches (e.g. [71, 72]), most are relatively straightforward combinations (such as stepwise application of filter and then wrapper methods) as have been used previously for bulk data analyses. The application of ensemble and deep learning-based feature selection methods is even sparser in the field. One ensemble feature selection method is EDGE which uses a set of weak learners to vote for important genes from scRNA-seq data [121], and the current literature on deep learning-based feature selection in single cells are a study for identifying regulatory modules from scRNA-seq data through autoencoder deconvolution [122]; and another for identifying disease-associated gene from scRNA-seq data using gradient-based methods [49]. Owing to the non-linear nature of the deep learning models, feature selection methods that are based on deep learning are well-suited to learn complex non-linear relationships among features. Given the widespread non-linearity relationships, such as gene-gene and protein-protein interactions, and interactions among genomic regulatory elements and their target genes in biological systems, and hence the data derived from them, we anticipate more research to be conducted on developing and adopting deep learning-based feature selection techniques in the single-cell field in the near future.

Applicability considerations

The works we have reviewed above showcase diverse feature selection strategies and promising future directions in single-cell data analytics. In practice, scalability and robustness are critical in choosing feature selection techniques and are largely dependent on the algorithm structure and implementation. Here, we discuss several key aspects specific to the utility and applicability of feature selection methods with the goal of guiding the choice of methods from each feature selection category for readers who are interested in their application.

Scalability towards the feature dimension

A key aspect in the applicability of a feature selection method rests upon its scalability to large datasets. Univariate filter algorithms are probably the most efficient in terms of scalability towards the feature dimension since, in general, the computation time of these algorithms increases linearly with the number of features. We therefore recommend univariate filters as the first choice when working with datasets with very high feature dimensions. In comparison, wrapper algorithms generally do not scale well with respect to the number of features due to their frequent reliance on combinatorial optimisation and therefore will remain applicable to datasets with a relatively small number of features. While other factors such as available computational resources and specific algorithm implementations also affect the choice of methods, wrapper algorithms are generally applied to datasets with up to a few hundred features. Embedded methods offer a good trade-off and both tree- and shrinkage-based methods computationally scale well with the number of features [19]. Nevertheless, like wrapper methods, embedded methods rely on an induction algorithm for feature selection and therefore are sensitive to model overfitting when dealing with data with a small sample size. We recommend choosing embedded methods for datasets with up to a few thousand features when the sample size (e.g. number of cells) is moderate or large. Similarly, hybrid algorithms that combine the filter with wrappers or filter with embedded methods also make a useful compromise and can be applied to the dataset with relatively high to very high feature dimensions, depending on the reduced feature dimension following the filtering step.

Scalability towards the sample size

With the advance of biotechnologies, the number of cells profiled in an experiment is growing exponentially. Hence, apart from the feature dimensionality, the scalability of the feature selection algorithm towards the sample size, typically in terms of the number of cells, is also a central determinant of its applicability to large-scale single-cell datasets. Although classic feature selection algorithms such as filters scale linearly towards the feature dimension, this does not necessarily mean they also scale linearly with the increasing number of cells [55]. To this end, the choice is more dependent on the specific implementation of the feature selection algorithms. Methods that purely rely on estimating variabilities (e.g. HVGs) without using cell type labels and fitting models generally scale better due to the extra steps taken by the latter for learning various data characteristics (e.g. zero-inflation). Another aspect to note is the memory usage. Most filter methods require the entire dataset to be loaded into the computer memory before feature selection can be performed. This can be an issue when the size of the dataset exceeds the size of the computer memory. Interestingly, deep learning-based feature selection methods could be better suited for analysing datasets with a very large number of cells. This is due to the unique characteristic of these methods where the neural network can be trained using small batches of input data sequentially and therefore alleviates the need to load the entire dataset into the computer memory.

Robustness and interpretability

Besides algorithm scalability, robustness and interpretability are also important criteria for assessing and selecting feature selection methods. This is especially crucial when the downstream applications are to identify reproducible biomarkers, where the selection of robust and stable features is essential, or to characterise gene regulatory networks, where model interpretability will be highly desirable. A key property of ensemble feature selection methods is their robustness to noise and slight variations in the data, which leads to better reproducibility in selected features [32, 33]. We thus recommend exploring ensemble feature selection methods when the task is related to identify reproducible biomarkers such as marker genes for cells of a given type. In terms of interpretability, complex models, while often offering better performance in downstream analyses such as cell classification, may not be the most appropriate choices given the difficulties in their model interpretation. To this end, simpler models such as tree-based methods can provide clarity, for example, to how selected features are used to classify a cell and hence can facilitate the characterisation of gene regulatory networks underlying cell identity. Notably, however, significant progress has been made to improve interpretability especially for deep learning models [123]. Given the increasing importance of downstream analyses that involves biomarker discovery and pathway/network characterisation in single-cell research, we anticipate increasing efforts to be devoted to improving the robustness and interpretability of advanced methods such as deep learning models in feature selection applications.

Other considerations

Finally, the choice of feature selection methods also depends on other factors such as programming language, computing platform, parallelisation and whether they are well documented and easy to use. While most recent methods are implemented using popular programming languages such as R and Python which are well supported in various computing platforms including Windows, macOS and Linux/Unix and its variants, their difficulty in application varies and requires different levels of expertise from interacting with a simple graphical user interface to more complex execution that involves programming (e.g. loading packages in the R programming environment). Methods that optimise for computation speed may use C/C++ as their programming language and may also offer parallelisation. However, these methods are often computing platform-specific and may require more expertise from a specific operating system and programming language from users for their application. Lastly, the quality of the documentation of methods can have a significant impact on their ease of use. Methods that have comprehensive documentations with testable examples could help popularise their application. To this end, methods that are implemented under standardised framework such as Bioconductor [124] generally provide well-documented usages and examples known as ‘vignette’ for supporting users and therefore can be a practical consideration in their choices.

Conclusions

The explosion of single-cell data in recent years has led to a resurgence in the development and application of feature selection techniques for analysing such data. In this review, we revisited and summarised feature selection methods and their key development in the last decade. We then reviewed the recent literature for their applications in the single-cell field, summarising achievements so far and identifying missing aspects in the field. Based on these, we propose several research directions and discuss practical considerations that we hope will spark future research in feature selection and their application in the single-cell era.

Availability of data and materials

Not applicable.

References

Saeys Y, Inza I, Larranaga P. A review of feature selection techniques in bioinformatics. Bioinformatics. 2007;23(19):2507–17. https://doi.org/10.1093/bioinformatics/btm344.

Efremova M, Teichmann SA. Computational methods for single-cell omics across modalities. Nature Methods. 2020;17(1):14–7. https://doi.org/10.1038/s41592-019-0692-4.

Guyon I, Elisseeff A. An introduction to variable and feature selection. Journal of Machine Learning Research. 2003;3:1157–82.

Lazar C, Taminau J, Meganck S, Steenhoff D, Coletta A, Molter C, et al. A survey on filter techniques for feature selection in gene expression microarray analysis. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2012;9(4):1106–19. https://doi.org/10.1109/TCBB.2012.33.

Bolón-Canedo V, Sánchez-Marono N, Alonso-Betanzos A, Benítez JM, Herrera F. A review of microarray datasets and applied feature selection methods. Information Sciences. 2014;282:111–35. https://doi.org/10.1016/j.ins.2014.05.042.

Levner I. Feature selection and nearest centroid classification for protein mass spectrometry. BMC Bioinformatics. 2005;6(1):1–14. https://doi.org/10.1186/1471-2105-6-68.

Yang P, Ho JW, Zomaya AY, Zhou BB. A genetic ensemble approach for gene-gene interaction identification. BMC Bioinformatics. 2010;11(1):1–15. https://doi.org/10.1186/1471-2105-11-524.

Model F, Adorjan P, Olek A, Piepenbrock C. Feature selection for DNA methylation based cancer classification. Bioinformatics. 2001;17(Suppl 1):S157–64. https://doi.org/10.1093/bioinformatics/17.suppl_1.S157.

Gan Y, Guan J, Zhou S. A comparison study on feature selection of DNA structural properties for promoter prediction. BMC Bioinformatics. 2012;13(1):1–12. https://doi.org/10.1186/1471-2105-13-4.

Chandrashekar G, Sahin F. A survey on feature selection methods. Computers & Electrical Engineering. 2014;40(1):16–28. https://doi.org/10.1016/j.compeleceng.2013.11.024.

Ritchie ME, Phipson B, Wu D, Hu Y, Law CW, Shi W, et al. limma powers differential expression analyses for RNA-sequencing and microarray studies. Nucleic Acids Research. 2015;43(7):e47–7. https://doi.org/10.1093/nar/gkv007.

Ding C, Peng H. Minimum redundancy feature selection from microarray gene expression data. Journal of Bioinformatics and Computational Biology. 2005;3(02):185–205. https://doi.org/10.1142/S0219720005001004.

Bommert A, Sun X, Bischl B, Rahnenführer J, Lang M. Benchmark for filter methods for feature selection in high-dimensional classification data. Computational Statistics & Data Analysis. 2020;143:106839. https://doi.org/10.1016/j.csda.2019.106839.

Kohavi R, John GH. Wrappers for feature subset selection. Artificial Intelligence. 1997;97(1-2):273–324. https://doi.org/10.1016/S0004-3702(97)00043-X.

Aha, D. W. & Bankert, R. L. A comparative evaluation of sequential feature selection algorithms. In Learning From Data, 199–206 (Springer, 1996).

Li L, Weinberg CR, Darden TA, Pedersen LG. Gene selection for sample classification based on gene expression data: study of sensitivity to choice of parameters of the GA/KNN method. Bioinformatics. 2001;17(12):1131–42. https://doi.org/10.1093/bioinformatics/17.12.1131.

Yang P, Xu L, Zhou BB, Zhang Z, Zomaya AY. A particle swarm based hybrid system for imbalanced medical data sampling. BMC Genomics. 2009;10(Suppl 3):S34. https://doi.org/10.1186/1471-2164-10-S3-S34.

Lal, T. N., Chapelle, O., Weston, J. & Elisseeff, A. Embedded methods. In Feature Extraction, 137–165 (Springer, 2006).

Bolón-Canedo V, Sánchez-Maroño N, Alonso-Betanzos A. A review of feature selection methods on synthetic data. Knowledge and Information Systems. 2013;34(3):483–519. https://doi.org/10.1007/s10115-012-0487-8.

Deng, H. & Runger, G. Feature selection via regularized trees. In The 2012 International Joint Conference on Neural Networks (IJCNN), 1–8 (IEEE, 2012).

Breiman L. Random forests. Machine Learning. 2001;45(1):5–32. https://doi.org/10.1023/A:1010933404324.

Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological). 1996;58:267–88.

Saeys, Y., Abeel, T. & Van de Peer, Y. Robust feature selection using ensemble feature selection techniques. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 313–325 (Springer, 2008).

Abeel T, Helleputte T, Van de Peer Y, Dupont P, Saeys Y. Robust biomarker identification for cancer diagnosis with ensemble feature selection methods. Bioinformatics. 2010;26(3):392–8. https://doi.org/10.1093/bioinformatics/btp630.

Yang, P., Liu, W., Zhou, B. B., Chawla, S. & Zomaya, A. Y. Ensemble-based wrapper methods for feature selection and class imbalance learning. In Pacific-Asia conference on knowledge discovery and data mining, 544–555 (Springer, 2013).

Tuv E, Borisov A, Runger G, Torkkola K. Feature selection with ensembles, artificial variables, and redundancy elimination. The Journal of Machine Learning Research. 2009;10:1341–66.

Dietterich, T. G. Ensemble methods in machine learning. In International Workshop on Multiple Classifier Systems, 1–15 (Springer, 2000).

Yang P, Hwa Yang Y. B Zhou, B. & Y Zomaya, A. A review of ensemble methods in bioinformatics. Current Bioinformatics. 2010;5(4):296–308. https://doi.org/10.2174/157489310794072508.

Cao Y, Geddes TA, Yang JYH, Yang P. Ensemble deep learning in bioinformatics. Nature Machine Intelligence. 2020;2:500–8.

Bolón-Canedo V, Alonso-Betanzos A. Ensembles for feature selection: a review and future trends. Information Fusion. 2019;52:1–12. https://doi.org/10.1016/j.inffus.2018.11.008.

Brahim AB, Limam M. Ensemble feature selection for high dimensional data: a new method and a comparative study. Advances in Data Analysis and Classification. 2018;12(4):937–52. https://doi.org/10.1007/s11634-017-0285-y.

Yang, P., Zhou, B. B., Yang, J. Y.-H. & Zomaya, A. Y. Stability of feature selection algorithms and ensemble feature selection methods in bioinformatics. Biological Knowledge Discovery Handbook, 333–352 (2013).

Pes B. Ensemble feature selection for high-dimensional data: a stability analysis across multiple domains. Neural Computing and Applications. 2020;32(10):5951–73. https://doi.org/10.1007/s00521-019-04082-3.

Hijazi, N. M., Faris, H. & Aljarah, I. A parallel metaheuristic approach for ensemble feature selection based on multi-core architectures. Expert Systems with Applications 115290 (2021).

Tsai C-F, Sung Y-T. Ensemble feature selection in high dimension, low sample size datasets: Parallel and serial combination approaches. Knowledge-Based Systems. 2020;203:106097. https://doi.org/10.1016/j.knosys.2020.106097.

Soufan O, Kleftogiannis D, Kalnis P, Bajic VB. Dwfs: a wrapper feature selection tool based on a parallel genetic algorithm. PloS one. 2015;10(2):e0117988. https://doi.org/10.1371/journal.pone.0117988.

Chen C-W, Tsai Y-H, Chang F-R, Lin W-C. Ensemble feature selection in medical datasets: combining filter, wrapper, and embedded feature selection results. Expert Systems. 2020;37:e12553.

Seijo-Pardo B, Porto-Díaz I, Bolón-Canedo V, Alonso-Betanzos A. Ensemble feature selection: homogeneous and heterogeneous approaches. Knowledge-Based Systems. 2017;118:124–39. https://doi.org/10.1016/j.knosys.2016.11.017.

Jovic´, A., Brkic´, K. & Bogunovic´, N. A review of feature selection methods with applications. In 2015 38th international convention on information and communication technology, electronics and microelectronics (MIPRO), 1200–1205 (Ieee, 2015).

Yang P, Zhou BB, Zhang Z, Zomaya AY. A multi-filter enhanced genetic ensemble system for gene selection and sample classification of microarray data. BMC Bioinformatics. 2010;11(S1):1–12. https://doi.org/10.1186/1471-2105-11-S1-S5.

Chuang L-Y, Yang C-H, Wu K-C, Yang C-H. A hybrid feature selection method for dna microarray data. Computers in Biology and Medicine. 2011;41(4):228–37. https://doi.org/10.1016/j.compbiomed.2011.02.004.

Nanni L, Brahnam S, Lumini A. Combining multiple approaches for gene microarray classification. Bioinformatics. 2012;28(8):1151–7. https://doi.org/10.1093/bioinformatics/bts108.

Ribeiro, M. T., Singh, S. & Guestrin, C. “Why should I trust you?” explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data mining, 1135–1144 (2016).

Bach S, Binder A, Montavon G, Klauschen F, Müller KR, Samek W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS One. 2015;10(7):e0130140. https://doi.org/10.1371/journal.pone.0130140.

Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: visualising image classification models and saliency maps. In In Workshop at International Conference on Learning Representations (Citeseer, 2014).

Shrikumar, A., Greenside, P. & Kundaje, A. Learning important features through propagating activation differences. In International Conference on Machine Learning, 3145–3153 (PMLR, 2017).

Cancela B, Bolón-Canedo V, Alonso-Betanzos A, Gama J. A scalable saliency-based feature selection method with instance-level information. Knowledge-Based Systems. 2020;192:105326. https://doi.org/10.1016/j.knosys.2019.105326.

Li Y, Chen C-Y, Wasserman WW. Deep feature selection: theory and application to identify enhancers and promoters. Journal of Computational Biology. 2016;23(5):322–36. https://doi.org/10.1089/cmb.2015.0189.

Bahrami M, Maitra M, Nagy C, Turecki G, Rabiee HR, Li Y. Deep feature extraction of single-cell transcriptomes by generative adversarial network. Bioinformatics. 2021;37(10):1345–51. https://doi.org/10.1093/bioinformatics/btaa976.

Buettner F, Natarajan KN, Casale FP, Proserpio V, Scialdone A, Theis FJ, et al. Computational analysis of cell-to-cell heterogeneity in single-cell rna-sequencing data reveals hidden subpopulations of cells. Nature Biotechnology. 2015;33(2):155–60. https://doi.org/10.1038/nbt.3102.

Cusanovich DA, Daza R, Adey A, Pliner HA, Christiansen L, Gunderson KL, et al. Multiplex single-cell profiling of chromatin accessibility by combinatorial cellular indexing. Science. 2015;348(6237):910–4. https://doi.org/10.1126/science.aab1601.

Stoeckius M, Hafemeister C, Stephenson W, Houck-Loomis B, Chattopadhyay PK, Swerdlow H, et al. Simultaneous epitope and transcriptome measurement in single cells. Nature Methods. 2017;14(9):865–8. https://doi.org/10.1038/nmeth.4380.

Aldridge S, Teichmann SA. Single cell transcriptomics comes of age. Nature Communications. 2020;11:1–4.

Mereu E, Lafzi A, Moutinho C, Ziegenhain C, McCarthy DJ, Álvarez-Varela A, et al. Benchmarking single-cell RNA-sequencing protocols for cell atlas projects. Nature Biotechnology. 2020;38(6):747–55. https://doi.org/10.1038/s41587-020-0469-4.

Soneson C, Robinson MD. Bias, robustness and scalability in single-cell differential expression analysis. Nature Methods. 2018;15(4):255–61. https://doi.org/10.1038/nmeth.4612.

Vans, E., Patil, A. & Sharma, A. Feats: feature selection-based clustering of single-cell rna-seq data. Briefings in bioinformatics bbaa306.

Lin, Y. et al. scclassify: sample size estimation and multiscale classification of cells using single and multiple reference. Molecular Systems Biology 16, e9389 (2020).

Korthauer KD, Chu LF, Newton MA, Li Y, Thomson J, Stewart R, et al. A statistical approach for identifying differential distributions in single-cell rna-seq experiments. Genome Biology. 2016;17(1):1–15. https://doi.org/10.1186/s13059-016-1077-y.

Stuart T, Butler A, Hoffman P, Hafemeister C, Papalexi E, Mauck WM III, et al. Comprehensive integration of single-cell data. Cell. 2019;177(7):1888–902. https://doi.org/10.1016/j.cell.2019.05.031.

Wang F, Liang S, Kumar T, Navin N, Chen K. Scmarker: ab initio marker selection for single cell transcriptome profiling. PLoS Computational Biology. 2019;15(10):e1007445. https://doi.org/10.1371/journal.pcbi.1007445.

Andrews TS, Hemberg M. M3drop: dropout-based feature selection for scrnaseq. Bioinformatics. 2019;35(16):2865–7. https://doi.org/10.1093/bioinformatics/bty1044.

Hao J, Cao W, Huang J, Zou X, Han Z-G. Optimal gene filtering for single-cell data (ogfsc)—a gene filtering algorithm for single-cell rna-seq data. Bioinformatics. 2019;35(15):2602–9. https://doi.org/10.1093/bioinformatics/bty1016.

Su K, Yu T, Wu H. Accurate feature selection improves single-cell RNA-seq cell clustering. Briefings in Bioinformatics. 2021;22(5). https://doi.org/10.1093/bib/bbab034.

Delaney C, Schnell A, Cammarata LV, Yao-Smith A, Regev A, Kuchroo VK, et al. Combinatorial prediction of marker panels from single-cell transcriptomic data. Molecular systems biology. 2019;15(10):e9005. https://doi.org/10.15252/msb.20199005.

Townes FW, Hicks SC, Aryee MJ, Irizarry RA. Feature selection and dimension reduction for single-cell RNA-seq based on a multinomial model. Genome Biology. 2019;20(1):1–16. https://doi.org/10.1186/s13059-019-1861-6.

Lall, S., Ghosh, A., Ray, S. & Bandyopadhyay, S. sc-REnF: an entropy guided robust feature selection for clustering of single-cell rna-seq data. bioRxiv (2020).

Aliee H, Theis FJ. Autogenes: automatic gene selection using multi-objective optimization for RNA-seq deconvolution. Cell Systems. 2021;12(7):706–715.e4. https://doi.org/10.1016/j.cels.2021.05.006.

Gupta S, Verma AK, Ahmad S. Feature selection for topological proximity prediction of single-cell transcriptomic profiles in drosophila embryo using genetic algorithm. Genes. 2021;12(1):28. https://doi.org/10.3390/genes12010028.

Zhang, J. & Feng, J. Gene selection for single-cell RNA-seq data based on information gain and genetic algorithm. In 2018 14th International Conference on Computational Intelligence and Security (CIS), 57–61 (IEEE, 2018).

Zhang, J., Feng, J. & Yang, X. Gene selection for scRNA-seq data based on information gain and fruit fly optimization algorithm. In 2019 15th International Conference on Computational Intelligence and Security (CIS), 187–191 (IEEE, 2019).

Ranjan B, Sun W, Park J, Mishra K, Schmidt F, Xie R, et al. DUBStepR is a scalable correlation-based feature selection method for accurately clustering single-cell data. Nature Communications. 2021;12(1):5849. https://doi.org/10.1038/s41467-021-26085-2.

Yuan F, Pan XY, Zeng T, Zhang YH, Chen L, Gan Z, et al. Identifying cell-type specific genes and expression rules based on single-cell transcriptomic atlas data. Frontiers in Bioengineering and Biotechnology. 2020;8:350. https://doi.org/10.3389/fbioe.2020.00350.

Chen, X., Chen, S. & Thomson, M. Active feature selection discovers minimal gene-sets for classifying cell-types and disease states in single-cell mRNA-seq data. arXiv preprint arXiv:2106.08317 (2021).

Dumitrascu B, Villar S, Mixon DG, Engelhardt BE. Optimal marker gene selection for cell type discrimination in single cell analyses. Nature Communications. 2021;12(1):1–8. https://doi.org/10.1038/s41467-021-21453-4.

Aevermann, B. D. et al. A machine learning method for the discovery of minimum marker gene combinations for cell-type identification from single-cell RNA sequencing. Genome Research, gr–275569 (2021).

Ntranos V, Yi L, Melsted P, Pachter L. A discriminative learning approach to differential expression analysis for single-cell RNA-seq. Nature Methods. 2019;16(2):163–6. https://doi.org/10.1038/s41592-018-0303-9.

Huynh, N. P., Kelly, N. H., Katz, D. B., Pham, M. & Guilak, F. Single cell RNA sequencing reveals heterogeneity of human MSC chondrogenesis: Lasso regularized logistic regression to identify gene and regulatory signatures. bioRxiv 854406 (2019).

Vargo AH, Gilbert AC. A rank-based marker selection method for high throughput scRNA-seq data. BMC Bioinformatics. 2020;21(1):1–51. https://doi.org/10.1186/s12859-020-03641-z.

Chen B. Herring, C. A. & Lau, K. S. pyNVR: investigating factors affecting feature selection from scRNA-seq data for lineage reconstruction. Bioinformatics. 2019;35(13):2335–7. https://doi.org/10.1093/bioinformatics/bty950.

Buenrostro JD, Wu B, Litzenburger UM, Ruff D, Gonzales ML, Snyder MP, et al. Single-cell chromatin accessibility reveals principles of regulatory variation. Nature. 2015;523(7561):486–90. https://doi.org/10.1038/nature14590.

Chen H, Lareau C, Andreani T, Vinyard ME, Garcia SP, Clement K, et al. Assessment of computational methods for the analysis of single-cell ATAC-seq data. Genome Biology. 2019;20(1):1–25. https://doi.org/10.1186/s13059-019-1854-5.

Baker SM, Rogerson C, Hayes A, Sharrocks AD, Rattray M. Classifying cells with scasat, a single-cell ATAC-seq analysis tool. Nucleic acids research. 2019;47(2):e10–0. https://doi.org/10.1093/nar/gky950.

Yu W, Uzun Y, Zhu Q. Chen, C. & Tan, K. scATAC-pro: a comprehensive workbench for single-cell chromatin accessibility sequencing data. Genome Biology. 2020;21(1):1–17. https://doi.org/10.1186/s13059-020-02008-0.

Fang R, Preissl S, Li Y, Hou X, Lucero J, Wang X, et al. Comprehensive analysis of single cell atac-seq data with snapatac. Nature communications. 2021;12(1):1–15. https://doi.org/10.1038/s41467-021-21583-9.

Robinson MD. McCarthy, D. J. & Smyth, G. K. edgeR: a bioconductor package for differential expression analysis of digital gene expression data. Bioinformatics. 2010;26(1):139–40. https://doi.org/10.1093/bioinformatics/btp616.

Kawaguchi RK, et al. Exploiting marker genes for robust classification and characterization of single-cell chromatin accessibility. BioRxiv. 2021.

Wolf FA, Angerer P, Theis FJ. SCANPY: large-scale single-cell gene expression data analysis. Genome Biology. 2018;19(1):1–5. https://doi.org/10.1186/s13059-017-1382-0.

Muto Y, Wilson PC, Ledru N, Wu H, Dimke H, Waikar SS, et al. Single cell transcriptional and chromatin accessibility profiling redefine cellular heterogeneity in the adult human kidney. Nature Communications. 2021;12(1):1–17. https://doi.org/10.1038/s41467-021-22368-w.

Pliner HA, Packer JS, McFaline-Figueroa JL, Cusanovich DA, Daza RM, Aghamirzaie D, et al. Cicero predicts cis-regulatory DNA interactions from single-cell chromatin accessibility data. Molecular Cell. 2018;71(5):858–71. https://doi.org/10.1016/j.molcel.2018.06.044.

Brummelman J, Haftmann C, Núñez NG, Alvisi G, Mazza EMC, Becher B, et al. Development, application and computational analysis of high-dimensional fluorescent antibody panels for single-cell flow cytometry. Nature Protocols. 2019;14(7):1946–69. https://doi.org/10.1038/s41596-019-0166-2.

Spitzer MH, Nolan GP. Mass cytometry: single cells, many features. Cell. 2016;165(4):780–91. https://doi.org/10.1016/j.cell.2016.04.019.

Saeys Y, Van Gassen S, Lambrecht BN. Computational flow cytometry: helping to make sense of high-dimensional immunology data. Nature Reviews Immunology. 2016;16(7):449–62. https://doi.org/10.1038/nri.2016.56.

Aghaeepour N, Simonds EF, Knapp DJHF, Bruggner RV, Sachs K, Culos A, et al. GateFinder: projection-based gating strategy optimization for flow and mass cytometry. Bioinformatics. 2018;34(23):4131–3. https://doi.org/10.1093/bioinformatics/bty430.

Hassan, S. S., Ruusuvuori, P., Latonen, L. & Huttunen, H. Flow cytometry-based classification in cancer research: a view on feature selection. Cancer Informatics 14, CIN–S30795 (2015).

Tanhaemami M, Alizadeh E, Sanders CK, Marrone BL, Munsky B. Using flow cytometry and multistage machine learning to discover label-free signatures of algal lipid accumulation. Physical Biology. 2019;16(5):055001. https://doi.org/10.1088/1478-3975/ab2c60.

Peterson VM, Zhang KX, Kumar N, Wong J, Li L, Wilson DC, et al. Multiplexed quantification of proteins and transcripts in single cells. Nature Biotechnology. 2017;35(10):936–9. https://doi.org/10.1038/nbt.3973.

Kim HJ, Lin Y, Geddes TA, Yang JYH, Yang P. CiteFuse enables multi-modal analysis of CITE-Seq data. Bioinformatics. 2020;36(14):4137–43. https://doi.org/10.1093/bioinformatics/btaa282.

Hao Y, Hao S, Andersen-Nissen E, Mauck WM III, Zheng S, Butler A, et al. Integrated analysis of multimodal single-cell data. Cell. 2021;184(13):3573–3587.e29. https://doi.org/10.1016/j.cell.2021.04.048.

Weissleder R, Lee H. Automated molecular-image cytometry and analysis in modern oncology. Nature Reviews Materials. 2020;5(6):409–22. https://doi.org/10.1038/s41578-020-0180-6.

Stender AS, Marchuk K, Liu C, Sander S, Meyer MW, Smith EA, et al. Single cell optical imaging and spectroscopy. Chemical Reviews. 2013;113(4):2469–527. https://doi.org/10.1021/cr300336e.

Pischel D, Buchbinder JH, Sundmacher K, Lavrik IN, Flassig RJ. A guide to automated apoptosis detection: how to make sense of imaging flow cytometry data. PloS One. 2018;13(5):e0197208. https://doi.org/10.1371/journal.pone.0197208.

Hennig H, Rees P, Blasi T, Kamentsky L, Hung J, Dao D, et al. An open-source solution for advanced imaging flow cytometry data analysis using machine learning. Methods. 2017;112:201–10. https://doi.org/10.1016/j.ymeth.2016.08.018.

Peralta D, Saeys Y. Robust unsupervised dimensionality reduction based on feature clustering for single-cell imaging data. Applied Soft Computing. 2020;93:106421. https://doi.org/10.1016/j.asoc.2020.106421.

Doan, M. et al. Deepometry, a framework for applying supervised and weakly supervised deep learning to imaging cytometry. Nature Protocols 1–24 (2021).

Norris, D. et al. Signaling heterogeneity is defined by pathway architecture and intercellular variability in protein expression. iScience 24, 102118 (2021).

Svensson V, Teichmann SA, Stegle O. SpatialDE: identification of spatially variable genes. Nature Methods. 2018;15(5):343–6. https://doi.org/10.1038/nmeth.4636.

Macaulay IC, Ponting CP, Voet T. Single-cell multiomics: multiple measurements from single cells. Trends in Genetics. 2017;33(2):155–68. https://doi.org/10.1016/j.tig.2016.12.003.

Burgess DJ. Spatial transcriptomics coming of age. Nature Reviews Genetics. 2019;20(6):317–7. https://doi.org/10.1038/s41576-019-0129-z.

Velazquez-Villarreal EI, Maheshwari S, Sorenson J, Fiddes IT, Kumar V, Yin Y, et al. Single-cell sequencing of genomic DNA resolves sub-clonal heterogeneity in a melanoma cell line. Communications Biology. 2020;3(1):1–8. https://doi.org/10.1038/s42003-020-1044-8.

Luquette LJ, Bohrson CL, Sherman MA, Park PJ. Identification of somatic mutations in single cell DNA-seq using a spatial model of allelic imbalance. Nature Communications. 2019;10(1):1–14. https://doi.org/10.1038/s41467-019-11857-8.

Marx V. A dream of single-cell proteomics. Nature Methods. 2019;16(9):809–12. https://doi.org/10.1038/s41592-019-0540-6.

Kelly RT. Single-cell proteomics: progress and prospects. Molecular & Cellular Proteomics. 2020;19(11):1739–48. https://doi.org/10.1074/mcp.R120.002234.

Mimitou, E. P. et al. Scalable, multimodal profiling of chromatin accessibility, gene expression and protein levels in single cells. Nature Biotechnology 1–13 (2021).

Hu Y, Huang K, An Q, du G, Hu G, Xue J, et al. Simultaneous profiling of transcriptome and DNA methylome from a single cell. Genome Biology. 2016;17(1):1–11. https://doi.org/10.1186/s13059-016-0950-z.

Clark SJ, Argelaguet R, Kapourani CA, Stubbs TM, Lee HJ, Alda-Catalinas C, et al. scNMT-seq enables joint profiling of chromatin accessibility DNA methylation and transcription in single cells. Nature Communications. 2018;9(1):1–9. https://doi.org/10.1038/s41467-018-03149-4.

Ma S, Zhang B, LaFave LM, Earl AS, Chiang Z, Hu Y, et al. Chromatin potential identified by shared single-cell profiling of RNA and chromatin. Cell. 2020;183(4):1103–16. https://doi.org/10.1016/j.cell.2020.09.056.

Chen S, Lake BB, Zhang K. High-throughput sequencing of the transcriptome and chromatin accessibility in the same cell. Nature Biotechnology. 2019;37(12):1452–7. https://doi.org/10.1038/s41587-019-0290-0.

Hou Y, Guo H, Cao C, Li X, Hu B, Zhu P, et al. Single-cell triple omics sequencing reveals genetic, epigenetic, and transcriptomic heterogeneity in hepatocellular carcinomas. Cell Research. 2016;26(3):304–19. https://doi.org/10.1038/cr.2016.23.

Macaulay IC, Haerty W, Kumar P, Li YI, Hu TX, Teng MJ, et al. G&t-seq: parallel sequencing of single-cell genomes and transcriptomes. Nature Methods. 2015;12(6):519–22. https://doi.org/10.1038/nmeth.3370.

Liang S, Mohanty V, Dou J, Miao Q, Huang Y, Müftüoğlu M, et al. Single-cell manifold-preserving feature selection for detecting rare cell populations. Nature Computational Science. 2021;1(5):374–84. https://doi.org/10.1038/s43588-021-00070-7.

Sun X, Liu Y, An L. Ensemble dimensionality reduction and feature gene extraction for single-cell RNA-seq data. Nature Communications. 2020;11(1):1–9. https://doi.org/10.1038/s41467-020-19465-7.

Kinalis S, Nielsen FC, Winther O, Bagger FO. Deconvolution of autoencoders to learn biological regulatory modules from single cell mRNA sequencing data. BMC Bioinformatics. 2019;20(1):1–9. https://doi.org/10.1186/s12859-019-2952-9.

Samek, W. et al. Explainable artificial intelligence: understanding, visualizing and interpreting deep learning models. arXiv:1708.08296 (2017).

Gentleman RC, Carey VJ, Bates DM, Bolstad B, Dettling M, Dudoit S, et al. Bioconductor: open software development for computational biology and bioinformatics. Genome Biology. 2004;5(10):R80. https://doi.org/10.1186/gb-2004-5-10-r80.

Acknowledgements

The authors thank the feedback from the members of the Sydney Precision Bioinformatics Alliance.

Peer review information

Barbara Cheifet was the primary editor of this article and managed its editorial process and peer review in collaboration with the rest of the editorial team.

Review history

The review history is available as Additional file 2.

Funding

P.Y. is supported by a National Health and Medical Research Council Investigator Grant (1173469).

Author information

Authors and Affiliations

Contributions

P.Y. conceptualised this work. The authors reviewed the literature, drafted the manuscript and wrote and edited the manuscript. The authors read and approved the final manuscript.

Authors’ information

Twitter handles: @PengyiYang82 (Pengyi Yang); @haohuang1999 (Hao Huang); @ChunleiLiu0 (Chunlei Liu).

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1: Table S1.

A list of studies that applied feature selection techniques to the single-cell field.

Additional file 2:

Review history.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Yang, P., Huang, H. & Liu, C. Feature selection revisited in the single-cell era. Genome Biol 22, 321 (2021). https://doi.org/10.1186/s13059-021-02544-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13059-021-02544-3